Abstract

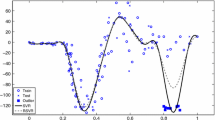

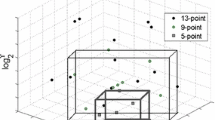

Support vector machine for regression (SVR) is an efficient tool for solving function estimation problems. However, it is sensitive to outliers because its loss function is unbounded. To reduce the effect of outliers, this paper proposes a robust SVR with a trimmed Huber loss function (SVRT). Synthetic and benchmark datasets were employed to assess the performance of SVRT, and its results were compared with those of SVR, least squares SVR (LS-SVR) and a weighted LS-SVR. Numerical tests show that when training samples are subject to normally distributed errors, SVRT is slightly less accurate than SVR and LS-SVR, yet more accurate than the weighted LS-SVR. However, when training samples are contaminated by outliers, SVRT performs better than the other methods. Furthermore, SVRT is faster than the weighted LS-SVR. Simulations on eight benchmark datasets show that SVRT is, on average, more accurate than the other methods when sample points are contaminated by outliers. In conclusion, SVRT can be considered an alternative robust method for modeling contaminated sample points.
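
The abstract does not define the trimmed Huber loss, so the following is only a minimal sketch under an assumed definition: the standard Huber loss with parameter `delta`, capped at its value at a hypothetical trimming threshold `tau > delta`. It is not necessarily the authors' exact formulation. It shows why a bounded loss limits the influence of outliers by comparing the cost charged to a single residual of growing size under the half squared loss (as in least squares formulations such as LS-SVR), the Huber loss and the capped variant.

```python
def squared(r: float) -> float:
    """Half squared error, the loss used by least squares formulations such as LS-SVR."""
    return 0.5 * r * r


def huber(r: float, delta: float) -> float:
    """Standard Huber loss: quadratic for |r| <= delta, linear beyond it."""
    a = abs(r)
    if a <= delta:
        return 0.5 * a * a
    return delta * (a - 0.5 * delta)


def trimmed_huber(r: float, delta: float, tau: float) -> float:
    """Huber loss capped at its value for |r| = tau (an assumed trimming rule).

    Because huber() grows with |r|, evaluating it at min(|r|, tau) bounds the
    loss by huber(tau, delta), so all residuals larger than tau cost the same.
    """
    if tau <= delta:
        raise ValueError("expected tau > delta")
    return huber(min(abs(r), tau), delta)


if __name__ == "__main__":
    delta, tau = 1.0, 2.0  # illustrative values, not taken from the paper
    print("residual   squared    huber  trimmed")
    # Loss charged to a single residual of growing size, e.g. an outlier.
    for r in (0.5, 2.0, 8.0, 80.0):
        print(f"{r:8.1f} {squared(r):9.3f} {huber(r, delta):8.3f} "
              f"{trimmed_huber(r, delta, tau):8.3f}")
```

With `delta = 1` and `tau = 2`, a residual of 80 costs 3200 under the squared loss and 79.5 under the Huber loss, but only 1.5 under the capped loss, the same as a residual of 2. Because the capped loss is flat beyond `tau`, such points have zero gradient and stop pulling the fit; the trade-off is that the loss is no longer convex, so training typically requires an iterative scheme rather than a single convex solve.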

Acknowledgments

This work is funded by the National Natural Science Foundation of China (Grant Nos. 41371367 and 41101433), the SDUST Research Fund, the Joint Innovative Center for Safe and Effective Mining Technology and Equipment of Coal Resources, Shandong Province, and the Special Project Fund of Taishan Scholars of Shandong Province.

Ethics declarations

Conflict of interest

All authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants performed by any of the authors.

Informed consent

Informed consent was obtained from all individual participants included in the study.

Additional information

Communicated by A. Di Nola.

About this article

Cite this article

Chen, C., Yan, C., Zhao, N. et al. A robust algorithm of support vector regression with a trimmed Huber loss function in the primal. Soft Comput 21, 5235–5243 (2017). https://doi.org/10.1007/s00500-016-2229-4

DOI: https://doi.org/10.1007/s00500-016-2229-4