Abstract

Natural hazards have the potential to trigger complex chains of events in technological installations leading to disastrous effects for the surrounding population and environment. The threat of climate change of worsening extreme weather events exacerbates the need for new models and novel methodologies able to capture the complexity of the natural-technological interaction in intuitive frameworks suitable for an interdisciplinary field such as that of risk analysis. This study proposes a novel approach for the quantification of risk exposure of nuclear facilities subject to extreme natural events. A Bayesian Network model, initially developed for the quantification of the risk of exposure from spent nuclear material stored in facilities subject to flooding hazards, is adapted and enhanced to include in the analysis the quantification of the uncertainty affecting the output due to the imprecision of data available and the aleatory nature of the variables involved. The model is applied to the analysis of the nuclear power station of Sizewell B in East Anglia (UK), through the use of a novel computational tool. The network proposed models the direct effect of extreme weather conditions on the facility along several time scenarios considering climate change predictions as well as the indirect effects of external hazards on the internal subsystems and the occurrence of human error. The main novelty of the study consists of the fully computational integration of Bayesian Networks with advanced Structural Reliability Methods, which allows to adequately represent both aleatory and epistemic aspects of the uncertainty affecting the input through the use of probabilistic models, intervals, imprecise random variables as well as probability bounds. The uncertainty affecting the output is quantified in order to attest the significance of the results and provide a complete and effective tool for risk-informed decision making.

Similar content being viewed by others

1 Introduction

The potential of natural hazards to trigger simultaneous failures and, in worse cases, technological disasters [commonly known as Natech accidents (Krausmann and Baranzini 2012)] has progressively nourished the concern of both the scientific community and the public opinion, contributing to increase the awareness toward the intrinsic vulnerability of technological installations to the effect of extreme weather conditions. The gravity of such issues is borne out by projections available on the future trends of global climate, which are expected to lead to an intensification of extreme events. This growth of the risk seems to interest particularly coastal areas as a result of the combination of global sea level rise and the predicted increase of extreme wind and rain events: this arises the risks of flooding along shorelines, threatening numerous technological installations which are been long located on the coast (Evans 2004; Levy and Hall 2005). Several studies already confirm the correctness of these forecasts: a significant increase in heavy precipitation for the twentieth century has been observed on both global and local scales (Trenberth et al. 2007; Maraun et al. 2008). According to the work of Seneviratne et al. (2012), this trend is consistent with the estimated consequences of anthropogenic forcing and is expected to endure in the near future: most geographical areas are not only predicted to be subject to a significant increase of the overall frequency of heavy precipitation, but are also expected to experience higher severity, with a larger proportion of extreme events over the totality of the occurrences (Trenberth et al. 2007). Focusing on a local scale, the number of days with precipitations over 25 mm are expected to increase up to a factor of 3.5 during the winter in the central and southern regions of UK by 2080 (MetOffice 2014). The changes in atmospheric storminess are predicted to affect, together with the increase of mean sea level, the occurrence of extremes in coastal high water levels (Wu et al. 2016). Also in this case the predictions are corroborated by current observations: data collected over ten years (from 1993 to 2003) have shown an average growth rate of 3.1 mm/year for mean sea level (Bindoff et al. 2007). Finally few studies, even if dealing with strong uncertainty, seem to suggest a local upward drift in extreme winds (Rauthe et al. 2010) and winter wind storms (Pinto et al. 2007). In addition to this, the significant increase of utilisation experienced by coastal areas over the twentieth century contributes to increase the risks associated with the occurrence of flooding along shorelines. This trend is expected to endure through the current century with a coastal population estimated to grow of a factor between 1.5 and 4.3 by 2080 (Nicholls et al. 2007): the large and growing presence of communities and industrial facilities in coastal areas contributes to widen the hazardous areas and the related risks, including the increase of Natech accident occurrence. This suggests the need for mitigation measures to enhance both the robustness of existing installations and the design standards for new and more reliable systems. Due to the delicacy of safety issues in the nuclear sector and in light of past events, the nuclear industry must play a key role in the research of new solutions to efficiently tackle the current and future risks posed by natural hazards (Weightman et al. 2011).

The analysis of the vulnerability of nuclear facilities to external hazards, such as those represented by weather extremes, are still few and mainly focused on the reactor safety (Musolas et al. 2015). Less concerns are generally addressed to the spent fuel ponds (SFP, referred as pools in American English) which still have the potential to trigger dramatic accident scenarios in the case of exposure of the spent fuel stored. According to the Nuclear Regulatory Commission, this event, even if unlikely to occur, would have high-impact consequences, eventually causing one or more zirconium fires in the facility (Collins and Hubbard 2001). It is hence essential to focus on the reliability of these systems for a more accurate assessment of the vulnerability of nuclear installations and the identification of effective risk mitigation measures.

A crucial point of risk assessment in the nuclear industry, and more generally in the case of complex technological systems, is represented by the computational and theoretical tools available. The models and methodologies adopted for the analysis should not only be able to depict the overall mechanisms behind the possible accident scenarios, but also provide information useful for long-term decision making as well as for supporting decision makers in the case of imminent danger (Cruz et al. 2004). Furthermore, the approach selected should guarantee an adequate representation of the complexity of the system under study and of the interactions among its subsystems, allowing the analysis of different scenarios and taking explicitly into account the uncertainty affecting the input, with particular regards to climate change projections (Keller 2009), and its propagation within the model. This study proposes a multi-disciplinary model for the robust quantification of the risk exposure of spent nuclear facilities introducing the use of a novel computational tool and methodology able to take into account the imprecision affecting the data (through the use of imprecise probabilities) and thus to quantify the uncertainty affecting the output. The approach adopted is based on the use of graphical models, namely Bayesian Networks (BNs). This choice has been dictated by the capability of BNs to tackle many challenging aspects of the kind of analysis performed: first of all, differently from deterministic methods which still are widely adopted in industry (Jerome Tixier et al. 2002), a probabilistic approach such as BNs offers unquestionable advantages, providing a more realistic and systematic estimate of risk and safety and including the uncertainty affecting the initial knowledge in the analysis. Second, as generally true for graphical models, BNs provide a common intuitive language easily understandable and widely recognized regardless the personal background of the user. This is an essential requirement in view of the strongly interdisciplinary nature of risk analysis of complex systems subject to natural hazards, which implies the collaboration of experts from very different fields of science and industry (e.g. seismologists, hydrologists, meteorologists, process engineers, psychologists, government officials, emergency managers etc.) (Straub 2005). The intuitivity of the approach makes BNs an accessible supporting tool for decision makers, who are expected to be the final users of the type of analysis performed in this study, and can potentially facilitate the communication of risk to the public, filling the communication gap existing between these realities (Sivakumar 2011). Furthermore, in comparison with more common methodologies such as fault tree analysis, BNs present several advantages, overcoming several restrictive assumptions implicit in the fault tree methodology (e.g., the restriction to Boolean logic), providing the adequate representation of complex dependencies among components, including uncertainty in the model and allowing both forward and backward analysis (Bobbio et al. 2001). In light of this, BNs are particularly suitable for capturing the complexity of the systems and the mechanisms behind multiple failures. This allows the analysts to effectively assess the likelihood of domino effects within the facility and to fully understand the possible consequences of simultaneous failures, widely regarded as the most critical threat posed by natural hazards to technological installations. Third, thanks to the availability of algorithms for the analytical computation of inference (i.e. exact inference), BNs are able to handle very small values of probabilities. This capability plays a crucial role in the assessment of low probability-high impact events such as weather extremes and natural hazards. Finally, BNs offer a consistent framework to coherently integrate sources of information of very different natures (e.g. experimental measurements, projections, historical data, computational models etc) thus to combine empirical information with mechanical models and engineering judgement. In particular, thanks to Bayesian statistics which is the mathematical background of this approach, BNs allow the inclusion of subjective information, such as experts opinions, which have an essential role in areas of research strongly affected by uncertainty and lack of data. This is the case of natural hazards for which useful historical data are often not available due to the high variability of both frequencies and consequences.

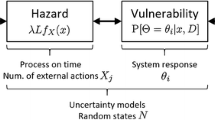

Nevertheless, traditional BNs are mainly restricted to the use of discrete probability values which cannot fully capture the intrinsically aleatory nature of natural events nor the epistemic uncertainty affecting the data available. This limitation, as highlighted in previous studies (Tolo et al. 2016), strongly straitens the ability of the method to model, with equal effectiveness, the information related to both natural hazards and technological installations.

A novel approach is proposed in this study in order to overcome these constraints and provide a complete tool for decision making support. The method adopted allows to integrate within the BN framework different kinds of mathematical models tailored on the features of the variables involved but also their uncertainty, which has the potential to undermine the credibility of the results obtained. Indeed, a meticulous and accurate analysis cannot disregard the degree of uncertainty affecting the data on which the decision is based. This kind of information provides a sort of context for the numerical results obtained, bounding their significance and hence measuring the effectiveness and accuracy of the analysis itself. In other words, the lack of information and hence the ignorance regarding a certain process are themselves an essential part of the information and must be made available to adequately support risk-informed decisions. The efficiency of the computational implementation of such approach, referred to as Enhanced Bayesian Networks (EBNs), is guaranteed by the adoption of robust and cutting-edge numerical methods borrowed from the field of structural reliability. Thanks to this, the tool implemented results suitable for both long-term and near-real time decision making support.

The method proposed is illustrated in this study through the implementation of a network for the vulnerability assessment of nuclear facilities subject to external hazards, and its application to the Sizewell B nuclear power plant facility in East Anglia, UK. In more detail, the framework consists of an Enhanced Bayesian Network which includes discrete variables as well as intervals, random and imprecise random variables (e.g., random variables whose uncertain parameters are intervals) and probabilistically bounded parameters. Furthermore it involves secondary models related to different subjects, from climate change to human error and coastal reliability issues. Probability bounds are calculated for several failure events (and different accident scenarios) associated with the exposure of the spent nuclear fuel stored on site. The results obtained are discussed in order to demonstrate the advantages and limitations of the proposed methodology.

2 Methodology

This section aims to give an overview of the theoretical background of the methodology adopted and to briefly describe the computational tool implemented for its application.

2.1 Bayesian Networks

Bayesian Networks (BNs) are statistical models based on the use of directed acyclic graphs. As any graphical model, BNs present a double nature: on the one hand, the structure of the problem under study is reproduced by a graphical framework, where nodes stand for random variables and edges represent the causal relationship existing between two nodes. On the other hand, the strength of the dependencies between variables is expressed through the introduction of Conditional Probability Distributions (CPDs). These are tensors collecting the numerical parameters of the network, i.e. the conditional probabilities associated with the outcomes of any node of the network given an instantiation of its parents. Conversely, when a node is not linked to any parent it is referred as a root of the network and associated with marginal probability distributions. With regards to the simple example in Fig. 1, the node X1 can be identify as a root of the network as well as the parent of X2 and X3, which are also referred to as its children. BNs satisfy the local Markov condition according to which values of a variable remain conditionally independent of its non-descendants given its parent variables. The main strength of BNs consists of their capability to factorize the joint probability over any set of variables \(\mathbf X =\lbrace X_{1}, X_{2},...,X_{n}\rbrace\) forming the network by simply exploiting the knowledge available regarding their conditional dependencies. Indeed, according to Bayes’ theorem, such joint probability can be expressed as:

where \(p(X_{i}\vert pa(X_{i}))\) is the conditional density function of the node \(X_{i}\) while \(pa(X_{i})\) denotes possible instantiations of its parents. Generally, inference with BNs involves the calculation of the posterior marginal probability for a query variable. The key feature of BNs consists of updating this information when new information become available. This allows not only to update the belief toward a certain event (and is indeed referred as belief updating) on the basis of the information gathered but also to take into account possible what-if scenarios. This is obtained introducing evidence in the network: for example, the evidence \(\mathbf E =\lbrace X_{3}=x_{3,1}\rbrace\) fixes the values of variables \(X_{3}\), assigning to it the outcome \(x_{3,1}\). Hence, the distribution for the query variable \(X_{1}\) given the evidence \(\varvec{E}\) over the variables \(\varvec{X_{E}}=\lbrace X_{1}, X_{3}\rbrace\) can be expressed as:

Several algorithms, both exact and approximate, are available in the literature for the extrapolation of probabilistic information regarding one or more query variables on the basis of the BN structure (process generally known as inference). Exact inference algorithms (e.g., junction tree algorithm) are robust and well-established in scientific literature but generally restricted to the use of crisp values for the network parameters. This implies the discretization of continuous random variables, impoverishing the quality of the information available. Approximate approach is generally based on simulation techniques (e.g., Markov chain Monte Carlo methods), allowing to perform inference on continuous nodes. The main drawbacks of this option are the computational time required for the simulations and the unknown rates of convergence. Moreover, approximate inference presents significant limitations in computing low probabilities of rare events, in particular with regards to near-real-time inference and decision analysis (Straub and Kiureghian 2010). Please refer to the work of Pearl and Russell for a complete overview of Bayesian Networks (Pearl and Russell 2000).

The first studies on BNs were proposed by Judea Pearl and date back to the early eighties (Pearl 1985), but the lack of robust algorithms and computational resources has initially slowed down the development of this technique. Conversely, with the rapid establishment of advanced computer technology, it has attracted large interest in various sectors of science and engineering (Weber et al. 2012). The attractiveness of this approach in the field of risk assessment can be attributed to the capabilities of dealing with very low-probability events (Hanea and Ale 2009), modelling elaborate networks of dependencies (as those characterizing complex systems) (Khakzad et al. 2013) and, most of all, integrating information of different nature, from experimental data to expert judgements (Cheon et al. 2009; Kim et al. 2006). All these aspects make BNs particularly attractive in the study of natural hazards and their interaction with technological installations (Straub 2005; Bayraktarli et al. 2005; Tolo et al. 2014).

2.2 Bayesian Networks Enhanced with system reliability methods

The adoption of exact algorithms for the computation of inference on BNs is generally recognised as a more robust and accurate approach in comparison to approximate analysis. On the other hand, the restrictions of this kind of computation, generally limited to the use of crisp probability values, significantly affect the capability of models to capture reality and hence the information available. This is rarely provided in the form of crisp numbers and results always affected by a certain degree of uncertainty which, if not adequately integrated in the analysis, can easily lead to misleading results. To adapt the data available to the use of exact inference algorithms, a discretization procedure is required for continuous variables: this inevitably depletes the quality of the initial information and subsequently the accuracy of the models. The methodology referred to as Enhanced Bayesian Network (EBN) allows to bypass this practice overcoming the limitations of the traditional approach. It consists of combining BNs with system reliability methods, which are adopted in order to reduce the initial models, containing both discrete and continuous variables, to traditional BNs and thus allowing the computation of exact inference. In this study the existent methodology has been extended to the use of imprecise probabilities, such as intervals and imprecise random variables: if such variables are present in the initial network, the reduced model will not be a traditional BN but a BN including probability bounds. Nevertheless, the propagation of bounds in the network can still be performed adopting exact inference algorithms (Thöne et al. 1997).

The assumption at the basis of the EBN approach is that any node child of one or more non-discrete nodes has to be represented as a function of these variables, hence characterized as domains in the outcome space of its parents. The computation of the conditional probability value of a child node can hence be translated into a reliability problem and solved through the use of well-known system reliability methods. Such a computation automatically releases the child node from the causal links which connect it to its non-discrete parents, allowing the progressive removal of continuous nodes from the network which finally would include only discrete variables.

Taking into account the example in Fig. 2 and considering Eq.(1), the joint probability over the discrete variables D1 and D2 would result:

where \(p(D_{1})\), \(p(D_{2}|D_{1},C_{1})\) are the probability values included in the CPDs of \(D_{1}\) and \(D_{2}\) respectively, while \(f(C_{1})\) is a continuous probability density function associated with the probabilistic node \(C_{1}\). Since \(D_{1}\) is independent from \(C_{1}\) according to the local Markov condition, the integral of interest can be rearranged as:

In light of the initial assumption, \(D_{2}\) results to be a function of its non-discrete parent \(C_1\) for any instantiation of the discrete parent \(D_1\). In other words, each entry of the CPD of \(D_2\) is defined by a domain \(\Omega ^{d2}_{D2,d1}\) in the space of \(C_1\) given \(D1=d1\). Hence, Eq.(4) can be further modified (Straub and Kiureghian 2010):

The formulation of the problem obtained in Eq.(5) is equivalent to that of traditional reliability problems solved adopting reliability methods.

Indeed, various strategies for the solution of this integral are available in the literature, such as numerical integration techniques, Monte Carlo simulations (Hammersley and Handscomb 1964) and asymptotic Laplace expansions (Rackwitz 2001). Common approximate solutions largely adopted in practice are First-Order and Second-Order Reliability Methods (Hasofer and Lind 1974), which ensure low computational costs but generally perform poorly in high dimensional spaces or in the case of strongly non-linear domains. A range of advanced sampling techniques, such as Importance Sampling, Line Sampling, Stratified Sampling etc., have been developed quite recently in order to overcome the limitations of approximate reliability methods on the one hand, reducing the cost associated with the traditional MC approach on the other.

2.3 Proposed computational approach

Differently from previous applications of EBN available in the literature, the methodology has been extended to include intervals and imprecise random variables within the framework of the initial network. The aim of the proposed computational approach is to efficiently accomplish such integration, which implies the resolution of equations similar to that in Eq.(5) also when non-probabilistic variables (i.e. intervals and imprecise random variables) are involved. The field of structural reliability offers a wide range of numerical strategies suitable for the fulfilment of this task: in the computational tool implemented, several of these methods have been selected and fully integrated with the BN approach. It is worth highlighting that the use of structural reliability methods is justified merely by the common numerical configuration of the computation under study [i.e. identification of conditional probability of nodes children of non-discrete variables as for Eq.(5)] and the traditional structural reliability problem, and does not necessarily imply a conceptual correspondence between structural reliability and risk exposure quantification. This introduces two main advantages: on the one hand, the data available can be captured accurately avoiding the introduction of unmotivated assumptions. This would significantly enhance the robustness of the model in comparison with traditional approaches which force the analyst to choose, more or less arbitrarily, a precise distribution not fully justified by the data available. On the other hand, the explicitly depiction of the imprecision affecting the input allows tracking the propagation of this latter within the model, quantifying the degree of uncertainty of the output and providing a crucial information for risk-informed decision making. In light of this, if the initial network contains non-probabilistic but continuous nodes, the reduced network is not a traditional BN containing only crisp parameters but instead includes nodes whose outcomes are associated with probability bounds.

The methodology proposed has been implemented in the general purpose software OpenCossan (Patelli et al. 2012, 2014; Patelli 2016) in an object oriented fashion. This ensures programming flexibility, computational efficiency and allows to avoid code duplication. OpenCossan is a collections of methods and tools under continuous development, coded exploiting the object-oriented Matlab programming environment. It allows to define specialized solution sequences including any reliability method. Furthermore, thanks to the strong flexibility, new reliability methods or optimization algorithms can be easily added. The computational framework is organized in classes, i.e. data structures consisting of data fields and methods together with their interactions and interfaces (Patelli et al. 2012). Objects (i.e., instances of classes) can be then easily aggregated, forming more complex objects and being processed according to the related methods in order to obtain the output of interest. The numerical implementation associated with this study consists mainly of two classes: the first of these, Node, provides the basic input of the graphical model.

According to the nature of the variable represented, four different categories for the Node type can be identified:

-

discrete, including nodes whose CPDs can contain either exact probability values or probability bounds.

-

probabilistic, including continuous nodes whose CPDs contain stochastic variables or vectors of stochastic variables;

-

bounded, embracing nodes enclosing interval variables;

-

imprecise probabilistic, referring to nodes representing imprecise random variables.

The combination of more Node objects allows the construction of the EnhancedBayesianNetwork object, defined by its namesake class. These two classes together provide the graphical and numerical implementation of the Enhanced Bayesian Network model. Their interaction with the reliability methods available in the OpenCossan framework provides the reduction of the initial network to traditional BNs or BNs including probability bounds, according to the procedure described previously.

Several methods, borrowed from the field of structural reliability, are available in the toolbox for the efficient solution of Eq.(5). Generally, the nature of the variables involved, together with the desired degree of accuracy, leads the selection of the method to adopt. In more detail, four cases can be easily identified: only probabilistic and discrete variables involved, only bounded and discrete variables involved, both probabilistic and bounded variables involved and, finally, any kind of variable including imprecise random variables. In the first case Eq.(5) would involve only traditional probabilistic variables and can hence be efficiently solved through the use of both traditional or advanced (e.g., Line Sampling or Important Sampling) MC methods (Koutsourelakis et al. 2004) as well as semi-probabilistic methods such the First Order Reliability method (FORM) (Kiureghian 2005). In the second case, where the input consists of discrete and interval variables, the method proposed by Jiang et al. (2011) and based on the use of convex sets can be adopted. It is opportune to specify that in this case the result of the computation cannot be considered a probability value, conversely provides only a coarse information regarding the possibility of failure. This would lead to overestimate the probability of the event under study, which would be assumed as 1 even if the event is only possible and no more information about its actual likelihood is available. It is hence highly recommendable to avoid this kind of set-up. The third case refers to the presence of probabilistic and interval variables in input and can be computed through the use of two methods available in the toolbox: the first refers to the work of Luo et al. (2009) and is based on the mixture of sets of continuous probability distributions and convex sets of intervals on which a nested minimization problem is computed. The other method available consists of coupling advanced Monte Carlo methods (i.e., Adaptive Line Sampling) with optimization methods in order to estimate the lower and upper bounds of the failure probability (Angelis et al. 2015). Dissimilarly from the previous, this approach allows for both imprecise probability distribution functions (i.e., credal sets) and sets of bounded variables. The main advantage of this approach is the possibility to provide the probability bounds for the event of interest without approximating the limit state function. Moreover it is largely applicable with significant benefits in terms of computational cost: the efficiency of the strategy is independent of the magnitude of the failure probability, which is a large advantage in comparison to traditional approaches such as direct MC, and ensures the feasibility of the computation also in high dimensional spaces with a limited number of samples. Furthermore, this latter method is the only one suitable for problems involving imprecise random variables (e.g., probabilistic variables with uncertain but bounded parameters).

The computation of inference on the reduced network can be carried out through the use of built-in inference algorithms as well as through the interaction of the tool with the Bayes’ Toolbox for MATLAB (Murphy 2001). Figure 3 depicts the main structure of the computational tool implemented.

To sum up, the approach described suggests to process the implemented models following a two-step procedure: first, the network containing probabilistic, non-probabilistic, hybrid and discrete variables is reduced to a BN containing crisp and interval probabilities through the use of numerical methods imported from the field of structural reliability; in a second phase, the inference of the events of interests represented by the model is performed through the use of traditional exact inference algorithms. These two stages of the analysis are associated with different requirements and hence can be characterized by different computational times. The reduction of the network can be considered as part of the model design itself and hence can also not be associated with particular time requirements. On the contrary, since the tool is designed to provide both long-term and near real-time decision making support, the results of inference computation on the network (e.g. the estimation of the probability associated with a certain accident scenario) should be provided within the shortest time possible, offering a valid support also in case of emergency. The proposed approach, thanks to the reduction of the network to only discrete and interval probabilities, reduces the computational costs of inference without affecting the accuracy of the analysis and allows the satisfaction of this requirement.

3 Vulnerability analysis of nuclear facilities

The purpose of the model implemented in this work is to quantify the probability associated to several accident scenarios and failure events involving a fuel pond subject to the threat of flooding events, overcoming the limitations highlighted in a previous study and associated with the adoption of traditional BNs (Tolo et al. 2016). The drawbacks of such an approach were mainly linked to the use of crisp probabilities, which cannot fully represent the aleatory and epistemic uncertainty affecting the variables (a crucial aspect for climate variables and projections); a further restriction is the impossibility to take into account correlation among nodes when causal models are not available for the graphical representation of the dependencies but this information is available only numerically (e.g., through the estimation of correlation factors from experimental data). Both these issues are overcome in the current model: the framework implemented includes probabilistic, interval and hybrid variables as well as probability intervals, fully capturing the information available and its imprecision. Moreover, the method adopted allows to relax the usual BN constraints regarding the variables correlation, including it within the structural reliability analysis when required.

The model proposed takes into account the flood hazard as main source of risk, estimating its evolution over time in light of the effect of climate change. Moreover the consequences of eventual human errors and their interaction with natural hazards are included in the analysis.

Extreme weather events have the capability to affect the facility on different levels. On the one hand, they threaten the safety of the installation directly, for instance leading to accumulation of water in the station, causing the failure of drainage systems or even preventing the access to the facility from the outside. On the other, the primary impact of natural hazards can result in much wider accident scenarios, triggering chains of failures within the station (e.g., the accumulation of water can lead to flood barriers failure thus to the unavailability of emergency generators increasing the possibility of a station black-out).

The model aims to capture an overall picture of the possible accident scenarios. Three sections can be identified in the network according to their task: the first one aims to capture the primary impact of the natural hazards on the facility and thus models the direct interaction between the natural events and the technological installation. It embraces variables related to natural events (e.g., extreme rainfall, extreme high tide etc.) which are directly linked to external subsystems of the facility (e.g., drainage system, outfall etc.). In light of the aleatory nature of weather events and the strong influence of epistemic uncertainty on climate variables predictions, this subset of the network contains most of the non-discrete variables of the overall model.

A second section of the network aims to depict the possible chains of failures internal to the system. Due to the nature of the technological failures here represented, all the variables of this subset are of a discrete nature and, more specifically, boolean. Nevertheless, differently from previous studies, the numerical frame associated with this section captures the uncertainty affecting the data available through the adoption of probability bounds instead of crisp probabilities. This way, the information available in the literature (e.g., regarding the failure rate of industrial machines) is fully represented.

Finally, a third part of the network, gathered from a previous study (Groth and Mosleh 2011) and integrated in the overall model, takes into account the probability of human error which can contribute to the overall growth of risk. This section, shown in Fig. 13, integrates in the framework Performance Influencing Factors (PIFs) enabling analysts to adapt the network to the system under study in light of event data collection and analysis. However data limitations have precluded the further development of this part of the model, which remains consistent with the previous model.

The framework proposed is shown in Fig. 4 and consists of 63 nodes. The marginal probability associated to any event represented by one or more of the nodes can be computed, offering an important insight of the failure mechanisms provoked by the interaction between extreme weather events and the facility and providing the decision maker with useful information for long-term as well as emergency risk management.

In the following, the description of the network and its application to the real-world case-study of Sizewell B nuclear power station in East Anglia is presented. First, a brief description of the facility is proposed, followed by a detailed description of the natural-technological interaction and internal failure sections. For further details on the human error section please consult the previous study (Tolo et al. 2016). Since each node of the network is designed to represent a specific event, if not differently specified, the terms defining each node and the related event are considered interchangeable from now on. Earthquakes or extreme winds and other sources of risks different from flooding fall outside the area of interest of the current model. In spite of this restriction and the application to a specific case-study, the network proposed in this paper can be easily modified to capture the features of different facilities or even adapted to enlarge the range of external hazards included in the analysis.

3.1 Case-study

The case-study selected for the application of the proposed model is the nuclear power station of Sizewell B, operated by EDF and located on the coast of East Anglia in the county of Suffolk, UK. One of the main reasons behind this choice is the particularly interesting location of the facility whose surroundings, according to the flood maps provided by the Environment Agency Environment Agency (2014), are subject to the risk of flooding. In addition to this, the closure of the facility, initially planned for 2035, has been postponed to 2055 to meet EDF’s strategic target (Houlton 2013): this makes even more crucial to evaluate the impact of climate change on the risks to which the facility is and will be subject during its operational life.

Differently from other sites in the UK, such as Magnox and AGR, the strategy for the Sizewell station consists of long-term storage of the spent fuel under water and on-site (Office for Civil Nuclear Security 2004). This clearly sets high capacity requirements, which have led to the construction of a new dry fuel storage to guarantee the necessary storage volume. Finally, the plan for the construction of a new power plant on the area adjacent to the facility under study makes this analysis potentially useful for further developments and applications.

Sizewell B is currently the youngest of the UK nuclear power plants and provides approximately \(\mathrm {3\%}\) of the overall UK’s power demand. The communication with the national grid is realized at three different 400 kV nodes, at Bramford, Norwich and Pelham, to which the on-site electrical substation is connected. On the south of Sizewell B is located the station of Sizewell A, no longer operational, while on the eastern side lie the so called Bent Hills. These are steady sand ridges which reach a maximum height of 10 m in correspondence of the east boundary of the station, sloping down to the shoreline: they act as the sea wall of the station which hence results 100 m distant from the shore. The area surrounding the station on the other directions is mainly marshland subject to the risk of flooding. The entry of the nuclear site is located in correspondence of the access road built at 3.5 m AOD. The spent nuclear fuel is stored together with the new assemblies under water in a stainless steel pool. The depth of water at which the fuel is kept ensures the coverage of the elements for 24 h even in the case of complete loss of power and then cooling. This is provided by a cooling system, separate from that of the reactor, which provides the thermal exchange between the pumped flow and the seawater (default heat-sink). A second heat-sink based on an air-cooling mechanism is also available in case of emergency or failure of the primary cooling circuit. The availability of electrical power on-site binds the working order of the cooling system in the fuel facilities. These are located next to the reactor building and, as all the other sensible buildings, are equipped with fire doors which are expected to act as flood barriers with a design basis of 1 m of water (EDF Energy 2013). A reservoir with a maximum water level of 13.9 m AOD and an invert level of 6.9 m AOD is also located on site (Sizewell 2011).

3.2 Natural-technological interaction section

The aim of this subset of the network (shown in Fig. 5) is to model the direct interaction between external events (i.e., natural hazards) and the nuclear facility, including its surroundings. In light of this, the nodes involved in this section can be categorized according to their task and consequently the nature of the related variables.

Node as WindWavePeriod, WindWaveHeight, SwellPeriod, SwellHeight, IncidentWaveHeight, IncidentWavePeriod, ExtremePrecipitation represent climate variables and are represented by probabilistic nodes (ellipsoidal nodes in Fig. 5). Indeed, the aleatory essence of weather phenomenons such as precipitations or sea conditions, are well depicted by probabilistic variables: large sets of data are available for the implementation of these models and a remarkable part of the scientific literature has been dedicated in the last decades to identify the most suitable distribution types for the representation of natural events such as wave conditions. Conversely, failure events such those involving subsystems of the facility and directly triggered by the natural events, such as OutfallFailure, DischargeFailure, have a boolean nature which can be adequately represented through discrete variables (rectangular nodes in Fig. 5). Similarly, FloodingSurroundings refers to the failure of local flooding defences to keep the water level under a value which can affect the station (i.e., the elevation of the access roads): also this event has an intrinsic boolean nature and hence can be represented by a discrete node. In order to map future risk of flooding, climate change projections regarding future sea and surge level values (respectively SeaLevelRise and SurgeTrend) are included in this section. The inner variability of climate at both global and local scale, regardless human influences, together with the incomplete knowledge of the climate system and the inability to model it perfectly, make any estimate of future climate conditions strongly affected by uncertainty. This plays hence a crucial role in the analysis of future risks when considering the prediction of future behaviours of such aleatory phenomenons: coherently, the predicted values are generally inferred from sophisticated mathematical models which give spatial and temporal details and provide estimations of the uncertainty associated with each variable. To fully capture this information in the model, the related nodes SeaLevelRise and SurgeTrend are characterized by interval variables. The result of the combination of these latter with the probabilistic distributions of extreme high tide and extreme surge baselines are imprecise random distributions (trapezoidal nodes in Fig. 5) which depict the trend of ExtremeHighTide and ExtremeSurge over the time domain analysed. Apart from climate change projections, interval variables are also adopted to characterize several structural parameters considered affected by imprecision (i.e., SeaWallInclination, LocalDefenceHeight, DrainageSystemCapacity, OutfallCapacity, GrossStationArea, FloorAreaRatio, FloodBarriersCapacity, HighTideDuration and SeawallLength).

Conversely from the other nodes mentioned, the function of the discrete nodes TimeScenario and EmissionScenario is quite unique within the model proposed. They can be considered as selectors for scenarios based on a particular time of reference and conjectures about the possible future. This hypothesis, on which the projection inference itself is based, is enclosed in the so called emission scenarios, according to the Special Report on Emissions Scenarios (SRES) nomenclature (Nakicenovic and Swart 2000). Each of these scenarios characterize a different possible future on the basis of greenhouse emissions trends and expected evolution of human activities. All together these scenarios represent a set of comprehensive global narratives, or story-lines, that define local, regional and global socio-economic driving forces of change such as economy, population, technology, energy and agriculture, key determinants of the future emissions pathway.

In the current application the node TimeScenario embraces seven outcome states referring to as many time slices, according to the projections available: 2010–2039, 2020–2049, 2030–2059, 2040–2069, 2050–2079, 2060–2089 and 2070–2099. The node EmissionScenario has been restricted to the medium emission scenario (A1B according to the UKCP09 nomenclature). The assumptions behind the latter refers to an increasingly flourish economy, and a significant population growth peaking in 2050 at 8.7 billion and then declining to 7.1 billion in 2100. From a technological point of view, new technologies are expected to successfully penetrate the market going along with a more balanced use of fossil and non-fossil energy sources.

As previously mentioned, the effect of climate change on the sea conditions is modelled through the connection between the scenario nodes on the one hand, and the nodes SurgeTrend and SeaLevelRise on the other. Hence, to each combination of TimeScenario and EmissionScenario corresponds a specific sea level rise interval stored in the node SeaLevelRise. The SeaLevelRise intervals are defined by bounds equal to the 5th and \(95{\text{th percentile}}\) of the projected values. Similarly, the node SurgeTrend takes into account the predicted trend of surge for the time period of reference. Also in this case the intervals adopted refer to the 5 and \(95{\text{th percentile}}\) projections bounds. Both the sea level rise and surge trend projections have been provided by the UK Climate Projections (UKCP09) MetOffice (2014) up to 2100. The trends given above are combined linearly with the mean of near-present day extreme surge and extreme tide baselines (Figs. 6 and 7) to obtain imprecise random variables for projections of future extreme sea level trends. The current return values related to the extreme high tide for the coast of Sizewell have been provided by the Environment Agency whilst the distribution related to the ExtremeSurge has been gathered from BODC data referring to the East Anglia coast (NOC 2015). Variations related to seasons have not been considered.

Previous studies (Lowe et al. 2009) have shown that it is reasonable to add mean sea level changes linearly to the storminess-driven change component around the UK coast. In light of this, where required (i.e., to compute the probability associated with the nodes OutfallFailure, FloodingSurroundings, FloodingStationArea), the future extreme sea level values have been computed as a linear combination of ExtremeHighTide and ExtremeSurge under the assumption of independence.

Also the event ExtremePrecipitation is directly affected by the time and emission scenario considered. The probabilistic models representing this event have been implemented on the basis of the results of previous studies (Francis 2011), which provide return periods and the related uncertainty according to the Season, TimeScenario and EmissionScenario of reference. Fig. 8 shows the empirical distribution adopted for the 100-year return period ExtremePrecipitation expected for the time slice 2030–2059.

Also extreme wave conditions are expected to be affected by climate change hence be dependent on the time period considered: unfortunately, the studies addressing this topic and the related projections are strongly affected by uncertainty and often incongruous due to contradictory data and limitations of the models adopted (Seneviratne et al. 2012; Leake et al. 2007). For this reason, the nodes related to wave conditions and the node TimeScenario have been considered independent. Similarly, also the emission scenario selected is assumed not to affect the wave characteristics: studies conducted on a local scale on the coast of East Anglia have highlighted how, in the southern part of the region, where the station is located, the adoption of different emission scenarios had only negligible impact on the estimate of the projections for wave conditions (Chini et al. 2010). The same study found offshore extremes not to be significantly affected by sea level rise, which results negligible in comparison to offshore water depths. As a consequence of this, the scenario nodes and sea state nodes (WindWavePeriod, WindWaveHeight, SwellPeriod, SwellHeight, IncidentWaveHeight, IncidentWavePeriod) are not connected in the network and thus assumed independent.

All the nodes of these section are linked to each other on the basis of their causal relationships in order to draw a path of the possible mechanisms of flooding which can affect the facility. In the current model, these can be classified in three categories:

-

1.

Surface water flooding

-

2.

River and tidal flooding

-

3.

Sea wave overtopping

The first of this mechanism of flooding refers to the occurrence of the event DischargeFailure, hence to the impossibility of discharging water from the station leading to its accumulation within the facility area. The failure of the overall discharge system is assumed to occur in the case of failure of both the station drainage system and outfall. The event DrainageSystemFailure is verified when the amount of ExtremePrecipitation overcomes the capacity of the drainage system (DrainageSystemCapacity). Similarly, the station outfall is assumed to fail when the still water level, i.e. combination of ExtremeHighTide and ExtremeSurge, results higher than the OutfallCapacity.

As a consequence of this simultaneous failure, the rainfall water is considered to accumulate within the station perimeter regardless the topological profile of the site. Both OutfallCapacity and DrainageSystemCapacity are parameters assumed to be known with a certain degree of imprecision and are hence represented by intervals centred in the systems design basis values. These have been assumed equal to 300 mm/d for the DrainageSystemCapacity and 5 m for the OutfallCapacity on the basis of the literature available (EDF Energy 2013). The bounds adopted for the interval variables are shown in Table 1.

Since the storm surge consists of a meteorologically-driven component of water level generated by synoptic variations of atmospheric pressure and wind (Wolf 2009), a certain relation between ExtremePrecipitation and ExtremeSurge would be expected. In spite of this, studies focusing on the Eastern British coast stated that it is mainly precipitation in the northern part of the coast that shows dependence with daily maximum sea surge, while for the Anglian coast only low or non-significant dependencies have been found (probably due to the different tide-surge interaction). In light of this, in the current study the relation between ExtremePrecipitation and ExtremeSurge is considered low enough to be approximated with independence (Svensson and Jones 2002).

In addition to the surface water flooding, heavy rainfall and extreme sea level can also lead to other flooding mechanisms able to affect the area surrounding the station, such as river and tidal flooding. The event FloodingSurroundings, which embraces these two contributions, is assumed to occur when the water depth reaches the access road level.

The river flooding is mainly due to the interaction between ExtremePrecipitation and water bodies present in the area. With regards to the case-study analysed, no external models have been adopted: this contribution alone is presumed not able to lead to the FloodingSurroundings according to previous studies (EDF Energy 2013). Nevertheless, existent models and historical data can be easily integrated in the network to represent this mechanism and verify the hypothesis introduced.

Here, the node ExtremePrecipitation is assumed to have the potential to affect the access road, triggering the event FloodingSurroundings, only in association with tidal flooding. This latter is considered to happen when the combination of ExtremeTide and ExtremeSurge overcomes the height of the SeaDefencesHeight. This parameter is represented by a bounded node covering an interval between 3.88 and 4.12 m. On the contrary, thanks to the elevation of the site, tidal flooding does not have the potential to affect the station. Furthermore, the risk of significant tsunamis on European coasts is generally negligible (Kerridge 2005).

Dissimilarly, the sea wave overtopping (Fig. 9) of the station’s sea wall could cause the penetration of sea water in the perimeter of the facility (Kopytko and Perkins 2011) and is hence considered the only coastal flooding mechanism able to contribute to the FloodingStationArea event. The quantification of the risk of flooding from the sea implies modelling the mechanism of discharge of sea water within the station perimeter due to the action of sea waves. This has been realized integrating in the current framework an EBN model previously developed (Tolo et al. 2015) for the quantification of sea wave overtopping hazard. The related sub-part of the network is based on the approach suggested by Reis et al. (2006) and involves 16 nodes of the natural hazard section (i.e., SwellHeight, SwellPeriod, WindWavePeriod, WindWaveHeight, IncidentWavePeriod, IncidentWaveHeight, SeaWallInclination, ParameterA, ParameterB, ParameterC, CrestLevel, SlopeRoughness, SeaWallLength, ScatterParameter, ExtremeHighTide and ExtremeSurge). Waves are assumed to overcome the defences only if the condition \(0\le \dfrac{(CL-SWL)}{rCH_{s}}<1\) is verified, where CL measures the highest elevation of the seawall, SWL the average sea water surface elevation (taking into account both ExtremeHighTide and ExtremeSurge), r the seawall SlopeRoughness, \(H_{s}\) the IncidentWaveHeight (i.e., combination of the significant wave height of wind waves and swell). Similarly, C represents the ratio of the maximum vertical extent of wave up-rush on the structure (Sorensen 2006) to the waves significant height and is a function of the so called surf similarity parameter \(\xi _{p}\). This latter is computed as:

where \(T_{p}\) represents the IncidentWavePeriod (i.e., combination of the peak periods of wind waves and swell). Hence, given seawall features such as SlopeRoughness and SeaWallInclination [\(\alpha\) in Eq.(6)], the overtopping rate can be expressed as:

where g refers to the gravitational acceleration, A and B to empirical coefficients (ParameterA, ParameterB) of the model dependent on the SeaWallInclination (Reis et al. 2008) and \(e_{B}\) to a parameter which represents the scatter about the line of perfect agreement between the predicted and measured values of the mean discharge (ScatterParameter). On the contrary, if the condition \(0\le \dfrac{(CL-SWL)}{rCH_{s}}<1\) is not verified the overtopping rate results equal to zero.

This model allows for bi-modal sea representation which means that the seawall of the facility is assumed exposed to waves generated locally by winds as well as long-period waves associated with distant storms (i.e., swell). Indeed, although swell waves tend to have lower wave heights than wind-sea waves around England and Wales, their much higher periods can lead to higher run-up and overtopping of sea defence (Hawkes et al. 1997). Data regarding swell and bi-modal wave climate around the coast of England are provided by Hawkes et al. (1997): those adopted for this study refer to the Suffolk area and are represented in Fig. 10. On the basis of the information available, a Weibull distribution was adopted for the significant swell height (SwellHeight) with a mean of 0.121 m and standard deviation 0.307 m. For the swell peak periods, an empirical distribution was derived with mean 12.04 s and standard deviation 2.46 s. Generally, for swell conditions, higher waves tend to have shorter periods: a linear correlation coefficient of −0.032 has been computed from the data available. Probabilistic distributions for the wind waves conditions (WindWavePeriod, WindWaveHeight) have been implemented fitting historical data (CEFAS 2014) to generalized extreme value distributions (see Table 2) adopting the maximum likelihood approach. A linear correlation coefficient of 0.29 between the two variables, represented by the continuous line in Fig. 11, has been computed. Since swell waves are not well correlated with local meteorological conditions they results only weakly correlated with either extreme wind-seas or surge water levels (Hawkes et al. 1997).

In light of this, the correlation between ExtremeSurge and swell conditions has been neglected whilst a linear correlation factor of −0.06 has been assumed between WindWaveHeight and SwellWaveHeight (McMillan et al. 2011). Also, a correlation coefficient of 0.659 has been assumed between WindWaveHeight and ExtremeSurge, on the basis of the information provided by Hawkes and Svensson (2003). Since from the data available it is not possible to identify a clear trend for the seasonal variability of waves and swell conditions this has been neglected. A further assumption concerns the direction of the waves, which have been considered to be normally incident to the structure. Finally, no wave transformation models has been adopted. This simplificative hypothesis is expected to result in a strongly conservative approach. The nodes IncidentSignificantHeight and IncidentPeakPeriod are computed according to Eqs.(8) and (9).

where \(H_{s(w)}\), \(T_{p(w)}\) represent the WindWaveHeight and WindWavePeriod, \(H_{s(s)}\) and \(T_{p(s)}\) the SwellHeight and SwellPeriod, and \(m_{0(w)}\) \(m_{0(s)}\) the zero-th moment of the wind-sea and swell spectrum of surface elevation. ParameterA, ParameterB and ParameterC depend on the inclination of the seawall and the waves characteristics. Please refer to Reis et al. (2008) for further details on their computation. All the parameters involved in the calculation are assumed to be affected by imprecision and then represented as bounded nodes. Table 3 shows the interval values adopted as input.

The simultaneous occurrence of the flooding dynamics mentioned can cause the event FloodingStationArea: the facility is assumed flooded when the accumulation of water within its area reaches depths higher than those considered as design basis for the on-site FloodBarrierCapacity. If such a case is verified, the water is assumed to penetrate the buildings of the nuclear island and to affect the subsystems there located. As a consequence of this, chains of internal failures can be triggered according to the mechanisms modelled by the dedicated section of the network.

3.3 Internal failure section

As anticipated in the previous paragraphs, the section modelling the internal chains of events which can lead to simultaneous failures and eventually to the exposure of fuel (Fig. 12) embraces only discrete nodes, coherently with the nature of the events involved. On the other hand, it is worth to clarify that this does not exclude the use of probability intervals in the definition of an event of interest: each state of a discrete node can be associated with probability bounds instead of singular values in case of uncertain or contradictory data. The input associated with this section of the network have been deduced either from previous studies or, more generally, from data available in literature. Where probability bounds were available they have been included in the analysis.

Only if both the cooling system and the emergency supplies are out of order the exposure of the fuel assemblies (SpentFuelExposure) becomes possible. The cooling system of the spent fuel pond is assumed not to function in the case of lack of both OnSiteAC (i.e., electric power produced on-site) and OffSiteAC (i.e., provided by the national grid) (Baranowsky 1985). The first can be unavailable due to either planned, such as refuelling operations, or unplanned power station outages, such as emergency reactor shut-downs. These would cause the failure of the EmergencyPowerSupplies which, for the power plant under study, consist of four emergency diesel generators. These provide power to the safety buses to guarantee the correct functioning of crucial systems in the case of unavailability of other power sources (Kancev and Duchac 2013). Coherently, in the current model the failure of the EmergencyPowerSupplies is a precursor of station blackout in association with the loss of OffSiteAC. In the case of closure of the station, as for the last three time scenarios, the failure of the EmergencyPowerSupplies is a sufficient condition for the lack of OnSiteAC. The probability values associated with the nodes PlannedOutage and UnplannedOutage have been deduced from the occurrence of past events, on the basis of the information provided by EDF Energy (2014). The probability bounds for the events of failure of EmergencyPowerSupplies have been deduced combining the rate of failure to start and to run within an hour from the start of the four independent generators available on-site (Eide et al. 2007). On the other hand, the unavailability of OffSiteAC can be due to the failure of either the OnSiteSubstation or the ExternalPowerGrid (Liscouski and Elliot 2004). This latter event implies the simultaneous failure of the three connection points to which the Sizewell B on-site substation is linked in the 400 kV system (substations at Bramford, Norwich and Pelham). In light of the large distance among the substations, these are considered not to be affected by the eventual extreme weather conditions at Sizewell and are considered independent from each other. The failure rate of the electric substations, regardless external event, has been gathered from the existent literature (Nack 2005). In the case of a general loss of external power grid the nuclear plant is assumed to shut down safely (UnplannedOutage), according to procedures (Maldonado 2004).

A further eventuality modelled by this section of the network is the lack of actions to prevent the exposure of the fuel in the case of malfunctioning of the cooling system. This scenario is summarized by the node EmergencySupplies, which takes into consideration both technological failures and human errors. Both these cases refer to the intervention of operators to refill the pond with high purity water possibly from an external storage tank through the use of third systems (e.g., fire system) (Adorni et al. 2015). Due to the lack of related information, possible losses associated with the storage and the water inventory are not modelled, whilst the only technological failure considered refer to the EmergencyHydrantSystem, which are supposed to be used to pump the water flow into the pond. The malfunctioning of these systems would impede to make-up for the water evaporated from the pond due to overheating. Also the event HumanError is assumed to lead to similar consequences and refers to the lack of action by operators.

Even in the case of occurrence of either HumanError or the malfunction of EmergencyHydrantSystem, the exposure of the spent fuel can still be avoided. Indeed, the depth of water in the pond is designed to ensure thermal inertia, providing adequate coverage for the assemblies up to 24 h in order to give time to act from the outside, for example through the use of fire tenders. Only if also this action is ineffective (DelayInReaction), due for instance to the inaccessibility of the station (i.e., access road flooded), the EmergencySupplies are supposed to fail.

The connection of this part of the model to the former one is guaranteed by the nodes FloodingSurroundings, FloodingStationArea and TimeScenario, which have the capability to directly affect the state of the subsystems of the facility. The flooding in the surrounding area can impede the access to the station by fire tenders, due to its capability to affect the access road. Similarly, the event FloodingStationArea has the potential to cause the malfunction of diesel generators and hydrant systems as previously described. The node TimeScenario allows to select the state of the station, operational or closed, in order to consider the availability of power generation on-site.

Finally, as mentioned, the event HumanError represents the lack of actions by the operators and is modelled according to the study of Groth and Mosleh (2011) through a further section of the model shown in Fig. 13. Table 4 shows the probability bounds adopted for the subsystems failure state, given the absence of flooding in the station.

3.4 Results

The results obtained from the inference computation on the reduced model (Fig. 14) appear to be affected by uncertainty which generally grows along with time, coherently with the uncertainty affecting the climate projections in input. On the contrary, the probability bounds computed for the single events do not show a general monotonic growth. This is due again to the trend of the projections adopted: while the sea level is expected to increase regularly in time, the projections related to the extreme precipitation and surge trend do not show a similar behaviour. In light of this, and considering the major contribution of the tide over the surge on the still water level, the regular growth of the probability bounds of the event FloodingSurroundings shown in Fig. 16, should be attributed to the trend of sea level projections. As shown in the graph, the probability of this events grows from an interval of [6.25 × 10−03, 2.05 × 10−02] in the first time scenario to one of [8.77 × 10−03, 1.10 × 10−01], suggesting the importance of both current and future risks. On the other hand, events linked to the extreme precipitation occurrence (such as FloodingStationArea shown in Fig. 18), are characterized by a far less regular trend of probability in time. As shown in Fig. 15, the probability of SpentFuelExposure shows this kind of trend, which reveals the strong connection between the possibility of exposure of the spent fuel and the occurrence of FloodingStationArea. The probability associated to the overall exposure event is expected to fall in an interval [3.28 × 10−09, 1.16 × 10−08] for the time slice 2010–2039 and [9.08 × 10−09, 2.97 × 10−07] for the period 2070–2099: the upper bound of the probability increases of one order of magnitude along with the time domain considered.

As for the previous case, also the probability bounds computed for the events of failure of the CoolingSystem (Fig. 17) and FloodingStationArea (Fig. 18) fall entirely in a quite negligible region, with a minimum lower bound within an order of magnitude of 10−09 and a maximum upper bound within an order of magnitude of 10−06 (time scenario 2070–2099). The closeness between the probabilities of the two events and their trend suggest the flooding of the station to be the main possible cause of failure of the cooling system.

Looking at the problem from the opposite perspective, it is possible to estimate also the conditional probability of an event of interest. Several What if scenarios have been evaluated, with the aim of better understanding the distribution of the risk within the model.

In the case of CoolingSystem failure, the probability of exposure of the spent fuel (Fig. 19) grows significantly, up to non-negligible values: the smaller values of probability are registered for the time slice 2010–2039 and are equal to [6.20 × 10−03, 2.10 × 10−02] and increase up to [8.73 × 10−03, 1.12 ×10−01] for the period 2070–2099. Similar results are computed in the case of occurrence of the event FloodingStationArea as shown in Fig. 20. Moreover, the trend shown by the probability along with time highlights the importance of the event FloodingSurroundings: in the case of flooding of the station or failure of the cooling system, actions to prevent the SpentFuelExposure are required from outside and can be impeded in the case of flooding of the surrounding area and inaccessibility of the station. On the other hand, due to the low values of the probability of FloodingStationArea, the effect of the simultaneous occurrence of HumanError and FloodingSurroundings on the probability of SpentFuelExposure results slighter (Fig. 21): the maximum values for the probability bounds are registered for the time slice 2070–2099 and define the interval [1.03 × 10−06, 2.72 × 10−06].

4 Interpretation of the results and limitations

Almost all the probability intervals computed fall in a region of negligibility of the risk (order of magnitude lower than 10−06) supporting the robustness of the system. Further investments in the accuracy of the information available would lead to a decrease of the uncertainty of the outputs, hence to more precise results. Moreover, a large contribution to the inaccuracy of the results could come from the introduction of simplificative hypothesis in the model. For instance, the introduction of off-shore in-shore transformation models could sensibly decrease the upper bound of the FloodingStationArea probability. A crucial aspect for the feasibility of this kind of analysis is the availability of suitable data for the definition of input parameters. While information regarding the performance of technological components (hence related to the bottom section of the model) are generally provided in the scientific literature, studies regarding natural hazards, and in particular modelling their future trend in view of the climate change, are still few and often in disagreement. However, it must be noticed that this complication is not uniquely associated with the method proposed in this study, but generally common to any kind of approach aiming to model the interaction between natural events and technological installations. On the contrary, in comparison with more traditional approaches, the method proposed allows the inclusion of the uncertainty of the data available and even of eventual contrasting information, characterizing the output in light of the degree of accuracy determined by the input.

The main drawback of the approach and model developed is the high computational effort which can prevent real-time analysis of the initial network (although it does not affect the computation of inference on the reduced network). Nevertheless, it is worth to highlight that the computation of the inference, thanks to the simplification of the initial model, can be carried out in near-real time and hence can provide support also in the case of emergency as long as the reduction of the initial network has been previously accomplished. Algorithms able to identify the most crucial events in terms of uncertainty propagation are essential in order to tackle effectively the problem.

In light of this, the main target for further research appears to be the implementation of theoretical and computational tools able to map the contributions of the different variables to the overall uncertainty in output, in order to obtain more accurate results at the lowest cost. Also, future efforts will focus on the optimization of the reduction procedure and the identification of the optimal topology for the reduced network, in order to further decrease the computational cost of the analysis.

5 Conclusions

A model for the quantification of the risk of exposure of spent fuel stored in facilities subject to the risk of flooding has been proposed and applied to the real-world case study of Sizewell B nuclear power plant in East Anglia, UK. The approach adopted for the study is based on a novel methodology which allows to overcome the limitations of traditional Bayesian Networks, not renouncing to their potential. The framework implemented captures the unavoidable epistemic uncertainty and aleatory nature of the input through the adoption of discrete variables, probabilistic models, intervals and imprecise random variables. Moreover, it allows to perform the uncertainty propagation within the network, quantifying the uncertainty affecting the output, expressed by probability bounds. These capabilities make the methodology proposed and its computational implementation a complete and effective tool for risk-informed decision making support.

The analysis of the case-study selected has been extended to several time scenarios, mapping the future risks to which the facility is subject in light of the worsening of natural hazards due to climate change. The results highlight a general but not regular growth of the risk along with time. The probability of accidents remains quite low over the time domain considered, whilst the uncertainty in output appears to grow coherently with that affecting the projections adopted as input. What-if scenarios have been considered in order to identify the crucial links of the chain of events leading to the overall failure.

References

Adorni M, Esmaili H, Grant W, Hollands T, Hozer Z, Jackel B, Munoz M, Nakajima T, Rocchi F, Strucic M, Tregoures N, Vokac P (2015) Status report on spent fuel pools under loss-of-coolant accident conditions final report. Technical Report CSNI/R(2015)2, Nuclear Energy Agency, Paris

Baranowsky P (1985) Evaluation of station blackout accidents at nuclear power plants. Technical findings related to unresolved safety issue A-44. Draft report for comment. Technical Report NUREG-1032, Nuclear Regulatory Commission, Washington, DC

Bayraktarli YY, Ulfkjaer J, Yazgan U, Faber MH (2005) On the application of Bayesian probabilistic networks for earthquake risk management. In 9th international conference on structural safety and reliability, Italy, Rome. IOS Press, Amsterdam

Bindoff NL, Willebrand J, Artale V, Cazenave A, Gregory J, Gulev S, Hanawa K, Le Quéré C, Levitus S, Nojiri Y, Shum CK, Talley LD, Unnikrishnan A (2007) Observations: oceanic climate change and sea level. In : Solomon S, Qin D, Manning M, Chen Z, Marquis M, Averyt KB, Tignor M, Miller HL (eds) Climate Change 2007: the Physical Science Basis. Contribution of Working Group I to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change, Cambridge University Press, Cambridge, p 385–432

Bobbio A, Portinale L, Minichino M, Ciancamerla E (2001) Improving the analysis of dependable systems by mapping fault trees into Bayesian networks. Reliab Eng Syst Saf 71(3):249–260

CEFAS (Centre for Environment Fisheries and Aquaculture Science) (2014) Sizewell waverider post recovery data. http://cefasmapping.defra.gov.uk/. 7 Aug 2014

Cheon S-P, Kim S, Lee S-Y, Lee C-B (2009) Bayesian networks based rare event prediction with sensor data. Knowl-Based Syst 22(5):336–343

Chini N, Stansby P, Leake J, Wolf J, Roberts-Jones J, Lowe J (2010) The impact of sea level rise and climate change on inshore wave climate: a case study for east anglia (U.K.). Coast Eng 57(11):973–984

Collins T, Hubbard G (2001) Technical study of spent fuel pool accident risk at decommissioning nuclear power plants. Technical Report NUREG-1738, U.S. Nuclear Regulatory Commission Office of Nuclear Regulatory Research Washington, DC

Cruz AM, Steinberg LJ, Vetere Arellano A, Nordvik J-P, Pisano F (2004) State of the art in natech risk management. Technical Report Rep. EUR. 21292, DG Joint Research Centre, European Commission and United Nations International Strategy for Disaster Reduction, Ispra, Italy

de Angelis M, Patelli E, Beer M (2015) Advanced line sampling for efficient robust reliability analysis. Struct Saf 52:170–182

Der Kiureghian A (2005) First-and second-order reliability methods. Engineering design reliability handbook. CRC Press, Florida, p 14

EDF Energy (2013) EU stress test - Sizewell B. https://www.edfenergy.com/sites/default/files/jer-srt-stt-pub-fin-007_szb_stress_test_v1.1.pdf. 16 May 2013

EDF Energy (2014) Sizewell B community reports. http://www.edfenergy.com/download-centre. 8 Sep 2014

Eide SA, Wierman TE, Gentillon CD, Rasmuson DM, Atwood CL (2007) Industry-average performance for components and initiating events at U.S. commercial nuclear power plants. Technical Report NUREG/CR-6928, U.S. Nuclear Regulatory Commission Office of Nuclear Regulatory Research, Washington, DC 20555-0001

Evans E (2004) Foresight: future flooding— scientific summary. Technical Report URN 04/939, U.K. Government Office for Science. Volume I: future risks and their drivers

Francis T (2011) Extreme precipitation analysis at sizewell: Final report, Met Office, Devon, U.K

Groth K, Mosleh A, (2011) Development and use of a Bayesian network to estimate human error probability. In: ANS PSA, (2011) International Topical Meeting on Probabilistic Safety Assessment and Analysis Wilmington. NC, Wilmington, NC

Hammersley JM, Handscomb DC (1964) Monte carlo methods, 1st edn. Springer, Heidelberg

Hanea D, Ale B (2009) Risk of human fatality in building fires: a decision tool using Bayesian networks. Fire Saf J 44(5):704–710

Hasofer AM, Lind NC (1974) Exact and invariant second-moment code format. J Eng Mech Div 100(1):111–121

Hawkes PJ, Bagenholm C, Gouldby BP, Ewing J (1997) Swell and bi-modal wave climate around the coast of England and Wales. HR Wallingford Limited, Wallingford

Hawkes PJ, Svensson C (2003) Joint probability: Dependence mapping and best practice. Technical Report FD2308/TR1, U.K. Environment Agency, Rotherham

Houlton N (2013) Life extension of the edf energy nuclear fleet. In: Proceedings of the Nuclear Institute Congress 2013, Nuclear Institute, London

Jiang C, Han X, Lu GY, Liu J, Zhang Z, Bai YC (2011) Correlation analysis of non-probabilistic convex model and corresponding structural reliability technique. Comput Method Appl Mech Eng 200(33):2528–2546

Kancev D, Duchac A (2013) European clearinghouse: Events related to emergency diesel generators. Technical Report JRC83672/EUR 26055 EN, European Commission Joint Research Centre Institute for Energy and Transport, Ispra, Italy

Keller CF (2009) Global warming: a review of this mostly settled issue. Stoch Environ Res Risk Assess 23(5):643–676

Kerridge D (2005) The threat posed by tsunami to the U.K. Technical report, Department for Environment, Food and Rural Affairs (DEFRA), Flood Management Division, London, U.K.,

Khakzad N, Khan F, Amyotte P, Cozzani V (2013) Domino effect analysis using Bayesian networks. Risk Anal 33(2):292–306

Kim MC, Seong PH, Hollnagel E (2006) A probabilistic approach for determining the control mode in cream. Reliab Eng Syst Saf 91(2):191–199

Kopytko N, Perkins J (2011) Climate change, nuclear power, and the adaptation-mitigation dilemma. Energy Policy 39(1):318–333