Abstract

We derive optimal bounds on the bias of the approximation of the unknown mean of the parent population by Tukey’s trimean, defined as a weighted average of the sample median and the sample quartiles. The bounds are expressed in standard deviation units, and the distributions for which the bounds are attained are specified. The results are illustrated with a numerical example.


1 Introduction

Consider the random sample \(X_1,\ldots ,X_n\) with common cumulative distribution function (cdf) \(F\) and the quantile function \(F^{-1}(u)=\sup \left\{ x\in \mathbb R:F(x)\le u\right\} \) for \(u\in [0,1]\). Assume that the mean \(\mu =EX_1\) and the variance \(\sigma ^2={{\mathrm{Var}}}X_1\) of the parent population are finite, so that
$$\begin{aligned} \mu =\int _0^1F^{-1}(u)\,\mathrm du \end{aligned}$$
and
$$\begin{aligned} \sigma ^2=\int _0^1\left( F^{-1}(u)-\mu \right) ^2\mathrm du. \end{aligned}$$
Let \(X_{1:n}\le \cdots \le X_{n:n}\) denote the order statistics of the sample \(X_1,\ldots ,X_n\). We consider the problem of estimating the unknown \(\mu \) by \(L\)–statistics, i.e. linear combinations of order statistics. Rychlik (1998) provided \(p\)–norm upper bounds on expectations of \(L\)–statistics, and Goroncy (2009) considered lower bounds on positive \(L\)–statistics. In particular, Danielak and Rychlik (2003) considered single order statistics and trimmed means, and their results were strengthened by Danielak (2003) for distributions with decreasing density or failure rate. Raqab (2007) considered left–sided Winsorized means, and Bieniek (2014a) extended his results to two–sided Winsorized means. In the context of generalized order statistics, optimal bounds on expectations of arbitrary \(L\)–statistics from bounded populations were derived by Rychlik (2010). Recently, Bieniek (2014b) provided bounds on the bias of quasimidranges
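$$\begin{aligned} M_{r,s}=\frac{1}{2}\left( X_{r:n}+X_{s:n}\right) ,\qquad 1\le r<s\le n, \end{aligned}$$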
i.e. arithmetic means of two fixed order statistics.

In this paper we consider another \(L\)–statistic, namely the sample trimean \(T_n\), introduced by Tukey (1977) as one of the techniques of the descriptive methodology called “exploratory data analysis”. The trimean \(T_n\) is defined as
$$\begin{aligned} T_n=\frac{H_1+2M+H_2}{4}, \end{aligned}$$
where \(M\) is the sample median, and \(H_1\) and \(H_2\) are the lower and upper hinges of the sample. The sample median is usually defined as
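$$\begin{aligned} M= {\left\{ \begin{array}{ll} X_{k+1:n}, &{} \text {if } n=2k+1,\\ \frac{1}{2}\left( X_{k:n}+X_{k+1:n}\right) , &{} \text {if } n=2k, \end{array}\right. } \end{aligned}$$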
which can be written in a more compact way as
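$$\begin{aligned} M=\frac{1}{2}\left( X_{\left\lfloor \frac{n+1}{2} \right\rfloor :n}+X_{\left\lceil \frac{n+1}{2} \right\rceil :n}\right) , \end{aligned}$$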
where \(\left\lfloor x \right\rfloor \) and \(\left\lceil x \right\rceil \) denote the floor and the ceiling functions defined as
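$$\begin{aligned} \left\lfloor x \right\rfloor =\max \left\{ m\in \mathbb Z:m\le x\right\} ,\qquad \left\lceil x \right\rceil =\min \left\{ m\in \mathbb Z:m\ge x\right\} . \end{aligned}$$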
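As a quick sanity check of the compact form of the median, the Python sketch below (our own illustration; the name `median_compact` is hypothetical) evaluates it with 1-based order statistics and compares it with `statistics.median` from the standard library on random samples of sizes 1 to 29.

```python
import math
import random
import statistics


def median_compact(sample):
    """Sample median (X_{floor((n+1)/2):n} + X_{ceil((n+1)/2):n}) / 2 with 1-based indices."""
    x = sorted(sample)
    n = len(x)
    lo = math.floor((n + 1) / 2)  # lower middle position
    hi = math.ceil((n + 1) / 2)   # upper middle position (equal to lo when n is odd)
    return (x[lo - 1] + x[hi - 1]) / 2


if __name__ == "__main__":
    rng = random.Random(1)
    for n in range(1, 30):
        data = [rng.gauss(0.0, 1.0) for _ in range(n)]
        assert math.isclose(median_compact(data), statistics.median(data))
    print(median_compact([3, 1, 2]), median_compact([4, 1, 3, 2]))  # 2.0 2.5
```

For odd \(n\) both positions coincide, so the formula returns a single order statistic; for even \(n\) it averages the two middle ones.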
The lower (upper) hinge is defined as the median of the lower (upper) half of the sample, including the sample median. Formally, for simplicity we define

Therefore, the trimean of the sample is

This is an example of an “inefficient” but computationally quick estimator of \(\mu \). Its advantage over the sample median or other quasimidranges lies in the fact that the trimean combines the central tendency of the sample median with the more extreme observations involved in the quartiles. For a comparison of the trimean with various trimmed means (including the sample median) we refer to Rosenberger and Gasko (1983). See also Mosteller (2006) for situations in which quick estimators are preferable from an economic point of view.
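To make the estimator concrete, here is a minimal Python sketch of the trimean. It assumes the textbook version of Tukey’s hinges (each half of the sorted sample contains the median when \(n\) is odd), which may differ in indexing from the simplified definition adopted above; the helper names are hypothetical.

```python
import statistics


def tukey_hinges(sample):
    """Lower and upper hinges: medians of the lower and upper halves of the sorted sample.
    When n is odd, each half includes the sample median (textbook convention, assumed)."""
    x = sorted(sample)
    n = len(x)
    half = (n + 1) // 2  # (n + 1) / 2 points for odd n, n / 2 points for even n
    return statistics.median(x[:half]), statistics.median(x[n - half:])


def trimean(sample):
    """Tukey's trimean (H1 + 2M + H2) / 4."""
    h1, h2 = tukey_hinges(sample)
    return (h1 + 2 * statistics.median(sample) + h2) / 4


if __name__ == "__main__":
    data = [1, 2, 3, 4, 100]  # one gross outlier
    # trimean and median stay near the bulk of the data, the mean is pulled by the outlier
    print(trimean(data), statistics.median(data), statistics.mean(data))
```

On this sample with one gross outlier the trimean and the median both equal 3, while the arithmetic mean is pulled to 22. Only three sorted positions (two hinges and the median) enter the estimate, which is what makes it quick.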

Another, heuristic motivation for considering the trimean as an estimator of \(\mu \) comes from the numerical results presented in the papers mentioned above. If we want to estimate \(\mu \) by an \(L\)–statistic involving as few observations as possible, then, heuristically speaking, the best approximation of \(\mu \) by a single order statistic is obtained for the sample median, and by two order statistics for the quasimidrange \(M_{r,s}\) with \(r\approx \frac{n}{4}\) and \(s\approx \frac{3n}{4}\). Therefore, if we want to approximate \(\mu \) by a linear combination of three order statistics, it seems reasonable to use the sample trimean.

In this paper we derive sharp upper and lower bounds on the bias of the approximation of \(\mu \) by the trimean \(T_n\), expressed in standard deviation units, i.e. on
$$\begin{aligned} \frac{E\,T_n-\mu }{\sigma }. \end{aligned}$$
Since \(T_n\) is a symmetric \(L\)–statistic, the lower bounds are just the negatives of the corresponding upper bounds (see Goroncy 2009), so we confine ourselves to the latter. The bounds are obtained by the projection method, described in detail in the monograph of Rychlik (2001), which in our case amounts to Moriguti’s approach via greatest convex minorants. This approach has been used in many of the papers mentioned above, and also, e.g., by Okolewski and Kałuszka (2008) to provide sharp bounds on expectations of concomitants of order statistics.

The main obstacle is to project a function that may have three local maxima onto the convex cone \(\mathcal C\) of nondecreasing square integrable functions on \([0,1]\). Namely, if \(f_{i:n}\) denotes the density function of the \(i\)th order statistic from the uniform \(U(0,1)\) distribution, then

where

Therefore, by the projection method and Schwarz’s inequality
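$$\begin{aligned} \frac{E\,T_n-\mu }{\sigma }\le \left\| \overline{\varphi }_{n}-1\right\| _2=\left( \int _0^1\left( \overline{\varphi }_{n}(u)-1\right) ^2\mathrm du\right) ^{1/2}, \end{aligned}$$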
where \(\overline{\varphi }_{n}\) is the projection of \(\varphi _{n}\) onto \(\mathcal C\) (see Rychlik (1998) Thm. 7). The equality is attained for \(F\) such that
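$$\begin{aligned} F^{-1}(u)=\mu +\sigma \,\frac{\overline{\varphi }_{n}(u)-1}{\left\| \overline{\varphi }_{n}-1\right\| _2},\qquad 0<u<1. \end{aligned}$$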
It is well known (Moriguti 1953) that the projection \(\overline{\varphi }_{n}\) is the right-hand derivative of the greatest convex minorant \(\overline{\varPhi }_{n}\) of the distribution function \({\varPhi }_{n}\) defined as
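$$\begin{aligned} {\varPhi }_{n}(x)=\int _0^x\varphi _{n}(u)\,\mathrm du,\qquad 0\le x\le 1. \end{aligned}$$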
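Moriguti’s construction is easy to carry out numerically. The Python sketch below is our own illustration, not the method used for the paper’s tables: it discretizes a given function \(\varphi \) on a uniform grid (the grid size is an arbitrary choice), computes \(\varPhi \) by the trapezoidal rule, builds the lower convex hull of the points \((u_k,\varPhi (u_k))\), and returns the hull slopes as an approximation of the projection onto nondecreasing functions. As a test function it uses the density \(6u(1-u)\) of the median of three uniform observations; one checks directly that its projection equals \(\varphi \) on \([0,\frac{1}{4}]\) and \(\frac{9}{8}\) on \([\frac{1}{4},1]\), and that \(\Vert \overline{\varphi }-1\Vert _2=(47/640)^{1/2}\approx 0.271\).

```python
import math


def gcm_projection(phi, n_grid=20000):
    """Approximate the projection of phi onto nondecreasing functions on [0, 1] as the
    slopes of the greatest convex minorant of Phi(x) = integral of phi over [0, x]."""
    h = 1.0 / n_grid
    u = [k * h for k in range(n_grid + 1)]
    vals = [phi(t) for t in u]
    # Phi at the grid nodes by the trapezoidal rule
    Phi = [0.0]
    for k in range(n_grid):
        Phi.append(Phi[-1] + 0.5 * (vals[k] + vals[k + 1]) * h)
    # Lower convex hull of the points (u_k, Phi_k), scanned from left to right
    hull = []
    for k in range(n_grid + 1):
        while len(hull) >= 2:
            a, b = hull[-2], hull[-1]
            cross = (u[b] - u[a]) * (Phi[k] - Phi[a]) - (Phi[b] - Phi[a]) * (u[k] - u[a])
            if cross > 0:  # strict left turn: b stays on the lower hull
                break
            hull.pop()
        hull.append(k)
    # The minorant is linear between consecutive hull vertices; its slope is the projection
    proj = [0.0] * n_grid  # proj[k] approximates the projection on [u_k, u_{k+1})
    for a, b in zip(hull, hull[1:]):
        slope = (Phi[b] - Phi[a]) / (u[b] - u[a])
        for k in range(a, b):
            proj[k] = slope
    return proj


def l2_distance_to_one(values):
    """(integral over [0, 1] of (f - 1)^2) ** 0.5 for a step function on a uniform grid."""
    return math.sqrt(sum((v - 1.0) ** 2 for v in values) / len(values))


if __name__ == "__main__":
    def median_of_3(t):
        return 6.0 * t * (1.0 - t)  # density of X_{2:3} for U(0, 1)

    proj = gcm_projection(median_of_3)
    print(proj[int(0.9 * len(proj))], l2_distance_to_one(proj))  # close to 1.125 and 0.271
```

The hull is built in a single left-to-right pass, so the cost is linear in the grid size; the same routine applies unchanged to functions with several local maxima.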
Therefore, in Sect. 2 we first determine the monotonicity regions of \(\varphi _{n}\). This is not sufficient to find \(\overline{\varphi }_{n}\), so in Sect. 3 we consider two auxiliary functions \(g_{n}\) and \(h_{n}\), which determine the projection \(\overline{\varphi }_{n}\) uniquely. In Sect. 4 we apply the results of Sect. 3 to determine the exact shapes of the projections of \(\varphi _{n}\) onto \(\mathcal C\). In Sect. 5 we provide analytical values of the bounds on the bias of trimeans and illustrate them with numerical values.

2 Shapes of projected functions

In terms of Bernstein polynomials
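$$\begin{aligned} B_{i,n}(u)=\binom{n}{i}u^i(1-u)^{n-i},\qquad i=0,\ldots ,n,\quad u\in [0,1], \end{aligned}$$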
we have \(f_{i:n}(u)= nB_{i-1,n-1}(u)\), and putting \(j=\left\lfloor \frac{n}{4} \right\rfloor \), we get

Using the relation for the derivative of a Bernstein polynomial
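$$\begin{aligned} B_{i,n}'(u)=n\left( B_{i-1,n-1}(u)-B_{i,n-1}(u)\right) ,\qquad i=0,\ldots ,n,\quad \text {with } B_{-1,n-1}=B_{n,n-1}\equiv 0, \end{aligned}$$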
we get for \(n=2k+1\)

and for \(n=2k\)

The sign changes of such linear combinations are studied with the aid of the variation diminishing property (VDP) of Bernstein polynomials due to Schoenberg (1959).
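The identity \(f_{i:n}=nB_{i-1,n-1}\) and the derivative relation for Bernstein polynomials can be checked numerically. The Python sketch below is our own, with hypothetical helper names; it also assembles \(\varphi \) for a weighted combination \(\sum _iw_iX_{i:n}\) of order statistics. As an example it uses the trimean of \(n=5\) observations, \((X_{2:5}+2X_{3:5}+X_{4:5})/4\), which is what Tukey’s textbook hinges give for \(n=5\); it is not claimed to match the paper’s simplified hinge indexing for other \(n\).

```python
import math


def bernstein(i, n, u):
    """B_{i,n}(u) = C(n, i) u^i (1 - u)^(n - i); taken as 0 unless 0 <= i <= n."""
    if i < 0 or i > n:
        return 0.0
    return math.comb(n, i) * u ** i * (1.0 - u) ** (n - i)


def order_stat_density(i, n, u):
    """Density f_{i:n}(u) = n B_{i-1,n-1}(u) of the i-th order statistic of n U(0,1) variables."""
    return n * bernstein(i - 1, n - 1, u)


def phi_weights(weights, n, u):
    """phi(u) = sum_i w_i f_{i:n}(u) for the L-statistic sum_i w_i X_{i:n}."""
    return sum(w * order_stat_density(i, n, u) for i, w in weights.items())


# Hypothetical example: trimean of n = 5 with Tukey's hinges, (X_{2:5} + 2 X_{3:5} + X_{4:5}) / 4
TRIMEAN_5 = {2: 0.25, 3: 0.5, 4: 0.25}

if __name__ == "__main__":
    n, u, eps = 7, 0.3, 1e-6
    for i in range(n + 1):
        numeric = (bernstein(i, n, u + eps) - bernstein(i, n, u - eps)) / (2 * eps)
        formula = n * (bernstein(i - 1, n - 1, u) - bernstein(i, n - 1, u))
        assert abs(numeric - formula) < 1e-6  # B'_{i,n} = n (B_{i-1,n-1} - B_{i,n-1})
    print(phi_weights(TRIMEAN_5, 5, 0.5))  # 1.5625
```

The value \(1.5625>1\) at \(u=\frac{1}{2}\) is in line with Lemma 2(a) below for this particular choice of weights.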

Lemma 1

(VDP) The number of zeros in \((0,1)\) of any linear combination \(\sum _{i=0}^n a_iB_{i,n}\) of Bernstein polynomials does not exceed the number of sign changes in the sequence \(a_0,a_1,\ldots ,a_n\) of its coefficients. Moreover, the signs of the combination near 0 and near 1 coincide with the signs of the first and the last nonzero elements of the sequence, respectively.
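Lemma 1 can be probed numerically. The Python sketch below (ours, not part of the paper) counts the sign changes of a coefficient sequence and the sign changes of the corresponding Bernstein combination along a grid in \((0,1)\); by VDP the second count never exceeds the first. Grid-based counting only detects zeros of odd multiplicity, so it checks the inequality rather than counting zeros exactly.

```python
import math
import random


def bernstein_combination(coeffs, u):
    """Evaluate sum_i a_i B_{i,n}(u) with n = len(coeffs) - 1."""
    n = len(coeffs) - 1
    return sum(a * math.comb(n, i) * u ** i * (1.0 - u) ** (n - i)
               for i, a in enumerate(coeffs))


def sign_changes(values, tol=0.0):
    """Number of sign changes in a sequence, skipping entries with |v| <= tol."""
    signs = [v > 0 for v in values if abs(v) > tol]
    return sum(s != t for s, t in zip(signs, signs[1:]))


def sign_changes_on_grid(coeffs, m=1000, tol=1e-12):
    """Sign changes of the combination along the grid k/m, 0 < k < m (odd-order zeros only)."""
    return sign_changes([bernstein_combination(coeffs, k / m) for k in range(1, m)], tol)


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(50):
        a = [rng.uniform(-1.0, 1.0) for _ in range(7)]
        assert sign_changes_on_grid(a) <= sign_changes(a)  # VDP
    # coefficients 1, -1, 1 give (2u - 1)^2, which touches zero without changing sign
    print(sign_changes([1, -1, 1]), sign_changes_on_grid([1, -1, 1]))  # 2 0
```

Values with magnitude below `tol` are skipped, so rounding noise at an exact zero cannot create a spurious sign change.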

By VDP each \(\varphi _{n}'\) is either positive-negative (\(+\,-\) for short) or \(+-+\,-\) or \(+-+-+\,-\). First we show that the second case is impossible. This follows from part (b) of the next lemma.

Lemma 2

For \(n\ge 3\) we have

-

(a)

\(\varphi _{n}\left( \frac{1}{2} \right) >1\),

-

(b)

\(\varphi _{n}''\left( \frac{1}{2} \right) <0\).

The proof of the lemma is given in the Appendix. Since the function \(\varphi _{n}\) is symmetric with respect to \(\frac{1}{2}\), we have \(\varphi _{n}'\left( \frac{1}{2} \right) =0\). But \(\varphi _{n}\) has a local maximum at \(\frac{1}{2}\) by Lemma 2(b), so it cannot be increasing-decreasing-increasing-decreasing. Therefore \(\varphi _{n}'\) has either exactly one zero, at \(\frac{1}{2}\), or five zeros \(\theta _1,\ldots ,\theta _5\) such that \(\theta _1<\theta _2<\theta _3=\frac{1}{2}\), \(\theta _4=1-\theta _2\) and \(\theta _5=1-\theta _1\).

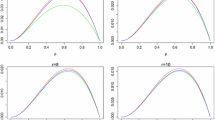

The shapes of \(\varphi _{n}\), \(n\ge 3\), are given in the next lemma.

Lemma 3

-

(a)

We have \(\varphi _{n}(0)=\varphi _{n}(1)=0\).

-

(b)

For \(3\le n\le 8\), the function \(\varphi _{n}\) is increasing-decreasing.

-

(c)

For \(n\ge 9\), the function \(\varphi _{n}\) is either increasing-decreasing with maximum at \(\frac{1}{2}\) or it has three local maxima (one of them at \(x=\frac{1}{2}\) and two remaining at points symmetric with respect to \(1/2\)) and two local minima.

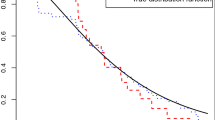

Now, if \(\varphi _{n}\) is increasing-decreasing, then it is well known that the projection \(\overline{\varphi }_{n}\) is of the form
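$$\begin{aligned} \overline{\varphi }_{n}(u)= {\left\{ \begin{array}{ll} \varphi _{n}(u), &{} \text {for } 0\le u\le \alpha ,\\ \varphi _{n}(\alpha ), &{} \text {for } \alpha \le u\le 1, \end{array}\right. } \end{aligned}$$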
where \(\alpha \) is the only solution to the equation
$$\begin{aligned} {\varPhi }_{n}(\alpha )+(1-\alpha )\varphi _{n}(\alpha )=1. \end{aligned}$$(5)
However, if \(\varphi _{n}'\) has five zeros, then the derivation of \(\overline{\varphi }_{n}\) is much more complicated, and it will be done in the next section with the aid of some auxiliary functions.
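For an increasing-decreasing \(\varphi _{n}\) the only unknown in the projection is \(\alpha \). Writing its defining equation in the standard Moriguti form \({\varPhi }_{n}(\alpha )+(1-\alpha )\varphi _{n}(\alpha )=1\) (the flat level equals the mean of \(\varphi _{n}\) over \([\alpha ,1]\)), the left-hand side is increasing where \(\varphi _{n}\) increases, so plain bisection finds \(\alpha \). The Python sketch below is our own illustration; it uses the density \(6u(1-u)\) of the median of three uniform observations as a stand-in for \(\varphi _{n}\), for which \(\alpha =\frac{1}{4}\) and \(\varphi (\alpha )=\frac{9}{8}\).

```python
def phi_median3(u):
    """Stand-in for phi_n: density 6u(1-u) of the median of three U(0,1) variables."""
    return 6.0 * u * (1.0 - u)


def Phi_median3(u):
    """Antiderivative of phi_median3 with Phi(0) = 0 and Phi(1) = 1."""
    return 3.0 * u ** 2 - 2.0 * u ** 3


def moriguti_level(phi, Phi, mode, tol=1e-12):
    """Return (alpha, phi(alpha)) with alpha in (0, mode) solving Phi(a) + (1 - a) phi(a) = 1.

    Assumes phi(0) < 1, phi increasing on [0, mode] and decreasing on [mode, 1]."""
    def g(a):
        return Phi(a) + (1.0 - a) * phi(a) - 1.0  # increasing on (0, mode): g' = (1 - a) phi'

    lo, hi = 0.0, mode  # g(lo) < 0 < g(hi) under the assumptions above
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    alpha = 0.5 * (lo + hi)
    return alpha, phi(alpha)


def projected(phi, alpha):
    """Projection of the form phi(u) on [0, alpha] and phi(alpha) on [alpha, 1]."""
    level = phi(alpha)
    return lambda u: phi(u) if u <= alpha else level


if __name__ == "__main__":
    alpha, level = moriguti_level(phi_median3, Phi_median3, 0.5)
    print(alpha, level)  # approximately 0.25 and 1.125
```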

3 Auxiliary functions

To determine \(\overline{\varphi }_{n}\) we need to find the greatest convex minorant \(\overline{\varPhi }_{n}\) of \({\varPhi }_{n}\). It is slightly easier to determine the greatest convex minorant \(\overline{\varPsi }_{n}\) of the function \({\varPsi }_{n}\) defined as
$$\begin{aligned} {\varPsi }_{n}(x)={\varPhi }_{n}(x)-x,\qquad 0\le x\le 1. \end{aligned}$$
Then \({\varPsi }_{n}(0)={\varPsi }_{n}(1)=0\), and \(\overline{\varphi }_{n}=\overline{\varPsi }_{n}'+1\).

Next, we define and analyze two auxiliary functions \(g_{n}\) and \(h_{n}\) introduced by Bieniek (2014b) for quasimidranges. Let \(\ell _\alpha \) denote the straight line which is tangent to \({\varPsi }_{n}\) at the point \(\alpha \in [0,1]\), i.e.
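$$\begin{aligned} \ell _\alpha (x)={\varPsi }_{n}(\alpha )+\left( \varphi _{n}(\alpha )-1\right) (x-\alpha ),\qquad x\in [0,1]. \end{aligned}$$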
Let \(g_{n}(\alpha )\) denote the value of \(\ell _\alpha \) at \(x=1\), i.e.
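$$\begin{aligned} g_{n}(\alpha )=\ell _\alpha (1)={\varPhi }_{n}(\alpha )+(1-\alpha )\varphi _{n}(\alpha )-1, \end{aligned}$$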
and \(h_{n}(\alpha )\) denote the slope of the straight line passing through the points \((\alpha ,{\varPhi }_{n}(\alpha ))\) and \((1,1)\), i.e.
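$$\begin{aligned} h_{n}(\alpha )=\frac{1-{\varPhi }_{n}(\alpha )}{1-\alpha },\qquad 0\le \alpha <1. \end{aligned}$$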
Let us study the properties of the function \(g_{n}\). We have \(g_{n}(0)=\varphi _{n}(0)-1=-1\) and \(g_{n}(1)=0\). Next, easy differentiation leads to
$$\begin{aligned} g_{n}'(\alpha )=(1-\alpha )\varphi _{n}'(\alpha ), \end{aligned}$$
and so \(g_{n}\) has the same monotonicity properties as \(\varphi _{n}\). Now we find the number of zeros of \(g_{n}\) in \((0,1)\). Note that \(g_{n}(\alpha )=0\) if and only if \(\alpha \) satisfies (5).
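The auxiliary functions are straightforward to evaluate once \(\varphi \) and \(\varPhi \) are available. The Python sketch below is our own; it writes \(g\) and \(h\) in the explicit forms that follow from their verbal definitions, \(g(\alpha )=\varPhi (\alpha )+(1-\alpha )\varphi (\alpha )-1\) (the tangent to \(\varPsi =\varPhi -x\) at \(\alpha \), evaluated at \(x=1\)) and \(h(\alpha )=(1-\varPhi (\alpha ))/(1-\alpha )\), and checks by finite differences, for the stand-in \(\varphi (u)=6u(1-u)\), that \(g'=(1-\alpha )\varphi '\) and \(h'=-g/(1-\alpha )^2\), and that \(g(\alpha )=0\) forces \(h(\alpha )=\varphi (\alpha )\).

```python
def phi(u):
    """Stand-in for phi_n: density 6u(1-u) of the median of three U(0,1) variables."""
    return 6.0 * u * (1.0 - u)


def Phi(u):
    return 3.0 * u ** 2 - 2.0 * u ** 3  # antiderivative, Phi(0) = 0, Phi(1) = 1


def dphi(u):
    return 6.0 - 12.0 * u


def g(a):
    """Value at x = 1 of the tangent at a to Psi(x) = Phi(x) - x."""
    return Phi(a) + (1.0 - a) * phi(a) - 1.0


def h(a):
    """Slope of the chord joining (a, Phi(a)) and (1, 1); defined for 0 <= a < 1."""
    return (1.0 - Phi(a)) / (1.0 - a)


def central_diff(f, a, eps=1e-6):
    return (f(a + eps) - f(a - eps)) / (2.0 * eps)


if __name__ == "__main__":
    for a in (0.1, 0.25, 0.4, 0.7):
        assert abs(central_diff(g, a) - (1.0 - a) * dphi(a)) < 1e-6   # g' = (1 - a) phi'
        assert abs(central_diff(h, a) + g(a) / (1.0 - a) ** 2) < 1e-6  # h' = -g / (1 - a)^2
    print(g(0.0), g(1.0), h(0.0), h(0.5))  # -1.0 0.0 1.0 1.0
    print(g(0.25), h(0.25), phi(0.25))      # 0.0 1.125 1.125
```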

Theorem 1

The function \(g_{n}\) has either one or three or five zeros in \((0,1)\).

Proof

We consider the case of odd \(n=2k+1\), \(k\ge 3\); the case of even \(n\) can be treated analogously. Let \(j=\left\lfloor \frac{n}{4} \right\rfloor \). We start with the representation of the distribution function \(F_{k:n}\) of the \(k\)th order statistic from the uniform distribution on \([0,1]\)

Therefore

Next, using the relation

we derive

Finally, by the binomial theorem we have \(\sum _{i=0}^{n}B_{i,n}(u)=1\) for \(0\le u\le 1\), and combining this with Eqs. (6) and (8), after some algebra we get

The conclusion of the theorem follows by VDP. \(\square \)

Finally, we find the locations of the zeros of \(g_{n}\) in \([0,1]\). Note that if \(\varphi _{n}'\) has five zeros \(\theta _1,\ldots ,\theta _5\), then necessarily \(g_{n}(\theta _5)>0\), because \(g_{n}\) is decreasing on \((\theta _5,1)\) and \(g_{n}(1)=0\). Hence, due to its monotonicity properties, \(g_{n}\) may have at most one zero in each of the intervals \((0,\theta _1)\), \((\theta _1,\theta _2)\), \(\ldots \), \((\theta _4,\theta _5)\), and no zeros in \((\theta _5,1)\).

Lemma 4

-

(a)

For all \(n\ge 3\) we have \(g_{n}\left( \frac{1}{2} \right) >0\);

-

(b)

If \(\varphi _{n}'\) has five zeros, then \(g_{n}(\theta _4)>g_{n}(\theta _2)\).

-

(c)

If \(g_{n}\) has a root \(\alpha _2\in (\theta _1,\theta _2)\), then it must have roots \(\alpha _1\in (0,\theta _1)\) and \(\alpha _3\in (\theta _2,\theta _3)\).

-

(d)

If \(g_{n}\) has a root in \((\theta _3,\theta _5)\), then it has exactly two roots in this interval, and one root in \((\theta _2,\theta _3)\).

Proof

-

(a)

We have \(g_{n}(\frac{1}{2})=\frac{1}{2}\left( \varphi _{n}\left( \frac{1}{2} \right) -1\right) >0\) by Lemma 2(a).

-

(b)

Since \(\varphi _{n}\) is symmetric with respect to \(\frac{1}{2}\) then \(\varphi _{n}(\theta _2)=\varphi _{n}(\theta _4)\) and \(\varphi _{n}(u)>\varphi _{n}(\theta _2)\) for \(u\in (\theta _2,\theta _4)\). Therefore

$$\begin{aligned} {\varPhi }_{n}(\theta _4)-{\varPhi }_{n}(\theta _2)=\int _{\theta _2}^{\theta _4}\varphi _{n}(u)\,\mathrm du> \varphi _{n}(\theta _2)(\theta _4-\theta _2) \end{aligned}$$

and

$$\begin{aligned} g_{n}(\theta _4)&= {\varPhi }_{n}(\theta _2)+({\varPhi }_{n}(\theta _4)-{\varPhi }_{n}(\theta _2))+(1-\theta _4)\varphi _{n}(\theta _4)-1\\&>{\varPhi }_{n}(\theta _2)+\varphi _{n}(\theta _2)(\theta _4-\theta _2)+(1-\theta _4)\varphi _{n}(\theta _2)-1=g_{n}(\theta _2). \end{aligned}$$

-

(c)

If \(g_{n}(\alpha _2)=0\) for some \(\alpha _2\in (\theta _1,\theta _2)\), then \(g_{n}(\theta _1)>0\) and \(g_{n}(\theta _2)<0\). The conclusion follows from \(g_{n}(0)<0\) and \(g_{n}(\theta _3)>0\) (see part (a) of this lemma).

-

(d)

Since \(g_{n}(\theta _3)>0\) and \(g_{n}(\theta _5)>0\), the function \(g_{n}\) has an even number of zeros in \((\theta _3,\theta _5)\), so if it has a zero there, it has at least two. Since \(g_{n}\) is decreasing-increasing on \((\theta _3,\theta _5)\), it may have at most two zeros in this interval. If \(g_{n}\) has a root in \((\theta _3,\theta _5)\), then \(g_{n}(\theta _4)<0\), so by part (b) of the lemma we have \(g_{n}(\theta _2)<0\), and \(g_{n}\) has another root in \((\theta _2,\theta _3)\).

For simplicity denote \(\theta _0=0\). By Lemma 4 the locations of zeros of \(g_{n}\) are as follows.

Corollary 1

-

(a)

If \(g_{n}\) has exactly one zero \(\alpha _1\), then \(\alpha _1\in (0,\theta _1)\cup (\theta _2,\theta _3)\).

-

(b)

If \(g_{n}\) has exactly three zeros \(\alpha _1,\alpha _2,\alpha _3\), then either \(\alpha _i\in (\theta _{i-1},\theta _i)\) for \(i=1,2,3\), or \(\alpha _1\in (\theta _2,\theta _3)\), \(\alpha _2\in (\theta _3,\theta _4)\) and \(\alpha _3\in (\theta _4,\theta _5)\).

-

(c)

If \(g_{n}\) has five zeros \(\alpha _1,\ldots ,\alpha _5\), then \(\alpha _i\in (\theta _{i-1},\theta _i)\) for \(i=1,\ldots ,5\).

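Now we study some properties of \(h_{n}\). First of all, \(h_{n}(0)=1\) and \(h_{n}(1)=0\), where \(h_{n}(1)\) is understood as the limit \(\lim _{\alpha \rightarrow 1}h_{n}(\alpha )=\varphi _{n}(1)=0\). Moreover,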
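$$\begin{aligned} h_{n}'(\alpha )=-\frac{g_{n}(\alpha )}{(1-\alpha )^2},\qquad 0<\alpha <1, \end{aligned}$$(10)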
so the monotonicity of \(h_{n}\) is determined by the signs of \(g_{n}\). Before determining the shapes of \(h_{n}\), we study the number of solutions to \(h_{n}(u)=1\) in \((0,1)\). Clearly \(h_{n}\left( \frac{1}{2} \right) =1\), because \({\varPhi }_{n}\left( \frac{1}{2} \right) =\frac{1}{2}\) by the symmetry of \(\varphi _{n}\), and in the next lemma we prove that this is the only solution.

Lemma 5

The equation \(h_{n}(u)=1\) has the unique root \(u=\frac{1}{2}\) in \((0,1)\). Moreover, \(h_{n}(u)>1\) if and only if \(u\in (0,\frac{1}{2})\).

Proof

We again consider only the case of odd \(n=2k+1\), and the case of \(n\) even is left for the reader. Using (6) and (7) after some algebra we obtain

This time we write \(1=\sum _{i=0}^{n-1}B_{i,n-1}(u)\) which combined with the last equality yields

where

We have \(a_{j+1}>\cdots >a_k\) and \(a_{n-j-1}<\cdots <a_{k+1}\), and since \(k=\left\lfloor n/2 \right\rfloor \), we easily prove that \(a_k>0\) and \(a_{k+1}<0\). Therefore \(a_i>0\) for \(0\le i\le k\), and \(a_i<0\) for \(k+1\le i\le n-1\). By VDP the function \(h_{n}(u)-1\) has exactly one zero in \((0,1)\).

To prove the second statement it suffices to note that \(g_{n}\) is negative near 0, so \(h_{n}\) is increasing near 0 by (10). Since \(h_{n}(0)=1\), we have \(h_{n}(u)>1\) in a right neighbourhood of 0, and since \(\frac{1}{2}\) is the only root of \(h_{n}-1\) in \((0,1)\), this holds on the whole interval \((0,\frac{1}{2})\). Moreover, \(g_{n}\left( \frac{1}{2} \right) >0\) by Lemma 4(a), so \(g_{n}\) is positive in a neighbourhood of \(\frac{1}{2}\), and \(h_{n}\) is decreasing there. This implies that \(h_{n}(u)<1\) for \(u\in (\frac{1}{2},1)\). \(\square \)

Corollary 2

The function \({\varPsi }_{n}\) has exactly one root at \(\frac{1}{2}\) in \((0,1)\), and it is negative in \((0,\frac{1}{2})\), and positive in \((\frac{1}{2},1)\).

Proof

It suffices to note that \({\varPsi }_{n}(u)=(1-u)\left( 1-h_{n}(u)\right) \), so \(h_{n}(u)>1\) if and only if \({\varPsi }_{n}(u)<0\); that is, \(h_{n}-1\) and \({\varPsi }_{n}\) have opposite signs, and the conclusion follows from Lemma 5. \(\square \)
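The sign relation behind Corollary 2 rests on the identity \(\varPsi (u)=(1-u)(1-h(u))\), which follows from \(\varPsi (u)=\varPhi (u)-u\) and \(h(u)=(1-\varPhi (u))/(1-u)\). The Python check below is our own; for the stand-in \(\varphi (u)=6u(1-u)\) one has \(\varPsi (u)=u(1-u)(2u-1)\), which is negative on \((0,\frac{1}{2})\), exactly where \(h>1\).

```python
def Phi(u):
    """Stand-in for Phi_n: antiderivative of 6u(1-u), the density of the median of three U(0,1)."""
    return 3.0 * u ** 2 - 2.0 * u ** 3


def Psi(u):
    return Phi(u) - u


def h(u):
    return (1.0 - Phi(u)) / (1.0 - u)


if __name__ == "__main__":
    for k in range(1, 1000):
        u = k / 1000
        assert abs(Psi(u) - (1.0 - u) * (1.0 - h(u))) < 1e-12  # Psi = (1 - u)(1 - h)
        if k != 500:
            assert (h(u) > 1.0) == (Psi(u) < 0.0)  # h - 1 and Psi have opposite signs
    print(Psi(0.25), Psi(0.75))  # -0.09375 0.09375
```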

Now we can determine extrema of \(h_{n}\).

Theorem 2

-

(a)

If \(g_{n}\) has exactly one zero \(\alpha _1\), then \(h_{n}\) has global maximum at \(\alpha _1\).

-

(b)

If \(g_{n}\) has exactly three zeros \(\alpha _1,\alpha _2,\alpha _3\), then \(h_{n}\) has local maxima at \(\alpha _1\) and \(\alpha _3\) with global maximum inside \((0,\frac{1}{2})\).

-

(c)

If \(g_{n}\) has five zeros \(\alpha _1,\ldots ,\alpha _5\), then \(h_{n}\) has local maxima at \(\alpha _1,\alpha _3,\alpha _5\) with global maximum at \(\alpha _1\) or \(\alpha _3\).

Proof

If \(g_{n}\) has exactly one zero, then \(g_{n}\) is negative on \((0,\alpha _1)\), and positive otherwise, so by (10), the function \(h_{n}\) is increasing on \((0,\alpha _1)\) and decreasing on \((\alpha _1,1)\). This proves part (a).

Similar analysis proves (b) and (c) except for the location of global maximum. But it suffices to note that by Lemma 5 we have \(h_{n}(u)>1>h_{n}(v)\) for \(u\in (0,\frac{1}{2})\) and \(v\in (\frac{1}{2},1)\). \(\square \)

We close this section with some properties of \(g_{n}\) and \(h_{n}\) useful in the next section.

Lemma 6

-

(a)

If \(g_{n}(\alpha )=0\), then \(h_{n}(\alpha )=\varphi _{n}(\alpha )\).

-

(b)

If \(g_{n}(\alpha )=0\) and \(h_{n}(\alpha )\ge h_{n}(u)\) for \(u\in (\alpha ,1)\), then \({\varPsi }_{n}(u)\ge \ell _\alpha (u)\) for \(u\in (\alpha ,1)\).

-

(c)

If \(\theta \) is any root of \(\varphi _{n}'\) with \(g_{n}(\theta )<0\), and \(\alpha \) is the smallest zero of \(g_{n}\) in \((\theta ,1)\), then \(\varphi _{n}(\theta )<\varphi _{n}(\alpha )\).

Proof

Part (a) is just the definition of \(h_{n}\) and \(g_{n}\).

To prove part (b) recall that if \(g_{n}(\alpha )=0\), then the tangent to \({\varPsi }_{n}\) at \(\alpha \) passes through \((1,0)\), so \(\ell _\alpha \) can be written as
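$$\begin{aligned} \ell _\alpha (x)=(1-x)\left( 1-\varphi _{n}(\alpha )\right) =(1-x)\left( 1-h_{n}(\alpha )\right) . \end{aligned}$$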
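Since \(h_{n}(\alpha )\ge h_{n}(u)\) for \(u\in [\alpha ,1]\), we have
$$\begin{aligned} {\varPsi }_{n}(u)=(1-u)\left( 1-h_{n}(u)\right) \ge (1-u)\left( 1-h_{n}(\alpha )\right) =\ell _\alpha (u),\qquad u\in [\alpha ,1]. \end{aligned}$$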
To prove (c) it suffices to note that
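$$\begin{aligned} \varphi _{n}(\theta )<h_{n}(\theta )<h_{n}(\alpha )=\varphi _{n}(\alpha ). \end{aligned}$$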
The first inequality follows from \(g_{n}(\theta )<0\), the second follows from the fact that \(h_{n}\) is increasing on \((\theta ,\alpha )\), and the last equality follows from part (a) of the lemma. \(\square \)

4 Shapes of projections

Now we can determine the projections of \(\varphi _{n}\), \(n\ge 3\). In the proof of the main result of this section we need the following lemma. It was implicitly stated and proved in the proof of Theorem 4.1 of Bieniek (2014b); here, for the convenience of the reader, we state and prove it in a slightly more abstract setting.

Consider any continuous function \(\phi :\left[ 0,\frac{1}{2} \right] \rightarrow [0,\infty )\) with the following properties: \(\phi (0)=0\), and there exist \(\theta _1<\theta _2\) in \(\left( 0,\frac{1}{2} \right) \) such that \(\phi \) is strictly increasing on \((0,\theta _1)\) and \(\left( \theta _2,\frac{1}{2}\right) \), and strictly decreasing on \((\theta _1,\theta _2)\), with \(\phi (\theta _1)<\phi \left( \frac{1}{2} \right) \) and \(\phi (\theta _2)>0\). Let
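$$\begin{aligned} \varPhi (x)=\int _0^x\phi (u)\,\mathrm du,\qquad 0\le x\le \tfrac{1}{2}, \end{aligned}$$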
denote the antiderivative of \(\phi \).

Let \(\beta _0\) denote the unique point of \((0,\theta _1)\) such that \(\phi (\beta _0)=\phi (\theta _2)\), and fix any \(\eta \in (\beta _0,\theta _1]\). Let \(\gamma _0\) be the unique point of \(\left( \theta _2,\frac{1}{2} \right) \) such that \(\phi (\gamma _0)=\phi (\eta )\). Then for every \(\beta \in [\beta _0,\eta ]\) there exists the unique \(\gamma =\gamma (\beta )\in [\theta _2,\gamma _0]\) such that \(\phi (\gamma )=\phi (\beta )\). Therefore the following function
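$$\begin{aligned} k(\beta )=\phi (\beta )\left( \gamma (\beta )-\beta \right) -\left( \varPhi (\gamma (\beta ))-\varPhi (\beta )\right) ,\qquad \beta \in [\beta _0,\eta ], \end{aligned}$$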
is well–defined.

Lemma 7

If \(k(\eta )>0\), then the function \(k\) has exactly one zero in \((\beta _0,\eta )\).

Note that \(k(\beta )=0\) is equivalent to the system of equations
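$$\begin{aligned} \frac{\varPhi (\gamma )-\varPhi (\beta )}{\gamma -\beta }=\phi (\beta )=\phi (\gamma ),\qquad \gamma =\gamma (\beta ). \end{aligned}$$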
Proof

Since the function \(k\) is continuous, it suffices to prove that \(k\) is strictly increasing with \(k(\beta _0)<0\).

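Firstly, since \(\phi (u)>\phi (\beta _0)\) for \(u\in (\beta _0,\theta _2)\), we have
$$\begin{aligned} \varPhi (\theta _2)-\varPhi (\beta _0)=\int _{\beta _0}^{\theta _2}\phi (u)\,\mathrm du>\phi (\beta _0)(\theta _2-\beta _0), \end{aligned}$$
and therefore \(k(\beta _0)<0\), because \(\gamma (\beta _0)=\theta _2\).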

Secondly, for given \(\beta _1<\beta _2\) and \(\gamma _1<\gamma _2\), where \(\phi (\beta _i)=\phi (\gamma _i)\), \(i=1,2\), there exist unique \(\delta _1,\delta _2\in (\theta _1,\theta _2)\) such that \(\phi (\delta _i)=\phi (\beta _i)\), \(i=1,2\). Clearly \(\delta _2<\delta _1\). On each of the intervals \((\beta _1,\beta _2)\) and \((\delta _2,\delta _1)\) we have \(\phi (u)>\phi (\beta _1)\), so

since \(\phi (\beta _2)>\phi (\beta _1)\). Therefore

Similarly,

Summing up both inequalities side by side we conclude that \(k(\beta _1)<k(\beta _2)\), so \(k\) is strictly increasing. \(\square \)

The main result of this section is the following theorem.

Theorem 3

-

(a)

If one of the following conditions holds

-

\(g_{n}\) has exactly one root \(\alpha _1\in (0,\theta _1)\),

-

\(g_{n}\) has at least three roots \(\alpha _1,\alpha _2,\alpha _3\in (0,\frac{1}{2})\) with \(h_{n}(\alpha _1)\ge h_{n}(\alpha _3)\),

then

$$\begin{aligned} \overline{\varphi }_{n}(u)= {\left\{ \begin{array}{ll} \varphi _{n}(u), &{} \text {for } 0\le u\le \alpha _1,\\ \varphi _{n}(\alpha _1), &{} \text {for } \alpha _1\le u\le 1. \end{array}\right. } \end{aligned}$$(13)

-

(b)

Otherwise, i.e. if one of the following conditions holds

-

\(g_{n}\) has exactly one root \(\alpha _3\in (\theta _2,\theta _3)\),

-

\(g_{n}\) has exactly three roots \(\alpha _3,\alpha _4,\alpha _5\in (\theta _2,1)\),

-

\(g_{n}\) has at least three roots \(\alpha _1,\alpha _2,\alpha _3\in (0,\frac{1}{2})\) with \(h_{n}(\alpha _1)<h_{n}(\alpha _3)\),

then

$$\begin{aligned} \overline{\varphi }_{n}(u)= {\left\{ \begin{array}{ll} \varphi _{n}(u), &{} \text {for } 0\le u\le \beta ,\\ \varphi _{n}(\beta ), &{} \text {for } \beta \le u\le \gamma ,\\ \varphi _{n}(u), &{} \text {for } \gamma \le u\le \alpha _3,\\ \varphi _{n}(\alpha _3), &{} \text {for } \alpha _3\le u\le 1,\\ \end{array}\right. } \end{aligned}$$(14)

where \((\beta ,\gamma )\), with \(\beta \in (0,\theta _1)\) and \(\gamma \in (\theta _2,\alpha _3)\), is the unique solution to the system of equations

$$\begin{aligned} \frac{{\varPhi }_{n}(\gamma )-{\varPhi }_{n}(\beta )}{\gamma -\beta }=\varphi _{n}(\beta )=\varphi _{n}(\gamma ). \end{aligned}$$(15)

Proof

First we consider the case when \(g_{n}\) has a single root in \((0,1)\).

If \(g_{n}(\alpha )=0\) for unique \(\alpha \in (0,\theta _1)\), then \(\alpha \) is a point of global maximum of \(h_{n}\). Then \({\varPsi }_{n}\) is convex on \((0,\alpha )\), and by Lemma 6(b) we have \({\varPsi }_{n}(u)\ge \ell _\alpha (u)\) for \(u\in [\alpha ,1]\). Therefore
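$$\begin{aligned} \overline{\varPsi }_{n}(x)= {\left\{ \begin{array}{ll} {\varPsi }_{n}(x), &{} \text {for } 0\le x\le \alpha ,\\ \ell _\alpha (x), &{} \text {for } \alpha \le x\le 1 \end{array}\right. } \end{aligned}$$(16)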
is the greatest convex minorant of \({\varPsi }_{n}\), and \(\overline{\varphi }_{n}\) is of the form (13).

If \(g_{n}(\alpha )=0\) for a unique \(\alpha \in (\theta _2,\theta _3)\), then also \({\varPsi }_{n}(u)\ge \ell _\alpha (u)\) for \(u\ge \alpha \), but \({\varPsi }_{n}\) is not convex on \((0,\alpha )\). However, then \(g_{n}(\theta _1)<0\), so by Lemma 6(c) we have \(\varphi _{n}(\theta _1)<\varphi _{n}(\alpha )\). Now apply Lemma 7 with \(\eta =\theta _1\) and \(\phi =\varphi _{n}\). We have \(\varphi _{n}(u)<\varphi _{n}(\theta _1)\) for \(u\in (\theta _1,\gamma _0)\), so computations similar to (12) imply that \(k(\theta _1)>0\). Therefore there exists a unique pair \((\beta ,\gamma )\) which satisfies the system (15). Now the function
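$$\begin{aligned} \overline{\varPsi }_{n}(x)= {\left\{ \begin{array}{ll} {\varPsi }_{n}(x), &{} \text {for } 0\le x\le \beta ,\\ {\varPsi }_{n}(\beta )+\left( \varphi _{n}(\beta )-1\right) (x-\beta ), &{} \text {for } \beta \le x\le \gamma ,\\ {\varPsi }_{n}(x), &{} \text {for } \gamma \le x\le \alpha ,\\ \ell _\alpha (x), &{} \text {for } \alpha \le x\le 1 \end{array}\right. } \end{aligned}$$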
is the greatest convex minorant of \({\varPsi }_{n}\), and \(\overline{\varphi }_{n}\) is given by (14).

Next, we consider the case of three zeros of \(g_{n}\), exactly one of which, \(\alpha \), lies in \((0,\frac{1}{2})\), while the other two, \(\alpha _4,\alpha _5\), lie in \((\frac{1}{2},1)\). Then \(h_{n}\) has two local maxima, at \(\alpha \) and \(\alpha _5\), and the global maximum is attained at \(\alpha \). By Corollary 1(b) we have \(\alpha \in (\theta _2,\theta _3)\), so again \(g_{n}(\theta _1)<0\), and it suffices to repeat the reasoning for the case of exactly one zero of \(g_{n}\), lying in \((\theta _2,\theta _3)\).

Finally, we turn to the case of three zeros of \(g_{n}\) inside \((0,\frac{1}{2})\) (and possibly two zeros in \((\frac{1}{2},1)\)). If \(h_{n}(\alpha _1)\ge h_{n}(\alpha _3)\), then \(\alpha _1\) is the point of global maximum of \(h_{n}\), and again the greatest convex minorant of \({\varPsi }_{n}\) is given by (16), and the projection of \(\varphi _{n}\) is of the form (13).

However, if \(h_{n}(\alpha _1)<h_{n}(\alpha _3)\), then \(\alpha _3\) is the point of global maximum of \(h_{n}\), and a more thorough analysis is needed. By Theorem 2(b) and (c) the function \(h_{n}\) has a local minimum at \(\alpha _2\), so \(h_{n}(\alpha _1)>h_{n}(\alpha _2)\). By Lemma 6(a) the last two conditions are equivalent to \(\varphi _{n}(\alpha _2)<\varphi _{n}(\alpha _1)<\varphi _{n}(\alpha _3)\). Moreover, \(\varphi _{n}(\alpha _2)>\varphi _{n}(\theta _2)\), because \(\alpha _2\in (\theta _1,\theta _2)\) and \(\varphi _{n}\) is decreasing there, so \(\varphi _{n}(\alpha _1)>\varphi _{n}(\theta _2)\) as well. Now apply Lemma 7 with \(\eta =\alpha _1\) and \(\phi =\varphi _{n}\). Recall that \(\alpha _1\) satisfies the equation (5), so
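$$\begin{aligned} g_{n}(\gamma _0)=g_{n}(\gamma _0)-g_{n}(\alpha _1)={\varPhi }_{n}(\gamma _0)-{\varPhi }_{n}(\alpha _1)-\varphi _{n}(\alpha _1)(\gamma _0-\alpha _1), \end{aligned}$$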
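and therefore \(g_{n}(\gamma _0)=-k(\alpha _1)\). But \(\gamma _0\in (\alpha _2,\alpha _3)\), since \(\gamma _0>\theta _2>\alpha _2\) and \(\varphi _{n}(\gamma _0)=\varphi _{n}(\alpha _1)<\varphi _{n}(\alpha _3)\) with \(\varphi _{n}\) increasing on \((\theta _2,\theta _3)\). Hence \(g_{n}(\gamma _0)<0\), so \(k(\alpha _1)>0\), and an application of Lemma 7 completes the proof of the theorem. \(\square \)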

5 Analytical and numerical values of bounds

Once the projections of \(\varphi _{n}\) onto \(\mathcal C\) are found, the determination of values of the bounds
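$$\begin{aligned} B_n=\left\| \overline{\varphi }_{n}-1\right\| _2=\left( \int _0^1\left( \overline{\varphi }_{n}(u)-1\right) ^2\mathrm du\right) ^{1/2}, \end{aligned}$$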
as well as the conditions for their attainability, is easy due to (1) and (2). Therefore the proof of the next result is omitted.
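The omitted computation can be sketched as follows; this is our own derivation, assuming that the bound equals \(B_n=\Vert \overline{\varphi }_{n}-1\Vert _2\) as given by the projection method. Take the shape (14) with \(\alpha =\alpha _3\), and use (15) together with the relation \((1-\alpha )\varphi _{n}(\alpha )=1-{\varPhi }_{n}(\alpha )\) that characterizes the point where the projection becomes constant. Then the projection has the same integral as \(\varphi _{n}\), which gives a closed form for the squared bound; the shape (13) is the special case \(\beta =\gamma \).

$$\begin{aligned} \int _0^1\overline{\varphi }_{n}(u)\,\mathrm du&={\varPhi }_{n}(\beta )+(\gamma -\beta )\varphi _{n}(\beta )+{\varPhi }_{n}(\alpha )-{\varPhi }_{n}(\gamma )+(1-\alpha )\varphi _{n}(\alpha )\\ &={\varPhi }_{n}(\beta )+\left( {\varPhi }_{n}(\gamma )-{\varPhi }_{n}(\beta )\right) +{\varPhi }_{n}(\alpha )-{\varPhi }_{n}(\gamma )+1-{\varPhi }_{n}(\alpha )=1,\\ B_n^2&=\int _0^1\overline{\varphi }_{n}^2(u)\,\mathrm du-2\int _0^1\overline{\varphi }_{n}(u)\,\mathrm du+1=\int _0^1\overline{\varphi }_{n}^2(u)\,\mathrm du-1\\ &=\int _0^\beta \varphi _{n}^2(u)\,\mathrm du+(\gamma -\beta )\varphi _{n}^2(\beta )+\int _\gamma ^\alpha \varphi _{n}^2(u)\,\mathrm du+(1-\alpha )\varphi _{n}^2(\alpha )-1. \end{aligned}$$

As a check on a function that is not a trimean, the density \(6u(1-u)\) of the median of three uniform observations gives \(\alpha =\frac{1}{4}\), \(\varphi (\alpha )=\frac{9}{8}\) and \(B^2=\frac{159}{1280}+\frac{3}{4}\cdot \frac{81}{64}-1=\frac{47}{640}=0.0734375\).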

Theorem 4

If any of the conditions of Theorem 3(a) holds, then

where \(\alpha \) is the unique zero of \(g_{n}\) in \((0,\theta _1)\). Otherwise,

where \(\alpha \) is the unique zero of \(g_{n}\) in \((\theta _2,\theta _3)\), and \((\beta ,\gamma )\) is the unique solution to (15).

In both cases the equality is attained for the distribution function \(F\) given by

Remark 1

The inverse \(\varphi _{n}^{-1}\) of \(\varphi _{n}\) should be understood as the inverse of the function \(\varphi _{n}\) restricted to the interior of the set where \(\overline{\varphi }_{n}=\varphi _{n}\). Note that in the second case the distribution function \(F\) attaining the bound has a jump of size \(\gamma -\beta \) at \(x=\frac{\varphi _{n}(\gamma )-1}{B_n}\).

Remark 2

The results of Theorem 4 can be generalized to provide bounds expressed in scale units of \(p\)th central absolute moment with arbitrary \(p\in [1,\infty ]\) instead of \(p=2\) only.

We conclude the paper with numerical values of the bounds of Theorem 4, which are presented in Table 1. Note that by (9) and (11) the functions \(g_{n}\) and \(h_{n}\) are polynomials of degree at most \(n\), so the numerical verification of the conditions of Theorem 3 is straightforward. Quite surprisingly, \(B_n\), \(n\ge 3\), is not a monotone sequence, but it can be observed that each of the subsequences \(B_{4k}\), \(B_{4k+1}\), \(B_{4k+2}\) and \(B_{4k+3}\), \(k\ge 1\), is strictly increasing.
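Such computations are easy to script. The Python sketch below is our own illustration of the first case of Theorem 4 only. It assumes that \(\varphi \) is increasing-decreasing with maximum at \(\frac{1}{2}\), finds \(\alpha \) by bisection, and evaluates the bound both directly as \(\Vert \overline{\varphi }-1\Vert _2\) and through the closed form \(\int _0^\alpha \varphi ^2+(1-\alpha )\varphi ^2(\alpha )-1\), our own simplification, which is valid because the projection preserves the integral. As an example it uses the trimean of \(n=5\) observations with Tukey’s textbook hinges, \((X_{2:5}+2X_{3:5}+X_{4:5})/4\), whose \(\varphi \) equals \(5v(1+v)\) with \(v=u(1-u)\) and is therefore increasing-decreasing. It is not meant to reproduce Table 1, which may rely on a different indexing of the hinges.

```python
import math


def bernstein(i, n, u):
    """B_{i,n}(u) = C(n, i) u^i (1 - u)^(n - i)."""
    return math.comb(n, i) * u ** i * (1.0 - u) ** (n - i)


def make_phi(weights, n):
    """phi = sum_i w_i f_{i:n} and Phi = sum_i w_i F_{i:n} for U(0,1) order statistics,
    using f_{i:n} = n B_{i-1,n-1} and F_{i:n} = sum_{m=i}^{n} B_{m,n}."""
    def phi(u):
        return sum(w * n * bernstein(i - 1, n - 1, u) for i, w in weights.items())

    def Phi(u):
        return sum(w * sum(bernstein(m, n, u) for m in range(i, n + 1))
                   for i, w in weights.items())

    return phi, Phi


def solve_alpha(phi, Phi, mode, tol=1e-13):
    """Bisection for Phi(a) + (1 - a) phi(a) = 1 on (0, mode); assumes phi increasing there."""
    lo, hi = 0.0, mode
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if Phi(mid) + (1.0 - mid) * phi(mid) < 1.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def midpoint(f, a, b, m=20000):
    """Composite midpoint rule for the integral of f over [a, b]."""
    step = (b - a) / m
    return step * sum(f(a + (k + 0.5) * step) for k in range(m))


def bound_case_a(phi, Phi, mode=0.5):
    """Projection of shape (13): returns alpha and the bound computed directly and in closed form."""
    alpha = solve_alpha(phi, Phi, mode)
    level = phi(alpha)
    direct = (midpoint(lambda u: (phi(u) - 1.0) ** 2, 0.0, alpha)
              + (1.0 - alpha) * (level - 1.0) ** 2)
    closed = midpoint(lambda u: phi(u) ** 2, 0.0, alpha) + (1.0 - alpha) * level ** 2 - 1.0
    return alpha, math.sqrt(direct), math.sqrt(closed)


if __name__ == "__main__":
    # Hypothetical example: trimean of n = 5 with Tukey's hinges, (X_{2:5} + 2 X_{3:5} + X_{4:5}) / 4
    phi5, Phi5 = make_phi({2: 0.25, 3: 0.5, 4: 0.25}, 5)
    print(bound_case_a(phi5, Phi5))
```

Agreement of the two values is a cheap consistency check on the computed \(\alpha \); for \(\varphi _{n}\) with three local maxima one would instead solve (15) for \((\beta ,\gamma )\) or use a convex-minorant routine.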

References

Bieniek M (2014a) Comparison of the bias of trimmed and Winsorized means. Comm Statist Theory Methods (to appear)

Bieniek M (2014b) Optimal bounds on the bias of quasimidranges, submitted

Danielak K (2003) Sharp upper mean-variance bounds for trimmed means from restricted families. Statistics 37:305–324

Danielak K, Rychlik T (2003) Exact bounds for the bias of trimmed means. Aust N Z J Stat 45:83–96

Goroncy A (2009) Lower bounds on positive \(L\)-statistics. Comm Statist Theory Methods 38:1989–2002

Moriguti S (1953) A modification of Schwarz’s inequality with applications to distributions. Ann Math Statistics 24:107–113

Mosteller F (2006) On some useful “inefficient” statistics. In: Hoaglin DC (ed) Selected Papers of Frederick Mosteller. Springer, New York, pp 69–100

Okolewski A, Kałuszka M (2008) Bounds for expectations of concomitants. Stat Papers 49:603–618

Raqab MZ (2007) Bounds on the bias of Winsorized means. Aust N Z J Stat 49:51–60

Rosenberger JL, Gasko M (1983) Comparing location estimators: trimmed means, medians, and trimean. In: Mosteller F, Tukey JW, Hoaglin DC (eds) Understanding robust and exploratory data analysis. Wiley, New York, pp 297–338

Rychlik T (1998) Bounds for expectations of \(L\)-estimates. In: Balakrishnan N, Rao CR (eds) Order statistics: theory & methods. Handbook of Statistics, vol 16. North-Holland, Amsterdam, pp 105–145

Rychlik T (2001) Projecting statistical functionals. Lecture Notes in Statistics, vol 160. Springer-Verlag, New York

Rychlik T (2010) Evaluations of generalized order statistics from bounded populations. Stat Papers 51:165–177

Schoenberg IJ (1959) On variation diminishing approximation methods. In: Langer RE (ed) On numerical approximation. Proceedings of a Symposium, Madison, April 21–23, 1958. The University of Wisconsin Press, Madison, pp 249–274

Tukey JW (1977) Exploratory data analysis. Addison-Wesley, Boston


Appendix

1.1 Proof of Lemma 2

Proof

(a) We need to prove that \(\varphi _{n}\left( \frac{1}{2} \right) >1\). For \(n=3,\ldots ,6\) this follows from the fact that \(\varphi _{n}\) is increasing-decreasing with maximum at \(\frac{1}{2}\): since \(\varphi _{n}\) is a nonconstant density on \([0,1]\), its maximum exceeds 1. For \(n=2k+1\), \(k\ge 3\), by Lemma 2.3 of Bieniek (2014b) we have \(f_{k+1:2k+1}\left( \tfrac{1}{2}\right) >2\). Therefore

For \(n=2k\), \(k\ge 4\), by Lemma A.3 of Bieniek (2014b) we have \(f_{k:2k}\left( \frac{1}{2} \right) =f_{k+1:2k}\left( \frac{1}{2}\right) >2\), and therefore

\(\square \)

Proof

(b) For \(n=3,\ldots ,8\) the statement follows from the fact that \(\frac{1}{2}\) is the point of maximum of \(\varphi _{n}\).

We give the detailed proof for the case \(n=4j+1\), \(j\ge 2\), only. The proofs for the remaining cases are analogous. Differentiating (4) with the aid of (3), and putting \(u=\frac{1}{2}\) we obtain

Therefore \(\varphi _{9}''\left( \frac{1}{2} \right) <0\), and it remains to consider \(j\ge 3\). By the above equality we get

where

Therefore \(\varphi _{4j+1}''\left( \tfrac{1}{2}\right) <0\) is equivalent to

and since clearly

it suffices to prove that

This can be done by easy induction on \(j\). \(\square \)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

Bieniek, M. Sharp bounds on the bias of trimean. Stat Papers 57, 365–379 (2016). https://doi.org/10.1007/s00362-014-0641-3
