Abstract

Particle tracking velocimetry (PTV) methods have great potential to enhance the spatial resolution compared to spatial correlation-based methods (PIV). In addition, they are not biased by inhomogeneous seeding concentration or by in-plane and out-of-plane gradients, so the measurement precision can be increased as well. The possibility to measure the velocity simultaneously with the temperature, pH value, or pressure of the flow at the particle location by means of fluorescent particles is another advantage of PTV. However, at high seeding concentrations, reliable particle pairing is challenging, and the measurement precision decreases rapidly due to overlapping particle images and wrong particle image pairing. In this paper, it is shown that the particle image information acquired at four or more time steps greatly enhances reliable particle pairing even at high seeding concentrations. Furthermore, it is shown that the accuracy and precision can be increased by using vector reallocation and displacement estimation using a fit of the trajectory in the case of curved particle paths. The improvements increase the PTV working range as reliable and accurate measurements become possible at seeding concentrations typically used for PIV measurements.


1 Introduction

1.1 PIV and PTV

Particle image velocimetry (PIV) is a well-established technique for non-intrusive flow field investigations in transparent fluids. The velocity is estimated within interrogation windows by cross-correlating the images of small tracer particles recorded at time t and \(t+\Updelta t\). For a robust and precise cross-correlation analysis, interrogation windows covering 6–10 particle images are usually required (Raffel et al. 2007). Therefore, the size of the interrogation windows, and thus, the spatial resolution depend on the seeding concentration and the particle image diameter. For the investigation of flows with relatively weak spatial gradients, the technique is very reliable and the measurement accuracy is usually sufficient to estimate spatial derivatives. However, when flows with strong gradients are investigated, the measurements are biased (Kähler et al. 2012a; Westerweel 2008; Keane and Adrian 1992). To better resolve strong flow gradients, the optical magnification can be increased, but this leads to three major problems (Kähler et al. 2006):

1. The particle image density becomes too sparse for spatial cross-correlation methods.

2. The particle image size increases beyond the optimal range for spatial cross-correlation analysis.

3. Correlation-based methods may show bias errors due to a spatial variation in the particle image density.

As PTV does not show the bias error at all (Kähler et al. 2012b), the technique is well suited for accurate flow field measurements at any magnification provided the seeding concentration is sufficiently low for a reliable particle image pairing. At high seeding concentrations, two major random errors need to be taken into account.

- Errors related to the determination of the particle position.

- Errors due to wrong particle image pairing.

1.2 Particle image positioning

The first error, associated with the particle image positioning, is related to the image recording and the detection algorithms, because the probability of overlapping particle images increases with increasing particle image density or particle image size. As a result, the accuracy in localizing individual particle images decreases. Maas (1992) derived an expression that connects the number of individual particle images \(N_{\rm p}\) with the number of overlapping particle images \(N_{\rm o}\) for circular particle images that are randomly distributed on a sensor with size A:

\[N_{\rm o} = (N_{\rm p} - 1) - \frac{A}{A_{\rm crit}}\left(1 - e^{-(N_{\rm p} - 1)A_{\rm crit}/A}\right)\]

\(A_{\rm crit}\) is the critical area in which a particle image starts to overlap with the boundaries of another particle image. Please note that frequently used variables are listed in Table 1. Typically, the boundaries of the particle images are defined to be at the radial location at which the intensity has decreased to \(e^{-2}\) of the center value. Thus, the critical area is \(A_{\rm crit} = \pi D^{2}\), since particle images share the same boundary if the centers have a distance of D. Lei et al. (2012) have shown that the detection of the particle image center is still possible even when the particle image overlap reaches 50 % (L = D/2), which implies that the critical area reduces to \(A_{\rm crit} = \pi L^{2}\), with L being the smallest distance between particle image centers that can still be separated. Figure 1 illustrates the ratio of overlapping particle images as a function of L for different particle image densities \(N_{\rm ppp}\). Using L as the smallest inter-particle image distance that can be resolved, this graph allows for the estimation of the number of vectors that can be gained by a PTV analysis for a given particle image density. The reliability depends on the SNR, the particle image size, and the error that the user is willing to accept.

For planar PIV, particle image densities of 0.03 ppp < \(N_{\rm ppp}\) < 0.05 ppp are recommended (Raffel et al. 2007). For D = 2.5 px and \(N_{\rm ppp} = 0.05\) ppp, more than 20 % of the particle images already overlap. For particle images with a diameter of 5 pixels, the overlap ratio reaches 80 %.

For particle tracking algorithms, the particle image density can be reduced by a factor of 10 to get almost the same number of vectors compared to PIV measurements, because a vector is found for each particle image pair (black curves in Fig. 1). In this case, only 5 % of the particle images overlap for D = 2.5 px and \(N_{\rm ppp} = 0.005\) ppp. For some applications, this is a big advantage since the contamination of the facility is reduced by the same factor. However, it is generally of interest to accurately measure small-scale flow features which cannot yet be resolved by using PTV. Therefore, PTV measurements at high seeding concentrations would be desirable for many flow investigations. In order to increase the reliability of PTV for larger particle image densities, a multi-frame PTV technique is proposed to enhance the robustness and accuracy of the particle image pairing.
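As a rough numerical check of the overlap ratios quoted above, the following sketch evaluates an overlap estimate of the kind attributed to Maas (1992). The closed form used in the code is an assumption about that expression, and the function name `overlap_ratio` and the 256 × 256 pixel sensor are illustrative choices that do not come from the paper.

```python
import math


def overlap_ratio(n_ppp, diameter, sensor_px=256 * 256):
    """Fraction of overlapping particle images, N_o / N_p.

    Assumed form (not reproduced from the paper):
        N_o = (N_p - 1) - (A / A_crit) * (1 - exp(-(N_p - 1) * A_crit / A))
    with A_crit = pi * D**2 for randomly distributed circular images.
    """
    n_p = n_ppp * sensor_px                 # number of particle images
    a_crit = math.pi * diameter ** 2        # critical area in px^2
    x = (n_p - 1.0) * a_crit / sensor_px
    n_o = (n_p - 1.0) - (sensor_px / a_crit) * (1.0 - math.exp(-x))
    return n_o / n_p


for n_ppp, d in [(0.05, 2.5), (0.05, 5.0), (0.005, 2.5)]:
    print(f"N_ppp = {n_ppp}, D = {d} px: overlap = {overlap_ratio(n_ppp, d):.2f}")
```

Under these assumptions, the low-density case (D = 2.5 px, 0.005 ppp) comes out at roughly 5 % and the case with D = 5 px at 0.05 ppp at about three quarters, which is in line with the magnitudes stated in the text.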

1.3 Particle image pairing

The simplest way to match corresponding particle images is a nearest neighbor PTV algorithm (Malik et al. 1993). Since this approach only works for very low particle image densities, artificial neural networks or relaxation methods can be used to minimize a local or global cost function (Pereira et al. 2006) to allow for higher seeding concentrations. Alternatively, Okamoto et al. (1995) presented a spring force model, where particle pairs were identified by searching for the smallest spring force calculated over particles in a certain neighborhood. Probabilistic approaches that take the motion of neighboring particles into account show a very high vector yield at higher particle image densities, although at the expense of spatial resolution, as the motion of neighboring particles must be correlated. Another method to improve the detection of corresponding particle pairs is the use of a predictor. A predictor can significantly decrease the search area in the second frame and thus improve the match probability of particles. In general, such predictors can be based on theoretically known velocity distributions or experimentally obtained PIV evaluations (Cardwell et al. 2011; Cowen and Monismith 1997; Keane et al. 1995; Takehara et al. 2000).

Recently, Brevis et al. (2011) combined a predictor obtained by PIV with a relaxation PTV algorithm to further enhance the performance. However, in comparison with PIV, the gain in resolution is only minor and does not justify the effort in many cases. A fully PTV-based algorithm was presented by Ohmi and Li (2000), where a case sensitive search radius in the second frame has to be defined to identify possibly matching particles. This is done for all particles detected reliably in the first frame. For each possible match, the algorithm adds the probabilities of similar neighbor vectors using an iterative approach. The threshold for the common motion of the neighboring particles is another parameter that needs to be specified. This two-frame method showed superior results even for high seeding concentrations.

Unfortunately, the accuracy and the robustness of all these two-frame methods are limited by the fact that only two recordings, acquired at t and \(t + \Updelta t\), exist. Thus, only a first order approximation of the velocity can be estimated. For correlation-based methods, different approaches have recently been discussed to overcome this problem. Scharnowski and Kähler (2013) developed a method that uses information from neighboring vectors of the same velocity field, obtained by two-frame PIV, to reduce the errors due to streamline curvature. Another approach to further enhance the precision in estimating the flow velocity is the use of multi-pulse or multi-frame techniques, which were already discussed in the early days of digital PIV (Adrian 1991 and references therein). Hain and Kähler (2007) used the information of multiple frames to minimize the random errors at each vector location within an instantaneous PIV vector field by taking vector information from a large time separation for low velocity regions and a short time separation for high velocity regions. Sciacchitano et al. (2012) applied an averaging in the correlation planes to increase the robustness of their pyramid correlation algorithm. Lately, Lynch and Scarano (2013) presented an approach to replace and deform the correlation windows according to the estimation of the trajectory of a fluid parcel. The basic idea of the multi-frame methods is to make additional use of the temporal smoothness of the particle image signal.

The same principles can be used to enhance the probability of correct particle image matching in PTV by tracking particles over more than two successive frames. One of the first multi-frame approaches was presented by Nishino et al. (1989), who used four consecutive frames. The smoothness of a particle trajectory was determined to evaluate whether a particle path was valid or not. Hassan and Canaan (1991), for example, proposed a nearest neighbor approach with four different frames with equidistant time intervals to enhance the results for bubbly flow. Ouellette et al. (2006) used criteria such as the minimal acceleration for the third frame, or the minimized change in acceleration for the fourth frame, where a modified version of the latter criterion showed the best results. Li et al. (2008) developed a technique using information from the previous five frames to determine the particle image position in the sixth frame. Due to the large number of previous frames, their algorithm is very robust to noise, and a method was developed to bridge even frames with missing particle information. Guezennec et al. (1994) applied a penalty function to assess the path coherence of particle trajectories. Malik et al. (1993) also developed a four-frame method to detect 3D particle trajectories in a volume. The velocity estimates were used to decrease the search radius for the nearest neighbor search in the next frame and thus served as a predictor. This concept is schematically shown in Fig. 2.

The algorithm first determines the displacement between the particle images in frames one and two. The time interval between frames one and two must be small enough to allow for nearly 100 % valid particle links (cf. Fig. 3). Next, the predictor is used to decrease the search area in frame three. This allows a larger time interval and thus a larger displacement, which gives a higher dynamic velocity range. In addition, the relative error of the displacement decreases since, for valid particle image pairs, the error associated with PTV is only given by the uncertainty in the centroid estimation. A predictor can also be used subsequently for the fourth frame.
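The predictor step described above can be sketched as a linear extrapolation followed by a restricted search. This is a minimal illustration and not the authors' implementation: the function name `match_with_predictor`, the constant-velocity extrapolation, and the circular search region are assumptions made for the example.

```python
import numpy as np


def match_with_predictor(p1, p2, frame3, dt12, dt23, search_radius):
    """Pick the frame-3 candidate closest to the predicted position.

    p1, p2        : (2,) positions of one particle in frames 1 and 2
    frame3        : (n, 2) candidate positions detected in frame 3
    dt12, dt23    : time intervals between frames 1-2 and 2-3
    search_radius : radius of the (assumed circular) search area
    Returns (index or None, predicted position).
    """
    velocity = (p2 - p1) / dt12              # first-order velocity estimate
    predicted = p2 + velocity * dt23         # linear extrapolation to frame 3
    dist = np.linalg.norm(frame3 - predicted, axis=1)
    idx = int(np.argmin(dist))
    if dist[idx] <= search_radius:
        return idx, predicted
    return None, predicted                   # no candidate inside the search area


p1 = np.array([10.0, 10.0])
p2 = np.array([11.0, 10.0])
frame3 = np.array([[12.0, 10.5], [16.2, 10.1], [30.0, 30.0]])
idx, predicted = match_with_predictor(p1, p2, frame3, dt12=1.0, dt23=5.0,
                                      search_radius=2.0)
print(idx, predicted)
```

In this toy case, a plain nearest neighbor search around the frame-2 position would pick the first candidate, whereas the search around the predicted position selects the second one, which lies on the extrapolated path.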

Ratio of the number of detected vectors over the number of possible vectors (\(R_{1}\), left) and ratio of the number of valid vectors over the number of detected vectors (\(R_{2}\), right) for a simple nearest neighbor approach (NN), a weighted nearest neighbor approach (NNW), and the probabilistic approach for two (P2F) and four frames (P4F). The gray line indicates p = 2.31

The underlying idea of the current approach is therefore to combine the information from the neighboring particles with a predictor obtained from the previous image sequence. The aim of this approach is to enhance the precision and accuracy of PTV for highly seeded flows in order to extend both the range of scales that can be resolved (dynamic spatial range), and the dynamic velocity range of the PTV technique. Therefore, we use the probability approach proposed by Ohmi and Li (2000) in combination with a temporal predictor concept based on Malik et al. (1993). This method has the advantage that it can be used for two, four, or even multiple frame particle tracking.

In the following two sections, the concept will be validated using synthetic data. Next, the algorithm is used to evaluate experimental planar time-resolved measurements of the flow over periodic hills. Finally, 3D data obtained in a microfluidic experiment are analyzed to show the performance of the approach for volumetric data sets.

2 Four-frame particle tracking velocimetry

2.1 Monte-Carlo simulation

To verify and validate the algorithm, a Monte-Carlo simulation of a uniform flow without gradients was used. Random particle image positions were simulated in a 256 × 256 pixel space with the number of particle images increasing from \(N_{\rm p} = 10\) to 20,000. The mean particle image spacing ranges from \(\Updelta x_{0} = \sqrt{{N_{\rm x}N_{\rm y}/N_{\rm p}}} = 1.8\) to 57 pixels, where \(N_{\rm x}N_{\rm y}\) corresponds to the image size. The particle image densities range in this case from \(N_{\rm p}/A = 0.00015\) to 0.3 ppp.

A suitable criterion to compare the performance of PTV algorithms is the ratio of the mean particle image distance to the maximum displacement of the particle images, \(p = \Updelta x_{0}/ \Updelta x_{\rm max}\), introduced by Malik et al. (1993). For a mean particle image spacing of 2.31 times the maximum displacement (p = 2.31), they could detect about 90 % of the simulated particle image pairs, \(R_{1} = 0.9\), using a nearest neighbor algorithm. The yield, defined as the number of valid vectors divided by the number of detected vectors, was \(R_{2} = 0.97\) for their case. On the left side of Fig. 3, the number of detected vectors divided by the number of particles, denoted as \(R_{1}\), is shown for:

- a simple nearest neighbor algorithm (NN),

- a nearest neighbor algorithm with weighting functions (NNW),

- the probability approach for two frames (P2F),

- and the probability approach for four frames using a predictor (P4F).

For P4F, the time interval between frames 2 and 3 was five times the time interval between the other frames. It can be clearly seen that both nearest neighbor approaches provide a vector for nearly every particle image, as \(R_{1}\) is almost unaffected by the mean particle image distance. However, with increasing seeding concentration, that is, smaller distances between particle images, wrong particle links result in an underestimation of the displacement. The ratio \(R_{2}\) drops significantly; for p = 2.31, only 70 % of the detected vectors are valid. If physical knowledge of the flow is available, the detectability can be increased using weighting factors. To this end, the distance in the predominant flow direction is multiplied by a factor lower than one, which results in a lower weighting of the distance in that direction for the determination of the nearest neighbor. Using that approach, approximately 90 % valid vectors can be found at p = 2.31.
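The behavior of the two nearest neighbor variants can be reproduced qualitatively with a small Monte-Carlo sketch. It is a simplified stand-in for the simulation described here, not the original code: the function name `nn_valid_ratio`, the one-sided search from frame 1 to frame 2, the weighting factor of 0.3, and the particle number are all assumptions, so the resulting percentages are not expected to match Fig. 3.

```python
import numpy as np


def nn_valid_ratio(n_particles, p, weight_x=1.0, size=256, seed=0):
    """Fraction of correct nearest neighbor links (R2) for a uniform x-shift.

    p        : ratio of mean particle spacing to displacement
    weight_x : factor applied to distances in the flow (x) direction;
               1.0 gives the plain NN search, values < 1 the weighted NNW
    """
    rng = np.random.default_rng(seed)
    frame1 = rng.uniform(0.0, size, (n_particles, 2))
    spacing = np.sqrt(size * size / n_particles)     # mean particle spacing
    shift = np.array([spacing / p, 0.0])             # uniform displacement
    frame2 = frame1 + shift
    diff = frame2[None, :, :] - frame1[:, None, :]   # all pairwise offsets
    diff[..., 0] *= weight_x                         # down-weight flow direction
    dist = np.hypot(diff[..., 0], diff[..., 1])
    match = np.argmin(dist, axis=1)                  # nearest frame-2 particle
    return float(np.mean(match == np.arange(n_particles)))


r2_nn = nn_valid_ratio(1000, p=2.31)
r2_nnw = nn_valid_ratio(1000, p=2.31, weight_x=0.3)
r2_sparse = nn_valid_ratio(1000, p=10.0)
print(r2_nn, r2_nnw, r2_sparse)
```

Every particle receives a link in this sketch, so only the share of valid links changes with p. Down-weighting the distance in the flow direction shrinks the region in which a wrong neighbor can win, which is why the weighted search gives more valid links at the same p.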

The performance of the probabilistic algorithm is superior. For p ≥ 1, over 90 % of the possible vectors were found. In addition, the ratio of valid vectors is nearly 100 %. The four-frame algorithm shows an even better performance in finding the right links in the next frames because it determines a predictor from the displacement between frames 1 and 2. It also becomes clear from Fig. 3 that in the case of a uniform flow, nearly every detected vector represents the right velocity (\(R_{2} \approx 1\)). At these high particle image densities, the final limitation of the particle tracking is no longer the tracking algorithm but the ability to determine the particle centers reliably (Lei et al. 2012).

2.2 VSJ standard PIV images

Recently developed particle tracking algorithms have been tested using the PIV standard images of the Visualization Society of Japan (Okamoto et al. 2000). The series number 301 provides a shear flow simulated using LES. The time-resolved images have a size of 256 × 256 pixels and contain about 4,000 particles each, which results in a particle image density of N ppp = 0.06. The images are optimized for spatial correlation-based evaluation methods. The maximum displacement is 10 pixels between two images, which gives a ratio of mean particle image spacing to maximum displacement of only p = 0.4.
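The density and spacing ratio quoted for the VSJ 301 images follow directly from the image size, the particle count, and the maximum displacement. A short check, with function names chosen only for this example:

```python
import math


def mean_spacing(nx, ny, n_particles):
    """Mean particle image spacing, sqrt(Nx * Ny / Np), in pixels."""
    return math.sqrt(nx * ny / n_particles)


def spacing_ratio(nx, ny, n_particles, max_displacement):
    """p = mean spacing / maximum displacement."""
    return mean_spacing(nx, ny, n_particles) / max_displacement


n_ppp = 4000 / (256 * 256)                 # particle image density in ppp
p = spacing_ratio(256, 256, 4000, 10.0)    # 10 px maximum displacement
print(f"N_ppp = {n_ppp:.3f}, p = {p:.2f}")
```

A ratio p well below one means that a particle image moves more than twice the mean particle spacing between two frames, which explains why a plain nearest neighbor search cannot work on these images.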

These images have been used by many other researchers, and a detailed analysis and comprehensive collection of the results throughout the literature can be found in Lei et al. (2012). Ohmi and Li (2000) applied a particle image identification algorithm first and were able to detect approximately 1,000 to 1,300 particle images out of the total 4,000 particle images per frame. They used these detected particle images to test the PTV algorithm and were able to match about 80 %, with 98 % (\(R_{2} = 0.98\)) exact matches among the detected ones, using a frame interval of one. Since the exact particle image positions are known, the PTV algorithms can also be tested using the exact positions for all 4,000 particle images. Unfortunately, these data are not provided by Ohmi and Li (2000). However, for modern algorithms, 98 % (Brevis et al. 2011) and 97 % (Lei et al. 2012) of the possible vectors could be resolved correctly using two successive frames.

Since the first question is whether or not the combination of the probability matching algorithm with a predictor can be used to increase the dynamic range, that is, to allow for larger time differences, the simple nearest neighbor algorithm (NN), the probabilistic two-frame method (P2F), and the four-frame method (P4F) were tested for increasing distances between the frames. In Fig. 4, the ratio of the number of detected vectors over the number of possible vectors (\(R_{1}\)) is shown along with the ratio of the number of valid vectors over the number of detected vectors (\(R_{2}\)) for varying frame distances \(\Updelta t_{12}\) and \(\Updelta t_{23}\) for the two-frame and four-frame algorithm, respectively.

Ratio of the number of detected vectors over the number of possible vectors (\(R_{1}\)) and ratio of the number of valid vectors over the number of detected vectors (\(R_{2}\)) for a simple nearest neighbor approach (NN) and the probabilistic approach for two (P2F) and four frames (P4F) applied to the VSJ 301 images over frame distance

It can be seen that a simple nearest neighbor approach is already insufficient for \(\Updelta t = 1\). \(R_{1}\) is larger than one, since the algorithm detects a match for each particle image although some of them leave the light sheet in the next frame and new particles appear. The probabilistic approach using two frames detects about 97 % of the particle image matches and has a reliability of 98 % for \(\Updelta t_{12} = 1\). With increasing frame distance, both \(R_{1}\) and \(R_{2}\) drop significantly, down to only 20 % exact matches out of 70 % detected vectors. If a predictor is used, the performance for \(\Updelta t_{23} = 1\) drops slightly to 95 %, since vectors are only taken into account if a trajectory is found for all frames. However, for all larger time intervals, the performance is superior, with about 70 % of the vectors found for \(\Updelta t_{23} = 6\), which corresponds to a maximal displacement between frames 2 and 3 of 60 pixels. This decay roughly follows an exponential trend, where the ratio of matched vectors for a given separation \(\Updelta t_{23} = n \Updelta t_{12}\) can be determined by \(R_{1}(\Updelta t_{23} = n) = R_{1}(\Updelta t_{12} = 1)^{n}\). This allows for the determination of a trade-off for the time separation on the basis of a quick two-frame analysis.
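The power-law estimate for the vector yield can be turned into a quick planning aid. The sketch below is illustrative only: the function names and the 50 % yield threshold are assumptions, and the single-step value of 0.95 is the four-frame result quoted above for \(\Updelta t_{23} = 1\).

```python
def predicted_yield(r1_single_step, n):
    """Estimated R1 for a frame separation of n single steps: R1(1) ** n."""
    return r1_single_step ** n


def largest_separation(r1_single_step, min_yield, n_max=50):
    """Largest n (up to n_max) whose estimated yield stays above min_yield."""
    best = 0
    for n in range(1, n_max + 1):
        if predicted_yield(r1_single_step, n) >= min_yield:
            best = n
    return best


r1_at_6 = predicted_yield(0.95, 6)          # compare with the ~70 % reported
n_half = largest_separation(0.95, 0.5)      # hypothetical 50 % yield threshold
print(f"R1(n=6) = {r1_at_6:.3f}, largest n with R1 >= 0.5: {n_half}")
```

With a single-step yield of 0.95, the estimate for n = 6 is close to the roughly 70 % found in the evaluation, which is consistent with the exponential trend described in the text.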

The other benefit of the four-frame method is its great reliability, since the number of correct matches out of the detected matches does not drop significantly. Even for \(\Updelta t_{23} = 6\), 89 % of the vectors found are correct and only 11 % of the vectors are outliers which can easily be filtered by outlier detection algorithms suited for PTV data (Duncan et al. 2010).

3 Vector reallocation and velocity estimation by the trajectory

3.1 Principles

An inherent limitation of PIV and PTV algorithms using two frames is the fact that the velocity can only be estimated up to second order accuracy in time (Wereley and Meinhart 2001). This approximation is only valid if the particle path between the two positions follows a straight line and the velocity is constant. Often, strong spatial and temporal gradients are present, and this assumption is only approximately valid for small displacements. Thus, the time interval between the two frames must be reduced. Unfortunately, this results in a smaller displacement, and the relative error of the displacement estimation increases because the absolute uncertainty \(\sigma_{\Updelta{\rm x}}\) of the displacement estimation stays constant. Using multiple frames, a higher order approximation of the velocity is possible.

To illustrate the bias errors, the same particle path as in Fig. 2 is shown in Fig. 5. The time interval between \(t_{1}\) and \(t_{2}\) is rather short, so that non-linear effects can be neglected. However, the relative error is large for the small displacement. Using the particle positions at \(t_{1}\) and \(t_{4}\) gives a larger displacement and helps to reduce the relative error. However, a bias error \(\epsilon_{\Updelta{\rm x}}\) for \(\Updelta x_{14}\) can be clearly seen if the linear approximation is compared to the integral particle path. Another error arises for the vector positioning. As can be seen from the figure, the vector is usually positioned halfway between the two identified particle positions at \(t_{1}\) and \(t_{4}\) for a two-frame representation. In this case, a substantial error \(\epsilon_{\rm x}\) can appear compared to the positioning on the trajectory. A reallocation of the vector position onto the trajectory would decrease this error.

3.2 Fitting the particle trajectory

In order to quantify the benefits of the above-mentioned vector reallocation and displacement estimation by the particle trajectory, the flow field of a Lamb–Oseen vortex was analyzed. Due to the circular streamlines of this flow field, the effect is maximized. The maximum tangential displacement was chosen to be \(\Updelta_{\rm tan} = 5.5\) pixels at a radial position of r = 31.7 pixels between \(t_{2}\) and \(t_{3}\).

In Fig. 6, the difference from the analytical solution for the tangential and radial displacement distributions is shown for the case without any noise or uncertainty related to the particle positions. Therefore, the errors are purely systematic. The red circles represent the simple central differences between t 2 and t 3. As expected, the tangential displacement is underestimated. This underestimation due to the curvature of the particle path reaches 0.034 pixel at the maximum. However, the error related to the radial displacement is much stronger and reaches 0.45 pixel.

Difference to the analytical solution for the tangential (left) and radial (right) displacement distribution for a Lamb–Oseen vortex estimated with central differences for two frames (2F) and a third and second order polynomial using four frames (4F). Results without added noise show the bias errors

For the four-frame method, the particle positions in x and y were fitted by second and third order polynomial functions \(f_{X} = X(t)\), \(f_{Y} = Y(t)\). For 3D data, the same procedure is used for z with \(f_{Z} = Z(t)\), as can be seen in Sect. 4.2. The fitting is based on a least-squares regression and was implemented using the fit functionality of Matlab. All data points have the same weight in the present implementation. However, if some data points are known to be affected by larger errors, a lower weight for these points is beneficial. The time separation can also influence the result of the polynomial fit. In general, it is favorable to have the time separation between frames two and three as large as possible to decrease the relative error and increase the dynamic velocity range. However, the separation is limited by the out-of-plane loss of particles for 2D measurements and by the curvature of the trajectory.

Two different methods were used for the displacement estimation. First, the displacement was estimated by the gradient of the fitted polynomials at the time instant t = t 2 + (t 3 − t 2)/2. This method is indicated by ’grad’ in the figure. The second method uses the numerical integration of the trajectory to get the path length and is indicated by ’int’.
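The two displacement estimators and the reallocation of the vector onto the trajectory can be sketched as follows. This is a minimal NumPy stand-in for the Matlab fit mentioned above, under stated assumptions: a purely circular path replaces the Lamb-Oseen vortex, the four time steps are equidistant, and all function names are hypothetical.

```python
import numpy as np


def fit_trajectory(t, x, y, order=2):
    """Equal-weight least-squares polynomial fits X(t) and Y(t)."""
    return np.polyfit(t, x, order), np.polyfit(t, y, order)


def mid_time(t2, t3):
    return t2 + 0.5 * (t3 - t2)


def displacement_grad(cx, cy, t2, t3):
    """'grad': gradient of the fit at mid-time, scaled by (t3 - t2)."""
    tm = mid_time(t2, t3)
    u = np.polyval(np.polyder(cx), tm)
    v = np.polyval(np.polyder(cy), tm)
    return float(np.hypot(u, v) * (t3 - t2))


def displacement_int(cx, cy, t2, t3, n=1001):
    """'int': path length of the fitted curve between t2 and t3."""
    tt = np.linspace(t2, t3, n)
    speed = np.hypot(np.polyval(np.polyder(cx), tt),
                     np.polyval(np.polyder(cy), tt))
    return float(np.sum(0.5 * (speed[1:] + speed[:-1]) * np.diff(tt)))


def reallocated_position(cx, cy, t2, t3):
    """Vector position on the fitted trajectory at mid-time."""
    tm = mid_time(t2, t3)
    return np.array([np.polyval(cx, tm), np.polyval(cy, tm)])


# Assumed test path: circle with r = 31.7 px and 5.5 px arc per time step.
r, arc = 31.7, 5.5
omega = arc / r
t = np.array([0.0, 1.0, 2.0, 3.0])
x, y = r * np.cos(omega * t), r * np.sin(omega * t)
cx, cy = fit_trajectory(t, x, y, order=2)

true_pos = r * np.array([np.cos(1.5 * omega), np.sin(1.5 * omega)])
chord_mid = 0.5 * np.array([x[1] + x[2], y[1] + y[2]])   # two-frame position
err_chord = float(np.linalg.norm(chord_mid - true_pos))
err_fit = float(np.linalg.norm(reallocated_position(cx, cy, 1.0, 2.0) - true_pos))
d_grad = displacement_grad(cx, cy, 1.0, 2.0)
d_int = displacement_int(cx, cy, 1.0, 2.0)
print(err_chord, err_fit, d_grad, d_int)
```

For this noise-free circular path, the position taken from the fit lies much closer to the true trajectory than the midpoint of the chord between \(t_{2}\) and \(t_{3}\). The displacement values depend on the chosen time steps and are not meant to reproduce the numbers reported for Fig. 6.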

The best match is achieved for the third order polynomial since this fit can exactly describe the circular trajectories of the particles. Almost no errors appear for the gradient-based displacement estimation in the tangential and radial directions. However, using the integration method, the tangential displacement is underestimated by about 0.013 pixel, whereas the radial component does not show significant errors. Using a second order polynomial fit results in an error of 0.017 pixel for the tangential displacement, and a negligible error for the radial displacement using both the integration and the gradient method.

However, in reality, an uncertainty is related to the particle positions. Since a third order polynomial fits four particle image positions exactly, the displacement estimate using such a fit results in larger random errors. This can be seen in Fig. 7, where the distribution of the displacement errors is shown for particle image positions associated with a Gaussian-distributed error with a standard deviation of 0.02 pixels. These error levels can easily be reached with elaborate particle detection algorithms and high-quality imaging (Kähler et al. 2012b). The distribution represents the random errors superimposed on the bias errors and thus the total errors as they appear in a real measurement. Since the random error for the tangential velocity is much larger than the bias errors in the present example, the distributions are almost symmetrical around zero. The widest distribution can be seen for the third order polynomial gradient method, as expected. The integration method seems to decrease the random errors, as the distribution is much narrower. However, this method still shows errors of the same order of magnitude as the central difference scheme. For the second order polynomial, integration and gradient estimation perform equally well. Here, the smoothing that is inherent to the second order fit is beneficial for the displacement estimation. In comparison with the gradient method, the integration of the path length tends to decrease random errors.

Distribution of the error for the tangential (left) and radial (right) displacement distribution for a Lamb–Oseen vortex estimated with central differences for two frames (2F) and a third and second order polynomial using four frames (4F). Results with added noise with a Gaussian distribution and standard deviation of 0.02 pixels

On the right-hand side, the error for the radial velocity also shows much less scatter for the integration method. Since experimental data always show uncertainties, it can be concluded that the second order polynomial provides the best trade-off between avoiding bias errors due to the curvature of the trajectory and random errors due to the uncertainty in the particle image position detection. Therefore, this method is chosen to evaluate the experimental data in Sect. 4.

3.3 VSJ standard PIV images

In Sect. 2.2, it was shown that even for a large separation between the frames, the algorithm works quite well on the VSJ standard images. Although the true velocity for the images is not known, the benefit of the vector reallocation can be tested since the exact particle image positions are known for all frames. In Fig. 8, the standard deviation and the mean of the absolute difference between the exact position and the vector position, determined by the second order polynomial fit and the first order approximation, are given for different time separations between frames 2 and 3. It can be seen that the error increases strongly with increasing time separation for the first order approximation. The first order approximation results in a mean error of 0.02 pixels for \(\Updelta t_{23} =2\), which is four times larger than the error for the second order fit. For \(\Updelta t_{23} =6\), the 0.16 pixel mean error for the first order approximation is already six times larger than the error for the second order fit. However, the systematic error of the second order polynomial fit also increases moderately. Since the systematic error is low in comparison with the random error, as was shown in the previous section, it is still beneficial to have large time separations to decrease the relative velocity error.

4 Experimental validation

4.1 2D flow over periodic hills

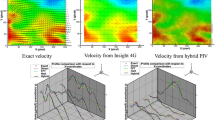

The flow over periodic hills is a common test case for the validation of numerical flow simulations (see ERCOFTAC test case No. 81). The numerical prediction is quite difficult, since flow separation and reattachment are not fixed in space and time due to the smooth geometry (Fröhlich et al. 2005). Furthermore, the separated and fully three-dimensional flow from the previous hill impinges on the next hill, which results in very complex flow features including turbulent splashing, Taylor-Görtler vortices, and a very thin shear layer in the wake flow with developing Kelvin-Helmholtz instabilities. The experiments were performed in a water tunnel at TU Munich. The height h of the hills was 50 mm, and the spacing between them was 9h. A detailed description of the setup can be found in Rapp and Manhart (2011).

Figure 9 shows the velocity vectors for one time instant downstream of the hill top. Black vectors are the result of the two-frame algorithm without trajectory fitting, and green vectors indicate the results of the four-frame method with \(\Updelta t_{23} = 2 \Updelta t_{12}\). In the region of the relatively fast outer flow with straight trajectories and in the slow reverse flow, both approaches perform well. However, in the downstream shear layer, where Kelvin-Helmholtz vortex-like structures can be observed, fewer particle images were matched in all four frames, so that mainly black vectors are visible.

For the current investigation, only 10 images were evaluated. The number of particle images per frame is approximately 1,400. With the central differences using two frames, approximately 970 vectors were found. In comparison with the synthetic data, where almost all particle images could be paired, the detectability here is only 70 %. The main reason is the out-of-plane movement of some particles due to the three-dimensional nature of the flow. The number of vectors is further decreased using the four-frame method, as illustrated in Fig. 9. For the equidistant temporal sampling, 500 vectors were found, which corresponds to a detectability of 40 %. Using larger time intervals between frames 2 and 3, the loss of trajectories increases and the ratio \(R_{1}\) in Fig. 10 decreases. For a time separation \(\Updelta t_{23} = 4\Updelta t_{12}\), only 20 % of the particle image pairs can be found over all four frames, which is again mainly caused by three-dimensional motion in that region. Therefore, a time separation \(\Updelta t_{23} = 2\Updelta t_{12}\) is considered to be the best compromise for that flow.

A beneficial effect of using four frames and trajectory fitting is that peak locking is decreased. In Fig. 11, peak locking can be clearly seen for the two-frame method for the vector position as well as for the displacement. As the experiment was set up for PIV and the particle images are small due to the large pixel size of the CMOS sensor, this is expected (Cierpka and Kähler 2012a). The reduction of the peak locking effect in the case of the multi-frame technique is clearly visible. Although each position is affected by peak locking errors, the total error is smoothed out for the final displacement estimation.
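The smoothing effect can be illustrated with a small synthetic sketch. It is not the evaluation used for Fig. 11. It assumes a sinusoidal bias model (`peak_locked`, with a hypothetical amplitude of 0.1 px) that pulls each detected position toward the nearest integer pixel, straight particle paths, and four equidistant frames. The two-frame displacement is compared with the slope of a straight-line least-squares fit through all four positions.

```python
import numpy as np


def peak_locked(x_true, amplitude=0.1):
    """Assumed bias model: positions are pulled toward integer pixel values."""
    return x_true - amplitude * np.sin(2.0 * np.pi * x_true)


rng = np.random.default_rng(0)
n = 20000
x0 = rng.uniform(0.0, 100.0, n)   # hypothetical start positions in px
d = rng.uniform(2.0, 6.0, n)      # hypothetical true displacement per frame in px
t = np.arange(4.0)                # four equidistant frames

# True and peak-locked positions, shape (n, 4).
x_true = x0[:, None] + d[:, None] * t[None, :]
x_meas = peak_locked(x_true)

# Two-frame estimate from frames 2 and 3 only.
d_two = x_meas[:, 2] - x_meas[:, 1]

# Four-frame estimate: least-squares slope of a straight line through all positions.
tc = t - t.mean()
d_fit = (x_meas * tc).sum(axis=1) / (tc ** 2).sum()

rms_two = np.sqrt(np.mean((d_two - d) ** 2))
rms_fit = np.sqrt(np.mean((d_fit - d) ** 2))
print(f"rms error two-frame: {rms_two:.3f} px, four-frame fit: {rms_fit:.3f} px")
```

In this model every single position carries the full bias, but the fit weights four positions with different sub-pixel phases, so the individual errors partly cancel in the displacement estimate.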

4.2 3D microvortex

The previous section shows that the out-of-plane loss of particle image pairs in planar measurements limits the performance of the multi-frame evaluation technique in the case of complex 3D flows. As this effect can be completely eliminated by using volumetric recording techniques, such as Astigmatism PTV (APTV), Tomographic PTV, or V3V, the three-dimensional nature of an electrothermal vortex was investigated using APTV. This technique enables a fully three-dimensional determination of particle positions within a volume using a single camera (Cierpka et al. 2010). The micro vortex was observed using time-resolved image recording over 140 s in total. For the experimental setup and results, the interested reader is referred to Kumar et al. (2011). Here, only the differences between the various methods for the velocity estimation are of interest.

In Fig. 12, some trajectories are shown to give an impression of the vortical motion. The color corresponds to the velocity in the direction of observation (z-direction). In Fig. 13, a two-dimensional representation of a trajectory illustrates the effect of the different evaluation methods. The gray squares indicate the particle positions used to estimate the velocities. The red vectors correspond to the use of conventional central differences for particle positions at \(t_1\) and \(t_4\). The displacement between both time instants is very large, which is beneficial for the reduction of the relative measurement error. However, for the position of the red vectors, a large error is visible due to the curvature of the trajectory; see the upper left region of the plot, where the vectors are positioned further away from the original particle locations. Using central differences between \(t_2\) and \(t_3\) results in better estimates for the vector position. However, the relative error for the velocity would be larger. Due to the experimental uncertainty in the estimation of the particle position, the velocities are often overestimated and reveal a larger scatter compared to the values using \(t_1\) and \(t_4\). The values for the second order polynomial fit are shown in green. Comparing the symbols for the raw particle positions with the vector positions in Fig. 13, it becomes evident that the error is much lower than for the 2F \(t_{1\text{-}4}\) case (mean/max difference 0.18/5.90 μm), but also lower than for the 2F \(t_{2\text{-}3}\) case (mean/max difference 0.02/0.66 μm). From the velocity plot, it can be concluded that the large fluctuations are damped due to smoothing of the position uncertainty, but not as much as for the 2F \(t_{1\text{-}4}\) case. This is important for the calculation of the acceleration along the trajectories.
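The effect of the trajectory curvature on the vector position can be reproduced with a noise-free sketch. It is an illustration under stated assumptions, not the evaluation code behind Fig. 13. A particle is assumed to move on a circle of hypothetical radius `R` with a hypothetical angular step `omega` per frame, sampled at four equidistant times. The helper names `two_frame` and `poly_fit` are chosen for illustration only. Each method returns a vector position and a velocity, which are compared with the true values at the mid-time.

```python
import numpy as np

R, omega = 1.0, 0.3                      # hypothetical radius and angle per frame (rad)
t = np.array([-1.5, -0.5, 0.5, 1.5])     # t1..t4, centred on the mid-time t = 0
x, y = R * np.cos(omega * t), R * np.sin(omega * t)

true_pos = np.array([R, 0.0])            # particle position at t = 0
true_vel = np.array([0.0, R * omega])    # particle velocity at t = 0


def two_frame(i, j):
    """Central difference between frames i and j; vector placed at the chord midpoint."""
    pos = 0.5 * np.array([x[i] + x[j], y[i] + y[j]])
    vel = np.array([x[j] - x[i], y[j] - y[i]]) / (t[j] - t[i])
    return pos, vel


def poly_fit(order=2, t_eval=0.0):
    """Least-squares polynomial fit of x(t) and y(t), evaluated at t_eval."""
    px, py = np.polyfit(t, x, order), np.polyfit(t, y, order)
    pos = np.array([np.polyval(px, t_eval), np.polyval(py, t_eval)])
    vel = np.array([np.polyval(np.polyder(px), t_eval),
                    np.polyval(np.polyder(py), t_eval)])
    return pos, vel


results = {"2F t1-4": two_frame(0, 3), "2F t2-3": two_frame(1, 2), "fit": poly_fit()}
pos_err = {k: np.linalg.norm(p - true_pos) for k, (p, v) in results.items()}
vel_err = {k: np.linalg.norm(v - true_vel) for k, (p, v) in results.items()}

for name in results:
    print(f"{name}: position error {pos_err[name]:.2e}, velocity error {vel_err[name]:.2e}")
```

Because the positions are noise-free here, the sketch only shows the systematic positioning error caused by the curvature. The larger velocity scatter of the short-baseline estimate described above arises from position uncertainty, which would have to be added to the synthetic positions to be seen.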

5 Conclusion and outlook

The PTV algorithm proposed by Ohmi and Li (2000) was extended for the analysis of multi-frame particle image sequences. The motivation of the investigation was to enhance the robustness and accuracy of PTV at high particle image densities. This allows for the enhancement of the spatial resolution and the range of scales that can be resolved with the technique. The accuracy was improved by using vector reallocation and higher order velocity estimation based on trajectory fitting. The enhancement of the robustness was achieved by combining a weak spatial homogeneity predictor with a coherent temporal predictor approach. The method was validated numerically for 2D and experimentally for 2D and 3D particle distributions. The analysis shows that the main benefits of the multi-frame PTV evaluation technique are as follows:

- the reliable determination of a predictor which allows for higher seeding concentrations and larger displacements,
- the more accurate velocity determination by fitting the particle path,
- the decrease of the positioning error by vector reallocation in case of curved path lines,
- the exclusion of wrongly detected particle image positions, and
- the determination of Lagrangian velocities and accelerations.
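The last point follows directly from the fitted trajectory: for a second order polynomial \(x(t) = c_0 + c_1 t + c_2 t^2\), the velocity is \(c_1 + 2 c_2 t\) and the acceleration is \(2 c_2\). The following sketch shows this for a three-dimensional trajectory. The function name `lagrangian_from_fit` and the synthetic helical test path are assumptions made for illustration and are not taken from the measurements.

```python
import numpy as np


def lagrangian_from_fit(t, positions, t_eval):
    """Velocity and acceleration at t_eval from a second order fit per coordinate.

    positions has shape (n_frames, n_dims); np.polyfit fits every column at once.
    """
    t = np.asarray(t, dtype=float)
    positions = np.asarray(positions, dtype=float)
    c2, c1, _ = np.polyfit(t, positions, 2)   # each coefficient has shape (n_dims,)
    velocity = 2.0 * c2 * t_eval + c1
    acceleration = 2.0 * c2
    return velocity, acceleration


# Hypothetical helical path: circular motion in x-y, constant drift in z.
R, omega, vz = 1.0, 0.3, 0.2
t = np.array([-1.5, -0.5, 0.5, 1.5])
positions = np.column_stack([R * np.cos(omega * t), R * np.sin(omega * t), vz * t])

vel, acc = lagrangian_from_fit(t, positions, t_eval=0.0)
print("velocity:", vel)
print("acceleration:", acc)
print("expected centripetal acceleration in x:", -R * omega ** 2)
```

With only four positions, the acceleration is constant along each fitted segment, so it is an average over the time span of the four frames rather than a pointwise value.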

In the case of planar 2D measurements, the main limitation of the approach is the out-of-plane loss of particle image pairs. In the case of volumetric 3D recording techniques, this limitation can be completely avoided. Therefore, the multi-frame PTV technique is particularly suited for the analysis of 3D particle image fields, recorded with the techniques outlined in Scarano (2013) and Cierpka and Kähler (2012b).

References

Adrian RJ (1991) Particle-imaging techniques for experimental fluid mechanics. Annu Rev Fluid Mech 23:261–304. doi:10.1146/annurev.fl.23.010191.001401

Brevis W, Niño Y, Jirka G (2011) Integrating cross-correlation and relaxation algorithms for particle tracking velocimetry. Exp Fluids 50:135–147. doi:10.1007/s00348-010-0907-z

Cardwell N, Vlachos PP, Hole KA (2011) A multi-parametric particle-pairing algorithm for particle tracking in single and multiphase flows. Meas Sci Tech 22:105406. doi:10.1088/0957-0233/22/10/105406

Cierpka C, Kähler CJ (2012a) Cross-correlation or tracking—comparison and discussion. In: 16th international symposium on applications of laser techniques to fluid mechanics, Lisbon, July 9–12

Cierpka C, Kähler CJ (2012b) Particle imaging techniques for volumetric three-component (3D3C) velocity measurements in microfluidics. J Vis 15:1–31. doi:10.1007/s12650-011-0107-9

Cierpka C, Segura R, Hain R, Kähler CJ (2010) A simple single camera 3C3D velocity measurement technique without errors due to depth of correlation and spatial averaging for microfluidics. Meas Sci Tech 21:045401. doi:10.1088/0957-0233/21/4/045401

Cowen EA, Monismith SG (1997) A hybrid digital particle tracking velocimetry technique. Exp Fluids 22:199–211. doi:10.1007/s003480050038

Duncan J, Dabiri D, Hove J, Gharib M (2010) Universal outlier detection for particle image velocimetry (PIV) and particle tracking velocimetry (PTV) data. Meas Sci Tech 21:057002. doi:10.1088/0957-0233/21/5/057002

Fröhlich J, Mellen C, Rodi W, Temmerman L, Leschziner M (2005) Highly resolved large-eddy simulation of separated flow in a channel with streamwise periodic constrictions. J Fluid Mech 526:9–66. doi:10.1017/S0022112004002812

Guezennec YG, Brodkey RS, Trigui N, Kent JC (1994) Algorithms for fully automated three-dimensional particle tracking velocimetry. Exp Fluids 17:209–219. doi:10.1007/BF00203039

Hain R, Kähler CJ (2007) Fundamentals of multiframe particle image velocimetry (PIV). Exp Fluids 42:575–587. doi:10.1007/s00348-007-0266-6

Hassan YA, Canaan RE (1991) Full-field bubbly flow velocity measurements using a multiframe particle tracking technique. Exp Fluids 12:49–60. doi:10.1007/BF00226565

Kähler CJ, Scholz U, Ortmanns J (2006) Wall-shear-stress and near-wall turbulence measurements up to single pixel resolution by means of long-distance micro-PIV. Exp Fluids 41:327–341. doi:10.1007/s00348-006-0167-0

Kähler CJ, Scharnowski S, Cierpka C (2012a) On the resolution limit of digital particle image velocimetry. Exp Fluids 52:1629–1639. doi:10.1007/s00348-012-1280-x

Kähler CJ, Scharnowski S, Cierpka C (2012b) On the uncertainty of digital PIV and PTV near walls. Exp Fluids 52:1641–1656. doi:10.1007/s00348-012-1307-3

Keane RD, Adrian RJ (1992) Theory of cross-correlation analysis of PIV images. Appl Sci Res 49:191–215. doi:10.1007/BF00384623

Keane RD, Adrian RJ, Zhang Y (1995) Super-resolution particle imaging velocimetry. Meas Sci Tech 6:754–768. doi:10.1088/0957-0233/6/6/013

Kumar A, Cierpka C, Williams SJ, Kähler CJ, Wereley ST (2011) 3D3C velocimetry measurements of an electrothermal microvortex using wavefront deformation PTV and a single camera. Microfluid Nanofluid 10:355–365. doi:10.1007/s10404-010-0674-4

Lei YC, Tien WH, Duncan J, Paul M, Ponchaut N, Mouton C, Dabiri D, Rösgen T, Hove J (2012) A vision-based hybrid particle tracking velocimetry (PTV) technique using a modified cascade correlation peak-finding method. Exp Fluids. doi:10.1007/s00348-012-1357-6

Li D, Zhang Y, Sun Y, Yan W (2008) A multi-frame particle tracking algorithm robust against input noise. Meas Sci Tech 19:105401. doi:10.1088/0957-0233/19/10/105401

Lynch K, Scarano F (2013) A high-order time-accurate interrogation method for time-resolved PIV. Meas Sci Tech 24:035305. doi:10.1088/0957-0233/24/3/035305

Maas HG (1992) Digitale Photogrammetrie in der dreidimensionalen Strömungsmesstechnik. PhD thesis, ETH Zürich

Malik NA, Dracos T, Papantoniou DA (1993) Particle tracking velocimetry in three-dimensional flows. Exp Fluids 15:279–294. doi:10.1007/BF00223406

Nishino K, Kasagi N, Hirata M (1989) Three-dimensional particle tracking velocimetry based on automated digital image processing. J Fluids Eng 111:384–391. doi:10.1115/1.3243657

Ohmi K, Li HY (2000) Particle-tracking velocimetry with new algorithms. Meas Sci Tech 11:603–616. doi:10.1088/0957-0233/11/6/303

Okamoto K, Hassan YA, Schmidl WD (1995) New tracking algorithm for particle image velocimetry. Exp Fluids 19:342–347. doi:10.1007/BF00203419

Okamoto K, Nishio S, Kobayashi T, Saga T, Takehara K (2000) Evaluation of the 3D-PIV standard images (PIV-STD project). J Vis 3:115–123. doi:10.1007/BF03182404

Ouellette NT, Xu H, Bodenschatz E (2006) A quantitative study of three-dimensional Lagrangian particle tracking algorithms. Exp Fluids 40:301–313. doi:10.1007/s00348-005-0068-7

Pereira F, Stüer H, Graff EC, Gharib M (2006) Two-frame 3D particle tracking. Meas Sci Tech 17:1680–1692. doi:10.1088/0957-0233/17/7/006

Raffel M, Willert C, Wereley ST, Kompenhans J (2007) Particle image velocimetry. A practical guide. Springer, Berlin

Rapp C, Manhart M (2011) Flow over periodic hills: an experimental study. Exp Fluids 51:247–269. doi:10.1007/s00348-011-1045-y

Scarano F (2013) Tomographic PIV: principles and practice. Meas Sci Tech 24:012001. doi:10.1088/0957-0233/24/1/012001

Scharnowski S, Kähler CJ (2013) On the effect of curved streamlines on the accuracy of PIV vector fields. Exp Fluids 54:1435. doi:10.1007/s00348-012-1435-9

Sciacchitano A, Scarano F, Wieneke B (2012) Multi-frame pyramid correlation for time-resolved PIV. Exp Fluids 53:1087–1105. doi:10.1007/s00348-012-1345-x

Takehara K, Adrian RJ, Etoh GT, Christensen KT (2000) A Kalman tracker for super-resolution PIV. Exp Fluids 29:S034–S041. doi:10.1007/s003480070005

Wereley ST, Meinhart CD (2001) Second-order accurate particle image velocimetry. Exp Fluids 31:258–268. doi:10.1007/s003480100281

Westerweel J (2008) On velocity gradients in PIV interrogation. Exp Fluids 44:831–842. doi:10.1007/s00348-007-0439-3

Acknowledgments

The financial support from the European Community’s Seventh Framework program (FP7/2007-2013) under Grant agreement No. 265695 and from the German Research Foundation (DFG) under the individual grants program KA 1808/8 is gratefully acknowledged.

Additional information

This article is part of the Topical Collection on Application of Laser Techniques to Fluid Mechanics 2012.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

Cierpka, C., Lütke, B. & Kähler, C.J. Higher order multi-frame particle tracking velocimetry. Exp Fluids 54, 1533 (2013). https://doi.org/10.1007/s00348-013-1533-3

DOI: https://doi.org/10.1007/s00348-013-1533-3