Abstract

We consider the problem of approximating a function f from an Euclidean domain to a manifold M by scattered samples \((f(\xi _i))_{i\in \mathcal {I}}\), where the data sites \((\xi _i)_{i\in \mathcal {I}}\) are assumed to be locally close but can otherwise be far apart points scattered throughout the domain. We introduce a natural approximant based on combining the moving least square method and the Karcher mean. We prove that the proposed approximant inherits the accuracy order and the smoothness from its linear counterpart. The analysis also tells us that the use of Karcher’s mean (dependent on a Riemannian metric and the associated exponential map) is inessential and one can replace it by a more general notion of ‘center of mass’ based on a general retraction on the manifold. Consequently, we can substitute the Karcher mean by a more computationally efficient mean. We illustrate our work with numerical results which confirm our theoretical findings.

Similar content being viewed by others

1 Introduction

Let \(f :\Omega \subset \mathbb {R}^s \rightarrow M\) with M a Riemannian manifold be an unknown function and we only know its values at a set of distinct points \((\xi _i)_{i\in \mathcal {I}}\subset \bar{\Omega }\). We are concerned with finding an approximant to f. Such an approximation problem for manifold-valued data arises in numerical geometric integration [23], diffusion tensor interpolation [7] and more recently in fast online methods for dimensionality reduced-order models [5]. In a Stanford press release associated with the aeronautical engineering publications [3–5], it is emphasized that being able to accurately and efficiently interpolate on manifolds is a key to fast online prediction of aerodynamic flutter, which, in turn, may help saving lives of pilots and passengers.

In the aforementioned references it was implicitly assumed that the data points \((f(\xi _i))_{i\in \mathcal {I}} \in M\) are close enough so that they can all be mapped to a single tangent space \(T_pM\) by the inverse exponential map \(\log \). In these previous works the base point \(p \in M\) is typically one of \((f(\xi _i))_{i\in \mathcal {I}}\) and the choice can be quite arbitrary. In this setting, the problem simply reduces to a linear approximation problem on the tangent space \(T_pM\). To approximate the value \(f(x) \in M\), use any standard linear method (polynomial, spline, radial basis function etc.) to interpolate the values \((\log (p,(f(\xi _i)))_{i\in \mathcal {I}}\subset T_pM\) at the abscissa \((\xi _i)_{i\in \mathcal {I}}\), then evaluate the interpolant Q at the desired value x, and finally apply the exponential map to get the approximation \(f(x) \sim \exp (p,Q(x))\).

This ‘push-interpolate-pull’ technique only works when all the available data points \((f(\xi _i))_{i\in \mathcal {I}}\) fall within the injectivity radius of the point p, and the method only provides an approximation for f(x) if x is near p. In this case the problem is local, and the topology of the manifold plays no role. One may then question what would be the difference if one uses the push-interpolate-pull approach but with the exponential map replaced by an arbitrary chart. With the exponential map, the push-interpolate-pull method respects the symmetry, if any, of the manifold. However, it is not clear what is the practical advantage of the latter and if respecting symmetry is not the main concern, one is free to replace the exponential map by a retraction (see, e.g., [1, 11]) on the manifold.

The problem is more challenging when we have available data points \((f(\xi _i))_{i\in \mathcal {I}} \in M\) scattered at different parts of the manifold, and the manifold has a nontrivial topology. In this setting, we desire an approximation method with the following properties:

-

(i)

The method is well-defined as long as the scattered data sites are reasonably close locally, but can otherwise be far apart globally.

-

(ii)

The approximant should provide a decent approximation order when f is sufficiently smooth. Since standard approximation theory (see for example chapter 3 of [9]) tells us that for linear data there are methods which provide an accuracy of \(O(h^m)\) when f is \(C^m\) smooth, and \(h=\min _{i\ne j} |\xi _i-\xi _j|\) is the global meshwidth, it is natural to ask for a method for manifold-valued data with the same accuracy order.

-

(iii)

The approximant itself should be continuous.

-

(iv)

The approximant should be efficiently computable for any given x.

Such a method ought to ‘act locally but think globally’, meaning that the approximating value should only depend on the data \(f(\xi _i)\) for \(\xi _i\) near x, but yet the approximant should be continuous and accurate for all x in the domain. This forces the method to be genuinely nonlinear.

In the shift-invariant setting, i.e. when \(\Omega = \mathbb {R}^s\) and \((\xi _i)_{i\in \mathcal {I}}=h\mathbb {Z}^s\), the above problem was solved successfully first by a subdivision technique [13, 32] and more recently by a method based on combining a general quasi-interpolant with the Karcher mean [14]. Even more recently, the work [17] used a projection-based approach to generalize B-spline quasi-interpolation for regularly spaced data. In either case, it was shown that an approximation method for linear data can be suitably adapted to manifold-valued data without jeopardizing the accuracy or smoothness properties of the original linear method. This kind of (smoothness or approximation order) equivalence properties are analyzed by a method known as proximity analysis. It is also shown that in certain setups, the smoothness or approximation order equivalence can breakdown in unexpected ways [10, 11, 17, 30–32].

Note that all the previous work assumes ‘structured data’ in the sense that samples are taken on a regular grid or the nodes of a triangulation of a domain. However, there exist several applications (for instance in model reduction applications [3–5]) where such structured samples are not available but only scattered samples are provided.

The case of scattered data interpolation has not been treated so far and in this paper we provide a solution to the above problem in the multivariate scattered data setting. More precisely we combine the ideas of [14, 16] with the classical linear theory of scattered data approximation [29] to arrive at approximants which satisfy (i)-(iv) above.

We give a brief outline of this work. The following Sect. 2 presents a brief overview of approximation results for scattered data approximation of Euclidean data. Then in Sect. 3 we present our generalization of the linear theory to the manifold-valued setting. This section also contains our main result regarding the approximation power of our nonlinear construction. It turns out that our scheme retains the optimal approximation rate as expected from the linear case. This is formalized in Theorem 3.5. In this theorem the dependence of the approximation rate on the geometry of M is made explicit and appears in form of norms of iterated covariant derivatives of the \(\log \) function of M. To measure the smoothness of an M-valued function we utilize a smoothness descriptor introduced in [16] and which forms a natural generalization of Hölder norms to the manifold-valued setting. Our results also hold true for arbitrary choices of retractions. We discuss this extension in Sect. 3.3. Finally in Sect. 4 we present numerical experiments for the approximation of functions with values in the sphere and in the manifold of symmetric positive definite (SPD) matrices. In all cases the approximation results derived in Sect. 3 are confirmed. We also examine an application to the interpolation of reduced order models and compare our method to the method introduced in [6] where it turns our that our method delivers superior approximation power.

2 Scattered data approximation in linear spaces

In this section we present classical results concerning the approximation of scattered data in Euclidean space. Our exposition mainly follows the monograph [29].

2.1 General setup

We start by describing a general setup for scattered data approximation. Let \(\Omega \subset \) be an open domain and \(\bar{\Omega }\) its closure. Let \(\Xi = (\xi _i)_{i\in \mathcal {I}}\subset \bar{\Omega }\subset \mathbb {R}^s\) be a set of sites and \(\Phi :=(\varphi _i)_{i\in \mathcal {I}}\subset C_c(\bar{\Omega },\mathbb {R})\) a set of basis functions, where \(C_c(\bar{\Omega },\mathbb {R})\) denotes the set of compactly supported real-valued continuous functions on \(\bar{\Omega }\). The linear quasi-interpolation procedure, applied to a continuous function \(f:\bar{\Omega }\rightarrow \mathbb {R}\), is defined as

Essential for the approximation power of the operator \(\mathbf {Q}\) is the property that polynomials up to a certain degree are reproduced:

Definition 2.1

The pair \((\Xi ,\Phi )\) reproduces polynomials of degree k if

where \(\mathcal {P}_k(\mathbb {R}^s)\) denotes the space of polynomials of (total) degree \(\le k\) on \(\mathbb {R}^s\).

If \((\Xi ,\Phi )\) reproduces polynomials of degree \(k\ge 0\) then necessarily

Definition 2.2

We define the local meshwidth \(h:\bar{\Omega } \rightarrow \mathbb {R}_{\ge 0}\) by

where

and \(|\cdot |\) denotes the Euclidean norm.

The following example gives a simple procedure to interpolate univariate data with polynomial reproduction degree 1.

Example 2.3

Let \(\Omega =(0,1)\), \(0=\xi _1<\xi _2\dots <\xi _n=1\) and

These functions, also known as hat functions, reproduce polynomials up to degree 1. The local meshwidth for \(\xi _i\le x < \xi _{i+1}\) is

We define the \(C^k\) seminorm of \(f:\Omega \rightarrow \mathbb {R}\) on \(U\subset \Omega \) by

where for \(\mathbf {l}=(l_1,\dots ,l_s)\in \mathbb {N}^s\) we define \(|\mathbf {l}|:=\sum _{i=1}^s l_i\) and

Additionally we define \(\partial ^{\mathbf {l}}f=f\) if \(\mathbf {l}=(0,\dots ,0)\). To estimate the approximation error at \(x\in \Omega \) we use the set

Note that if \(\Omega \) is convex we have \(\Omega _x\subset \Omega \) for all \(x\in \Omega \). The following result gives a bound for the approximation error at \(x\in \Omega \) in terms of the local meshwidth, the polynomial reproduction degree and the \(C^k\) seminorm of f on \(\Omega _x\).

Theorem 2.4

Let \(\Omega \subset \mathbb {R}^s\) be an open domain, \(k>0\) a positive integer, \(\Xi = (\xi _i)_{i\in \mathcal {I}}\subset \bar{\Omega }\subset \mathbb {R}^s\) a set of sites and \(\Phi =(\varphi _i)_{i\in \mathcal {I}}\subset C_c(\bar{\Omega },\mathbb {R})\) a set of basis functions. Assume that \((\Xi ,\Phi )\) reproduces polynomials of degree smaller than k. Then there exists a constant \(C>0\), depending only on k and s such that for all \(f\in C^k(\Omega ,\mathbb {R})\) and \(x\in \Omega \) with \(\Omega _x\subset \Omega \) and \(|f|_{C^k(\Omega _x,\mathbb {R})}<\infty \) we have

We omit a proof here as a general theorem will be proven in the next section. If \(\Omega _x \varsubsetneq \Omega \) we can consider an extension operator \(E_x:C^k(\Omega ,M)\rightarrow C^k(\Omega \cup \Omega _x,M)\). Then the estimate (4) with a constant C also depending on the domain \(\Omega \) holds true (see Remark 3.6). In contrast to other results, such as those in [29], Theorem 2.4 poses no restrictions on the sites \(\Xi = (\xi _i)_{i\in \mathcal {I}}\). In the following subsection we will show how the approximation results in [29] follow as a corollary to the previous Theorem 2.4.

2.2 Moving least squares

In this subsection, we will follow [29] and show how to construct a set of compactly supported basis functions \(\Phi =(\varphi _i)_{i \in \mathcal {I}}\) with polynomial reproduction degree k for a set of sites \(\Xi = (\xi _i)_{i\in \mathcal {I}}\subset \bar{\Omega }\subset \mathbb {R}^s\) which is \((k,\delta )\)-unisolvent.

Definition 2.5

A set of sites \(\Xi = (\xi _i)_{i\in \mathcal {I}}\subset \bar{\Omega }\subset \mathbb {R}^s\) is called \((k,\delta )\)-unisolvent if there exists no \(x\in \bar{\Omega }\) and \(p\in \mathcal {P}_k(\mathbb {R}^s)\) with \(p\ne 0\) and \(p(\xi _i)=0\) for all \(i \in \mathcal {I}\) with \(|\xi _i-x|\le \delta \).

Let \(\alpha :[0,\infty ) \rightarrow [0,1]\) with \(\alpha (0)=1,\ \alpha (z)=0\) for \(z\ge 1\) and \(\alpha '(0)=\alpha '(1)=0\), e.g. the Wendland function defined by

For every \(i\in \mathcal {I}\) and \(x\in \bar{\Omega }\) we define

where \(p \in \mathcal {P}_k(\mathbb {R}^s)\) is chosen such that the basis functions \(\Phi =(\varphi _i)_{i \in \mathcal {I}}\) satisfy the polynomial reproduction property (2) of degree k, i.e.

As \(\Xi \) is \((k,\delta )\)-unisolvent the left hand side of (6) can be regarded as an inner product of p and q. Furthermore \(q\rightarrow q(x)\) is a linear functional on \(\mathcal {P}_k(\mathbb {R}^s)\). Hence by the Riesz representation theorem there exists a unique polynomial \(p\in \mathcal {P}_k(\mathbb {R}^s)\) with (6).

Note that choosing a basis on \(\mathcal {P}_k(\mathbb {R}^s)\) one can compute p by solving a linear system of equations. Hence we can compute \(\varphi (x)\) in a reasonable time and \(\mathbf {Q}\) satisfies (iv) from the introduction. In [29] it is shown that the function \(\varphi \) has the same smoothness as \(\alpha \). This shows that \(\mathbf {Q}\) satisfies (iii) from the introduction.

The theory in [29] considers a quasi-uniform set of sites \(\Xi \) as defined below.

Definition 2.6

The pair \((\Xi ,\delta )\) is called quasi-uniform with constant \(c>0\) if

where \(q_{\Xi }\) is the separation distance defined by

For a shorter notation we introduce the symbol \([n] := \{1,\dots , n\}\).

Example 2.7

Let \(\Omega =(-1,1)\) and \(k, n\in \mathbb {N}\). For \(i \in [n]\) we choose the node \(\xi _i\) uniformly at random in the interval \((-1+2(i-1)/n,-1+2i/n)\) and \(\delta =2(k+1)/n\). Then the set of sites \(\Xi =(\xi _i)_{i \in [n]}\) is \((k,\delta )\)-unisolvent. However, in order to make \(\Xi \) quasi-uniform the constant \(c>0\) can get arbitrarily large as \(q_\Xi \) can get arbitrarily small.

Example 2.8

Let \(\Omega =(-1,1)\) and \(k,n\in \mathbb {N}\). For \(i \in [n]\) we choose the node \(\xi _i\) uniformly at random in the interval \([-1+(4i-3)/(2n),-1+(4i-1)/(2n)]\) and \(\delta =(2k+3)/n\). Then the set of sites \(\Xi =(\xi _i)_{i\in [n]}\) is \((k,\delta )\)-unisolvent and quasi-uniform with \(c=2(2k+3)\).

The following theorem is the main result of [29] regarding the approximation with moving least squares.

Theorem 2.9

[29] Let \(\Omega \subset \mathbb {R}^s\) be an open and convex domain, \(k>0\) a positive integer, \(\Xi = (\xi _i)_{i\in \mathcal {I}}\subset \bar{\Omega }\subset \mathbb {R}^s\) a \((k-1,\delta )\)-unisolvent set of sites and \(\Phi =(\varphi _i)_{i\in \mathcal {I}}\subset C_c(\bar{\Omega },\mathbb {R})\) the basis functions as defined in (5). Let \(\delta >0\) and assume that \((\Xi ,\delta )\) is quasi-uniform with \(c>0\). Then there exists a constant \(C>0\), depending only on c, k and s such that for all \(f\in C^k(\Omega ,\mathbb {R})\) with \(|f|_{C^k(\Omega ,\mathbb {R})}<\infty \) we have

We show that Theorem 2.9 is a consequence of Theorem 2.4.

Proof

Note that \(h(x)=\sup _{i\in \mathcal {I}(x)} |\xi _i - x| \le \delta \). Due to [29, Theorem 4.7(2)] \(\sup _{i\in \mathcal {I}(x)}|\varphi _i(x)|\) can be bounded by a constant depending only on k, c and s. We now show that \(|\mathcal {I}(x)|\) can be bounded by a constant depending only on c and s. Note that

Hence the pairwise disjoint balls with centers \((\xi _i)_{i \in \mathcal {I}(x)}\) and radii \(q_{\Xi }\) lie in the ball with center x and radius \(\delta +q_{\Xi }\). As the fraction \((\delta +q_{\Xi })/q_{\Xi }\) is bounded from above by \((1+c)\) we have that \(\sup _{x \in \Omega }\ |\ \mathcal {I}(x)|\) is bounded by the number of balls with radius 1 that fit into a ball of radius \((1+c)\). In the following we use the letter C as a symbol for a constant whose value may change from equation to equation. By Theorem 2.4 we have,

\(\square \)

3 Scattered data approximation in manifolds

The present section extends Theorems 2.4 and 2.9 to the case of manifold-valued functions \(f:\Omega \rightarrow M\) with a Riemannian manifold M. First, in Sect. 3.1 we present a geometric generalization of the operator \(\mathbf {Q}\) to the manifold-valued case. The construction is based on the idea to replace affine averages by a Riemannian mean as studied e.g. in [21]. Subsequently, in Sect. 3.2 we study the resulting approximation error. Finally, in Sect. 3.3 we extend our results to the case of more general notions of geometric average, induced by arbitrary retractions as studied e.g. in [15].

3.1 Definition of Riemannian moving least squares

In this section we consider a Riemannian manifold M with distance metric \(d :M\times M \rightarrow \mathbb {R}_{\ge 0}\) and metric tensor g, inducing a norm \(|\cdot |_{g(p)}\) on the tangent space \(T_pM\) at \(p\in M\).

Extending the classical theory which we briefly described in Sect. 2 we now aim to construct approximation operators for functions \(f:\Omega \rightarrow M\). We follow the ideas of [14, 16, 26–28] where the sum in (1) is interpreted as a weighted mean of the data points \((f(\xi _i))_{i\in \mathcal {I}(x)}\). Due to (3) this is justified.

For weights \(\Gamma =(\gamma _i)_{i\in \mathcal {I}}\subset \mathbb {R}\) with \(\sum _{i\in \mathcal {I}}\gamma _i = 1\) and data points \(\mathcal {P}=(p_i)_{i\in \mathcal {I}}\subset M\) we can define the Riemannian average

One can show [21, 28] that \(av_M(\Gamma ,\mathcal {P})\) is a well-defined operation, if the diameter of the set \(\mathcal {P}\) is small enough:

Theorem 3.1

([2, 28]) Given a weight sequence \(\Gamma =(\gamma _i)_{i\in \mathcal {I}}\subset \mathbb {R}\) with \(\sum _{i\in \mathcal {I}}\gamma _i = 1\). Let \(p_0\in M\) and denote for \(\rho >0\) by \(B_\rho \) the geodesic ball of radius \(\rho \) around \(p_0\). Then there exist \(0<\rho _1\le \rho _2<\infty \), depending only on \(\sum _{i\in \mathcal {I}}|\gamma _i|\) and the geometry of M such that for all points \(\mathcal {P}=(p_i)_{i\in \mathcal {I}}\subset B_{\rho _1}\) the functional \(\sum _{i\in \mathcal {I}}\gamma _i d(p,p_i)^2\) assumes a unique minimum in \(B_{\rho _2}\).

Whenever the assumptions of Theorem 3.1 hold true, the Riemannian average is uniquely determined by the first order condition

which could alternatively be taken as a definition of the weighted average in M, see also [28].

We can now define an M-valued analogue for Eq. (1).

Definition 3.2

Denoting \(\Phi (x):=(\varphi _i(x))_{i\in \mathcal {I}}\subset \mathbb {R}\) and \(f(\Xi ):=(f(\xi _i))_{i\in \mathcal {I}}\subset M\) we define the nonlinear moving least squares approximant

It is clear that in the linear case this definition coincides with (1). Furthermore it is easy to see that the smoothness of the basis functions \(\Phi =(\varphi _i)_{i\in \mathcal {I}}\) gets inherited by the approximation procedure \(\mathbf {Q}^M\), see e.g. [27]. Hence our approximation operator \(\mathbf {Q}^M\) satisfies (iii) from the introduction.

Remark 3.3

We wish to emphasize that the approximation procedure as defined in Definition 3.2 is completely geometric in nature. In particular it is invariant under isometries of M. In mechanics this leads to the desirable property of objectivity. The push-interpolate-pull described in the introduction does in general not have this property.

3.2 Approximation error

We now wish to assess the approximation error of the nonlinear operator \(\mathbf {Q}^M\) and generalize Theorem 2.4 to the M-valued case.

3.2.1 The smoothness descriptor of manifold-valued functions

Two basic things need to be considered to that end. First we need to decide how we measure the error between the original function f and its approximation \(\mathbf {Q}^Mf\). This will be done pointwise using the geodesic distance \(d:M \times M \rightarrow \mathbb {R}_{\ge 0}\) on M. Slightly more subtle is the question what is the right analogue to the term \(\Vert f\Vert _{C^k(\Omega ,\mathbb {R})}\) in the manifold-valued case? In [16] a so-called smoothness descriptor has been introduced to measure norms of derivatives of M-valued functions. Its definition requires the notion of covariant derivative in a Riemannian manifold. With \(\frac{D}{dx^l}\) we denote the covariant partial derivative along f with respect to \(x^l\). That is, given a function \(f:\Omega \rightarrow M\) and a vector field \(W:\Omega \rightarrow TM\) attached to f, i.e., \(W(x)\in T_{f(x)}M\) for all \(x\in \Omega \). Then in coordinates on M, the covariant derivative of W in \(x^l\) reads

where we sum over repeated indices and denote with \({\Gamma }_{ij}^{r}\) the Christoffel symbols associated to the metric of M [8]. For iterated covariant derivatives we introduce the symbol \(\mathcal {D}^{{\mathbf {l}}}f\) which means covariant partial differentiation along f with respect to the multi-index \(\mathbf {l}\) in the sense that

Note that (9) differs from the usual multi-index notation, which cannot be used because covariant partial derivatives do not commute. The smoothness descriptor of an M-valued function is defined as follows.

Definition 3.4

For a function \(f:\Omega \rightarrow M\), \(k\ge 1\) and \(U\subset \Omega \) we define the k-th order smoothness descriptor

The smoothness descriptor as defined above represents a geometric analogue of the classical notions of Hölder norms and seminorms. Note that, even in the Euclidean case (for instance \(M=\mathbb {R}\)) the expression \(\Theta _{k,U}(f)\) is not equal to the Hölder seminorm \(|f|_{C^k(U,\mathbb {R})}\), as additional terms are present in the definition of \(\Theta _{k,U}(f)\). But we have the implications

In the proof of Theorem 3.5 it will become clear why the additional terms in \(\Theta _{k,U}(f)\) are needed in the case of general M where, in contrast to \(M=\mathbb {R}\), higher order covariant derivatives of the logarithm mapping need not vanish, compare also Remark 3.7 below.

3.2.2 Further geometric quantities

Coming back to the anticipated generalization of Theorem 2.4, we also aim to quantify exactly to which extent the approximation error depends on the geometry of M. To this end let \(\log (p,\cdot ) :M \rightarrow T_pM\) be the inverse of the exponential map at p. Denote by \(\nabla _1\), \(\nabla _2\) the covariant derivative of a bivariate function with respect to the first and second argument, respectively. In particular, for \(l\in \mathbb {N}\) we will require the derivatives

and their norms

3.2.3 Main approximation result

We now state and prove our main result.

Theorem 3.5

Let \(\Omega \subset \mathbb {R}^s\) be an open domain, \(k>0\) a positive integer, \(\Xi = (\xi _i)_{i\in \mathcal {I}}\subset \bar{\Omega }\subset \mathbb {R}^s\) a set of sites and \(\Phi =(\varphi _i)_{i\in \mathcal {I}}\subset C_c(\bar{\Omega },\mathbb {R})\). Assume that \((\Xi ,\Phi )\) reproduces polynomials of degree smaller than k. Then there exists a constant \(C>0\), depending only on k and s, such that for all \(f\in C^k(\Omega ,M)\) and \(x\in \Omega \) with \(\Omega _x\subset \Omega \) and \(\Theta _{k,\Omega _x}(f)<\infty \) we have

Remark 3.6

If \(\Omega _x \varsubsetneq \Omega \) we can consider an extension operator (see e.g. [19]) \(E_x:C^k(\Omega ,M)\rightarrow C^k(\Omega \cup \Omega _x,M)\). Then the estimate (10) with f(y) replaced by \(E_xf(y)\) and a constant C also depending on the domain \(\Omega \) holds true.

Proof

We shall use the balance law (8) which implies that we can write

Now we consider the function \(G:\Omega \times \Omega \rightarrow TM\) defined by

Since, for fixed \(x\in \Omega \), the function G maps into a linear space we can perform a Taylor expansion of G in y around the point \((x,x)\in \Omega \times \Omega \) and obtain

where for any \(l=(l_1,\dots ,l_s) \in \mathbb {N}^s\) and \(z=(z_1,\dots ,z_s) \in \mathbb {R}^s\) we define

and

We insert (13) into (11) and get the following expression for \(\varepsilon (x)\):

Exchanging summation order in (15) yields

We will show that \((I)=0\) and \((II)=O(h(x)^k)\) which implies our claim.

Let us start by showing that \((I)=0\). As a first observation we note that, due to (3), we can write

We claim that \((I_{\mathbf {l}})=0\) for all \(\mathbf {l}\in \mathbb {N}^s\) with \(|\mathbf {l}\;|<k\). Indeed, pick \(x_*\in \Omega \) arbitrary. Then, by the polynomial reproduction property (2) and \(k\le m+1\) we get

Setting \(x_*= x\) in (16) yields

which proves the desired claim.

We now move on to prove our second claim, namely that \((II)=O(h(x)^k)\). To this end we need to estimate, for any \(\mathbf {l} \in \mathbb {N}^s\) with \(|\mathbf {l}\;|=k\) the quantity

To this end we consider, for fixed \(\mathbf {l}\) and \(i\in \mathcal {I}(x)\), the quantity \(R_{\mathbf {l}}(x,\xi _i)\). In the following we use the letter C as a symbol for a constant whose value may change from equation to equation. Inserting Definition (12) and using the chain rule we obtain that

which can be estimated by

Inserting estimate (18) into the definition (14) of \(R_{\mathbf {l}}\) we get that

Finally, putting (19) into (17) we get that

Summing up over all \(\mathbf {l}\in \mathbb {N}^s\) with \(|\mathbf {l}\;| = k\) we get the desired estimate. \(\square \)

Two remarks are in order regarding Theorem 3.5.

Remark 3.7

Clearly, in the linear case higher order (i.e. higher than order 1) derivatives of the logarithm mapping \(\log (p,q)=q-p\) vanish. Using this fact it is easy to see that our proof of Theorem 3.5 reduces to Theorem 2.4 in the linear case.

Remark 3.8

The estimate in Theorem 3.5 completely separates the error contributions of f and of the geometry of M. We thus see that the only geometric quantity which influences the approximation consists of iterated covariant derivatives of the logarithm mapping.

Using Theorem 3.5 we can now state and prove a geometric generalization to Wendland’s main theorem on moving least squares approximation, e.g., Theorem 2.9.

Theorem 3.9

Let \(\Omega \subset \mathbb {R}^s\) be an open and convex domain, \(k>0\) a positive integer, \(\Xi = (\xi _i)_{i\in \mathcal {I}}\subset \bar{\Omega }\subset \mathbb {R}^s\) a \((k-1,\delta )\)-unisolvent set of sites and \(\Phi =(\varphi _i)_{i\in \mathcal {I}}\subset C_c(\bar{\Omega },\mathbb {R})\) the basis functions as defined in (5). Let \(\delta >0\) and assume that \((\Xi ,\delta )\) is quasi-uniform with \(c>0\). Then there exists a constant \(C>0\), depending only on c, k and s such that for all \(f\in C^k(\Omega ,M)\) with \(\Theta _{k,\Omega }(f)<\infty \) we have

Proof

The proof proceeds exactly as the proof of Theorem 2.9, using Theorem 3.5 instead of Theorem 2.4. \(\square \)

Our approximation operator \(\mathbf {Q}^M\) satisfies (i) and (ii) from the introduction.

3.3 Generalization to retraction pairs

The computation of the quasi-interpolant \(\mathbf {Q}^Mf(x)\) requires the efficient computation of the exponential and logarithm mapping of M. For many practical examples of M this is not an issue, however in certain cases (for instance the Stiefel manifold [15]) it is computationally expensive to compute the exponential or logarithm function of a given manifold. Then, alternative functions can sometimes be used. This idea is formalized by the concept of retraction pairs.

Definition 3.10

([15], see also [1, 12]) A pair (P, Q) of smooth functions

is called a retraction pair if

In general P may only be defined locally around M, and Q around the diagonal of \(M\times M\).

Example 3.11

Certainly, the pair \((\exp ,\log )\) satisfies the above assumptions [8], and therefore forms a retraction pair. Let \(S^m=\{x\in \mathbb {R}^{m+1}||x|=1\}\) be the m-dimensional sphere. Here we can define a retraction pair (P, Q) by

where \(\langle \cdot ,\cdot \rangle \) is the standard inner product. We refer to [1] for further examples of retraction pairs for several manifolds of practical interest.

Given a retraction pair (P, Q), we can construct generalized quasi-interpolants \(\mathbf {Q}^{(P,Q)}f(x)\) based on the first order condition (8), which defines a geometric average based on (P, Q) via

The results in [15] show that this expression is locally well-defined. The construction above allows us to define a geometric quasi-interpolant based on an arbitrary retraction pair as follows.

Definition 3.12

Given a retraction pair (P, Q) and denoting \(\Phi (x):=(\varphi _i(x))_{i\in \mathcal {I}}\subset \mathbb {R}\) and \(f(\Xi ):=(f(\xi _i))_{i\in \mathcal {I}}\subset M\) we define the nonlinear moving least squares approximant

The following generalization of Theorem 3.5 holds true.

Theorem 3.13

Let \(\Omega \subset \mathbb {R}^s\) be an open domain, \(k>0\) a positive integer, \(\Xi = (\xi _i)_{i\in \mathcal {I}}\subset \bar{\Omega }\subset \mathbb {R}^s\), \(\Phi =(\varphi _i)_{i\in \mathcal {I}}\subset C_c(\bar{\Omega },\mathbb {R})\) and (P, Q) a retraction pair. Assume that \((\Xi ,\Phi )\) reproduces polynomials of degree smaller than k. Then there exists a constant \(C>0\), depending only on k and s, such that for all \(f\in C^k(\Omega ,M)\) and \(x\in \Omega \) with \(\Omega _x\subset \Omega \) and \(\Theta _{k,\Omega _x}(f)<\infty \) we have

Proof

The proof is completely analogous to the proof of Theorem 3.5 with \(\log \) replaced by Q. \(\square \)

4 Numerical examples

In this section we describe the implementation and an application for our approximation operator \(\mathbf {Q}^M\). In Sect. 4.1 we briefly explain for several manifolds how to compute the Riemannian average. In Sect. 4.2 we present two examples of approximations of manifold-valued functions. Finally in Sect. 4.3 we apply our approximation operator on parameter dependent linear time-invariant systems.

4.1 Computation of Riemannian averages

In this section we briefly explain how the Riemannian average can be computed. For data points \(\mathcal {P}=(p_i)_{i\in \mathcal {I}}\subset M\) with weights \((\gamma _i)_{i\in \mathcal {I}}\subset \mathbb {R}\) we use the iteration \(\phi :M\rightarrow M\) defined by

By (1.5.1)–(1.5.3) of [21] for data points close to each other this iteration converges linearly to the Riemannian average with convergence rate bounded by \(\sup _{i,j\in \mathcal {I}} d^2(p_i,p_j)\) times a factor that depends only on the sectional curvature of M. If \(\mathcal {P}=(p_i)_{i\in \mathcal {I}(x)}=(f(\xi _i))_{i\in \mathcal {I}(x)}\) and \(f\in C^1(\Omega ,M)\) we have

Hence, the convergence rate is bounded by \(|f|^2_{C^1(\Omega ,M)}h(x)^2\) times a constant depending only on the sectional curvature of M. Therefore, our approximation operator \(\mathbf {Q}^M\) satisfies (iv) from the introduction. To perform the iteration (24) we only need to know the exponential and logarithm map of the manifold. For the manifolds of practical interest there are explicit expressions for log and exp available. For the sphere we have for example

With the usual metric on the space of SPD matrices (see e.g. [24]) the exponential and logarithm map are

where \({\text {Exp}}\) denotes the matrix exponential and \({\text {Log}}\) the matrix logarithm. See [20, 25] for a survey of different methods for the computation of the Karcher mean of SPD matrices.

The exponential and logarithm map on the space of invertible matrices are

An alternative way to compute the Karcher mean is to use Newton-like methods. See [22] for the computation of the Karcher mean on the sphere and the space of orthogonal matrices using Newton-like methods.

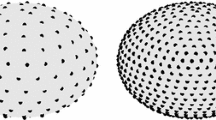

4.2 Interpolation of a sphere-valued and a SPD-valued function

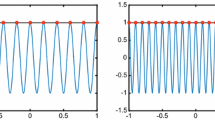

In this section we present two examples for the interpolation of manifold-valued functions. For both interpolations we use the hat functions defined in Example 2.3. Then \(Q^Mf\) is a piecewise geodesic function. Figures 1 and 2 illustrate that the approximation error can be bounded by a constant times the squared local meshwidth as stated in Theorem 3.9.

Example 4.1

We consider the function \(f:[0,1] \rightarrow S^2\) defined by

and the nodes \(\{0\} \cup \{2^{-j}|j\in \{0,1,\dots ,6 \}\}\).

Example 4.2

Let \(\Omega =(0,1)\), \((\xi _1,\ldots ,\xi _7)=(0,0.1,0.3,0.35,0.5,0.8,1)\) and \(f(x)=\cos (x\pi /2)A0+\sin (x\pi /2)A1\), where A0 and A1 are randomly chosen SPD matrices. To measure the error we used the geodesic distance \(d(X,Y)=\Vert {\text {Log}}(X^{-1/2}YX^{-1/2})\Vert _{F}\), where F denotes the Frobenius norm.

4.3 Approximation of reduced order models (ROMs)

In [6], Amsallem and Farhat present a fast method for solving parameter dependent linear time-invariant (LTI) systems. They assume that the solution is known for a few sets of parameters and interpolate the matrices of a reduced order models to get the matrix of a reduced order model for a new set of parameters. Some of these matrices live in a nonlinear space. They choose the ‘push-interpolate-pull’ technique to interpolate the data. We present an adaptation of this method, based upon the theory in this paper, for approximating reduced order models (ROMs). As we will see in some cases our adaptation yields reliable result whereas the original ROM approximation method fails.

We start by introducing linear time invariant systems. Then we present the ROM approximation method and our adaptation.

4.3.1 Linear time-invariant systems

In a parameter dependent LTI system as in [6] we assume that for each \(x \in \Omega \) there exists a unique solution \(z_x :[0,T] \rightarrow \mathbb {R}^q\) of

where \(A:\Omega \rightarrow GL(n)\), with GL(n) the set of invertible matrices of size \(n \times n\), \(B:\Omega \rightarrow \mathbb {R}^{n \times p},\ C :\Omega \rightarrow \mathbb {R}^{q \times n},\ D:\Omega \rightarrow \mathbb {R}^{q \times p}\) and \(u:[0,T] \rightarrow \mathbb {R}^p\). Typically \(z_x\) is an output functional of a dynamical system with control function u and \(n \gg p,q\). Furthermore we assume that A, B, C and D are continuous. For \(k<n\) we define the compact Stiefel manifold by

where \(I_k\) denotes the \(k\times k\) identity matrix. Let \(U,V:\Omega \rightarrow St(n,k)\) define test and trial bases, respectively. The state vector \(w_x(t)\) will be approximated as a linear combination of column vectors of V(x), i.e. \(w_x(t) \approx V(x)\bar{w}_x(t)\) where \(\bar{w}_x\) will be defined by substituting \(w_x\) by \(V(x)\bar{w}_x\) and multiplying Eq. (26 27) from the left by \(U(x)^T\). Hence we get the system of equations

where all operations on matrix-valued functions are defined pointwise. Multiplying Eq. (28 29) from the left by \((U^TV(x))^{-1}\) yields the new LTI system

where \(\mathcal {A}:\Omega \rightarrow \mathbb {R}^{k \times k}\), \(\mathcal {B}:\Omega \rightarrow \mathbb {R}^{k \times p}\), \(\mathcal {C}:\Omega \rightarrow \mathbb {R}^{q \times k}\) and \(\mathcal {D}:\Omega \rightarrow \mathbb {R}^{q \times p}\) are

The aim is that \(\bar{z}_x\) has the same properties and features as \(z_x\). Furthermore k should be much smaller than n so that \(\bar{z}_x(t)\) can be computed (with the help of the matrices \(\mathcal {A}\), \(\mathcal {B}\), \(\mathcal {C}\) and D) significantly faster than \(z_x(t)\).

Let \(O(k):=\{Q \in \mathbb {R}^{k \times k}|QQ^T=I_k \}\) be the space of orthogonal matrices. Note that a coordinate transformation \(\bar{U}(x)=UQ(x)\) where \(Q(x) \in O(k)\) is an orthogonal matrix does not change the matrices \(\mathcal {A},\dots ,\mathcal {D}\) nor the solution \(\bar{z}_x\). Similarly a coordinate transformation \(\bar{V}(x)=VQ(x)\) where \(Q(x) \in O(k)\) is an orthogonal matrix transforms the solution \(\bar{z}_x\) isometrically. We therefore introduce the equivalence relation

The corresponding space St(n, k) / O(k) is also known as the Grassmannian.

Given a parameter dependent LTI-system and assume that for each choice of the parameter \(x\in \Omega \) there exists a ROM with matrices \(U(x),V(x),\mathcal {A}(x),\mathcal {B}(x),\mathcal {C}(x)\). Furthermore assume that the maps \(x\mapsto \Pi (U(x))\) and \(x\mapsto \Pi (V(x))\) where \(\Pi :St(n,k) \rightarrow St(n,k) / O(k)\) denotes the natural projection, are continuous. The maps U, V, \(\mathcal {A}\), \(\mathcal {B}\) and \(\mathcal {C}\) on the other hand do not have to be continuous. The problem of interpolating reduced-order models (ROMs) is: given the reduced order models \((\mathcal {A}(\xi _i),\mathcal {B}(\xi _i),\mathcal {C}(\xi _i))\) for several parameters \(\xi _i,\; i \in \mathcal {I}\) and \(x\in \Omega \) find an approximation for the reduced order model \((\mathcal {A}(x),\mathcal {B}(x),\mathcal {C}(x))\).

As we are aiming for a fast method the running time should be independent of n. In addition to the matrices \((\mathcal {A}(\xi _i),\mathcal {B}(\xi _i),\mathcal {C}(\xi _i))\) we can also use precomputed matrices of size \(\ll n\).

4.3.2 The ROM approximation method

We sketch the method proposed by Amsallem and Farhat in [6]. The algorithm is divided into two steps. In the first step we construct a continuous function \(V^c:\Omega \rightarrow St(n,k)\) with \(V^c\sim V\). To this end we choose \(V_0\in \mathbb {R}^{n \times k}\) such that \(V(x)^TV_0\in \mathbb {R}^{k\times k}\) is invertible for all \(x\in \Omega \). In [6] the matrix \(V_0\) is chosen by \(V_0:=V(\xi _{i})\) for some \(i \in \mathcal {I}\). Then we define f by

for all \(V:\Omega \rightarrow St(n,k)\) where \(\mathcal {P}_{O(k)}\) denotes the closest point projection onto O(k) defined below.

Definition 4.3

We define the closest point projection \(\mathcal {P}_{O(k)}:GL(k) \rightarrow O(k)\) by

To compute the closest point projection onto O(k) in a stable way, one can use the iteration (see e.g. [18])

The next lemma shows that f can be regarded as a map from \(\Omega \) into St(n, k) / O(k).

Lemma 4.4

If \((\tilde{V},V)\) we have

Proof

By the definition of the equivalence relation (36) there exists \(Q:\Omega \rightarrow O(k)\) such that \(\tilde{V}=VQ\). Note that it is enough to prove that

for all \(x\in \Omega \). By left invariance of the closest point projection with respect to orthogonal matrices we have

\(\square \)

Next we prove that f(V) has the same smoothness as \(\Pi (V)\).

Proposition 4.5

Assume that \(\Pi (V):\Omega \rightarrow St(n,k)/O(k)\) is k times differentiable. Then the map \(f(V):\Omega \rightarrow St(n,k)\) is k-times differentiable as well.

Proof

By the assumption there exists a k times differentiable function \(\bar{V}\) with \(\bar{V}\sim V\). By Lemma 4.4 we have \(f(V)=f(\bar{V})\). By the smoothness of the closest point projection we have that \(f(\bar{V})\) is k times differentiable. \(\square \)

Replacing V by \(V^c=f(V)\) in (32 33 34 35) we can define continuous matrix-valued functions \(\mathcal {A}^c,\mathcal {B}^c,\mathcal {C}^c\) for the reduced order models by

where \(P(x)=\mathcal {P}_{O(k)}(V(x)^TV_0)\) for all \(x\in \Omega \). In step 2 the data \(\mathcal {A}^c(x)\) is approximated with respect to the space GL(k) [see (25) for the logarithm and exponential map on GL(k)].

The ROM approximation algorithm interpolates the data \(\mathcal {A}^c(\xi _{i})\) using the ‘push-interpolate-pull’ technique, i.e. it chooses an \(i_0 \in \mathcal {I}\) and maps the data \(\mathcal {A}^c(\xi _{i})\) by the logarithm map \(\log \) with base point \(\mathcal {A}^c(\xi _{i_0})\) to the tangent space at \(\mathcal {A}^c(\xi _{i_0})\). Then the new data is approximated with a method for linear spaces and finally mapped back to the manifold by the exponential map \(\exp \).

The data \(\mathcal {B}^c(x)\) and \(\mathcal {C}^c(x)\) is approximated with a method for linear spaces.

We present an adaptation of the ROM approximation algorithm by using the approximation operator \(\mathbf {Q}^M\) defined in 3.2 to interpolate the data \(\mathcal {A}(x)\) in GL(k).

4.3.3 Numerical experiments

In Section 5.1 of [6] a simple academic example where the ROM approximation method yields good results is shown. In the next example we can only get a reasonable approximation if we use our adaptation.

Example 4.6

In this example we consider an interpolation of LTI-systems without a reduction. Hence we can set \((\mathcal {A},\mathcal {B},\mathcal {C})=(A,B,C)\) and omit step 1 of the ROM Approximation Algorithm. Let

where \(g(x)=4\sin (\pi x)\) and \(\xi _i=\frac{i}{4}\) for \(i \in \{-2,-1,0,1,2\}\). We choose \(i_0=0\) and the hat functions \(\varphi _i(x)\) from Example 2.3 as basis functions. The error for A measured in the Frobenius norm for the ROM-Approximation and its adapted algorithm are illustrated in Fig. 3. An illustration why the ROM approximation method has a large error is shown in Fig. 4. As the matrices A(x) are two dimensional rotation matrices we can see them as points on a circle. The Riemannian average of points \(A_1\) and \(A_2\) with weights \(\lambda _1=\lambda _2=0.5\) is M. However if we transform the points by the logarithm to the tangent space at \(A_0\), interpolate on this tangent space and transform back by the exponential map we get a different point \(\tilde{M}\ne M\).

Illustration for the explanation of the large error in Fig. 3

Example 4.7

We consider the values \(n=3,\ p=q=1\) and the matrices \(A(x):=A_0+xA_1\), \(B(x):=B\) and \(C(x):=C\) for all \(x\in [-1,1]\) where

We set U(x) and V(x) equal to the first 2 columns of the orthogonal matrices of the singular value decomposition of A(x). We choose the data sites from Example 2.8 with \(k=2\). The \(L^\infty \)-norm of the error made in Step 2 of Algorithm 2 is shown in Fig. 5. As we can see the convergence rate is as predicted by Theorem 3.9.

References

Absil, P.A., Mahony, R., Sepulchre, R.: Optimization Algorithms on Matrix Manifolds. Princeton University Press (2009)

Afsari, B.: Riemannian \({L}^p\)-center of mass: existence, uniqueness and convexity. Proc. Am. Math. Soc. 139, 655–673 (2011)

Amsallem, D., Cortial, J., Carlberg, K., Farhat, C.: A method for interpolating on manifolds structural dynamics reduced-order models. Int. J. Num. Methods Eng. 80(9), 1241–1258 (2009)

Amsallem, D., Cortial, J., Farhat, C.: Towards real-time computational-fluid-dynamics-based aeroelastic computations using a database of reduced-order information. Am. Inst. Aeronaut. Astronaut. J. 48(9), 2029–2037 (2010). doi:10.2514/1.J050233

Amsallem, D., Farhat, C.: Interpolation method for adapting reduced-order models and application to aeroelasticity. Am. Inst. Aeronaut. Astronaut. J. 46(7), 1803–1813 (2008). doi:10.2514/1.35374

Amsallem, D., Farhat, C.: An online method for interpolating linear parametric reduced-order models. SIAM J. Sci. Comput. 33(5), 2169–2198 (2011)

Arsigny, V., Fillard, P., Pennec, X., Ayache, N.: Geometric means in a novel vector space structure on symmetric positive definite matrices. SIAM J. Matrix Anal. Appl. 29(1), 328–347 (2007)

Do Carmo, M.P.: Riemannian Geometry. Birkhäuser, Boston (1992)

Ciarlet, P.G.: The finite element method for elliptic problems. Soc. Ind. Appl. Math. (2002)

Duchamp, T., Xie, G., Yu, T.P.-Y.: Single basepoint subdivision schemes for manifold-valued data: time-symmetry without space-symmetry. Found. Comput. Math. 13(5), 693–728 (2013)

Nava-Yazdani, E.E., Yu, T.P.Y.: On Donoho’s log-exp subdivision scheme: choice of retraction and time-symmetry. Multiscale Model. Simul. 9(4), 1801–1828 (2011)

Grohs, P.: Smoothness equivalence properties of univariate subdivision schemes and their projection analogues. Num. Math. 113(2), 163–180 (2009)

Grohs, P.: Approximation order from stability for nonlinear subdivision schemes. J. Approx. Theor. 162(5), 1085–1094 (2010)

Grohs, P.: Quasi-interpolation in Riemannian manifolds. IMA J. Num. Anal (2012)

Grohs, P.: Geometric multiscale decompositions of dynamic low-rank matrices. Compu. Aided Geometr. Des. 30(8), 805–826 (2013)

Grohs, P., Hardering, H., Sander. O.: Optimal a priori discretization error bounds for geodesic finite elements. Found. Comput. Math. (2014)

Grohs, P., Sprecher, M.: Projection-based quasiinterpolation in manifolds. Technical Report 2013-23, Seminar for Applied Mathematics, ETH Zürich, Switzerland (2013)

Higham, N.J.: Computing the polar decomposition with applications. SIAM J. Sci. Comput. 7, 1160–1174 (1986)

Hörmander, L.: The Analysis of Linear Partial Differential Operators: Vol 1.: Distribution Theory and Fourier Analysis. Springer (1983)

Jeuris, B., Vandebril, R., Vandereycken, B.: A survey and comparison of contemporary algorithms for computing the matrix geometric mean. Electr. Trans. Num. Anal (2012)

Karcher, H.: Mollifier smoothing and Riemannian center of mass. Commun. Pure Appl. Math. 30, 509–541 (1977)

Krakowski, K., Hüper, K., Manton, J.H.: On the computation of the Karcher mean on spheres and special orthogonal groups. Conference Paper, Robomat (2007)

Marthinsen, A.: Interpolation in Lie groups. SIAM J. Num. Anal. 37(1), 269–285 (1999)

Moakher, M., Zéraï, M.: The Riemannian geometry of the space of positive-definite matrices and its application to the regularization of positive-definite matrix-valued data. J. Math. Imaging Vis. 40(2), 171–187 (2011)

Rentmeesters, Q., Absil, P.A.: Algorithm comparison for Karcher mean computation of rotation matrices and diffusion tensors. Signal Processing Conference, Barcelona (2011)

Sander, O.: Geodesic finite elements for Cosserat rods. Int. J. Num. Methods Eng. 82(13), 1645–1670 (2010)

Sander, O.: Geodesic finite elements on simplicial grids. Int. J. Num. Methods Eng. 92(12), 999–1025 (2012)

Sander, O.: Geodesic finite elements of higher order. Institut f++r Geometrie und praktische Mathematik, Preprint 356, RWTH Aachen (2013)

Wendland, H.: Scattered Data Approximation. Cambridge University Press (2004)

Xie, G., Yu, T.P.Y.: Smoothness equivalence properties of interpolatory Lie group subdivision schemes. IMA J. Num. Anal. 30(3), 731–750

Xie, G., Yu, T.P.-Y.: Smoothness equivalence properties of general manifold-valued data subdivision schemes. Multiscale Model. Simul. 7(3), 1073–1100 (2008)

Xie, G., Yu, T.P.Y.: Approximation order equivalence properties of manifold-valued data subdivision schemes. IMA J. Num. Anal. 32(2), 687–700 (2012)

Acknowledgments

Open access funding provided by [Austrian university].

Author information

Authors and Affiliations

Corresponding author

Additional information

The research of PG and MS was supported by the Swiss National Fund under Grant SNF 140635. TY is supported in part by National Science Foundation Grant DMS 0915068.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Grohs, P., Sprecher, M. & Yu, T. Scattered manifold-valued data approximation. Numer. Math. 135, 987–1010 (2017). https://doi.org/10.1007/s00211-016-0823-0

Received:

Revised:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00211-016-0823-0