Abstract

We consider a class of multi-player games with perfect information and deterministic transitions, where each player controls exactly one non-absorbing state, and where rewards are zero for the non-absorbing states. With respect to the average reward, we provide a combinatorial proof that a subgame-perfect \(\varepsilon \)-equilibrium exists, for every game in our class and for every \(\varepsilon > 0\). We believe that the proof of this result is an important step towards a proof for the more general hypothesis that all perfect information stochastic games, with finite state space and finite action spaces, have a subgame-perfect \(\varepsilon \)-equilibrium for every \(\varepsilon > 0\) with respect to the average reward criterion.


1 Introduction

We consider a subclass of stochastic games with finite state and action spaces. Shapley (1953) introduced the class of zero-sum stochastic games. He proved that such games have a value, under the assumption that there is a positive stopping probability after each move by the players, or similarly, under the assumption that stage rewards are discounted. Mertens and Neyman (1981) demonstrated that every such game also has a value with respect to the average reward. Vieille (2000a, b) showed that all nonzero-sum two-player stochastic games have an \(\varepsilon \)-equilibrium for the average reward, for every \(\varepsilon >0\).

It follows from the result of Mertens (1987) that multi-player stochastic games with deterministic transitions and with perfect information admit an \(\varepsilon \)-equilibrium for the average reward, for every \(\varepsilon >0\). Thuijsman and Raghavan (1997) showed that they even admit a 0-equilibrium. These \(\varepsilon \)-equilibria are however not always subgame-perfect. An example by Solan and Vieille (2003) demonstrated that a subgame perfect 0-equilibrium need not exist.

The question remains open whether subgame perfect \(\varepsilon \)-equilibria exist, for every \(\varepsilon > 0\). In this paper, we consider a subclass of stochastic games, where each player controls exactly one non-absorbing state, and where non-zero rewards can only be obtained by entering an absorbing state. The technical novelty of this class is that it combines two features that both make it hard to analyse the game: (1) payoffs can be negative in the absorbing states, (2) it may be impossible to move from one non-absorbing state to another non-absorbing state immediately. The technique to deal with these difficulties builds further on those in Flesch et al. (2010a, b), and Kuipers et al. (2013), and may be flexible enough to deal with the entire class of perfect information stochastic games.

For more results on related models, we refer to e.g. Flesch et al. (2014), Flesch and Predtetchinski (2015a, b), Kuipers et al. (2009), Laraki et al. (2013), Purves and Sudderth (2011), and Ummels (2005).

2 The class \(\mathcal {G}\) and playing a subgame perfect \(\varepsilon \)-equilibrium

2.1 The model

We consider the class \(\mathcal {G}\) of games, given by

(1) a nonempty set of players \(N=\{1,\ldots ,n\}\);

(2) exactly two states associated with each player \(t\in N\): one non-absorbing state identified with t, and one absorbing state denoted by \(t^*\); the set of absorbing states is denoted by \(N^*\), and the set of all states is denoted by \(S = N\cup N^*\);

(3) for each state \(t\in N\), a set of states \(A(t) \subseteq N\cup \{t^*\}\) with \(t^*\in A(t)\) and \(t\notin A(t)\); for each state \(t^*\in N^*\), the set \(A(t^*)\) is defined as \(A(t^*) = \{t^*\}\);

(4) for each player \(t\in N\), an associated (reward) vector \(r(t) \in \mathbb {R}^N\).

A game in \(\mathcal {G}\) is played at stages in \(\mathbb {N}\) as follows. At every stage m, exactly one state is active. If \(t\in N\) is active, then player t announces a state in A(t), and the announced state becomes active at the next stage; the rewards to the players are zero at this stage. If \(t^*\in N^*\) is active, then the unique state \(t^*\in A(t^*)\) is active at the next stage (thus, \(t^*\) remains active forever). The stage rewards to the players are then given by r(t), and since r(t) is the reward at every subsequent stage, r(t) is also the (expected) average reward. The game starts with an initial state \(s\in S\).
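The data (1) to (4) and the rules of play translate directly into a small data structure. The Python sketch below is an illustrative encoding, not taken from the paper: players are strings, the absorbing state \(t^*\) is the string for t followed by "*", `r[x][t]` stands for \(r_t(x)\), and the helper names `check_game` and `walk` are hypothetical. For illustration only, `walk` lets every player use one fixed pure choice at every stage, which is a special case of the strategies defined below. The data are game II of Example 2.1, with rewards r(1) = (-2, -1) and r(2) = (-1, -2) as read off from Examples 2.1 and 2.2.

```python
from typing import Dict, List, Tuple

# Hypothetical encoding: player t owns the non-absorbing state "t" and the absorbing state "t*".
Actions = Dict[str, Tuple[str, ...]]    # A(t) for every player t
Rewards = Dict[str, Dict[str, float]]   # r[x][t] = r_t(x), reward to t after absorption at x*


def is_absorbing(state: str) -> bool:
    return state.endswith("*")


def check_game(A: Actions, r: Rewards) -> None:
    """Raise ValueError if A or r violates conditions (3) and (4) of the model."""
    players = set(A)
    for t, moves in A.items():
        star = t + "*"
        if star not in moves:
            raise ValueError(f"{star} must belong to A({t})")
        if t in moves:
            raise ValueError(f"{t} must not belong to A({t})")
        if any(s != star and s not in players for s in moves):
            raise ValueError(f"A({t}) may only contain players and {star}")
        if set(r.get(t, {})) != players:
            raise ValueError(f"r({t}) must give a reward to every player")


def walk(A: Actions, choice: Dict[str, str], start: str, stages: int) -> List[str]:
    """Active states at stages 1..stages when every player t always announces choice[t]."""
    state, visited = start, []
    for _ in range(stages):
        visited.append(state)
        if not is_absorbing(state):
            if choice[state] not in A[state]:
                raise ValueError(f"{choice[state]} is not in A({state})")
            state = choice[state]
        # an absorbing state t* stays active forever, since A(t*) = {t*}
    return visited


# Game II of Example 2.1, rewards read off from Examples 2.1 and 2.2.
A_II: Actions = {"1": ("2", "1*"), "2": ("1", "2*")}
r_II: Rewards = {"1": {"1": -2, "2": -1}, "2": {"1": -1, "2": -2}}
check_game(A_II, r_II)
print(walk(A_II, {"1": "2", "2": "2*"}, "1", 5))  # ['1', '2', '2*', '2*', '2*']
```

Once play reaches an absorbing state the walk never leaves it, which is why only the absorption point matters for the average reward.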

We assume complete information (i.e. the players know all the data of the game), full monitoring (i.e. the players observe the active state and the action chosen by the active player), and perfect recall (i.e. the players remember the entire sequence of active states and actions).

Playing a game in \(\mathcal {G}\) can be interpreted as making an infinite walk in the directed graph \(G = (S,E)\), where \(E = \{(x,y)\mid x\in S \text { and } y\in A(x)\}\). In this paper, whenever we refer to an ordered pair (x, y) as an edge, it is implicit that \(x\in S\) and \(y\in A(x)\), i.e. we mean that (x, y) is an element of E.

Let H be a subgraph of G, and denote the edge-set of H by E(H).

Plans. A plan in H is an infinite sequence of states \(g = (t_m)_{m\in \mathbb {N}}\) (where \(\mathbb {N} = \{1,2, \ldots \}\)), such that \((t_m,t_{m+1}) \in E(H)\) for all \(m\in \mathbb {N}\). A plan in G is simply called a plan. A plan is interpreted as a prescription for play for a game with initial active state \(t_1\). The set of states that become active during play if plan g is executed is denoted by \({\textsc {S}}(g)\subseteq S\), i.e. \({\textsc {S}}(g) = \{t\in S\mid \exists m\in \mathbb {N}: t_m = t\}\), and the set of players that become active during play is denoted by \({\textsc {N}}(g)\subseteq N\), i.e. \({\textsc {N}}(g) = \{t\in N\mid \exists m\in \mathbb {N}: t_m = t\}\). Notice that, if the initial state of g is an element of \(N^*\), then g is of the form \((t^*, t^*, \ldots )\), with \(t^*\in N^*\). Also, if plan g contains a state in \(N^*\), say \(t^*\), and the initial state of g is an element of N, then we must have \(t\in {\textsc {N}}(g)\) and there must be a stage M with \(t_M = t\) and with \(t_m = t^*\) for all \(m>M\). This is interpreted as a prescription for t to announce his absorbing state \(t^*\) at stage M. We say that the plan absorbs at t if this is the case. If \({\textsc {S}}(g) \subseteq N\), then we say that the plan is non-absorbing. We denote by \(\phi _t(g)\) the average reward to player t when play is according to g, i.e. \(\phi _t(g) = r_t(x)\) if g absorbs at x, and \(\phi _t(g) = 0\) if g is non-absorbing. The initial state of plan g is denoted by \({\textsc {first}}(g)\).
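A plan is an infinite sequence, so only plans with a finite description can be stored. The sketch below assumes (this is not the paper's notation) that a plan is eventually periodic and encodes it as a "lasso" (prefix, cycle): the prefix is played once and the cycle is repeated forever, so an absorbing plan has cycle ("x*",). The hypothetical functions `states`, `players`, `first`, `absorbs_at` and `phi` mirror \({\textsc {S}}(g)\), \({\textsc {N}}(g)\), \({\textsc {first}}(g)\), the absorption point and \(\phi _t(g)\).

```python
from typing import Dict, Optional, Set, Tuple

# Hypothetical lasso encoding of an eventually periodic plan:
# play `prefix` once, then repeat `cycle` forever.
Plan = Tuple[Tuple[str, ...], Tuple[str, ...]]


def is_absorbing(state: str) -> bool:
    return state.endswith("*")


def states(g: Plan) -> Set[str]:
    """S(g): all states that become active."""
    prefix, cycle = g
    return set(prefix) | set(cycle)


def players(g: Plan) -> Set[str]:
    """N(g): all players that become active."""
    return {s for s in states(g) if not is_absorbing(s)}


def first(g: Plan) -> str:
    prefix, cycle = g
    return prefix[0] if prefix else cycle[0]


def absorbs_at(g: Plan) -> Optional[str]:
    """Player x if g absorbs at x (its cycle is ("x*",)), None if g is non-absorbing."""
    head = g[1][0]
    return head[:-1] if is_absorbing(head) else None


def phi(r: Dict[str, Dict[str, float]], g: Plan, t: str) -> float:
    """phi_t(g) = r_t(x) if g absorbs at x, and 0 if g is non-absorbing."""
    x = absorbs_at(g)
    return 0 if x is None else r[x][t]


r_II = {"1": {"1": -2, "2": -1}, "2": {"1": -1, "2": -2}}  # game II of Example 2.1
g = (("1", "2"), ("2*",))                                    # the plan (1, 2, 2*, 2*, ...)
print(sorted(players(g)), absorbs_at(g), phi(r_II, g, "1"))  # ['1', '2'] 2 -1
```

Plans that are not eventually periodic cannot be written down this way, but they still have a well-defined \(\phi _t(g)\) under the definition above.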

Paths. A path (or history) in H is a finite sequence \(p = (t_m)_{m=1}^k\) with \(k\ge 1\), such that \((t_m,t_{m+1}) \in E(H)\) for all \(m\in \{1,\ldots ,k-1\}\). A path in G is simply called a path. The number \(k-1\) is called the length of the path. The initial state \(t_1\) of path p is denoted by \({\textsc {first}}(p)\) and the final state \(t_k\) is denoted by \({\textsc {last}}(p)\). If the length of the path is at least 1, i.e. if the path contains at least one edge, we allow ourselves to say that p is a path from \(t_1\) to \(t_k\). We will sometimes want to concatenate a number of paths to make a longer path or a plan, or we may want to concatenate a finite number of paths and a plan to make another plan. We allow concatenation if \(p^1,p^2,\ldots ,p^m\) are paths that satisfy \({\textsc {last}}(p^k) = {\textsc {first}}(p^{k+1})\) for all \(k\in \{1,\ldots ,m-1\}\). The concatenation of these paths is denoted by \(\langle p^1,p^2,\ldots ,p^m\rangle \) and it represents the path that follows the prescription of \(p^1\) from \({\textsc {first}}(p^1)\) to \({\textsc {last}}(p^1) = {\textsc {first}}(p^2)\), then follows the prescription of \(p^2\) until \({\textsc {last}}(p^2) = {\textsc {first}}(p^3)\) is reached, and so on, until \({\textsc {last}}(p^m)\) is reached. Also, if g is a plan with \({\textsc {first}}(g) = {\textsc {last}}(p^m)\), then the plan that first follows the prescription of \(\langle p^1,p^2,\ldots , p^m\rangle \), and then switches to g is denoted by \(\langle p^1,\ldots , p^m,g\rangle \). Finally, if we have an infinite number of paths \(p^1, p^2, \ldots \) with the property \({\textsc {last}}(p^k) = {\textsc {first}}(p^{k+1})\) for all \(k\in \mathbb {N}\), then \(\langle p^1,p^2,\ldots \rangle \) represents the path or plan that subsequently follows the prescription of \(p^1\), \(p^2\), etc. (The concatenation of an infinite number of paths is still a path if only finitely many of them have positive length.)
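Concatenation glues paths at their shared endpoints. The following sketch (with hypothetical names `concat` and `length`, and with paths as tuples of state names) checks the condition \({\textsc {last}}(p^k) = {\textsc {first}}(p^{k+1})\) and writes each junction state only once; it does not check that consecutive states form edges of G, which is assumed. A path of length 0 acts as a neutral element, in line with the remark on infinite concatenations.

```python
from typing import Sequence, Tuple

Path = Tuple[str, ...]


def concat(*paths: Sequence[str]) -> Path:
    """<p^1, ..., p^m>: requires last(p^k) == first(p^{k+1}); junction states appear once."""
    if not paths:
        raise ValueError("need at least one path")
    result = list(paths[0])
    for p in paths[1:]:
        if result[-1] != p[0]:
            raise ValueError(f"cannot concatenate: last = {result[-1]}, first = {p[0]}")
        result.extend(p[1:])  # drop the repeated junction state
    return tuple(result)


def length(p: Sequence[str]) -> int:
    """Number of edges of the path, i.e. k - 1 for a path with k states."""
    return len(p) - 1


p = concat(("1", "2"), ("2",), ("2", "1", "2"))
print(p, length(p))  # ('1', '2', '1', '2') 3
```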
Let P denote the set of all possible paths, and for \(t\in N\), let \(P^t\) denote the set of all paths with endpoint t.

Strategies. A strategy \(\pi ^t\) for player t is a decision rule that, for any path \(p\in P^t\), prescribes a probability distribution \(\pi ^t(p)\) over the elements of A(t). We use the notation \(\Pi ^t\) for the set of strategies for player t. A strategy \(\pi ^t\in \Pi ^t\) is called pure if every prescription \(\pi ^t(p)\) places probability 1 on one of the elements of A(t). We use the notation \(\Pi \) for the set of joint strategies \(\pi = (\pi ^t)_{t\in N}\) with \(\pi ^t\in \Pi ^t\) for \(t\in N\). A joint strategy \(\pi = (\pi ^t)_{t\in N}\) is pure if \(\pi ^t\) is pure for all \(t\in N\), in which case we say that play is deterministic.

In this paper, we will sometimes define joint strategies by formulating a prescription of play for several stages of the game, possibly for the entire game, which holds only so long as players execute actions that are assigned a positive probability by the prescription. In the event that a player chooses an action that is assigned probability 0, a revised prescription is formulated. Specifically, we will consider two types of prescribed play, called default mode and threat mode.

Default mode is characterized by a plan g. During default mode, one of the players on g is active and he is required to follow the prescription of g. Play will stay in default mode characterized by g during the entire game, provided that players indeed follow the plan.

Threat mode is characterized by a triple (g, v, x), where g and v are plans and where x is a player located on g, such that \({\textsc {first}}(v) \in A(x)\) and such that the state on g following the first occurrence of x differs from \({\textsc {first}}(v)\). During threat mode, the active player is either located on g before the first occurrence of x or is the first occurrence of x. If the active player is located before x, then he is required to follow the prescription of g. If the active player is the first occurrence of x on g, then he is required to perform a lottery, in which he places probability \(\varepsilon \) on the switch to v and probability \(1-\varepsilon \) on the continuation of g. (The requirement that \({\textsc {first}}(v)\) differs from the state following x on g ensures that the lottery places positive probability on two different options.) Threat mode ends after the lottery, and play returns to default mode characterized by either g or v, depending on the outcome of the lottery.

The triple (g, v, x) that characterizes threat mode is always chosen such that there exists \(y\in N\) located on g after the first occurrence of x on g, and such that \(\phi _y(v) < \phi _y(g)\). The interpretation here is that y is the threatened player and that the threat consists of the possible switch from plan g to plan v.

We do not yet specify how a revised prescription is formulated once a player plays an action that has probability 0 according to \(\pi \); this is postponed until the proof of our main Theorem 2.1.

Expected rewards. Consider a joint strategy \(\pi \in \Pi \) and a path \(p\in P\). Suppose that the game has developed along the path p and that state \({\textsc {last}}(p)\) is now active. Suppose further that all players, starting at \({\textsc {last}}(p)\), follow the joint strategy \(\pi \), taking p as the history of the game. For \(x\in N\), denote the overall probability of absorption at x by \(\mathbb {P}^{p,\pi }(x)\). In our model, where non-zero rewards only occur in absorbing states, the expected average reward for player t exists, and it can be expressed as

\[\psi _t^p(\pi ) = \sum _{x\in N} \mathbb {P}^{p,\pi }(x)\, r_t(x).\]

If \(\pi \) is a pure joint strategy, then following \(\pi \) results in deterministic play, which can be described by a plan. If we denote this induced plan by \(g^{p,\pi }\), then the expected average reward is given by

\[\psi _t^p(\pi ) = \phi _t(g^{p,\pi }).\]

If a joint strategy is given in terms of prescribed play, then it is sufficient to know the mode of play (default or threat) at \({\textsc {last}}(p)\) and the data to describe that mode. If play is in default mode according to plan g, then the expected reward for an arbitrary player \(t\in N\) is given by

\[\psi _t^p(\pi ) = \phi _t(g).\]

If play is in threat mode according to (g, v, x), then the expected reward for an arbitrary player \(t\in N\) is given by

\[\psi _t^p(\pi ) = (1-\varepsilon )\,\phi _t(g) + \varepsilon \,\phi _t(v).\]
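The two mode formulas differ only by the lottery of threat mode. A minimal sketch, with the hypothetical function name `threat_mode_reward` and exact arithmetic via `fractions.Fraction`, computes the threat-mode expected reward from the vectors \((\phi _t(g))_{t\in N}\) and \((\phi _t(v))_{t\in N}\); setting eps = 0 recovers the default-mode reward \(\phi _t(g)\). The numbers are illustrative values taken from game II of Example 2.1.

```python
from fractions import Fraction
from typing import Dict

Vector = Dict[str, Fraction]  # phi_t(.) for every player t


def threat_mode_reward(phi_g: Vector, phi_v: Vector, eps: Fraction) -> Vector:
    """Expected reward (1 - eps) * phi_t(g) + eps * phi_t(v) in threat mode (g, v, x)."""
    return {t: (1 - eps) * phi_g[t] + eps * phi_v[t] for t in phi_g}


# Illustrative numbers from game II of Example 2.1:
# g absorbs at 2 (rewards (-1, -2)), v absorbs at 1 (rewards (-2, -1)).
phi_g = {"1": Fraction(-1), "2": Fraction(-2)}
phi_v = {"1": Fraction(-2), "2": Fraction(-1)}
expected = threat_mode_reward(phi_g, phi_v, Fraction(1, 10))
print(expected["1"], expected["2"])  # -11/10 -19/10
```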

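Equilibria. Still consider the joint strategy \(\pi \in \Pi \) and a game that has developed along the path \(p\in P\). The joint strategy \(\pi =(\pi ^t)_{t\in N}\in \Pi \) is called a (Nash) \(\varepsilon \)-equilibrium for path p, for some \(\varepsilon \ge 0\), if, for every player \(t\in N\) and every strategy \(\sigma ^t\in \Pi ^t\), the joint strategy \((\sigma ^t,\pi ^{-t})\), in which player t uses \(\sigma ^t\) and every other player s uses \(\pi ^s\), satisfies

\[\psi _t^p(\sigma ^t,\pi ^{-t}) \le \psi _t^p(\pi ) + \varepsilon ,\]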

which means that, given history p, no player t can gain more than \(\varepsilon \) by a unilateral deviation from his proposed strategy \(\pi ^t\) to an alternative strategy \(\sigma ^t\). The joint strategy \(\pi \) is called an \(\varepsilon \)-equilibrium for initial state \(s\in N\) if \(\pi \) is an \(\varepsilon \)-equilibrium for path (s). The joint strategy \(\pi \) is called a subgame-perfect \(\varepsilon \)-equilibrium if \(\pi \) is an \(\varepsilon \)-equilibrium for every path \(p\in P\).

2.2 Strategic concepts

We now describe some strategic concepts, necessary to compute and describe a subgame perfect \(\varepsilon \)-equilibrium for a game in the class \(\mathcal {G}\).

For \(\alpha \in \mathbb {R}^N\), a plan g, and a player \(x\in N\), we say that x is \(\alpha \)-satisfied by g if \(\phi _x(g) \ge \alpha _x\). We define

\[{\textsc {sat}}(g,\alpha ) = \{x\in N \mid \phi _x(g) \ge \alpha _x\}.\]

We say that plan g is \(\alpha \)-viable if \({\textsc {N}}(g) \subseteq {\textsc {sat}}(g,\alpha )\). This means that, if play is according to g, every player t that becomes active during play will receive an average reward of at least \(\alpha _t\). Notice that a plan of the form \(g =(t^*,t^*, \ldots )\) with \(t^*\in N^*\) is trivially \(\alpha \)-viable, since \({\textsc {N}}(g) = \emptyset \). For every state \(t\in S\), we denote the set of \(\alpha \)-viable plans g with \({\textsc {first}}(g) = t\) by \({\textsc {viable}}(t,\alpha )\). Notice that, for \(t^*\in N^*\), the set \({\textsc {viable}}(t^*,\alpha )\) consists of only the plan \((t^*,t^*,\ldots )\).
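Both notions only need \(\phi \) and the set of active players. The sketch below reuses a hypothetical lasso encoding (prefix, cycle) of an eventually periodic plan; the names `sat` and `is_viable` are illustrative. It checks three plans in game II of Example 2.1 against the vector \(\alpha ^1 = (-1,-2)\) from Example 2.2, including the trivially viable plan \((1^*,1^*,\ldots )\).

```python
from typing import Dict, Set, Tuple

Plan = Tuple[Tuple[str, ...], Tuple[str, ...]]  # hypothetical lasso encoding (prefix, cycle)
Rewards = Dict[str, Dict[str, float]]           # r[x][t] = r_t(x)


def phi(r: Rewards, g: Plan, t: str) -> float:
    head = g[1][0]
    return r[head[:-1]][t] if head.endswith("*") else 0


def sat(r: Rewards, g: Plan, alpha: Dict[str, float]) -> Set[str]:
    """sat(g, alpha): the players x with phi_x(g) >= alpha_x."""
    return {x for x in alpha if phi(r, g, x) >= alpha[x]}


def is_viable(r: Rewards, g: Plan, alpha: Dict[str, float]) -> bool:
    """g is alpha-viable iff N(g) is contained in sat(g, alpha)."""
    active = {s for s in g[0] + g[1] if not s.endswith("*")}
    return active <= sat(r, g, alpha)


r_II = {"1": {"1": -2, "2": -1}, "2": {"1": -1, "2": -2}}  # game II of Example 2.1
alpha = {"1": -1, "2": -2}                                   # alpha^1 from Example 2.2
print(is_viable(r_II, (("1", "2"), ("2*",)), alpha))  # True
print(is_viable(r_II, (("1",), ("1*",)), alpha))      # False: phi_1 = -2 < -1
print(is_viable(r_II, ((), ("1*",)), alpha))          # True: N(g) is empty
```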

Not all \(\alpha \)-viable plans are equally credible as prescriptions for play. Let \(t\in N\), let \(u\in A(t)\), and let \(g\in {\textsc {viable}}(u,\alpha )\). Imagine that plan g is proposed as a prescription for play and suppose that t is located on g. Then player t may prefer to announce u when he becomes active, instead of following the prescription of g. Indeed, if the other players keep restarting plan g each time that t announces u, and if player t keeps announcing u when he becomes active, then play will not absorb, and player t may profit from this. The following definition of admissible plans is meant to select those \(\alpha \)-viable plans for which such a deviation by player t is not possible, is not profitable for t, or can be countered by a credible threat from one of the other players.

For \(t\in N\), \(u\in A(t)\), and a plan \(g\in {\textsc {viable}}(u,\alpha )\), we say that g is \((t,u,\alpha )\)-admissible if at least one of the following holds:

AD-i: \(t\notin {\textsc {N}}(g)\) or g is non-absorbing;

AD-ii: \(\alpha _t > 0\);

AD-iii: \(t\in {\textsc {N}}(g)\) and there exists a pair (x, v) such that \(x\ne t\) is a player who resides on g in the part from u to the first occurrence of t, v is an \(\alpha \)-viable plan with \({\textsc {first}}(v) \in A(x)\), \({\textsc {first}}(v)\) is not the state following the first occurrence of x on plan g, and \(x,t\notin {\textsc {sat}}(v,\alpha )\).

We denote the set of plans that are \((t,u,\alpha )\)-admissible by \({\textsc {admiss}}(t,u,\alpha )\).

Condition AD-i describes the situation where the announcement of u by player t is not possible (the case \(t\notin {\textsc {N}}(g)\)) or would yield the same average reward as g (the case that g is non-absorbing). Condition AD-ii describes the situation where this deviation, if it is possible, would yield a strictly lower average reward for t, as the resulting non-absorbing play yields zero average reward, while g yields an average reward of at least \(\alpha _t > 0\). Condition AD-iii describes the situation where a player \(x\ne t\), who participates in the non-absorbing play created by t’s repeated deviation to u, has the threat of switching to an \(\alpha \)-viable plan v with \(t,x\notin {\textsc {sat}}(v,\alpha )\). Now suppose that prescribed play is not exactly g, but that player x is required to place a very small probability on a switch to v the first time he is active. Then play will still be according to g with very high probability if players follow the prescription. Player t will not be able to profit from continuous deviation to u, as this would eventually result in a switch to v with probability 1, and \(t\notin {\textsc {sat}}(v,\alpha )\). Player x will not be tempted to increase the probability of a switch to v, since \(x\notin {\textsc {sat}}(v,\alpha )\).

Example 2.1

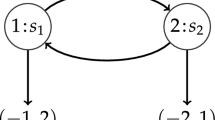

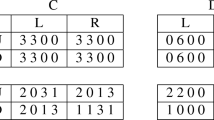

Figure 1 represents two games, each with two players, 1 and 2. Only the two nodes that correspond to the non-absorbing states of these players are depicted, and the vectors below these states are the rewards that will be obtained if the controlling player chooses his absorbing state. For both games, the arc from 2 to 1 indicates that \(1\in A(2)\), hence \(A(2) = \{1,2^*\}\), and the other arc indicates \(2\in A(1)\), hence \(A(1) = \{2,1^*\}\).

For game I, let us take \(\alpha _t = r_t(t)\) for \(t=1,2\), i.e. \(\alpha _1 = 1\) and \(\alpha _2 = -1\). Then, every plan that absorbs at 2 is \(\alpha \)-viable. The plan \((1,1^*,1^*,\ldots )\) is also \(\alpha \)-viable, but any plan in which player 2 is active and that absorbs at 1 is not \(\alpha \)-viable, since such a plan does not \(\alpha \)-satisfy player 2. The two non-absorbing plans \((1,2,1,2,\ldots )\) and \((2,1,2,1,\ldots )\) are not \(\alpha \)-viable, since player 1 is active in both plans and is not \(\alpha \)-satisfied by them.

All plans in \({\textsc {viable}}(2,\alpha )\) are \((1,2,\alpha )\)-admissible, since \(\alpha _1 = 1 > 0\), i.e. condition AD-ii is satisfied. All plans in \({\textsc {viable}}(1,\alpha )\) are \((2,1,\alpha )\)-admissible. For the plan \((1,1^*,1^*,\ldots )\) this is true, since 2 is not active in the plan, hence the plan satisfies AD-i. For the plans in \({\textsc {viable}}(1,\alpha )\) that absorb at 2 this is true, since these plans satisfy AD-iii, which is seen by letting \((x,v) = (1,(1^*,1^*,\ldots ))\).

For game II, let us also take \(\alpha _t = r_t(t)\) for \(t=1,2\), i.e. \(\alpha _1 = \alpha _2 = -2\). Here, it is clear that every plan is \(\alpha \)-viable. However, no plan that starts at 1 and absorbs at 2 is \((2,1,\alpha )\)-admissible, since such plans violate all three conditions AD-i, AD-ii, and AD-iii. The plans \((1,1^*,1^*,\ldots )\) and \((1,2,1,2,\ldots )\) are \((2,1,\alpha )\)-admissible, as they both satisfy AD-i. By symmetry of the example, no plan that starts at 2 and absorbs at 1 is \((1,2,\alpha )\)-admissible, but the plans \((2,2^*,2^*,\ldots )\) and \((2,1,2,1,\ldots )\) are. \(\square \)

Update procedure. We now propose a method for updating one coordinate of a vector \(\alpha \in \mathbb {R}^N\). For \(t\in N\), \(u\in A(t)\), and \(\alpha \in \mathbb {R}^N\), we define

\[\beta (t,u,\alpha ) = \min \{\phi _t(g) \mid g\in {\textsc {admiss}}(t,u,\alpha )\}.\]

We define further

\[\delta (t,\alpha ) = \max _{u\in A(t)} \beta (t,u,\alpha ),\]

and

\[B(t,\alpha ) = \{u\in A(t) \mid \beta (t,u,\alpha ) = \delta (t,\alpha )\}.\]

We use the convention \(\min \emptyset = \infty \), so that \(\beta (t,u,\alpha )\) and \(\delta (t,\alpha )\) are well defined. Moreover, by the definition of \(\delta (t,\alpha )\), the set \(B(t,\alpha )\) is always nonempty. The update of vector \(\alpha \) is done by replacing coordinate \(\alpha _t\) by \(\delta (t,\alpha )\).
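To make the update procedure concrete, here is a brute-force Python sketch. It is not the paper's method for finding the fixed point (that is analysed in Sects. 3 and 4); it is an illustration under stated assumptions. Plans are encoded as lassos (prefix, cycle), and the hypothetical helper `plans_from` enumerates only those whose walk through non-absorbing states has at most K states, where the bound K is a hypothetical truncation parameter. For the search over v in AD-iii, any K ≥ |N| is exact, because viability and the values \(\phi \) depend only on the set of visited players and the absorption point, and erasing loops from a walk only removes visited players. For the minimization defining \(\beta \), the truncated search yields, in general, only an upper bound. On game II (rewards as read off from Examples 2.1 and 2.2) with K = 4, it reproduces the sequence \(\alpha ^1 = (-1,-2)\), \(\alpha ^2 = (-1,0)\), \(\alpha ^3 = (0,0)\) of the example that follows.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical lasso encoding (prefix, cycle): play prefix once, then repeat cycle forever.
Plan = Tuple[Tuple[str, ...], Tuple[str, ...]]
Actions = Dict[str, Tuple[str, ...]]
Rewards = Dict[str, Dict[str, float]]  # r[x][t] = r_t(x)
Alpha = Dict[str, float]

def is_abs(s: str) -> bool:
    return s.endswith("*")

def phi(r: Rewards, g: Plan, t: str) -> float:
    head = g[1][0]
    return r[head[:-1]][t] if is_abs(head) else 0

def players(g: Plan) -> set:
    return {s for s in g[0] + g[1] if not is_abs(s)}

def viable(r: Rewards, g: Plan, alpha: Alpha) -> bool:
    return all(phi(r, g, x) >= alpha[x] for x in players(g))

def plans_from(A: Actions, s: str, K: int) -> List[Plan]:
    """Lasso plans from s whose walk through N has at most K states (K: truncation bound)."""
    if is_abs(s):
        return [((), (s,))]
    out: List[Plan] = []

    def grow(walk: Tuple[str, ...]) -> None:
        for nxt in A[walk[-1]]:
            if is_abs(nxt):
                out.append((walk, (nxt,)))            # the current player absorbs
                continue
            for j, w in enumerate(walk):
                if w == nxt:
                    out.append((walk[:j], walk[j:]))  # close a cycle back to position j
            if len(walk) < K:
                grow(walk + (nxt,))

    grow((s,))
    return out

def next_after_first(g: Plan, x: str) -> str:
    seq = g[0] + g[1]
    i = seq.index(x)
    return seq[i + 1] if i + 1 < len(seq) else g[1][0]

def admissible(A: Actions, r: Rewards, g: Plan, t: str, alpha: Alpha, K: int) -> bool:
    """(t, u, alpha)-admissibility of an alpha-viable plan g with first(g) = u."""
    if t not in players(g) or not is_abs(g[1][0]):
        return True                                    # AD-i
    if alpha[t] > 0:
        return True                                    # AD-ii
    seq = g[0] + g[1]
    for x in set(seq[: seq.index(t)]):                 # AD-iii: x between u and the first t
        for w in A[x]:
            if w == next_after_first(g, x):
                continue
            for v in plans_from(A, w, K):
                if viable(r, v, alpha) and phi(r, v, x) < alpha[x] and phi(r, v, t) < alpha[t]:
                    return True
    return False

def beta(A: Actions, r: Rewards, t: str, u: str, alpha: Alpha, K: int) -> float:
    values = [phi(r, g, t) for g in plans_from(A, u, K)
              if viable(r, g, alpha) and admissible(A, r, g, t, alpha, K)]
    return min(values, default=math.inf)               # convention: min of empty set = infinity

def delta(A: Actions, r: Rewards, t: str, alpha: Alpha, K: int) -> float:
    return max(beta(A, r, t, u, alpha, K) for u in A[t])

def update(A: Actions, r: Rewards, alpha: Alpha, t: str, K: int = 4) -> Alpha:
    """Replace coordinate alpha_t by delta(t, alpha)."""
    new_alpha = dict(alpha)
    new_alpha[t] = delta(A, r, t, alpha, K)
    return new_alpha

# Game II of Example 2.1 and the update order of Example 2.2.
A_II: Actions = {"1": ("2", "1*"), "2": ("1", "2*")}
r_II: Rewards = {"1": {"1": -2, "2": -1}, "2": {"1": -1, "2": -2}}
alpha: Alpha = {"1": -2, "2": -2}
for t in ("1", "2", "1"):
    alpha = update(A_II, r_II, alpha, t)
    print(alpha)  # {'1': -1, '2': -2}, then {'1': -1, '2': 0}, then {'1': 0, '2': 0}
```

The enumeration grows exponentially in K, so this sketch is only meant for toy games such as those in Fig. 1. At the final vector, `delta(A_II, r_II, t, alpha, 4) == alpha[t]` holds for both players, which matches the observation that no further update has any effect.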

Example 2.2

Let us apply the updating procedure to the two games depicted in Fig. 1, with the initial \(\alpha \)-values from Example 2.1.

For game I, we chose \(\alpha _1 = 1\) and \(\alpha _2 = -1\). Let us first update the state controlled by player 1. We trivially have \(\beta (1,1^*,\alpha ) = r_1(1) = 1\), and we have \(\beta (1,2,\alpha ) = \phi _1(2,2^*,2^*,\ldots ) = r_1(2) = 2\), since \((2,2^*,2^*,\ldots )\) is a minimizing \((1,2,\alpha )\)-admissible plan. Thus, \(\delta (1,\alpha ) = \max (1,2) = 2\), and the updated vector \(\alpha ^*\) is given by \(\alpha ^*_1 = 2\) and \(\alpha ^*_2 = -1\). Let us now update \(\alpha ^*\), this time at the state controlled by player 2. We trivially have \(\beta (2,2^*,\alpha ^*) = r_2(2) = -1\), and one can verify that \(\beta (2,1,\alpha ^*) = \phi _2(1,2,2^*,2^*,\ldots ) = r_2(2) = -1\). Thus, \(\delta (2,\alpha ^*) = \max (-1,-1) = -1\), and we observe that this update has no effect. One can verify that a further update of the state controlled by 1 has no effect either.

For game II, we chose \(\alpha _1 = \alpha _2 = -2\). Let us denote \(\alpha ^0 =\alpha \), and for \(i>0\), let \(\alpha ^i\) denote the vector that results after an update of \(\alpha ^{i-1}\). We first update the state controlled by player 1. We trivially have \(\beta (1,1^*,\alpha ) = r_1(1) =-2\), and the findings in Example 2.1 show that \(\beta (1,2,\alpha ) = \min \left( \phi _1(2,1,2,1,\ldots ), \phi _1(2,2^*,2^*,\ldots )\right) = -1\). Thus, \(\delta (1,\alpha ) = -1\) and \(\alpha ^1 = (-1,-2)\). We now set \(\alpha = \alpha ^1\) and continue with an update of the state controlled by player 2. We have \(\beta (2,2^*,\alpha ) = r_2(2) = -2\). Notice that \((1,2,1,2, \ldots )\) is the only \((2,1,\alpha )\)-admissible plan. (The plan \((1,1^*,1^*,\ldots )\) is no longer \(\alpha \)-viable, and hence no longer \((2,1,\alpha )\)-admissible, due to the previous update.) Thus, we have \(\beta (2,1,\alpha ) = \phi _2(1,2,1,2, \ldots ) = 0\). It follows that \(\alpha ^2 = (-1,0)\). We set \(\alpha = \alpha ^2\). Notice that, apart from the trivial plans \((1^*,1^*,\ldots )\) and \((2^*,2^*,\ldots )\), only the two non-absorbing plans \((1,2,1,2,\ldots )\) and \((2,1,2,1,\ldots )\) are now \(\alpha \)-viable. They are also \((2,1,\alpha )\)-admissible and \((1,2,\alpha )\)-admissible, respectively. Then \(\beta (1,2,\alpha ) = \phi _1(2,1,2,1, \ldots ) = 0\), which means that we obtain \(\alpha ^3 = (0,0)\) by updating the state controlled by 1 for the second time. After this, no update has any further effect. \(\diamondsuit \)

2.3 Main result

In Sects. 3 and 4, we analyze the update procedure. The analysis demonstrates that, starting with an appropriate initial vector \(\alpha ^0 \in \mathbb {R}^N\), repeated application of the update procedure will produce, in a finite number of iterations, a vector \(\alpha ^*\in \mathbb {R}^N\), such that \(\delta (t,\alpha ^*) = \alpha _t^*\) for all \(t\in N\). The existence of such a ‘fixed point’ \(\alpha ^*\) is proven in Theorem 4.16 and it allows for the construction of a subgame-perfect \(\varepsilon \)-equilibrium for all \(\varepsilon > 0\). The following theorem is our main result.

Theorem 2.1

There exists a subgame perfect \(\varepsilon \)-equilibrium for every game in class \(\mathcal {G}\) and every \(\varepsilon > 0\).

The iterative procedure in this paper differs from those in earlier papers in the following respects. In the results for games where all payoffs in absorbing states are non-negative, such as Kuipers et al. (2009) and Flesch et al. (2010a), or where the payoffs are lower semi-continuous, as in Flesch et al. (2010b), the main role is played by the set of all viable plans, whereas in the current paper we need to consider the more sophisticated concept of admissible plans. The need for this was already clear from the game in Solan and Vieille (2003) (see game I in Example 2.1), where the unique type of subgame-perfect \(\varepsilon \)-equilibrium requires randomization in which a player puts a small but positive probability on a suboptimal action. This is reflected in condition AD-iii of the definition of admissible plans. The only other paper where a similar type of iteration was applied to a game with possibly negative payoffs is Kuipers et al. (2013), which deals with a much smaller class of games and for which most of the concepts introduced in Sects. 3 and 4 were not needed.

Further, we would like to relate our main result, Theorem 2.1, to two recent existence results. The first one is the existence result in Flesch and Predtetchinski (2015a) for more general perfect information games. Their main result implies, in the context of our model, that a subgame-perfect \(\varepsilon \)-equilibrium exists if the number of non-absorbing plans is countable. In our model this condition is very restrictive, and it is typically violated in games that have two non-disjoint cycles of non-absorbing states. As an example in which the condition does hold, we refer to the game in Solan and Vieille (2003) (see game I in Example 2.1), which has only one non-absorbing plan from the initial state.

Another existence result that we mention is the one in Flesch and Predtetchinski (2015b). Their result implies, in the context of our model, that a subgame-perfect 0-equilibrium exists in pure strategies provided that the following condition holds for every cycle c of non-absorbing states: the set \(S^*(c)\) of all absorbing states that can eventually be reached from the cycle c can be partitioned into two sets \(S^*_-(c)\) and \(S^*_+(c)\) such that (1) \(S^*_+(c)\) is non-empty, (2) all payoffs in the states in \(S^*_+(c)\) are positive, and (3) each player prefers the payoff in any state in \(S^*_+(c)\) to that in any state in \(S^*_-(c)\). Intuitively, the players would like to get absorption in a state in \(S^*_+(c)\), even though they may still disagree about which one exactly, so this condition allows one to safely ignore absorbing states having a negative payoff.

2.4 Proof of Theorem 2.1

I: Description of a joint strategy. Take a game in \(\mathcal {G}\). As we remarked, a vector \(\alpha ^*\in \mathbb {R}^N\) exists such that \(\delta (t,\alpha ^*) = \alpha _t^*\) for all \(t\in N\). The fact that \(\delta (t,\alpha ^*)\) is finite for all \(t\in N\) implies that \(\beta (t,u,\alpha ^*)\) is finite for all \(t\in N\) and all \(u\in A(t)\). It follows that \({\textsc {viable}}(t,\alpha ^*)\) is non-empty for all \(t\in N\) and that \({\textsc {admiss}}(t,u,\alpha ^*)\) is non-empty for all \(t\in N\) and \(u\in A(t)\).

We can thus choose a plan \(g^t\in {\textsc {viable}}(t,\alpha ^*)\) for all \(t\in N\). We can also choose, for all \(t\in N\) and all \(u\in A(t)\), a plan \(g^{tu} \in {\textsc {admiss}}(t,u,\alpha ^*)\) such that \(\phi _t(g^{tu}) = \beta (t,u,\alpha ^*)\). In case the choice \(g^{tu}\) violates both AD-i and AD-ii, and therefore satisfies AD-iii, we can make the additional choice of a player \(x^{tu} \in N{\setminus } \{t\}\) and an \(\alpha ^*\)-viable plan \(v^{tu}\), such that \({\textsc {first}}(v^{tu}) \in A(x^{tu})\), such that \({\textsc {first}}(v^{tu})\) is not the state following the first occurrence of \(x^{tu}\) on plan \(g^{tu}\), such that \(x^{tu}\) resides on the non-absorbing plan that would result if player t were to deviate from \(g^{tu}\) by announcing u every time he is active, and such that \(x^{tu},t\notin {\textsc {sat}}(v^{tu},\alpha ^*)\).

Now, if \(s\in N\) is the initial state of the game, then we start the game in default mode with plan \(g^s\) as initial prescription. Whenever a deviation from prescribed play is detected, say that player t deviates to u, then we check if plan \(g^{tu}\), associated with the pair (t, u), satisfies AD-i or AD-ii. If so, then revised play will be in default mode according to the (newly) prescribed plan \(g^{tu}\). If not, then AD-iii is satisfied, and play will resume in threat mode according to the triple \((g^{tu},v^{tu},x^{tu})\). Let us denote the joint strategy described in this way by \(\pi \).

II: Verification. We will verify that \(\pi \) is a subgame perfect \(2\varepsilon M\)-equilibrium, where \(M = \max \{|r_i(t)| \mid i,t\in N\}\).

Let \(y\in N\) and let \(\sigma ^y\) be a strategy for player y. Let \(\sigma \) denote the joint strategy where player y uses strategy \(\sigma ^y\) and all players \(t\ne y\) use strategy \(\pi ^t\). We will prove that \(\psi ^p_y(\sigma ) \le \psi ^p_y(\pi ) + 2 \varepsilon M\) for every path p.

If there exists a mixed strategy for the deviating player such that he profits more than \(2\varepsilon M\), then there also exists a pure strategy for him with this property. Therefore, we may further assume that \(\sigma ^y\) is a pure strategy.

Now, let p be an arbitrary path and suppose that play has developed along p. Suppose further that strategy \(\sigma \) is used after p. We will prove that player y can profit at most \(2\varepsilon M\) in expectation from this, compared to playing strategy \(\pi ^y\).

For a path q with \({\textsc {last}}(q) = y\), say that \(\sigma \) deviates at q if \(\sigma ^y(q)\) and \(\pi ^y(q)\) are not the same probability distributions. Say that player y deviates (during play according to \(\sigma \)) whenever play according to \(\sigma \) develops along a path q such that \(\sigma \) deviates at q.

We divide the proof into three cases, depending on the type and number of deviations by player y after p. In each case, we bound the conditional expected reward of the deviating player y, or we prove that the case has probability 0 of happening.

IIa: First assume that player y deviates a finite number of times and that, at the last deviation, \(\sigma ^y\) assigns probability 1 to an action \(u\in A(y)\) that is assigned probability 0 in \(\pi ^y\) (hence the last deviation causes a revision of prescribed play).

If the plan \(g^{yu}\) satisfies AD-i or AD-ii, then after y’s last deviation, play will resume in default mode with prescription \(g^{yu}\). The plan \(g^{yu}\) was selected such that \(\phi _y(g^{yu}) = \beta (y,u,\alpha ^*)\). By definition of \(\delta (y,\alpha ^*)\), we have \(\beta (y,u,\alpha ^*) \le \delta (y,\alpha ^*)\), and by the properties of \(\alpha _y^*\), we have \(\delta (y,\alpha ^*) = \alpha _y^*\). It follows that the expected reward for player y, after deviations, is \(\phi _y(g^{yu}) \le \alpha _y^*\).

If the plan \(g^{yu}\) violates both AD-i and AD-ii, then after y’s last deviation, play will resume in threat mode according to the triple \((g^{yu},v^{yu},x^{yu})\). Since no further deviations will take place, plan \(g^{yu}\) will be executed with probability \(1-\varepsilon \) and with an expected average reward of at most \(\alpha _y^*\) for player y; plan \(v^{yu}\) will be executed with probability \(\varepsilon \) and with an expected average reward strictly less than \(\alpha _y^*\) for player y, since \(y\notin {\textsc {sat}}(v^{yu},\alpha ^*)\). Thus, the expected average reward for y, after deviations, is strictly less than \((1-\varepsilon ) \alpha _y^*+ \varepsilon \alpha _y^*= \alpha _y^*\).

Let us now demonstrate that player y has an expected average reward of at least \(\alpha _y^*- 2\varepsilon M\) if he follows \(\pi ^y\). If y first becomes active during default mode, then prescribed play is deterministic according to an \(\alpha ^*\)-viable plan, say g. Then y’s average reward will be \(\phi _y(g)\), and since \(y\in {\textsc {N}}(g)\), we indeed have \(\phi _y(g) \ge \alpha _y^*> \alpha _y^*- 2\varepsilon M\). If player y becomes active during threat mode, then play is according to one of the plans \(g^{tu}\). If the first occurrence of player \(x^{tu}\) comes before y on plan \(g^{tu}\), then there is no chance of a switch to \(v^{tu}\) anymore, and the average reward for player y will be \(\phi _y(g^{tu}) \ge \alpha _y^*> \alpha _y^*- 2 \varepsilon M\). If the first occurrence of \(x^{tu}\) comes after y or if \(x^{tu}=y\), then there is probability \(1-\varepsilon \) that plan \(g^{tu}\) will be executed, with an average reward of at least \(\alpha _y^*\) for player y. There is also a probability of \(\varepsilon \) that \(v^{tu}\) will be executed, with an average reward of at least \(-M\) for player y. Thus indeed, the expected average reward for player y is at least \((1-\varepsilon ) \alpha _y^*- \varepsilon M \ge \alpha ^*_y - 2\varepsilon M\), where the last inequality uses \(\alpha _y^*\le M\), which holds because \(\alpha _y^* = \delta (y,\alpha ^*)\) is the average reward \(\phi _y\) of some plan.

IIb: Now assume that player y deviates a finite number of times after p, and that, at the last deviation, player y chooses an action \(u\in A(y)\) that is played with positive probability according to \(\pi \) (hence the last deviation does not cause a revision of prescribed play). This implies that, at the last deviation by y, the game is in threat mode, and that according to \(\pi \), player y is supposed to use a lottery to determine his action. Say that the game is in threat mode according to the triple (g, v, y).

Notice that the player who causes a revision of play is never the one assigned the task of performing a lottery in revised play. Therefore, player y did not deviate between the stage at the end of p and the stage at which he is supposed to perform the lottery. So the last deviation of y is in fact his only deviation in the relevant time period, and after p, play is in threat mode according to (g, v, y). We conclude that the expected average reward for y by following \(\pi \) is \((1-\varepsilon ) \phi _y(g) + \varepsilon \phi _y(v)\).

Since we assume that the last deviation of y has positive probability under \(\pi \), there are precisely two possibilities for that action. If player y deviates by choosing continuation of g (with probability 1, as \(\sigma ^y\) is pure), then plan g will be executed entirely (with probability 1) and the average reward for player y, after deviations, is \(\phi _y(g)\). If player y deviates by choosing \({\textsc {first}}(v)\), then plan v will be executed with probability 1 and the average reward for player y, after deviations, is \(\phi _y(v)\). In both cases, the reward is bounded by \(\phi _y(g)\), since \(\phi _y(v) < \alpha _y^*\le \phi _y(g)\).
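The inequality needed to close case IIb, \(\phi _y(g) \le (1-\varepsilon ) \phi _y(g) + \varepsilon \phi _y(v) + 2\varepsilon M\), amounts to bounding the gain from deviating, which equals \(\varepsilon (\phi _y(g)-\phi _y(v))\), by \(2\varepsilon M\) when all rewards lie in \([-M,M]\). The following randomized check assumes only that bound on rewards; the sampling ranges, the seed and the function names are arbitrary choices.

```python
import random

def deviation_gain(phi_g, phi_v, eps):
    """Deviation payoff phi_g minus the payoff (1 - eps) * phi_g + eps * phi_v of following pi."""
    follow = (1 - eps) * phi_g + eps * phi_v
    return phi_g - follow  # algebraically eps * (phi_g - phi_v)

def max_gain_ratio(M=5.0, trials=10_000, seed=0):
    """Largest observed gain / (eps * M) for rewards drawn uniformly from [-M, M]."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(trials):
        phi_g, phi_v = rng.uniform(-M, M), rng.uniform(-M, M)
        eps = rng.uniform(1e-4, 0.5)
        worst = max(worst, deviation_gain(phi_g, phi_v, eps) / (eps * M))
    return worst

print(max_gain_ratio())  # should not exceed 2, up to rounding
```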

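For this case, it now remains to see that \(\phi _y(g) \le (1-\varepsilon ) \phi _y(g) + \varepsilon \phi _y(v) + 2\varepsilon M\). Indeed, since all rewards are bounded in absolute value by M, we have

\[\phi _y(g) - \big ((1-\varepsilon ) \phi _y(g) + \varepsilon \phi _y(v)\big ) = \varepsilon \big (\phi _y(g) - \phi _y(v)\big ) \le 2\varepsilon M.\]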

IIc: Let us finally investigate the possibility that player y deviates infinitely many times. As this implies infinite play along non-absorbing states, the average reward to all players will be zero if this happens. If \(\alpha _y^*\ge 0\), then player y will profit at most \(2 \varepsilon M\), as he will receive at least \(\alpha _y^*- 2 \varepsilon M\) by sticking to the plan. So we can assume that \(\alpha _y^*< 0\). Notice that, if player y deviates infinitely many times, he causes a revision of prescribed play infinitely many times. At each such revision, it is checked whether the plan \(g^{yu}\) satisfies AD-i or AD-ii, where u is the state to which y deviates. By the choice of \(g^{yu}\) as a minimizer for \(\beta (y,u,\alpha ^*)\), we have \(\phi _y(g^{yu}) = \beta (y,u,\alpha ^*) \le \delta (y,\alpha ^*) = \alpha _y^*< 0\). This implies that \(g^{yu}\) is an absorbing plan, since a non-absorbing plan gives reward 0. Also, since y will deviate again, we must have \(y\in {\textsc {N}}(g^{yu})\). This means that AD-i is violated, and clearly AD-ii is violated too, by our assumption \(\alpha _y^*< 0\). Thus, only AD-iii is satisfied, and play will resume in threat mode according to the triple \((g^{yu},v^{yu}, x^{yu})\).

We conclude that, in the event that player y deviates infinitely many times during play and \(\alpha _y^*< 0\), play will enter threat mode infinitely many times. Each time that play enters threat mode, there is probability \(\varepsilon \) that a switch to one of the plans \(v^{yu}\) is made. Thus, with probability 1, such a switch is eventually made. Notice that after the switch, player y will not become active again, since \(y\notin {\textsc {sat}}(v^{yu},\alpha ^*)\), and therefore \(y\notin {\textsc {N}}(v^{yu})\). This directly contradicts that player y deviates infinitely many times. We thus see that the event of infinitely many deviations during play has probability 0 if \(\alpha _y^*< 0\).
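The step "with probability 1, such a switch is eventually made" is the elementary fact that \((1-\varepsilon )^k \rightarrow 0\) as \(k\rightarrow \infty \). Assuming, as in the construction, that each entry into threat mode triggers a fresh lottery independent of earlier ones, the sketch below computes the probability of no switch after k entries and the number of entries needed to push that probability below a target (function names are hypothetical).

```python
def prob_no_switch(eps, k):
    """Probability that none of k independent lotteries selects the threat plan."""
    return (1 - eps) ** k

def entries_needed(eps, target):
    """Smallest k with (1 - eps) ** k <= target, found by repeated multiplication."""
    k, p = 0, 1.0
    while p > target:
        p *= 1 - eps
        k += 1
    return k

for eps in (0.1, 0.01):
    print(eps, entries_needed(eps, 1e-6))
```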

This completes the proof of Theorem 2.1.

Example 2.3

For game I, a fixed point \(\alpha ^*_1 = 2\) and \(\alpha ^*_2 = -1\) was calculated in Example 2.2. All \(\alpha ^*\)-viable plans absorb at 2, so we define \(g^1 = g^{21} = (1,2,2^*,2^*,\ldots )\) and \(g^2 = g^{12} = (2,2^*,2^*,\ldots )\). Plan \(g^{12}\) is \((1,2,\alpha ^*)\)-admissible, since it satisfies both AD-i and AD-ii. Plan \(g^{21}\) is \((2,1,\alpha ^*)\)-admissible, since it satisfies AD-iii. To complete our choices, we choose player 1 in the role of ‘threat-player’ \(x^{21}\) and the plan \(v^{21} = (1^*, 1^*,\ldots )\) in the role of ‘threat-plan’.

With these choices, the proof of Theorem 2.1 now prescribes absorption at 2 whenever player 2 is active (execute either \(g^2\) or \(g^{12}\)). If the game starts at 1, then player 1 is initially supposed to announce 2 with probability 1 (execute \(g^1\)). Every next time he becomes active, player 1 is supposed to announce 2 (execute \(g^{21}\)) with probability \(1-\varepsilon \) and to absorb at 1 (execute plan \(v^{21}\)) with probability \(\varepsilon \). (Player 2 apparently refused to absorb at 2, and play is now in threat mode.)

For game II, the fixed point is \(\alpha ^*_1 = 0\) and \(\alpha ^*_2 = 0\). Here, only the two non-absorbing plans \((1,2,1,2,\ldots )\) and \((2,1,2,1,\ldots )\) are \(\alpha ^*\)-viable. We therefore choose \(g^1 = (1,2,1,2,\ldots )\) and \(g^2 = (2,1,2,1,\ldots )\). We do not make any further choices. (Since announcing the other player is not a deviation, it is unnecessary to choose the plans \(g^{21}\) and \(g^{12}\).)

With these choices, the proof of Theorem 2.1 now prescribes that the players always announce each other, indefinitely. Deviating from the plan means absorption, which is indeed not profitable for the deviating player. \(\square \)

Of the restrictions imposed on our class \(\mathcal {G}\) of games, the requirement that each player controls just one non-absorbing state seems especially severe. The following example demonstrates that at least one specific attempt to deal with multiple states per player does not work.

Example 2.4

The update procedure could be applied to the 1-player game in Fig. 2 as if the two states were controlled by two different players, say 1 and \(1^\prime \). If we initiate the update procedure with \(\alpha _t = -1\) for \(t = 1, 1^\prime \), then we find that \(\alpha \) is in fact a fixed point. The construction of Theorem 2.1 then allows, for any \(\varepsilon > 0\), the strategy profile in which player 1 absorbs immediately with probability 1, in any subgame. So clearly, the construction of Theorem 2.1 fails here. \(\diamondsuit \)

3 Semi-stable vectors and their properties

The purpose of this section is to provide a sufficient condition for \(\alpha \in \mathbb {R}^N\) that guarantees the existence of a \((t,u,\alpha )\)-admissible plan for every \(t\in N \) and every \(u\in A(t)\).

For \(\alpha \in \mathbb {R}^N\), \(t\in N\) and \(u\in S\), let us say that t is \(\alpha \)-safe at u if \(u\in A(t)\) and if \(t\in {\textsc {sat}}(g,\alpha )\) for all \(g\in {\textsc {viable}}(u,\alpha )\). For \(t^*\in N^*\), it will be convenient to say that \(t^*\) is \(\alpha \)-safe at \(t^*\). We define, for all \(t\in S\),

\[{\textsc {safestep}}(t,\alpha ) = \{u\in S \mid t \text{ is } \alpha \text{-safe at } u\}.\]

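For \(\alpha \in \mathbb {R}^N\) and \(X\subseteq N\), we define

\[\textsc {{esc}}(X,\alpha ) = \{x\in X \mid {\textsc {safestep}}(x,\alpha ) \not \subseteq X\}, \qquad {\textsc {pos}}(X,\alpha ) = \{x\in X \mid \alpha _x > 0\}.\]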

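We also define

\[\mathcal {C} = \{X\subseteq N \mid X\ne \emptyset \text{ and } A(x)\cap X \ne \emptyset \text{ for all } x\in X\},\]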

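and for \(\alpha \in \mathbb {R}^N\), we define

\[\mathcal {P}(\alpha ) = \{X\subseteq N \mid {\textsc {pos}}(X,\alpha ) \ne \emptyset \}, \quad \mathcal {E}(\alpha ) = \{X\subseteq N \mid {\textsc {pos}}(X,\alpha ) \cap \textsc {{esc}}(X,\alpha ) = \emptyset \}, \quad \mathcal {X}(\alpha ) = \mathcal {P}(\alpha ) \cap \mathcal {E}(\alpha ) \cap \mathcal {C}.\]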

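We say that an edge \(e = (x,y)\) is an \(\alpha \)-exit from X if \(x\in X\) and \(y\in S{\setminus } X\), and if, for all \(g\in {\textsc {viable}}(y,\alpha )\),

\[\textsc {{esc}}(X,\alpha ) \subseteq {\textsc {sat}}(g,\alpha ) \implies x\in {\textsc {sat}}(g,\alpha ).\]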

We say that e is a trivial \(\alpha \)-exit from X if \(x\in \textsc {{esc}}(X,\alpha )\) and a non-trivial one if \(x\in X{\setminus } \textsc {{esc}}(X,\alpha )\).

We now say that \(\alpha \in \mathbb {R}^N\) is semi-stable if \({\textsc {safestep}}(x,\alpha ) \ne \emptyset \) for all \(x\in N\), and if there exists a non-trivial \(\alpha \)-exit from X for every \(X\in \mathcal {X}(\alpha )\). The set of semi-stable vectors in \(\mathbb {R}^N\) is denoted by \(\Omega \).
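The set-level parts of this definition are easy to mechanise once the sets \({\textsc {safestep}}(x,\alpha )\) are known. The sketch below assumes they are supplied as a Python dict (computing them requires enumerating viable plans, which is not shown). It reads \(\textsc {{esc}}(X,\alpha )\) as the players in X with a safe step leaving X, \({\textsc {pos}}(X,\alpha )\) as the players in X with \(\alpha _x > 0\), and \(\mathcal {C}\) as the nonempty sets X with \(A(x)\cap X\ne \emptyset \) for all \(x\in X\), in line with how these sets are used in this section. The function names and the 2-player game data are hypothetical.

```python
from itertools import combinations

def esc(X, safestep):
    """Players in X with at least one alpha-safe step leaving X."""
    return {x for x in X if safestep[x] - X}

def pos(X, alpha):
    """Players in X with alpha_x > 0."""
    return {x for x in X if alpha[x] > 0}

def in_C(X, actions):
    """Nonempty X in which every player has an action that stays inside X."""
    return bool(X) and all(actions[x] & X for x in X)

def critical_sets(players, actions, safestep, alpha):
    """The collection X(alpha) = P(alpha) ∩ E(alpha) ∩ C, by brute force over subsets of N."""
    result = []
    for r in range(1, len(players) + 1):
        for combo in combinations(sorted(players), r):
            X = set(combo)
            P = pos(X, alpha)
            if P and not (P & esc(X, safestep)) and in_C(X, actions):
                result.append(X)
    return result

# Hypothetical 2-player data; the string "t*" denotes the absorbing state reached from t.
actions = {1: {2, "1*"}, 2: {1, "2*"}}
safestep = {1: {"1*"}, 2: {1}}
alpha = {1: -1, 2: 1}
print(critical_sets({1, 2}, actions, safestep, alpha))  # [{1, 2}]
```

Checking for a non-trivial \(\alpha \)-exit additionally needs the sets \({\textsc {sat}}(g,\alpha )\) for viable plans starting outside X, which this sketch does not model.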

Example 3.1

Let us illustrate the definitions of this section with the 4-player game depicted above. Notice that \(X = \{1,2\}\) is the unique element of \(\mathcal {C}\), and thus the only candidate for an element of \(\mathcal {X}(\alpha ) = \mathcal {P}(\alpha ) \cap \mathcal {E}(\alpha ) \cap \mathcal {C}\).

For \(\rho = (-1,1,0,0)\), we have \(2\in {\textsc {pos}}(X,\rho ) \cap \textsc {{esc}}(X,\rho )\), hence \(X\notin \mathcal {E}(\rho )\). Thus, \(\mathcal {X}(\rho ) = \emptyset \). Then trivially, for every set \(Y\in \mathcal {X}(\rho )\), there exists a non-trivial \(\rho \)-exit from Y. As \({\textsc {safestep}}(t,\rho ) \supseteq \{t^*\} \ne \emptyset \) for all \(t\in \{1,2,3,4\}\), we see that \(\rho = (-1,1,0,0)\) is semi-stable in a trivial manner.

For \(\alpha = (-1,2,0,0)\), we have \({\textsc {pos}}(X,\alpha ) = \{2\} \ne \emptyset \), hence \(X\in \mathcal {P}(\alpha )\). We further have \({\textsc {pos}}(X,\alpha ) \cap \textsc {{esc}}(X,\alpha ) = \{2\} \cap \{1\} = \emptyset \), hence \(X\in \mathcal {E}(\alpha )\). Thus, \(\mathcal {X}(\alpha ) = \{X\}\). Let us verify that the edge (2, 3) is a non-trivial \(\alpha \)-exit from X. Notice that the plan \((3,4,4^*,4^*,\ldots )\) is the only plan \(v\in {\textsc {viable}}(3,\alpha )\) that satisfies the condition \(\textsc {{esc}}(X,\alpha ) \subseteq {\textsc {sat}}(v,\alpha )\), as it is the only plan in \({\textsc {viable}}(3,\alpha )\) that \(\alpha \)-satisfies player 1. Player 2 is also \(\alpha \)-satisfied by the plan \((3,4,4^*,4^*,\ldots )\). Therefore, we have, for all \(v\in {\textsc {viable}}(3,\alpha )\): \(\textsc {{esc}}(X,\alpha ) \subseteq {\textsc {sat}}(v,\alpha ) \Rightarrow 2\in {\textsc {sat}}(v,\alpha )\). Then indeed, (2, 3) is a non-trivial \(\alpha \)-exit from X, since the conditions \(2\in X{\setminus } \textsc {{esc}}(X,\alpha )\) and \(3\in S{\setminus } X\) are also satisfied. It can further be checked that \({\textsc {safestep}}(t,\alpha ) \ne \emptyset \) for all \(t\in \{1,2,3,4\}\). Thus, \(\alpha = (-1,2,0,0)\) is semi-stable.

For \(\omega = (0,2,0,0)\), we have \({\textsc {pos}}(X,\omega ) = \{2\} \ne \emptyset \) and \({\textsc {pos}}(X,\omega ) \cap \textsc {{esc}}(X,\omega ) = \{2\} \cap \emptyset = \emptyset \), hence \(X\in \mathcal {P}(\omega ) \cap \mathcal {E}(\omega )\). Thus, \(\mathcal {X}(\omega ) = \{X\}\). Here, plan \(v = (3,3^*,3^*,\ldots )\) satisfies the trivial condition \(\emptyset = \textsc {{esc}}(X,\omega ) \subseteq {\textsc {sat}}(v,\omega )\) and violates the condition \(2\in {\textsc {sat}}(v,\omega )\), hence the implication \(\textsc {{esc}}(X,\omega ) \subseteq {\textsc {sat}}(v,\omega ) \Rightarrow 2\in {\textsc {sat}}(v,\omega )\) is violated. Therefore, the edge (2, 3) is not an \(\omega \)-exit from X. One can check that the edges \((2,2^*)\) and \((1,1^*)\) are not \(\omega \)-exits from X either. Hence there is no (non-trivial) \(\omega \)-exit from X, and the vector \(\omega = (0,2,0,0)\) is therefore not semi-stable.

Remark The vector \(\alpha = (-1,2,0,0)\) can be obtained by updating player 2 with respect to the vector \(\rho = (-1,1,0,0)\). The updated value of 2 for player 2 can be associated with action \(1\in A(2)\), followed by the minimizing \((2,1,\rho )\)-admissible plan \((1,2,3,4,4^*,4^*,\ldots )\). Under the logic of the updating procedure, it is smart in this example for player 2 to announce state 1 before possibly announcing another state at a later stage of the game, as this prevents possible absorption at 3. This example also shows how the update from \(\rho \) to \(\alpha \) naturally creates an \(\alpha \)-exit from X. \(\diamondsuit \)

Lemma 3.1

We have \(\rho \in \Omega \), where \(\rho \) is the vector defined by \(\rho _t = r_t(t)\) for all \(t\in N\).

Proof

For the vector \(\rho \), we have \(t^*\in {\textsc {safestep}}(t,\rho )\) for all \(t\in N\). Hence, \({\textsc {safestep}}(t,\rho ) \ne \emptyset \) for all \(t\in N\).

For any \(X\subseteq N\), we have \(\textsc {{esc}}(X,\rho ) = X\), since \(t^*\in {\textsc {safestep}}(t,\rho )\) for all \(t\in X\). Now, let \(X\in \mathcal {P}(\rho )\), i.e. let \(X\subseteq N\) be such that \({\textsc {pos}}(X,\rho )\ne \emptyset \). Then \(\textsc {{esc}}(X,\rho ) \cap {\textsc {pos}}(X,\rho ) = {\textsc {pos}}(X,\rho ) \ne \emptyset \), hence \(X\notin \mathcal {E}(\rho )\). It follows that \(\mathcal {X}(\rho ) = \mathcal {P}(\rho ) \cap \mathcal {E}(\rho ) \cap \mathcal {C} = \emptyset \). Then trivially, a non-trivial \(\rho \)-exit from X exists for all \(X\in \mathcal {X}(\rho )\). Thus, \(\rho \in \Omega \). \(\square \)

Lemma 3.2

Let \(\alpha \in \Omega \). Then \(\textsc {{esc}}(X,\alpha ) \ne \emptyset \) for all \(X\in \mathcal {P}(\alpha )\).

Proof

Let \(X\in \mathcal {P}(\alpha )\) and suppose that \(\textsc {{esc}}(X,\alpha ) = \emptyset \). Then \({\textsc {safestep}}(x,\alpha ) \subseteq X\) for all \(x\in X\), and thus, \({\textsc {safestep}}(x,\alpha ) \subseteq A(x)\cap X\) for all \(x\in X\). We further have \({\textsc {safestep}}(x,\alpha ) \ne \emptyset \) for all \(x\in X\), by the fact that \(\alpha \in \Omega \). It follows that \(A(x)\cap X \supseteq {\textsc {safestep}}(x,\alpha ) \ne \emptyset \) for all \(x\in X\), which proves that \(X\in \mathcal {C}\). We also trivially have \(X\in \mathcal {E}(\alpha )\), since we suppose \(\textsc {{esc}}(X,\alpha ) = \emptyset \). Then \(X\in \mathcal {P}(\alpha ) \cap \mathcal {E}(\alpha ) \cap \mathcal {C} = \mathcal {X}(\alpha )\). Now, since \(\alpha \in \Omega \) and \(X\in \mathcal {X}(\alpha )\), there exists a non-trivial \(\alpha \)-exit from X, say (x, y). Since (x, y) is an \(\alpha \)-exit from X, we have for all \(v\in {\textsc {viable}}(y,\alpha )\),

\[\textsc {{esc}}(X,\alpha ) \subseteq {\textsc {sat}}(v,\alpha ) \implies x\in {\textsc {sat}}(v,\alpha ).\]

The left-hand side of the implication is always true, since we suppose \(\textsc {{esc}}(X,\alpha ) = \emptyset \). It follows that \(x \in {\textsc {sat}}(v,\alpha )\) for all \(v\in {\textsc {viable}}(y,\alpha )\), hence \(y \in {\textsc {safestep}}(x,\alpha )\). But this implies \(x\in \textsc {{esc}}(X,\alpha )\), which contradicts that \(\textsc {{esc}}(X,\alpha ) = \emptyset \). \(\square \)

For any subgraph H of G and a subset X of the vertex set V(H) of H, say that X is an ergodic set of H if

(i) for all \(x,y\in X\), there exists a path p in H from x to y with \({\textsc {N}}(p) \subseteq X\), and

(ii) for all \(x\in X\) and \(y\in V(H){\setminus } X\), there is no path in H from x to y.

The following lemma is an easy result in graph theory. It is stated without proof.

Lemma 3.3

Let \(H = (V(H),E(H))\) be a (directed) graph, such that for every vertex \(x\in V(H)\), there exists \(y\in V(H)\) with \((x,y)\in E(H)\). Then, for every \(x\in V(H)\), there is a path from x to an element of an ergodic set of H.
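Lemma 3.3 is the familiar fact that, in a finite directed graph without sinks, every vertex can reach a closed communicating class (a bottom strongly connected component); treating condition (i) as requiring paths that stay inside X, these classes are exactly the ergodic sets defined above. A minimal Python sketch, assuming the graph is given as adjacency lists with every vertex listed as a key (the function names are hypothetical):

```python
from collections import deque

def reachable(graph, x):
    """Vertices reachable from x by a path with at least one edge."""
    seen, queue = set(), deque(graph[x])
    while queue:
        y = queue.popleft()
        if y not in seen:
            seen.add(y)
            queue.extend(graph[y])
    return seen

def ergodic_sets(graph):
    """Closed communicating classes (bottom strongly connected components)."""
    if any(not succ for succ in graph.values()):
        raise ValueError("Lemma 3.3 assumes every vertex has an outgoing edge")
    reach = {x: reachable(graph, x) for x in graph}
    found = []
    for x in graph:
        # x lies in an ergodic set iff every vertex reachable from x can reach x back
        if all(x in reach[y] for y in reach[x]) and frozenset(reach[x]) not in found:
            found.append(frozenset(reach[x]))
    return found

def path_to_ergodic(graph, x):
    """Shortest path (breadth-first search) from x to an element of some ergodic set."""
    targets = set().union(*ergodic_sets(graph))
    parent, queue = {x: None}, deque([x])
    while queue:
        y = queue.popleft()
        if y in targets:
            path = []
            while y is not None:
                path.append(y)
                y = parent[y]
            return path[::-1]
        for z in graph[y]:
            if z not in parent:
                parent[z] = y
                queue.append(z)
    return None  # unreachable under the hypothesis of Lemma 3.3

H = {1: [2], 2: [1, 3], 3: [3], 4: [1]}
print(ergodic_sets(H), path_to_ergodic(H, 4))
```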

For \(\alpha \in \Omega \), define the graph \(G(\alpha )\) as the graph with vertex set S and edge set \(\{(x,y) \in E \mid y\in {\textsc {safestep}}(x,\alpha )\}\). Notice that, for all \(\alpha \in \Omega \) and for all \(t^*\in N^*\), the singleton \(\{t^*\}\) is an ergodic set of the graph \(G(\alpha )\), since \((t^*,t^*)\) is a path in \(G(\alpha )\) from \(t^*\) to \(t^*\) and since there is no edge leaving the set \(\{t^*\}\). The definition of ergodic set implies that different ergodic sets of a graph are disjoint. Therefore, any ergodic set of \(G(\alpha )\) is either a singleton from the set \(N^*\) or a subset of N. The following corollary follows directly from Lemma 3.2.

Corollary 3.4

Let \(\alpha \in \Omega \). If \(X\subseteq N\) is an ergodic set of \(G(\alpha )\), then \({\textsc {pos}}(X,\alpha ) = \emptyset \).

An immediate insight from Corollary 3.4 is that, for all \(\alpha \in \Omega \), a non-absorbing plan v is \(\alpha \)-viable if \({\textsc {N}}(v)\) is a subset of an ergodic set of \(G(\alpha )\).
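The graph \(G(\alpha )\) defined before Corollary 3.4 is obtained from E by a simple edge filter. In the sketch below, E is a list of pairs and the sets \({\textsc {safestep}}(x,\alpha )\) are supplied as a dict; following the convention that \(t^*\) is \(\alpha \)-safe at \(t^*\), each absorbing state is given \({\textsc {safestep}}(t^*,\alpha ) = \{t^*\}\) together with the loop \((t^*,t^*)\in E\). The function names and the 2-player data are hypothetical. The check confirms that each absorbing singleton keeps only its loop, so no edge leaves it.

```python
def build_G(edges, safestep):
    """Adjacency lists of G(alpha): keep (x, y) in E iff y is in safestep[x]."""
    graph = {}
    for x, y in edges:
        graph.setdefault(x, [])
        graph.setdefault(y, [])
        if y in safestep.get(x, ()):
            graph[x].append(y)
    return graph

def absorbing_singletons_closed(graph, absorbing):
    """Each {t*} has the loop (t*, t*) and no other outgoing edge."""
    return all(graph[s] == [s] for s in absorbing)

# Hypothetical 2-player game; the string "t*" denotes the absorbing state reached from t.
E = [(1, 2), (1, "1*"), (2, 1), (2, "2*"), ("1*", "1*"), ("2*", "2*")]
safestep = {1: {"1*"}, 2: {1, "2*"}, "1*": {"1*"}, "2*": {"2*"}}
G = build_G(E, safestep)
print(G)
```

Since every vertex of this G has an outgoing edge, it also satisfies the hypothesis of Lemma 3.3.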

Lemma 3.5

Let \(\alpha \in \Omega \), let p be a path in \(G(\alpha )\), and let g be an \(\alpha \)-viable plan such that \({\textsc {first}}(g) = {\textsc {last}}(p)\). Then the plan \(\langle p,g\rangle \) is \(\alpha \)-viable.

Proof

Write \(p = (z_i)_{i=1}^k\) with \(k\ge 1\). Define, for all \(i\in \{1,\ldots ,k\}\), the plan \(g_i = \langle (z_i,\ldots ,z_k),g\rangle \). We prove by induction on i that all plans \(g_i\) with \(i\in \{1,\ldots , k\}\) are \(\alpha \)-viable. Trivially, the plan \(g_k = g\) is \(\alpha \)-viable. Now assume that \(g_{i+1}\) is \(\alpha \)-viable with \(i<k\). Then \({\textsc {N}}(g_i){\setminus } \{z_i\} \subseteq {\textsc {N}}(g_{i+1}) \subseteq {\textsc {sat}}(g_{i+1},\alpha ) = {\textsc {sat}}(g_i,\alpha )\). Thus, to prove that \({\textsc {N}}(g_i) \subseteq {\textsc {sat}}(g_i,\alpha )\), i.e. to prove that \(g_i\) is \(\alpha \)-viable, it suffices to show that \(z_i\in {\textsc {sat}}(g_i,\alpha )\).

We have \(z_{i+1} \in {\textsc {safestep}}(z_i,\alpha )\), since p is a path in \(G(\alpha )\). It follows that \(z_i\in {\textsc {sat}}(g_{i+1},\alpha )\), since \(g_{i+1}\) is an \(\alpha \)-viable plan with \({\textsc {first}}(g_{i+1}) = z_{i+1}\). Because \({\textsc {sat}}(g_i,\alpha ) = {\textsc {sat}}(g_{i+1},\alpha )\), we indeed obtain \(z_i\in {\textsc {sat}}(g_i,\alpha )\). \(\square \)
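Every plan used in these arguments is eventually periodic: a finite path followed by a repeated cycle, or by absorption. The sketch below represents such a plan as a prefix plus a cycle, implements the concatenation \(\langle p,g\rangle \) of Lemma 3.5 (the last state of p is identified with \({\textsc {first}}(g)\), so that \(g_k = g\)), and checks \(\alpha \)-viability. It assumes the definitions as they are used in this proof: \(\phi _t(g)\) is the reward to t at the absorbing state if g absorbs and 0 otherwise, \({\textsc {sat}}(g,\alpha ) = \{t \mid \phi _t(g)\ge \alpha _t\}\), and g is \(\alpha \)-viable iff \({\textsc {N}}(g)\subseteq {\textsc {sat}}(g,\alpha )\). The class, the function names and the reward data are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Plan:
    prefix: tuple  # states visited once
    cycle: tuple   # states repeated forever; (x*,) means absorption at x*

    def first(self):
        return (self.prefix or self.cycle)[0]

    def prepend(self, path):
        """<p, g>: requires last(p) == first(g); that shared state appears once."""
        assert path[-1] == self.first()
        return Plan(tuple(path[:-1]) + self.prefix, self.cycle)

def phi(plan, rewards, players):
    """Average reward: r(x*) if the plan absorbs at x*, otherwise 0 for every player."""
    if len(plan.cycle) == 1 and plan.cycle[0] in rewards:
        return rewards[plan.cycle[0]]
    return {t: 0 for t in players}

def sat(plan, alpha, rewards):
    f = phi(plan, rewards, alpha.keys())
    return {t for t in alpha if f[t] >= alpha[t]}

def viable(plan, alpha, rewards):
    visited = {s for s in plan.prefix + plan.cycle if s in alpha}  # N(g)
    return visited <= sat(plan, alpha, rewards)

rewards = {"1*": {1: -1, 2: 3}, "2*": {1: 2, 2: -1}}  # hypothetical absorbing rewards
alpha = {1: 2, 2: -1}
g = Plan((2,), ("2*",))  # the plan (2, 2*, 2*, ...)
print(viable(g, alpha, rewards), viable(g.prepend((1, 2)), alpha, rewards))
```

This only illustrates the objects involved; the lemma itself relies on each step of p being a safe step.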

The existence of \(\alpha \)-viable plans for \(\alpha \in \Omega \) is now an easy consequence of previous results.

Lemma 3.6

Let \(\alpha \in \Omega \). Then, for all \(t\in N\), a plan g in \(G(\alpha )\) exists with \(g \in {\textsc {viable}}(t,\alpha )\).

Proof

Let \(t\in N\). We have \({\textsc {safestep}}(x,\alpha ) \ne \emptyset \) for all \(x\in N\), since \(\alpha \in \Omega \). Thus, the graph \(G(\alpha )\) satisfies the conditions of Lemma 3.3. Therefore, there is a path p in \(G(\alpha )\) from t to an element x of an ergodic set X of \(G(\alpha )\). By the properties of an ergodic set, a path q in \(G(\alpha )\) exists from x to x, and with \({\textsc {N}}(q) \subseteq X\). First we prove that the plan \(\langle q,q,\ldots \rangle \) is \(\alpha \)-viable. Indeed, this is true by definition if the plan \(\langle q,q,\ldots \rangle \) is absorbing, i.e. if \(x\in N^*\) and \(q = (x,x)\). Otherwise, we have \(X\subseteq N\), and \({\textsc {pos}}(X,\alpha ) = \emptyset \) follows by Corollary 3.4. The plan \(\langle q,q,\ldots \rangle \) is then \(\alpha \)-viable, due to the fact that it is non-absorbing, hence it gives average reward 0 to all players. Finally, it follows that \(g := \langle p,q,q,\ldots \rangle \in {\textsc {viable}}(t,\alpha )\), by Lemma 3.5. \(\square \)

The main result of this section concerns the existence of admissible plans.

Lemma 3.7

Let \(\alpha \in \Omega \). Then, for all \(t\in N\) and for all \(u\in A(t)\), \({\textsc {admiss}}(t,u,\alpha ) \ne \emptyset \).

Proof

Let \(t\in N\) and \(u\in A(t)\). By Lemma 3.6, \({\textsc {viable}}(u,\alpha ) \ne \emptyset \). If \(v\in {\textsc {viable}}(u,\alpha )\) exists with \(t\notin {\textsc {N}}(v)\), then \(v\in {\textsc {admiss}}(t,u,\alpha )\) since v satisfies AD-i, and we are done. Assume further that \(t\in {\textsc {N}}(v)\) for all \(v\in {\textsc {viable}}(u,\alpha )\). Notice that this implies \(t\in {\textsc {sat}}(v,\alpha )\) for all \(v\in {\textsc {viable}}(u,\alpha )\). Thus, \(u\in {\textsc {safestep}}(t,\alpha )\), i.e. (t, u) is an edge of \(G(\alpha )\).

Define

and define \(X = Y\cup \{u,t\}\). We claim that \(\textsc {{esc}}(X,\alpha ) \subseteq \{t\}\). To prove our claim, we let \(x\in X{\setminus } \{t\}\), and we will show that \(y\in X\) for all \(y\in {\textsc {safestep}}(x,\alpha )\). So, let \(y\in {\textsc {safestep}}(x,\alpha )\). If \(y=t\), then trivially \(y\in X\). If \(y\ne t\), then there is a path in \(G(\alpha )\) from u to y not containing t. Indeed, if \(x=u\), then \((x,y) = (u,y)\) is such a path, and if \(x\ne u\), then there is a path p in \(G(\alpha )\) from u to x not containing t by the fact that \(x\in X{\setminus } \{t,u\} \subseteq Y\), and \(\langle p,(x,y)\rangle \) is then a path in \(G(\alpha )\) from u to y not containing t. Thus, \(y\in Y\subseteq X\) as claimed. We now distinguish between the cases \({\textsc {pos}}(X,\alpha ) = \emptyset \) and \({\textsc {pos}}(X,\alpha ) \ne \emptyset \).

First assume that \({\textsc {pos}}(X,\alpha ) = \emptyset \). Notice that, for all \(x\in X\), an element \(y\in X\) exists, such that (x, y) is an edge of \(G(\alpha )\). In particular also, (t, u) is an edge of \(G(\alpha )\). Then it is possible to construct a non-absorbing plan g with \({\textsc {first}}(g) = u\), with \({\textsc {N}}(g) \subseteq X\), and such that every edge of g is in the edge-set of \(G(\alpha )\). Then \(g\in {\textsc {viable}}(u,\alpha )\) by the assumption that \({\textsc {pos}}(X,\alpha ) = \emptyset \), and by the fact that a non-absorbing plan gives reward 0 to all players. Since the non-absorbing plan g satisfies condition AD-i, it also follows that \(g\in {\textsc {admiss}}(t,u,\alpha )\).

Now assume that \({\textsc {pos}}(X,\alpha ) \ne \emptyset \), i.e. assume that \(X\in \mathcal {P}(\alpha )\). We then have \(\textsc {{esc}}(X,\alpha ) \ne \emptyset \) by Lemma 3.2, so we must have \(\textsc {{esc}}(X,\alpha ) = \{t\}\). If \(t\in {\textsc {pos}}(X,\alpha )\), then \({\textsc {admiss}}(t,u,\alpha ) = {\textsc {viable}}(u,\alpha ) \ne \emptyset \), where the equality is by the fact that AD-ii is satisfied by all plans in \({\textsc {viable}}(u,\alpha )\) and the inequality is by Lemma 3.6. So we can further assume that \(t\notin {\textsc {pos}}(X,\alpha )\). Under this assumption, we have \(\textsc {{esc}}(X,\alpha ) \cap {\textsc {pos}}(X,\alpha ) = \emptyset \), i.e. \(X\in \mathcal {E}(\alpha )\). We also have that \(A(x)\cap X \ne \emptyset \) for all \(x\in X\), which follows from our earlier observation that, for all \(x\in X\), an element \(y\in X\) exists, such that (x, y) is an edge of \(G(\alpha )\). Thus, we have \(X\in \mathcal {C}\).

We see that \(X\in \mathcal {P}(\alpha ) \cap \mathcal {E}(\alpha ) \cap \mathcal {C} = \mathcal {X}(\alpha )\). Then, by the fact that \(\alpha \in \Omega \), a non-trivial \(\alpha \)-exit from X exists, say (x, y). By definition of an \(\alpha \)-exit, we have, for all \(g\in {\textsc {viable}}(y,\alpha )\),

\[\textsc {{esc}}(X,\alpha ) \subseteq {\textsc {sat}}(g,\alpha ) \implies x\in {\textsc {sat}}(g,\alpha ).\]

Since \(\textsc {{esc}}(X,\alpha ) = \{t\}\), this translates to, for all \(g\in {\textsc {viable}}(y,\alpha )\),

\[t\in {\textsc {sat}}(g,\alpha ) \implies x\in {\textsc {sat}}(g,\alpha ).\]

We have \(x\in X{\setminus } \textsc {{esc}}(X,\alpha )\), since the \(\alpha \)-exit (x, y) is non-trivial. This implies \(y\notin {\textsc {safestep}}(x,\alpha )\), since \(y\in S{\setminus } X\). Thus, we can choose \(v\in {\textsc {viable}}(y,\alpha )\) with \(x\notin {\textsc {sat}}(v,\alpha )\). We must then also have \(t\notin {\textsc {sat}}(v,\alpha )\), since \(t\in {\textsc {sat}}(v,\alpha ){\,\,\,\implies \,\,\,}x\in {\textsc {sat}}(v,\alpha )\).

We choose \(z\in S\) such that (x, z) is an edge of \(G(\alpha )\), which is possible by the fact that \(\alpha \in \Omega \). Notice that \(x\in X{\setminus } \{t\}\). Indeed, we have \(x\ne t\), since \(x\in X{\setminus }\textsc {{esc}}(X,\alpha )\) and \(t\in \textsc {{esc}}(X,\alpha )\). It follows that \(z\in Y\cup \{t\} \subseteq X\) by the properties of the set Y.

We also choose \(h\in {\textsc {viable}}(z,\alpha )\), which is possible by Lemma 3.6.

Now, in case \(x=u\), we define \(g := \langle (x,z),h\rangle \). In case \(x\in X{\setminus } \{u\}\), we actually have \(x\in X{\setminus } \{u,t\} \subseteq Y\), and we can choose a path q in \(G(\alpha )\) from u to x with \(t\notin {\textsc {N}}(q)\). We then define \(g := \langle q,(x,z),h\rangle \). We claim that \(g\in {\textsc {admiss}}(t,u,\alpha )\).

By Lemma 3.5, we have \(g \in {\textsc {viable}}(u,\alpha )\). Notice that the first occurrence of x in this plan is before the first occurrence of t. Moreover, we have \(y\in A(x)\) and a plan \(v\in {\textsc {viable}}(y,\alpha )\) with \(t,x\notin {\textsc {sat}}(v,\alpha )\). Also notice that \({\textsc {first}}(v) = y\) and the state z, which follows the first occurrence of x in g, are different states, since \(y\in S{\setminus } X\) and \(z\in X\). This demonstrates that g satisfies condition AD-iii of admissibility, hence \(g\in {\textsc {admiss}}(t,u,\alpha )\). \(\square \)

Corollary 3.8

Let \(\alpha \in \Omega \) and let \(t\in N\). Then \(\alpha _t \le \delta (t,\alpha ) < \infty \).

Proof

We have \({\textsc {safestep}}(t,\alpha ) \ne \emptyset \), by the fact that \(\alpha \in \Omega \). We can then choose \(u\in {\textsc {safestep}}(t,\alpha )\), and we obtain \(\alpha _t \le \beta (t,u,\alpha ) \le \delta (t,\alpha )\). By Lemma 3.7, the minimization \(\beta (t,u,\alpha ) = \min \{\phi _t(v) \mid v\in {\textsc {admiss}}(t,u,\alpha )\}\) is done over a non-empty set, hence \(\beta (t,u,\alpha )\) is finite for all \(u\in A(t)\), which demonstrates that \(\delta (t,\alpha ) < \infty \). \(\square \)
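The update quantities can be sketched as follows, assuming the admissible plans for each action have already been found and only their rewards for player t are supplied (hypothetical input). Following the proofs of Corollary 3.8 and Lemma 3.9-(iii), this sketch reads \(\delta (t,\alpha )\) as the maximum of \(\beta (t,u,\alpha )\) over \(u\in A(t)\) and \(B(t,\alpha )\) as the set of maximizing actions; \(\beta (t,u,\alpha )\) is \(+\infty \) when \({\textsc {admiss}}(t,u,\alpha )\) is empty, which Lemma 3.7 rules out for \(\alpha \in \Omega \). The function names are hypothetical.

```python
import math

def beta(admissible_rewards):
    """beta(t,u,alpha): least reward for t over admissible plans; +inf if there are none."""
    return min(admissible_rewards, default=math.inf)

def update_coordinate(t, alpha, rewards_by_action):
    """Return delta(t,alpha), B(t,alpha) and alpha with coordinate t replaced."""
    betas = {u: beta(vals) for u, vals in rewards_by_action.items()}
    delta_t = max(betas.values())
    B = {u for u, b in betas.items() if b == delta_t}
    updated = dict(alpha)
    updated[t] = delta_t
    return delta_t, B, updated

# Hypothetical input for player 1 with A(1) = {2, "1*"}.
print(update_coordinate(1, {1: -1, 2: 0}, {2: [0, 2], "1*": [-1]}))
```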

Lemma 3.9

Let \(\alpha \in \Omega \) and \(t\in N\). Let \(\delta \) be the vector that results from \(\alpha \) when coordinate \(\alpha _t\) is replaced with \(\delta (t,\alpha )\). Then

(i) \({\textsc {viable}}(u,\delta )\subseteq {\textsc {viable}}(u,\alpha )\) for all \(u\in N\),

(ii) \({\textsc {safestep}}(u,\delta ) \supseteq {\textsc {safestep}}(u,\alpha )\) for all \(u\in N{\setminus } \{t\}\),

(iii) \(B(t,\alpha ) \subseteq {\textsc {safestep}}(t,\delta ) \subseteq {\textsc {safestep}}(t,\alpha )\),

(iv) \(\textsc {{esc}}(X,\alpha ){\setminus }\{t\} \subseteq \textsc {{esc}}(X,\delta ){\setminus }\{t\}\) for all \(X\subseteq N\),

(v) \(\textsc {{esc}}(X,\alpha ) \subseteq \textsc {{esc}}(X,\delta )\) for all \(X\subseteq N\) with \(t\notin X\) or \(t\in \textsc {{esc}}(X,\delta )\).

Proof

Proof of (i): By Corollary 3.8 we have \(\delta \ge \alpha \). Then, if a plan is \(\delta \)-viable, it is obviously also \(\alpha \)-viable.

Proof of (ii): Let \(u\in N{\setminus }\{t\}\), and let \(x\in {\textsc {safestep}}(u,\alpha )\). Choose an arbitrary plan \(g\in {\textsc {viable}}(x,\delta )\). Then \(g\in {\textsc {viable}}(x,\alpha )\) by (i), and since \(x\in {\textsc {safestep}}(u,\alpha )\), it follows that \(u\in {\textsc {sat}}(g,\alpha )\). Since \(u\ne t\), we have \(\alpha _u = \delta _u\), hence \(u\in {\textsc {sat}}(g,\delta )\). It follows that \(x\in {\textsc {safestep}}(u,\delta )\).

Proof of (iii), part 1: Let \(u\in B(t,\alpha )\). Choose \(g\in {\textsc {viable}}(u,\delta )\). To prove that \(u\in {\textsc {safestep}}(t,\delta )\), it suffices to show that \(t\in {\textsc {sat}}(g,\delta )\). Case 1: If \(t\notin {\textsc {N}}(g)\), then \(g\in {\textsc {admiss}}(t,u,\alpha )\), since g is \(\alpha \)-viable by (i) and satisfies condition AD-i. Then \(\phi _t(g) \ge \beta (t,u,\alpha ) = \delta (t,\alpha )\), where the inequality follows by the definition of \(\beta (t,u,\alpha )\), and the equality follows by the choice of \(u\in B(t,\alpha )\). So indeed, \(t\in {\textsc {sat}}(g,\delta )\), as \(\delta _t = \delta (t,\alpha )\). Case 2: If \(t\in {\textsc {N}}(g)\), then \(t\in {\textsc {sat}}(g,\delta )\) follows immediately from the definition of a \(\delta \)-viable plan.

Proof of (iii), part 2: Now let \(u\in {\textsc {safestep}}(t,\delta )\). Choose \(g\in {\textsc {viable}}(u,\alpha )\). We need to show that \(t\in {\textsc {sat}}(g,\alpha )\). Case 1: If \(t\notin {\textsc {N}}(g)\), then \(g\in {\textsc {viable}}(u,\delta )\), since \(\alpha _x = \delta _x\) for all \(x\in {\textsc {N}}(g)\). By the fact that \(u\in {\textsc {safestep}}(t,\delta )\), it follows that \(t\in {\textsc {sat}}(g,\delta )\). Then also \(t\in {\textsc {sat}}(g,\alpha )\), since \(\alpha _t\le \delta _t\). Case 2: If \(t\in {\textsc {N}}(g)\), then \(t\in {\textsc {sat}}(g,\alpha )\) is trivial.

Proof of (iv): This follows immediately from (ii).

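Proof of (v): If \(t\notin X\), then \(t\notin \textsc {{esc}}(X,\alpha )\), and by (iv),

\[\textsc {{esc}}(X,\alpha ) = \textsc {{esc}}(X,\alpha ){\setminus }\{t\} \subseteq \textsc {{esc}}(X,\delta ){\setminus }\{t\} \subseteq \textsc {{esc}}(X,\delta ).\]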

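If \(t\in \textsc {{esc}}(X,\delta )\), then, again by (iv),

\[\textsc {{esc}}(X,\alpha ) \subseteq \big (\textsc {{esc}}(X,\alpha ){\setminus }\{t\}\big ) \cup \{t\} \subseteq \big (\textsc {{esc}}(X,\delta ){\setminus }\{t\}\big ) \cup \{t\} = \textsc {{esc}}(X,\delta ).\]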

\(\square \)

Corollary 3.10

Let \(\alpha \in \Omega \), let \(t\in N\), and let \(\delta \) be the vector that results from \(\alpha \) when coordinate \(\alpha _t\) is replaced with \(\delta (t,\alpha )\). Then \({\textsc {safestep}}(x,\delta ) \ne \emptyset \) for all \(x\in N\).

Proof

For all \(x\in N{\setminus }\{t\}\), we have \({\textsc {safestep}}(x,\delta ) \supseteq {\textsc {safestep}}(x,\alpha )\ne \emptyset \), where the inclusion follows from Lemma 3.9-(ii), and the inequality follows from the fact that \(\alpha \in \Omega \). We also have \({\textsc {safestep}}(t,\delta ) \supseteq B(t,\alpha ) \ne \emptyset \), where the inclusion follows from Lemma 3.9-(iii). \(\square \)

4 Stable vectors and their properties

In the previous section we showed that for all \(\alpha \in \Omega \), the updated vector, say \(\delta (\alpha )\), is finite and satisfies \(\delta (\alpha ) \ge \alpha \). If we could also prove \(\delta (\alpha )\in \Omega \) for all \(\alpha \in \Omega \), then it would be an easy corollary to establish a ‘fixed point’ in \(\Omega \), i.e. the existence of a vector \(\alpha ^*\in \Omega \) with the property \(\delta (t,\alpha ^*) = \alpha ^*_t\) for all \(t\in N\).
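The repeated use of the update map can be sketched generically as a round-robin loop. The update function `delta` below is a caller-supplied stand-in (hypothetical), not the paper's construction; the loop stops at a fixed point, at an infinite coordinate (the failure mode illustrated in Example 4.1), or after a fixed number of rounds, since termination is not claimed here in general.

```python
import math

def iterate_updates(alpha, players, delta, max_rounds=1000):
    """Round-robin updates alpha_t <- delta(t, alpha) until no coordinate changes."""
    alpha = dict(alpha)
    for _ in range(max_rounds):
        changed = False
        for t in players:
            new = delta(t, alpha)
            if math.isinf(new):
                return alpha, "infinite"  # no admissible plans were left for some action
            if new != alpha[t]:
                alpha[t] = new
                changed = True
        if not changed:
            return alpha, "fixed point"
    return alpha, "no convergence"

# Toy update map (hypothetical): raise each coordinate by 1, capped at 3.
print(iterate_updates({1: 0, 2: 0}, [1, 2], lambda t, a: min(a[t] + 1, 3)))
```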

We begin this section with an example of \(\alpha \in \Omega \) and \(\delta (\alpha ) \notin \Omega \). The example demonstrates that, if we initiate the updating process with a vector in \(\Omega \), the process may terminate with a vector that is not finite.

This ‘negative result’ will motivate the rather intricate definition of the set \(\Omega ^*\) of stable vectors, later in this section. The set \(\Omega ^*\) will be a subset of \(\Omega \), so that all results derived in Sect. 3 will also hold for all \(\alpha \in \Omega ^*\). Most importantly however, we will be able to prove that \(\delta (\alpha )\in \Omega ^*\) for all \(\alpha \in \Omega ^*\).

Example 4.1

For the game depicted in Fig. 3, let us set \(\alpha _1 = 2\) and \(\alpha _2 = \alpha _3 = \alpha _4 = \alpha _5 = 0\). We claim that \(\alpha \) is semi-stable: It is easy to verify that the condition \({\textsc {safestep}}(x,\alpha ) \ne \emptyset \) for all \(x\in N\) is satisfied. Moreover, the collection \(\mathcal {X}(\alpha )\) consists of only the set \(\{1,2,3\}\), and one can verify that the edge (3, 4) is a non-trivial \(\alpha \)-exit from \(\{1,2,3\}\).

Corollary 3.8 now predicts that any update on \(\alpha \) will be finite. However, the vector that results after the update of the state controlled by player 4 is not semi-stable. The updated vector, say \(\tilde{\alpha }\), is given by \(\tilde{\alpha }_1 = \tilde{\alpha }_4 = 2\) and \(\tilde{\alpha }_2 = \tilde{\alpha }_3 = \tilde{\alpha }_5 =0\). Observe now that \(\{1,2,3\} \in \mathcal {X}(\tilde{\alpha })\) and that \(3\in \textsc {{esc}}(\{1,2,3\},\tilde{\alpha })\). Thus, the edge (3, 4) is a trivial \(\tilde{\alpha }\)-exit from \(\{1,2,3\}\). As there is no other serious candidate for a non-trivial \(\tilde{\alpha }\)-exit from \(\{1,2,3\}\), we conclude that \(\tilde{\alpha }\) is not semi-stable.

Any update on \(\tilde{\alpha }\) is still finite, even though Corollary 3.8 does not apply. However, two consecutive updates of the vector \(\tilde{\alpha }\) will result in a vector that is not finite anymore. If we first update the state controlled by 3, then its new value will be \(\beta (3,1,\tilde{\alpha }) = 1\): indeed, any \(\tilde{\alpha }\)-viable plan with initial state 1 and with a reward lower than 1 for player 3 must absorb at 5, which is not \((3,1,\tilde{\alpha })\)-admissible. If we subsequently update the state controlled by 2, we see that there are no (2, 3)-admissible plans anymore, hence the value of 2 becomes infinite. The reader may wish to verify that a different order of updates does not solve the problem. \(\square \)

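Let \(\alpha \in \Omega \), \(X\subseteq N\) and \(Z\subseteq X\). We say that an edge (x, y) is an \((\alpha ,Z)\)-exit from X if \(x\in X\) and \(y\in S{\setminus } X\), and if, for all \(v\in {\textsc {viable}}(y,\alpha )\),

\[Z\cup \textsc {{esc}}(X,\alpha ) \subseteq {\textsc {sat}}(v,\alpha ) \implies x\in {\textsc {sat}}(v,\alpha ).\]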

We say that a sequence of edges \(\mathbbm {e} = (x_i,y_i)_{i=1}^k\) is an \(\alpha \)-exit sequence from X if, for all \(i\in \{1,\ldots ,k\}\), the edge \((x_i,y_i)\) is an \((\alpha ,\{x_1,\ldots ,x_{i-1}\})\)-exit from X. For technical reasons, we allow \(k=0\), i.e. the empty sequence will also be called an \(\alpha \)-exit sequence from X. We say that the \(\alpha \)-exit sequence from X is trivial if \(x_i\in \textsc {{esc}}(X,\alpha )\) for all \(i\in \{1,\ldots ,k\}\). We say that it is non-trivial if the sequence is non-empty and if there exists \(i\in \{1,\ldots ,k\}\) such that \(x_i\in X{\setminus } \textsc {{esc}}(X,\alpha )\). We say that the \(\alpha \)-exit sequence from X is positive if the sequence is non-empty and if there exists \(i\in \{1,\ldots ,k\}\) such that \(x_i\in {\textsc {pos}}(X,\alpha )\).

We now say that a vector \(\alpha \in \mathbb {R}^N\) is stable if \({\textsc {safestep}}(x,\alpha ) \ne \emptyset \) for all \(x\in N\) and if a positive \(\alpha \)-exit sequence from X exists for all \(X\in \mathcal {X}(\alpha )\). We denote the set of stable vectors in \(\mathbb {R}^N\) by \(\Omega ^*\).
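For a finite instance, whether a positive \(\alpha \)-exit sequence from X exists can be decided by a greedy closure. This rests on an observation made only for this sketch: if \(Z\subseteq Z'\), every \((\alpha ,Z)\)–exit is also an \((\alpha ,Z')\)–exit, since the hypothesis of the implication only gets stronger. Consequently, repeatedly appending any edge with a new tail that is an exit relative to the tails collected so far yields an exit sequence whose tail set contains the tail of every edge of every \(\alpha \)-exit sequence from X, provided `edges` lists all edges from X to \(S{\setminus } X\). The names below, and the representation of \({\textsc {safestep}}\) as precomputed booleans, are hypothetical.

```python
from typing import Dict, FrozenSet, List, Set, Tuple

Edge = Tuple[str, str]
SatTable = Dict[str, List[FrozenSet[str]]]  # y -> sat(v, alpha) for v in viable(y, alpha)


def is_exit(edge, Z, X, esc, viable_sat) -> bool:
    x, y = edge
    if x not in X or y in X:
        return False
    need = set(Z) | esc
    return all(x in sat for sat in viable_sat.get(y, []) if need <= sat)


def greedy_exit_sequence(edges: List[Edge], X: Set[str], esc: Set[str], viable_sat: SatTable) -> List[Edge]:
    """Append edges with new tails that are (alpha, Z)-exits, where Z is the set of
    tails collected so far, until a full pass adds nothing."""
    seq: List[Edge] = []
    tails: Set[str] = set()
    changed = True
    while changed:
        changed = False
        for e in edges:
            if e[0] not in tails and is_exit(e, tails, X, esc, viable_sat):
                seq.append(e)
                tails.add(e[0])
                changed = True
    return seq


def has_positive_exit_sequence(edges, X, esc, pos, viable_sat) -> bool:
    tails = {x for x, _ in greedy_exit_sequence(edges, X, esc, viable_sat)}
    return bool(tails & pos)


def is_stable(safestep_ok: Dict[str, bool], components, viable_sat) -> bool:
    """components: one (edges, X, esc, pos) tuple for each X in the family X(alpha)."""
    return all(safestep_ok.values()) and all(
        has_positive_exit_sequence(edges, X, esc, pos, viable_sat)
        for edges, X, esc, pos in components
    )


# Hypothetical toy instance: the edge (a, a*) only becomes an exit once b is a tail.
X, ESC, POS = {"a", "b"}, set(), {"a"}
VIABLE_SAT: SatTable = {"a*": [frozenset({"a", "b"}), frozenset()], "b*": [frozenset({"b"})]}
EDGES: List[Edge] = [("a", "a*"), ("b", "b*")]
```

Each pass that changes anything adds a new element of X to the tail set, so the loop performs at most \(|X|+1\) passes.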

Example 4.2

Let us illustrate the definitions of this section with the 3-player game in Fig. 4. Notice that \(X = \{1,2,3\}\) is the unique element of \(\mathcal {C}\), and thus the only candidate for an element of \(\mathcal {X}(\alpha ) = \mathcal {P}(\alpha ) \cap \mathcal {E}(\alpha ) \cap \mathcal {C}\).

We set \(\alpha = (0,2,-1)\). Then we have \({\textsc {pos}}(X,\alpha ) = \{2\} \ne \emptyset \), hence \(X\in \mathcal {P}(\alpha )\). We further have \({\textsc {pos}}(X,\alpha ) \cap \textsc {{esc}}(X,\alpha ) = \{2\} \cap \{3\} = \emptyset \), hence \(X\in \mathcal {E}(\alpha )\). Thus, \(\mathcal {X}(\alpha ) = \{X\}\).

Let us first verify that the edge \((1,1^*)\) is a non-trivial \(\alpha \)-exit from X. We need to check that, for all \(v\in {\textsc {viable}}(1^*,\alpha )\), we have \(\{3\} = \textsc {{esc}}(X,\alpha ) \subseteq {\textsc {sat}}(v,\alpha ) \Rightarrow 1\in {\textsc {sat}}(v,\alpha )\). The only plan in \({\textsc {viable}}(1^*,\alpha )\) is \(g = (1^*,1^*,\ldots )\), and the implication \(\{3\} \subseteq {\textsc {sat}}(g,\alpha ) \Rightarrow 1\in {\textsc {sat}}(g,\alpha )\) holds vacuously, since player 3 is not \(\alpha \)-satisfied by plan g. Since, moreover, \(1\in X{\setminus } \textsc {{esc}}(X,\alpha )\) and \(1^*\in S{\setminus } X\), the edge \((1,1^*)\) is a non-trivial \(\alpha \)-exit from X.

Let us next verify that the edge \((2,2^*)\) is an \((\alpha ,\{1\})\)-exit from X. We need to check that, for all \(v\in {\textsc {viable}}(2^*,\alpha )\), we have \(\{1,3\} = \{1\}\cup \textsc {{esc}}(X,\alpha ) \subseteq {\textsc {sat}}(v,\alpha ) \Rightarrow 2\in {\textsc {sat}}(v,\alpha )\). The only plan in \({\textsc {viable}}(2^*,\alpha )\) is \(g = (2^*,2^*,\ldots )\), and the implication \(\{1,3\} \subseteq {\textsc {sat}}(g,\alpha ) \Rightarrow 2\in {\textsc {sat}}(g,\alpha )\) holds vacuously, since player 1 is not \(\alpha \)-satisfied by plan g. As \(2\in X\) and \(2^*\in S{\setminus } X\), the edge \((2,2^*)\) is an \((\alpha ,\{1\})\)-exit from X.

We see that \(((1,1^*))\) and \(((1,1^*),(2,2^*))\) are both non-trivial \(\alpha \)-exit sequences from X. The latter sequence is a positive \(\alpha \)-exit sequence from X, since \(2\in {\textsc {pos}}(X,\alpha )\). It follows that the vector \(\alpha = (0,2,-1)\) is stable, since, in addition, \({\textsc {safestep}}(t,\alpha ) \ne \emptyset \) for all \(t\in \{1,2,3\}\).
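The verification in Example 4.2 can be replayed mechanically. Since Fig. 4 is not reproduced here, the sketch below uses only the facts stated in the text: \(X=\{1,2,3\}\), \(\textsc {{esc}}(X,\alpha )=\{3\}\), \({\textsc {pos}}(X,\alpha )=\{2\}\), each of \(1^*\) and \(2^*\) has a single viable plan, player 3 is not satisfied by the plan at \(1^*\), and player 1 is not satisfied by the plan at \(2^*\). It then checks that the conclusion holds for every satisfaction set compatible with those facts. The helper names are hypothetical.

```python
from itertools import chain, combinations


def subsets(universe):
    """All subsets of a finite set, as frozensets."""
    items = sorted(universe)
    return [frozenset(c) for c in chain.from_iterable(combinations(items, r) for r in range(len(items) + 1))]


def is_exit(edge, Z, X, esc, viable_sat) -> bool:
    """(alpha, Z)-exit test; viable_sat[y] lists sat(v, alpha) for v in viable(y, alpha)."""
    x, y = edge
    if x not in X or y in X:
        return False
    need = set(Z) | esc
    return all(x in sat for sat in viable_sat[y] if need <= sat)


# Data stated in Example 4.2 for alpha = (0, 2, -1).
X, ESC, POS = {1, 2, 3}, {3}, {2}
SEQ = [(1, "1*"), (2, "2*")]


def example_4_2_holds() -> bool:
    # Only the stated facts are used: 3 is not in sat(g) for g = (1*, 1*, ...),
    # and 1 is not in sat(g) for g = (2*, 2*, ...); every compatible choice is tried.
    for s1 in subsets(X - {3}):
        for s2 in subsets(X - {1}):
            viable_sat = {"1*": [s1], "2*": [s2]}
            is_seq = all(is_exit(e, {x for x, _ in SEQ[:i]}, X, ESC, viable_sat) for i, e in enumerate(SEQ))
            non_trivial = any(x in X - ESC for x, _ in SEQ)
            positive = any(x in POS for x, _ in SEQ)
            if not (is_seq and non_trivial and positive):
                return False
    return True
```

Both exits hold vacuously in every case, which mirrors the argument in the text; if, hypothetically, the plan at \(1^*\) satisfied player 3 but not player 1, the first edge would fail.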

Remark The vector \(\alpha = (0,2,-1)\) can be obtained in two updates from the vector \(\rho = (-1,1,-1)\) (the vector defined by \(\rho _t = r_t(t)\) for all \(t\in N\)). In the example, each update extends the exit sequence from X by one edge, which is typically what happens if an element \(t\in X\) is updated and if \(B(t,\alpha )\subseteq X\). In Example 4.1, we saw how an \(\alpha \)-exit from X can disappear by an update of some \(t\notin X\). However, such an update outside X cannot make a positive \(\alpha \)-exit sequence disappear. \(\diamondsuit \)

The following lemma states some elementary facts about exit sequences.

Lemma 4.1

Let \(X\subseteq N\) and let \(\alpha \in \Omega \).

(i) If \(\mathbbm {e}\) is a non-empty \(\alpha \)-exit sequence from X, then its first edge is an \(\alpha \)-exit from X.

(ii) If \(\mathbbm {e}\) is an \(\alpha \)-exit sequence from X, and if (x, y) is an edge of \(\mathbbm {e}\) with \(x\in \textsc {{esc}}(X,\alpha )\), then the sequence \(\overline{\mathbbm {e}}\) obtained from \(\mathbbm {e}\) by deleting the edge (x, y) is also an \(\alpha \)-exit sequence from X. Moreover, if \(\mathbbm {e}\) is a positive \(\alpha \)-exit sequence from X and if \(X\in \mathcal {X}(\alpha )\), then \(\overline{\mathbbm {e}}\) is also a positive \(\alpha \)-exit sequence from X.

(iii) If a non-trivial \(\alpha \)-exit sequence from X exists, then a non-trivial \(\alpha \)-exit from X exists.

(iv) If \(\mathbbm {e}\) and \(\mathbbm {f}\) are both \(\alpha \)-exit sequences from X, then the concatenation of these two sequences, denoted by \((\mathbbm {e},\mathbbm {f})\), is also an \(\alpha \)-exit sequence from X. If, moreover, \(\mathbbm {e}\) or \(\mathbbm {f}\) is a positive \(\alpha \)-exit sequence from X, then \((\mathbbm {e},\mathbbm {f})\) is also a positive \(\alpha \)-exit sequence from X.

(v) If \(\mathbbm {e}\) is a positive \(\alpha \)-exit sequence from X and if \(X\in \mathcal {X}(\alpha )\), then \(\mathbbm {e}\) is non-trivial.

Proof

Proof of (i): If \(\mathbbm {e}\) is a non-empty \(\alpha \)-exit sequence from X, then its first edge is by definition an \((\alpha ,\emptyset )\)–exit from X. That is also the definition of an \(\alpha \)-exit from X.

Proof of (ii): Let \(\mathbbm {e} = (x_i,y_i)_{i=1}^k\) be an \(\alpha \)-exit sequence from X. Suppose \(h\in \{1,\ldots ,k\}\) is such that \(x_h\in \textsc {{esc}}(X,\alpha )\). Then we have, for all \(j\in \{1,\ldots , k\}\), that

\[ \bigl(\{x_1,\ldots ,x_{j-1}\}{\setminus } \{x_h\}\bigr) \cup \textsc {{esc}}(X,\alpha ) = \{x_1,\ldots ,x_{j-1}\} \cup \textsc {{esc}}(X,\alpha ). \]

Now, by the definition of an \((\alpha ,Z)\)–exit from X and by the fact that \((x_j,y_j)\) is an \((\alpha ,\{x_1,\ldots ,x_{j-1}\})\)–exit from X, it follows that edge \((x_j,y_j)\) is an \((\alpha ,\{x_1,\ldots ,x_{j-1}\}{\setminus } \{x_h\})\)–exit from X, for all \(j\in \{1,\ldots , k\}\); thus also for all \(j\ne h\). This means that the sequence \(\overline{\mathbbm {e}}\), obtained from \(\mathbbm {e}\) by deleting edge \((x_h,y_h)\) from it, is also an \(\alpha \)-exit sequence from X.

Moreover, if \(X\in \mathcal {X}(\alpha )\), then \(\textsc {{esc}}(X,\alpha ) \cap {\textsc {pos}}(X,\alpha ) = \emptyset \). So in this case we have \(x_h\notin {\textsc {pos}}(X,\alpha )\), and it follows that the sequence \(\overline{\mathbbm {e}}\) is positive if \(\mathbbm {e}\) is positive.

Proof of (iii): Let \(\mathbbm {e} = (x_i,y_i)_{i=1}^k\) be a non-trivial \(\alpha \)-exit sequence from X. Let h be the smallest index with \(x_h\in X{\setminus } \textsc {{esc}}(X,\alpha )\), so that \(x_i\in \textsc {{esc}}(X,\alpha )\) for all \(i<h\). Denote by \(\overline{\mathbbm {e}}\) the edge-sequence obtained from \(\mathbbm {e}\) by deleting all edges \((x_i,y_i)\) with \(i<h\). Then \(\overline{\mathbbm {e}}\) is an \(\alpha \)-exit sequence from X, by repeated application of (ii). The first edge of \(\overline{\mathbbm {e}}\), i.e. \((x_h,y_h)\), is an \(\alpha \)-exit from X by (i), and it is non-trivial because \(x_h\in X{\setminus } \textsc {{esc}}(X,\alpha )\).

Proof of (iv): Let \(\mathbbm {e} = (x_i,y_i)_{i=1}^k\) and \(\mathbbm {f} = (x_i,y_i)_{i=k+1}^\ell \) be two \(\alpha \)-exit sequences from X. We need to prove that \((x_i,y_i)_{i=1}^\ell \) is an \(\alpha \)-exit sequence from X. To see this, let \(i\in \{1,\ldots , \ell \}\) and let \(v\in {\textsc {viable}}(y_i,\alpha )\) be such that \(\{x_1,\ldots , x_{i-1}\} \cup \textsc {{esc}}(X,\alpha ) \subseteq {\textsc {sat}}(v,\alpha )\); we must show that \(x_i\in {\textsc {sat}}(v,\alpha )\). If \(i\le k\), this follows from the fact that \((x_i,y_i)\) is an \((\alpha ,\{x_1,\ldots ,x_{i-1}\})\)–exit from X. If \(i>k\), then \(\{x_{k+1},\ldots ,x_{i-1}\} \cup \textsc {{esc}}(X,\alpha ) \subseteq \{x_1,\ldots , x_{i-1}\} \cup \textsc {{esc}}(X,\alpha ) \subseteq {\textsc {sat}}(v,\alpha )\), and \(x_i\in {\textsc {sat}}(v,\alpha )\) follows from the fact that \((x_i,y_i)\) is an \((\alpha ,\{x_{k+1},\ldots ,x_{i-1}\})\)–exit from X.

If, moreover, one of the sequences \(\mathbbm {e} \) or \(\mathbbm {f}\) is a positive \(\alpha \)-exit sequence from X, then one of these sequences contains an edge (x, y) with \(x\in {\textsc {pos}}(X,\alpha )\). Obviously, \((\mathbbm {e}, \mathbbm {f})\) also contains the edge (x, y), and is therefore a positive \(\alpha \)-exit sequence from X.

Proof of (v): Let \((x_i,y_i)_{i=1}^k\) be a positive \(\alpha \)-exit sequence from \(X\in \mathcal {X}(\alpha )\). Then the sequence is non-empty and there exists \(i\in \{1,\ldots ,k\}\) with \(x_i\in {\textsc {pos}}(X,\alpha )\). Moreover, \(x_i\notin \textsc {{esc}}(X,\alpha )\), since \({\textsc {pos}}(X,\alpha ) \cap \textsc {{esc}}(X,\alpha ) = \emptyset \) by the fact that \(X\in \mathcal {X}(\alpha ) \subseteq \mathcal {E}(\alpha )\). Hence \(x_i\in X{\setminus } \textsc {{esc}}(X,\alpha )\), and the sequence is non-trivial. \(\square \)
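Parts (ii) and (iv) of Lemma 4.1 can also be exercised numerically. The sketch below is a randomized sanity check, not a proof: it draws small hypothetical instances (X = {0, 1, 2, 3}, three outside states, random satisfaction sets standing in for \({\textsc {sat}}(v,\alpha )\)), builds random \(\alpha \)-exit sequences, and confirms that concatenations remain exit sequences and that deleting an edge whose tail lies in \(\textsc {{esc}}(X,\alpha )\) preserves the property. All names and data are made up for illustration.

```python
import random


def is_exit(edge, Z, X, esc, viable_sat) -> bool:
    """(alpha, Z)-exit test; viable_sat[y] lists sat(v, alpha) for v in viable(y, alpha)."""
    x, y = edge
    if x not in X or y in X:
        return False
    need = set(Z) | esc
    return all(x in sat for sat in viable_sat[y] if need <= sat)


def is_exit_sequence(seq, X, esc, viable_sat) -> bool:
    return all(is_exit(e, {x for x, _ in seq[:i]}, X, esc, viable_sat) for i, e in enumerate(seq))


def random_instance(rng):
    # Hypothetical toy data: X = {0,1,2,3}, three outside states, random sat sets.
    X = {0, 1, 2, 3}
    esc = set(rng.sample(sorted(X), rng.randint(0, 2)))
    outside = ["a", "b", "c"]
    viable_sat = {
        y: [frozenset(x for x in X if rng.random() < 0.5) for _ in range(rng.randint(1, 3))]
        for y in outside
    }
    edges = [(x, y) for x in sorted(X) for y in outside]
    return X, esc, viable_sat, edges


def random_exit_sequence(rng, X, esc, viable_sat, edges, max_len=4):
    # Append random edges that are exits relative to the tails chosen so far.
    seq = []
    for _ in range(max_len):
        tails = {x for x, _ in seq}
        options = [e for e in edges if is_exit(e, tails, X, esc, viable_sat)]
        if not options:
            break
        seq.append(rng.choice(options))
    return seq


def check_lemma_4_1(trials=2000, seed=0) -> bool:
    rng = random.Random(seed)
    for _ in range(trials):
        X, esc, viable_sat, edges = random_instance(rng)
        e = random_exit_sequence(rng, X, esc, viable_sat, edges)
        f = random_exit_sequence(rng, X, esc, viable_sat, edges)
        if not is_exit_sequence(e + f, X, esc, viable_sat):  # part (iv)
            return False
        for h, (x, _) in enumerate(e):  # part (ii): drop an edge whose tail is in esc
            if x in esc and not is_exit_sequence(e[:h] + e[h + 1:], X, esc, viable_sat):
                return False
    return True
```

A failed check would point to a bug in the encoding rather than in the lemma, since the statements are proved above.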

Lemma 4.2

We have \(\rho \in \Omega ^*\subseteq \Omega \), where \(\rho \) is the vector defined by \(\rho _t = r_t(t)\) for all \(t\in N\).

Proof

For the vector \(\rho \), we demonstrated in the proof of Lemma 3.1 that \({\textsc {safestep}}(t,\rho )\ne \emptyset \) for all \(t\in N\). We also demonstrated that \(\mathcal {X}(\rho ) = \emptyset \), so trivially, a positive \(\rho \)-exit sequence from X exists for all \(X\in \mathcal {X}(\rho )\). Thus, \(\rho \in \Omega ^*\).

Since for \(X\in \mathcal {X}(\alpha )\), a positive \(\alpha \)-exit sequence from X is non-trivial [by Lemma 4.1-(v)], and since the existence of a non-trivial \(\alpha \)-exit sequence from X implies the existence of a non-trivial \(\alpha \)-exit from X [by Lemma 4.1-(iii)], it follows that any stable vector is also semi-stable. Thus, \(\Omega ^*\subseteq \Omega \). \(\square \)

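Let us fix \(\alpha \in \Omega ^*\) and \(t\in N\). Let further \(\delta \) denote the update of \(\alpha \), where \(\alpha _t\) is replaced by \(\delta (t,\alpha )\). It follows from Lemma 4.2 and Corollary 3.10 that \(\delta \) satisfies the condition \({\textsc {safestep}}(x,\delta ) \ne \emptyset \) for all \(x\in N\). In this section, we will demonstrate that \(\delta \) also has the property that a positive \(\delta \)-exit sequence from X exists, for all \(X\in \mathcal {X}(\delta )\). Let us partition the set \(\mathcal {X}(\delta )\) into two subsets: the set

\[ \mathcal {V}(t,\delta ) = \{X\in \mathcal {X}(\delta ) : t\notin X \text{ or } t\in \textsc {{esc}}(X,\delta )\}, \]

and its complement in \(\mathcal {X}(\delta )\), which consists of the sets \(X\in \mathcal {X}(\delta )\) with \(t\in X{\setminus } \textsc {{esc}}(X,\delta )\).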
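The split of \(\mathcal {X}(\delta )\) depends only on whether the updated player t lies outside X or is an escape element of X under \(\delta \); this reading of \(\mathcal {V}(t,\delta )\) is taken from how it is used in the proofs of Lemmas 4.3 and 4.4. A minimal Python sketch, assuming \(\textsc {{esc}}(\cdot ,\delta )\) is available as a lookup; the function name and the data are hypothetical.

```python
from typing import Callable, FrozenSet, Iterable, List, Set, Tuple


def partition_by_update(
    family: Iterable[FrozenSet[int]], t: int, esc_of: Callable[[FrozenSet[int]], Set[int]]
) -> Tuple[List[FrozenSet[int]], List[FrozenSet[int]]]:
    """Split X(delta) into V(t, delta) = {X : t not in X or t in esc(X, delta)} and the rest."""
    V, rest = [], []
    for X in family:
        if t not in X or t in esc_of(X):
            V.append(X)
        else:  # t lies in X but is not an escape element of X under delta
            rest.append(X)
    return V, rest


# Hypothetical data: three members of X(delta) and an assumed esc(., delta) table.
FAMILY = [frozenset({1, 2, 3}), frozenset({1, 2}), frozenset({2, 4})]
ESC_DELTA = {frozenset({1, 2, 3}): {3}, frozenset({1, 2}): {2}, frozenset({2, 4}): {4}}
```

With t = 2, only {1, 2} falls in \(\mathcal {V}(t,\delta )\), because 2 is an escape element there; with t = 3, every set does.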

We first deal with the set \(\mathcal {V}(t,\delta )\). We will prove that its elements are all present in \(\mathcal {X}(\alpha )\), which implies the existence of a positive \(\alpha \)-exit sequence from X for all \(X\in \mathcal {V}(t,\delta )\), as \(\alpha \in \Omega ^*\). We then proceed by proving that, for all \(X\in \mathcal {V}(t,\delta )\), every \(\alpha \)-exit sequence from X is also a \(\delta \)-exit sequence from X.

Lemma 4.3

We have \(\mathcal {V}(t,\delta ) \subseteq \mathcal {X}(\alpha )\).

Proof

Let \(X\in \mathcal {V}(t,\delta )\). We need to prove that \(X\in \mathcal {X}(\alpha ) = \mathcal {P}(\alpha ) \cap \mathcal {E}(\alpha ) \cap \mathcal {C}\).

Proof that \(X\in \mathcal {P}(\alpha )\): We claim that \(t\notin {\textsc {pos}}(X,\delta )\). This is trivial if \(t\notin X\). Otherwise, we have \(t\in \textsc {{esc}}(X,\delta )\), since \(X\in \mathcal {V}(t,\delta )\). We also have \(\textsc {{esc}}(X,\delta )\cap {\textsc {pos}}(X,\delta ) = \emptyset \), by the fact that \(X\in \mathcal {V}(t,\delta ) \subseteq \mathcal {X}(\delta ) \subseteq \mathcal {E}(\delta )\). Now \(t\notin {\textsc {pos}}(X,\delta )\) follows from the combination of these facts.

Then \({\textsc {pos}}(X,\alpha ) = {\textsc {pos}}(X,\delta ) \ne \emptyset \), where the equality is by the fact that \(\alpha _x = \delta _x\) for all \(x\in X{\setminus } \{t\}\) and the fact that \(\alpha _t \le \delta _t \le 0\), and the inequality is by the fact that \(X\in \mathcal {X}(\delta ) \subseteq \mathcal {P}(\delta )\).

Proof that \(X\in \mathcal {E}(\alpha )\): We have \(t\notin X\) or \(t\in \textsc {{esc}}(X,\delta )\) by the fact that \(X\in \mathcal {V}(t,\delta )\). Then \(\textsc {{esc}}(X,\alpha ) \subseteq \textsc {{esc}}(X,\delta )\) by Lemma 3.9-(v). It follows that

\[ \textsc {{esc}}(X,\alpha ) \cap {\textsc {pos}}(X,\alpha ) \subseteq \textsc {{esc}}(X,\delta ) \cap {\textsc {pos}}(X,\delta ) = \emptyset , \]

where the inclusion is by the fact that \(\textsc {{esc}}(X,\alpha ) \subseteq \textsc {{esc}}(X,\delta )\) and \({\textsc {pos}}(X,\alpha ) = {\textsc {pos}}(X,\delta )\), and the equality is by the fact that \(X\in \mathcal {V}(t,\delta ) \subseteq \mathcal {X}(\delta ) \subseteq \mathcal {E}(\delta )\).

Proof that \(X\in \mathcal {C}\): Obviously, we have \(X \in \mathcal {C}\), since \(X\in \mathcal {V}(t,\delta ) \subseteq \mathcal {X}(\delta ) \subseteq \mathcal {C}\). \(\square \)

Lemma 4.4

For \(X\in \mathcal {V}(t,\delta )\), every (positive) \(\alpha \)-exit sequence from X is a (positive) \(\delta \)-exit sequence from X.

Proof

Let \(X\in \mathcal {V}(t,\delta )\) and let \(\mathbbm {e} = (x_i,y_i)^k_{i=1}\) be an \(\alpha \)-exit sequence from X. Choose \(j\in \{1,\ldots ,k\}\), let \(g\in {\textsc {viable}}(y_j,\delta )\) and assume that \(\{x_1,\ldots , x_{j-1}\} \cup \textsc {{esc}}(X,\delta ) \subseteq {\textsc {sat}}(g,\delta )\). We will prove that \(x_j\in {\textsc {sat}}(g,\delta )\).

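We have \(\textsc {{esc}}(X,\alpha ) \subseteq \textsc {{esc}}(X,\delta )\) by Lemma 3.9-(v). Therefore,

\[ \{x_1,\ldots , x_{j-1}\} \cup \textsc {{esc}}(X,\alpha ) \subseteq \{x_1,\ldots , x_{j-1}\} \cup \textsc {{esc}}(X,\delta ) \subseteq {\textsc {sat}}(g,\delta ) \subseteq {\textsc {sat}}(g,\alpha ), \]

where the last inclusion holds because \(\alpha \) and \(\delta \) differ only in coordinate t, where \(\alpha _t \le \delta _t\).

Then \(x_j \in {\textsc {sat}}(g,\alpha )\), by the fact that \((x_j,y_j)\) is an \((\alpha ,\{x_1,\ldots ,x_{j-1}\})\)–exit from X, and by the fact that \(g\in {\textsc {viable}}(y_j,\delta ) \subseteq {\textsc {viable}}(y_j,\alpha )\). If \(x_j\ne t\), then \(x_j \in {\textsc {sat}}(g,\delta )\) follows because \(\alpha _{x_j} = \delta _{x_j}\). If \(x_j = t\), then \(t\in X\), and hence \(t\in \textsc {{esc}}(X,\delta )\) because \(X\in \mathcal {V}(t,\delta )\); now \(x_j \in {\textsc {sat}}(g,\delta )\) follows from the assumption \(\{x_1,\ldots ,x_{j-1}\} \cup \textsc {{esc}}(X,\delta ) \subseteq {\textsc {sat}}(g,\delta )\).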