Abstract

Key message

A novel reparametrization-based INLA approach is presented as a fast alternative to MCMC for the Bayesian estimation of genetic parameters in multivariate animal models.

Abstract

Multi-trait genetic parameter estimation is a relevant topic in animal and plant breeding programs because multi-trait analysis can take into account the genetic correlation between traits, which can substantially improve the accuracy of the genetic parameter estimates. However, multi-trait analysis is generally computationally demanding and requires initial estimates of the genetic and residual correlations among the traits, which are difficult to obtain. In this study, we illustrate how to reparametrize the covariance matrices of a multivariate animal model using modified Cholesky decompositions. This reparametrization-based approach is used within the Integrated Nested Laplace Approximation (INLA) methodology to estimate the genetic parameters of a multivariate animal model. The immediate benefits are: (1) it avoids the difficulty of finding good starting values for the analysis, which can be a problem, for example, in Restricted Maximum Likelihood (REML); and (2) Bayesian estimation of (co)variance components using INLA is faster than using Markov Chain Monte Carlo (MCMC), especially when realized relationship matrices are dense. A slight drawback is that priors are assigned to the elements of the Cholesky factor rather than directly to the covariance matrix elements as in MCMC. Additionally, we illustrate the concordance of the INLA results with those of traditional methods such as MCMC and REML. We also present results obtained from simulated data sets with replicates and from field data in rice.

Introduction

Estimation of variance components and associated breeding values is an important topic in the classical (e.g., Piepho et al. 2008; Oakey et al. 2006; Bauer et al. 2006) and in the Bayesian (e.g., Wang et al. 1993; Blasco 2001; Sorensen and Gianola 2002; Mathew et al. 2012) single-trait mixed model context. Similarly, multi-trait models have been proposed in both settings (e.g., Bauer and Léon 2008; Thompson and Meyer 1986; Korsgaard et al. 2003; Van Tassell and Van Vleck 1996; Hadfield 2010). Multi-trait analyses can take into account the correlation structure among all traits, which increases the accuracy of evaluation. However, this gain in accuracy depends on the absolute difference between the genetic and residual correlations between the traits (Mrode and Thompson 2005): the larger the difference between these correlations, the larger the gain in evaluation accuracy (Schaeffer 1984; Thompson and Meyer 1986). Persson and Andersson (2004) compared single-trait and multi-trait analyses of breeding values and showed that multi-trait predictors resulted in a lower average bias than single-trait analysis. Estimation of the genetic and residual covariance matrices is the main challenge in multi-trait analysis in the mixed model framework. In Bayesian analysis of multi-trait animal models, the inverse-Wishart distribution is the common choice of prior for these unknown covariance matrices. An inverse-Wishart prior guarantees that the resulting covariance matrix is positive definite (and therefore invertible). However, the inverse-Wishart prior is quite restrictive, because it assigns the same degrees of freedom to all components of the covariance matrix (Barnard et al. 2000). Moreover, it is often difficult to suggest prior distributions that can be used in common situations. The matrix decomposition approach presented in this paper assigns independent priors to the elements of the Cholesky factor.

Markov Chain Monte Carlo (MCMC) methods are a popular choice for Bayesian inference of animal models (Sorensen and Gianola 2002). However, inference using MCMC methods is often challenging for a non-specialist. Although various MCMC-based packages are available for Bayesian inference (e.g., MCMCglmm, Hadfield 2010; BUGS, Lunn et al. 2000; Stan, Stan Development Team 2014), most of them are not easy to use and are computationally expensive. Among these packages, MCMCglmm seems to be comparatively easy to use and computationally inexpensive. As an alternative to MCMC methods, one can use the recently implemented non-sampling-based Bayesian inference method, Integrated Nested Laplace Approximation (INLA, Rue et al. 2009). The INLA methodology is comparatively easy to implement, but less flexible than MCMC methods (Holand et al. 2013).

Canonical transformation is a common matrix decomposition technique in multi-trait animal models; it simultaneously diagonalizes the genetic covariance matrix and transforms the residual covariance matrix to an identity matrix (see e.g., Ducrocq and Chapuis 1997). After the transformation, best linear unbiased prediction (BLUP) values can be calculated independently for each trait using a univariate animal model and then back-transformed to obtain the benefits of multi-trait analysis. However, canonical transformation requires that the covariance matrices are known before the transformation. Therefore, it cannot be applied to variance component estimation, where the genetic and residual covariance matrices are unknown. Here, as an improvement, we introduce another kind of decomposition approach, in which the elements of the transformation matrix are estimated simultaneously with the other mixed model parameters, so that the transformation can also be applied to variance component estimation. This kind of modified Cholesky decomposition is required to perform multi-trait analysis in INLA (see Bøhn 2014). Closely related decomposition approaches have been presented by Pourahmadi (1999, 2000, 2011) and Gao et al. (2015). Our approach is also somewhat related to factor analytic (FA) models (e.g., Meyer 2009; Cullis et al. 2014), which were first introduced in a breeding context by Piepho (1997, 1998).

In this paper, we illustrate this approach to estimating genetic and residual covariance matrices with INLA and compare the obtained estimates with those from REML (Patterson and Thompson 1971) and MCMC approaches using simulated and real data sets. With the recent development of low-cost high-throughput DNA sequencing technologies, it is now possible to obtain thousands of single nucleotide polymorphism (SNP) markers covering the whole genome. At the same time, detailed pedigree information is often available in animal and plant breeding programs. We therefore present results obtained using marker data (real dataset) along with estimates obtained using pedigree information (simulated data). We also outline a simpler approach to simulating correlated traits based on the additive relationship matrix.

Models and methods

Model

We consider the multi-trait mixed model by Henderson and Quaas (1976). Let vector \({\mathbf {y}}_{1}\) represent the \(n_{1}\) observations for trait 1, \({\mathbf {y}}_{2}\) represent the \(n_{2}\) observations for trait 2 and \({{\mathbf {y}}_{n}}\) represent the \(n_{n}\) observations for trait n. Then the multi-trait mixed linear model for n traits can be written as:

\({\varvec{\beta }}_{{i}}\) is a vector of fixed effects associated with trait \(i\), \({{\mathbf {u}}_{i}}\) is a vector of random additive genetic effects associated with trait \(i\), \({{\varvec{\epsilon }}_{i}}\) is a vector of error terms, which are independently normally distributed with mean zero and variance \(\sigma ^2_{e}\). Moreover, \({{\mathbf {X}}_{i}}\) and \({{\mathbf {Z}}_{i}}\) are known incidence matrices for the fixed effects and the random effects for the trait \(i\), respectively. Then, the multi-trait mixed model for \(n\) traits can be represented as follows:

Let \({\varvec{\beta }}=[{\varvec{\beta }}^{\prime }_{1},{\varvec{\beta }}^{\prime }_{2},\ldots ,{\varvec{\beta }}^{\prime }_{n}]^{\prime }\), \({\mathbf {u}}=[{\mathbf {u}}^{\prime }_{1},{\mathbf {u}}^{\prime }_{2},\ldots ,{\mathbf {u}}^{\prime }_{n}]^{\prime }\), \({\varvec{\epsilon }}=[{\varvec{\epsilon }}^{\prime }_{1},{\varvec{\epsilon }}^{\prime }_{2},\ldots ,{\varvec{\epsilon }}^{\prime }_{n}]^{\prime }\) and let \(\mathbf {y}\) contain the traits \({\mathbf {y}}_{1},\ldots ,{\mathbf {y}}_{n}\). In our study we considered three correlated traits, so \(i = 1, 2, 3\). Then the mixed model equations (MME) for the model (1) are:

Here, \({\mathbf {R}}\) and \({\mathbf {G}}\) are the covariance matrices associated with the vector \({\varvec{\epsilon }}\) of residuals and the vector \(\mathbf {u}\) of random effects, respectively. If \(\mathbf {R}_{0}\) (of order \(3 \times 3\)) is the residual covariance matrix for the three traits, then \(\mathbf {R}\) can be calculated as \({\mathbf {R}}={\mathbf {R}}_{0}\otimes {\mathbf {I}}\) (here ‘\(\otimes\)’ is the Kronecker product of two matrices and \(\mathbf {I}\) is the identity matrix). Similarly, the genetic covariance matrix \(\mathbf {G}\) can be calculated as \({\mathbf {G}}={\mathbf {G}}_{0}\otimes {\mathbf {A}}\). Here, \(\mathbf {A}\) is the additive genetic relationship matrix (p. 763 in Lynch and Walsh 1998) and \(\mathbf {G}_{0}\) is a \(3 \times 3\) additive genetic (co)variance matrix. For Bayesian inference with the MCMCglmm package using model (1), one needs to specify the conditional distribution of the data (\(\mathbf {y}\)) and the prior distributions of the unknown parameters. The conditional distribution of the data \(\mathbf {y}\), given the parameters, is assumed to be multivariate normal:

The additive genetic effects (\({\mathbf {u}}_{i}\)'s) were assigned multivariate normal distributions with a mean vector of zeros, \(\mathbf 0\), as:

and the residuals (\({\varvec{\epsilon }}_{i}\)'s) were assumed to follow,

where \(\mathbf I\) is an identity matrix. In Bayesian analysis fixed effects also have a prior and here \({\varvec{\beta }}\) was assigned a vague, large-variance Gaussian prior distribution.
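For reference, the model and distributional assumptions described in this section can be collected in one display. This is a sketch reconstructed from the definitions above for the three-trait case, not a copy of the original numbered equations; the compact stacked notation, with \(\mathbf {X}\) and \(\mathbf {Z}\) taken as block-diagonal matrices built from the \({\mathbf {X}}_{i}\) and \({\mathbf {Z}}_{i}\), is an assumption.

```latex
\begin{aligned}
{\mathbf {y}}_{i} &= {\mathbf {X}}_{i}{\varvec{\beta }}_{i} + {\mathbf {Z}}_{i}{\mathbf {u}}_{i} + {\varvec{\epsilon }}_{i}, \qquad i = 1, 2, 3,\\
{\mathbf {y}} \mid {\varvec{\beta }}, {\mathbf {u}}, {\mathbf {R}} &\sim {\mathcal {MVN}}({\mathbf {X}}{\varvec{\beta }} + {\mathbf {Z}}{\mathbf {u}},\; {\mathbf {R}}), \qquad {\mathbf {R}} = {\mathbf {R}}_{0} \otimes {\mathbf {I}},\\
{\mathbf {u}} &\sim {\mathcal {MVN}}({\mathbf {0}},\; {\mathbf {G}}_{0} \otimes {\mathbf {A}}),\\
{\varvec{\epsilon }} &\sim {\mathcal {MVN}}({\mathbf {0}},\; {\mathbf {R}}_{0} \otimes {\mathbf {I}}).
\end{aligned}
```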

Reparametrization of trivariate animal model in INLA

Steinsland and Jensen (2010) showed that animal models are latent Gaussian Markov random field (GMRF) models with a sparse precision matrix (the inverse of the additive relationship matrix, \(\mathbf {A}^{-1}\)) and can be analyzed in the INLA framework. Mathew et al. (2015), Larsen et al. (2014) and Holand et al. (2013) used INLA for Bayesian inference of univariate animal models, while in a recent study, Bøhn (2014) showed how to analyze a bivariate animal model using INLA. Unlike in MCMCglmm and ASReml-R (Butler et al. 2007), the analysis of a multivariate animal model is not straightforward in R-INLA. For multivariate inference in INLA, we first expressed the trivariate distribution as a set of univariate Gaussian distributions; we then used the multiple likelihood feature in INLA and the recently implemented ‘copy’ feature (Martins et al. 2013) to fit our trivariate animal model with separate likelihoods that share a few common parameters. The ‘copy’ feature in INLA allows us to estimate the dependency parameters between traits. For the INLA analysis of the trivariate animal model, we can reparametrize our model for the observation vector \(\mathbf {y}\) as follows:

Here, \(\mathbf {y}_{1}, \mathbf {y}_{2}, \mathbf {y}_{3}\) are the traits, \(\kappa _{i,j}\) defines the dependency between the additive effects (\({\mathbf {a}}_{i}\)'s) and \(\alpha _{i,j}\) defines the dependency between the error terms (\({\mathbf {e}}_{i}\)'s). For Bayesian inference, one needs to assign prior distributions to the unknown parameters. The additive genetic effects (\({\mathbf {a}}_{i}\)'s) for each trait were assigned multivariate normal distributions with a mean vector of zeros, \(\mathbf {0}\), as:

whereas the residuals (\({\mathbf {e}}_{i}\)'s) were assumed to follow a multivariate normal distribution as follows:

where \(\mathbf I\) is an identity matrix. The hyperparameters (\({\mathbf {\sigma }}^2_{a_{i}},{\mathbf {\sigma }}^2_{e_{i}}\)) were assigned inverse-Gamma prior (0.5, 0.5) distributions and the dependency parameters (\(\kappa _{i,j},\alpha _{i,j}\)) were assumed to follow Gaussian distributions with mean 0 and variance 10. Thus we define the observation vector \(\mathbf {y}\) for the trivariate animal model as:

Here \({\mathbf {u}}={\mathbf {W}}_{a}{\mathbf {a}}\) is the additive genetic term and \({\varvec{\epsilon }}={\mathbf {W}}_{e}{\mathbf {e}}\) is the residual term. Moreover, the Cholesky factor

and

Here, \(\mathbf {a}_1,\mathbf {a}_2\) and \(\mathbf {a}_3\) are the additive effects for each trait on the reparametrized scale. Moreover, \(\mathbf {A}\) is the additive relationship matrix calculated from the pedigree information and \(\sigma ^2_{{a}_{i}}\), \(i = 1, 2, 3\), are the additive genetic variances for each trait on the reparametrized scale. Hence,

Here,

and

is the additive genetic covariance matrix for the traits on the transformed scale. Thus, the additive genetic effects (\({\mathbf {u}}={\mathbf {W}}_{a}{\mathbf {a}}\)) follow a multivariate normal distribution (Eq. 5) with a mean vector of zeros, \(\mathbf 0\), as:

Here, \(\mathbf {G}_{0}\) is the additive genetic (co)variance matrix. Similarly

Here we have independent error terms (\({\mathbf {e}}_i\)'s) for each trait, so the covariance matrix \(\mathbf {I}\) is an identity matrix. Hence, the residuals (\({\varvec{\epsilon }}\)) follow a multivariate normal distribution (Eq. 6) with a mean vector of zeros, \(\mathbf {0}\), as follows:

Here, \(\mathbf {R}_{0}\) is the residual (co)variance matrix.
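The relations described in this section can be summarized compactly. The display below is a reconstruction that agrees term by term with the back-transformation formulas given in the section "Back transformation in INLA"; it is not a copy of the original equations. The symbols \({\mathbf {L}}_{\kappa }\) and \({\mathbf {D}}_{a}\) are introduced here only for illustration.

```latex
\begin{aligned}
{\mathbf {L}}_{\kappa } &=
\begin{pmatrix}
1 & 0 & 0\\
\kappa _{1,2} & 1 & 0\\
\kappa _{1,3} & \kappa _{2,3} & 1
\end{pmatrix},
\qquad
{\mathbf {D}}_{a} = \mathrm{diag}(\sigma ^2_{a_1}, \sigma ^2_{a_2}, \sigma ^2_{a_3}),\\[4pt]
{\mathbf {u}} &= {\mathbf {W}}_{a}{\mathbf {a}}, \qquad
{\mathbf {W}}_{a} = {\mathbf {L}}_{\kappa } \otimes {\mathbf {I}}, \qquad
\mathrm{Var}({\mathbf {a}}) = {\mathbf {D}}_{a} \otimes {\mathbf {A}},\\[4pt]
\mathrm{Var}({\mathbf {u}}) &= ({\mathbf {L}}_{\kappa } \otimes {\mathbf {I}})({\mathbf {D}}_{a} \otimes {\mathbf {A}})({\mathbf {L}}_{\kappa } \otimes {\mathbf {I}})^{\prime }
= ({\mathbf {L}}_{\kappa }{\mathbf {D}}_{a}{\mathbf {L}}_{\kappa }^{\prime }) \otimes {\mathbf {A}}
= {\mathbf {G}}_{0} \otimes {\mathbf {A}},\\[4pt]
{\mathbf {G}}_{0} &=
\begin{pmatrix}
\sigma ^2_{a_1} & \kappa _{1,2}\sigma ^2_{a_1} & \kappa _{1,3}\sigma ^2_{a_1}\\
\kappa _{1,2}\sigma ^2_{a_1} & \kappa ^2_{1,2}\sigma ^2_{a_1} + \sigma ^2_{a_2} & \kappa _{1,2}\kappa _{1,3}\sigma ^2_{a_1} + \kappa _{2,3}\sigma ^2_{a_2}\\
\kappa _{1,3}\sigma ^2_{a_1} & \kappa _{1,2}\kappa _{1,3}\sigma ^2_{a_1} + \kappa _{2,3}\sigma ^2_{a_2} & \kappa ^2_{1,3}\sigma ^2_{a_1} + \kappa ^2_{2,3}\sigma ^2_{a_2} + \sigma ^2_{a_3}
\end{pmatrix}.
\end{aligned}
```

The residual side has the same form, with \(\alpha _{i,j}\) in place of \(\kappa _{i,j}\), \(\sigma ^2_{e_i}\) in place of \(\sigma ^2_{a_i}\) and \(\mathbf {I}\) in place of \(\mathbf {A}\), which gives \({\mathbf {R}}_{0} \otimes {\mathbf {I}}\).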

This method can be extended to more than three traits (say, \(n\) traits) by modifying the terms of Eq. (9), so that the additive genetic Cholesky factor \({\mathbf {W}_{a}}\) is the Kronecker product of an \(n\times n\) lower triangular matrix with \(\mathbf {I}\), and \({\mathbf {\Sigma }}_{{X}_{a}}\) is the additive genetic block matrix containing \(n\) blocks. The number of dependency parameters grows quadratically with the number of traits: for example, a \(4 \times 4\) Cholesky factor already requires \(n(n-1)/2=4\times 3/2=6\) dependency parameters.
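As an illustration of the extension to \(n\) traits, the following minimal Python sketch counts the dependency parameters and builds the trait-level covariance matrix from a unit lower-triangular factor and the reparametrized variances. It assumes the factor has ones on its diagonal, as implied by the three-trait back-transformation formulas. The function names and numerical values are hypothetical and are not part of the supplementary R scripts.

```python
def n_dependency_params(n_traits):
    """Number of free below-diagonal elements of an n x n unit lower-triangular factor."""
    return n_traits * (n_traits - 1) // 2


def covariance_from_factor(kappa, variances):
    """Return L D L' with L unit lower triangular and D = diag(variances).

    `kappa` maps (j, i) with j < i (1-based trait indices, as in kappa_{j,i})
    to the dependency of trait i on trait j.
    """
    n = len(variances)
    # Start from the identity and fill in the below-diagonal dependencies.
    L = [[1.0 if r == c else 0.0 for c in range(n)] for r in range(n)]
    for (j, i), value in kappa.items():
        L[i - 1][j - 1] = value
    # (L D L')[r][c] = sum_k L[r][k] * d_k * L[c][k]
    return [
        [sum(L[r][k] * variances[k] * L[c][k] for k in range(n)) for c in range(n)]
        for r in range(n)
    ]


# Hypothetical values for three traits (illustration only).
kappa_example = {(1, 2): 0.5, (1, 3): -0.2, (2, 3): 0.3}
G0_example = covariance_from_factor(kappa_example, [2.0, 1.0, 0.5])
```

Because the diagonal matrix has strictly positive entries and the factor has a unit diagonal, the resulting matrix is positive definite for any real values of the dependency parameters. This is why independent priors can be placed on them.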

The R scripts used for the INLA analysis are provided as supplementary material.

Back transformation in INLA

The INLA analysis returns the marginal posterior distributions of the hyperparameters (\({\mathbf {\sigma }}^2_{a_{i}}\)'s, \({\mathbf {\sigma }}^2_{e_{i}}\)'s) and the dependency parameters (\(\kappa _{i,j}\)'s, \(\alpha _{i,j}\)'s) for the reparametrized model (Eq. 7). One therefore needs to perform a back transformation after the INLA analysis in order to obtain the (co)variance components on the original scale. Let \({\mathbf {\sigma }}^2_{u_{i}}\) and \({\mathbf {\sigma }}^2_{\epsilon _{i}}\), where \(i = 1, 2, 3\), be the genetic and residual variance components, and let \({\mathbf {\sigma }}_{u_{ij}}\) and \({\mathbf {\sigma }}_{\epsilon _{ij}}\), where \(i,j = 1, 2, 3\) and \(i \ne j\), be the genetic and residual covariance components on the original scale. First, approximate the posterior marginal distributions of the hyperparameters and the dependency parameters by sampling from their joint posterior distribution using the ‘inla.hyperpar.sample’ function (Martins et al. 2013). Then, following Eq. (9), the genetic variance components can be calculated for each posterior sample as \({\mathbf {\sigma }}^2_{{u}_{1}}={\mathbf {\sigma }}^2_{{a}_{1}}\), \({\mathbf {\sigma }}^2_{{u}_{2}}=\kappa ^2_{1,2}\sigma ^2_{{a}_1} +{\mathbf {\sigma }}^2_{{a}_2}\) and \({\mathbf {\sigma }}^2_{{u}_{3}}=\kappa ^2_{1,3}\sigma ^2_{{a}_1}+\kappa ^2_{2,3}\sigma ^2_{{a}_2}+{\mathbf {\sigma }}^2_{{a}_3}\). Similarly, the genetic covariance components can be obtained as \({\mathbf {\sigma }}_{{u}_{12}}=\kappa _{1,2}\sigma ^2_{{a}_1}\), \({\mathbf {\sigma }}_{{u}_{13}}=\kappa _{1,3}\sigma ^2_{{a}_1}\) and \({\mathbf {\sigma }}_{u_{23}}=\kappa _{1,2}\kappa _{1,3}\sigma ^2_{{a}_1}+\kappa _{2,3}\sigma ^2_{{a}_2}\). The same procedure can be used to calculate the residual (co)variance components using Eq. (11), with the \(\alpha _{i,j}\)'s and \({\mathbf {\sigma }}^2_{e_{i}}\)'s in place of the \(\kappa _{i,j}\)'s and \({\mathbf {\sigma }}^2_{a_{i}}\)'s. R scripts for the back transformation can be found in the supplementary material.
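The back transformation above can be sketched in a few lines. The example below is written in Python rather than R and is not the authors' supplementary script. It assumes that the joint posterior samples have already been exported as plain numbers, and the dictionary layout, function names and sample values are hypothetical. Each sample is transformed separately and the transformed values are then summarized.

```python
from statistics import mean


def back_transform(sample):
    """Map one joint posterior sample from the reparametrized to the original scale.

    `sample["var_a"]` holds (sigma2_a1, sigma2_a2, sigma2_a3) and
    `sample["kappa"]` holds (kappa_12, kappa_13, kappa_23).
    """
    s1, s2, s3 = sample["var_a"]
    k12, k13, k23 = sample["kappa"]
    return {
        "var_u1": s1,
        "var_u2": k12 ** 2 * s1 + s2,
        "var_u3": k13 ** 2 * s1 + k23 ** 2 * s2 + s3,
        "cov_u12": k12 * s1,
        "cov_u13": k13 * s1,
        "cov_u23": k12 * k13 * s1 + k23 * s2,
    }


def posterior_means(samples):
    """Transform every sample first, then average on the original scale."""
    transformed = [back_transform(s) for s in samples]
    return {key: mean(t[key] for t in transformed) for key in transformed[0]}


# Two hypothetical posterior samples (illustration only).
samples = [
    {"var_a": (2.0, 1.0, 0.5), "kappa": (0.5, -0.2, 0.3)},
    {"var_a": (1.0, 1.0, 1.0), "kappa": (0.0, 0.0, 0.0)},
]
summary = posterior_means(samples)
```

Transforming sample by sample, rather than plugging posterior means of the reparametrized parameters into the formulas, matters because the (co)variance components are nonlinear functions of the dependency parameters.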

Example analyses

Simulated dataset with high heritability

To validate our new algorithm, we simulated a pedigree dataset. We considered a base population of 50 unrelated lines, in which each of 25 seed parents was mated with each of 25 pollen donors, resulting in 625 progeny (675 individuals in total, including the base population). The additive genetic relationship matrix (\(\mathbf {A}\)) was calculated from the pedigree information. We simulated three quantitative traits by summing the additive genetic effects \(\mathbf {a}\) and the noise \(\mathbf {e}\). Thus, the vector of phenotypic observations (three traits) was calculated as:

Here the vectors \(\mathbf {a}\), \(\mathbf {e}\) were drawn from \({\mathcal {MVN}}({\mathbf {0}},{\mathbf {G}}_{0}\otimes {\mathbf {A}})\) and \({\mathcal {MVN}}({\mathbf {0}},{\mathbf {R}}_{0}\otimes {\mathbf {I}})\), respectively. In order to simulate correlated traits with relatively high heritability (\(h^2 \geqslant 0.5\)), we used

as the genetic covariance matrix and the residual covariance matrix between the three traits. The three simulated traits had heritabilities of \(\approx 0.50\), 0.60 and 0.70, respectively. Let \({\mathbf {G}}={\mathbf {G}}_{0}\otimes {\mathbf {A}}\) and \({\mathbf {R}}={\mathbf {R}}_{0}\otimes {\mathbf {I}}\); we then used the Cholesky decompositions of the covariance matrices \(\mathbf {G}\) and \(\mathbf {R}\) to draw samples from the multivariate normal distributions. Hence, the random additive effect \(\mathbf {a}\) was calculated as \({\mathbf {a}}={\mathbf {P}}{\mathbf {z}}_{a}\), where \({\mathbf {z}}_{a}\sim {\mathcal {MVN}}(\mathbf {0},\mathbf {I})\) and \(\mathbf {P}\) is the Cholesky factor satisfying \({\mathbf {P}}{\mathbf {P}}^{\prime }={\mathbf {G}}\), whereas the residual \(\mathbf {e}\) was calculated as \({\mathbf {e}}={\mathbf {T}}{\mathbf {z}}_{e}\), where \({\mathbf {z}}_{e}\sim {\mathcal {MVN}}(\mathbf {0},\mathbf {I})\) and \(\mathbf {T}\) is the Cholesky factor satisfying \({\mathbf {T}}{\mathbf {T}}^{\prime }={\mathbf {R}}\).
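The simulation step described above (form the Kronecker product, take its Cholesky factor, multiply by standard normal draws) can be sketched as follows. This is a standard-library Python illustration, not the code used in the study. The matrices `G0` and `A` are small hypothetical inputs, not the covariance matrices or pedigree used in the paper, and the helper names are assumptions.

```python
import math
import random


def kron(a, b):
    """Kronecker product of two dense matrices given as lists of rows."""
    return [
        [a_val * b_val for a_val in a_row for b_val in b_row]
        for a_row in a
        for b_row in b
    ]


def cholesky(m):
    """Lower-triangular factor P with P P' = m (m symmetric positive definite)."""
    n = len(m)
    p = [[0.0] * n for _ in range(n)]
    for r in range(n):
        for c in range(r + 1):
            s = sum(p[r][k] * p[c][k] for k in range(c))
            if r == c:
                p[r][c] = math.sqrt(m[r][r] - s)
            else:
                p[r][c] = (m[r][c] - s) / p[c][c]
    return p


def draw_mvn(cov, rng):
    """Draw one sample from MVN(0, cov) as P z, with z ~ MVN(0, I)."""
    p = cholesky(cov)
    z = [rng.gauss(0.0, 1.0) for _ in cov]
    return [sum(p[r][c] * z[c] for c in range(r + 1)) for r in range(len(cov))]


# Hypothetical inputs: three traits and two individuals with relationship 0.5.
G0 = [[1.0, 0.5, 0.2], [0.5, 1.0, 0.3], [0.2, 0.3, 1.0]]
A = [[1.0, 0.5], [0.5, 1.0]]
rng = random.Random(1)
a = draw_mvn(kron(G0, A), rng)  # length 6, ordered trait by trait
```

With \({\mathbf {G}}_{0}\otimes {\mathbf {A}}\), the simulated vector is ordered trait by trait: the first block holds trait 1 for all individuals, the second block trait 2, and so on. A dense Cholesky factorization of the full Kronecker product is only practical for small problems. For the 675-individual pedigree one would factor \({\mathbf {G}}_{0}\) and \(\mathbf {A}\) separately, since the Kronecker product of their Cholesky factors is a Cholesky factor of \({\mathbf {G}}_{0}\otimes {\mathbf {A}}\).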

Simulated dataset with low heritability

We also analyzed another simulated dataset of correlated traits, with low heritability (\(h^2\approx 0.2\)) and negative covariances between the traits, in order to show how these methods perform when the heritability is relatively low. To simulate this dataset, we used the same pedigree information as for the high heritability dataset, but with different covariance matrices. For the simulation we considered

as the genetic and residual covariance matrices, respectively. The correlated phenotypes were simulated as explained before and the three traits had heritabilities \(\approx\)0.20, 0.20 and 0.22, respectively.

Field data

In our study we analyzed the recently published rice (Oryza sativa) dataset (Spindel et al. 2015) and selected three traits, grain yield (YLD), flowering time (FL) and plant height (PH), from the 2012 dry season for the analysis. The population was genotyped with 73,147 markers using the genotyping-by-sequencing method, and we selected 323 lines for which both phenotypic and genotypic information was available (see Spindel et al. 2015 for more details). We used the available marker information for the estimation of genetic (co)variance components; the realized genomic relationship matrix (\(\mathbf {M}\)) was obtained from the marker information using the R package ‘rrBLUP’ (Endelman 2011). Because the real data analysis used marker data instead of pedigree information, the vector of random effects (\(\mathbf {u}\)) in model (1) was assumed to follow a normal distribution according to Eq. (5) as

Here, \(\mathbf {M}\) is realized genomic relationship matrix calculated from the marker information and \(\mathbf {G}_{0}\) is the genetic (co)variance matrix.

Analyses and results

Simulated data with replicates

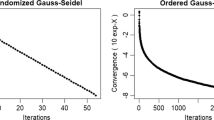

In multi-trait analysis using iterative algorithms, it is often difficult to find suitable starting values for the parameters of interest. However, suitable starting values for the variance components can be found by performing test runs on single-trait data. The (co)variance components were estimated using the MCMCglmm, R-INLA and ASReml-R packages. For the MCMC analysis using MCMCglmm, we considered a total chain length of 50,000 iterations with a burn-in period of 10,000 iterations. The MCMCglmm package assigns inverse-Wishart prior distributions to the random-effect and residual covariance matrices. In our MCMC analysis, we used the identity matrix (ones for the variances and zeros for the covariances) as the scale matrix of the prior distribution assigned to the genetic covariance matrix (\({\mathbf {G}}_{0}\)) and to the residual covariance matrix (\({\mathbf {R}}_{0}\)) between the three traits. Moreover, we set the degree of belief parameter (d) of the inverse-Wishart prior to 1. By default, MCMCglmm uses the scale matrix values as the starting values. For the REML analysis, we used ones for the variances and zeros for the covariances as the starting values for the genetic covariance matrix (\({\mathbf {G}}_{0}\)), whereas for the residual covariance matrix (\({\mathbf {R}}_{0}\)) we used half of the phenotypic variance matrix of the data as the initial values (the ASReml-R default). The total computation time for the simulated dataset was around 10 min with MCMCglmm, around 4 min with INLA and around 1 min with ASReml-R. The INLA approach used in the current study was not able to analyze bigger datasets (around 1000 lines), mainly due to the lack of memory on our system; we used a Linux system with 8 GB of RAM for our calculations. However, such large datasets could be analyzed on computers with more memory, or one could use the ‘inla.remote()’ option to run R-INLA on a remote server with more memory.

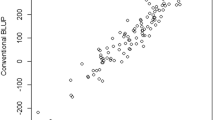

We used 50 simulation replicates of each simulated dataset to estimate the variance and covariance components with the different estimation methods. To compare the accuracy of the methods, we calculated the estimation error (the difference between the true and estimated values) of each (co)variance component in each replicate and summarized the errors as box plots. The box plots of the estimation errors for the variance (Fig. 1) and covariance (Fig. 2) components are shown for the simulated dataset with high heritability. The Y-axis in those plots corresponds to the difference between the true simulated values and the estimated values, whereas the X-axis corresponds to the different estimation methods. To calculate the estimation errors, we used the posterior mode for MCMC and the posterior mean for INLA. From Figs. 1 and 2, it can be concluded that the different methods provided similar estimates. We also plotted the estimation errors for the variance (Fig. 3) and covariance (Fig. 4) components for the dataset with low heritability. For the low heritability dataset, however, the MCMC and INLA approaches provided variance estimates closer to the true values than the REML method. The narrow-sense heritability estimates for the simulated datasets, based on the 50 simulation replicates, are shown in Table 4. We did not account for the covariances between the traits when calculating the heritability. The narrow-sense heritability (\(h^2\)) was calculated for each trait separately as \({h}^2 = V_a/(V_a+V_e)\), where \(V_a\) and \(V_e\) are the additive genetic variance and the error variance of the particular trait, respectively.
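The two summary quantities used in this comparison are simple enough to state as code. The following Python sketch uses hypothetical function names and made-up numbers. It computes the per-trait narrow-sense heritability and the per-replicate estimation errors, taken as true minus estimated following the definition in the text.

```python
def heritability(v_a, v_e):
    """Narrow-sense heritability of a single trait: V_a / (V_a + V_e)."""
    return v_a / (v_a + v_e)


def estimation_errors(true_value, estimates):
    """Estimation error per replicate: true value minus estimated value."""
    return [true_value - estimate for estimate in estimates]


# Hypothetical numbers (illustration only, not results from the study).
h2 = heritability(1.0, 4.0)
errors = estimation_errors(1.0, [0.5, 1.5, 1.0])
```

Errors centered on zero indicate an approximately unbiased method, and the spread of the box reflects the variability of the estimates across replicates.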

Fig. 1 Box plots for the estimation error (difference between the true and estimated values) of the variance components using 50 simulation replicates with the high heritability dataset. Here the Y-axis scale corresponds to the difference between the true simulated values and the estimated values, whereas the X-axis corresponds to different estimation methods

Fig. 2 Box plots for the estimation error (difference between the true and estimated values) of the covariance components using 50 simulation replicates with the high heritability dataset. Here the Y-axis scale corresponds to the difference between the true simulated values and the estimated values, whereas the X-axis corresponds to different estimation methods

Fig. 3 Box plots for the estimation error (difference between the true and estimated values) of the variance components using 50 simulation replicates with the low heritability dataset. Here the Y-axis scale corresponds to the difference between the true simulated values and the estimated values, whereas the X-axis corresponds to different estimation methods

Fig. 4 Box plots for the estimation error (difference between the true and estimated values) of the covariance components using 50 simulation replicates with the low heritability dataset. Here the Y-axis scale corresponds to the difference between the true simulated values and the estimated values, whereas the X-axis corresponds to different estimation methods

Additionally, instead of covariances, we report the estimated genetic and residual correlation coefficients together with their 95 % empirical confidence intervals (in brackets) in Table 1. Table 1 shows that, with the low heritability dataset, the Bayesian methods provided better estimates (closer to the true simulated values) of the additive genetic correlation coefficients than the REML approach. One probable reason is that the influence of the prior is greater with the low heritability dataset. We also performed univariate analyses using INLA with the simulated low heritability dataset (see Table 2). The univariate and multivariate analyses gave very similar variance estimates; however, only the multivariate analysis can account for and estimate the covariances between the traits.
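Converting (co)variance components into correlation coefficients uses the standard relation \(r_{ij} = \sigma _{ij}/\sqrt{\sigma ^2_{i}\,\sigma ^2_{j}}\). A minimal Python sketch follows; the function name and the example matrix are hypothetical.

```python
import math


def correlation_matrix(cov):
    """Convert a covariance matrix (list of rows) into a correlation matrix."""
    n = len(cov)
    return [
        [cov[i][j] / math.sqrt(cov[i][i] * cov[j][j]) for j in range(n)]
        for i in range(n)
    ]


# Hypothetical 2 x 2 genetic covariance matrix (illustration only).
corr = correlation_matrix([[4.0, -1.0], [-1.0, 1.0]])
```

In a Bayesian analysis this conversion would be applied to each posterior sample of the covariance matrix, so that the summaries refer to the correlation itself.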

Heritability and breeding values are of great interest to breeders planning an efficient breeding program. In our study, we also calculated the correlation coefficients between the estimated and true breeding values for the different estimation methods, averaged over the 50 simulation replicates of each dataset. For the high heritability dataset, the correlation coefficients were 0.85, 0.84 and 0.83 for the REML, MCMC and INLA methods, respectively. For the low heritability dataset, the correlation coefficients were lower: 0.64, 0.65 and 0.58 for REML, MCMC and INLA, respectively.
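The accuracy measure used here is a correlation coefficient between the true and estimated breeding values. The sketch below assumes this is the Pearson correlation, which the text does not state explicitly; the function name and the numbers are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical true and estimated breeding values (illustration only).
true_bv = [1.0, 2.0, 3.0, 4.0]
estimated_bv = [1.2, 1.9, 3.3, 3.8]
accuracy = pearson(true_bv, estimated_bv)
```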

Field data

For the REML analysis of the real data, we chose the same starting values as for the simulated data. For the MCMC analysis, we used the empirical phenotypic variance of each trait for the variances and zeros for the covariances in the scale matrix of the prior distribution, whereas the starting values of the other parameters were set randomly. Both REML and Bayesian methods gave similar results for the real dataset (Table 3). Because of numerical problems caused by the large differences among the traits’ phenotypic variances, we standardized each phenotypic vector to zero mean and unit variance before the INLA analysis, and rescaled the (co)variance components to the original scale after the analysis. For the MCMC and REML analyses, we used the original scale. Our results showed a negative genetic covariance between plant height (PH) and yield (YLD). Additionally, as expected, days to flowering (FL) and yield (YLD) showed a negative genetic covariance. We also calculated the narrow-sense heritability for both datasets; Table 4 summarizes those results. Our narrow-sense heritability estimates for the real dataset are in concordance with the estimates reported by Spindel et al. (2015) for the univariate animal model using REML. The total computation time for the real dataset was around three minutes with INLA, whereas MCMCglmm took around five hours. There are two main reasons for the long computation time with MCMCglmm. Firstly, the realized genomic relationship matrix was calculated outside the package, whereas for pedigree information MCMCglmm has built-in functions that handle the covariance matrix more efficiently. Secondly, the realized genomic relationship matrix obtained from the marker information is denser than the pedigree-based additive relationship matrix.
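The standardization and rescaling step can be illustrated as follows. If each trait is divided by its phenotypic standard deviation \(s_i\) before the analysis, a (co)variance component estimated on the standardized scale is returned to the original scale by multiplying element \((i, j)\) by \(s_i s_j\). The Python sketch below uses hypothetical function names and numbers, and assumes the sample standard deviation was used for scaling, which the text does not specify.

```python
from statistics import mean, stdev


def standardize(values):
    """Center to zero mean and scale to unit (sample) variance."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values], s


def rescale_covariance(cov_std, sds):
    """Map a covariance matrix from the standardized to the original scale."""
    n = len(sds)
    return [[cov_std[i][j] * sds[i] * sds[j] for j in range(n)] for i in range(n)]


# Hypothetical example with two traits (illustration only).
z, sd = standardize([1.0, 2.0, 3.0])
cov_original = rescale_covariance([[1.0, 0.5], [0.5, 1.0]], [2.0, 3.0])
```

Correlations and heritabilities are unaffected by this rescaling, since the scale factors cancel in both ratios.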

Discussion

Multi-trait analysis of mixed models tends to be powerful and to provide more accurate estimates than single-trait analysis, because it can take into account the underlying correlation structure of a multi-trait dataset. However, both Bayesian and non-Bayesian inference for multi-trait mixed models are complex and computationally demanding. In this study, we explained how to perform Bayesian inference of a multivariate animal model using the recently developed INLA and its counterpart MCMC, and compared the results with the commonly used REML estimates. Our results show that the reparametrization-based INLA approach can be used as a fast alternative to MCMC methods for the Bayesian inference of multivariate animal models. The reparametrization approach, applied here in the INLA analysis, can also be used more generally with other tools to speed up multi-trait animal model computations.

A drawback of the reparametrization-based approach is that priors are assigned to the elements of the Cholesky factor instead of the original covariance matrix. Thus, a direct comparison between the MCMC and INLA results is not possible here because of the differences in the prior distributions; the two approaches could be compared directly only if the same prior distributions were chosen. For the MCMC analysis we used inverse-Wishart distributions for the covariance matrices, whereas for INLA we used Gaussian prior distributions for the elements of the Cholesky factor (i.e., the dependency parameters \(\kappa _{ij}\)'s and \(\alpha _{ij}\)'s) and inverse-Gamma distributions for the decomposition variance components (\({\mathbf {\sigma }}^2_{a_{i}}\)'s, \({\mathbf {\sigma }}^2_{e_{i}}\)'s). Our results show that the REML estimates are in concordance with those of MCMCglmm and INLA. We want to emphasize that, in our examples, the analyzed data sets were large and we did not encounter any problems. In general, identifiability is a problem in mixed model analyses with small data (Mathew et al. 2012). However, Bayesian methods are in a better position because they can at least detect such problems (for example, a posterior distribution with multiple modes) more easily than REML, which provides a single point estimate.

Nowadays, molecular markers are widely used in animal and plant breeding programs as a valuable tool for genetic improvement. Therefore, we also showed how to estimate genetic parameters in a multivariate animal model using molecular marker information with the reparametrization-based INLA approach and frequentist framework. Finally, our results imply that the reparametrization-based INLA approach can be used as a fast alternative to MCMC methods in order to estimate genetic parameters with a multivariate animal model using pedigree information as well as with molecular marker information.

Author contribution statement

BM, AH, PK, JL and MS were involved in the conception and design of the study. BM performed the simulations and preprocessing of the data. BM and AH implemented the method, and performed the statistical analyses. BM drafted the manuscript. BM, AH, JL and MS participated in the interpretation of results. All the authors critically revised the manuscript.

References

Barnard J, McCulloch R, Meng XL (2000) Modeling covariance matrices in terms of standard deviations and correlations, with application to shrinkage. Stat Sin 10:1281–1312

Bauer A, Léon J (2008) Multiple-trait breeding values for parental selection in self-pollinating crops. Theor Appl Genet 116:235–242

Bauer AM, Reetz TC, Léon J (2006) Estimation of breeding values of inbred lines using best linear unbiased prediction (BLUP) and genetic similarities. Crop Sci 46:2685–2691

Blasco A (2001) The Bayesian controversy in animal breeding. J Anim Sci 79:2023–2046

Bøhn ED (2014) Modelling and inference for Bayesian bivariate animal models using Integrated Nested Laplace Approximations. Master’s thesis, Department of Mathematical Sciences, Norwegian University of Science and Technology, Trondheim, Norway. http://www.diva-portal.org/smash/get/diva2:730496/FULLTEXT01.pdf

Butler D, Cullis BR, Gilmour A, Gogel B (2007) ASReml-R reference manual. Queensland Department of Primary Industries and Fisheries, Brisbane

Cullis BR, Jefferson P, Thompson R, Smith AB (2014) Factor analytic and reduced animal models for the investigation of additive genotype-by-environment interaction in outcrossing plant species with application to a Pinus radiata breeding programme. Theor Appl Genet 127:2193–2210

Ducrocq V, Chapuis H (1997) Generalizing the use of the canonical transformation for the solution of multivariate mixed model equations. Genet Sel Evol 29:205–224

Endelman JB (2011) Ridge regression and other kernels for genomic selection with R package rrBLUP. Plant Genome 4:250–255

Gao H, Wu Y, Zhang T, Jiang L, Zhan J, Li J, Yang R (2015) Multiple-trait genome-wide association study based on principal component analysis for residual covariance matrix. Heredity 114:428–428

Hadfield JD (2010) MCMC methods for multi-response generalized linear mixed models: the MCMCglmm R package. J Stat Softw 33:1–22

Henderson C, Quaas R (1976) Multiple trait evaluation using relatives’ records. J Anim Sci 43:1188–1197

Holand AM, Steinsland I, Martino S, Jensen H (2013) Animal models and integrated nested Laplace approximations. G3 3:1241–1251

Korsgaard IR, Lund MS, Sorensen D, Gianola D, Madsen P, Jensen J et al (2003) Multivariate Bayesian analysis of Gaussian, right censored Gaussian, ordered categorical and binary traits using Gibbs sampling. Genet Sel Evol 35:159–184

Larsen CT, Holand AM, Jensen H, Steinsland I, Roulin A (2014) On estimation and identifiability issues of sex-linked inheritance with a case study of pigmentation in Swiss barn owl (Tyto alba). Ecol Evol 4:1555–1566

Lunn DJ, Thomas A, Best N, Spiegelhalter D (2000) WinBUGS—a Bayesian modelling framework: concepts, structure, and extensibility. Stat Comput 10:325–337

Lynch M, Walsh B (1998) Genetics and analysis of quantitative traits, vol 1. Sinauer, Sunderland

Martins TG, Simpson D, Lindgren F, Rue H (2013) Bayesian computing with INLA: new features. Comput Stat Data Anal 67:68–83

Mathew B, Bauer A, Koistinen P, Reetz T, Léon J, Sillanpää MJ (2012) Bayesian adaptive Markov chain Monte Carlo estimation of genetic parameters. Heredity 109:235–245

Mathew B, Léon J, Sillanpää MJ (2015) Integrated nested Laplace approximation inference and cross-validation to tune variance components in estimation of breeding value. Mol Breed 35:1–9

Meyer K (2009) Factor-analytic models for genotype × environment type problems and structured covariance matrices. Genet Sel Evol 41:1–21

Mrode R, Thompson R (2005) Linear models for the prediction of animal breeding values, 2nd edn. CABI Publishing, Oxfordshire

Oakey H, Verbyla A, Pitchford W, Cullis B, Kuchel H (2006) Joint modeling of additive and non-additive genetic line effects in single field trials. Theor Appl Genet 113:809–819

Patterson D, Thompson R (1971) Recovery of inter-block information when block sizes are unequal. Biometrika 58:545–554

Persson T, Andersson B (2004) Accuracy of single- and multiple-trait REML evaluation of data including non-random missing records. Silv Genet 53:135–138

Piepho HP (1997) Analyzing genotype-environment data by mixed models with multiplicative terms. Biometrics 53:761–766

Piepho HP (1998) Empirical best linear unbiased prediction in cultivar trials using factor-analytic variance-covariance structures. Theor Appl Genet 97:195–201

Piepho HP, Möhring J, Melchinger A, Büchse A (2008) BLUP for phenotypic selection in plant breeding and variety testing. Euphytica 161:209–228

Pourahmadi M (1999) Joint mean-covariance models with applications to longitudinal data: unconstrained parameterisation. Biometrika 86:677–690

Pourahmadi M (2000) Maximum likelihood estimation of generalised linear models for multivariate normal covariance matrix. Biometrika 87:425–435

Pourahmadi M (2011) Covariance estimation: the GLM and regularization perspectives. Stat Sci 26:369–387

Rue H, Martino S, Chopin N (2009) Approximate Bayesian inference for latent Gaussian models by using integrated nested Laplace approximations. J R Stat Soc B 71:319–392

Schaeffer LR (1984) Sire and cow evaluation under multiple trait models. J Dairy Sci 67:1567–1580

Sorensen D, Gianola D (2002) Likelihood, Bayesian and MCMC methods in quantitative genetics. Springer, New York

Spindel J, Begum H, Akdemir D, Virk P, Collard B, Redoña E, Atlin G, Jannink JL, McCouch SR (2015) Genomic selection and association mapping in rice Oryza sativa: effect of trait genetic architecture, training population composition, marker number and statistical model on accuracy of rice genomic selection in elite, tropical rice breeding lines. PLoS Genet 11:e1004982

Stan Development Team (2014) Stan modeling language users guide and reference manual. Version 2.7.0

Steinsland I, Jensen H (2010) Utilizing Gaussian Markov random field properties of Bayesian animal models. Biometrics 66:763–771

Thompson R, Meyer K (1986) A review of theoretical aspects in the estimation of breeding values for multi-trait selection. Livest Prod Sci 15:299–313

Van Tassell C, Van Vleck LD (1996) Multiple-trait Gibbs sampler for animal models: flexible programs for Bayesian and likelihood-based (co)variance component inference. J Anim Sci 74:2586–2597

Wang C, Rutledge J, Gianola D (1993) Marginal inferences about variance components in a mixed linear model using Gibbs sampling. Genet Sel Evol 25:1–22

Acknowledgments

We thank Jarrod D. Hadfield for helping us with the implementation of our model using the MCMCglmm package. We are also grateful to Håvard Rue and Ingelin Steinsland for answering questions about INLA. The authors would like to thank the editor and two anonymous reviewers, as well as Karin Woitol, for their suggestions and comments, which helped us to improve our manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Communicated by H. Iwata.

Petri Koistinen: Posthumous co-authorship.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Mathew, B., Holand, A.M., Koistinen, P. et al. Reparametrization-based estimation of genetic parameters in multi-trait animal model using Integrated Nested Laplace Approximation. Theor Appl Genet 129, 215–225 (2016). https://doi.org/10.1007/s00122-015-2622-x

DOI: https://doi.org/10.1007/s00122-015-2622-x