Abstract

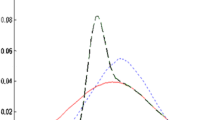

This paper investigates the effectiveness of factorial speech processing models in noise-robust automatic speech recognition. To this end, it proposes an idealistic approach for modeling the state-conditional observation distribution of factorial models based on weighted stereo samples. The approach extends single-pass retraining, previously used for ideal model compensation, to support multiple audio sources. Non-stationary noise can then be treated as one of these audio sources, with multiple states. Experiments on set A of the Aurora 2 dataset show that this treatment improves recognition performance. The improvement is significant in low signal-to-noise ratio conditions, reaching up to 4% absolute word recognition accuracy. Besides representing the state-conditional observation distribution accurately, the proposed method has an important advantage over previous methods: the feature spaces for the source features and for the corrupted features can be selected independently. This opens the way to seeking feature spaces better suited to noisy speech, independent of the clean speech features.

References

M. Afify, X. Cui, Y. Gao, Stereo-based stochastic mapping for robust speech recognition. IEEE Trans. Audio Speech Lang. Process. 17, 1325–1334 (2009)

J. Baker, L. Deng, J. Glass, S. Khudanpur, C. Lee, N. Morgan, D. O’Shaughnessy, Developments and directions in speech recognition and understanding, part 1 [DSP education]. IEEE Signal Process. Mag. 26, 75–80 (2009)

C.M. Bishop, Pattern Recognition and Machine Learning, vol. 4 (Springer, New York, 2007)

M. Brookes, Voicebox: Speech Processing Toolbox for MATLAB. (1997). http://www.ee.ic.ac.uk/hp/staff/dmb/voicebox/voicebox.html

L. Deng, J. Droppo, A. Acero, Enhancement of log Mel power spectra of speech using a phase-sensitive model of the acoustic environment and sequential estimation of the corrupting noise. IEEE Trans. Speech Audio Process. 12, 133–143 (2004)

P.S. Dwyer, Some applications of matrix derivatives in multivariate analysis. J. Am. Stat. Assoc. 62, 607–625 (1967)

B.J. Frey, L. Deng, A. Acero, T.T. Kristjansson, ALGONQUIN: iterating Laplace’s method to remove multiple types of acoustic distortion for robust speech recognition. Eurospeech 2001, 901–904 (2001)

M.J.F. Gales, Model-Based Techniques for Noise Robust Speech Recognition (University of Cambridge, Cambridge, 1995)

M.J.F. Gales, S.J. Young, Robust continuous speech recognition using parallel model combination. IEEE Trans. Speech Audio Process. 4, 352–359 (1996)

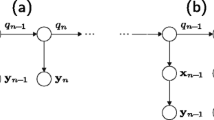

Z. Ghahramani, M.I. Jordan, Factorial hidden Markov models. Mach. Learn. 29, 245–273 (1997)

J.R. Hershey, S.J. Rennie, J. Le Roux, Factorial models for noise robust speech recognition, in Techniques for Noise Robustness in Automatic Speech Recognition, ed. by T. Virtanen, R. Singh, B. Raj (Wiley, New York, 2012), pp. 311–345. https://merl.com/publications/docs/TR2012-002.pdf

J.R. Hershey, S.J. Rennie, P.A. Olsen, T.T. Kristjansson, Super-human multi-talker speech recognition: a graphical modeling approach. Comput. Speech Lang. 24, 45–66 (2010)

H.G. Hirsch, D. Pearce, The Aurora experimental framework for the performance evaluation of speech recognition systems under noisy conditions, in ASR2000-Automatic Speech Recognition: Challenges for the New Millenium ISCA Tutorial and Research Workshop (ITRW) (2000)

V. Leutnant, R. Haeb-Umbach, An analytic derivation of a phase-sensitive observation model for noise robust speech recognition. Interspeech 2009, 2395–2398 (2009)

J. Li, L. Deng, Y. Gong, R. Haeb-Umbach, An overview of noise-robust automatic speech recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 22, 745–777 (2014)

J. Li, L. Deng, D. Yu, Y. Gong, A. Acero, A unified framework of HMM adaptation with joint compensation of additive and convolutive distortions. Comput. Speech Lang. 23, 389–405 (2009)

B. Logan, P.J. Moreno, Factorial Hidden Markov Models for Speech Recognition: Preliminary Experiments (Cambridge Research Laboratory, Cambridge, 1997)

D.J.C. Mackay, Introduction to Monte Carlo methods, in Learning in Graphical Models, vol. 89, ed. by M.I. Jordan (Springer, Dordrecht, 1998), pp. 175–204

M.H. Radfar, R.M. Dansereau, Single-channel speech separation using soft mask filtering. IEEE Trans. Audio Speech Lang. Process. 15, 2299–2310 (2007)

S.T. Roweis, Factorial models and refiltering for speech separation and denoising, in Eighth European Conference on Speech Communication and Technology (2003)

S.M. Siddiqi, G.J. Gordon, A.W. Moore, Fast state discovery for HMM model selection and learning, in International Conference on Artificial Intelligence and Statistics (2007), pp. 492–499

R.C. Van Dalen, Statistical Models for Noise-Robust Speech Recognition (University of Cambridge, Cambridge, 2011)

S.X. Wang, Maximum Weighted Likelihood Estimation (University of British Columbia, Vancouver, 2001)

S. Young, G. Evermann, D. Kershaw, G. Moore, J. Odell, D. Ollason, V. Valtchev, P. Woodland, The HTK Book, vol. 3 (Cambridge University Engineering Department, Cambridge, 2009)

Acknowledgments

The authors would like to thank Mohammad Ali Keyvanrad, Dr. Omid Naghshineh Arjmand, and Dr. Adel Mohammadpour for their valuable discussions and suggestions on this work.

Additional information

An erratum to this article is available at http://dx.doi.org/10.1007/s00034-016-0326-3.

Appendix: Extending the EM Algorithm for Modeling Mixture of Gaussians Based on Weighted Samples

In the E-step of the EM algorithm for weighted particles, the particle weights have no effect on the component responsibility equations. If each weight is interpreted as the number of times its particle is replicated (similar to (24)), the replication leaves the responsibility of every component for that particle unchanged. The component responsibilities are therefore computed from the old parameter set without regard to the particle weights, exactly as in the E-step of the standard EM algorithm for GMMs:
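For reference, the responsibility in its standard form is sketched below, reconstructed from the normalization constant stated next; the summation index \(j\) in the denominator is introduced here only to avoid a clash with \(k\).

```latex
\[
\gamma_{l}(k) =
\frac{\pi_{k}^{\prime}\,\mathcal{N}\left(\boldsymbol{y}_{l};\boldsymbol{\mu}_{k}^{\prime},\boldsymbol{\Sigma}_{k}^{\prime}\right)}
     {\sum_{j=1}^{K}\pi_{j}^{\prime}\,\mathcal{N}\left(\boldsymbol{y}_{l};\boldsymbol{\mu}_{j}^{\prime},\boldsymbol{\Sigma}_{j}^{\prime}\right)}
\]
```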

where the normalization constant is \(\mathop \sum \nolimits _{k=1}^K \pi _{k}^{\prime } \mathcal {N}\left( {\varvec{y}_{l} ;\varvec{\mu }_{k}^{\prime } , \varvec{\Sigma }_{k}^{\prime } } \right) \).

For the M-step, the following optimization problem must be solved:
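One plausible way to write this problem is the weighted expected complete-data log-likelihood, maximized under the constraint on the priors. The symbols \(w_{l}\) (weight of particle \(\boldsymbol{y}_{l}\)) and \(L\) (number of particles) are assumptions made for this sketch.

```latex
\[
\max_{\{\pi_{k},\boldsymbol{\mu}_{k},\boldsymbol{\Sigma}_{k}\}}
\sum_{l=1}^{L} w_{l} \sum_{k=1}^{K} \gamma_{l}(k)
\left[\ln \pi_{k} + \ln \mathcal{N}\left(\boldsymbol{y}_{l};\boldsymbol{\mu}_{k},\boldsymbol{\Sigma}_{k}\right)\right]
\quad \text{subject to} \quad \sum_{k=1}^{K}\pi_{k} = 1
\]
```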

Using the method of Lagrange multipliers to enforce the constraint on the component priors, we obtain the following objective function for optimization:
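A sketch of such an objective follows, assuming \(w_{l}\) denotes the weight of particle \(\boldsymbol{y}_{l}\) and \(L\) the number of particles (both assumed symbols). The sign with which \(\lambda\) enters is a convention chosen here; the opposite sign only flips the sign of \(\lambda\) in the later steps.

```latex
\[
g = \sum_{l=1}^{L} w_{l} \sum_{k=1}^{K} \gamma_{l}(k)
\left[\ln \pi_{k} + \ln \mathcal{N}\left(\boldsymbol{y}_{l};\boldsymbol{\mu}_{k},\boldsymbol{\Sigma}_{k}\right)\right]
+ \lambda\left(\sum_{k=1}^{K}\pi_{k} - 1\right)
\]
```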

Taking the derivative of g with respect to \(\varvec{\mu }_{k} \) results in:
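For a Gaussian component this derivative takes the familiar form below, sketched with \(w_{l}\) as the assumed symbol for the weight of particle \(\boldsymbol{y}_{l}\) and \(L\) as the assumed number of particles. Only the log-density term of g depends on \(\boldsymbol{\mu}_{k}\).

```latex
\[
\frac{\partial g}{\partial \boldsymbol{\mu}_{k}}
= \sum_{l=1}^{L} w_{l}\,\gamma_{l}(k)\,\boldsymbol{\Sigma}_{k}^{-1}\left(\boldsymbol{y}_{l}-\boldsymbol{\mu}_{k}\right)
\]
```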

Setting this derivative to zero readily yields the update (30) for \(\varvec{\mu }_{k} \). For estimating \(\varvec{\Sigma }_{k} \), according to [6] the derivative takes the following form:
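A hedged reconstruction of this derivative is given below, using the standard matrix-derivative identities for the Gaussian log-density and treating the entries of \(\boldsymbol{\Sigma}_{k}\) as unconstrained. The weight symbol \(w_{l}\) and the particle count \(L\) are assumptions of this sketch.

```latex
\[
\frac{\partial g}{\partial \boldsymbol{\Sigma}_{k}}
= \frac{1}{2}\sum_{l=1}^{L} w_{l}\,\gamma_{l}(k)
\left[\boldsymbol{\Sigma}_{k}^{-1}\left(\boldsymbol{y}_{l}-\boldsymbol{\mu}_{k}\right)\left(\boldsymbol{y}_{l}-\boldsymbol{\mu}_{k}\right)^{\mathsf{T}}\boldsymbol{\Sigma}_{k}^{-1}
- \boldsymbol{\Sigma}_{k}^{-1}\right]
\]
```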

in which \(\varvec{\mu }_{k} \) is the estimate given by (30). Setting the derivative to zero, we obtain:
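One form the resulting condition can take is sketched below. It follows from the zero-derivative condition after multiplying on the left and on the right by \(\boldsymbol{\Sigma}_{k}\); \(w_{l}\) (particle weight) and \(L\) (number of particles) are assumed symbols.

```latex
\[
\sum_{l=1}^{L} w_{l}\,\gamma_{l}(k)\left(\boldsymbol{y}_{l}-\boldsymbol{\mu}_{k}\right)\left(\boldsymbol{y}_{l}-\boldsymbol{\mu}_{k}\right)^{\mathsf{T}}
= \left(\sum_{l=1}^{L} w_{l}\,\gamma_{l}(k)\right)\boldsymbol{\Sigma}_{k}
\]
```

Dividing by the scalar on the right-hand side isolates \(\boldsymbol{\Sigma}_{k}\) as a weighted, responsibility-scaled scatter matrix.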

Then (31) is obtained for estimating \(\varvec{\Sigma }_{k} \); when the number of samples is large, there is no need to adjust the estimator for bias. Finally, for \(\pi _{k} \) we have:
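A sketch of this step is shown below, assuming the multiplier enters g as \(+\lambda\left(\sum_{k}\pi_{k}-1\right)\) and writing \(w_{l}\) for the weight of particle \(\boldsymbol{y}_{l}\) and \(L\) for the number of particles (assumed symbols). The tag follows the equation number used in the text.

```latex
\[
\frac{\partial g}{\partial \pi_{k}}
= \frac{1}{\pi_{k}}\sum_{l=1}^{L} w_{l}\,\gamma_{l}(k) + \lambda = 0
\quad\Longrightarrow\quad
\pi_{k} = -\frac{1}{\lambda}\sum_{l=1}^{L} w_{l}\,\gamma_{l}(k)
\tag{39}
\]
```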

Using the constraint \(\mathop \sum \nolimits _{k=1}^K \pi _{k} =1\) and noting that \(\gamma _{l} \left( k \right) \) is a valid conditional probability mass function, \(\lambda \) is calculated by:
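Under the same sign convention for \(\lambda\) (multiplier added to the objective), and with \(w_{l}\) and \(L\) as assumed symbols for the particle weights and their count, summing the prior expression over \(k\) gives the sketch below. It uses \(\sum_{k=1}^{K}\gamma_{l}(k)=1\) for every particle.

```latex
\[
1 = \sum_{k=1}^{K}\pi_{k}
= -\frac{1}{\lambda}\sum_{l=1}^{L} w_{l}\sum_{k=1}^{K}\gamma_{l}(k)
= -\frac{1}{\lambda}\sum_{l=1}^{L} w_{l}
\quad\Longrightarrow\quad
\lambda = -\sum_{l=1}^{L} w_{l}
\tag{40}
\]
```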

Substituting (40) into (39) eliminates \(\lambda \) and leads to (32) for updating \(\pi _{k} .\)
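The complete iteration can be sketched in code. The pure-Python example below is a minimal illustration restricted to univariate data, not the authors' implementation: the function names, the scalar-variance simplification, and the variance floor `var_floor` are all assumptions made here. It applies the unweighted E-step followed by M-step updates in which every statistic is scaled by the particle weight.

```python
import math


def normal_pdf(y, mean, var):
    """Density of the univariate Gaussian N(y; mean, var)."""
    return math.exp(-0.5 * (y - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def weighted_em_step(ys, ws, priors, means, variances, var_floor=1e-6):
    """One EM iteration for a 1-D GMM fitted to weighted particles."""
    K = len(priors)
    # E-step: responsibilities depend on the old parameters only, not on the weights.
    gammas = []
    for y in ys:
        joint = [priors[k] * normal_pdf(y, means[k], variances[k]) for k in range(K)]
        norm = sum(joint)  # normalization constant
        gammas.append([j / norm for j in joint])
    # M-step: each particle contributes its responsibility scaled by its weight.
    total_w = sum(ws)
    new_priors, new_means, new_vars = [], [], []
    for k in range(K):
        nk = sum(w * g[k] for w, g in zip(ws, gammas))
        mu = sum(w * g[k] * y for w, g, y in zip(ws, gammas, ys)) / nk
        var = sum(w * g[k] * (y - mu) ** 2 for w, g, y in zip(ws, gammas, ys)) / nk
        new_priors.append(nk / total_w)
        new_means.append(mu)
        new_vars.append(max(var, var_floor))  # floor is a safeguard added here
    return new_priors, new_means, new_vars


def fit_weighted_gmm(ys, ws, priors, means, variances, n_iter=10):
    """Run a fixed number of weighted EM iterations from the given start."""
    for _ in range(n_iter):
        priors, means, variances = weighted_em_step(ys, ws, priors, means, variances)
    return priors, means, variances
```

With integer weights, calling `fit_weighted_gmm(ys, ws, ...)` should agree, up to rounding, with fitting unit-weight copies of each particle repeated `ws[l]` times, which mirrors the replication argument used for the E-step.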

Cite this article

Khademian, M., Homayounpour, M.M. Modeling State-Conditional Observation Distribution Using Weighted Stereo Samples for Factorial Speech Processing Models. Circuits Syst Signal Process 36, 339–357 (2017). https://doi.org/10.1007/s00034-016-0310-y

DOI: https://doi.org/10.1007/s00034-016-0310-y