Summary

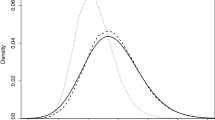

Independent measurements are taken from distinct populations which may differ in mean, in variance and in shape, for instance in the number of modes and the heaviness of the tails. Our goal is to characterize the differences between these populations. To avoid prejudging the nature of the heterogeneity, for instance by assuming a parametric form, and to reduce the loss of information incurred by calculating summary statistics, the observations are transformed to the empirical characteristic function (ECF). An eigendecomposition is applied to the ECFs to represent the populations as points in a low-dimensional space, and the optimal dimension is chosen by minimising a mean square error. These plots are naturally interpreted through the corresponding density estimates, obtained by inverting the ECF projected onto the reduced-dimension space. Simulated examples indicate the promise of the technique, and an application to the growth of Mirabilis plants is given.
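A minimal Python/NumPy sketch of the pipeline described above follows. It is not the authors' implementation: the grid of \(t\) values, the storage of each ECF as its real and imaginary parts, the weights \(n_i/n\), the omission of the variance standardization, and the helper names `ecf_pca` and `invert_ecf` are all illustrative assumptions. The choice of dimension by minimising the mean square error is not shown; \(q\) is fixed by hand.

```python
import numpy as np

def ecf(sample, t):
    """Empirical characteristic function m(t) = (1/n) sum_j exp(i t x_j) on the grid t."""
    return np.exp(1j * np.outer(t, sample)).mean(axis=1)

def ecf_pca(samples, t, q):
    """Hypothetical helper: represent each population's ECF on q principal axes.

    Each ECF is stored as the real vector [Re m(t), Im m(t)], and population i
    is weighted by its share n_i / n of the pooled sample size.
    Returns the q-dimensional coordinates, all eigenvalues (decreasing) and the
    ECFs reconstructed from the q retained axes.
    """
    sizes = np.array([len(s) for s in samples], dtype=float)
    w = sizes / sizes.sum()
    m = np.array([ecf(s, t) for s in samples])      # N x K complex ECFs
    X = np.hstack([m.real, m.imag])                 # N x 2K real features
    mean = w @ X                                    # weighted mean ECF
    Xc = X - mean                                   # centred ECFs
    M = Xc.T @ (w[:, None] * Xc)                    # weighted second-moment matrix
    vals, vecs = np.linalg.eigh(M)                  # eigh returns ascending order
    order = np.argsort(vals)[::-1]
    vals, vecs = vals[order], vecs[:, order]
    V = vecs[:, :q]
    scores = Xc @ V                                 # coordinates in the reduced space
    X_hat = mean + scores @ V.T                     # projection onto the q axes
    K = len(t)
    return scores, vals, X_hat[:, :K] + 1j * X_hat[:, K:]

def invert_ecf(m_t, t, x):
    """Riemann-sum inversion f(x) = (1/2pi) int exp(-itx) m(t) dt over the grid t.

    Truncating the integral to [t.min(), t.max()] smooths the estimate
    (a sinc kernel), so small negative values can appear.
    """
    dt = t[1] - t[0]
    integrand = np.exp(-1j * np.outer(x, t)) * m_t   # len(x) x len(t)
    return (integrand.sum(axis=1) * dt).real / (2 * np.pi)

# Illustrative data: two normal samples and a heavy-tailed t(3) sample.
rng = np.random.default_rng(0)
samples = [rng.normal(0.0, 1.0, 400), rng.normal(0.5, 1.0, 300), rng.standard_t(3, 500)]
t = np.linspace(-6, 6, 241)
scores, vals, m_hat = ecf_pca(samples, t, q=1)
x = np.linspace(-5, 5, 201)
density_1 = invert_ecf(m_hat[0], t, x)   # population 1, from the 1-D projection
```

With \(q = N - 1\) (here 2) the ECFs are reconstructed exactly, because \(N\) weighted-centred vectors span at most \(N - 1\) dimensions; smaller \(q\) gives the reduced representation whose inverse yields smoothed density estimates.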


References

Barry, J., and Diggle, P. (1995). Choosing the smoothing parameter in a Fourier approach to nonparametric deconvolution of a density estimate. Nonparametric Statistics, 4, 223–232.

Besse, P., Caussinus, H., Ferré, L., and Fine, J. (1986). Principal component analysis and optimisation of graphical displays. Statistics, 19, 2, 301–312.

Besse, P., and Ramsay, J. (1986). Principal component analysis of sample functions. Psychometrika, 51, 285–311.

Boularan, J., Ferré, L., and Vieu, P. (1995). Growth curves: a two stage nonparametric approach. J. Statistical Planning Inference, 38, 327–350.

Cardot, H., Ferraty, F., and Sarda, P. (1999). Functional linear model. Statist. Probab. Lett., 45, 11–22.

Caussinus, H., and Ferré, L. (1989). Analyse en composantes principales d’individus définis par les paramètres d’un modèle. Statistique et Analyse des Données, 14, 3, 19–28.

Caussinus, H., and Ferré, L. (1992). Comparing the parameters of a model for several units by means of principal components analysis. Comp. Stat. Data Anal., 13, 269–280.

Chiu, S.T. (1991). Bandwidth selection for kernel density estimation. Ann. Statist., 4, 1883–1905.

Chiu, S.T. (1996). A comparative review of bandwidth selection for kernel density estimation. Statistica Sinica, 6, 129–145.

Csorgo, S. (1981). Limit behavior of the empirical characteristic function. Ann. Prob., 9, 130–144.

Csorgo, S. (1985). Rates of the uniform convergence for the empirical characteristic function. Acta Sci. Math., 48, 97–102.

Diggle, P., and Hall, P. (1993). A Fourier approach to nonparametric deconvolution of a density estimate. J. R. Statist. Soc. B, 55, 2, 523–531.

Ferraty, F., and Vieu, P. (2002). The functional nonparametric model and application to spectrometric data. Computational Statistics, to be published.

Ferré, L., and Yao, A.F. (2000). Functional sliced inverse regression analysis. Preprint, Pub. Lab. Stat. Prob. Toulouse, LSP-2000–14.

Ferré, L. (1989). Choix de la dimension de représentation optimale pour certains types d’analyses en composantes principales. C.R. Acad. Sc. Paris, 309, Série I, 959–964.

Ferré, L. (1995). Improvement of some multivariate estimates by reduction of the dimensionality. J. Mult. Analysis, 54, 147–162.

Feuerverger, A., and Mureika, R.A. (1977). The empirical characteristic function and its applications. Ann. Statist., 5, 1, 88–97.

Fine, J. (1987). On the validity of the perturbation method in asymptotic theory. Statistics, 3, 401–414.

Gasser, T., and Kneip, A. (1991). Analysis of sample curves. In Nonparametric Functional Estimation and Related Topics (Ed. G. G. Roussas), Dordrecht: Kluwer, pp. 99–110.

Jones, M.C., and Rice, J. (1992). Displaying the important features of large collections of similar curves. Amer. Statist., 46, 140–145.

Kneip, A., and Utikal, K.J. (2001). Inference for density families using functional principal component analysis. J. Am. Statist. Ass., 96, 454, 519–532.

Mahiedine, A. (1993). Estimation de la fonction caractéristique. PhD Thesis, Université de Paris VI, Paris, France.

Préda, C., and Saporta, G. (2000). Régression PLS sur un processus stochastique. Pub. IRMA, Lille 2000, 40, VII.

Ramsay, J.O., and Silverman, B.W. (1997). Functional Data Analysis. Berlin: Springer.

Silverman, B.W., and Rice, J. (1991). Estimating the mean and covariance structure when data are curves. J. R. Statist. Soc. B, 53, 223–243.

Zhang, C.H. (1990). Fourier methods for estimating mixing densities and distributions. Ann. Math. Statist., 39, 1289–1302.

Acknowledgement

We are grateful to an Associate Editor and the referees for comments which have substantially improved this paper.


Appendices

Appendix A: The expression for the MSE

Recall from the definitions given in Section 2 above that \(m\) is the ECF, the superscript \(c\), as in \(m^c\), denotes the centred version, and the tilde \(\tilde{m}\) denotes the version standardized by the variance matrix. We suppose that \(n_i\) and \(n\) grow at the same rate. From (4) and the strong law of large numbers we have the a.s. expansion \(\tilde{m}_i(t)=\psi_i(t)+n^{-\frac{1}{2}}e_i(t)\), with error \(e_i(t)=O(\sqrt{\ln\ln n})\).
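The expansion relies on the ECF converging to its population counterpart at rate \(n^{-1/2}\), up to the \(\sqrt{\ln\ln n}\) factor in the almost-sure bound. The sketch below is an illustration under assumed settings rather than part of the derivation: it checks this behaviour for the raw (unstandardized) ECF of standard normal samples, whose exact characteristic function is \(e^{-t^2/2}\). The grid and the sample sizes are arbitrary choices.

```python
import numpy as np

def ecf(sample, t):
    """Empirical characteristic function on the grid t."""
    return np.exp(1j * np.outer(t, sample)).mean(axis=1)

rng = np.random.default_rng(1)
t = np.linspace(-3, 3, 61)
cf = np.exp(-t**2 / 2)                 # exact characteristic function of N(0, 1)
sup_error = {}
for n in (100, 1_000, 10_000):
    x = rng.standard_normal(n)
    sup_error[n] = np.max(np.abs(ecf(x, t) - cf))
    # sqrt(n) * sup_error should stay of order one if the error is O(n^{-1/2})
    print(f"n={n:6d}  sup|m - phi|={sup_error[n]:.4f}  sqrt(n)*sup={np.sqrt(n) * sup_error[n]:.2f}")
```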

We can express (7) as

Equation (13) allows the use of perturbation theory for linear operators under non-analytic perturbations (for an exposition see Fine, 1987), which leads to expressions for the eigenelements of \(M\) as functions of those of \(\Psi\), where \(\Psi=\sum\nolimits_{i=1}^N\frac{n_i}{n}\psi_i^c(t)\psi_i^c(t)'\). Denote by \(\lambda_j\) the eigenvalues of \(\Psi\), arranged in decreasing order and assumed to be distinct, and by \(P_j\) the orthogonal eigenprojector associated with \(\lambda_j\); correspondingly, \(\widehat{\lambda}_j\) and \(\widehat{P}_j\) denote the eigenvalues and eigenprojectors of \(M\).
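A small sketch of \(\Psi\), its eigenvalues in decreasing order and the eigenprojectors \(P_j = u_j u_j'\) follows. It assumes the \(\psi_i^c\) are real vectors (for instance real and imaginary parts stacked on a grid of \(t\) values) and uses made-up inputs; the function name `eigen_elements` is hypothetical.

```python
import numpy as np

def eigen_elements(psi_c, sizes):
    """Psi = sum_i (n_i / n) psi_i psi_i', its eigenvalues in decreasing order
    and the orthogonal eigenprojectors P_j = u_j u_j' (real vectors assumed)."""
    w = np.asarray(sizes, dtype=float) / np.sum(sizes)
    Psi = sum(wi * np.outer(p, p) for wi, p in zip(w, psi_c))
    vals, vecs = np.linalg.eigh(Psi)
    order = np.argsort(vals)[::-1]          # eigh sorts ascending; reverse it
    vals, vecs = vals[order], vecs[:, order]
    projectors = [np.outer(u, u) for u in vecs.T]
    return Psi, vals, projectors

# Illustrative input: N = 4 populations, vectors of length 6 (hypothetical values).
rng = np.random.default_rng(3)
sizes = np.array([50, 80, 120, 60])
psi = rng.standard_normal((4, 6))
psi_c = psi - (sizes / sizes.sum()) @ psi   # centre with the weights n_i / n
Psi, lam, P = eigen_elements(psi_c, sizes)
```

Because the \(\psi_i^c\) are centred with the weights \(n_i/n\), \(\Psi\) has rank at most \(N-1\), so only the first \(N-1\) eigenvalues can be non-zero.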

We have the following a.s. expansions for \(j = 1, \dots, p\):

and

where

and where \([A]^-\) denotes the Moore–Penrose generalized inverse of \(A\).
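The expansions themselves are not reproduced in this version. For orientation only, the standard first-order perturbation formulas for a symmetric matrix \(\Psi\) with distinct eigenvalues, perturbed by a small symmetric \(H\), are \(\widehat{\lambda}_j \approx \lambda_j + \operatorname{tr}(P_j H)\) and \(\widehat{P}_j \approx P_j + [\lambda_j I - \Psi]^- H P_j + P_j H [\lambda_j I - \Psi]^-\), where the Moore–Penrose inverse equals \(\sum_{k\neq j} P_k/(\lambda_j-\lambda_k)\). The NumPy sketch below checks these textbook formulas on an arbitrary 3 × 3 example; they may differ in detail from the expansions used in the paper.

```python
import numpy as np

def sorted_eigh(A):
    """Eigenvalues (decreasing) and eigenvectors of a symmetric matrix."""
    vals, vecs = np.linalg.eigh(A)
    order = np.argsort(vals)[::-1]
    return vals[order], vecs[:, order]

def first_order(Psi, H, j):
    """First-order approximations to the j-th eigenvalue and eigenprojector of Psi + H."""
    vals, vecs = sorted_eigh(Psi)
    P = np.outer(vecs[:, j], vecs[:, j])
    # Moore-Penrose inverse of (lambda_j I - Psi) = sum_{k != j} P_k / (lambda_j - lambda_k)
    S = np.linalg.pinv(vals[j] * np.eye(len(Psi)) - Psi)
    lam_1 = vals[j] + np.trace(P @ H)
    P_1 = P + S @ H @ P + P @ H @ S
    return lam_1, P_1

rng = np.random.default_rng(2)
Psi = np.diag([5.0, 3.0, 1.0])              # distinct eigenvalues, as assumed
G = rng.standard_normal((3, 3))
H = 1e-4 * (G + G.T) / 2                    # small symmetric perturbation
lam_1, P_1 = first_order(Psi, H, 0)
vals_hat, vecs_hat = sorted_eigh(Psi + H)   # exact eigen elements of Psi + H
P_hat = np.outer(vecs_hat[:, 0], vecs_hat[:, 0])
# Both discrepancies are second order in the size of H.
print(abs(vals_hat[0] - lam_1), np.linalg.norm(P_hat - P_1))
```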

Consequently

Now, for \(l = 1, \dots, p\),

At the price of simple, but tedious, calculations using equations (14) to (18)

Furthermore

and

For any Hermitian matrices A and B and for any centred random matrix U,

where \(\otimes\) denotes the Kronecker product of matrices. When expanding \(\mathrm{MSE}_l\), this property is applied with \(A = P_k\) and \(B = P_j\), for \(k \neq j\), and with \(U=\psi_i^c(t)e_i^c(t)' + e_i^c(t)\psi_i^c(t)'\). Note also
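The property stated for Hermitian \(A\), \(B\) and a centred random matrix \(U\) is not reproduced in this version of the text. As an illustration only, here is a standard identity of this kind for real symmetric matrices (the real case of Hermitian): \(\operatorname{tr}(AUBU') = \operatorname{vec}(U)'(B'\otimes A)\operatorname{vec}(U)\), so that \(E\operatorname{tr}(AUBU') = \operatorname{tr}\{(B'\otimes A)\,E[\operatorname{vec}(U)\operatorname{vec}(U)']\}\). Whether this is exactly the form used in the paper is an assumption. The sketch verifies both statements with NumPy, building \(U\) from random signs so that the expectation can be computed exactly.

```python
import numpy as np
from itertools import product

def vec(U):
    """Stack the columns of U into one vector."""
    return U.reshape(-1, order="F")

rng = np.random.default_rng(4)
p = 4
A = rng.standard_normal((p, p))
A = (A + A.T) / 2                            # real symmetric (Hermitian)
B = rng.standard_normal((p, p))
B = (B + B.T) / 2
U1, U2 = rng.standard_normal((2, p, p))

# Deterministic identity: tr(A U B U') = vec(U)' (B' kron A) vec(U)
U = U1 - 0.3 * U2
lhs = np.trace(A @ U @ B @ U.T)
rhs = vec(U) @ np.kron(B.T, A) @ vec(U)

# Centred random U = z1 U1 + z2 U2 with independent signs z_k = +/-1 (equally likely):
# E[vec U vec U'] = vec U1 vec U1' + vec U2 vec U2', and averaging over the four
# sign patterns gives E tr(A U B U') exactly.
expected_lhs = np.mean([np.trace(A @ (z1 * U1 + z2 * U2) @ B @ (z1 * U1 + z2 * U2).T)
                        for z1, z2 in product((-1, 1), repeat=2)])
cov_vec = np.outer(vec(U1), vec(U1)) + np.outer(vec(U2), vec(U2))
expected_rhs = np.trace(np.kron(B.T, A) @ cov_vec)
print(np.isclose(lhs, rhs), np.isclose(expected_lhs, expected_rhs))
```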

Finally the following result is obtained

The estimator is obtained by replacing the unknown parameters by their estimates and by taking into account the bias of the estimators of the eigenvalues, given by:

Finally it follows that

Appendix B: The parameters of the mixture example

The mixtures in the simulation example of Section 3.3 have at most three components. Each mixture is defined by the three component means, standard deviations and mixture probabilities.
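The parameter table itself is not reproduced here. The sketch below shows how such a mixture can be simulated and how its density and characteristic function \(\sum_k p_k \exp(i t \mu_k - \sigma_k^2 t^2/2)\) can be evaluated and compared with the ECF. The parameter values are hypothetical, not those of the paper, and the function names are illustrative.

```python
import numpy as np

def sample_mixture(n, means, sds, probs, rng):
    """Draw n observations from a normal mixture with at most three components."""
    comp = rng.choice(len(probs), size=n, p=probs)          # component labels
    return rng.normal(np.asarray(means)[comp], np.asarray(sds)[comp])

def mixture_pdf(x, means, sds, probs):
    """Density sum_k p_k N(x; mu_k, sigma_k^2)."""
    x = np.asarray(x)[:, None]
    mu, sd, p = (np.asarray(a, dtype=float) for a in (means, sds, probs))
    return (p * np.exp(-0.5 * ((x - mu) / sd) ** 2) / (sd * np.sqrt(2 * np.pi))).sum(axis=1)

def mixture_cf(t, means, sds, probs):
    """Characteristic function sum_k p_k exp(i t mu_k - sigma_k^2 t^2 / 2)."""
    t = np.asarray(t)[:, None]
    mu, sd, p = (np.asarray(a, dtype=float) for a in (means, sds, probs))
    return (p * np.exp(1j * t * mu - 0.5 * (sd * t) ** 2)).sum(axis=1)

def ecf(sample, t):
    """Empirical characteristic function on the grid t."""
    return np.exp(1j * np.outer(t, sample)).mean(axis=1)

# Hypothetical parameters, not the values used in the paper.
means, sds, probs = (-2.0, 0.0, 2.5), (0.5, 1.0, 0.7), (0.3, 0.5, 0.2)
rng = np.random.default_rng(5)
x_obs = sample_mixture(20_000, means, sds, probs, rng)
t = np.linspace(-4, 4, 81)
# Largest gap on the grid between the ECF and the exact characteristic function
gap = np.max(np.abs(ecf(x_obs, t) - mixture_cf(t, means, sds, probs)))
```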


About this article

Cite this article

Ferré, L., Whittaker, J. Application of the empirical characteristic function to compare and estimate densities by pooling information. Computational Statistics 19, 169–192 (2004). https://doi.org/10.1007/BF02892055

