Abstract

Climate change assessments rely upon scenarios of socioeconomic developments to conceptualize alternative outcomes for global greenhouse gas emissions. These scenarios are used in conjunction with climate models to make projections of future climate. Specifically, greenhouse gas emission estimates derived from socioeconomic scenarios constrain the temperature, precipitation, and other outcomes of climate models. Traditionally, the fundamental logic of the socioeconomic scenarios (that is, the logic that makes them plausible) has been developed and prioritized using methods that are highly subjective. This introduces a fundamental challenge for climate change assessment: the veracity of projections of future climate currently rests on subjective ground. We elaborate on these subjective aspects of scenarios in climate change research. We then consider an alternative method for developing scenarios, a systems dynamics approach called ‘Cross-Impact Balance’ (CIB) analysis. We discuss notions of ‘objective’ and ‘objectivity’ as criteria for distinguishing appropriate scenario methods for climate change research. We distinguish seven distinct meanings of ‘objective’ and demonstrate that CIB analysis is more objective than traditional subjective approaches. However, we also consider criticisms concerning which of the seven meanings of ‘objective’ are appropriate for scenario work. Finally, we arrive at conclusions regarding which meanings of ‘objective’ and ‘objectivity’ are relevant for climate change research. Because scientific assessments uncover knowledge relevant to the responses of a real, independently existing climate system, the scenario methodologies employed in such studies must also uphold the seven meanings of ‘objective’ and ‘objectivity.’

1 Introduction

Philosophy of science can make contributions to climate science. Here we do so by inspecting and evaluating alternative methods for producing the scenarios used to project future climate and, potentially, the impacts of a changing climate (Winsberg 2010; Douglas 2007, 2008). The United Nations’ Intergovernmental Panel on Climate Change (IPCC) serves as the authoritative body for assessing and synthesizing interdisciplinary research on climate change. In periodic Assessment Reports, the IPCC provides a full picture of timely scientific findings, including human influences on the climate system anticipated over the twenty-first century. For such work, the IPCC assessment process needs scenarios of potential socioeconomic developments around the globe, which are used in conjunction with various climate model runs to make projections of future climate (Hibbard et al. 2007). Socioeconomic scenarios may be thought of as possible future states of the world; among other things, they provide estimates of greenhouse gas emissions that constrain the temperature, precipitation, and other outcomes of climate models. More specifically, scenarios, which include factors like population size, technological capacities, and energy use, are used to project emissions of greenhouse gases such as carbon dioxide, methane, and ozone, which are then fed into climate models as inputs. Different scenarios (for example, ones that differ in India’s population growth and technological level) could yield very different emission outcomes for a target year such as 2050; these would serve as contrasting greenhouse gas inputs to the climate models, yielding a higher or lower projected temperature for 2050.

The description above shows that developing and using scenarios in the IPCC assessment process requires input from many experts spanning multiple fields. IPCC Assessment Reports are thus a massive exercise in social knowledge building, of which scenarios are only one part. These scenarios are vetted by many experts and are subject to the rigors of economic and earth system modeling. However, since the late 1990s, the fundamental logic of the scenarios (that is, the logic that makes them plausible) has been developed and prioritized under conditions that are subjective. This introduces a fundamental challenge for IPCC assessment: the veracity of projections of future climate currently rests on very subjective ground. In this paper, we examine the foundation of these scenarios and compare two methods of drawing on a diversity of expert judgment to develop them: group consensus building (Intuitive Logics) and the elicitation of independent experts (Cross-Impact Balances). Through this comparison, we arrive at an evaluation of these different exercises in social knowledge building for scenarios used in scientific assessments. We note that we are not evaluating the validity of different methods of building scenarios overall, but strictly in relation to the needs of scientific assessments such as those undertaken by the IPCC. Such assessments are boundary objects at the science-policy interface, where scientists aim to convey to decision-makers the major conclusions of scientific research that has policy implications. Our arguments do not address the validity of scenario-building methods in other contexts, such as business planning or military strategizing.
Because of the critical linking role that socioeconomic scenarios play in the IPCC context, we believe that the specification of these scenarios should be subject to standards of rigor and objectivity similar to those expected of conventional scientific studies.

This paper is organized into eight parts. First, we introduce the background for building scenarios in climate change research; second, we describe the steps necessary for any method that develops such scenarios. Third, we elaborate on the subjective (i.e., qualitative or narrative) aspects of scenarios in climate change research, and we introduce and discuss the dominant method for developing these qualitative scenarios, a group consensus approach called ‘Intuitive Logics.’ Fourth, we turn to an alternative method called ‘Cross-Impact Balance’ (CIB) analysis. Fifth, we discuss objectivity as a criterion for distinguishing superior qualitative scenario generation methods for scientific assessments of climate change. Sixth, we examine a defense of Intuitive Logics in this context. Seventh, we weigh the various claims for objective and subjective qualities of scenarios for scientific assessments such as those undertaken by the IPCC. Finally, we draw conclusions about which scenario methodology should be preferred.

1.1 Socioeconomic scenarios in climate change research

Climate change is an intertemporal problem of a coupled human and natural system, on such a scale that conventional tools for policy analysis that are appropriate on shorter time frames, such as extrapolating future trends from historical data, fail (Morgan et al. 1999). Instead, scenarios depicting highly contrasted possibilities for the future (that is, very different from each other and from the present) are more appropriate for understanding the implications of climate change. Historically, alternative scenarios of greenhouse gas emissions due to human activities were tightly coupled to climate model simulations (Girod et al. 2009). However, as models specializing in socioeconomic change, so-called integrated assessment (IA) models, have matured, a division of labor has emerged between IA and general circulation modeling (Hibbard et al. 2007). IA models now explicitly treat socioeconomic change, while general circulation models explicitly treat the responses of the climate system.

Scientific theories and laws enable scientists to predict or anticipate outcomes in the world given some set of working assumptions. Since the First Assessment Report of the IPCC, IA model results have served as the working assumptions for general circulation models (Hibbard et al. 2007). However, IA models also require working assumptions to arrive at their results. In preparation for the Third Assessment Report, the IPCC commissioned a Special Report on Emissions Scenarios (Nakicenovic et al. 2000). This report demonstrated a technique, known as the ‘Story and Simulation’ approach (Alcamo 2001, 2008), for selecting input assumptions for IA models, e.g., those representing future states of population, energy use, and economic growth. Storylines qualitatively describe alternative futures (or scenarios) in narrative form, while ‘simulation’ refers to an IA model that quantifies those descriptions (Carter et al. 2007; Raskin et al. 2005; Rounsevell and Metzger 2010). The scenario is considered the conjoined product of the story and the simulation. In this paper, we focus on the techniques used to construct one part of these conjoined products: the stories, that is, the qualitative descriptions of a scenario.

In general, these storylines, and the socioeconomic scenarios they produce, face two fundamental challenges: (1) demonstrating internal consistency and (2) ensuring that a small set of selected scenarios is sufficiently comprehensive, that is, that important possible futures have not been left out because they were unimagined or subjectively deemed undesirable for further investigation (Schweizer and Kriegler 2012). Different schools of thought pertaining to scenarios address these challenges in different ways (Bradfield et al. 2005). In this paper, we critically examine two methods that could be used to develop the storyline (or narrative) components of scenarios for climate change research. We first treat the dominant school employed in environmental change assessments and used for scenarios for IPCC assessments, which is Intuitive Logics (EEA 2009; Rounsevell and Metzger 2010). Second, we treat a newer method for developing narratives, CIB analysis (Weimer-Jehle 2006). It should be noted that for global socioeconomic scenarios developed for IPCC assessments, only three methods have been demonstrated for developing storylines: Intuitive Logics (Nakicenovic et al. 2000), CIB analysis (Schweizer and Kriegler 2012; Schweizer and O’Neill 2013), and scenario discovery (Rozenberg et al. 2013). We focus on the CIB method because it can directly substitute for, and improve upon, Intuitive Logics (Kosow 2011), the reigning approach to developing scenarios discussed by the IPCC. In contrast, scenario discovery (Lempert 2012) differs in important ways from both Intuitive Logics and CIB, which makes a discussion of its strengths and limitations tangential to the discussions of objectivity elaborated below.

We focus on the climate change case because, among global change research fields (e.g., land use, water, biodiversity), climate is the most mature. It has set an example for coordinated international science-policy research in other fields, including for the development and use of scenarios. Additionally, it is the only global change field that produces regular Assessment Reports (Clark 2013), and as a result it has developed a rather sophisticated view of scenarios in many respects, which we discuss in Sect. 7.

2 Necessary steps for developing storyline scenarios

Regardless of the method used to develop them, and regardless of how detailed the scenarios are, all storyline aspects of scenarios describe alternative plausible contexts for possible future change. Thus all storyline scenarios require the specification of scenario characteristics (also called variables, driving forces, factors, or elements) as well as alternative outcomes for these characteristics. The storyline, or narrative, describes why the particulars of the scenario should be considered plausible, such as the interrelation between scenario variables.

In both Intuitive Logics and CIB analysis, scenario authors exercise judgment in selecting scenario variables, selecting alternative outcomes for the variables, and describing how the outcomes and variables are interrelated, if at all. The main differences between the methods lie in how the judgments about variables, their outcomes, and their interrelations are recorded and processed. Intuitive Logics methods approach these issues holistically, while CIB analysis approaches them formally (Tietje 2005), mechanically (Dawes 2001), or analytically. In turn, these differences affect how plausible scenarios are identified. Moreover, differences in the elicitation of judgments, information processing, and the selection of plausible scenarios translate into different orientations toward confronting uncertainty. These differences also bear implications for objectivity and, ultimately, for the appropriateness of these methods for developing scenarios for scientific assessments.

3 The traditional scenario-building approach: the Intuitive Logics method

3.1 Introduction to Intuitive Logics

Intuitive Logics approaches were developed in think tanks such as SRI International and RAND for war games (Aligica 2004) and in corporations such as General Electric and Shell for business planning (Millett 2009; Wack 1985). Intuitive Logics are still widely used for scenario studies, including environmental change assessments (Bishop et al. 2007; EEA 2009; Rounsevell and Metzger 2010). They involve gathering experts who presumably know the situation or issue best (Ogilvy and Schwartz 1998; Schweizer 2010). The basic idea is to assemble a group of people with expertise in fields believed to be relevant and to have the group meet and engage in what Jungermann and Thüring called ‘disciplined intuition’ (quoted in Bradfield et al. 2005, p. 806) to arrive at a handful of distinct stories (Wilson 1998 cited in Mietzner and Reger 2004, p. 59).

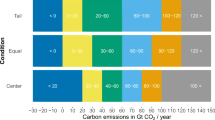

The ‘discipline’ of this method follows a very basic three-step recipe. As a group, scenario authors (i) brainstorm and discuss key uncertainties and the ‘driving forces’ behind alternative outcomes for the future. (ii) From this brainstorm, the group prioritizes two key uncertainties and assigns two polar outcomes to each, such as ‘strong’ and ‘weak.’ This results in four distinct futures, as shown in Fig. 1. (iii) Stories for these alternative futures are then fleshed out in a focus group setting, where the group is free to elaborate on as many scenario variables as desired; however, the outcomes of scenario variables are constrained by the quadrant in which the scenario is placed, because the polar outcomes of the two key uncertainties are fixed. Additionally, Intuitive Logics resist assigning likelihood estimates to the scenarios (Millett 2009): by convention, Intuitive Logics scenarios are presented as equally plausible, with no comment on their respective likelihoods. In the scenarios used in past IPCC reports, the two key uncertainties identified were global trends in economic and political organization (e.g., globalization) and global trends in the priority of development policy (Nakicenovic et al. 2000). The polar outcomes for economic and political organization were high globalization versus protectionism (regionalism), while for development policy they were conventional economic development versus sustainable development.

In the IPCC case, once these two driving forces were identified, the scenario authors, working as a group, used the four scenario types as a backdrop for elaborating global trends in population growth, economic growth, the energy intensity of economies, the carbon intensity of primary energy sources, the availability of fossil fuels, and changes in land use. As noted previously, the specific outcomes for these additional scenario variables were determined by the larger contexts of the future worlds established by Intuitive Logics. For example, the scenario authors decided that plausible scenarios for the A2 world would describe a future with high energy and carbon intensity due to continued reliance on cheap fossil fuels under conventional development. A2 also depicts slower global economic growth due to a reversal of globalization, which results in high global population growth. In contrast, the scenario authors decided that plausible scenarios for the B1 world would describe a future with low energy and carbon intensity due to a shift away from fossil fuels under sustainable development. B1 also depicts high economic growth due to globalization, which results in low global population growth.
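Step (ii) amounts to taking the Cartesian product of two binary outcomes, so the number of futures is fixed at 2 × 2 = 4 before any other scenario variable is considered. The minimal Python sketch below illustrates this, assuming the polar outcomes named above. The A/B and 1/2 labels follow the naming of the SRES scenario families (A1, A2, B1, B2), of which only A2 and B1 are described here; all identifiers are hypothetical.

```python
from itertools import product

# The two key uncertainties and their polar outcomes, as in the SRES example.
KEY_UNCERTAINTIES = {
    "organization": ("globalization", "regionalism"),
    "development": ("conventional", "sustainable"),
}

# SRES-style labels: letter for development priority, digit for organization.
LETTER = {"conventional": "A", "sustainable": "B"}
DIGIT = {"globalization": "1", "regionalism": "2"}


def quadrants(uncertainties):
    """Return one dict per combination of polar outcomes (one per quadrant)."""
    names = list(uncertainties)
    return [dict(zip(names, combo)) for combo in product(*uncertainties.values())]


def sres_label(quadrant):
    """Map a quadrant to its SRES-style family label, e.g. 'A2'."""
    return LETTER[quadrant["development"]] + DIGIT[quadrant["organization"]]


for q in quadrants(KEY_UNCERTAINTIES):
    print(sres_label(q), q)
```

Every further variable (population, energy intensity, and so on) is then filled in within one of these four cells, which is why the quadrant constrains its outcome.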

3.2 Strengths of Intuitive Logics

The success of Intuitive Logics approaches can be attributed to two major strengths. First, because the ‘discipline’ of the method is so basic, it is highly flexible and easy to implement. The second strength is rooted in the claim by Stephen Millett that “a discontinuous future cannot be reliably forecasted, but it can be imagined” (2003, p. 18). In other words, the failure of extrapolations of historical trends to account for discontinuities such as social fads or technological breakthroughs is a primary justification for embracing the creative approach of Intuitive Logics for exploring different possibilities for the future—even in scientific environmental assessments. As noted in the report entitled Ecosystems and human well-being: Scenarios, which was prepared for the Millennium Ecosystem Assessment,

[S]cenario analysis requires approaches that transcend the limits of conventional deterministic models of change. Predictive modeling is appropriate for simulating well-understood systems over sufficiently short times (Peterson et al. 2003). But as complexity increases and the time horizon lengthens, the power of prediction diminishes. ...The development of methods to blend quantitative and qualitative insight effectively is at the frontier of scenario research today. [A reference is then made to a figure that represents the story-and-simulation approach, where scenarios exist at the boundary between ‘stories’ and ‘models.’] ...[Qualitative] narrative offers texture, richness, and insight, while quantitative analysis offers structure, discipline, and rigor. The most relevant recent efforts are those that have sought to balance these (Raskin et al. 2005, p. 40).

3.3 Limitations of Intuitive Logics in the context of climate change research

However, there are four chief problems with building scenarios with Intuitive Logics methods in the context of IPCC or other environmental assessments.

The first problem is that of reproducibility, or replicability. As candidly acknowledged by the originator of the notion that storylines could be fruitfully linked with simulations, Joseph Alcamo,

A keystone of scientific credibility is the reproducibility of an experiment or analysis. For this reason it is significant that the storylines produced in the [Intuitive Logics] scenario exercises do not meet this benchmark. Storylines are usually developed through a group process in which the assumptions and mental models of the storyline writers remain unstated. Therefore the storyline is difficult if not impossible to reproduce. This lack of reproducibility reinforces the impression that storylines are ‘unscientific’ even though they may be based on a more sophisticated concept ... than portrayed by any mathematical model (2008, pp. 141–142).

The second problem is that of complexity. The dynamics of socioeconomic change are information intensive, involving correlations and relationships among a large set of variables, and are likely too complicated for individuals or groups to hold in mind and manipulate all at once. Yet this is precisely what experts in Intuitive Logics are asked to provide holistically, in the form of aggregate, complete, plausible scenarios (Footnote 1). As discussed in Sect. 1.1, in climate change research the storylines developed through Intuitive Logics are paired with simulations performed by IA models. Besides the constraining role that the stories can play for the IA models, the story-and-simulation pairing is a tacit acknowledgment of the complexity limitation of stories, since the simulations are also seen as a mechanism for verifying the internal consistency of the stories. However, simulation alone does not correct for the other limitations of Intuitive Logics.

The third problem is that of sampling the space of possible futures, which is potentially extremely large. With Intuitive Logics, the convention of focusing on two key uncertainties is the primary strategy for selecting a small sample (Footnote 2). Unlike statistics, where the aim of sampling is that the sample be typical and representative of a real population, sampling in Intuitive Logics serves to demonstrate that uncertainties about the future are irreducible. In other words, the purpose of the four alternative futures is to convey that perfect foresight does not exist and that any particular rendition of the future could be just as persuasive as an alternative. Since this is the main purpose, providing any estimates of likelihood for the scenarios is viewed as distracting (or worse, misleading). Additionally, only a few scenarios are needed to demonstrate this point; two or four scenarios are viewed as adequate as large numbers of scenarios. The working sampling assumption in Intuitive Logics is therefore that selecting two key uncertainties will yield four contrasted futures that are sufficiently different and comprehensive for exploring the future and contemplating the ramifications of alternative outcomes. Contrast this with the statistical view, where samples of sufficiently large size (e.g., \(N \ge 30\)) are preferred.

This working sampling assumption in Intuitive Logics might be acceptable were it not for the fourth problem, which is bias. Psychological research has not supported the Intuitive Logics claim that scenarios imagined creatively should be expected to be superior to scenarios developed analytically. Such bias operates at the levels of both individuals and groups developing scenarios. Setting aside the potential problem of active, conscious bias, psychologists have noted that unconscious bias in the holistic judgments of complex systems is very real. At the level of individuals, this is because the human mind is limited in its capability to process multifactor interdependencies, and is easily distracted by trivial matters.

The late psychologist Dawes (1988) chronicled a number of cognitive biases that result from heuristics; two that are directly relevant to Intuitive Logics are availability and what Morgan and Henrion (1990) describe as overconfidence. Availability is the tendency to overestimate the probability of phenomena that are familiar. A closely related error, judging a detailed conjunction of events to be more probable than its components, has been dubbed the ‘conjunction fallacy’ (Schoemaker 1993; Tversky and Kahneman 1983). In scenario work, this appears as the judgment that ever more detailed events are more likely than less detailed ones; mathematically, however, adding detail can never make an event more probable. For example, consider the following experiment carried out by A. Tversky and D. Kahneman. Study participants were presented with a personality sketch of a character named Linda and then asked which of the following statements was more likely to be true: (a) Linda is a bank teller, or (b) Linda is a bank teller and is active in the feminist movement. The vast majority of respondents (85 %) selected the second statement as more probable, even though Linda’s membership in the plainly described set of ‘bank tellers’ must be at least as likely as her membership in the intersection of the sets ‘bank tellers’ and ‘active feminists.’
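The conjunction rule can be checked with a toy simulation. The minimal Python sketch below, with hypothetical rates and a function name chosen only for illustration, draws a population and compares the set of bank tellers with the set of bank tellers who are also feminists. Because the second set is an intersection, it can never be larger than the first, whatever rates are assumed.

```python
import random


def simulate_population(n=10_000, p_teller=0.05, p_feminist=0.6, seed=0):
    """Draw a hypothetical population and return the two sets compared in the Linda problem.

    The rates are arbitrary placeholders, not estimates of anything real.
    """
    rng = random.Random(seed)
    tellers, feminists = set(), set()
    for person in range(n):
        if rng.random() < p_teller:
            tellers.add(person)
        if rng.random() < p_feminist:
            feminists.add(person)
    both = tellers & feminists  # the conjunction is a set intersection
    return tellers, both


tellers, both = simulate_population()
# An intersection is a subset of each operand, so for any rates or seed the
# conjunction is never more frequent than "bank teller" alone.
print(len(tellers), len(both), both <= tellers)
```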

The second key heuristic is overconfidence, which is the tendency to underestimate the likelihood of outcomes perceived as extreme; in other words, under uncertainty, people systematically report confidence intervals for their judgments that are too narrow. This observation is based on a substantial body of literature on how well calibrated humans are as probability assessors. Such experiments ask study participants to report their degree of uncertainty about information that is verifiable but not easy for them to recall (or perhaps simply unknown to them). Examples of calibration questions include the populations of countries (verifiable by consulting an almanac), dates of historical events (verifiable by consulting a history book), or the meanings of unusual words (verifiable by consulting a dictionary or appropriate glossary) (Morgan and Henrion 1990). In their summary of ten studies, Morgan and Henrion showed a repeated pattern: when study participants reported their highest-confidence range for an unknown quantity or definition (the 98 % confidence interval), rather than being wrong approximately 2 % of the time, respondents were wrong anywhere from 5 to 50 % of the time (with a median across studies of 30 %).
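The calibration measure used in these studies reduces to simple arithmetic: count how often the true value falls outside a respondent's stated interval and compare that rate with the nominal rate, which is 2 % for a 98 % interval. A minimal Python sketch, with hypothetical function names and made-up answers (not data from the studies cited):

```python
def surprise_rate(intervals, truths):
    """Fraction of true values that fall outside the stated (low, high) intervals."""
    if len(intervals) != len(truths) or not truths:
        raise ValueError("need one interval per true value")
    misses = sum(not (low <= truth <= high) for (low, high), truth in zip(intervals, truths))
    return misses / len(truths)


def is_overconfident(intervals, truths, nominal_coverage=0.98):
    """True if the observed miss rate exceeds the nominal miss rate, 1 - coverage."""
    return surprise_rate(intervals, truths) > 1 - nominal_coverage


# Made-up answers to ten almanac-style questions, each given as a 98 % interval.
intervals = [(10, 20)] * 10
truths = [15, 12, 25, 18, 11, 30, 19, 5, 14, 16]
print(surprise_rate(intervals, truths), is_overconfident(intervals, truths))
```

Three misses in ten answers give a surprise rate of 30 %, fifteen times the nominal 2 %. Real calibration studies pool many more questions, since with only a handful of answers a single miss already exceeds 2 %.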

The unconscious cognitive biases of availability and overconfidence are not limited to isolated unknowns or simple statements in experimental settings. W. Grove and P. Meehl also looked at errors in judgments in a variety of professional contexts, such as likelihood judgments of parole violations or violent criminal recidivism, and psychological diagnoses of psychosis or neurosis. In their review of over 40 years of research on the predictive power of clinical versus mechanical methods, they concluded,

The clinical method relies on human judgment that is based on informal contemplation and, sometimes, discussion with others .... The mechanical method involves a formal, algorithmic, objective procedure (e.g., [an] equation) to reach the decision. Empirical comparison of the accuracy of the two methods ([based on] 136 studies over a wide range of predictors) shows that the mechanical method is almost invariably equal to or superior to the clinical method (quoted in Dawes 2001, p. 2049).

It is notable that the clinical method as Grove and Meehl describe it includes “discussion with others,” and that this did not correct for individual judgment errors. This points to a well-documented problem known as groupthink. Serious problems arise when groups deliberate with the aim of reaching consensus on a difficult topic. The most serious is that group deliberation “often produces worse decisions than can be obtained without deliberation” (Solomon 2006, p. 31). As Morgan and Henrion put it in the context of groups trying to arrive at agreed-upon probabilistic judgments, there is “considerable evidence that... face-to-face interaction between group members can create destructive pressures of various sorts, such as domination by particular individuals for reasons of status or personality unrelated to their ability as probability assessors” (Morgan and Henrion 1990, p. 165).

As philosopher of science Miriam Solomon describes this evidence, peer pressure, pressure to reach consensus, subtle pressures from those in authority, and the salience of particularly vocal group members who may anchor decisions can lead dissenting individuals to change their minds and, significantly, not to share their knowledge of contrary evidence. These factors can lead groups to make poor decisions and to narrow their options. Importantly, writes Solomon, it does “not help much if individuals ‘try harder’ to be unbiased or independent; we are unaware of, and largely unable to resist, the social factors causing groupthink and related phenomena” (2006, p. 32). She cites James Surowiecki: “Too much communication, paradoxically, can actually make the group as a whole less intelligent” (2004, p. xix).

In case there is any doubt that these biases affect complex socioeconomic scenario modeling as standardly performed for climate change research, one need look no further than the experience of long-term (i.e., multi-decadal) energy demand forecasting. ‘Best estimates’ for such forecasts at the national level have been consistently wrong (O’Neill and Desai 2005; Shlyakhter et al. 1994), at times off by a factor as large as four (Smil 2003 cited in Morgan and Keith 2008). Although it is unclear to what extent Intuitive Logics were integrated into such forecasts, they were certainly produced with complex simulations, in which modelers had to apply judgment to different combinations of model assumptions. That the forecasts were consistently wrong is congruous with the cognitive bias of overconfidence described above, since the ‘best’ forecasts were spectacularly wrong well over 2 % of the time. Morgan and Keith also argued that there is evidence that the cognitive bias of availability was at play, since projections of US primary energy demand for the year 2000, produced as recently as 1980, systematically underestimated the uptake of energy-efficient technologies. Such an oversight would have been consistent with past experience, since before 1980 energy efficiency was a low priority owing to low energy prices. However, much of the difference between projected and actual US primary energy use from 1980 to 2000 is explained by the wide adoption of more efficient technologies. Thus the results of complex scenario modeling will only be as good as their input assumptions, which, we argue, are further limited by the modeler’s ability to correct for cognitive biases.

Thus, while there are benefits to using the Intuitive Logics approach, it may not be optimal for predictive or anticipatory scientific work. The goals and uses of scenarios for assessment bodies such as the IPCC demand more scientific, more objective approaches, which are only now emerging.

4 An alternative scenario-building approach: the CIB method

In contrast to the Intuitive Logics approach, under which various experts are assembled to estimate uncertainties, driving forces, and narratives through dialogue as a group, other scenario approaches exist that are more systematic. In this paper, the specific method we contrast with Intuitive Logics is an example of a systematic approach and is called Cross-Impact Balance (CIB) analysis. The CIB method was selected because, as discussed in Sect. 1.1, it would be an appropriate substitute to Intuitive Logics in the Story and Simulation approach (Kosow 2011). Additionally, its inner workings are publicly accessible, which is an important aspect of its overall objectivity (discussed in more detail in Sect. 5).

4.1 Introduction to CIB analysis

As with Intuitive Logics, the point of a CIB analysis is to arrive at narrative descriptions of alternative scenarios that are plausible. The ultimate aim of the CIB method is “a more promising division of labor between man and method” (Weimer-Jehle 2006, p. 338). It revolves around collecting pairwise, semi-quantitative judgments from experts about relationships, influences, and correlations between driving forces, gathered in a transparent and orderly way. These judgments (for example, concerning the socioeconomic determinants of greenhouse gas emissions) can then be used to generate a very large number of scenarios (as opposed to only four under the Intuitive Logics approach), whose respective levels of internal consistency can be evaluated with a simple mathematical algorithm (Footnote 3). A significant virtue of the CIB method is that the internal consistency of possible futures can be measured. Additionally, internally consistent futures are discovered by systematically scanning very large numbers of scenarios (on the order of 10 billion; Footnote 4), which helps ensure that important but surprising scenarios are not overlooked, as they might be with the Intuitive Logics method (Footnote 5).
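The consistency check can be sketched in a few lines. The Python below is a minimal illustration, assuming the impact-balance rule as we understand it from Weimer-Jehle (2006): for each variable, sum the cross-impacts that the scenario's other chosen states exert on each of its states, and call the scenario internally consistent when every chosen state receives the highest (or a tied-highest) sum. The variables, two-state outcomes, judgment values, and function names are all hypothetical.

```python
from itertools import product

# Hypothetical scenario variables, each with two alternative states.
VARIABLES = {
    "population": ("low", "high"),
    "income": ("low", "high"),
    "education": ("low", "high"),
}

# Hypothetical judgments: CIM[source (variable, state)][target (variable, state)]
# on a -3..+3 scale (+ promotes, - restricts). Unlisted pairs mean no direct influence.
CIM = {
    ("education", "high"): {("population", "low"): 2, ("population", "high"): -2,
                            ("income", "high"): 2, ("income", "low"): -2},
    ("education", "low"): {("population", "high"): 2, ("population", "low"): -2,
                           ("income", "low"): 1, ("income", "high"): -1},
    ("income", "high"): {("education", "high"): 2, ("education", "low"): -2,
                         ("population", "low"): 1, ("population", "high"): -1},
    ("income", "low"): {("education", "low"): 1, ("education", "high"): -1,
                        ("population", "high"): 1, ("population", "low"): -1},
    ("population", "high"): {("income", "low"): 1, ("income", "high"): -1,
                             ("education", "low"): 1, ("education", "high"): -1},
    ("population", "low"): {("income", "high"): 1, ("income", "low"): -1,
                            ("education", "high"): 1, ("education", "low"): -1},
}


def impact_scores(scenario, target):
    """Impact sum received by each state of `target` from the other chosen states."""
    return {
        state: sum(
            CIM.get((source, scenario[source]), {}).get((target, state), 0)
            for source in scenario
            if source != target
        )
        for state in VARIABLES[target]
    }


def inconsistency(scenario):
    """Largest gap, over all variables, between the best-supported and the chosen state."""
    gaps = []
    for target in VARIABLES:
        scores = impact_scores(scenario, target)
        gaps.append(max(scores.values()) - scores[scenario[target]])
    return max(gaps)


def consistent_scenarios():
    """Scan every combination of states and keep those with zero inconsistency."""
    names = list(VARIABLES)
    for states in product(*VARIABLES.values()):
        scenario = dict(zip(names, states))
        if inconsistency(scenario) == 0:
            yield scenario


for scenario in consistent_scenarios():
    print(scenario)
```

With these made-up judgments, only two of the eight combinations pass: low population with high income and high education, and the reverse. A full analysis differs mainly in scale, since the scan grows with the product of the number of states per variable.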

CIB differs from Intuitive Logics by explicitly representing judgments about distinct scenario variables, their alternative outcomes, and the relationships among the alternative outcomes of the variables. These judgments can be collected from experts or gathered through literature review. The collected judgments are then used to evaluate the internal consistency of any particular scenario consisting of those variables and alternative outcomes. Thus in CIB, what makes a scenario plausible is not a subjective judgment of the scenario authors but instead its demonstrated internal consistency. In the case of global socioeconomic scenarios, a scenario could combine variable states such as average global wealth level \(=\) high, average educational attainment across the globe \(=\) high, and global population \(=\) low. For any CIB analysis, the following three steps must be completed:

Step 1: Specification of scenario variables and their alternative outcomes. Because CIB analysis requires specific judgments about system dynamics, the scenario variables and their alternative outcomes, or states, must first be specified. For example, let us consider a simple scenario consisting of only three variables conceptualized at a highly aggregated scale (such as at a global or continental-region level): population, income per capita, and educational attainment, defined as the proportion of the population with post-primary education. In this example, let us consider three possible states for each variable: low, medium, or high.
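Step 1 amounts to fixing a small, finite state space. The minimal Python sketch below is an illustration, not any published CIB software: it encodes the three example variables with their three states and enumerates every combinatorially possible scenario. The identifiers `VARIABLES`, `STATES`, and `all_scenarios` are hypothetical names chosen for this example.

```python
from itertools import product

# Hypothetical encoding of the Step 1 example: three variables,
# each taking one of the same three ordinal states.
VARIABLES = ("population", "income", "education")
STATES = ("low", "medium", "high")


def all_scenarios():
    """Return every combination of states as a dict: variable -> state."""
    return [dict(zip(VARIABLES, combo))
            for combo in product(STATES, repeat=len(VARIABLES))]


scenarios = all_scenarios()
print(len(scenarios))  # → 27 (3 states ** 3 variables)
print(scenarios[0])    # → {'population': 'low', 'income': 'low', 'education': 'low'}
```

Even this toy case yields 27 candidates, and the count grows as (number of states) raised to the (number of variables), which is why the later steps hand the scanning to a computer.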

Step 2: Collecting judgments for relationships among the variables and their alternative outcomes. Once the scenario context, or system, has been defined, the variables and their states can be organized in a cross-impact matrix, as shown in Fig. 2. This organization is useful for systematically recording judgments about how any given variable state would be expected to directly influence target states for other variables. Rows represent given states, or variable states that would exert an influence upon each intersecting state across columns. Columns represent target states, or variable states that would receive influences. In other words, rows represent variable states acting as impact sources and columns represent variable states acting as impact sinks.

Fig. 2 A cross-impact matrix for the simple scenario example of population, income per capita, and educational attainment conceptualized at a highly aggregated level. Numerical judgments are from Schweizer and O’Neill (2013). Highlighted rows correspond to influences associated with the following combination of outcomes: low global trend for population, high global trend for income per capita, and low global trend for educational attainment. The mismatch between the outcomes assumed for self-consistency (downward-facing arrows at bottom of figure) and the outcomes favored by the impact balances (upward-facing arrows) indicates that this is an inconsistent scenario.

The cells of the CIB matrix contain numerical judgments about how variable states in the rows (or impact sources) exert direct influences on variable states in the columns (or impact sinks). For each cell in a judgment section (circled in Fig. 2), one considers the cross-impact question,

If the only information you have about the system is that [given variable] X has state x, would you evaluate the direct influence of X on [target variable] Y as a clue that [variable] Y has state y (promoting influence) or as a clue that [variable] Y does not have state y (restricting influence)? (Weimer-Jehle 2006, p. 339)

For example, the number recorded in the labelled cell in Fig. 2 pertains to the direct influence of a high outcome given for income per capita upon the medium target outcome for educational attainment. Judgments are recorded on an ordinal scale, where positive scores represent ‘promoting’ influences and negative scores ‘restricting’ influences. The stronger the direct influence, the greater the magnitude of the judgment. A judgment of 0 indicates that given variable state x has no direct influence on target variable state y.
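A cross-impact matrix can be stored as one 3 × 3 judgment section per ordered pair of distinct variables, with rows for the given (source) state and columns for the target state, mirroring the layout of Fig. 2. The sketch below assumes an integer scale from −3 (strongly restricting) to +3 (strongly promoting). The numbers are hypothetical, chosen only to echo the qualitative reasoning in this section; they are not the judgments of Schweizer and O’Neill (2013) shown in Fig. 2. `CIM`, `judgment`, and `check_scale` are illustrative names.

```python
STATES = ("low", "medium", "high")

# Hypothetical judgments on an assumed -3..+3 scale (not published data).
# Key: (source variable, target variable); rows = source state,
# columns = target state, both ordered low, medium, high.
CIM = {
    ("population", "income"):    [[-1, 0, 1], [0, 0, 0], [1, 0, -1]],
    ("population", "education"): [[0, 0, 0], [0, 0, 0], [0, 0, 0]],
    ("income", "population"):    [[-1, 0, 1], [0, 1, 0], [1, 0, -1]],
    ("income", "education"):     [[1, 0, -1], [0, 1, 0], [-2, 0, 2]],
    ("education", "population"): [[-2, 0, 2], [0, 1, 0], [2, 0, -2]],
    ("education", "income"):     [[2, 0, -2], [0, 1, 0], [-2, 0, 2]],
}


def judgment(src_var, src_state, tgt_var, tgt_state):
    """Direct influence of src_var = src_state on tgt_var = tgt_state.

    Positive values promote the target state, negative values restrict it,
    and 0 means no direct influence. No variable is judged against itself.
    """
    if src_var == tgt_var:
        raise ValueError("no judgments are recorded of a variable on itself")
    row = CIM[(src_var, tgt_var)][STATES.index(src_state)]
    return row[STATES.index(tgt_state)]


def check_scale(lo=-3, hi=3):
    """Verify that every recorded judgment is an integer within the scale."""
    return all(isinstance(v, int) and lo <= v <= hi
               for section in CIM.values() for row in section for v in row)


n_cells = sum(len(row) for section in CIM.values() for row in section)
print(n_cells)  # → 54 (6 ordered pairs x 9 cells)
print(judgment("income", "high", "education", "medium"))  # → 0
```

The last query corresponds to the kind of cell labelled in Fig. 2 (high income per capita acting on medium educational attainment); its value here is a placeholder, not the published judgment.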

Step 3: Evaluating the internal consistency of scenarios. In CIB analysis, internal consistency for any scenario is determined by a logical check for self-consistency (Weimer-Jehle 2006). Self-consistency is an important property for stable scenarios (von Reibnitz 1988), or scenarios that describe long-term trends, which is precisely what socioeconomic scenarios in climate change research aim to do. In CIB analysis, each variable outcome in a given scenario is associated with a set of direct influences, which is represented by a subset of the expert judgments (see the shaded rows in Fig. 2). Whenever some given combination of outcomes evokes a set of direct influences that in turn promote that same combination, the scenario has demonstrated its self-consistency and is deemed internally consistent. Scenarios that fail the self-consistency test could do so for a number of reasons: They could be highly unlikely (on empirical or theoretical grounds), physically impossible, or logically inconsistent. The mathematical algorithm employed for CIB does not distinguish between these reasons for failing the self-consistency test, as the result is always the same: The direct influences unique to \(P\) imply \(\neg P\) (that is, \(P \Rightarrow \neg P\)), where \(P\) is some assumed scenario. Since assuming \(P\) while deriving \(\neg P\) is a contradiction, all scenarios that fail the self-consistency test are considered internally inconsistent to some degree (which some might interpret as a measure of lower likelihood), and this degree can be quantified with an inconsistency score. Here, we present an example of this consistency assessment with the three socioeconomic variables population, income per capita, and educational attainment. This particular example is based on Schweizer and O’Neill (2013), where educational attainment is defined as the percentage of the global population with post-primary education.
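The self-consistency test can be sketched in a few lines of Python. The sketch uses a hypothetical matrix stored as one 3 × 3 section per ordered pair of variables, with invented values on an assumed −3 to +3 scale. For each target variable, the judgments in the rows selected by the scenario (the "shaded rows") are summed into impact balances; the scenario passes if, for every variable, its assumed state has the largest balance. Counting a tie for the maximum as consistent is one convention and an assumption of this sketch.

```python
STATES = ("low", "medium", "high")
VARIABLES = ("population", "income", "education")

# Hypothetical cross-impact judgments (assumed -3..+3 scale); not published data.
# Key: (source, target); rows = source state, columns = target state.
CIM = {
    ("population", "income"):    [[-1, 0, 1], [0, 0, 0], [1, 0, -1]],
    ("population", "education"): [[0, 0, 0], [0, 0, 0], [0, 0, 0]],
    ("income", "population"):    [[-1, 0, 1], [0, 1, 0], [1, 0, -1]],
    ("income", "education"):     [[1, 0, -1], [0, 1, 0], [-2, 0, 2]],
    ("education", "population"): [[-2, 0, 2], [0, 1, 0], [2, 0, -2]],
    ("education", "income"):     [[2, 0, -2], [0, 1, 0], [-2, 0, 2]],
}


def impact_balances(scenario):
    """For each target variable, sum the influences exerted on its states
    by the other variables' assumed states."""
    balances = {}
    for tgt in VARIABLES:
        totals = [0] * len(STATES)
        for src in VARIABLES:
            if src == tgt:
                continue
            row = CIM[(src, tgt)][STATES.index(scenario[src])]
            totals = [t + r for t, r in zip(totals, row)]
        balances[tgt] = totals
    return balances


def is_self_consistent(scenario):
    """True if every variable's assumed state is (jointly) the most promoted."""
    balances = impact_balances(scenario)
    return all(balances[v][STATES.index(scenario[v])] == max(balances[v])
               for v in VARIABLES)


case_a = {"population": "low", "income": "high", "education": "high"}
case_b = {"population": "low", "income": "high", "education": "low"}
print(is_self_consistent(case_a))  # → True
print(is_self_consistent(case_b))  # → False
```

With these invented values, the two combinations discussed in connection with Fig. 3 behave as the text describes: the first passes the test and the second fails it.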

As shown in Fig. 3, an example of an internally consistent combination of outcomes for these socioeconomic variables appears on the left in case (a). The scenario would be high educational attainment, high income per capita, and low population. This is because the direct influences between these trends at highly aggregated scales are relatively well understood, and the set of influences can be shown to be self-consistent. For this simple case, when educational attainment trends are high, one could expect the outcome for average income per capita to also be high. When income trends and educational attainment trends are both high, one could expect the population trend to be low.Footnote 6 Low trends for population might also weakly encourage the high trend for average income per capita. The high income trend also encourages continued high educational attainment. Thus this combination of outcomes for the socioeconomic variables passes the self-consistency test and would constitute a scenario that is internally consistent.

Consider now a slightly different combination, one where educational attainment is low, income per capita is high, and population is low. In case (b), in Fig. 3, it can be seen that this combination is no longer self-consistent, as low trends for educational attainment would not be expected to encourage the high trend for average income per capita nor the low trend for population. Similarly, for reasons previously stated, a high trend for income per capita would not be expected to encourage a low trend for educational attainment. Because this combination of outcomes does not pass the test for self-consistency, it is an example of a combination that is internally inconsistent.

CIB analysis can record a score for the severity of the internal inconsistency of a scenario. A general property of inconsistent scenarios is that some (if not all) of the associated influences are not self-consistent. For every possible scenario, CIB analysis records an inconsistency score by measuring the worst discrepancy between the self-consistency assumption and the net tendency of the influences associated with the given scenario. Inconsistency measurement is made possible by the quantified pairwise judgments for direct influences between variable states. Thus the matrix in Fig. 2 also acts as a database for the sets of influences that would be associated with any particular given scenario (see shaded rows), and it can be used to demonstrate the simple calculations performed on its values to obtain the inconsistency score for any scenario.

To provide an example of inconsistency scoring, we will consider the scenario depicted in Fig. 3, case (b). The combination of outcomes for this scenario is low trend for population, high trend for income per capita, and low trend for educational attainment. In Fig. 2, the highlighted rows correspond to the set of influences that would be associated with this combination of outcomes. Each possible target outcome for each variable, which is represented in each column, is influenced to a different extent by the outcomes in the given scenario. To uncover the net effect of the given scenario, quantities for the direct influences upon each target outcome must be combined. Impact balances shown at the bottom of Fig. 2 sum the relevant given influences together for each target outcome. Thus the net effect of the given scenario is revealed by the set of impact balances for each target element. For each target element, the maximum impact balance corresponds to the target outcome that is most strongly promoted. The upward facing arrows at the bottom of Fig. 2 show the expected target outcomes for each element according to the given scenario. These outcomes are compared to the initially assumed, given scenario (downward facing arrows at the bottom of Fig. 2). The inconsistency score for the given scenario is the maximum difference between the outcome according to impact balances and the outcome for self-consistency. For this reason, perfectly internally consistent combinations, which meet the condition of self-consistency, always have an inconsistency score of 0. For the example in Fig. 2, the given combination is internally inconsistent and has an inconsistency score of 33 (\(33 = 13 - (-20)\)). This result was expected even before any quantities were assigned to the influences in the given combination (cf. Fig. 3).
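The scoring rule just described can be written as a short function. For each variable, the discrepancy is the largest impact balance minus the balance of the assumed state; the scenario's inconsistency score is the largest such discrepancy, so self-consistent scenarios score 0. The sketch uses a hypothetical matrix with invented values (not the Fig. 2 judgments), so its score for the same combination differs from the 33 reported above. The helper `discrepancy`, an illustrative name, reproduces that arithmetic when given a maximum balance of 13 and an assumed-state balance of −20 (the middle balance in the call is a placeholder).

```python
STATES = ("low", "medium", "high")
VARIABLES = ("population", "income", "education")

# Hypothetical judgments (assumed -3..+3 scale), not the values in Fig. 2.
# Key: (source, target); rows = source state, columns = target state.
CIM = {
    ("population", "income"):    [[-1, 0, 1], [0, 0, 0], [1, 0, -1]],
    ("population", "education"): [[0, 0, 0], [0, 0, 0], [0, 0, 0]],
    ("income", "population"):    [[-1, 0, 1], [0, 1, 0], [1, 0, -1]],
    ("income", "education"):     [[1, 0, -1], [0, 1, 0], [-2, 0, 2]],
    ("education", "population"): [[-2, 0, 2], [0, 1, 0], [2, 0, -2]],
    ("education", "income"):     [[2, 0, -2], [0, 1, 0], [-2, 0, 2]],
}


def discrepancy(balances, assumed_index):
    """Gap between the most promoted state and the assumed state (>= 0)."""
    return max(balances) - balances[assumed_index]


def inconsistency_score(scenario):
    """Worst per-variable discrepancy; 0 means the scenario is self-consistent."""
    worst = 0
    for tgt in VARIABLES:
        totals = [0] * len(STATES)
        for src in VARIABLES:
            if src != tgt:
                row = CIM[(src, tgt)][STATES.index(scenario[src])]
                totals = [t + r for t, r in zip(totals, row)]
        worst = max(worst, discrepancy(totals, STATES.index(scenario[tgt])))
    return worst


print(discrepancy([-20, 0, 13], 0))  # → 33, i.e. 13 - (-20)
case_b = {"population": "low", "income": "high", "education": "low"}
print(inconsistency_score(case_b))  # → 4 with these invented values
```

Here the worst discrepancy for the invented matrix comes from educational attainment: high income strongly promotes high education (balance 2), while the assumed low state receives −2.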

Since inconsistency scoring can be done for any scenario, large numbers of scenarios can be scored and then ranked. This provides groupings for scenarios that have good or poor internal consistency. Groups of scenarios with good internal consistency could be more closely investigated to uncover their specific variable states as well as the specific variable interrelationships that make the scenarios self-consistent. Such scenarios would be considered highly plausible.
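Scoring and ranking can be sketched by enumerating every combination of states and sorting by inconsistency score. The matrix values below are hypothetical placeholders on an assumed −3 to +3 scale, and `rank_scenarios` is an illustrative name; real analyses involve far larger matrices than this three-variable toy.

```python
from itertools import product

STATES = ("low", "medium", "high")
VARIABLES = ("population", "income", "education")

# Hypothetical judgments (assumed -3..+3 scale); not published data.
# Key: (source, target); rows = source state, columns = target state.
CIM = {
    ("population", "income"):    [[-1, 0, 1], [0, 0, 0], [1, 0, -1]],
    ("population", "education"): [[0, 0, 0], [0, 0, 0], [0, 0, 0]],
    ("income", "population"):    [[-1, 0, 1], [0, 1, 0], [1, 0, -1]],
    ("income", "education"):     [[1, 0, -1], [0, 1, 0], [-2, 0, 2]],
    ("education", "population"): [[-2, 0, 2], [0, 1, 0], [2, 0, -2]],
    ("education", "income"):     [[2, 0, -2], [0, 1, 0], [-2, 0, 2]],
}


def inconsistency_score(scenario):
    """Worst gap between the most promoted state and the assumed state."""
    worst = 0
    for tgt in VARIABLES:
        totals = [0] * len(STATES)
        for src in VARIABLES:
            if src != tgt:
                row = CIM[(src, tgt)][STATES.index(scenario[src])]
                totals = [t + r for t, r in zip(totals, row)]
        worst = max(worst, max(totals) - totals[STATES.index(scenario[tgt])])
    return worst


def rank_scenarios():
    """Score every combination; return (states tuple, score) pairs, best first."""
    scored = []
    for combo in product(STATES, repeat=len(VARIABLES)):
        scenario = dict(zip(VARIABLES, combo))
        scored.append((combo, inconsistency_score(scenario)))
    # Sort by score, breaking ties by the states tuple for a stable listing.
    return sorted(scored, key=lambda pair: (pair[1], pair[0]))


ranked = rank_scenarios()
consistent = [combo for combo, score in ranked if score == 0]
print(len(ranked))  # → 27
print(consistent)   # (population, income, education) tuples scoring 0
```

The group with score 0 is the set of fully self-consistent scenarios; scenarios with small nonzero scores form the next, less consistent group.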

In short, to make the distinction between the CIB and Intuitive Logics methods exceedingly clear: CIB elicits from experts pairwise judgments for correlations and mutual influences among scenario variables. A cross-impact matrix, such as the one shown in Fig. 2, can be used to collect such judgments. With the above example of a simple scenario for alternative outcomes for global population, global income per capita, and global educational attainment, CIB elicits judgments about the interrelations of these variables and their alternative outcomes in a pairwise fashion by asking experts to record their judgments in matrix cells. A mathematical algorithm then processes these numerical judgments to search for internally consistent combinations of outcomes for the three scenario variables. In contrast, Intuitive Logics elicits holistic, aggregate judgments for plausible scenarios by asking experts in a focus group setting, “Consider a future context where the world is globalized and focused on sustainable development [this hypothetical context is drawn from Fig. 1]. What scenario for global population, global income per capita, and global educational attainment would be plausible in that context?” The group of experts would then discuss outcomes that they find plausible and come to a consensus. This scenario context question would then be repeated to the group for the three alternative future contexts according to an Intuitive Logics matrix, such as that shown in Fig. 1. Thus CIB is a semi-quantitative procedure, while Intuitive Logics asks for a verbal analysis.

4.2 Strengths of CIB analysis

There are three major strengths to CIB analysis, which we discuss in this section. First, the CIB method makes it possible to inspect experts’ judgments as well as specific variable interrelationships that make scenarios plausible. Second, it shares the benefit of ‘imagination’ with Intuitive Logics—that is, possible futures that are highly contrasted from each other and from the present are identified. Third, the CIB method demonstrates the internal consistency (or lack thereof) of scenarios. It can also demonstrate that internally consistent scenarios were identified from a scan of a very large number of scenarios (on the order of 10 billion). By design, the manner in which CIB demonstrates internal consistency diminishes the influence of cognitive limitations on storyline scenarios. Each of these strengths is elaborated below.

The first major strength of the CIB method is that it permits analysts, experts, and any member of the public (that is, any party who did not participate directly in developing the scenarios) to examine how the conclusions of a particular analysis were arrived at. More specifically, in comparison to Intuitive Logics, CIB represents and preserves for open viewing the networks of direct influences for any scenario (as the reader can see in Sect. 4.1), and it explores a very large number of possible scenarios. These strengths directly address two limitations of Intuitive Logics: (i) ensuring internal consistency in the face of complexity and (ii) under-sampling the vast space of possible futures.

The second major strength of CIB is similar to the ‘imagination’ benefit of Intuitive Logics. An important objective of scenario analysis is exploration, as scenarios can potentially help users consider surprising developments or discontinuities (Bradfield et al. 2005; EEA 2009). At first blush, one might question whether the results of a CIB analysis would hew too closely to extrapolating past trends, especially with the simple population example discussed previously to introduce CIB. But actually, the CIB method facilitates discovery and exploration in major ways, which is made clear by a recent application in a more sophisticated scenario analysis (Schweizer and O’Neill 2013). The sophisticated application, which shows the full strengths of CIB in practice, is discussed below. By systematically exploring a much larger space of possible scenarios (which, under the conditions of many scenario studies, may span the full space of scenarios combinatorially possible; see Sect. 4.1, footnote 4), we find that the CIB method can explore further than Intuitive Logics by uncovering unexpected combinations of possible outcomes.

Consider a recent application of the CIB method to climate scenarios by Schweizer and O’Neill (2013). They first surveyed a range of researchers to establish which socioeconomic variables should be prioritized in the IPCC’s new socioeconomic scenarios. They then used the resulting set of 13 factors (some of which are currently not represented in any IA models featured in IPCC reports) as a basis for a further elicitation of expert opinion regarding the correlations and influences of these factors upon each other. The \(13 \times 13\) CIB matrix of factors, each with three possible outcomes (specifically, low, medium, or high long-term trends), required 1,404 judgments. The matrix was sufficiently large that the authors elicited judgments from a variety of researchers concerning only the segments of the matrix involving their expertise. They followed a judgment protocol developed by Schweizer and Kriegler (2012), on which they briefed experts prior to the elicitation. Schweizer and O’Neill (2013) found that experts were able to provide 108 judgments each in about one hour. Some were able to provide more (216 judgments) in the same amount of time. [Further details about the elicitation of expert judgments are available in Schweizer and O’Neill (2013).] Compare this to developing scenarios under the Intuitive Logics approach, where experts typically participate in a multi-day workshop and may have to travel a great distance to participate.
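The reported figures follow from simple counting, assuming (as the text states) that every factor has exactly three outcomes and that no factor is judged against itself: each ordered pair of distinct factors then contributes one 3 × 3 judgment section. The sketch below checks this arithmetic. Reading 108 judgments as one factor's sections against the other 12 factors is our interpretation for illustration, not something the study is reported to state.

```python
def judgment_count(n_factors, n_states):
    """Cells in a CIB matrix: one n_states x n_states section for every
    ordered pair of distinct factors (no self-influence judgments)."""
    return n_factors * (n_factors - 1) * n_states ** 2


def scenario_count(n_factors, n_states):
    """Number of combinatorially possible scenarios."""
    return n_states ** n_factors


print(judgment_count(13, 3))  # → 1404
print(scenario_count(13, 3))  # → 1594323 combinations of outcomes
print((13 - 1) * 3 ** 2)      # → 108 cells: one factor's sections vs. the other 12
```

The judgment count grows roughly with the square of the number of factors, whereas the scenario count grows exponentially, which is why a full scan, not elicitation, becomes the bottleneck as factors are added.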

The resulting CIB analysis explored the consistency of all possible combinations of outcomes for the 13 variables, approximately 1.5 million scenarios. Schweizer and O’Neill (2013) then focused on the 1,000 most consistent scenarios as evaluated by the decomposed judgments collected independently from a variety of experts. Their analysis revealed some surprises about the five new archetypes for socioeconomic scenarios that are of interest to the IPCC (O’Neill et al. 2013). Significantly, these most consistent combinations differed from one another in instructive ways in how they arrived at their future end states. One of the most interesting and surprising of their results is that a new socioeconomic factor, ‘quality of governance,’ seems to play a key role in future outcomes for ‘socioeconomic challenges to adaptation.’ In this context, adaptation refers to the ability of societies to implement responses to a changing climate that moderate harm or take advantage of benefits. The factor ‘quality of governance’ was introduced as a new socioeconomic variable because it was prioritized in an online survey of 25 independent experts. For the more detailed expert elicitation, ‘quality of governance’ was then defined in accordance with the six Worldwide Governance Indicators developed by the World Bank (World Bank 2011). Examples of Worldwide Governance Indicators include Political Stability and Absence of Violence as well as Control of Corruption. Independent experts specializing in a variety of fields (e.g. demography, economics, agricultural economics, education) reported that they would expect quality of governance, as a socioeconomic factor, to interact directly with outcomes for their respective socioeconomic factors.

This particular finding is significant for two reasons. First, socioeconomic scenarios for climate change research have traditionally described trends in governance and overarching policy priorities superficially, in the words of Lee Lane and David Montgomery, “seem[ing] to float in thin air without grounding in theory or data” (2012 ms., p. 6). To be fair, dynamics that could represent changes in governance have been challenging to incorporate in many IA models (Costanza et al. 2007). Additionally, Schweizer and Kriegler (2012) point out that one of the main purposes of storylines is to represent contextual details for scenarios that models do not, or cannot, capture. Thus the lack of experience with modeling governance may have motivated the relegation of such details to intuitively derived storylines. However, the CIB results suggest that, whatever the reasons for these cursory treatments, this practice may no longer be acceptable, because quality of governance may be a significant driver of socioeconomic outcomes that make successful adaptation to climate change more difficult. Therefore, in scenarios for climate change research, representations of governance may demand better grounding in data and theory.

Second, and perhaps even more surprisingly, the CIB analysis arrived at this result with the vast majority of its judgments supplied independently by disciplinarily diverse experts who do not model ‘quality of governance’ quantitatively but can appreciate its influences qualitatively. In other words, this finding is remarkable because most of the experts who provided judgments had no experience in how to formally represent governance in their own modeling, and all experts’ judgments were collected independently. Here we see the invaluable contribution of the CIB method as compared to Intuitive Logics: CIB can elicit ‘odd’ or ‘marginalized’ information about a scenario (in this case, the socioeconomic variable ‘quality of governance,’ which turned out to be extremely significant to the dynamics of the scenarios overall). The information that led to this conclusion arose through a process that fulfilled the three conditions for groups to make good judgments, namely independence, diversity, and decentralization, qualities that are enacted with CIB methods. In contrast, such marginalized information is almost inevitably lost in the Intuitive Logics process, simply due to the group dynamics, as we shall discuss in Sect. 5.2.

The third and final major strength of the CIB method is that internal consistency scoring is possible for a very large number of scenarios [in the studies by Schweizer and Kriegler (2012) as well as Schweizer and O’Neill (2013), such internal consistency scoring was achieved for all scenarios possible combinatorially]. Through this more comprehensive scoring and scenario comparison, CIB provides a thoroughgoing and much more complete check on internal consistency that is not available through Intuitive Logics. Additionally, the scoring algorithm relieves experts of the burden of mentally testing the consistency of specific socioeconomic futures and shifts it to computers, which can readily check large numbers of scenarios for the desired consistency. Bearing in mind the cognitive burdens and biases associated with Intuitive Logics, which will be examined further in Sect. 5, off-loading the holistic information-processing job from the human brain onto a computer significantly diminishes the influence of cognitive biases on scenarios.

4.3 Limitations of CIB analysis

CIB analysis does, of course, have some limitations. Although the method is flexible, the number of variables that can be taken into account will be limited for practical reasons. More specifically, current computing power limits the ability of CIB analysis to investigate more than 10 billion (\(10^{10}\)) scenarios comprehensively (Weimer-Jehle 2010). Therefore, if one is interested in a comprehensive scan of all scenarios possible combinatorially, CIB can only be used for scenarios that can be qualitatively understood with a moderate number of variables and alternative outcomes.Footnote 7 For the same reason, one can only construct what Weimer-Jehle, the originator of the CIB method, calls “rough scenarios” (Weimer-Jehle 2006, p. 359). He also notes that since expert data not only supply the information for the pairwise judgments but also structure the logic of the system itself, CIB analysts should keep the uncertainties surrounding such data firmly in mind. Because the analysis relies so completely on expert judgments, it is crucial that those judgments be gathered in such a way that they are serious and carefully considered, and not “the result of little reflected guessing” (Weimer-Jehle 2006, p. 359; see Sects. 3.1 and 3.2).Footnote 8
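To see why the \(10^{10}\) ceiling restricts comprehensive CIB scans to a moderate number of variables, one can compute how many variables with a given number of states keep the full set of combinations at or below that ceiling. The sketch assumes every variable has the same number of states and treats \(10^{10}\) as a hard limit on the raw count of combinations, a simplification of the practical constraint cited from Weimer-Jehle (2010). `max_variables` is an illustrative name.

```python
def max_variables(n_states, limit=10 ** 10):
    """Largest n such that n_states ** n <= limit (uniform states per variable)."""
    if n_states < 2:
        raise ValueError("need at least two states per variable")
    n = 0
    # Integer arithmetic avoids floating-point rounding in a log-based formula.
    while n_states ** (n + 1) <= limit:
        n += 1
    return n


for s in (2, 3, 4):
    print(s, max_variables(s))
# → 2 33
# → 3 20
# → 4 16
```

Under these assumptions, about 20 three-state variables exhaust the budget, which fits the text's point that comprehensive scans suit scenarios described by a moderate number of variables and outcomes.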

An additional possible drawback to the CIB approach is that it may be more demanding for the analyst, or party convening the scenario exercise, than the Intuitive Logics method: Surveys of experts take time, effort, and commitment from the CIB analysts, plus the cooperation and commitment of the experts completing the surveys. Compared with the relatively simple task of inviting experts to a workshop or meeting to discuss a variety of possible scenarios, as is done for the Intuitive Logics approach, this seems to require distinctly more effort from the conveners. In addition, more may be demanded of the experts themselves; in the CIB method, they are put on the spot and asked to semi-quantify forced judgments about pairwise interactions or correlations, which can be a daunting task in comparison to an open discussion of the same. On the other hand, much is also demanded of experts in the Intuitive Logics method; they are required to entertain various large-scale collections of variables in alternate global scenarios. It may be too early to say which approach is more demanding, but the CIB method, as carried out in the climate case by Schweizer and O’Neill (2013), proved to be fairly demanding: it required developing instruments and protocols to define the scope of the scenarios and to collect 1,404 distinct judgments, recruiting experts to participate in the exercise asynchronously, and entering the experts’ responses into a CIB software package. We may wish to say that this level of detail is actually the strength of the approach; nevertheless, that does not change the fact that it is costly to achieve.

5 Meanings of ‘objectivity’ as the criteria for distinguishing superior scenario generation methods for scientific assessments

Because scenarios in IPCC assessments aim to convey scientifically credible information, we contrast the Intuitive Logics and CIB methods with respect to the criteria of objectivity. We chose these criteria because objective methods in science are customarily taken to provide a path to understanding real and independently-existing things, properties, and processes.

5.1 Different meanings of objectivity

There are several quite distinct meanings of the terms ‘objective’ and ‘objectivity’ that have been explored in detail by philosophers of science such as Helen Longino, Heather Douglas, and Elisabeth Lloyd. Three of these meanings are methodologically oriented: ‘objective’ may mean (1) public, (2) detached, or (3) unbiased. Two additional meanings are more metaphysically oriented: (4) independently existing from us, and (5) real or “really real” (Lloyd 1995).Footnote 9 Additionally, objectivity often makes reference to social operations and relations, which include (6) procedural objectivity and (7) interactive or structural objectivity (Douglas 2004; Longino 1990). We shall examine the CIB and Intuitive Logics approaches with respect to each of these meanings of ‘objective’ and ‘objectivity’ in Sect. 5.2, after first discussing each of these meanings in more detail here.

The term ‘objective’ sometimes means (1) public, publicly accessible, observable, or intersubjectively or publicly available for inspection, at least in principle.Footnote 10 In other words, an object or action is ‘objective’ (1) when it is performed, perceived, or existing in open view, or openly accessible. Scientists are often very concerned about this methodological form of objectivity, due to its importance in persuading others, and its role in the public accessibility of scientific evidence.

Alternatively, ‘objective’ also sometimes means (2) detached, disinterested, independent from will or wishes, or impersonal (Douglas 2004; Lloyd 1995). Under detached objectivity (2), one’s values should not blind one to the existence of unpleasant evidence simply because one is invested in a particular view (Douglas 2007, p. 133).

This meaning is closely related to another, that is, (3) unbiased. To clarify, if a person is being objective (3) in the sense of ‘unbiased,’ then she can be making mistakes, but they will fall randomly with relation to the outcome of interest, and not lean in a particular direction. The notion of being unbiased here is basically statistical. A person can have a stake in the outcome of events and still be unbiased. For example, a father can hope that it doesn’t rain at his daughter’s wedding, and thus fail to be detached or disinterested, but still be unbiased, i.e., objective (3), in his estimation of this result. Nevertheless, the cognitive biases discussed earlier do routinely affect our judgment. Even though a scientist may not lean in a particular direction consciously, and may deliberately be committed to remaining unbiased, biases such as availability and overconfidence can still affect the outcomes of a study or experiment, because these mental processes operate below the level of conscious awareness. Such cognitive biases are systematic, particular, and undesired. Because these unconscious psychological biases are real and ordinarily affect our judgment, they should be taken into account when eliciting and evaluating judgments, and when designing methods to evaluate judgments.

In sum, the first three meanings of ‘objective’—public, detached, and unbiased—pertain to methodology; they are understood as ways that scientists can conduct investigations in order to gain access to scientific facts or truths.

Another meaning of ‘objective’ has more to do with the shape of reality itself: independently or separately existing from us (4). It is distinct from the fifth meaning: real, or really existing (5) (Lloyd 1995).Footnote 11 You can tell the difference between independently existing and real by considering dreams. They are real, they really exist, but they do not exist independently from us. Scientists are usually in the business of trying to discover or explore that which is real, and that which is independently existing from us, that is, things that are ‘out there in the world’: facts, events, mechanisms, and processes.

There are long-standing philosophical disputes about whether and how science may produce knowledge of independently existing things or processes—indeed, whether there exist any at all—but we shall lay them aside for the much more modest purposes of this paper (Boyd 1983; Fine 1986; Lipton 2004; Peirce 1878; van Fraassen 1980). In a more pedestrian fashion, there is customarily understood to be a very important but usually unspoken link between the first three meanings of ‘objective’ and the next two: the first three, methodological meanings—public (1), detached (2), and unbiased (3)—are believed to be the means and methods that lead to knowledge of the next two, independently existing things (4) (should there be such) and real events, mechanisms, and processes (5) (Lloyd 1995). Finally, the socially oriented meanings of ‘objectivity,’ procedural objectivity (6) and interactive or structural objectivity (7), can be used to reinforce the methodological support provided by the first three meanings of ‘objective.’

For example, consider procedural objectivity (6), which “occurs when a process is set up such that regardless of who is performing that process, the same outcome is always produced” (Douglas 2007, p. 134; drawn from Megill 1994; Porter 1992). Such a socially organized processing of information, experiment, or devices will produce reliable outcomes no matter who is doing the processing, or operating the method. This forced anonymity or interchangeability of the processor precludes the individual processors’ biases and wishes from influencing the outcome of the process—no matter what they are. Procedural objectivity thus embodies and reinforces two of the previously discussed forms of ‘objective’: detached (2), i.e., disengagement from the desired results, and unbiased (3), or being statistically neutral in terms of mistakes made relative to a true value, due to the lack of influence of an experimenter’s own conscious or unconscious biases. Thus, this type of processing, ‘procedurally objective’ processing, provides a set of extremely powerful virtues, which we will discuss below in comparing CIB with Intuitive Logics.

Procedural objectivity (6) is also closely related to replicability, i.e., the reproducibility of the same experiment, observational procedure, or process, for the purposes of testing or confirming an idea, theory, model or hypothesis. Replicability is a standard requirement in nearly every field of the empirical and some theoretical sciences. The notion that other researchers or observers should be able to repeat a set of instructions or procedures for measurement or observation, (i.e., participate in procedural objectivity (6) regarding observations), and then reproduce relevantly similar results as previous observers, is built into the notion of public objectivity (1), and its central place in scientific methodology. Because replicability also involves having other scientists perform ‘the same’ procedures, there is an assumption that the scientists undergoing such a procedure will be objective in the sense of unbiased (3); it is assumed that they will conduct the procedures fairly, and any mistakes will tend to fall fairly to either side, and not be biased in one direction, i.e., objective (3), unbiased.

Finally, we consider ‘interactive,’ ‘structural,’ or ‘transformative’ objectivity (7), which concerns agreement achieved through intense debate or discussion among peers in the scientific community; here the emphasis is on the degree to which “both its procedures and its results are responsive to the kinds of criticisms described,” according to Longino (1990, p. 76; see also Hull 1988). These criticisms include critiques of evidence, experimental design, and theory, as well as of the background assumptions that underpin all of these. “Interactive objectivity occurs when an appropriately constituted group of people meet and discuss what the outcome should be,” writes Douglas (2007, p. 135). Such a social vision of objectivity requires a community of interlocutors and standards governing their engagement.

In sum, adherence to the first three meanings of ‘objective,’ namely the methodological meanings public (1), detached (2), and unbiased (3), together with replicability, which the social-methodological meaning procedural objectivity (6) makes possible, is taken to be important in the practice of most sciences; such adherence promises real knowledge of reality itself (Footnote 12). Whether adherence to meaning (7), interactive or structural objectivity, is methodologically effective gets at the core of the issue of CIB versus Intuitive Logics methods in scenario building, which we discuss in the next section.

5.2 Comparison of Intuitive Logics and CIB with respect to objectivity

Significantly, the CIB method is more objective than Intuitive Logics in several of the distinct senses distinguished above. First, the CIB method has significantly more procedural objectivity (6), which finally makes the development of storyline scenarios replicable. As discussed in the previous section, replicability means that other researchers or observers should be able to repeat a set of instructions or procedures for measurement or observation and then reproduce results relevantly similar to those of previous observers. Here CIB, which is procedurally objective (6) and thereby almost completely replicable, wins decisively over Intuitive Logics, whose results are not replicable because they depend on the vagaries of human group interaction. This procedural objectivity also makes the CIB method more ‘objective’ under other meanings of the term.

For instance, CIB is more objective in sense (1): public, accessible, intersubjective, and open to inspection. Intuitive Logics methods involve convening a small group of people, usually experts in various fields, to build scenarios. In contrast, when scenarios are built with a CIB methodology, there could, in theory, be hundreds of experts providing documented input. The two methodologies differ sharply in the access that people not present during scenario building, such as outside policy-makers and scientists, have to the information used to develop the scenarios and to the processing of that information. More specifically, in CIB methods all of the expert judgment values, as well as the final estimations of the scenarios’ internal consistency, are objective (1): available for public access and inspection at any time.

In the Intuitive Logics case, by contrast, the final scenarios are available for public inspection, as are possibly some or all of the discussions leading to them, perhaps through a transcript offering a narrative account; but no calculations or estimations involving complex correlations are available. In Intuitive Logics, the complex expert judgments regarding the aggregate effects of the variables shaping the final scenarios, and especially their combinations and correlations, remain in the experts’ minds, completely inaccessible to the public, because they are neither made explicit nor explicitly calculated while the narrative versions of the scenarios are fleshed out.

Recall that CIB methods elicit only pairwise expert judgments, each concerning the correlation between two variables at a time. They thereby avoid the complex, conclusive multi-variable judgments made in Intuitive Logics contexts, which can involve ten, fifteen, or more correlated variables simultaneously. As discussed above, the thoughts and judgments about complex, aggregate sets of correlations among variables made in Intuitive Logics contexts are not explicitly calculated by anyone in the discussions; they therefore remain unavailable for public inspection and are not objective (1), accessible and public, even if a meticulous narrative account of the Intuitive Logics process is made available. The contrast with CIB is stark: all of the expert judgments used in CIB calculations of the complex correlations and interrelations between variables, and in the overall conclusions about the consistency of a particular set of variables, are recorded explicitly and fully available for public inspection, and are thus objective in the public (1) sense. Almost none of these are available in Intuitive Logics contexts, apart from a few key variables and the aggregate, general conclusions of the full storyline scenarios at the end.
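To make this contrast concrete, here is a minimal Python sketch of the kind of calculation CIB performs, under stated assumptions. It follows what we take to be the usual CIB consistency rule: each descriptor (variable) has a few alternative variants; experts score, one pair at a time, how strongly a variant of one descriptor promotes or hinders a variant of another (here assumed to be an integer from -3 to +3); and a combination of variants counts as internally consistent when, for every descriptor, its chosen variant receives the highest summed score (its "impact balance") from the other chosen variants. The descriptors, variants, scores, and function names (`impact_balance`, `is_consistent`, `consistent_scenarios`, `revise`) are hypothetical illustrations, not taken from any study discussed here. Because every input is an explicit table cell and the procedure is deterministic, anyone rerunning it on the same table obtains the same scenarios, and a single cell can be revised and the check rerun without touching the rest.

```python
"""Minimal Cross-Impact Balance (CIB) consistency check (illustrative sketch).

All descriptors, variants, and scores are hypothetical; they are not taken
from any published CIB study.
"""
import copy
from itertools import product

# Hypothetical descriptors, each with mutually exclusive variants.
DESCRIPTORS = {
    "population": ["low", "high"],
    "technology": ["slow", "fast"],
    "energy_use": ["low", "high"],
}

# Hypothetical pairwise judgments: JUDGMENTS[(src, src_variant)][(dst, dst_variant)]
# is one expert score (assumed scale -3..+3) for how strongly src_variant
# promotes (+) or hinders (-) dst_variant. Missing cells count as 0.
JUDGMENTS = {
    ("population", "low"): {("energy_use", "low"): 2, ("energy_use", "high"): -2},
    ("population", "high"): {("energy_use", "low"): -2, ("energy_use", "high"): 2},
    ("technology", "slow"): {("energy_use", "low"): -1, ("energy_use", "high"): 1},
    ("technology", "fast"): {("energy_use", "low"): 2, ("energy_use", "high"): -2},
    ("energy_use", "low"): {("technology", "slow"): -1, ("technology", "fast"): 1},
    ("energy_use", "high"): {("technology", "slow"): 1, ("technology", "fast"): -1},
}


def impact_balance(scenario, judgments, target, variant):
    """Sum the scores that the other descriptors' chosen variants give (target, variant)."""
    return sum(
        judgments.get((src, chosen), {}).get((target, variant), 0)
        for src, chosen in scenario.items()
        if src != target
    )


def is_consistent(scenario, descriptors, judgments):
    """True if every descriptor's chosen variant has the highest impact balance
    among that descriptor's variants (ties are allowed)."""
    for target, variants in descriptors.items():
        balances = {v: impact_balance(scenario, judgments, target, v) for v in variants}
        if balances[scenario[target]] < max(balances.values()):
            return False
    return True


def consistent_scenarios(descriptors, judgments):
    """Enumerate every combination of variants and keep the consistent ones."""
    names = list(descriptors)
    found = []
    for combo in product(*(descriptors[name] for name in names)):
        scenario = dict(zip(names, combo))
        if is_consistent(scenario, descriptors, judgments):
            found.append(scenario)
    return found


def revise(judgments, src, dst, score):
    """Return a copy of the judgment table with a single pairwise cell changed."""
    updated = copy.deepcopy(judgments)
    updated.setdefault(src, {})[dst] = score
    return updated


if __name__ == "__main__":
    for scenario in consistent_scenarios(DESCRIPTORS, JUDGMENTS):
        print(scenario)
    # Piecemeal revision: one expert raises a single cell from 2 to 3.
    revised = revise(JUDGMENTS, ("population", "high"), ("energy_use", "high"), 3)
    print("after revision:")
    for scenario in consistent_scenarios(DESCRIPTORS, revised):
        print(scenario)
```

With these invented scores, three of the eight possible combinations pass the check. Raising the single cell for high population's effect on high energy use from 2 to 3 leaves every other judgment untouched and removes one scenario (high population, fast technology, low energy use). The sketch omits refinements a full CIB study might use, such as tolerating small inconsistencies.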

At this point we note a significant virtue of CIB methods that Intuitive Logics methods lack. Besides being open to public inspection, the pairwise expert judgments that feed CIB analyses are easy to challenge and revise at any time. Any pairwise judgment recorded for a CIB analysis can be updated piecemeal as new information or analyses develop, whereas revising an Intuitive Logics scenario would require reconvening the entire expert panel. This makes CIB methods more flexible and responsive to improvements in both data and theory. The openness of the judgments, and the ease of revising them, help establish the public objectivity (1) of the CIB method while also strengthening its scientific credibility and power through its ability to incorporate new scientific evidence. This ease of revision also enhances structural objectivity (7). The community’s responsiveness to criticism plays the vital role in this form of objectivity, and the CIB method facilitates exactly the updating and criticism that objectivity (7) requires (Longino 1990).

Procedural objectivity (6) also makes CIB more objective under meaning (2), detached, and under meaning (3), unbiased. The method mutes an expert’s conscious attachment or unconscious cognitive bias toward a given result or variable value by eliciting that expert’s opinion only about pairwise correlations between individual variables, not about trends or sweeping correlations among interdisciplinary collections of variables. This procedural objectivity bolsters the detached and unbiased meanings of objectivity in two ways. First, an expert’s possibly biased opinions about the direction in which particular factors will push the overall scenarios are neither surveyed nor counted; and if untoward biases do enter, they are visible to others and can be modified. Second, requiring that the judgments used to arrive at full scenarios be recorded pairwise is a disaggregation technique, and disaggregation has been shown to improve the calibration of judgments about quantities unknown to participants in psychological experiments but known in reality, such as “How many packs of Polaroid color film were used in the USA in 1970?” (Armstrong 1978 cited in Morgan and Keith 2008; MacGregor and Armstrong 1994 cited in Morgan and Keith 2008). The implication of these studies is that for judgments of large, unknown quantities with high uncertainty (and socioeconomic scenarios do include judgments about ranges and rates of change for future, unknown quantities spanning the globe, large continental regions, and sometimes individual countries), disaggregating judgments corrects for more of the individual cognitive biases discussed in Sect. 3.3 than eliciting such judgments holistically from individuals does.

Intuitive Logics methodologies often elicit unaided or minimally aided (and always aggregate or holistic) judgments, a situation that may even be seen to encourage biases. With CIB, the opposite holds. Because each expert’s judgments must be recorded in individual judgment cells, unconscious cognitive bias is counteracted through disaggregation. In addition, because the judgments are displayed publicly cell by cell, personal and theoretical biases become more obvious. Thus, untoward biases, whether conscious or unconscious, can be revealed and managed by recording disaggregated judgments and comparing them with other experts’ judgments. Under Intuitive Logics methods, such biases are simply incorporated into the resulting scenarios, with no chance for piecemeal revision or improvement. Through procedural objectivity (6), the CIB approach thus leads to more detached and less biased scenarios, that is, to scenarios that are more ‘objective’ in senses (2) and (3).

Despite the clear weaknesses of Intuitive Logics with respect to meanings (1) public, (2) detached, (3) unbiased, and (6) procedurally objective, its proponents may claim superiority with respect to meaning (7), interactive or structural objectivity, because it invites a variety of experts to build scenarios together as a group. As mentioned above, the details of group membership and interaction are crucial for understanding interactive or structural objectivity (7). For example, how diverse should the group be, and with what expertise? Both Intuitive Logics and CIB face the worry, “Whose judgments are behind the scenarios?” Both methods also require judgments from a variety of disciplines. A major difference between them is that CIB makes these judgments objective (1), publicly accessible and updatable piecemeal, as just discussed, whereas Intuitive Logics masks or precludes this by design. Claims of structural objectivity (7) are thus more compelling for CIB because of its public accessibility (1).

A further problem for any claim that Intuitive Logics achieves structural objectivity (7) is that we have abundant reason to expect the ‘groupthink’ biases discussed in Sect. 3.3 to arise in Intuitive Logics scenario building. This is because Intuitive Logics, unlike CIB, requires direct, face-to-face interaction among a small group of experts (Janis 1972).

Solomon’s analysis of why the groupthink biases discussed in Sect. 3.3 lead results astray is illuminating. She first summarizes Surowiecki’s account of three important conditions for a group to make an epistemically good aggregate judgment: independence, diversity, and decentralization. ‘Independence’ means that each person makes a judgment on his or her own; ‘diversity’ requires that the individuals making the judgments be sufficiently diverse in both knowledge and perspective, to a degree that varies case by case. Finally, ‘decentralization’ means that the actual process of aggregating information treats each person’s judgment equally: no expert or authority is weighted more heavily than the others.

Solomon then analyzes why these conditions produce epistemically superior outcomes. Significantly, she notes that the pressures typical of group settings, reviewed above, together with the salience of vocal group members, can lead to the suppression of important information. Consider, she says, that aggregated individual judgments are often the best predictors, and that such aggregates include all the opinions of the individuals in the group, in their full diversity (2006, pp. 35–36). Such individual opinions “are often based on particular pieces of information that may not be generally known,” she writes (2006, p. 36). When these individuals are overruled or suppressed by groupthink, Solomon argues, that information is lost to the group. In just this way, simple aggregation preserves information that group interaction loses, which is why the interacting group becomes ‘less intelligent.’ Solomon sums up: “Dissent (i.e., different assessments by different individuals) is valuable because it preserves and makes use of all the information available to the community” (2006, pp. 36–37).
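Solomon’s point about aggregation can be illustrated with a toy numeric sketch. The group members, their estimates, and the helper names (`equal_weight_aggregate`, `defer_to`, `influence`) are hypothetical, and the sketch does not model deliberation itself. It contrasts equal-weight pooling, which satisfies the decentralization condition as described above, with a group that simply adopts its most vocal member’s estimate, and it measures how far the group’s output moves when one member’s estimate shifts: a rough proxy for whether that member’s information reaches the result.

```python
"""Equal-weight (decentralized) aggregation versus deference (illustrative sketch).

The members and their estimates are hypothetical.
"""
from functools import partial
from statistics import mean


def equal_weight_aggregate(estimates):
    """Decentralized aggregation: each member's independent estimate counts equally."""
    if not estimates:
        raise ValueError("at least one estimate is required")
    return mean(estimates.values())


def defer_to(estimates, member):
    """Contrast case: the group adopts one salient member's view; the rest is discarded."""
    return estimates[member]


def influence(aggregator, estimates, member, delta=1.0):
    """How much the group's output moves when one member's estimate shifts by delta."""
    shifted = dict(estimates)
    shifted[member] += delta
    return aggregator(shifted) - aggregator(estimates)


if __name__ == "__main__":
    # Hypothetical independent estimates of some uncertain quantity.
    estimates = {"A": 2.0, "B": 3.0, "C": 4.0, "D": 7.0}
    deferred = partial(defer_to, member="D")  # D plays the most vocal member
    for name in estimates:
        print(name,
              influence(equal_weight_aggregate, estimates, name),
              influence(deferred, estimates, name))
```

Shifting any one member’s estimate by 1.0 moves the pooled estimate by 0.25, whereas the deferred outcome moves only when the shifted member is the one deferred to; under deference, the other members’ information never reaches the result.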

Now consider this mechanism of aggregate knowledge production with regard to the Intuitive Logics and CIB methods. Recall Surowiecki’s three key requirements for epistemically superior outcomes: independence, diversity, and decentralization. Our concern is with the disappearance or neglect, during analysis and deliberation, of what we can call ‘marginalized facts or judgments,’ or ‘marginalized information’ for short: facts known by members of the group who are discouraged, for whatever reason, from voicing their knowledge by the mechanisms listed above. This concern would apply to nearly all Intuitive Logics scenario-building methods.