Abstract

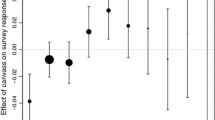

We demonstrate the centrality of high quality personal interactions for successfully overcoming the collective action problem of voter mobilization, and highlight the need for attention to treatment quality before making substantive inferences from field experiments. We exploit natural variation in the quality of voter mobilization phone calls across call centers to examine how call quality affects voter mobilization in a large-scale field experiment conducted during the 2010 Election. High quality calls (from call centers specializing in calling related to politics) produced significant increases in turnout. In contrast, low quality calls (from multi-purpose commercial call centers) failed to increase turnout. Furthermore, we offer caution about using higher contact rates as an indication of delivery quality. Our treatment conditions with higher contact rates had no impact on turnout, suggesting an unfavorable trade-off between quantity of contacts and call quality.

Notes

The house effects literature largely focuses on how the training of interviewers influences how they interact with respondents, and what influence that has on the data collected (Leahey 2008; Smith 1978, 1982; Viterna and Maynard 2002). For example, highly trained and well-monitored interviewers are typically given more latitude to engage the respondent in a more conversational tone, and to act extemporaneously through the use of probes and feedback to encourage the respondent. This approach can increase the quality of the data collected by reducing the amount of missing data due to breakoffs, refusals, and “do not know” responses. Commercial polling firms tend to give less latitude to interviewers, and have more standardized procedures, than academic polling centers.

The states were Florida, Iowa, Illinois, Maine, Michigan, Minnesota, New Mexico, New York, Ohio, Pennsylvania, and South Carolina.

Our partner organization is a not-for-profit non-partisan 501(c)3 charitable organization whose mission includes increasing voter participation. The name of the organization is withheld in accordance with our partnership agreement. The partnership agreement specified unrestricted publication rights using the data from this experiment, thus avoiding the potential for selection bias in reported results when organizations control the release of information (Nickerson 2011).

The results of any experiment are necessarily specific to the context in which they are conducted. Conducting this experiment in partnership with a civic organization makes it more realistic, but means our subjects are not fully representative of all registered voters. That said, the experiment contains a broad cross-section of the electorate across a diverse set of states (see Table S1 in the Supplemental Materials available online).

Due to the capacity of the call center in Treatment A, slightly fewer subjects were assigned to that condition. Consequently, more subjects were assigned to the expected high quality calls in Treatment B.

Survey research call centers might be a fifth operating model. However, using survey research call centers in this experiment was not possible due to capacity and cost constraints. The higher costs suggest that they might deliver even higher quality calls, although cost is not a guarantee of quality.

Nickerson (2007) expresses similar expectations about the quality of phone calls made by fundraising call centers.

Voters who did not appear on the post-election voter rolls were coded as non-voters. We cannot exclude voters who drop from the voter rolls, because the administrative process for removing a record from the voter rolls is conditional on non-voting under the National Voter Registration Act of 1993. If the treatment increases turnout, it makes voters more likely to remain on the rolls. Thus, excluding subjects who drop from the rolls, in both the treatment and control groups, would bias the estimate of the treatment effect.
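The logic of this coding decision can be illustrated with a small arithmetic sketch. All numbers below are hypothetical and are not drawn from the experiment, and the function names are ours. The sketch assumes equal-sized treatment and control groups and assumes that the same share of non-voters is purged from the rolls in each group.

```python
def turnout(voters, nonvoters):
    """Turnout rate among the records that remain in the file."""
    return voters / (voters + nonvoters)


def effect_estimates(n, control_votes, treat_votes, purge_share):
    """Return (full-sample estimate, estimate after dropping purged records).

    n             -- subjects per group (hypothetical)
    control_votes -- voters in the control group (hypothetical)
    treat_votes   -- voters in the treatment group (hypothetical)
    purge_share   -- share of non-voters removed from the rolls (hypothetical)
    """
    # Coding purged records as non-voters keeps every assigned subject.
    full = treat_votes / n - control_votes / n

    # Dropping purged records removes only non-voters, and the treatment
    # group has fewer non-voters to remove if the treatment raised turnout.
    control_nonvoters = (n - control_votes) * (1 - purge_share)
    treat_nonvoters = (n - treat_votes) * (1 - purge_share)
    trimmed = turnout(treat_votes, treat_nonvoters) - turnout(
        control_votes, control_nonvoters
    )
    return full, trimmed


full, trimmed = effect_estimates(
    n=1000, control_votes=400, treat_votes=420, purge_share=0.10
)
print(f"full sample: {full:.4f}, purged records dropped: {trimmed:.4f}")
```

In this illustration the estimate computed after dropping purged records no longer matches the full-sample difference in turnout, which is why purged records are retained and coded as non-voters.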

The ATE (and CACE) estimates use a fixed effects estimator to account for the stratification of the random assignment detailed in the Supplemental Materials online.

The CACE reflects the influence of the treatment among those who are successfully treated (sometimes referred to as a “treatment on treated effect” or “average treatment among the treated effect”). The CACE is the ATE divided by the response rate. In regression analyses, the CACE is estimated using random assignment to the treatment conditions as instruments for successful delivery of the treatment in instrumental variable regression (Gerber and Green 2000; Angrist et al. 1996).
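As a minimal sketch of the ratio described in this note, the function below divides a difference in turnout rates by the share of the treatment group that was successfully reached. The function name and all input values are hypothetical and are not results from the experiment. The sketch assumes that no one in the control group received a call, and it ignores the stratification fixed effects and standard errors that the instrumental variable regression would provide.

```python
def cace_from_ate(turnout_treatment, turnout_control, contact_rate):
    """Ratio estimate of the CACE: ATE divided by the contact rate.

    turnout_treatment -- turnout rate among all subjects assigned to treatment
    turnout_control   -- turnout rate among all subjects assigned to control
    contact_rate      -- share of the treatment group successfully contacted
    """
    if not 0 < contact_rate <= 1:
        raise ValueError("contact_rate must be in (0, 1]")
    ate = turnout_treatment - turnout_control
    return ate / contact_rate


# Hypothetical inputs: a 1.2 point ATE with 30% of the treatment group reached.
cace = cace_from_ate(turnout_treatment=0.412, turnout_control=0.400, contact_rate=0.30)
print(f"CACE: {cace * 100:.1f} percentage points")
```

Because the contact rate is at most one, the CACE is at least as large in magnitude as the ATE. This is also why a condition with a higher contact rate can show a smaller CACE for the same ATE.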

Measuring cooperation with later items in the call script leads to very similar conclusions. Each call center used slightly different codes for call dispositions, so minor measurement error may be introduced by these coding differences.

Among many potential sources of heterogeneity, voter mobilization treatments are more likely to be attenuated by the mobilization efforts of other groups in the 2010 mid-term general election than in the low salience elections in which many field experiments are conducted. We also refrain from using cost per net vote as a basis of comparison, because the calls in the experiment received discounts, based on the number of calls and our partner organization’s status as a major (potential) client, that would bias this comparison.

Using a slightly different set of 10 field experiments on voter mobilization calls from commercial call centers, Ha and Karlan’s (2009) meta-analysis calculates a 0.9 percentage point weighted mean CACE. Substitution of this meta-analysis estimate for the Green and Gerber (2008) meta-analysis leads to similar substantive conclusions.

References

American Association for Public Opinion Research (AAPOR). (2011). Standard definitions: Final dispositions of case codes and outcome rates for surveys (7th ed.). Washington: AAPOR.

Angrist, J. D., Imbens, G. W., & Rubin, D. B. (1996). Identification of causal effects using instrumental variables. Journal of the American Statistical Association, 91(434), 444–455.

Atkeson, L., Adams, A., Bryant, L., Zilberman, L., & Saunders, K. (2011). Considering mixed mode surveys for questions in political behavior: Using the internet and mail to get quality data at reasonable costs. Political Behavior, 33, 161–178.

Bedolla, L. G., & Michelson, M. R. (2012). Mobilizing inclusion: Transforming the electorate through get-out-the-vote campaigns. New Haven: Yale University Press.

Dahl, R. A. (1956). A preface to democratic theory. Chicago: University of Chicago Press.

Dale, A., & Strauss, A. (2007). Don’t forget to vote: Text message reminders as a mobilization tool. American Journal of Political Science, 53(4), 787–804.

Gerber, A. S., & Green, D. P. (2000). The effects of personal canvassing, telephone calls, and direct mail on voter turnout: A field experiment. American Political Science Review, 94, 653–664.

Gerber, A. S., & Green, D. P. (2001). Do phone calls increase voter turnout? A field experiment. Public Opinion Quarterly, 65(1), 75–85.

Gerber, A. S., & Green, D. P. (2005a). Correction to Gerber and Green (2000), replication of disputed findings, and reply to Imai. American Political Science Review, 99(2), 301–313.

Gerber, A. S., & Green, D. P. (2005b). Do phone calls increase voter turnout? An update. Annals of the American Academy of Political and Social Science, 601(1), 142–154.

Gerber, A. S., & Rogers, T. (2009). Descriptive social norms and motivation to vote: Everybody’s voting and so should you. Journal of Politics, 71(1), 178–191.

Gerring, J. (2001). Social science methodology: A criterial framework. New York: Cambridge University Press.

Green, D. P., & Gerber, A. S. (2008). Get out the vote! (2nd ed.). Washington: Brookings.

Ha, S. E., & Karlan, D. S. (2009). Get-out-the-vote phone calls: Does quality matter? American Politics Research, 37(2), 353–369.

Keeter, S., Kennedy, C., Dimock, M., Best, J., & Craighill, P. (2006). Gauging the impact of growing nonresponse on estimates from a national RDD telephone survey. Public Opinion Quarterly, 70(5), 759–779.

Klofstad, C. A., McDermott, R., & Hatemi, P. K. (2011). Do bedroom eyes wear political glasses? The role of politics in human mate attraction. Evolution and Human Behavior, 33(2), 100–108.

Leahey, E. (2008). Methodological memes and mores: Toward a sociology of social research. Annual Review of Sociology, 34, 33–53.

Malhotra, N., Michelson, M. R., Rogers, T., & Valenzuela, A. A. (2011). Text messages as mobilization tools: The conditional effect of habitual voting and election salience. American Politics Research, 39(4), 664–681.

Mann, C. B. (2011). Preventing ballot roll-off: A multi-state field experiment addressing an overlooked deficit in voting participation. New Orleans: Annual Meeting of the Southern Political Science Association.

Michelson, M. R., Bedolla, L. G., & McConnell, M. A. (2009). Heeding the call: The effect of targeted two-round phone banks on voter turnout. Journal of Politics, 71(4), 1549–1563.

Nickerson, D. W. (2006). Volunteer phone calls can increase turnout: Evidence from eight field experiments. American Politics Research, 34(3), 271–292.

Nickerson, D. W. (2007). Quality is job one: Professional and volunteer voter mobilization calls. American Journal of Political Science, 51(2), 269–282.

Nickerson, D. W. (2008). Is voting contagious? Evidence from two field experiments. American Political Science Review, 102(1), 49–57.

Nickerson, D. W. (2011). When the client owns the data. The experimental political scientist: Newsletter of the APSA experimental section, 2(2), 5–6.

Nickerson, D. W., & Rogers, T. (2010). Do you have a voting plan? Implementation intentions, voter turnout, and organic plan making. Psychological Science, 21(2), 194–199.

Olson, M. (1965). The logic of collective action: Public goods and the theory of groups. Cambridge: Harvard University Press.

Olson, K., & Bilgen, I. (2011). The role of interviewer experience on acquiescence. Public Opinion Quarterly, 75(1), 99–114.

Panagopoulos, C. (2011). Thank you for voting: Gratitude expression and voter mobilization. Journal of Politics, 73(3), 707–717.

Porter, T. (1995). Trust in numbers. Princeton: Princeton University Press.

Sinclair, B., McConnell, M. A., & Green, D. P. (2012). Detecting spillover effects: Design and analysis of multi-level experiments. American Journal of Political Science, 56(4), 1055–1069.

Smith, T. W. (1978). In search of house effects: A comparison of responses to various questions by different survey organizations. Public Opinion Quarterly, 42(4), 443–463.

Smith, T. W. (1982). House effects and the reproducibility of survey measurements: A comparison of the 1980 GSS and the 1980 American national election study. Public Opinion Quarterly, 46, 54–68.

Viterna, J. S., & Maynard, D. W. (2002). How uniform is standardization? Variation within and across survey research centers regarding protocols for interviewing. In D. W. Maynard, H. Houtkoop-Steenstra, N. C. Schaeffer, & J. van der Zouwen (Eds.), Standardization and tacit knowledge: Interaction and practice in the survey interview. New York: Wiley.

Weisberg, H. F. (2005). The total survey error approach. Chicago: University of Chicago Press.

Acknowledgments

We thank our partner organization for the support that made this research possible. We thank the editors, anonymous reviewers, Stephen Ansolabehere, Donald Green, John Love, Frank Sansom, Brian Shaffner, and the participants in the Political Science Faculty Colloquium at the University of Miami for their helpful comments. An earlier version of this paper was presented at the 2012 Midwest Political Science Association Conference. This research was conducted under University of Miami Human Subjects Research Office Protocol #20110124. Replication data is available at http://www.sites.google.com/site/christopherbmann. All errors are the responsibility of the authors.

Cite this article

Mann, C.B., Klofstad, C.A. The Role of Call Quality in Voter Mobilization: Implications for Electoral Outcomes and Experimental Design. Polit Behav 37, 135–154 (2015). https://doi.org/10.1007/s11109-013-9264-y

DOI: https://doi.org/10.1007/s11109-013-9264-y