Abstract

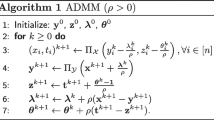

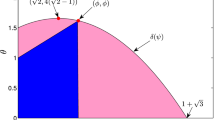

In this article, we propose an algorithm, NESTA-LASSO, for the LASSO problem, i.e., an underdetermined linear least-squares problem with a 1-norm constraint on the solution. We prove that, under the restricted isometry property (RIP) and a sparsity condition on the solution, NESTA-LASSO is guaranteed to be almost always locally linearly convergent. As in the case of the algorithm NESTA, proposed by Becker, Bobin, and Candès, we rely on Nesterov’s accelerated proximal gradient method, which takes \(O(\sqrt{1/\varepsilon})\) iterations to come within \(\varepsilon > 0\) of the optimal value. We introduce a modification to Nesterov’s method that regularly updates the prox-center in a provably optimal manner; the aforementioned linear convergence is due in part to this modification. In the second part of this article, we attempt to solve the basis pursuit denoising (BPDN) problem (i.e., approximating the minimum 1-norm solution to an underdetermined least-squares problem) by using NESTA-LASSO in conjunction with the Pareto root-finding method employed by van den Berg and Friedlander in their SPGL1 solver. The resulting algorithm is called ParNes. We provide numerical evidence to show that it is comparable to currently available solvers.
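To make the problem setting concrete, the following is a minimal sketch of an accelerated projected-gradient iteration for the 1-norm-constrained least-squares problem, \(\min_x \tfrac12\|Ax-b\|_2^2\) subject to \(\|x\|_1 \le \tau\). It is not the algorithm analyzed in the article: it uses the standard momentum sequence from the fast iterative shrinkage-thresholding literature and omits the prox-center updates described above. The function names `project_l1_ball` and `lasso_apg`, the step-size bound, and the iteration count are all assumptions chosen for illustration.

```python
import math


def project_l1_ball(v, tau):
    """Euclidean projection of v onto {x : ||x||_1 <= tau}, with tau > 0.

    Sort-based method: find the soft-threshold level theta such that the
    thresholded vector has 1-norm exactly tau.
    """
    if sum(abs(t) for t in v) <= tau:
        return list(v)  # already feasible
    u = sorted((abs(t) for t in v), reverse=True)
    cumsum, theta = 0.0, 0.0
    for j, uj in enumerate(u, start=1):
        cumsum += uj
        t = (cumsum - tau) / j
        if uj > t:
            theta = t  # the last index satisfying the test gives the threshold
    return [math.copysign(max(abs(t) - theta, 0.0), t) for t in v]


def matvec(A, x):
    """Compute A x for a dense matrix stored as a list of rows."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def rmatvec(A, r):
    """Compute A^T r for a dense matrix stored as a list of rows."""
    return [sum(A[i][j] * r[i] for i in range(len(A))) for j in range(len(A[0]))]


def lasso_apg(A, b, tau, iters=500):
    """Accelerated projected gradient for min 0.5*||Ax - b||^2, ||x||_1 <= tau.

    Illustrative sketch only (assumed defaults, no stopping test).
    """
    # The squared Frobenius norm is a safe upper bound on the Lipschitz
    # constant of the gradient (the largest eigenvalue of A^T A).
    L = sum(a * a for row in A for a in row)
    x = [0.0] * len(A[0])
    y, t = list(x), 1.0
    for _ in range(iters):
        residual = [ri - bi for ri, bi in zip(matvec(A, y), b)]
        grad = rmatvec(A, residual)
        # Gradient step from the extrapolated point, then project.
        x_new = project_l1_ball([yi - gi / L for yi, gi in zip(y, grad)], tau)
        # Momentum sequence giving the O(1/k^2) rate for the objective gap.
        t_new = (1.0 + math.sqrt(1.0 + 4.0 * t * t)) / 2.0
        beta = (t - 1.0) / t_new
        y = [xn + beta * (xn - xo) for xn, xo in zip(x_new, x)]
        x, t = x_new, t_new
    return x


# Tiny underdetermined example: 2 equations, 3 unknowns, 1-sparse solution.
A_demo = [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]
b_demo = [1.0, 1.0]
x_demo = lasso_apg(A_demo, b_demo, tau=1.0)
```

In the small example, the only point of the 1-norm ball of radius 1 that satisfies \(Ax = b\) exactly is \(x = (0, 0, 1)\), so the iterates should approach that sparse vector. A Pareto root-finding outer loop of the kind used for BPDN would call such a solver repeatedly while adjusting \(\tau\); that outer loop is not sketched here.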

References

Afonso, M., Bioucas-Dias, J., Figueiredo, M.: Fast image recovery using variable splitting and constrained optimization. IEEE Trans. Image Process. 19(9), 2345–2356 (2010)

Afonso, M., Bioucas-Dias, J., Figueiredo, M.: Fast frame-based image deconvolution using variable splitting and constrained optimization. In: IEEE/SP 15th Workshop on Statistical Signal Processing, 2009. SSP ’09, pp. 109–112 (2009)

Barzilai, J., Borwein, J.: Two point step size gradient method. IMA J. Numer. Anal. 8(1), 141–148 (1988)

Beck, A., Teboulle, M.: Fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imag. Sci. 2(1), 183–202 (2009)

Becker, S., Bobin, J., Candès, E. J.: nesta: a fast and accurate first-order method for sparse recovery. SIAM J. Imag. Sci. 4(1), 1–39 (2011)

Bertsekas, D.P.: Nonlinear Programming. Athena Scientific, Belmont, MA (1999)

Birgin, E., Martínez, J., Raydan, M.: Nonmonotone spectral projected-gradient methods on convex sets. SIAM J. Optim. 10(4), 1196–1211 (2000)

Bobin, J., Starck, J.-L., Ottensamer, R.: Compressed sensing in astronomy. IEEE J. Sel. Top. Signal Process. 2(5), 718–726 (2008)

Candès, E.J.: The restricted isometry property and its implications for compressed sensing. C. R. Math. Acad. Sci. Paris 346(9–10), 589–592 (2008)

Candès, E.J., Tao, T.: The Dantzig selector: statistical estimation when p is much larger than n. Ann. Stat. 35(6), 2313–2351 (2007)

Candès, E.J., Romberg, J., Tao, T.: Stable signal recovery from incomplete and inaccurate measurements. Commun. Pure Appl. Math. 59(8), 1207–1223 (2006)

Chen, S., Donoho, D.L., Saunders, M.: Atomic decomposition by basis pursuit. SIAM J. Sci. Comput. 20(1), 33–61 (1998)

Dembo, R.S., Eisenstat, S.C., Steihaug, T.: Inexact Newton methods. SIAM J. Numer. Anal. 19(2), 400–408 (1982)

Donoho, D.L.: For most large underdetermined systems of linear equations the ℓ1-norm solution is also the sparsest solution. Commun. Pure Appl. Math. 59(6), 797–829 (2006)

Duchi, J., Shalev-Shwartz, S., Singer, Y., Chandra, T.: Efficient projections onto the ℓ1-ball for learning. Proc. Int. Conf. Mach. Learn. (ICML ’08) 25(307), 272–279 (2008)

Efron, B., Hastie, T., Johnstone, I., Tibshirani, R.: Least angle regression. Ann. Stat. 32(2), 407–499 (2004)

Figueiredo, M., Nowak, R., Wright, S.: Gradient projection for sparse reconstruction: application to compressed sensing and other inverse problems. IEEE J. Sel. Top. Signal Process. 1(4), 586–597 (2007)

Figueiredo, M., Nowak, R., Wright, S.: Sparse reconstruction by separable approximation. IEEE Trans. Signal Process. 57(7), 2479–2493 (2009)

Friedman, J., Hastie, T., Höfling, H., Tibshirani, R.: Pathwise coordinate optimization. Ann. Appl. Stat. 1(2), 302–332 (2007)

Fuchs, J.J.: On sparse representations in arbitrary redundant bases. IEEE Trans. Inf. Theory 50(6), 1344 (2004)

Garey, M.R., Johnson, D.S.: Computers and Intractability. A Guide to the Theory of NP-Completeness. W.H. Freeman, New York (1979)

Hale, E.T., Yin, W., Zhang, Y.: A fixed-point continuation method for ℓ1-regularized minimization with applications to compressed sensing. Rice University Technical Report (2007)

Hale, E.T., Yin, W., Zhang, Y.: Fixed-point continuation for ℓ1-minimization: methodology and convergence. SIAM J. Optim. 19(3), 1107–1130 (2008)

Hennenfent, G., Herrmann, F.J.: Sparseness-constrained data continuation with frames: applications to missing traces and aliased signals in 2/3-D. SEG Tech. Program Expanded Abstracts 24(1), 2162–2165 (2005)

Hennenfent, G., Herrmann, F.J.: Simply denoise: wavefield reconstruction via jittered undersampling. Geophysics 73(3), V19–V28 (2008)

Natarajan, B.K.: Sparse approximate solutions to linear systems. SIAM J. Comput. 24(2), 227–234 (1995)

Nesterov, Y.: A method for solving the convex programming problem with convergence rate \(O(1/k^2)\). Dokl. Akad. Nauk SSSR 269(3), 543–547 (1983)

Nesterov, Y.: Smooth minimization of non-smooth functions. Math. Program. 103(1), 127–152 (2005)

Nesterov, Y.: Gradient methods for minimizing composite objective function. CORE Discussion Paper 2007/76 (2007)

Osborne, M.R., Presnell, B., Turlach, B.A.: On the lasso and its dual. J. Comput. Graph. Stat. 9(2), 319–337 (2000)

Osborne, M.R., Presnell, B., Turlach, B.A.: A new approach to variable selection in least squares problems. IMA J. Numer. Anal. 20(3), 389–403 (2000)

Ponomarev, S.P.: Submersions and preimages of sets of measure zero. Sib. Math. J. 28(1), 153–163 (1987)

Romberg, J.: Imaging via compressive sensing. IEEE Trans. Signal Process. 25(2), 14–20 (2008)

Tibshirani, R.: Regression shrinkage and selection via the lasso. J. R. Stat. Soc., Ser. B Stat. Methodol. 58(1), 267–288 (1996)

Tropp, J.A.: Just relax: convex programming methods for identifying sparse signals in noise. IEEE Trans. Inf. Theory 52(3), 1030–1051 (2006)

Tseng, P.: On accelerated proximal gradient methods for convex-concave optimization. Preprint (2008)

van den Berg, E., Friedlander, M.P.: Probing the Pareto frontier for basis pursuit solutions. SIAM J. Sci. Comput. 31(2), 890–912 (2008/09)

van den Berg, E., Friedlander, M.P., Hennenfent, G., Herrmann, F.J., Saab, R., Yilmaz, Ö.: Algorithm 890: sparco: a testing framework for sparse reconstruction. ACM Trans. Math. Softw. 35(4), 16 (2009)

Wen, Z., Yin, W., Goldfarb, D., Zhang, Y.: A fast algorithm for sparse reconstruction based on shrinkage, subspace optimization and continuation. SIAM J. Sci. Comput. 32(4), 1832 (2010)

Yin, W., Osher, S., Goldfarb, D., Darbon, J.: Bregman iterative algorithms for ℓ1-minimization with applications to compressed sensing. SIAM J. Imag. Sci. 1(1), 143–168 (2008)

Yu, Y.L.: Nesterov’s optimal gradient method. LLL, Jul. 30 2009 (2009). http://webdocs.cs.ualberta.ca/~yaoliang/Non-smooth%20Optimization.pdf

Cite this article

Gu, M., Lim, LH. & Wu, C.J. ParNes: a rapidly convergent algorithm for accurate recovery of sparse and approximately sparse signals. Numer Algor 64, 321–347 (2013). https://doi.org/10.1007/s11075-012-9668-5

DOI: https://doi.org/10.1007/s11075-012-9668-5