Abstract

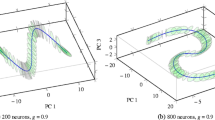

Cortical circuitry shows an abundance of recurrent connections. A widely used model that relies on recurrence is the ring attractor network, which has been used to describe phenomena as diverse as working memory, visual processing and head direction cells. Commonly, the synapses in these models are static. Here, we examine the behaviour of ring attractor networks when the recurrent connections are subject to short-term synaptic depression, as observed in many brain regions. We find that in the presence of a uniform background current, the network activity can be in one of three states: a stationary attractor state, a uniform state, or a rotating attractor state. The rotation speed can be adjusted over a large range by changing the background current, opening the possibility of using the network as a variable-frequency oscillator or pattern generator. Finally, using simulations, we extend the network to two-dimensional fields and find a rich range of possible behaviours.

References

Abbott, L. F., Varela, J. A., Sen, K., & Nelson, S. B. (1997). Synaptic depression and cortical gain control. Science, 275(5297), 220–224.

Barbieri, F., & Brunel, N. (2007). Irregular persistent activity induced by synaptic excitatory feedback. Frontiers in Computer Neuroscience, 1, 5. doi:10.3389/neuro.10.005.2007.

Ben-Yishai, R., Bar-Or, R. L., & Sompolinsky, H. (1995). Theory of orientation tuning in visual cortex. Proceedings of the National Academy of Sciences of the United States of America, 92, 3844–3848.

Ben-Yishai, R., Hansel, D., & Sompolinsky, H. (1997). Traveling waves and the processing of weakly tuned inputs in a cortical network model. Journal of Computational Neuroscience, 4, 57–77.

Compte, A., Brunel, N., Goldman-Rakic, P. S., & Wang, X. J. (2000). Synaptic mechanisms and network dynamics underlying spatial working memory in a cortical network model. Cerebral Cortex, 10(9), 910–923.

Dayan, P., & Abbott, L. F. (2001). Theoretical neuroscience: Computational and mathematical modeling of neural systems. Cambridge: MIT.

Ferster, D., & Miller, K. D. (2000). Neural mechanisms of orientation selectivity in the visual cortex. Annual Review of Neuroscience, 23, 441–471. doi:10.1146/annurev.neuro.23.1.441.

Goldberg, J. A., Rokni, U., & Sompolinsky, H. (2004). Patterns of ongoing activity and the functional architecture of the primary visual cortex. Neuron, 42(3), 489–500.

Hansel, D., & Sompolinsky, H. (1998). Modeling feature selectivity in local cortical circuits. In C. Koch, & I. Segev (Eds.), Methods in neuronal modeling: From ions to networks (chap. 13, pp. 499–567). Cambridge: MIT.

Harris, R. E., Coulombe, M. G., & Feller, M. B. (2002). Dissociated retinal neurons form periodically active synaptic circuits. Journal of Neurophysiology, 88(1), 188–195. doi:10.1152/jn.00722.2001.

Kenet, T., Bibitchkov, D., Tsodyks, M., Grinvald, A., & Arieli, A. (2003). Spontaneously emerging cortical representations of visual attributes. Nature, 425(6961), 954–956. doi:10.1038/nature02078.

Markram, H., & Tsodyks, M. (1996). Redistribution of synaptic efficacy between neocortical pyramidal neurons. Nature, 382(6594), 807–810. doi:10.1038/382807a0.

McNaughton, B. L., Battaglia, F. P., Jensen, O., Moser, E. I., & Moser, M. B. (2006). Path integration and the neural basis of the 'cognitive map'. Nature Reviews Neuroscience, 7(8), 663–678. doi:10.1038/nrn1932.

Owen, M. R., Laing, C. R., & Coombes, S. (2007). Bumps and rings in a two-dimensional neural field: Splitting and rotational instabilities. New Journal of Physics, 9, 378.

Puccini, G. D., Sanchez-Vives, M. V., & Compte, A. (2007). Integrated mechanisms of anticipation and rate-of-change computations in cortical circuits. PLoS Computational Biology, 3(5), e82. doi:10.1371/journal.pcbi.0030082.

Samsonovich, A., & McNaughton, B. L. (1997). Path integration and cognitive mapping in a continuous attractor neural network model. Journal of Neuroscience, 17(15), 5900–5920.

Senn, W., Wannier, T., Kleinle, J., Lüscher, H. R., Müller, L., Streit, J., et al. (1998). Pattern generation by two coupled time-discrete neural networks with synaptic depression. Neural Computation, 10(5), 1251–1275.

Somers, D. C., Nelson, S. B., & Sur, M. (1995). An emergent model of orientation selectivity in cat visual cortical simple cells. Journal of Neuroscience, 15(8), 5448–5465.

Thomson, A. M., & Lamy, C. (2007). Functional maps of neocortical local circuitry. Frontiers in Neuroscience, 1(1), 19–42. doi:10.3389/neuro.01.1.1.002.2007.

Tsodyks, M. V., & Markram, H. (1997). The neural code between neocortical pyramidal neurons depends on neurotransmitter release probability. Proceedings of the National Academy of Sciences of the United States of America, 94(2), 719–723.

Tsodyks, M., Pawelzik, K., & Markram, H. (1998). Neural networks with dynamic synapses. Neural Computation, 10(4), 821–835.

van der Meer, M. A. A., Knierim, J. J., Yoganarasimha, D., Wood, E. R., & van Rossum, M. C. W. (2007). Anticipation in the rodent head direction system can be explained by an interaction of head movements and vestibular firing properties. Journal of Neurophysiology, 98(4), 1883–1897. doi:10.1152/jn.00233.2007.

van Rossum, M. C. W., van der Meer, M. A. A., Xiao, D., & Oram, M. W. (2008). Adaptive integration in the visual cortex by depressing recurrent cortical circuits. Neural Computation, 20(7), 1847–1872. doi:10.1162/neco.2008.06-07-546.

Wang, Y., Markram, H., Goodman, P. H., Berger, T. K., Ma, J., & Goldman-Rakic, P. S. (2006). Heterogeneity in the pyramidal network of the medial prefrontal cortex. Nature Neuroscience, 9(4), 534–542. doi:10.1038/nn1670.

Zhang, K. (1996). Representation of spatial orientation by the intrinsic dynamics of the head-direction cell ensemble: A theory. Journal of Neuroscience, 16(6), 2112–2126.

Acknowledgements

LY was funded by the EPSRC through the Neuroinformatics Doctoral Training Centre. MvR was supported by HFSP and the EPSRC. We thank Jesus Cortes, Dmitri Bibitchkov and Mike Oram for discussion.

Additional information

Action Editor: X.-J. Wang

Appendix

In the appendices the details of the stability analysis are presented.

A.1 Stability of the homogeneous solution

We first analyse the broad solution, that is, the solution for which all m(θ,t) are positive and all neurons remain above threshold. Writing the functions in Fourier series form, \(\hat{m}(\theta,t)=\sum_{n}\hat{m}_{n}(t)e^{2in\theta}\), \(\hat{p}(\theta,t)=\sum_{n}\hat{p}_{n}(t)e^{2in\theta}\), and using that \(2\cos(2z)=e^{2iz}+e^{-2iz}\), we obtain for the governing equations, Eqs. (5), the following expansion:

Since the Fourier components are orthogonal, we can compare coefficients to obtain

These equations are used below for the stability analysis. The equations for the steady state solution can be found by setting the derivative to be zero in Eq. (5), yielding

From the Fourier series expansion, Eq. (9), we can see that the only possible solution for M(θ) is of the form α + βcos(2θ) + γsin(2θ). Without loss of generality, we assume \(M(\theta)=M_{0}+M_{1}\cos(2\theta)\), with \(M_{0},M_{1}\ge0\). In order to ensure that the steady state solution is in the broad profile regime, we additionally require \(M(\theta)>0,\,\forall\theta\in[-\frac{\pi}{2},\frac{\pi}{2}]\), so \(M_{1}<M_{0}\). Using numerical simulations we found that inhomogeneous solutions (\(M_{1}>0\)), where they exist, were unstable; their stability is analytically intractable. Here, we only consider the homogeneous case, which is given by

Since we require that \(M_{0}^{\pm}>0\), we have that for supra-threshold input B > 0, only \(M_{0}^{+}\) is a possible homogeneous steady state solution. Unless otherwise noted, \(M_{0}\) always refers to \(M_{0}^{+}\) (the negative root, where needed, is referred to as \(M_{0}^{-}\)). The existence of \(M_{0}^{-}\) as a valid stationary state requires \(J_{0}>1\) and B ≤ 0, although these conditions are not sufficient to ensure the stability of such a state. Finally, note that for zero average synaptic connectivity, \(J_{0}=0\), one has \(M_{0}=B\).
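The displayed steady-state formula is not reproduced in this copy. As a hedged sketch, suppose the steady state of Eq. (5) satisfies \(M_{0}=B+J_{0}P_{0}M_{0}\) with \(P_{0}=1/(1+\tau_{d}UM_{0})\). This form is an assumption, chosen because it reproduces the limit \(M_{0}=B\) at \(J_{0}=0\) and the bound \(M_{0}>-\frac{1}{\tau_{d}U}\) used in this appendix. Under that assumption \(M_{0}\) solves a quadratic, and both roots can be computed as follows (the function name and the parameter values in the usage note are illustrative only):

```python
import math


def homogeneous_rates(B, J0, tau_d, U):
    """Return (M0_plus, M0_minus), the roots of
    tau_d*U*M^2 + (1 - J0 - B*tau_d*U)*M - B = 0.

    ASSUMPTION: the steady state obeys M0 = B + J0*P0*M0 with
    P0 = 1/(1 + tau_d*U*M0); this is a reconstructed form of Eq. (5),
    not a formula copied from the source.
    """
    a = tau_d * U
    b = 1.0 - J0 - B * tau_d * U
    c = -B
    disc = b * b - 4.0 * a * c
    if disc < 0:
        raise ValueError("no real homogeneous steady state for these parameters")
    root = math.sqrt(disc)
    return (-b + root) / (2.0 * a), (-b - root) / (2.0 * a)
```

For B > 0 the product of the roots, \(-B/(\tau_{d}U)\), is negative, so exactly one root is positive, in line with the statement that only \(M_{0}^{+}\) is admissible. For example, `homogeneous_rates(B=2.0, J0=0.0, tau_d=0.5, U=0.2)` gives a positive root equal to B.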

To examine the stability of the steady state solution, we consider small deviations, in Fourier space, of the components described in Eq. (9) away from the fixed point \((M_{0},P_{0})\) from Eq. (11). We write the Fourier components as \(\hat{m}_{n}(t)=m_{n}(t)+M_{n}\) and \(\hat{p}_{n}(t)=p_{n}(t)+P_{n}\), where \(M_{0}\), \(P_{0}\) are the steady state values, and \(M_{n}=P_{n}=0,\,\forall n\ne0\). Linearising the equations about the steady state solution and neglecting higher order terms, we obtain

This is a particularly advantageous form, since for any n the time evolution of each equation pair (\(m_{n}(t)\) and \(p_{n}(t)\)) decouples from all other equation pairs. For the steady state solution \((M_{0},P_{0})\) to be stable, small perturbations \(m_{n}\) and \(p_{n}\) must tend to zero as t → ∞ for all n. We consider first the equations for |n| > 1, namely:

Clearly, all small perturbations \(m_{n}\to0\) as t → ∞. This also means that \(p_{n}\to0\) as t → ∞ because \(M_{0}>-\frac{1}{\tau_{d}U}\) and \(P_{0}>0\). Next, we consider the cases where |n| ≤ 1.
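For reference, the linearised mode equations used in this argument can be written out explicitly. The display equations are missing from this copy, so the following is a reconstruction under an assumed form of Eq. (5), namely \(\tau_{0}\,\partial_{t}m=-m+[B+\frac{1}{\pi}\int(J_{0}+J_{2}\cos2(\theta-\theta'))\,p\,m\,d\theta']_{+}\) and \(\partial_{t}p=(1-p)/\tau_{d}-Upm\). The shorthand \(J^{(n)}\) is introduced here for illustration and is not the authors' notation.

```latex
\begin{align}
\tau_{0}\,\frac{dm_{n}}{dt} &= -m_{n} + J^{(n)}\left(P_{0}\,m_{n} + M_{0}\,p_{n}\right),\\
\frac{dp_{n}}{dt} &= -\left(\frac{1}{\tau_{d}} + U M_{0}\right)p_{n} - U P_{0}\,m_{n},
\qquad
J^{(n)} =
\begin{cases}
J_{0}, & n = 0,\\
J_{2}/2, & |n| = 1,\\
0, & |n| > 1.
\end{cases}
\end{align}
```

For |n| > 1 the first equation reduces to \(\tau_{0}\,dm_{n}/dt=-m_{n}\), so \(m_{n}\) decays, and \(p_{n}\) then decays at rate \(1/\tau_{d}+UM_{0}\), which is positive exactly when \(M_{0}>-\frac{1}{\tau_{d}U}\).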

For n = 0, we have the following equation, written in matrix form

The eigenvalues λ of the matrix D determine whether the solution is stable. If both eigenvalues have negative real parts, then the perturbations \(m_{0}\) and \(p_{0}\) die out, i.e. the steady state solution is stable to homogeneous fluctuations in firing rate and release probability. For B > 0, the steady state solution \((M_{0},P_{0})\) is always stable to homogeneous fluctuations in firing rate and release probability, and so does not undergo an amplitude instability.

Next, we consider the stability to perturbations \(m_{1}\) and \(p_{1}\) (the stability to perturbations \(m_{-1}\) and \(p_{-1}\) being identical). We call these perturbations “transverse fluctuations” in firing rate and release probability (Hansel and Sompolinsky 1998), and, following the treatment for homogeneous fluctuations, we need to find the eigenvalues λ of the matrix

This matrix depends on \(J_{0}\) through \(M_{0}\) and \(P_{0}\), so the stability analysis is more involved and cannot be carried out independently of \(J_{0}\). If we define \(\mu=1+\tau_{d}UM_{0}\), then the eigenvalues λ are given by

The largest eigenvalue has a negative real part when one of two conditions is satisfied: either \(J_{2}<2\mu\), or \(2\mu<J_{2}<2\mu^{2}\) and \(\tau_{d}(J_{2}-2\mu)<2\tau_{0}\mu^{2}\). Since we are assuming that \(\frac{\tau_{d}}{\tau_{0}}>1\), this leads to the following conditions for the steady state solution \((M_{0},P_{0})\) being stable to transverse fluctuations in the activity and release probability:

or,

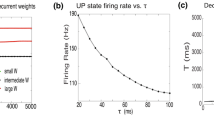

We used these equations to draw the boundary line to the region of stable homogeneous solutions in the phase portrait in Fig. 2.
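The two transverse stability conditions can be cross-checked numerically against the eigenvalues of the 2×2 matrix for the n = ±1 modes. The sketch below assumes a reconstructed form of that matrix (the display is missing from this copy), obtained from depression dynamics of the form dp/dt = (1 − p)/τ_d − Upm and an effective coupling J_2/2 for the first Fourier mode. Function names and parameter values are illustrative.

```python
import numpy as np


def transverse_matrix(M0, J2, tau0, tau_d, U):
    """Assumed linearisation for the n = +/-1 modes about (M0, P0)."""
    mu = 1.0 + tau_d * U * M0   # mu as defined in the text
    P0 = 1.0 / mu               # assumed steady-state release probability
    return np.array([
        [(-1.0 + 0.5 * J2 * P0) / tau0, 0.5 * J2 * M0 / tau0],
        [-U * P0, -mu / tau_d],
    ])


def stable_by_eigenvalues(M0, J2, tau0, tau_d, U):
    """Stable if every eigenvalue has a negative real part."""
    eig = np.linalg.eigvals(transverse_matrix(M0, J2, tau0, tau_d, U))
    return bool(np.max(eig.real) < 0.0)


def stable_by_conditions(M0, J2, tau0, tau_d, U):
    """The two conditions quoted in the text, written in terms of mu."""
    mu = 1.0 + tau_d * U * M0
    if J2 < 2.0 * mu:
        return True
    return bool(J2 < 2.0 * mu**2
                and tau_d * (J2 - 2.0 * mu) < 2.0 * tau0 * mu**2)
```

With M0 = 10, τ_0 = 0.01, τ_d = 0.5 and U = 0.2 (so μ = 2), both functions agree that the uniform state is stable at J_2 = 4.1 and unstable at J_2 = 4.2, where the trace of the matrix changes sign.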

Equations (12) are a good approximation only when terms of size \(O(m_{n}(t)p_{j}(t))\) can be neglected, which requires the rectification to have as little effect as possible. In network simulations, these terms are small when the network operates in the broadly tuned regime, i.e. where the rectification has little effect. Thus, when the real part of the eigenvalues from Eq. (13) is zero, \(|m_{\pm1}|\) and \(|p_{\pm1}|\) remain unchanged, and the rotation speed is given by the imaginary part of Eq. (13).
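As a worked illustration of the last statement, the frequency at the onset of rotation can be written in closed form. The expressions below are a reconstruction, assuming that the transverse matrix has trace T and determinant Δ as given in the first line; these follow from an assumed form of the linearised equations and are consistent with the stability conditions quoted above, but they are not copied from Eq. (13).

```latex
\begin{align}
T &= \frac{1}{\tau_{0}}\left(\frac{J_{2}}{2\mu}-1\right)-\frac{\mu}{\tau_{d}},
\qquad
\Delta = \frac{2\mu^{2}-J_{2}}{2\mu\,\tau_{0}\tau_{d}},
\qquad
\lambda_{\pm} = \frac{T}{2}\pm\sqrt{\frac{T^{2}}{4}-\Delta},\\
T = 0 \;&\Rightarrow\; J_{2}^{c} = 2\mu\left(1+\frac{\tau_{0}\,\mu}{\tau_{d}}\right),
\qquad
\lambda_{\pm} = \pm i\omega,
\qquad
\omega = \sqrt{\Delta}\,\Big|_{J_{2}=J_{2}^{c}} = \sqrt{\frac{U M_{0}}{\tau_{0}}-\frac{\mu}{\tau_{d}^{2}}}.
\end{align}
```

Under these assumptions the marginal mode varies as \(e^{i(2\theta\pm\omega t)}\), so the activity profile would drift in θ at angular speed ω/2. As a numerical check with illustrative values M_0 = 10, τ_0 = 0.01, τ_d = 0.5 and U = 0.2 (μ = 2), one finds \(J_{2}^{c}=4.16\) and \(\omega^{2}=192\) from either expression for ω.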

A.2 Stability of the stationary bump

Another stationary state is the bump state, Fig. 1(B). Following previous treatments (Hansel and Sompolinsky 1998), we define its width \(\theta_{c}\) to be the angle such that M(θ) = 0 for all \(|\theta|>\theta_{c}\) (assuming, without loss of generality, that the profile is centred on \(\theta_{0}=0\)). We can then write the stationary state solution as

where A and \(\theta_{c}\) can be determined numerically. The stability condition of this state is analytically intractable, and so a numerical approach is used. To this end, we discretise the network into n neurons as

Here, we have that \(J_{ij}=\frac{J_{0}}{n}+\frac{J_{2}}{n}\cos\left(\frac{2\pi(i-j)}{n}\right)\), which is equivalent to the continuous formulation, with m(i,t) and p(i,t) defined on ℕ×ℝ. Again, the steady state solution has the following form

where ϕ is the profile’s centre. To examine the stability we consider the largest eigenvalue of the Jacobian of the system, evaluated at the stationary state. The Jacobian matrix K is of size 2n×2n, with matrix elements as follows, for 1 ≤ i,k ≤ n,

where \(\delta_{i,j}\) is the Kronecker delta and H is the Heaviside step function. Unlike the continuous formulation, the discretised stationary state is not fully rotationally symmetric; in particular, the stability of stationary states must be considered for \(\phi\in[0,\frac{\pi}{n}]\). If, for a specific ϕ, all eigenvalues of the Jacobian have negative real parts (i.e. the largest is less than zero), then the corresponding state is stable. The results are used to plot the parameter region where the bump profile is stable in Fig. 2 (green lines).
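The numerical procedure can be sketched as follows. Because the discretised equations and the matrix elements are missing from this copy, the code assumes a discretised model of the form τ_0 dm_i/dt = −m_i + [B + Σ_j J_ij p_j m_j]_+ and dp_i/dt = (1 − p_i)/τ_d − U p_i m_i, and differentiates it directly. Only the coupling \(J_{ij}\) is taken from the text; all function names are hypothetical.

```python
import numpy as np


def coupling(n, J0, J2):
    """J_ij = J0/n + (J2/n) cos(2 pi (i - j) / n), as given in the text."""
    idx = np.arange(n)
    diff = idx[:, None] - idx[None, :]
    return J0 / n + (J2 / n) * np.cos(2.0 * np.pi * diff / n)


def jacobian(m, p, B, J0, J2, tau0, tau_d, U):
    """2n x 2n Jacobian of the ASSUMED discretised dynamics at (m, p)."""
    n = m.size
    J = coupling(n, J0, J2)
    h = B + J @ (p * m)               # total input to each neuron
    gate = (h > 0).astype(float)      # Heaviside factor from rectification
    K = np.zeros((2 * n, 2 * n))
    K[:n, :n] = (-np.eye(n) + gate[:, None] * J * p[None, :]) / tau0
    K[:n, n:] = gate[:, None] * J * m[None, :] / tau0
    K[n:, :n] = -U * np.diag(p)
    K[n:, n:] = -np.diag(1.0 / tau_d + U * m)
    return K


def max_growth_rate(K):
    """Largest real part among the eigenvalues; negative means stable."""
    return float(np.max(np.linalg.eigvals(K).real))
```

To use this for the bump state, one would evaluate `jacobian` at the numerically obtained stationary profile for several centres ϕ in \([0,\frac{\pi}{n}]\) and check the sign of `max_growth_rate`. As a sanity check on the sketch itself, evaluating it at a uniform state reproduces the growth rate expected from the Fourier-mode analysis of the same assumed model.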

A.3 Subtractive depression approximation

At low activity levels, p(θ,t) is always close to one and does not vary greatly in comparison to its mean value. As a result, we can approximate the synaptic depression as removing a fixed amount, rather than a fraction, of the synaptic resource. This “subtractive depression” simplifies the equations. We write the governing equations as follows, with a small value of ε

The steady state solution P(θ) now takes the form P(θ) = 1 − εM(θ). We note that as \(M(\pm\theta_{c})=0\), we have that

We use this to express M′(θ) as

Following the example of Hansel and Sompolinsky (1998), we suppose that the activity and synaptic resource profiles keep their form, but are displaced by some small amounts \(\delta\psi_{m}\) and \(\delta\psi_{P}\) respectively, a so-called transverse perturbation. We write:

We want to consider how the small perturbations evolve over time. Neglecting terms of higher order, we can use Eq. (15) to write, for small \(\delta\psi_{m}(t)\) and \(\delta\psi_{P}(t)\), and for \(|\theta|<\theta_{c}\)

Using P(θ) = 1 − εM(θ), we obtain

where \(M'(\theta)\ne0\), with \(x_{1}(\theta)=\frac{1}{\pi}\int_{-\theta_{c}}^{\theta_{c}}J(\theta-\theta')M'(\theta')d\theta'\), \(x_{2}(\theta)=\frac{1}{\pi}\int_{-\theta_{c}}^{\theta_{c}}J(\theta-\theta')M(\theta')M'(\theta')d\theta'\) and \(J(\theta)=\frac{1}{\pi}(J_{0}+J_{2}\cos(2\theta))\). Using \(M(\theta)=A\left[\cos(2\theta)-\cos(2\theta_{c})\right]_{+}\), for \(|\theta|<\theta_{c}\) we then have

Both \(x_{1}(\theta)\) and \(x_{2}(\theta)\) are proportional to M′(θ), leaving Eq. (17) independent of θ. We can examine the stability to transverse fluctuations of the system by writing \(\frac{d}{dt}\left(\begin{array}{c} \delta\psi_{m}(t)\\ \delta\psi_{P}(t)\end{array}\right)=D\left(\begin{array}{c} \delta\psi_{m}(t)\\ \delta\psi_{P}(t)\end{array}\right)\), where matrix D is evaluated at some \(\theta\ne0\), \(|\theta|<\theta_{c}\), as

The corresponding stability criterion is shown by the black line in Fig. 3(A).

Cite this article

York, L.C., van Rossum, M.C.W. Recurrent networks with short term synaptic depression. J Comput Neurosci 27, 607–620 (2009). https://doi.org/10.1007/s10827-009-0172-4

DOI: https://doi.org/10.1007/s10827-009-0172-4