Abstract

A receptive field constitutes a region in the visual field where a visual cell or a visual operator responds to visual stimuli. This paper presents a theory for what types of receptive field profiles can be regarded as natural for an idealized vision system, given a set of structural requirements on the first stages of visual processing that reflect symmetry properties of the surrounding world. These symmetry properties include (i) covariance properties under scale changes, affine image deformations, and Galilean transformations of space–time as occur for real-world image data as well as specific requirements of (ii) temporal causality implying that the future cannot be accessed and (iii) a time-recursive updating mechanism of a limited temporal buffer of the past as is necessary for a genuine real-time system. Fundamental structural requirements are also imposed to ensure (iv) mutual consistency and a proper handling of internal representations at different spatial and temporal scales. It is shown how a set of families of idealized receptive field profiles can be derived by necessity regarding spatial, spatio-chromatic, and spatio-temporal receptive fields in terms of Gaussian kernels, Gaussian derivatives, or closely related operators. Such image filters have been successfully used as a basis for expressing a large number of visual operations in computer vision, regarding feature detection, feature classification, motion estimation, object recognition, spatio-temporal recognition, and shape estimation. Hence, the associated so-called scale-space theory constitutes a both theoretically well-founded and general framework for expressing visual operations. There are very close similarities between receptive field profiles predicted from this scale-space theory and receptive field profiles found by cell recordings in biological vision. 
Among the family of receptive field profiles derived by necessity from the assumptions, idealized models with very good qualitative agreement are obtained for (i) spatial on-center/off-surround and off-center/on-surround receptive fields in the fovea and the LGN, (ii) simple cells with spatial directional preference in V1, (iii) spatio-chromatic double-opponent neurons in V1, (iv) space–time separable spatio-temporal receptive fields in the LGN and V1, and (v) non-separable space–time tilted receptive fields in V1, all within the same unified theory. In addition, the paper presents a more general framework for relating and interpreting these receptive fields conceptually and possibly predicting new receptive field profiles as well as for pre-wiring covariance under scaling, affine, and Galilean transformations into the representations of visual stimuli. This paper describes the basic structure of the necessity results concerning receptive field profiles regarding the mathematical foundation of the theory and outlines how the proposed theory could be used in further studies and modelling of biological vision. It is also shown how receptive field responses can be interpreted physically, as the superposition of relative variations of surface structure and illumination variations, given a logarithmic brightness scale, and how receptive field measurements will be invariant under multiplicative illumination variations and exposure control mechanisms.


1 Introduction

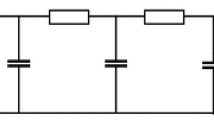

When light reaches a visual sensor such as the retina, the information necessary to infer properties about the surrounding world is not contained in the measurement of image intensity at a single point, but in the relationships between intensity values at different points. A main reason for this is that the incoming light constitutes an indirect source of information, depending on the interaction between geometric and material properties of objects in the surrounding world and on external illumination sources. Another fundamental reason why cues to the surrounding world need to be collected over regions in the visual field, as opposed to at single image points, is that the measurement process itself requires the accumulation of energy over non-infinitesimal support regions over space and time. Such a region in the visual field for which a visual sensor and/or a visual operator responds to visual input, or a visual cell responds to visual stimuli, is naturally referred to as a receptive field (Hubel and Wiesel 1959, 1962) (see Fig. 1).

Fig. 1. A receptive field is a region in the visual field for which a visual sensor/neuron/operator responds to visual stimuli. This figure shows a set of partially overlapping receptive fields over the spatial domain, with all the receptive fields having the same spatial extent. More generally, one can conceive distributions of receptive fields over space or space–time with receptive fields of different sizes, shapes, and orientations in space as well as different directions in space–time, where adjacent receptive fields may also have significantly larger relative overlap than shown in this schematic illustration.

If one considers the theoretical and algorithmic problems of designing a vision system that is going to make use of incoming reflected light to infer properties of the surrounding world, one may ask what types of image operations should be performed on the image data. Would any type of image operation be reasonable? Specifically, regarding the notion of receptive fields, what types of receptive field profiles would be reasonable? Is it possible to derive a theoretical model of how receptive fields “ought to” respond to visual data?

Initially, such a problem might be regarded as intractable unless the question can be further specified. It is, however, possible to study this problem systematically using approaches that have been developed in the area of computer vision known as scale-space theory (Iijima 1962; Witkin 1983; Koenderink 1984; Koenderink and Doorn 1992; Lindeberg 1994a, b, 2008; Sporring et al. 1996; Florack 1997; ter Haar Romeny 2003). A paradigm that has been developed in this field is to impose structural constraints on the first stages of visual processing that reflect symmetry properties of the environment. Interestingly, it turns out to be possible to substantially reduce the class of permissible image operations from such arguments.

The subject of this article is to describe how structural requirements on the first stages of visual processing as formulated in scale-space theory can be used for deriving idealized models of receptive fields and implications of how these theoretical results can be used when modelling biological vision. A main theoretical argument is that idealized models for linear receptive fields can be derived by necessity given a small set of symmetry requirements that reflect properties of the world that one may naturally require an idealized vision system to be adapted to. In this respect, the treatment bears similarities to approaches in theoretical physics, where symmetry properties are often used as main arguments in the formulation of physical theories of the world. The treatment that will follow will be general in the sense that spatial, spatio-chromatic, and spatio-temporal receptive fields are encompassed by the same unified theory.

An underlying motivation for the theory is that due to the properties of the projection of three-dimensional objects to a two-dimensional light sensor (retina), the image data will be subject to basic image transformations in terms of (i) local scaling transformations caused by objects of different sizes and at different distances to the observer, (ii) local affine transformations caused by variations in the viewing direction relative to the object, (iii) local Galilean transformations caused by relative motions between the object and the observer, and (iv) local multiplicative intensity transformations caused by illumination variations (see Fig. 2). If the vision system is to maintain a stable perception of the environment, it is natural to require the first stages of visual processing to be robust to such image variations. Formally, one may require the receptive fields to be covariant under basic image transformations, which means that the receptive fields should be transformed in a well-behaved and well-understood manner under corresponding image transformations (see Fig. 3). Combined with an additional criterion that the receptive field must not create new structures at coarse scales that do not correspond to simplifications of corresponding finer scale structures, we will describe how these requirements together lead to idealized families of receptive fields (Lindeberg 2011) in good agreement with receptive field measurements reported in the literature (Hubel and Wiesel 1959, 1962; DeAngelis et al. 1995; DeAngelis and Anzai 2004; Conway and Livingstone 2006).

Fig. 2. Visual stimuli may vary substantially on the retina due to geometric transformations and lighting variations in the environment. Nevertheless, the brain is able to perceive the world as stable. This figure illustrates examples of natural image transformations corresponding to (left column) variations in scale, (middle column) variations in viewing direction, and (right column) relative motion between objects in the world and the observer. A main subject of this paper is to present a theory for visual receptive fields that makes it possible to match receptive field responses between image data that have been acquired under different imaging conditions, specifically involving these basic types of natural image transformations. To model the influence of natural image transformations on receptive field responses, we first approximate the possibly nonlinear image transformation by a local linear transformation at each image point (the derivative), which for these basic image transformations corresponds to (i) local scaling transformations, (ii) local affine transformations, and (iii) local Galilean transformations. Then, we consider families of receptive fields that have the property that the transformation of any receptive field within the family using a locally linearized image transformation within the group of relevant image transformations is still within the same family of receptive fields. Such receptive field families are referred to as covariant receptive fields. The receptive field family is also said to be closed under the relevant group of image transformations.

Fig. 3. Consider a vision system that is restricted to using rotationally symmetric image operations over the spatial image domain only. If such a vision system observes the same three-dimensional object from two different views, then the backprojections of the receptive fields onto the surface of the object will in general correspond to different regions in physical space over which corresponding information will be weighted differently. If such image measurements were used for deriving correspondences between the two views or for performing object recognition, then there would be a systematic error caused by the mismatch between the backprojections of the receptive fields from the image domain onto the world. By requiring the family of receptive fields to be covariant under local affine image deformations, it is possible to reduce this mismatch, such that the backprojected receptive fields can be made similar when projected onto the tangent plane of the surface by local linearizations of the perspective mapping. Corresponding effects occur when analyzing spatio-temporal image data (video) based on receptive fields that are restricted to being space–time separable only. If an object is observed over time by two cameras having different relative motions between the camera and the object, then the corresponding receptive fields cannot be matched unless the family of receptive fields possesses sufficient covariance properties under local Galilean transformations.

Specifically, explicit functional models will be given of spatial and spatio-temporal response properties of LGN neurons and simple cells in V1, which will be compared to related models in terms of Gabor functions (Marcelja 1980; Jones and Palmer 1987a, b), differences of Gaussians (Rodieck 1965), and Gaussian derivatives (Koenderink and Doorn 1987; Young 1987; Young et al. 2001; Young and Lesperance 2001; Lindeberg 1994a, b, 1997, 2011). For chromatic input, the model also accounts for color-opponent spatio-chromatic cells in V1. Notably, the diffusion equations that describe the evolution properties over scale of these linear receptive field models are suitable for implementation on a biological architecture, since the computations can be expressed in terms of communications between neighboring computational units, where either a single computational unit or a group of computational units may be interpreted as corresponding to a neuron or a group of neurons.

Compared to previous approaches of learning receptive field properties and visual models from the statistics of natural image data (Field 1987; van der Schaaf and van Hateren 1996; Olshausen and Field 1996; Rao and Ballard 1998; Simoncelli and Olshausen 2001; Geisler 2008; Hyvärinen et al. 2009; Lörincz et al. 2012), the proposed theoretical model makes it possible to determine spatial and spatio-temporal receptive fields from first principles and thus without need for any explicit training stage or gathering of representative image data. In relation to such learning-based models, the proposed theory provides a normative approach that can be seen as describing the solutions that an ideal learning-based system may converge to, if exposed to a sufficiently large and representative set of natural image data. For these reasons, the presented approach should be of interest when modelling biological vision.

We will also show how receptive field responses can be interpreted physically as a superposition of relative variations of surface structure and illumination variations, given a logarithmic brightness scale, and how receptive field measurements will be invariant under multiplicative illumination variations and exposure control mechanisms. Despite the image measurements fundamentally being of an indirect nature, in terms of reflected light from external objects subject to unknown or uncontrolled illumination, this result shows how receptive field measurements can nevertheless be related to inherent physical properties of objects in the environment. This result therefore provides a formal justification for using receptive field responses as a basis for visual processes, analogous to the way linear receptive fields in the fovea, LGN and V1 provide the basic input to higher visual areas in biological vision.

We propose that these theoretical results contribute to an increased understanding of the role of early receptive fields in vision. Specifically, if one aims at building a neuro-inspired artificial vision system that solves actual visual tasks, we argue that an approach based on the proposed idealized models of linear receptive fields should require a significantly lower amount of training data compared to approaches that involve specific learning of receptive fields or compared to approaches that are not based on covariant receptive field models. We also argue that the proposed families of covariant receptive fields will be better at handling natural image transformations as resulting from variabilities in relation to the surrounding world.

In their survey of our knowledge of the early visual system, Carandini et al. (2005) emphasize the need for functional models to establish a link between neural biology and perception. Einhäuser and König (2010) argue for the need for normative approaches in vision. This paper can be seen as developing the consequences of such ways of reasoning by deriving functional models of linear receptive fields using a normative approach. Due to the formulation of the resulting receptive fields in terms of spatial and spatio-temporal derivatives of convolution kernels, it furthermore becomes feasible to analyze how receptive field responses can be related to properties of the environment using mathematical tools from differential geometry and thereby analyzing possibilities as well as constraints for visual perception.

1.1 Outline of the presentation

The treatment will be organized as follows: Sect. 2 formulates a set of structural requirements on the first stages of visual processing with respect to symmetry properties of the surrounding world and in relation to internal representations that are to be computed by an idealized vision system. Then, Sect. 3 describes the consequences of these assumptions with regard to intensity images defined over a spatial domain, with extensions to color information in Sect. 4. Sect. 5 develops a corresponding theory for spatio-temporal image data, taking into account the special nature of time-dependent image information.

Section 6 presents a comparison between spatial and spatio-temporal receptive fields measured from biological vision to receptive field profiles generated by the presented spatial, spatio-chromatic, and spatio-temporal scale-space theories, showing a very good qualitative agreement. Section 7 describes how a corresponding foveal scale-space model can be formulated for a foveal sensor to account for a spatially dependent lowest resolution with suggestions for extensions in Sect. 8.

Section 9 relates the contributions in the paper to previous work in the area in a retrospective manner, and Sect. 10 concludes with a summary and discussion, including an outline of further applications of how the presented theory can be used for modelling biological vision.

2 Structural requirements of an idealized visual front end

The notion of a visual front end refers to a set of processes at the first stages of visual processing, which are assumed to be of a general nature and whose output can be used as input to different later-stage processes, without being too specifically adapted to a particular task that would limit the applicability to other tasks. Major arguments for the definition of a visual front end are that the first stages of visual processing should be as uncommitted as possible and allow initial processing steps to be shared between different later-stage visual modules, thus implying a uniform structure on the first stages of visual computations (Koenderink et al. 1992; Lindeberg 1994b, Sect. 1.1).

In the following, we will describe a set of structural requirements that can be stated concerning (i) spatial geometry, (ii) spatio-temporal geometry, (iii) the image measurement process with its close relationship to the notion of scale, (iv) internal representations of image data that are to be computed by a general purpose vision system, and (v) the parameterization of image intensity with regard to the influence of illumination variations.

The treatment that will follow can be seen as a unification, abstraction and extension of developments in the area of scale-space theory (Iijima 1962; Witkin 1983; Koenderink 1984; Koenderink and Doorn 1992; Lindeberg 1994a, b, 2008; Sporring et al. 1996; Florack 1997; ter Haar Romeny 2003) as obtained during the last decades, see Sect. 9.2 and (Lindeberg 1996, 2011; Weickert et al. 1999; Duits et al. 2004) for complementary surveys. It will then be shown how a generalization of this theory to be presented next can be used for deriving idealized models of receptive fields by necessity, including new extensions for modelling illumination variations in the intensity domain. Specifically, we will describe how these results can be used for computational neuroscience modelling of receptive fields with regard to biological vision.

2.1 Static image data over spatial domain

Let us initially restrict ourselves to static (time-independent) data and focus on the spatial aspects. If we regard the incoming image intensity \(f\) as defined on a 2D image plane \(f :{\mathbb R}^2 \rightarrow {\mathbb R}\) with Cartesian image coordinates (footnote 1) denoted by \(x = (x_1, x_2)^T\), then the problem of defining a set of early visual operations can be formulated in terms of finding a family of operators \(\mathcal{T}_s\) that are to act on \(f\) to produce a family of new intermediate image representations (footnote 2)

\[ L(\cdot ;\; s) = \mathcal{T}_s\, f \tag{1} \]

which are also defined as functions on \({\mathbb R}^2\), i.e., \(L(\cdot ;\; s) :{\mathbb R}^2 \!\rightarrow \! {\mathbb R}\). These intermediate representations may be dependent on some parameter \(s\), which in the simplest case may be one-dimensional or under more general circumstances multi-dimensional.

2.1.1 Linearity and convolution structure

If we want the initial visual processing stages to make as few irreversible decisions as possible, it is natural to initially require \(\mathcal{T}_s\) to be a linear operator such that (footnote 3)

\[ \mathcal{T}_s (a_1 f_1 + a_2 f_2) = a_1\, \mathcal{T}_s f_1 + a_2\, \mathcal{T}_s f_2 \tag{2} \]

holds for all functions \(f_1, f_2 :{\mathbb R}^2 \rightarrow {\mathbb R}\) and all real constants \(a_1, a_2 \in {\mathbb R}\). This linearity assumption implies that any special properties that we will derive for the internal representation \(L\) will also transfer to any spatial, temporal, or spatio-temporal derivatives of the image data, a property that will be essential regarding early receptive fields, since it implies that different types of image structures will be treated in a similar manner irrespective of what types of linear filters they are captured by.

Furthermore, if we want all image positions \(x \in {\mathbb R}^2\) to be treated similarly, such that the visual interpretation of an object remains the same irrespective of its location in the image plane, then it is natural to require the operator \(\mathcal{T}_s\) to be shift invariant such that

\[ \mathcal{T}_s\, \mathcal{S}_{\Delta x}\, f = \mathcal{S}_{\Delta x}\, \mathcal{T}_s\, f \tag{3} \]

holds for all translation vectors \(\Delta x \in {\mathbb R}^2\), where \(\mathcal{S}_{\Delta x}\) denotes the shift (translation) operator defined by \((\mathcal{S}_{\Delta x} f)(x) = f(x-\Delta x)\). Alternatively stated, the operator \(\mathcal{T}_s\) can be said to be homogeneous across space (footnote 4).

The requirements of linearity and shift invariance together imply that the operator \(\mathcal{T}_s\) can be described as a convolution transformation (footnote 5) (Hirschmann and Widder 1955)

\[ L(\cdot ;\; s) = T(\cdot ;\; s) * f \tag{4} \]

of the form

\[ L(x;\; s) = \int_{\xi \in {\mathbb R}^2} f(x - \xi)\, T(\xi;\; s)\, d\xi \tag{5} \]

for some family of convolution kernels \(T(\cdot ;\; s) :{\mathbb R}^2 \rightarrow {\mathbb R}\).

To be able to use tools from functional analysis, we will initially assume that both the original signal \(f\) and the family of convolution kernels \(T(\cdot ;\; s)\) are in the Banach space \(L^2({\mathbb R}^N)\), i.e. that \(f \in L^2({\mathbb R}^N)\) and \(T(\cdot ;\; s) \in L^2({\mathbb R}^N)\) with the norm

\[ \| f \|_2^2 = \int_{x \in {\mathbb R}^N} |f(x)|^2\, dx \tag{6} \]

Then, also the intermediate representations \(L(\cdot ;\; s)\) will be in the same Banach space and the operators \(\mathcal{T}_s\) can be regarded as well defined.
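A minimal numerical sketch of the two requirements, under assumptions of our own (a discrete, periodic 1D signal and an arbitrary random kernel standing in for \(T(\cdot;\; s)\) at one fixed \(s\)): convolution is linear and commutes with shifts, and applying the operator to a unit impulse returns the kernel itself, which is why a linear, shift-invariant operator is fully characterized by its convolution kernel.

```python
import numpy as np

def circular_convolve(f, kernel):
    """Circular convolution of a 1D signal with a same-length kernel, computed via the FFT."""
    return np.real(np.fft.ifft(np.fft.fft(f) * np.fft.fft(kernel)))

rng = np.random.default_rng(1)
n = 64
f1, f2 = rng.standard_normal(n), rng.standard_normal(n)
kernel = rng.random(n)            # hypothetical kernel T(.; s) for one fixed s
a1, a2 = 2.0, -0.5

# Linearity: T(a1 f1 + a2 f2) = a1 T f1 + a2 T f2
lin_lhs = circular_convolve(a1 * f1 + a2 * f2, kernel)
lin_rhs = a1 * circular_convolve(f1, kernel) + a2 * circular_convolve(f2, kernel)

# Shift invariance: T S_dx f = S_dx T f, where np.roll(f, dx)[i] = f[i - dx]
dx = 5
shift_lhs = circular_convolve(np.roll(f1, dx), kernel)
shift_rhs = np.roll(circular_convolve(f1, kernel), dx)

# Impulse response: applying the operator to a unit impulse recovers the kernel
delta = np.zeros(n)
delta[0] = 1.0
impulse_response = circular_convolve(delta, kernel)

print(np.allclose(lin_lhs, lin_rhs), np.allclose(shift_lhs, shift_rhs))  # True True
print(np.allclose(impulse_response, kernel))                             # True
```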

2.1.2 Image measurements at different scales

The reduction of the first stage of visual processing to a set of convolution transformations raises the question of which types of convolution kernels \(T(\cdot ;\; s)\) could be regarded as natural. Specifically, we may consider convolution kernels with different spatial extent. A convolution kernel having a large spatial support can generally be expected to have the ability to respond to phenomena at coarser scales, whereas a convolution kernel with a small spatial support is generally needed to capture fine-scale phenomena. Hence, it is natural to associate a notion of scale with every image measurement. Let us therefore assume that the parameter \(s\) represents such a scale attribute and that this scale parameter is always nonnegative, \(s \in {\mathbb R}_+^N\), with the limit case \(s \downarrow 0\) corresponding to an identity operation

\[ \lim_{s \downarrow 0} L(\cdot ;\; s) = \lim_{s \downarrow 0} \mathcal{T}_s f = f \tag{7} \]

Hence, the intermediate image representations \(L(\cdot ;\, s)\) can be regarded as a family of derived representations parameterized by a scale parameter \(s\) (footnote 6).
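As a worked example, assume for illustration that \(T(\cdot;\; s)\) is the one-dimensional Gaussian kernel with variance \(s\) (one of the kernel families named in the abstract, not yet derived at this point). For a sinusoidal input of angular frequency \(\omega\), the smoothed signal is an attenuated copy of the input, and the attenuation disappears in the limit \(s \downarrow 0\):

```latex
L(x;\; s) = \int_{-\infty}^{\infty} \sin\big(\omega (x - \xi)\big)\, \frac{e^{-\xi^2/(2s)}}{\sqrt{2\pi s}}\, d\xi
          = e^{-\omega^2 s/2}\, \sin(\omega x)
          \;\longrightarrow\; \sin(\omega x) \quad \text{as } s \downarrow 0
```

The same factor \(e^{-\omega^2 s/2}\) also indicates why larger kernels respond mainly to coarser-scale structure: at a fixed \(s\), high frequencies are suppressed more strongly than low ones.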

2.1.3 Structural requirements on a scale-space representation

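Semigroup and cascade properties. For such image measurements to be properly related between different scales, it is natural to require the operators \(\mathcal{T}_s\) with their associated convolution kernels \(T(\cdot ;\; s)\) to form a semigroup

\[ \mathcal{T}_{s_1} \mathcal{T}_{s_2} = \mathcal{T}_{s_1 + s_2} \quad \Leftrightarrow \quad T(\cdot ;\; s_1) * T(\cdot ;\; s_2) = T(\cdot ;\; s_1 + s_2) \tag{8} \]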

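Then, the transformation between any two different and ordered (footnote 7) scale levels \(s_1\) and \(s_2\) with \(s_2 \ge s_1\) will obey the cascade property

\[ L(\cdot ;\; s_2) = T(\cdot ;\; s_2 - s_1) * T(\cdot ;\; s_1) * f = T(\cdot ;\; s_2 - s_1) * L(\cdot ;\; s_1) \tag{9} \]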

i.e., a similar type of transformation as from the original image data \(f\). An image representation having these properties is referred to as a multi-scale representation.
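The semigroup and cascade properties can be checked numerically for a concrete kernel family. The sketch below assumes the 1D Gaussian kernel with variance \(s\) as \(T(\cdot;\; s)\) and approximates the continuous convolutions by Riemann sums on a fine grid; the grid, the scale values, and the random test signal are illustrative choices only.

```python
import numpy as np

def gaussian(x, s):
    """1D Gaussian kernel with variance s (assumed example of T(.; s))."""
    return np.exp(-x**2 / (2.0 * s)) / np.sqrt(2.0 * np.pi * s)

x = np.linspace(-20.0, 20.0, 801)   # symmetric grid, odd length, centred at x = 0
dx = x[1] - x[0]
s1, s2 = 1.0, 2.0

# Semigroup: T(.; s1) * T(.; s2) = T(.; s1 + s2), with the integral as a Riemann sum
composed = np.convolve(gaussian(x, s1), gaussian(x, s2), mode="same") * dx
semigroup_error = np.max(np.abs(composed - gaussian(x, s1 + s2)))

# Cascade: from scale s1, reaching scale s1 + s2 only needs the increment kernel T(.; s2)
rng = np.random.default_rng(0)
f = rng.standard_normal(x.size)     # hypothetical input signal on the same grid
L_s1 = np.convolve(f, gaussian(x, s1), mode="same") * dx
L_direct = np.convolve(f, gaussian(x, s1 + s2), mode="same") * dx
L_cascade = np.convolve(L_s1, gaussian(x, s2), mode="same") * dx

# Compare away from the ends, where the finite window truncates the convolutions
mask = np.abs(x) < 10.0
cascade_error = np.max(np.abs(L_cascade[mask] - L_direct[mask]))
print(semigroup_error, cascade_error)
```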

Self-similarity. Regarding the choice of convolution kernels to be used for computing a multi-scale representation, it is natural to require them to be self-similar over scale (scale invariant) in the sense that each kernel \(T(\cdot ;\; s)\) can be regarded as a rescaled version of some prototype kernel \(\bar{T}(\cdot )\). In the case of a scalar scale parameter \(s \in {\mathbb R}_+\), such a condition can be expressed as

\[ T(x;\; s) = \frac{1}{\varphi(s)^2}\, \bar{T}\!\left(\frac{x}{\varphi(s)}\right) \tag{10} \]

with \(\varphi (s)\) denoting a monotonically increasing transformation of the scale parameter \(s\). For the case of a multi-dimensional scale parameter \(s \in {\mathbb R}_+^N\), the requirement of self-similarity over scale can be generalized into

\[ T(x;\; s) = \frac{1}{|\det \varphi(s)|}\, \bar{T}\!\left(\varphi(s)^{-1} x\right) \tag{11} \]

where \(\varphi (s)\) now denotes a non-singular \(2 \times 2\) matrix for a 2D image domain and \(\varphi (s)^{-1}\) its inverse. With this definition, a multi-scale representation with a scalar scale parameter \(s \in {\mathbb R}_+\) will be based on uniform rescalings of the prototype kernel, whereas a multi-scale representation based on a multi-dimensional scale parameter might also allow for rotations as well as non-uniform affine deformations of the prototype kernel.
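For a matrix-valued \(\varphi(s)\), a rescaled prototype can be evaluated directly. This sketch assumes the rescaling \(T(x;\; s) = |\det \varphi(s)|^{-1}\, \bar{T}(\varphi(s)^{-1} x)\), a unit 2D Gaussian as the hypothetical prototype \(\bar{T}\), and an arbitrary matrix for \(\varphi(s)\). The determinant factor keeps the kernel at unit mass, and the resulting kernel is an affine (stretched and sheared) Gaussian whose second-moment matrix is \(\varphi(s)\,\varphi(s)^T\).

```python
import numpy as np

def prototype(y):
    """Hypothetical prototype kernel: the unit 2D Gaussian; y has shape (..., 2)."""
    return np.exp(-0.5 * np.sum(y**2, axis=-1)) / (2.0 * np.pi)

def rescaled_kernel(points, phi):
    """T(x; s) = |det phi|^{-1} * prototype(phi^{-1} x) for a 2x2 matrix phi = phi(s)."""
    y = points @ np.linalg.inv(phi).T     # applies phi^{-1} to every row vector x
    return prototype(y) / abs(np.linalg.det(phi))

phi = np.array([[2.0, 0.5],
                [0.0, 1.0]])              # hypothetical value of phi(s)
grid = np.linspace(-15.0, 15.0, 601)
h = grid[1] - grid[0]
X1, X2 = np.meshgrid(grid, grid, indexing="ij")
points = np.stack([X1, X2], axis=-1)

T = rescaled_kernel(points, phi)
mass = T.sum() * h * h
second_moments = np.array([[np.sum(T * Xi * Xj) * h * h for Xj in (X1, X2)]
                           for Xi in (X1, X2)])
print(mass)                # approximately 1
print(second_moments)      # approximately phi @ phi.T = [[4.25, 0.5], [0.5, 1.0]]
```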

Together, the requirements of a semigroup structure and self-similarity over scales imply that the parameter \(s\) gets both a (i) qualitative interpretation of the notion of scale in terms of an abstract ordering relation due to the cascade property in Eq. (9) and (ii) a quantitative interpretation of scale, in terms of the scale-dependent spatial transformations in Eqs. (10) and (11). When these conditions are simultaneously satisfied, we say that the intermediate representation \(L(\cdot ;\; s)\) constitutes a candidate for being regarded as a (weak) scale-space representation.

Infinitesimal generator. For theoretical analysis, it is preferable if the scale parameter \(s\) can be treated as a continuous parameter and if image representations at adjacent scales can be related by partial differential equations. Such relations can be expressed if the semigroup possesses an infinitesimal generator (Hille and Phillips 1957; Pazy 1983)

\[ \mathcal{B} f = \lim_{h \downarrow 0} \frac{\mathcal{T}_h f - f}{h} \tag{12} \]

and imply that the image representations at adjacent scales can be related by an evolution equation of the form

\[ \partial_s L(\cdot ;\; s) = \mathcal{B}\, L(\cdot ;\; s) \tag{13} \]

where we would preferably like the operator \(\mathcal{B}\) to be a partial differential operator. The infinitesimal generator is the natural counterpart of a derivative operator for semigroups.
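For the Gaussian kernel family (assumed here as an example, not derived at this point), the infinitesimal generator in one dimension is \(\mathcal{B} = \tfrac{1}{2}\,\partial_{xx}\), so the evolution equation over scale becomes the diffusion equation \(\partial_s L = \tfrac{1}{2}\,\partial_{xx} L\). The sketch checks this on the kernel itself by comparing a finite difference over scale with a finite-difference second derivative over space; the step sizes are illustrative.

```python
import numpy as np

def gaussian(x, s):
    """1D Gaussian kernel with variance s, used here as an assumed example of T(.; s)."""
    return np.exp(-x**2 / (2.0 * s)) / np.sqrt(2.0 * np.pi * s)

x = np.linspace(-5.0, 5.0, 10001)
dx = x[1] - x[0]
s, ds = 1.0, 1e-4

# Left-hand side: derivative with respect to scale (central difference in s)
d_ds = (gaussian(x, s + ds) - gaussian(x, s - ds)) / (2.0 * ds)

# Right-hand side: candidate generator (1/2) d^2/dx^2 (central difference in x)
g = gaussian(x, s)
half_second_derivative = 0.5 * (g[2:] - 2.0 * g[1:-1] + g[:-2]) / dx**2

generator_error = np.max(np.abs(d_ds[1:-1] - half_second_derivative))
print(generator_error)
```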

In Eq. (13), we have for simplicity assumed the scale parameter \(s\) to be a scalar (one-dimensional) parameter. For a multi-parameter scale space with a scale parameter of the form \(s = (s_1, \dots , s_N)\), an analogous concept can be defined in terms of the directional derivative of the semigroup along any positive direction \(u = (u_1, \dots , u_N)\) in the parameter space

\[ (\mathcal{D}_u L)(x;\; s) = \lim_{h \downarrow 0} \frac{L(x;\; s + h\, u) - L(x;\; s)}{h} = (u_1 \mathcal{B}_1 + \dots + u_N \mathcal{B}_N)\, L(x;\; s) \tag{14} \]

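where each \(\mathcal{B}_k\) \((k = 1 \ldots N)\) constitutes the infinitesimal generator for the parameter \(s_k\) along the unit direction \(e_k\) in the \(N\)-dimensional parameter space

\[ \mathcal{B}_k L(x;\; s) = \lim_{h \downarrow 0} \frac{L(x;\; s + h\, e_k) - L(x;\; s)}{h} \tag{15} \]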

and with the notion of a “positive direction” in parameter space similar to that in footnote 7.
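For a multi-parameter example, consider a hypothetical two-parameter family of axis-aligned 2D Gaussian kernels with variances \(s_1\) and \(s_2\) along the two coordinate axes (an illustrative special case of our choosing). Each generator is then \(\mathcal{B}_k = \tfrac{1}{2}\,\partial_{x_k x_k}\), and the directional derivative along a positive unit direction \(u\) in parameter space equals \(u_1 \mathcal{B}_1 + u_2 \mathcal{B}_2\) applied to the kernel. The sketch verifies this with a central difference in parameter space and closed-form spatial derivatives.

```python
import numpy as np

def g2(x1, x2, s1, s2):
    """Hypothetical two-parameter kernel: axis-aligned 2D Gaussian with variances s1, s2."""
    return (np.exp(-x1**2 / (2 * s1) - x2**2 / (2 * s2))
            / (2 * np.pi * np.sqrt(s1 * s2)))

x1, x2 = np.meshgrid(np.linspace(-4, 4, 81), np.linspace(-4, 4, 81), indexing="ij")
s = np.array([1.0, 2.0])
u = np.array([0.6, 0.8])     # a positive unit direction in parameter space
h = 1e-5

# Directional derivative along u (central difference in parameter space)
D_u = (g2(x1, x2, *(s + h * u)) - g2(x1, x2, *(s - h * u))) / (2 * h)

# Generators B_k = (1/2) d^2/dx_k^2 applied to the kernel, via closed-form derivatives
g = g2(x1, x2, *s)
B1 = 0.5 * g * (x1**2 / s[0]**2 - 1 / s[0])
B2 = 0.5 * g * (x2**2 / s[1]**2 - 1 / s[1])

directional_error = np.max(np.abs(D_u - (u[0] * B1 + u[1] * B2)))
print(directional_error)
```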

Smoothing property: non-enhancement of local extrema. A further requirement on a scale-space representation is that convolution with the scale-space kernel \(T(\cdot ;\; s)\) should correspond to a smoothing transformation in the sense that coarser-scale representations should be guaranteed to constitute simplifications of corresponding finer scale representations and that new image structures must not be created at coarser scales \(L(\cdot ;\; s)\) that do not correspond to simplifications of corresponding structures in the original data \(f\).

For one-dimensional signals \(f :{\mathbb R}\rightarrow {\mathbb R}\), such a condition can be formalized as the requirement that the number of local extrema or equivalently the number of zero-crossings in the data must not increase with scale and is referred to as non-creation of local extrema (Lindeberg 1990). For higher-dimensional signals, however, it can be shown that there are no non-trivial linear transformations guaranteed to never increase the number of local extrema in an image (Lifshitz and Pizer 1990; Lindeberg 1990).
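In one dimension, the non-creation of zero-crossings can be observed in a discrete setting. The sketch below uses, as our own assumption, repeated convolution with the binomial kernel \([1/4, 1/2, 1/4]\), implemented as two successive two-point averages. Each two-point average takes the sign of one of two neighbouring samples (or is zero), so it cannot introduce new sign changes; applied to a random signal, the number of zero-crossings therefore never increases with the number of smoothing steps.

```python
import numpy as np

def count_sign_changes(x):
    """Number of sign changes (zero-crossings) in x, ignoring exact zeros."""
    signs = np.sign(x)
    signs = signs[signs != 0]
    return int(np.count_nonzero(signs[1:] != signs[:-1]))

def pair_average(x):
    """Full linear convolution with [1/2, 1/2], i.e. implicit zero padding at both ends."""
    padded = np.concatenate(([0.0], x, [0.0]))
    return 0.5 * (padded[:-1] + padded[1:])

def binomial_smooth(x, steps):
    """Apply `steps` rounds of the binomial kernel [1/4, 1/2, 1/4] as two pair averages."""
    for _ in range(steps):
        x = pair_average(pair_average(x))
    return x

rng = np.random.default_rng(0)
f = rng.standard_normal(200)        # hypothetical noisy 1D signal

# Number of zero-crossings after 0, 5, 10, ..., 55 smoothing steps
counts = [count_sign_changes(binomial_smooth(f, n)) for n in range(0, 60, 5)]
print(counts)                       # a non-increasing sequence
```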

For higher-dimensional image data, a particularly useful generalization of this notion is that local extrema must not be enhanced with increasing scale (non-enhancement of local extrema). In other words, if at some scale level \(s_0\) a point \((x_0;\; s_0)\) is a maximum (minimum) over the spatial domain \(x\), i.e., for the mapping \(x \mapsto L(x;\; s_0)\), then the derivative with respect to scale at this point must not be positive (negative). For a scale-space representation based on a scalar scale parameter, we should hence require (Lindeberg 1990, 1996):

\[ \partial_s L(x_0;\; s_0) \le 0 \;\text{ at any local maximum}, \qquad \partial_s L(x_0;\; s_0) \ge 0 \;\text{ at any local minimum} \tag{16} \]
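A minimal discrete illustration of non-enhancement, under assumptions of our own: take one explicit Euler step of the diffusion equation \(\partial_s L = \tfrac{1}{2}\nabla^2 L\) on a periodic 2D grid with the five-point Laplacian. At a strict local maximum every neighbour is smaller, so the discrete Laplacian is negative and the value decreases with scale; symmetrically, values at strict local minima increase. This is the sign condition on the scale derivative stated above.

```python
import numpy as np

def laplacian_periodic(L):
    """Five-point discrete Laplacian with periodic boundary conditions."""
    return (np.roll(L, 1, axis=0) + np.roll(L, -1, axis=0)
            + np.roll(L, 1, axis=1) + np.roll(L, -1, axis=1) - 4.0 * L)

def strict_extrema(L):
    """Boolean masks of strict local maxima/minima w.r.t. the four nearest neighbours."""
    neighbours = [np.roll(L, d, axis=a) for a in (0, 1) for d in (1, -1)]
    is_max = np.all([L > n for n in neighbours], axis=0)
    is_min = np.all([L < n for n in neighbours], axis=0)
    return is_max, is_min

rng = np.random.default_rng(0)
L = rng.standard_normal((64, 64))               # hypothetical image at scale s0
ds = 0.1                                        # small scale step (explicit scheme needs ds <= 0.5)
L_next = L + ds * 0.5 * laplacian_periodic(L)   # one explicit step of dL/ds = (1/2) Laplacian L

is_max, is_min = strict_extrema(L)
print(np.all(L_next[is_max] <= L[is_max]), np.all(L_next[is_min] >= L[is_min]))  # True True
```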

For a multi-parameter scale space, a corresponding requirement on a scale-space representation is that if a point \((x_0;\; s_0)\) is a local maximum (minimum) of the mapping \(x \mapsto L(x;\; s_0)\), then for every positive direction in the \(N\)-dimensional parameter space, the directional derivative of the semigroup \((\mathcal{D}_u L)(x;\; s)\) according to Eq. (14) must satisfy (Lindeberg 2011):

\[ (\mathcal{D}_u L)(x_0;\; s_0) \le 0 \;\text{ at any local maximum}, \qquad (\mathcal{D}_u L)(x_0;\; s_0) \ge 0 \;\text{ at any local minimum} \tag{17} \]

As will be described later, this condition constitutes a strong restriction on what convolution kernels \(T(\cdot ;\; s)\) can be regarded as scale-space kernels.

Nonnegativity and normalization. Regarding the convolution kernels \(T(\cdot ;\; s)\), it is natural to require that any scale-space kernel should be nonnegative

\[ T(x;\; s) \ge 0 \tag{18} \]

and have unit mass (unit \(L^1\)-norm)

\[ \int_{x \in {\mathbb R}^2} T(x;\; s)\, dx = 1 \tag{19} \]

Nonnegativity follows from the requirement of non-creation of new zero-crossings with increasing scale for one-dimensional signals. Normalization to unit \(L^1\)-norm can be derived as a consequence of the requirement of non-enhancement of local extrema.
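The following sketch illustrates, for a 1D discrete signal with kernels of our own choosing, why nonnegativity and unit mass go together with non-enhancement: with a nonnegative kernel of unit mass, every output sample is a weighted average of input samples and therefore stays within the range of the input, whereas a unit-mass kernel with negative weights (here a simple sharpening kernel) can push a maximum above its original value.

```python
import numpy as np

rng = np.random.default_rng(0)
f = rng.standard_normal(500)          # hypothetical input signal
f[250] = 10.0                         # place the global maximum in the interior

xi = np.arange(-15, 16)
smoothing = np.exp(-xi**2 / (2 * 9.0))
smoothing /= smoothing.sum()          # nonnegative weights with unit sum
sharpening = np.array([-0.5, 2.0, -0.5])  # unit sum, but with negative weights

# "valid" mode avoids zero padding, so every output is a full weighted sum of inputs
L_smooth = np.convolve(f, smoothing, mode="valid")
L_sharp = np.convolve(f, sharpening, mode="valid")

print(f.min() <= L_smooth.min(), L_smooth.max() <= f.max())  # True True
print(L_sharp.max() > f.max())                               # True
```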

2.1.4 Requirements regarding spatial geometry

Rotational symmetry. For a multi-scale representation based on a scalar scale parameter \(s \in {\mathbb R}_+\), it is natural to require the scale-space kernels to be rotationally symmetric

\[ T(x_1, x_2;\; s) = h\!\left(\sqrt{x_1^2 + x_2^2};\; s\right) \tag{20} \]

for some one-dimensional function \(h(\cdot ;\; s) :{\mathbb R}\rightarrow {\mathbb R}\). Such a symmetry requirement can be motivated by the requirement that in the absence of further information, all spatial directions should be equally treated (isotropy).

For a scale-space representation based on a multi-dimensional scale parameter, one may also consider a weaker requirement of rotational invariance at the level of a family of kernels, for example regarding a set of elongated kernels with different orientations in image space. Then, although the individual kernels in the filter family are not rotationally symmetric as individual filters, a collection or a group of such kernels may nevertheless capture image data of different orientation in a rotationally invariant manner, for example if all image orientations are explicitly represented or if the receptive fields corresponding to different orientations in image space can be related by linear combinations.

Affine covariance. When considering surface patterns that are being deformed by the perspective transformation from the surface of an object to the image plane, a restriction to rotationally symmetric kernels only will, however, interfere with the image deformations that occur if the viewing direction varies in relation to the surface normal. If we approximate the geometry of an image deformation by the derivative of the perspective mapping and assume that there are no illumination variations, then such an image deformation can be modelled by an affine transformation

\[ f'(x') = f(x) \tag{21} \]

corresponding to

where \(A\) is a \(2 \times 2\) matrix and \(b \in {\mathbb R}^2\) a constant offset. Specifically, we can at any image point regard such an affine transformation as a local linear approximation of the perspective mapping.
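The claim that an affine transformation is a local linear approximation of the perspective mapping can be checked numerically. The sketch below makes several assumptions, all hypothetical. A slanted planar patch \(X(u, v) = P + u\, e_1 + v\, e_2\) is mapped through the projection \(x = (X_1/X_3, X_2/X_3)\). The matrix \(A\) is taken as the central-difference Jacobian of this mapping at the expansion point, and \(b\) as the image of that point. The surface coordinates \((u, v)\) play the role of \(x\) in the affine model. The prediction error should shrink roughly quadratically with the size of the neighbourhood.

```python
import math


def project(X):
    """Perspective projection, image coordinates in units of the focal length (X3 > 0)."""
    return (X[0] / X[2], X[1] / X[2])


def surface_point(u, v):
    """Hypothetical slanted planar patch X(u, v) = P + u * e1 + v * e2."""
    P = (0.2, -0.1, 5.0)
    e1 = (1.0, 0.0, 0.5)   # the patch recedes from the camera along u (slant)
    e2 = (0.0, 1.0, 0.0)
    return tuple(P[k] + u * e1[k] + v * e2[k] for k in range(3))


def image_map(u, v):
    """Composite mapping from surface coordinates (u, v) to image coordinates."""
    return project(surface_point(u, v))


def jacobian(u, v, h=1e-6):
    """Central-difference Jacobian A[i][j] = d x_i / d (u, v)_j of the perspective mapping."""
    xu_plus, xu_minus = image_map(u + h, v), image_map(u - h, v)
    xv_plus, xv_minus = image_map(u, v + h), image_map(u, v - h)
    return [[(xu_plus[i] - xu_minus[i]) / (2 * h), (xv_plus[i] - xv_minus[i]) / (2 * h)]
            for i in range(2)]


def affine_error(eps, u0=0.0, v0=0.0):
    """Distance between the true image point and its local affine prediction A x + b."""
    A = jacobian(u0, v0)
    b = image_map(u0, v0)
    pred = (b[0] + A[0][0] * eps + A[0][1] * eps,
            b[1] + A[1][0] * eps + A[1][1] * eps)
    true = image_map(u0 + eps, v0 + eps)
    return math.hypot(true[0] - pred[0], true[1] - pred[1])


# The error shrinks roughly quadratically with the size of the neighbourhood.
print(affine_error(0.1), affine_error(0.01))
```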

A natural requirement on an idealized vision system that observes objects whose projections on the image plane are deformed in different ways depending on the viewing conditions is that the vision system should be able to relate or match the different internal representations of external objects that are acquired under different viewing conditions. Such a requirement is natural for enabling a stable interpretation of objects in the world under variations of the orientation of the object relative to the observer, and thereby invariance under variations of the viewing direction.

Hence, if an internal representation \(L(\cdot ;\; s)\) of an image pattern \(f\) has been computed with a (possibly multi-parameter) scale parameter \(s\), we would like the vision system to be able to match this internal representation to the internal representation \(L'(\cdot ;\; s')\) of an affine transformed image pattern \(f'\) computed with a different (possibly multi-parameter) scale parameter \(s'\)

\[ L'(x';\; s') = L(x;\; s) \]

corresponding to

\[ (\mathcal{T}_{A(s)}\, f')(x') = (\mathcal{T}_s\, f)(x) \]

as reflected in the commutative diagram in Fig. 4, where \(s' = A(s)\) denotes some appropriate transformation of the scale parameter. This requirement is referred to as affine covariance. Within the class of linear operators \(\mathcal{T}_s\) over a two-dimensional image domain, it is, however, not possible to realize such an affine covariance property within a scale-space concept based on a scalar scale parameter. For two-dimensional image data, such affine covariance can instead be accomplished within a three-parameter linear scale space.

2.2 Time-dependent image data over a spatio-temporal domain

Regarding spatio-temporal image data \(f(x, t)\), which we assume to be defined on a 2+1D spatio-temporal domain \({\mathbb R}^2 \times {\mathbb R}\) with \(x = (x_1, x_2)^T\) denoting image space and \(t\) denoting time, it is natural to inherit the above-mentioned symmetry requirements expressed for the spatial domain. Hence, corresponding structural requirements as stated in Sects. 2.1.1, 2.1.2, and 2.1.3 should be imposed on a spatio-temporal scale space, with space \(x \in {\mathbb R}^2\) replaced by space–time \((x, t) \in {\mathbb R}^2 \times {\mathbb R}\) and with the scale parameter now encompassing also a notion of temporal scale \(\tau \), such that the multi-dimensional scale parameter \(s\) will be of the form \(s = (s_1, \ldots , s_N, \tau )\).

2.2.1 Additional requirements regarding spatio-temporal geometry

Galilean covariance For time-dependent image data, it is natural to also take into explicit account the basic fact that objects may move relative to the observer. Specifically, constant velocity motion

\[ x(t) = x_0 + v\, t, \]

where \(v = (v_1, v_2)^T\) denotes the image velocity, is referred to as a Galilean transformation of space–time

\[ f'(x', t') = f(x, t) \]

corresponding to

\[ x' = x + v\, t, \qquad t' = t. \]

If we assume that the image intensities at corresponding image points remain constant over time \(t\) (the constant brightness assumption), such a Galilean model can be regarded as a local linear approximation of a more general motion field \(x(t) = (x_1(t), x_2(t))^T\).

Analogously to the previously described affine covariance property over a spatial domain, a desirable property of an idealized vision system is that it should be able to compute an internal representation \(L(x, t;\; s)\) of a spatio-temporal pattern \(f(x, t)\) that can be related or matched to the internal representation of some other spatio-temporal pattern \(f'(x', t')\) that moves with a different velocity \(v\) relative to the observer. Therefore, we would like to be able to relate an internal representation \(L'(x', t';\; s')\) of this pattern to the internal representation \(L(x, t;\; s)\) of the original pattern for some appropriately transformed scale parameter \(s' = G_v(s)\):

\[ L'(x', t';\; G_v(s)) = L(x, t;\; s) \]

corresponding to

\[ (\mathcal{T}_{G_v(s)}\, f')(x', t') = (\mathcal{T}_s\, f)(x, t) \]

as illustrated in the commutative diagram in Fig. 5. Such a property is referred to as Galilean covariance.

Commutative diagram for a spatio-temporal scale-space representation computed under a Galilean transformation of space–time. Such a constant velocity motion may, for example, represent a local linear approximation of the projected motion field for corresponding image points under relative motions between objects in the world and the visual observer

Again, within the class of linear transformations \(\mathcal{T}_s\), it is not possible to realize such a Galilean covariance property within a spatio-temporal scale-space concept based solely on a scalar spatial scale parameter \(s \in {\mathbb R}\) and a scalar temporal scale parameter \(\tau \in {\mathbb R}\). As will be shown later, Galilean covariance can, however, be achieved within a four-parameter linear spatio-temporal scale space.

Temporal causality When dealing with time-dependent image data, another structural requirement arises because of the basic fact that the future cannot be accessed. Hence, for any real-time computer vision system or a biological organism that interacts with the world, the convolution kernel must be time-causal in the sense that its values must be zero regarding any access to the future

\[ T(x, t;\; s) = 0 \quad \text{if } t < 0. \]

When analyzing pre-recorded video data in an off-line situation, we may, however, decide to relax this condition to simplify the computations.

2.2.2 Specific constraints regarding a real-time system

Time recursivity and temporal memory When dealing with spatio-temporal image data in a real-time setting, we cannot expect the vision system to have direct access to all information from the past, since we cannot assume a computer vision system or a biological organism to store a complete recording of all visual information it has seen.

If we assume that the vision system should compute internal image representations at different temporal scales, the only reasonable approach will therefore be that these computations have to be expressed in terms of computations on some internal temporal buffer \(M(x, t)\), which we assume to be much more condensed than a complete video recording of the past. Such an internal representation is referred to as a temporal memory, and the restriction of the set of possible computations to a combination of the current image data \(f(x, t)\) with such a compact temporal memory \(M(x, t)\) is referred to as time recursivity. Specifically, this temporal memory \(M(x, t)\) must be updated over time \(t\) according to some time-recursive model.

Given the assumption that the vision system should compute an internal scale-space representation \(L(x, t; s, \tau )\) at different temporal scales \(\tau \) (where we have now changed the notation and separated the spatial scale parameter \(s\) from the temporal scale parameter \(\tau \)), a particularly attractive solution is if this internal representation can also serve as the internal temporal memory \(M(x, t;\; \tau )\) for corresponding temporal scales. Let us therefore require that the spatio-temporal scale-space representation \(L(x, t; s, \tau )\) should be updated according to a time-recursive evolution equation over scale and time of the form (Lindeberg 2011, section 5.1.3, page 57)

for any pair of scale levels \(s_2 \ge s_1\) and any two time moments \(t_2 \ge t_1\), where

- the kernel \(U\) performs the update on the internal representation \(L\) while simultaneously respecting a cascade property for \(L\) over spatial scales \(s\), and
- the kernel \(h\) incorporates new information from the new image data \(f(x, t)\) that arrive between \(t= t_1\) and \(t= t_2\).
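The evolution equation above is stated in general form. As an illustration only, the following sketch uses a cascade of first-order recursive filters, one simple example of a time-causal and time-recursive smoothing scheme. It is not claimed to be the specific kernel pair \(U\), \(h\) of the theory. For a single image position, each temporal scale level stores one value that serves as its own temporal memory. A new sample is combined only with the stored memory, so the future is never accessed and no recording of past frames is kept. The time constants `mus` (one \(\mu_k\) per stage) and the function name are assumptions of the sketch.

```python
def recursive_smoothing(signal, mus):
    """Cascade of first-order recursive filters applied to a 1D temporal signal (hypothetical helper).

    Level k is updated as m_k <- m_k + (input_k - m_k) / (1 + mu_k), where input_0 is
    the new sample and input_k (k > 0) is the freshly updated value of level k - 1.
    Only the current sample and the stored values m_k are used: the filter is
    time-causal and time-recursive, and the m_k act as the temporal memory.
    """
    memory = [0.0] * len(mus)          # one stored value per temporal scale level
    outputs = []
    for sample in signal:
        value = sample
        for k, mu in enumerate(mus):
            memory[k] += (value - memory[k]) / (1.0 + mu)
            value = memory[k]          # the output of level k feeds level k + 1
        outputs.append(list(memory))   # snapshot of all temporal scale levels
    return outputs


# Impulse response at three temporal scale levels (illustrative time constants).
response = recursive_smoothing([0.0] * 5 + [1.0] + [0.0] * 400, [1.0, 2.0, 3.0])
```

For this particular filter, each stage has unit gain for constant signals and adds its time constant \(\mu_k\) to the mean delay of the impulse response. The coarsest level therefore responds, on average, \(\mu_1 + \mu_2 + \mu_3\) samples after the impulse.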

Non-enhancement of local extrema in a time-causal and time-recursive setting When formalizing the notion of a smoothing operation in a time-causal and time-recursive context, where the internal temporal scale levels \(\tau \) are also used as the internal temporal buffer of past information, it turns out to be both useful and necessary to reformulate the requirement of non-enhancement of local extrema in the following way. This reformulation takes into account the fact that at any temporal moment \(t_0\), we will have access to image data over space \(x\), spatial scales \(s\), and temporal scales \(\tau \), but no direct access to image data in the future or from the past:

If at some spatial scale \(s_0\) and time moment \(t_0\) a point \((x_0, \tau _0)\) is a local maximum (minimum) for the mapping \((x, \tau ) \rightarrow L(x, t_0;\; s_0, \tau )\), then for every positive direction \(u = (u_1, \ldots , u_N, u_{N+1})\) in the \(N+1\)-dimensional space consisting of the \(N\)-dimensional spatial scale parameter \(s\) complemented by time \(t\), the directional derivative \((\mathcal{D}_u L)(x, t;\; s, \tau )\) of the spatio-temporal scale-space representation in this direction \(u\) must satisfy (Lindeberg 2011, equations (79)–(80), page 52):

\[ (\mathcal{D}_u L)(x_0, t_0;\; s_0, \tau_0) \le 0 \quad \text{at any local maximum}, \]

\[ (\mathcal{D}_u L)(x_0, t_0;\; s_0, \tau_0) \ge 0 \quad \text{at any local minimum}. \]

This formulation constitutes a generalization of the non-enhancement condition (18) from a regular multi-parameter scale space to a time-recursive multi-parameter scale space. Both of these formulations imply a strong smoothing effect over spatial scales \(s\). For a non-causal multi-parameter scale space applied to space–time in a non-recursive setting, where time \(t\) is treated in essentially the same way as space \(x\), non-enhancement of local extrema according to (18) implies a strong evolution property over temporal scales \(\tau \). The conceptual difference in this time-recursive formulation is that the strong temporal smoothing property, as imposed by non-enhancement of local extrema, is instead expressed in terms of the evolution properties over time \(t\) and not over temporal scales \(\tau \).

Notably, this formulation of a temporal evolution property has an interesting interpretation of enforcing a smooth (stabilizing) temporal behavior of the internal representation \(L(x, t;\; s, \tau )\) of the surrounding world as the spatio-temporal data \(f(x, t)\) varies over time \(t\).

2.3 Influence of illumination variations

The above-mentioned symmetry requirements essentially refer to the geometry of space and space–time and its relation to image measurements over non-infinitesimal regions over space or space–time as formalized into the notion of a scale-space representation. Regarding the actual image intensities, these have so far been assumed to be given beforehand.

We may, however, consider different ways of parameterizing the intensity domain. Essentially, any monotonic intensity transformation will preserve the ordering of the intensity values from dark to bright. The perceptual impression of an image may, however, be substantially different after a nonlinear intensity transformation. Hence, one may ask whether we should assume the image data \(f\) to be proportional to image irradiance \(f \sim I\) (in units of power per unit area), some self-similar power of image irradiance \(f \sim I^{\gamma }\) or whether there is a better choice?

Logarithmic brightness scale Given the huge range of brightness variations under natural imaging conditions (a range corresponding to a factor of the order of \(10^{10}\) between the darkest and brightest cases for human vision), it is natural to represent the image brightness on a logarithmic scale:

\[ f \sim \log I. \]

Such a logarithmic scale is also reflected in the construction of most visual sensors (cameras), where aperture steps and exposure times are logarithmically distributed to handle the large range of brightness variations that occur under varying illumination conditions. A local adaptation of the sensitivity of the photoreceptors to an average illumination level can also be seen as implementing an approximation of a logarithmic transformation, provided that both the baseline and the sensitivity regarding deviations from the baseline are adapted in a corresponding manner.

2.3.1 Behavior under illumination variations: spatial image data

In this section, we will express properties of a logarithmic brightness scale in relation to a physical illumination model and image measurements in terms of receptive fields.

Projection model Consider a planar perspective camera model with \(X = (X_1, X_2, X_3)^T\) denoting world coordinates with the \(X_3\)-direction perpendicular to the image plane and with the image coordinates \((x_1, x_2)^T\) for simplicity expressed in units of the focal length \(f\), leading to the perspective projection equations (assuming that \(X_3 > 0\))

\[ x_1 = \frac{X_1}{X_3}, \qquad x_2 = \frac{X_2}{X_3}. \]

Let us furthermore assume that the incoming light is collected by a thin lens with diameter \(d\).

Model for image irradiance We assume that the image irradiance \(I\) is proportional to the surface radiance \(R\) along the direction from a point \(X\) on the surface toward its projection \(X_\mathrm{im} = f\, (x_1, x_2, 1)^T\) on the image plane

\[ I(x) \sim R(X) \]

or more specifically (Horn 1986, page 208)

\[ I(x) = R(X)\, \frac{\pi}{4} \left( \frac{d}{f} \right)^2 \cos^4 \phi(X) = R(X)\, C_\mathrm{cam}(\tilde{f}) \cos^4 \phi(X), \]

with the ratio \(\tilde{f} = f/d\) referred to as the effective f-number, \(C_\mathrm{cam}(\tilde{f}) = \pi/(4 \tilde{f}^2)\), and with a spatially varying reduction in image intensities toward the periphery of the image (natural vignetting) determined by the geometric factor \(\cos ^4 \phi (X)\) with

\[ \cos \phi(X) = \frac{1}{\sqrt{1 + x_1^2 + x_2^2}}. \]

From this expression, it is clear that the proportionality constant in Eq. (38) depends on (i) the internal geometry of the visual sensor as captured by the constant \(C_\mathrm{cam}(\tilde{f})\) and (ii) the angle \(\phi (X)\) between the viewing direction and the surface normal of the image plane.
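The factors of the irradiance model can be made concrete in a short sketch. It assumes the thin-lens form \(I = R\, C_\mathrm{cam}(\tilde{f}) \cos^4 \phi\) with \(C_\mathrm{cam}(\tilde{f}) = \pi/(4 \tilde{f}^2)\) and \(\cos \phi = 1/\sqrt{1 + x_1^2 + x_2^2}\) for image coordinates in units of the focal length. On a logarithmic scale the vignetting factor should contribute the additive term \(V(x) = -2 \log(1 + x_1^2 + x_2^2)\). Doubling the effective f-number should reduce the irradiance by a factor of four. All function names are hypothetical.

```python
import math


def c_cam(f_number):
    """Camera constant C_cam(f~) = pi / (4 f~^2) of the thin-lens model."""
    return math.pi / (4.0 * f_number ** 2)


def cos_phi(x1, x2):
    """Cosine of the off-axis angle; (x1, x2) in units of the focal length."""
    return 1.0 / math.sqrt(1.0 + x1 ** 2 + x2 ** 2)


def irradiance(radiance, f_number, x1, x2):
    """I = R * C_cam(f~) * cos^4(phi)."""
    return radiance * c_cam(f_number) * cos_phi(x1, x2) ** 4


def vignetting(x1, x2):
    """V(x) = -2 log(1 + x1^2 + x2^2), i.e. the logarithm of cos^4(phi)."""
    return -2.0 * math.log(1.0 + x1 ** 2 + x2 ** 2)


# On a log scale the three factors become additive terms (difference at rounding level).
R, fn, x1, x2 = 3.0, 2.8, 0.3, -0.2
print(math.log(irradiance(R, fn, x1, x2)) - (math.log(R) + math.log(c_cam(fn)) + vignetting(x1, x2)))
```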

Model for surface reflectance Let us next assume that the surface radiance \(R\) in the direction from the point \(X = (X_1, X_2, X_3)^T\) on the surface toward its projection \(X_\mathrm{im} = f\, (x_1, x_2, 1)^T\) on the image plane can be modelled as proportional to an albedo factor \(\rho \) determined by the surface material and the amount of incoming illumination \(i\)

\[ R(X) \sim \rho(X)\, i(X) \]

with the implicit assumption that the same amount of light is emitted along all directions from the surface.

This model behaves similarly to a Lambertian surface model, with the extension that the surface may be regarded as “gray” by not reflecting all incident light. Note, however, that this reflectance model constitutes a substantial simplification of the bidirectional reflectance distribution function and does not cover, e.g., specularities or materials with diffraction grating effects.

For an illumination field that is not determined by a point source only, the entity \(i(X)\) can be seen as the integration of the incoming light \(i(X, \theta , \varphi )\) from all directions on the northern hemisphere \(H\) defined by the spherical coordinates \(\theta \in [0, \pi /2]\) and \(\varphi \in [0, 2 \pi ]\) relative to the surface normal at \(X\) such that

\[ i(X) = \int_{\theta = 0}^{\pi/2} \int_{\varphi = 0}^{2\pi} i(X, \theta, \varphi) \cos \theta \, \sin \theta \, d\varphi \, d\theta, \]

where the factor \(\cos \theta \) accounts for foreshortening and the factor \(\sin \theta \) is the integration measure for spherical coordinates.
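A quick numerical check of the hemisphere integral, assuming a midpoint-rule discretization (the function name and grid sizes are illustrative choices). For uniform incoming light \(i(X, \theta, \varphi) = 1\), the integral evaluates to \(\pi\). Restricting the light to a cone \(\theta < \pi/4\) around the surface normal gives \(\pi/2\), because the weight \(\cos \theta \sin \theta\) concentrates the contribution away from both the normal and grazing directions.

```python
import math


def hemisphere_integral(radiance, n_theta=400, n_phi=64):
    """Midpoint-rule approximation of the integral of radiance(theta, phi) * cos(theta) * sin(theta)
    over theta in [0, pi/2] and phi in [0, 2 pi] (hypothetical helper)."""
    d_theta = (math.pi / 2) / n_theta
    d_phi = (2 * math.pi) / n_phi
    total = 0.0
    for a in range(n_theta):
        theta = (a + 0.5) * d_theta
        # cos(theta): foreshortening; sin(theta): spherical integration measure
        weight = math.cos(theta) * math.sin(theta) * d_theta * d_phi
        for b in range(n_phi):
            phi = (b + 0.5) * d_phi
            total += radiance(theta, phi) * weight
    return total


uniform = hemisphere_integral(lambda theta, phi: 1.0)
cone = hemisphere_integral(lambda theta, phi: 1.0 if theta < math.pi / 4 else 0.0)
```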

Combined brightness model By combining the illumination model in Eqs. (39) and (41) with the logarithmic brightness scale in Eq. (36) and by redefining the functions \(\rho (X)\) and \(i(X)\) such that their values for three-dimensional world coordinates \(X\) can be accessed from corresponding projected image coordinates \(x\) according to \(\rho (x)\) and \(i(x)\), we obtain

\[ f(x) = \log \rho(x) + \log i(x) + \log C_\mathrm{cam}(\tilde{f}) + V(x), \]

which provides an explicit model for how the image brightness \(f\) depends on

- (i) properties of surfaces of objects in the world as condensed into the spatially dependent albedo factor \(\rho (x)\), with the implicit understanding that this entity may in general refer to different surfaces in the world depending on the viewing direction \((x_1, x_2, 1)^T\) and thus the image position \(x = (x_1, x_2)^T\),
- (ii) properties of the illumination field as reflected in the spatially dependent illumination \(i(x)\), which also may refer to the amount of incoming light on different surfaces in the world depending on the value of \(x\),
- (iii) geometric properties of the camera as condensed into a dependency on the effective \(f\)-number \(\tilde{f}\) captured by \(C_\mathrm{cam}(\tilde{f})\), and
- (iv) a geometric natural vignetting effect of the explicit form \(V(x) = V(x_1, x_2) = - 2 \log (1 + x_1^2 + x_2^2 )\).

In the following, we shall develop consequences of this image formation model concerning invariance properties to the effective \(f\)-number and multiplicative illumination transformations, given the specific choice of a logarithmic brightness scale.

Invariance to the effective f-number A noteworthy property of the model in Eq. (43) is that if we disregard effects of focal blur (not modelled here), then the influence of the focal length \(f\) and the diameter \(d\) of the camera will be cancelled if we differentiate this expression with respect to space \(x\)

\[ \partial_{x^{\alpha}} f(x) = \partial_{x^{\alpha}} \left( \log \rho(x) + \log i(x) + V(x) \right) \quad \text{for } |\alpha| > 0, \]

where \(\alpha = (\alpha _1, \alpha _2)\) constitutes a multi-index notation. Hence, with a logarithmic brightness scale (and disregarding effects of focal blur), any spatial derivative operator will be invariant to variations in the effective f-number (as well as other multiplicative exposure parameters).

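Invariance to multiplicative illumination transformations Moreover, if we consider image measurements from the same scene using a different illumination field \(i'(x)\) proportional to the original illumination field

\[ i'(x) = C_\mathrm{illum}\, i(x), \]

then the resulting image

\[ f'(x) = \log \rho(x) + \log C_\mathrm{illum} + \log i(x) + \log C_\mathrm{cam}(\tilde{f}) + V(x) \]

differs from \(f\) only by the additive constant \(\log C_\mathrm{illum}\), whose influence will also be cancelled after spatial differentiation

\[ \partial_{x^{\alpha}} f'(x) = \partial_{x^{\alpha}} f(x) \quad \text{for } |\alpha| > 0. \]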

Therefore, with a logarithmic brightness scale, any spatial derivative operator will be invariant to multiplicative illumination transformations. The influence of the constant \(\log C_\mathrm{illum}\) will also disappear after filtering with a kernel having integral zero, i.e., equal positive and negative contributions.
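The cancellation can be checked on sampled data. The sketch below uses a hypothetical one-dimensional scene whose albedo and illumination profiles are arbitrary choices. It applies a central difference, whose weights \(-1/(2h)\), \(0\), \(1/(2h)\) sum to zero, to the log-brightness before and after multiplying the image by a constant \(C\). On the logarithmic scale the two derivative signals should agree to rounding precision. On a linear scale the derivative is instead scaled by \(C\).

```python
import math


def central_diff(values, h):
    """Central difference (weights -1/(2h), 0, 1/(2h), summing to zero) at interior samples."""
    return [(values[i + 1] - values[i - 1]) / (2 * h) for i in range(1, len(values) - 1)]


def log_brightness(irradiance):
    """Logarithmic brightness scale f = log I, applied sample-wise."""
    return [math.log(v) for v in irradiance]


# Hypothetical 1D scene: albedo pattern times a smooth illumination field (both strictly positive).
h = 0.01
xs = [i * h for i in range(200)]
albedo = [0.5 + 0.3 * math.sin(7 * x) for x in xs]
illum = [2.0 + math.cos(3 * x) for x in xs]
image = [r * e for r, e in zip(albedo, illum)]

C = 17.0  # arbitrary multiplicative illumination or exposure change
image_scaled = [C * v for v in image]

d_log = central_diff(log_brightness(image), h)
d_log_scaled = central_diff(log_brightness(image_scaled), h)
print(max(abs(a - b) for a, b in zip(d_log, d_log_scaled)))
```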

Relative measurements of physical entities Furthermore, regarding, e.g., any first-order derivative \(\partial _{x_k}\) with \(k\) equal to 1 or 2

\[ \partial_{x_k} f = \frac{\partial_{x_k} \rho(x)}{\rho(x)} + \frac{\partial_{x_k} i(x)}{i(x)} + (\partial_{x_k} V)(x), \]

the interpretation of this first-order spatial derivative operator is that it responds to relative variations of the physical entities surface albedo \(\rho (x)\) and illumination \(i(x)\) (where we assume these quantities to always be strictly positive and never equal to zero):

- For a smooth surface with a spatially dependent surface pattern \(\rho (X)\), the first term \(\partial _{x_k} \rho /\rho \) reflects inherent relative spatial variations of this surface pattern as deformed by the perspective projection model in analogy with the affine deformation model (24).
- The second term \(\partial _{x_k} i/i\) reflects relative spatial variations in the illumination field \(i\) as arising from the interaction between the external illumination field \(i(X, \theta (X), \varphi (X))\) and the local surface geometry \((\theta (X), \varphi (X))\) at every surface point \(X\) according to (42).
- The third term \((\partial _{x_k} V)(x) = (\partial _{x_k} V)(x_1, x_2) = -4 x_k/(1 + x_1^2 + x_2^2)\) constitutes a geometric bias due to vignetting effects inherent to the camera. (Note that the image coordinates in this treatment are expressed in units of the focal length, with \(|x| = \sqrt{x_1^2 + x_2^2} \ll 1\) in the central field of view.) This term will disappear for a spherical camera geometry.
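The decomposition into relative variations, including the sign of the vignetting term, can be verified by finite differences. The sketch assumes hypothetical smooth and strictly positive profiles for \(\rho\) and \(i\). It omits the camera constant, which disappears under differentiation. It then compares the numerical derivative of \(f\) with the sum of relative variations plus \(\partial_{x_1} V = -4 x_1/(1 + x_1^2 + x_2^2)\). Since \(V\) decreases away from the image centre, its derivative is negative for \(x_1 > 0\).

```python
import math


def V(x1, x2):
    """Natural vignetting term V(x) = -2 log(1 + x1^2 + x2^2)."""
    return -2.0 * math.log(1.0 + x1 ** 2 + x2 ** 2)


def dV_dx1(x1, x2):
    """Analytic derivative of V with respect to x1: -4 x1 / (1 + x1^2 + x2^2)."""
    return -4.0 * x1 / (1.0 + x1 ** 2 + x2 ** 2)


def rho(x1, x2):
    """Hypothetical smooth, strictly positive albedo pattern."""
    return 0.6 + 0.2 * math.sin(5 * x1 + x2)


def illum(x1, x2):
    """Hypothetical smooth, strictly positive illumination field."""
    return 1.5 + 0.5 * math.cos(2 * x1 - 3 * x2)


def f(x1, x2):
    """Log-brightness model without the camera constant (it vanishes under differentiation)."""
    return math.log(rho(x1, x2)) + math.log(illum(x1, x2)) + V(x1, x2)


def d1(func, x1, x2, h=1e-6):
    """Central difference with respect to x1."""
    return (func(x1 + h, x2) - func(x1 - h, x2)) / (2 * h)


x1, x2 = 0.12, -0.07
lhs = d1(f, x1, x2)
rhs = d1(rho, x1, x2) / rho(x1, x2) + d1(illum, x1, x2) / illum(x1, x2) + dV_dx1(x1, x2)
```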

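If the surface albedo \(\rho (x)\) and the illumination field \(i(x)\) are also measured on a logarithmic scale, then the algebraic relationship between derivatives of image intensity \(f\) and derivatives of the physical entities \(\rho (x)\) and \(i(x)\) will be simple also for any order of differentiation:

\[ \partial_{x^{\alpha}} f = \partial_{x^{\alpha}} (\log \rho(x)) + \partial_{x^{\alpha}} (\log i(x)) + \partial_{x^{\alpha}} V(x) \quad \text{for } |\alpha| > 0. \]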

Invariance properties of spatial receptive fields involving spatial derivatives There is an interesting relationship between the cancelling of multiplicative illumination transformations in Eq. (44) and image measurements in terms of receptive fields. If we consider the derived internal scale-space representation \(L\) of a signal \(f\) and compute any spatial derivative of this representation according to

\[ \partial_{x^{\alpha}} L(\cdot ;\; s) = \partial_{x^{\alpha}} \mathcal{T}_s\, f, \]

then it follows that the effect of any multiplicative illumination transformation will be invisible to image measurements in terms of receptive fields \(\partial _{x^{\alpha }} \mathcal{T}_s\) that involve spatial derivatives. Similarly, apart from effects of focal blur, the intensity dependency due to variations of the effective \(f\)-number \(\tilde{f}\) will also cancel. Hence, with a logarithmic brightness scale, image measurements in terms of receptive fields that involve spatial derivatives (or more generally any receptive field with its integral equal to zero) will be invariant under multiplicative illumination transformations and exposure conditions, with the latter corresponding to variations of the exposure time, the aperture and the ISO number of the sensor in a digital camera, or the diameter of the pupil and the photosensitivity of the photoreceptors in biological vision. The remaining response is a superposition of relative variations in surface patterns and illumination variations, with a position-dependent bias due to the vignetting effect.

It should be noted, however, that some care is needed concerning the differentiability properties of the image data. For images acquired from the natural world, there will in general be discontinuities in image brightness \(f\), due to discontinuities in depth, surface orientation, illumination, or the albedo of the surface patterns. This implies that we would generally expect to obtain strong spikes in the output if plain derivative operators were applied to natural image data. The use of receptive field-based derivative operations, however, regularizes this problem. For the families of smoothing kernels \(T(\cdot ;\, s)\) that can be derived from the requirement of non-enhancement of local extrema, it can be shown that the scale-space representation \(L(\cdot ;\, s)\) becomes infinitely differentiable after any non-infinitesimal amount of smoothing \(s > 0\) if we assume bounded brightness data \(|f(x)| < C\). Hence, the output from the receptive field-based derivative operators \(\partial _{x^{\alpha }} T(\cdot ;\, s)\) will always be well defined, and the validity of the results in Eqs. (44) and (50) can be formally established with \((\partial _{x^{\alpha }} f)(x)\) replaced by \((\partial _{x^{\alpha }} L)(x;\; s)\):

\[ \partial_{x^{\alpha}} L(x;\; s) = \partial_{x^{\alpha}} \mathcal{T}_s \log \rho(x) + \partial_{x^{\alpha}} \mathcal{T}_s \log i(x) + \partial_{x^{\alpha}} \mathcal{T}_s V(x) \quad \text{for } |\alpha| > 0. \]

Indeed, the notion of receptive field-based derivative approximations can be regarded as necessary to make these computations of image derivatives valid. The assumption of linearity as a basic scale-space axiom in Eq. (2) can also be motivated from the form of this expression, since it makes it possible to interpret the receptive field responses as a linear superposition of relative variations in surface patterns and relative variations in the illumination field. Such an interpretation would not be possible if the smoothing operator \(\mathcal{T}_s\) were nonlinear.
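The regularizing effect of receptive field-based derivatives can be illustrated on an ideal step edge. The sketch makes arbitrary choices of grid spacing, scale, and kernel radius, and replicates boundary samples. Convolving with a sampled Gaussian derivative gives a peak response close to \(g(0;\, s) = 1/\sqrt{2 \pi s}\), independent of the grid spacing. A plain central difference instead produces a spike of height \(1/(2h)\), which grows without bound as \(h \to 0\). Function names are hypothetical.

```python
import math


def gaussian(x, s):
    """1D Gaussian kernel with variance s."""
    return math.exp(-x * x / (2 * s)) / math.sqrt(2 * math.pi * s)


def gaussian_dx(x, s):
    """First-order Gaussian derivative g_x(x; s) = -(x / s) g(x; s)."""
    return -x / s * gaussian(x, s)


def smoothed_derivative(signal, h, s, radius):
    """Discrete convolution of a sampled signal with a sampled Gaussian derivative.

    Samples outside the signal are replaced by the nearest boundary value.
    """
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k in range(-radius, radius + 1):
            j = min(max(i - k, 0), n - 1)
            acc += gaussian_dx(k * h, s) * signal[j] * h
        out.append(acc)
    return out


h, s = 0.05, 1.0
step = [0.0] * 200 + [1.0] * 201          # ideal step edge at index 200
smoothed = smoothed_derivative(step, h, s, radius=120)
naive = [(step[i + 1] - step[i - 1]) / (2 * h) for i in range(1, len(step) - 1)]
```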

Scale-space properties of receptive field measurements involving spatial derivatives Due to the linearity property, receptive field measurements involving spatial derivatives \(\partial _{x^{\alpha }} L\) will possess essentially the same scale-space properties over scales as the zero-order scale-space representation \(L\) of the original illumination pattern \(f\) as described in Sect. 2.1.3, with the main difference that the limit case in Eq. (7) when the scale parameter \(s\) tends to zero has to be replaced by

\[ \lim_{s \downarrow 0} \partial_{x^{\alpha}} L(\cdot ;\; s) = \partial_{x^{\alpha}} f, \]

provided that the image data \(f\) have sufficient differentiability properties.

2.3.2 Behavior under illumination variations: spatio-temporal image data

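Invariance properties of spatio-temporal receptive fields involving spatio-temporal derivatives For spatio-temporal image data, the corresponding image formation model becomes

\[ f(x, t) = \log \rho(x, t) + \log i(x, t) + \log C_\mathrm{cam}(\tilde{f}(t)) + V(x) \]

if we allow the effective \(f\)-number to depend on time \(t\). If we measure such spatio-temporal image data using a spatio-temporal receptive field with a spatio-temporal scale parameter \(s = (s_1, \ldots , s_N, \tau )\) that involves integration over both space \(x\) and time \(t\), and if we differentiate such a representation with respect to both space and time

\[ \partial_{x^{\alpha} t^{\beta}} L = \partial_{x^{\alpha} t^{\beta}} \mathcal{T}_s \left( \log \rho(x, t) + \log i(x, t) + \log C_\mathrm{cam}(\tilde{f}(t)) + V(x) \right), \]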

then it follows that the influence of the possibly time-dependent effective \(f\)-number will be cancelled after any spatial derivative operation with \(|\alpha | > 0\) (and so will the influence be of any other possibly time-dependent multiplicative exposure control mechanism).

Regarding temporal derivatives, it follows that the influence of the vignetting effect \(V(x)\) will be cancelled by any temporal derivative operator with \(\beta > 0\). The temporal derivative operator will also suppress the effect of any other purely spatial illumination variation.

Galilean covariant temporal derivative concept When considering temporal derivatives of spatio-temporal data computed for an object that moves with image velocity \(v = (v_1, v_2)^T\) relative to the observer, it is natural to consider velocity-adapted temporal derivatives \(\partial _{\bar{t}}\) along the direction of motion according to

\[ \partial_{\bar{t}} = v^T \nabla_x + \partial_t = v_1\, \partial_{x_1} + v_2\, \partial_{x_2} + \partial_t \]

so as to obtain a temporal derivative concept that commutes with Galilean transformations. Such velocity-adapted temporal derivatives make it possible to compute Galilean covariant image representations based on receptive fields involving temporal derivatives, in analogy with the previous treatment of Galilean covariance in connection with Eq. (31).
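A finite-difference sketch of the velocity-adapted temporal derivative, assuming the form \(\partial_{\bar{t}} = v_1 \partial_{x_1} + v_2 \partial_{x_2} + \partial_t\). The pattern is hypothetical and translates with constant image velocity \(v\). The plain temporal derivative \(\partial_t\) of this pattern is clearly nonzero. The velocity-adapted derivative vanishes, since the pattern is constant along the direction of motion in space–time.

```python
import math


def pattern(x1, x2, t, v=(0.8, -0.3)):
    """Hypothetical pattern translating with constant image velocity v."""
    y1, y2 = x1 - v[0] * t, x2 - v[1] * t
    return math.sin(2 * y1) * math.cos(3 * y2)


def partial(func, args, k, h=1e-5):
    """Central difference of func with respect to its k-th argument."""
    ap, am = list(args), list(args)
    ap[k] += h
    am[k] -= h
    return (func(*ap) - func(*am)) / (2 * h)


def velocity_adapted_dt(func, x1, x2, t, v):
    """Derivative along the motion direction: v1 d/dx1 + v2 d/dx2 + d/dt."""
    args = (x1, x2, t)
    return v[0] * partial(func, args, 0) + v[1] * partial(func, args, 1) + partial(func, args, 2)


v = (0.8, -0.3)
plain = partial(pattern, (0.3, 0.4, 1.2), 2)
adapted = velocity_adapted_dt(pattern, 0.3, 0.4, 1.2, v)
```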

2.3.3 Summary regarding intensity and illumination variations

To summarize, this analysis shows that with image intensities parameterized on a logarithmic brightness scale and provided that the smoothing operation \(\mathcal{T}_s\) has sufficient regularizing properties to make the computation of image derivatives well defined, receptive field responses in terms of spatial and spatio-temporal derivatives have a direct physical interpretation as the superposition of

- relative variations in the albedo of the observed surface patterns corresponding to the term \(\partial _{x^{\alpha } t^{\beta }} \left( \mathcal{T}_s \, \log \rho (x) \right) \) in (54), and
- relative variations in the illumination field corresponding to the term \(\partial _{x^{\alpha } t^{\beta }} \left( \mathcal{T}_s \, \log i(x) \right) \) in (54),

with a geometric bias caused by vignetting effects that disappears for temporal derivatives with \(\beta > 0\). Moreover, such receptive field measurements are invariant under multiplicative illumination transformations as well as other multiplicative exposure control mechanisms.

3 Spatial domain with pure intensity information

We shall now describe how the structural requirements on an idealized vision system as formulated in Sect. 2.1 restrict the class of possible image operations at the first stages of visual processing. For image data \(f :{\mathbb R}^2 \rightarrow {\mathbb R}\) defined over a two-dimensional spatial domain, let us assume that the first stage of visual processing as represented by the operator \(\mathcal{T}_s\) should be (i) linear, (ii) shift invariant, and (iii) obey a semigroup structure over spatial scales \(s\), where we also have to assume (iv) certain regularity properties of the semigroup \(\mathcal{T}_s\) over scale \(s\) in terms of Sobolev norms to guarantee sufficient differentiability properties with respect to space \(x \in {\mathbb R}^2\) and scale \(s\). Let us furthermore require (v) non-enhancement of local extrema to hold for any smooth image function \(f \in C^{\infty }({\mathbb R}^2) \cap L^1({\mathbb R}^2)\).

Then, it can be shown (Lindeberg 2011, Theorem 5, page 42) that these conditions together imply that the scale-space family \(L\) must satisfy a diffusion equation of the form

\[ \partial_s L = \frac{1}{2} \nabla_x^T \left( \varSigma_0 \nabla_x L \right) - \delta_0^T \nabla_x L \]

with the notation \(\nabla _x = (\partial _{x_1}, \partial _{x_2})^T\) for the gradient operator, and with initial condition \(L(\cdot ;\; 0) = f(\cdot )\) for some positive semi-definite \(2 \times 2\) covariance matrix \(\varSigma _0\) and for some 2D vector \(\delta _0\), where the covariance matrix \(\varSigma _0\) describes the shape of the underlying smoothing kernel and the vector \(\delta _0\) describes the spatial offset or the drift velocity of a non-symmetric smoothing kernel. In terms of convolution transformations, this scale space can equivalently be constructed by convolution with affine and translated Gaussian kernels

\[ g(x;\; \varSigma_s, \delta_s) = \frac{1}{2 \pi \sqrt{\det \varSigma_s}} \, e^{-(x - \delta_s)^T \varSigma_s^{-1} (x - \delta_s)/2}, \]

which for a given \(\varSigma _s = s \, \varSigma _0\) and a given \(\delta _s = s \, \delta _0\) satisfy the diffusion equation (56).
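As a numerical sanity check, the sketch below evaluates the affine and translated Gaussian kernel with \(\varSigma_s = s\, \varSigma_0\) and \(\delta_s = s\, \delta_0\). It then measures the residual of the diffusion equation, assumed here in the form \(\partial_s L = \frac{1}{2} \nabla_x^T (\varSigma_0 \nabla_x L) - \delta_0^T \nabla_x L\), using central differences with an illustrative step size. The sketch requires \(\varSigma_0\) to be strictly positive definite so that the kernel is well defined pointwise. The matrix, offset, and evaluation points are arbitrary.

```python
import math


def affine_gaussian(x, sigma, delta):
    """g(x; Sigma, delta) for a 2x2 symmetric positive definite Sigma and offset delta."""
    (a, b), (_, c) = sigma
    det = a * c - b * b
    y1, y2 = x[0] - delta[0], x[1] - delta[1]
    # quadratic form y^T Sigma^{-1} y via the explicit 2x2 inverse
    q = (c * y1 * y1 - 2 * b * y1 * y2 + a * y2 * y2) / det
    return math.exp(-q / 2) / (2 * math.pi * math.sqrt(det))


def kernel_at_scale(x, s, sigma0, delta0):
    """Kernel with Sigma_s = s * Sigma_0 and delta_s = s * delta_0."""
    sigma = [[s * sigma0[0][0], s * sigma0[0][1]], [s * sigma0[1][0], s * sigma0[1][1]]]
    return affine_gaussian(x, sigma, (s * delta0[0], s * delta0[1]))


def diffusion_residual(x, s, sigma0, delta0, h=1e-3):
    """d_s g - (1/2) grad^T (Sigma_0 grad g) + delta_0^T grad g, by central differences."""
    def g(p, ss=s):
        return kernel_at_scale(p, ss, sigma0, delta0)

    x1, x2 = x
    ds = (g(x, s + h) - g(x, s - h)) / (2 * h)
    g1 = (g((x1 + h, x2)) - g((x1 - h, x2))) / (2 * h)
    g2 = (g((x1, x2 + h)) - g((x1, x2 - h))) / (2 * h)
    g11 = (g((x1 + h, x2)) - 2 * g(x) + g((x1 - h, x2))) / h ** 2
    g22 = (g((x1, x2 + h)) - 2 * g(x) + g((x1, x2 - h))) / h ** 2
    g12 = (g((x1 + h, x2 + h)) - g((x1 + h, x2 - h))
           - g((x1 - h, x2 + h)) + g((x1 - h, x2 - h))) / (4 * h ** 2)
    (a, b), (_, c) = sigma0
    diffusion_term = 0.5 * (a * g11 + 2 * b * g12 + c * g22)
    drift_term = delta0[0] * g1 + delta0[1] * g2
    return ds - diffusion_term + drift_term


sigma0 = [[1.0, 0.3], [0.3, 0.5]]
delta0 = (0.2, -0.1)
residual = diffusion_residual((0.4, 0.1), 1.0, sigma0, delta0)
```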

3.1 Gaussian receptive fields

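If we require the corresponding convolution kernels to be rotationally symmetric, then it follows that they will be Gaussians

\[ T(x;\; s) = g(x;\; s) = \frac{1}{2 \pi s} \, e^{-x^T x/(2s)} = \frac{1}{2 \pi s} \, e^{-(x_1^2 + x_2^2)/(2s)} \]

with corresponding Gaussian derivative operators

\[ (\partial_{x^{\alpha}} g)(x;\; s) = (\partial_{x_1^{\alpha_1} x_2^{\alpha_2}} g)(x_1, x_2;\; s) \]

(with \(\alpha = (\alpha _1, \alpha _2)\) where \(\alpha _1\) and \(\alpha _2\) denote the order of differentiation in the \(x_1\)- and \(x_2\)-directions, respectively) as shown in Fig. 6 with the corresponding one-dimensional Gaussian kernel and its Gaussian derivatives of the form:

\[ g(x_1;\; s) = \frac{1}{\sqrt{2 \pi s}} \, e^{-x_1^2/(2s)}, \qquad g_{x_1}(x_1;\; s) = -\frac{x_1}{s} \, g(x_1;\; s), \qquad g_{x_1 x_1}(x_1;\; s) = \frac{x_1^2 - s}{s^2} \, g(x_1;\; s). \]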

Such Gaussian functions have been previously used for modelling biological vision by Young (1987), who has shown that there are receptive fields in the striate cortex that can be well modelled by Gaussian derivatives up to order four. More generally, these Gaussian derivative operators or approximations thereof can be used as a general basis for expressing image operations such as feature detection, feature classification, surface shape, image matching, and image-based recognition (Iijima 1962; Witkin 1983; Koenderink 1984; Koenderink and van Doorn 1992; Lindeberg 1994a, b, 1998a, b, 2008; Florack 1997; Schiele and Crowley 1996, 2000; Lowe 1999, 2004; Chomat et al. 2000; ter Haar Romeny 2003; Linde and Lindeberg 2004, 2012; Bay et al. 2008). Specifically, this receptive field model makes it possible to compute scale-invariant image features and image descriptors (Crowley 1981; Crowley and Stern 1984; Lindeberg 1998a, b, 1999, 2013; Lowe 1999, 2004; Schiele and Crowley 2000; Chomat et al. 2000; Bay et al. 2008). Other necessity results concerning Gaussian and Gaussian derivative kernels have been presented by Iijima (1962), Koenderink (1984), Koenderink and van Doorn (1992), Babaud et al. (1986), Yuille and Poggio (1986), Lindeberg (1990, 1994b, 1996), and Florack and ter Haar Romeny (1992).
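The closed-form one-dimensional Gaussian derivatives \(g_x = -(x/s)\, g\) and \(g_{xx} = ((x^2 - s)/s^2)\, g\) can be checked against finite differences. The sketch below also builds the 2D kernel as a product of two 1D kernels. It confirms that the sampled 1D kernel integrates to one and that the 2D kernel depends only on the distance from the origin (rotational symmetry). Function names are illustrative.

```python
import math


def g(x, s):
    """1D Gaussian kernel with variance s."""
    return math.exp(-x * x / (2 * s)) / math.sqrt(2 * math.pi * s)


def g_x(x, s):
    """First-order Gaussian derivative: -(x / s) g(x; s)."""
    return -x / s * g(x, s)


def g_xx(x, s):
    """Second-order Gaussian derivative: ((x^2 - s) / s^2) g(x; s)."""
    return (x * x - s) / (s * s) * g(x, s)


def g_2d(x1, x2, s):
    """Rotationally symmetric 2D Gaussian, written as a separable product of 1D kernels."""
    return g(x1, s) * g(x2, s)


s = 2.0
# Riemann sum of the sampled kernel (should be very close to one).
mass = sum(g(k * 0.01, s) * 0.01 for k in range(-2000, 2001))
```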

Spatial receptive fields formed by the 2D Gaussian kernel with its partial derivatives up to order two. The corresponding family of receptive fields is closed under translations, rotations, and scaling transformations, meaning that if the underlying image is subject to a set of such image transformations, then it will always be possible to find some possibly other receptive field such that the receptive field responses of the original image and the transformed image can be matched

3.2 Affine-adapted Gaussian receptive fields

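If we relax the requirement of rotational symmetry into a requirement of mirror symmetry through the origin, then it follows that the convolution kernels must instead be affine Gaussian kernels

\[ g(x;\; \varSigma) = \frac{1}{2 \pi \sqrt{\det \varSigma}} \, e^{-x^T \varSigma^{-1} x/2}, \]

where \(\varSigma \) denotes any symmetric positive semi-definite \(2 \times 2\) matrix. This affine scale-space concept is closed under affine transformations, meaning that if, for two affine-related images

\[ f_L(\xi) = f_R(\eta) \quad \text{where} \quad \eta = A\, \xi + b, \]

we define corresponding scale-space representations according to

\[ L(\cdot ;\; \varSigma_L) = g(\cdot ;\; \varSigma_L) * f_L, \qquad R(\cdot ;\; \varSigma_R) = g(\cdot ;\; \varSigma_R) * f_R, \]

then these scale-space representations will be related according to (Lindeberg 1994b; Lindeberg and Gårding 1997)

\[ L(\xi;\; \varSigma_L) = R(\eta;\; \varSigma_R) \]

where

\[ \varSigma_R = A\, \varSigma_L\, A^T \quad \text{and} \quad \eta = A\, \xi + b. \]

In other words, given that an image \(f_L\) is affine transformed into an image \(f_R\), it will always be possible to find a transformation between the scale parameters \(\varSigma_L\) and \(\varSigma_R\) in the two domains that makes it possible to match the corresponding derived internal representations \(L(\cdot ;\; \varSigma_L)\) and \(R(\cdot ;\; \varSigma_R)\).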
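The affine relation can be checked in closed form under two assumptions. First, \(\varSigma_R = A\, \varSigma_L\, A^T\). Second, \(f_L\) is a single cosine grating. The grating is chosen because its Gaussian-smoothed version is known exactly: convolution with \(g(\cdot;\, \varSigma)\) multiplies \(\cos(k^T x + c)\) by \(e^{-k^T \varSigma k/2}\). The matrix \(A\), offset \(b\), frequency \(k\), and evaluation point are arbitrary illustrative values. The smoothed values at corresponding points \(\xi\) and \(\eta = A \xi + b\) should agree when the scale matrices are related by \(\varSigma_R = A\, \varSigma_L\, A^T\), but not when the same \(\varSigma_L\) is used in both domains.

```python
import math


def matmul(P, Q):
    """Product of two 2x2 matrices."""
    return [[sum(P[i][k] * Q[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def transpose(P):
    return [[P[j][i] for j in range(2)] for i in range(2)]


def inverse(P):
    """Inverse of a non-singular 2x2 matrix."""
    det = P[0][0] * P[1][1] - P[0][1] * P[1][0]
    return [[P[1][1] / det, -P[0][1] / det], [-P[1][0] / det, P[0][0] / det]]


def quad(k, S):
    """Quadratic form k^T S k."""
    return sum(k[i] * S[i][j] * k[j] for i in range(2) for j in range(2))


def smoothed_cosine(x, k, phase, sigma):
    """Closed form of g(.; Sigma) * cos(k^T x + phase): the cosine damped by exp(-k^T Sigma k / 2)."""
    return math.exp(-quad(k, sigma) / 2) * math.cos(k[0] * x[0] + k[1] * x[1] + phase)


A = [[1.3, 0.4], [-0.2, 0.9]]   # hypothetical affine deformation
b = (0.5, -1.0)
sigma_L = [[2.0, 0.3], [0.3, 1.0]]
sigma_R = matmul(matmul(A, sigma_L), transpose(A))   # Sigma_R = A Sigma_L A^T

k = (0.8, -1.1)                 # f_L(xi) = cos(k^T xi)
A_inv_T = transpose(inverse(A))
k_R = [sum(A_inv_T[i][j] * k[j] for j in range(2)) for i in range(2)]  # f_R(eta) = cos(k_R^T (eta - b))
phase_R = -(k_R[0] * b[0] + k_R[1] * b[1])

xi = (0.7, -0.4)
eta = (A[0][0] * xi[0] + A[0][1] * xi[1] + b[0], A[1][0] * xi[0] + A[1][1] * xi[1] + b[1])
L_val = smoothed_cosine(xi, k, 0.0, sigma_L)
R_val = smoothed_cosine(eta, k_R, phase_R, sigma_R)
```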

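Figure 7 shows a few examples of such kernels in different directions with the covariance matrix parameterized according to

\[ \varSigma = \begin{pmatrix} \lambda_1 \cos^2 \theta + \lambda_2 \sin^2 \theta & (\lambda_1 - \lambda_2) \cos \theta \sin \theta \\ (\lambda_1 - \lambda_2) \cos \theta \sin \theta & \lambda_1 \sin^2 \theta + \lambda_2 \cos^2 \theta \end{pmatrix} \]

with \(\lambda _1\) and \(\lambda _2\) denoting the eigenvalues and \(\theta \) the orientation. Directional derivatives of these kernels can in turn be obtained from linear combinations of partial derivative operators according to

\[ \partial_{\varphi} = \cos \varphi \, \partial_{x_1} + \sin \varphi \, \partial_{x_2}, \qquad \partial_{\varphi\varphi} = \cos^2 \varphi \, \partial_{x_1 x_1} + 2 \cos \varphi \sin \varphi \, \partial_{x_1 x_2} + \sin^2 \varphi \, \partial_{x_2 x_2}. \]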

This “steerability” property is a basic consequence of the definition of directional derivatives and has been popularized for image processing applications by Freeman and Adelson (1991).
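The covariance parameterization and the steerability property can both be checked numerically. In the sketch below, \(\varSigma\) is built as \(R(\theta)\, \mathrm{diag}(\lambda_1, \lambda_2)\, R(\theta)^T\). The first-order directional derivative orthogonal to the major eigendirection is then obtained as \(\cos\varphi\, g_{x_1} + \sin\varphi\, g_{x_2}\) and compared against a central finite difference along \((\cos\varphi, \sin\varphi)\). The helper names, eigenvalues, and grid are illustrative assumptions.

```python
import numpy as np

def covariance_from_eigen(lam1, lam2, theta):
    """Sigma = R(theta) diag(lam1, lam2) R(theta)^T; the lam1 eigenvector points along theta."""
    c, s = np.cos(theta), np.sin(theta)
    R = np.array([[c, -s], [s, c]])
    return R @ np.diag([lam1, lam2]) @ R.T

def affine_gaussian_and_gradient(x1, x2, Sigma):
    """Affine Gaussian g(x; Sigma) and its partial derivatives g_x1, g_x2."""
    P = np.linalg.inv(Sigma)
    quad = P[0, 0] * x1**2 + 2 * P[0, 1] * x1 * x2 + P[1, 1] * x2**2
    g = np.exp(-0.5 * quad) / (2 * np.pi * np.sqrt(np.linalg.det(Sigma)))
    # grad g(x) = -Sigma^{-1} x g(x)
    g1 = -(P[0, 0] * x1 + P[0, 1] * x2) * g
    g2 = -(P[1, 0] * x1 + P[1, 1] * x2) * g
    return g, g1, g2

theta = np.pi / 6                 # major eigendirection (as for Sigma_1 in Fig. 7)
phi = theta + np.pi / 2           # derivative direction orthogonal to it
Sigma = covariance_from_eigen(4.0, 1.0, theta)

grid = np.linspace(-6.0, 6.0, 61)
x1, x2 = np.meshgrid(grid, grid, indexing="ij")
g, g1, g2 = affine_gaussian_and_gradient(x1, x2, Sigma)

# Steering: the directional derivative as a linear combination of partial derivatives
steered = np.cos(phi) * g1 + np.sin(phi) * g2

# Reference: central finite difference along the unit vector (cos phi, sin phi)
h = 1e-4
e1, e2 = h * np.cos(phi), h * np.sin(phi)
g_plus = affine_gaussian_and_gradient(x1 + e1, x2 + e2, Sigma)[0]
g_minus = affine_gaussian_and_gradient(x1 - e1, x2 - e2, Sigma)[0]
finite_diff = (g_plus - g_minus) / (2 * h)
```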

Spatial receptive fields formed by affine Gaussian kernels and directional derivatives of these, here using three different covariance matrices \(\varSigma _1\), \(\varSigma _2\), and \(\varSigma _3\) corresponding to the directions \(\theta _1 = \pi /6\), \(\theta _2 = \pi /3\), and \(\theta _3 = 2\pi /3\) of the major eigendirection of the covariance matrix and with first- and second-order directional derivatives computed in the corresponding orthogonal directions \(\varphi _1\), \(\varphi _2\), and \(\varphi _3\). The corresponding family of receptive fields is closed under general affine transformations of the spatial domain, including translations, rotations, scaling transformations, and perspective foreshortening. (This figure only illustrates variations in the orientation of the filter and disregards variations in both size and degree of elongation.)

With respect to biological vision, the affine Gaussian kernels as well as directional derivatives of these can be used for modelling receptive fields that are oriented in the spatial domain, as will be described in connection with Eq. (111) in Sect. 6. For computational vision, they can be used for computing affine-invariant image features and image descriptors for, e.g., cues to surface shape, image-based matching, and recognition (Lindeberg 1994b; Lindeberg and Gårding 1997; Baumberg 2000; Mikolajczyk and Schmid 2004; Tuytelaars and Van Gool 2004; Lazebnik et al. 2005; Rothganger et al. 2006).

Figure 8 shows the distributions of affine receptive fields of different orientations and degrees of elongation as they arise from local linearizations of a perspective projection model. These distributions assume that the set of surface directions in the world is on average uniformly distributed, and that the local surface patterns on these object surfaces are in turn without dominant directional bias and uncoupled from the orientations of the local surface patches. In our idealized model of receptive fields, all these receptive fields can be thought of as being present at every position in image space, corresponding to a uniform distribution on a hemisphere.
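One way to generate such a distribution is to sample surface normals uniformly on the visible hemisphere and project an isotropic surface pattern into the image. The sketch below simplifies the perspective model to a local orthographic projection, which is an assumption of this example. Under that approximation, a patch with slant σ and tilt direction τ is compressed by cos σ along the tilt direction, so the resulting affine Gaussian has an axis ratio of cos σ. The helper name and sample size are hypothetical.

```python
import numpy as np

def sample_foreshortened_covariances(n, s=1.0, seed=0):
    """Hypothetical helper: image-plane covariances of affine Gaussian receptive
    fields induced by an isotropic surface pattern (variance s) on surface patches
    with uniformly distributed orientations, assuming local orthographic projection."""
    rng = np.random.default_rng(seed)
    # Uniform normals on the hemisphere <=> cos(slant) uniform on [0, 1]
    cos_slant = rng.uniform(0.0, 1.0, n)
    tilt = rng.uniform(0.0, 2.0 * np.pi, n)

    R = np.empty((n, 2, 2))              # rotation with the tilt direction as first column
    R[:, 0, 0] = np.cos(tilt)
    R[:, 0, 1] = -np.sin(tilt)
    R[:, 1, 0] = np.sin(tilt)
    R[:, 1, 1] = np.cos(tilt)

    D = np.zeros((n, 2, 2))              # variances along and across the tilt direction
    D[:, 0, 0] = s * cos_slant**2
    D[:, 1, 1] = s
    Sigma = R @ D @ np.transpose(R, (0, 2, 1))
    return Sigma, cos_slant, tilt

Sigma, cos_slant, tilt = sample_foreshortened_covariances(1000)
eig = np.linalg.eigvalsh(Sigma)                               # ascending eigenvalues
elongation = np.sqrt(np.clip(eig[:, 0], 0.0, None) / eig[:, 1])  # minor/major axis ratio
```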

Distributions of affine Gaussian receptive fields corresponding to a uniform distribution on a hemisphere regarding (top) zero-order smoothing kernels and (bottom) first-order derivatives. In the most idealized version of the theory, one can think of all affine receptive fields as being present at any position in the image domain. When restricted to a limited number of receptive fields in an actual implementation, there is also an issue of distributing a fixed number of receptive fields over the spatial coordinates \(x\) and the filter parameters \(\varSigma \)

3.3 Necessity of derived receptive fields in terms of derivatives

Due to the linearity of the differential equation (57), which has been derived by necessity from the structural requirements, it follows that the result of applying a linear operator \(\mathcal{D}\) to the solution \(L\) will also satisfy the differential equation, however, with a different initial condition

\[ \lim_{s \downarrow 0} \mathcal{D} L(\cdot;\; s) = \mathcal{D} f. \]

The result of applying a linear operator \(\mathcal{D}\) to the scale-space representation \(L\) will therefore satisfy the above-mentioned structural requirements of linearity, shift invariance, the weaker form of rotational invariance at the group level, and non-enhancement of local extrema, with the semigroup structure (8) replaced by the cascade property

\[ \mathcal{D} L(\cdot;\; s_2) = \mathcal{T}_{s_2 - s_1}\, \mathcal{D} L(\cdot;\; s_1), \qquad s_2 \geq s_1 \geq 0. \]

One may then ask whether any linear operator \(\mathcal{D}\) would be reasonable. From the requirement of scale invariance, however, it follows that the operator \(\mathcal{D}\) must have infinitesimal support. A non-infinitesimal support \(s_0 > 0\) would violate the requirement of self-similarity over scale (10), and it would then not be possible to perform image measurements at scale levels finer than \(s_0\). Thus, any receptive field operator derived from the scale-space representation in a manner compatible with the structural arguments must correspond to local derivatives. The illustrations above show partial derivatives and directional derivatives up to order two.
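The cascade property can be checked numerically in 1D. The sketch below takes \(f\) to be an impulse and \(\mathcal{D} = \partial_x\). With those choices, \(\mathcal{D}L\) at scale \(s\) is the Gaussian derivative \(g_x(\cdot;\; s)\), and smoothing \(g_x(\cdot;\; s_1)\) by a further Gaussian of variance \(s_2 - s_1\) should reproduce \(g_x(\cdot;\; s_2)\). Several details are assumptions of this example: \(\mathcal{T}_s\) is taken to be Gaussian convolution with variance \(s\), and the grid spacing and scale values are arbitrary.

```python
import numpy as np

def g(x, s):
    """1D Gaussian kernel with variance s."""
    return np.exp(-x**2 / (2 * s)) / np.sqrt(2 * np.pi * s)

def g_x(x, s):
    """First-order derivative of the 1D Gaussian kernel."""
    return -x / s * g(x, s)

dx = 0.01
x = np.arange(-2000, 2001) * dx          # symmetric grid on [-20, 20]
s1, s2 = 1.0, 3.0

# D L at the finer scale s1, smoothed further by T_{s2 - s1} (Riemann-sum convolution)
cascaded = np.convolve(g(x, s2 - s1), g_x(x, s1), mode="same") * dx
# D L computed directly at the coarser scale s2
direct = g_x(x, s2)
```

The same check works for higher-order derivatives, since \(\mathcal{D}\) commutes with the Gaussian smoothing.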

For directional derivatives that have been derived from elongated kernels whose underlying zero-order convolution kernels are not rotationally symmetric, it should be noted that we have aligned the directions of the directional derivative operators to the orientations of the underlying kernels. A structural motivation for making such an alignment can be obtained from a requirement of a weaker form of rotational symmetry at the group level. If we would like the family of receptive fields to be rotationally symmetric as a group, then it is natural to require the directional derivative operators to be transformed in a similar way as the underlying kernels.

4 Spatial domain with color information

To define a corresponding scale-space concept for color images, the simplest approach would be to compute a Gaussian scale-space representation for each color channel individually. Since the values of the color channels will usually be highly correlated, it is, however, preferable to decorrelate the dependencies by computing a color-opponent representation. Such a representation is also in good agreement with human vision, where a separation into red/green and yellow/blue color-opponent channels takes place at an early stage in the visual pathways.

4.1 Gaussian color-opponent receptive fields

Given three RGB channels obtained from a color sensor, consider a color-opponent transformation of the form (Hall et al. 2000)

where yellow is approximated by the average of the \(R\) and \(G\) channels \(Y = (R + G)/2\) and \(f = (R + G + B)/3\) is defined as a channel of pure intensity information. Then, a Gaussian color-opponent scale-space representation \((C^{(1)}, C^{(2)})\) can be defined by applying Gaussian convolution to the color-opponent channels \((c^{(1)}, c^{(2)})^T\):

\[ C^{(i)}(\cdot;\; \varSigma) = g(\cdot;\; \varSigma) * c^{(i)}(\cdot), \qquad i = 1, 2. \]

Figure 9 shows equivalent spatio-chromatic receptive fields corresponding to the application of Gaussian derivative operators according to (59) to such color-opponent channels. Figure 10 shows examples of applying corresponding directional derivatives according to (69).
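A minimal sketch of the color-opponent representation follows. It applies a linear opponent transform and then separable Gaussian smoothing to each channel. Only \(f = (R+G+B)/3\) and \(Y = (R+G)/2\) come from the text. The exact scaling of \(c^{(1)}\) (red/green) and \(c^{(2)}\) (yellow/blue) below is an assumption, and so are the helper names and the zero-padded border handling. Derivative-based spatio-chromatic receptive fields would apply Gaussian derivative kernels to the smoothed \(C^{(1)}\) and \(C^{(2)}\) channels in the same way.

```python
import numpy as np

# Assumed opponent transform: f and Y follow the text; the c1/c2 scaling is illustrative.
OPPONENT = np.array([
    [1 / 3, 1 / 3, 1 / 3],   # f  = (R + G + B) / 3
    [1 / 2, -1 / 2, 0.0],    # c1 = (R - G) / 2            (red/green)
    [1 / 2, 1 / 2, -1.0],    # c2 = Y - B, Y = (R + G) / 2 (yellow/blue)
])

def gaussian_kernel_1d(s):
    """Sampled 1D Gaussian with variance s, normalized to unit sum."""
    r = int(np.ceil(4 * np.sqrt(s)))
    x = np.arange(-r, r + 1)
    k = np.exp(-x**2 / (2 * s))
    return k / k.sum()

def smooth(channel, s):
    """Separable Gaussian smoothing of a 2D array, zero padding at the border."""
    k = gaussian_kernel_1d(s)
    tmp = np.apply_along_axis(np.convolve, 0, channel, k, mode="same")
    return np.apply_along_axis(np.convolve, 1, tmp, k, mode="same")

def color_opponent_scale_space(rgb, s):
    """Smoothed (f, C1, C2) for an H x W x 3 RGB array at scale s."""
    f, c1, c2 = np.einsum("ij,hwj->ihw", OPPONENT, rgb)
    return smooth(f, s), smooth(c1, s), smooth(c2, s)

gray = np.full((32, 32, 3), 0.5)
F_gray, C1_gray, C2_gray = color_opponent_scale_space(gray, 2.0)
```

For a gray image (R = G = B), both opponent channels vanish, which is the decorrelation the text motivates.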

In Hall et al. (2000), Linde and Lindeberg (2004, 2012), and van de Sande et al. (2010), it is shown how such spatio-chromatic receptive fields, in combination with regular spatial receptive fields, can constitute an effective basis for object recognition.

Another type of Gaussian color model has been proposed by Koenderink and later used by Geusebroek and his co-workers (Burghouts and Geusebroek 2009). In this model, receptive fields are defined over the wavelength spectrum and correspond to zero-, first-, and second-order derivatives with respect to wavelength.

5 Spatio-temporal image data

5.1 Non-causal spatio-temporal receptive fields

Let us first apply a similar way of reasoning as in Sect. 3, with space \(x \in {\mathbb R}^2\) replaced by space–time \((x, t)^T \in {\mathbb R}^2 \times {\mathbb R}\) and disregarding temporal causality, thereby allowing unlimited access to information over both space and time. Given image data \(f :{\mathbb R}^2 \times {\mathbb R}\rightarrow {\mathbb R}\) defined over a 2+1D spatio-temporal domain, let us therefore again assume that the first stage of visual processing, as represented by the operator \(\mathcal{T}_s\), should (i) be linear, (ii) be shift invariant, and (iii) obey a semigroup structure over both spatial and temporal scales \(s\). We also assume (iv) certain regularity properties of the semigroup \(\mathcal{T}_s\) over scale \(s\) in terms of Sobolev norms, to guarantee sufficient differentiability properties with respect to space \(x\), time \(t\), and spatio-temporal scales \(s\). Let us furthermore require (v) non-enhancement of local extrema to hold for any smooth image function \(f \in C^{\infty }({\mathbb R}^2 \times {\mathbb R}) \cap L^1({\mathbb R}^2 \times {\mathbb R})\) and for any positive scale direction \(s\).

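Then, it follows from Lindeberg (2011, Theorem 5, page 42) that the scale-space representation over a 2+1D spatio-temporal domain must satisfy

\[ \partial_s L = \frac{1}{2} \nabla_{(x, t)}^T \left( \varSigma_0\, \nabla_{(x, t)} L \right) - \delta_0^T\, \nabla_{(x, t)} L \]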
for some \(3 \times 3\) covariance matrix \(\varSigma _0\) and some 3D vector \(\delta _0\) with \(\nabla _{(x, t)} = (\partial _{x_1}, \partial _{x_2}, \partial _t)^T\).

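In terms of convolution kernels, the zero-order receptive fields will then be spatio-temporal Gaussian kernels

\[ g(p;\; \varSigma, \delta) = \frac{1}{(2\pi)^{3/2} \sqrt{\det \varSigma}}\, e^{-(p - \delta)^T \varSigma^{-1} (p - \delta)/2} \]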
with \(p = (x, t)^T = (x_1, x_2, t)^T\),

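where (i) \(\lambda _1\), \(\lambda _2\), and \(\theta \) determine the spatial extent, (ii) \(\lambda _t\) determines the temporal extent, (iii) \(v = (v_1, v_2)^T\) denotes the image velocity, and (iv) \(\delta \) represents a temporal delay. Together, these parameters correspond to a coupling between the spatial and temporal dimensions of the form

\[ g(x, t;\; s, \tau, v, \delta) = g(x - v t;\; s)\, \bar{g}(t;\; \tau, \delta), \]

where \(\bar{g}(t;\; \tau , \delta )\) denotes a one-dimensional Gaussian kernel over time with temporal extent \(\tau \) and temporal delay \(\delta \). From the corresponding Gaussian spatio-temporal scale space

\[ L(x, t;\; s, \tau, v, \delta) = \left( g(\cdot, \cdot;\; s, \tau, v, \delta) * f \right)(x, t), \]

spatio-temporal derivatives can then be defined according to

\[ L_{x^{\alpha} t^{\gamma}}(x, t;\; s, \tau, v, \delta) = \partial_{x^{\alpha}}\, \partial_{t^{\gamma}}\, L(x, t;\; s, \tau, v, \delta), \]

with corresponding velocity-adapted derivatives

\[ \partial_{\bar{x}} = \partial_x, \qquad \partial_{\bar{t}} = v^T \nabla_x + \partial_t = v_1\, \partial_{x_1} + v_2\, \partial_{x_2} + \partial_t, \]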
as illustrated in Figs. 12 and 13 for the case of a 1+1D space–time. Motivated by the requirement of Galilean covariance, it is natural to align the directions \(v\) in space–time for which these velocity-adapted spatio-temporal derivatives are computed with the velocity values used in the underlying zero-order spatio-temporal kernels, since the resulting velocity-adapted spatio-temporal derivatives will then be Galilean covariant. Such receptive fields or approximations thereof can be used for modelling spatio-temporal receptive fields in biological vision (Lindeberg 1997, 2001, 2011; Young et al. 2001; Young and Lesperance 2001) and for computing spatio-temporal image features and image descriptors for spatio-temporal recognition in computer vision (Zelnik-Manor and Irani 2001; Laptev and Lindeberg 2003, 2004a, b; Laptev et al. 2007; Willems et al. 2008).
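The reason for matching \(v\) can be seen on a pattern that translates with constant image velocity \(v\). After any shift-invariant smoothing, its representation is a function of \(x - vt\) only. The velocity-adapted derivative \(\partial_{\bar t} = v\, \partial_x + \partial_t\) therefore vanishes, while the plain temporal derivative does not. The 1+1D sketch below uses two illustrative choices that are assumptions of this example: an arbitrary smooth profile in place of \(L\), and finite differences via `np.gradient`.

```python
import numpy as np

v = 0.75                                 # image velocity of the translating pattern
dx = dt = 0.01
x = np.arange(-500, 500) * dx            # space (axis 0)
t = np.arange(-500, 500) * dt            # time (axis 1)
X, T = np.meshgrid(x, t, indexing="ij")

# Any smooth function of x - v t stands in for the scale-space representation
# of a pattern translating with velocity v.
U = X - v * T
L = np.exp(-U**2 / 2) * np.cos(3 * U)

L_x, L_t = np.gradient(L, dx, dt, edge_order=2)
L_tbar_matched = v * L_x + L_t           # velocity-adapted derivative, matched velocity
L_tbar_unadapted = L_t                   # plain temporal derivative (v = 0)
```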

Parameterization of the spatio-temporal covariance matrix for the Gaussian spatio-temporal scale space in terms of the spatial eigenvalues \(\lambda _1\) and \(\lambda _2\) with the associated orientation \(\theta \) for the purely spatial covariance matrix, the image velocity \(v = (v_1, v_2)^T\), and the amount of temporal smoothing \(\lambda _t\)
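The parameterization in this caption can be written out explicitly. This sketch assumes the space–time coupling described above, where \(x - vt\) is Gaussian with a \(2 \times 2\) spatial covariance (eigenvalues \(\lambda_1\), \(\lambda_2\), orientation \(\theta\)) and is independent of \(t\), which has variance \(\lambda_t\). The \(3 \times 3\) covariance over \((x_1, x_2, t)\) then follows directly. The sketch also checks that the velocity-adapted covariance equals \(G_v \varSigma(0) G_v^T\), where \(G_v\) is the Galilean matrix mapping \((x, t)\) to \((x + vt, t)\). Function names and numerical values are illustrative.

```python
import numpy as np

def spatial_covariance(lam1, lam2, theta):
    """2x2 spatial covariance with eigenvalues lam1, lam2 and orientation theta."""
    c, s = np.cos(theta), np.sin(theta)
    R = np.array([[c, -s], [s, c]])
    return R @ np.diag([lam1, lam2]) @ R.T

def spatiotemporal_covariance(lam1, lam2, theta, v, lam_t):
    """3x3 covariance over (x1, x2, t), assuming x - v t has the spatial
    covariance above and is independent of t, which has variance lam_t."""
    v = np.asarray(v, dtype=float)
    Sigma = np.empty((3, 3))
    Sigma[:2, :2] = spatial_covariance(lam1, lam2, theta) + lam_t * np.outer(v, v)
    Sigma[:2, 2] = lam_t * v
    Sigma[2, :2] = lam_t * v
    Sigma[2, 2] = lam_t
    return Sigma

def galilean_matrix(v):
    """G_v mapping (x1, x2, t) to (x1 + v1 t, x2 + v2 t, t)."""
    G = np.eye(3)
    G[:2, 2] = v
    return G

v = np.array([0.75, -0.3])
Sigma_0 = spatiotemporal_covariance(2.0, 0.5, np.pi / 3, [0.0, 0.0], 1.5)  # separable
Sigma_v = spatiotemporal_covariance(2.0, 0.5, np.pi / 3, v, 1.5)           # velocity-adapted
G = galilean_matrix(v)
```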

Velocity-adapted spatio-temporal kernels \(g_{\bar{x}^{\alpha }\bar{t}^{\gamma }}(x, t;\; s, \tau , v, \delta )\) up to order two obtained from the Gaussian spatio-temporal scale space in the case of a 1+1D space–time (\(s = 1\), \(\tau = 1\), \(v = 0.75\), \(\delta = 2\)) (horizontal axis: space \(x\), vertical axis: time \(t\))
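For the 1+1D case in this caption, the chain rule shows how the velocity-adapted derivatives act on the kernel \(g(x - vt;\; s)\, \bar g(t;\; \tau, \delta)\). The derivative \(\partial_{\bar x} = \partial_x\) acts only on the spatial factor, and \(\partial_{\bar t} = v\, \partial_x + \partial_t\) acts only on the temporal factor. The kernels up to order two are therefore products of 1D Gaussian derivatives. The sketch below evaluates them with the caption's parameters (\(s = 1\), \(\tau = 1\), \(v = 0.75\), \(\delta = 2\)). Treating \(s\) and \(\tau\) as variances is an assumption, as are the helper names and the grid.

```python
import numpy as np

def gauss_1d(u, var, mean=0.0):
    """1D Gaussian with given variance and mean, plus its first two derivatives."""
    d = u - mean
    g = np.exp(-d**2 / (2 * var)) / np.sqrt(2 * np.pi * var)
    return g, -d / var * g, (d**2 - var) / var**2 * g

def velocity_adapted_kernels(x, t, s=1.0, tau=1.0, v=0.75, delta=2.0):
    """g(x - v t; s) gbar(t; tau, delta) and velocity-adapted derivatives up to
    order two on a 1+1D grid (axis 0: x, axis 1: t)."""
    X, T = np.meshgrid(x, t, indexing="ij")
    U = X - v * T                          # coordinate along the motion direction
    gs, gs1, gs2 = gauss_1d(U, s)          # spatial factor and its derivatives
    gt, gt1, gt2 = gauss_1d(T, tau, delta) # temporal factor and its derivatives
    return {
        "g": gs * gt,
        "g_xbar": gs1 * gt,
        "g_tbar": gs * gt1,
        "g_xbar_xbar": gs2 * gt,
        "g_xbar_tbar": gs1 * gt1,
        "g_tbar_tbar": gs * gt2,
    }

dx = dt = 0.05
x = np.arange(-10.0, 12.0 + dx / 2, dx)
t = np.arange(-4.0, 8.0 + dt / 2, dt)
K = velocity_adapted_kernels(x, t)
```

The zero-order kernel peaks at \((x, t) = (v\delta, \delta)\), which makes the temporal delay visible as a shift along the motion direction.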

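Transformation property under Galilean transformations Under a Galilean transformation of space–time (27), in matrix form written

\[ p' = G_v\, p, \qquad G_v = \begin{pmatrix} 1 & 0 & v_1 \\ 0 & 1 & v_2 \\ 0 & 0 & 1 \end{pmatrix}, \]

corresponding to

\[ x' = x + v t, \qquad t' = t, \]

the corresponding Gaussian spatio-temporal representations are related in a way algebraically similar to (64)–(66) for the affine Gaussian scale space, with the affine transformation matrix \(A\) replaced by a Galilean transformation matrix \(G_v\). In other words, suppose two spatio-temporal image patterns \(f_L\) and \(f_R\) are related by a Galilean transformation encompassing a translation \(\Delta p = (\Delta x_1, \Delta x_2, \Delta t)^T\) in space–time

\[ f_L(p) = f_R(q), \qquad q = G_v\, p + \Delta p, \]

and corresponding spatio-temporal scale-space representations are defined according to

\[ L(\cdot;\; \varSigma_L, \delta_L) = g(\cdot;\; \varSigma_L, \delta_L) * f_L(\cdot), \qquad R(\cdot;\; \varSigma_R, \delta_R) = g(\cdot;\; \varSigma_R, \delta_R) * f_R(\cdot) \]

for general spatio-temporal covariance matrices \(\varSigma _L\) and \(\varSigma _R\) of the form (77). Then these spatio-temporal scale-space representations will be related according to

\[ L(p;\; \varSigma_L, \delta_L) = R(q;\; \varSigma_R, \delta_R), \]

where

\[ \varSigma_R = G_v\, \varSigma_L\, G_v^T \]

and

\[ \delta_R = G_v\, \delta_L. \]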

5.2 Time-causal spatio-temporal receptive fields

If, on the other hand, we want to respect both temporal causality and temporal recursivity, as required for real-time biological vision, we obtain different families of receptive fields. Specifically, two different families of time-causal receptive fields can be derived depending on whether we (i) require a continuous semigroup structure over a continuum of temporal scales or (ii) fix the temporal scale levels to be discrete a priori.

Time-causal semigroup Given the requirements of (i) linearity and (ii) spatial and temporal shift invariance, we further require the scale-space kernels to be (iii) time-causal and the visual front end to be (iv) time recursive. Time recursivity here means that the internal image representations \(L(x, t;\; s, \tau )\) at different spatial scales \(s\) and temporal scales \(\tau \) also constitute a sufficient internal temporal memory \(M(x, t)\) of the past, without any further need for temporal buffering. To adapt the convolution semigroup structure to a time-recursive setting, we require the spatio-temporal scale-space concept

to be generated by a (v) two-parameter semigroup over spatial scales \(s\) and time \(t\)

Then, it can be shown (Lindeberg 2011, Theorem 17, page 78) that provided we impose (vi) certain regularity properties on the semigroup in terms of Sobolev norms to ensure differentiability (Lindeberg 2011, Appendix E), then (vii) the time-recursive formulation of non-enhancement of local extrema in Eq. (34) with respect to a continuum of both spatial and temporal scale levels implies that the semigroup must satisfy the following system of diffusion equations

In terms of receptive fields, this spatio-temporal scale space can be computed by convolution kernels of the form

\[ g(x - v t;\; s;\; \varSigma)\, \phi(t;\; \tau), \]

where

- \(g(x - v t;\; s;\; \varSigma)\) is a velocity-adapted 2D affine Gaussian kernel with spatial covariance matrix \(\varSigma \) and
- \(\phi (t;\; \tau )\) is a time-causal smoothing kernel over time with temporal scale parameter \(\tau \), which is related to the regular one-dimensional Gaussian kernel according to \(\phi (t;\; \tau ) = - \partial _{\tau } g(\tau ;\; t)\). (Please note the shift of the order of the arguments between \(\phi \) and \(g\).)
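Differentiating the Gaussian \(g(\tau;\; t) = e^{-\tau^2/(2t)}/\sqrt{2\pi t}\) with respect to \(\tau\) gives a closed form for the time-causal kernel: \(\phi(t;\; \tau) = \tau/(\sqrt{2\pi}\, t^{3/2})\, e^{-\tau^2/(2t)}\) for \(t > 0\). The sketch below checks this relation by a central finite difference in \(\tau\). It also compares the kernel mass on \((0, T]\) with its exact value \(\mathrm{erfc}(\tau/\sqrt{2T})\). The function names, evaluation points, and integration grid are choices made for this illustration.

```python
import math
import numpy as np

def gaussian_in_tau(tau, t):
    """g(tau; t): 1D Gaussian in tau with variance t (t > 0)."""
    return np.exp(-tau**2 / (2 * t)) / np.sqrt(2 * np.pi * t)

def phi(t, tau):
    """Time-causal kernel phi(t; tau) = -d/dtau g(tau; t), set to zero for t <= 0."""
    t = np.asarray(t, dtype=float)
    out = np.zeros_like(t)
    pos = t > 0
    out[pos] = tau / (np.sqrt(2 * np.pi) * t[pos]**1.5) * np.exp(-tau**2 / (2 * t[pos]))
    return out

tau = 1.0
t = np.linspace(0.1, 5.0, 50)
h = 1e-5
# Central difference in tau: -d/dtau g(tau; t)
fd = -(gaussian_in_tau(tau + h, t) - gaussian_in_tau(tau - h, t)) / (2 * h)

# Mass on (0, T] by the trapezoidal rule on a logarithmic grid
T = 1e4
tt = np.logspace(-4, np.log10(T), 200001)
vals = phi(tt, tau)
mass = np.sum(0.5 * (vals[1:] + vals[:-1]) * np.diff(tt))
expected_mass = math.erfc(tau / math.sqrt(2 * T))
```

The mass tends to 1 only slowly as \(T\) grows, reflecting the heavy tail of \(\phi\) over time.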

From these kernels, spatio-temporal partial derivatives and velocity-adapted derivatives can be computed in the same way as in (81) and (82) for the Gaussian spatio-temporal scale-space concept. Figures 14 and 15 show examples of such time-causal spatio-temporal kernels with their partial spatio-temporal derivatives in the space–time separable case with \(v = 0\).
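In the space–time separable case \(v = 0\), the time-causal kernel reduces to the product \(g(x;\; s)\, \phi(t;\; \tau)\). The sketch below samples this product on a 1+1D grid and computes its first-order partial derivatives by finite differences. It then checks that the kernel vanishes for \(t \leq 0\) and that its temporal profile at \(x = 0\) peaks near \(t = \tau^2/3\), the maximum of \(t^{-3/2} e^{-\tau^2/(2t)}\). Treating \(s\) as a variance, the helper names, and the grid are assumptions of this example.

```python
import numpy as np

def gauss(x, s):
    """1D Gaussian with variance s."""
    return np.exp(-x**2 / (2 * s)) / np.sqrt(2 * np.pi * s)

def phi(t, tau):
    """Time-causal kernel phi(t; tau), zero for t <= 0."""
    t = np.asarray(t, dtype=float)
    out = np.zeros_like(t)
    pos = t > 0
    out[pos] = tau / (np.sqrt(2 * np.pi) * t[pos]**1.5) * np.exp(-tau**2 / (2 * t[pos]))
    return out

def time_causal_kernel(x, t, s=1.0, tau=1.0, v=0.0):
    """Sampled kernel g(x - v t; s) phi(t; tau) on a 1+1D grid (axis 0: x, axis 1: t)."""
    X, T = np.meshgrid(x, t, indexing="ij")
    return gauss(X - v * T, s) * phi(T, tau)

x = np.linspace(-5.0, 5.0, 201)
t = np.linspace(-1.0, 6.0, 281)
kernel = time_causal_kernel(x, t)
k_x, k_t = np.gradient(kernel, x, t)     # first-order partial derivatives
```

Setting `v` to a non-zero value shears the kernel in space–time while keeping it time-causal.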