Abstract

Observations show that the surface diurnal temperature range (DTR) has decreased since the 1950s over most global land areas due to a smaller warming in maximum temperatures (T max) than in minimum temperatures (T min). This paper analyzes the trends and variability in T max, T min, and DTR over land in observations and 48 simulations from 12 global coupled atmosphere-ocean general circulation models for the latter half of the 20th century. It uses the modeled changes in surface downward solar and longwave radiation to interpret the modeled temperature changes. When anthropogenic and natural forcings are included, the models generally reproduce the major observed features of the warming of T max and T min and the reduction of DTR. As expected, the greenhouse-gas-enhanced surface downward longwave radiation (DLW) explains most of the warming of T max and T min, while decreased surface downward shortwave radiation (DSW) due to increasing aerosols and water vapor contributes most to the decreases in DTR in the models. When only natural forcings are used, none of the observed trends are simulated. The simulated DTR decreases are much smaller than the observed (mainly due to the small simulated T min trend) but still outside the range of natural internal variability estimated from the models. The much larger observed decrease in DTR suggests the possibility of additional regional effects of anthropogenic forcing that the models cannot realistically simulate, likely connected to changes in cloud cover, precipitation, and soil moisture. The small magnitude of the simulated DTR trends may be attributed to the lack of an increasing trend in cloud cover and deficiencies in characterizing aerosols and important surface and boundary-layer processes in the models.


1 Introduction

Most of the observed increase in global average temperatures since the mid-20th century has been attributed to the observed increase in anthropogenic greenhouse gases (GHGs) (IPCC 2007). One distinct climate feature associated with this warming over land is the widespread decrease of diurnal temperature range (DTR) due to a larger warming in minimum air temperature (T min) than in maximum air temperature (T max) (Karl et al. 1993; Easterling et al. 1997). Observational analyses have attributed the reduction of DTR primarily to increases in cloud cover and secondarily to increases in precipitation and soil moisture (e.g., Dai et al. 1999). The observed DTR trends may be affected by the large-scale effects of increased GHGs and aerosols (Zhou et al. 2007, 2008) and also by regional changes in land surface properties (Collatz et al. 2000; Zhou et al. 2004, 2007).

Surface temperature extremes have likely been affected by human activities and many indicators of temperature extremes show changes that are consistent with warming (IPCC 2007). Global coupled atmosphere-ocean general circulation models (AOGCMs) are generally able to reproduce the observed warming in the 20th century when natural (i.e., volcanic aerosols and solar variability) and anthropogenic (i.e., GHGs and sulfate aerosols) forcings are included (IPCC 2007). The global mean temperature changes in the first half of the century were partly a response to natural forcings, but the warming in the latter half of the century has mostly resulted from anthropogenic forcing (e.g., Meehl et al. 2004). The models also show a decrease in DTR, but of a magnitude much smaller than that observed (Stenchikov and Robock 1995; Stone and Weaver 2002, 2003; Karoly et al. 2003; Braganza et al. 2004; Zhou et al. 2009).

Although many studies have addressed the attribution of global warming to anthropogenic forcing (IPCC 2007), the question remains as to whether the observed changes in DTR are primarily attributable to human activities. This paper analyzes both observed and simulated changes in the diurnal cycle of temperature to clarify whether their differences are a consequence of deficiencies in the models and to enhance the interpretation of anthropogenic causes of such changes. It focuses on detecting and attributing anthropogenic signals in the simulated and observed diurnal cycle of temperature from 1950 to 1999, by comparing the latter 20th century climate simulations from 12 global coupled AOGCMs with observations. Most of these models were used in the fourth assessment report (AR4) of the Intergovernmental Panel on Climate Change (IPCC). Observed and simulated T max, T min and DTR changes and their associated variables are analyzed.

2 Model simulations and observational data

We analyze monthly means of daily T max, T min, and DTR data from AOGCM simulations during the 20th century (20C3M), which are available with different spatial resolutions at monthly or daily scales from the data portals of PCMDI (http://www-pcmdi.llnl.gov/), NCAR (http://www.earthsystemgrid.org/) and GFDL (http://nomads.gfdl.noaa.gov/). The 20C3M simulations include time-evolving historical changes in anthropogenic (e.g., GHGs, sulfate aerosols) and/or natural (solar irradiance and volcanic aerosols) forcings. Most of the models have performed multi-member ensemble simulations, which are divided into two groups (Table 1): one with anthropogenic and natural forcings (referred to as ALL) and the other with only natural forcings (referred to as NAT). In total, there are 36 ALL simulations from 12 models and 12 NAT simulations from 3 of these models. Averaging over multiple members enhances the forcing signal and reduces noise from internal variability and errors from individual models (IPCC 2007). For most cases, we simply averaged the 36 or 12 simulations to derive the multi-model ensemble mean and its standard deviation (STD). To evaluate the performance of individual models, we also performed similar averaging for each model if more than one ensemble run is available.
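The ensemble averaging described above can be sketched as follows. This is a minimal illustration rather than the processing code used for the paper: the function name `ensemble_mean_and_std` and the list-of-lists layout are hypothetical, and the sample (n − 1) standard deviation is an assumption, since the text does not state which estimator was used.

```python
from statistics import mean, stdev


def ensemble_mean_and_std(simulations):
    """Return per-time-step mean and STD across simulations.

    `simulations` is a list of equal-length time series, one per run
    (hypothetical layout chosen for this sketch).
    """
    n_times = len(simulations[0])
    if any(len(run) != n_times for run in simulations):
        raise ValueError("all simulations must cover the same time steps")

    means, stds = [], []
    for t in range(n_times):
        values = [run[t] for run in simulations]
        means.append(mean(values))
        # Assumption: sample STD (n - 1); undefined for a single run, so use 0.
        stds.append(stdev(values) if len(values) > 1 else 0.0)
    return means, stds
```

Applied to the 36 ALL (or 12 NAT) series, the two returned lists would correspond to the ensemble-mean curve and the one-STD envelope.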

The model simulated temperature changes were compared with monthly mean values (°C) of observed daily T max, T min, and DTR anomalies (relative to the 1961–1990 mean) at 5° longitude × 5° latitude grid boxes over global land areas (Vose et al. 2005). This observational dataset has improved spatial coverage and data homogeneity for the period 1950–2004. It has data over 70% of the land areas for all years during the base period of 1961–1990 but the coverage gradually decreases toward both ends of the record.

To attribute changes in the temperatures, observed and simulated total cloud cover (TCC, percent sky cover) and precipitation (P, mm/day) were analyzed. A global dataset of observed monthly TCC anomalies relative to the 1961–1990 means at a resolution of 1° × 1° for 1950–2004 was derived by merging the CRU_TS_2.02 data and 3-hourly synoptic surface observations (Dai et al. 2006; Qian et al. 2006). This merged TCC was adjusted to have the same mean over an overlap period 1977–1993, and the unreliable data within the contiguous United States for the period 1995–2004 were replaced by military observations (Dai et al. 2006). A global monthly P dataset at a resolution of 2.5° × 2.5° for 1948–2004 was obtained from Chen et al. (2001). Cross validation of this dataset showed stable and improved performance of the gauge-based analysis for all seasons and over most land areas. Monthly anomalies of the observed P and the simulated TCC and P data were created relative to the climatology of the period 1961–1990. Similarly, we also created monthly anomalies of simulated surface downward longwave radiation (DLW, W/m2), surface downward shortwave radiation (DSW, W/m2), and precipitable water (PRW, kg/m2, i.e., total water vapor content in an atmospheric column) in the models.
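The anomaly calculation relative to the 1961–1990 climatology can be illustrated with a short sketch. The function name `monthly_anomalies`, the year-to-months dictionary layout, and the use of `None` for missing months are assumptions made for this example only.

```python
def monthly_anomalies(data, base_start=1961, base_end=1990):
    """Subtract the base-period monthly climatology from each monthly value.

    `data` maps year -> list of 12 monthly values; None marks a missing
    month (hypothetical layout for this sketch).
    """
    climatology = []
    for month in range(12):
        base_values = [
            data[year][month]
            for year in data
            if base_start <= year <= base_end and data[year][month] is not None
        ]
        # A calendar month with no base-period data has no climatology.
        climatology.append(
            sum(base_values) / len(base_values) if base_values else None
        )

    anomalies = {}
    for year, months in data.items():
        anomalies[year] = [
            None if value is None or climatology[m] is None
            else value - climatology[m]
            for m, value in enumerate(months)
        ]
    return anomalies
```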

For all the variables, the monthly anomalies were first spatially re-mapped onto the 5° × 5° grid boxes of the observed temperature data and were then aggregated to generate annual and decadal anomaly time series. Our analyses focus only on regions where the observations of DTR are available. We selected and used 499 land grid boxes that have at least 7 months of data for each year, which were averaged to calculate the annual mean, and at least 30 years of data during the period 1950–1999 in the observations (Fig. 1). The use of 7-month and 30-year thresholds is a reasonable compromise between the length of the observing period, data completeness, and spatial coverage. For the 499 grid boxes, on average only 3% of their annual anomalies from 1950 to 1999 were estimated from 7 to 11 monthly anomalies instead of using 12 months of data for each year. We assume that uncertainties due to data incompleteness are randomly distributed and thus have minor impacts on our results when averaged over large areas. Although sampling is relatively complete across mid-latitudes, the tropical and polar land remains underrepresented because of a relative lack of data (Vose et al. 2005).
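The 7-month and 30-year completeness thresholds can be expressed as a simple filter. The sketch below is illustrative only; the function names `annual_mean` and `box_is_usable` and the `None` convention for missing months are hypothetical.

```python
def annual_mean(monthly, min_months=7):
    """Annual mean from the available months, or None if too few are present."""
    valid = [v for v in monthly if v is not None]
    if len(valid) < min_months:
        return None
    return sum(valid) / len(valid)


def box_is_usable(monthly_by_year, min_months=7, min_years=30):
    """True if a grid box has enough valid annual means over the period.

    `monthly_by_year` is a list with one 12-element list per year
    (1950-1999 in the paper, i.e. 50 entries).
    """
    annual = [annual_mean(months, min_months) for months in monthly_by_year]
    return sum(value is not None for value in annual) >= min_years
```

For example, a year with seven reported months still yields an annual mean, while a year with six does not count toward the 30-year requirement.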

The study region consists of 499 grid boxes of 5° longitude × 5° latitude that have at least 7 months of data for each year and at least 30 years of data during the period 1950–1999 in the observed temperatures (Vose et al. 2005). These grid boxes were classified into 5 climate zones: moist tropic (1), arid (2), humid middle latitude (3), continental (4), and polar (5), based on the world map of the Koppen-Geiger climate classification (Kottek et al. 2006). In total, there are 48 grid boxes for moist tropic climates, 112 for arid climates, 109 for humid middle latitude climates, 189 for continental climates, and 41 for polar climates. Grid boxes in gray have no data or too many missing values

Most coupled AOGCMs have reproduced the observed warming and identified anthropogenic signals at global and continental scales (IPCC 2007) and so our analyses are performed at these scales. Due to the limited data coverage over Africa, South America and Antarctica, we classify the 499 boxes into 5 climate zones (Fig. 1) to represent changes at large scales over different climate zones (instead of continental scales) based on the latest world map of the Koppen-Geiger climate classification (Kottek et al. 2006), given the dependence of DTR trends on climate zones (Zhou et al. 2007, 2008). The Koppen-Geiger climate classification is one of the most widely used climate classification systems based on observed temperature and precipitation; it assigns the world climate at any land site to one of five general categories (moist tropic, arid, humid middle latitude, continental, and polar), each with finer subdivisions that are not used here. The global and climate zonal average time series are calculated using area-weighted averaging over land (including ice-covered land) and the percentage of land area for coastal grid boxes. We assess the anthropogenic contribution to the DTR changes and evaluate how successfully the ALL simulations capture major observational features primarily at global and climate zone scales. A two-tailed Student's t test was used to test whether linear trends or regression coefficients estimated using least squares fitting differ significantly from zero.
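A minimal sketch of the area-weighted averaging and the trend significance test is given below. It rests on stated assumptions: each grid box is weighted by the cosine of its central latitude times its land fraction (the text does not give the exact weighting formula), and the function names are hypothetical. The second function returns the least squares slope and its t statistic; for a 50-year series (48 degrees of freedom) the two-tailed 5% critical value is about 2.01.

```python
import math


def area_weighted_mean(values, latitudes, land_fractions):
    """Average grid-box values with weights cos(latitude) * land fraction.

    Assumption: this weighting stands in for the paper's area weighting;
    boxes whose value is None are skipped.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for value, lat, frac in zip(values, latitudes, land_fractions):
        if value is None:
            continue
        weight = math.cos(math.radians(lat)) * frac
        weighted_sum += weight * value
        weight_total += weight
    return weighted_sum / weight_total


def trend_and_t(years, values):
    """Least squares slope (per year) and its t statistic (n - 2 d.o.f.)."""
    n = len(years)
    x_mean = sum(years) / n
    y_mean = sum(values) / n
    sxx = sum((x - x_mean) ** 2 for x in years)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(years, values))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    # Residual sum of squares around the fitted line.
    rss = sum((y - intercept - slope * x) ** 2 for x, y in zip(years, values))
    std_err = math.sqrt(rss / (n - 2) / sxx)
    if std_err == 0.0:
        # Perfect fit: the t statistic is unbounded unless the slope is zero.
        return slope, (0.0 if slope == 0.0 else math.copysign(math.inf, slope))
    return slope, slope / std_err
```

Multiplying the slope by 10 gives a trend in the per-decade units used in the text.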

3 Results

3.1 Variability and trends in observed and simulated temperatures

3.1.1 Geographic patterns of trends

Figure 2 shows the observed annual T max, T min, and DTR trends for the 499 grid boxes from 1950 to 1999. T max increased in most regions, with a pronounced and statistically significant warming trend in northwestern North America and middle latitude Asia; exceptions with a small or negative trend are Mexico, northeastern Canada, southern parts of the U.S., Europe, and China, and northern Argentina. T min increased significantly across all areas except for northern Mexico and northeastern Canada. The large warming in T min and the small warming or cooling in T max have decreased DTR significantly over most land areas, especially in east Asia, the West African Sahel, northern Argentina, and parts of the Middle East, Australia and the U.S. An increase in DTR was scattered over a few grid cells (e.g., in northeastern Canada and southern Argentina).

Spatial patterns of linear trend in the observed annual T max, T min and DTR over land from 1950 to 1999. Only 499 grid boxes defined in Fig. 1 were used. Stripped areas are where the trends are statistically significant (p < 0.05)

The simulated temperature trends in ALL during the period 1950–1999 (Fig. 3) show a significant and widespread warming trend in T max and T min, especially in high latitudes. T min also shows a strong warming in arid and semi-arid regions in tropics and middle latitudes. The stronger warming in T min than T max results in a significant decreasing trend in DTR over most regions, with the largest decrease in northern high latitudes, central Africa, the Arabian Peninsula, southwestern Asia, Australia, and Argentina. In contrast, NAT shows a widespread cooling trend in T max and T min and an increasing trend in DTR over most regions (Fig. 4).

Same as Fig. 3 but for the multi-model ensemble means in NAT

The simulated temperature trends in ALL generally capture the observed large-scale features (e.g., the warming in T max and T min and the decrease in DTR, with most of the changes within the range of one STD), while those in NAT cannot reproduce any of these features. Grid-by-grid comparisons between the observed trends and those simulated in ALL exhibit some differences in both magnitude and spatial pattern. Some of the differences are expected, as difficulties remain in attributing simulated temperature changes at small scales (IPCC 2007). Other possible factors for such differences are discussed in Sect. 4.

3.1.2 Global and large-scale averages

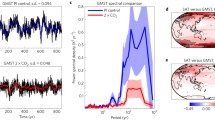

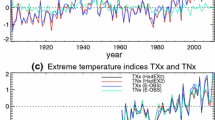

Figure 5 shows the global mean annual T max, T min, and DTR anomaly time series for the multi-model ensemble mean and its one STD, along with the observations, averaged over the 499 grid boxes from 1950 to 1999. Most of the observed T max and T min are within the range of one STD of the ALL simulations. The observed trends of T max, T min, and DTR are +0.124, +0.224, and −0.099°C per decade, respectively, and the corresponding simulated trends in ALL are +0.115, +0.137, and −0.022°C per decade. The observed and simulated trends are all statistically significant (p < 0.05). In contrast, NAT simulations show a very small cooling trend in T max and T min (−0.051 and −0.048°C per decade, respectively) and a negligible trend in DTR (−0.003°C per decade). The simulated T max and T min in ALL generally capture the observed variability, at a warming rate comparable to that observed for T max but only about 61% of that observed for T min. Consequently, the simulated DTR trend is very small, only about 22% of that observed. Short-term decreases in T max and T min associated with three major volcanic eruptions (i.e., Agung, El Chichon, and Pinatubo) are evident in both the observations and simulations, as shown in global mean surface temperature anomalies in Fig. 9.5 in IPCC (2007). Note that NAT has only 12 simulations and thus has a larger estimation uncertainty than ALL.

Global mean annual T max, T min and DTR anomalies relative to the period 1961–1990, as observed (in black) and as obtained from multi-model mean simulations in ALL (in red) and NAT (in blue) for the period 1950–1999. Shaded regions represent one STD in ALL and NAT and those in light blue represent the overlap between ALL and NAT. Each simulation was sampled so that coverage corresponds to that of the observations. The cooling associated with three major volcanic eruptions (Agung, El Chichon, and Pinatubo) is evident on shorter time scales. The linear trends are listed and those marked with “*” are statistically significant (p < 0.05). A 5-point (i.e., 5-year) running average was applied for visualization purposes only, with the first and last 2 year values obtained using a recycling boundary condition

The histogram of the global mean temperature trends for the 36 ALL simulations and the 12 NAT simulations, together with the observed trends, is shown in Fig. 6. The observed trend in T max is located in the middle of the ALL simulations but is far outside the range of NAT simulations, indicating that the ALL trend of T max is comparable to the observations. The observed trend in T min is located at the upper edge of the ALL trends and also far away from the NAT trends, suggesting that the ALL trend of T min is much smaller than that observed. Consequently, the simulated DTR trend is much smaller in magnitude than that observed; the observed DTR trend is more negative than the trend in any individual ALL or NAT simulation. To assess the performance of each individual model, we plotted the temperature trend by model in Fig. 7. Most models in ALL reproduce the observed T max trend but largely underestimate the observed T min trend. Among the 12 models, ECHO-G, GFDL-CM2.0, GFDL-CM2.1 and MIROC3.2 (medres) generally have smaller trends of T max and T min than the other models. For DTR, CSIRO-Mk3.0, CSIRO-Mk3.5, ECHO-G, MIROC3.2 (medres) and MIROC3.2 (hires) have the smallest trends, i.e., the warming in T max and T min is comparable. The simulated DTR trends in ALL are smaller than those observed, mainly due to the simulated T min trend being much smaller than that observed. NAT again shows small and opposite trends relative to ALL.

Histogram of global mean linear trends (°C/10 years) of T max, T min and DTR over the 499 grid boxes from the 36 ALL simulations and the 12 NAT simulations, together with the observed trends, for the period of 1950–1999. Each simulation was sampled so that coverage corresponds to that of the observations

Global mean linear trends (°C/10 years) of T max, T min and DTR over the 499 grid boxes for each model in ALL and NAT, together with the observed trends, for the period of 1950–1999. An ensemble mean was produced for each model if more than one ensemble member is available. Each model simulation was sampled so that coverage corresponds to that of the observations. Values labeled on the top (or bottom) of each box represent the trends

Figure 8 shows the decadal anomaly time series of T max, T min, and DTR averaged over each climate zone from 1950 to 1999 for the multi-model ensemble mean and the observations. The large-scale averages over climate zones generally exhibit features similar to those of the global average (Fig. 5) but with greater variability. In ALL, T max generally follows the observed while T min has trends much smaller than the observed for most climate zones. The observed DTR decreases most in arid and polar climates and least in moist tropic climates, while the simulated DTR in ALL decreases most in high latitudes and least in moist tropic and humid middle latitude climates. Overall the simulated temperature trends in ALL, although smaller than observed, are distinct from those in NAT.

The large uncertainty range for the individual simulations (Figs. 5, 8), especially those of NAT, suggests that current models generally simulate large-scale natural internal variability and also capture the cooling associated with volcanic eruptions on shorter time scales. Despite their differences in physics and forcings applied, the models clearly distinguish between the temperatures of ALL and NAT. The ALL simulations with anthropogenic forcings capture most of the temperature changes observed in global and large-scale averages, but the NAT simulations with only natural forcings differ distinctly from those observed at these scales.

3.2 Spatial dependence of temperature trends on precipitation

Observational analyses show that there is a strong geographic dependence of trends of DTR and T min on the climatology of precipitation (Zhou et al. 2007, 2008). To test whether the simulated temperatures have similar features, we classified the 499 grid boxes into 7, 11, 15, 19, and 23 precipitation zones, respectively, from dry to wet, in terms of the observed amount of climatological annual precipitation (referred to as \( \overline{P} , \) mm/day) (Chen et al. 2001), and then analyzed the spatial dependence of temperature trends on \( \overline{P} \) by precipitation zone, as done in Zhou et al. (2008). For each classification, to ensure comparable samples in each precipitation zone, we first ranked \( \overline{P} \) of the 499 grid boxes in ascending order, and then divided them equally into 7, 11, 15, 19, and 23 precipitation zones, respectively, each with about the same number of grid boxes.
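The ranking-and-splitting step can be sketched as below. This is an illustrative implementation under the assumption that zones are formed purely by rank, so that zone sizes differ by at most one grid box; the function name `precipitation_zones` is hypothetical.

```python
def precipitation_zones(mean_precip, n_zones):
    """Assign each grid box a zone index from 0 (driest) to n_zones - 1 (wettest).

    Boxes are ranked by climatological precipitation in ascending order and
    split into n_zones groups of nearly equal size.
    """
    n_boxes = len(mean_precip)
    order = sorted(range(n_boxes), key=lambda i: mean_precip[i])
    zones = [0] * n_boxes
    for rank, box in enumerate(order):
        # Integer arithmetic maps ranks 0..n_boxes-1 evenly onto the zones.
        zones[box] = rank * n_zones // n_boxes
    return zones
```

With 499 grid boxes and 15 zones, this yields zones of 33 or 34 boxes each; zonal average trends and zonal average precipitation can then be computed per zone index.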

Figure 9 shows the spatial dependence of zonal average trends of annual T max, T min, and DTR on the zonal average \( \overline{P} \) in terms of 15 precipitation zones. For the observations, there is a statistically significant correlation (p < 0.05) between \( \overline{P} \) and the trends of T min and DTR, while the correlation between \( \overline{P} \) and the trend of T max is weak. The observed trend of T min (DTR) generally decreases (increases) linearly with \( \overline{P} , \) indicating that the lower the amount of \( \overline{P} , \) the stronger the warming in T min and the larger the DTR reduction. A similar dependence of the simulated trends in T min and DTR on \( \overline{P} \) is also seen in ALL, while NAT displays the opposite in T min and a much weaker correlation in DTR. Unlike the observations, the ALL and NAT simulations also show a strong correlation between the trend of T max and \( \overline{P} . \) The estimated slope shown in Fig. 9 reflects the zonal difference in temperature trends per unit change in the amount of \( \overline{P} . \) In ALL, the slope of T max (−0.011) is comparable to the observed (−0.016) while the slope of T min (−0.014) is much smaller than the observed (−0.030). Hence the slope of DTR (0.004) is only 28% of the observed (0.014), consistent with previous results.

Dependence of zonal average linear trends (°C/10 years) of annual T max, T min and DTR on zonal average climatological annual precipitation in the observations and simulations by precipitation zone during the period 1950–1999. Here only the results for the 15 precipitation zones are shown. A linear regression line was fit between the precipitation and temperature trends. The correlation coefficients (R) are listed and those marked with “*” are statistically significant (p < 0.05)

We also examined the results in terms of 7, 11, 19, and 23 precipitation zones (Table 2). The spatial dependence of temperature trends on \( \overline{P} \) is robust across all classifications. These results indicate that the observed long-term DTR trends since 1950 are generally larger over regions with less precipitation, such as arid and semi-arid systems. Again, the ALL simulations generally capture the essential features of the spatial dependence of observed trends on precipitation while the NAT simulated trends exhibit distinct features.

3.3 Changes in temperatures and their relations with cloud cover and precipitation

Increased cloud cover, precipitation, and soil moisture have been widely used as major factors to explain the worldwide reduction of DTR in the last several decades (e.g., Dai et al. 1997, 1999). To attribute the temperature changes, we analyzed the trends and interannual variations of T max, T min, and DTR and their association with TCC and P for the observations and the multi-model ensemble mean at the global scale and by climate zone. Soil moisture also damps T max and thus DTR by enhancing evaporative cooling through evapotranspiration but it is not included in this analysis due to limited observations and substantial inter-model variations resulting from differences in land surface models. As soil moisture influences the magnitude and spatial patterns of DTR and its variability (e.g., Fig. 9 in Zhou et al. 2008), its omission in our statistical analyses may result in some variations of DTR unexplained, but such effects may at least to some extent be compensated by the DTR’s association with TCC and P, both of which are strongly related to soil moisture. Table 3 lists the linear trends for the observed and simulated anomaly time series from 1950 to 1999. ALL shows statistically significant trends in temperatures (T max, T min, and DTR) as those observed, while NAT exhibits significant cooling trends in T max and T min and no apparent trends in DTR. TCC generally increases in the observed but decreases in ALL, and its trend is statistically significant in three climate zones in the observed and four zones in ALL (p < 0.05). Note that large uncertainties exist in the observed TCC trends which were calculated from fewer grid boxes due to missing data, especially before 1971. For P, negative trends exist in the observed but are only significant for the global mean and tropic climates, while ALL shows a positive trend in middle to high latitudes. NAT however has a minor and statistically insignificant trend in TCC and P.

Figure 10 shows the global average annual anomalies of DTR, P, and TCC from 1950 to 1999 in the observations and simulations. The significant negative DTR trend is evident in ALL and the observations, but not in NAT. In general, relative to the long-term negative trend in DTR and/or trends in TCC/P if any, one can see visually an inverse correlation between DTR and TCC/P. Simply correlating DTR and TCC/P in Fig. 10 to quantify their statistical relationship may result in spurious association due to the presence of strong trends (Granger and Newbold 1974; Gujarati 1995). To reduce the possibility of such spurious association, we first-differenced the original time series (which removes the long-term trends) and then estimated the relationship between changes in temperatures and those in TCC/P. For example, after differencing the original time series, the new DTR time series represents DTR changes relative to the previous year. We realize that this simple method is probably not the best approach for every statistically complex time series, but it is easy to apply and understand, and previous studies have shown that it is effective and can give a good basis for the analysis (e.g., Zhou et al. 2001, 2003, 2007, 2008). Furthermore, given the non-stationary properties of the data involved and the pitfalls associated with use of standard statistical techniques, applications of other advanced statistical techniques are not warranted. There is a statistically significant negative correlation between variations in DTR and those in TCC/P for the global mean time series (Fig. 10; Table 4). Similar relationships are also evident for all climate zones (Table 4), consistent with previous studies (e.g., Dai et al. 1999). The regression coefficient (β 1) represents changes in DTR given a unit change in TCC/P. The β 1 is larger for TCC/P over drier climates and smaller over wetter climates in the observations, suggesting a stronger sensitivity of DTR to TCC/P over regions with less precipitation. ALL shows similar features except that the TCC coefficient is also large for continental climates.
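The year-to-year differencing and the subsequent regression can be illustrated with a short sketch. It assumes a single-predictor least squares fit (DTR changes against TCC or P changes, one at a time); whether the published coefficients come from single or multiple regression is not stated here, and the function names are hypothetical.

```python
def first_difference(series):
    """Year-to-year changes: value in year t minus value in year t - 1."""
    return [later - earlier for earlier, later in zip(series, series[1:])]


def regress(x, y):
    """Least squares slope (beta1) and R^2 for y regressed on x."""
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    syy = sum((yi - y_mean) ** 2 for yi in y)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    beta1 = sxy / sxx
    r_squared = sxy ** 2 / (sxx * syy)
    return beta1, r_squared
```

For instance, `regress(first_difference(tcc), first_difference(dtr))` would give the change in DTR per unit change in TCC with the shared long-term trend removed, which is the sense in which β 1 is used in the text.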

Global mean annual anomalies of DTR (°C), precipitation (mm/day) and cloud cover (%) for the simulations and observations over the 499 grid boxes from 1950 to 1999. The observed time series for DTR-TCC (left panel) were averaged from fewer grid boxes because the observed cloud data are missing over some of the 499 grid boxes, especially before 1971. The values of R 2 as in Table 4 are listed and those marked with “*” are statistically significant (p < 0.01). R 2 was calculated after differencing the original time series. The time series were normalized by subtracting their mean and dividing by their standard deviation (for visualization purposes only)

Since the DTR-TCC/P correlation is negative and DTR has been widely decreasing (as shown in Tables 3, 4 and previous studies, e.g., Dai et al. 1999), the trends in TCC/P are expected to be positive. In fact, TCC over many regions and P in tropical climates have been observed to be increasing. However, coincident decreasing trends in DTR and TCC are observed over some regions such as China and the Sahel (e.g., Kaiser 1998; Liu et al. 2004; Zhou et al. 2007) and are seen globally over the ALL simulations, suggesting that the longer term variability/trend has different connections between DTR and TCC/P than the interannual variability.

3.4 Changes in simulated temperatures and their relations with surface radiation forcings

Surface radiation will likely change in a warmer and wetter climate. Some similarities in the spatial patterns between the trends in DTR and those in DSW/DLW suggest that changes in DLW and DSW may largely explain the simulated DTR decrease. Table 5 shows the global and climate zonal mean linear trends simulated from 1950 to 1999. In ALL, DLW increases significantly by 0.72–0.98 W/m2 per decade over all climate zones while DSW decreases significantly by 0.35–0.48 W/m2 per decade, less than half of the DLW increases. In NAT, DLW and DSW both decrease significantly.

Figure 11 compares the global mean annual time series of DTR with DSW/DLW for the multi-model ensemble mean. Evident trends are seen in DSW and DLW. After the linear trends are removed, the DSW variability explains about 61% of the DTR variance in ALL (more than TCC and P), indicating a dominant effect of DSW on DTR. Furthermore, the downward trends in DSW and DTR match each other closely in the ALL case, suggesting that the decreases in DTR are induced primarily by the decreases in DSW. No DLW-DTR correlation is found. We also analyzed annual variations in T max, T min, and DTR and their statistical association with DLW/DSW in ALL by climate zone (Table 6). Evidently, variations in DLW explain most of the variations in T max (≥68%) and T min (≥86%) while variations in DSW explain most of the variance in DTR (22–75%). The increase in DLW warms T max and T min at a comparable rate and thus has a minor impact on DTR except in polar climates. As expected, DSW explains more variance of DTR than TCC in most climate zones.

Global mean annual anomalies of DTR (°C), DLW (W/m2), DSW (W/m2), and PRW (kg/m2) simulated in ALL and NAT over the 499 grid boxes from 1950 to 1999. The values of R 2 as in Tables 6 and 7 are listed and those marked with “*” are statistically significant (p < 0.01). R 2 was calculated after differencing the original time series. The time series were normalized as done in Fig. 10 (for visualization purposes only)

We separated cloud versus non-cloud effects by examining relationships between temperatures and DSW/DLW under all-sky and clear-sky conditions (Table 6). Clear-sky DLW explains more T max and T min variance than does all-sky DLW. It has a statistically significant positive correlation with DTR in arid and humid middle latitude climates, while all-sky DLW has no correlation with DTR for all climate zones except polar climates. Clear-sky DSW shows comparable but slightly weaker correlations with T max, T min and DTR than all-sky DSW. There are some small differences in β 1 for DLW/DSW between clear-sky and all-sky conditions. The decrease of TCC slightly reduces DLW but substantially enhances DSW, and thus the clear-sky DSW/DLW trends are larger than those of all-sky values (Table 5).

PRW is expected to be enhanced in a warming climate. The global and climate zonal mean linear trends in PRW from 1950 to 1999 show a widespread increase in ALL, with a magnitude decreasing poleward, but little change in NAT (Table 5). Variations in PRW can explain 93% (32%) of the variance of global mean DLW (DSW) in ALL while NAT does not show such a relationship (Fig. 11). We similarly examined trends and statistical relations for DLW/DSW versus PRW under clear-sky and all-sky conditions by climate zone (Table 7). PRW exhibits trends that differ slightly between all-sky and clear-sky conditions (Table 5). It explains 78–95% (2–23%) of the variance in DLW (DSW) under all-sky conditions and 66–80% (15–58%) under clear-sky conditions. The DLW-PRW association differs little between the two sky conditions but the DSW-PRW association is much stronger under clear-sky than all-sky conditions.

In summary, surface radiation forcings change significantly from 1950 to 1999 when anthropogenic forcings are included in the 20th century climate simulations. Enhanced DLW warms global land T max and T min at a comparable rate and thus has little effect on DTR, while DSW strongly influences DTR. Statistically, variations in DLW explain most of the warming in T max and T min, and variations in DSW explain most of the DTR decrease.

4 Discussion

The diurnal cycle of temperature over land is maintained by daytime solar heating and nighttime radiative cooling: the nighttime minimum temperature is largely controlled by downward longwave radiation, while the daytime maximum temperature is strongly affected by surface solar heating and the partitioning of sensible and latent heat (e.g., Dai et al. 1999; Makowski et al. 2008). Its amplitude can be changed through changes in the surface energy and hydrological balances, which are controlled by atmospheric composition, clouds, aerosols, land properties (e.g., soil moisture and vegetation), and surface and boundary-layer conditions. Understanding such changes can help explain why DTR changes, but doing so requires examining the diurnal cycle of surface radiation and energy fluxes (e.g., hourly observations or model outputs) and other related variables, most of which are not available. Since the ALL simulations show only small decreases in TCC and in sensible and latent heat fluxes, and the models do not change their land use/cover, this study focuses on changes in the surface radiation that are expected to largely determine the simulated DTR.

The surface and atmosphere are warmed primarily by the GHG-enhanced DLW, as amplified by the associated increase in atmospheric water vapor content (i.e., PRW). This enhanced PRW, as a solar absorber, also reduces DSW (Table 7; Fig. 11). Changes in DSW can also result from changes in cloud cover and aerosols. Clouds can significantly reduce surface solar heating. Increasing aerosols scatter or absorb incoming solar radiation and thus reduce DSW. Our clear-sky results and the strong DTR-DSW correlation indicate that enhanced aerosols and PRW both contribute to the ALL changes in DTR (Tables 5, 6, 7 and Fig. 11). Although the simulated DTR changes are much smaller than those observed, the ALL simulations with anthropogenic forcings capture most of the essential features of observed temperature changes at global and large scales, whereas the NAT simulations with only natural forcings do not simulate such features. Our results indicate that the model-simulated DTR decreases may be mainly a result of large-scale effects of increased GHGs and direct effects of aerosols.

The simulated DTR trend in ALL is much smaller than that observed, possibly owing mainly to the lack of the increasing trend in cloudiness that has been observed in many areas (Dai et al. 1999). The surface latent and sensible heat fluxes show a small decrease and thus only slightly offset the DSW decrease due to increased water vapor and aerosols. The magnitude of the DSW decrease is as large as half of the increase in DLW in the ALL case (Table 5), but T max still has a warming trend only slightly lower than that in T min (Table 3). The simulated trend in T max is comparable to the observations, but the simulated trend in T min is much smaller than that observed (Figs. 5, 6, 7, 8). The small DTR trend in ALL very likely results mainly from the underestimated T min trend due to the lack of increased cloudiness, which acts as a blanket at night to warm the surface (i.e., it increases DLW). In addition, the models are limited in describing realistic effects of aerosols (including their properties) and cloud-aerosol interactions and thus in simulating changes in surface radiation (Ruckstuhl and Norris 2009; Wild 2009). For example, the observed large decreases in DTR over eastern China, where cloudiness decreased and aerosols increased substantially (Kaiser 1998; Liu et al. 2004; Zhou et al. 2008), and the observed solar “dimming” and “brightening” over Europe (Ruckstuhl and Norris 2009; Wild 2009), are not realistically simulated. Considering the large uncertainties in simulating changes in clouds, aerosols, and hydrological variables (e.g., precipitation, soil moisture) in the models, the missing increasing trend in simulated cloudiness is very likely a major reason for the small DTR trend in the models.
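Because DTR is defined as T max minus T min and a least-squares trend is a linear operation, the DTR trend equals the T max trend minus the T min trend. An underestimated T min warming therefore translates directly into a weaker DTR decrease. The sketch below checks this identity on synthetic anomalies; the slopes, noise levels, and the helper name `linear_trend` are hypothetical and do not correspond to values in Table 3.

```python
import numpy as np

def linear_trend(years, series):
    """Least-squares slope, expressed per decade."""
    x = np.asarray(years, dtype=float)
    x = x - x.mean()  # centering improves numerical conditioning
    slope = np.polyfit(x, np.asarray(series, dtype=float), 1)[0]
    return 10.0 * float(slope)

# Synthetic anomalies in deg C for 1950-1999 (illustrative values only).
rng = np.random.default_rng(2)
years = np.arange(1950, 2000)
tmax = 0.010 * (years - 1950) + rng.normal(0.0, 0.15, years.size)
tmin = 0.018 * (years - 1950) + rng.normal(0.0, 0.15, years.size)
dtr = tmax - tmin

trend_tmax = linear_trend(years, tmax)
trend_tmin = linear_trend(years, tmin)
trend_dtr = linear_trend(years, dtr)

# The two numbers printed below agree to rounding error.
print(f"DTR trend:                   {trend_dtr:+.4f} deg C/decade")
print(f"T max trend minus T min trend: {trend_tmax - trend_tmin:+.4f} deg C/decade")
```

The identity holds for any pair of series fitted over the same years, so a comparison of simulated and observed DTR trends can always be decomposed into separate T max and T min contributions.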

The models may be deficient in reproducing realistic diurnal responses of temperatures to given changes in DLW and DSW and may thus underestimate DTR changes. Because daytime latent and sensible heat fluxes are closely coupled to DSW, a decrease in DSW often leads to a decrease in the latent and sensible heat fluxes; such a decrease is simulated in ALL but is too small in magnitude. The models show a comparable warming of T max and T min with the change of DLW. However, surface air temperature is expected to be most sensitive to changes in surface radiative forcing under cold stable conditions, and consequently at night and at high latitudes; hence DTR should correlate with DLW (e.g., Pielke and Matsui 2005; Betts 2006; Dickinson et al. 2006; IPCC 2007; Zhou et al. 2007, 2008). The weaker modeled correlation between DLW and DTR (i.e., the comparable effect of DLW on T max and T min) suggests that the models may not realistically characterize the expected asymmetric diurnal response of T max and T min to the pronounced and persistent DLW forcing. A strong spatial dependence of temperature trends on precipitation is seen for both T max and T min in the models but only for T min in the observations (Table 2; Fig. 9), indicating also that the atmosphere is strongly coupled with surface temperature during both daytime and nighttime in the models, while observations support such coupling only at nighttime. These results, together with the weak diurnal cycle of simulated climatological temperatures, suggest deficiencies in the models' characterization of important surface and boundary-layer processes.

Some other factors may also contribute to the small simulated DTR changes. The models simulate the general pattern of DTR, but their diurnal cycle of temperature is much weaker than observed, in many regions by as much as 50%, likely contributing to the smallness of simulated DTR trends (IPCC 2007). The multi-model ensemble mean, which should amplify the major signals and reduce the errors of individual models, also smooths the internal variability (IPCC 2007), often reducing the magnitude of the variability relative to that of individual models (Figs. 5, 6, 7, 8), while the observations, like a single realization of the simulations, should have larger variability. In addition, the models have structural and parametric uncertainties and so may differ from the observations in both their forcing and boundary conditions.
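The smoothing effect of ensemble averaging can be illustrated with synthetic data. The sketch below assumes that each ensemble member is a common forced signal plus statistically independent internal variability, which is an idealization (errors in real models are not independent). The member count of 48 simply mirrors the number of simulations analyzed in this study; the signal slope and noise amplitude are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
n_members, n_years = 48, 50
t = np.arange(n_years, dtype=float)

forced = -0.002 * t  # hypothetical common forced signal (deg C)
noise = rng.normal(0.0, 0.1, (n_members, n_years))  # independent internal variability
members = forced + noise  # broadcasting adds the signal to every member

ensemble_mean = members.mean(axis=0)

def detrended_std(series):
    """Standard deviation of the residuals about a least-squares line."""
    coeffs = np.polyfit(t, series, 1)
    return float(np.std(series - np.polyval(coeffs, t)))

member_std = float(np.mean([detrended_std(m) for m in members]))
mean_std = detrended_std(ensemble_mean)
ratio = mean_std / member_std  # close to 1/sqrt(n_members) under independence

print(f"typical member variability: {member_std:.3f}")
print(f"ensemble-mean variability:  {mean_std:.3f}")
print(f"ratio: {ratio:.3f}  (1/sqrt(48) = {1 / np.sqrt(n_members):.3f})")
```

Under these assumptions the variability of the ensemble mean is roughly one seventh of that of a single member, so a single observed realization should be compared with the spread of individual members rather than with the ensemble mean alone.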

Despite the aforementioned uncertainties, the ALL simulations differ distinctly from those of NAT in their changes in temperatures and in associated variables (e.g., surface radiation and PRW), and the observed DTR trends are outside the range of simulated natural internal variability. It is very unlikely that the observed increases in PRW over many regions would result from natural forcings only. Wild et al. (2007) found a strong connection in the observations between changes in DTR and those in surface solar radiation (from dimming to brightening) during the 1980s and with the increasing GHGs. Liu et al. (2004) and Makowski et al. (2009) reported a similar DSW-DTR connection. Considering the substantial spatiotemporal variability in cloud cover and precipitation, Zhou et al. (2008) suggest that enhanced GHGs and aerosols, as a steady and global forcing, are likely to largely determine the observed long-term DTR trends at large spatial scales. The large decrease in DTR over arid and semi-arid systems may reflect stronger effects of increased GHGs and associated changes in soil moisture and water vapor on DTR over drier regions (Zhou et al. 2008). We speculate that the observed widespread decrease in DTR might result from the global radiation changes (due to anthropogenic changes in GHGs and aerosols) and additional strong regional effects of anthropogenic forcing that the models cannot realistically simulate, likely connected to changes in cloud cover, precipitation, and soil moisture. Evidently the ALL simulations reproduce the global signal much better than the regional variations.
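One simple way to ask whether a trend lies outside the range of internal variability is to compare it with the distribution of trends found in unforced segments of the same length. The sketch below is a generic illustration of that idea and is not necessarily the procedure used in this study: the unforced segments are synthetic white noise (real internal variability is autocorrelated, which widens the range), and `candidate_trend` is a hypothetical number, not an observed value.

```python
import numpy as np

rng = np.random.default_rng(3)
n_segments, n_years = 200, 50

# Centered time axis, so the least-squares slope reduces to sum(t*y)/sum(t*t).
t = np.arange(n_years, dtype=float) - (n_years - 1) / 2.0

# Synthetic unforced 50-year segments (deg C); white noise is an assumption.
unforced = rng.normal(0.0, 0.1, (n_segments, n_years))

# Least-squares slope of every segment, converted to deg C per decade.
slopes = 10.0 * (unforced @ t) / (t @ t)

# Central 95% range of trends arising from internal variability alone.
low, high = np.percentile(slopes, [2.5, 97.5])

candidate_trend = -0.07  # hypothetical trend to test, deg C per decade
outside = bool(candidate_trend < low or candidate_trend > high)

print(f"unforced 95% range: [{low:+.3f}, {high:+.3f}] deg C/decade")
print(f"candidate trend {candidate_trend:+.3f} outside range: {outside}")
```

In practice the unforced segments would come from control runs or from residuals after removing the forced response, and the width of the range depends strongly on how much low-frequency variability those segments contain.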

5 Conclusions

We have analyzed the trends and variability in T max, T min, and DTR over land in observations and multiple model simulations for the latter half of the 20th century. When anthropogenic and natural forcings are included, the simulated DTR reproduces many observational features. The simulated T max trend is comparable to the observations, but the simulated T min trend is much smaller than that observed; as a result, the simulated global mean DTR trend is only about 22% of that observed. The spatial dependence of T min and DTR trends on climatological precipitation is seen in both the observations and simulations. When only natural forcings are used, none of the observed features are simulated, and the models generally show opposite trends associated with volcanic aerosols.

The model-simulated warming in T max and T min and the general decrease in DTR may reflect large-scale effects of enhanced global GHGs and direct effects of aerosols. The strong and persistent increase in DLW, which mainly reflects the GHG effects of a warmer and wetter atmosphere and to some extent of a warmer surface, is the dominant global forcing in explaining the simulated warming of T max and T min from 1950 to 1999, while its effect on DTR is very small. Decreases in DSW due to enhanced aerosols and PRW contribute most to the simulated decreases in DTR. Although the magnitude of the simulated DTR decrease is much smaller than that observed, the models generally reproduce the substantial warming in both T max and T min and the reduction of DTR in response to enhanced global-scale anthropogenic forcings, and the observed decreasing DTR trends are shown to be outside the range of natural internal variability estimated from the models. The much larger observed decrease in DTR suggests the possibility of additional regional effects of anthropogenic forcing that the models cannot realistically simulate, likely connected to changes in cloud cover, precipitation, and soil moisture.

The small DTR changes simulated in the models may be mainly attributed to the lack of an increase in cloud cover and to deficiencies in realistically representing (a) diurnal responses of T min and T max to given changes in DLW and DSW (especially the increase in DLW), (b) changes in clouds and aerosols (and their properties and interactions) and their impacts on DLW and DSW, and (c) some important surface and boundary-layer processes in the models. Some uncertainties also exist in our calculation of linear and regional average trends and in our time series analysis because of gaps in spatial coverage, temporal limitations in the data, and the simplicity of our statistical methods. Our results and conclusions may be representative mainly of regions where this analysis had the most observations.

References

Betts AK (2006) Radiative scaling of the nocturnal boundary layer and the diurnal temperature range. J Geophys Res 111:D07105. doi:10.1029/2005JD006560

Braganza K, Karoly DJ, Arblaster JM (2004) Diurnal temperature range as an index of global climate change during the twentieth century. Geophys Res Lett 31:L13217. doi:10.1029/2004GL019998

Chen M, Xie P, Janowiak JE, Arkin PA (2001) Global land precipitation: a 50-year monthly analysis based on gauge observations. J Hydrometeorol 3:249–266

Collatz GJ, Bounoua L, Los SO, Randall DA, Fung IY, Sellers PJ (2000) A mechanism for the influence of vegetation on the response of the diurnal temperature range to changing climate. Geophys Res Lett 27:3381–3384

Dai A, Del Genio AD, Fung IY (1997) Clouds, precipitation, and temperature range. Nature 386:665–666

Dai A, Trenberth KE, Karl TR (1999) Effects of clouds, soil moisture, precipitation, and water vapor on diurnal temperature range. J Clim 12:2451–2473

Dai A, Karl TR, Sun B, Trenberth KE (2006) Recent trends in cloudiness over the United States: a tale of monitoring inadequacies. Bull Am Meteorol Soc 87(5):597–606

Dickinson RE et al (2006) The community land model and its climate statistics as a component of the community climate system model. J Clim 19:2302–2324

Easterling DR et al (1997) Maximum and minimum temperature trends for the globe. Science 277(5324):364–367. doi:10.1126/science.277.5324.364

Granger CWJ, Newbold P (1974) Spurious regressions in econometrics. J Econometrics 2:111–120

Gujarati DN (1995) Basic econometrics, 3rd edn. McGraw-Hill, New York, ISBN 0-07-025214-9

IPCC (2007) Climate change 2007: the physical science basis. Contribution of working group I to the fourth assessment report of the IPCC. Cambridge University Press, Cambridge, ISBN 978 0521 88009-1

Kaiser DP (1998) Analysis of total cloud amount over China. Geophys Res Lett 25(19):3599–3602

Karl T et al (1993) A new perspective on recent global warming: asymmetric trends of daily maximum and minimum temperature. Bull Am Meteorol Soc 74(6):1007–1023

Karoly DJ, Braganza K, Stott PA, Arblaster JM, Meehl GA, Broccoli AJ, Dixon KW (2003) Detection of a human influence on North American climate. Science 302:1200–1203

Kottek M, Grieser J, Beck C, Rudolf B, Rubel F (2006) World Map of the Köppen-Geiger climate classification updated. Meteorol Z 15:259–263. doi:10.1127/0941-2948/2006/0130

Liu B, Xu M, Henderson M, Qi Y, Li Y (2004) Taking China’s temperature: daily range, warming trends, and regional variations, 1955–2000. J Clim 17:4453–4462. doi:10.1175/3230.1

Makowski K, Wild M, Ohmura A (2008) Diurnal temperature range over Europe between 1950 and 2005. Atmos Chem Phys 8:6483–6498

Makowski K, Jaeger EB, Chiacchio M, Wild M, Ewen T, Ohmura A (2009) On the relationship between diurnal temperature range and surface solar radiation in Europe. J Geophys Res 114:D00D07. doi:10.1029/2008JD011104

Meehl GA, Washington WM, Ammann CM, Arblaster JM, Wigley TML, Tebaldi C (2004) Combinations of natural and anthropogenic forcings in twentieth-century climate. J Clim 17:3721–3727

Pielke RA Sr, Matsui T (2005) Should light wind and windy nights have the same temperature trends at individual levels even if the boundary layer averaged heat content change is the same? Geophys Res Lett 32:L21813. doi:10.1029/2005GL024407

Qian T, Dai A, Trenberth KE, Oleson KW (2006) Simulation of global land surface conditions from 1948 to 2004. Part I: forcing data and evaluation. J Hydrometeorol 7:953–975

Ruckstuhl C, Norris JR (2009) How do aerosol histories affect solar “dimming” and “brightening” over Europe?: IPCC-AR4 models versus observations. J Geophys Res 114:D00D04. doi:10.1029/2008JD011066

Stenchikov GL, Robock A (1995) Diurnal asymmetry of climatic response to increased CO2 and aerosols: forcings and feedbacks. J Geophys Res 100:26211–26227

Stone DA, Weaver AJ (2002) Daily maximum and minimum temperature trends in a climate model. Geophys Res Lett 29(9):1356. doi:10.1029/2001GL014556

Stone DA, Weaver AJ (2003) Factors contributing to diurnal temperature range trends in twentieth and twenty-first century simulations of the CCCma coupled model. Clim Dyn 20:435–445

Vose RS, Easterling DR, Gleason B (2005) Maximum and minimum temperature trends for the globe: an update through 2004. Geophys Res Lett 32:L23822. doi:10.1029/2005GL024379

Wild M (2009) How well do IPCC-AR4/CMIP3 climate models simulate global dimming/brightening and twentieth-century daytime and nighttime warming? J Geophys Res 114:D00D11. doi:10.1029/2008JD011372

Wild M, Ohmura A, Makowski K (2007) Impact of global dimming and brightening on global warming. Geophys Res Lett 34:L04702. doi:10.1029/2006GL028031

Zhou L, Tucker CJ, Kaufmann RK, Slayback D, Shabanov NV, Myneni RB (2001) Variations in northern vegetation activity inferred from satellite data of vegetation index during 1981–1999. J Geophys Res 106:20069–20083

Zhou L, Kaufmann RK, Tian Y, Myneni RB, Tucker CJ (2003) Relation between interannual variations in satellite measures of northern forest greenness and climate between 1982 and 1999. J Geophys Res 108(D1). doi:10.1029/2002JD002510

Zhou L, Dickinson RE, Tian Y, Fang J, Li Q, Kaufmann RK, Tucker CJ, Myneni RB (2004) Evidence for a significant urbanization effect on climate in China. Proc Natl Acad Sci USA 101(26):9540–9544

Zhou L, Dickinson RE, Tian Y, Vose R, Dai Y (2007) Impact of vegetation removal and soil aridation on diurnal temperature range in a semiarid region—application to the Sahel. Proc Natl Acad Sci USA 104(46):17937–17942

Zhou L, Dai A, Dai Y, Vose RS, Zou C-Z, Tian Y, Chen H (2008) Spatial patterns of diurnal temperature range trends on precipitation from 1950 to 2004. Clim Dyn. doi:10.1007/s00382-008-0387-5

Zhou L, Dickinson RE, Dirmeyer P, Dai A, Min S-K (2009) Spatiotemporal patterns of changes in maximum and minimum temperatures in multi-model simulations. Geophys Res Lett 36:L02702. doi:10.1029/2008GL036141

Acknowledgments

This study was supported by the NSF grant ATM-0720619 and the DOE grant DE-FG02-01ER63198. We acknowledge PCMDI, NCAR and GFDL for collecting and archiving the model data, and would like to thank Dr. Seung-Ki Min for providing ECHO-G NAT simulations. The National Center for Atmospheric Research is sponsored by the National Science Foundation.

Rights and permissions

Open Access This is an open access article distributed under the terms of the Creative Commons Attribution Noncommercial License ( https://creativecommons.org/licenses/by-nc/2.0 ), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Cite this article

Zhou, L., Dickinson, R.E., Dai, A. et al. Detection and attribution of anthropogenic forcing to diurnal temperature range changes from 1950 to 1999: comparing multi-model simulations with observations. Clim Dyn 35, 1289–1307 (2010). https://doi.org/10.1007/s00382-009-0644-2

DOI: https://doi.org/10.1007/s00382-009-0644-2