Abstract

The adoption of standards to improve interoperability in the automotive, aerospace, shipbuilding and other sectors could save billions. While interoperability standards have been created for a number of industries, problems persist, suggesting a lack of quality of the standards themselves. The issue of semantic standard quality is not often addressed. In this research we take a closer look at the quality of semantic standards and their development processes, and survey the current state of the quality of semantic standards by means of a questionnaire sent to standards developers. The survey covered 34 semantic standards, and it shows that the quality of semantic standards for inter-organizational interoperability can be improved. Improved standards may advance interoperability in networked business. Improvement of semantic standards requires transparency of their quality. Although many semantic standard development organisations already have quality assurance in place, this research shows that they could benefit from a quality measuring instrument.

Introduction

As early as 1993, a number of businesses and governments alike were aware of the importance of standards for ensuring interoperability (Rada 1993). Today, in an increasingly interconnected world, interoperability is more important than ever, and interoperability problems are very costly. Studies of the US automobile sector, for example, estimate that insufficient interoperability in the supply chain adds at least $1 billion in additional operating costs, of which 86% is attributable to data exchange problems (Brunnermeier and Martin 2002). The adoption of standards to improve interoperability in the automotive, aerospace, shipbuilding and other sectors could save billions (Gallaher et al. 2002).

Although interoperability standards have been created for a range of industries (Zhao et al. 2005), problems persist, suggesting a lack of quality of the standards themselves, and the processes by which they are developed. In 2009, the European Commission recognized the importance of quality of standards and set a policy to “increase the quality, coherence and consistency of ICT standards” (European Commission 2009). Sherif et al. (2005) state that their paper on standards’ quality was the first to address this topic, albeit only for technical standards. But what about semantic standards? Even though they are important in the creation of inter-organizational interoperability and solving data exchange problems, is there a need to measure the quality of semantic standards?

The ability to measure the quality of semantic standards can be useful to compare the quality of different standards, but also to (im)prove the quality of individual standards. Regarding semantic standards, Markus et al. (2006) assert that “the success of vertical information systems standards diffusion is affected by the technical content of the developed standard, …”. In other words, the quality of a standard is directly correlated to its adoption. However, adoption involves acceptance and implementation, and does not necessarily mean that interoperability will be achieved. That is, not all successful standards (high adoption) are high quality standards that lead to interoperability.

Despite the importance of standards in the evolution of information and communication technology (Lyytinen and King 2006), the issue of semantic standard quality is not often addressed (Folmer et al. 2009). In this research we take a closer look at the quality of semantic standards and their development processes, and examine the current state of quality and adoption of semantic standards through a survey of standards developers.

The remainder of this paper will present the definitions used, research structure, the survey population and the results of this survey, before answering the main research question in the concluding section.

Quality of standards defined

“Standards, like the poor, have always been with us” (Cargill 1989; Cargill and Bolin 2007). Arguably the most used definition of a standard is the definition used by ISO and IEC (De Vries 2006; Spivak and Brenner 2001; Van Wessel 2008): “A standard is a document, established by consensus and approved by a recognized body, that provides, for common and repeated use, rules, guidelines, or characteristics for activities or their results, aimed at the achievement of the optimum degree of order in a given context.” In our research we focus on semantic standards, a relatively new area of standardization. Semantic standards reside at the presentation and application layer of the OSI model (Steinfield et al. 2007). They include business transaction standards, inter-organizational information system (IOS) standards, ontologies, vocabularies, messaging standards, and vertical industry standards. Often, semantic standards involve XML representations of information, but the key value of the standard lies in its description of the meaning of data and process information to achieve semantic interoperability. Semantic standards differ from other types of standards (like technical standards) in, for example:

- Development approach: Most semantic standards are developed outside traditional Standard Development Organizations (e.g. ISO) (Rada and Ketchell 2000), resulting in a wide range of standard development organisations per industry (RosettaNet for the electrotechnical industry, HL7 for health care, etc.), each having their own development and maintenance approach.

- Context dependencies: Both the content and development approaches of semantic standards are highly dependent on the context. Examples of context factors are, among others, regulation, governance structure, government participation, IT maturity of an industry and the market situation.

We define the quality of a semantic standard as its ability to achieve its intended purpose, semantic interoperability, effectively and efficiently. A high quality standard is, or has a high chance to become, an effective and efficient solution for an interoperability problem; a low quality standard does not solve the problem for which it is designed, cannot be implemented efficiently, or has little chance to become adopted. All phases of the lifecycle of a standard (Söderström 2004) may influence quality. Moreover, quality deals with both intrinsic aspects (the document) and situational aspects (environment) of the standard. This definition applies Juran’s definition of quality, fitness for use (Juran and Gryna 1988), to the semantic standards domain, and is in line with the ISO 9126 software quality definition: the totality of characteristics of an entity that bear on its ability to satisfy stated and implied needs (ISO/IEC 2001). In the end, high quality semantic standards involve network externalities, avoid lock-ins, increase the variety of systems products, facilitate trade and reduce transaction costs (Blind 2004). More importantly, they solve or lower economic and social problems, such as the $1 billion cost of imperfect interoperability in the US automotive industry (Brunnermeier and Martin 2002) or the calculated 98,000 lives lost through lack of interoperability in care IT systems (Venkatraman et al. 2008).

Research approach

Research framework

We first developed a research framework. Its starting point was the main research question, defined in generic terms: Is there an urgent need, based on the current situation and experienced problems, for a solution? In relation to the subject of semantic standards quality, this can be formulated as: Is there, based on the current standards development processes and experienced interoperability and adoption problems, a need to elicit the quality of semantic standards? In this research, such elicitation involves a quality measurement instrument for semantic standards.

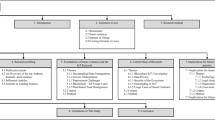

The main research question consists of four concepts that are formulated as propositions in our research framework. The research framework including propositions is depicted in Fig. 1.

Proposition for the current standards development process

One of the issues in this research is whether the quality of semantic standards can be improved. Therefore it is interesting to know whether the current standards development processes include steps focused on quality aspects of standards, and whether there is room for further improvement.

Proposition 1

The quality of standards can be increased by improving the standards development process.

Proposition for the interoperability problem

If there is room for quality improvement, it would only make sense if it leads to better semantic interoperability.

Proposition 2

Improved quality of standards leads to improved interoperability.

Proposition for the adoption problem

Even if high-quality semantic standards have a great semantic interoperability potential, such potential only materializes when the standard is actually being adopted in processes and systems.

Proposition 3

High-quality standards will have a better chance of being adopted.

Proposition for the desired assessment and visibility of quality

If the interoperability and adoption problems (addressed in propositions 2 and 3) were proven to be related to the quality of standards, then we need to verify whether the proposed solution could contribute to solving these problems. Is transparency of the quality of the standard valuable for standards developers? And, if so, do standards developers value an instrument for this?

Proposition 4

There is a need to make the quality of the standard visible by assessment.

We conducted a survey to test these propositions. Surveys are often appropriate for problem clarification and verification of problem relevance. They offer a way of getting more stakeholders involved and getting structured and comparable results in a time-efficient manner (Creswell 2009). Other methods, such as interviews or focus groups, would limit us too much in the scope of standards and respondents.

Our research addresses semantic standards in general, so that the survey had to include a broad range of semantic standards. The intended respondents were standards developers from Standard Setting Organisations (SSO). Unfortunately, to the best of our knowledge, no up-to-date list of semantic standards exists. Nevertheless the authors’ professional networks were activated and a list of possible respondents was set up, mainly from the Netherlands, Belgium, and Germany. In order to get additional and more international respondents, literature on semantic standards was assessed. Following other research (Zhao et al. 2005), xml.org was used. This list was enhanced with other semantic standards that are mentioned in literature (Hasselbring 2000; Markus et al. 2006; Nelson et al. 2005; Steinfield et al. 2007). The Internet was searched for standards developers involved in those standards. In cases where the standards developers could not be identified, we decided to send the invitation to the general e-mail address of the development organisation.

The survey was designed using generally accepted principles for setting up surveys (Creswell 2009; Maxwell 2005). The questionnaire consisted of a set of questions representing the propositions, and additional items to get background information (see Table 1). For comparability reasons, only closed questions were used, with the exception of three open (control) questions on the background of the respondent (see appendix A). Invitations were limited to three people per semantic standard. All questions used the same five-point scale (Strongly disagree/Disagree/Partly disagree and Partly agree/Agree/Strongly agree). Several questions were deliberately formulated as a negation.
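To make the scale and the negated questions concrete, the following minimal sketch shows one common way to code such responses numerically. The coding from 1 to 5, the helper names `encode` and `reverse_score`, and the sample answers are illustrative assumptions; the paper does not state how the negated items were processed.

```python
# Five-point scale as listed in the questionnaire, coded 1..5 (the coding is an assumption).
SCALE = [
    "Strongly disagree",
    "Disagree",
    "Partly disagree and Partly agree",
    "Agree",
    "Strongly agree",
]
CODES = {label: i + 1 for i, label in enumerate(SCALE)}


def encode(label: str) -> int:
    """Map a scale label to its numeric code (1 = strongly disagree, 5 = strongly agree)."""
    return CODES[label]


def reverse_score(code: int) -> int:
    """Flip the code of a question formulated as a negation (1 <-> 5, 2 <-> 4, 3 stays 3)."""
    return len(SCALE) + 1 - code


# Hypothetical answers to one negated question, for illustration only.
answers = ["Disagree", "Strongly disagree", "Agree"]
aligned = [reverse_score(encode(a)) for a in answers]
print(aligned)  # [4, 5, 2]
```

Reverse scoring of this kind puts negated and non-negated statements on the same direction before answers are compared or correlated.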

Subsequently, the survey results for each statement were analyzed and related to the propositions. A correlation analysis was done to find additional insights. The results section of this paper will relate the survey results to the propositions, and possible explanations for the results are given.
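One way such a correlation analysis can be carried out on five-point answers is a rank correlation, sketched below. This is an assumption for illustration: the paper does not name the coefficient that was used, and the helper functions and sample answers are hypothetical.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of tied values starting at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equally long, non-constant sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the tie-averaged ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical coded answers (1..5) of six respondents to two statements.
q_a = [5, 4, 4, 2, 3, 1]
q_b = [4, 5, 3, 2, 2, 1]
rho = spearman(q_a, q_b)
print(round(rho, 2))
```

Rank-based coefficients are often preferred for ordinal scales because they rely only on the ordering of the answers, not on the distances between scale points.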

Approach limitations

Our research approach implies several limitations. First, this research does not address the factors that influence quality, although the characteristics of the development process of standards are expected to influence the quality of the standard, as are the management process of the standard and other context dependencies. This aspect is out of scope for this research, but is addressed by other research, such as the research on best practices for standardization that resulted in a model for development and management of semantic IS standards called BOMOS (Folmer and Punter 2011). Another limitation is the broad definition and invitation of semantic standards, resulting in a heterogeneous group of semantic standards, making it hard to generalize our results to all individual standards or sub-groups of semantic standards. Finally, although we broadly defined quality as “fitness for use”, implying the relation with achieving interoperability, we are aware that in practice different views on quality exist. Some relate quality solely to the specification document, while others see quality as the adoption success of the standard. To align the respondents, the survey started by presenting a definition of quality, but we are aware that some respondents will still have used a different view on quality.

The chosen approach is aimed to find out the practical relevance and issues related to quality of semantic standards, and therefore deliberately contains broad definitions. The limitations of our research can be addressed in follow-up in-depth research, but will only become relevant when the propositions of our research are supported.

Survey population

The survey was held from August 25th to September 25th 2009. In total, 111 persons were invited, of which 48 responded, a response rate of about 43%. These 48 respondents represent 34 different semantic standards. These 34 standards constitute a wide collection of semantic standards, both international (e.g. HL7) and national (e.g. SETU), both governmental (e.g. StUF) and industry (e.g. Chem eStandards), and across different industry domains (e.g. healthcare, education, tourism, agriculture, finance, etc.).

Table 2 lists the semantic standards covered in this survey, including an industry segmentation (based on SAP Industry Solution Maps (SAP 2009)) and the number of respondents (N). This comprehensive list of standards is also published on semanticstandards.org, and can be used as a starting point for semantic standards (Folmer 2009).

Results

The detailed survey results, including correlation analysis of the question results, are presented in appendix B. Significant correlations and other survey results that contribute to the propositions are mentioned when we review the propositions in this section.
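As a rough aid to reading the coefficients reported in this section, the relation between a correlation coefficient and its significance level can be sketched with the usual t-statistic for a correlation. This is an assumption for illustration: the paper does not state which test was applied, and the calculation presumes that all 48 respondents answered both statements involved. Here r stands for the coefficient reported as P in the text, and the value 0.29 is the one reported under Proposition 1.

```latex
t = \frac{r\,\sqrt{n-2}}{\sqrt{1-r^{2}}},
\qquad
t = \frac{0.29\,\sqrt{46}}{\sqrt{1-0.29^{2}}} \approx 2.06
\quad (n = 48).
```

Under these assumptions the statistic lies just above the two-sided 5% critical value of about 2.01 for 46 degrees of freedom, which is in line with the reported p = 0.05.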

Proposition 1

The quality of standards can be increased by improving the current standards development process.

This proposition was agreed upon by 64.6% of the respondents, while 8.3% disagreed. Other results show that quality is embedded within development organisations as 77.1% of the respondents have quality assurance as an explicit part of their standards development process. And 81.3% already have some kind of minimum quality check in place before a standard is released. Although the survey did not ask for specifics, this quality check could be in the form of a final review before a new (version of the) standard is released.
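How such percentages can be derived from coded answers is sketched below. The sketch assumes that "agreed" combines the two agreeing scale points and "disagreed" the two disagreeing ones, which the paper does not state explicitly; the function name `agreement_shares` and the sample answers are hypothetical. As a plausibility check, and assuming all 48 respondents answered, 64.6% corresponds to 31 of 48 respondents and 8.3% to 4 of 48.

```python
from collections import Counter


def agreement_shares(codes):
    """Return (% agree, % neutral, % disagree) for answers coded 1..5."""
    counts = Counter(codes)
    n = len(codes)
    agree = counts[4] + counts[5]      # Agree + Strongly agree
    neutral = counts[3]                # Partly disagree and Partly agree
    disagree = counts[1] + counts[2]   # Strongly disagree + Disagree
    return tuple(100 * c / n for c in (agree, neutral, disagree))


# Hypothetical answers of eight respondents, not the survey data.
sample = [5, 4, 4, 4, 3, 3, 2, 4]
agree, neutral, disagree = agreement_shares(sample)
print(f"agree {agree:.1f}%, neutral {neutral:.1f}%, disagree {disagree:.1f}%")
```

Reporting the middle category separately explains why the agreeing and disagreeing shares in the results do not add up to 100%.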

It seems that current standards contain avoidable errors since 45.8% of the respondents stated that new or updated releases of their standard include corrections to avoidable mistakes in previous versions. This correlates to the statement about whether the quality of the standard can be improved (P = 0.29; p = 0.05). This result suggests there is considerable room for improvement within the current standards. But, is there any value in additional quality? This question is covered by the second proposition.

Proposition 2

Improved quality of standards leads to improved interoperability.

A substantial 66.7% of the respondents agreed with this proposition; only 8.4% disagreed. An even higher percentage (89.6%) took the view that a minimum quality level is a necessary requirement for interoperability. These figures lead us to conclude that the respondents associate the quality of a standard with the achieved interoperability. At the same time, 64.6% disagreed with the statement that the achieved interoperability is affected by the limitations of the standard. Respondents seemed to anticipate and accept the interoperability level achieved. Only 10.4% said that interoperability is worse than expected. This satisfaction with, or acceptance of, the achieved interoperability may seem surprising in relation to interoperability problems in practice. However, it might be explained by the population of the survey, which consisted of standards developers. Responses from standards implementers or users might lead to different results, because they might have a different opinion of interoperability in practice.

We also see a positive correlation between a standard’s achieved interoperability and whether there is quality assurance as part of the development process of the standard: Standards that have quality assurance as part of their current development process also have a minimum quality check in place (P = 0.57; p = 0.00), and rank high on the achieved interoperability (P = 0.32; p = 0.03). The data also show that where the quality of the standard could be improved the achieved interoperability is actually worse than expected (P = 0.32; p = 0.03). These results all confirm the positive correlation between quality and the achieved interoperability. This is in line with the literature suggesting that the need for interoperability is one of the key drivers for the development of standards (Nelson et al. 2005). Will the same hold for adoption, that is, will quality improvement increase adoption rates? This is addressed by the third proposition.

Proposition 3

High-quality standards will have a better chance of being adopted.

Although 60.4% saw a relation between design choices of the standard and the adoption of the standard, this proposition is not completely supported by this survey. The results show more diverse opinions on this topic: 37.5% agreed and an equal 37.5% disagreed with the statement that adoption will be more successful when the quality of the standard is known, proven sufficient, or improved.

Also, several respondents annotated their responses stating that certain factors other than quality are more critical to the adoption of the standard:

“Don’t forget the important role of communities and the community owner(s) (dominant players). Often these companies have a strong influence on the adoption and quality of standards.”

“The degree of adoption depends on many other things than just the quality of the standard.”

“So, although improving the standard itself is always a worthy goal, if the action to be transacted cannot be agreed upon, then adoption will always be limited.”

Even so, 83.3% thought a minimum quality level of the standard is needed for high adoption rates. A significant correlation is present for the following: In the cases where the adoption is rated better than expected, design choices of the standard have influenced adoption (P = 0.48; p = 0.00), and the adoption of the standard will be more successful if the quality is known, proven sufficient or improved (P = 0.42; p = 0.00). This suggests a dependence between adoption and the quality of the standard, which is also supported by case studies. Both the MISMO case within the mortgage industry (Markus et al. 2006) and the RosettaNet case within the electrotechnical industry (Boh et al. 2007) reported a similar relation between adoption and the content of the standard. Also, in a recent innovation-centric adoption and diffusion framework for standards, standards characteristics are included as an area that impacts adoption (Wapakabulo Thomas 2010). Our conclusion is that a standard’s quality is seen as a necessary but not a sufficient condition for adoption. But, will knowledge about a standard’s quality contribute to quality improvement? This is addressed by the fourth proposition.

Proposition 4

There is a need to make the quality of the standard visible by assessment.

The majority (54.2%) agreed with the statement that it is difficult to improve the standard if the quality is not known (14.6% disagreed). Another 85.5% considered it helpful to have some kind of instrument to make quality transparent, thereby supporting this proposition.

A notable 81.3% would use a quality measurement instrument, when available. It is interesting to see that developers of standards which focus on quality are potential users of such an instrument: Standards that have quality assurance as part of their current development process also have a minimum quality check in place (P = 0.57; p = 0.00), score high on the achieved interoperability (P = 0.32; p = 0.03), and will use an instrument to measure the quality when available (P = 0.40; p = 0.00). In addition, those respondents who agreed with the statement that improved quality might lead to improved interoperability thought that it is helpful to have some kind of instrument to gain insight into the quality of the standard (P = 0.30; p = 0.04). This goes even further than the proposition; not only is there a need to make the quality visible by assessment, but some kind of instrument to assess quality would also be welcomed.

Remarkably, the majority of respondents who already deploy a quality check before a standard’s release also use some kind of instrument to measure the quality of the standard (P = 0.39; p = 0.01), and they would welcome a newly developed instrument and would use it when available (P = 0.38; p = 0.01).

Finally, there is no negative correlation between the current use of an instrument to measure the quality of the standards, and whether a new instrument would be welcomed. This suggests that respondents see room for enhancement or improvement of their quality assurance.

Discussion

The results of our study indicate that quality of standards is not properly addressed in current standardization practice, and this reduces standards’ quality, and therefore interoperability. What are the possible reasons for this? A thorough analysis of such causes requires further research. At this point, however, we can present three possible explanations.

First, developing standards, much like enterprise interoperability, is not considered to be a profession yet (Oude Luttighuis and Folmer 2010). Even though considerable standardization experience and professionalism in formal standardization bodies is present, the semantic standards realm is characterized by a wide range of development processes. Most semantic standards are developed by a specific domain organization, and are outside of the traditional standard development organisations. This disperses standards development knowledge and experience and limits the proliferation of such experience and re-use of process and product components. This effect is increased by the fact that most standards developers are domain experts. Domain knowledge is crucial to standards development, but differs from general standardization expertise. Moreover, education and certification, so common for other professions, are hardly available or required in the standardization field. On the positive side, standards developers are intrinsically motivated (Teichmann 2010) and eager to improve the quality of their standards when appropriate knowledge and tools are available, as shown by the survey results. Adequate assessment of the impact of this factor would require further research and might yield opportunities for a more mature standardization profession.

Second, notwithstanding the role of standards in acquiring interoperability, they do not yet rank high on a CEO’s or a CIO’s priority list. Topics related to standardization, though, such as enterprise integration, business process improvement and reducing enterprise costs, have been priorities among many CIOs (McDonald 2010; Park and Ram 2004). However, this does not seem to result in standardization, as a topic on its own, being given high priority.

A third possible explanation of the survey results is that, even though standards developers may think interoperability could be improved, current interoperability levels satisfy current business needs. Thus there could be a discrepancy between the supply side (standards developers) and the demand side (end users) regarding standards quality and the importance of interoperability. This research focuses on the supply side, and therefore cannot reflect the viewpoint of the demand side. An imperfect standard (from the viewpoint of standards developers) might be quite acceptable to the end user. Lower interoperability levels might satisfy current needs. This argument nevertheless deserves some nuancing. The respondents surveyed are standards developers, who have diverse backgrounds but include standards users (Zhao et al. 2005). Some are employed by software vendors. Others work for user organizations. So, at least some user perspective may be expected to have been included in the survey. Needless to say, to assess the impact of imperfect standards and interoperability on the demand side, we need to extend our study to end users.

Conclusions & further research

Our main research question was:

Is there, based on the current standards development processes and experienced interoperability and adoption problems, a need to elicit the quality of semantic standards?

We interpret the survey results as a positive answer to this question. The results of the survey show that basic procedures for quality are in place in the standardization process. Most standards developers see a need for further improvement of the quality of standards and for instruments and tools that can aid in the assessment and measurability of standard quality. Figure 2 summarizes our conclusions.

Achieving inter-organizational interoperability may lead to significant cost savings, performance improvements and efficiency gains. A wealth of semantic standards have been developed for various industry sectors. We can conclude that there is a need for wider use of measures and tools to assess the quality of semantic standards. Currently there is no instrument available in the literature that enables the measurement of semantic standards quality (Folmer et al. 2009). A parallel can be drawn with the early days of Software Engineering when software development processes were informally defined and software product quality was not measured according to standard frameworks. Our current research is aimed at developing a framework for semantic interoperability standards, expressed in SMML as the domain specific language (Garcia et al. 2009). Such a framework should deal with both intrinsic aspects (the specification) as well as the situational aspects (external environment) of the standard. The quality of a standard is related to the adoption of the standard and achieved interoperability.

A widely adopted framework can make the quality of semantic standards assessable by standards developers and end users, i.e. the businesses that adopt and depend on semantic standards for interoperability. This is a much needed tool, as more and more new and competing semantic standards are being introduced. Without quality enhancement, standardization may become a failing paradigm, as argued by Cargill and Bolin (2007).

References

Bhattacharyya, G. K., & Johnson, R. A. (1977). Statistical concepts and methods. New York: Wiley.

Blind, K. (2004). The economics of standards; theory, evidence, policy. Cheltenham: Edward Elgar.

Boh, W. F., Soh, C., & Yeo, S. (2007). Standards development and diffusion: A case study of RosettaNet. Communications of the ACM, 50(12), 57–62.

Brunnermeier, S. B., & Martin, S. A. (2002). Interoperability costs in the US automotive supply chain. Supply Chain Management, 7(2), 71–82.

Cargill, C. F. (1989). Information technology standardization: Theory, process, and organizations. Bedford: Digital.

Cargill, C. F., & Bolin, S. (2007). Standardization: A failing paradigm. In S. Greenstein & V. Stango (Eds.), Standards and public policy (pp. 296–328). Cambridge: Cambridge University Press.

Creswell, J. W. (2009). Research design—Qualitative, quantitative, and mixed methods approaches (3rd ed.). Los Angeles: Sage.

De Vries, H. J. (2006). IT standards typology. In K. Jakobs (Ed.), Advanced topics in information technology standards and standardization research, vol. 1 (pp. 1–26). Hershey: Idea Group Publishing.

European Commission. (2009). Modernising ICT standardisation in the EU—The way forward. Retrieved 2010-01-20, from http://ec.europa.eu/enterprise/newsroom/cf/document.cfm?action=display&doc_id=3152&userservice_id=1&request.id=0.

Folmer, E. (2009). SemanticStandards.org. Retrieved 12–12, 2009, from www.semanticstandards.org.

Folmer, E., & Punter, M. (2011). Management and development model for open standards (BOMOS) version 2. The Hague: Netherlands Open in Connection.

Folmer, E., Berends, W., Oude Luttighuis, P., & Van Hillegersberg, J. (2009). Top IS research on quality of transaction standards, a structured literature review to identify a research gap. Paper presented at the 6th International Conference on Standardization and Innovation in Information Technology, Tokyo, Japan.

Gallaher, M. P., O’Conner, A. C., & Phelps, T. (2002). Economic impact assessment of the international standard for the exchange of product model data (STEP) in transportation equipment industries (No. RTI Project Number 07007.016).

Garcia, F., Ruiz, F., Calero, C., Bertoa, M. F., Vallecillo, A., Mora, B., et al. (2009). Effective use of ontologies in software measurement. The Knowledge Engineering Review, 24, 23–40.

Hasselbring, W. (2000). The role of standards for interoperating information systems. In K. Jakobs (Ed.), Information technology standards and standardization: A global perspective (pp. 116–130). Hershey: Idea Group Publishing.

ISO/IEC. (2001). ISO/IEC 9126–1 Software engineering—Product quality—Part 1: Quality model.

Juran, J. M., & Gryna, F. M. (Eds.). (1988). Juran’s quality control handbook (4th ed.). McGraw-Hill.

Lyytinen, K., & King, J. L. (2006). Standard making: A critical research frontier for information systems research. MIS Quarterly, 30, 405–411.

Markus, M. L., Steinfield, C. W., Wigand, R. T., & Minton, G. (2006). Industry-wide information systems standardization as collective action: The case of U.S. residential mortgage industry. MIS Quarterly, 30, 439–465.

Maxwell, J. A. (2005). Qualitative research design—An interactive approach, vol. 42 (2nd ed.). Thousand Oaks: Sage.

McDonald, M. P. (2010). Leading in times of transition: The 2010 CIO agenda. Retrieved 01-12-2010, from http://blogs.gartner.com/mark_mcdonald/2010/01/19/leading-in-times-of-transition-the-2010-cio-agenda/.

Nelson, M. L., Shaw, M. J., & Qualls, W. (2005). Interorganizational system standards development in vertical industries. Electronic Markets, 15(4), 378–392.

Oude Luttighuis, P., & Folmer, E. (2010). Equipping the enterprise interoperability problem solver. In Y. Charalabidis (Ed.), Interoperability in digital public services and administration; Bridging E-government and E-business (pp. 339–354). Hershey: Information Science Reference.

Park, J., & Ram, S. (2004). Information systems interoperability: What lies beneath? ACM Transactions on Information Systems, 22(4), 595–632.

Rada, R. (1993). Standards: The language for success. Communications of the ACM, 36(12), 17–23.

Rada, R., & Ketchell, J. (2000). Sharing standards: Standardizing the European information society. Communications of the ACM, 43(3), 21–25.

SAP. (2009). Industry-specific business maps. Retrieved 11–09, 2009, from http://www.sap.com/solutions/businessmaps.

Sherif, M. H., Egyedi, T. M., & Jakobs, K. (2005). Standards of quality and quality of standards for telecommunications and information technologies. Paper presented at the Proceedings of the 4th International Conference on Standardization and Innovation in Information Technology, 2005, Geneva.

Söderström, E. (2004). Formulating a general standards life cycle. Lecture Notes in Computer Science, 3084, 263–275.

Spivak, S. M., & Brenner, F. C. (2001). Standardization essentials: Principles and practice. New York: Dekker.

Steinfield, C. W., Wigand, R. T., Markus, M. L., & Minton, G. (2007). Promoting e-business through vertical IS standards: Lessons from the US home mortgage industry. In S. Greenstein & V. Stango (Eds.), Standards and public policy (pp. 160–207). Cambridge: Cambridge University Press.

Teichmann, H. (2010). Effectiveness of technical experts in international standardization. Paper presented at the 15th EURAS Annual Standardisation Conference “Service Standardization”, Lausanne.

Van Wessel, R. M. (2008). Realizing business benefits from company IT standardization; case study research into the organizational value of IT standards, towards a company IT standardization management framework. Tilburg: CentER Tilburg University.

Venkatraman, S., Bala, H., Venkatesh, V., & Bates, J. (2008). Six strategies for electronic medical records systems. Communications of the ACM, 51(11), 140–144.

Wapakabulo Thomas, J. (2010). Data-exchange standards and international organizations—Adoption and diffusion. Hershey: Information Science Reference.

Zhao, K., Xia, M., & Shaw, M. J. (2005). Vertical e-business standards and standards developing organizations: A conceptual framework. Electronic Markets, 15(4), 289–300.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Additional information

Responsible editor: Boris Otto

Appendices

Appendix A—survey design

The survey includes a number of general questions that are not specifically related to the research question; these are listed in Table 3.

In order to disambiguate the terms quality and semantic standards and to usher the respondent into the details of the survey, a general introduction text was added to the survey:

The main research question for this survey has been: Is there, based on the current standards development processes and experienced interoperability and adoption problems, a reason to develop more knowledge about quality of standards?

The scope of the survey has been limited to semantic standards, which include business transaction standards, ontologies, vocabularies, messaging standards, and vertical industry standards. In most cases, these semantic standards are based on XML syntax, but the core of such standards is their description of the meaning of data and process.

Respondents were expected to be involved in the development or maintenance of a semantic standard. The name of the semantic standard is asked for in question 1, as well as the intended purpose (interoperability problem) of the standard.

In all questions the term standard should be read as the standard that you are involved in. Another term often used in the questionnaire is quality. It is defined as a standard’s ability to achieve its purpose, in other words, its fitness for achieving semantic interoperability. This implies that quality deals with both intrinsic aspects (the specification) and situational aspects (external environment) of the standard.

Appendix B—survey results

Below, Tables 4, 5, 6, and 7 show the survey results for each of the four research issues, respectively.

Table 8 shows the results of a correlation analysis. The sixteen statements are projected on both axes, and the table gives the Pearson correlation coefficient (P) for each pair of statements. This coefficient measures the strength of the linear relation between two variables. A correlation is significant when its significance probability (p-value) is less than or equal to the significance level α (Bhattacharyya and Johnson 1977). The analysis was carried out at α = .05 and α = .01. Correlations that are highly significant at the .01 level are marked with **, and correlations that are significant at the .05 level are marked with *. Where the p-value establishes significance, the correlation coefficient (P) represents the strength of the linear relation between the two variables. A negative coefficient (−) indicates a negative relation: a higher score on one variable is associated with a lower score on the other.
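For readers who want to reproduce this kind of analysis, the following is a minimal sketch of how a Pearson coefficient, its two-sided p-value, and the */** marking could be computed. It is not the procedure or software used for Table 8, which the paper does not specify. The function names (`pearson_r`, `two_sided_p`, `stars`) are hypothetical, and the sample size of 34 in the usage note is an assumption taken from the number of standards surveyed; the actual number of responses per statement may differ. The p-value uses the standard t-transformation of the coefficient with n − 2 degrees of freedom, integrated numerically so that only the Python standard library is needed.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length score lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def t_pdf(t, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c - (df + 1) / 2 * math.log1p(t * t / df))

def two_sided_p(r, n, steps=2000):
    """Two-sided p-value for H0 'no linear relation', given r and sample size n."""
    if abs(r) >= 1.0:
        return 0.0
    df = n - 2
    t = abs(r) * math.sqrt(df / (1 - r * r))
    # Simpson's rule (steps must be even) for the t density over [0, t].
    h = t / steps
    area = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area *= h / 3
    # p = 2 * (1 - CDF(t)) and CDF(t) = 0.5 + area, so p = 1 - 2 * area.
    return max(0.0, 1 - 2 * area)

def stars(p):
    """Marking used in the text: ** at the .01 level, * at the .05 level."""
    if p <= 0.01:
        return "**"
    if p <= 0.05:
        return "*"
    return ""
```

As a usage illustration with assumed values: `stars(two_sided_p(0.5, 34))` yields `**`, `stars(two_sided_p(0.4, 34))` yields `*`, and `stars(two_sided_p(0.3, 34))` yields an empty string, since with 34 observations a coefficient of 0.3 does not reach the .05 level.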

Rights and permissions

Open Access This is an open access article distributed under the terms of the Creative Commons Attribution Noncommercial License (https://creativecommons.org/licenses/by-nc/2.0), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

About this article

Cite this article

Folmer, E., Oude Luttighuis, P. & van Hillegersberg, J. Do semantic standards lack quality? A survey among 34 semantic standards. Electron Markets 21, 99–111 (2011). https://doi.org/10.1007/s12525-011-0058-y
