Abstract

Deficits in the perception of social stimuli may contribute to the characteristic impairments in social interaction in high functioning autism (HFA). Although the cortical processing of voice is abnormal in HFA, it is unclear whether this gives rise to impairments in the perception of voice gender. Twenty children with HFA and 20 matched controls were presented with voice fragments that were parametrically morphed in gender. No differences were found in the perception of gender between the two groups of participants, but response times differed significantly. The results suggest that the perception of voice gender is not impaired in HFA, which is consistent with behavioral findings of an unimpaired voice-based identification of age and identity by individuals with autism. The differences in response times suggest that individuals with HFA use different perceptual approaches from those used by typically developing individuals.

Although impairments in social interaction, verbal and non-verbal communication, and repetitive-restricted behavior are the more conspicuous defining characteristics of autism (American Psychiatric Association 1994), atypical perceptual abilities and responses to stimuli are other characteristic features (Gustafsson 1997; Happe 1999). Perceptual discriminative abilities in the auditory and visual domains have been found to be either enhanced or diminished in autism (Bertone et al. 2005; Samson et al. 2006). Many individuals with autism show aversive reactions to everyday sounds (Kern et al. 2006; Rosenhall et al. 1999) and to tactile (Cascio et al. 2008) and visual stimuli (Talay-Ongan and Wood 2000).

Knowledge of how stimuli are processed in autism is important for both theoretical and clinical reasons. For instance, insight into atypical perceptual features may provide a powerful theoretical framework for the perceptual impairments and their neural etiologies in autism (Bertone and Faubert 2006; Mottron et al. 2006). At a clinical level, social perception, such as perception of voices and faces, is an important channel for non-verbal communication (Boucher et al. 2000) since both voices and faces contain information about a person’s gender, age, and emotional states. Typically developing neonates respond preferentially to voices (Eisenberg 1976) and can recognize the affective content of vocal tones at the age of 6 months (Walker-Andrews 1988), underlining the developmental importance of intact perception of social stimuli. In contrast, children with autism show no preference for their mother’s voices as opposed to other speech stimuli (Klin 1991) and show no preference for speech sounds as opposed to electronic sounds (Kuhl et al. 2005).

Some authors have argued that the impairments of social perception in autism are an extension of an impaired Theory of Mind (ToM) in autism (Golan et al. 2006; Rutherford et al. 2002). The ToM theory states that people with autism have a selective difficulty in inferring the mental states of others, as measured by False Belief tasks (Baron-Cohen et al. 1985), the Reading the Mind in the Eyes Test (Baron-Cohen et al. 2001), and the Reading the Mind in the Voice Test (Rutherford et al. 2002). The latter test requires the affective content of vocalizations to be named, which is more difficult for people with autism. However, these tests do not assess perceptual capabilities but rather test socioemotional and mentalizing skills in autism.

In the visual domain, several studies have found that when individuals with autism process facial expressions (Critchley et al. 2000) or neutral faces (Pierce et al. 2001; Schultz et al. 2000), cortical areas outside the fusiform face area are activated, areas that are normally activated during the processing of non-face objects. In a behavioral study with familiar faces, children with autism were less able to identify familiar faces than their typically developing counterparts (Boucher et al. 1998). Their memory for neutral faces was found to be impaired as well (Hauck et al. 1998). Yet, these studies did not address perceptual abilities per se. That is, these findings may reflect different perceptual approaches rather than perceptual deficits. Support for the theory that individuals with autism have a different perceptual approach comes from the finding that when children with autism look at familiar faces, they pay attention to facial features different from those looked at by typically developing children (Langdell 1978). Moreover, the ability of children with HFA to recognize faces is affected less by face inversion than it is in controls (Hobson et al. 1988). This suggests that faces are processed analytically in autism rather than holistically, as is the case in typically developing children.

While less research attention has been paid to the processing of auditory social stimuli, the studies performed so far have confirmed the predictions of ToM that mental state inferences based on vocalizations are impaired in autism (Golan et al. 2006; Rutherford et al. 2002). Further, the cortical processing of neutral voices (Gervais et al. 2004) and complex voice-like sounds by individuals with autism (Boddaert et al. 2003, 2004) was found to occur outside the superior temporal sulcus area, which is the voice-selective area in normal individuals. In contrast, non-vocal sounds were processed identically in individuals with autism and controls. Thus, the pattern of findings for the cortical processing of voices is remarkably similar to that for the cortical processing of faces in autism. Yet, behavioral studies have not provided clear evidence of an impaired perception of auditory social stimuli that extends beyond mental state related impairments. As with the identification of familiar faces, children with autism are less able than controls to recognize familiar voices (Boucher et al. 1998). Yet, it is not clear whether these differences reflect perceptual-discriminatory impairments or post-sensory high-level processes. Evidence suggesting that different high-level processes are activated in autism comes from research showing that the listening preferences of infants with autism tend to be non-socially directed (Klin 1991; Kuhl et al. 2005). Moreover, children with autism fail to orient to naturally occurring social stimuli, including verbal and non-verbal stimuli (Dawson et al. 1998).

It is not clear to what extent the abnormal cortical processing of voices reflects perceptual impairments, such as gender identification. In the visual domain, gender perception is affected in autism. In a paradigm that required matching videotaped sequences to photographs of men and women, individuals with autism were found to have difficulty identifying a person’s gender from their face (Hobson 1987). In a more direct paradigm, children with autism had greater difficulty identifying the gender of faces in silent movie fragments than controls (Giovannelli 2006). Yet, in the auditory domain, impairments in social perception are mainly due to the inability to recognize emotion in voices (Golan et al. 2006; Rutherford et al. 2002).

The aim of the current study was to investigate whether the abnormal cortical processing of voices in HFA results in an impaired ability to identify the gender of speakers from their voices. Therefore, we designed an auditory discrimination task in which voices were parametrically altered in gender, such that female voices gradually changed to male voices and vice versa. This approach would be very sensitive for detecting differences in the perception of gender, since the parametric manipulation avoids ceiling effects that might arise from using just two categories of natural voices (i.e. male or female) without gradual overlap. We presumed that differences in the perceived gender of a voice between children with autism and controls would reflect perceptual-discriminatory capabilities. Furthermore, we recorded response times and presumed that differences in response times would reflect the underlying processes: that is, we presumed that longer response times would reflect greater task difficulty. Specifically, longer response times for the control group would imply that the task itself is more difficult, while longer reaction times for the HFA group would imply that the participants with HFA find the task more difficult.

Methods

Participants

Twenty children and adolescents with HFA (ages 12–17) participated in this study as well as 20 controls (ages 12–17) matched for age, gender and IQ. Audiometric screening found all participants to have normal hearing thresholds (<20 dB hearing loss) across the audiometric frequencies (250–8000 Hz) and middle ear function was within normal limits. Handedness was assessed using the Edinburgh Handedness Inventory (Oldfield 1971). All participants were assessed for verbal IQ, performance IQ, and full-scale IQ, using the Wechsler Intelligence Scale for Children III (WISC III) (Wechsler 2000; Wechsler 2002). In the control group, IQ was prorated using four subtests of the WISC III (Vocabulary, Similarities, Block Design and Picture Completion) (Sattler 2001). No significant differences in age, gender, handedness, and IQ measures were found between the two groups (see Table 1).

The participants with HFA were recruited from referrals to the outpatient unit of Karakter Child and Adolescent Psychiatry University Center Nijmegen. The clinical diagnosis of autism was established according to the DSM-IV criteria for autistic disorder (American Psychiatric Association 1994) on the basis of a series of clinical assessments which included a detailed developmental history, clinical observation, and medical work-up by a child psychiatrist, and cognitive testing by a clinical child psychologist. Clinical diagnoses were confirmed with the Autism Diagnostic Interview—Revised (Lord et al. 1994), as assessed by a clinical psychologist trained to research standards who had not been involved in the diagnostic process. Exclusion criteria were any general medical condition affecting brain function, neurological disorders, and substance abuse.

Control participants were recruited from local schools. To exclude psychiatric disorders or learning problems, CBCL and TRF questionnaires (Achenbach 1991) were completed by the parents/caretakers and school teachers. None of the control participants had scores on the CBCL and TRF in the clinical range. The study was approved by the Medical Ethical Committee (Commissie Mensgebonden Onderzoek Arnhem Nijmegen). Informed consent was obtained from all participants and their parents.

Procedure

The second author administered the voice gender perception protocol and performed audiometric screening in one 45-min session. Participants were tested individually. In the perception protocol, sound fragments consisting of single words were presented in a sound shielded room using the stimulus delivery software package Presentation on a personal computer (Dell 810). A closed circumaural headphone (Sennheiser EH250) delivered the sounds at a fixed normal speech volume of approximately 60 dB. Participants were instructed to listen to the voice fragments and to choose, by pushing a button, whether the fragment was of a male or female voice. Participants were instructed to react as quickly and accurately as they could. Response times and the psychometric function of gender classification were recorded online.

Instruments

Since voice-based gender inferences are usually unambiguous, ceiling effects of natural voice classification were anticipated. Therefore, the acoustic characteristics of the voice fragments were parametrically manipulated to alter the encapsulated gender information using the software package Praat (Boersma, P. and Weenink, D. Praat: doing phonetics by computer. Version 4.4.12 www.praat.org). Perception of gender in human voices is based on two main characteristics: median pitch and formants. The median pitch is predominantly determined by the length of the vocal cords, such that the longer vocal cords of men give rise to lower sounds. The resonant frequencies, or formants, are mainly determined by the size and shape of the vocal tract, including the tongue, pharynx, and laryngeal, oral and nasal cavities. The smaller vocal tract in women yields a different distribution of formants, making it possible to correctly classify a speaker’s gender even when the median pitch is atypical, for example, a man with a high voice or a woman with a low voice.

To create voice fragments that gradually changed from masculine to feminine and vice versa, single word speech fragments were taken from radio plays and transformed into 10 subsequent categories by shifting the formant ratio and median pitch in equal amounts, to a maximum of 1.2 formant-shift-ratio and +250 Hz median-pitch-shift to convert male voices into feminine voices, and to a maximum of 1/1.2 formant-shift-ratio and −140 Hz median-pitch-shift to convert female voices into masculine voices. Only neutral non-emotional single word speech fragments were selected. The speech fragments had an average duration of 1.5 s, with a 2-s pause between subsequent fragments. All voice fragments were played in random order so that the preceding voice fragment was uninformative for future gender judgments. In total, 400 voice fragments were used, with 40 fragments being played for each morphing category: 20 originally male and 20 originally female fragments. The transformed fragments were tested among 8 psychology students to ensure that the transformed male fragments indeed sounded feminine and vice versa. The transformed male voices were found to sound feminine and vice versa, but as the transformation increased further, the voices tended to sound more computer-like and less human. The more computer-like sound quality likely reflects artifacts that arise from the effects of phase incoherence, unnatural phase dispersion, and high spectral variance (Hui Ye Young 2004).
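The morphing schedule described above can be sketched in a few lines of Python. This is only an illustration of the parameter arithmetic, not the Praat procedure used in the study. It assumes that the 10 categories are linearly spaced and that category 10 carries the stated maximum shift; the text says only that the shifts were applied "in equal amounts", so the exact spacing and the treatment of category 1 are assumptions. The names `MorphStep` and `morph_schedule` are hypothetical.

```python
from dataclasses import dataclass

N_CATEGORIES = 10            # morphing categories, from the text
FRAGMENTS_PER_CATEGORY = 40  # 20 originally male + 20 originally female


@dataclass(frozen=True)
class MorphStep:
    category: int          # 1..10
    formant_ratio: float   # multiplicative formant shift (1.0 = unchanged)
    pitch_shift_hz: float  # additive median-pitch shift in Hz


def morph_schedule(max_formant_ratio, max_pitch_shift_hz, n_categories=N_CATEGORIES):
    """Return one MorphStep per category.

    ASSUMPTION: steps are linearly spaced and the last category equals the
    maximum shift; the paper does not spell out the exact spacing.
    """
    steps = []
    for k in range(1, n_categories + 1):
        frac = k / n_categories
        steps.append(
            MorphStep(
                category=k,
                formant_ratio=1.0 + frac * (max_formant_ratio - 1.0),
                pitch_shift_hz=frac * max_pitch_shift_hz,
            )
        )
    return steps


# Maxima taken from the text: 1.2 and +250 Hz for male voices,
# 1/1.2 and -140 Hz for female voices.
male_to_female = morph_schedule(1.2, 250.0)
female_to_male = morph_schedule(1 / 1.2, -140.0)

for step in male_to_female:
    print(step)
```

Under these assumptions each male-to-female step raises the median pitch by 25 Hz and each female-to-male step lowers it by 14 Hz, and 10 categories of 40 fragments give the 400 fragments reported.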

Statistical Analysis

This study focused on two outcome parameters: ‘accuracy of gender perception’ and ‘response time for gender perception’. These two dependent variables were combined into one multivariate analysis of variance (MANOVA) to protect against inflation of the alpha error. Independent variables were Participant group as a between-subject variable and Manipulation and Gender as within-subject variables. Manipulation consisted of the 10 increasing steps in which voices typical for one gender were transformed to the other, while Gender represented the transformation of either originally masculine or originally feminine voices. The factor Measure represented the two dependent variables ‘accuracy of gender perception’ and ‘response time for gender perception’. SPSS for Windows (Release 14.0) was used for statistical analysis and significance tests were two-tailed and evaluated at an alpha level of 0.05.
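As a quick check on this design, the degrees of freedom for a within-subject factor crossed with a between-subject factor can be computed directly. The sketch below uses the standard univariate (sphericity-assumed) formulas, which reproduce the F(9,342) and F(1,38) degrees of freedom reported in the Results; the function names are hypothetical and the code does not reproduce the SPSS analysis itself.

```python
def mixed_design_df(n_participants, n_groups, n_levels):
    """Degrees of freedom for a within x between interaction.

    Univariate repeated-measures formulas (sphericity assumed):
      effect df = (levels - 1) * (groups - 1)
      error df  = (levels - 1) * (participants - groups)
    """
    effect_df = (n_levels - 1) * (n_groups - 1)
    error_df = (n_levels - 1) * (n_participants - n_groups)
    return effect_df, error_df


def single_contrast_df(n_participants, n_groups):
    """Degrees of freedom for one within-subject contrast (e.g. the linear
    trend) compared between groups."""
    return n_groups - 1, n_participants - n_groups


# 40 participants, 2 groups, 10 Manipulation steps (values from the text).
print(mixed_design_df(40, 2, 10))  # (9, 342)
print(single_contrast_df(40, 2))   # (1, 38)
```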

Results

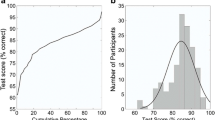

Table 2 shows a summary of the results. As expected, we found a significant main effect for Manipulation: morphed male voices were indeed perceived as feminine and morphed female voices were perceived as masculine by both groups of participants. Most important, however, was a significant Manipulation by Measure by Gender by Participant group interaction effect. This four-way interaction effect indicated task manipulation effects between the participant groups that differed between the measurements (‘accuracy of gender perception’ and ‘response time for gender perception’). To explore these differences, four separate MANOVAs were run for the dependent variables ‘accuracy of gender perception’ and ‘response time for gender perception’ with either male voice or female voice. The MANOVAs with accuracy of gender perception of manipulated male voices, accuracy of gender perception of manipulated female voices, and response time for gender perception of manipulated female voices did not reveal any group effects (see Table 3). Thus, the finding of main interest is that the perception of morphed voices did not differ between the two participant groups, indicating preserved discriminatory skills for gender information in voices in autism (see also Figs. 1 and 2).

Gender perception: male to female voice. The figure depicts the percentage of voice fragments perceived as male as a function of voice manipulation. In categories 1–4, the majority of voice fragments were perceived as male but the proportion decreased gradually, while in the more transformed categories 5–10 the majority of voice fragments were perceived as female. The transition point lies between 4 and 5. There was no difference in voice perception between the two groups of participants (F(9,342) = 1.011, p = 0.431).

Gender perception: female to male voice. The figure depicts the percentage of voice fragments perceived as female as a function of voice manipulation. In categories 1–6, the majority of voice fragments were perceived as female. The transition point lies between 6 and 7. There was no difference in voice perception between the two groups of participants (F(9,342) = 1.126, p = 0.343).
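A transition point of the kind reported in these two captions can be located as the category at which the response curve crosses 50%. The sketch below interpolates linearly between adjacent categories. The function name `transition_point` is hypothetical and the percentages are invented for illustration; they are not the study's data, and the paper does not state how its transition points were read off.

```python
def transition_point(percent_by_category, criterion=50.0):
    """Fractional category (1-based) at which a falling curve crosses the criterion.

    percent_by_category[i] is the percentage of fragments in category i + 1
    that were classified as the ORIGINAL gender. Returns None if the curve
    never crosses the criterion.
    """
    for i in range(len(percent_by_category) - 1):
        upper = percent_by_category[i]
        lower = percent_by_category[i + 1]
        if upper >= criterion > lower:
            # Linear interpolation between category i + 1 and category i + 2.
            return (i + 1) + (upper - criterion) / (upper - lower)
    return None


# ILLUSTRATIVE values only (not measured data): percent "male" responses
# for male voices morphed toward female, categories 1..10.
example_curve = [95, 90, 80, 60, 40, 25, 15, 10, 5, 5]
print(transition_point(example_curve))  # 4.5
```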

However, the MANOVA on response time for gender perception of manipulated male voices showed a significant Manipulation by Participant group effect (F(9,342) = 2.349, p = 0.014). Tests of the within-subjects contrast showed that the groups differed in the extent of linearity (F(1,38) = 6.478, p = 0.015). Figure 3 shows that the response times in the control group increased linearly, whereas the response curve in the HFA group resembled a quadratic function, first increasing and then flattening at the top to decrease again. This might be indicative of different perceptual processes in the two groups. As the extent of voice manipulation increased, the ‘naturalness’ of the voices decreased, which is reflected by a linear increase in the response time in the control group. In contrast, in the HFA group the response times seemed to be mainly determined by the task difficulty, with the highest response times occurring halfway through the test, when the gender of the voice fragments was least determined (Fig. 3).

Response times for gender perception: male to female. It depicts the response time per voice category for transformed male voices. The response times differ significantly for the extent of linearity (F(1,38) = 6.478; p = 0.015), indicating that the perceptual process was different in individuals with autism and typically developing controls
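The linearity contrast reported here can be illustrated with orthogonal polynomial weights. In the sketch below, each participant's 10 mean response times are reduced to one linear and one quadratic trend score, and the groups are then compared on the linear score; with two groups, the squared pooled-variance t statistic corresponds to an F test with (1, 38) degrees of freedom. The response times are synthetic and generated only to mimic the described shapes (linear rise versus inverted U). The function names are hypothetical and this is not the SPSS procedure used in the study.

```python
import numpy as np


def polynomial_contrasts(n_levels):
    """Orthonormal linear and quadratic contrast weights for equally spaced levels."""
    x = np.arange(1, n_levels + 1, dtype=float)
    design = np.vander(x, 3, increasing=True)  # columns: 1, x, x^2
    q, _ = np.linalg.qr(design)                # orthonormalize the columns
    linear, quadratic = q[:, 1].copy(), q[:, 2].copy()
    # QR leaves the sign arbitrary; fix it so that a rising profile gives a
    # positive linear score and a U-shaped profile a positive quadratic score.
    if linear[-1] < 0:
        linear = -linear
    if quadratic[0] < 0:
        quadratic = -quadratic
    return linear, quadratic


def pooled_t(a, b):
    """Independent-samples t statistic with pooled variance."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * a.var(ddof=1) + (nb - 1) * b.var(ddof=1)) / (na + nb - 2)
    return (a.mean() - b.mean()) / np.sqrt(pooled_var * (1 / na + 1 / nb))


# SYNTHETIC response times in ms (20 participants x 10 categories per group).
rng = np.random.default_rng(0)
steps = np.arange(1, 11)
controls = 600 + 20 * steps + rng.normal(0, 40, size=(20, 10))          # linear rise
hfa = 700 - 5 * (steps - 5.5) ** 2 + rng.normal(0, 40, size=(20, 10))   # inverted U

linear, quadratic = polynomial_contrasts(10)
t_linear = pooled_t(controls @ linear, hfa @ linear)
print(f"Group x linear trend: F(1, 38) = {t_linear ** 2:.2f}")
print(f"Mean quadratic score, controls: {np.mean(controls @ quadratic):.1f}")
print(f"Mean quadratic score, HFA: {np.mean(hfa @ quadratic):.1f}")
```

A clearly negative quadratic score combined with a near-zero linear score is the signature of the inverted-U profile described for the HFA group.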

The response times for transformed female voices showed a greater variation than those for transformed male voices, which may be due to the fact that the acoustical parameters of female voices have a greater interspeaker variability than those of male voices; the Discussion section considers the potential origin of this difference in more detail (Fig. 4).

Discussion

In the current study we investigated the auditory social perceptual capabilities of individuals with HFA and age, IQ, and gender-matched typically developing controls, using voice fragments that were parametrically manipulated to change the speaker’s gender. Although cortical voice processing has been found to be abnormal in autism (Gervais et al. 2004), it was not clear whether this reflected an impaired ability to perceive the social characteristics of voices in autism. In our voice gender paradigm, we found no differences in voice gender perception between children and adolescents with HFA and typically developing children and adolescents. Since we used a sensitive parametric study design to avoid ceiling effects, these negative findings indicate that individuals with HFA have an intact ability to discern the gender of a voice. This suggests that the impairments of auditory social perception shown by these individuals are confined to mentalization/emotion related impairments as predicted by impaired ToM in HFA. Extraction of gender, identity, and age information from voices is not impaired in HFA. Rutherford et al. (2002) found that people with autism could adequately infer speakers’ age from vocalizations, although they did have difficulties perceiving the affective content. Boucher and colleagues (2000) reported a comparable ability to discriminate unfamiliar voices between participants with autism and participants without autism. We furthermore found significant differences between the participant groups in the response times for the transformed male voices. While the response time increased linearly with increasing male voice manipulation in the controls, the response time curve of the HFA group resembled a parabola, possibly indicating that different higher-level processes were used to perform the perceptual task.
The involvement of different higher level processes during the performance of social perceptual tasks in autism has been reported, mostly related to directing attention to socially relevant clues (Dawson et al. 1998; Pierce et al. 1997) and analytic or piecemeal rather than holistic processing of social stimuli (Pelphrey et al. 2002) (for a review see Jemel et al. 2006). Thus, people with HFA may use a different, less-socially directed, perceptual approach even though the perception of social stimuli per se is not affected.

Gervais and colleagues proposed that an abnormal processing of voices might be one of the factors underlying the social anomalies in autism because (1) voices provide relevant social information about others, and (2) they found abnormal cortical activation in the voice selective superior temporal sulcus (STS) in autism for voice sounds with neutral affect compared to environmental sounds (Gervais et al. 2004). The STS is part of the hierarchically organized auditory system and is thought to be specialized for extracting auditory object features, such as speaker-related clues, and for transmission of this information to other areas for multimodal integration (Belin et al. 2000). The problems with extracting social information from vocalizations in autism seem to be confined to the perception of affective content, while gender, age, and identity perception seem unimpaired. Since Gervais et al. used voice fragments with neutral affect, it seems unlikely that the cortical processing abnormalities observed reflected an impaired perception of affect in autism. Then, how can the discrepancy between the cortical perceptual pattern and the behavioral perceptual pattern in HFA be explained? First, in general, cortical processing is not equivalent to behavioral performance in a one-to-one manner, as exemplified by the fact that children with a hemispherectomy in early life may show a remarkable degree of sensorimotor function (Holloway et al. 2000). Second, cortical activation may be less strongly correlated with behavioral performance in individuals with autism than in typically developing individuals because different perceptual approaches may activate other cortical areas rather than give rise to perceptual deficits per se (Jemel et al. 2006). 
Evidence for this assumption comes from data on face processing in autism, in which the perceptual approach was studied using partially covered photographs of faces (Joseph and Tanaka 2003), inverted faces (Teunisse and de Gelder 2003) and infrared eye-trackers (Klin et al. 2002; Pelphrey et al. 2002). These studies support the idea that people with autism have a locally oriented perception to facial components and utilize different scan paths that focus more on non-relevant features, such as ears or hair, and on lower regions of the face than controls, while perceptual abilities need not be impaired (Jemel et al. 2006). Further evidence for the idea that perceptual approaches mediate abnormal cortical activation in autism comes from the finding that activation of the fusiform gyrus is correlated with the amount of time spent fixating on the eyes of face stimuli in an fMRI task (Dalton et al. 2005).

The results of the current study, in which different response times for the transformed male voices were found, are consistent with individuals with HFA having a different perceptual approach from typically developing individuals. When performing a social discrimination task, participants with autism were equally able to identify the gender of voice fragments and the response time curve seemed to be a function of task difficulty: fast response times at both ends of the psychometric curve when gender was unambiguous and slower response times halfway along the curve when gender was at its changing point and thus most ambiguous. In contrast, in the control group, the response time seemed to be a function of voice manipulation and increased as the naturalness of the voice fragments decreased.

In the present study, some limitations have to be taken into account. First, the response times for the transformed female voices were more variable than those for male voices in both groups of participants. This could be due to the nature of the stimuli, that is, morphing male to female voices gives a smoother transition than morphing female to male voices. Indeed, there are acoustic differences between male and female voices that could give rise to different ‘morphing characteristics’ (Mendoza et al. 1996). The spectral tilt of female voices is lower than that of male voices as a consequence of greater levels of aspiration noise, which causes the female voice to have a more “breathy” quality than the male voice (Mendoza et al. 1996). Furthermore, male voices show less interspeaker variation in spectral tilt, aspiration noise, and first-formant bandwidth, probably as a consequence of more complete glottal closure in males, leading to less energy loss at the glottis (Hanson and Chuang 1999). Thus, the greater variation in acoustic parameters in female voices may make it more difficult to transform female voices into male voices, which are characterized by a relative absence of spectral tilt, aspiration noise, and first formant bandwidth variation. The greater variation in response time in both participant groups for the transformed female voice fragments (as opposed to the male voice fragments) may thus be a reflection of the greater variety of acoustic parameters in female voices. Second, future studies might incorporate additional variables, such as measures of Theory of Mind, to examine whether the different perceptual pattern observed in the current study can be explained by a difference in mentalizing ability between the two groups of participants. Third, possible differences in attention between the two groups of participants could give rise to different response patterns.
Yet, potential differences in attention between the two groups of participants in the current study would be evident as differences in response time. Since the average response times did not differ between the groups, overall differences in attention are not likely to have influenced the results.

To conclude, the difference in response times between participants with HFA and typically developing participants could be interpreted as a consequence of different perceptual processes in HFA analogous to the different perceptual processes involved in face recognition in these individuals, in combination with the absence of impairments in extracting social information from voices. The concept that individuals with HFA have intact perceptual capabilities but different perceptual processes has implications for psychological models of HFA, since research should not selectively focus on whether people with autism are able to perceive social stimuli, but rather focus on whether people with autism direct their attention toward relevant features of social stimuli in real-life situations.

References

Achenbach, T. M. (1991). Manual for the child behavior checklist. Burlington: University of Vermont.

American Psychiatric Association. (1994). Diagnostic and statistical manual of mental disorders. Washington, DC: American Psychiatric Association.

Baron-Cohen, S., Leslie, A. M., & Frith, U. (1985). Does the autistic-child have a theory of mind. Cognition, 21, 37–46.

Baron-Cohen, S., Wheelwright, S., Hill, J., Raste, Y., & Plumb, I. (2001). The “Reading the Mind in the Eyes” test revised version: A study with normal adults, and adults with Asperger syndrome or high-functioning autism. Journal of Child Psychology and Psychiatry and Allied Disciplines, 42, 241–251.

Belin, P., Zatorre, R. J., Lafaille, P., Ahad, P., & Pike, B. (2000). Voice-selective areas in human auditory cortex. Nature, 403, 309–312.

Bertone, A., & Faubert, J. (2006). Demonstrations of decreased sensitivity to complex motion information not enough to propose an autism-specific neural etiology. Journal of Autism and Developmental Disorders, 36, 55–64.

Bertone, A., Mottron, L., Jelenic, P., & Faubert, J. (2005). Enhanced and diminished visuo-spatial information processing in autism depends on stimulus complexity. Brain, 128, 2430–2441.

Boddaert, N., Belin, P., Chabane, N., Poline, J. B., Barthelemy, C., Mouren-Simeoni, M. C., Brunelle, F., Samson, Y., & Zilbovicius, M. (2003). Perception of complex sounds: Abnormal pattern of cortical activation in autism. American Journal of Psychiatry, 160, 2057–2060.

Boddaert, N., Chabane, N., Belin, P., Bourgeois, M., Royer, V., Barthelemy, C., Mouren-Simeoni, M. C., Philippe, A., Brunelle, F., Samson, Y., & Zilbovicius, M. (2004). Perception of complex sounds in autism: Abnormal auditory cortical processing in children. American Journal of Psychiatry, 161, 2117–2120.

Boucher, J., & Lewis, V. (1992). Unfamiliar face recognition in relatively able autistic-children. Journal of Child Psychology and Psychiatry and Allied Disciplines, 33, 843–859.

Boucher, J., Lewis, V., & Collis, G. (1998). Familiar face and voice matching and recognition in children with autism. Journal of Child Psychology and Psychiatry, 39, 171–181.

Boucher, J., Lewis, V., & Collis, G. M. (2000). Voice processing abilities in children with autism, children with specific language impairments, and young typically developing children. Journal of Child Psychology and Psychiatry, 41, 847–857.

Cascio, C., McGlone, F., Folger, S., Tannan, V., Baranek, G., Pelphrey, K. A., & Essick, G. (2008). Tactile perception in adults with autism: A multidimensional psychophysical study. Journal of Autism and Developmental Disorders, 38, 127–137.

Critchley, H. D., Daly, E. M., Bullmore, E. T., Williams, S. C. R., Van Amelsvoort, T., Robertson, D. M., Rowe, A., Phillips, M., McAlonan, G., Howlin, P., & Murphy, D. G. M. (2000). The functional neuroanatomy of social behaviour - changes in cerebral blood flow when people with autistic disorder process facial expressions. Brain, 123, 2203–2212.

Dalton, K. M., Nacewicz, B. M., Johnstone, T., Schaefer, H. S., Gernsbacher, M. A., Goldsmith, H. H., Alexander, A. L., & Davidson, R. J. (2005). Gaze fixation and the neural circuitry of face processing in autism. Nature Neuroscience, 8, 519–526.

Dawson, G., Meltzoff, A. N., Osterling, J., Rinaldi, J., & Brown, E. (1998). Children with autism fail to orient to naturally occurring social stimuli. Journal of Autism and Developmental Disorders, 28, 479–485.

Eisenberg, R. (1976). Auditory competence in early life: The roots of communicative behavior. Baltimore: University Park Press.

Gervais, H., Belin, P., Boddaert, N., Leboyer, M., Coez, A., Sfaello, I., Barthelemy, C., Brunelle, F., Samson, Y., & Zilbovicius, M. (2004). Abnormal cortical voice processing in autism. Nature Neuroscience, 7, 801–802.

Giovannelli, J. (2006). Face processing abilities in children with autism. Dissertation Abstracts International: The Sciences and Engineering, 67, 3450.

Golan, O., Baron-Cohen, S., & Hill, J. (2006). The Cambridge mindreading (CAM) face-voice battery: Testing complex emotion recognition in adults with and without Asperger syndrome. Journal of Autism and Developmental Disorders, 36, 169–183.

Gustafsson, L. (1997). Inadequate cortical feature maps: A neural circuit theory of autism. Biological Psychiatry, 42, 1138–1147.

Hanson, H. M., & Chuang, E. S. (1999). Glottal characteristics of male speakers: Acoustic correlates and comparison with female data. Journal of the Acoustical Society of America, 106, 1064–1077.

Happe, F. (1999). Autism: Cognitive deficit or cognitive style? Trends in Cognitive Sciences, 3, 216–222.

Hauck, M., Fein, D., Maltby, N., Waterhouse, L., & Feinstein, C. (1998). Memory for faces in children with autism. Child Neuropsychology, 4, 187–198.

Hobson, R. P. (1987). The autistic child’s recognition of age- and sex-related characteristics of people. Journal of Autism and Developmental Disorders, 17, 63–79.

Hobson, R. P., Ouston, J., & Lee, A. (1988). What’s in a face? The case of autism. British Journal of Psychology, 79, 441–453.

Holloway, V., Gadian, D. G., Vargha-Khadem, F., Porter, D. A., Boyd, S. G., & Connelly, A. (2000). The reorganization of sensorimotor function in children after hemispherectomy: A functional MRI and somatosensory evoked potential study. Brain, 123, 2432–2444.

Ye, H., & Young, S. (2004). High quality voice morphing. In International conference on acoustics, speech, and signal processing (Vol. 1, pp. 9–12).

Jemel, B., Mottron, L., & Dawson, M. (2006). Impaired face processing in autism: Fact or artifact? Journal of Autism and Developmental Disorders, 36, 91–106.

Joseph, R. M., & Tanaka, J. (2003). Holistic and part-based face recognition in children with autism. Journal of Child Psychology and Psychiatry and Allied Disciplines, 44, 529–542.

Kern, J. K., Trivedi, M. H., Garver, C. R., Grannemann, B. D., Andrews, A. A., Savla, J. S., Johnson, D. G., Mehta, J. A., & Schroeder, J. L. (2006). The pattern of sensory processing abnormalities in autism. Autism, 10, 480–494.

Klin, A. (1991). Young autistic children’s listening preferences in regard to speech: A possible characterization of the symptom of social withdrawal. Journal of Autism and Developmental Disorders, 21, 29–42.

Klin, A., Jones, W., Schultz, R., Volkmar, F., & Cohen, D. (2002). Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Archives of General Psychiatry, 59, 809–816.

Kuhl, P. K., Coffey-Corina, S., Padden, D., & Dawson, G. (2005). Links between social and linguistic processing of speech in preschool children with autism: Behavioral and electrophysiological measures. Developmental Science, 8, F1–F12.

Langdell, T. (1978). Recognition of faces: An approach to the study of autism. Journal of Child Psychology and Psychiatry and Allied Disciplines, 19, 255–268.

Lord, C., Rutter, M., & Le Couteur, A. (1994). Autism diagnostic interview-revised: A revised version of a diagnostic interview for caregivers of individuals with possible pervasive developmental disorders. Journal of Autism and Developmental Disorders, 24, 659–685.

Mendoza, E., Valencia, N., Munoz, J., & Trujillo, H. (1996). Differences in voice quality between men and women: Use of the long-term average spectrum (LTAS). Journal of Voice, 10, 59–66.

Mottron, L., Dawson, M., Soulieres, I., Hubert, B., & Burack, J. (2006). Enhanced perceptual functioning in autism: An update, and eight principles of autistic perception. Journal of Autism and Developmental Disorders, 36, 27–43.

Oldfield, R. C. (1971). The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia, 9, 97–113.

Pelphrey, K. A., Sasson, N. J., Reznick, J. S., Paul, G., Goldman, B. D., & Piven, J. (2002). Visual scanning of faces in autism. Journal of Autism and Developmental Disorders, 32, 249–261.

Pierce, K., Glad, K. S., & Schreibman, L. (1997). Social perception in children with autism: An attentional deficit? Journal of Autism and Developmental Disorders, 27, 265–282.

Pierce, K., Muller, R. A., Ambrose, J., Allen, G., & Courchesne, E. (2001). Face processing occurs outside the fusiform ‘face area’ in autism: Evidence from functional MRI. Brain, 124, 2059–2073.

Rosenhall, U., Nordin, V., Sandstrom, M., Ahlsen, G., & Gillberg, C. (1999). Autism and hearing loss. Journal of Autism and Developmental Disorders, 29, 349–357.

Rutherford, M. D., Baron-Cohen, S., & Wheelwright, S. (2002). Reading the mind in the voice: A study with normal adults and adults with Asperger syndrome and high functioning autism. Journal of Autism and Developmental Disorders, 32, 189–194.

Samson, F., Mottron, L., Jemel, B., Belin, P., & Ciocca, V. (2006). Can spectro-temporal complexity explain the autistic pattern of performance on auditory tasks? Journal of Autism and Developmental Disorders, 36, 65–76.

Sattler, J. (2001). Assessment of children: Cognitive applications. San Diego: Jerome M. Sattler Publisher.

Schultz, R. T., Gauthier, I., Klin, A., Fulbright, R. K., Anderson, A. W., Volkmar, F., Skudlarski, P., Lacadie, C., Cohen, D. J., & Gore, J. C. (2000). Abnormal ventral temporal cortical activity during face discrimination among individuals with autism and Asperger syndrome. Archives of General Psychiatry, 57, 331–340.

Talay-Ongan, A., & Wood, K. (2000). Unusual sensory sensitivities in autism: A possible crossroads. International Journal of Disability, Development and Education, 47, 201–212.

Teunisse, J. P., & de Gelder, B. (2003). Face processing in adolescents with autistic disorder: The inversion and composite effects. Brain and Cognition, 52, 285–294.

Walker-Andrews, A. (1988). Infants’ perception of the affordances of expressive behaviors. In C. Rovee-Collier (Ed.), Advances in infancy research. Norwood: Ablex.

Wechsler, D. (2000). WAIS-III Nederlandstalige bewerking. Technische handleiding. London: The Psychological Corporation.

Wechsler, D. (2002). Wechsler intelligence scale for children. Editie NL Handleiding. London: Psychological Corporation.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Cite this article

Groen, W.B., van Orsouw, L., Zwiers, M. et al. Gender in Voice Perception in Autism. J Autism Dev Disord 38, 1819–1826 (2008). https://doi.org/10.1007/s10803-008-0572-8
