Abstract

Assessors’ scores in performance assessments are known to be highly variable. Attempts to improve them through assessor training or changes to rating format have achieved only minimal gains, and the mechanisms that contribute to variability in assessors’ scoring remain unclear. This study investigated these mechanisms. We used a qualitative approach to study assessors’ judgements whilst they observed the same simulated, videoed performances of junior doctors obtaining clinical histories. Assessors commented on the performances both concurrently and retrospectively, provided scores, and took part in follow-up interviews. Data were analysed using principles of grounded theory. We developed three themes that help to explain how variability arises. Differential Salience: assessors paid attention to (or valued) different aspects of the performances to different degrees. Criterion Uncertainty: assessors’ criteria were constructed differently, were uncertain, and were influenced by recent exemplars. Information Integration: assessors described the valence of their comments in their own unique narrative terms, usually forming global impressions. Our results (whilst not precluding the operation of established biases) describe mechanisms by which assessors’ judgements become meaningfully different or unique. They have theoretical relevance to understanding the formative educational messages that performance assessments provide, and they give insight relevant to assessor training, to assessors’ ability to be observationally “objective”, and to the educational value of narrative comments (in contrast to numerical ratings).

References

Alves de Lima, A., Barrero, C., Baratta, S., Castillo Costa, Y., Bortman, G., Carabajales, J., et al. (2007). Validity, reliability, feasibility and satisfaction of the Mini-Clinical Evaluation Exercise (Mini-CEX) for cardiology residency training. Medical Teacher, 29(8), 785–790.

Alves de Lima, A., Conde, D., Costabel, J., Corso, J., & van der Vleuten, C. (2011). A laboratory study on the reliability estimations of the mini-CEX. Advances in Health Sciences Education. On-line ahead of print. doi:10.1007/s10459-011-9343-y.

Bargh, J. A., & Chartrand, T. L. (1999). The unbearable automaticity of being. American Psychologist, 54(7), 462–479.

Boshuizen, H. P. A., & Schmidt, H. G. (1992). On the role of biomedical knowledge in clinical reasoning by experts, intermediates and novices. Cognitive Science, 16, 153–184.

Boulet, J. R., McKinley, D. W., Norcini, J. J., & Whelan, G. P. (2002). Assessing the comparability of standardized patient and physician evaluations of clinical skills. Advances in Health Sciences Education: Theory and Practice, 7(2), 85–97.

Brutus, S. (2010). Words versus numbers: A theoretical exploration of giving and receiving narrative comments in performance appraisal. Human Resource Management Review, 20(2), 144–157.

BTS. (2003). British Thoracic Society guidelines for the management of suspected acute pulmonary embolism. Thorax, 58(6), 470–483.

Cook, D. A., & Beckman, T. J. (2009). Does scale length matter? A comparison of nine- versus five-point rating scales for the mini-CEX. Advances in Health Sciences Education: Theory and Practice, 14(5), 655–664.

Cook, D. A., Beckman, T. J., Mandrekar, J. N., & Pankratz, V. S. (2010). Internal structure of mini-CEX scores for internal medicine residents: Factor analysis and generalizability. Advances in Health Sciences Education: Theory and Practice, 15(5), 633–645.

Cook, D. A., Dupras, D. M., Beckman, T. J., Thomas, K. G., & Pankratz, V. S. (2009). Effect of rater training on reliability and accuracy of mini-CEX scores: A randomized, controlled trial. Journal of General Internal Medicine, 24(1), 74–79.

DeNisi, A. (1984). A cognitive view of the performance appraisal process: A model and research propositions. Organizational Behavior and Human Performance, 33(3), 360–396.

Donato, A. A., Pangaro, L., Smith, C., Rencic, J., Diaz, Y., Mensinger, J., et al. (2008). Evaluation of a novel assessment form for observing medical residents: A randomised, controlled trial. Medical Education, 42(12), 1234–1242.

Ericsson, K., & Simon, H. (1980). Verbal reports as data. Psychological Review, 87, 215–251.

Feldman, J. M. (1981). Beyond attribution theory: Cognitive processes in performance appraisal. Journal of Applied Psychology, 66(2), 127–148.

Fernando, N., Cleland, J., McKenzie, H., & Cassar, K. (2008). Identifying the factors that determine feedback given to undergraduate medical students following formative mini-CEX assessments. Medical Education, 42(1), 89–95.

Gingerich, A., Regehr, G., & Eva, K. W. (2011). Rater-based assessments as social judgments: Rethinking the etiology of rater errors. Academic Medicine: Journal of the Association of American Medical Colleges, 86(10 Suppl), S1–S7.

Ginsburgh, S., Mcilroy, J., Oulanova, O., Eva, K., & Regehr, G. (2010). Toward authentic clinical evaluation: Pitfalls in the pursuit of competency. Academic Medicine, 85(5), 780–786.

Govaerts, M. J. B., Schuwirth, L. W. T., Van der Vleuten, C. P. M., & Muijtjens, A. M. M. (2011). Workplace-based assessment: Effects of rater expertise. Advances in Health Sciences Education: Theory and Practice, 16(2), 151–165.

Govaerts, M. J. B., van der Vleuten, C. P. M., Schuwirth, L. W. T., & Muijtjens, A. M. M. (2007). Broadening perspectives on clinical performance assessment: Rethinking the nature of in-training assessment. Advances in Health Sciences Education: Theory and Practice, 12(2), 239–260.

Hawkins, R. E., Margolis, M. J., Durning, S. J., & Norcini, J. J. (2010). Constructing a validity argument for the mini-clinical evaluation exercise: A review of the research. Academic Medicine: Journal of the Association of American Medical Colleges, 85(9), 1453–1461.

Holmboe, E. S., Hawkins, R. E., & Huot, S. J. (2004). Effects of training in direct observation of medical residents’ clinical competence. Annals of Internal Medicine, 140, 874–881.

Holmboe, E. S., Huot, S., Chung, J., Norcini, J., & Hawkins, R. E. (2003). Construct validity of the miniclinical evaluation exercise (miniCEX). Academic Medicine: Journal of the Association of American Medical Colleges, 78(8), 826–830.

Jawahar, I. M., & Williams, C. R. (1997). Where all the children were above average: The performance appraisal purpose effect. Personnel Psychology, 50, 905–925.

Klimoski, R., & Inks, L. (1990). Accountability forces in performance appraisal. Organisational Behavior and human decision processes, 45, 194–208.

Kluger, A. N., & van Dijk, D. (2010). Feedback, the various tasks of the doctor, and the feedforward alternative. Medical Education, 44, 1166–1174.

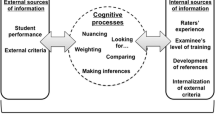

Kogan, J. R., Conforti, L., Bernabeo, E., Iobst, W., & Holmboe, E. (2011). Opening the black box of clinical skills assessment via observation: A conceptual model. Medical Education, 45(10), 1048–1060.

Kogan, J. R., Hess, B. J., Conforti, L. N., & Holmboe, E. S. (2010). What drives faculty ratings of residents’ clinical skills? The impact of faculty’s own clinical skills. Academic Medicine: Journal of the Association of American Medical Colleges, 85(10 Suppl), S25–S28.

Kogan, J. R., Holmboe, E. S., & Hauer, K. E. (2009). Tools for direct observation and assessment of clinical skills of medical trainees: A systematic review. JAMA: The Journal of the American Medical Association, 302(12), 1316–1326.

Kurtz, S., Silverman, J., Benson, J., & Draper, J. (2003). Marrying content and process in clinical method teaching: Enhancing the Calgary-Cambridge guides. Academic Medicine: Journal of the Association of American Medical Colleges, 78(8), 802–809.

Lurie, S. J., Mooney, C. J., & Lyness, J. M. (2009). Measurement of the general competencies of the accreditation council for graduate medical education: A systematic review. Academic Medicine: Journal of the Association of American Medical Colleges, 84(3), 301–309.

Margolis, M. J., Clauser, B. E., Cuddy, M. M., Ciccone, A., Mee, J., Harik, P., et al. (2006). Use of the mini-clinical evaluation exercise to rate examinee performance on a multiple-station clinical skills examination: A validity study. Academic Medicine: Journal of the Association of American Medical Colleges, 81(10 Suppl), S56–S60.

Martin, D. (2003). Martin’s Map: A conceptual framework for teaching and learning the medical interview using a patient-centred approach. Medical Education, 37, 1145–1153.

McLaughlin, K., Coderre, S., Mortis, G., Fick, G., & Mandin, H. (2007). Can concept sorting provide a reliable, valid and sensitive measure of medical knowledge structure? Advances in Health Sciences Education: Theory and Practice, 12(3), 265–278.

Newble, D. (2004). The metric of medical education techniques for measuring clinical competence: Objective structured clinical examinations. Medical Education, 44, 199–203.

NICE. (2010). Transient loss of consciousness (“blackouts”) management in adults and young people. National Institute for Health and Clinical Excellence. Retrieved December 9, 2011, from http://www.nice.org.uk/nicemedia/live/13111/50452/50452.pdf.

Nisbett, R. E., & Wilson, T. D. (1977). Telling more than we can know: Verbal reports on mental processes. Psychological Review, 84(3), 231–259.

Norcini, J. J. (2003). ABC of teaching and learning in medicine work based assessment. British Medical Journal, 326, 753–755.

Norcini, J. J. (2005). The mini clinical evaluation exercise. The Clinical Teacher, 2(1), 25–30.

Norcini, J. J., Blank, L. L., Arnold, G. K., & Kimball, H. R. (1997). Examiner differences in the mini-CEX. Advances in Health Sciences Education: Theory and Practice, 2(1), 27–33.

Pelgrim, E. A. M., Kramer, A. W. M., Mokkink, H. G. A., van den Elsen, L., Grol, R. P. T. M., & van der Vleuten, C. P. M. (2011). In-training assessment using direct observation of single-patient encounters: A literature review. Advances in Health Sciences Education: Theory and Practice, 16(1), 131–142.

Schleicher, D. J., Day, D. V., Mayes, B. T., & Riggio, R. E. (2002). A new frame for frame-of-reference training: Enhancing the construct validity of assessment centers. Journal of Applied Psychology, 87(4), 735–746.

Schuh, L. A., London, Z., Neel, R., Brock, C., Kissela, B. M., Schultz, L., et al. (2009). Education research: Bias and poor interrater reliability in evaluating the neurology clinical skills examination. Neurology, 73(11), 904–908.

SIGN. (2008). Management of acute upper and lower gastrointestinal bleeding. A national clinical guideline. Scottish Intercollegiate Guideline Network. Retrieved December 9, 2011, from http://www.sign.ac.uk/pdf/sign105.pdf.

Strauss, A., & Corbin, J. (1998). Basics of qualitative research techniques and procedures for developing grounded theory (2nd ed.). Thousand Oaks, California: Sage.

Streiner, D., & Norman, G. (2008). Health measurement scales (4th ed.). Oxford: Oxford University Press.

Thammasitboon, S., Mariscalco, M. M., Yudkowsky, R., Hetland, M. D., Noronha, P. A., & Mrtek, R. G. (2008). Exploring individual opinions of potential evaluators in a 360-degree assessment: Four distinct viewpoints of a competent resident. Teaching and Learning in Medicine, 20(4), 314–322.

van der Vleuten, C. P. M., & Schuwirth, L. W. T. (2005). Assessing professional competence: From methods to programmes. Medical Education, 39(3), 309–317.

Weller, J. M., Jolly, B., Misur, M. P., Merry, A. F., Jones, A., Crossley, J. G. M., et al. (2009). Mini-clinical evaluation exercise in anaesthesia training. British Journal of Anaesthesia, 102(5), 633–641.

Wilkinson, J. R., Crossley, James. G. M., Wragg, A., Mills, P., Cowan, G., & Wade, W. (2008). Implementing workplace-based assessment across the medical specialties in the United Kingdom. Medical Education, 42(4), 364–373.

Williams, R. G., Klamen, D. A., & McGaghie, W. C. (2003). Cognitive, social and environmental sources of bias in clinical performance ratings. Teaching and Learning in Medicine, 15(4), 270–292.

Wilson, T. D. (1994). The proper protocol: Validity and completeness of verbal reports. Psychological Science, 5(5), 249–252.

Woehr, D. J. (1994). Rater training for performance appraisal: A quantitative review. Journal of Occupational and Organizational Psychology, 67, 189–205.

Woolf, K., Haq, I., McManus, I. C., Higham, J., & Dacre, J. (2008). Exploring the underperformance of male and minority ethnic medical students in first year clinical examinations. Advances in Health Sciences Education: Theory and Practice, 13(5), 607–616.

Acknowledgments

Thanks to the foundation doctors and simulated patients who were featured in the study videos, to the physicians who helped in validation of the videos, to University Hospital of South Manchester NHS Foundation Trust for acting as the project’s sponsor, to the North Western Deanery for their endorsement and support and to all the research participants. Thanks also to the paper’s reviewers for their very helpful comments on the manuscript. No external funding was received for the study.

Conflicts of interest

None declared.

Ethical approval

Obtained from Manchester West Regional Ethics Committee, ref 09/H1014/72.

About this article

Cite this article

Yeates, P., O’Neill, P., Mann, K. et al. Seeing the same thing differently. Adv in Health Sci Educ 18, 325–341 (2013). https://doi.org/10.1007/s10459-012-9372-1