Abstract

This study investigates the effects of profound acquired unilateral deafness on the adult human central auditory system by analyzing long-latency auditory evoked potentials (AEPs) with dipole source modeling methods. AEPs, elicited by clicks presented to the intact ear in 19 adult subjects with profound unilateral deafness and monaurally to each ear in eight adult normal-hearing controls, were recorded with a 31-channel system. The responses in the 70–210 ms time window, encompassing the N1b/P2 and Ta/Tb components of the AEPs, were modeled by a vertically and a laterally oriented dipole source in each hemisphere. Peak latencies and amplitudes of the major components of the dipole waveforms were measured in the hemispheres ipsilateral and contralateral to the stimulated ear. The normal-hearing subjects showed significant ipsilateral–contralateral latency and amplitude differences, with contralateral source activities that were typically larger and peaked earlier than the ipsilateral activities. In addition, the ipsilateral–contralateral amplitude differences from monaural presentation were similar for left and for right ear stimulation. For unilaterally deaf subjects, the previously reported reduction in ipsilateral–contralateral amplitude differences based on scalp waveforms was also observed in the dipole source waveforms. However, analysis of the source dipole activity demonstrated that the reduced inter-hemispheric amplitude differences were ear dependent. Specifically, these changes were found only in those subjects affected by profound left ear unilateral deafness.

INTRODUCTION

The plasticity of the mammalian auditory system after experimentally induced hearing loss has been demonstrated in several studies. This functional reorganization is evidenced mainly by two kinds of significant changes in the central auditory system activity: (1) reorganization of frequency maps in the auditory cortex, and (2) alterations in neural responses and binaural interactions at various levels in the auditory pathways. These changes have been demonstrated in both the developing (Harrison et al. 1991; Kitzes 1984; Reale et al. 1987; Eggermont and Komiya 2000) and the mature (Popelár et al. 1994; Rajan et al. 1993; Robertson and Irvine 1989) auditory system.

Restricted damage to the cochlea has been shown to alter the organization of contralateral cortical frequency maps in several species of mammals. For example, Reale et al. (1987) measured neural responses from the auditory cortex in cats reared with a neonatal unilateral cochlear ablation and reported modified tonotopic maps ipsilateral to the intact ear. Robertson and Irvine (1989) assessed the plasticity of cortical frequency organization in adult guinea pigs with induced unilateral partial deafness and found that the contralateral auditory cortex had an expanded representation of frequencies adjacent to the range damaged by the cochlear lesion. Similar reorganization of cortical frequency maps in the primary cortex contralateral to the partially lesioned cochlea has been reported in adult cats by Rajan et al. (1993).

Several studies have also demonstrated that unilateral hearing loss alters the neuronal activation and binaural interactions in the auditory pathways. For example, Kitzes (1984) recorded responses from the inferior colliculus in gerbils with neonatal unilateral cochlear ablation and reported an increase in the excitatory responses on the ipsilateral side. In adult guinea pigs with induced hearing loss, neural responses recorded from both the auditory cortex and inferior colliculus ipsilateral to the intact ear show increased amplitudes and decreased thresholds (Popelár et al. 1994). Similarly, Reale et al. (1987) found reduced acoustic thresholds in the auditory cortex ipsilateral to the intact ear in cats with neonatal unilateral cochlear ablation.

Reorganization in adult humans following unilateral deafness has also been reported in a number of studies (Scheffler et al. 1998; Vasama and Mäkelä 1995, 1997; Vasama et al. 1995, 1998; Fujuki et al. 1998). For example, Scheffler et al. (1998) measured activity in the auditory cortex using functional magnetic resonance imaging (fMRI) and reported significantly smaller ipsilateral–contralateral activity differences in unilaterally deaf adults in comparison to normal-hearing adults. Using auditory evoked magnetic fields (AEFs), Vasama and Mäkelä (1995) reported that the stimulation of the intact ear after sudden sensorineural unilateral hearing loss generates AEFs with contributions from those cortical areas that play no role in the generation of AEFs in normal-hearing humans. These studies provide evidence of modified activation of the human central auditory pathways following unilateral hearing loss.

Ponton et al. (2001) also examined changes in cortical activation following unilateral deafness using evoked potentials. The results further characterized the changes from the pattern of asymmetrical (contralateral > ipsilateral amplitude) and asynchronous (contralateral earlier than ipsilateral) auditory system activation observed in normal-hearing subjects to a much more symmetrical and synchronous activation in the unilaterally deaf. Analyses of peak amplitude correlations suggested that the increased interhemispheric symmetry may be a consequence of gradual changes in the generators producing the N1 (approximately 100 ms peak latency) potential and that these changes continue for at least two years after the onset of hearing loss.

The present study was conducted to further assess modifications of central auditory system activation associated with profound unilateral deafness. The purpose of this analysis was to examine whether the side of deafness (left versus right ear) significantly affects the changes in cortical activation reported by Ponton et al. (2001). In a brain with symmetrical organization of function, it might reasonably be predicted that changes in cortical activation would be independent of the affected ear. However, it is well established that for more than 90% of the population, cortical processes of spoken language perception and production are asymmetrically represented in the left hemisphere of the brain (Zatorre et al. 1992; Belin et al. 2000). Therefore, the changes in cortical activation associated with unilateral deafness may be different for left versus right ear deafness (Bess et al. 1986).

For this analysis, the electrical potentials evoked by brief click trains were subjected to dipole modeling techniques to estimate the underlying current generators of these potentials (Mosher et al. 1992; Ponton et al. 1993, 2002; Scherg 1992). A mathematical model that describes the head as a 4-shell spherical volume conductor (Cuffin and Cohen 1979) and current sources as dipoles (Scherg and Von Cramon 1985; Snyder 1991) was used. The responses in the time window encompassing the N1b/P2 and Ta/Tb components (Näätänen and Picton 1987) were modeled using a pair of dipole sources, one oriented vertically and the other oriented laterally, in each hemisphere (Scherg and Von Cramon 1985). The parameters of the best-fit dipoles were then determined through spatiotemporal source modeling (STSM) techniques by using an iterative least-squares algorithm that fits the dipole-modeled potentials to the measured scalp potentials (Scherg and Von Cramon 1985, 1986; Snyder 1991). The source activity waveforms of the vertically and laterally oriented dipole sources were then used to measure the latencies and strengths of the ipsilateral and contralateral N1b/P2 and Ta/Tb components, respectively. Interhemispheric differences (IHDs) in these parameters were then compared for left and for right ear unilateral deafness across the normal-hearing and unilaterally deaf subjects. The subject data included in this analysis were the same as those described in Ponton et al. (2001).

MATERIALS AND METHODS

Subjects

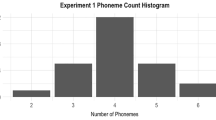

Nineteen adults with profound unilateral deafness (7 males, 12 females; mean age 47 years; range 16–68 years; 9 with left-sided and 10 with right-sided deficit) and eight normal-hearing adults (ages 25–38; mean age 32 years; 4 males and 4 females) served as subjects. In all subjects, audiometric pure-tone thresholds were assessed prior to auditory evoked potential (AEP) recordings. The unilaterally deaf subjects were otherwise healthy and the audiograms in their intact stimulated ears were normal [hearing thresholds ≤ 25 dB hearing level (HL) for frequencies ≤ 4 kHz]. The pure-tone average (mean threshold of 0.5, 1.0, and 2.0 kHz) was 7 dB HL and the mean speech discrimination score was 99% in the intact ears. All unilaterally deaf subjects had adult-onset deafness; most had been deaf for between one and four years prior to being tested (duration of deafness was less than one year in only 1 subject and more than 4 years in 4 subjects). The average duration of hearing loss prior to recordings was 2.4 years (range 0.5–7.8 years). All normal-hearing subjects had normal audiograms in both ears and no history of hearing deficit. Treatment of the subjects and execution of the studies were performed in accordance with the guidelines of the Declaration of Helsinki.

Evoked responses

Testing took place in an electrically shielded, sound-attenuated booth with the subjects seated in a comfortable reclining chair. Each stimulus presentation consisted of a brief train of ten 100 µs clicks, with successive clicks within a train separated by 2 ms. This 10-click train gave a unitary percept. Each subject was presented with 2000 such stimuli at an interstimulus interval of 510 ms. The stimuli were delivered to the ear through earphones at ≈70 dB nHL.

Each subject had 31 electrode positions marked on the head by an experienced technician in accordance with the international 10–20 system. These 31 positions are shown in Figure 1. The AEPs were recorded with reference to the Fpz electrode using a Neuroscan acquisition system. The ground electrode was placed approximately 2 cm to the right and 2 cm up from Fpz. Ocular movements were monitored on two different recording channels. Vertical eye movements were recorded by a pair of electrodes placed above and below the right eye. Horizontal eye movements were recorded by electrodes located on the outer canthus of each eye. A signal derived from the output of the module controlling stimulus presentation served as a trigger for sampling the EEG. The EEG data were recorded with a SynAmps 32-channel amplifier. The amplifier was set in DC mode and sampled the EEG at a rate of 1 kHz with bandpass filter settings from DC to 200 Hz. The data were recorded as single EEG epochs over an analysis window of 500 ms, which included 100 ms prior to stimulus onset. AEPs were recorded to monaural stimulation of each ear in each normal-hearing subject and to stimulation of the intact ear in each unilaterally deaf subject.
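The recording settings above fix the layout of each epoch. As a minimal sketch, the following maps post-stimulus latencies to sample indices in the original 1 kHz epochs (before any later resampling). It assumes the first sample of an epoch lies exactly 100 ms before stimulus onset; the function and constant names are hypothetical and only the numeric settings come from the text.

```python
# Hypothetical helper names; only the numeric settings are taken from the text.
SAMPLING_RATE_HZ = 1000   # EEG sampled at 1 kHz
EPOCH_MS = 500            # analysis window length
PRESTIM_MS = 100          # part of the window that precedes stimulus onset


def ms_to_samples(duration_ms, rate_hz=SAMPLING_RATE_HZ):
    """Convert a duration in milliseconds to a whole number of samples."""
    return round(duration_ms * rate_hz / 1000)


def latency_to_index(latency_ms, rate_hz=SAMPLING_RATE_HZ, prestim_ms=PRESTIM_MS):
    """Sample index of a post-stimulus latency (0 ms = stimulus onset).

    Assumes the epoch starts exactly prestim_ms before stimulus onset.
    """
    return ms_to_samples(latency_ms + prestim_ms, rate_hz)


samples_per_epoch = ms_to_samples(EPOCH_MS)   # points per channel and epoch
onset_index = latency_to_index(0)             # sample that marks stimulus onset
modeling_window = (latency_to_index(70), latency_to_index(210))
```

Under these assumptions each channel holds 500 points per epoch, which agrees with the 500 points per channel mentioned at the data-import step.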

Standard 10–20 locations of the 31-electrode configuration projected onto a sphere and the coordinate system used to describe these locations are depicted on the head views. The electrodes Fpz, T3, T4, and Oz form the equatorial plane and the vertex electrode Cz represents the north pole. In this system, the z axis passes through the vertex (Cz), the x axis points to the right (through T4), and the y axis points forward (through Fpz).

Offline, the single EEG epochs were baseline corrected and subjected to an automatic artifact rejection algorithm, wherein sweeps containing activity in any channel that exceeded ±150 µV were excluded from subsequent analysis. The remaining accepted sweeps (at least 1500) were corrected for vertical and horizontal eye movement contamination with a regression-based procedure in the Neuroscan analysis software and then averaged. The activity from all channels was then averaged together to generate an average reference channel; this activity was then subtracted from each of the individual recording channels. These average-referenced data were then bandpass filtered from 1 to 70 Hz.
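The offline steps can be illustrated with a short sketch. It is not the Neuroscan implementation: the ocular regression and the bandpass filter are omitted, epochs are assumed to be plain lists of channels in microvolts, and all function names are hypothetical.

```python
def baseline_correct(epoch, n_prestim):
    """Subtract each channel's mean pre-stimulus value from that channel."""
    corrected = []
    for channel in epoch:
        baseline = sum(channel[:n_prestim]) / n_prestim
        corrected.append([v - baseline for v in channel])
    return corrected


def is_clean(epoch, limit_uv=150.0):
    """True if no sample in any channel exceeds +/- limit_uv."""
    return all(abs(v) <= limit_uv for channel in epoch for v in channel)


def average_epochs(epochs):
    """Point-by-point mean across epochs (each epoch is channels x samples)."""
    n = len(epochs)
    n_ch, n_s = len(epochs[0]), len(epochs[0][0])
    return [[sum(e[c][t] for e in epochs) / n for t in range(n_s)]
            for c in range(n_ch)]


def average_reference(avg):
    """Subtract the mean over channels from every channel at each time point."""
    n_ch, n_s = len(avg), len(avg[0])
    ref = [sum(avg[c][t] for c in range(n_ch)) / n_ch for t in range(n_s)]
    return [[avg[c][t] - ref[t] for t in range(n_s)] for c in range(n_ch)]


def preprocess(epochs, n_prestim, limit_uv=150.0):
    """Baseline-correct, reject artifacts, average, then re-reference."""
    corrected = [baseline_correct(e, n_prestim) for e in epochs]
    accepted = [e for e in corrected if is_clean(e, limit_uv)]
    if not accepted:
        raise ValueError("all epochs were rejected")
    return average_reference(average_epochs(accepted)), len(accepted)
```

After average referencing, the channels sum to zero at every time point, which is a convenient sanity check on the output.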

Dipole source analyses — spatiotemporal source modeling

Averaged response data were imported into the Brain Electric Source Analysis software (BESA, MEGIS Software, Munich, Germany). During importation the data were spline interpolated and resampled, reducing the initial 500 points to 128 points per channel, and then bandpass filtered from 1 to 50 Hz (6 dB/octave roll-off). The resulting data were then subjected to dipole source analyses. For detailed descriptions of source analysis, refer to Cuffin and Cohen (1979), Scherg and Von Cramon (1985, 1986), and Snyder (1991).

A 4-shell spherical head model, with an outer radius of 9 cm and with standard thickness and conductivity values for the various tissue types adapted from Cuffin and Cohen (1979), was selected as the volume conductor model for all subjects. The standard 10–20 positions of the 31 electrodes (projected onto a sphere) and the spherical coordinate system used in BESA are shown in Figure 1.

The fitting of the evoked potential data from multiple scalp electrodes can be performed either for a single time point or over a time window encompassing multiple data points. The latter modeling approach is termed spatiotemporal source modeling (STSM) since it uses both spatial and temporal information in the fitting process. In general, STSM assumes fixed dipole locations and variable dipole orientations over the analysis time window (Scherg and Von Cramon 1985). The changes over time of the dipole strength at the source location are then represented by the dipole source waveforms (Scherg and Von Cramon 1985).

Regional current dipole sources (Scherg and Von Cramon 1985) were used as a first approximation to the cortical generators of the AEPs. A regional dipole source is a configuration of three mutually orthogonal dipoles with a common location. According to Scherg and Von Cramon (1985), the regional dipole model may be more appropriate for modeling neural activity generated in highly folded and functionally related cortical areas. Consistent with previous studies (Scherg and Von Cramon 1985; Ponton et al. 1993, 2002), we used a pair of regional dipoles as the initial source model, with one dipole in each hemisphere, since afferents from each ear (i.e., each cochlear nucleus) are known to project both contralaterally and ipsilaterally. Figure 2 shows the bilaterally symmetrical regional dipole model used in the present study, with each regional dipole consisting of three mutually orthogonal dipoles oriented along the x, y, and z axes and sharing the same location. The odd numbers denote source components in the left hemisphere, while even numbers denote the components in the right hemisphere.

Schematic representation of the bilaterally symmetrical regional dipole model used in the present study, with each regional dipole comprising three dipoles sharing the same location and oriented in mutually orthogonal directions. The odd numbers denote source components in the left hemisphere, while even numbers denote the components in the right hemisphere.

Dipole parameters were determined in BESA by a nonlinear, iterative, least-squares fitting procedure using the simplex algorithm for the spatial parameters (location) and a direct linear approach for the temporal parameters (magnitude). Dipole locations in the vicinity of the auditory cortex were provided as starting estimates to the fitting procedure in order to minimize the possibility of the algorithm getting trapped at a local minimum. The adequacy of the final fit was assessed by the residual variance (RV) criterion (Snyder 1991).
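A residual variance measure of this kind can be sketched as follows. The normalization shown (unexplained signal power as a percentage of total measured power over all channels and time points in the fit window) is a common convention and is assumed here; the exact definition used by BESA is not given in the text, and the function name is hypothetical.

```python
def residual_variance(measured, modeled):
    """Percentage of measured signal power not explained by the model.

    Both arguments are channels x samples lists covering the fit window.
    """
    unexplained = 0.0
    total = 0.0
    for ch_measured, ch_modeled in zip(measured, modeled):
        for v, m in zip(ch_measured, ch_modeled):
            unexplained += (v - m) ** 2
            total += v ** 2
    if total == 0.0:
        raise ValueError("measured data contain no signal power")
    return 100.0 * unexplained / total
```

A perfect fit gives 0%, and a model that predicts zero potential everywhere gives 100%.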

Details of the estimation procedure

The model analysis was restricted to the 70–210 ms period to specifically characterize neural events contributing to the auditory N1b/P2- and T-complex activities. Although the superior temporal gyrus is asymmetrical in humans, the constraint of hemispheric symmetry was imposed (with the two regional dipoles forming mirror images of each other with respect to the y–z plane) to simplify the final source model solution. The symmetry constraint also reduces the number of parameters to be estimated in the nonlinear minimization step. In several subjects, the mastoid electrodes (M1, M2) were excluded because of excessive noise in the scalp waveforms at these sites. This reduces the residual variance of the fit, while dipole locations and source moments are almost unaffected because of the small number of sources and use of symmetry constraints. In a subsequent step, the symmetry constraint was released and the locations of the two regional sources were allowed to change independent of each other. With the exception of two subjects, the final locations of both regional sources were within 1 cm of their previous best-fit locations. Furthermore, no significant interhemispheric differences in locations of the regional sources were observed in any direction. Based on these findings, the hemispheric symmetry constraint was imposed on the regional source locations for all subsequent analysis. The source waveforms of the three dipoles of each regional source were then estimated by a direct linear approach.
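The symmetry constraint and the subsequent check on released locations can be sketched as below. With mirror symmetry about the y–z plane, only one location (three coordinates) has to be fitted instead of two (six coordinates). Coordinates follow the head coordinate system described earlier (x to the right, y forward, z up); the function names and the example values in the comments are hypothetical.

```python
import math


def mirror_across_yz(location_cm):
    """Mirror a source location across the y-z (midsagittal) plane."""
    x, y, z = location_cm
    return (-x, y, z)


def symmetric_pair(right_location_cm):
    """Left and right source locations implied by one fitted location."""
    return mirror_across_yz(right_location_cm), tuple(right_location_cm)


def shift_cm(before, after):
    """Euclidean distance between two locations in cm."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(after, before)))


def within_tolerance(before, after, tol_cm=1.0):
    """True if a re-fitted location moved less than tol_cm (1 cm in the text)."""
    return shift_cm(before, after) < tol_cm


# Example with made-up coordinates: a right-hemisphere source at x = 4.5 cm
# implies a left-hemisphere source at x = -4.5 cm with the same y and z.
left, right = symmetric_pair((4.5, 0.5, 1.0))
```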

We then attempted to orient the dipoles of each regional source such that most of the activity could be described by only two of the three dipoles that formed each regional source. Previous studies have indicated that the N1b/P2 complex has a major contribution from a tangentially oriented source on the superior surface of the temporal lobe within the Sylvian fissure (Ponton et al. 1993, 2002; Scherg and Von Cramon 1985), while the T-complex is possibly due to a laterally oriented source on the lateral surface of the temporal lobe (Tonnquist–Uhlen et al., in press). As shown in Figure 2, the temporal lobe is angled downward in the head. To estimate the natural orientation of the tangential sources in the temporal lobes, the regional sources were rotated around the medial–lateral axis (x axis) until the amplitude of the N1b/P2 peak-to-peak activity in dipoles 1 and 2 was maximized. Even though dipoles 1 and 2 may no longer be oriented vertically or have symmetrical orientations, they are still in the y–z plane. For this reason, we shall continue to refer to them as vertical dipoles. In cases where the source location is close to the coronal midline, these dipoles are also approximately tangentially oriented. After rotating the vertical dipoles to their optimal orientation where N1b/P2 activity was maximized, the orientations of the laterally oriented dipoles 3 and 4 were optimized independently in each hemisphere to further reduce the residual variance of the fit. With the exception of 3 subjects, the final orientations were within 10° of the previous lateral orientation and the maximum difference between the orientations in the two hemispheres was only 14°. This step typically lowered the residual variance (RV) of the fit by only about 1%–2%. Based on these results, we fixed the orientation of both dipoles 3 and 4 to be along the medial–lateral axis. 
In all subsequent analysis, source waveforms of the vertical dipoles were used to compute all parameters for the ipsilateral and contralateral N1b/P2 complexes, while the source waveforms of dipoles 3 and 4 (oriented along the medial–lateral axis) were used to compute all parameters for the ipsilateral and contralateral T complexes.
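The rotation step can be illustrated with a simple grid search. Because the three dipoles of a regional source are orthogonal and share one location, the waveform of a dipole tilted by an angle θ within the y–z plane is a linear combination of the y- and z-oriented source waveforms. The sketch below assumes that positive θ tilts the vertical dipole from +z toward +y and, as a simplification, takes the peak-to-peak value over the whole supplied waveform rather than within the N1b and P2 windows. All names are hypothetical.

```python
import math


def rotate_about_x(s_y, s_z, theta_deg):
    """Source waveforms after rotating the y/z dipole pair about the x axis.

    Returns (tilted_vertical, orthogonal_residual).
    """
    theta = math.radians(theta_deg)
    c, s = math.cos(theta), math.sin(theta)
    tilted = [c * z + s * y for y, z in zip(s_y, s_z)]
    residual = [c * y - s * z for y, z in zip(s_y, s_z)]
    return tilted, residual


def peak_to_peak(waveform):
    """Difference between the largest and smallest value of a waveform."""
    return max(waveform) - min(waveform)


def best_tilt(s_y, s_z, step_deg=1.0):
    """Tilt angle in [-90, 90) that maximizes the peak-to-peak moment."""
    best_angle, best_ptp = None, -1.0
    n_steps = int(round(180.0 / step_deg))
    for k in range(n_steps):
        angle = -90.0 + k * step_deg
        tilted, _ = rotate_about_x(s_y, s_z, angle)
        ptp = peak_to_peak(tilted)
        if ptp > best_ptp:
            best_angle, best_ptp = angle, ptp
    return best_angle, best_ptp
```

Only half a turn needs to be searched, since rotating by a further 180° merely inverts the waveform and leaves the peak-to-peak value unchanged.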

Latency and amplitude parameters

Peaks were defined as the maximum positivity or negativity within a particular latency window of specific source waveforms. The waveforms of the vertically oriented dipoles (1 and 2) were used to identify the N1b (70–120 ms) and P2 (140–210 ms) peaks, while the waveforms of the laterally oriented dipoles (3 and 4) were used to identify the Ta (70–130 ms) and Tb (110–180 ms) peaks. Peak-to-peak dipole moments (amplitudes) were also computed for the N1b/P2 complex and the Ta/Tb complex of the ipsilateral and contralateral sources. We used peak-to-peak amplitude measures, as opposed to peak-to-baseline measures, because it was sometimes difficult to clearly assess the individual peak-to-baseline amplitudes of all peaks in both hemispheres. Peak latency was defined as the time from the stimulus onset to the point used to calculate the amplitude measures (i.e., the most positive/negative point within the latency window). Furthermore, the RMS amplitude of the net dipole moment of each regional source over the 70–210 ms time window was also computed.
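These definitions translate directly into a small peak-picking routine. The sketch below assumes that N1b and Tb appear as negative deflections and P2 and Ta as positive deflections in the source waveforms, and that the RMS of the net moment combines the three orthogonal dipoles of a regional source as a vector magnitude; both points are interpretations rather than statements from the text, and the function names are hypothetical.

```python
import math

# Latency windows in ms from the text; polarity (+1/-1) is an assumption.
WINDOWS = {
    "N1b": ((70, 120), -1),
    "P2": ((140, 210), +1),
    "Ta": ((70, 130), +1),
    "Tb": ((110, 180), -1),
}


def pick_peak(times_ms, waveform, window_ms, polarity):
    """Return (latency_ms, amplitude) of the extreme value inside a window."""
    lo, hi = window_ms
    in_window = [(t, v) for t, v in zip(times_ms, waveform) if lo <= t <= hi]
    if not in_window:
        raise ValueError("no samples fall inside the latency window")
    chooser = max if polarity > 0 else min
    return chooser(in_window, key=lambda tv: tv[1])


def peak_to_peak_moment(first_peak, second_peak):
    """Absolute amplitude difference between two picked peaks."""
    return abs(second_peak[1] - first_peak[1])


def rms_net_moment(times_ms, components, window_ms=(70, 210)):
    """RMS of the net (vector) moment of a regional source within a window."""
    lo, hi = window_ms
    power = [sum(c[i] ** 2 for c in components)
             for i, t in enumerate(times_ms) if lo <= t <= hi]
    if not power:
        raise ValueError("no samples fall inside the window")
    return math.sqrt(sum(power) / len(power))
```

For example, `pick_peak(times, vertical_waveform, *WINDOWS["N1b"])` returns the N1b latency and amplitude for one hemisphere, where `times` and `vertical_waveform` stand for the caller's own data.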

Interhemispheric differences (IHD)

The above amplitude and latency measures were then compared between the ipsilateral and contralateral hemispheres. Interhemispheric amplitude difference (IHAD) was expressed as a percentage of the summed values over hemispheres, i.e., IHAD = 100 × (CA − IA)/(CA + IA), where CA and IA denote the amplitude values in the hemisphere contralateral and ipsilateral, respectively, to the stimulated ear. An IHAD value of zero denotes full symmetry; positive values denote larger contralateral responses and negative values denote larger ipsilateral responses. Interhemispheric latency differences (IHLD) for each of the peaks (N1b, P2, Ta, Tb) were expressed as IHLD = IL − CL, where CL and IL denote the latency values of the corresponding peaks in the hemisphere contralateral and ipsilateral, respectively, to the stimulated ear.
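Both indices are simple to compute. The following sketch implements the two definitions as stated; the function names and the numbers in the usage lines are illustrative only and are not data from the study.

```python
def ihad_percent(contra_amp, ipsi_amp):
    """Interhemispheric amplitude difference: 100 * (CA - IA) / (CA + IA)."""
    total = contra_amp + ipsi_amp
    if total == 0:
        raise ValueError("summed amplitude is zero; IHAD is undefined")
    return 100.0 * (contra_amp - ipsi_amp) / total


def ihld_ms(ipsi_latency_ms, contra_latency_ms):
    """Interhemispheric latency difference: IL - CL (positive = contra earlier)."""
    return ipsi_latency_ms - contra_latency_ms


# Illustrative values only: a contralateral moment three times the
# ipsilateral one, and a contralateral peak 10 ms earlier.
example_ihad = ihad_percent(3.0, 1.0)
example_ihld = ihld_ms(110.0, 100.0)
```

Because IHAD is normalized by the summed amplitude, it is bounded between −100% and +100% for non-negative amplitudes and is insensitive to overall response size, which makes it comparable across subjects.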

RESULTS

In the following sections, ipsilateral and contralateral refer to the side that is the same as or opposite to, respectively, the stimulated ear. The latency and amplitude of a specific peak denote the latency and dipole strength, respectively, of that peak in the dipole source activity waveform. All interhemispheric differences in latencies and amplitudes then refer to the differences between the latencies and strengths of the ipsilateral and contralateral dipole sources.

Dipole source locations and orientations

Figure 3 shows the scalp waveforms for a normal-hearing subject with monaural stimulation of the left ear. The N1b/P2 complex is apparent in several channels. It is largest at Cz, declines in amplitude away from the vertex, and is inverted at occipital recording sites. The T-complex is apparent in the scalp waveforms at the temporal electrodes T3 and T4.

Figure 4 shows the locations of the best-fit dipoles superimposed on the corresponding head views for all normal-hearing (monaural left and monaural right stimulation) and unilateral deaf (monaural stimulation of the intact ear) subjects. Since a symmetrical regional dipole source model was used, the locations are shown at homologous positions in the two hemispheres. All source locations are clustered in the region of the superior temporal lobe of the head model. Table 1 gives the mean location coordinates of the regional source in the right hemisphere. Between-subject variability (standard deviation) was less than 8.6 mm along each coordinate axis. Significant differences in source locations were not found either between the normal-hearing and unilateral deaf groups or between the two stimulation sides in the medial–lateral (x) and anterior–posterior (y) directions. The only significant difference was found (p = 0.03) in the superior–inferior direction.

Locations of the best-fit dipoles for all the normal-hearing (monaural left and right stimulation) and unilaterally deaf (monaural stimulation of the intact ear) subjects used in the present study superposed on the corresponding head views. No significant differences in locations were found between groups or between sides of stimulation.

The regional sources were then rotated around the medial–lateral axis until the strength of the N1b/P2 activity in dipoles 1 and 2 was maximized. The resulting orientation of these dipoles was at a mean angle of approximately 29° and 25° from the vertical in the head model in the normal-hearing and unilateral deaf population, respectively. Differences in the optimal orientations of dipoles 1 and 2 between normal-hearing and unilaterally deaf subjects were also not significant (not shown in Table 1). Figure 5a shows an example of this optimally tilted, final dipole configuration for a normal-hearing subject (monaural left ear stimulation). In this configuration, dipole sources 1 and 2 are oriented almost normal to the superior surface of the temporal lobe in the head; dipole sources 3 and 4 are still oriented laterally (along the direction of the x-axis) toward the temporal electrodes T3 and T4; while dipole sources 5 and 6 are in the y–z plane, oriented at right angles (clockwise) to dipoles 1 and 2.

a. The optimally tilted final dipole configuration for one normal-hearing subject (monaural left ear stimulation). In this configuration, dipoles 1 and 2 are approximately normal to the superior surface of the temporal lobe (N1b/P2), while dipoles 3 and 4 are laterally oriented (T-complex). b. Estimated source waveforms of the dipole sources in a. Dipoles 1 and 2 reflect mostly the N1b/P2 complex activity, while the laterally oriented dipoles 3 and 4 reflect mostly the T-complex activity. Dipoles 5 and 6 reflect small residual activity. The contralateral sources show shorter latencies and larger amplitudes than the ipsilateral sources.

Dipole source waveforms

Figure 5b depicts the estimated source waveforms of the dipoles shown in Figure 5a. Within both hemispheres, a distinct N1b/P2 complex is apparent in the source waveforms of dipoles 1 and 2. The laterally oriented dipoles 3 and 4 show T-complex activity, which includes a positive peak at approximately 100 ms (Ta) and a negative peak at about 150 ms (Tb). A second prominent peak at about 220 ms is also seen in source waveforms 3 and 4. This is probably the P220 component generated by a radial source (Verkindt et al. 1994), which explains its presence in the source waveforms of dipoles 3 and 4. We did not include this second peak in any data analyses. Source waveforms of dipoles 5 and 6 reflect small residual activity.

Latency and amplitude parameters

Group differences

Table 2A lists the mean latencies in the contralateral and ipsilateral hemispheres as well as the mean interhemispheric latency difference (IHLD) values of the different peaks in the normal-hearing and unilaterally deaf subjects. In the normal-hearing subjects, the peak latencies of N1b, P2, Ta, and Tb are all earlier in the hemisphere contralateral to the stimulated ear with mean (±SD) IHLD of 14.4 (±10.8)**, 7.7 (±6.4)*, 8.3 (±9.2)**, and 6.8 (±8.8) ms, respectively (** and * denote p < 0.01 and p < 0.05 significance levels, respectively). In the unilaterally deaf subjects, the peak latencies of N1b, P2, Ta, and Tb are about the same in both hemispheres with mean (±SD) IHLD of 1.9 (±11.2), 0.2 (±14.8), 0.9 (±8.7), and 0.4 (±10.3) ms, respectively. The normal-hearing and unilateral deaf groups differed significantly in IHLD values for N1b (p < 0.01)** and Ta (p < 0.05)*, while IHLD values for P2 (p < 0.06) and Tb (p < 0.06) were not significantly different.

Table 2B lists the root-mean-square (RMS) value of the dipole moment (units of nanoampere meter or nA m) computed in the 70–210 ms interval and the peak-to-peak dipole moment values of the N1b/P2 and Ta/Tb complexes. In the normal-hearing subjects, the RMS and peak-to-peak amplitudes of N1b/P2 and Ta/Tb are all larger in the hemisphere contralateral to the ear of stimulation, with mean (±SD) interhemispheric amplitude differences (IHAD %) of 24.5 (±10.8)**, 31.0 (±14.8)**, and 20.6 (±23.0), respectively. In the unilaterally deaf subjects, the RMS and peak-to-peak amplitudes of N1b/P2 and Ta/Tb are also marginally larger in the hemisphere contralateral to the ear of stimulation, with mean (±SD) IHAD % of 12.6 (±11.3)*, 17.0 (±23.1), and 7.1 (±24.0), respectively. The IHAD values of the normal-hearing and unilaterally deaf groups differed significantly for RMS (p < 0.01)**, N1b/P2 (p < 0.05)*, and Ta/Tb (p < 0.05)*.

The results of Table 2 are summarized in Figure 6a and b, which show the mean IHLD and IHAD values for each group of subjects, respectively. Major peaks in the contralateral responses are earlier and larger than the corresponding peaks in the ipsilateral responses for the normal-hearing group. In contrast, the major peaks in the unilaterally deaf group show similar latencies and reduced amplitude differences between hemispheres.

a. Mean interhemispheric latency differences (IHLD) = (IL − CL) for the normal-hearing (n = 16) and unilaterally deaf subjects (n = 19), where IL and CL denote the latency of the peaks in the source waveforms of the ipsilateral and contralateral hemispheres, respectively. The contralateral latencies are all shorter in the normal-hearing group, while interhemispheric differences are close to zero in the unilaterally deaf group. b. Mean interhemispheric amplitude differences (IHAD) = 100% × (CA − IA)/(CA + IA) for the two groups, where IA and CA denote the amplitudes (dipole moments) in the ipsilateral and contralateral hemispheres, respectively. The contralateral amplitudes are significantly larger than the ipsilateral amplitudes in the normal-hearing group; these differences are significantly reduced in the unilaterally deaf group.

Ear differences

Table 3A lists the IHLD values for the various peaks separated by the side of stimulation (monaural left vs. right stimulation). No significant differences are seen between IHLD values for left and right stimulation within either group of subjects. It should be noted that while data from each of the normal-hearing subjects were acquired under both monaural left and monaural right stimulation, the unilaterally deaf subjects were tested under monaural stimulation of only the intact ear (right ear in 10 subjects and left ear in 9 subjects). Thus, the data for comparing IHLD values for left-sided and right-sided stimulation originate from different subjects in the unilaterally deaf group.

Table 3B lists the IHAD values separated by the side of stimulation. No significant differences were found between IHAD values for monaural left and monaural right stimulation in the normal-hearing subjects. However, in the unilateral deaf group, the IHAD values for RMS moment (p < 0.05)*, N1b/P2 (p < 0.05)*, and Ta/Tb (p < 0.01)** are all significantly different between monaural left and monaural right stimulation.

The results of Table 3 are also summarized in Figure 7a and b, which show the mean IHLD and IHAD values for the normal-hearing and unilaterally deaf subjects separated by the side of stimulation. While IHLD values within each group do not differ significantly with the side of stimulation, the IHAD values of the unilaterally deaf subjects appear to depend on the ear of stimulation, with those stimulated in the right ear showing much reduced IHAD values (i.e., almost equal amplitudes in both hemispheres). Figures 8 and 9 show the individual source waveforms of the ipsilateral and contralateral vertical dipoles (N1b/P2) for all subjects. The numbers on the right side of each waveform indicate the IHAD values (%). These figures also clearly show that unilaterally deaf subjects with monaural stimulation of the right ear have much reduced N1b/P2 IHADs compared with the normal-hearing subjects with monaural stimulation of either side or the unilaterally deaf subjects with monaural stimulation of the left ear. The results for the radial dipoles were very similar and are not shown here.

a. Mean IHLD values for the normal-hearing and unilaterally deaf subjects separated by ear of stimulation. The two groups of subjects show similar IHLD values irrespective of the side of stimulation. b. Mean IHAD values for the normal-hearing and unilaterally deaf subjects separated by ear of stimulation. IHAD values of the unilaterally deaf subjects appear to depend on the ear of stimulation, with those stimulated in the right ear showing much reduced IHAD values (i.e., almost equal amplitudes in both hemispheres).

Source waveforms of the ipsilateral (thin lines) and contralateral (thick lines) vertical dipoles (N1b/P2) in the normal-hearing subjects for monaural stimulation of the left and right ear. The numbers 1–8 on the left side of each pair of waveforms indicate the subjects, while the numbers on the right side of each waveform indicate the corresponding IHAD values (%).

Source waveforms of the ipsilateral (thin lines) and contralateral (thick lines) vertical dipoles (N1b/P2) in the unilaterally deaf subjects for monaural stimulation of the left and right ear. The numbers on the left side of each pair of waveforms indicate the subjects, while the numbers on the right side of each waveform indicate the corresponding IHAD values (%).

DISCUSSION

We investigated the effects of profound acquired unilateral deafness with dipole source modeling methods. The N1b/P2 and Ta/Tb components of the AEPs were modeled by a vertically and a laterally oriented dipole source in each hemisphere. The normal-hearing subjects showed significant ipsilateral–contralateral latency and amplitude differences for the source waveforms, with contralateral source activities that were typically larger and peaked earlier than the ipsilateral activities regardless of the ear that was stimulated. For unilaterally deaf subjects, analysis of the source dipole activity demonstrated reduced interhemispheric amplitude differences that were ear dependent, whereas latencies were not different across hemispheres. The changes in amplitude were found only in those subjects affected by profound left ear unilateral deafness.

Dipole source analysis approach

The aim of this study was to determine if profound unilateral deafness has any effects on the central auditory system that can be detected using electrophysiological recordings of cortical responses to basic click train stimulation. Auditory evoked activity was modeled using bilaterally symmetrical regional dipoles. Peak latencies and amplitudes of late-latency AEP components were estimated from the corresponding source waveforms of the best-fit dipoles. Interhemispheric differences in these measures were computed for all normal-hearing and unilaterally deaf subjects and used to assess effects of unilateral deafness on central auditory system activity.
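As a rough illustration of how interhemispheric measures of this kind can be derived from a pair of source waveforms, the sketch below picks the largest-magnitude peak within a latency window and forms a latency difference and a percentage amplitude difference. The function names, the toy waveforms, and in particular the normalization of the amplitude difference by the contralateral peak are assumptions made for illustration; they are not necessarily the definitions used in this study.

```python
def peak_in_window(times_ms, waveform, t_min, t_max):
    """Return (latency_ms, amplitude) of the largest-magnitude sample in [t_min, t_max]."""
    candidates = [(abs(a), t, a) for t, a in zip(times_ms, waveform) if t_min <= t <= t_max]
    if not candidates:
        raise ValueError("no samples fall inside the analysis window")
    _, latency, amplitude = max(candidates)
    return latency, amplitude


def interhemispheric_differences(times_ms, contra, ipsi, t_min, t_max):
    """Latency difference (ms) and amplitude difference (%) between hemispheres."""
    lat_c, amp_c = peak_in_window(times_ms, contra, t_min, t_max)
    lat_i, amp_i = peak_in_window(times_ms, ipsi, t_min, t_max)
    # Positive when the contralateral peak occurs earlier than the ipsilateral peak.
    ihld_ms = lat_i - lat_c
    # ASSUMPTION: amplitude difference expressed relative to the contralateral peak.
    ihad_pct = 100.0 * (abs(amp_c) - abs(amp_i)) / abs(amp_c)
    return ihld_ms, ihad_pct


# Toy source waveforms (arbitrary units), sampled every 10 ms from 70 to 210 ms.
times_ms = list(range(70, 211, 10))
contra = [-10.0 if t == 100 else -2.0 for t in times_ms]  # larger, earlier peak
ipsi = [-7.0 if t == 110 else -2.0 for t in times_ms]     # smaller, later peak

ihld_ms, ihad_pct = interhemispheric_differences(times_ms, contra, ipsi, 70, 210)
print(f"IHLD = {ihld_ms} ms, IHAD = {ihad_pct:.1f} %")
```

With these toy values the contralateral peak leads by one sample and is larger in magnitude, which mirrors the qualitative pattern described for the normal-hearing subjects.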

As noted by Näätänen and Picton (1987) and demonstrated by the intracranial recordings of a number of investigators (e.g., Liègeois–Chauvel et al. 1991, 1994, 1999; Steinschneider et al. 1999), the N1 potential as recorded from the scalp represents the sum of three or more generators. It is certainly the case that the regional dipole source model represents a first approximation and a center of gravity for this complex generator activity. However, the analyses applied to the data reported in this study represent a significant advance over studies that limit their analyses to a selected very small subset of all recording electrodes used in an experiment.

It may be argued that IHDs in latencies and amplitudes in our results can arise primarily from IHDs in locations/orientations of the dipole sources. This possibility has been addressed in our source model by independently optimizing the locations and orientations of the sources in the two hemispheres. Based on the results of these optimizations, hemispheric symmetry constraints were imposed on (1) locations of the regional sources and (2) orientations of the laterally oriented dipoles. The vertical dipoles were allowed to have different orientations in the hemispheres. In order to assess the effects of the two symmetry constraints used in the study, analyses were also done without imposing any constraints. The IHDs in latencies/amplitudes changed, on average, by 1.1%–1.9% from their previous values obtained with the constrained source model. This suggests that the constraints make the source model simpler without sacrificing accuracy of IHD measures. This was further supported by the fact that the IHDs in RMS amplitude measures, which take into account all the components of each regional source and are independent of the component orientations, are of the same order as IHDs in amplitudes of the individual peaks.
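The orientation independence of the RMS measure can be checked numerically. The sketch below assumes a regional source described by two orthogonal components (a simplification; the function names and toy values are illustrative and are not taken from the study), rotates the component axes, and confirms that the root-sum-square moment at each time point is unchanged even though the individual component amplitudes change.

```python
import math


def rotate_components(c1, c2, angle_deg):
    """Re-express two orthogonal source components in axes rotated by angle_deg."""
    th = math.radians(angle_deg)
    r1 = [math.cos(th) * a + math.sin(th) * b for a, b in zip(c1, c2)]
    r2 = [-math.sin(th) * a + math.cos(th) * b for a, b in zip(c1, c2)]
    return r1, r2


def source_magnitude(c1, c2):
    """Root-sum-square of the two components at each time point."""
    return [math.hypot(a, b) for a, b in zip(c1, c2)]


# Toy component waveforms (arbitrary units); values are hypothetical.
vertical = [0.0, -4.0, -9.0, 3.0, 6.0]
lateral = [1.0, 2.0, -3.0, -5.0, 0.5]

rot_v, rot_l = rotate_components(vertical, lateral, 30.0)
original = source_magnitude(vertical, lateral)
rotated = source_magnitude(rot_v, rot_l)

# The combined magnitude is invariant under rotation of the component axes.
max_change = max(abs(a - b) for a, b in zip(original, rotated))
print(f"largest change in combined magnitude: {max_change:.2e}")
```

A measure built from the combined magnitude therefore cannot be biased by how the component orientations were fitted in each hemisphere, which is why agreement between the RMS-based and peak-based IHDs supports the constrained model.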

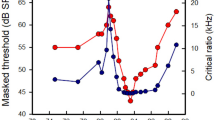

Stimulus factors

Unlike most studies of human auditory cortical activity, click trains were used as stimuli in this investigation. In our previous studies of cochlear implant patients (e.g., Ponton et al. 1993, 1996a,b, 1999, 2000), auditory stimulation was produced by applying current pulse trains to the implant hardware. To parallel this as closely as possible, acoustic click trains were used as stimuli for our normal-hearing and unilaterally deaf subjects. The spectral content of the click is very broad; for a 100 µs click, the first energy null occurs at 10 kHz. This raises the question of whether a different result might have been obtained if more frequency-specific (i.e., high versus low tones) tonal stimuli had been used to generate the evoked potentials. Evoked potential and current source density data recorded in monkeys by Reser et al. (2000) indicate a differential representation of high- and low-frequency units in the binaural interaction columns of area AI of primary auditory cortex. Low-frequency areas in AI are dominated by EE (excitatory contralateral/excitatory ipsilateral) units, whereas high-frequency areas are dominated by EI units (excitatory contralateral/inhibitory ipsilateral). If human auditory cortex has a similar organization, then differential effects might be observed with tonal stimulation of low versus high frequencies. However, as noted by Jacobson et al. (1992), low-frequency tonal stimulation (250 Hz) produces significantly larger N1 amplitude than higher-frequency stimulation (1 or 4 kHz). Thus, click-evoked N1 activity is more likely dominated by the low- than the high-frequency content of the click. This leads to the prediction that the results reported in the present study might reflect mostly the activity of the low-frequency EE cortical units in AI.
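The relation between click duration and spectral width follows from the spectrum of an idealized rectangular pulse of duration T, whose magnitude is proportional to |sin(πfT)/(πfT)| and therefore has its first null at f = 1/T. The short sketch below assumes such an ideal rectangular click (the function names are illustrative, and the acoustic click delivered by a real transducer will deviate from this idealization) and evaluates the null for a 100 µs pulse.

```python
import math


def first_spectral_null_hz(duration_s):
    """First zero of the spectrum of an ideal rectangular pulse of the given duration."""
    return 1.0 / duration_s


def rect_pulse_spectrum_magnitude(freq_hz, duration_s):
    """Magnitude spectrum |X(f)| of a unit-amplitude rectangular pulse of duration T."""
    x = math.pi * freq_hz * duration_s
    if x == 0.0:
        return duration_s
    return abs(duration_s * math.sin(x) / x)


click_duration_s = 100e-6  # 100 microsecond click
null_hz = first_spectral_null_hz(click_duration_s)

# Spectrum relative to its value at 0 Hz, evaluated at the null and at 1 kHz.
relative_at_null = rect_pulse_spectrum_magnitude(null_hz, click_duration_s) / click_duration_s
relative_at_1khz = rect_pulse_spectrum_magnitude(1000.0, click_duration_s) / click_duration_s

print(f"first null near {null_hz / 1000.0:.1f} kHz")
print(f"relative magnitude at 1 kHz: {relative_at_1khz:.3f}")
```

Under this idealization the spectrum is nearly flat over the low-frequency range, so the click carries substantial low-frequency energy along with its high-frequency content.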

Cortical reorganization due to hearing loss

Using source waveforms to assess IHDs, we found that differences between the ipsilateral and contralateral responses in unilaterally deaf subjects were significantly altered from the normal-hearing subjects. In normal-hearing individuals, the major peaks observed in the dipole source activity of the contralateral hemisphere occurred earlier and were larger than those on the ipsilateral side. However, these latency and amplitude differences between the responses on the two sides were significantly smaller in unilaterally deaf subjects. This confirms previous findings based on regional averages of AEPs measured at central electrode sites (Ponton et al. 2001).

Vasama and Mäkelä (1997) reported similar findings in unilaterally deaf subjects. In half of their subjects, they observed shorter response latencies and larger dipole moments in the auditory evoked magnetic fields over the hemisphere ipsilateral to the stimulation. Similar alterations in interhemispheric amplitude differences due to profound unilateral deafness have also been reported by estimating the level of cortical activity in response to tonal stimulation (Scheffler et al. 1998) using fMRI. This study reported a strong lateralization of the cortical response toward the contralateral hemisphere in normal-hearing subjects, while a very weak lateralization toward the hemisphere contralateral to the intact ear was found in unilaterally deaf subjects. While fMRI has good spatial resolution that translates to accurate estimation of cortical activity levels, its poor temporal resolution (of the order of 2–5 s) does not allow a comparison of response latencies in the two hemispheres. In contrast, the excellent temporal resolution of electrophysiological recordings (of the order of 1 ms) allowed us to assess any alterations in interhemispheric latency differences in the present study. This millisecond level temporal resolution revealed significant alterations in IHLDs due to unilateral hearing loss. That is, while normal-hearing subjects had shorter latencies in the contralateral hemisphere, the latencies of the responses in the contralateral and ipsilateral hemispheres were almost equal in the unilaterally deaf subjects.

The mechanisms responsible for the changes demonstrated in our data are likely related to those that characterize the normal hemispheric asymmetry. The shorter latencies for contralateral stimulation can be understood because the contralateral pathway in mammals contains a greater number of nerve fibers and represents a more direct route with fewer synapses to cortex than the ipsilateral pathway (Adams 1979; Brunso–Bechtold et al. 1981; Coleman and Clerici 1987). Central auditory system activity evoked by monaural presentation generally is stronger and has lower activation thresholds in the contralateral than in the ipsilateral auditory pathway (Popelár et al. 1994; Reale et al. 1987; Kitzes 1984). Stronger onset synchrony between the firings of single units in cat auditory cortex correlates with shorter latency (Brosch and Schreiner 1999). Thus, larger evoked potential amplitudes on the side contralateral to the stimulated ear may result from both the larger number of activated neurons and a stronger onset synchrony between the firings. According to our data, the amount of amplitude asymmetry in the normal-hearing subjects is the same for left ear and right ear stimulation. While these observed differences between the two hemispheres reached significance only for the tangential dipoles, the trend for the radial dipoles was the same.

We found no significant difference in the latencies of the N1b/P2 and Ta/Tb components for contralateral stimulation in unilaterally deaf and normal-hearing subjects. Furthermore, in unilaterally deaf subjects, the ipsilateral and contralateral responses became nearly identical in the magnitude of activation as well as in the time of activation for the N1b/P2 and Ta/Tb components. In the visual system, behavioral measures (Bjorklund and Lian 1993) and evoked potential measures (Nalcaci et al. 1999) produce a similar estimate of 12 ms for interhemispheric transfer. For the auditory system, Bjorklund and Lian (1993) estimate the interhemispheric transfer at approximately 16 ms. Combined, these data predict that the primary locus of symmetric bilateral activation occurs at a more peripheral level in the auditory system where conduction velocities are high and latency differences are minimal (Kitzes 1984), rather than by an unmasking of hemispheric callosal transfer. It is important to note that initial auditory cortical activation may occur as early as 19 ms. Therefore, considerable processing has undoubtedly occurred by 100 ms. Thus, in order to evaluate the locus of change produced by unilateral deafness, it will be necessary to examine the latency and amplitude properties of the middle-latency auditory evoked potentials, which occur 19–60 ms after stimulus onset.

Reorganization and side of hearing loss

We further analyzed the IHDs to assess whether there was any dependence on the side of stimulation/hearing loss in either subject group. The rationale was based on previous studies that have reported that sound recognition in noise and sound localization are two functions that may be dependent on the side of the hearing loss. For example, Hartvig et al. (1989) found that children who are unilaterally deaf in the right ear perform significantly more poorly in verbal subtests that are sensitive to minor input/processing damages than those impaired in the left ear. Gustafson and Hamill (1995) showed that individuals with experimentally induced unilateral conductive hearing loss in the right ear are affected significantly more in their localization ability than those with hearing loss in the left ear. In the present study, we found evidence that the side of hearing loss has differential effects on the modification of the central auditory system. We found that the effect of left ear unilateral deafness (right ear stimulation) was to evoke equal cortical activation in the right and left hemispheres for clicks, whereas right ear unilateral deafness (left ear stimulation) produced normal asymmetry, i.e., contralateral right hemisphere larger than ipsilateral left hemisphere activation. This suggests that compensatory plasticity does not take place for a right ear hearing loss. It is important to note that there is a left hemispheric specialization in humans (Johnsrude et al. 1997) and guinea pigs (King et al. 1999) for processing of acoustic transients such as clicks. Thus, in the unilaterally deaf, stimulating the left ear with clicks maintained the normal hemispheric asymmetry and there was no compensatory transfer to the normally transient-dominant left hemisphere. In contrast, activating the dominant left hemisphere by right ear stimulation in the unilaterally deaf did result in equal activation of both hemispheres.

On the basis of the nearly equal latencies for left and right ear stimulation in the unilaterally deaf, it appears highly unlikely that there is compensatory transfer at the callosal level from the left to the right hemisphere. If such compensation occurred by way of the corpus callosum, interhemispheric latency differences (contra < ipsi) should remain. However, the alternative is that changes in inhibition taking place at the brainstem level or midbrain level (Mossop et al. 2000) result in this symmetric activation of auditory cortex. This would require a profound difference in effect regarding the side of hearing loss in the initiation of these changes. This asymmetry could be in the afferent pathway leading to the midbrain but could potentially also result from an asymmetric gain control mediated by the corticofugal pathways at the midbrain level (Suga et al. 2000).

Stimulating with pulsed tones presented at 6/s (Scheffler et al. 1998) also resulted in increased symmetry in the unilaterally deaf as measured using fMRI. These data, although based on only five subjects, suggest that left unilaterally deaf subjects (n = 2) with an intact right ear showed more interhemispheric symmetry than the right unilaterally deaf subjects (n = 3). These results are thus similar to ours, suggesting that the degree of symmetrization of interhemispheric activity is independent of the type of stimulation and of the dominance of the left hemisphere in processing transient sounds. This argues once more for changes peripheral to auditory cortex.

CONCLUSIONS

The present study provides evidence of central auditory system reorganization due to profound left ear unilateral deafness in adult humans. In contrast, it appears that unilateral right ear deafness does not result in plastic changes and leaves the left hemisphere with much less activation than the right. It is possible that the use of a regional dipole source model somehow masks subtle compensatory processes that might follow right ear deafness. Further studies are clearly needed that combine behavioral measures of auditory and speech function with evoked potential recordings using a higher number of electrodes to allow more refined source modeling. Nevertheless, the present results provide no evidence of compensatory plasticity following right ear deafness, i.e., there is no compensatory increase in activation of the ipsilateral left hemisphere. This may underlie the demonstrated poorer verbal performance of subjects with unilateral right ear deafness compared with those with unilateral left ear deafness (Hartvig et al. 1989).

For those individuals with profound bilateral deafness considering a cochlear implant, these findings may have implications for determining which side is implanted. Preliminary analyses suggest that the pattern of results observed in the unilaterally deaf is the same in cochlear implant users (Ponton et al., unpublished). All other known factors being equal (e.g., degree of hearing loss), it would seem most prudent to place the implant on the right side, thus stimulating the pathway that produces the most robust activation of the typically speech-dominant left hemisphere. At the very least, as the number of individuals who are binaurally implanted increases, a careful examination of speech processing capabilities as a function of side of stimulation is clearly warranted.

References

JC Adams (1979) Ascending projections to the inferior colliculus. J. Comp. Neurol. 183:519–538

P Belin RJ Zatorre P Lafaille P Ahad B Pike (2000) Voice-selective areas in human auditory cortex. Nature 403:309–312. https://doi.org/10.1038/35002078

F Bess T Klee J Culbertson (1986) Identification, assessment, and management of children with unilateral sensorineural hearing loss. Ear Hear. 7:43–51

RA Bjorklund A Lian (1993) Interhemispheric transmission time in an auditory two-choice reaction task. Scand. J. Psychol. 34:174–182

M Brosch CE Schreiner (1999) Correlations between neural discharges are related to receptive field properties in cat primary auditory cortex. Eur. J. Neurosci. 11:3517–3530. https://doi.org/10.1046/j.1460-9568.1999.00770.x

JK Brunso–Bechtold GC Thompson RB Masterson (1981) HRP study of the organization of auditory afferents ascending to the central nucleus of the inferior colliculus in cat. J. Comp. Neurol. 197:705–722

JR Coleman WJ Clerici (1987) Sources of projections to subdivisions of the inferior colliculus in the rat. J. Comp. Neurol. 262:215–226

BN Cuffin D Cohen (1979) Comparison of the magnetoencephalogram and electroencephalogram. Electroencephalogr. Clin. Neurophysiol. 47:132–146

JJ Eggermont H Komiya (2000) Moderate noise trauma in juvenile cats results in profound cortical topographic map changes in adulthood. Hear. Res. 142:89–101. https://doi.org/10.1016/S0378-5955(00)00024-1

N Fujuki Y Naito T Nagamine Y Shiom S Hirano I Honjo H Shibasaki (1998) Influence of unilateral deafness on evoked magnetic field. NeuroReport 3129–3133

TJ Gustafson TA Hamill (1995) Differences in localization ability in cases of right versus left unilateral simulated conductive hearing loss. J. Am. Acad. Audiol. 6:124–128

JJ Hartvig J Jensen S Borre PA Johansen (1989) Unilateral sensorineural hearing loss in children and auditory performance with respect to right/left ear differences. Br. J. Audiol. 23:207–213

RV Harrison A Nagasawa DW Smith S Stanton RJ Mount (1991) Reorganization of auditory cortex after neonatal high frequency cochlear hearing loss. Hear. Res. 54:11–19. https://doi.org/10.1016/0378-5955(91)90131-R

GP Jacobson DM Lombardi ND Gibbens BK Ahmad CW Newman (1992) The effects of stimulus frequency and recording site on the amplitude and latency of multichannel cortical auditory evoked potential (CAEP) component N1. Ear Hear. 13:300–306

S Johnsrude RJ Zatorre BA Milner AC Evans (1997) Left-hemisphere specialization for the processing of acoustic transients. NeuroReport 8:1761–1765

C King T Nicol T McGee N Kraus (1999) Thalamic asymmetry is related to acoustic signal complexity. Neurosci. Lett. 267:89–92. https://doi.org/10.1016/S0304-3940(99)00336-5

LM Kitzes (1984) Some physiological consequences of cochlear destruction in the inferior colliculus of the gerbil. Brain Res. 306:171–178. https://doi.org/10.1016/0006-8993(84)90366-4

C Liègeois–Chauvel A Musolino P Chauvel (1991) Localization of the primary auditory area in man. Brain 114:139–153

C Liègeois–Chauvel A Musolino JM Badier P Marquis P Chauvel (1994) Evoked potentials recorded from the auditory cortex in man: evaluation and topography of the middle latency components. Electroencephalogr. Clin. Neurophysiol. 92:204–214. https://doi.org/10.1016/0168-5597(94)90064-7

C Liègeois–Chauvel JB de Graaf V Laguitton P Chauvel (1999) Specialization of left auditory cortex for speech perception in man depends on temporal coding. Cereb. Cortex 9:484–496. https://doi.org/10.1093/cercor/9.5.484

JC Mosher PS Lewis RM Leahy (1992) Multiple dipole modeling and localization from spatiotemporal MEG data. IEEE Trans. Biomed. Eng. 39:541–557. https://doi.org/10.1109/10.141192

JE Mossop MJ Wilson DM Caspary DR Moore (2000) Down-regulation of inhibition following unilateral deafening. Hear. Res. 147:183–187. https://doi.org/10.1016/S0378-5955(00)00054-X

R Näätänen T Picton (1987) The N1 wave of the human electric and magnetic response to sound: A review and an analysis of the component structure. Psychophysiology 24:375–425

E Nalcaci C Basar–Eroglu M Stadler (1999) Visual evoked potential interhemispheric transfer time in different frequency bands. Clin. Neurophysiol. 110:71–81. https://doi.org/10.1016/S0168-5597(98)00049-5

CW Ponton M Don MD Waring JJ Eggermont A Masuda (1993) Spatio-temporal source modeling of evoked potentials to acoustic and cochlear implant stimulation. Electroencephalogr. Clin. Neurophysiol. 88:478–493. https://doi.org/10.1016/0168-5597(93)90037-P

CW Ponton M Don JJ Eggermont MD Waring A Masuda (1996a) Maturation of human cortical auditory function: Differences between normal hearing and cochlear implant children. Ear Hear. 17:430–437

CW Ponton M Don JJ Eggermont MD Waring B Kwong A Masuda (1996b) Auditory system plasticity in children after long periods of complete deafness. NeuroReport 8:61–65

CW Ponton JK Moore JJ Eggermont (1999) Prolonged deafness limits auditory system developmental plasticity: evidence from an evoked potential study in children with cochlear implants. Scand. Audiol. Suppl. 51:13–22

CW Ponton JJ Eggermont M Don MD Waring B Kwong J Cunningham P Trautwein (2000) Maturation of the mismatch negativity: effects of profound deafness and cochlear implant use. Audiol. Neurotol. 5:167–185. https://doi.org/10.1159/000013878

CW Ponton JP Vasama K Tremblay D Khosla B Kwong M Don (2001) Plasticity in the adult human central auditory system: evidence from late-onset profound unilateral deafness. Hear. Res. 154:32–44. https://doi.org/10.1016/S0378-5955(01)00214-3

CW Ponton JJ Eggermont D Khosla B Kwong M Don (2002) Maturation of human central auditory system activity: separating auditory evoked potentials by dipole source modeling. Clin. Neurophysiol. 113:407–420. https://doi.org/10.1016/S1388-2457(01)00733-7

J Popelár JP Eire JM Aran Y Cazals (1994) Plastic changes in ipsi-contralateral differences of auditory cortex and inferior colliculus evoked potentials after injury to one ear in the adult guinea pig. Hear. Res. 72:125–134. https://doi.org/10.1016/0378-5955(94)90212-7

R Rajan DR Irvine LZ Wise P Heil (1993) Effect of unilateral partial cochlear lesions in adult cats on the representation of lesioned and unlesioned cochleas in primary auditory cortex. J. Comp. Neurol. 338:17–49

RA Reale JF Brugge JC Chan (1987) Maps of auditory cortex in cats reared after unilateral cochlear ablation in the neonatal period. Brain Res. 192:281–290

DH Reser YI Fishman JC Arezzo M Steinschneider (2000) Binaural interactions in primary auditory cortex of the awake macaque. Cereb. Cortex 10:574–584. https://doi.org/10.1093/cercor/10.6.574

D Robertson DR Irvine (1989) Plasticity of frequency organization in auditory cortex of guinea pigs with partial unilateral deafness. J. Comp. Neurol. 282:456–471

K Scheffler N Bilecen K Tschopp J Seelig (1998) Auditory cortical responses in hearing subjects and unilateral deaf patients as detected by functional magnetic resonance imaging. Cereb. Cortex 8:156–163. https://doi.org/10.1093/cercor/8.2.156

M Scherg D Von Cramon (1985) Two bilateral sources of late AEP as identified by a spatiotemporal dipole model. Electroencephalogr. Clin. Neurophysiol. 62:32–44. https://doi.org/10.1016/0168-5597(85)90033-4

M Scherg D Von Cramon (1986) Evoked dipole source potentials of the human auditory cortex. Electroencephalogr. Clin. Neurophysiol. 65:344–360. https://doi.org/10.1016/0168-5597(86)90014-6

M Scherg (1992) Functional imaging and localization of electromagnetic brain activity. Brain Topogr. 5:103–111

AZ Snyder (1991) Dipole source localization in the study of EP generators: A critique. Electroencephalogr. Clin. Neurophysiol. 80:321–325. https://doi.org/10.1016/0168-5597(91)90116-F

M Steinschneider IO Volkov MD Noh PC Garell MA Howard (1999) Temporal encoding of the voice onset time phonetic parameter by field potentials recorded directly from human auditory cortex. J. Neurophysiol. 82:2346–2357

N Suga E Gao Y Zhang X Ma JF Olsen (2000) The corticofugal system for hearing: recent progress. Proc. Natl. Acad. Sci. U.S.A. 97:11807–11814. https://doi.org/10.1073/pnas.97.22.11807

I Tonnquist–Uhlen CW Ponton JJ Eggermont B Kwong M Don () Maturation of human central auditory system activity: The T-complex. Clin. Neurophysiol.

J-P Vasama JP Mäkelä (1995) Auditory pathway plasticity in adult humans after unilateral idiopathic sudden sensorineural hearing loss. Hear. Res. 87:132–140. https://doi.org/10.1016/0378-5955(95)00086-J

J-P Vasama JP Mäkelä (1997) Auditory cortical responses in humans with profound unilateral sensorineural hearing loss from early childhood. Hear. Res. 104:183–190. https://doi.org/10.1016/S0378-5955(96)00200-6

J-P Vasama JP Mäkelä I Pyykkö R Hari (1995) Abrupt unilateral deafness modifies function of human central auditory pathways. NeuroReport 6:961–964

J-P Vasama JP Mäkelä H Ramsay (1998) Modification of auditory pathway functions in patients with hearing improvements after middle ear surgery. Otolaryngol. Head Neck Surg. 119:125–130

C Verkindt O Bertrand M Thevenet J Pernier (1994) Two auditory components in the 130–230 ms range disclosed by their stimulus frequency dependence. NeuroReport 5:1189–1192

RJ Zatorre AC Evans E Meyer A Gjedde (1992) Lateralization of phonetic and pitch discrimination in speech processing. Science 256:846–849

Cite this article

Khosla, D., Ponton, C.W., Eggermont, J.J. et al. Differential Ear Effects of Profound Unilateral Deafness on the Adult Human Central Auditory System. JARO 4, 235–249 (2003). https://doi.org/10.1007/s10162-002-3014-x

DOI: https://doi.org/10.1007/s10162-002-3014-x