Abstract

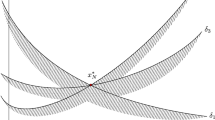

In quantitative risk management, it is important and challenging to find sharp bounds for the distribution of the sum of dependent risks with given marginal distributions, but an unspecified dependence structure. These bounds are directly related to the problem of obtaining the worst Value-at-Risk of the total risk. Using the idea of complete mixability, we provide a new lower bound for any given marginal distributions and give a necessary and sufficient condition for the sharpness of this new bound. For the sum of dependent risks with an identical distribution, which has either a monotone density or a tail-monotone density, the explicit values of the worst Value-at-Risk and bounds on the distribution of the total risk are obtained. Some examples are given to illustrate the new results.

Acknowledgements

We thank the co-editor Kerry Back, an associate editor and two reviewers for their helpful comments, which significantly improved this paper. Wang’s research was partly supported by the Bob Price Fellowship at the Georgia Institute of Technology. Peng’s research was supported by NSF Grant DMS-1005336. Yang’s research was supported by the Key Program of the National Natural Science Foundation of China (Grant No. 11131002) and the National Natural Science Foundation of China (Grant No. 11271033).

Appendix

Proof of Proposition 2.4

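1. The case \(n=1\) is trivial. For \(n\ge 2\), by the definition of JM distributions, there exist \(X_1\sim F_1,\dots,X_n\sim F_n\) such that \(\mathrm{Var}(X_1+\cdots+X_n)=0\). Since

\[0=\sqrt{\mathrm{Var}(X_1+\cdots+X_n)}\ge \sqrt{\mathrm{Var}(X_1)}-\sqrt{\mathrm{Var}(X_2+\cdots+X_n)}\ge \sigma_1-\sum_{i=2}^{n}\sigma_i,\]

we have \(2\sigma_{1}-\sum_{i=1}^{n}\sigma_{i}\le 0\). Similarly, we can show that \(2\sigma_{k}-\sum_{i=1}^{n}\sigma_{i}\le 0\) for any \(k=1,\dots,n\), i.e., (2.2) holds.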

2. We only need to prove the “⇐” part for \(n\ge 2\). Without loss of generality, assume \(\sigma_1\ge\sigma_2\ge\dots\ge\sigma_n\). Let \(X=(X_1,\dots,X_n)\) be a multivariate Gaussian random vector with given marginal distributions \(F_1,\dots,F_n\) and an unspecified correlation matrix \(\Gamma\). We want to show that there exists a \(\Gamma\) with \(\mathrm{Var}(X_1+\cdots+X_n)=0\). Let \(T\) be the correlation matrix of \((X_2,\dots,X_n)\) and \(Y=X_2+\cdots+X_n\). Define \(f(T)=\sqrt{\mathrm {Var}(X_{1})}-\sqrt{\mathrm {Var}(Y)}\). Clearly, \(f(T)\) is a continuous function of \(T\) with respect to the canonical distance. It is easy to check that \(f(T)=\sigma_{1}-\sum_{i=2}^{n}\sigma_{i}\le 0\) when \(X_2=\sigma_2 Z+\mu_2,\dots,X_n=\sigma_n Z+\mu_n\) for some \(Z\sim N(0,1)\). Because \(\sigma_1\ge\sigma_2\ge\dots\ge\sigma_n\), we also obtain that \(f(T)=\sigma_{1}-|\sum_{i=2}^{n}(-1)^{i}\sigma_{i}| \ge 0\) when \(X_i=(-1)^{i}\sigma_i Z+\mu_i\) for \(i=2,\dots,n\). Since the set of correlation matrices is convex, and hence connected, the intermediate value theorem yields a correlation matrix \(T_0\) such that \(f(T_0)=0\). With the correlation matrix of \((X_2,\dots,X_n)\) being \(T_0\), we define \(X_{1}=-Y+\mathbb {E}(Y)+\mu_{1}\). Hence \(X_{1}\sim N(\mu_{1},\sigma_{1}^{2})\) and \(\mathrm{Var}(X_1+\cdots+X_n)=0\), which implies that \(F_1,\dots,F_n\) are JM. □
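The construction in part 2 can be traced numerically. The sketch below is only an illustration under stated assumptions: it restricts attention to the segment of correlation matrices \(T(t)=(1-t)T_{\mathrm{co}}+tT_{\mathrm{alt}}\) joining the comonotone case and the alternating-sign case from the proof, finds \(t_0\) with \(f(T(t_0))=0\) by bisection, and then builds the covariance matrix implied by \(X_1=-Y+\mathbb{E}(Y)+\mu_1\). The function name `zero_variance_covariance` and the example standard deviations are hypothetical.

```python
import math


def zero_variance_covariance(sigma, tol=1e-13):
    """Return (t0, cov) where cov is a covariance matrix of (X_1, ..., X_n)
    with Var(X_1 + ... + X_n) = 0, following the proof's construction.

    sigma: standard deviations in decreasing order with
           2 * sigma[0] <= sum(sigma).
    """
    n = len(sigma)
    if n < 2 or any(sigma[i] < sigma[i + 1] for i in range(n - 1)):
        raise ValueError("need n >= 2 and sigma sorted in decreasing order")
    if 2 * sigma[0] > sum(sigma):
        raise ValueError("condition 2 * max(sigma) <= sum(sigma) fails")

    rest = sigma[1:]                                # sigma_2, ..., sigma_n
    signs = [(-1) ** k for k in range(len(rest))]   # (-1)^i for i = 2, 3, ...

    def corr(t, i, j):
        # Entry of T(t): convex combination of the all-ones matrix
        # (comonotone) and the sign matrix (alternating case).
        return (1.0 - t) + t * signs[i] * signs[j]

    def var_y(t):
        m = len(rest)
        return sum(rest[i] * rest[j] * corr(t, i, j)
                   for i in range(m) for j in range(m))

    def f(t):
        return sigma[0] - math.sqrt(max(var_y(t), 0.0))

    # f(0) <= 0 <= f(1) and f is nondecreasing in t, so bisection applies.
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if f(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    t0 = 0.5 * (lo + hi)

    cov = [[0.0] * n for _ in range(n)]
    for i in range(n - 1):
        for j in range(n - 1):
            cov[i + 1][j + 1] = rest[i] * rest[j] * corr(t0, i, j)
    for j in range(1, n):
        # X_1 = -Y + const, hence Cov(X_1, X_j) = -Cov(Y, X_j).
        c = -sum(cov[i][j] for i in range(1, n))
        cov[0][j] = cov[j][0] = c
    # Var(X_1) = Var(Y), which is sigma[0]**2 up to the bisection tolerance.
    cov[0][0] = sum(cov[i][j] for i in range(1, n) for j in range(1, n))
    return t0, cov


# Hypothetical example with sigma = (3, 2, 2).
t0, cov = zero_variance_covariance([3.0, 2.0, 2.0])
total_variance = sum(sum(row) for row in cov)
```

In this example \(\mathrm{Var}(Y)=16-16t\) along the segment, so the bisection should settle near \(t_0=7/16\), and the entries of `cov` sum to zero up to rounding. Searching along one segment is a simplification; the proof only needs the existence of some \(T_0\).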

Proof of Proposition 2.9

When \(s\ge nF^{-1}(1)\), we have \(m_{+}(s)=1=\psi^{-1}(s/n)\). Suppose \(s<nF^{-1}(1)\). Obviously,

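For \(r\in [0,\frac{s}{n})\), from \((\frac{\int_{r}^{\infty}(1- F(t))\,\mathrm{d}{t}}{s-nr})'=0\), we have

\[g(r):=n\int_{r}^{\infty}(1-F(t))\,\mathrm{d}t-(s-nr)\bigl(1-F(r)\bigr)=0. \tag{5.2}\]

Suppose \(r=r^{\star}\) satisfies (5.2). Then

Note that \(r^{\star}\) always exists since \(g\) is continuous, \(g(0)=-s+n\mu<0\), \(F(s/n)<1\) and

\[g(s/n)=n\int_{s/n}^{\infty}(1-F(t))\,\mathrm{d}t>0.\]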

Integration by parts leads to

and hence

i.e.,

Therefore, the bound in (2.6) is greater than or equal to the bound in (2.5) by (5.1), (5.3), and (5.4). Note that the bound (2.6) is strictly greater if \(F^{-1}(1)=\infty\).
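The first-order condition used in this proof can be checked numerically. Differentiating the ratio \(\int_{r}^{\infty}(1-F(t))\,\mathrm{d}t/(s-nr)\) and setting the derivative to zero gives \(g(r)=n\int_{r}^{\infty}(1-F(t))\,\mathrm{d}t-(s-nr)(1-F(r))=0\). This form of \(g\) is an assumption, chosen because it agrees with \(g(0)=-s+n\mu\) when \(F(0)=0\). The sketch below solves \(g(r)=0\) by bisection on \([0,s/n]\); the function name `first_order_root`, the unit exponential marginal and the values \(n=3\), \(s=6\) are hypothetical choices made only for illustration.

```python
import math


def first_order_root(s, n, survival, tail_integral, tol=1e-12):
    """Bisection for r in (0, s/n) solving
    n * tail_integral(r) - (s - n * r) * survival(r) = 0,
    where survival(r) = 1 - F(r) and tail_integral(r) is the
    integral of 1 - F over [r, infinity)."""
    def g(r):
        return n * tail_integral(r) - (s - n * r) * survival(r)

    lo, hi = 0.0, s / n
    if not (g(lo) < 0.0 < g(hi)):
        raise ValueError("expected g(0) < 0 < g(s/n)")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Hypothetical marginal: unit exponential, so 1 - F(t) = exp(-t) and the
# tail integral over [r, infinity) is also exp(-r).
def exp_survival(t):
    return math.exp(-t)


def exp_tail_integral(r):
    return math.exp(-r)


n, s = 3, 6.0
r_star = first_order_root(s, n, exp_survival, exp_tail_integral)

# At a root of g the ratio equals (1 - F(r_star)) / n.
ratio_at_root = exp_tail_integral(r_star) / (s - n * r_star)
```

For the unit exponential, \(g(r)=e^{-r}(n-s+nr)\), so the root is \(r^{\star}=s/n-1\) whenever \(s>n\). The identity \(\int_{r^{\star}}^{\infty}(1-F(t))\,\mathrm{d}t/(s-nr^{\star})=(1-F(r^{\star}))/n\) holds at any root of \(g\), not only in this example.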

For proving the second part of Proposition 2.9, we only need to consider the case \(s<nF^{-1}(1)<\infty\), since \(m_{+}(s)=1=\psi^{-1}(s/n)\) when \(nF^{-1}(1)\le s<\infty\). Consider the problem (2.7) and

From the above proof, we can see that \(r^{\star}\) is the unique solution to (5.5). Therefore, the bounds (2.5) and (2.6) are equal if and only if (2.7) and (5.5) are equal. Since

we have \(r^{\star}\in[0,\frac{s-F^{-1}(1)}{n-1}]\). Thus

Therefore, the bounds (2.5) and (2.6) are equal if and only if a solution to (2.7) lies in \([0,\frac{s-F^{-1}(1)}{n-1}]\). □

Proof of Proposition 3.1

1. By (a) in Sect. 3.1, for any i≠j, \(U_{i}\in \left[0,c\right]\) implies that \(U_{j}\in \left[1-(n-1)c,1\right]\). Hence

and \(\mathbb{P}(A_i\cap A_j)=0\). As a consequence, we have \(\bigcup_{i\neq j}A_i\subseteq B_j\). Note that we also have \(\mathbb{P}(\bigcup_{i\neq j}A_i)=(n-1)c=\mathbb{P}(B_j)\). Thus \(\mathbf {1}_{\bigcup_{i\neq j}A_{i}}=\mathbf {1}_{B_{j}}\) a.s. and

which implies that \(\mathbf {1}_{\{U_{j}\in (c,1-(n-1)c)\}}=\mathbf {1}_{(\bigcup_{i=1}^{n}A_{i})^{c}}\) a.s. for j=1,…,n.

2. We only prove the case when \(F\) has an increasing density. When \(c_n=0\), (3.6) follows from the definition of \(Q_{n}^{F}\). Next we assume \(c_n>0\). Write \(D_j=A_j\cup B_j\) and \(X_j=F^{-1}(U_j)\), \(U_{j}\sim \mathrm{U}\left[0,1\right]\) for \(j=1,\dots,n\). First note that by condition (b) in Sect. 3.1, for any \(j=1,\dots,n\), \(F^{-1}(U_1)+\cdots+F^{-1}(U_n)\) is a constant on the set \(D_{j}^{c}\). This constant equals its expectation, which is

The last equality holds because of (3.2) and

Therefore, almost surely,

Since \(c_n\le 1/n\) and the sets \(A_1,\dots,A_n\) and \(D_{1}^{c}\) are disjoint, we have

Hence there exists a \(U\sim \mathrm{U}\left[0,1\right]\) such that

□

Proof of Lemma 3.3

(i) Under the assumption on \(F\), \(F^{-1}(x)\) is convex and differentiable. Thus \(H_a(x)\) is convex and differentiable. The definition of \(c_n(a)\) shows that the average of \(H_a(x)\) on \([c_{n}(a),\frac{1}{n}(1-a)]\) is \(H_a(c_n(a))\) if \(0<c_{n}(a)<\frac{1-a}{n}\), namely

With \(H_a(x)\) being convex, we have \(H'_{a}(c_{n}(a))\le 0\) and so \(H'_{a}(x)\le 0\) on \(\left[0,c_{n}(a)\right]\). Note that for \(n>2\), \(H'_{a}(\frac{1-a}{n})=((n-1)^{2}-1)(F^{-1})'(\frac{1-a}{n})>0\) implies

for some \(c<\frac{1-a}{n}\); thus \(c_{n}(a)<\frac{1-a}{n}\) always holds. For \(n=2\), \(H'_{a}(x)\le 0\) on \([0,\frac{1-a}{n}]\) since \(H'_{a}(\frac{1-a}{n})=0\) and \(H_a\) is convex.
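The averaging property in (i) lends itself to a small numerical sketch. The defining condition of \(c_n(a)\) is not reproduced in this section, so the code assumes it is the smallest \(c\in[0,b]\), with \(b=\frac{1-a}{n}\), at which the average of a convex function \(H\) over \([c,b]\) is at least \(H(c)\). The function name `smallest_balance_point` and the quadratic `toy_H` are hypothetical stand-ins, not the paper's \(H_a\).

```python
def smallest_balance_point(H, b, tol=1e-12, panels=2000):
    """Smallest c in [0, b] such that the average of H over [c, b] is at
    least H(c), for a convex function H (assumed reading of c_n(a))."""
    def integral(lo, hi):
        # Composite Simpson rule with an even number of panels.
        h = (hi - lo) / panels
        total = H(lo) + H(hi)
        for k in range(1, panels):
            total += (4.0 if k % 2 else 2.0) * H(lo + k * h)
        return total * h / 3.0

    def gap(c):
        # Integral of H over [c, b] minus (b - c) * H(c); same sign as
        # "average of H on [c, b] minus H(c)".
        return integral(c, b) - (b - c) * H(c)

    if gap(0.0) >= 0.0:
        return 0.0
    # For convex H the set {c : gap(c) >= 0} is an interval ending at b,
    # so bisection on the sign of gap finds its left endpoint.
    lo, hi = 0.0, b
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if gap(mid) >= 0.0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


# Hypothetical convex test function with minimum at 0.2, on [0, 0.3].
def toy_H(x):
    return (x - 0.2) ** 2


b = 0.3
c_star = smallest_balance_point(toy_H, b)
```

For this quadratic the gap factorizes as \((b-c)^{2}(b+2c-0.6)/3\), so the balance point is \(c=0.15\), where the derivative of `toy_H` is negative, in line with \(H'_{a}(c_{n}(a))\le 0\). The sign structure of the gap relies on convexity: its derivative in \(c\) is \(-(b-c)H'(c)\), so it rises while \(H\) decreases and falls back to zero at \(b\).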

(ii) This follows from similar arguments as in (i).

(iii) Suppose \(c_n(a)>0\). By the continuity of \(H_a(x)\) with respect to \(x\) and (3.7), we know that \(c_n(a)\) satisfies

Note that for any \(c\in \bigl[0,\frac{1}{n}(1-a)\bigr]\),

Thus it follows from the definition of \(c_n(a)\) that \(H_{a}(c_{n}(a))=n\mathbb {E}(F^{-1}(V_{a}))\). For the case \(c_n(a)=0\), it is obvious that \(\psi(a)=n\phi(a)=n\mathbb {E}(F^{-1}(V_{a}))\).

(iv) Note that in a given probability space, for any measurable set \(B\) with \(\mathbb{P}(B)>0\) and any continuous random variable \(Z\) with distribution function \(G\), we have

To see this, denote the distribution of \(Z\) conditional on \(B\) by \(G_1\) and the distribution conditional on \(\{Z\ge G^{-1}(1-\mathbb{P}(B))\}\) by \(G_2\). Then we have

which implies that for \(U\sim \mathrm{U}\left[0,1\right]\),

Since \(A=\bigcap_{i=1}^{n} \{U_{i}\in\left[a,1-c_{n}(b)\right]\}\), we have \(\mathbb {P}(A)\ge 1-\frac{nc_{n}(b)}{1-a}>0 \) and \(U_i\le 1-c_n(b)\) on \(A\). By defining \(Z=F^{-1}(U_{i}) \mathbf {1}_{\{U_{i}\le 1-c_{n}(b)\}}+F^{-1}(a)\mathbf {1}_{\{U_{i}>1-c_{n}(b)\}}\), it follows from (5.6) that

(v) This follows from (i), (ii) and the arguments in Remark 3.2.

(vi) We first prove the case when \(F\) has a decreasing density. Since \(H_a(x)\) is convex with respect to \(x\) and differentiable with respect to \(a\), the definition of \(c_n(a)\) implies that \(c_n(a)\) is continuous. Hence \(\phi(a)=n\mathbb {E}(F^{-1}(V_{a}) )\) is continuous. Suppose \(U_{a,1},\dots, U_{a,n}\sim \mathrm{U}\left[a,1\right]\) with copula \(Q^{\tilde{F}_{a}}_{n}\). Then \(F^{-1}(U_{a,1}),\dots,F^{-1}(U_{a,n})\sim \tilde{F}_{a}\) and they have copula \(Q^{\tilde{F}_{a}}_{n}\) too. By (v), we have

Thus from (5.7) and (5.8) we have

Next we prove the case when \(F\) has an increasing density. The continuity of \(c_n(a)\) follows from the same arguments as above. By definition, \(H_a(0)\) and \(\psi(a)\) are continuous and increasing functions of \(a\). So we only need to show that when \(c_n(a)\) approaches 0, \(H_a(0)-\psi(a)\) approaches 0, too. Suppose that as \(a\nearrow a_0\), \(c_n(a)\to 0\) and \(c_n(a)\neq 0\) for \(a_0-\epsilon<a<a_0\) with some \(\epsilon>0\). Then

which implies that

as \(a\nearrow a_0\). Together with the continuity of \(H_a(0)-\psi(a)\), we get \(H_a(0)-\psi(a)\to 0\) as \(a\to a_0\). □

Cite this article

Wang, R., Peng, L. & Yang, J. Bounds for the sum of dependent risks and worst Value-at-Risk with monotone marginal densities. Finance Stoch 17, 395–417 (2013). https://doi.org/10.1007/s00780-012-0200-5

DOI: https://doi.org/10.1007/s00780-012-0200-5