Abstract

This paper proposes the design of ultra scalable MPI collective communication for the K computer, which consists of 82,944 computing nodes and is the world’s first system to exceed 10 PFLOPS. The nodes are connected by the Tofu interconnect, which has a six-dimensional mesh/torus topology. Existing MPI libraries, however, perform poorly on such a direct network because they assume typical cluster environments. We therefore design collective algorithms optimized for the K computer.

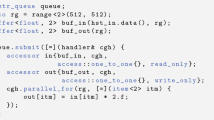

In designing the algorithms, we prioritize collision-freeness for long messages and low latency for short messages. The long-message algorithms use multiple RDMA network interfaces and consist of neighbor communication in order to gain high bandwidth and avoid message collisions. The short-message algorithms, on the other hand, are designed to reduce software overhead, which grows with the number of relaying nodes. Evaluation results on up to 55,296 nodes of the K computer show that the new implementation outperforms the existing one for long messages by a factor of 4 to 11. They also show that the short-message algorithms complement the long-message ones.
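To make the idea of collision-free neighbor communication concrete, the following is a minimal sketch of a ring allgather simulated on a one-dimensional torus. It is not the algorithm from the paper: the 1D ring stands in for the six-dimensional Tofu topology, a single link per node stands in for the multiple RDMA interfaces, and the function name `ring_allgather` is hypothetical. The sketch only illustrates that when every node sends to its immediate neighbor in each step, each directed link carries exactly one message per step, so no two messages compete for the same link.

```python
def ring_allgather(blocks):
    """Simulate a ring allgather on a 1D torus (illustrative assumption only).

    blocks[i] is the data initially owned by node i. Returns the final
    per-node buffers and the number of distinct directed links used per step.
    """
    n = len(blocks)
    # gathered[i][j] holds block j once node i owns or has received it.
    gathered = [[None] * n for _ in range(n)]
    for i in range(n):
        gathered[i][i] = blocks[i]

    links_per_step = []
    for step in range(n - 1):
        used_links = set()
        updates = []
        for i in range(n):
            dst = (i + 1) % n        # send only to the right-hand neighbor
            j = (i - step) % n       # block that node i forwards in this step
            used_links.add((i, dst))
            updates.append((dst, j, gathered[i][j]))
        # Apply all transfers at once, as if the sends happened concurrently.
        for dst, j, value in updates:
            gathered[dst][j] = value
        links_per_step.append(len(used_links))
    return gathered, links_per_step


if __name__ == "__main__":
    buffers, links = ring_allgather(["a", "b", "c", "d"])
    print(buffers[0], links)
```

In this sketch the number of steps grows linearly with the node count, which is acceptable when bandwidth dominates but costly for short messages, where per-step software overhead dominates. That is the trade-off the abstract points to when it separates long-message and short-message designs.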


Cite this article

Adachi, T., Shida, N., Miura, K. et al. The design of ultra scalable MPI collective communication on the K computer. Comput Sci Res Dev 28, 147–155 (2013). https://doi.org/10.1007/s00450-012-0211-7
