Abstract

In this article we present a framework for assessing climate change impacts on water and watershed systems to support management decision-making. The framework addresses three issues complicating assessments of climate change impacts—linkages across spatial scales, linkages across temporal scales, and linkages across scientific and management disciplines. A major theme underlying the framework is that, due to current limitations in modeling capabilities, assessing and responding to climate change should be approached from the perspective of risk assessment and management rather than as a prediction problem. The framework is based generally on ecological risk assessment and similar approaches. A second theme underlying the framework is the need for close collaboration among climate scientists, scientists interested in assessing impacts, and resource managers and decision makers. A case study illustrating an application of the framework is also presented that provides a specific, practical example of how the framework was used to assess the impacts of climate change on water quality in a mid-Atlantic, U.S., watershed.


Introduction

Water and watershed systems are highly sensitive to climate (Gleick and Adams 2000). This is well understood by water managers who have long sought to manage the risk associated with shorter-term, seasonal to interannual climatic variability, e.g., extreme weather events, floods, and droughts (CIG 2006). To meet this goal, a variety of tools and practices have been developed for assessing and managing risk. Current management practices, however, typically focus on this shorter-term climate variability. Long-term climate is assumed to be stationary.

The Fourth Assessment Report of the Intergovernmental Panel on Climate Change (IPCC) states that warming of the climate system is unequivocal, as is now evident from observations of increases in global average air and ocean temperatures, widespread melting of snow and ice, and rising average global sea level (IPCC 2007). Changes in the form, amount, and intensity of precipitation have also been observed, although with significant regional variability (IPCC 2007). These trends are expected to continue into the future, with a range of consequent effects on water and watershed systems. Responding to the challenge of long-term climate change will require that managers be able to assess the possible impacts of climate change at relevant spatial and temporal scales, effectively incorporate this information into their decision-making processes, and, where necessary, develop and implement strategies for adaptation.

Ecological risk assessment (ERA) is a well-established approach for incorporating scientific information into environmental decision making (USEPA 1998). With its roots in earlier human health risk assessment approaches (e.g., USEPA 1986), ERA principles have recently been applied to watersheds (USEPA 2008). ERA involves an iterative dialogue between scientists and stakeholders that spans the problem formulation, analysis, and risk characterization phases (USEPA 2008). The principles underlying ERA provide a useful (and, to environmental managers, familiar) approach for assessing and managing climate change risk, though to date they have not generally been applied in this way.

Consideration of climate change in a risk assessment context is complicated by at least three factors: the broad spatial scope of the effects, from global to regional to local; the wide range of relevant timescales of the effects, from near- to long-term; and the many disciplines whose expertise must be blended to understand complex natural systems and their response to shifts in climate drivers. For example, spatially, climate change is a global phenomenon, but the systems of interest to resource managers (e.g., a particular watershed) are local. Similarly, large-scale climate change is manifested on timescales of decades and longer, while many resources must be managed over shorter time horizons. Finally, comprehensive assessments of climate change impacts on an ecosystem attribute or service might require input from climatologists, meteorologists, hydrologists, and ecologists. Consideration of management alternatives might require additional input from economists, sociologists, and urban or rural planners, among others. These characteristics create large and hard-to-characterize uncertainties in potential impacts and management strategies. Practical strategies are needed so that these complexities do not become insurmountable obstacles to incorporating information about long-term climate change into resource planning and management.

In this article we present a framework that explicitly incorporates the capabilities, tools, and methods needed to address the challenges associated with assessing climate change impacts on water and watershed systems. The framework is based on ERA, but does not exactly mimic the approach. We present a set of steps that address linkages across spatial scales, temporal scales, and disciplines. Collectively, these steps make up a general strategy for using information about long-term climate change to conduct assessments of climate change impacts. To illustrate an application of the framework, a case study is also presented describing an assessment of the potential impacts of climate change on water quality in a mid-Atlantic, U.S., watershed.

Rationale and Audiences

The rationale for this article stems from two sources. First, several recent efforts suggest a need for improving the use of scientific information and tools to inform decision-making about climate change. The U.S. Climate Change Science Program (CCSP), the interagency program responsible for coordinating climate change research within the U.S. government, held a workshop in November 2005 to assess the capability of climate science to inform decision making (CCSP 2005). The workshop report emphasized progress to date in producing decision-relevant climate impacts information, but also the need for significant improvements. A 2007 National Research Council (NRC) evaluation of CCSP activities also concluded that the science and understanding of the climate system are advancing well, but the use of that knowledge to support decision making is advancing too slowly (NRC 2007a). Finally, an NRC review of global change assessments identified clear, strategic framing of the assessment process as essential for conducting effective assessments (NRC 2007b).

Second, within the context of this top-down statement of need is our own experience speaking with resource managers about the impacts of climate change on water and watershed systems. For example, in March 2007, the U.S. EPA sponsored a workshop addressing the effects of climate change on biological indicators (USEPA 2007). During the workshop, managers frequently raised questions concerning computer models of the climate system, our ability (or lack thereof) to predict the future using these models, and how to take the first steps toward incorporating climate change into their work. Some examples include:

- “I know that climate change is something I need to pay attention to, but how do I sort through the information and data that are out there to identify what’s most relevant to me?”
- “What kind of predictions do the models make for my region/watershed?”
- “How ‘good’ are these models?”
- “Which is the ‘best’ model?”
- “Is it true that future model simulations are so uncertain that we cannot use their output for anything practical?”

In this article we attempt to articulate the issues that lie beneath these questions. We recognize three broadly defined audiences: water managers and decision makers; the climate science and modeling community; and the climate change impacts science and assessment community. Differences in the perspectives and needs of these groups can limit effective collaboration (Nilsson and others 2003; NOAA 2004). The framework we present is intended to help bridge these gaps to facilitate the assessment of climate change impacts.

We recognize the complexity of the assessment process and do not suggest that ours is the only viable approach. We also acknowledge that many of the ideas and approaches discussed in this article have been developed, adopted, and promoted by other institutions and authors (e.g., certain activities carried out as part of the Intergovernmental Panel on Climate Change [IPCC], EPA’s Regional Vulnerability Assessment program [REVA], and NOAA’s Regional Integrated Science and Assessment program [RISA], to give just a few examples; see also Jones 2001). The value of the framework lies largely in the synthesis of information and methodologies already familiar to certain user groups (e.g., risk assessment, downscaling climate data, scenario planning) into a common framework useful for addressing climate change impacts on water and watershed systems.

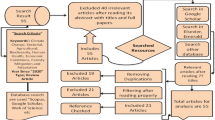

Assessment Framework

The following set of steps provides a framework for assessing climate change impacts on water and watershed systems (Fig. 1). Although presented as a linear sequence of steps, in practice there is considerable flexibility in how the steps can be applied. For example, many assessments involve a priori assumptions about the availability of data, modeling capabilities, and resources that are not ultimately met. Assessment endpoints may also be selected that, upon investigation, cannot be adequately addressed. Finally, in Step 7, we emphasize the need to evaluate whether the information generated from sensitivity and scenario analyses fulfills the goals of the assessment. In each case it will be necessary to loop back to earlier steps, make necessary changes, and cycle through the process until the desired goals are achieved.

The flexibility inherent in the framework also provides a capability for cycling through the process to first screen for potential impacts followed by more focused and detailed analyses of priority concerns. For example, when first confronted with the issue of climate change, managers may want to first conduct relatively simple, screening-level assessments to determine the potential scope and magnitude of the problem, particularly when dealing with limited resources. Based on these results, managers may decide that more detailed studies are not justified. Steps 1 and 2 alone may often provide sufficient guidance for certain kinds of decisions. Conversely, results may suggest that more detailed studies focusing on specific climatic drivers, system endpoints, or other questions are necessary. In this way, the screening studies can be used to identify and prioritize assessment endpoints, drivers, or scenarios presenting the greatest risk of harm and warranting further study.
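One simple way to organize such a screening pass, offered here only as an illustrative sketch, is a likelihood-by-consequence risk matrix. The 1 to 5 ordinal scales, the multiplicative score, the threshold, and the example endpoints and drivers below are all assumptions for illustration, not part of the framework itself.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ScreeningResult:
    """One endpoint/driver pair scored in a screening-level assessment (hypothetical scales)."""
    endpoint: str
    driver: str
    likelihood: int   # assumed ordinal scale: 1 (unlikely) to 5 (very likely)
    consequence: int  # assumed ordinal scale: 1 (minor) to 5 (severe)

    @property
    def score(self) -> int:
        # Risk-matrix style score; the product form is an illustrative choice.
        return self.likelihood * self.consequence


def prioritize(results: List[ScreeningResult], threshold: int) -> List[ScreeningResult]:
    """Keep pairs at or above the threshold, highest score first, for detailed study."""
    selected = [r for r in results if r.score >= threshold]
    return sorted(selected, key=lambda r: r.score, reverse=True)


if __name__ == "__main__":
    screened = [
        ScreeningResult("dissolved oxygen", "air temperature", 4, 4),
        ScreeningResult("sediment load", "precipitation intensity", 3, 5),
        ScreeningResult("minimum flow", "evapotranspiration", 2, 3),
    ]
    for r in prioritize(screened, threshold=12):
        print(f"{r.endpoint} <- {r.driver}: score {r.score}")
```

In a real assessment the scores would come out of the collaborative dialogue described in Step 1 rather than from fixed numbers; the pairs that survive the threshold are the candidates for the more detailed analyses in later steps.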

Step 1: Define the Decision Context

Much has been written on the elements of effective, assessment-based decision support for environmental problems (e.g., NRC 2007b; IPCC-TGICA 2007; Purkey and others 2007; Pyke and Pulwarty 2006; Cash and Moser 2000; Pyke and others 2007). The goal is the production of knowledge that is “credible,” “salient,” and “legitimate” (Cash and others 2006). To meet this goal, widespread agreement exists on the need for collaboration and dialogue among scientists, managers, and decision makers (NRC 1996; Jones 2001). However, in practice, assessments often function via the “loading dock” approach (Cash and others 2006), whereby decision support information is developed by scientists and handed off in a one-way exchange to potential users, e.g., to other scientists interested in applying information about climate change to assess impacts, or to resource managers and decision makers. Assessments produced in this way are less likely to be effective in a decision-making context, as their outputs will not necessarily match the needs of managers and decision makers (Pyke and others 2007; NRC 1996).

Herrick and Pendleton (2000) and Jones (2001) have emphasized the need to determine the policy-relevant parameters of a problem in advance, before choosing and analyzing models and data. This need is also fundamental to ERA in the planning and problem formulation phases (USEPA 1998, 2008).

An essential first step in the assessment process is thus to define assessment goals in the context of specific management, policy, or other decisions to be informed by the science (Glicken 2000). In practical terms, establishing the decision context provides four pieces of information that are essential for moving forward (USEPA 1998):

- A process for identifying partners and building an interdisciplinary collaboration (i.e., the assessment team)
- Management goals and endpoints of concern
- Appropriate time horizons of the monitoring, management, and planning processes surrounding these endpoints
- Risk preferences and likely tradeoffs of alternative management options

A process is required for identifying partners and building and sustaining an interactive dialogue involving the producers (e.g., scientists) and users of information (e.g., managers and decision makers) (Pyke and others 2007; NRC 1996; van der Meijden and others 2003; Wears and Berg 2005). In a fundamental sense, this process, and the joint production of relevant knowledge that it enables, is the assessment. Collaborations can occur and function in different ways depending on the specific institutions, individuals, and tasks involved. A detailed discussion of developing and maintaining effective collaborations is beyond the scope of this article, but for more information see, for example, USEPA (2003).

Having established a collaboration, the process of defining assessment goals might begin with an examination of the system as it is currently managed without explicit consideration of long-term climate change (CCSP 2008b; Altalo and others 2003a, b). Management goals and relevant decisions influencing management goals are clearly articulated at this point. Endpoints such as a minimum acceptable flow rate, dissolved oxygen level, or the presence of a particular indicator organism are identified, along with the timescales at which monitoring or planning decisions are made. Understanding the economic costs or other ramifications of potential outcomes is also crucial, as the tolerance for scientific uncertainty decreases as the severity of the possible consequences increases. For example, a decision whether or not to carry an umbrella when there is a 30% chance of rain will differ from a decision to evacuate a city in the face of a 30% chance of hurricane landfall.
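The umbrella and evacuation contrast can be made concrete with the classic cost-loss decision model. This model is not part of the framework described here; it is a standard textbook device, used below as a hedged illustration: take protective action when the probability of the event times the loss avoided exceeds the cost of acting. All costs and losses in the example are invented, unitless numbers.

```python
def should_protect(p_event: float, cost_of_action: float, loss_if_unprotected: float) -> bool:
    """Cost-loss rule: act when the expected avoided loss exceeds the cost of acting."""
    if not 0.0 <= p_event <= 1.0:
        raise ValueError("p_event must be a probability between 0 and 1")
    return p_event * loss_if_unprotected > cost_of_action


def decision_threshold(cost_of_action: float, loss_if_unprotected: float) -> float:
    """Probability above which acting is worthwhile (the cost/loss ratio)."""
    return cost_of_action / loss_if_unprotected


if __name__ == "__main__":
    p = 0.30  # the same 30% chance in both cases
    # Hypothetical: an umbrella is cheap to carry, and getting wet is only a mild loss.
    print("umbrella:", should_protect(p, cost_of_action=1.0, loss_if_unprotected=2.0))
    # Hypothetical: evacuating is costly, but an unprotected landfall is far more costly.
    print("evacuate:", should_protect(p, cost_of_action=100.0, loss_if_unprotected=1000.0))
```

With these made-up numbers the action threshold is 0.5 for the umbrella and 0.1 for evacuation, so the same 30% forecast leads to opposite decisions. The larger the potential loss relative to the cost of acting, the lower the probability at which action becomes worthwhile.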

The goal of this dialogue is first to ascertain from managers what decisions are most relevant for responding to climate change. Scientists must then articulate where the science is strongest and able to inform these decisions. It is particularly important at this stage that scientists know among themselves what information they can provide (e.g., how different models can be linked), and that both scientists and managers work together to understand each other’s needs and capabilities (Nilsson and others 2003). As will be discussed further under Step 6, there is a range of questions that can be posed to any assessment: from questions about general understanding aimed at improving our basic knowledge of how the system works, to more focused questions designed to address in detail specific management concerns. The type and level of decision support desired, the level of agreement on tradeoffs implied by decisions being considered, and the maturity of the current body of scientific knowledge about a given topic will determine from where in this spectrum the assessment questions are drawn and the degree to which dialogue is necessary throughout the assessment process (Chess and others 1998).

Finally, it should be recognized that scientific information is not always a major input to policy decisions (Policansky 1998). In this article, we implicitly limit our discussion to situations in which science can make a contribution to improved decision making. In addition, the scope of many assessment activities may be limited by practical constraints such as available funding or project timing. While we recognize these constraints, here we focus on technical factors affecting the assessment process.

Step 2: Develop a Conceptual Model Linking Drivers to Endpoints

A conceptual model linking climate drivers and assessment endpoints is a useful heuristic tool that helps to identify the major processes that must be captured to adequately assess impacts. Conceptual model development is a critical element of the problem formulation phase of ERA (e.g., see Suter 1999; http://cfpub.epa.gov/caddis/). Here we use the term conceptual model in the same way as it is used in ERA: a visual representation of predicted relationships between physical or ecological entities and the stressors to which they may be exposed (USEPA 1998). The information provided by a conceptual model can be helpful in at least five ways:

First, a conceptual model can be used to identify the key climate variables that are drivers of the system, e.g., temperature, precipitation, sunlight, etc. This guides the search for appropriate data sources, the subject of Step 3.

Second, the conceptual model highlights the relevant spatial and temporal scales of interaction between drivers and endpoints. This is critical for ensuring that sensitivity studies and scenarios developed for the assessment capture the most important dependencies in the system. For example, considering precipitation, climate change could lead to changes in seasonal or annual average values as well as changes in the frequency or characteristics of individual events and extremes (IPCC 2007; Groisman and others 2005). Often, available datasets will need to be manipulated further to be applied at the fine scales characteristic of local impacts problems. Such “downscaling” is discussed under Step 4.

Third, the conceptual model assists in the selection of physical models to be linked together to capture the interplay between climate drivers and management endpoints. This will be discussed further under Step 5.

Fourth, a conceptual model can be used to anticipate the major sensitivities in the system, guiding the choice of analyses to determine the most important responses of the endpoints to changes in the drivers, as well as to prioritize the major and minor sources of uncertainty. This is discussed under Step 6.

Finally, development of a conceptual model allows the assessment goals to be expressed as hypotheses to be tested and specific research questions to be answered as part of the assessment (Lookingbill and others 2007). This allows a scientifically rigorous interpretation of the assessment results.
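As a minimal sketch, a conceptual model can also be encoded as a directed graph running from climate drivers through intermediate processes to endpoints, which makes the first use above (identifying which drivers matter for a given endpoint) mechanical. The node names and links below are hypothetical and were chosen only for illustration.

```python
from typing import Dict, List, Optional

# Hypothetical conceptual model: each edge points from a cause to an effect.
CONCEPTUAL_MODEL: Dict[str, List[str]] = {
    "air temperature": ["water temperature", "evapotranspiration"],
    "precipitation intensity": ["runoff"],
    "evapotranspiration": ["streamflow"],
    "runoff": ["streamflow", "sediment load"],
    "water temperature": ["dissolved oxygen"],
    "streamflow": ["dissolved oxygen"],
}


def pathways(graph: Dict[str, List[str]], start: str, target: str,
             path: Optional[List[str]] = None) -> List[List[str]]:
    """Depth-first enumeration of every causal chain from start to target."""
    path = (path or []) + [start]
    if start == target:
        return [path]
    found: List[List[str]] = []
    for nxt in graph.get(start, []):
        if nxt not in path:  # skip nodes already on this chain to avoid cycles
            found.extend(pathways(graph, nxt, target, path))
    return found


def drivers_of(graph: Dict[str, List[str]], endpoint: str, drivers: List[str]) -> List[str]:
    """Return the candidate drivers linked to the endpoint by at least one pathway."""
    return [d for d in drivers if pathways(graph, d, endpoint)]


if __name__ == "__main__":
    for chain in pathways(CONCEPTUAL_MODEL, "air temperature", "dissolved oxygen"):
        print(" -> ".join(chain))
    print(drivers_of(CONCEPTUAL_MODEL, "sediment load",
                     ["air temperature", "precipitation intensity"]))
```

Each enumerated chain is also a candidate hypothesis in the sense of the fifth use above, and the intermediate nodes indicate which physical models (Step 5) would need to be linked to represent it.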

The level of detail in a conceptual model will reflect what is required given the decision context, and what is possible given the scientific understanding of the system. In some cases, limitations in knowledge, data, or modeling tools, or the ability to link models from different disciplines, may make it impossible to meet the decision criteria. In these cases it may be necessary to return to Step 1 and revisit elements of the decision context in light of what is likely achievable with current capabilities. In turn, the conceptual model will evolve over time as new scientific information becomes available.

Step 3: Assess Available Data on Climate Drivers to Determine What is Known About Climate Change

Climate change is regionally variable. Different sources of information about climate change may also be available for different locations. The purpose of Step 3 is to determine what data are available on climate variables identified as drivers of change in the conceptual model, how such data can be acquired, and whether they are adequate for achieving the assessment goals.

Information about potential future climate change can be derived from a wide variety of sources (IPCC-TGICA 2007). These include historical weather observations, which provide a wealth of information on climate variability as well as on trends that may be extrapolated into the future. Paleoclimate data based on ice cores, tree rings, lake sediments, and other proxy sources can provide similar information over longer periods of record, albeit often with greater uncertainties. The emphasis in this article, however, is on information from climate modeling experiments designed to simulate the future effects of human modifications of the climate system.

Climate models encapsulate current levels of scientific understanding, including the complexities of feedbacks and threshold effects that may emerge when the different sub-systems (e.g., atmosphere, oceans, land, etc.) are linked together (IPCC 2007). They provide detailed snapshots of possible climate futures under different assumptions, including information on which variables might be most affected, the timing and range of changes in particular variables, clues to more subtle changes (e.g., how the intensity of precipitation or the relative magnitude of changes in daily maximum versus minimum temperatures might shift), and the specific physical processes and pathways whereby changes may occur. Simulations using climate models are also internally consistent: i.e., temperature, humidity, precipitation, and winds will all vary together in a realistic way (in the limit of the accuracy of the model equations and structure) within a given simulation. There are, however, several technical and conceptual challenges associated with the use of climate models to support impact assessments.

Generally speaking, the explosion of computing power and the proliferation of digital environmental datasets in recent years have raised the expectations of decision makers that decisions across a variety of integrated earth systems problems can be informed by explicit predictions (Sarewitz and others 2000). With climate modeling in particular, prediction seems like a reality because of historical associations (climate models grew out of the models originally developed for short-term weather forecasting) combined with the level of detail, seeming realism, and detailed graphical presentation of model output. These numerical experiments are cognitively compelling: they seem like “truth.”

Current climate models, however, have not yet been demonstrated to provide precise, probabilistic predictions of future climate changes at the regional spatial scales needed by water managers (the attempt to develop probabilistic projections is, however, an active area of research: e.g., see http://www.ukcip.org.uk/). There are a number of characteristics that make the climate change problem a poor candidate for effective prediction-supported decision making (Herrick and Pendleton 2000). For example, there are large, and in some cases irreducible, uncertainties associated with long-term climate predictions. These uncertainties are particularly pronounced when the focus is on the diffuse, regional impacts that are of most interest to decision makers. Uncertainties arise from various sources: e.g., our incomplete understanding of important natural climate forcings, like volcanic or solar changes; the inherently chaotic nature of the atmospheric and oceanic fluid dynamical systems, reflected in internal model variability and hard-to-predict nonlinear behaviors; model configuration differences that arise due to computational constraints on model resolution, numerical approximations, and imperfect understanding of key climate system processes or imperfect observations of key climate system parameters; and assumptions about changes in anthropogenic emissions of greenhouse gases and aerosols, which are contingent on difficult-to-model trends in demographics, economic growth, and technological development. The blending of the physical and social sciences in the climate change problem makes accurate prediction particularly problematic. This is illustrated by the poor performance of forecasts of present-day energy consumption made over the preceding few decades (Craig and others 2002).
In addition, the time frames associated with climate prediction make it very difficult to continuously compare predictions with observations of what actually happened, a crucial factor in the improvement of short-term weather forecasts over time (Hooke and Pielke 2000). These uncertainties in climate prediction propagate into the assessment of specific impacts, often through subsequent, linked models, potentially leading to extremely broad ranges of possible outcomes (Jones 2001).
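The widening of outcome ranges through linked models can be illustrated with a small Monte Carlo sketch. The three-stage chain (precipitation change, runoff response, sediment response), the power-law forms, and every parameter range below are hypothetical; the point is only that uncertainty introduced at each stage compounds.

```python
import random
from typing import Dict, List


def propagate(n_samples: int = 10_000, seed: int = 42) -> Dict[str, List[float]]:
    """Sample uncertain inputs and parameters, pushing each sample through a linked chain.

    All values are change factors relative to a baseline (1.0 means no change).
    """
    rng = random.Random(seed)
    stages: Dict[str, List[float]] = {"precipitation": [], "runoff": [], "sediment": []}
    for _ in range(n_samples):
        p = rng.uniform(0.9, 1.2)        # hypothetical range of precipitation change
        r = p ** rng.uniform(1.5, 2.5)   # hypothetical, uncertain runoff elasticity
        s = r ** rng.uniform(1.2, 1.8)   # hypothetical, uncertain sediment-rating exponent
        stages["precipitation"].append(p)
        stages["runoff"].append(r)
        stages["sediment"].append(s)
    return stages


def spread(values: List[float]) -> float:
    """Width of the simulated range (max minus min)."""
    return max(values) - min(values)


if __name__ == "__main__":
    results = propagate()
    for name, values in results.items():
        print(f"{name:>13}: {min(values):.2f} to {max(values):.2f}")
```

Because every exponent in this toy chain exceeds 1, each stage pushes a sample further from 1.0, so the simulated range grows from stage to stage even though the input range is modest. Real linked models are not this simple, but the compounding behavior is the same concern raised above.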

The lack of a predictive capability, however, does not mean that climate models are not useful for impacts assessments. Climate models are excellent tools for providing insight into the range of (sometimes surprising) behaviors that result from interactions among the different linked components of complex systems. In the context of water management, this improved understanding of system behavior and system sensitivity to climate is valuable input for assessing and managing the risk of future harm.

Under a risk management paradigm, the goal of resource management is not to develop an optimal solution for a single, most-likely future, but instead to manage risk robustly over a wide range of plausible futures while avoiding unintended consequences (Jones 2001; Peterson and others 2003). If used appropriately, information from climate models can contribute to this strategy, even at regional and local scales. For example, the level of agreement among outputs from multiple climate models can be a guide for estimating what aspects of climate change may be understood more robustly, versus what aspects might only be characterized by examination of the widest range of possible futures. Moreover, even incomplete information can be useful. For example, there is disagreement among leading global climate models over the precise regional distribution of increases or decreases in future annual-average precipitation (IPCC 2007). Most models do agree, however, that a warmer atmosphere will result in increased intensity of the precipitation events that do occur (information that is bolstered by our physical understanding of the thermodynamics of the climate system and historical trend data). This is critical information for evaluating the impacts on endpoints that are sensitive to precipitation intensity, such as flooding or sediment erosion.
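A minimal sketch of such an agreement check follows: for each variable it reports the fraction of ensemble members that agree on the direction of change. The six-member ensemble values and the 0.8 cut-off are invented for illustration and are not taken from IPCC (2007).

```python
from typing import Sequence


def sign_agreement(changes: Sequence[float]) -> float:
    """Fraction of models sharing the majority sign of change (zero changes count for neither)."""
    if not changes:
        raise ValueError("need at least one model")
    positive = sum(1 for c in changes if c > 0)
    negative = sum(1 for c in changes if c < 0)
    return max(positive, negative) / len(changes)


def is_robust(changes: Sequence[float], threshold: float = 0.8) -> bool:
    """Treat a projected direction of change as robust if agreement meets the (assumed) threshold."""
    return sign_agreement(changes) >= threshold


if __name__ == "__main__":
    # Hypothetical projected changes (%) from six models for one region.
    annual_precip = [3.0, -2.0, 1.0, -4.0, 0.5, -1.0]
    heavy_event_intensity = [6.0, 4.0, 9.0, 2.0, 5.0, -1.0]
    print("annual precipitation:", sign_agreement(annual_precip), is_robust(annual_precip))
    print("event intensity:", round(sign_agreement(heavy_event_intensity), 2),
          is_robust(heavy_event_intensity))
```

Sign agreement says nothing about magnitude, and agreement among models that share structural assumptions is not proof of correctness; it is one rough guide to which projected changes can be treated as more robust and which call for examining a wide range of futures.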

Finally, it should be noted that even without considering long-term climate change, many problems in water and watershed management are already characterized by large, irreducible uncertainties and lack of control over the primary drivers of change (e.g., changes in land use and landcover; hereafter referred to as land use), but managers are still confronted by the need to make decisions.

Step 4: Downscale Climate Data to Required Spatial and Temporal Scales

Though computing power continues to rise, current limits still make it extremely difficult to run climate models at the resolution needed to directly study regional and local scale impacts. Global-scale climate models, to date, typically operate using horizontal grid meshes on the order of 100–500 km (IPCC 2007). This is far coarser than the scale of a stream reach or small watershed, and cannot be used to accurately capture fine-scale processes, specific metrics, and higher-order statistics (e.g., the diurnal cycle or the timescales of individual storm events) that may be needed to understand impacts on particular management targets. This is perceived to be one of the most significant barriers to incorporating information on climate change into water and watershed management decision-making. “Downscaling” is a class of methods for overcoming this barrier.

Downscaling refers generally to the manipulation of a coarser resolution dataset to create data with finer resolution. Approaches for downscaling fall into two basic categories, often referred to as statistical and dynamical downscaling. The Intergovernmental Panel on Climate Change (IPCC) has produced guidance documents outlining the methods and best practices for both statistical (Wilby and others 2004) and dynamical (Mearns and others 2003) downscaling. The particular techniques chosen depend on the needs of the problem and the adequacy of pre-existing datasets for meeting those needs, as defined in Steps 1, 2, and 3.

The principle behind statistical downscaling is that both the large-scale climate state and local physiographic features (e.g., topography, land use, the presence of nearby lakes or oceans) act together to determine local climate (von Storch 1995, 1999). In statistical downscaling, empirical relationships between large-scale and local-scale averages of variables like temperature and precipitation are developed based on historical observations via a variety of methods (Wilby and others 2004). The major advantage of statistical downscaling is the relative computational efficiency compared to dynamical downscaling. For example, the relationships thus developed can be applied directly to output from a global climate model simulation. This type of approach is beginning to be widely used in a number of impacts assessment areas: for example, Wilby and Harris (2006) applied statistical downscaling in an investigation of the probability of low flows in the River Thames in the 2080s. It is important to realize, though, that a key assumption of statistical downscaling is that the statistical relationships between coarse- and fine-resolution variables created using historical data will also hold in the future under a changing climate.
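A deliberately simple instance of the regression-based family of statistical downscaling methods is a linear fit between a grid-cell average and a local station over a historical period, then applied to climate model output. The variable choice and all numbers in the usage example below are hypothetical, and the sketch inherits the stationarity assumption just noted.

```python
from statistics import mean
from typing import List, Sequence, Tuple


def fit_linear(coarse: Sequence[float], local: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of local = intercept + slope * coarse."""
    if len(coarse) != len(local) or len(coarse) < 2:
        raise ValueError("need paired series with at least two values")
    mx, my = mean(coarse), mean(local)
    sxx = sum((x - mx) ** 2 for x in coarse)
    if sxx == 0:
        raise ValueError("coarse-scale values must vary")
    sxy = sum((x - mx) * (y - my) for x, y in zip(coarse, local))
    slope = sxy / sxx
    return slope, my - slope * mx


def downscale(coarse_future: Sequence[float], slope: float, intercept: float) -> List[float]:
    """Apply the historical relationship to future coarse-scale values.

    Assumes the relationship stays valid under a changed climate (stationarity).
    """
    return [intercept + slope * x for x in coarse_future]


if __name__ == "__main__":
    # Hypothetical July mean temperatures (deg C): grid-cell average vs. a local station.
    grid_hist = [20.1, 21.3, 19.8, 22.0, 20.7]
    station_hist = [22.4, 23.9, 22.0, 24.8, 23.1]
    slope, intercept = fit_linear(grid_hist, station_hist)
    # Hypothetical future grid-cell values from a climate model simulation.
    print(downscale([22.5, 23.1], slope, intercept))
```

Operational methods typically use several predictors, treat precipitation differently from temperature, and validate on withheld years; the sketch only shows the fit-then-apply structure.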

Dynamical downscaling, instead of applying statistical relationships, uses output from global climate models to establish boundary conditions for finer resolution model simulations of much more limited areas of the globe (Mearns and others 2003). For example, the potential impacts of global climate change on a specific part of the world can be studied by running a so-called “regional climate model” at a much higher spatial and temporal resolution over just that domain using coarser resolution output from a global climate model at the domain boundaries. The major advantages of dynamical downscaling are the same as those associated with using a global climate model—namely, a detailed, three-dimensional picture of simulated changes across many variables, internal consistency between different variables based on physical principles (addressing the primary shortcoming associated with statistical downscaling), and the ability to investigate the specific physical processes and system dynamics that led to the simulated changes—coupled with the much higher resolution (typically on the order of 10–50 km horizontal grid mesh) that is computationally feasible given the limited simulation domain. This higher resolution allows for the development of fine-scale features associated with local topography/geography and land use, frontal systems, and convective rainstorms, often significantly improving on regional biases in the coarser-scale global models (e.g., Liang and others 2006). A recent application of dynamical downscaling is an investigation of the possible decline in western U.S. snowpack in warmer future decades, with associated increases in winter flooding and decreases in summer water availability (Leung and others 2004).
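A back-of-the-envelope calculation shows why the grid spacings quoted in this step matter. The sketch treats grid cells as squares of the stated spacing (real model grids are usually defined in degrees, so this is an approximation) and uses a hypothetical 1,000 km² watershed.

```python
def cells_spanned(watershed_km2: float, grid_spacing_km: float) -> float:
    """Approximate number of square grid cells whose combined area equals the watershed area."""
    if grid_spacing_km <= 0:
        raise ValueError("grid spacing must be positive")
    return watershed_km2 / grid_spacing_km ** 2


if __name__ == "__main__":
    watershed = 1_000.0  # hypothetical watershed area in km^2
    for label, spacing in [("global, coarse", 500), ("global, fine", 100),
                           ("regional, coarse", 50), ("regional, fine", 10)]:
        print(f"{label:>16} ({spacing} km): {cells_spanned(watershed, spacing):.3f} cells")
```

Even at 100 km the hypothetical watershed covers only a tenth of one global-model cell, whereas at 10 km it spans about ten regional-model cells, so some spatial variation within the basin can at least be represented.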

In either case, statistical or dynamical downscaling is the combination of information obtained at a coarser scale with some other source of information about the system, whether a statistical relationship or a full computer model. The resulting downscaled data can provide the necessary resolution to support a wide range of assessment activities.

Step 5: Assess and Select Available Tools to Simulate Biophysical Processes

The conceptual model developed in Step 2 describes the relevant causal pathways and biophysical processes linking climate drivers to assessment endpoints. The purpose of Step 5 can be thought of as being to build a mirror of the conceptual model out of available hydrologic, water quality, and other models and data. This provides a general capability for conducting experiments to understand how perturbations in climate drivers will impact particular management targets or endpoints.

It is important to recognize that water and watershed managers may already have a set of data, models, and tools that they use to assess the effects of stressors, including climatic variability, and to inform management decisions. It should first be determined whether these existing tools are also adequate for assessing the impacts of long-term climate change on the desired assessment endpoints. In particular, it should be determined how well existing tools and models capture the key biophysical processes in the conceptual model linking climate drivers to endpoints, whether they can already incorporate information about weather and climate, and, if so, whether they can generate information appropriate to the decision context. If the tools and models currently used by managers do not meet these requirements, it will be necessary to modify them or to develop new tools, models, and analysis techniques for assessing impacts.

Different options exist for simulating the biophysical processes linking climate drivers and assessment endpoints. In certain cases, empirical models based on the past observed response of the system to climatic variability might be a useful guide to projecting future impacts, particularly for assessing changes that do not exceed the range of historical variability. The principal benefits of these types of models are their simplicity and grounding in observed data. Their application is limited, however, by the assumption that system behavior in the future under changing climate conditions will be the same as in the past. Empirical models can also be limited in their ability to represent a range of different drivers and endpoints.
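As an illustration of an empirical model and its main limitation, the following sketch assumes a hypothetical power-law relationship between annual precipitation and annual constituent load, fitted to past observations, and flags any input that falls outside the observed range. The functional form, names, and data are illustrative assumptions, not a recommended model.

```python
"""Empirical response model with an extrapolation guard (sketch).

Hypothetical example: annual load L is related to annual precipitation P by a
power law L = k * P**e, fitted in log space to historical observations. The
exponent e is an elasticity (approximately the percent change in L per percent
change in P). Inputs outside the calibration range are flagged, because there
the assumption that the future behaves like the past is least defensible.
"""
import math
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass
class PowerLawModel:
    log_k: float
    elasticity: float
    p_min: float  # driest year in the calibration record
    p_max: float  # wettest year in the calibration record

    def predict(self, p: float) -> Tuple[float, bool]:
        """Return (predicted load, whether p lies inside the observed range)."""
        inside = self.p_min <= p <= self.p_max
        return math.exp(self.log_k + self.elasticity * math.log(p)), inside


def fit_power_law(precip: Sequence[float], load: Sequence[float]) -> PowerLawModel:
    """Ordinary least squares on ln(load) = ln(k) + e * ln(precip)."""
    if min(precip) <= 0 or min(load) <= 0:
        raise ValueError("power-law fit needs strictly positive values")
    xs = [math.log(p) for p in precip]
    ys = [math.log(v) for v in load]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    e = sxy / sxx
    return PowerLawModel(my - e * mx, e, min(precip), max(precip))


if __name__ == "__main__":
    # Hypothetical record in which load scales with precipitation squared.
    precip = [800.0, 900.0, 1000.0, 1100.0, 1200.0]
    load = [2.0 * (p / 1000.0) ** 2 for p in precip]
    model = fit_power_law(precip, load)
    print(round(model.elasticity, 6))   # 2.0
    print(model.predict(1400.0)[1])     # False: outside the historical range
```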

Mechanistic models that numerically describe specific hydrologic and biogeochemical processes governing watershed behavior provide an alternative to empirical models. Mechanistic models have been developed for applications including hydrology and water quality, soil erosion, and water resources planning and management. The principal benefit of mechanistic models is their ability to simulate, with varying degrees of detail, the complex, interdependent set of biophysical processes linking climate drivers to different system endpoints. An example is the HSPF (Hydrological Simulation Program—FORTRAN) watershed model used by the U.S. EPA and others to assess the impacts of land-use change and management activities on stream water quality (Bicknell and others 1996). The complexity of mechanistic models can also be a weakness, however: the difficulty of acquiring the required input data, calibrating a large number of parameters, and validating these models may be prohibitive for many potential users. In many cases, the representation of water management practices will also be an important component of simulations.
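The difference between empirical and mechanistic approaches shows up even in a toy process model. The sketch below is a hypothetical single-store daily water balance, not HSPF: precipitation fills a storage, a temperature-dependent evapotranspiration term (an assumed linear form) empties it, and streamflow arises from saturation excess and slow drainage. Because warming acts through an explicit process, the model can respond to combinations of drivers that never occurred in the historical record. All parameter values are assumptions.

```python
"""Toy mechanistic model: a single-store daily water balance (sketch).

This is NOT HSPF. It is a hypothetical, highly simplified illustration of how a
process-based model routes climate drivers (precipitation and air temperature)
through an explicit state variable (storage) to an endpoint (streamflow).
All parameter values, and the linear temperature-ET relation, are assumptions.
"""
from typing import List, Sequence


def simulate_bucket(precip_mm: Sequence[float], temp_c: Sequence[float],
                    capacity_mm: float = 150.0, drain_frac: float = 0.05,
                    et_mm_per_deg: float = 0.15,
                    storage_mm: float = 75.0) -> List[float]:
    """Return daily streamflow (mm/day) from a one-store water balance."""
    flows = []
    for p, t in zip(precip_mm, temp_c):
        storage_mm += p
        # Evapotranspiration rises linearly with temperature above 0 deg C
        # (assumption) but cannot exceed the water currently in storage.
        et = min(max(t, 0.0) * et_mm_per_deg, storage_mm)
        storage_mm -= et
        # Saturation excess: water above capacity leaves as quick runoff.
        quick = max(storage_mm - capacity_mm, 0.0)
        storage_mm -= quick
        # Slow drainage (baseflow) as a fixed fraction of remaining storage.
        base = drain_frac * storage_mm
        storage_mm -= base
        flows.append(quick + base)
    return flows


if __name__ == "__main__":
    rain = [0.0, 20.0, 5.0, 0.0, 40.0, 0.0, 0.0]   # hypothetical daily rainfall
    baseline = simulate_bucket(rain, [10.0] * 7)
    warmer = simulate_bucket(rain, [12.0] * 7)      # +2 deg C, same rainfall
    # Higher ET in the warmer run leaves less water to become streamflow.
    print(sum(baseline) > sum(warmer))              # True
```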

Step 6: Conduct Sensitivity Analysis and Scenario Planning

As discussed previously, we are currently not able to predict long-term climate change at local and regional scales with confidence. An underlying theme of this article is that the problem should therefore be approached from the perspective of risk management. Within a risk management paradigm, the goal is not to develop an optimal solution for a single, most-likely future, but instead to identify vulnerabilities and manage risk robustly over a wide range of potential future conditions (USEPA 1998; Peterson and others 2003).

Assessments can be designed to answer a range of questions covering the spectrum from improving basic system knowledge to evaluating a specific management option. Here we discuss examples of analysis approaches that might be used to address this range of questions. We sort these approaches loosely into two categories, sensitivity analysis and scenario planning, but there is significant overlap between them. The specific information required to meet assessment goals will vary depending on the decision context.

First, we define “sensitivity” and “scenario” for the purposes of this article. Sensitivity refers to the characteristics of the response of system endpoints to perturbations in the drivers (in this case, climate drivers). This perturbation-response behavior provides a foundation for risk assessment. A scenario is a plausible, though often simplified, description of how the future may develop, based on a coherent, internally consistent set of assumptions about driving forces and key relationships (IPCC-TGICA 2007; CCSP 2007). A scenario is not a forecast, because the likelihood of that particular future actually occurring is not calculated. Rather, each scenario is one alternative image of how the future could unfold. Climate change scenarios are typically developed in three ways: from plausible but arbitrary changes; from analog climate data from another place or from the past; or from the output of climate models (IPCC-TGICA 2007). To be most useful, scenarios developed for local- and regional-scale impacts assessment studies should be consistent with associated global-scale scenarios, physically plausible, sufficiently detailed to support the assessment of the given impact, representative of the range of possible future changes, and accessible to the target user (Smith and Hulme 1998). Scenarios can be used in sensitivity analysis, to support specific decision-focused planning activities, or in combinations of the two, since the approaches can overlap considerably.

Sensitivity Analysis

Analyses designed to evaluate the basic sensitivities in the system can vary in complexity depending on the goals of the assessment (Fig. 2). For example, examinations of the scientific literature or elicitations of expert judgment may be sufficient to address certain questions. An observed response to historical climate variability can also provide useful information about system sensitivity to climate, although this is limited to changes within the historical range of variability. In other cases, however, analyses of more detailed scenarios are required, e.g., to assess multi-stressor impacts or variability outside the range of observations.

Scenarios where climatic drivers, either individually or in physically realistic combination, are systematically changed by arbitrary amounts, often based on a qualitative interpretation of available information about climate change, are referred to as “synthetic scenarios” (IPCC-TGICA 2007). Sensitivity analyses based on synthetic scenarios are useful for systematically exploring how system endpoints respond to different assumptions about the type and magnitude of change, such as changes in a specific climatic variable (e.g., increased winter temperatures, decreased summer rainfall). The point is to explore the behavior of the system, paying attention to the following attributes:

- Significant responses of endpoints to changes in some particular drivers and not others;
- Asymmetrical responses of endpoints (e.g., with respect to impacts of changes in precipitation, large sensitivities to dry conditions but little response to wet conditions);
- Other nonlinear behaviors, such as a high “gain” in the response of endpoints to drivers in certain portions of the range;
- Thresholds above or below which particularly severe system impacts occur (e.g., the amount of climatic warming required to raise water temperatures in a stream to the point that a cold water fish species cannot reproduce and survive).
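These attributes can be screened for systematically once a synthetic sweep has been run. The sketch below assumes a single driver perturbed over a set of arbitrary steps and a hypothetical response function standing in for impacts-model runs; it computes an asymmetry ratio, finite-difference gains, and the smallest perturbation that crosses an assumed threshold.

```python
"""Screening a one-driver synthetic sweep for the behaviors listed above (sketch).

`toy_response` is hypothetical; in a real assessment each value would come from
an impacts-model run driven by one synthetic scenario.
"""
from typing import Callable, Dict, List, Optional, Sequence, Tuple


def sweep(response: Callable[[float], float],
          deltas: Sequence[float]) -> Dict[float, float]:
    """Evaluate the endpoint for each arbitrary perturbation of one driver."""
    return {d: response(d) for d in deltas}


def asymmetry(results: Dict[float, float], d: float) -> float:
    """|change| for a decrease of size d divided by |change| for an equal increase."""
    base = results[0.0]
    up = abs(results[d] - base)
    down = abs(results[-d] - base)
    return down / up if up else float("inf")


def gains(results: Dict[float, float]) -> List[Tuple[float, float]]:
    """(midpoint, slope) between neighbouring perturbations; large slopes mark high gain."""
    ds = sorted(results)
    return [((a + b) / 2, (results[b] - results[a]) / (b - a))
            for a, b in zip(ds, ds[1:])]


def first_exceedance(results: Dict[float, float], limit: float) -> Optional[float]:
    """Smallest-magnitude perturbation whose endpoint value exceeds `limit`."""
    for d in sorted(results, key=abs):
        if results[d] > limit:
            return d
    return None


def toy_response(dp: float) -> float:
    # Hypothetical endpoint (e.g., a stress index) that rises steeply under
    # drying (dp < 0) and barely responds to wetting.
    return 100.0 + (dp * dp if dp < 0 else 0.1 * dp)


if __name__ == "__main__":
    deltas = [-20.0, -15.0, -10.0, -5.0, 0.0, 5.0, 10.0, 15.0, 20.0]  # % precip change
    res = sweep(toy_response, deltas)
    print(asymmetry(res, 10.0))          # 100.0: far more sensitive to drying
    print(first_exceedance(res, 150.0))  # -10.0
    print(max(gains(res), key=lambda g: abs(g[1])))
```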

The main limitation of analyses based on this approach is that, because of their arbitrary character, synthetic scenarios do not necessarily reflect plausible future conditions. In addition, synthetic scenarios often must be simplified, and thus may not capture the full spectrum of types of change, seasonal and other temporal variability, or the effects of internally self-consistent co-variations among different variables. The results of sensitivity analyses based on synthetic scenarios may therefore be most useful to the science community interested in general questions about system behavior.

Sensitivity analyses using analog and model-based scenarios more directly reflect plausible future conditions, incorporate more physically based relationships between changes in multiple variables, and better capture complex patterns of change. Analog scenarios can be developed from historical data and previously observed sensitivity to weather and climate variability (IPCC-TGICA 2007). Similarly, analog scenarios for one location can be developed from observations in a different region. In addition, as discussed previously, scenarios based on climate models can be used to develop an understanding of the potential system response to a range of plausible future conditions.
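A minimal sketch of constructing temporal analogs, assuming annual mean temperature and precipitation are the only matching criteria and using an invented record: years whose anomalies fall close to a target change are selected. Because analogs can only be drawn from what has already occurred, a target well outside the historical range simply returns no years, which mirrors the limitation of historical analogs noted in this section.

```python
"""Selecting temporal analog years from a historical record (sketch).

Hypothetical approach: past years whose temperature and precipitation
anomalies resemble a target future change are treated as analogs. The record
and tolerances below are invented for illustration.
"""
from typing import Dict, List, Tuple


def analog_years(record: Dict[int, Tuple[float, float]],
                 target_dt_c: float, target_dp_pct: float,
                 tol_dt_c: float = 0.5, tol_dp_pct: float = 5.0) -> List[int]:
    """Return years whose anomalies fall within tolerance of the target change.

    record maps year -> (annual mean temperature in deg C, annual precip in mm);
    anomalies are taken relative to the mean of the whole record.
    """
    n = len(record)
    mean_t = sum(t for t, _ in record.values()) / n
    mean_p = sum(p for _, p in record.values()) / n
    hits = []
    for year, (t, p) in sorted(record.items()):
        dt = t - mean_t
        dp_pct = 100.0 * (p - mean_p) / mean_p
        if abs(dt - target_dt_c) <= tol_dt_c and abs(dp_pct - target_dp_pct) <= tol_dp_pct:
            hits.append(year)
    return hits


if __name__ == "__main__":
    record = {2001: (11.0, 1000.0), 2002: (12.0, 900.0),
              2003: (10.0, 1100.0), 2004: (11.0, 1000.0)}
    # Years roughly 1 deg C warmer and 10 percent drier than the record mean.
    print(analog_years(record, target_dt_c=1.0, target_dp_pct=-10.0))  # [2002]
```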

Sensitivity analyses using synthetic scenarios and those using analog and model-based scenarios centered on plausible futures are related. As discussed earlier in the article, the framework steps can be implemented in stages, with screening-level studies followed by more targeted, decision-specific assessments. The broad, general understanding of system behavior provided by sensitivity analyses of synthetic scenarios gives context to any set of futures selected for planning purposes. For example, knowing how the system responds to a wide range of changes helps in evaluating the implications of any specific future change, such as whether that change occurs close to a tipping point in the system. Results from this type of analysis tell managers what kinds of changes would need to occur to affect key management targets. Examples of how these different types of scenarios can be used are presented in the discussion of the case study in the second half of the article.

In the context of decision support, a limitation of sensitivity analysis is that outputs do not necessarily provide the information most needed by decision makers (e.g., probability of exceeding a threshold, level of intervention required to offset a negative outcome). Scenario planning is an alternative approach that explicitly addresses this issue (Means and others 2005; Shoemaker 1995).

Scenario Planning

Scenario planning involves the use of a limited set of contrasting scenarios to explore the uncertainty surrounding the future consequences of a decision (Duinker and Greig 2007; Peterson and others 2003; CCSP 2007). It combines an emphasis on evaluating multiple futures with a specific focus on presentation to, and use by, decision makers. As with sensitivity analysis, scenario planning is useful in situations such as climate impacts assessment, where there are large, potentially irreducible uncertainties about the future and where certain factors, even if known, may be only minimally controllable (IPCC-TGICA 2007; Peterson and others 2003; IPCC 2007; CCSP 2007). Managers can use information from a scenario planning analysis to manage risk proactively, implementing practices and strategies that make systems resilient to an appropriate range of plausible future conditions and events (Sarewitz and others 2000). Moreover, properly chosen scenarios have the potential to challenge the worldview of the decision maker and lead to consideration of diverse policy alternatives (Wright and Goodwin 2000).

Scenario planning can also be useful for data reduction (e.g., in some cases it may be adequate to know only the extreme high or low response) or when faced with resource limitations (e.g., comprehensive sensitivity studies can first be completed using simpler models and approaches, followed by more detailed assessments for a selected set of scenarios).
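For the data-reduction use, a minimal sketch: screening results, assumed here to come from a simpler model, are ranked, and only the scenarios producing the lowest, middle, and highest responses are carried forward to detailed simulation. The scenario names and values are hypothetical.

```python
"""Choosing a small set of bounding scenarios for detailed follow-up (sketch).

Hypothetical workflow: screening results from a simpler model are ranked, and
only the scenarios giving the lowest, middle, and highest endpoint responses
are carried forward to more detailed (and more expensive) simulations.
"""
from typing import Dict, List


def bounding_scenarios(results: Dict[str, float],
                       include_median: bool = True) -> List[str]:
    """Return names of the minimum, (optionally) median, and maximum responses."""
    if not results:
        return []
    ordered = sorted(results, key=results.get)
    picks = [ordered[0]]
    if include_median and len(ordered) > 2:
        picks.append(ordered[len(ordered) // 2])
    if ordered[-1] not in picks:
        picks.append(ordered[-1])
    return picks


if __name__ == "__main__":
    screening = {"S1": -5.0, "S2": 3.0, "S3": 8.0, "S4": 0.5, "S5": -1.0}  # % change
    print(bounding_scenarios(screening))  # ['S1', 'S4', 'S3']
```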

After conducting sensitivity analysis and scenario planning, it is necessary to evaluate the information generated to determine potential management responses, recognizing that the consequences of decisions are generally not known and that decisions are therefore made to manage risk. This requires meaningful communication between scientists and managers about the results of the analyses and their relevance to the decisions being considered (see Fig. 2). An important component of this communication is the characterization of the uncertainties associated with the analysis results. A detailed discussion of characterizing and communicating uncertainty is beyond the scope of this article; for more information see, for example, Moss and Schneider (2000) and CCSP (2008a).

Step 7: Risk Management (Adaptation)

Risk management is the application of various legal, institutional, structural or other actions to reduce exposure to risk or otherwise reduce the likelihood of future harm. In the context of climate change, adaptation is applied as a process to manage climate-related risks. Much has been written about the elements of effective adaptation (e.g., CCSP 2008b). What is important to note here, however, is that from a risk management perspective, the major criterion for judging the success of an assessment activity is the extent to which information generated can be used to inform risk management decisions. We began by emphasizing the dependence on decision context in Step 1, and have referred to it throughout the discussion of the framework.

In this step it is necessary to consider whether the information generated fulfills the goal of the assessment and is adequate to support decision making. For example, after completing an assessment it may be determined that the resulting uncertainties are too great to support certain kinds of decision-making. Alternatively, the assessment may show that endpoints are not sensitive to the plausible range of changes in climate, thus indicating a low risk to future management from climate change.

Ultimately, decision makers must choose a course of action given the best available information at the given time. If the assessment as originally conducted is inconclusive, the new information can be added to the existing knowledge of the system, and then the process can be started again. An inconclusive assessment may also reveal gaps in the conceptual model, available data, and analytical models, and identify flaws in our understanding of known processes. In either case, this information can be used to guide future research to improve the quality of assessments. Failure to assess the adequacy of information to support decision making is a hallmark of the often ineffective “loading dock” approach discussed earlier.

Finally, it should be noted that assembling the right participants and needed expertise to conduct an assessment is an important accomplishment that should be maintained even after assessment goals have been met. Climate change and its impacts will occur gradually over time. There is thus great value in having all members of the assessment team participate in evaluating the effectiveness of risk management decisions over time, either through active monitoring or other methods of judging outcomes. This type of information can provide not only validation of assessment assumptions and results (e.g., by confirming or eliminating certain scenarios from consideration) but also a basis for continued learning and the adaptive management of resources as the climate changes.

Example: Potential Impacts of Climate Change in the Monocacy River Watershed

This section describes a case study assessment of the potential impacts of climate change on water quality in a mid-Atlantic, U.S., watershed, the Monocacy River. The Monocacy River flows from south-central Pennsylvania to central Maryland and is a tributary of the Potomac River and Chesapeake Bay. The case study provides a specific, practical example of how the steps outlined above can be followed to guide an assessment activity. We do not suggest that the approach taken here is ideal in any absolute sense. Rather, it is a single example shaped by the decision context, opportunities, and constraints specific to this place and time. Other assessments based on the framework could be very different. The intended focus is the assessment process rather than the results; accordingly, only a limited set of results is presented. The following sections describe each step of the Monocacy assessment.

Decision Context

The Monocacy assessment was initiated and funded by the EPA ORD Global Change Research Program as part of a larger program to assess and manage the potential impacts of climate change on U.S. water quality. The project was intended to address the vulnerability to climate change of a priority region, the Chesapeake Bay watershed, and to illustrate the use of recently developed tools in EPA’s BASINS modeling system for assessing watershed sensitivity to changes in climate. The project was completed over a period of about one year, from fall 2006 to summer 2007.

The Chesapeake Bay is the largest estuary in the U.S., supporting a complex ecosystem and a variety of products and services of vital importance to the region.

During the latter half of the 20th century, human activities within the Bay watershed resulted in severe ecological impairment, principally due to nutrient pollution and associated reductions in dissolved oxygen and water clarity. To address the problem, a government partnership called the Chesapeake Bay Program (CBP) was established in 1983 among Maryland, Virginia, Pennsylvania, the District of Columbia, and the federal government with the goal of restoring water quality and living resources throughout the Bay and tributaries. Although progress has been made in many areas, ecological impairment is still a major problem throughout much of the Chesapeake Bay.

The Chesapeake Bay watershed is fortunate to have an active and dynamic research community associated with numerous academic institutions, government agencies, and non-governmental organizations. Prior to the assessment, an expert advisory group was formed consisting of members of the Chesapeake Bay Program, academic researchers knowledgeable about climate change in the Bay area, and EPA and other government agency staff. Candidate members of this group were identified by networking through contacts from a previous collaboration (EPA’s Consortium of Atlantic Regional Assessments [CARA] project), and by reviewing the literature and contacting individuals who had published studies related to climate change or the impacts of climate change on the Chesapeake Bay. This advisory group was engaged throughout the assessment process to help define the decision context, provide technical support, and help coordinate with related research activities.

The decision context for the Monocacy assessment reflects the activities and goals of the EPA Chesapeake Bay Program Office (CBPO). In 2000, the Chesapeake Bay Program partners pledged to restore Bay water quality by 2010. If water quality standards for dissolved oxygen, chlorophyll, and water clarity are not met by this time, a Total Maximum Daily Load (TMDL) will likely be required, including mandatory control actions. Excessive loading of nitrogen, phosphorus, and sediment has an important influence on Bay dissolved oxygen, chlorophyll, and water clarity. The CBPO is thus interested in developing a better understanding of how multiple factors, including climate change, could alter the current nutrient and sediment reduction targets required to meet water quality standards.

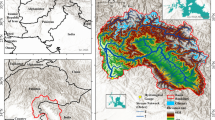

Watershed modeling at the scale of the entire Chesapeake Bay watershed (165,760 km²) was not feasible in this study. It was thus decided that the assessment should focus on a single subwatershed and be framed as a screening-level study to determine whether the potential implications of climate change in this subwatershed warrant consideration of potential impacts at the scale of the entire Bay watershed. The Monocacy River watershed was selected for study because of its size (1927 km²), mix of land uses, and the availability of historical streamflow data from a USGS streamgage located near the mouth of the river (see Fig. 3). Nitrogen, phosphorus, and sediment were selected as assessment endpoints because of their influence on Bay dissolved oxygen, chlorophyll, and water clarity, the endpoints that may be regulated if a TMDL is required. A relatively near-term planning horizon of 2030 was selected to be consistent with a previous CBP effort to assess the impacts of land use change on water quality in 2030. The impacts of future land use change were not, however, considered in this study. The specific goal of the Monocacy assessment was thus to determine a plausible range of changes in nitrogen, phosphorus, and sediment loads resulting from changes in climate by the year 2030.

Conceptual Model

A simplified conceptual model that illustrates the main process pathways linking climate and other relevant drivers to water quality endpoints is shown in Fig. 4. Changes in temperature and precipitation were considered the key climate drivers influencing water quality endpoints. More specifically, changes in the amount, seasonal variability, and intensity of precipitation events were identified as important to assessing nonpoint nutrient and sediment loading, along with seasonally variable changes in air temperature that influence evapotranspiration and other hydrologic processes. The conceptual model provides a benchmark against which the capabilities of assessment models can be compared. Note that land use change is included as a driver in the conceptual model, but was not considered in the Monocacy assessment. Land use change was included due to its important influence on nutrient and sediment loading to streams, and because understanding the relative influence of climate versus land use change on watersheds will be important for developing adaptive strategies.

Available Data on Climate Drivers

Having defined the assessment goals and endpoints (the decision context), and the key climate drivers influencing assessment endpoints (from the conceptual model), it was necessary to acquire data about future climate change in the Chesapeake Bay region to develop climate change scenarios. As discussed previously, it is not currently possible to predict with any degree of accuracy what future climate change will be at any particular location. It is possible, however, to identify a potential range of future changes by considering data and information from a variety of sources, models, and/or modeling assumptions. Accordingly, we wished to acquire data and information sufficient to estimate the plausible types and ranges of change for the Chesapeake Bay region by the year 2030.

A literature review was first conducted which provided general information about the types and ranges of potential changes, albeit in the context of other specific studies (e.g., changes estimated for the year 2100 rather than 2030). This effort was greatly facilitated by working with the expert advisory group to identify previous studies and sources of information. A particular challenge was finding climate change data for the relatively near-term planning horizon of 2030, which is earlier than the horizon considered in most studies. Details such as anticipated seasonal variability in future changes were also difficult to acquire from the published literature.

We then looked to available sources of information about climate change based on climate modeling experiments. Climate modeling data are available from a variety of sources (e.g., the Intergovernmental Panel on Climate Change [IPCC] Data Distribution Center). In this study we used climate modeling data available through Penn State University’s Consortium of Atlantic Regional Assessments project (CARA) web page (http://www.cara.psu.edu/).

The CARA dataset is derived from the same global climate model (GCM) experiments archived by the IPCC but is distributed in an easily accessible summary form. Simulated future changes in temperature and precipitation totals for the mid-Atlantic region were acquired for the period 2010–2039 from climate modeling experiments using 7 GCMs (from the IPCC Third Assessment Report; IPCC 2001) and two IPCC future greenhouse gas emission storylines, A2 (moderate-high emissions) and B2 (moderate-low emissions; IPCC 2001). CARA data are expressed as changes relative to the base period 1971–2000, averaged for each season of the year, and interpolated spatially from the original GCM grid resolution to 1/8 degree resolution. This information was used to develop a set of scenarios reflecting the potential range of future changes in temperature and precipitation in the Monocacy watershed by 2030.

Downscale Data on Climate Drivers

The next three steps outlined in the assessment framework were completed using tools and models within EPA’s BASINS modeling system. The climate assessment tool (CAT) within BASINS provides a set of capabilities for modifying historical weather data to create user-specified climate change scenarios for input to the Hydrological Simulation Program—FORTRAN (HSPF) watershed model (Johnson and Kittle 2006). In this study, BASINS CAT was used to create climate change scenarios for the Monocacy watershed by modifying 16 years of hourly historical temperature and precipitation data, the period from 1984 to 2000, from 7 NCDC weather stations located within or adjacent to the Monocacy watershed. In each case, the base historical time series were modified to reflect a potential future change scenario from the CARA dataset. This approach provided a simple but effective form of spatial and temporal downscaling: data of coarse spatial resolution (e.g., the 1/8 degree gridded data from CARA) were interpolated to the locations of individual NCDC weather stations, and data of coarse temporal resolution (seasonal average changes from the CARA dataset) were layered over higher-temporal-resolution data from the historical record (hourly data at each weather station). Creating scenarios in this way, by modifying historical records from multiple weather stations, allows any spatial variability in climate change to be captured while maintaining the existing spatial correlation structure (e.g., among adjacent NCDC weather stations).
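The temporal layering described above is often called the delta-change or change-factor method. The sketch below is our own illustration of that idea, not the BASINS CAT code. It assumes the seasonal changes for one station (already interpolated from the grid) are supplied as an additive temperature change in °C and a percent precipitation change for each meteorological season (DJF, MAM, JJA, SON), and applies them to every hour of that station's record. Names and values are hypothetical.

```python
"""Delta-change ("change factor") perturbation of an hourly weather record.

A minimal sketch, not the BASINS CAT implementation: seasonal-average changes
are layered onto every hour of one station's historical record. Function
names, the record layout, and all values are hypothetical.
"""
from datetime import datetime
from typing import Dict, List, Tuple

# Meteorological seasons used for the seasonal change factors.
SEASON_OF_MONTH = {12: "DJF", 1: "DJF", 2: "DJF", 3: "MAM", 4: "MAM", 5: "MAM",
                   6: "JJA", 7: "JJA", 8: "JJA", 9: "SON", 10: "SON", 11: "SON"}

Record = List[Tuple[datetime, float, float]]  # (timestamp, air temp deg C, precip mm)


def apply_seasonal_deltas(record: Record,
                          dtemp_c: Dict[str, float],
                          dprecip_pct: Dict[str, float]) -> Record:
    """Return a perturbed copy of an hourly record.

    Temperature: historical value + seasonal additive change.
    Precipitation: historical value * (1 + seasonal percent change / 100),
    so the timing and duration of historical events are preserved and only
    their depths change.
    """
    out = []
    for ts, temp, precip in record:
        season = SEASON_OF_MONTH[ts.month]
        out.append((ts,
                    temp + dtemp_c[season],
                    precip * (1.0 + dprecip_pct[season] / 100.0)))
    return out


if __name__ == "__main__":
    hourly = [(datetime(1990, 1, 15, 6), -2.0, 2.0),   # hypothetical January hour
              (datetime(1990, 7, 15, 15), 28.0, 0.0)]  # hypothetical July hour
    dtemp = {"DJF": 1.5, "MAM": 1.2, "JJA": 1.8, "SON": 1.4}   # invented deg C changes
    dprcp = {"DJF": 6.0, "MAM": 3.0, "JJA": -2.0, "SON": 4.0}  # invented % changes
    for row in apply_seasonal_deltas(hourly, dtemp, dprcp):
        print(row)
```

Because only magnitudes are altered, the sequencing of historical storms carries over unchanged, and applying each station's own interpolated changes to its own record keeps the inter-station correlation of the historical data.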

Assess Available Impacts Models

In this study the HSPF model was selected for assessing impacts on watershed endpoints. HSPF uses information on precipitation, temperature, evaporation, and parameters related to land use patterns, soil characteristics, and agricultural practices to simulate the processes that occur in a watershed. The model is a well-documented, broadly applicable analytical tool with an established record of applications.

HSPF was selected for two reasons. First, the impacts of climate change on watershed processes are complex, and it was decided that a relatively detailed model was necessary to adequately represent the sensitivities of the system to changes in temperature and precipitation. This is particularly true given the importance of understanding the sensitivity of biologically reactive water quality endpoints such as nitrogen to changes in climate. Second, to be useful in a decision making context to the Chesapeake Bay Program, it was important that the assessment model be compatible with the existing tools and models used by the Chesapeake Bay Program to assess program outcomes. The Chesapeake Bay Program supports and evaluates program outcomes using a model known as the Phase 5 Community Watershed Model, which is based on HSPF (http://www.chesapeakebay.net/phase5.htm). The use of HSPF in this assessment thus allows the results to be benchmarked against analyses conducted using the Phase 5 model. All HSPF simulations in this study used the same model parameters, land use data, and best management practice (BMP) data as the Phase 5 model.

Conduct Sensitivity Analysis and Scenario Planning

Sensitivity analysis and scenario planning were conducted using tools and models within the BASINS system. The scenarios evaluated reflect potential future changes in temperature and precipitation. Future changes in land use or other factors that could impact nutrient and sediment loading to the Monocacy River were not considered.

BASINS CAT was used to create and analyze two types of scenarios to address different questions about system sensitivity to changes in temperature and precipitation. The first analysis used a set of “synthetic” scenarios to gain fundamental understanding of important system properties, e.g., thresholds and nonlinear behavior. Synthetic scenarios are those in which particular climatic attributes are changed by a realistic but arbitrary amount, often according to a qualitative interpretation of climate model simulations for a region (IPCC-TGICA 2007). The results of this type of analysis are particularly useful for providing insights about the type and amount of change necessary to threaten a management target or push the system past a threshold (e.g., the amount of warming required to increase water temperatures to the point where a sensitive species is affected). A set of 42 synthetic scenarios was created and analyzed, reflecting different combinations of arbitrarily assigned changes in temperature and precipitation. Baseline temperatures (historical time series from 1984 to 2000) were adjusted by 0, 2, 4, 6, 8, and 10 °F, and baseline precipitation volume by −10, −5, 0, 5, 10, 15, and 20 percent (6 × 7 = 42 combinations). Changes in precipitation volume were implemented by applying a uniform multiplier to all precipitation events in the record. The range of changes evaluated was selected to be consistent with model simulations from the CARA dataset.
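The 42-scenario grid can be enumerated as a simple cross product. The sketch below assumes each scenario is stored as a small dictionary (a hypothetical layout) and also converts the temperature offsets to °C, since sensitivities are reported per °C later in this section; a temperature difference converts with the factor 5/9 alone, without the 32-degree offset.

```python
"""Enumerating the synthetic scenario grid described above (sketch).

Six temperature offsets crossed with seven uniform precipitation changes give
6 x 7 = 42 scenarios. The dictionary layout and names are hypothetical.
"""
from itertools import product
from typing import List

TEMP_OFFSETS_F = [0, 2, 4, 6, 8, 10]              # added to every hourly temperature
PRECIP_CHANGES_PCT = [-10, -5, 0, 5, 10, 15, 20]  # applied to every precipitation event


def synthetic_scenarios() -> List[dict]:
    scenarios = []
    for dt_f, dp in product(TEMP_OFFSETS_F, PRECIP_CHANGES_PCT):
        scenarios.append({
            "name": f"T+{dt_f}F_P{dp:+d}pct",
            "dtemp_f": dt_f,
            # A temperature *difference* converts with the 5/9 factor only;
            # the 32-degree offset applies to absolute temperatures, not changes.
            "dtemp_c": dt_f * 5.0 / 9.0,
            # Uniform multiplier applied to all precipitation events.
            "precip_multiplier": 1.0 + dp / 100.0,
        })
    return scenarios


if __name__ == "__main__":
    grid = synthetic_scenarios()
    print(len(grid))                      # 42
    print(round(grid[-1]["dtemp_c"], 2))  # 5.56 (10 deg F expressed as deg C)
```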

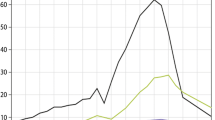

Figure 5 shows the simulated response of mean annual nitrogen loading in the Monocacy River to changes in mean temperature and precipitation. Contours were generated by interpolation from the 42 HSPF simulations using synthetic scenarios. The simulated impact of warming temperatures on nitrogen loading can be seen in Fig. 5 by moving from the point labeled “current climate” vertically upwards. The impacts of changes in precipitation can be seen by moving horizontally left or right from this point. In a similar way, the simulated impacts of any combination of change in temperature and precipitation on annual nitrogen loading can be seen by moving along different trajectories within the plot. On average, mean annual nitrogen loading changes about 1 percent per percent change in mean annual precipitation (relative to current conditions), and about 3 percent per degree C change in mean annual air temperature. Similar plots were developed for phosphorus and sediment loading but are not shown here.

Contour plot showing simulated changes in N loading (10³ kg per year) in the Monocacy River as a function of mean annual temperature and precipitation. Contours are interpolated from 42 HSPF simulations using synthetic climate change scenarios. The historical mean annual temperature and precipitation are indicated by the black star.
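Average sensitivities such as those quoted above (percent change in load per percent change in precipitation, and per °C of warming) can be extracted from the synthetic grid by fitting a plane to the simulated percent changes. The sketch assumes a balanced full-factorial grid, so the precipitation and temperature changes are uncorrelated across runs, and uses an invented linear response purely to show that the fit recovers known slopes; its coefficients are not the Monocacy results.

```python
"""Summarizing a synthetic-scenario grid as average sensitivities (sketch).

Fits z = b0 + b_p * dP + b_t * dT by least squares, where z is the simulated
percent change in an endpoint, dP the percent change in precipitation, and dT
the temperature change in deg C. On a balanced full-factorial grid, dP and dT
are uncorrelated across runs, so each multiple-regression slope equals the
simple covariance/variance ratio computed below. Any nonlinearity is averaged
away, so such numbers summarize, rather than replace, the contour plot.
"""
from itertools import product
from typing import Sequence, Tuple


def pct_change(value: float, baseline: float) -> float:
    return 100.0 * (value - baseline) / baseline


def _slope(x: Sequence[float], z: Sequence[float]) -> float:
    n = len(x)
    mx, mz = sum(x) / n, sum(z) / n
    return (sum((a - mx) * (b - mz) for a, b in zip(x, z))
            / sum((a - mx) ** 2 for a in x))


def average_sensitivities(dp: Sequence[float], dt: Sequence[float],
                          z: Sequence[float]) -> Tuple[float, float]:
    """Return (% change per % precipitation change, % change per deg C)."""
    return _slope(dp, z), _slope(dt, z)


if __name__ == "__main__":
    # Hypothetical grid and a made-up linear response, used only to show that
    # the fit recovers known slopes; these are not Monocacy results.
    runs = list(product([-10, -5, 0, 5, 10, 15, 20], [0, 2, 4, 6, 8, 10]))
    dp = [float(p) for p, _ in runs]
    dt = [f * 5.0 / 9.0 for _, f in runs]          # deg F offsets -> deg C
    baseline_load = 1000.0
    loads = [baseline_load * (1 + (0.8 * p - 2.5 * t) / 100) for p, t in zip(dp, dt)]
    z = [pct_change(v, baseline_load) for v in loads]
    print(average_sensitivities(dp, dt, z))  # approximately (0.8, -2.5)
```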

The second analysis used a set of model-based climate change scenarios to estimate the potential range of changes in nitrogen, phosphorus, and sediment loading in the Monocacy River by the year 2030. This analysis was intended to assess the sensitivity of these endpoints to a set of specific, internally consistent climate change scenarios based on climate modeling experiments reflecting different assumptions about future greenhouse gas emissions and different model representations of the climate system.

BASINS CAT was used to create and analyze a set of 42 model-based scenarios from the CARA dataset, reflecting seasonally variable climate simulations from 7 GCMs and 2 emissions storylines for the period 2010–2039 (the CARA averaging period closest to the Chesapeake Bay Program's 2030 planning horizon). Scenarios were created by adjusting the baseline historical temperature and precipitation time series to reflect simulated changes for each season of the year (Dec/Jan/Feb; Mar/Apr/May; Jun/Jul/Aug; Sep/Oct/Nov). In addition, scenarios for each of the 14 model/storyline combinations were created in 3 different ways to reflect different assumptions about how the changes in precipitation could occur (14 × 3 = 42 scenarios).
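The scenario count follows from the design: 7 GCMs × 2 storylines give 14 model/storyline combinations, and 3 event-selection assumptions give 42 scenarios. The sketch below enumerates that set; the GCM labels are placeholders, since this passage does not name the models, and the dictionary layout is hypothetical.

```python
"""Bookkeeping for the model-based scenario set (sketch).

GCM labels are placeholders; the per-season change values for each scenario
would be filled in from the CARA summaries.
"""
from itertools import product
from typing import Dict, List

GCMS = [f"GCM_{i}" for i in range(1, 8)]   # 7 placeholder model labels
STORYLINES = ["A2", "B2"]
# Which events receive the seasonal precipitation change (the three
# assumptions about event intensity described in the text).
EVENT_METHODS = ["all_events", "largest_30pct", "largest_10pct"]
SEASONS = ["DJF", "MAM", "JJA", "SON"]


def model_based_scenarios() -> List[Dict[str, str]]:
    """One entry per GCM x storyline x event-selection method."""
    return [{"gcm": g, "storyline": s, "event_method": m}
            for g, s, m in product(GCMS, STORYLINES, EVENT_METHODS)]


if __name__ == "__main__":
    scenarios = model_based_scenarios()
    combos = {(sc["gcm"], sc["storyline"]) for sc in scenarios}
    print(len(combos), len(scenarios))   # 14 42
```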

It is generally expected that as climate changes, a greater proportion of annual precipitation will occur in larger-magnitude events (IPCC 2007). The CARA dataset, however, did not provide information about which events would be most affected. Accordingly, to capture a range of plausible changes in event intensity, scenarios were created by applying the simulated seasonal changes from the CARA dataset in three ways: (1) as a constant multiplier applied equally to all events within the specified season, (2) as a constant multiplier applied only to the largest 30% of events within the specified season, and (3) as a constant multiplier applied only to the largest 10% of events within the specified season. These 3 assumptions about which events will be most affected by future changes span a range consistent with observed trends during the 20th century (e.g., during this period there was a general trend throughout the U.S. towards an increasing proportion of annual precipitation occurring in roughly the largest 30 percent of events; Groisman and others 2005). The resulting set of 42 scenarios captures a broad range of potential future climate change, suitable for identifying a range of potential impacts on water quality endpoints.
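The three event-intensity variants can be illustrated with a sketch that applies one multiplier to only the largest fraction of events in a season. Two assumptions here are ours, not the text's: each wet day is treated as one event, and the multiplier is solved so that the seasonal total still changes by the CARA percentage. With `top_fraction = 1.0` the function reduces to the uniform case (1). The function name and the sample season are hypothetical.

```python
def scale_largest_events(precip, pct_change, top_fraction):
    """Apply a seasonal precipitation change to only the largest events.

    precip: daily precipitation for one season (mm); each wet day (> 0)
        is treated as one event, a simplifying assumption.
    pct_change: target change in the seasonal total, in percent.
    top_fraction: share of events to modify (1.0, 0.3, or 0.1 in the text).
    Returns a new list in which only the selected events are multiplied
    by one constant, chosen so the seasonal total changes by pct_change.
    """
    wet = [i for i, p in enumerate(precip) if p > 0]
    if not wet:
        return list(precip)
    # Number of events to modify, rounded to the nearest whole event.
    n_top = max(1, round(top_fraction * len(wet)))
    top = sorted(wet, key=lambda i: precip[i], reverse=True)[:n_top]
    total = sum(precip)
    top_sum = sum(precip[i] for i in top)
    # Solve top_sum * m + (total - top_sum) = total * (1 + pct_change / 100).
    m = (total * (1.0 + pct_change / 100.0) - (total - top_sum)) / top_sum
    if m < 0:
        raise ValueError("decrease is too large to impose on the selected events")
    scaled = list(precip)
    for i in top:
        scaled[i] = precip[i] * m
    return scaled


season = [0.0, 2.0, 10.0, 0.0, 5.0, 1.0, 20.0, 3.0, 0.0, 4.0, 6.0, 8.0]
uniform = scale_largest_events(season, 10.0, 1.0)   # method (1)
top30 = scale_largest_events(season, 10.0, 0.3)     # method (2)
top10 = scale_largest_events(season, 10.0, 0.1)     # method (3)
```

All three variants raise the seasonal total by the same 10 percent; they differ only in how strongly the largest events are amplified, which is the property the three assumptions are meant to bracket.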

Figure 6 shows the simulated response of mean annual nitrogen loading in the Monocacy River to the model-based scenarios developed from climate model simulations. It shows results only for the 14 scenarios created by applying a uniform multiplier to all events (i.e., these scenarios do not reflect potential changes in the proportion of precipitation occurring in large-magnitude events). The spatial distribution of the points shows the range of simulated changes in mean annual temperature and precipitation from the 7 GCMs and 2 emissions storylines. The star indicates the historical mean temperature and precipitation. All simulations show warming mean annual temperatures by 2030. The simulations vary with respect to changes in mean annual precipitation, with some showing increases and others decreases. Simulated changes in mean annual precipitation for these scenarios ranged from about −1 to 5 percent, and changes in mean annual temperature from about 1 to 2°C. The resulting changes in mean annual nitrogen loads ranged from about −5 to 3 percent; the range for all scenarios, including those with increasing event intensity, was −5 to 8 percent. The decreases likely result from decreases in streamflow that are also projected by 2030. Changes in phosphorus and sediment loads based on all scenarios ranged from about −16 to 20 percent and −20 to 140 percent, respectively.

Fig. 6 Scatter plot showing simulated changes in N loading in the Monocacy River (indicated by the gray scale, in 10³ kg per year). Points are from 14 HSPF simulations using model-based climate change scenarios from the CARA dataset. Circles represent simulations based on the B2 emissions storyline, triangles represent simulations based on the A2 emissions storyline, and the star represents historical mean annual temperature and precipitation.

Risk Management

This assessment is just a first step towards understanding the very complex implications of climate change for the Chesapeake Bay, and thus it was not intended to prescribe specific practices for managing risk. Rather, it was the goal of this assessment to determine whether the potential implications of climate change for the Monocacy watershed warrant further consideration and study, including the assessment of potential impacts at the scale of the entire Bay watershed. The results of this study, together with the existing knowledge about system sensitivity to interannual climate variability, suggest that future changes in climate could indeed influence the ability of the Chesapeake Bay Program to meet water quality restoration goals.

The Monocacy assessment has resulted in several follow-up actions that can be considered risk management. First, after the assessment was completed, the EPA CBPO expressed interest in scaling it up to conduct a similar screening-level analysis for the entire Chesapeake Bay watershed. Because of the large effort involved, however, the number of scenarios assessed had to be reduced. It was decided to run a reduced set of three climate change scenarios at the scale of the entire Bay watershed using the Phase 5 Community Watershed Model. To capture the full range of potential outcomes, the three scenarios that produced the highest, middle, and lowest changes in N loading in the Monocacy assessment were selected for use with the Phase 5 model. These model runs have been completed and the data are being analyzed. The outcomes of this work will provide an indication of the potential risk that climate change poses to Bay restoration goals. More generally, the results of this assessment can be used to guide and focus future assessment activities.
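The scenario-selection step can be sketched as follows. Interpreting the “middle” scenario as the remaining scenario closest to the median change is our assumption, as are the function names and the illustrative values in the usage example; none of them come from the assessment itself.

```python
from statistics import median


def percent_change(scenario_load, baseline_load):
    """Percent change in mean annual load relative to the baseline run."""
    return 100.0 * (scenario_load - baseline_load) / baseline_load


def select_bracketing_scenarios(changes):
    """Return (lowest, middle, highest) scenario names.

    changes: {scenario_name: percent change in N loading}. 'Middle' is taken
    to be the remaining scenario closest to the median change; ties go to the
    alphabetically first name.
    """
    if len(changes) < 3:
        raise ValueError("need at least three scenarios")
    low = min(changes, key=changes.get)
    high = max(changes, key=changes.get)
    mid_value = median(changes.values())
    remaining = sorted(name for name in changes if name not in (low, high))
    middle = min(remaining, key=lambda name: abs(changes[name] - mid_value))
    return low, middle, high


# Illustrative percent changes for five hypothetical scenarios.
changes = {"s1": -5.0, "s2": 3.0, "s3": 0.5, "s4": 8.0, "s5": 1.0}
print(select_bracketing_scenarios(changes))  # ('s1', 's5', 's4')
```

Bracketing in this way keeps the extremes of the screening-level range while limiting the number of costly full-basin model runs to three.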

In addition, given the well-established influence of land use on stream nutrient and sediment loading, the EPA Global Change Research Program decided to extend the Monocacy assessment to a series of coupled climate-land use change scenarios. The effectiveness of management practices under future climate scenarios will also be considered. This work will be conducted during the fall of 2008. Ultimately, these studies will provide information to help CBP managers manage any increase in risk to water quality restoration goals due to climate change.

Finally, in 2008 a scientific and technical advisory committee to the Chesapeake Bay Program initiated the development of a strategy for addressing the impacts of climate change on the Chesapeake Bay watershed. Key members of this group were also members of the advisory group assembled for the Monocacy assessment. Although this is not a direct outcome of the Monocacy assessment, it illustrates the ongoing benefits of a successful collaboration.

Summary

In this article we present a framework for assessing climate change impacts on water and watershed systems that addresses three characteristics complicating the assessment process: linkages across spatial scales, across temporal scales, and across scientific and management disciplines. The framework stresses the need for a clear definition of the decision context, to ensure that results are responsive to the needs of decision makers. It also holds that, given current limitations in modeling capabilities, assessing and responding to climate change should be approached from the perspective of risk assessment and management rather than as a prediction problem. Finally, the framework stresses the need for close collaboration among climate scientists, scientists interested in assessing impacts, and resource managers and decision makers.

A case study assessment in the Monocacy River watershed, a subwatershed of the Chesapeake Bay, provides an example of how the framework can be applied. The Monocacy assessment was conducted as a screening-level analysis to determine whether the potential implications of climate change for nutrient and sediment loads in the Monocacy River warrant further consideration and study, including the assessment of potential impacts at the scale of the entire Bay watershed. Results suggest the potential for significant impacts, and have led to follow-up work assessing climate change impacts for the entire Chesapeake Bay watershed, as well as an extension of the Monocacy assessment to consider coupled climate and land use change scenarios.

We recognize the complexity of the assessment process and do not suggest that ours is the only viable approach. We also acknowledge that many of the ideas and approaches discussed in this article have been developed, adopted, and promoted by other institutions and authors. The specific steps outlined in the framework grew largely out of the authors’ personal experiences conducting assessments and interacting with resource managers and decision makers. We hope the approach presented here can, in some way, assist others to better understand the risk presented by climate change and to make well-informed decisions about responding to the challenge of climate change.

References

Bicknell BR, Imhoff JC, Kittle J, Donigian AS, Johansen RC (1996) Hydrological simulation program-FORTRAN, user’s manual for release 11. U.S. Environmental Protection Agency. Environmental Research Laboratory, Athens

Cash DW, Borck JC, Patt AG (2006) Countering the loading-dock approach to linking science and decision making. Science, Technology & Human Values 31:465–494

Cash DW, Moser SC (2000) Linking global and local scales: designing dynamic assessment and management processes. Global Environmental Change 10(2):109–120

CCSP (2005) Report on the U.S. Climate Change Science Program Workshop: climate science in support of decisionmaking, Arlington, VA, 14–16 November 2005

CCSP (2007) Scenarios of Greenhouse Gas Emissions and Atmospheric Concentrations (Part A) and Review of Integrated Scenario Development and Application (Part B). A Report by the U.S. Climate Change Science Program and the Subcommittee on Global Change Research [Clarke L, Edmonds J, Jacoby J, Pitcher H, Reilly J, Richels R, Parson E, Burkett V, Fisher-Vanden K, Keith D, Mearns L, Rosenzweig C, Webster M (Authors)]. Department of Energy, Office of Biological & Environmental Research, Washington, DC, USA, 260 pp

CCSP (2008a) Best Practice Approaches for Characterizing, Communicating and Incorporating Scientific Uncertainty in Climate Decision Making. A Report by the U.S. Climate Change Science Program and the Subcommittee on Global Change Research [Morgan MG (author) with Dowlatabadi H, Henrion M, Keith D, Lempert R, McBride S, Small M, Wilbanks T (contributors)]. Public review draft available from http://www.climatescience.gov/Library/sap/sap5-2/public-review-draft/default.htm

CCSP (2008b) Preliminary review of adaptation options for climate-sensitive ecosystems and resources. A Report by the U.S. Climate Change Science Program and the Subcommittee on Global Change Research. [Julius SH, West JM (eds) Baron JS, Griffith B, Joyce LA, Kareiva P, Keller BD, Palmer MA, Peterson CH, Scott JM (Authors)]. U.S. Environmental Protection Agency, Washington, DC, 873 pp

Chess C, Dietz T, Shannon M (1998) Who should deliberate when? Human Ecology Review 5(1):45–48

CIG (2006) Planning for climate variability and change. Climate Impacts Group (CIG), Joint Institute for the Study of the Atmosphere and Ocean, University of Washington. Available from http://www.cses.washington.edu/cig/fpt/cvplanning.shtml

Craig PP, Gadgil A, Koomey JG (2002) What can history teach us? A retrospective examination of long-term energy forecasts for the United States. Annual Review of Energy and the Environment 27:83–118

Duinker PN, Greig LA (2007) Scenario analysis in environmental impact assessment: improving explorations of the future. Environmental Impact Assessment Review 27:206–219

Gleick P, Adams DB (2000) Water: the potential consequences of climate variability and change for water resources of the United States, Report of the Water Sector Assessment Team of the National Assessment of the Potential Consequences of Climate Variability and Change. Pacific Institute, Oakland, 151 pp

Glicken J (2000) Guiding stakeholder participation “right”: a discussion of participatory processes and possible pitfalls. Environmental Science & Policy 3:305–310

Groisman P, Knight R, Easterling D, Karl T, Hegerl G, Razuvaev V (2005) Trends in intense precipitation in the climate record. Journal of Climate 18:1326–1350

Herrick CJ, Pendleton JM (2000) A decision framework for prediction in environmental policy. In: Sarewitz D, Pielke RA Jr, Byerly R Jr (eds) Prediction: science, decision making, and the future of nature. Island Press, Washington, 405 pp

Hooke WH, Pielke RA Jr (2000) Short-term weather prediction: an orchestra in need of a conductor. In: Sarewitz D, Pielke RA Jr, Byerly R Jr (eds) Prediction: science, decision making, and the future of nature. Island Press, Washington, 405 pp

IPCC (2001) Climate change 2001: impacts, adaptation, and vulnerability: contribution of Working Group II to the Third Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, UK

IPCC (2007) Climate Change 2007: The Physical Science Basis. Contribution of Working Group I to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change [Solomon S, Qin D, Manning M, Chen Z, Marquis M, Averyt KB, Tignor M, Miller HL (eds)]. Cambridge University Press, Cambridge, United Kingdom and New York, NY, 996 pp

IPCC-TGICA (2007) General Guidelines on the Use of Scenario Data for Climate Impact and Adaptation Assessment. Version 2. Prepared by T.R. Carter on behalf of the Intergovernmental Panel on Climate Change, Task Group on Data and Scenario Support for Impact and Climate Assessment, 66 pp

Johnson T, Kittle J Jr (2006) Sensitivity analysis as a guide for assessing and managing the impacts of climate change on water resources. AWRA Water Resources Impact 8(5):15–17

Jones RN (2001) An environmental risk assessment/management framework for climate change impact assessments. Natural Hazards 23(2–3):197–230

Leung LR, Qian Y, Bian X, Washington WM, Han J, Roads JO (2004) Mid-century ensemble regional climate change scenarios for the western United States. Climatic Change 62:75–113

Liang X-Z, Pan J, Zhu J, Kunkel KE, Wang JXL, Dai A (2006) Regional climate model downscaling of the U.S. summer climate and future change. Journal of Geophysical Research 111. doi:10.1029/2005JD006685

Lookingbill TR, Gardner RH, Townsend PA, Carter SL (2007) Conceptual models as hypotheses in monitoring urban landscapes. Environmental Management 40(2):171–182

Means E, Patrick R, Ospina L, West N (2005) Scenario planning: a tool to manage future water utility uncertainty. Journal of American Water Works Association 97(10):68

Mearns LO, Giorgi F, Whetton P, Pabon D, Hulme M, Lal M (2003) Guidelines for use of climate scenarios developed from regional climate model experiments. Report to the Task Group on Scenarios for Climate Impact Assessment (TGCIA) of the Intergovernmental Panel on Climate Change (IPCC), 38 pp