Abstract

We propose a quantile–based method to estimate the parameters of an elliptical distribution, and a battery of tests for model adequacy. The method is suitable for vast dimensions since the estimators for location and dispersion have closed–form expressions, while estimation of the tail index boils down to univariate optimizations. The tests for model adequacy take as null hypothesis the correct specification of one or several level contours. A Monte Carlo study of three distributions (Gaussian, Student–t and elliptical stable) in dimensions 20, 200 and 2000 shows that the method performs well, both in terms of computational time and in finite samples. An empirical application to financial data illustrates the method.

Notes

There exists a tail–trimmed version of GMM (Hill and Renault 2012) that does not require the existence of moments.

\(\varvec{\Sigma }\) is the variance–covariance matrix, up to a scale, if second moments exist.

The idea of using \(X \pm Y\) as a way to glean information about dependence is embedded in the concept of co–difference (see e.g. Rosadi and Deistler 2011). However, co–difference and our proposal differ substantially in several respects. First, our measure is not the sum but the projection onto the 45–degree line. Second, and more importantly, co–difference is a measure of dependence between two random variables, i.e. the counterpart of \(\sigma _{i\,j}\) in the elliptical family, whereas our projection is a way to estimate \(\sigma _{i\,j}\) itself.
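To see why the projection onto the 45–degree line identifies \(\sigma _{i\,j}\), recall that for an elliptical vector any linear combination \(a^{\prime }X\) has a quantile–based scale proportional to \(\sqrt{a^{\prime }\varvec{\Sigma }a}\), with a constant \(c\) that depends only on the distribution. Projecting onto \((1,1)/\sqrt{2}\) and \((1,-1)/\sqrt{2}\) and differencing the squared scales cancels \(\sigma _{i\,i}+\sigma _{j\,j}\) and leaves \(2\sigma _{i\,j}\), up to \(c^2\). The sketch below uses the interquartile range as the scale measure; this choice, the function names and the simulated example are our assumptions, and the paper's own quantiles and normalization may differ.

```python
import random
import statistics
from math import sqrt


def iqr(sample):
    """Interquartile range of a univariate sample (Python 3.8+)."""
    q1, _, q3 = statistics.quantiles(sample, n=4)
    return q3 - q1


def dispersion_entry(x, y):
    """Quantile-based estimate of sigma_ij, up to the common factor c**2.

    u is the projection onto the 45-degree line and v the projection onto its
    orthogonal direction. For elliptical data IQR(a'X) = c * sqrt(a' Sigma a),
    hence (IQR(u)**2 - IQR(v)**2) / 2 = c**2 * sigma_ij.
    """
    u = [(a + b) / sqrt(2) for a, b in zip(x, y)]
    v = [(a - b) / sqrt(2) for a, b in zip(x, y)]
    return (iqr(u) ** 2 - iqr(v) ** 2) / 2


# Illustrative bivariate Gaussian: sigma_11 = 1, sigma_22 = 4, sigma_12 = 1.2
rng = random.Random(2012)
z1 = [rng.gauss(0.0, 1.0) for _ in range(40000)]
z2 = [rng.gauss(0.0, 1.0) for _ in range(40000)]
x = z1
y = [1.2 * a + 1.6 * b for a, b in zip(z1, z2)]
# Dividing by a diagonal entry removes the unknown factor c**2;
# the ratio should be near 1.2, up to sampling error.
ratio = dispersion_entry(x, y) / dispersion_entry(x, x)
```

Only ratios of such entries are free of \(c\), which is consistent with \(\varvec{\Sigma }\) being identified only up to a scale.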

An alternative that requires only one univariate optimization, regardless of the dimension, is to pool the functions to match rather than the estimators, i.e. \(h_{\alpha }(\varvec{\hat{q}}_{N})=\sum _{j=1}^J h_{\alpha }(\varvec{\hat{q}}_{j\,N})\) and \(\ddot{h}_{\alpha }^R(\varvec{q}_{\varvec{\theta }})=\sum _{j=1}^J \ddot{h}_{\alpha }^R(\varvec{q}_{\varvec{\theta }_j})\), and then

$$\begin{aligned} \hat{\alpha }_{N}=\mathop {\arg \min }\limits _{\alpha \in \varvec{\Theta }}\, \left(h_{\alpha }(\varvec{\hat{q}}_{N}) - \ddot{h}_{\alpha }^R(\varvec{q}_{\varvec{\theta }})\right)^{\prime } W_{\check{\alpha }_N}\left(h_{\alpha }(\varvec{\hat{q}}_{N}) - \ddot{h}_{\alpha }^R(\varvec{q}_{\varvec{\theta }})\right). \end{aligned}$$

Simulating from an ESD requires simulating from a Gaussian distribution and from a totally right–skewed standardized univariate stable distribution:

$$\begin{aligned} A \sim S_{\alpha /2}\left(\left(\cos \frac{\pi \alpha }{4}\right)^{2/ \alpha },1,0\right), \end{aligned}$$

for which we use the method of Chambers et al. (1976).
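The note gives only the law of the mixing variable \(A\). A minimal sketch of a full draw follows, assuming the usual sub-Gaussian representation \(X = \mu + \sqrt{A}\,G\) with \(G \sim N(0, \varvec{\Sigma })\) independent of \(A\); the scale convention for \(G\) is our assumption and may differ from the paper's. For skewness 1 and index \(\alpha /2 < 1\), the Chambers et al. (1976) construction, written in its commonly quoted form for this parameterization, simplifies, and with the scale \((\cos (\pi \alpha /4))^{2/\alpha }\) the draw satisfies \(E[e^{-sA}] = e^{-s^{\alpha /2}}\). The function names are hypothetical, and the hand-written Cholesky factor only keeps the example dependency-free.

```python
import math
import random


def positive_stable(alpha, rng):
    """Draw A ~ S_{alpha/2}((cos(pi*alpha/4))**(2/alpha), 1, 0), for 0 < alpha < 2.

    Chambers-Mallows-Stuck construction with skewness 1 and index a = alpha/2 < 1.
    With this scale the shift term equals pi/2 and the CMS scale constant cancels
    against the scale of A, so that E[exp(-s*A)] = exp(-s**a).
    """
    a = alpha / 2.0
    v = rng.uniform(-math.pi / 2.0, math.pi / 2.0)  # V ~ U(-pi/2, pi/2)
    w = rng.expovariate(1.0)                        # W ~ Exp(1)
    t = a * (v + math.pi / 2.0)
    return (math.sin(t) / math.cos(v) ** (1.0 / a)) * (math.cos(v - t) / w) ** ((1.0 - a) / a)


def cholesky(sigma):
    """Lower-triangular L with L L' = sigma (sigma symmetric positive definite)."""
    n = len(sigma)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][i] = math.sqrt(sigma[i][i] - s)
            else:
                L[i][j] = (sigma[i][j] - s) / L[j][j]
    return L


def simulate_esd(mu, sigma, alpha, n, seed=0):
    """n draws of X = mu + sqrt(A) * G, with G ~ N(0, sigma) independent of A."""
    rng = random.Random(seed)
    L = cholesky(sigma)
    d = len(mu)
    draws = []
    for _ in range(n):
        z = [rng.gauss(0.0, 1.0) for _ in range(d)]
        g = [sum(L[i][k] * z[k] for k in range(i + 1)) for i in range(d)]
        root_a = math.sqrt(positive_stable(alpha, rng))
        draws.append([mu[i] + root_a * g[i] for i in range(d)])
    return draws
```

For instance, `simulate_esd([0.0, 0.0], [[1.0, 0.5], [0.5, 1.0]], 1.7, 1000)` returns 1000 bivariate draws. As \(\alpha \) approaches 2, the law of \(A\) concentrates at 1 and the draws approach the Gaussian case.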

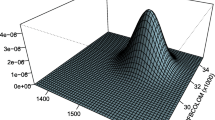

The plots of the estimated dispersion matrices in Fig. 5 should be interpreted with caution, since their scale differs from that of the true dispersion matrix.

All the simulation studies and the empirical illustration below were programmed in Matlab R2009b and run on a Sony Vaio with an Intel Core Duo processor at 2.10 GHz and 4 GB of SDRAM.

See Bingham et al. (2003) for applications of the elliptical family to risk management.

If the hypotheses were independent, \(\zeta = 1 - \prod \limits _{i=1}^b (1 - \zeta _0w_i)\).
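Under the independence assumption of this note, \(\zeta \) is straightforward to evaluate. A minimal sketch, assuming for illustration weights \(w_i\) that sum to one, so that the individual sizes \(\zeta _0 w_i\) add up to \(\zeta _0\); the helper name is hypothetical:

```python
from math import prod


def joint_size(zeta0, weights):
    """Overall size of b tests with individual sizes zeta0 * w_i,
    assuming the b hypotheses are independent (hypothetical helper)."""
    return 1 - prod(1 - zeta0 * w for w in weights)


# Two level contours, equal weights 1/2, zeta0 = 0.05
print(joint_size(0.05, [0.5, 0.5]))  # approximately 0.049375
```

Since \(1 - \prod _{i=1}^b (1 - \zeta _0 w_i) \le \zeta _0 \sum _{i=1}^b w_i\), the resulting \(\zeta \) does not exceed \(\zeta _0\) when the weights sum to one.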

References

Babu GJ, Rao CR (1988) Joint asymptotic distribution of marginal quantiles and quantile functions in sample from a multivariate population. J Multivar Anal 27:15–23

Barigozzi M, Halbleib R, Veredas D (2012) Which model to match? ECARES WP 2012/04

Bingham NH, Kiesel R (2003) Semi-parametric modelling in finance: theoretical foundations. Quant Financ 2:241–250

Bingham NH, Kiesel R, Schmidt R (2003) A semi-parametric approach to risk management. Quant Financ 3:426–441

Cambanis S, Huang S, Simons G (1981) On the theory of elliptically contoured distributions. J Multivar Anal 11:368–385

Chambers JM, Mallows CL, Stuck BW (1976) A method for simulating stable random variables. J Am Stat Assoc 71:340–344. Corrections 82 (1987):704, 83 (1988):581

Chen XC, Jacho-Chavez DT, Linton O (2009) An alternative way of computing efficient instrumental variable estimators. LSE STICERD Research Paper EM/2009/536

Cramér H (1946) Mathematical methods of statistics. Princeton University Press, Princeton

de Vries CG (1991) On the relation between GARCH and stable processes. J Econom 48:313–324

Dominicy Y, Veredas D (2012) The method of simulated quantiles. J Econom, forthcoming

Fama E, Roll R (1971) Parameter estimates for symmetric stable distributions. J Am Stat Assoc 66:331–338

Fang KT, Kotz S, Ng KW (1990) Symmetric multivariate and related distributions. Chapman and Hall, New York

Frahm G (2004) Generalized elliptical distributions. PhD thesis, University of Cologne

Ghose D, Kroner KF (1995) The relationship between GARCH and symmetric stable processes: finding the source of fat tails in financial data. J Empir Financ 2:225–251

Gonzalez-Rivera G, Senyuz Z, Yoldas E (2011) Autocontours: dynamic specification testing. J Bus Econ Stat 29:186–200

Gonzalez-Rivera G, Yoldas E (2011) Autocontour-based evaluation of multivariate predictive densities. Int J Forecast, forthcoming

Gouriéroux C, Monfort A, Renault E (1993) Indirect inference. J Appl Econom 8:85–118

Hallin M, Paindaveine D, Oja H (2006) Semiparametrically efficient rank-based inference for shape. II. Optimal R-estimation of shape. Ann Stat 34:2757–2789

Hautsch N, Kyj LM, Oomen RC (2011) A blocking and regularization approach to high dimensional realized covariance estimation. J Appl Econom, forthcoming

Hill JB, Renault E (2012) Generalized method of moments with tail trimming. University of North Carolina at Chapel Hill, Mimeo

Kelker D (1970) Distribution theory of spherical distributions and a location-scale parameter generalization. Sankhya A32:419–430

Laloux L, Cizeau P, Bouchaud J-P, Potters M (1999) Noise dressing of financial correlation matrices. Phys Rev Lett 83:1467–1470

Laurent S, Veredas D (2011) Testing conditional asymmetry. A residual-based approach. J Econ Dyn Control, forthcoming

Lombardi MJ, Veredas D (2009) Indirect inference of elliptical fat tailed distributions. Comput Stat Data Anal 53:2309–2324

McCulloch JH (1986) Simple consistent estimators of stable distribution parameters. Commun Stat Simul Comput 15(4):1109–1136

Nolan JP (2010) Multivariate elliptically contoured stable distributions: theory and estimation. Mimeo

Rosadi D, Deistler M (2011) Estimating the codifference function of linear time series models with infinite variance. Metrika 73:395–429

Tola V, Lillo F, Gallegati M, Mantegna R (2008) Cluster analysis for portfolio optimization. J Econ Dyn Control 32:235–258

Tyler DE (1987) A distribution-free M-estimator of multivariate scatter. Ann Stat 15:234–251

Acknowledgments

Yves Dominicy acknowledges financial support from a F.R.I.A. grant. Hiroaki Ogata acknowledges financial support from the Japanese Grant–in–Aid for Young Scientists (B), 22700291, and the International Relations Department of the Université libre de Bruxelles. David Veredas acknowledges financial support from the IAP P6/07 contract from the Belgian Scientific Policy. We are grateful to the associate editor, two anonymous referees, Dante Amengual, Piotr Fryzlewicz, Marc Hallin, Enrique Sentana, Davy Paindaveine, Esther Ruiz, and Kevin Sheppard for insightful remarks. We are also grateful to the seminar participants at CEMFI, LSE, the University of Melbourne and numerous conferences. Any errors and inaccuracies are our own. Yves Dominicy and David Veredas are members of ECORE, the recently created association between CORE and ECARES.

Appendix: eigenvalue cleaning

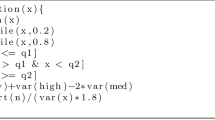

Let \(\hat{\varvec{\Gamma }}_N=diag(\hat{\varvec{\Sigma }}_N)^{-1/2}\hat{\varvec{\Sigma }}_Ndiag(\hat{\varvec{\Sigma }}_N)^{-1/2}\) be the estimated standardized dispersion matrix with spectral decomposition \(\hat{\varvec{\Gamma }}_N=\hat{\mathbf{Q }}_N\hat{\varvec{\Lambda }}_N\hat{\mathbf{Q }}_N^{^{\prime }}\), where \(\hat{\mathbf{Q }}_N\) is the orthonormal matrix of estimated eigenvectors and \(\hat{\varvec{\Lambda }}_N\) is the diagonal matrix of estimated eigenvalues. Let \(\hat{\lambda }_{(1)\,N} \ge \ldots \ge \hat{\lambda }_{(J)\,N}\) be the ordered eigenvalues (i.e. \(\hat{\lambda }_{(1)\,N}\) is the largest and \(\hat{\lambda }_{(J)\,N}\) is the smallest). Eigenvalue cleaning is based on replacing the eigenvalues less than a threshold \(\lambda _{max}\) by the average of the positive eigenvalues below \(\lambda _{max}\):

where \(L+1\) corresponds to the position, from the right, of the largest eigenvalue smaller than \(\lambda _{max}\).

The resulting estimated standardized dispersion matrix is \(\tilde{\varvec{\Gamma }}_N=\hat{\mathbf{Q }}_N\tilde{\varvec{\Lambda }}_N\hat{\mathbf{Q }}_N^{^{\prime }}\) and the positive definite estimated dispersion matrix is obtained by un–standardizing \(\tilde{\varvec{\Gamma }}_N\): \(\tilde{\varvec{\Sigma }}_N=diag(\hat{\varvec{\Sigma }}_N)^{1/2}\tilde{\varvec{\Gamma }}_N diag(\hat{\varvec{\Sigma }}_N)^{1/2}\).

The threshold is given by \(\lambda _{max}=\left(1-\sum _{l=1}^{L^*}\hat{\lambda }_{(l)\,N}/J\right)\left(1+J/N+2\sqrt{J/N}\right)\), i.e. it is a function of the \(L^*\) largest eigenvalues. The smaller \(L^*\), the larger the difference between \(\hat{\varvec{\Sigma }}_N\) and \(\tilde{\varvec{\Sigma }}_N\). Laloux et al. (1999), Tola et al. (2008), and Hautsch et al. (2011) set \(L^*=1\), on the grounds that \(\hat{\lambda }_{(1)\,N}\) represents the common dispersion. In our setting, however, there is no particular reason to adopt this value. After calibration, we found \(L^*=10\) to be the best compromise. Results for the Monte Carlo study and the empirical application with other values of \(L^*\) are available upon request.
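The cleaning steps of this appendix can be sketched in plain Python. This is an illustration under stated assumptions, not the authors' Matlab code: the eigendecomposition uses a textbook cyclic Jacobi rotation (a swapped-in choice, adequate only for small \(J\)), the function names are hypothetical, and the replacement rule follows the verbal description above, i.e. every eigenvalue below \(\lambda _{max}\) is replaced by the mean of the positive eigenvalues below \(\lambda _{max}\). If no positive eigenvalue lies below the threshold, the sketch leaves the spectrum unchanged, which is our own convention.

```python
import math


def jacobi_eigh(a, tol=1e-12, max_sweeps=100):
    """Eigenvalues and eigenvectors (columns of v) of a symmetric matrix, cyclic Jacobi."""
    n = len(a)
    a = [row[:] for row in a]
    v = [[float(i == j) for j in range(n)] for i in range(n)]
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                # Rotation angle that annihilates a[p][q]
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # A <- A P and V <- V P (columns p, q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                    vkp, vkq = v[k][p], v[k][q]
                    v[k][p], v[k][q] = c * vkp - s * vkq, s * vkp + c * vkq
                for k in range(n):  # A <- P' A (rows p, q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return [a[i][i] for i in range(n)], v


def clean_dispersion(sigma_hat, n_obs, l_star=10):
    """Eigenvalue-cleaned J x J dispersion matrix from an estimate sigma_hat."""
    J = len(sigma_hat)
    d = [math.sqrt(sigma_hat[i][i]) for i in range(J)]
    # Standardize: Gamma = diag(Sigma)^{-1/2} Sigma diag(Sigma)^{-1/2}
    gamma = [[sigma_hat[i][j] / (d[i] * d[j]) for j in range(J)] for i in range(J)]
    lam, Q = jacobi_eigh(gamma)
    # Threshold from the L* largest eigenvalues and the ratio J/N
    top = sorted(lam, reverse=True)[:l_star]
    ratio = J / n_obs
    lam_max = (1.0 - sum(top) / J) * (1.0 + ratio + 2.0 * math.sqrt(ratio))
    below = [x for x in lam if 0.0 < x < lam_max]
    if below:  # assumption: with no positive eigenvalue below lam_max, keep the spectrum
        avg = sum(below) / len(below)
        lam = [x if x >= lam_max else avg for x in lam]
    # Gamma~ = Q Lambda~ Q', then un-standardize
    gamma_t = [[sum(Q[i][k] * lam[k] * Q[j][k] for k in range(J)) for j in range(J)]
               for i in range(J)]
    return [[d[i] * gamma_t[i][j] * d[j] for j in range(J)] for i in range(J)]
```

For vast \(J\), a LAPACK-based symmetric eigensolver would take the place of `jacobi_eigh`; the cleaning logic itself is unchanged.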

About this article

Cite this article

Dominicy, Y., Ogata, H. & Veredas, D. Inference for vast dimensional elliptical distributions. Comput Stat 28, 1853–1880 (2013). https://doi.org/10.1007/s00180-012-0384-3

DOI: https://doi.org/10.1007/s00180-012-0384-3