Abstract

The Spatial-Numerical Association of Response Codes (SNARC) effect reflects an association between number magnitude and response position, with faster left-hand responses to small numbers and faster right-hand responses to large numbers. Recent studies have revealed similar spatial association effects for non-numerical magnitudes, such as temporal durations and musical stimuli. In the present study we investigated whether a spatial association exists between music tempo, expressed in beats per minute (bpm), and response position. In particular, we asked whether this effect is consistent across different bpm ranges. Participants judged whether a target beat sequence was faster or slower than a reference sequence. Three groups of participants judged beat sequences from three different bpm ranges: a wide range (40, 80, 160, 200 bpm) and two narrow ranges (“slow” tempo: 40, 56, 88, 104 bpm; “fast” tempo: 133, 150, 184, 201 bpm). Results showed a clear SNARC-like effect for music tempo only in the narrow “fast” range, with faster left-hand responses to 133 and 150 bpm and faster right-hand responses to 184 and 201 bpm. Conversely, no such association emerged in either the wide or the narrow “slow” range. This evidence suggests that music tempo is spatially represented like other continuous quantities, but that its representation might be restricted to a particular range of tempos. Moreover, music tempo and temporal duration might be represented across space in opposite directions.


Introduction

Converging evidence from different domains suggests that the representation of magnitudes is strongly linked with space. Across different tasks and types of stimuli, humans show a reliable tendency to respond to different ranges of stimuli using preferred spatial coordinates. A well-known example is the Spatial-Numerical Association of Response Codes (SNARC) effect, a left (vs. right) response advantage for small (vs. large) numbers (Dehaene, Bossini & Giraux, 1993). Dehaene et al. suggested that this effect is directly connected to the mental representation of numbers in Western culture, namely a spatially oriented mental number line (MNL; Restle, 1970). Like numbers, other non-numerical magnitudes have been shown to be spatially coded and to elicit analogous effects, often referred to as SNARC-like effects. Examples include angle magnitude (Fumarola et al., 2016), the physical size of pictorial surfaces (Ren, Nicholls, Ma & Chen, 2011; Prpic et al., 2018), luminance (Fumarola et al., 2014; Ren et al., 2011), loudness (Hartmann & Mast, 2017) and emotional magnitude (Holmes & Lourenco, 2011; but see also Fantoni et al., 2019). Among the great variety of stimuli that elicit a response pattern similar to the SNARC effect, we restrict our review of the literature to the domains of musical cognition and temporal information processing.

Several studies have investigated the relationship between musical stimuli and space. Rusconi, Kwan, Giordano, Umiltà and Butterworth (2006) first revealed a SNARC-like effect for pitch height (the so-called SMARC effect, for Spatial-Musical Association of Response Codes), which consists of a bottom/left response advantage for low pitches and a top/right response advantage for high pitches. This phenomenon has been consistently replicated in several follow-up studies (Lachmair, Cress, Fissler, Kurek, Leininger & Nuerk, 2017; Lidji, Kolinsky, Lochy & Morais, 2007; Pitteri, Marchetti, Priftis & Grassi, 2017; Prpic & Domijan, 2018; Timmers & Li, 2016). Although most studies in the field have focused on tonal aspects of music (i.e., pitch height), a few have investigated temporal aspects of musical stimuli. In particular, musical note values (the symbolic representation of a note’s duration) have elicited SNARC-like effects in various tasks previously used in numerical cognition (Prpic et al., 2016; Prpic, 2017). This suggests that numbers and musical notes can be represented in a similar spatial manner.

The relationship between temporal aspects and space has also been extensively investigated beyond the musical domain. For instance, Vallesi, Binns and Shallice (2008) reported that participants assessing the temporal duration of visual stimuli showed a left-response advantage for short durations and a right-response advantage for long durations. Similar results were found when participants compared the durations of pairs of auditory tones (Conson, Cinque, Barbarulo & Trojano, 2008). In another study, Ishihara, Keller, Rossetti and Prinz (2008) asked participants to judge the onset timing (early vs. late) of an auditory stimulus following a periodic, regular beat sequence. The interval between consecutive beats of the sequence served as a reference for judging whether the target onset came earlier or later than one such interval after the last beat. In this procedure, participants had to focus on the duration between the last beat of the regular sequence and the probe sound. Results showed a left-response advantage for early onsets and a right-response advantage for late onsets, suggesting that time is represented from left to right along the horizontal axis. In other words, when participants focused on duration, shorter durations were spatially represented on the left and longer durations on the right. Several other studies have investigated the interactions between time and space processing, supporting the idea that the flow of time is represented on a spatially oriented “mental time line” (for a review, see Bonato, Zorzi & Umiltà, 2012).

Converging evidence of the interaction between numerical/non-numerical magnitudes, time and space is suggestive of a shared magnitude representation system (Bueti & Walsh, 2009; Walsh, 2003). Indeed, in his ATOM (A Theory of Magnitude) model, Walsh (2003) suggests that spatial representation might be the most suitable form for representing various types of magnitudes. The idea of a generalised magnitude system is further supported by evidence of a common neural mechanism for numbers, temporal durations and space, which seems to be located in the intraparietal sulcus (IPS) (Fias, Lammertyn, Reynvoet, Dupont & Orban, 2003; Leon & Shadlen, 2003; Piazza, Pinel, Le Bihan & Dehaene, 2007; Pinel, Piazza, Le Bihan & Dehaene, 2004). Therefore, time, space and numbers are likely to share common neural areas and a generalised representational system (i.e., left-to-right orientation).

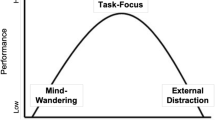

In the present study we sought to investigate the spatial representation of a fundamental temporal aspect of music, namely music tempo. In music terminology, tempo is defined as the speed or pace of a musical composition and is usually measured in beats per minute (bpm) (Honing, 2013). The instrument traditionally used to mark music tempo is the metronome. Tempo, however, is not only important for music; it is also a fundamental component of every motor activity (Larsson, 2014), such as dancing, playing sports or simply walking (for a review, see Murgia et al., 2017). It is worth stressing that music tempo differs from time duration: the former is a fundamental aspect of music and of other rhythm-related motor activities, whereas the latter is a more general aspect of time. We hypothesize that, like other temporal information, music tempo can be spatially represented along the horizontal axis. From this perspective, the investigation of music tempo raises the intriguing possibility that music tempo is processed differently from other aspects of time, such as time duration. As previously mentioned, Ishihara et al. (2008) reported that time duration is represented from left to right, from shorter to longer durations. Music tempo, on the contrary, is traditionally labelled “fast” when the time between beats (i.e., the inter-beat interval) is “short”, and “slow” when the intervals between beats are “long”. In other words, a tempo with short intervals between beats has a high beat frequency, and a tempo with long intervals between beats has a low beat frequency. Consequently, if participants process the temporal length of the intervals between beats when assessing music tempo, we should expect an association resembling the one reported in previous studies (Ishihara et al., 2008; Vallesi, Binns & Shallice, 2008). That is, slow beat sequences (long temporal intervals between beats) should be associated with the right space, while fast beat sequences (short temporal intervals between beats) should be associated with the left space. Conversely, if music tempo is processed as temporal frequency (where “frequency” denotes the number of beats per unit of time) rather than as temporal duration, we should expect the opposite direction: slow beat sequences (low temporal frequency) should be associated with the left space, while fast beat sequences (high temporal frequency) should be associated with the right space.
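The two competing predictions above rest on a single conversion: the inter-beat interval (IBI) in milliseconds equals 60,000 divided by the tempo in bpm, so ordering stimuli by duration reverses their ordering by frequency. The Python sketch below makes this explicit for the Experiment 1 stimuli; the function names and the coding labels "duration" and "frequency" are illustrative assumptions, not part of the original study.

```python
def ibi_ms(bpm: float) -> float:
    """Inter-beat interval (ms) for a tempo in beats per minute."""
    return 60_000 / bpm


def predicted_side(target_bpm: float, reference_bpm: float, coding: str):
    """Side (left/right) predicted to respond faster to a target judged against
    the reference, under one of two hypothetical magnitude codings.

    coding="duration":  magnitude = inter-beat interval; longer  -> right.
    coding="frequency": magnitude = beats per minute;    higher  -> right.
    Returns None when target and reference have the same tempo.
    """
    if target_bpm == reference_bpm:
        return None
    if coding == "duration":
        larger = ibi_ms(target_bpm) > ibi_ms(reference_bpm)
    elif coding == "frequency":
        larger = target_bpm > reference_bpm
    else:
        raise ValueError(f"unknown coding: {coding!r}")
    return "right" if larger else "left"


# Experiment 1 targets against the 120-bpm reference (IBI 500 ms).
for target in (40, 80, 160, 200):
    print(target, ibi_ms(target),
          predicted_side(target, 120, "duration"),
          predicted_side(target, 120, "frequency"))
```

For every target the two codings predict opposite sides, which is why the direction of any observed association can discriminate between them.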

Preliminary evidence of the spatial representation of music tempo has already been reported by Prpic, Fumarola, De Tommaso, Baldassi and Agostini (2013), suggesting that temporal frequency drives this effect (slower beat sequences were preferentially responded to with the left key, and vice versa). However, this study only marginally investigated the phenomenon, leaving several questions unresolved. Firstly, the temporal range of the stimuli used in the previous study was quite narrow and did not cover the full range of tempos commonly used in music and dance. In particular, the SNARC-like effect for music tempo reported in Prpic et al. (2013) was revealed only for relatively fast tempos (ranging from 133 bpm to 201 bpm), while slower tempos were not considered. Secondly, the study failed to show a clear association for all the stimuli tested. Specifically, the slowest stimulus (i.e., 133 bpm) did not elicit a response advantage for either left or right responses, while a clear association was evident for the other tempos tested, namely 150, 184 and 201 bpm. As a consequence, the range of stimuli for which the association was actually observed is even narrower.

The issues related to the stimulus range are not only problematic for generalising the effect to other ranges of tempos, but are also important for assessing whether this effect has the same properties as the SNARC effect. Indeed, one of the main characteristics of the SNARC effect is its flexibility, which manifests as range dependency. In the numerical domain, for example, the digits 4 and 5 are associated with the right space when the range tested is 0–5, but with the left space when the range is 4–9 (Antoine & Gevers, 2016; Dehaene et al., 1993; Fias et al., 1996). Similarly, if the effect revealed for music tempo had the same properties as the SNARC effect, it should show a similar degree of flexibility across different ranges of stimuli.
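One simple, hypothetical way to formalise range dependency is to assume that each stimulus is coded by its relative position within the tested set rather than by its absolute value. The Python sketch below (function names are ours; the half-way split is an assumption, not a model proposed by the studies cited) reproduces the digit example for the 0–5 and 4–9 ranges. Applied to tempo, it further assumes frequency coding, so a higher bpm counts as "larger".

```python
def relative_position(value: float, stimulus_set) -> float:
    """Position of value within the tested set: 0 = smallest, 1 = largest."""
    lo, hi = min(stimulus_set), max(stimulus_set)
    return (value - lo) / (hi - lo)


def range_relative_side(value: float, stimulus_set) -> str:
    """Hypothetical relative-coding rule: values in the lower half of the
    tested range map to the left, values in the upper half to the right."""
    r = relative_position(value, stimulus_set)
    if r < 0.5:
        return "left"
    if r > 0.5:
        return "right"
    return "none"  # exact midpoint: no predicted side


# Digit example: 4 and 5 are "large" within 0-5 but "small" within 4-9.
for digit in (4, 5):
    print(digit,
          range_relative_side(digit, range(0, 6)),
          range_relative_side(digit, range(4, 10)))
```

Under this rule the same physical tempo could map to different sides depending on the set it is tested in, which is the kind of flexibility the narrow-range experiments were designed to probe.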

The aim of the present study is thus twofold. The first aim is to replicate the spatial association effect and to generalise it to a wider range of tempos. The second is to test for range dependency by separately investigating two narrower ranges of tempos (i.e., slow vs. fast). To do so, we designed three separate experiments, each consisting of a two-alternative forced-choice speed comparison task. Periodic beat sequences with different tempos had to be judged as slower or faster than a reference beat sequence with an intermediate tempo. In Experiment 1, we tested a wide range of stimuli (from 40 to 200 bpm) encompassing the extremes of rhythm perception (Fraisse, 1978). Within this range, 200 bpm marked an upper limit at which the beats’ cadence still allows them to be perceived as distinct entities, whereas 40 bpm marked a lower limit at which beats can still be perceived as a rhythmic stream rather than as independent sounds. Moreover, such a wide range of tempos is more representative of the rhythms commonly used in music and dance. In Experiments 2 and 3, we separately tested two narrower ranges of stimuli, one with relatively slow tempos (slower than 120 bpm; Experiment 2) and one with relatively fast tempos (faster than 120 bpm; Experiment 3). While all the experiments were designed to test whether the spatial association for music tempo reported by Prpic et al. (2013) extends to a wider range of stimuli, Experiments 2 and 3 additionally allowed us to investigate range dependency.

Experiment 1

Methods

Participants

Eighteen undergraduate students (Mage = 22.1 years, 15 females) with no formal musical or dance education took part in the experiment after providing informed consent. Standard school music classes did not count as formal musical or dance education. All participants were right-handed and native Italian speakers. The experiment was carried out in accordance with the Declaration of Helsinki.

Apparatus

Participants were seated in a dimly illuminated room, 60 cm from the monitor (1,024 × 768 resolution, 100 Hz). Stimulus generation and presentation were controlled using E-Prime 2.0 software (Psychology Software Tools, Pittsburgh, PA, USA) running on Windows 7. Auditory stimuli were delivered through AKG K240 headphones. All sounds were synthesized and manipulated using a demo version of the software Ableton Live 8.

Stimuli

Auditory stimuli consisted of regular rhythmic beats streaming in five different tempos: 40, 80, 120, 160 and 200 bpm. The 120-bpm stimulus served as reference stimulus, while the other stimuli served as targets (see Procedure for details). The beat units comprising the stimuli were the same across all experiments and had a metronome-like timbre. The frequency spectrum of the beat unit was always the same. The amplitude of the stimuli was set at a comfortable level for each participant and held constant throughout the whole experiment.

Procedure

Each trial started with a central white fixation cross on a uniformly black background, which lasted 500 ms. Then, the reference sequence started to play in the participant’s headphones; it was always the standard 120-bpm stimulus. The reference lasted long enough for participants to hear four beat units (i.e., the first beat unit started at 0 ms, the second at 500 ms, the third at 1,000 ms and the fourth at 1,500 ms), while a white hash sign (#) appeared on the screen. The duration of the silent ISI after the fourth beat unit varied randomly (700 ms or 1,000 ms) to prevent participants from anticipating the start of the target sequence; the target stimulus then started playing while a white exclamation mark appeared on the screen. Participants had to report as quickly and as accurately as possible whether the target stimulus was slower or faster than the reference stimulus, by pressing the “a” or the “l” keyboard key with the index finger of their left or right hand, respectively. The experiment was divided into two sessions, whose order was counterbalanced between participants: in one session, participants responded with the right hand (“l” key) if the target was faster than the reference and with the left hand (“a” key) if it was slower. In the other session, the response assignment was reversed. After the response, the stimulation stopped and a silent 1,500-ms blank screen preceded the next trial (see Fig. 1). Each session comprised 80 trials, balanced across target conditions and presented in random order. Before each experimental session, participants performed eight practice trials.
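The trial structure described above can be sketched as a timeline of beat onsets plus a balanced, shuffled trial list. This is a hypothetical Python reconstruction, not the original E-Prime script. It assumes that the 700/1,000 ms ISI runs from the onset of the fourth reference beat to the onset of the first target beat and that each ISI is equally likely; the number of target beats listed is illustrative only, since in the experiment the target played until the response.

```python
import random

FIXATION_MS = 500        # fixation cross duration reported in the Procedure
REFERENCE_BEATS = 4      # the reference sequence contains four beat units
ISI_OPTIONS_MS = (700, 1000)

# Hypothetical encoding of the two counterbalanced response mappings
# ("a" = left index finger, "l" = right index finger).
MAPPINGS = ({"faster": "l", "slower": "a"}, {"faster": "a", "slower": "l"})


def beat_onsets(bpm, n_beats, start_ms=0.0):
    """Onset times (ms) of n_beats evenly spaced beats at the given tempo."""
    ibi = 60_000 / bpm
    return [start_ms + i * ibi for i in range(n_beats)]


def trial_timeline(reference_bpm, target_bpm, isi_ms, n_target_beats=4):
    """Beat onsets relative to fixation onset (assumed ISI definition above)."""
    reference = beat_onsets(reference_bpm, REFERENCE_BEATS, start_ms=FIXATION_MS)
    target_start = reference[-1] + isi_ms
    target = beat_onsets(target_bpm, n_target_beats, start_ms=target_start)
    return {"reference": reference, "target": target}


def session_trials(targets, n_trials=80, seed=None):
    """Balanced, shuffled list of (target_bpm, isi_ms) pairs for one session."""
    rng = random.Random(seed)
    per_target = n_trials // len(targets)
    trials = [(t, rng.choice(ISI_OPTIONS_MS))
              for t in targets for _ in range(per_target)]
    rng.shuffle(trials)
    return trials


# Experiment 1: 120-bpm reference, four targets, 20 trials each per session.
print(trial_timeline(120, 160, 700))
print(session_trials([40, 80, 160, 200], seed=1)[:5])
```

With 80 trials and four targets, each target appears 20 times per session, matching the balanced design described above.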

Results

The overall accuracy in reporting the target speed relative to the reference stimulus was 98.02%. Here and in the following experiments, accuracy rates were not analysed owing to the low number of errors. Outliers (here 6.5% of the data) were defined as RTs more than 2 standard deviations from the mean of each condition. The remaining data were analysed with a repeated-measures regression design (Fias, Brysbaert, Geypens & D’Ydewalle, 1996; Lorch & Myers, 1990), as follows. We computed the median reaction time (RT) of correct responses for each participant and each target stimulus, separately for left- and right-hand responses. ΔRT was then computed by subtracting the median RT of left-hand responses from the median RT of right-hand responses:

\[ \Delta\mathrm{RT} = \mathrm{RT}_{\mathrm{right}} - \mathrm{RT}_{\mathrm{left}} \]

As a result, positive ΔRTs indicate faster left-hand responses, whereas negative ΔRTs indicate faster right-hand responses. Target tempo was taken as the predictor variable and ΔRT as the criterion variable. We then computed a regression equation for each participant and extracted the β regression coefficients. Finally, a one-sample t test assessed whether the group’s βs deviated significantly from zero. The regression slopes were not significantly different from zero, t(17) = 0.577, p = .572, indicating that left and right key-presses did not differ as a function of the tempo of the target stimuli (see Fig. 2, panel a).
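The repeated-measures regression procedure (Lorch & Myers, 1990) described above can be sketched with the Python standard library. The sketch assumes that the 2-SD outlier criterion is applied within each participant × tempo × hand cell, which the text does not state explicitly; all function names are hypothetical. A two-tailed p value could be obtained with `scipy.stats.ttest_1samp(betas, 0.0)`, omitted here to keep the sketch dependency-free.

```python
from math import sqrt
from statistics import mean, median, stdev


def trim_2sd(rts):
    """Drop RTs more than 2 SD from the cell mean (assumed per-cell criterion)."""
    if len(rts) < 3:
        return list(rts)
    m, s = mean(rts), stdev(rts)
    return [x for x in rts if abs(x - m) <= 2 * s]


def delta_rts(cells):
    """cells: {tempo_bpm: {"left": [RTs], "right": [RTs]}}, correct trials (ms).
    Returns {tempo_bpm: median(right) - median(left)}; positive = left advantage."""
    return {
        tempo: median(trim_2sd(hands["right"])) - median(trim_2sd(hands["left"]))
        for tempo, hands in cells.items()
    }


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys on xs."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


def participant_beta(cells):
    """Per-participant regression of delta RT on target tempo (bpm)."""
    d = delta_rts(cells)
    tempos = sorted(d)
    return ols_slope(tempos, [d[t] for t in tempos])


def one_sample_t(values, mu=0.0):
    """t statistic and degrees of freedom for a one-sample t test against mu."""
    n = len(values)
    return (mean(values) - mu) / (stdev(values) / sqrt(n)), n - 1
```

A negative group-mean β indicates that right-hand responses become relatively faster as tempo increases; a positive one indicates the reverse.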

An additional analysis was conducted on absolute RTs. A repeated-measures ANOVA on RTs of correct responses, with Response side (left vs. right) and Tempo (40, 80, 160 and 200 bpm) as within-subjects factors, showed a significant main effect of Tempo, F(3,51) = 24.80, p < .001, \( {\upeta}_{\mathrm{p}}^2 \) = .593, but no other significant effect (main effect of Response side, p = .932; Response side × Tempo interaction, p = .970), indicating that RTs varied across tempos but were not modulated by the side of response (see Fig. 3, panel a). This confirms the results of the regression analysis, suggesting the absence of a SNARC-like effect for music tempo.
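As a consistency check on the reported ANOVA statistics, partial eta squared can be recovered from an F ratio and its degrees of freedom, since \( {\upeta}_{\mathrm{p}}^2 = SS_{\mathrm{effect}} / (SS_{\mathrm{effect}} + SS_{\mathrm{error}}) = F \cdot df_{\mathrm{effect}} / (F \cdot df_{\mathrm{effect}} + df_{\mathrm{error}}) \). In a fully within-subjects design, the error df of an effect equals its own df times (n − 1): 3 × 17 = 51 for Experiment 1 (n = 18) and 3 × 20 = 60 for Experiments 2 and 3 (n = 21). The helper names below are our own, and the sketch assumes uncorrected degrees of freedom.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F ratio: F*df_effect / (F*df_effect + df_error)."""
    return f * df_effect / (f * df_effect + df_error)


def within_error_df(df_effect: int, n_participants: int) -> int:
    """Error df of an effect in a fully within-subjects (repeated-measures) design."""
    return df_effect * (n_participants - 1)


# Reported F tests: (label, F, df_effect, n participants, reported eta_p^2)
REPORTED = [
    ("Exp 1, Tempo", 24.80, 3, 18, 0.593),
    ("Exp 2, Tempo", 31.84, 3, 21, 0.614),
    ("Exp 3, Tempo", 10.34, 3, 21, 0.341),
    ("Exp 3, Side x Tempo", 2.98, 3, 21, 0.130),
]

for label, f, df1, n, reported in REPORTED:
    df2 = within_error_df(df1, n)
    eta = partial_eta_squared(f, df1, df2)
    print(f"{label}: F({df1},{df2}) -> eta_p^2 = {eta:.3f} (reported {reported:.3f})")
```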

Discussion

The aim of Experiment 1 was to investigate the spatial representation of music tempo across a wide range of tempos spanning the boundaries of rhythm perception. However, no association between slower (vs. faster) tempos and left (vs. right) space emerged, apparently suggesting that the results of Prpic et al. (2013) do not generalise to a wider range of temporal stimuli.

However, the stimuli tested in Experiment 1 and in Prpic et al. (2013) differed in at least two important respects. First, as already mentioned, the stimuli spanned very different tempo ranges (40–200 bpm in Experiment 1; 133–201 bpm in Prpic et al., 2013). One hypothesis is therefore that a SNARC-like effect for music tempo can be elicited only within a certain range of temporal frequencies. Here, the term frequency refers to the number of beats per unit of time and is not to be confused with the frequency of the pitch of the beat itself (the rate of sound pressure oscillations), which was held constant throughout the study. Given the partial overlap between the stimuli used in the two studies, the results might suggest that the effect is not elicited by the slowest stimuli presented in Experiment 1. Second, the bpm distance between adjacent stimuli also differed substantially: adjacent stimuli differed by 40 bpm in Experiment 1 but by only 17 bpm in Prpic et al. (2013). Participants may thus have found it difficult to integrate stimuli with such different cadences into a unitary temporal representation, preventing a SNARC-like effect for music tempo from emerging. To disentangle these two hypotheses, we ran two further experiments in which we manipulated the overall temporal range of the stimuli while keeping the bpm distance between adjacent stimuli constant within each experiment. In Experiments 2 and 3, only relatively slow (40, 56, 72, 88 and 104 bpm) and relatively fast (133, 150, 167, 184 and 201 bpm) tempos were presented, respectively, with a bpm distance between adjacent stimuli similar to that used by Prpic et al. (2013).

Experiment 2

Methods

Participants

Twenty-one undergraduate students (Mage = 22.1 years, 20 females) with no formal musical or dance education took part in the experiment after providing informed consent. Standard school music classes did not count as formal musical or dance education. All participants were right-handed and native Italian speakers. None of the participants took part in Experiment 1. The experiment was carried out in accordance with the Declaration of Helsinki.

Apparatus

Apparatus was the same as in Experiment 1.

Stimuli

Stimuli were the same as in Experiment 1, except that auditory stimuli consisted of regular rhythmic beats streaming in five different tempos: 40, 56, 72, 88 and 104 bpm. The 72-bpm stimulus served as reference stimulus, while the other stimuli served as target stimuli.

Procedure

The procedure was the same as in Experiment 1, except for the fact that the reference stimulation was always 72 bpm.

Results

As in Experiment 1, the analysis was carried out using a repeated-measures regression design (Fias et al., 1996; Lorch & Myers, 1990). The overall accuracy in reporting the target speed relative to the reference stimulus was 97.67%. Outliers accounted for 6.3% of the data. A one-sample t test on the β regression coefficients of all participants revealed that the regression slopes were not significantly different from zero, t(20) = -1.292, p = .211, indicating that left and right key-presses did not differ as a function of the tempo of the target stimuli (see Fig. 2, panel b).

The analysis on absolute RTs, with the same factors as in Experiment 1, showed a significant main effect of Tempo F(3,60) = 31.84, p < .001, \( {\upeta}_{\mathrm{p}}^2 \) = .614, but no other significant effect (main effect of Response side, p = .986; Response side × Tempo interaction, p = .645), indicating that RTs varied across stimuli depending on their Tempo but they were not modulated by the side of response (see Fig. 3, panel b). This confirms the results of the regression analysis, thus suggesting the absence of a SNARC-like effect for music tempo.

Discussion

Experiment 2 tested the spatial representation of music tempo in the range of relatively slow tempos (40, 56, 72, 88 and 104 bpm). In contrast to Experiment 1 and in line with Prpic et al. (2013), adjacent stimuli were close to one another in bpm. Despite this, our results failed to show a SNARC-like effect for music tempo in the range of stimuli tested. These results suggest that a SNARC-like effect for music tempo might emerge only within a given range of temporal frequencies, which does not appear to include the relatively slow range.

In Experiment 3, we aimed to replicate the results obtained by Prpic et al. (2013) by testing the SNARC-like effect for music tempo in the relatively fast temporal range, while keeping the bpm distance between adjacent stimuli similar to that used in Experiment 2.

Experiment 3

Methods

Participants

Twenty-one undergraduate students (Mage = 22.1 years, 20 females) with no formal musical or dance education took part in the experiment after providing informed consent. Standard school music classes did not count as formal musical or dance education. All participants were right-handed and native Italian speakers. None of the participants took part in Experiments 1 or 2. The experiment was carried out in accordance with the Declaration of Helsinki.

Apparatus

Apparatus was the same as in Experiment 1.

Stimuli

Stimuli were the same as in Experiment 1, except that auditory stimuli consisted of regular rhythmic beats streaming in five different tempos: 133, 150, 167, 184 and 201 bpm. The 167-bpm stimulus served as reference stimulus, while the other stimuli served as targets.

Procedure

The procedure was the same as in Experiment 1, except for the fact that the reference stimulation was always 167 bpm.

Results

As in Experiments 1 and 2, the analysis was carried out using a repeated-measures regression design (Fias et al., 1996; Lorch & Myers, 1990). The overall accuracy in reporting the target speed relative to the reference stimulus was 94.49%. Outliers accounted for 6% of the data. A one-sample t test on the β regression coefficients of all participants revealed that the regression slopes were significantly different from zero, t(20) = -2.592, p = .017, d = -0.566. These results indicate a relative left key-press advantage in processing slower tempos (i.e., 133 and 150 bpm) and a relative right key-press advantage in processing faster tempos (i.e., 184 and 201 bpm; see Fig. 2, panel c).
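The reported effect size can be checked against the t statistic: for a one-sample t test, Cohen’s d = M / SD and t = M / (SD / √n), so d = t / √n. The short Python sketch below (helper name ours) applies this to the Experiment 3 values, t(20) = -2.592 with n = 21.

```python
from math import sqrt


def cohens_d_one_sample(t: float, n: int) -> float:
    """Cohen's d for a one-sample t test: d = t / sqrt(n), since t = M / (SD / sqrt(n))."""
    return t / sqrt(n)


d = cohens_d_one_sample(-2.592, 21)  # Experiment 3: t(20) = -2.592, n = 21
print(f"d = {d:.3f}")
```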

The analysis on absolute RTs, with the same factors as in Experiment 1, showed a significant main effect of Tempo, F(3,60) = 10.34, p < .001, \( {\upeta}_{\mathrm{p}}^2 \) = .341, and a significant Response side × Tempo interaction, F(3,60) = 2.98, p = .038, \( {\upeta}_{\mathrm{p}}^2 \) = .130, but no significant main effect of Response side (p = .133), indicating that RT variation across tempos was modulated by the side of response (see Fig. 3, panel c). This confirms the results of the regression analysis, suggesting the presence of a SNARC-like effect for music tempo, with slower tempos preferentially responded to with the left key and faster tempos with the right key.

Discussion

In Experiment 3 we aimed at replicating the SNARC-like effect reported in Prpic et al. (2013) by testing the spatial association for music tempo in the relatively fast temporal range. Our results show a significant spatial association effect, with faster left-key responses for slower tempos and faster right-key responses for faster tempos in the range of stimuli at test. These results are in line with the hypothesis that a SNARC-like effect for music tempo might emerge only within the relatively fast range of temporal frequencies perceived by humans.

General discussion

In this study we investigated whether music tempo is spatially represented similarly to other numerical and non-numerical magnitudes. To the best of our knowledge, only one study has previously addressed this question (Prpic et al., 2013), providing the first evidence of an association between relatively slow (vs. fast) tempos and left (vs. right) space. The present study was designed to replicate previous findings and extend them to a wider range of stimuli. Indeed, Prpic et al. (2013) tested only a narrow range of stimuli, which limited the generalisability of the effect to the entire range of tempos commonly used in music and dance. Furthermore, we wanted to verify whether the effect for music tempo shares the flexibility of the SNARC effect, as indexed by range dependency. We designed three experiments to address these questions. Participants performed two-alternative forced-choice speed comparison tasks in which periodic beat sequences with different tempos had to be judged as slower or faster than a reference beat sequence with an intermediate tempo.

In Experiment 1, the stimuli consisted of four periodic beat sequences with different tempos (40, 80, 160 and 200 bpm) and an intermediate reference standard (120 bpm). Whereas the previous study used only a narrow range of relatively fast tempos (Prpic et al., 2013), the current study presented a wide range spanning from very slow to very fast tempos. Our results failed to show any evidence of an association between slow (vs. fast) tempos and left (vs. right) space, suggesting that the previously reported association does not generalise to the full range of tempos. However, an alternative hypothesis was that the differences between the stimuli were so large that participants failed to create a unitary representation of them. Indeed, comparing stimuli with very large temporal differences could be unusual and could cause high variability in the responses. To investigate this hypothesis, in Experiments 2 and 3 we separately tested slow and fast temporal ranges while reducing the gap between stimuli. Another way to overcome the large temporal differences between stimuli while still testing a wide range of tempos would be to use more stimuli, separated by smaller gaps, covering the whole 40–200 bpm spectrum. We did not adopt this solution for two main reasons. First, it would have required a large number of stimuli: to keep the bpm difference between adjacent stimuli comparable to that of the other experiments, ten test stimuli plus an intermediate reference stimulus would have been needed. Second, the difference between the reference stimulus and the test stimuli at the extremes would have been much larger than in the other experiments. Both issues would have made the results difficult to interpret and to compare across experiments. We therefore ran two separate experiments instead.

Because the stimuli in Experiments 2 and 3 were equally spaced in bpm, they were necessarily unequally spaced in terms of the time interval between beats. This is unavoidable, because the inter-beat interval is by definition inversely proportional to tempo: equal steps in bpm correspond to progressively smaller steps in milliseconds as tempo increases.
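The inhomogeneity described above follows directly from the relation inter-beat interval (ms) = 60,000 / bpm. The Python sketch below (helper names ours) computes how much the inter-beat interval shortens between adjacent stimuli in the slow and fast sets: equal 16 to 17 bpm steps shorten the interval by about 429 ms at the slow end (40 to 56 bpm) but by under 30 ms at the fast end (184 to 201 bpm).

```python
def ibi_ms(bpm: float) -> float:
    """Inter-beat interval (ms) for a tempo in beats per minute."""
    return 60_000 / bpm


def ibi_steps_ms(tempos):
    """Shortening of the inter-beat interval between adjacent tempos (ms)."""
    ibis = [ibi_ms(b) for b in sorted(tempos)]
    return [longer - shorter for longer, shorter in zip(ibis, ibis[1:])]


SLOW = [40, 56, 72, 88, 104]       # Experiment 2, 16-bpm steps
FAST = [133, 150, 167, 184, 201]   # Experiment 3, 17-bpm steps

for name, tempos in (("slow", SLOW), ("fast", FAST)):
    print(name, [round(step, 1) for step in ibi_steps_ms(tempos)])
```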

Experiments 2 and 3 consisted of two separate conditions that differed in the range of the stimuli tested. In both the slow (Experiment 2) and the fast (Experiment 3) tempo conditions, stimuli consisted of four beat sequences (slow tempo: 40, 56, 88 and 104 bpm; fast tempo: 133, 150, 184 and 201 bpm) and an intermediate reference standard (slow tempo: 72 bpm; fast tempo: 167 bpm). Our results showed no evidence of an association between tempo and space in the slow tempo condition (Experiment 2): although we reduced the gap between the stimuli to a level comparable to that of the previous study (Prpic et al., 2013), a SNARC-like effect for music tempo was still absent. Conversely, the fast tempo condition revealed a clear association of relatively slow tempos (133 and 150 bpm) with the left space and of relatively fast tempos (184 and 201 bpm) with the right space, successfully replicating the findings of Prpic et al. (2013).

Experiments 2 and 3 suggest that slow and fast music tempos differ substantially in their ability to elicit a SNARC-like effect: only in the fast tempo condition was a significant association between music tempo and the side of response execution revealed. One possible explanation for this difference is that extremely slow beat sequences may fail to be represented as a unitary stream of events. Every periodic beat sequence, like the metronome stimuli used in our study, is composed of a series of events (beats) separated by temporal gaps. In fast sequences the gaps are quite short, but they become increasingly longer in slow sequences. To perceive a temporal sequence as a unitary stream of events, we need to “fill” the gaps between beats and create a representation of the whole beat sequence. We speculate that this process works well when tempo lies within a certain range, but degrades when tempo is extremely slow. Although our stimuli fell within the perceptual boundaries of rhythm identified by Fraisse (1978), we speculate that in Experiments 1 and 2 the slower stimuli, lying so close to the lower boundary, were difficult to group into a single rhythmic stream. When the gap between beats becomes too large, we may start perceiving single events (beats) instead of a unitary stream. Conversely, for extremely fast sequences the gap between beats would become so small that we would start perceiving a continuous sound. Therefore, one possible account of the lack of a SNARC-like effect for music tempo in both Experiment 1 and Experiment 2 is that participants struggled to create a unitary representation of the slowest beat sequences used in this study (e.g., 40 bpm).

Another possible explanation for our results relates to the time course of the SNARC effect. We can assume that there is a relatively narrow temporal window in which the SNARC effect is elicited, with a well-defined onset and decay. To our knowledge, no study to date has specifically investigated the time course of the SNARC effect considering both its onset and its decay. The only available indications come from studies using a detection task with numbers as cues and non-numerical spatial (left/right) targets. The study that first reported the time course of the so-called attentional SNARC effect showed that it appears 400 ms after stimulus presentation, becomes robust at 500 ms, lasts until 750 ms, and deteriorates around 1,000 ms (Fischer, Castel, Dodd & Pratt, 2003). Another study (Dodd, Van der Stigchel, Leghari, Fung & Kingstone, 2008) further narrowed the time window of the effect, reporting a robust effect 500 ms after stimulus presentation but no effect at 750 ms, thus suggesting a faster deterioration. However, given the differences between the paradigms used in these studies and in the present work, we can only speculate that if a response is delayed, the effect might fade to the point of disappearing. Regarding the onset of the SNARC effect, a recent study adopting the classical SNARC paradigm showed that the strength of the SNARC effect is modulated by overall response latencies irrespective of the level of semantic processing (Didino, Breil & Knops, 2019): faster responses (<450 ms) did not elicit a SNARC effect, while slower responses (>500 ms) elicited the strongest effect. Overall, these studies suggest that the SNARC effect has a well-defined time window; it is therefore possible that some of our manipulations exceeded the temporal boundaries of the effect itself.

In the present study, it is not possible to define the exact moment at which our stimuli were presented, since music tempo unfolds over time and cannot be captured at one precise moment; this differs from what occurs for numbers and many other kinds of stimuli. Only the first beat of each sequence was presented at the same time for every stimulus, while subsequent beats followed at different intervals depending on tempo. To determine tempo, however, people need to listen to at least two beats and are therefore forced to wait a certain amount of time. It is thus possible that, if the interval between beats exceeds the time course of the effect, no SNARC-like effect would be revealed. This speculation is supported by the fact that the slowest tempo used in both Experiment 1 and Experiment 2 was 40 bpm, which corresponds to a gap of 1,500 ms between successive beats (60,000 ms divided by 40 beats), largely exceeding the time courses reported for the SNARC effect.
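The inter-beat interval arithmetic in the preceding paragraph can be made explicit for every tempo reported in the three experiments. The Python sketch that follows converts each tempo into an inter-beat interval (60,000 ms divided by bpm) and flags intervals longer than 750 ms, the latest point at which Fischer et al. (2003) still reported a robust attentional SNARC effect. Treating 750 ms as a hard cutoff is purely an illustrative assumption, since those studies used a different paradigm; the function and constant names are hypothetical.

```python
# Inter-beat interval (IBI) of a periodic beat sequence: 60,000 ms per minute / bpm.
def inter_beat_interval_ms(bpm: float) -> float:
    return 60_000 / bpm


# Tempos of the four target sequences in each experiment, as reported in the article.
STIMULI = {
    "wide (Exp. 1)": [40, 80, 160, 200],
    "slow (Exp. 2)": [40, 56, 88, 104],
    "fast (Exp. 3)": [133, 150, 184, 201],
}

# ASSUMPTION: 750 ms (last robust point in Fischer et al., 2003) used as a hard
# cutoff for the SNARC time window; this is illustrative, not an established limit.
SNARC_WINDOW_END_MS = 750


def second_beat_exceeds_window(bpm: float, window_end_ms: float = SNARC_WINDOW_END_MS) -> bool:
    # True if the second beat (needed to infer tempo) arrives after the assumed window closes.
    return inter_beat_interval_ms(bpm) > window_end_ms


if __name__ == "__main__":
    for label, tempos in STIMULI.items():
        for bpm in tempos:
            ibi = inter_beat_interval_ms(bpm)
            status = "beyond window" if second_beat_exceeds_window(bpm) else "within window"
            print(f"{label:14s} {bpm:4d} bpm -> IBI {ibi:7.1f} ms ({status})")
```

Under this assumption, 40 bpm yields an interval of 1,500 ms, twice the cutoff, whereas every tempo in the fast range of Experiment 3 falls well within it. The strict `>` comparison means a tempo whose interval equals the cutoff (80 bpm, exactly 750 ms) is counted as within the window.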

This hypothesis holds only if all responses are given after the second beat. However, our data show that the mean RT in the 40-bpm condition was shorter than 1,500 ms, meaning that responses in this condition were, on average, given before the second beat. It remains possible that participants waited for the second beat but became sure of their response before it occurred, because a critical delay had been exceeded. From this perspective, participants may have focused on the duration between the first beat and a critical delay rather than on rhythmic tempo. Such conditions are problematic to interpret because they lie between two speculations about the basis of participants’ judgements, namely, whether their decisions rely on tempo or on time duration. It is therefore critical to develop a procedure that can distinguish with certainty what participants are focusing on.

A significant association between music tempo and space was found only in Experiment 3, in which the gap between successive beats was relatively short. This evidence replicates the results of the previous study (Prpic et al., 2013), showing a left-key advantage for slower tempos and a right-key advantage for faster tempos in the range between 133 bpm and 201 bpm. Furthermore, the shape of this association seems to be categorical rather than linear, similar to what occurs for the SNARC effect in magnitude classification tasks (Wood, Willmes, Nuerk & Fischer, 2008). This suggests that the spatial association effect for music tempo shares common properties with the SNARC effect. However, because the effect was absent in Experiment 2, we have no evidence in support of flexibility, which is an important property of the SNARC effect. Future studies should further investigate flexibility within the range in which the effect was successfully identified.

In conclusion, music tempo was shown to be spatially represented, similarly to other numerical and non-numerical magnitudes. However, a significant SNARC-like effect for music tempo, consisting of a left-key advantage for relatively slow tempos and a right-key advantage for fast tempos, was revealed only within the faster temporal range (133–201 bpm). The reasons why the same effect was not found with other ranges of stimuli are not completely clear, but it is possible that the temporal structure of some of the stimuli interfered with the time course of the effect. While the spatial association for music tempo shares some of the properties of the SNARC effect, others, such as flexibility, were not confirmed, as suggested by the absence of the effect in the slower temporal range (40–104 bpm). Nevertheless, music tempo seems to be tightly linked with space, similar to other numerical and non-numerical magnitudes.

References

Antoine, S. & Gevers, W. (2016). Beyond left and right: Automaticity and flexibility of number-space associations. Psychonomic Bulletin & Review, 23(1), 148-155.

Bonato, M., Zorzi, M. & Umiltà, C. (2012). When time is space: evidence for a mental time line. Neuroscience & Biobehavioral Reviews, 36(10), 2257-2273.

Conson, M., Cinque, F., Barbarulo, A. M. & Trojano, L. (2008). A common processing system for duration, order and spatial information: evidence from a time estimation task. Experimental Brain Research, 187(2), 267-274.

Dehaene, S., Bossini, S. & Giraux, P. (1993). The mental representation of parity and number magnitude. Journal of Experimental Psychology: General, 122, 371–396.

Didino, D., Breil, C. & Knops, A. (2019). The influence of semantic processing and response latency on the SNARC effect. Acta Psychologica, 196, 75-86.

Dodd, M. D., Van der Stigchel, S., Leghari, M. A., Fung, G. & Kingstone, A. (2008). Attentional SNARC: There’s something special about numbers (let us count the ways). Cognition, 108(3), 810-818.

Fantoni, C., Baldassi, G., Rigutti, S., Prpic, V., Murgia, M. & Agostini, T. (2019). Emotional Semantic Congruency based on stimulus driven comparative judgements. Cognition, 190, 20-41.

Fias, W., Brysbaert, M., Geypens, F. & d’Ydewalle, G. (1996). The importance of magnitude information in numerical processing: Evidence from the SNARC effect. Mathematical Cognition, 2, 95–110.

Fischer, M. H., Castel, A. D., Dodd, M. D. & Pratt, J. (2003). Perceiving numbers causes spatial shifts of attention. Nature Neuroscience, 6(6), 555.

Fraisse, P. (1978). Time and rhythm perception. In E. C. Carterette & M. P. Friedman (Eds.), Handbook of perception (Vol. 8, pp. 203-254). New York: Academic Press.

Fumarola, A., Prpic, V., Da Pos, O., Murgia, M., Umiltà, C. & Agostini, T. (2014). Automatic spatial association for luminance. Attention, Perception & Psychophysics, 76(3), 759-765.

Fumarola, A., Prpic, V., Fornasier, D., Sartoretto, F., Agostini, T. & Umiltà, C. (2016). The Spatial Representation of Angles. Perception, 45(11), 1320-1330.

Hartmann, M. & Mast, F. W. (2017). Loudness counts: Interactions between loudness, number magnitude, and space. The Quarterly Journal of Experimental Psychology, 70(7), 1305-1322.

Honing, H. (2013). Structure and interpretation of rhythm in music. In D. Deutsch (Ed.), Psychology of music (pp. 369–404). Oxford, United Kingdom: Academic Press.

Ishihara, M., Keller, P. E., Rossetti, Y. & Prinz, W. (2008). Horizontal spatial representations of time: evidence for the STEARC effect. Cortex, 44(4), 454-461.

Lachmair, M., Cress, U., Fissler, T., Kurek, S., Leininger, J. & Nuerk, H. C. (2017). Music-space associations are grounded, embodied and situated: examination of cello experts and non-musicians in a standard tone discrimination task. Psychological Research, 1-13.

Larsson, M. (2014). Self-generated sounds of locomotion and ventilation and the evolution of human rhythmic abilities. Animal Cognition, 17(1), 1–14. https://doi.org/10.1007/s10071-013-0678-z

Leon, M. I. & Shadlen, M. N. (2003). Representation of time by neurons in the posterior parietal cortex of the macaque. Neuron, 38(2), 317-327.

Lidji, P., Kolinsky, R., Lochy, A. & Morais, J. (2007). Spatial associations for musical stimuli: A piano in the head? Journal of Experimental Psychology: Human Perception & Performance, 33, 1189-1207.

Murgia, M., Prpic, V., McCullagh, P., Santoro, I., Galmonte, A. & Agostini, T. (2017). Modality and Perceptual-Motor Experience Influence the Detection of Temporal Deviations in Tap Dance Sequences. Frontiers in Psychology, 8, 1340.

Piazza, M., Pinel, P., Le Bihan, D. & Dehaene, S. (2007). A magnitude code common to numerosities and number symbols in human intraparietal cortex. Neuron, 53(2), 293-305.

Pinel, P., Piazza, M., Le Bihan, D. & Dehaene, S. (2004). Distributed and overlapping cerebral representations of number, size, and luminance during comparative judgments. Neuron, 41(6), 983-993.

Pitteri, M., Marchetti, M., Priftis, K. & Grassi, M. (2017). Naturally together: pitch-height and brightness as coupled factors for eliciting the SMARC effect in non-musicians. Psychological Research, 81(1), 243-254.

Prpic, V. (2017). Perceiving Musical Note Values Causes Spatial Shift of Attention in Musicians. Vision, 1(2), 16.

Prpic, V. & Domijan, D. (2018). Linear representation of pitch height in the SMARC effect. Psihologijske teme, 27(3), 437-452.

Prpic, V., Fumarola, A., De Tommaso, M., Baldassi, G. & Agostini, T. (2013). A SNARC-like effect for music tempo. Review of Psychology, 20(1-2), 47-51.

Prpic, V., Fumarola, A., De Tommaso, M., Luccio, R., Murgia, M. & Agostini, T. (2016). Separate mechanisms for magnitude and order processing in the spatial-numerical association of response codes (SNARC) effect: The strange case of musical note values. Journal of Experimental Psychology: Human Perception and Performance, 42(8), 1241.

Prpic, V., Soranzo, A., Santoro, I., Fantoni, C., Galmonte, A., Agostini, T. & Murgia, M. (2018). SNARC-like compatibility effects for physical and phenomenal magnitudes: a study on visual illusions. Psychological Research, 1-16.

Ren, P., Nicholls, M. E. R., Ma, Y. Y. & Chen, L. (2011). Size matters: non-numerical magnitude affects the spatial coding of response. PLoS ONE, 6(8), e23553.

Restle, F. (1970). Speed of adding and comparing numbers. Journal of Experimental Psychology, 83(2 Pt 1), 274-278.

Rusconi, E., Kwan, B., Giordano, B. L., Umiltà, C. & Butterworth, B. (2006). Spatial representation of pitch height: The SMARC effect. Cognition, 99, 113-129.

Timmers, R. & Li, S. (2016). Representation of pitch in horizontal space and its dependence on musical and instrumental experience. Psychomusicology: Music, Mind, and Brain, 26(2), 139-148.

Vallesi, A., Binns, M. A. & Shallice, T. (2008). An effect of spatial–temporal association of response codes: understanding the cognitive representations of time. Cognition, 107(2), 501-527.

Wood, G., Willmes, K., Nuerk, H.-C. & Fischer, M. H. (2008). On the cognitive link between space and number: A meta-analysis of the SNARC effect. Psychology Science Quarterly, 50(4), 489-525.

Acknowledgements

We thank Courtney Goodridge for the English proofreading.

Open Practices Statement

The data and materials for all experiments are available upon request. None of the experiments was preregistered.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

De Tommaso, M., Prpic, V. Slow and fast beat sequences are represented differently through space. Atten Percept Psychophys 82, 2765–2773 (2020). https://doi.org/10.3758/s13414-019-01945-8


DOI: https://doi.org/10.3758/s13414-019-01945-8