Abstract

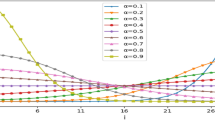

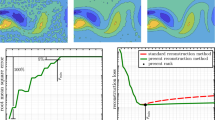

A method is proposed for selecting stable singular points (keypoints) among those detected in images by the BRISK and AKAZE algorithms. The method retains the points that are still detected in an image after it has been considerably distorted. Numerical experiments on a set of 1000 images confirm the efficiency of the proposed method: the sets of points it produces contain 3–5 times more stable points than random sets of the same size. The experiments also show that considerable distortions of the images are more efficient for selecting stable points.
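The abstract does not state the exact criterion for deciding that a point is preserved, so the following is only a minimal sketch under stated assumptions. It assumes that the distortion is a known affine map, that the keypoint coordinates have already been detected in both images (for example with BRISK or AKAZE), and that a point counts as preserved when a detected point lies within a pixel tolerance of its mapped position. The function names, the tolerance `tol`, and the sample coordinates are hypothetical.

```python
import math


def apply_affine(matrix, point):
    """Map an (x, y) point through a 2x3 affine matrix given as two rows."""
    (a, b, tx), (c, d, ty) = matrix
    x, y = point
    return (a * x + b * y + tx, c * x + d * y + ty)


def select_stable_points(original_pts, distorted_pts, matrix, tol=2.0):
    """Keep the original points that reappear in the distorted image.

    Assumed criterion (not taken from the article): a point is preserved
    when some point detected in the distorted image lies within `tol`
    pixels of the position the distortion maps it to.
    """
    stable = []
    for p in original_pts:
        mx, my = apply_affine(matrix, p)
        if any(math.hypot(mx - qx, my - qy) <= tol for qx, qy in distorted_pts):
            stable.append(p)
    return stable


# Hypothetical data: the distortion is a 2x downscale of the image.
scale_half = ((0.5, 0.0, 0.0), (0.0, 0.5, 0.0))
original = [(10.0, 10.0), (40.0, 20.0), (80.0, 60.0)]   # detected before distortion
distorted = [(5.2, 4.9), (40.5, 29.0)]                  # detected after distortion

stable = select_stable_points(original, distorted, scale_half)
print(stable)
```

In this toy run the first and third points survive the distortion and the second is discarded. A real pipeline would obtain the two point lists from a detector and would likely combine blurring with the geometric transform, as the title of the article suggests.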

Funding

This work was supported by the Ministry of Higher Education and Science of Russia (state registration no. 121022000116-0).

Ethics declarations

The author declares that he has no conflicts of interest.

Additional information

Translated by E. Oborin

About this article

Cite this article

Shakenov, A.K. Selection of Singular Points Stable to Blurring and Geometric Distortions of Images. Optoelectron. Instrument. Proc. 57, 632–638 (2021). https://doi.org/10.3103/S8756699021060121

DOI: https://doi.org/10.3103/S8756699021060121