Abstract

The rapid proliferation of Internet of Things (IoT) devices has driven the development of collaborative healthcare frameworks that support the next-generation healthcare industry in delivering quality medical care. This paper presents a generalized collaborative framework named collaborative shared healthcare plan (CSHCP) for cognitive health and fitness assessment of people using ambient intelligent applications and machine learning techniques. CSHCP supports daily physical activity recognition, monitoring, and assessment, and generates a shared healthcare plan based on collaboration among different stakeholders: doctors, patient guardians, and close community circles. The proposed framework shows promising results compared to existing studies. Furthermore, it enhances team communication, coordination, and long-term management of healthcare information to provide more efficient and reliable shared healthcare plans.


Introduction

The Internet of Things (IoT) [1] is an emerging technology that provides seamless and ubiquitous connectivity to smart devices over the Internet. IoT comprises diverse smart devices, such as Radio Frequency Identification (RFID) tags, sensors, and smartphones. Smartphones allow interaction with their surroundings and can collaborate with other entities to provide numerous services such as health analysis, fitness tracking, and physical activity recognition [2,3,4,5]. Physical activity recognition is the process of recognizing an individual's daily life activities. Evidence shows a positive correlation between competence in fundamental physical activities and health [3, 6, 7]. Physical inactivity causes as many deaths each year as smoking [8]. Another study suggests that physical activity is fundamentally and definitively connected with self-reported mental health [9]. Numerous studies address physical activity recognition: some researchers use on-body sensors [10], while others use non-obtrusive sensors [11]. Smart homes and smartphones are the best examples of non-obtrusive environments. Smart homes are embedded with a variety of sensors installed at different locations in the home. The primary purpose of a smart home is to provide unobtrusive assistance to its residents [12]. However, this technology is of limited use for physical activity monitoring because many activities (e.g., running and cycling) cannot be performed within the physical confines of a smart home.

Other options for activity recognition, such as on-body sensors [13], are difficult to wear and carry; this approach is therefore impractical due to its obtrusive nature. For physical activity recognition, recent work [12, 14, 15] indicates that smartphone sensors are a more convenient medium. Smartphones have built-in sensors such as the accelerometer, gyroscope, magnetometer, light sensor, and proximity sensor. These sensors have enabled many context-aware applications in areas such as healthcare [16] and assisted living. Ambient intelligence in coordination with multiagent systems can also be used in healthcare, transport, and many other assistive technologies. In healthcare, detecting a patient's physical activities is important because daily routine activities are directly linked with an individual's health [17,18,19].

In [20], the authors identify the following challenges of healthcare coordination and communication within a healthcare provider team: (1) The team is diverse, including members with varying expertise and backgrounds. (2) Team members focus on different aspects of a patient's healthcare; e.g., a physical therapist should assess mobility weekly, while a psychiatrist examines mental health conditions. (3) Each team member may also belong to other healthcare teams, so the team is dynamic and operates over a long time period. (4) Apart from the family, team members may not be aware of all other team members.

Collaborative multiagent frameworks using ambient intelligence have gained significant interest in both academia and industry [21, 22] because they make the environment smart, flexible, adaptive, context-aware, and intelligent [11]. In a collaborative multiagent framework, an agent is an entity that communicates with other entities to assist users through information sharing. In the healthcare domain, an agent shares instructions to help a patient perform daily routines [23]. In our scenario, doctors, guardians, and gym trainers are represented by agents that communicate with each other via a web service to provide a shared healthcare plan to individuals. The medical and healthcare communities have highlighted the importance of teamwork and the need to enhance team interaction in order to improve the quality of healthcare through designing and monitoring healthcare plans [24].

Problem statement

To the best of our knowledge, all previously proposed collaborative systems for healthcare and fitness are either environment-specific or user-specific, e.g., designed for an office environment or for children with special conditions [21, 22]. The authors in [22] propose a framework that acts as a personal cognitive assistant to accomplish high-level goals, such as handling routine tasks, in a specific office environment. The authors in [25] propose an approach based on a personal assistant that recommends potential actions (e.g., reading) at specific times to increase the user's productivity. The healthcare framework proposed in [21] is environment-specific and only helps children with special conditions. It is therefore important to develop a generalized healthcare framework that supports users in diverse environmental settings by assessing their daily life activities. Healthcare and fitness assessment frameworks should work unobtrusively and robustly. For a generic framework, the smartphone is a natural choice because it is easy to carry, and almost everyone carries a smartphone most of the time while performing daily routine activities. Moreover, it provides accurate sensor readings [15]. Several smartphone applications exist for activity recognition, such as Google-Fit [26]. Google-Fit is not sufficient for this purpose because each time it reports a separate confidence between 0 and 100 for each activity (e.g., 70% running, 40% walking, 10% still) rather than a single unambiguous label. Moreover, Google-Fit does not support agent-based collaboration [26]. To provide users with an accurate healthcare plan, recommendation, or prescription from a doctor, activity recognition must be unambiguous. In light of this, there is a need for a collaborative, smartphone-based, generic framework that is intelligent, autonomous, and works as a team to support independent living.
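To illustrate the ambiguity, the sketch below collapses a Google-Fit-style set of per-activity confidences into a single label and flags the result when the top two confidences are close. The function name `resolve_activity` and the 20-point `margin` are hypothetical choices, not part of the Google-Fit API; the input simply mirrors the example above.

```python
def resolve_activity(confidences, margin=20):
    """Pick one activity from independent per-activity confidences (0-100).

    Returns (label, ambiguous). `ambiguous` is True when the runner-up
    confidence is within `margin` points of the best one. The margin is an
    assumed threshold, not a Google-Fit parameter.
    """
    ranked = sorted(confidences.items(), key=lambda kv: kv[1], reverse=True)
    best_label, best_score = ranked[0]
    runner_up = ranked[1][1] if len(ranked) > 1 else 0
    return best_label, (best_score - runner_up) < margin


reading = {"running": 70, "walking": 40, "still": 10}
print(sum(reading.values()))                              # 120, not 100
print(resolve_activity(reading))                          # ('running', False)
print(resolve_activity({"running": 55, "walking": 50}))   # ('running', True)
```

Because the confidences are independent and need not sum to 100, any such rule remains a heuristic whose outcome depends on the chosen threshold.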

Contributions

In this paper, we make the following contributions:

1. We propose CSHCP, a novel collaborative framework for cognitive healthcare and fitness assessment using ambient intelligence and machine learning.

2. CSHCP recognizes and monitors physical activities such as sitting, standing, walking, running, upstairs, and downstairs with high accuracy.

3. We develop an Android-based ambient application in which different agents are intelligently deployed to share healthcare-related information.

4. We provide a proof-of-concept activity recognition assessment along with benchmark results.

The remainder of the paper is structured as follows. The “Literature review” section analyzes related work on healthcare systems, multiagent systems, and physical activity recognition. The “Collaborative shared healthcare plan framework” section presents the proposed framework. The “Evaluation and analysis of results” section presents the experiments, including datasets, observations, results, and a comparative analysis with state-of-the-art work. The “Conclusion and future work” section summarizes the paper and outlines directions for future work.

Literature review

Modern e-healthcare systems focus on providing remote monitoring, robust collaboration, and accurate healthcare plans to citizens [27]. Different healthcare providers can help people accomplish their daily tasks if they are aware of these people's daily routine activities and can collaborate with them. A collaborative shared healthcare framework has to be intelligent, autonomous, and versatile in assisting with and handling these issues. Such a framework can empower both agents and human interaction by providing methods that minimize human cognitive load.

The authors in [21] designed a framework to assist patients and their caretakers. A healthcare plan is shared among all healthcare providers, and in response a revised plan is produced by a team of doctors, pediatricians, parents, and physical therapists, with a focus on children with complex neurodegenerative conditions. The proposed framework consists of agents working as a team. The limitations of this study are its fixed environment, the very specific population of users with complex conditions, and the lack of activity recognition. In [20] and [28], after comprehensive studies of care coordination and health information, the authors proposed the design of a system called “GoalKeeper” to support the creation, monitoring, and revision of team-based healthcare plans. First, the team members define the shared healthcare plan for the child, along with a description of the design for parents. Second, every member can choose the level of detail of the healthcare plan they need, so that each member has the right context to support decision making. Third, the completion status of actions and the progress towards goals are updated to avoid deadlocks in information sharing. Finally, the relevant information is served to each member intelligently. RADAR [22], Electric Elves [29], and CALO [25] focus on multiagent collaboration and highlight the issues in planning for teams. These systems assist agents in enabling participants to accomplish their daily tasks more effectively, in either an office or a home environment. Prior work such as the Coordinators system [30] also highlights collaboration among personal assistant agents.

The authors in [31] describe a framework for engaging patients, families, and healthcare professionals at various levels across the healthcare system to improve patients' health. They also examine where patient engagement occurs throughout the healthcare system, i.e., direct care, organizational design, and policy-making. This framework supports the development of interventions that foster patient engagement. In [32], the authors describe an ontology-based context model and context management middleware for emergency services, assistance services for patients' daily activities, and services for quality-of-life improvement. The authors in [33] proposed a collaborative system to gain experience with telemedicine-based home care for chronic patients. They conducted a one-year clinical experiment with 157 patients to assess its impact on health. The healthcare team can be contacted through a call center without requiring frequent visits. An Electronic Chronic Patient Record (ECPR), available through a web-based patient management module, is shared by the healthcare team. The results suggest that the system supports (1) automatically recording all interactions with patients; (2) giving the healthcare team ubiquitous access to up-to-date patient information; and (3) reducing professionals' workload by maintaining a queue of patients who require closer follow-up. The main limitation of this study was bias introduced by the case manager: information about patients could not be organized blindly, and a close, sustained relationship between the patient and the healthcare team was required.

Google-Fit is an application developed by Google [26] that tracks physical activity and has had over ten million downloads since its release. Its activity tracking process runs continuously in the background and tracks daily physical activities such as walking, running, being still, and cycling. The application is popular among fitness enthusiasts. Users can set up their own plans, e.g., a step goal, the duration of an activity, and a countdown. Users can also provide basic information (gender, height, and weight) to estimate the calories burned during an activity. The smartphone must be carried by the user during an activity, either in a pocket or in a bag. This study addresses the following limitations of Google-Fit: (1) its activity recognition accuracy is insufficient, and (2) it runs continuously in the background, which reduces battery life.

Recently, many researchers have worked with smartphone sensors because smartphones are easy to carry and give reliable and accurate readings. Android is an open-source operating system that benefits researchers and end-users with its rich functionality [14, 34, 15]. Physical activity recognition and assessment using smartphone sensors have enabled context-aware ambient intelligent applications, which can be used in areas such as healthcare, industry, and security. Android smartphones have built-in sensors that are precise, reliable, and more accurate than methods used in the past, such as on-body sensors. The authors in [15] provided a survey of online physical activity recognition using smartphone sensors and showed that recognition using mobile phone sensors gives more accurate results. Many researchers used only the accelerometer for physical activity recognition [35, 36]. The authors in [14] proposed an approach to monitor the physical activity of elderly people in a non-controlled environment. The study also suggested that combining the gyroscope, magnetometer, and barometer improves activity recognition accuracy, in line with other authors [4, 34, 37, 38]. The researchers in [34] used the accelerometer in a leading role together with a gyroscope and magnetometer and showed that, for some activities, combining more than one sensor yields better accuracy. Some researchers used on-body sensors to recognize user activity. In [39], the authors used 4 tri-axial accelerometers placed on the hip, wrist, ankle, arm, and thigh. They collected data from users and analyzed the recognition of 20 activities, including postures and movements such as sitting, standing, walking, stair climbing, and cycling.
Another work [38] presents a hierarchical physical activity recognition approach that uses the raw readings of three smartphone sensors. This approach focuses specifically on activities that are difficult to recognize because they share many confusing patterns, such as walking, running, and jogging. It first groups highly indistinguishable activities into clusters and then recognizes the activities within each cluster at a fine-grained level.

The authors in [40] proposed an unsupervised clustering approach to activity recognition based on undirected graphs. They claimed that their approach is more general than supervised methods because it distinguishes activities without large amounts of training data. The authors in [41] proposed a new ensemble Extreme Learning Machine (ELM) approach for physical activity recognition using smartphone sensors and evaluated its performance on the experimental data provided by [42].

Although the above studies [14, 34, 39,40,41] provide systems to recognize physical activities, they do not provide multiagent collaboration for monitoring and suggesting a shared healthcare plan, which is the primary focus of this work. In addition, their activity recognition accuracy leaves room for improvement, and an effective healthcare plan cannot be suggested if activity recognition is unreliable.

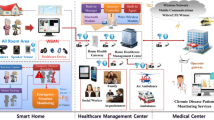

Collaborative shared healthcare plan framework (CSHCP)

In this section, we present the proposed framework, collaborative shared healthcare plan (CSHCP), which is designed to recognize and monitor daily life activities using a machine learning (ML) model [43], analyze location traces and mobile usage, and provide an optimal healthcare plan. Figure 1 illustrates the proposed framework.

Illustration of the collaborative shared healthcare plan framework. Data collected from the patient's smartphone sensors is fed into the ML model, which provides activity recognition data and an exploratory analysis of daily routine tasks to care providers such as doctors, guardians, and close community circles

Application agent

We developed an Android-based framework that is responsible for recognizing and monitoring daily life activities. We set the minimum supported version to Android 4.0, since the majority of Android users today run Android 4.0 or later. Android allows applications to access data from smartphone sensors, whereas iOS grants third-party applications access to only a limited set of sensors, in keeping with its strict policy against data theft. The characteristics of the application are listed below.

-

It has a systematically designed, user-friendly graphical user interface with a sign-up interface, a sign-in interface, an automatic activity recognition interface (Google-Fit API), and an online recognition interface (ML model deployed as a web service). Furthermore, it analyzes location traces and mobile usage.

-

A background service collects data from three sensors continuously. A dedicated agent (the service agent described below) ensures that this service keeps running in the background at all times.

-

We use the accelerometer, gyroscope, and magnetometer to collect data while an activity is being performed.

-

It collects data at a constant frequency of 40 samples per second.

-

Data is initially stored in the smartphone's memory.

-

Data for the predictive model is sent to the server.

-

All processing is performed remotely by a web service.
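The data path in the list above (sample at 40 samples per second, buffer on the phone, send to the server) can be sketched as follows. This is a minimal Python illustration, not the Android implementation; the 5-second batch length, the tuple layout, and the JSON field names are assumptions, and no actual web service endpoint is contacted.

```python
import json

SAMPLE_RATE_HZ = 40  # sampling rate stated for the application


def make_batches(samples, seconds_per_batch=5):
    """Split buffered samples into fixed-duration batches for upload.

    Each sample is assumed to be a tuple
    (timestamp_ms, ax, ay, az, gx, gy, gz, mx, my, mz).
    """
    size = SAMPLE_RATE_HZ * seconds_per_batch      # 200 samples per 5 s batch
    return [samples[i:i + size] for i in range(0, len(samples), size)]


def to_payload(device_id, batch):
    """Serialize one batch as JSON; the field names are hypothetical."""
    return json.dumps({"device_id": device_id, "samples": batch})


# 12 s of made-up samples at 40 Hz (25 ms apart).
samples = [(t * 25, 0.0, 9.8, 0.1, 0.0, 0.0, 0.0, -11.0, 29.0, 34.0) for t in range(480)]
batches = make_batches(samples)
print([len(b) for b in batches])        # [200, 200, 80]
body = to_payload("phone-01", batches[0])
```

Batching keeps the number of network requests low, which matters for the battery and data concerns raised for the agents below.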

The application agent collects data and passes it to the ML model, deployed as a web service, which returns a response to the doctor and healthcare providers, as illustrated in Fig. 1. Based on this response, the doctor issues a healthcare plan. For example, suppose a person logged in to the app starts walking and the ML model reports that the walking pattern deviates from the person's usual pattern. The doctor then provides a healthcare plan to the patient and the healthcare providers.

This app consists of multiple agents that are briefly described below.

-

Hardware permission agent This agent is responsible for intelligently acquiring hardware permissions such as read and write access, sensor access, Wi-Fi, mobile data, and location. For example, if there is no Wi-Fi access, it requests mobile data permissions; if both are on, it turns off mobile data to save data and battery.

-

Service agent This agent is responsible for managing Android background services. It keeps them running in the background, restarts them after they are killed or after the phone is shut down, and schedules them to perform data collection.

-

Mobile usage agent This agent collects and monitors mobile usage information: how many times the user uses the smartphone and the time intervals in which it is used most. It stores this data to compare mobile usage patterns.

-

Location agent This agent is responsible for intelligently using the location sensor, locating the individual, storing information about visited places, and predicting future places to visit from past traces. If the user stays at the same place, the sensor is not invoked for 1 minute; after 1 minute, the agent determines the location again and writes it to a text file.

-

Automatic activity recognition agent (Google-Fit) This agent is responsible for recognizing activities using the Google-Fit API. We use this data to discover patterns in daily life activities. This agent recognizes activities such as still, walking, traveling, cycling, running, and tilting, which is not enough for assessing health and daily activity patterns. Therefore, we developed a novel activity recognition technique using machine learning, named online activity recognition, which is discussed below.

-

Online activity recognition agent This agent recognizes activities with higher accuracy than the Google-Fit agent. The activities include sitting, standing, running (normal), walking, upstairs, downstairs, and cycling. This agent uses three sensors: accelerometer, gyroscope, and magnetometer. Some activities are classified more accurately when the accelerometer is used alone, and others when it is combined with the gyroscope and magnetometer. We use machine learning classifiers to classify daily life activities and exploratory data analysis techniques to identify deviations from a normal routine, since physical activity directly affects daily life. Various studies such as [3, 6, 7] show that health is positively correlated with physical activity.

We use both of the above techniques to make the activity recognition process efficient.
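The decision rules described for the hardware permission agent and the location agent can be sketched as plain functions. This is a logic-only Python illustration with hypothetical names (`network_actions`, `LocationThrottle`); it assumes a `stationary` flag is available (for example, from the Google-Fit "still" label) and does not call any Android API. Recent Android versions may restrict third-party apps from toggling mobile data directly, so the "disable" action may in practice require prompting the user.

```python
def network_actions(wifi_available, mobile_data_on):
    """Hardware permission agent rule: prefer Wi-Fi, fall back to mobile data."""
    if not wifi_available:
        actions = [] if mobile_data_on else ["request_mobile_data"]
        return actions + ["use_mobile_data"]
    actions = ["use_wifi"]
    if mobile_data_on:
        actions.append("disable_mobile_data")  # both on: save data and battery
    return actions


class LocationThrottle:
    """Location agent rule: while the user stays put, query at most once per interval."""

    def __init__(self, interval_s=60):
        self.interval_s = interval_s
        self.last_query_s = None

    def should_query(self, now_s, stationary):
        """Return True if the location sensor should be queried at time now_s."""
        due = (self.last_query_s is None
               or not stationary
               or now_s - self.last_query_s >= self.interval_s)
        if due:
            self.last_query_s = now_s
        return due
```

With the default 60-second interval, a stationary user triggers at most one location query per minute, matching the 1-minute rule described for the location agent.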

Data collection

Collecting a large dataset is important for accurately training machine learning classifiers to predict current activities in real time. We collected data with two smartphones: an Oppo F3 and an Oppo F1S. Ten participants were asked to perform 7 activities while carrying the smartphone. During most activities, the smartphone was placed in the left trouser pocket; in some activities, it was placed in the right trouser pocket. Each agent collects data at a different frequency and writes it to a file. All data is comma-separated and time-stamped.
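Since every agent writes comma-separated, time-stamped records, reading them back for analysis is straightforward. The sketch below assumes a hypothetical row layout `timestamp_ms,label,value1,value2,...`; the exact column order of the collected files is not specified, so the unpacking would need to match the actual files.

```python
import csv
import io


def parse_sensor_rows(text):
    """Parse rows of the assumed form timestamp_ms,label,v1,v2,... ."""
    rows = []
    for record in csv.reader(io.StringIO(text)):
        if not record:                     # skip blank lines
            continue
        timestamp, label, *values = record
        rows.append((int(timestamp), label, [float(v) for v in values]))
    rows.sort(key=lambda row: row[0])      # order by time-stamp
    return rows


sample = "1025,sitting,-2.1,-3.0,-8.1\n1000,sitting,-2.0,-3.1,-8.2\n"
print(parse_sensor_rows(sample)[0])        # (1000, 'sitting', [-2.0, -3.1, -8.2])
```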

Below we describe each data collection activity in detail.

-

Automatic activity recognition (Google-Fit) Data from this module is collected directly through the Google-Fit API and saved in a text file. Each record stores the confidence as a percentage between 0 and 100, the smartphone ID, the date, and a time-stamp. The higher the percentage, the more confident the recognition of the activity being performed. A total of 70,000 instances was collected after removing noise for each participant. This data is later processed by the web service and sent to the doctor and guardian.

-

Location data Location data is collected by the location agent, which locates the individual, stores information about visited places, and predicts future places to visit from past traces. If the user stays at the same location, the sensor is invoked again only after 1 min, at which point the agent writes the location to a text file along with its accuracy and a time-stamp. A total of 2000 instances was collected as input for the ML model.

-

Mobile usage data Mobile usage data is collected as binary states (locked and unlocked) along with a time-stamp. A total of 3000 instances was collected after removing noise for each participant.

-

Online activity recognition (ML model) Data is collected from three sensors: accelerometer, gyroscope, and magnetometer. A total of 40,000 instances was collected after removing noise for each participant. A machine learning model is built to classify the activities performed by individuals.
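As an illustration of how the binary lock/unlock records could feed the mobile usage analysis, the sketch below counts unlocks, totals screen-on time, and finds the hour of day with the most unlocks. The `(ISO time-stamp, state)` record format and the state strings are assumptions, not the application's actual file format.

```python
from collections import Counter
from datetime import datetime


def usage_summary(events):
    """Summarize (iso_timestamp, state) records, state in {"unlocked", "locked"}.

    Returns (number of unlocks, total unlocked seconds, busiest hour of day).
    An unlock with no matching lock is counted but adds no duration.
    """
    unlocks = 0
    total_s = 0.0
    per_hour = Counter()
    unlocked_at = None
    for stamp, state in sorted(events):   # same-format ISO strings sort chronologically
        t = datetime.fromisoformat(stamp)
        if state == "unlocked" and unlocked_at is None:
            unlocks += 1
            per_hour[t.hour] += 1
            unlocked_at = t
        elif state == "locked" and unlocked_at is not None:
            total_s += (t - unlocked_at).total_seconds()
            unlocked_at = None
    busiest = per_hour.most_common(1)[0][0] if per_hour else None
    return unlocks, total_s, busiest


events = [("2020-01-01T08:00:00", "unlocked"), ("2020-01-01T08:05:00", "locked"),
          ("2020-01-01T08:30:00", "unlocked"), ("2020-01-01T08:31:00", "locked"),
          ("2020-01-01T21:00:00", "unlocked"), ("2020-01-01T21:10:00", "locked")]
print(usage_summary(events))   # (3, 960.0, 8)
```

Summaries like these, computed per day, are one way to compare usage patterns over time as the mobile usage agent intends.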

Below we explain the activity representation in terms of Android sensor data readings.

Activity representation

In this work, we select 7 activities: standing, sitting, running, walking, upstairs, cycling, and downstairs. The activities are represented as follows.

-

Sitting shows a unique behavior. The accelerometer readings satisfy \(-3 \le A_x \le -1\), \(-4 \le A_y \le -1\), and \(-9 \le A_z \le -7\), as shown in Fig. 2. Similarly, the gyroscope readings satisfy \(-0.05 \le G_x \le -0.01\), \(0 \le G_y \le 0.02\), and \(0 \le G_z \le 0.08\), as shown in Fig. 3, and the magnetometer readings satisfy \(-13 \le M_x \le -11\), \(28 \le M_y \le 30\), and \(33 \le M_z \le 35\), as shown in Fig. 4.

-

Standing shows a unique behavior. Figure 2 shows that the accelerometer readings satisfy \(0 \le A_x \le 1\), \(8 \le A_y \le 11\), and \(0.1 \le A_z \le 2\). Similarly, the gyroscope readings satisfy \(-0.001 \le G_x \le 0.15\), \(-0.001 \le G_y \le 0.01\), and \(-0.01 \le G_z \le 0.19\), as shown in Fig. 3, and the magnetometer readings satisfy \(-9 \le M_x \le -4\), \(-42 \le M_y \le -35\), and \(25 \le M_z \le 34\), as shown in Fig. 4.

-

Walking Data is collected at a constant frequency of 1 sample per second; Figs. 2, 3, and 4 show that for sitting and standing, data at this frequency is also sufficient. This activity was performed by healthy individuals as well as by individuals with cognitive impairment; therefore, less data was collected from impaired individuals than from healthy ones. This activity shows behavior similar to jogging. From the accelerometer readings, we observe that \(-3 \le A_x \le 12\), \(-22 \le A_y \le -1\), and \(-25 \le A_z \le 10\), as shown in Fig. 2. Similarly, the gyroscope readings satisfy \(-0.2 \le G_x \le 5\), \(-5 \le G_y \le 8\), and \(-2 \le G_z \le 2\), as shown in Fig. 3, and the magnetometer readings satisfy \(-33 \le M_x \le -20\), \(21 \le M_y \le 40\), and \(-35 \le M_z \le 11\), as shown in Fig. 4.

-

Running Similarly, each individual had to run for a long time, so healthy individuals performed this activity for longer periods, while the others performed it for shorter periods. This activity shows behavior similar to fast walking. Figure 2 shows that the accelerometer readings satisfy \(-7 \le A_x \le 6\), \(-2 \le A_y \le 4\), and \(-15 \le A_z \le 7\). Similarly, the gyroscope readings satisfy \(-3 \le G_x \le 2\), \(-2 \le G_y \le 2\), and \(-2 \le G_z \le 2\), as shown in Fig. 3, and the magnetometer readings satisfy \(-41 \le M_x \le 30\), \(20 \le M_y \le 33\), and \(-36 \le M_z \le 20\), as shown in Fig. 4.

-

Upstairs This activity was performed by one healthy individual and one individual with cognitive impairment, using the stairs of a home, which have a slight turn. This activity shows a unique behavior. The accelerometer readings satisfy \(0 \le A_x \le 8\), \(6 \le A_y \le 15\), and \(-0.3 \le A_z \le 6\), as shown in Fig. 2. Similarly, the gyroscope readings satisfy \(-2 \le G_x \le 2\), \(-1 \le G_y \le 1\), and \(-0.3 \le G_z \le 1\), as shown in Fig. 3, and the magnetometer readings satisfy \(-54 \le M_x \le 30\), \(-60 \le M_y \le -20\), and \(-50 \le M_z \le 10\), as shown in Fig. 4.

-

Downstairs This activity shows a unique behavior. From the accelerometer readings in Fig. 2, we observe that \(-5 \le A_x \le -1\), \(7 \le A_y \le 15\), and \(-0.3 \le A_z \le 5\). Similarly, the gyroscope readings satisfy \(-1 \le G_x \le 1\), \(-0.9 \le G_y \le 1\), and \(-1 \le G_z \le 2\), as shown in Fig. 3, and the magnetometer readings satisfy \(-39 \le M_x \le 20\), \(-60 \le M_y \le -20\), and \(-45 \le M_z \le 32\), as shown in Fig. 4.

-

Cycling shows a unique behavior, as shown in Figs. 2, 3, and 4. The accelerometer readings satisfy \(6 \le A_x \le 7\), \(2 \le A_y \le 3\), and \(-8 \le A_z \le -7\). Similarly, the gyroscope readings satisfy \(-0.04 \le G_x \le 0.03\), \(-0.04 \le G_y \le 0.02\), and \(-0.02 \le G_z \le 0.03\), as shown in Fig. 3, and the magnetometer readings satisfy \(-28 \le M_x \le -26\), \(-37 \le M_y \le -33\), and \(6 \le M_z \le 8\), as shown in Fig. 4.
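The static activities have narrow, mostly non-overlapping accelerometer ranges, which the sketch below encodes for sitting, standing, and cycling (bounds taken from the text, written lower to upper; Android reports accelerometer values in m/s²). This is only an illustration of the reported ranges, not the framework's classifier: the ranges of dynamic activities such as walking, running, upstairs, and downstairs overlap heavily, which is why a learned model is used instead. The names `RANGES` and `matching_activities` are hypothetical.

```python
# Accelerometer ranges reported for three static activities (lower, upper).
RANGES = {
    "sitting":  {"ax": (-3, -1), "ay": (-4, -1), "az": (-9, -7)},
    "standing": {"ax": (0, 1),   "ay": (8, 11),  "az": (0.1, 2)},
    "cycling":  {"ax": (6, 7),   "ay": (2, 3),   "az": (-8, -7)},
}


def matching_activities(ax, ay, az):
    """Return the activities whose reported ranges contain this reading."""
    reading = {"ax": ax, "ay": ay, "az": az}
    return [name for name, bounds in RANGES.items()
            if all(lo <= reading[axis] <= hi for axis, (lo, hi) in bounds.items())]


print(matching_activities(-2.0, -2.0, -8.0))   # ['sitting']
print(matching_activities(0.5, 9.8, 1.0))      # ['standing']
print(matching_activities(20.0, 0.0, 0.0))     # []
```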

Preprocessing data

During data collection, the smartphone was kept in the participant's left pocket in most cases. Placing the smartphone in the pocket before starting an activity introduces some noise, as do removing it from the pocket and closing the application afterwards. Each of these normally takes about 2–3 sec, which translates to 120–200 samples at the start and at the end of each recording. This noise must be minimized or removed from each activity to obtain better results.
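Removing the noisy edges amounts to dropping a fixed number of samples from both ends of each recording. The sketch below assumes the 40 samples-per-second rate stated for the application, at which 3 s corresponds to 120 samples; the function name and its defaults are illustrative.

```python
SAMPLE_RATE_HZ = 40  # samples per second, as stated for the application


def trim_edges(samples, seconds=3.0, rate_hz=SAMPLE_RATE_HZ):
    """Drop `seconds` worth of samples from the start and end of a recording.

    Returns an empty list when the recording is too short to keep anything.
    """
    n = int(round(seconds * rate_hz))   # 3 s at 40 Hz -> 120 samples
    if len(samples) <= 2 * n:
        return []
    return samples[n:len(samples) - n]


recording = list(range(1000))           # stand-in for 25 s of sensor rows
trimmed = trim_edges(recording)
print(len(trimmed), trimmed[0])         # 760 120
```

Computing the cut from a duration rather than a fixed count keeps the trimming correct if the sampling rate changes.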

k-Nearest neighbour classifier

Multiple machine learning classifiers can be used for this work (e.g., Support Vector Machine, Decision Trees, Naive Bayes, and k-nearest neighbour). The kNN [44] is a lightweight algorithm that performs well under uncertain conditions and can be used for both regression and classification. In kNN, an unlabeled instance is assigned the label voted by its nearest neighbours under a distance function. The distance function and the number of nearest neighbours are the two primary hyperparameters of kNN. We tested different distance functions and numbers of nearest neighbours and obtained the best results with Euclidean distance (ED) as the distance function and \(k=3\) nearest neighbours. ED and the resulting label can be calculated using Equations 1 and 2:

\[ \mathrm{ED}(x, y_i) = \sqrt{\sum_{j=1}^{n} \left(x_j - y_{i,j}\right)^2} \tag{1} \]

\[ \hat{y} = \arg\max_{y} \sum_{i=1}^{k} \delta\big(y, y(y_i)\big) \tag{2} \]

where \(x\) is the test instance with \(n\) features, \(y_i\) is the \(i\)-th nearest neighbour, and \(\delta(y, y(y_i)) = 1\) if \(y = y(y_i)\), otherwise \(\delta(y, y(y_i)) = 0\). The test instance is assigned the label that receives the most votes among its \(k\) nearest neighbours under ED.
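A minimal, dependency-free sketch of the classifier described above: Euclidean distance, k = 3, and a majority vote over the nearest neighbours. The toy feature vectors are made-up accelerometer readings loosely inspired by the sitting and standing ranges; the framework's actual feature set and implementation may differ.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance (ED) between two equal-length feature vectors."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def knn_predict(train_X, train_y, x, k=3):
    """Assign x the majority label among its k nearest training instances.

    Ties in the vote go to the label seen first among the nearest neighbours,
    because Counter.most_common keeps insertion order for equal counts.
    """
    nearest = sorted(range(len(train_X)), key=lambda i: euclidean(train_X[i], x))[:k]
    votes = Counter(train_y[i] for i in nearest)
    return votes.most_common(1)[0][0]


# Toy accelerometer readings (ax, ay, az); values are made up for illustration.
train_X = [[-2.0, -2.0, -8.0], [-2.5, -1.5, -8.5], [-1.5, -3.0, -7.5],
           [0.5, 9.5, 1.0], [0.2, 10.0, 0.5], [0.8, 8.5, 1.5]]
train_y = ["sitting"] * 3 + ["standing"] * 3
print(knn_predict(train_X, train_y, [-2.0, -2.2, -8.1]))  # sitting
print(knn_predict(train_X, train_y, [0.5, 9.8, 1.0]))     # standing
```

An odd k such as 3 avoids tied votes when only two classes are present among the neighbours.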

Evaluation and analysis of results

This section presents the experiments, evaluation measures, and results. Performance measures are essential for assessing the reliability of an ML model; we use the measures described below.

Equations 3, 4, 5, 6 present the performance measures used to evaluate an ML model [38]. Consider two activities (e.g., sitting and standing) predicted by the ML model, with sitting as the positive class; each prediction then falls into one of the following categories.

- True Positive (TP): a sitting instance correctly classified as sitting.
- True Negative (TN): a standing instance correctly classified as standing.
- False Positive (FP): a standing instance incorrectly classified as sitting.
- False Negative (FN): a sitting instance incorrectly classified as standing.
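In terms of these four counts, the standard definitions of precision, recall, F-score, and accuracy are given below for reference, with one activity treated as the positive class. For a seven-activity task they are typically computed per activity and then averaged.

```latex
\begin{aligned}
\text{Precision} &= \frac{TP}{TP + FP}, &
\text{Recall} &= \frac{TP}{TP + FN},\\[4pt]
F\text{-score} &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}, &
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN}.
\end{aligned}
```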

Automatic activity recognition (Google-Fit) results

Google Fit is a platform provided by Google whose API keeps track of fitness and health statistics. It estimates the activities being performed as percentages: the higher the percentage, the more likely it is that a particular activity is being performed. Figure 5 plots the activity pattern of a participant, obtained by analyzing a chunk of the dataset, as a percentage for each activity. It shows which activities the participant performed in a particular time frame.
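Reading such output amounts to picking the activity with the highest percentage at each time step. The sketch below assumes each reading has already been converted into a plain dictionary from activity name to percentage; this format, the function name `most_likely_activity`, and the 50% cut-off are illustrative assumptions, not part of the Google Fit API itself.

```python
def most_likely_activity(confidences, min_confidence=50):
    """Return the activity with the highest percentage, or None if too uncertain.

    confidences: mapping from activity name to an estimated percentage (0-100).
    min_confidence: hypothetical cut-off below which no activity is reported.
    """
    if not confidences:
        return None
    activity, pct = max(confidences.items(), key=lambda item: item[1])
    return activity if pct >= min_confidence else None


# One hypothetical reading.
reading = {"walking": 72, "running": 18, "still": 10}
print(most_likely_activity(reading))  # walking
```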

Online activity recognition results using kNN algorithm

The proposed framework achieves promising results using the kNN algorithm with ten-fold cross-validation and \(K=3\) nearest neighbours. Table 1 shows that the average Precision, Recall, F-score, and Accuracy over all 7 activities are 97.9%, 97.8%, 98%, and 97.8%, respectively. ’Running’ has lower accuracy than the other activities because it is easily confused with similar activities.
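Ten-fold cross-validation can be sketched as follows: the instances are shuffled into ten disjoint folds, each fold serves once as the test set while the other nine are used for training, and the per-fold scores are averaged. The helper names, the fixed seed, the toy one-dimensional data, and the 1-nearest-neighbour stand-in classifier are assumptions for illustration; only accuracy is computed here for brevity.

```python
import random


def k_fold_indices(n, k=10, seed=0):
    """Split indices 0..n-1 into k disjoint folds of near-equal size."""
    if n < k:
        raise ValueError("need at least k instances for k folds")
    idx = list(range(n))
    random.Random(seed).shuffle(idx)  # reproducible shuffle
    return [idx[f::k] for f in range(k)]


def cross_val_accuracy(X, y, fit_predict, k=10, seed=0):
    """Average test accuracy over k folds.

    fit_predict(train_X, train_y, test_X) -> list of predicted labels;
    in the paper's setting it would wrap a kNN classifier with K=3.
    """
    scores = []
    for test_idx in k_fold_indices(len(X), k, seed):
        test_set = set(test_idx)
        train_idx = [i for i in range(len(X)) if i not in test_set]
        preds = fit_predict([X[i] for i in train_idx], [y[i] for i in train_idx],
                            [X[i] for i in test_idx])
        correct = sum(p == y[i] for p, i in zip(preds, test_idx))
        scores.append(correct / len(test_idx))
    return sum(scores) / len(scores)


def one_nn(train_X, train_y, test_X):
    # Toy stand-in classifier: 1-nearest neighbour on scalar features.
    return [train_y[min(range(len(train_X)), key=lambda i: abs(train_X[i] - x))]
            for x in test_X]


# Two well-separated hypothetical clusters of scalar features.
X = [0.1 * i for i in range(20)] + [10 + 0.1 * i for i in range(20)]
y = ["sitting"] * 20 + ["running"] * 20
print(cross_val_accuracy(X, y, one_nn))  # 1.0
```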

Table 2 presents the results obtained using accelerometer instances alone and in combination with the gyroscope and magnetometer. Using the accelerometer alone does not perform well and yields an F-score of 0.854. The drawback of using only the accelerometer is that some activities, such as walking upstairs, running, and walking downstairs, are performed while the leg rotates, a motion that the gyroscope, which measures angular velocity, can capture. We address this drawback by combining the accelerometer with the gyroscope, which performs well and yields an F-score of 0.928; the recognition accuracy of upstairs, running, and downstairs, which was lower with the accelerometer alone, improves significantly. Combining the accelerometer with the magnetometer performs better than both previous cases, with an accuracy of 0.970. Finally, combining the accelerometer with both the gyroscope and the magnetometer gives the best results of all, with an F-score of 0.978, and the recognition accuracy of all activities improves significantly.
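The sensor combinations compared in Table 2 amount to choosing which sensors contribute features to each instance. The sketch below fuses sensors by concatenating per-axis statistics of a window of readings; the dictionary layout, the sensor keys `acc`, `gyro`, and `mag`, and the mean and standard-deviation features are illustrative assumptions rather than the paper's feature set.

```python
import statistics


def window_features(window, sensors):
    """Build one feature vector from a window of tri-axial sensor readings.

    window: dict mapping sensor name -> list of (x, y, z) samples.
    sensors: sensors to fuse, e.g. ("acc",) or ("acc", "gyro", "mag").
    Features (hypothetical): mean and population std of each axis, per sensor.
    """
    features = []
    for name in sensors:
        samples = window[name]
        for axis in range(3):
            values = [s[axis] for s in samples]
            features.append(statistics.mean(values))
            features.append(statistics.pstdev(values))
    return features


# A tiny hypothetical window with two samples per sensor.
window = {
    "acc": [(0.0, 0.0, 9.8), (0.2, 0.0, 9.6)],
    "gyro": [(0.0, 0.1, 0.0), (0.0, 0.3, 0.0)],
    "mag": [(30.0, 5.0, -40.0), (30.0, 5.0, -40.0)],
}
print(len(window_features(window, ("acc",))))                # 6
print(len(window_features(window, ("acc", "gyro", "mag"))))  # 18
```

Adding a sensor simply lengthens the vector, so the same kNN classifier can be reused unchanged for every combination.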

Figure 6 depicts the results for all combinations of sensors. The combination of accelerometer, gyroscope, and magnetometer readings gives better results than all other combinations.

The confusion matrix in Table 3 shows correctly classified activities against misclassified ones. Only 6% of ’Upstairs’ instances are misclassified as ’Downstairs’, and 2% of ’Downstairs’ instances as ’Upstairs’. The ’Running’ activity is misclassified as ’Walking’ in 5% of cases, while ’Standing’, ’Sitting’, ’Walking’, and ’Bicycle’ are classified with 100% accuracy.
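Per-activity precision, recall, and F-score follow directly from a confusion matrix such as Table 3: the column for an activity collects everything predicted as that activity, and its row collects all of its true instances. The sketch below uses a hypothetical three-activity matrix with made-up counts, not the values of Table 3.

```python
def per_class_metrics(cm, labels):
    """Precision, recall and F-score per class from a confusion matrix.

    cm[i][j] = number of instances of true class i predicted as class j.
    """
    n = len(labels)
    results = {}
    for c in range(n):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(n)) - tp  # predicted c, truly another class
        fn = sum(cm[c]) - tp                        # truly c, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results[labels[c]] = (precision, recall, f)
    return results


def overall_accuracy(cm):
    """Fraction of all instances that lie on the diagonal."""
    total = sum(sum(row) for row in cm)
    return sum(cm[i][i] for i in range(len(cm))) / total


labels = ["walking", "running", "sitting"]
cm = [[50, 0, 0],   # hypothetical counts
      [5, 45, 0],
      [0, 0, 50]]
print(per_class_metrics(cm, labels)["running"])  # precision 1.0, recall 0.9
print(overall_accuracy(cm))
```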

Deviation analysis

Figure 7 illustrates how physical activity recognition helps generate a shared healthcare plan. The two subfigures of Fig. 7 show the performance graph and deviation analysis for two full working days, 11/27/2017 and 11/28/2017, starting from Sunday night and ending on Tuesday night. The red box on the left side of Fig. 7a shows the participant’s sleep time, which starts around 12:30 A.M. and ends at 12:00 P.M. The dark blue spikes to the left of the red box show continuous smartphone usage for around 30 min. On the next day, however, the participant had difficulty sleeping, as shown in Fig. 7b: the participant used the smartphone continuously for around 3.5 h, slept only 4 h, and went out around 1:35 P.M., as shown by the light blue spikes. The same pattern occurred on the previous day, but on that day the participant slept around 9–10 h. The participant may therefore have struggled for 3 h to fall asleep on Tuesday night.

The black box in the center of Figs. 7a and 7b covers almost all activities between 3 and 6 P.M., the most productive time of the day. On the first day (Fig. 7a), the participant walked for around 30 min in the evening between 4:00 and 5:00 P.M., but on the next day, in the same interval, the participant was using the smartphone. This indicates that the participant’s routine was disturbed and that he was falling behind on daily routine tasks. The red box on the right side of Fig. 7a shows that on the first day the participant was again walking at night, whereas in Fig. 7b the participant was resting, as shown by the orange spikes.

In this way, a healthcare provider can inspect a participant’s performance deviation graphs and suggest a healthcare plan accordingly.
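One simple way to quantify such a deviation is to total the time spent in each activity per day and compare the days. The sketch below assumes activity segments given as (start, end, activity) tuples in minutes since midnight; this representation, the function names, and the example values are hypothetical, loosely modelled on the two days described above.

```python
from collections import defaultdict


def daily_minutes(segments):
    """Total minutes per activity from (start_min, end_min, activity) segments."""
    totals = defaultdict(int)
    for start, end, activity in segments:
        totals[activity] += end - start
    return dict(totals)


def deviation(day_a, day_b):
    """Change in minutes per activity from day_a to day_b (positive = more on day_b)."""
    a, b = daily_minutes(day_a), daily_minutes(day_b)
    return {act: b.get(act, 0) - a.get(act, 0) for act in sorted(set(a) | set(b))}


# Hypothetical segments for two days.
day1 = [(30, 720, "sleeping"), (960, 990, "walking")]
day2 = [(0, 210, "phone"), (210, 450, "sleeping"), (960, 990, "phone")]
print(deviation(day1, day2))  # {'phone': 240, 'sleeping': -450, 'walking': -30}
```

Large negative values for sleep or walking, together with a large positive value for phone use, are the kind of signal a provider would look for in the deviation graphs.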

We can also analyze the participant’s routine in combination with location. Figure 8 depicts the location analysis with respect to the activities performed and their times over the whole day. As explained above, Fig. 7a shows that the participant was trying to sleep; Fig. 8 shows that the participant was at home during this period, as indicated by the left red box between 12 A.M. and 12 P.M. Fig. 7a then shows that the participant used a vehicle to go to the university, which can also be seen in Fig. 8 between 12 P.M. and 3 P.M. Later, the blue spikes in Fig. 7a show that the participant used the vehicle again and went to “263, g11”, a public park. Grey spikes show that the participant was walking. Between 5 P.M. and 8 P.M., the participant was still (stationary), as shown in Figs. 7a and 8, at a location that corresponds to the participant’s home. Later, between 9 P.M. and 10:30 P.M., the participant was walking, as shown by the green lines in Fig. 7a, at the location labeled “service road east” in Fig. 8. This strong correlation shows that the activities performed in a day can be correlated with health status.
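Relating the two views amounts to a temporal join between activity segments and location visits. A minimal sketch, assuming both are lists of (start, end, label) intervals in minutes since midnight and assigning each activity the place it overlaps most in time; the function names and place names below are hypothetical.

```python
def overlap(a_start, a_end, b_start, b_end):
    """Length of the overlap between two time intervals (0 if disjoint)."""
    return max(0, min(a_end, b_end) - max(a_start, b_start))


def label_with_location(activities, locations):
    """Attach to each activity segment the location it overlaps most in time.

    activities: list of (start, end, activity); locations: list of (start, end, place).
    An activity that overlaps no location gets place None.
    """
    labelled = []
    for start, end, activity in activities:
        best = max(locations, key=lambda loc: overlap(start, end, loc[0], loc[1]),
                   default=None)
        place = best[2] if best and overlap(start, end, best[0], best[1]) > 0 else None
        labelled.append((start, end, activity, place))
    return labelled


locations = [(0, 720, "home"), (720, 900, "university"), (960, 1020, "park")]
activities = [(30, 720, "sleeping"), (700, 760, "in vehicle"),
              (970, 1000, "walking"), (1300, 1320, "walking")]
for row in label_with_location(activities, locations):
    print(row)
```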

Location agent results

We selected a chunk of the location time series to analyze the pattern of places visited by the participant, as shown in Fig. 9. This is a real-life scenario of one of our participants carrying out their daily routine. Figure 9 shows that the participant spent 65% of their time at one location (Street 14, E-11, Islamabad), which is the participant’s house, and 20% at another location, a friend’s home. The participant went to some other places, but not frequently. This analysis is important for identifying when participants might be deviating from their daily routine into uncertain conditions. Through this analysis, healthcare providers can also assess how a participant’s daily routine changes and suggest new guidelines accordingly.
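The percentages in Fig. 9 correspond to the fraction of observed time spent at each place. A sketch, assuming the location data have already been segmented into (start, end, place) visits; the function name and the visits below are hypothetical.

```python
from collections import defaultdict


def time_share(visits):
    """Percentage of observed time spent at each place.

    visits: list of (start, end, place) intervals in any consistent time unit.
    """
    totals = defaultdict(float)
    for start, end, place in visits:
        totals[place] += end - start
    observed = sum(totals.values())
    if observed == 0:
        return {}
    return {place: 100 * t / observed for place, t in totals.items()}


# Hypothetical visits, in hours.
visits = [(0, 13, "home"), (13, 17, "friend's home"), (17, 18, "market"), (18, 20, "home")]
print(time_share(visits))  # {'home': 75.0, "friend's home": 20.0, 'market': 5.0}
```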

Mobile usage analysis results

Mobile usage analysis can be key to assessing the condition of a user. Figure 10 illustrates smartphone usage frequency patterns, based on ON/OFF states, by date. On 11/26/2017, the user used the smartphone at night only for a short time interval, whereas on 11/27/2017 the user used the smartphone extensively, and on 11/28/2017 the user again used it for a very long time interval. This indicates that the user’s routine was disturbed and that the user had difficulty sleeping at night.
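Night-time usage of the kind shown in Fig. 10 can be quantified by summing the ON intervals that fall inside a night window. The sketch assumes a chronologically sorted log of (minute, state) screen events; the event format, the function name, and the midnight-to-6 A.M. window used in the example are hypothetical choices.

```python
def screen_on_minutes(events, window_start, window_end):
    """Minutes the screen was ON within [window_start, window_end).

    events: chronologically sorted list of (time_min, state), state "ON" or "OFF".
    A screen still ON after the last event is counted until window_end.
    """
    total = 0
    on_since = None
    for t, state in events:
        if state == "ON" and on_since is None:
            on_since = t
        elif state == "OFF" and on_since is not None:
            # Clip the ON interval to the window before adding it.
            total += max(0, min(t, window_end) - max(on_since, window_start))
            on_since = None
    if on_since is not None:
        total += max(0, window_end - max(on_since, window_start))
    return total


# Hypothetical events, minutes after midnight; window is 0-360 (00:00-06:00).
events = [(10, "ON"), (40, "OFF"), (100, "ON"), (310, "OFF")]
print(screen_on_minutes(events, 0, 360))  # 240
```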

Comparative study

We compare the results of the framework with existing research to evaluate the effectiveness of the proposed CSHCP framework. Table 4 shows that CSHCP provides more accurate and reliable performance than existing work. We achieve 14%, 8%, 13%, and 9% higher Precision, Recall, F-score, and Accuracy, respectively, than [40], whose authors claimed that their unsupervised approach generalizes because it distinguishes different physical activities without requiring a large amount of labeled data. The authors of [41] proposed an ensemble approach with accuracy similar to ours, but they did not assess its reliability with other metrics, and accuracy alone cannot be relied on when activities are imbalanced. Furthermore, their ensemble approach has very high complexity. The authors of [45] used dynamic features with CNN classification and obtained results 7% less accurate than ours, with a large time complexity. We also evaluate CSHCP on the publicly available dataset [42] used by existing studies [14, 41, 45]; on it, we achieve 8% and 3% higher accuracy than [45] and [14], respectively. Most existing work does not take activity imbalance into account and reports only accuracy even though activity instances are imbalanced. Activities that are difficult to perform naturally contain fewer instances, so in this case we rely on the F-score.
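A small hypothetical example shows why accuracy alone can mislead when activities are imbalanced. Suppose a test set contains 95 walking and 5 running instances, and a classifier labels every instance as walking. Then:

```latex
\begin{aligned}
\text{Accuracy} &= \frac{95}{100} = 0.95,\\
F_{\text{walking}} &= \frac{2 \cdot 0.95 \cdot 1}{0.95 + 1} \approx 0.974, \qquad
F_{\text{running}} = 0 \quad (\text{no running instance is recognized}),\\
F_{\text{macro}} &= \frac{0.974 + 0}{2} \approx 0.487.
\end{aligned}
```

The classifier looks strong by accuracy yet never recognizes running, which the macro-averaged F-score exposes.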

Conclusion and future work

We have presented a collaborative shared healthcare plan framework for daily life activity recognition, monitoring, location analysis, and mobile usage analysis, which provides a shared healthcare plan and supports improved coordination and communication among all key stakeholders. This paper highlights the importance of such a collaborative framework, which provides a noticeable improvement in a challenging environment. We provide a systematic and functional framework through which doctors, guardians, and close community members can collaboratively provide a shared healthcare plan to the patient. Beyond its potential for improving the quality of healthcare, the development of such frameworks would advance the current state of the art in planning and information sharing. One important limitation of this work is that the proposed approach has not been tested in a real environment in which the test subjects are individuals with health conditions. Therefore, in future work, we plan to use the proposed framework to assess a specific health condition, namely dementia, and to detect the different stages of dementia in affected individuals.

References

Wu N, Li Z, Barkaoui K, Li X, Murata T, Zhou M (2017) IoT-based smart and complex systems: a guest editorial report. IEEE/CAA J Automatica Sinica 5(1):69–73

Al-khafajiy M, Baker T, Chalmers C, Asim M, Kolivand H, Fahim M, Waraich A (2019) Remote health monitoring of elderly through wearable sensors. Multimedia Tools Appl 78(17):24681–24706

Hulteen RM, Morgan PJ, Barnett LM, Stodden DF, Lubans DR (2018) Development of foundational movement skills: a conceptual model for physical activity across the lifespan. Sports Med 48(7):1533–1540

Javed AR, Beg MO, Asim M, Baker T, Al-Bayatti AH (2020) AlphaLogger: detecting motion-based side-channel attack using smartphone keystrokes. J Ambient Intell Human Comput. https://doi.org/10.1007/s12652-020-01770-0

Khan SU, Islam N, Jan Z, Din IU, Khan A, Faheem Y (2019) An e-health care services framework for the detection and classification of breast cancer in breast cytology images as an IoMT application. Fut Gener Comput Syst 98:286–296

Lee IM, Shiroma EJ, Evenson KR, Kamada M, LaCroix AZ, Buring JE (2018) Accelerometer-measured physical activity and sedentary behavior in relation to all-cause mortality: the Women’s Health Study. Circulation 137(2):203–205

Xie J, Wen D, Liang L, Jia Y, Gao L, Lei J (2018) Evaluating the validity of current mainstream wearable devices in fitness tracking under various physical activities: comparative study. JMIR mHealth uHealth 6(4):e94

Lee I-M, Shiroma EJ, Lobelo F, Puska P, Blair SN, Katzmarzyk PT et al, Lancet Physical Activity Series Working Group (2012) Effect of physical inactivity on major non-communicable diseases worldwide: an analysis of burden of disease and life expectancy. Lancet 380(9838):219–229

Chekroud SR, Gueorguieva R, Zheutlin AB, Paulus M, Krumholz HM, Krystal JH, Chekroud AM (2018) Association between physical exercise and mental health in 1.2 million individuals in the USA between 2011 and 2015: a cross-sectional study. Lancet Psychiatry 5(9):739–746

Wang L, Gu T, Tao X, Lu J (2012) A hierarchical approach to real-time activity recognition in body sensor networks. Pervasive Mob Comput 8(1):115–130

Cook DJ, Augusto JC, Jakkula VR (2009) Ambient intelligence: technologies, applications, and opportunities. Pervasive Mob Comput 5(4):277–298

Guo J, Yong M, Xiong M, Liu Y, Jingxuan G (2019) Activity feature solving based on TF-IDF for activity recognition in smart homes. Complexity 2019

Attal F, Mohammed S, Dedabrishvili M, Chamroukhi F, Oukhellou L, Amirat Y (2015) Physical human activity recognition using wearable sensors. Sensors 15(12):31314–31338

Álvarez de la Concepción MÁ, Soria Morillo LM, Álvarez García JA, González-Abril L (2017) Mobile activity recognition and fall detection system for elderly people using Ameva algorithm. Pervasive Mob Comput 34:3–13

Shoaib M, Bosch S, Incel OD, Scholten H, Havinga PJM (2015) A survey of online activity recognition using mobile phones. Sensors 15(1):2059–2085

Habibzadeh H, Dinesh K, Shishvan OR, Boggio-Dandry A, Sharma G, Soyata T (2019) A survey of healthcare internet of things (HIoT): a clinical perspective. IEEE Internet Things J 7(1):53–71

Rahmani AM, Thanigaivelan NK, Gia TN, Granados J, Negash B, Liljeberg P, Tenhunen H (2015) Smart e-health gateway: bringing intelligence to internet-of-things based ubiquitous healthcare systems. In: 2015 12th annual IEEE consumer communications and networking conference (CCNC). IEEE, pp 826–834

Wang PW, Ding ZJ, Jiang CJ, Zhou MC (2013) Design and implementation of a web-service-based public-oriented personalized health care platform. IEEE Trans Syst Man Cybern Syst 43(4):941–957

Zhou J, Wang J, Wang J (2019) A simulation engine for stochastic timed Petri nets and application to emergency healthcare systems. IEEE/CAA J Automatica Sinica 6(4):969–980

Amir O, Grosz BJ, Gajos KZ, Swenson SM, Sanders LM (2014) AI support of teamwork for coordinated care of children with complex conditions. In: Proceedings of the AAAI fall symposium: expanding the boundaries of health informatics using AI. AAAI Press

Amir O, Grosz BJ, Law EL, Stern R (2013) Collaborative health care plan support. In: Proceedings of the 12th international conference on Autonomous agents and multiagent systems. ACM

Garlan D, Schmerl B (2007) The RADAR architecture for personal cognitive assistance. Int J Softw Eng Knowl Eng 17(02):171–190

Kuziemsky CE, Harris A (2019) An agent based framework for healthcare teamwork. In: Proceedings of the 2019 2nd international conference on information science and systems, pp 271–275

Polu SK (2019) Modeling of efficient multi-agent based mobile health care system. Int J Innov Res Sci Technol 5(8):10–14

Yorke-Smith N, Saadati S, Myers KL, Morley DN (2012) The design of a proactive personal agent for task management. Int J Artif Intell Tools 21(01):1250004

Menaspà P (2015) Effortless activity tracking with Google Fit. Br J Sports Med 49(24):1598

Islam N, Faheem Y, Din IU, Talha M, Guizani M, Khalil M (2019) A blockchain-based fog computing framework for activity recognition as an application to e-healthcare services. Fut Gener Comput Syst 100:569–578

Amir O, Grosz BJ, Gajos KZ, Swenson SM, Sanders LM (2015) From care plans to care coordination: opportunities for computer support of teamwork in complex healthcare. In: Proceedings of the 33rd annual ACM conference on human factors in computing systems, pp 1419–1428

Tambe M (2008) Electric Elves: what went wrong and why. AI Mag 29(2):23

Wagner T, Phelps J, Guralnik V, VanRiper R (2004) An application view of coordinators: coordination managers for first responders. In: AAAI, pp 908–915

Carman KL, Dardess P, Maurer M, Sofaer S, Adams K, Bechtel C, Sweeney J (2013) Patient and family engagement: a framework for understanding the elements and developing interventions and policies. Health Affairs 32(2):223–231

Paganelli F, Giuli D (2007) An ontology-based context model for home health monitoring and alerting in chronic patient care networks. In: 21st international conference on advanced information networking and applications workshops (AINAW’07), vol 2. IEEE, pp 838–845

de Toledo P, Jiménez S, Roca J, Alonso A, Hernandez C et al (2006) Telemedicine experience for chronic care in COPD. IEEE Trans Inform Technol Biomed 10(3):567–573

Shoaib M, Bosch S, Incel OD, Scholten H, Havinga PJM (2014) Fusion of smartphone motion sensors for physical activity recognition. Sensors 14(6):10146–10176

Javed AR, Sarwar MU, Khan S, Iwendi C, Mittal M, Kumar N (2020) Analyzing the effectiveness and contribution of each axis of tri-axial accelerometer sensor for accurate activity recognition. Sensors 20(8):2216

Sarwar MU, Javed AR (2019) Collaborative health care plan through crowdsource data using ambient application. In: 2019 22nd international multitopic conference (INMIC). IEEE, pp 1–6

Shoaib M, Scholten H, Havinga PJM (2013) Towards physical activity recognition using smartphone sensors. In: 2013 IEEE 10th international conference on ubiquitous intelligence and computing and 2013 IEEE 10th international conference on autonomic and trusted computing. IEEE, pp 80–87

Sarwar MU, Javed AR, Kulsoom F, Khan S, Tariq U, Bashir AK (2020) PARCIV: recognizing physical activities having complex interclass variations using semantic data of smartphone. Software: Practice and Experience

Mannini A, Sabatini AM (2010) Machine learning methods for classifying human physical activity from on-body accelerometers. Sensors 10(2):1154–1175

Lu Y, Wei Y, Liu L, Zhong J, Sun L, Liu Y (2017) Towards unsupervised physical activity recognition using smartphone accelerometers. Multimedia Tools Appl 76(8):10701–10719

Chen Z, Jiang C, Xie L (2018) A novel ensemble ELM for human activity recognition using smartphone sensors. IEEE Trans Ind Inform 15(5):2691–2699

Anguita D, Ghio A, Oneto L, Parra X, Reyes-Ortiz JL (2013) A public domain dataset for human activity recognition using smartphones. In: ESANN, vol 3, p 3

Din IU, Guizani M, Rodrigues JJPC, Hassan S, Korotaev VV (2019) Machine learning in the internet of things: designed techniques for smart cities. Fut Gener Comput Syst 100:826–843

Altman NS (1992) An introduction to kernel and nearest-neighbor nonparametric regression. Am Stat 46(3):175–185

Nakano K, Chakraborty B (2017) Effect of dynamic feature for human activity recognition using smartphone sensors. In: 2017 IEEE 8th international conference on awareness science and technology (iCAST). IEEE, pp 539–543

Kwapisz JR, Weiss GM, Moore SA (2011) Activity recognition using cell phone accelerometers. ACM SIGKDD Explor Newsl 12(2):74–82

Wei Y, Liu L, Zhong J, Lu Y, Sun L (2015) Unsupervised race walking recognition using smartphone accelerometers. In: International conference on knowledge science, engineering and management. Springer, pp 691–702

Author information

Contributions

All authors gave equal contribution. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Javed, A.R., Sarwar, M.U., Beg, M.O. et al. A collaborative healthcare framework for shared healthcare plan with ambient intelligence. Hum. Cent. Comput. Inf. Sci. 10, 40 (2020). https://doi.org/10.1186/s13673-020-00245-7

DOI: https://doi.org/10.1186/s13673-020-00245-7