Abstract

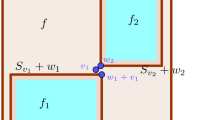

Discrete tori are \({\mathbb {Z}}_m^N\) thought of as vertices of graphs \({\mathcal {C}}_m^N\) whose adjacencies encode the Cartesian product structure. Space-limiting refers to truncation to a symmetric path neighborhood of the zero element and spectrum-limiting in this case refers to corresponding truncation in the isomorphic Fourier domain. Composition spatio-spectral limiting (SSL) operators are analogues of classical time and band limiting operators. Certain adjacency-invariant spaces of vectors defined on \({\mathbb {Z}}_m^N\) are shown to have bases consisting of Fourier transforms of eigenvectors of SSL operators. We show that when \(m=3\) or \(m=4\), all eigenvectors of SSL arise in this way. We study the structure of corresponding invariant spaces when \(m\ge 5\) and give an example to indicate that the relationship between eigenvectors of SSL and the corresponding adjacency-invariant spaces should extend to \(m\ge 5\).
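The definitions in the abstract can be made concrete with a small numerical sketch. The following is an illustration under stated assumptions, not the paper's construction: the path distance to zero is taken to be \(\sum _i \min (v_i, m-v_i)\), the same radius K is used in space and in frequency, and the SSL operator is formed as the composition PQP. The names `path_distance` and `ssl_matrix` are hypothetical.

```python
import itertools
import numpy as np

def path_distance(v, m):
    # Graph distance from v to 0 in the Cartesian product of N cycles of length m.
    return sum(min(c, m - c) for c in v)

def ssl_matrix(m, N, K):
    """Matrix of P Q P on Z_m^N (assumed form of an SSL operator)."""
    shape = (m,) * N
    verts = itertools.product(range(m), repeat=N)  # last coordinate varies fastest
    mask = np.array([path_distance(v, m) <= K for v in verts],
                    dtype=float).reshape(shape)

    def Q(f):
        # Space-limiting: truncate to the path neighborhood of zero.
        return mask * f

    def P(f):
        # Spectrum-limiting: the same truncation applied in the Fourier domain.
        return np.fft.ifftn(mask * np.fft.fftn(f))

    n = m ** N
    T = np.zeros((n, n), dtype=complex)
    for j in range(n):
        e = np.zeros(n)
        e[j] = 1.0
        T[:, j] = P(Q(P(e.reshape(shape)))).ravel()
    return T

T = ssl_matrix(m=4, N=2, K=1)
eigenvalues = np.linalg.eigvalsh((T + T.conj().T) / 2)
```

Since P and Q are orthogonal projections in this sketch, PQP is self-adjoint with eigenvalues in \([0,1]\); the eigenvectors returned by a Hermitian eigensolver play the role of the discrete analogues of prolate functions.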


Notes

The term discrete torus may also refer to the two-dimensional Cartesian products \({\mathcal {C}}_m\square {\mathcal {C}}_n\).

References

Alon, N., Feldheim, O.N.: The Brunn–Minkowski inequality and nontrivial cycles in the discrete torus. SIAM J. Discrete Math. 24, 892–894 (2010)

Alon, N., Klartag, B.: Economical toric spines via Cheeger’s inequality. J. Topol. Anal. 1, 101–111 (2009)

Banu Priya, S., Parthiban, A., Abirami, P.: Equitable power domination number of total graph of certain graphs. J. Phys. Conf. Ser. 1531, 012073 (2020)

Barrera, R., Ferrero, D.: Power domination in cylinders, tori, and generalized Petersen graphs. Networks 58, 43–49 (2011)

Benjamini, I., Ellis, D., Friedgut, E., Keller, N., Sen, A.: Juntas in the \(\ell ^1\)-grid and Lipschitz maps between discrete tori. Random Struct. Algorithms 49, 253–279 (2016)

Bezrukov, S.L.: Edge isoperimetric problems on graphs. Graph theory and combinatorial biology (Balatonlelle, 1996), Bolyai Soc. Math. Stud., vol. 7. János Bolyai Math. Soc., Budapest, pp. 157–197 (1999)

Bezrukov, S.L., Elsässer, R.: Edge-isoperimetric problems for Cartesian powers of regular graphs. Graph-theoretic concepts in computer science (Boltenhagen, 2001), Lecture Notes in Comput. Sci., vol. 2204. Springer, Berlin, pp. 9–20 (2001)

Bobkov, S., Houdré, C., Tetali, P.: The subgaussian constant and concentration inequalities. Isr. J. Math 156, 255–283 (2006)

Bollobás, B., Kindler, G., Leader, I., O’Donnell, R.: Eliminating cycles in the discrete torus. Algorithmica 50, 446–454 (2008)

Bollobás, B., Leader, I.: An isoperimetric inequality on the discrete torus. SIAM J. Discrete Math. 3, 32–37 (1990)

Bouyrie, R.: An unified approach to the junta theorem for discrete and continuous models, Arxiv e-prints 1702.00753, (2017)

Brouwer, A.E., Haemers, W.H.: Spectra of Graphs. Universitext, Springer, New York (2012)

Bruck, J., Cypher, R., Ho, C.-T.: Fault-tolerant meshes with small degree. SIAM J. Comput. 26, 1764–1784 (1997)

Carlson, T.A.: The edge-isoperimetric problem for discrete tori. Discrete Math. 254(1), 33–49 (2002)

Chung, F.R.K.: Spectral graph theory. CBMS Regional Conference Series in Mathematics, vol. 92. American Mathematical Society, Providence (1997)

Cicerone, S., Stefano, G.D., Handke, D.: Self-spanner graphs. Discrete Appl. Math. 150, 99–120 (2005)

Das, S., Gahlawat, H.: Variations of cops and robbers game on grids. Discrete Appl. Math. (2020)

Dobrev, S., Vrťo, I.: Optimal broadcasting in tori with dynamic faults. Parallel Process. Lett. 12(1), 17–22 (2002)

Dyer, M., Galanis, A., Goldberg, L., Jerrum, M., Vigoda, E.: Random walks on small world networks. ACM Trans. Algorithms (TALG) 16, 1–33 (2020)

Candès, E.J., Fernandez-Granda, C.: Towards a mathematical theory of super-resolution. Commun. Pure Appl. Math. 67(6), 906–956 (2014)

Galvin, D.: Sampling independent sets in the discrete torus. Random Struct. Algorithms 33(3), 356–376 (2008)

Greengard, P., Serkh, K.: On generalized prolate spheroidal functions, Arxiv e-prints arXiv:1811.02733, (2018)

Grünbaum, F.A.: Eigenvectors of a Toeplitz matrix: discrete version of the prolate spheroidal wave functions. SIAM J. Algebr. Discrete Methods (2), 136–141 (1981)

Grünbaum, F.A.: Toeplitz matrices commuting with tridiagonal matrices. Linear Algebra Appl. 40, 25–36 (1981)

Hartnell, B.L., Whitehead, C.A.: Decycling sets in certain Cartesian product graphs with one factor complete. Australas. J Combin. 40, 305 (2008)

Hogan, J.A., Lakey, J.: Duration and Bandwidth Limiting. Prolate Functions, Sampling, and Applications. Birkhäuser, Boston (2012)

Hogan, J.A., Lakey, J.: An analogue of Slepian vectors on Boolean hypercubes. J. Fourier Anal. Appl. 25(4), 2004–2020 (2019)

Hogan, J.A., Lakey, J.: Spatio-spectral limiting on Boolean cubes. J. Fourier Anal. Appl. 27, 40 (2021)

Jain, A.K., Ranganath, S.: Extrapolation algorithms for discrete signals with application in spectral estimation. IEEE Trans. Acoust. Speech Signal Process. 29, 830–845 (1981)

Jaming, P., Speckbacher, M.: Concentration estimates for finite expansions of spherical harmonics on two-point homogeneous spaces via the large sieve principle, Arxiv e-prints arXiv:2004.02474 (2020)

Khodkar, A., Sheikholeslami, S.M.: On perfect double dominating sets in grids, cylinders and tori. Australas. J. Combin. 37, 131–139 (2007)

Koh, K.M., Soh, K.W.: On the power domination number of the Cartesian product of graphs. AKCE Int. J. Graphs Combin. 16, 253–257 (2019)

Landau, H.: Extrapolating a band-limited function from its samples taken in a finite interval. IEEE Trans. Inf. Theory 32(4), 464–470 (1986)

Landau, H.J., Pollak, H.O.: Prolate spheroidal wave functions, Fourier analysis and uncertainty. II. Bell Syst. Tech. J. 40, 65–84 (1961)

Landau, H.J., Pollak, H.O.: Prolate spheroidal wave functions, Fourier analysis and uncertainty. III. The dimension of the space of essentially time- and band-limited signals. Bell Syst. Tech. J. 41, 1295–1336 (1962)

Nowakowski, R., Winkler, P.: Vertex-to-vertex pursuit in a graph. Discrete Math. 43, 235–239 (1983)

Osipov, A., Rokhlin, V., Xiao, H.: Prolate spheroidal wave functions of order zero, Applied Mathematical Sciences, vol. 187. Springer, New York (2013). Mathematical tools for bandlimited approximation

Plattner, A., Simons, F.J.: Spatiospectral concentration of vector fields on a sphere. Appl. Comput. Harmon. Anal. 36(1), 1–22 (2014)

Sammer, M., Tetali, P.: Concentration on the discrete torus using transportation. Combin. Probab. Comput. 18, 835–860 (2009)

Simons, F.J., Dahlen, F.A., Wieczorek, M.A.: Spatiospectral concentration on a sphere. SIAM Rev. 48(3), 504–536 (2006)

Slepian, D.: Prolate spheroidal wave functions, Fourier analysis and uncertainty. IV. Extensions to many dimensions; generalized prolate spheroidal functions. Bell Syst. Tech. J. 43, 3009–3057 (1964)

Slepian, D.: Prolate spheroidal wave functions, Fourier analysis, and uncertainty. V—the discrete case. Bell Syst. Tech. J. 57, 1371–1430 (1978)

Slepian, D., Pollak, H.O.: Prolate spheroidal wave functions, Fourier analysis and uncertainty. I. Bell Syst. Tech. J. 40, 43–63 (1961)

Speckbacher, M., Hrycak, T.: Concentration estimates for band-limited spherical harmonics expansions via the large sieve principle. J. Fourier Anal. Appl. 26(3), 18, Paper No. 38 (2020)

Thomson, D.J.: Spectrum estimation and harmonic analysis. Proc. IEEE 70, 1055–1096 (1982)

Tsitsvero, M., Barbarossa, S., Di Lorenzo, P.: Signals on graphs: uncertainty principle and sampling. IEEE Trans. Signal Process. 64, 4845–4860 (2016)

Xu, W.Y., Chamzas, C.: On the periodic discrete prolate spheroidal sequences. SIAM J. Appl. Math. 44, 1210–1217 (1984)

Zemen, T., Mecklenbräuker, C.F.: Time-variant channel estimation using discrete prolate spheroidal sequences. IEEE Trans. Signal Process. 53, 3597–3607 (2005)

Zhu, Z., Wakin, M.B.: Time-limited Toeplitz operators on abelian groups: applications in information theory and subspace approximation, Arxiv e-prints 1711.07956 (2017)

Acknowledgements

The authors thank the anonymous referees of an earlier draft of this work for suggesting the addition of several figures and examples, along with other suggestions to help to clarify the technical contents.

Additional information

Communicated by Götz Pfander.

Appendices

Proofs of Auxiliary lemmas for Theorem 22

Proof of Lemma 18

The basic idea is similar to that of the proof of Lemma 3. We begin with a computation of \([A_{+,\ell },A_{-,\ell '}]\) for \(1\le \ell ,\ell '\le M\).

To get from the first line to the second we used the condition that if \(d_\nu (v)=\ell \) and \(d_\mu (v_\nu ^-)=\ell '-1\) then either \(\nu =\mu \) and \(\ell '=\ell \) or else \(\mu \ne \nu \) and \(d_\mu (v)=\ell '-1\). To get from the third to fourth lines we used that if \(\mu \ne \nu \) then \(d_\nu (v)=d_\nu (v_\mu ^+)\) and to get from the fourth to fifth line we used that if \(d_\mu (v)=\ell '-1\) and \(d_\nu (v_\mu ^+)=\ell \) then either \(\mu =\nu \) and \(\ell '=\ell \) or \(\mu \ne \nu \). We conclude that

In particular, if \(\ell '\ne \ell \) then \([ A_{-,\ell '}, \, A_{+,\ell }]=0\), which is Lemma 18.i.

For the next set of identities in Lemma 18.ii, first consider the case \(\ell =1\). In this case if \(d_\mu (v)=\ell -1=0\) then using the convention above, \( f((v_\mu ^+)_\mu ^-) =2f(v)\) while if \(d_\nu (v)=\ell =1\) then \(f((v_\nu ^-)_\nu ^+)=f(v)+f({\tilde{v}}_\nu )\) and

This establishes the case \(\ell =1\) of Lemma 18.ii.

In the case \(\ell =M\), again using the conventions above, if \(d_\nu (v)=M\) then \(f((v_\nu ^-)_\nu ^+) =2f(v)\) while if \(d_\mu (v)=M-1\) then \( f((v_\mu ^+)_\mu ^-)=f(v)+f({\tilde{v}}_\mu )\) so

This establishes the case \(\ell =M\) of Lemma 18.ii.

Finally, let \(1<\ell <M\). In this case, \((v_\mu ^+)_\mu ^-=(v_\nu ^-)_\nu ^+=v\) for each \(\mu ,\nu \) so

This completes the proof of Lemma 18.ii.

For Lemma 18.iii, a simple calculation shows that \(([R_\ell ,A_{+,\ell }]f)(v)=\sum _{d_\nu (v)=\ell } f((v_\nu ^-)_\nu ^\sim )\). We then compute \([A_{-,\ell }, [R_\ell ,A_{+,\ell }]]\) as follows.

Subtracting \(([R_\ell ,A_{+,\ell }] A_{-,\ell }f)(v) \) from both sides gives Lemma 18.iii.

To prove Lemma 18.iv, let \(\ell \ne \ell '\). Recalling that \(([R_\ell ,A_{+,\ell }]f)(v)=\sum _{d_\nu (v)=\ell } f((v_\nu ^-)_\nu ^\sim )\),

Here we observed that if \(d_\nu (v)=\ell '-1\) and \(d_\mu (v_\nu ^+)=\ell \) then \(\mu \ne \nu \) since \(\ell \ne \ell '\). Similarly, if \(d_\mu (v)=\ell \) and \(d_\nu (v_\mu ^-)=\ell '-1\) then \(\mu \ne \nu \). Subtracting \( [R_\ell ,A_{+,\ell }] A_{-,\ell '}f\) from both sides gives Lemma 18.iv and completes the proof of the lemma. \(\square \)

Proof of Lemma 19

Since we finish with a linear term to the left of \(A_{+,\ell }\) in each case, it suffices to assume that \(p({\varvec{R}})\) is a monomial of the form \(p({\varvec{R}})=R_1^{\nu _1}\cdots R_{M-1}^{\nu _{M-1}}\). First consider the case \(\ell =1\). We wish to show that \(p({\varvec{R}})A_{+,1}=A_{+,1}p_{+,1}({\varvec{R}})\) for an appropriate \(p_{+,1}\). Since \([R_j,A_{+,1}]=0\) for each \(j>1\) we evidently have \(p({\varvec{R}})A_{+,1}=R_1^{\nu _1}A_{+,1}R_2^{\nu _2}\cdots R_{M-1}^{\nu _{M-1}}\). By iterating Lemma 20.i we have \(R_1^{\nu _1}A_{+,1}=A_{+,1}(I+R_1)^{\nu _1}\) so we may take \(p_{+,1}=(I+R_1)^{\nu _1}R_2^{\nu _2}\cdots R_{M-1}^{\nu _{M-1}}\). The case \(\ell =M\) is similar, yielding \(p({\varvec{R}})A_{+,M}= A_{+,M}R_1^{\nu _1}\cdots R_{M-2}^{\nu _{M-2}}(R_{M-1}-I)^{\nu _{M-1}}\). Now consider the case \(1<\ell <M\). By Lemma 20.ii for the same \(p({\varvec{R}})\) we can write

Splitting this sum into its monomial pieces then reduces the problem to showing that, for each \(\nu =1,2,\dots \) we can write

for appropriate p and q. We can proceed by induction. Assuming such an expression is valid for \(\nu \), we have

By Lemma 20.ii we can write

Substituting this into (13) and gathering like terms then proves the induction step and completes the proof of Lemma 19. \(\square \)
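The iteration step used for the case \(\ell =1\) in the proof above can be spelled out as follows. This is a sketch that assumes Lemma 20.i reads \([R_1,A_{+,1}]=A_{+,1}\), which is what the quoted identity \(R_1^{\nu _1}A_{+,1}=A_{+,1}(I+R_1)^{\nu _1}\) suggests; the exponent n is introduced here only for the induction.

```latex
\begin{align*}
R_1 A_{+,1} &= A_{+,1} R_1 + [R_1, A_{+,1}] = A_{+,1}(I + R_1), \\
R_1^{\,n+1} A_{+,1} &= R_1 \bigl( R_1^{\,n} A_{+,1} \bigr)
   = R_1 A_{+,1} (I + R_1)^{n}
   = A_{+,1} (I + R_1)^{n+1}.
\end{align*}
```

The same argument with \([R_{M-1},A_{+,M}]=-A_{+,M}\) gives \(R_{M-1}^{\,n}A_{+,M}=A_{+,M}(R_{M-1}-I)^{n}\).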

Proof of Lemma 20

To prove Lemma 20.i in the case \(\ell =1\) we have

Here we use the fact that if \(d_\nu (v)=1\) then \((v_\nu ^-)_\nu ^\sim =v_\nu ^-\). The proof that \([R_{M-1},A_{+,M}]=-A_{+,M}\) is essentially the same. This proves Lemma 20.i. To prove Lemma 20.ii,

Here we observed that if \(d_\mu (v)=\ell \) then \(\{\nu :d_\nu (v)=\ell \}\cup \{\nu :d_\nu (v)=\ell -1\} =\{\nu :d_\nu (v_\mu ^-)=\ell \}\cup \{\nu :d_\nu (v_\mu ^-)=\ell -1\}\) since \(d_\mu (v_\mu ^-)=\ell -1\) and \(d_\nu (v_\mu ^-)=d_\nu (v)\) if \(\mu \ne \nu \).

To prove Lemma 20.iii, using again that \( ([R_\ell ,A_{+,\ell }]f)(v)=\sum _{d_\nu (v)=\ell } f((v_\nu ^-)_\nu ^\sim )\),

Subtracting \(([R_\ell ,A_{+,\ell }] R_\ell f)(v)\) from both sides proves Lemma 20.iii. \(\square \)

Proof of Lemma 21

The proof is by induction on k. If \(k=1\) then by Lemma 19, we can write \(p_1({\varvec{R}})A_{+,\ell +1}=c_1 A_{+,\ell } p_{1,+}({\varvec{R}}) +c_2 R_\ell A_{+,\ell } q_{1,+}({\varvec{R}})\) for certain constants \(c_1,c_2\) depending on \(q_0,\dots , q_M\). If g is a common eigenvector of \({\varvec{R}}\) then we can write \(p_1({\varvec{R}})A_{+,\ell +1} g=(c_1 A_{+,\ell } p_{1,+}({\varvec{\lambda }}) g +c_2 R_\ell A_{+,\ell } q_{1,+}({\varvec{\lambda }}) g) =(c_1' A_{+,\ell } g +c_2' R_\ell A_{+,\ell } g)\) since \(p_{1,+}({\varvec{\lambda }}) \) and \(q_{1,+}({\varvec{\lambda }}) \) are in \({\mathbb {C}}\). Suppose that for fixed k, any \(p_k({\varvec{R}})A_{+,\ell _k}\cdots p_1({\varvec{R}})A_{+,1} g\) can be written as a linear combination of at most \(2^{k-1}\) terms of the form \(B_{+,\ell _k}\cdots B_{+,\ell _1}g\) with \(B_{+,\ell }\) as in the lemma, for the same \(g\in {\mathcal {W}}\). Let f have this form and set \(h=p_{k+1}({\varvec{R}}) A_{+,\ell _{k+1}} f\). Set \(\ell =\ell _{k+1}\). Again by Lemma 19, we can write

By the induction hypothesis \(p_{+,k+1}({\varvec{R}}) f\) is a linear combination of at most \(2^{k-1}\) terms of the form \(B_{+,\ell _k}^{(1)}\cdots B_{+,\ell _1}^{(1)} g\) and likewise \(q_{+,k+1}({\varvec{R}})f\) with terms \(B_{+,\ell _k}^{(2)}\cdots B_{+,\ell _1}^{(2)} g\). Applying (14) we conclude then that \(p_{k+1}({\varvec{R}}) A_{+,\ell } f\) can be expressed as a sum of at most \(2^{k}\) terms of the form \(B_{+,\ell _k}\cdots B_{+,\ell _1}g\) for the same \(g\in {\mathcal {W}}\). \(\square \)

Proof of Proposition 9

The main idea is to associate with each r-element set \(S\subset \{1,\dots , N\}\) a Hadamard-type matrix whose columns form an orthonormal basis for the \(2^r\)-dimensional space \(\ell ^2(\Sigma _{r,S})\) of vectors f such that \(f(v)\ne 0\) and \(d_\nu (v)>0\) implies \(\nu \in S\). The standard Hadamard matrix of size \(2^r\times 2^r\) is (up to normalization) the orthogonal matrix \(\otimes ^r H\) where \(H=\frac{1}{\sqrt{2}}\left( {\begin{matrix}1 &{} 1\\ 1&{} -1\end{matrix}}\right) \). Each column of \(\otimes ^r H\) has the form \(\alpha _{\epsilon _1}\otimes \cdots \otimes \alpha _{\epsilon _{r}}\) where \(\sqrt{2}\, \alpha _0=\left( {\begin{matrix}1 \\ 1\end{matrix}}\right) \) and \(\sqrt{2}\, \alpha _1=\left( {\begin{matrix}1 \\ -1\end{matrix}}\right) \). Specifically, the kth column of \(\otimes ^r H\) has \(\alpha _{\epsilon _i}\) in the ith tensor slot, according to the binary expansion \(k=1+\sum _{i=1}^{r} \epsilon _i 2^{i-1}\), \(k=1,\dots , 2^r\). To view these vectors as vertex functions, first, for simplicity, take \(S=\{1,\dots , r\}\). Any vertex \(v\in \Sigma _{r,S}\) has the form \(v=\sum _{\nu =1}^r (-1)^{\epsilon _\nu } e_\nu \) where \(\epsilon _\nu \in \{0,1\}\). Thus v is uniquely associated among vertices in \(\Sigma _{r,S}\) with \(\epsilon (v)=(\epsilon _1,\dots ,\epsilon _r)\in \{0,1\}^r\). Suppose now that \(\gamma =(\gamma _1,\dots ,\gamma _r)\in \{0,1\}^r\). Associate with \(\gamma \) the Hadamard vector \(h_\gamma (v)=2^{-r/2} (-1)^{\langle \epsilon (v),\, \gamma \rangle }\). When \(S=\{1,\dots , r\}\), and \((\gamma _1,\dots ,\gamma _r)\) is associated with the integer \(n(\gamma )=1+\sum _{p=1}^r \gamma _p 2^{p-1}\), \(h_\gamma \) is associated with the \(n(\gamma )\)th column of \(\otimes ^r H\) and can also be expressed as \(h_\gamma =\alpha _{\gamma _1}\otimes \cdots \otimes \alpha _{\gamma _r}\).

We claim that \(\rho _k h_\gamma =h_\gamma \) if \(\gamma _k=0\) and \(\rho _k h_\gamma =-h_\gamma \) if \(\gamma _k=1\). To see this, observe that \(\epsilon _k({\tilde{v}}_k)=1-\epsilon _k(v)\), \(k=1,\dots , r\). Therefore

since \((-1)^{-\epsilon }=(-1)^\epsilon \) if \(\epsilon \in \{0,1\}\). This verifies the claim. It is well known that the matrix \(\otimes ^r H\) is orthogonal, so when \(S=\{1,\dots , r\}\), the vectors \(h_{\gamma ,S}\) form an orthonormal basis for \(\ell ^2(\Sigma _{r,S})\) as \(\gamma \) ranges over \(\{0,1\}^r\).
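The claim about \(\rho _k\) and the tensor form of \(h_\gamma \) can be checked numerically. The sketch below assumes that \(\rho _k\) acts by flipping the sign of the kth coordinate, that is, \(\epsilon _k\mapsto 1-\epsilon _k\), and orders the sign patterns with \(\epsilon _1\) varying slowest so that the ordering agrees with `np.kron`; the function names are hypothetical.

```python
import itertools
from functools import reduce
import numpy as np

# alpha_0 and alpha_1 as in the text, already normalized.
ALPHA = {0: np.array([1.0, 1.0]) / np.sqrt(2),
         1: np.array([1.0, -1.0]) / np.sqrt(2)}

def sign_patterns(r):
    # All epsilon in {0,1}^r, with epsilon_1 varying slowest (np.kron ordering).
    return list(itertools.product((0, 1), repeat=r))

def hadamard_vector(gamma):
    # h_gamma(v) = 2^{-r/2} (-1)^{<epsilon(v), gamma>}
    r = len(gamma)
    vals = [(-1) ** sum(e * g for e, g in zip(eps, gamma))
            for eps in sign_patterns(r)]
    return np.array(vals, dtype=float) / 2 ** (r / 2)

def reflect(f, k, r):
    # (rho_k f)(v) = f(v with coordinate k sign-flipped); k is 0-based here.
    pats = sign_patterns(r)
    index = {eps: i for i, eps in enumerate(pats)}
    out = np.empty_like(f)
    for i, eps in enumerate(pats):
        flipped = eps[:k] + (1 - eps[k],) + eps[k + 1:]
        out[i] = f[index[flipped]]
    return out

r = 3
basis = np.column_stack([hadamard_vector(g) for g in sign_patterns(r)])
gram = basis.T @ basis  # should be the identity matrix
```

With this ordering, `hadamard_vector(gamma)` coincides with `reduce(np.kron, [ALPHA[g] for g in gamma])`, and `reflect(h, k, r)` returns `h` when `gamma[k] == 0` and `-h` when `gamma[k] == 1`.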

To address the general case of \(S=\{\beta _1,\dots ,\beta _r\}\subset \{1,\dots , N\}\) we assume that the \(\beta _i\) are listed in increasing order. Again, each \(v\in \Sigma _{r,S}\) is uniquely associated with an \(\epsilon =(\epsilon _{\beta _1},\dots ,\epsilon _{\beta _r})\) by \(v=\sum _{\nu =1}^r (-1)^{\epsilon _{\beta _\nu }} e_{\beta _\nu }\). We can then assign accordingly \(h_{\gamma ,S}(v)=2^{-r/2} (-1)^{\langle \gamma ,\epsilon \rangle }\). To define the vectors \(h_{\gamma ,S}\) globally (on \(\Sigma _{r}\)) we define a block matrix \({\varvec{H}}_r\) of size \(2^r\left( {\begin{array}{c}N\\ r\end{array}}\right) \times 2^r\left( {\begin{array}{c}N\\ r\end{array}}\right) \) having \(2^r\times 2^r\) diagonal blocks each containing an isomorphic copy of \(\otimes ^r H\) whose columns extend \(h_{\gamma ,S}\) when the subsets \(S\subset \{1,\dots , N\}\) are ordered lexicographically, say. In this way we can associate each \(h_{\gamma ,S}\) with a unique column of \({\varvec{H}}_r\). We refer to each \(h_{\gamma ,S}\) as a Hadamard-type vector.

Lemma 23

Fix \(r\in \{1,\dots , N\}\) and for each \(\gamma \in \{0,1\}^r\) and \(S=\{\beta _1,\dots ,\beta _r\}\subset \{1,\dots , N\}\) define \(h_{\gamma ,S}\) as above. Then

(i) the vectors \(h_{\gamma ,S}\) form an orthonormal basis for \(\ell ^2(\Sigma _r)\);

(ii) if \(\# \{k: \gamma _k=0\}=s\) then \( A_0 h_{\gamma ,S} =(2s-r)\, h_{\gamma ,S}\);

(iii) \(\mathrm{span}\{h_{\gamma ,S}:\, A_0 h_{\gamma ,S}=(2s-r) h_{\gamma ,S}\}\) has dimension \(\left( {\begin{array}{c}N\\ r\end{array}}\right) \left( {\begin{array}{c}r\\ s\end{array}}\right) \).

Proof

(i) follows since the \(\{h_{\gamma ,S}\}\) are orthonormal for each fixed S and have disjoint supports for different S. (ii) follows from Lemma 5 and the observations above. For (iii), there are \(\left( {\begin{array}{c}N\\ r\end{array}}\right) \) choices of r-element disjoint supports S and the number of s-element subsets of an r-element set is \(\left( {\begin{array}{c}r\\ s\end{array}}\right) \) (\(0\le s\le r\)). \(\square \)
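The eigenvalue multiplicities in Lemma 23 can be confirmed on a small case. The sketch below rests on an assumption that is not stated in this appendix: on each block \(\ell ^2(\Sigma _{r,S})\), \(A_0\) acts as \(\sum _{k}\rho _k\), each \(\rho _k\) being a coordinate swap in the kth tensor slot. The helper names are hypothetical.

```python
from collections import Counter
from functools import reduce
from math import comb
import numpy as np

SWAP = np.array([[0.0, 1.0], [1.0, 0.0]])  # exchanges epsilon_k = 0 and 1
I2 = np.eye(2)

def a0_block(r):
    # Assumed form of A_0 on one 2^r-dimensional block: sum over k of rho_k.
    terms = []
    for k in range(r):
        factors = [SWAP if j == k else I2 for j in range(r)]
        terms.append(reduce(np.kron, factors))
    return sum(terms)

def a0_spectrum(N, r):
    # Block-diagonal operator with one identical block per r-element subset S.
    full = np.kron(np.eye(comb(N, r)), a0_block(r))
    eig = np.linalg.eigvalsh(full)
    return Counter(int(round(x)) for x in eig)

spectrum = a0_spectrum(N=4, r=2)
```

For \(N=4\), \(r=2\) the eigenvalue \(2s-r\) should appear \(\left( {\begin{array}{c}4\\ 2\end{array}}\right) \left( {\begin{array}{c}2\\ s\end{array}}\right) \) times, in agreement with (ii) and (iii).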

By the injectivity of \(A_+\) on \(\ell ^2(\Sigma _r)\) (\(r<N/2\)) and since \(A_+\) is the adjoint of \(A_-\), membership in \({\mathcal {W}}_r\) is the same as being in the orthogonal complement of \(A_+ (\ell ^2(\Sigma _{r-1}))\) in \(\ell ^2(\Sigma _r)\). Since the Hadamard-type vectors that are symmetric in s coordinates span the subspace of \(\ell ^2(\Sigma _r)\) of \((2s-r)\)-eigenvectors of \(A_0\), and since an element \(A_+h\) of \(A_+ (\ell ^2(\Sigma _{r-1}))\) is a \((2s-r)\)-eigenvector of \(A_0\) precisely when its preimage h is a \((2s-1-r)\)-eigenvector of \(A_0\) on \(\ell ^2(\Sigma _{r-1})\), it follows that \({\mathcal {W}}_{r;\, 2s-r}\) is equal to the orthogonal complement of the image of the \((2s-1-r)\)-eigenspace of \(A_0\) under \(A_+\) inside the \((2s-r)\)-eigenspace of \(A_0\). These conditions can be described in terms of Hadamard-type vectors. Specifically, for a Hadamard-type vector \(h_{\gamma ',S'}\) defined on \(\Sigma _{r-1,S'}\), and S an r-element subset of \(\{1,\dots , N\}\) that contains \(S'\), define \(h_{\gamma ',S',S}\) to be the Hadamard-type vector supported in \(\Sigma _{r,S}\) such that \(h_{\gamma ',S',S}\) is the truncation on \(\Sigma _{r,S}\) of \(A_+ h_{\gamma ',S'}\). There is a unique index \(\beta \in S\setminus S'\), so \(h_{\gamma ',S',S}\) can be identified with the vector \(\alpha _{\gamma '_1}\otimes \cdots \otimes \alpha _{\gamma '_{i-1}}\otimes \alpha _0\otimes \alpha _{\gamma '_{i}}\otimes \cdots \otimes \alpha _{\gamma '_{r-1}}\) when \(\beta \) is the ith element of S listed in increasing order. Given a fixed \(h_{\gamma ',S'}\), its image under \(A_+\) can be expressed as a sum \(\sum _{\# (S\setminus S')=1} h_{\gamma ',S',S}\) of one-coordinate symmetric extensions of \(h_{\gamma ', S'}\).

Since \(A_+\) is injective from \(\ell ^2(\Sigma _{r-1})\) to \(\ell ^2(\Sigma _{r})\), when \(h_{\gamma ', S'}\) are \((2s-r-1)\)-eigenvectors of \(A_0\) in \(\ell ^2(\Sigma _{r-1})\), their extensions \(\sum _{\# (S\setminus S')=1} h_{\gamma ',S',S}\) are linearly independent \((2s-r)\)-eigenvectors of \(A_0\) in \(\ell ^2(\Sigma _r)\). The latter eigenspace has dimension \(\left( {\begin{array}{c}N\\ r\end{array}}\right) \left( {\begin{array}{c}r\\ s\end{array}}\right) \) and contains the image of the \((2s-r-1)\)-eigenvectors of \(A_0\) as a subspace of dimension \(\left( {\begin{array}{c}N\\ r-1\end{array}}\right) \left( {\begin{array}{c}r-1\\ s-1\end{array}}\right) \), accounted for by the Hadamard-type elements \(h_{\gamma ',S'}\in \ell ^2(\Sigma _{r-1})\) that are symmetric in \(s-1\) coordinates. Thus the orthogonal complement of the span of the images \(\sum _{\# (S\setminus S')=1} h_{\gamma ',S',S}\) inside the \((2s-r)\)-eigenspace of \(A_0\), which is equal to \({\mathcal {W}}_{r;\, 2s-r}\), has dimension \(\left( {\begin{array}{c}N\\ r\end{array}}\right) \left( {\begin{array}{c}r\\ s\end{array}}\right) -\left( {\begin{array}{c}N\\ r-1\end{array}}\right) \left( {\begin{array}{c}r-1\\ s-1\end{array}}\right) \). This proves the first statement of Proposition 9.
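The dimension formula just obtained can be sanity-checked by summing over s. The sketch below assumes that the subtracted term is taken to be zero when \(s=0\), and uses the fact that \(\ell ^2(\Sigma _r)\) has dimension \(2^r\left( {\begin{array}{c}N\\ r\end{array}}\right) \); the function name `dim_W` is hypothetical.

```python
from math import comb

def dim_W(N, r, s):
    # dim W_{r; 2s-r} = C(N,r) C(r,s) - C(N,r-1) C(r-1,s-1),
    # with the second term read as 0 when s = 0 (an assumption).
    image = comb(N, r - 1) * comb(r - 1, s - 1) if s >= 1 else 0
    return comb(N, r) * comb(r, s) - image

def total_dim_W(N, r):
    # Sum over the admissible values s = 0, ..., r.
    return sum(dim_W(N, r, s) for s in range(r + 1))

N, r = 5, 2
dims = [dim_W(N, r, s) for s in range(r + 1)]
total = total_dim_W(N, r)
```

The total equals \(2^r\left( {\begin{array}{c}N\\ r\end{array}}\right) -2^{r-1}\left( {\begin{array}{c}N\\ r-1\end{array}}\right) \), the dimension of \(\ell ^2(\Sigma _r)\) minus that of \(\ell ^2(\Sigma _{r-1})\), as expected when \({\mathcal {W}}_r\) is the orthogonal complement of the injective image \(A_+(\ell ^2(\Sigma _{r-1}))\).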

As for the second statement, orthogonality of the spaces \({\mathcal {W}}_{r;\, 2s-r}\) for different s follows from their being spanned by Hadamard-type vectors having different numbers of symmetric coordinates. Completeness follows from the fact that the Hadamard-type vectors \(h_{\gamma ',S'}\) span \(\ell ^2(\Sigma _{r-1})\) as \(\gamma '\) ranges over \(\{0,1\}^{r-1}\) and \(S'\) ranges over all \((r-1)\)-element subsets of \(\{1,\dots ,N\}\). The space \({\mathcal {W}}_{r;\, -r}\) is spanned by those Hadamard-type vectors in \(\ell ^2(\Sigma _r)\) that are antisymmetric in each coordinate. Since the image of \(h_{\gamma ',S'}\) under \(A_+\) is symmetric in at least one coordinate on each S, the Hadamard-type vectors \(h_{\gamma ,S}\in \ell ^2(\Sigma _{r,S})\) with \(\gamma =(1,\dots , 1)\) are automatically in the kernel of \(A_-\). The subspace \({\mathcal {W}}_{r;\, -r}\) of \({\mathcal {W}}_r\) has dimension \(\left( {\begin{array}{c}N\\ r\end{array}}\right) \). This proves Proposition 9.

We now provide a proof of the first part of Lemma 7—that if f is a \(\lambda \)-eigenvector of \(A_0\) in \(\ell ^2(\Sigma _r)\) on \({\mathcal {C}}_3^N\) and in the kernel of \(A_-\) then \(A_+^{k+1}f=0\) whenever \(2N-2k-3r-\lambda \le 0\). Fix \(s\in \{1,\dots , r\}\) and \(\lambda =2s-r\). Then the last condition is that \(k\ge N-r-s\). We will use the following fact proved in [28].

Lemma 24

Let \(f\in \ell ^2(\Sigma _r({\mathcal {C}}_2^N))\) (\(r<N/2\)). If \(A_-({\mathcal {C}}_2^N)f=0\) and \(k>N-2r\), then \(A_+^kf=0\).
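Lemma 24 can be tested numerically on small Boolean cubes. The sketch below indexes vertices of \({\mathcal {C}}_2^N\) by subsets of \(\{0,\dots ,N-1\}\) and assumes that \(A_+\) sums a function over the subsets obtained by deleting one element, with \(A_-\) its adjoint; this matches the kernel condition \(\sum _{\beta \notin U} f(U\cup \{\beta \})=0\) used later in the text. The helper names are hypothetical.

```python
import itertools
import numpy as np

def level(N, r):
    # Vertices of the Boolean cube at level r, as r-element subsets.
    return [frozenset(c) for c in itertools.combinations(range(N), r)]

def raising(N, r):
    """Matrix of A_+ from level r to level r + 1 of the Boolean cube."""
    src, dst = level(N, r), level(N, r + 1)
    col = {S: j for j, S in enumerate(src)}
    A = np.zeros((len(dst), len(src)))
    for i, T in enumerate(dst):
        for b in T:
            A[i, col[T - {b}]] = 1.0
    return A

def kernel(M, tol=1e-10):
    # Orthonormal basis (as columns) of the null space of M via the SVD.
    _, s, vt = np.linalg.svd(M)
    rank = int((s > tol).sum())
    return vt[rank:].T

def raise_k(N, r, f, k):
    # Apply A_+ k times, starting from level r.
    for j in range(k):
        f = raising(N, r + j) @ f
    return f

def check(N, r):
    W = kernel(raising(N, r - 1).T)  # kernel of A_- on level r
    k0 = N - 2 * r
    return (np.linalg.norm(raise_k(N, r, W, k0)),
            np.linalg.norm(raise_k(N, r, W, k0 + 1)))

at_threshold, past_threshold = check(N=5, r=1)
```

In this example the first norm is nonzero while the second vanishes up to rounding, so the bound \(k>N-2r\) is attained for \(N=5\), \(r=1\).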

Proof of (i) of Lemma 7

In view of the Hadamard-type analysis above, specifically that \(A_-f=0\) precisely when \(\langle f,\, \sum _{\beta \notin S'} h_{\gamma ',S',S'\cup \{\beta \}}\rangle =0\) for each \(\gamma '\in \{0,1\}^{r-1}\) and \(S'\) an \((r-1)\)-element subset of \(\{1,\dots ,N\}\), it suffices to assume that for each r-element set \(S\subset \{1,\dots , N\}\), f is symmetric in s of the coordinates in S and antisymmetric in the others. First, suppose that f is symmetric in every coordinate (\(\lambda =r\) in Lemma 7). That is, for each \(v\in \Sigma _r\) and each \(\nu \) such that \(d_\nu (v)=1\), \(f({\tilde{v}}_\nu )=f(v)\). Then f is constant on \(\Sigma _{r,S}\) and can be regarded as a function \(f_2\) defined on the r-element subsets of \(\{1,\dots , N\}\). Subsets of \(\{1,\dots , N\}\) can be used to index the vertices of \({\mathcal {C}}_2^N\). Thus, f can be identified with a function \(f_2\) on \(V({\mathcal {C}}_2^N)\) supported in \(\Sigma _r({\mathcal {C}}_2^N)\) and in the kernel of \(A_-({\mathcal {C}}_2^N)\). Moreover, \((A_+^kf)(v)\) is also constant in this case on \(\Sigma _{r+k,{\hat{S}}}\) where \({\hat{S}}\) is any fixed \((r+k)\)-element subset of \(\{1,\dots , N\}\). In fact, \(A_+^kf\) can be identified in the same way with the function \(A_+^k({\mathcal {C}}_2^N) f_2\). In [28] we proved that for such \(f_2\), \((A_+({\mathcal {C}}_2^N))^kf_2=0\) whenever \(k>N-2r\). Consequently we also have \(A_+^kf=0\) on \(V({\mathcal {C}}_3^N)\) when \(k>N-2r\).

For the general case, if for each \(v\in \Sigma _r\) and each \(\nu \) such that \(d_\nu (v)=1\), either \((\rho _\nu f)(v)=f(v)\) or \((\rho _\nu f)(v)=-f(v)\), then we have

since \(\sum _{\nu : f(v+e_\nu )=-f(v-e_\nu )} f(v+e_\nu )+f(v-e_\nu )=0\) automatically. Fix an \((r-s)\)-element subset \(S'\subset \{1,\dots , N\}\) (to be concrete, without loss of generality take \(S'=\{N-(r-s)+1,\dots , N\}\)) and let \(V(r,S',f)\) be the set of all vertices in \(\Sigma _r\) such that \(f({\tilde{v}}_\nu )=-f(v)\) for each \(\nu \in S'\). Fix a choice \((\ell _{N-(r-s)+1},\dots ,\ell _N)\) of the last \((r-s)\) (i.e., \(S'\)) coordinates and for \(v\in V(r,S',f)\) let \({\bar{v}}\in V(r,S',f)\) be the vertex whose first s level-one coordinates are those of v and whose last \((r-s)\) ones are as fixed. We call \(v\in V(r,S',f)\mapsto f({\bar{v}})\) the clamping of f at \((\ell _{N-(r-s)+1},\dots ,\ell _N)\). Then \(f({\bar{v}})\) is constant on \(\Sigma _{r,{\bar{S}}\cup S'}\) whenever \({\bar{S}}\subset \{1,\dots , N-(r-s)\}\) has s elements. We can thus identify with this restriction of f a function \(f_{S'}\) defined on s-element sets \({\bar{S}}\subset \{1,\dots , N-(r-s)\}\) by \(f_{S'}({\bar{S}})=f({\bar{v}})\) whenever \({\bar{v}}\in \Sigma _{r,S'\cup {\bar{S}}}\). By (15), \(f_{S'}\) satisfies \(\sum _{\beta \notin U} f_{S'}(U\cup \{\beta \})=0\) whenever U is an \((s-1)\)-element subset of \(\{1,\dots , N-(r-s)\}\). This last condition is equivalent to \(f_{S'}\), thought of as a function in \(\ell ^2(\Sigma _s({\mathcal {C}}_2^N))\), being in the kernel of \(A_-({\mathcal {C}}_2^N)\). By the results in [28], then \(A_+({\mathcal {C}}_2^N)^k f_{S'}=0\) whenever \(k>(N-(r-s))-2s=N-r-s\). Returning to \({\mathcal {C}}_3^N\), the value of \((A_+^k f)({\bar{v}})\) at any vertex in \(\Sigma _{r+k}\) whose last \((r-s)\) coordinates are fixed as above, is equal to a value of \(A_+({\mathcal {C}}_2^N)^k f_{S'}\) on a \((k+r)\)-element set. In particular, the value is equal to zero if \(k>N-r-s\). 
This same conclusion holds if we replace this clamping procedure done for \(S'=\{N-(r-s)+1,\dots , N\}\) by a parallel procedure for f restricted to vertices determined by any fixed choice \(S'\) of \((r-s)\) anti-symmetry coordinates. Since f is a linear sum of such restrictions, the conclusion that \(A_+^{k}f=0\) when \(k>N-s-r\) follows and Lemma 7 is proved. \(\square \)
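For reference, the threshold obtained here agrees with the condition in the statement of Lemma 7 as quoted before Lemma 24. A short check, with \(\lambda =2s-r\) as fixed there:

```latex
\begin{align*}
2N - 2k - 3r - \lambda \le 0, \quad \lambda = 2s - r
  &\iff 2N - 2k - 2r - 2s \le 0 \\
  &\iff k \ge N - r - s \\
  &\iff k + 1 > N - r - s .
\end{align*}
```

Thus the assertion \(A_+^{k+1}f=0\) in Lemma 7 is the statement that \(A_+^{k'}f=0\) for \(k'=k+1>N-r-s\), which is what the clamping argument shows.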

Cite this article

Hogan, J.A., Lakey, J.D. Spatio-spectral limiting on discrete tori: adjacency invariant spaces. Sampl. Theory Signal Process. Data Anal. 19, 14 (2021). https://doi.org/10.1007/s43670-021-00014-2

DOI: https://doi.org/10.1007/s43670-021-00014-2