Abstract

We study estimators that combine an unbiased estimator with a possibly biased correlated estimator of a mean vector. The combined estimators are shrinkage-type estimators that shrink the unbiased estimator towards the biased estimator. Conditions under which the combined estimator dominates the original unbiased estimator are given. Models studied include normal models with a known covariance structure, scale mixtures of normals, and more generally elliptically symmetric models with a known covariance structure. Elliptically symmetric models with a covariance structure known up to a multiple are also considered.

References

Arslan, O. (2001). Family of multivariate generalized t distributions. Journal of Multivariate Analysis, 89, 329–251.

Berger, J. (1975). Minimax estimation of location vectors for a wide class of densities. The Annals of Statistics, 3, 1318–1328.

Berger, J. (1976). Admissible minimax estimation of a multivariate normal mean with arbitrary quadratic loss. The Annals of Statistics, 4, 223–226.

Casella, G., & Hwang, J. T. (1982). Limit expressions for the risk of James–Stein estimators. Canadian Journal of Statistics, 10, 305–309.

Cessie, S., Nagelkerke, N., Rosendal, F., Stralen, K., Pomp, E., & Houwelingen, H. (2008). Combining matched and unmatched control groups in case–control studies. American Journal of Epidemiology, 168, 1204–1210.

Fourdrinier, D., & Strawderman, W. (2008). Generalized Bayes minimax estimators of location vectors for spherically symmetric distributions. Journal of Multivariate Analysis, 99, 735–750.

Fourdrinier, D., Strawderman, W., & Wells, M. (2018). Shrinkage estimation (1st ed.). Springer Nature.

Genz, A., Bretz, F., Miwa, T., Mi, X., Leisch, F., Scheipl, F., & Hothorn, T. (2020). mvtnorm: Multivariate normal and t distributions. Retrieved from https://CRAN.R-project.org/package=mvtnorm

Green, E., & Strawderman, W. (1991). A James–Stein type estimator for combining unbiased and possibly biased estimators. Journal of the American Statistical Association, 86, 1001–1006.

Judge, G., & Mittelhammer, R. (2004). A semiparametric basis for combining estimation problems under quadratic loss. Journal of the American Statistical Association, 99, 479–487.

Lehmann, E., & Romano, J. (2005). Testing statistical hypotheses (3rd ed.). Springer.

Mardia, K., Kent, J., & Bibby, J. (1979). Multivariate analysis. Academic Press Inc.

Stapleton, J. (2009). Linear statistical models (2nd ed.). Wiley.

Strawderman, W. (1974). Minimax estimation of location parameters for certain spherically symmetric distributions. Journal of Multivariate Analysis, 4, 255–264.

Strawderman, W. (2003). On minimax estimation of a normal mean vector for general quadratic loss. Lecture Notes-Monograph series, 42, 223–226.

Venables, W., & Ripley, B. (2002). Modern applied statistics with S (4th ed.). Springer.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

This work was partially supported by grants from the Simons Foundation (#209035 and #418098 to William Strawderman).

Appendices

Appendix A

A.1. Proof of Lemma 2 in Sect. 2

Proof

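Let \(Z\sim N_p(\mu , I_p)\). Then \(Y=Z'Z\) has a \(\chi ^2_p(\mu '\mu )\) distribution with density

\[ f_Y(y)=\sum _{k=0}^{\infty } P_k\, f_{p+2k}(y), \]

where \(P_k\) is the Poisson density, \(\frac{e^{-\frac{\mu '\mu }{2}}(\frac{\mu '\mu }{2})^k }{k!}\), and \(f_{p+2k}\) is the density of a \(\chi ^2_{p+2k}\), \(\frac{y^{\frac{p+2k}{2}-1}e^{-\frac{y}{2}}}{\Gamma (\frac{p+2k}{2})2^{\frac{p+2k}{2}}}\), so that Y is a Poisson mixture of central \(\chi ^2\) densities. Supposing that \(p\ge 3 \), by Tonelli's theorem

\[ E\left[ \frac{1}{Y}\right] =\sum _{k=0}^{\infty } P_k\int _0^{\infty }\frac{1}{y}\, f_{p+2k}(y)\, dy=\sum _{k=0}^{\infty }\frac{P_k}{p+2k-2}=E\left[ \frac{1}{p-2+2K}\right] , \tag{51} \]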

where the expectation in (51) is taken with respect to a Poisson distribution with parameter \(\frac{\mu '\mu }{2}\). In order for the expectation of the random variable \(\frac{1}{Y}\) to exist, \(p\ge 3 \) is necessary since for \(k=0\), \(E\left[ \frac{1}{\chi ^2_p}\right] < \infty \) implies \(p\ge 3\). Furthermore for \(p\ge 3\) and \(l>0\),

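which is finite. By the convexity of the function \(g(k)=\frac{1}{2k+p-2}\), Jensen's inequality applied to a Poisson random variable \(K\) with mean \(\frac{\mu '\mu }{2}\) implies

\[ E\left[ \frac{1}{Y}\right] =E\left[ g(K)\right] \ge g\left( E[K]\right) =\frac{1}{p-2+\mu '\mu }. \]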

Casella and Hwang (1982) give the more refined upper bound

for \(p \ge 3\). When \(p>3\), a further refinement of the upper bound for the expectation in expression (53) is given in Green and Strawderman (1991).

Combining the bounds from expressions (54) and (53), Green and Strawderman (1991) established the following expression when \(p\ge 4\):

Suppose now that \(X\sim N_p(\mu ,\Sigma )\) and let \(Y=\Sigma ^{-\frac{1}{2}}X\sim N_p(\Sigma ^{-\frac{1}{2}}\mu ,I_p) \). For any \(p\times p\) orthogonal matrix U, \(Z=U'Y\sim N_p(U'\Sigma ^{-\frac{1}{2}}\mu ,I_p).\) Using the spectral decomposition of \(\Sigma \),

\[ \Sigma =P\Lambda P', \]

where P is the orthogonal matrix of eigenvectors of \(\Sigma \) and \(\Lambda \) is the diagonal matrix of eigenvalues of \(\Sigma \), and denoting by \(\lambda _{(1)}\) and \(\lambda _{(p)}\) the largest and smallest eigenvalues of \(\Sigma \), the following inequalities hold:

\[ \lambda _{(p)}\, Z'Z\le X'X=Z'\Lambda Z\le \lambda _{(1)}\, Z'Z, \]

with \(Z=P'\Sigma ^{-\frac{1}{2}}X\). Noting that the function \(\phi (x)=\frac{1}{x}\) is convex for \(x>0\),

provided \(p \ge 3\), where \(Z \sim N_p(P'\Sigma ^{-\frac{1}{2}}\mu ,I_p)\).

From expression (55) then, when \(p \ge 4 \),

and for \(p\ge 3\),

\(\square \)
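The Poisson mixture argument in the proof above can be checked numerically. The following is a minimal sketch, not part of the original article: it evaluates \(E[1/Y]\) through the series \(\sum_k P_k/(p+2k-2)\) and compares it with the Jensen lower bound \(1/(p-2+\mu'\mu)\) and the elementary upper bound \(1/(p-2)\). The function names, the truncation at 200 terms, and the example values of \(p\) and \(\mu'\mu\) are illustrative assumptions; the refined bounds of Casella and Hwang (1982) and Green and Strawderman (1991) are not reproduced here.

```python
import math


def inverse_moment_noncentral_chi2(p, lam, terms=200):
    """E[1/Y] for Y ~ chi^2_p(lam), computed as E[1/(p - 2 + 2K)]
    with K ~ Poisson(lam / 2). The series is truncated after `terms` terms,
    which is an assumption suited to moderate values of lam."""
    if p < 3:
        raise ValueError("E[1/Y] is finite only for p >= 3")
    rate = lam / 2.0
    weight = math.exp(-rate)  # Poisson probability P(K = 0)
    total = 0.0
    for k in range(terms):
        total += weight / (p - 2 + 2 * k)
        weight *= rate / (k + 1)  # P(K = k + 1) = P(K = k) * rate / (k + 1)
    return total


def jensen_lower_bound(p, lam):
    """Lower bound 1 / (p - 2 + lam) obtained from Jensen's inequality."""
    return 1.0 / (p - 2 + lam)


# Illustrative values only: p = 5 and mu'mu = 3.
p, lam = 5, 3.0
value = inverse_moment_noncentral_chi2(p, lam)
print("Jensen lower bound:", jensen_lower_bound(p, lam))
print("series value      :", value)
print("central case bound:", 1.0 / (p - 2))
```

When \(\mu'\mu = 0\) the series collapses to its first term, which recovers the central case \(E[1/\chi^2_p] = 1/(p-2)\).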

A.2. Proof of Lemma 5 in Sect. 2

Proof

Let \(q_{ij}\) denote the \((i,j)^{th}\) entry in Q.

(by an application of Lemma 4 on \(\Vert x-y\Vert ^2_{Q}\))

so that

\(\square \)

A.3. Proof of Corollary 2 in Sect. 2

Proof

Let \(Z=B(Y-AX)\). Using expression (14), the risk of the estimator \(\delta \), \(R(\delta ,\theta ,\eta )\), can be expressed as:

Since \(Q\) is symmetric and positive definite, \(Q^*= \Sigma _{11}^{-1} Q^{-1}\Sigma _{11}^{-1}\) has a symmetric square root denoted by \(Q^{*}{}^{\frac{1}{2}}\). Let

Making the change of variables,

and

in (59) implies

Since V has a multivariate normal distribution, whose parameters are given in (60), an application of Lemma 2 to \(E\left[ \frac{1}{V'V}\right] \) in (61) implies the result, since any eigenvalue of \(Q^*{}^{\frac{1}{2}}[\Sigma _{11}+B(\Sigma _{22}-\Sigma _{21}\Sigma _{11}^{-1}\Sigma _{12})B']Q^*{}^{\frac{1}{2}}\) is also an eigenvalue of \([\Sigma _{11}+B(\Sigma _{22}-\Sigma _{21}\Sigma _{11}^{-1}\Sigma _{12})B']Q^*\). \(\square \)
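The eigenvalue claim used in the last step of the proof above can be verified in one line. As a sketch, write \(M\) as shorthand (introduced here for brevity, not notation from the article) for \(\Sigma _{11}+B(\Sigma _{22}-\Sigma _{21}\Sigma _{11}^{-1}\Sigma _{12})B'\), and use that \(Q^*\) is positive definite, so that \(Q^{*}{}^{\frac{1}{2}}\) is invertible. If \(v\ne 0\) and \(\lambda \) satisfy the first equation below, then

\[
Q^{*}{}^{\frac{1}{2}} M Q^{*}{}^{\frac{1}{2}} v=\lambda v
\quad \Longrightarrow \quad
M Q^{*}\left( Q^{*}{}^{-\frac{1}{2}} v\right) =M Q^{*}{}^{\frac{1}{2}} v=\lambda \, Q^{*}{}^{-\frac{1}{2}} v,
\]

so \(w=Q^{*}{}^{-\frac{1}{2}}v\ne 0\) is an eigenvector of \(MQ^*\) with the same eigenvalue \(\lambda \).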

A.4. Proof of Lemma 6 in Sect. 3.1

Proof

Let \(Y=\frac{1}{\sigma }\Sigma ^{-\frac{1}{2}}X\) and \(Z = P'Y\), where \(P'\Sigma ^{\frac{1}{2}}Q\Sigma ^{\frac{1}{2}}P =\Lambda \), with \(\Lambda \) the diagonal matrix of eigenvalues of \(\Sigma ^{\frac{1}{2}}Q\Sigma ^{\frac{1}{2}}\) and P the matrix whose columns are the associated eigenvectors. Then, \(Y \sim N_p(\frac{1}{\sigma }\Sigma ^{-\frac{1}{2}}\mu , I)\) and \(Z\sim N_p(\frac{1}{\sigma }P'\Sigma ^{-\frac{1}{2}}\mu ,I)\) so that:

Now, \(\lbrace Z_i \rbrace _{i=1}^p\) is an independent collection of random variables, where

\[ Z_i\sim N\left( \frac{\mu _i^*}{\sigma },1\right) ,\quad i=1,\ldots ,p, \]

and where \(\mu ^*=P'\Sigma ^{-\frac{1}{2}}\mu ,\) and thus \(\lbrace Z_i^2 \rbrace _{i=1}^p\) is a collection of independent random variables with

\[ Z_i^2\sim \chi ^2_1(\nu _i),\quad \nu _i=\frac{(\mu _i^*)^2}{\sigma ^2}. \]

Since a non-central chi-squared random variable has a monotone likelihood ratio (Lehmann & Romano, 2005), \(\chi ^2_1(\nu _i)\) is stochastically increasing in the parameter \(\nu _i\), and thus is stochastically decreasing in \(\sigma ^2\). Let

\[ U(t_1,t_2,\ldots ,t_p)=\sum _{i=1}^p\lambda _i t_i, \]

where \(\lambda _i\) is the \(i^{th}\) diagonal entry of \(\Lambda \). Since U is increasing in each of its coordinates, \(\lbrace Z_i^2 \rbrace _{i=1}^p\) is an independent collection of random variables, and each \(Z_i^2\) is stochastically decreasing in \(\sigma ^2\), \(U(Z_1^2,Z_2^2,\ldots ,Z_p^2)=\sum _{i=1}^p\lambda _iZ_i^2\) is stochastically decreasing in \(\sigma ^2\), establishing the result. \(\square \)
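The stochastic ordering established above can be illustrated by simulation. The following is a rough Monte Carlo sketch, not taken from the article: it estimates \(P(\sum _i\lambda _iZ_i^2>c)\) for independent \(Z_i\sim N(\mu _i^*/\sigma ,1)\) at several values of \(\sigma ^2\). The eigenvalues, the vector \(\mu ^*\), the threshold \(c\), the sample size, and the function name are all hypothetical choices made for illustration.

```python
import random


def survival_probability(lams, mu_star, sigma2, threshold, n_draws=20000, seed=0):
    """Monte Carlo estimate of P(sum_i lams[i] * Z_i**2 > threshold),
    where the Z_i are independent N(mu_star[i] / sigma, 1) variables."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    sigma = sigma2 ** 0.5
    hits = 0
    for _ in range(n_draws):
        u = sum(lam * rng.gauss(m / sigma, 1.0) ** 2
                for lam, m in zip(lams, mu_star))
        hits += u > threshold  # True counts as 1
    return hits / n_draws


# Illustrative (assumed) eigenvalues and rotated mean vector.
lams = [3.0, 1.0, 0.5]
mu_star = [2.0, 1.0, 0.5]
for sigma2 in (1.0, 4.0, 16.0):
    estimate = survival_probability(lams, mu_star, sigma2, threshold=10.0)
    print("sigma^2 =", sigma2, " estimated P(U > 10) =", estimate)
```

If the lemma holds, the estimated exceedance probabilities should shrink as \(\sigma ^2\) grows, for any fixed threshold; a simulation of this kind only illustrates the result and does not replace the monotone likelihood ratio argument.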

A.5. Proof of Lemma 7 in Sect. 3.2

Proof

where \(t=\Vert x-\theta \Vert ^2_{\Sigma _{11}^{-1}}+\Vert y-(\theta +\eta ) \Vert ^2_{\Sigma _{22}^{-1}}\), and \(\sigma _{ij}^{*}\) is the \((i,j)^{th}\) element of \(\Sigma _{11}^{-1}\). Upon dividing and multiplying (62) by f(t),

establishing the result. \(\square \)

A.6. Proof of Lemma 8 in Sect. 4

Proof

Let \(t=\frac{1}{\sigma ^2}(\Vert x-\theta \Vert ^2_{\Sigma _{11}^{-1}} + \Vert y-\eta ^* \Vert ^2_{\Sigma _{22}^{-1}}+\Vert u \Vert ^2)\). Denoting

the partial derivative of F(t) with respect to the \(i^{th}\) coordinate of u is

Therefore,

Let \(g_{x,y}(u) = \frac{uh(x,y,\Vert u \Vert ^2)}{\Vert u \Vert ^2}\) with \(i^{th}\) coordinate

in (65). By the weak differentiability of g, and the expression for the partial derivative of F(t) in (64), expression (65) satisfies

where the equality from (67) to (68) is justified by the weak differentiability of \(g_{x,y}(u)\) for all (x, y).

Since

expression (69) is equivalent to

establishing the result. \(\square \)

Appendix B

B.1. Development of the UMVUE

When \(\eta =0\) the density of the pair of random variables \(\begin{pmatrix} X\\ Y \end{pmatrix}\) is

Let

so that X is independent of \(X_{2.1}\), and

so that,

\(Y^*\) is an unbiased estimator of \(\theta \), with variance

The joint density of \(\begin{pmatrix} X\\ Y^*\end{pmatrix} \) is

where k is the normalizing constant in (74). By expanding the quadratic form in the exponential of density (74), we can find a complete sufficient statistic for the parameter \(\theta \) that is unbiased and is therefore the UMVUE. Expanding the quadratic form in (74) yields

which implies the density in (74) is an exponential family of the form

with complete sufficient statistic

Thus, the UMVUE of \(\theta \), \(\delta _c\), is

with variance

When the loss is of the form \(L_Q(d,\theta )=(d-\theta )'Q(d-\theta )\), the risk of \(\delta _c\) when \(Y^*\) is unbiased for \(\theta \) is

When the researcher is mistaken and \(Y^*\) is biased for \(\theta \) (\(\eta \ne 0\)), with bias

the risk of using \(\delta _c\) as an estimator of \(\theta \) will be

which is unbounded in \(\eta \), unlike the estimators developed in Sect. 2, which have bounded risk.
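The unbounded growth of the risk can be seen in a one-dimensional special case. The sketch below is an assumption-laden illustration rather than the article's formula for \(\delta _c\): it takes two independent scalar estimators, an unbiased one with variance `v1` and a second one with variance `v2` and bias `bias`, combines them with inverse-variance weights, and evaluates the squared-error risk. The function name and all numerical values are hypothetical.

```python
def combined_risk(v1, v2, bias):
    """Squared-error risk of w * X + (1 - w) * Y_star with w = v2 / (v1 + v2),
    for independent scalars X (unbiased, variance v1) and
    Y_star (variance v2, bias `bias`). Scalar illustration only."""
    w = v2 / (v1 + v2)                          # inverse-variance weight on X
    variance = w ** 2 * v1 + (1 - w) ** 2 * v2  # equals v1 * v2 / (v1 + v2)
    return variance + ((1 - w) * bias) ** 2     # variance plus squared bias


# Illustrative (assumed) variances.
v1, v2 = 1.0, 0.5
for bias in (0.0, 1.0, 2.0, 4.0):
    print("bias =", bias,
          " risk of combination =", combined_risk(v1, v2, bias),
          " risk of X alone =", v1)
```

With no bias the combination beats the unbiased estimator alone, but its risk grows quadratically in the bias and eventually exceeds `v1`, which mirrors the contrast drawn above with the bounded-risk estimators of Sect. 2.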

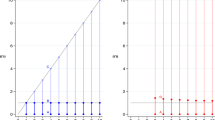

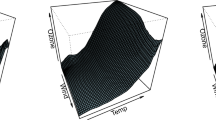

B.2. Comparison of Risks Between \(\delta _c\) (50) and \(\delta _1\) (45)

To compare the risks of \(\delta _1(X,Y)\) and \(\delta _c(X,Y)\), we first consider the case \(\eta =0\) and show that \(\delta _c\) then has uniformly smaller risk than \(\delta _1\). We then give sufficient conditions under which \(\delta _1\) has smaller risk than \(\delta _c\), by comparing the upper bound for the risk of \(\delta _1(X,Y)\) developed in Corollary 2 to the exact risk of \(\delta _c\). When \(\eta '\eta =0\),

where

and

by Corollary 2. In comparison, the exact risk of \(\delta _c\)

so that

implying that \(R(\delta _c(X,Y), \theta , 0 ) < R(\delta _1(X,Y), \theta , 0)\).

When the upper bound for the risk of \(\delta _1\) given by Corollary 2 is less than the risk of \(\delta _c\)

Let \(x =\eta '\eta \) where \(x \in [0,\infty )\) and

For

and

expressions (80)–(81) will be satisfied once \(Q(x)>0\). Since \(a>0\) and \(c<0\), \(Q(x)\) has two real roots, \(r_1\) and \(r_2\), with \(r_1<0<r_2\). Since \(a>0\), \(x >r_2\) implies \(Q(x)>0\), and so a sufficient condition for \(R(\delta _1(X,Y), \theta ,\eta ) < R(\delta _c(X,Y), \theta ,\eta ) \) is

\[ \eta '\eta >r_2. \]
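The root condition described above can be sketched numerically. The example assumes that \(Q(x)\) is a quadratic of the form \(ax^2+bx+c\) with \(a>0\) and \(c<0\); the displays defining \(Q\), \(a\), \(b\) and \(c\) are not available in this text, so that form, the function name, and the coefficients used below are illustrative assumptions only.

```python
import math


def larger_root(a, b, c):
    """Larger real root r2 of a*x**2 + b*x + c, assuming a > 0 and c < 0.
    Under these sign conditions the discriminant is positive and r1 < 0 < r2."""
    if not (a > 0 and c < 0):
        raise ValueError("expected a > 0 and c < 0")
    disc = b * b - 4 * a * c  # strictly positive because a * c < 0
    return (-b + math.sqrt(disc)) / (2 * a)


# Illustrative coefficients only: 2x^2 - x - 3 = (2x - 3)(x + 1).
a, b, c = 2.0, -1.0, -3.0
r2 = larger_root(a, b, c)
print("r2 =", r2)

# Any x = eta'eta beyond r2 makes the quadratic positive.
x = r2 + 0.1
print("Q(x) at x = r2 + 0.1:", a * x * x + b * x + c)
```

In practice the coefficients would be computed from the covariance blocks and the bound in Corollary 2; the sketch only shows how the threshold on \(\eta '\eta \) follows once they are known.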

About this article

Cite this article

Zinonos, S., Strawderman, W.E. On combining unbiased and possibly biased correlated estimators. Jpn J Stat Data Sci (2023). https://doi.org/10.1007/s42081-023-00194-2

DOI: https://doi.org/10.1007/s42081-023-00194-2