Abstract

Purpose of Review

The purpose of this review was to summarise how machine perfusion could contribute to viability assessment of donor livers.

Recent Findings

In both hypothermic and normothermic machine perfusion, perfusate transaminase measurement has allowed pretransplant assessment of hepatocellular damage. Hypothermic perfusion permits transplantation of marginal grafts but as yet has not permitted formal viability assessment. Livers undergoing normothermic perfusion have been investigated using parameters similar to those used to evaluate the liver in vivo. Lactate clearance, glucose evolution and pH regulation during normothermic perfusion seem promising measures of viability. In addition, bile chemistry might inform on cholangiocyte viability and the likelihood of post-transplant cholangiopathy.

Summary

While the use of machine perfusion technology has the potential to reduce and even remove uncertainty regarding liver graft viability, analysis of large datasets, such as those derived from large multicenter trials of machine perfusion, is needed to provide sufficient information to enable viability parameters to be defined and validated.

Introduction

The assessment of donor liver viability before transplantation has traditionally involved pre-retrieval review of the donor circumstances and biochemistry, followed by visual appraisal of the organ in situ in the donor. This has been further refined by the development of prognostic models which give an estimation of the risk of graft failure based on multivariate analysis of large donor datasets, so-called donor risk indices [1•, 2,3,4]. These indices include factors relating to donor age, sex, race, height, cause of death (trauma, stroke, anoxia or other), bilirubin, smoking history, the location of the donor in relation to the transplant centre, whether the graft was whole, split, or a partial graft, whether from a brain dead or circulatory death donor and cold ischaemic time [1•, 3, 4].

While such indices have helped reduce uncertainty about the suitability of a liver for transplantation, they have not eliminated it. There are many reasons for this, some of which are enumerated below:

1. Causes of death

While all indices have a broad category distinguishing causes of death associated with better and worse outcomes for the liver following transplantation (e.g. trauma and stroke, respectively), other causes of death may have adverse effects on graft outcomes (e.g. carbon monoxide poisoning). In addition, hepatic ischaemia prior to death (e.g. as a result of an out of hospital cardiac arrest) will have different graft outcomes depending on when in the recovery from the initial ischaemic insult the organ was retrieved from the donor and transplanted.

2. Mechanism of death

While donor risk indices include a variable for livers donated after circulatory death (DCD), none include any variable relating to the duration of the withdrawal phase (withdrawal of treatment to circulatory arrest) or the duration of asystole (circulatory arrest to cold in situ perfusion) which may be important outcome determinants [5]. Animal work has documented the adverse hormonal and haemodynamic changes occurring in this period which affect graft outcomes [6, 7]. Similarly, brain death in donation after brain death (DBD) donors has complex and widespread consequences that affect all organs. The autonomic reflex response to increasing intracranial pressure includes the release of massive quantities of catecholamines; this release causes peripheral vasospasm and reduced organ perfusion. In the process of brain death, haemodynamic instability worsens, with loss of sympathetic vascular tone and hypovolaemia as a consequence of diabetes insipidus. Brain death also results in a range of pro-inflammatory and immune responses that affect the donor liver and impair outcome [8,9,10]. Variables, such as the time from brain death to donation, have been shown to affect outcomes in renal transplantation and may have similar effects on liver outcomes [11]. None of these are taken into account in the different risk indices.

3. Pre-donation management

A number of different donor treatment strategies have been suggested to influence the outcomes of transplanted organs. One of the most recent is the induction of mild hypothermia (35 ± 0.5 °C) in DBD donors which has been shown to reduce delayed graft function in renal transplantation, and to be particularly beneficial to extended criteria donor kidneys [12].

4. Steatosis

Steatosis is well recognised as an adverse factor, one associated with primary non-function and early allograft dysfunction [13]. It is not included in any of the above prognostic models and is difficult to quantify accurately in the donor [14].

5. Extraction time

Recent work has shown that the period of time taken to extract kidneys from the donor and place them into cold storage influences early outcome significantly [15], and it is likely that the time taken to extract the liver has a similar adverse effect. Although flushed with cold preservation solution in situ, it takes some time for the liver to cool down [16], and during prolonged extraction, it is probable that some rewarming occurs that might have an effect on short- and long-term outcome.

6. Recipient factors

Recipient variables also affect the outcome of liver transplantation and should be kept in mind when selecting an appropriate donor liver [17, 18].

It is against this background of uncertainty that liver transplant surgeons are challenged to decide whether or not to use a liver graft, and in whom to use it, knowing also that a decision not to proceed leaves their patient at risk of death on the waiting list. In 2015 in the USA, 1673 (11%) patients died on the waiting list and 1227 (8%) were removed due to being too sick, while 703 livers were retrieved but not transplanted, representing 9.6% of all retrieved livers [19, 20]. A similar picture is seen in the UK and across the Eurotransplant region, with around 18% of patients dying or being removed from the waiting list while potentially viable DBD and DCD livers go unused [21, 22].

This review summarises some principles that might be used for viability assessment as well as recent advances and current limitations of liver graft viability assessment using extracorporeal machine perfusion technologies.

In assessing viability, it is important to independently assess the major liver cellular compartments.

Viability Assessment of the Hepatocellular Compartment: Metabolic Zonation

The range of biochemical tests examining hepatocellular function makes their interpretation complex, but we believe that the interpretation is helped by considering the tests in the context of metabolic zonation of the liver. Zonation refers to the functional specialisation of different hepatocytes along the liver lobule and is related to the exposure of zone 1 (periportal) hepatocytes to the inflow of blood with higher levels of oxygen, hormones, and metabolic substrates compared to zone 3 hepatocytes. Figure 1 illustrates the zonal distribution of some of the processes occurring in vivo. During extracorporeal perfusion, the liver is subject to different levels of oxygen and different zonal oxygen gradients, may be free of hormonal influence and is exposed to artificial concentrations of substrate depending on the perfusate used, all of which may modify the zonal behaviour.

Fig. 1 Schematic drawing illustrating metabolic zonation in the liver with reference to glucose and ammonia metabolism. Blood entering the liver lobule in vivo through hepatic artery (HA) and portal vein (PV) branches is rich in hormones, nutrients and oxygen. Periportal (zone 1) metabolic processes will include those requiring such conditions, while perivenous (zone 3) hepatocytes may preferentially include those metabolic processes that are less dependent on high levels of oxygen, for example, or those requiring products made in the periportal hepatocytes, such as urea.

Glucose metabolism was the first process to be identified as having zonal metabolic differences [23], with gluconeogenesis occurring in zone 1 and glycolysis in zone 3. Lactate metabolism is an oxygen and ATP-dependent process predominantly occurring in zone 1. Impaired lactate clearance implies zone 1 damage, and since zone 1 would be the last zone to be deprived of a supply of oxygenated blood, it may actually signify a pan-lobular injury. Conversely, an ischaemic injury to zones 2 and 3 may not affect lactate clearance; hence, lactate clearance is a relatively insensitive marker of moderate damage, but may be a useful marker of viability. Damage to zones 2 and 3 may affect the incorporation of circulating glucose into glycogen, and therefore, perfusate glucose might be a marker of severe damage signifying impaired liver viability.

Hepatic regulation of acid-base balance depends upon the differential metabolism of glutamine along the lobule [24, 25]. Ammonia is transported as glutamine to the liver where it is hydrolysed by glutaminase to glutamate and ammonia, and the ammonia then enters the urea cycle to form urea. Glutaminase activity is largely confined to zone 1, and its activity is pH dependent, being inhibited in the presence of an acidosis. Urea synthesis can occur across zones 1, 2 and the first part of zone 3; residual ammonia is taken up by glutamine synthetase which is located in the cells immediately adjacent to the central veins [26]. Interruption of ammonia metabolism will compromise the liver’s ability to regulate pH, resulting in worsening acidosis.

Other metabolic processes may be used to demonstrate liver synthetic function, such as the production of coagulation factors, albumin and complement. To be useful, there need to be rapid and sensitive assays for such metabolic products. Metabolic processes involved in drug metabolism are also distributed along the lobule, providing an opportunity to interrogate the integrity of each zone with the appropriate reagent.

Interrogating liver zones will reveal a pattern of damage, but will not necessarily give a global impression of functional liver reserve. Markers of hepatocellular damage, such as transaminase release into the perfusate, give an indication of damage, from which the amount of residual function may be inferred, but they are limited as markers of viability. Evaluating the ability of the liver to metabolise a substrate known to be metabolised by all liver zones may provide an estimate, and such assays are currently under development but are yet to be validated in an extracorporeal circuit [27]. Unlike other organs, the liver has a remarkable regenerative ability and it remains to be defined what level of injury, what threshold of residual functional capacity and which recipient circumstances are required to guarantee complete functional recovery of the liver and survival of the recipient post-transplant.

Viability Assessment of the Cholangiocyte Compartment: Bile Biochemistry

While evaluation of hepatocellular function should enable avoidance of primary non-function of the liver, it will not predict cholangiopathy. To assess the bile duct, different physiological processes need to be monitored.

The amount of bile production has been commonly cited as a marker of liver viability [28, 29], but in our initial experience, the volume of bile produced does not appear to correlate with graft function post-transplant [30•, 31]. Bile production is a combination of two processes, bile salt-dependent secretion by biliary canaliculi (also known as the bile acid-dependent canalicular fraction) and bile salt-independent secretion [32]. Once in the bile ducts, the bile is subject to modification by cholangiocytes by resorptive and secretory processes, which add bicarbonate and water, and reabsorb glucose, amino acids and bile salts. The bile salt-dependent fraction of bile forms around a third of normal bile, and bile salts are either synthesised by the liver or derived from sinusoidal blood as part of the enterohepatic circulation. In an isolated perfused liver, this fraction is likely to be very small unless bile salts are added to the perfusate [33]. The bile salt-independent production of bile may also be adversely affected by ex situ perfusion. For example, hyperglycaemia, independent of diabetes, has been shown to reduce the production of bile by affecting the cholangiocyte’s absorptive processes; as the cells absorb the higher quantities of glucose, they take up more water from the bile (see below) [34]. Hence, in the presence of the high glucose concentrations seen in many liver perfusions, the volume of bile salt-independent bile produced could be reduced.

Assessment of the Vascular Compartment: Vascular Resistance

Disruption of the endothelial cell lining and the no reflow phenomenon cause an increase in vascular resistance that reflects endothelial damage and viability, although oedema may also influence blood flow to an organ [35]. When a liver is removed from cold storage and reperfused on a machine, vascular resistance is high but falls quickly, both during hypothermic perfusion and normothermic perfusion (personal observations) [36, 37]. This resistance pattern is similar to that seen in hypothermic perfusion of kidneys, and in that setting, resistance is commonly cited as discriminating good from bad kidneys, although the evidence for a threshold value is absent [38, 39]. In a similar manner, some authors have quoted arterial and portal flows as markers of viability [40••, 41], although absolute values of flow are unhelpful without knowledge of perfusion pressure. While there are some preliminary animal data suggesting that portal (and not hepatic arterial) resistance may be discriminatory [30•], this remains to be substantiated. Portal resistance is also affected by the pressure in the hepatic veins, which varies according to which perfusion machine is used and the method of caval drainage (negative pressure or passive drainage), so absolute values will depend on the circumstance of perfusion.
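The dependence of resistance on both pressure and flow can be illustrated with a short calculation. The sketch below is ours and is not taken from any of the cited studies: it assumes the conventional definition of resistance as the pressure drop across the organ divided by flow, and the function name, units and all numerical values are hypothetical, chosen only to show why flow alone, or inflow pressure alone, is uninformative.

```python
def vascular_resistance(inflow_pressure_mmhg, outflow_pressure_mmhg, flow_ml_per_min):
    """Resistance as pressure drop divided by flow (mmHg per mL/min).

    Assumption: the simple hydraulic definition R = (P_in - P_out) / Q.
    """
    if flow_ml_per_min <= 0:
        raise ValueError("flow must be positive")
    return (inflow_pressure_mmhg - outflow_pressure_mmhg) / flow_ml_per_min


# Hypothetical portal perfusions, all with the same flow of 1000 mL/min.
# Same flow, different inflow pressures: the calculated resistance differs.
low_pressure = vascular_resistance(5.0, 0.0, 1000.0)
high_pressure = vascular_resistance(10.0, 0.0, 1000.0)

# Same inflow pressure and flow, but negative-pressure caval drainage:
# the pressure drop across the liver is larger, so the calculated
# resistance is not comparable with the passive-drainage case.
negative_caval = vascular_resistance(5.0, -2.0, 1000.0)

print(low_pressure, high_pressure, negative_caval)
```

Under these assumptions, identical flows correspond to different resistances, which is why a flow value quoted without the inflow and outflow pressures cannot be compared between perfusion devices.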

Assessment of the Immune Cell Compartment

The final compartment that contributes to reperfusion injury, and hence viability, is the immune compartment. The liver is host to numerous different leucocyte populations such as Kupffer cells and dendritic cells, all of which may respond to ischaemia-reperfusion injury by production of inflammatory proteins such as cytokines and damage-associated molecular patterns (DAMPs) [42,43,44]. Our unpublished observations show release of large quantities of cytokines and chemokines, including interleukins-6, -8, -10, -18 and monocyte chemotactic protein 1 (MCP1/CCL2), into the perfusate reflecting activation of the immune cell compartment [42, 43]. This is in contrast to results from hypothermic oxygenated liver perfusion [45, 46]. The ability to measure the degree of immune cell activation during normothermic perfusion in real time may give another dimension to predicting viability post-transplant. It is also one area that is potentially modifiable during perfusion, with the addition of leucocyte filters to reduce the circulating numbers of emergent liver immune cells and direct chemokine inhibitors to moderate immune activation.

Hypothermic Perfusion

One certainty is that the longer the liver remains in static cold storage, the poorer the function. It is possible that some of the effects of hypothermic storage may be mitigated by oxygenated machine perfusion. Two groups have led the evaluation of hypothermic extracorporeal liver perfusion. Guarrera and colleagues were the first to evaluate clinical hypothermic machine perfusion, and although not incorporating an oxygenator into their circuit, analysis of perfusate showed that some oxygenation was achieved passively in the organ chamber [46, 47]. The other group to pioneer hypothermic perfusion is that of Dutkowski and colleagues, who perfused the portal vein alone with actively oxygenated perfusate in a technique they termed hypothermic oxygenated perfusion (HOPE) [48]. This has been followed by Porte and colleagues performing hypothermic oxygenated perfusion of both artery and portal vein or Dual-HOPE (D-HOPE) [49]. Hypothermic extracorporeal liver perfusion has been shown to be associated with good outcomes for livers that would ordinarily be considered marginal, either because of their DCD status or because of other poor prognostic factors [46, 48, 49]. There appears to be a direct benefit in reducing expression of proinflammatory cytokines [46], down-regulation of Kupffer cell activity [45], replenishing ATP stores [50] as well as reducing vascular resistance [51, 52]. All of these may contribute to the function and marked absence of biliary complications, including cholangiopathy, in DCD livers that have experienced prolonged warm ischaemia [53•]. Replenishing oxygen at low temperature may also have the advantage of avoiding the generation of reactive oxygen species (ROS) [54, 55•], while in contrast, the generation of ROS during normothermia has been cited as a complication of aggressive oxygenation during normothermic perfusion with resultant post reperfusion syndrome and vasoplegia [31]. 
Whether hypothermic extracorporeal liver perfusion improves long-term outcomes is the subject of ongoing randomised controlled clinical trials (NCT01317342; NCT03124641; NCT03031067; NCT02584283).

Assessment of the liver’s functional capacity during cold perfusion is difficult, since metabolic processes are differentially affected by hypothermia and may be unrepresentative of function at normothermia. Nevertheless, biochemical analysis of the perfusate may provide insight into the degree of hepatocellular damage sustained before and during preservation [56], but simple analysis of the effluent from flushing out the cold storage solution during bench work might be equally insightful. Pacheco et al. have previously shown a relationship between the transaminase content of the effluent washed out of the graft immediately before reperfusion and the post-transplant levels in the recipient [57], and more recently, Hoyer et al. showed that the ALT levels during controlled rewarming also mirrored levels post-reperfusion in the recipient [58]. We have also demonstrated that the ALT level in the effluent cold storage solution washed out immediately prior to normothermic perfusion correlates with ALT levels during normothermic perfusion and that perfusion levels in turn correlate with post-transplant transaminase levels [36].

Normothermic Perfusion

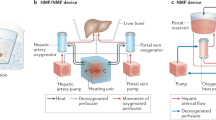

The technique of normothermic extracorporeal liver perfusion (NELP) typically involves perfusing a red cell-based perfusate through hepatic artery and portal vein at physiological pressures and incorporates a heater/oxygenator into the circuit to maintain temperature and oxygenation. In contrast to hypothermic perfusion, NELP provides an opportunity to assess viability using biochemical assessments similar to those employed clinically. The viability assessment itself needs to include evaluation of both hepatocellular and biliary components of injury and function. Any functionality assessment must also recognise that the ability to function in an isolated artificial circulation of around 2 L is not the same as being able to function in vivo.

The absence of validated criteria for viability assessment has not prevented clinical evaluation of NELP, initially in case reports used to assess marginal grafts [59], and latterly in small clinical studies [31, 40••, 41, 60, 61]. Table 1 compares recent studies of NELP with respect to measures used to assess livers during normothermic perfusion, with reference to hepatocellular and biliary compartments. As can be seen, lactate clearance and bile output are the most common functional measures, with transaminase levels as markers of hepatocellular damage. What is more surprising is the absence of assessment of cholangiocyte integrity in many reports.

Hepatocellular Compartment During Normothermic Perfusion

Lactate clearance is the most widely accepted measure of liver function during normothermic perfusion, but it depends only on the viability of zone 1 hepatocytes and not zones 2 and 3. In a small volume perfusion circuit, a relatively small number of viable hepatocytes may be sufficient to clear lactate, and hence, clearance of lactate is not necessarily a marker of viability. Conversely, an inability to clear lactate may represent damage to zone 1 hepatocytes (and thus usually a pan lobular injury) or may be due to concomitant lactate production, as may happen when an aberrant lobar artery is not perfused.

Figure 2 shows typical biochemical profiles during NELP. The raised perfusate glucose which characterises most NELPs is likely to be in part due to glycogen breakdown (zone 3), in part due to the metabolism of lactate and in part due to glucose synthesis from circulating amino acids and pyruvate, although glucose synthesis is likely to be inhibited in the presence of high concentrations of circulating glucose. Glycogen breakdown is an oxygen-independent ATP-independent process occurring during hypothermia [68, 69]. It continues early after reperfusion during NELP, but in viable livers, the high levels of circulating glucose should block glycogenolysis and stimulate glycogenesis. As a result, perfusate glucose falls, reflecting zone 3 integrity. In some perfusions, however, the perfusate glucose may not rise, and as such may imply glycogen depletion or pan-lobular injury; in such circumstances, a glucose challenge may discriminate between the two, since in the glycogen-depleted liver, the added glucose will be metabolised or converted to glycogen. It is possible that a liver with marked disruption of zone 3 metabolism may exhibit a persistently high perfusate glucose, as the ability to incorporate glucose into glycogen is impaired. This interpretation of these observations remains to be proven.

Fig. 2 Typical normothermic perfusion profiles. The figure shows schematic graphs with typical biochemical and resistance profiles during normothermic perfusion with our interpretation given our current state of knowledge. Profiles of livers with a viable hepatocellular compartment are denoted by solid black lines, while dashed lines denote grafts where viability might be in doubt, due to a slow lactate clearance, persistently raised perfusate glucose, rising perfusate transaminase concentration or requirement for continued bicarbonate support to maintain pH. The graphs also show the different biochemical profiles of bile depending on the viability of the ducts, with viable cholangiocytes producing bile with a pH > 7.5, low glucose (especially relative to the high perfusate glucose) and increasing bicarbonate. To date, there is no clinical evidence in support of bile production or hepatic resistance thresholds for viability.

Ammonia metabolism plays a crucial role in the liver’s ability to regulate pH. Interruption of ammonia metabolism will therefore result in worsening acidosis. Hence, the requirement for additional alkali supplementation to maintain a near physiological pH during normothermic extracorporeal liver perfusion implies a larger lobular injury affecting all zones.

Cholangiocyte Compartment During Normothermic Perfusion

Cholangiocyte viability during NELP can be monitored by the degree to which the bile has undergone secretory and resorptive modification, and in particular, the secretion of bicarbonate to deprotonate bile acids and the removal of glucose [32, 70]. Deprotonation is believed to be necessary to prevent cholangiocyte damage by bile acids within the duct and is achieved largely by bicarbonate secretion [71, 72]. Thus, in the presence of normal cholangiocyte function, bile should have an alkaline pH; our early results suggest that a pH > 7.5 is associated with viable cholangiocytes and no post-transplant cholangiopathy [36].

Glucose absorption by cholangiocytes facilitates water absorption from bile [70], and its concentration in bile should be ≤ 1 mmol/L in the context of a normal plasma glucose of 4 to 8 mmol/L [73]. Assessment of bile glucose during NELP is more complex, since the perfusate glucose concentration is frequently supra-physiological. Nevertheless, a biliary glucose ≤ 3 mmol/L or a bile/perfusate gradient ≥ 10 mmol/L was associated with viable ducts in our series [36].
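The bile observations above can be expressed as a simple check, shown here as a minimal sketch only. The thresholds are those reported from our early series [36] and are not validated viability criteria; the class and function names are hypothetical, and we assume the gradient is calculated as perfusate glucose minus bile glucose.

```python
from dataclasses import dataclass


@dataclass
class BileSample:
    """One paired bile and perfusate measurement (hypothetical structure)."""
    bile_ph: float
    bile_glucose_mmol_l: float
    perfusate_glucose_mmol_l: float


def cholangiocyte_flags(sample: BileSample) -> dict:
    """Return which of the two bile observations from the text are met.

    Thresholds are taken from the text (early single-series results):
    bile pH > 7.5, and bile glucose <= 3 mmol/L or a gradient >= 10 mmol/L.
    Assumption: gradient = perfusate glucose - bile glucose.
    """
    gradient = sample.perfusate_glucose_mmol_l - sample.bile_glucose_mmol_l
    return {
        "alkaline_bile": sample.bile_ph > 7.5,
        "glucose_reabsorbed": sample.bile_glucose_mmol_l <= 3.0 or gradient >= 10.0,
    }


# Hypothetical example values, for illustration only.
modified_bile = BileSample(bile_ph=7.7, bile_glucose_mmol_l=8.0, perfusate_glucose_mmol_l=25.0)
unmodified_bile = BileSample(bile_ph=7.3, bile_glucose_mmol_l=22.0, perfusate_glucose_mmol_l=25.0)

print(cholangiocyte_flags(modified_bile))
print(cholangiocyte_flags(unmodified_bile))
```

The two flags are reported separately rather than combined into a single verdict, since the text does not state how pH and glucose should be weighed against each other.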

The lack of viability criteria also hampers development of optimal protocols and perfusates for NELP, since without a validated viability endpoint, the results of clinical and preclinical studies are difficult to interpret. Instead, NELP has been introduced as a tool to reassure surgeons, but as such, it has not completely removed “gut feeling” in deciding whether or not to use a liver, in particular a marginal liver. In addition, there is little evidence to date that moderate periods of NELP are superior to hypothermic oxygenated machine perfusion. Indeed, the incidence of primary non-function, early allograft dysfunction and cholangiopathy in early reports of NELP contrasts with that in reports of hypothermic perfusion [31, 53•, 61, 67].

Conclusions

Years of preclinical perfusion research, focusing on machine perfusion as a preservation method, are now translating into the clinic. While the use of machine perfusion technology has the scope to reduce and potentially even remove uncertainty regarding liver graft viability, there remains an element of gut feeling when determining whether or not to transplant a machine-perfused liver.

Although hypothermic oxygenated perfusion has been shown to permit transplantation of marginal grafts, it has not, to date, afforded the ability to verify viability before implantation. Normothermic extracorporeal liver perfusion provides more information regarding liver function, and normal biochemical parameters during perfusion are readily recognised. However, interpretation of data relating to less than ideal livers remains challenging, in particular differentiating viable from non-viable. Introduction of the technology in the absence of clear guidance regarding viability has allowed accumulation of data to inform assessment, albeit with a risk of using a graft that will not work, or which may be associated with long-term problems such as cholangiopathy. More data are needed before meaningful and accurate guidance can be produced.

References

Papers of particular interest, published recently, have been highlighted as: • Of importance •• Of major importance

• Feng S, Goodrich NP, Bragg-Gresham JL, Dykstra DM, Punch JD, DebRoy MA, et al. Characteristics associated with liver graft failure: the concept of a donor risk index. Am J Transplant. 2006;6(4):783–790. https://doi.org/10.1111/j.1600-6143.2006.01242.x. This is the first risk index to be produced to guide clinicians about donor factors influencing graft survival after liver transplantation. Note the equation for the DRI in the text is wrong; a correct version is in Schaubel et al, 2008.

Schaubel DE, Sima CS, Goodrich NP, Feng S, Merion RM. The survival benefit of deceased donor liver transplantation as a function of candidate disease severity and donor quality. Am J Transplant. 2008;8(2):419–25. https://doi.org/10.1111/j.1600-6143.2007.02086.x.

Braat AE, Blok JJ, Putter H, Adam R, Burroughs AK, Rahmel AO, et al. The Eurotransplant donor risk index in liver transplantation: ET-DRI. Am J Transplant. 2012;12(10):2789–96. https://doi.org/10.1111/j.1600-6143.2012.04195.x.

Collett D, Friend PJ, Watson CJ. Factors associated with short- and long-term liver graft survival in the United Kingdom: development of a UK donor liver index. Transplantation. 2017;101(4):786–92. https://doi.org/10.1097/TP.0000000000001576.

Coffey JC, Wanis KN, Monbaliu D, Gilbo N, Selzner M, Vachharajani N, et al. The influence of functional warm ischemia time on DCD liver transplant recipients' outcomes. Clin Transpl. 2017;31(10):03. https://doi.org/10.1111/ctr.13068.

Rhee JY, Alroy J, Freeman RB. Characterization of the withdrawal phase in a porcine donation after the cardiac death model. Am J Transplant. 2011;11(6):1169–75. https://doi.org/10.1111/j.1600-6143.2011.03567.x.

White CW, Lillico R, Sandha J, Hasanally D, Wang F, Ambrose E, et al. Physiologic changes in the heart following cessation of mechanical ventilation in a porcine model of donation after circulatory death: implications for cardiac transplantation. Am J Transplant. 2016;16(3):783–93. https://doi.org/10.1111/ajt.13543.

Olinga P, van der Hoeven JA, Merema MT, Freund RL, Ploeg RJ, Groothuis GM. The influence of brain death on liver function. Liver Int. 2005;25(1):109–16. https://doi.org/10.1111/j.1478-3231.2005.01035.x.

Dziodzio T, Biebl M, Pratschke J. Impact of brain death on ischemia/reperfusion injury in liver transplantation. Curr Opin Organ Transplant. 2014;19(2):108–14. https://doi.org/10.1097/MOT.0000000000000061.

Weiss S, Kotsch K, Francuski M, Reutzel-Selke A, Mantouvalou L, Klemz R, et al. Brain death activates donor organs and is associated with a worse I/R injury after liver transplantation. Am J Transplant. 2007;7(6):1584–93. https://doi.org/10.1111/j.1600-6143.2007.01799.x.

Nijboer WN, Moers C, Leuvenink HG, Ploeg RJ. How important is the duration of the brain death period for the outcome in kidney transplantation? Transpl Int. 2011;24(1):14–20. https://doi.org/10.1111/j.1432-2277.2010.01150.x.

Niemann CU, Feiner J, Swain S, Bunting S, Friedman M, Crutchfield M, et al. Therapeutic hypothermia in deceased organ donors and kidney-graft function. N Engl J Med. 2015;373(5):405–14. https://doi.org/10.1056/NEJMoa1501969.

Chu MJ, Dare AJ, Phillips AR, Bartlett AS. Donor hepatic steatosis and outcome after liver transplantation: a systematic review. J Gastrointest Surg. 2015;19(9):1713–24. https://doi.org/10.1007/s11605-015-2832-1.

Neuberger J. Transplantation: assessment of liver allograft steatosis. Nat Rev Gastroenterol Hepatol. 2013;10(6):328–9. https://doi.org/10.1038/nrgastro.2013.74.

Osband AJ, James NT, Segev DL. Extraction time of kidneys from deceased donors and impact on outcomes. Am J Transplant. 2016;16(2):700–3. https://doi.org/10.1111/ajt.13457.

Villa R, Fondevila C, Erill I, Guimera A, Bombuy E, Gomez-Suarez C, et al. Real-time direct measurement of human liver allograft temperature from recovery to transplantation. Transplantation. 2006;81(3):483–6. https://doi.org/10.1097/01.tp.0000195903.12999.bc.

Blok JJ, Putter H, Rogiers X, van Hoek B, Samuel U, Ringers J, et al. Combined effect of donor and recipient risk on outcome after liver transplantation: research of the Eurotransplant database. Liver Transpl. 2015;21(12):1486–93. https://doi.org/10.1002/lt.24308.

Stey AM, Doucette J, Florman S, Emre S. Donor and recipient factors predicting time to graft failure following orthotopic liver transplantation: a transplant risk index. Transplant Proc. 2013;45(6):2077–82. https://doi.org/10.1016/j.transproceed.2013.06.001.

Kim WR, Lake JR, Smith JM, Skeans MA, Schladt DP, Edwards EB, et al. OPTN/SRTR 2015 annual data report: liver. Am J Transplant. 2017;17(Suppl 1):174–251. https://doi.org/10.1111/ajt.14126.

Israni AK, Zaun D, Bolch C, Rosendale JD, Schaffhausen C, Snyder JJ, et al. OPTN/SRTR 2015 annual data report: deceased organ donation. Am J Transplant. 2017;17(Suppl 1):503–42. https://doi.org/10.1111/ajt.14131.

NHS Blood and Transplant. Annual Report on Liver Transplantation: Report for 2015/16. Bristol: 2016.

Jochmans I, van Rosmalen M, Pirenne J, Samuel U. Adult liver allocation in Eurotransplant. Transplantation. 2017;101(7):1542–50. https://doi.org/10.1097/TP.0000000000001631.

Jungermann K. Functional significance of hepatocyte heterogeneity for glycolysis and gluconeogenesis. Pharmacol Biochem Behav. 1983;18(Suppl 1):409–14. https://doi.org/10.1016/0091-3057(83)90208-3.

Häussinger D. Liver and kidney in acid-base regulation. Nephrol Dial Transplant. 1995;10(9):1536.

Brosnan ME, Brosnan JT. Hepatic glutamate metabolism: a tale of 2 hepatocytes. Am J Clin Nutr. 2009;90(3):857S–61S. https://doi.org/10.3945/ajcn.2009.27462Z.

Atkinson DE, Camien MN. The role of urea synthesis in the removal of metabolic bicarbonate and the regulation of blood pH. Curr Top Cell Regul. 1982;21:261–302. https://doi.org/10.1016/B978-0-12-152821-8.50014-1.

Stockmann M, Lock JF, Malinowski M, Seehofer D, Puhl G, Pratschke J, et al. How to define initial poor graft function after liver transplantation? - a new functional definition by the LiMAx test. Transpl Int. 2010;23(10):1023–32. https://doi.org/10.1111/j.1432-2277.2010.01089.x.

Bowers BA, Branum GD, Rotolo FS, Watters CR, Meyers WC. Bile flow--an index of ischemic injury. J Surg Res. 1987;42(5):565–9. https://doi.org/10.1016/0022-4804(87)90033-3.

Sutton ME, op den Dries S, Karimian N, Weeder PD, de Boer MT, Wiersema-Buist J, et al. Criteria for viability assessment of discarded human donor livers during ex vivo normothermic machine perfusion. PLoS One. 2014;9(11):e110642. https://doi.org/10.1371/journal.pone.0110642.

• Brockmann J, Reddy S, Coussios C, Pigott D, Guirriero D, Hughes D, et al. Normothermic perfusion: a new paradigm for organ preservation. Ann Surg. 2009;250(1):1–6. https://doi.org/10.1097/SLA.0b013e3181a63c10. Pig liver ischaemia model describing 7 criteria associated with the unsuccessful transplantation of 4 out of 17 livers undergoing normothermic perfusion.

• Watson CJE, Kosmoliaptsis V, Randle LV, Gimson AE, Brais R, Klinck JR, et al. Normothermic perfusion in the assessment and preservation of declined livers before transplantation: Hyperoxia and Vasoplegia-important lessons from the first 12 cases. Transplantation. 2017;101(5):1084–98. https://doi.org/10.1097/TP.0000000000001661. Account of the first 12 liver perfusions in Cambridge, refuting bile production as a viability predictor and identifying that low biliary pH was associated with cholangiopathy; in addition, hyperoxic perfusion conditions were associated with vasoplegia and post-reperfusion syndrome.

Esteller A. Physiology of bile secretion. World J Gastroenterol. 2008;14(37):5641–9. https://doi.org/10.3748/wjg.14.5641.

Imber CJ, St Peter SD, de Cenarruzabeitia IL, Lemonde H, Rees M, Butler A, et al. Optimisation of bile production during normothermic preservation of porcine livers. Am J Transplant. 2002;2(7):593–9. https://doi.org/10.1034/j.1600-6143.2002.20703.x.

Garcia-Marin JJ, Villanueva GR, Esteller A. Diabetes-induced cholestasis in the rat: possible role of hyperglycemia and hypoinsulinemia. Hepatology. 1988;8(2):332–40. https://doi.org/10.1002/hep.1840080224.

Bouleti C, Mewton N, Germain S. The no-reflow phenomenon: state of the art. Arch Cardiovasc Dis. 2015;108(12):661–74. https://doi.org/10.1016/j.acvd.2015.09.006.

Watson CJE, Kosmoliaptsis V, Pley C, Randle LV, Fear C, Crick K, et al. Observations on the ex vivo perfusion of livers for transplantation – predicting viability. Submitted. 2017.

Derveaux K, Monbaliu D, Crabbe T, Schein D, Brassil J, Kravitz D, et al. Does ex vivo vascular resistance reflect viability of non-heart-beating donor livers? Transplant Proc. 2005;37(1):338–9. https://doi.org/10.1016/j.transproceed.2004.11.065.

Jochmans I, Moers C, Smits JM, Leuvenink HG, Treckmann J, Paul A, et al. The prognostic value of renal resistance during hypothermic machine perfusion of deceased donor kidneys. Am J Transplant. 2011;11(10):2214–20. https://doi.org/10.1111/j.1600-6143.2011.03685.x.

Parikh CR, Hall IE, Bhangoo RS, Ficek J, Abt PL, Thiessen-Philbrook H, et al. Associations of Perfusate biomarkers and pump parameters with delayed graft function and deceased donor kidney allograft function. Am J Transplant. 2016;16(5):1526–39. https://doi.org/10.1111/ajt.13655.

•• Ravikumar R, Jassem W, Mergental H, Heaton N, Mirza D, Perera MT, et al. Liver transplantation after ex vivo Normothermic machine preservation: a phase 1 (first-in-man) clinical trial. Am J Transplant. 2016;16(6):1779–87. https://doi.org/10.1111/ajt.13708. First study of normothermic liver perfusion in man showing safety of the procedure.

Mergental H, Perera MT, Laing RW, Muiesan P, Isaac JR, Smith A, et al. Transplantation of declined liver allografts following Normothermic ex-situ evaluation. Am J Transplant. 2016;16(11):3235–45. https://doi.org/10.1111/ajt.13875.

van Golen RF, van Gulik TM, Heger M. The sterile immune response during hepatic ischemia/reperfusion. Cytokine Growth Factor Rev. 2012;23(3):69–84. https://doi.org/10.1016/j.cytogfr.2012.04.006.

Quesnelle KM, Bystrom PV, Toledo-Pereyra LH. Molecular responses to ischemia and reperfusion in the liver. Arch Toxicol. 2015;89(5):651–7. https://doi.org/10.1007/s00204-014-1437-x.

Land WG, Agostinis P, Gasser S, Garg AD, Linkermann A. Transplantation and damage-associated molecular patterns (DAMPs). Am J Transplant. 2016;16(12):3338–61. https://doi.org/10.1111/ajt.13963.

Schlegel A, Kron P, Graf R, Clavien PA, Dutkowski P. Hypothermic oxygenated perfusion (HOPE) downregulates the immune response in a rat model of liver transplantation. Ann Surg. 2014;260(5):931–937; discussion 7-8. https://doi.org/10.1097/SLA.0000000000000941.

Guarrera JV, Henry SD, Chen SW, Brown T, Nachber E, Arrington B, et al. Hypothermic machine preservation attenuates ischemia/reperfusion markers after liver transplantation: preliminary results. J Surg Res. 2011;167(2):e365–73. https://doi.org/10.1016/j.jss.2010.01.038.

Guarrera JV, Henry SD, Samstein B, Reznik E, Musat C, Lukose TI, et al. Hypothermic machine preservation facilitates successful transplantation of "orphan" extended criteria donor livers. Am J Transplant. 2015;15(1):161–9. https://doi.org/10.1111/ajt.12958.

Dutkowski P, Schlegel A, de Oliveira M, Mullhaupt B, Neff F, Clavien PA. HOPE for human liver grafts obtained from donors after cardiac death. J Hepatol. 2014;60(4):765–72. https://doi.org/10.1016/j.jhep.2013.11.023.

van Rijn R, Karimian N, Matton APM, Burlage LC, Westerkamp AC, van den Berg AP, et al. Dual hypothermic oxygenated machine perfusion in liver transplants donated after circulatory death. Br J Surg. 2017;104(7):907–17. https://doi.org/10.1002/bjs.10515.

Westerkamp AC, Karimian N, Matton AP, Mahboub P, van Rijn R, Wiersema-Buist J, et al. Oxygenated hypothermic machine perfusion after static cold storage improves hepatobiliary function of extended criteria donor livers. Transplantation. 2016;100(4):825–35. https://doi.org/10.1097/TP.0000000000001081.

Lee CY, Zhang JX, Jones JW Jr, Southard JH, Clemens MG. Functional recovery of preserved livers following warm ischemia: improvement by machine perfusion preservation. Transplantation. 2002;74(7):944–51. https://doi.org/10.1097/01.TP.0000026246.17635.67.

Op den Dries S, Sutton ME, Karimian N, de Boer MT, Wiersema-Buist J, Gouw AS, et al. Hypothermic oxygenated machine perfusion prevents arteriolonecrosis of the peribiliary plexus in pig livers donated after circulatory death. PLoS One. 2014;9(2):e88521. https://doi.org/10.1371/journal.pone.0088521.

• Dutkowski P, Polak WG, Muiesan P, Schlegel A, Verhoeven CJ, Scalera I, et al. First Comparison of hypothermic oxygenated perfusion versus static cold storage of human donation after cardiac death liver transplants: an international-matched case analysis. Ann Surg. 2015;262(5):764–70; discussion 70–1. https://doi.org/10.1097/SLA.0000000000001473. Initial clinical experience with hypothermic perfusion. Although the comparator group is not ideal, the results of HOPE are excellent.

Luer B, Koetting M, Efferz P, Minor T. Role of oxygen during hypothermic machine perfusion preservation of the liver. Transpl Int. 2010;23(9):944–50. https://doi.org/10.1111/j.1432-2277.2010.01067.x.

• Schlegel A, Rougemont O, Graf R, Clavien PA, Dutkowski P. Protective mechanisms of end-ischemic cold machine perfusion in DCD liver grafts. J Hepatol. 2013;58(2):278–86. https://doi.org/10.1016/j.jhep.2012.10.004. Good account of why hypothermic oxygenated perfusion is superior to simple cold storage, and perhaps to normothermic perfusion.

Liu Q, Vekemans K, Iania L, Komuta M, Parkkinen J, Heedfeld V, et al. Assessing warm ischemic injury of pig livers at hypothermic machine perfusion. J Surg Res. 2014;186(1):379–89. https://doi.org/10.1016/j.jss.2013.07.034.

Pacheco EG, Silva OD Jr, Sankarankutty AK, Ribeiro MA Jr. Analysis of the liver effluent as a marker of preservation injury and early graft performance. Transplant Proc. 2010;42(2):435–9. https://doi.org/10.1016/j.transproceed.2010.01.018.

Hoyer DP, Paul A, Minor T. Prediction of hepatocellular preservation injury immediately before human liver transplantation by controlled oxygenated rewarming. Transplant Direct. 2017;3(1):e122. https://doi.org/10.1097/TXD.0000000000000636.

Watson CJ, Kosmoliaptsis V, Randle LV, Russell NK, Griffiths WJ, Davies S, et al. Preimplant Normothermic liver perfusion of a suboptimal liver donated after circulatory death. Am J Transplant. 2016;16(1):353–7. https://doi.org/10.1111/ajt.13448.

Selzner M, Goldaracena N, Echeverri J, Kaths JM, Linares I, Selzner N, et al. Normothermic ex vivo liver perfusion using Steen solution as Perfusate for human liver transplantation-first north American results. Liver Transpl. 2016;22(11):1501–8. https://doi.org/10.1002/lt.24499.

Bral M, Gala-Lopez B, Bigam D, Kneteman N, Malcolm A, Livingstone S, et al. Preliminary single-center Canadian experience of human Normothermic ex vivo liver perfusion: results of a clinical trial. Am J Transplant. 2017;17(4):1071–80. https://doi.org/10.1111/ajt.14049.

op den Dries S, Karimian N, Sutton ME, Westerkamp AC, Nijsten MW, Gouw AS, et al. Ex vivo normothermic machine perfusion and viability testing of discarded human donor livers. Am J Transplant. 2013;13(5):1327–35. https://doi.org/10.1111/ajt.12187.

Liu Q, Nassar A, Farias K, Buccini L, Baldwin W, Mangino M, et al. Sanguineous normothermic machine perfusion improves hemodynamics and biliary epithelial regeneration in donation after cardiac death porcine livers. Liver Transpl. 2014;20(8):987–99. https://doi.org/10.1002/lt.23906.

Nassar A, Liu Q, Farias K, D'Amico G, Tom C, Grady P, et al. Ex vivo normothermic machine perfusion is safe, simple, and reliable: results from a large animal model. Surg Innov. 2015;22(1):61–9. https://doi.org/10.1177/1553350614528383.

Reiling J, Lockwood DS, Simpson AH, Campbell CM, Bridle KR, Santrampurwala N, et al. Urea production during normothermic machine perfusion: price of success? Liver Transpl. 2015;21(5):700–3. https://doi.org/10.1002/lt.24094.

Banan B, Watson R, Xu M, Lin Y, Chapman W. Development of a normothermic extracorporeal liver perfusion system toward improving viability and function of human extended criteria donor livers. Liver Transpl. 2016;22(7):979–93. https://doi.org/10.1002/lt.24451.

Pezzati D, Ghinolfi D, Balzano E, De Simone P, Coletti L, Roffi N, et al. Salvage of an octogenarian liver graft using Normothermic perfusion: a case report. Transplant Proc. 2017;49(4):726–8. https://doi.org/10.1016/j.transproceed.2017.02.014.

Dodero F, Benkoel L, Allasia C, Hardwigsen J, Campan P, Botta-Fridlund D, et al. Quantitative analysis of glycogen content in hepatocytes of human liver allograft after ischemia and reperfusion. Cell Mol Biol. 2000;46(7):1157–61.

Cherid A, Cherid N, Chamlian V, Hardwigsen J, Nouhou H, Dodero F, et al. Evaluation of glycogen loss in human liver transplants. Histochemical zonation of glycogen loss in cold ischemia and reperfusion. Cell Mol Biol (Noisy-le-grand). 2003;49(4):509–14.

• Tabibian JH, Masyuk AI, Masyuk TV, O'Hara SP, LaRusso NF. Physiology of cholangiocytes. Compr Physiol. 2013;3(1):541–65. https://doi.org/10.1002/cphy.c120019. Excellent review of cholangiocyte physiology.

Beuers U, Hohenester S, de Buy Wenniger LJ, Kremer AE, Jansen PL, Elferink RP. The biliary HCO(3)(−) umbrella: a unifying hypothesis on pathogenetic and therapeutic aspects of fibrosing cholangiopathies. Hepatology. 2010;52(4):1489–96. https://doi.org/10.1002/hep.23810.

Erlinger S. A HCO3-umbrella protects human cholangiocytes against bile salt-induced injury. Clin Res Hepatol Gastroenterol. 2012;36(1):7–9. https://doi.org/10.1016/j.clinre.2011.11.006.

Guzelian P, Boyer JL. Glucose reabsorption from bile. Evidence for a biliohepatic circulation. J Clin Invest. 1974;53(2):526–35. https://doi.org/10.1172/JCI107586.

Acknowledgements

The University of Cambridge has received salary support in respect of CJEW from the NHS in the East of England through the Clinical Academic Reserve. The views expressed are those of the authors and not necessarily those of the NHS or Department of Health.

Ethics declarations

Conflict of Interest

Ina Jochmans reports non-financial support from Astellas Pharma (for travel, accommodation and registration for conferences) and received speaker fees paid to institution from Sanofi Genzyme, outside the submitted work.

Human and Animal Rights and Informed Consent

This article does not contain any studies with human or animal subjects performed by any of the authors.

Additional information

This article is part of the topical collection on Machine Preservation of the Liver

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Watson, C.J.E., Jochmans, I. From “Gut Feeling” to Objectivity: Machine Preservation of the Liver as a Tool to Assess Organ Viability. Curr Transpl Rep 5, 72–81 (2018). https://doi.org/10.1007/s40472-018-0178-9

DOI: https://doi.org/10.1007/s40472-018-0178-9