Abstract

As civil engineering structures are growing in dimension and longevity, there is an associated increase in concern regarding the maintenance of such structures. Bridges, in particular, are critical links in today’s transportation networks and hence fundamental for the development of society. In this context, the demand for novel damage detection techniques and reliable structural health monitoring systems is currently high. This paper presents a model-free damage detection approach based on machine learning techniques. The method is applied to data on the structural condition of a fictitious railway bridge gathered in a numerical experiment using a three-dimensional finite element model. Data are collected from the dynamic response of the structure, which is simulated in the course of the passage of a train, considering the bridge in healthy and two different damaged scenarios. In the first stage of the proposed method, artificial neural networks are trained with an unsupervised learning approach with input data composed of accelerations gathered on the healthy bridge. Based on the acceleration values at previous instants in time, the networks are able to predict future accelerations. In the second stage, the prediction errors of each network are statistically characterized by a Gaussian process that supports the choice of a damage detection threshold. Subsequent to this, by comparing damage indices with said threshold, it is possible to discriminate between different structural conditions, namely between healthy and damaged. From here and for each damage case scenario, receiver operating characteristic curves that illustrate the trade-off between true and false positives can be obtained. Lastly, based on the Bayes’ Theorem, a simplified method for the calculation of the expected total cost of the proposed strategy, as a function of the chosen threshold, is suggested.

Similar content being viewed by others

1 Introduction

The present time is without doubt the most appropriate for the development of robust and reliable structural damage detection systems as ageing civil engineering structures, such as bridges, are being used past their life expectancy and well beyond their original design loads. Often, when a significant damage to the structure is discovered, the deterioration has already progressed far, and required repair work is substantial and costly. Seldom will the structure be demolished and a new one constructed in its place. This entails substantial expenditures and has negative impact on the environment and traffic during replacement. The ability to monitor a structure in real-time and detect damage at the earliest possible stage supports clever maintenance strategies and provides accurate remaining life predictions. For the exposed reasons, the demand for such smart structural health monitoring (SHM) systems is currently high.

The existing methods implemented in damage detection can be essentially divided into model-based and model-free. The first approach presupposes an accurate finite element model of the target structure, following that one obvious advantage of this approach is that the damage detected has a direct physical interpretation. Yet, it may be difficult to develop an accurate model of a complex structure and it can be intricate as well to obtain and update the parameters defining the structure. On the contrary, the model-free approach allows circumventing the problem of having to develop a precise structural model, mostly by means of artificial intelligence, but it is more difficult to assign a physical meaning to the detected damage. The model-free approach consists in training an algorithm on some acquired data, usually in an unsupervised manner, so that at the end it is able to tell apart different condition states of the structure. These algorithms are referred to as outlier or novelty detection methods: if there are significant deviations between measured and expected values, the algorithm is said to indicate novelty, meaning that the structure has departed from its normal condition and is probably damaged.

This paper presents a model-free damage detection approach based on artificial neural networks. The method is applied to data gathered from simulations of train passages on the finite element model of a fictitious railway bridge. The data sets are obtained from one healthy and two damage case scenarios of the bridge. This data would in reality be obtained directly from measurements performed on the real structure of interest, without the need to develop a complex numerical model. In the first stage of the proposed method, artificial neural networks are trained in an unsupervised manner with input data composed of gathered accelerations on the healthy bridge. Based on the acceleration values at previous instants in time, the networks are able to predict future accelerations. In the second stage, the prediction errors of each network are statistically characterized by a Gaussian process that supports the choice of a damage detection threshold. Then, by comparing damage indices with the threshold, the system is able to point out damage on the bridge. To evaluate the performance of the system, receiver operating characteristic curves that illustrate the trade-off between true and false positives are generated. Finally, based on the Bayes’ theorem, a simplified method for the calculation of the expected total cost of the proposed strategy, as a function of the chosen threshold, is suggested.

1.1 Literature review

A review of some of the most recent developments within SHM and damage detection that resulted in published articles in scientific peer-reviewed journals is here presented. Regarding optimal sensor placement (OSP), a crucial part of any damage detection system, mention can be made to the work of Huang et al. [1] using genetic algorithms, where the proposed algorithm uses the sensor types as input and criteria about the desired measurement accuracy to deliver the optimal number of sensors and their location. The method was validated with experimental analysis on a single-bay steel frame structure case study. Li et al. [2] proposed a novel approach termed dual-structure coding and mutation particle swarm optimization (DSC-MPSO) algorithm. Using a numerical example of a three-span pre-stressed concrete cable-stayed bridge, this approach was demonstrated to have increased convergence speed and precision when compared to the other state-of-the-art methods (e.g. genetic algorithm). Still within the OSP topic, Yi et al. [3] proposed a new optimal sensor placement technique in multi-dimensional space since most available procedures for sensor placement only guarantee optimization in an individual structural direction, which results in an ineffective optimization of the sensing network when employing multi-axial sensors. A numerical study was conducted on a benchmark structure model.

Another common topic of research is the separation of the changes in structural response caused by operational and environmental variability from the changes triggered by damage. This is indeed one of the principal challenges to transit SHM technology from research to practice. Jin et al. [4] proposed an extended Kalmar filter-based artificial neural network for damage detection in a highway bridge under severe temperature changes. The time-lagged natural frequencies, time-lagged temperature and season index are selected as the inputs for the neural network, which predicts the natural frequency at the next time step. The Kalmar filter is used to estimate the weights of the neural network and the confidence intervals of the natural frequencies that allow for damage detection. The method was tested with a numerical case study of an existing bridge. The published work regarding machine learning methods applied in SHM is continuously expanding.

Machine learning algorithms have been implemented to expose structural abnormalities from monitoring data. These algorithms normally belong to the outlier detection category, which considers training data coming exclusively from the normal condition of the structure (unsupervised learning). Worden and Farrar [5] have contributed with reputable work on monitoring of structures using machine learning techniques, such as neural networks, genetic algorithms, and support vector machines. Rao and Lakshmi [6] proposed a novel damage identification technique combining proper orthogonal decomposition (POD) with time-frequency analysis using Hilbert Huang transform (HHT) and dynamic quantum particle swarm optimization (DQPSO). The algorithm was tested with two numerical examples with single and multiple damages. Diez et al. [7] proposed a Clustering-based data-driven machine learning approach, using the k-mean clustering algorithm, to group joints with similar behavior on the bridge to separate the ones working in normal condition from the ones working in abnormal condition. The feasibility of the approach was demonstrated using data collected during field test measurements of the Sydney Harbour Bridge. Zhou et al. [8] proposed a structural damage detection method based on posteriori probability support vector machine (PPSVM) and Dempster-Shafer (DS) evidence theory adopted to combine the decision level fusion information. The proposed damage detection method was verified by means of experimental analysis of a benchmark structure model. All the mentioned latter combined methods revealed to improve the accuracy and stability of the damage detection system when compared to other popular data mining methods.

There are several published works regarding the detection of structural damage with the aid of Machine Learning techniques. Still, most of the proposed methods are based on a supervised learning approach, which requires data of the damage condition of the structure to be available. This poses a difficulty to the practical implementation of these methods because, as it is known, the data in damaged condition does not normally exist. The method presented in this paper consists in an updated mode-free damage detection algorithm using Machine Learning techniques based on the work of González [9]. The primary step in this study involves the development of a three-dimensional finite element model of a railway bridge. The vertical deck accelerations at different positions of the bridge are gathered using simulations of train passages and assuming that the bridge behaves in both normal and abnormal conditions, considering one baseline model and two damaged models, respectively. The first stage of the proposed method consists in the design and training of artificial neural networks (ANNs) which, given any input features, are trained to predict future values of the features. Following the validation of the best trained network, the second stage of the proposed algorithm consists in using the predicted acceleration errors to fit a Gaussian process (GP) that enables to perform a statistical analysis of the errors’ distributions. After this process, damage indices (DIs) can be obtained and compared to a defined detection threshold for the system, allowing for one to study the probability of true and false detection events. From these results, a receiver operating characteristic (ROC) curve is generated and used to obtain information about the performance of the algorithm. Finally, a simplified method for the calculation of the expected total cost of the strategy based on the Bayes’ Theorem is proposed.

1.2 Background in SHM

Visual inspections are regarded as the basic technique for the assessment of condition of in-service bridges, as this technique is intuitive and provides information in a direct way exclusively based on observation. However, this technique used alone has many shortcomings due to the growing complexity in the design of modern structures. One can mention as disadvantages the fact that the technique may not be practicable if the structure has restricted accessibility or if the traffic is excessively disturbed, its application is time-discrete and the conclusion of the visual inspection is inevitably subjective. In this sense, effort was placed in developing damage detection techniques that handle measurement data to find structural changes [10].

Structural health monitoring techniques comprise non-physically and physically based methods. The former uses a system model to study a physical structure and predict the responses; examples of methods that fall into this category are the probabilistic, non-parametric and autoregressive models (AR) methods [11]. The physically based methods perform damage identification by comparing natural frequencies and mode shapes data between the healthy and damaged structural model [12]; examples of methods that fall into this category are the vibration-based damage identification methods (VBDIM), such as frequency response [13], mode shape and strain energy methods [14], modal flexibility and modal stiffness methods. Many broad classes of damage detection algorithms have emerged, most dealing with vibration measurements and modal analysis of the structural system. Although structural properties like damping, modal shapes and frequencies are not directly measurable, they can be inferred from other measured data. These properties have somewhat clear definitions and allow the relatively easy design of algorithms that define them, therefore being good candidates for parameters of a damage detection technique.

Damage identification is far from being a forthright process, from the basic step of defining what damage is up to decoding it in mathematical terms. Due to the random variability in experimentally measured dynamic response data, statistical approaches are desired to make sure that the perceived changes in the structure’s response are coming from existing damage and not from variations in the operational and environmental conditions. The careful integration of recent sensing technology with traditional inspection and avant-garde diagnostic techniques will provide essential information that engineers and policymakers need to manage the structures that serve our society.

2 Method

2.1 Artificial neural networks

The first stage of damage identification uses methods which provide a qualitative indication of the presence of damage in the structure, which can be accomplished without prior knowledge of how the system behaves when damaged. These algorithms are referred to as outlier or novelty detection methods. To solve the task of novelty detection, one can use learning algorithms, such as artificial neural networks (ANNs).

Artificial neural networks are a family of mathematical models inspired by the structure of biological neural networks (Fig. 1) in which the basic processing unit of the brain is the neuron. Neurons interact with each other by summing stimuli from connected neurons. Once the total stimuli exceed a certain threshold, the neuron fires—a phenomenon called activation—and it generates a new stimulus that is passed onto the network. Knowledge is encoded in the connection strengths between the neurons in the brain.

Each node \(i\) is connected to each node \(j\) in the previous and following layers over a connection of weight \(w_{ij}\). In layer \(k\), a weighted sum is performed at each node \(i\) of all the signals \(x_{j}^{{\left( {k - 1} \right)}}\) from the preceding layer \(k - 1\), giving the excitation \(z_{i}^{\left( k \right)}\) of the node; this sum is then passed through a nonlinear activation function \(f\) to emerge as the output of the node \(x_{i}^{\left( k \right)}\) to the next layer. The process can be expressed as [5]:

During the training phase of the network, the connection weights \(w_{ij}\) are continuously adjusted. The type of training can involve unsupervised and supervised learning approaches depending on the nature of the problem being solved and the type of training data available. In unsupervised learning, the aim is to discover groups of similar instances within the data while having no information concerning the class label of the instance or how many classes exist. In supervised learning, the instances are given with known labels, the corresponding correct outputs. In every training step, a set of inputs is passed forward in the network giving trial outputs that can be compared to the desired outputs. If it happens that the error is small, the weights are not adjusted; otherwise, the error is passed backwards and the training algorithm uses it to adjust the weights trying to decrease the error—this algorithm is known as the back propagation algorithm.

ANNs are a powerful tool for SHM in the aid of problems in sensor data processing that require parallelism and optimization due to the high complexity of the variables’ interactions. Generally, the ANNs offer solutions to four different problems: auto association, regression, classification and novelty detection. Prosaic examples of potential applications of ANNs are speech recognition and generation, optimization of chemical processes, manufacturing process control, cancer cell analysis, transplant time optimizer, recognition of chromosomal abnormalities, solution of optimal routing problems, such as the Traveling Salesman Problem, et cetera.

2.2 Performance of a classifier

The damage detection process concerning civil engineering structures involves a significant amount of uncertainty, which can determine whether damage is discovered or not. The uncertainties can stem from many causes but are generally due to [15]: inaccuracy in the FEM discretization; uncertainties in geometry and boundary conditions; non-linearity in material properties, environmental settings (e.g. variations in temperature and traffic); errors associated with measurements (e.g. noise) and signal post-processing techniques.

The consequences of false detection can be more or less stern and it is, thus, imperative to carefully investigate the sources of uncertainties, quantify and control their influence. The analysis of Type I (false positive) and Type II (false negative) errors is a frequent practice of reporting the performance of a binary classification, where there are four possible outcomes (Table 1) from an inspection event in a structure. Two statistical tools that enable the evaluation of these errors, in terms of unwanted results (false positives and negatives) and desired results (true positives and negatives), are the probability of detection (POD) curve [16] and the receiver operating characteristic (ROC) curve [17], the latter used in this paper.

A ROC curve is a two-dimensional graphic in which the true positive rate (TPr) is plotted against the false positive rate (FPr) for a given threshold. The graphic demonstrates thus relative trade-offs between benefits and costs, respectively, TPr and FPr, depending on a threshold that is selected (Fig. 2). A very high (strict) threshold will never indicate damage since the classifier finds no positives, resulting in 0% of false and true positives, whereas a very low (lenient) threshold will always indicate damage since everything is classified positive, resulting in 100% of false and true positives. One common way to define the best threshold is by fixing an acceptable FPr and then trying to maximize the TPr.

The ROC curve provides a tool for cost-benefit analysis assisting the practitioner in the selection among different available classifiers and their detection threshold. The optimal detection threshold will commonly be the one that minimizes the total expected cost, which will be connected with the odds of false detection. The accepted idea is that one point in the ROC space is considered better than another if it is associated with a higher TPr for the same FPr. The closer the curve is to the left and top borders of the ROC space, resembling an inverted L shape, the superior the performance of the classifier; the closer the curve is to a 45° diagonal in the ROC space, the less accurate is the classifier.

2.3 Expected cost of the damage detection strategy

Even though the ROC curve constitutes a helpful tool for the determination of the best classifier, it is difficult to decide among thresholds solely based on their correspondent pair TPr/FPr. To assign a worth to each trade-off, the criterion was decided as that the best threshold will yield the minimum expected cost of the damage detection strategy. This cost will be given as the sum of several expenses, such as the ones associated with the flawed performance of the damage detection system, i.e. associated with false positives and negatives. The first type of error is often associated with extraneous inspections and repairs while the second is associated with accumulation of damage by the lack of action, which can eventually lead to life-safety implications in the long run.

The total expected cost, \(C_{\text{T}}\), specified a certain threshold, can be given as [9] a linear combination of the probabilities, \(p_{o}\), of four potential outcomes \(o\) multiplied by the associated costs, \(C_{o}\), multiplied by the a priori probability of the real condition \(c\) of the structure, \(p\left( c \right)\). The following expression is obtained.

where in the presence of the real condition of damage (\(d\)), \(p_{d} \left( {h|e} \right)\) and \(p_{d} \left( {d|e} \right)\) are the probabilities of health and damage given evidence, respectively; in the presence of the real condition of health (\(h\)), \(p_{h} \left( {h|e} \right)\) and \(p_{h} \left( {d|e} \right)\) are the probabilities of health and damage given evidence, respectively; \(p\left( d \right)\) is the priori probability that the structure is damaged; \(p\left( h \right)\) is the a priori probability that the structure is healthy. The evidence, in reference to the proposed damage detection approach, is given by the SHM system after measurements on the structure, acquired during a certain time, are analyzed. It all boils down to whether evidence suggests that the structure is healthy or damaged.

The costs \(C\) that appear in (Eq. 2) are multiplied by the respective associated probabilities \(p\). In this way, one can establish an equilibrium concerning the consequences of damage accumulation or potential structural failure and the expenses with safety measures to mitigate those consequences. It is however thought that the calculation process of the total expected cost (Eq. 2) can be simplified given the following assumptions:

-

there are no costs associated with true negatives, as this corresponds to an ideal situation where damage does not exist and is not detected;

-

the costs related to true positives are disregarded as that is what is sought from the system (to detect existing damage), meaning that these are “desired” costs;

-

the costs related to false positives rely in inspections only. It is assumed that after detection and consequent inspection, no damage is found and therefore there is no need to proceed with repair. The costs of inspection will vary depending on several factors: the inspection technique, the frequency of inspections, the implications of performing inspection in the normal use of the structure (e.g. causing disturbances in the traffic flow), the restoration cost saved by earlier inspection, the difficulty in the access to the element to be inspected, et cetera;

-

the costs related to false negatives are the most penalizing taking into account the potential tragic consequences that can result from missing damage. These costs are established based on risk assessment and valuation of material costs given the occurrence of a traffic accident. The values of human life loss, degree of injury resulting in inpatient and outpatient care, and of property damage are estimated reflecting their cost to society.

After the above mentioned assumptions are considered, (Eq. 2) can be simplified into

The probabilities found in (Eq. 3) are calculated through the Bayes’ Theorem. This theorem provides a mean of understanding how the probability that a theory or hypothesis (\(t\)) is true is affected, strengthened or weakened, by a new piece of evidence (\(e\)) that can confirm or not the theory, respectively. This is equivalent to saying that, in the present study, the probability of detection is updated after each new train passage. Bayes’ theorem is mathematically expressed as:

where \(p\left( t \right)\) is the estimated probability that the theory is true before taking into account the new piece of evidence; \(p\left( {\bar{t}} \right)\) is the probability that the theory is not true; \(p\left( {e|t} \right)\) is the probability of obtaining the new evidence \(e\) given that the theory is true; \(p\left( {e|\bar{t}} \right)\) is the probability of obtaining the new evidence \(e\) given that the theory is not true. In this sense, we can say that \(p\left( t \right)\) is the prior probability of \(t\) and that \(p\left( {t|e} \right)\) is the posterior probability of \(t\). Probabilities \(p\left( {e|t} \right)\) and \(p\left( {e|\bar{t}} \right)\) are read straightforward from the ROC curve, for a given threshold. Applying the Bayes’ theorem in the context of damage detection, for example for the probability of damage given detection, yields the ensuing expression:

where \(d\) stands for damaged condition; \(h\) stands for healthy condition; \(p\left( d \right)\) is the probability of damaged condition; \(p\left( h \right)\) is the probability of healthy condition; \(p\left( {{\text{e}}|d} \right)\) is the probability of getting the evidence given damaged condition; \(p\left( {{\text{e}}|h} \right)\) is the probability of getting the evidence given healthy condition. The probabilities for the other three scenarios are obtained in an analogous way and the probabilities of detection, \(p\left( {{\text{e}}|d} \right)\) and \(p\left( {{\text{e}}|h} \right)\), are straightforwardly read from the ROC curve giving a specific detection threshold. Equation 5 can also be written as:

where \(D\) is the number of train passages that indicates the presence of damage in the structure, \(H\) is the number of train passages that does not indicate the presence of damage in the structure, and TP, FN, FP and TN (Table 1) are the probabilities read directly from the ROC curve for a defined threshold.

Supposing that the costs related to the different outcomes are known fixed numbers, the following approach is pursued each time a new observation takes place for the calculation of the expected total cost:

(For a specific ROC curve)

-

1.

Select detection threshold;

-

2.

Select length of the measurement vector (i.e. the number of observed train passages), \(m\);

-

3.

Stochastically generate realistic \(D_{d}\) and \(H_{d}\), within \(m\), based on the ROC curve and supposing that the structure is damaged:

-

(a)

Obtain \(p_{d} \left( {h|e} \right)\) according to (Eq. 6);

-

(a)

-

4.

Stochastically generate realistic \(D_{h}\) and \(H_{h}\), within \(m\), based on the ROC curve and supposing that the structure is healthy:

-

(a)

Obtain \(p_{h} \left( {d|e} \right)\) according to (Eq. 6);

-

(a)

-

5.

Calculate the expected total cost according to (Eq. 3).

3 Numerical case study

3.1 Bridge and FE model

The method here proposed has the potential to be readily used once plenty of data are collected on the real structure of interest, meaning that there is no need to develop a complex structural model. While the damage detection method is a model-free method, in the lack of measurements from an existing bridge that could be used as a case study, the authors decided to obtain the data from a Finite Element model of a fictitious, yet realistic, bridge.

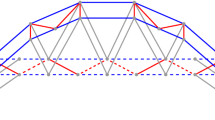

A numerical 3D finite element (FE) model of a single-track railway bridge was developed using FEM software ABAQUS [18]. The structure consists of the following parts: the concrete deck of constant thickness, the two steel girder beams that support the deck and the steel cross bracings that connect the girders. The deck and the girder beams were modelled as shell elements and the cross bracings were modelled as truss elements. All the elements of the bridge are assumed to be rigidly connected to each other. The relevant material and geometric properties of the structural parts are shown in Tables 2 and 3, respectively.

In Table 2, \(E_{\text{concrete}}\) is the Elastic modulus for concrete, \(E_{\text{steel}}\) is the Elastic modulus for steel, \(\rho_{\text{concrete}}\) is the density for concrete, \(\rho_{\text{steel}}\) is the density for steel, \(\nu_{\text{concrete}}\) is the Poisson ratio for concrete and \(\nu_{\text{steel}}\) is the Poisson ratio for steel. Rayleigh damping was considered with damping ratio \(\xi\) of 0.5% for the first mode, as prescribed by the Eurocode [19] for composite bridges with spans exceeding 30 m. In Table 3, \(L_{\text{bridge}}\) is the bridge length, \(w_{\text{deck}}\) is the width of the deck, \(h_{\text{deck}}\) is the thickness of the deck, \(w_{\text{flange}}\) is the width of the flange, \(e_{\text{flange}}\) is the thickness of the flange, \(h_{\text{web}}\) is the height of the web and \(e_{\text{web}}\) is the thickness of the web.

Damage in the bridge is simulated considering two damage scenarios: in damage case 1 (DC1), a section of the bottom flange of one girder beam is removed (Fig. 3), in an attempt to represent a damage situation where a fatigue crack exists. The cut-out section has the dimensions of the flange width by some longitudinal length l, reflecting a situation when a propagating crack has reached its critical depth (about 30% of the flange’s width or less) which causes a sudden rupture through the whole flange width; in damage case 2 (DC2), one bracing is removed (Fig. 4), which equivalently corresponds to reducing to approximately zero its Elastic modulus in the model. Assuming that the girder beam and bracings are connected by high-tension bolts, this can reflect a situation where there is looseness in the bolted connections [20], with resulting inefficiency of the bracing. Both these fictitious damage scenarios are likely situations to be encountered in reality, namely fatigue, as it is one of the most common failure mechanisms occurring in steel and composite steel-concrete bridges. The numbers 1–6 in the figures below represent the locations of the accelerometers that are installed on the top of the bridge deck: three aligned with the train track and three aligned with the girder beam in which damage in DC1 takes place. These correspond to specific nodes in the bridge FE model where the response of the structure will be measured.

The proposed method for structural assessment is intended to detect existing damage from the measured vibration of the bridge. The most common dynamic loads come from traffic, which is expected to be continuous while the bridge is in service. Therefore, traffic-induced vibration was obtained by simulating the passage of a train with a certain constant configuration, crossing the bridge at speeds ranging within [70–100] km/h, in increments of 0.1 km/h. Data was collected at a sampling frequency of about 130 Hz during 4 s, yielding a total of 300 different train passages and corresponding measurement data sets. The moving axle loads were modelled as series of impulse forces with short-time increments conforming to vehicle motion. Figure 5 depicts the configuration of the train, whose properties are found in Table 4.

The train model was based on the HSLM (high-speed load model) train with two assumptions: the train has the dimensional properties (coach length, \(D\), and bogie axle, \(d\)) of the HSLM-A4 train but is composed of only two intermediate coaches (\(N)\) instead of the 15 accordingly to [19]; maintaining the train configuration above described, three different axle loads [170, 180, 190] kN were assumed, even though the HSLM-A4 train model only considers a point force of 190 kN. This model was chosen under the belief that such vehicle model is more complex and hence realistic than the one used in the previous study and nonetheless more simple than the HSLM train model, allowing for instance to reduce the computation time during the simulations. The train–bridge interaction was left out of this study, for the sake of simplification, but should be considered in further studies.

3.2 ANN model

In this work, the ANNs were developed and trained by means of the Neural Network Toolbox available in MATLAB [21]. The architecture of the network is something to be decided ahead of the training phase and it depends mainly on the available amount of data, which for this study was generated by simulations of the FE model above described for 300 train passages. The choice of certain architecture has important consequences in regard to how well the network will make the predictions. In an initial trial, the data from 150 train passages was used for training the network and the 150 remaining ones were used for testing and validation of the trained network. The measured signal is in reality distorted by various sources of error and, to consider that fact, Gaussian white noise with a constant standard deviation of 0.0005 m/s2 was added to the uncorrupted acceleration time histories obtained from the FE model (Fig. 6), before these were used to train the ANN. Despite appearing little, considering the acceleration magnitude under the moving load, this level of noise is actually substantial. This level of noise corresponds to approximately 2% of the standard deviation of the measured signals, a value that is commonly considered for such analysis. Another measurement uncertainty considered was the axle load magnitude and, in that sense, 5% random oscillations of the axle load were considered. Different groups of ANNs were trained with controlled variation of input variables. In each group, six different networks were trained, ANNn with \(n \in \left\{ {1, \ldots ,6} \right\}\), each predicting for one of the six sensors. The chosen training algorithm was the Levenburg–Marquardt backpropagation algorithm [22].

The design process of the ANN implies defining the number of layers of each type and the number of nodes contained in each of these layers. Some empirical rules-of-thumb [23,24,25] provide indication of how many neurons should constitute each layer, depending on factors, such as the complexity of the function to be learned, the type of activation function, the training algorithm, and the number of input and output features. The following configuration of the neural network was explored and proved to work out well:

-

49 input neurons: the number of neurons equals the number of features, which are the 30 accelerations \(a_{t - i}^{n}\) registered by the 6 sensors \(n \in \left\{ {1, \ldots ,6} \right\}\) in the last 5 samplings \(i \in \left\{ {1, \ldots ,5} \right\}\), the 18 axle loads and 1 axle position relative to a reference point;

-

30 neurons for the hidden layer;

-

1 output neuron: the current acceleration \(a_{t}^{n}\) at time \(t\) predicted by sensor \(n\).

All the input parameters were assembled into one single input matrix. The only input variable that is kept present in all the trials of ANN configurations is the acceleration \(a\), since that is also the feature that we desire the network to predict. Any other relevant parameter \(v\) can also be given as input. What the network does is to predict a new acceleration at a certain instant in time based on \(i\) previous accelerations. This quantity \(i\), the number of delays, has to be chosen before the training phase. Perceptibly, while \(i\) can’t be too small as that will give little information for the training of the network, it cannot also be a large number since that leads to the increase in computation time. One possibility for the input matrix could resemble the structure indicated in the scheme of Fig. 7, where in this case five delays \(i\) of the feature acceleration were used.

4 Results

4.1 Evaluation of the prediction errors

One way of evaluating the performance of the trained network is by determining the deviation in the predictions. The use of the Root Mean Squared Error (RMSE) is very common and makes an outstanding general purpose error metric for numerical predictions. Compared to the simple Mean Absolute Error, the RMSE magnifies and severely penalizes large errors. For each sensor and for each train passage (or each speed), in a similar way for both healthy and damaged scenarios, one can estimate the RMSE as:

where for a certain instant \(i\), \({\text{target}}_{i}\) is the expected acceleration in healthy condition of the bridge, \({\text{output}}_{i}\) is the acceleration predicted by the network and \(T\) is the time interval during which the accelerations were recorded. Figure 8 illustrates the RMSE of the predicted accelerations by the six sensors, in the presence of an undamaged structure (blue circle) and for a damaged structure (red square), reflecting damage case 1 with a \(0.9 \times 0.4\) m2 section reduction. An axle load of 180 kN was considered and the train passages in the x-axis are ordered by increasing speed.

There seems to be a tendency for the error to increase with increasing train speed. Moreover, for the highest speeds in the considered range, it seems like even the response of the bridge in healthy structural condition is poorly predicted, yielding large RMSE. This phenomenon could be justified by the fact that the maximum considered speeds excite the structure to frequencies close to its natural frequencies. As a consequence of that, the behavior of the structure may depart from its expected typical behavior and the trained network is not able to properly predict the resulting accelerations anymore. Also noticeable is that the sensors situated closer to the geometric middle of the bridge (sensors 2 and 5) seem to be associated with networks that yield a better separation between structural states, whilst sensors placed nearby the end supports (sensors 1 and 4) are not as efficient in this task. This may be due to the fact that the response of the structure is more prominent in the middle of the span than in its extremities and, accordingly, measurements registered in the middle of the span are expected to favour a clearer distinction between normal and abnormal behavior.

It should be noted that the non-linear input–output relating function that the network uses can be quite complex and for that reason training an ANN that covers all the train load cases and speeds is extremely difficult, especially with the limited amount of data usually available. Thankfully, bridges are designed to be routinely crossed by trains of the same configuration, very similar axle load and moving consistently within a restricted range of speeds. Therefore, the ANN is trained to predict accelerations only for those specific cases of speed and train types. In fact, the range of speeds (70–100 km/h) considered to train the network could certainly be reduced, most likely yielding further accurate predictions of accelerations and, for example, reducing the disorder observed in the plots of sensors 1 and 4 in Fig. 8. In any case, even not making this adaptation, the results turned out to be very satisfactory.

From the shown plots, one has already qualitative evidence that the network can effectively discern structural states. However, making inferences based only in the plot from Fig. 8 would be a subjective way of judging what degree of separation is enough to assert that damage is indeed present in the structure. Even if the bridge is found to be in impeccable condition, the recorded dynamic responses will be different for each train passage, as the magnitude of the response depends on the speed and axle load of the train, not to mention the operational and environmental conditions. In other words, this means that the prediction errors will oscillate with each train passage, even within an unchanged condition of the bridge. Hence the distribution of errors needs to be characterized stochastically and it is the errors that significantly depart from this distribution that will work as an indication that damage may exist.

The prediction errors from 150 randomly selected train passages in healthy condition of the bridge are used to fit a statistical distribution that will work as a baseline for each sensor. The Gaussian process (GP) [26] consists in assigning a normally distributed random variable to every point in some continuous domain. For each train speed, the associated predicted errors are normally distributed, and the mean and standard deviation of the error can be different for each speed. New data are then compared against the baseline: 150 other train passages in healthy condition and 150 in damaged condition. The idea is to compute discordancy measures for data and then compare the discordancy to a threshold, from which one is able to discriminate between healthy and damaged structural condition.

After the outcome of the prediction error is characterized by a GP (Fig. 9), damage detection can be performed by checking predictions that differ considerably from the expected values. A discordancy measure for normal condition data is the deviation statistic:

Gaussian process fitted by prediction errors against increasing train speed. Here a log-normal distribution of the error is considered. Continuous line, mean; grey shaded square, Standard deviation; green plus sign, data to fit the GP; blue circle, data from healthy condition; red square, data from damaged condition, considering damage case 1 with a \(0.9 \times 0.4 {\text{ m}}^{2}\) section

where \(x_{\xi }\) is the candidate outlier, and \(\bar{x}\) and \(\sigma_{x}\) are, respectively, the mean and standard deviation of the data sample. The Mahalanobis distance [27] is one common measure of novelty in data and can be used in standard outlier analysis to provide a Damage Index (DI). To take into account only the train passages that give high prediction errors, the distance to the mean is given in standard deviations and the errors’ differences, \(\left( {{\text{RMSE}}_{n} \left( v \right) - \mu_{n} \left( v \right)} \right)\), are signed. The DI for each train speed \(v\) is then defined as:

where for each sensor \(n\), \({\text{RMSE}}_{n}\) is the predicted error, \(\mu_{n}\) is the mean predicted error and \(\sigma_{n}\) is the standard deviation of the predicted error. Ideally, if the feature vector is related to undamaged condition, then \({\text{DI}} \approx 0\); otherwise, \({\text{DI}} \ne 0\). With the determined DIs for different train passages, the receiver operating characteristic (ROC) curve can be constructed.

4.2 Evaluation of the classifier’s performance

Figure 10 shows the obtained ROC curves corresponding to different damage extents within damage case 1. In the presence of such a damage scenario and obtained ROC curve, for example, for a fixed FPr of 8%, we have associated TPr of 86, 90.7, 92, 96 and 99.3% with damage severities of 20, 40, 70, 100 and 160, respectively. Similarly, Fig. 11 depicts the ROC curve of the system in the manifestation of damage case 2. It is important to note that each ROC curve is related to a unique damage scenario.

The anticipated enhancement in detection capability with increasing damage is, however, not always verified. There are actually some operating points of DI that make the system more apt to detect damage in the presence of smaller rather than larger damage. For instance, one can see in Fig. 10 that the performance of the classifier for very low values of FPr is worse in the presence of the largest damage (blue line 160 cm), since it is related to a lower TPr, when compared to any other smaller damage. The fact that some ROC curves respecting different extents of damage intersect each other at certain points makes it more difficult to choose the best threshold. Furthermore, the process encompasses statistical reasoning, thus yielding slightly different results every time a ROC is regenerated.

In any case, the desired virtues of a good model, such as reliability and robustness, are proven by the above presented ROC curves. The model is considered reliable if damage is early detected with a high probability of detection, i.e. if small damage is efficiently identified. The model is considered robust if two conditions are satisfied: the probability of detection increases with increasing damage severity and changing an input parameter by a small amount does not lead to failure or unacceptable variation of the outcome but rather to proportional small changes.

The confidence with which the system is able to identify the presence of damage depends on the time strategy [28] defined for the SHM system. In principle, the more measurement data are gathered the more one will be certain about the outcome of the system, whether it points out abnormal structural behavior or not. The proposed algorithm performs an evaluation of the structural condition of the bridge following each new train passage and, for that reason, the algorithm is expected to be trustworthy over long periods of time. For a better understanding of the Bayes’ theorem here implemented, the theorem will be illustrated with an example (Table 5) where other possibilities of Bayesian inference concerning different thresholds and event labelling can be found. Let’s assume that four train passages are evaluated and that all make the system point out damage. The assumed a priori likelihood of damage is \(p\left( d \right) = 10^{ - 6}\) [29]. Keeping in mind, the ROC curve presented in Fig. 11, a detection threshold associated with 86% of TPs and 2% of FPs is selected. Replacing these values in (Eq. 4), we find that the a posteriori probability of damage is 77.4%. For instance, considering the same threshold, if we evaluate nine train passages and, by chance, eight out of the nine points out damage, then one will be very sure that damage is present in the structure as the a posteriori probability of damage is very close to 100%.

5 Conclusions and future research

The methodology proposed in this paper provides a rational fashion for enhancing the damage diagnosis strategy for damaged structures, allowing for both improvements in safety and reduction of bridge management cost. The method proposes the use of past recorded deck accelerations in the bridge as input to an Artificial Neural Network that, after being properly trained, is able to predict forthcoming accelerations. The difference between the measured value and the value predicted by the network will work as a primary indicator that damage may exist. This study comprises the statistical evaluation of the prediction errors of the network by means of a Gaussian Process, after which one can select the detection threshold in regard to a Damage Index. Based on the selected threshold, the expected total cost associated with the damage detection strategy can be calculated. Within the interval of viable thresholds, the optimal will be the one that yields the lowest cost. From the attained results, it is possible to derive some general conclusions:

-

lower vehicle speed seems to overall provide measurements that enable better predictions by the trained network, in the sense that the prediction errors in both healthy and damaged structural condition are inferior than for higher speeds;

-

the two sensors placed in the middle of the bridge seem to be the most efficient in the discrimination between healthy and damaged data, apparently disregarding where in the bridge damage takes place. This may be explained by the fact that the response of the simply supported bridge is emphasized at half-span;

-

the ROC curves associated with scenarios where damage is more severe generally present a superior trade-off TP/FP, since to conserve the same probability of TP one needs to accept an inferior probability of FP, when compared to less severe damage;

-

the ideal threshold for the damage detection system will be the one that yields the lowest expected total cost regarding the detection process, where the costs related with false detection have particular impact.

The proposed method has although some weaknesses that can be tackled with additional research. This could concern the study of environmental and operational effects on the damage detection process—other relevant parameters than accelerations may be given as input to the neural networks, such as temperature measurements. The consideration of these will almost certainly produce networks with higher prediction accuracy, making the algorithm more shielded against the influence of damage unrelated factors that can induce significant changes in the behavior of the structure. This study presents a limited number of damage scenarios. A wider range of possible locations for damage in the bridge could be considered, including multiple damage scenarios. It would also be interesting to understand the limitations of the proposed method in terms of the smallest damage that can be detected.

Regarding future research, once damage is detected, a subsequent step could be to study the correlation between measurements acquired from the different devices of the sensing system, in an attempt to pinpoint the location of damage. At the same time, optimal sensor placement could be carried out for the system to identify damage in a sufficiently accurate manner while avoiding redundancy in information. Finally, the suggested calculation of the expected total cost of the damage detection strategy is rather basic. Hence, the formulation of a more refined expression for the cost is desirable. Besides the economic considerations, some constraints may be considered, such as the minimum reliability level of the structure usually defined by the authorities or the limited time the system has to perform between each gathering of new data. In the end, it could even be possible to establish a schedule of inspection/repair based on the cost-benefit trade-off of each decision in the present and future moments.

References

Huang Y, Ludwig SA, Deng F (2016) Sensor optimization using a genetic algorithm for structural health monitoring in harsh environments. J Civ Struct Health Monit 6(3):509–519

Li J, Zhang X, Xing J, Wang P, Yang Q, He C (2015) Optimal sensor placement for long-span cable-stayed bridge using a novel particle swarm optimization algorithm. J Civ Struct Health Monit 5(5):677–685

Yi T-H, Li H-N, Wang C-W (2016) Multiaxial sensor placement optimization in structural health monitoring using distributed wolf algorithm. Struct Control Health Monit 23(4):719–734

Jin C, Jang S, Sun X, Li J, Christenson R (2016) Damage detection of a highway bridge under severe temperature changes using extended Kalman filter trained neural network. J Civ Struct Health Monit 6(3):545–560

Farrar CR, Worden K (2013) Structural health monitoring. A machine learning perspective. Wiley, Hoboken

Rao ARM, Lakshmi K (2015) Damage diagnostic technique combining POD with time-frequency analysis and dynamic quantum PSO. Meccanica 50(6):1551–1578

Diez A, Khoa NLD, Alamdari MM, Wang Y, Chen F (2016) A clustering approach for structural health monitoring on bridges. J Civ Struct Health Monit 1–17

Zhou Q, Zhou H, Zhou Q, Yang F, Luo L, Li T (2015) Structural damage detection based on posteriori probability support vector machine and Dempster-Shafer evidence theory. Appl Soft Comput 36:368–374

Gonzalez I, Karoumi R (2015) BWIM aided damage detection in bridges using machine learning. J Civ Struct Health Monit 5(5):715–725

Das S, Saha P, Patro S (2016) Vibration-based damage detection techniques used for health monitoring of structures: a review. J Civ Struct Health Monit 6(3):477–507

Figueiredo E, Figueiras J, Park G, Farrar CR, Worden K (2011) Influence of the autoregressive model order on damage detection. Comput Aid Civ Infrastruct Eng 26(3):225–238

Neves A, Simões F, Pinto da Costa A (2016) Vibrations of cracked beams: discrete mass and stiffness models. Comput Struct 168:68–77

Bandara RP, Chan TH, Thambiratnam DP (2014) Structural damage detection method using frequency response functions. Struct Health Monit 13(4):418–429

Moradipour P, Chan TH, Gallage C (2015) An improved modal strain energy method for structural damage. Struct Eng Mech 54(1):105–119

Xu YL, Xia Y (2012) Structural health monitoring of long-span suspension bridges. CRC Press, Boca Raton

MIL-HDBK-1823A (2009) Department of defense handbook. Nondestructive evaluation system reliability assessment

Fawcett T (2006) An introduction to ROC analysis. Pattern Recognit Lett 27(8):861–874

Abaqus FEA (2017) ABAQUS Inc., [Online]. http://www.3ds.com/products-services/simulia/products/abaqus/. Accessed May 2017

C.-E (1991) 1991-2, Eurocode 1: Actions on structures—part 2: traffic loads on bridges. European Committee for Standardization

White K (1992) Bridge maintenance inspection and evaluation

MATLAB (2017) The MathWorks, Inc., [Online]. hhttp://www.mathworks.com/products/matlab. Accessed May 2017

Press W, Teukolsky S, Vetterling W, Flannery B (1992) Numerical recipes in C. Cambridge University Press, Cambridge

Berry M, Linoff G (1997) Data mining techniques. Wiley, Hoboken

Blum A (1992) Neural networks in C++. Willey, Hoboken

Swingler K (1996) Applying Neural networks: a practical guide. Academic Press, London

Rasmussen C, Williams C (2006) Gaussian processes for machine learning. The MIT Press, Cambridge

Worden K, Manson G, Fieller N (2000) Damage detection using outlier analysis. J Sound Vib 229(3):647–667

Hejll A (2007) Civil structural health monitoring—strategies, methods and applications. Doctoral Thesis, Luleå University of Technology

Farrar CR, Worden K (2013) Structural health monitoring. A machine learning perspective. Wiley, Hoboken, p 368

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Neves, A.C., González, I., Leander, J. et al. Structural health monitoring of bridges: a model-free ANN-based approach to damage detection. J Civil Struct Health Monit 7, 689–702 (2017). https://doi.org/10.1007/s13349-017-0252-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13349-017-0252-5