Abstract

We propose a “NOVEL Integration of the Sample and Thresholded covariance” (NOVELIST) estimator to estimate large covariance (correlation) and precision matrices. The NOVELIST estimator performs shrinkage of the sample covariance (correlation) towards its thresholded version. The sample covariance (correlation) component is non-sparse and can be low rank in high dimensions. The thresholded sample covariance (correlation) component is sparse, and its addition ensures the stable invertibility of NOVELIST. The benefits of the NOVELIST estimator include simplicity, ease of implementation, computational efficiency and the fact that its application avoids eigenanalysis. We obtain an explicit convergence rate in the operator norm over a large class of covariance (correlation) matrices when the dimension p and the sample size n satisfy \(\log p/n\rightarrow 0\), and its improved version when \(p/n \rightarrow 0\). In empirical comparisons with several popular estimators, the NOVELIST estimator performs well in estimating covariance and precision matrices over a wide range of models and sparsity classes. Real-data applications are presented.

1 Introduction

Estimating the covariance matrix and its inverse, also known as the concentration or precision matrix, has always been an important part of multivariate analysis and arises prominently, for example, in financial risk management (Markowitz 1952; Longerstaey et al. 1996), linear discriminant analysis (Fisher 1936; Guo et al. 2007), principal component analysis (Pearson 1901; Croux and Haesbroeck 2000) and network science (Jeong et al. 2001; Gardner et al. 2003). Naturally, this is also true of the correlation matrix, and the following discussion applies to it, too. The sample covariance matrix is a straightforward and often used estimator of the covariance matrix. However, when the dimension p of the data is larger than the sample size n, the sample covariance matrix is singular. Even if p is smaller than but of the same order of magnitude as n, the number of parameters to estimate is \(p(p+1)/2\), which can significantly exceed n. In this case, the sample covariance matrix is not reliable, and alternative estimation methods are needed.

We would categorise the most commonly used alternative covariance estimators into two broad classes. Estimators in the first class rely on various structural assumptions on the underlying true covariance. One prominent example is ordered covariance matrices, often appearing in time-series analysis, spatial statistics and spatio-temporal modelling; these assume that there is a metric on the variable indices. Bickel and Levina (2008a) use banding to achieve consistent estimation in this context. Furrer and Bengtsson (2007) and Cai et al. (2010) regularise estimated ordered covariance matrices by tapering. Cai et al. (2010) derive the optimal estimation rates for the covariance matrix under the operator and Frobenius norms, a result which implies sub-optimality of the convergence rate of the banding estimator of Bickel and Levina (2008a) in the operator norm. The estimator of Cai et al. (2010) only achieves the optimal rate if the bandwidth parameter is chosen optimally; however, the optimal bandwidth depends crucially on the underlying unknown covariance matrix, and therefore, this estimator’s optimality is only oracular. The banding technique is also applied to the estimated Cholesky factorisation of the covariance matrix (Bickel and Levina 2008a; Wu and Pourahmadi 2003).

Another important example of a structural assumption on the true covariance or precision matrices is sparsity; it is often made, e.g. in the statistical analysis of genetic regulatory networks (Gardner et al. 2003; Jeong et al. 2001). El Karoui (2008) and Bickel and Levina (2008b) regularise the estimated sparse covariance matrix by universal thresholding. Adaptive thresholding, in which the threshold is a random function of the data (Cai and Liu 2011; Fryzlewicz 2013), leads to more natural thresholding rules and hence, potentially, more precise estimation. The Lasso penalty is another popular way to regularise the covariance and precision matrices (Zou 2006; Rothman et al. 2008; Friedman et al. 2008). Focusing on model selection rather than parameter estimation, Meinshausen and Bühlmann (2006) propose the neighbourhood selection method. One other commonly occurring structural assumption in covariance estimation is the factor model, often used, e.g. in financial applications. Fan et al. (2008) impose sparsity on the covariance matrix via a factor model. Fan et al. (2013) propose the POET estimator, which assumes that the covariance matrix is the sum of a part derived from a factor model, and a sparse part.

Estimators in the second broad class do not assume a specific structure of the covariance or precision matrices, but shrink the sample eigenvalues of the sample covariance matrix towards an assumed shrinkage target (Ledoit and Wolf 2012). A considerable number of shrinkage estimators have been proposed along these lines. Ledoit and Wolf (2004) derive an optimal linear shrinkage formula, which imposes the same shrinkage intensity on all sample eigenvalues but leaves the sample eigenvectors unchanged. Nonlinear shrinkage is considered in Ledoit and Péché (2011) and Ledoit and Wolf (2012, 2015). Lam (2016) introduces a Nonparametric Eigenvalue-Regularised Covariance Matrix Estimator (NERCOME) through subsampling of the data, which is asymptotically equivalent to the nonlinear shrinkage method of Ledoit and Wolf (2012). Shrinkage can also be applied to the sample covariance matrix directly. Ledoit and Wolf (2003) propose a weighted average estimator of the covariance matrix with a single-index factor target. Schäfer and Strimmer (2005) review six different shrinkage targets. Naturally related to the shrinkage approach is Bayesian estimation of the covariance and precision matrices. Evans (1965), Chen (1979) and Dickey et al. (1985) use possibly the most natural covariance matrix prior, the inverted Wishart distribution. Other notable references include Leonard and John (2012) and Alvarez et al. (2014).

The POET method of Fan et al. (2013) proposes to estimate the covariance matrix as the sum of a non-sparse, low-rank matrix coming from the factor model part, and a certain sparse matrix, added on to ensure invertibility of the resulting covariance estimator. In this paper, we are motivated by the general idea of building a covariance estimator as the sum of a non-sparse and a sparse part. By following this route, the resulting estimator can be hoped to perform well in estimating both non-sparse and sparse covariance matrices if the amount of sparsity is chosen well. At the same time, the addition of the sparse part can guarantee stable invertibility of the estimated covariance, a prerequisite for the successful estimation of the precision matrix. On the other hand, we wish to move away from the heavy modelling assumptions used by the POET estimator; indeed, our empirical results presented later suggest that POET can underperform if the factor model assumption does not hold.

Motivated by this observation, this paper proposes a simple, practically assumption-free estimator of the covariance and correlation matrices, termed NOVELIST (NOVEL Integration of the Sample and Thresholded covariance/correlation estimators). NOVELIST arises as the linear combination of two parts: the sample covariance (correlation) estimator, which is always non-sparse and has low rank if \(p > n\), and its thresholded version, which is sparse. The inclusion of the sparse thresholded part means that NOVELIST can always be made stably invertible. NOVELIST can be viewed as a shrinkage estimator where the sample covariance (correlation) matrix is shrunk towards a flexible, nonparametric, sparse target. By selecting the appropriate amount of contribution of either of the two components, NOVELIST can adapt to a wide range of underlying covariance structures, including sparse but also non-sparse ones. In the paper, we show consistency of the NOVELIST estimator in the operator norm uniformly under a class of covariance matrices introduced by Bickel and Levina (2008b), as long as \( \log p/n\rightarrow 0\), and offer an improved version of this result if \(p/n \rightarrow 0\). The benefits of the NOVELIST estimator include simplicity, ease of implementation, computational efficiency and the fact that its application avoids eigenanalysis, which is unfamiliar to some practitioners. In our simulation studies, NOVELIST performs well in estimating both covariance and precision matrices for a wide range of underlying covariance structures, benefitting from the flexibility in the selection of its shrinkage intensity and thresholding level.

The rest of the paper is organised as follows. In Sect. 2, we introduce the NOVELIST estimator and its properties. Section 3 discusses the case where the two components of the NOVELIST estimator are combined in a non-convex way. Section 4 describes the procedure for selecting its parameters. Section 5 shows empirical improvements of NOVELIST. Section 6 exhibits practical performance of NOVELIST in comparison with the state of the art. Section 7 presents real-data performance in portfolio optimisation problems and concludes the paper, and the proofs appear in the Appendix. The R package “novelist” is available on CRAN.

2 Method, motivation and properties

2.1 Notation and method

We observe n i.i.d. p-dimensional observations \(\varvec{X}_1, \ldots , \varvec{X}_n\), distributed according to a distribution F, with \(E(\varvec{X}) = 0\), \(\varSigma = \{ \sigma _{ij} \} = E(\varvec{X}\varvec{X}^{\hbox {T}})\), and \(R=\{\rho _{ij}\}=D^{-1}\varSigma D^{-1}\), where \(D=(\text {diag}(\varSigma ))^{1/2}\). In the case of heteroscedastic data, we apply NOVELIST to the sample correlation matrix and only then obtain the corresponding covariance estimator. The NOVELIST estimator of the correlation matrix is defined as

$$\begin{aligned} {\hat{R}}^{N}=(1-\delta )\ {\hat{R}}+\delta \ T({\hat{R}},\lambda ), \end{aligned}$$(1)

and the corresponding covariance estimator is defined as \({\hat{\varSigma }}^{N}={\hat{D}}{\hat{R}}^{N}{\hat{D}}\), where \({\hat{\varSigma }}=\{\hat{\sigma }_{ij}\}\) and \({\hat{R}}=\{\hat{\rho }_{ij}\}\) are the sample covariance and correlation matrices, respectively, \({\hat{D}}=(\text {diag}({\hat{\varSigma }}))^{1/2}\), \(\delta \) is the weight or shrinkage intensity, which is usually within the range [0, 1] but can also lie outside it, \(\lambda \) is the thresholding value, which is a scalar parameter in [0, 1], and \(T(\cdot ,\cdot )\) is a function that applies any generalised thresholding operator (Rothman et al. 2009) to each off-diagonal entry of its first argument, with the threshold value equal to its second argument. The generalised thresholding operator refers to any function satisfying the following conditions for all \(z\in \mathbb {R}\), (i) \(\mid T(z, \lambda )\mid \le \mid z\mid \); (ii) \(T(z, \lambda )=0\) for \(\mid z\mid \le \lambda \); (iii) \(\mid T(z, \lambda )-z\mid \le \lambda \). Typical examples of T include soft thresholding \(T_s\) with \(T(z, \lambda )=(z-\text {Sign}(z)\lambda )\mathbb {1}(\mid z\mid >\lambda )\), hard thresholding \(T_h\) with \(T(z, \lambda )=z\mathbb {1}(\mid z\mid >\lambda )\) and SCAD (Fan and Li 2001). Note that \({\hat{\varSigma }}^{N}\) can also be written directly as a NOVELIST estimator with a \(p\times p\) adaptive threshold matrix \(\varLambda \), \({\hat{\varSigma }}^{N}=(1-\delta )\ {\hat{\varSigma }}+\delta \ T({\hat{\varSigma }},\varLambda )\), where \(\varLambda =\{\lambda \hat{\sigma }_{ii}\hat{\sigma }_{jj}\}\).
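
The definition above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation in the R package “novelist”: the function names `soft_threshold`, `novelist_corr` and `novelist_cov` are hypothetical, soft thresholding is used for T, and `np.cov` centres the data and divides by n - 1, whereas the text assumes mean-zero observations.

```python
import numpy as np

def soft_threshold(r, lam):
    """Soft-threshold the off-diagonal entries of a square matrix r at level lam."""
    t = np.sign(r) * np.maximum(np.abs(r) - lam, 0.0)
    np.fill_diagonal(t, np.diag(r))  # the diagonal is left untouched
    return t

def novelist_corr(r_hat, lam, delta):
    """Linear combination (1 - delta) * R_hat + delta * T(R_hat, lam)."""
    return (1.0 - delta) * r_hat + delta * soft_threshold(r_hat, lam)

def novelist_cov(x, lam, delta):
    """Covariance estimate D_hat R_hat^N D_hat from an (n, p) data array x."""
    sigma_hat = np.cov(x, rowvar=False)        # assumption: centred, n - 1 divisor
    d_hat = np.sqrt(np.diag(sigma_hat))        # sample standard deviations
    r_hat = sigma_hat / np.outer(d_hat, d_hat)
    return novelist_corr(r_hat, lam, delta) * np.outer(d_hat, d_hat)

# Illustrative data only: 50 observations of 5 independent standard normals.
rng = np.random.default_rng(0)
x = rng.standard_normal((50, 5))
sigma_n = novelist_cov(x, lam=0.3, delta=0.5)
```

Setting `delta=0` returns the sample matrix and `delta=1` returns its thresholded version; the diagonal is the same for every \(\delta \) and \(\lambda \).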

Fig. 1 Left: illustration of NOVELIST operators for any off-diagonal entry of the correlation matrix \(\hat{\rho }_{ij}\) with soft thresholding target \(T_s\) (\(\lambda =0.5\), \(\delta =0.1\), 0.5 and 0.9). Right: ranked eigenvalues of NOVELIST plotted versus ranked eigenvalues of the sample correlation matrix

NOVELIST is a shrinkage estimator, in which the shrinkage target is assumed to be sparse. The degree of shrinkage is controlled by the \(\delta \) parameter and the amount of sparsity in the target by the \(\lambda \) parameter. Numerical results shown in Fig. 1 suggest that the eigenvalues of the NOVELIST estimator arise as a certain nonlinear transformation of the eigenvalues of the sample correlation (covariance) matrix, although the application of NOVELIST avoids explicit eigenanalysis.

2.2 Motivation: link to ridge regression

In this section, we show how the NOVELIST estimator can arise in a penalised solution to the linear regression problem, which is linked to ridge regression. For linear regression \(Y={\tilde{\varvec{X}}}\beta +\varepsilon \), the traditional OLS solution \(({\tilde{\varvec{X}}}^{\hbox {T}}{\tilde{\varvec{X}}})^{-1}{\tilde{\varvec{X}}}^{\hbox {T}}Y\) cannot be used if \(p>n\) because of the non-invertibility of \({\tilde{\varvec{X}}}^{\hbox {T}}{\tilde{\varvec{X}}}\). The OLS solution rewrites as \([(1-\delta ){\tilde{\varvec{X}}}^{\hbox {T}}{\tilde{\varvec{X}}}+\delta {\tilde{\varvec{X}}}^{\hbox {T}}{\tilde{\varvec{X}}}]^{-1}{\tilde{\varvec{X}}}^{\hbox {T}}Y\), where \(\delta \in [0,1]\). Using this as a starting point, we consider a regularised solution

$$\begin{aligned} \hat{\beta }=\left[ (1-\delta ){\tilde{\varvec{X}}}^{\hbox {T}}{\tilde{\varvec{X}}}+\delta f({\tilde{\varvec{X}}}^{\hbox {T}}{\tilde{\varvec{X}}})\right] ^{-1}{\tilde{\varvec{X}}}^{\hbox {T}}Y=A^{-1}{\tilde{\varvec{X}}}^{\hbox {T}}Y, \end{aligned}$$(2)

where \(f({\tilde{\varvec{X}}}^{\hbox {T}}{\tilde{\varvec{X}}})\) is any elementwise modification of the matrix \({\tilde{\varvec{X}}}^{\hbox {T}}{\tilde{\varvec{X}}}\) designed (a) to make A invertible and (b) to ensure adequate estimation of \(\beta \). The expression in (2) is the minimiser of a generalised ridge regression criterion

where \(\delta \) acts as a tuning parameter. If \(f({\tilde{\varvec{X}}}^{\hbox {T}}{\tilde{\varvec{X}}})= I\), (3) is reduced to ridge regression and A is the shrinkage estimator with the identity matrix target. If \(f({\tilde{\varvec{X}}}^{\hbox {T}}{\tilde{\varvec{X}}})=T({\tilde{\varvec{X}}}^{\hbox {T}}{\tilde{\varvec{X}}},\lambda \hat{\sigma }_{ii}\hat{\sigma }_{jj})\), A is the NOVELIST estimator of the covariance matrix.
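
To make the link between the regularised solution and a ridge-type criterion concrete, the following worked step checks that a quadratic objective of this kind is minimised by \(A^{-1}{\tilde{\varvec{X}}}^{\hbox {T}}Y\). The exact form of the objective is an assumption made here for illustration, chosen so that its stationary point reproduces the regularised solution; we write \(F=f({\tilde{\varvec{X}}}^{\hbox {T}}{\tilde{\varvec{X}}})\), assumed symmetric, and \(A=(1-\delta ){\tilde{\varvec{X}}}^{\hbox {T}}{\tilde{\varvec{X}}}+\delta F\), assumed positive definite.

```latex
\begin{aligned}
L(\beta) &= (1-\delta)\,\Vert Y-\tilde{X}\beta\Vert_2^2
            + \delta\,\bigl(\beta^{T}F\beta - 2\,Y^{T}\tilde{X}\beta\bigr) \\
         &= (1-\delta)\,Y^{T}Y - 2\,Y^{T}\tilde{X}\beta + \beta^{T}A\,\beta, \\
\nabla_{\beta}L(\beta) &= -2\,\tilde{X}^{T}Y + 2A\beta = 0
  \;\Longrightarrow\; \hat{\beta} = A^{-1}\tilde{X}^{T}Y .
\end{aligned}
```

The penalty enters through \(\beta ^{T}F\beta =\sum _{i,j}F_{ij}\beta _i\beta _j\): with \(F=I\) it is the usual ridge penalty \(\sum _i\beta _i^2\), while with a thresholded F only those pairs (i, j) whose entry survives thresholding contribute.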

From formula (3), NOVELIST penalises the regression coefficients in a pairwise manner which can be interpreted as follows: for a given threshold \(\lambda \), we place a penalty on the products \(\beta _i\beta _j\) of those coefficients of \(\beta \) for which the sample correlation between \({\tilde{\varvec{X}}}_i\) and \({\tilde{\varvec{X}}}_j\), the ith and jth column of \({\tilde{\varvec{X}}}\) (respectively), exceeds \(\lambda \). In other words, if the sample correlation is high, we penalise the product of the corresponding \(\beta \)’s, hoping that the resulting estimated \(\beta _i\) and \(\beta _j\) are not simultaneously large.

2.3 Asymptotic properties of NOVELIST

2.3.1 Consistency of the NOVELIST estimators

In this section, we establish consistency of NOVELIST in the operator norm and derive the rates of convergence under different scenarios. Bickel and Levina (2008b) introduce a uniformity class of covariance matrices invariant under permutations as

$$\begin{aligned} {\mathcal {U}}(q,c_0(p),M,\epsilon _0)=\Big\{ \varSigma : \sigma _{ii}\le M,\ \sum _{j=1}^{p}|\sigma _{ij}|^{q}\le c_0(p)\ \text {for all } i,\ \lambda _{\min }(\varSigma )\ge \epsilon _0>0\Big\} , \end{aligned}$$(4)

where \(0\le q <1\), \(c_0\) is a function of p, the parameters M and \(\epsilon _0\) are constants, and \(\lambda _{\min }()\) is the smallest eigenvalue operator. Analogously, we define a uniformity class of correlation matrices as

$$\begin{aligned} {\mathcal {V}}(q,s_0(p),\varepsilon _0)=\Big\{ R: \sum _{j=1}^{p}|\rho _{ij}|^{q}\le s_0(p)\ \text {for all } i,\ \lambda _{\min }(R)\ge \varepsilon _0>0\Big\} , \end{aligned}$$(5)

where \(0\le q <1\) and \(\varepsilon _0\) is a constant. The parameters q and \(s_0(p)\) (equiv. \(c_0(p)\)) together control the permitted degree of “sparsity” of the members of the given class. In the remainder of the paper, where it does not cause confusion, we mostly work with \(s_0(p)\) rather than \(c_0(p)\), noting that these two parameterisations are equivalent.

Next, we establish consistency of the NOVELIST estimator in the operator norm, \(\mid \mid A\mid \mid _2^2=\lambda _{\max }(AA^{\hbox {T}})\), where \(\lambda _{\max }()\) is the largest eigenvalue operator.
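
As a quick numerical illustration of this norm, the sketch below computes it directly from the definition and compares it with the largest singular value returned by NumPy. The function name `operator_norm` and the example matrix are hypothetical.

```python
import numpy as np

def operator_norm(a):
    """Square root of the largest eigenvalue of a a^T, as in the definition."""
    return float(np.sqrt(np.linalg.eigvalsh(a @ a.T).max()))

a = np.array([[2.0, 0.0], [1.0, 1.0]])
norm_from_eig = operator_norm(a)
norm_from_svd = float(np.linalg.norm(a, 2))  # largest singular value of a
```

For a symmetric matrix, this norm is the largest absolute eigenvalue, which is why it is the natural error measure when the inverse of the estimator is also of interest.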

Proposition 1

Let \( F \) satisfy \(\int _{0}^{\infty } \exp (\gamma t)dG_j(t)<\infty \) for \(0< | \gamma | <\gamma _0\), where \(\gamma _0>0\) and \(G_j\) is the cdf of \(X^2_{1j}\). Let \(R=\{\rho _{ij}\}\) and \(\varSigma =\{\sigma _{ij}\}\) be the true correlation and covariance matrices with \(1\le i, j\le p\), and \(\sigma _{ii}\le M\), where \(M > 0\). Then, uniformly on \({\mathcal {V}}(q,s_0(p),\varepsilon _0)\), for sufficiently large \(M'\), if \(\lambda =M'\sqrt{ \log p/n}\) and \(\log p/n=o(1)\),

$$\begin{aligned} \mid \mid {\hat{R}}^{N}-R\mid \mid _2=\underbrace{O_p\Big ((1-\delta )\,p\sqrt{\log p/n}\Big )}_{(A)}+\underbrace{O_p\Big (\delta \,s_0(p)(\log p/n)^{(1-q)/2}\Big )}_{(B)}. \end{aligned}$$(6)

Proposition 2

Let the length-p column vector \(\varvec{X}_i\) satisfy the sub-Gaussian condition \(P(|v^{\hbox {T}} \varvec{X}_i| > t) \le \exp (-t^2 \rho / 2)\) for a certain \(\rho > 0\), for all \(t > 0\) and \(\Vert v\Vert _2 = 1\). Let \(R=\{\rho _{ij}\}\) and \(\varSigma =\{\sigma _{ij}\}\) be the true correlation and covariance matrices with \(1\le i, j\le p\), and \(\sigma _{ii}\le M\), where \(M > 0\). Then, uniformly on \({\mathcal {V}}(q,s_0(p),\varepsilon _0)\), for sufficiently large \(M'\), if \(\lambda =M'\sqrt{ \log p/n}\) and \(p=o(n)\),

$$\begin{aligned} \mid \mid {\hat{R}}^{N}-R\mid \mid _2=\underbrace{O_p\Big ((1-\delta )\sqrt{(p+\log n)/n}\Big )}_{(A)}+\underbrace{O_p\Big (\delta \,s_0(p)(\log p/n)^{(1-q)/2}\Big )}_{(B)}. \end{aligned}$$(7)

The proofs are given in the Appendix. The NOVELIST estimators of the correlation and covariance matrices and their inverses yield the same convergence rate.

We now discuss the optimal asymptotic \({\delta }\) under the settings of Propositions 1 and 2. Proposition 1 can be thought of as a “large p” setting, while Proposition 2 applies to moderately large and small p.

2.3.2 Optimal \(\delta \) and rate of convergence in Proposition 1

Proposition 1 corresponds to “large p” scenarios, in which p can be thought of as being O(n) or larger (indeed, the case \(p = o(n)\) is covered by Proposition 2). For such a large p, the pre-condition for the consistency of the NOVELIST estimator is that \(\delta \rightarrow 1\), i.e. that the estimator asymptotically degenerates to the thresholding estimator. To see this, take \(p = n^{1+\varDelta }\) with \(\varDelta \ge 0\). If \(\delta \not \rightarrow 1\), the error in part (A) of formula (6) would be of order \(n^{1/2+\varDelta }\sqrt{\log \,{n^{1+\varDelta }}}\) and therefore would not converge to zero.

Focusing on \({\hat{R}}^N\) without loss of generality, the optimal rate of convergence is obtained by equating parts (A) and (B) in formula (6). The resulting optimal shrinkage intensity \( {\tilde{\delta }}\) is

$$\begin{aligned} {\tilde{\delta }}=\frac{(\log p/n)^{q/2}}{s_0(p)/p+(\log p/n)^{q/2}}. \end{aligned}$$(8)

In typical scenarios, bearing in mind that p is at least of order n or larger, and that \(q < 1\), the term \(s_0(p)/p\) will tend to zero much faster than the term \((\log p / n)^{q/2}\), which will result in \({\tilde{\delta }} \rightarrow 1\) and in the rate of convergence of NOVELIST being \(O_p(s_0(p) (\log \, p / n)^{(1-q)/2})\). Examples of such scenarios are given directly below.
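
The balancing step behind \({\tilde{\delta }}\) can be written out as follows. The sketch assumes that part (A) is of order \((1-\delta )\,p\,(\log p/n)^{1/2}\) and part (B) of order \(\delta \,s_0(p)(\log p/n)^{(1-q)/2}\); these forms are inferred from the orders quoted in this section and should be read as an assumption.

```latex
\begin{aligned}
(1-\delta)\,p\,(\log p/n)^{1/2} &= \delta\,s_0(p)\,(\log p/n)^{(1-q)/2}, \\
\frac{1-\delta}{\delta} &= \frac{s_0(p)}{p}\,(\log p/n)^{-q/2}, \\
\tilde{\delta} &= \frac{(\log p/n)^{q/2}}{s_0(p)/p + (\log p/n)^{q/2}} .
\end{aligned}
```

When \(s_0(p)/p\) is negligible relative to \((\log p/n)^{q/2}\), the denominator is dominated by its second term and \({\tilde{\delta }}\rightarrow 1\), so that only part (B) remains.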

Scenario 1

\(q=0\), \(s_0(p)=o((n/\log \,p)^{1/2})\).

When \(q=0\), the uniformity class of correlation matrices controls the maximum number of nonzero entries in each row. The typical example is the moving-average (MA) autocorrelation structure in time series.

Scenario 2

\(q\ne 0\), \(s_0(p)\le C\) as \(p\rightarrow \infty \).

A typical example of this scenario is the auto-regressive (AR) autocorrelation structure.

We now show a scenario in which NOVELIST is inconsistent, under the setting of Proposition 1. Consider the long-memory autocorrelation matrix, \(\rho _{ij} \sim \, \mid i-j\mid ^{-\alpha }\), \(0 < \alpha \le 1\), for which \(s_0(p) = \max _{1\le i\le p} \sum _{j=1}^p \max (1, |i-j|) ^{-\alpha q} = O(p^{1-\alpha q})\). Take \(q \ne 0\). Note a sufficient condition for \({\tilde{\delta }}\) to tend to 1 is that \((\log \,p)^{1/2} n^{-1/2} p^\alpha \rightarrow \infty \). This more easily happens for larger \(\alpha \)’s, i.e. for “less long”-memory processes. However, considering the implied rate of convergence, we have \(s_0(p)(\log \,p / n)^{(1-q)/2} = p^{1-\alpha q} (\log \,p / n)^{(1-q)/2}\), which is divergent even if \(\alpha = 1\).
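
The divergence can be checked explicitly in the most favourable case \(\alpha =1\), using the parameterisation \(p=n^{1+\varDelta }\), \(\varDelta \ge 0\), introduced at the start of this section:

```latex
\begin{aligned}
p^{1-q}\,(\log p/n)^{(1-q)/2}
  &= n^{(1+\varDelta)(1-q)}\; n^{-(1-q)/2}\; (\log p)^{(1-q)/2} \\
  &= n^{(1-q)(1/2+\varDelta)}\,(\log p)^{(1-q)/2} \;\longrightarrow\; \infty ,
\end{aligned}
```

since \(q<1\) makes the exponent of n strictly positive. Smaller values of \(\alpha \) only increase \(p^{1-\alpha q}\).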

2.3.3 Optimal \(\delta \) and rate of convergence in Proposition 2

Similarly, in the setting of Proposition 2, the resulting optimal shrinkage intensity \( {\tilde{\delta }}\) is

$$\begin{aligned} {\tilde{\delta }}=\frac{(p+\log n)^{1/2}}{s_0(p)(\log p)^{(1-q)/2}n^{q/2}+(p+\log n)^{1/2}}. \end{aligned}$$(9)

We now highlight a few special-case scenarios.

Scenario 3

p fixed (and hence \(q = 0\)).

Note that in the case of p being fixed or bounded in n, one can take \(q = 0\) (to obtain as fast a rate for the thresholding part as possible) as the implied \(s_0(p)\) will also be bounded in n. In this case, we have \({\tilde{\delta }} \rightarrow 1\) (and hence NOVELIST degenerates to the thresholding estimator with its corresponding speed of convergence), but the speed at which \({\tilde{\delta }}\) approaches 1 is extremely slow (\(O(\log ^{-1/2}n)\)).

Scenario 4

\(p \rightarrow \infty \) with n, and \(q = 0\).

In this case, the quantity \(\{ (p + \log \,n) / \log \,p \}^{1/2}\) acts as a transition phase: if \(s_0(p)\) is of a larger order, then we have \({\tilde{\delta }}\rightarrow 0\); if it is of a smaller order, then \({\tilde{\delta }} \rightarrow 1\); if it is of this order and if \({\tilde{\delta }}\) has a limit, then its limit lies in (0, 1). Therefore, NOVELIST will be closer to the sample covariance (correlation) if the truth is dense (i.e. if \(s_0(p)\) is large), and closer to the thresholding estimator if \(s_0(p)\) is small.

Scenario 5

\(p \rightarrow \infty \) with n, and \(q \ne 0\).

Here, the transition-phase quantity is \(\frac{(p + \log \,n)^{1/2}}{(\log \,p)^\frac{1-q}{2}n^{q/2}}\) and conclusions analogous to those of the preceding Scenario can be formed.
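
The behaviour of \({\tilde{\delta }}\) around the transition phase can be explored numerically. The sketch below balances parts (A) and (B) under assumed forms of the two error terms, inferred from the orders quoted in Sects. 2.3.2 and 2.3.3 with all constants set to one; the function name `delta_tilde` and the numerical values are hypothetical.

```python
import math

def delta_tilde(n, p, s0, q, regime):
    """Value of delta solving (1 - delta) * A = delta * B for the assumed orders A and B."""
    part_b = s0 * (math.log(p) / n) ** ((1.0 - q) / 2.0)
    if regime == "prop1":        # assumed order of part (A) when only log p / n -> 0
        part_a = p * math.sqrt(math.log(p) / n)
    elif regime == "prop2":      # assumed order of part (A) when p = o(n)
        part_a = math.sqrt((p + math.log(n)) / n)
    else:
        raise ValueError("regime must be 'prop1' or 'prop2'")
    return part_a / (part_a + part_b)

n, p = 1000, 50
transition = math.sqrt((p + math.log(n)) / math.log(p))    # Scenario 4 quantity (q = 0)
delta_sparse = delta_tilde(n, p, s0=1.0, q=0.0, regime="prop2")
delta_dense = delta_tilde(n, p, s0=50.0, q=0.0, regime="prop2")
delta_at_transition = delta_tilde(n, p, s0=transition, q=0.0, regime="prop2")
```

With \(s_0(p)\) well below the transition quantity the value is closer to one, with \(s_0(p)\) well above it the value is closer to zero, and at the transition quantity itself the two parts balance exactly.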

In the context of Scenario 5, we now revisit the long-memory example from before. The most “difficult” case still included in the setting of Proposition 2 is when p is “almost” the size of n; therefore, we assume \(p = n^{1-\varDelta }\), with \(\varDelta \) being a small positive constant. Neglecting the logarithmic factors, the transition-phase quantity \(\frac{(p + \log \,n)^{1/2}}{(\log \,p)^\frac{1-q}{2}n^{q/2}}\) reduces to \(n^\frac{1-\varDelta -q}{2}\). We have \(s_0(p) = O(n^{(1-\varDelta )(1-\alpha q)})\), and therefore \(s_0(p)\) is of a larger order than \(n^\frac{1-\varDelta -q}{2}\) if \(\alpha < \frac{1-\varDelta +q}{2q(1-\varDelta )}\); in this case, \({\tilde{\delta }} \rightarrow 0\), and the NOVELIST estimator degenerates to the sample covariance (correlation) estimator, which is consistent in this setting at the rate of \(n^{-\varDelta /2}\) (neglecting the log-factors). The other case, \(\alpha \ge \frac{1-\varDelta +q}{2q(1-\varDelta )}\), is impossible as we must have \(\alpha \le 1\). Therefore, the NOVELIST estimator is consistent for the long-memory model under the setting of Proposition 2, i.e. when \(p = o(n)\) (and degenerates to the sample covariance estimator). This is in contrast to the setting of Proposition 1, where, as argued before, the consistency of NOVELIST in the long-memory model cannot be shown.
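
The threshold on \(\alpha \) quoted above, and the fact that it always exceeds one, follow from comparing the exponents of n for \(0<q<1\) and \(0<\varDelta <1\):

```latex
\begin{aligned}
(1-\varDelta)(1-\alpha q) > \frac{1-\varDelta-q}{2}
  &\iff 2(1-\varDelta) - 2\alpha q(1-\varDelta) > 1-\varDelta-q \\
  &\iff \alpha < \frac{1-\varDelta+q}{2q(1-\varDelta)}, \\[4pt]
\frac{1-\varDelta+q}{2q(1-\varDelta)} - 1
  &= \frac{(1-q)(1-\varDelta) + q\varDelta}{2q(1-\varDelta)} \;>\; 0 .
\end{aligned}
```

Hence every \(\alpha \le 1\) lies strictly below the threshold.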

3 \(\delta \) outside [0, 1]

Some authors (Ledoit and Wolf 2003; Schäfer and Strimmer 2005; Savic and Karlsson 2009), more or less explicitly, discuss the issue of the shrinkage intensity (for other shrinkage estimators) falling within versus outside the interval [0, 1]. Ledoit and Wolf (2003) “expect” it to lie between zero and one, Schäfer and Strimmer (2005) truncate it at zero or one, and Savic and Karlsson (2009) view negative shrinkage as a “useful signal for possible model misspecification”. We are interested in the performance of the NOVELIST estimator with \(\delta \not \in [0,1]\) and have reasons to believe that \(\delta \not \in [0,1]\) may be a good choice in certain scenarios.

We use the diagrams below to briefly illustrate this point. When the target T is appropriate, the “oracle” NOVELIST estimator (by which we mean one where \(\delta \) is computed with the knowledge of the true R by minimising the spectral norm distance to R) will typically be in the convex hull of \(\hat{ R}\) and T, i.e. \(\delta \in [0,1]\) as shown in the left graph. However, the target may also be misspecified. For example, if the true correlation matrix is highly non-sparse, the sparse target may be inappropriate, to the extent that R will be further away from T than from \(\hat{R}\), as shown in the middle graph. In that case, the optimal \(\delta \) should be negative to prevent NOVELIST being close to the target. By contrast, when the sample correlation is far from the (sparse) truth, perhaps because of high dimensionality, the optimal \(\delta \) may be larger than one, as shown in the right graph (Diagram 1).

Diagram 1 Geometric illustration of shrinkage estimators. R is the truth, T is the target, \({\hat{ R}}\) is the sample correlation, \({{\hat{R}}}^N_{opt}\) is the “oracle” NOVELIST estimator defined as the linear combination of T and \({\hat{ R}}\) with minimum spectral norm distance to R. Left: \(\delta \in (0,1)\) if target T is appropriate; middle: \(\delta <0\) if target T is misspecified; right: \(\delta >1\) if \({\hat{ R}}\) is far from R
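
The three cases can be reproduced on a toy example. The sketch below finds the “oracle” \(\delta \) by a grid search over an interval that is deliberately wider than [0, 1]; the function name `oracle_delta`, the grid and the 2 × 2 matrices are hypothetical and chosen only so that the answer can be checked by hand.

```python
import numpy as np

def oracle_delta(r_true, r_hat, target, grid=None):
    """Grid value of delta minimising ||(1 - delta) r_hat + delta target - r_true||_2."""
    if grid is None:
        grid = np.linspace(-1.0, 2.0, 301)   # step 0.01, wider than [0, 1]
    errors = [np.linalg.norm((1 - d) * r_hat + d * target - r_true, 2) for d in grid]
    return float(grid[int(np.argmin(errors))])

def corr2(rho):
    """2 x 2 correlation matrix with off-diagonal entry rho."""
    return np.array([[1.0, rho], [rho, 1.0]])

r_hat = corr2(0.6)
target = corr2(0.1)          # soft thresholding of 0.6 at lambda = 0.5
delta_dense = oracle_delta(corr2(0.8), r_hat, target)    # truth denser than the sample
delta_sparse = oracle_delta(corr2(0.0), r_hat, target)   # truth sparser than the target
```

Here the combined off-diagonal entry is \(0.6-0.5\,\delta \), so matching a true value of 0.8 requires a negative \(\delta \), while matching a true value of 0 requires \(\delta >1\).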

4 Empirical choices of \((\lambda , \delta )\) and algorithm

The choices of the shrinkage intensity (for shrinkage estimators) and the thresholding level (for thresholding estimators) are intensively studied in the literature. Bickel and Levina (2008b) propose a cross-validation method for choosing the threshold value for their thresholding estimator. However, NOVELIST requires simultaneous selection of the two parameters \(\lambda \) and \(\delta \), which makes straight cross-validation computationally intensive. Ledoit and Wolf (2003) and Schäfer and Strimmer (2005) give an analytic solution to the problem of choosing the optimal shrinkage level, under the Frobenius norm, for any shrinkage estimator. Since NOVELIST can be viewed as a shrinkage estimator, we borrow strength from this result and proceed by selecting the optimal shrinkage intensity \(\delta ^*(\lambda )\) in the sense of Ledoit and Wolf (2003) for each \(\lambda \), and then perform cross-validation to select the best pair \((\lambda ', \delta ^*(\lambda '))\). This process significantly accelerates computation.

Cai and Liu (2011) and Fryzlewicz (2013) use adaptive thresholding for covariance matrices, in order to make thresholding insensitive to changes in the variance of the individual variables. This, effectively, corresponds to thresholding sample correlations rather than covariances. In the same vein, we apply NOVELIST to sample correlation matrices. We use soft thresholding as it often exhibits better and more stable empirical performance than hard thresholding, which is partly due to its being a continuous operation. Let \({\hat{\varSigma }}\) and \({\hat{R}}\) be the sample covariance and correlation matrices computed on the whole dataset, and let \(T=\{T_{ij}\}\) be the soft thresholding estimator of the correlation matrix. The algorithm proceeds as follows.

For estimating the covariance matrix,

LW (Ledoit–Wolf) step Using all available data, for each \(\lambda \in (0,1)\) chosen from a uniform grid of size m, find the optimal empirical \(\delta \) as

$$\begin{aligned} \delta ^*(\lambda )=\frac{\sum _{i\ne j}\{\widehat{\text {Var}}(\hat{\rho }_{ij})-\widehat{\text {Cov}}(\hat{\rho }_{ij},T_{ij})\}}{\sum _{i\ne j}(T_{ij}-\hat{\rho }_{ij})^2}=\frac{\sum _{i\ne j}\widehat{\text {Var}}(\hat{\rho }_{ij})\,\mathbb {1}(\mid \hat{\rho }_{ij}\mid \le \lambda )}{\sum _{i\ne j}(T_{ij}-\hat{\rho }_{ij})^2}, \end{aligned}$$

to obtain the pair \((\lambda ,\delta ^*(\lambda ))\).

The first equality comes from Ledoit and Wolf (2003), and the second follows because of the fact that our shrinkage target T is the soft thresholding estimator with threshold \(\lambda \) (applied to the off-diagonal entries only).
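
A possible implementation of the LW step is sketched below. It is an illustration under assumptions rather than the code of the “novelist” package: the function name `lw_delta` is hypothetical, the shrinkage intensity is taken to be the sum of estimated variances of those off-diagonal \(\hat{\rho }_{ij}\) that the soft threshold sets to zero, divided by \(\sum _{i\ne j}(T_{ij}-\hat{\rho }_{ij})^2\), and the variance of each \(\hat{\rho }_{ij}\) is estimated in the manner of Schäfer and Strimmer (2005), which is an assumed choice.

```python
import numpy as np

def lw_delta(x, lam):
    """Empirical delta*(lam) for a soft-thresholded correlation target (assumed form)."""
    n, p = x.shape
    z = (x - x.mean(axis=0)) / x.std(axis=0, ddof=1)    # standardised columns
    w = z[:, :, None] * z[:, None, :]                   # w[k, i, j] = z_ki * z_kj
    r_hat = w.sum(axis=0) / (n - 1)                     # sample correlation matrix
    # Assumed variance estimate for each rho_hat_ij (Schaefer-Strimmer type).
    var_r = n / (n - 1) ** 3 * ((w - w.mean(axis=0)) ** 2).sum(axis=0)
    off = ~np.eye(p, dtype=bool)                        # off-diagonal mask
    t = np.sign(r_hat) * np.maximum(np.abs(r_hat) - lam, 0.0)
    num = var_r[off & (np.abs(r_hat) <= lam)].sum()     # entries thresholded to zero
    den = ((t - r_hat)[off] ** 2).sum()
    return float(num / den) if den > 0 else 0.0

# Illustrative data only: 200 observations of 4 independent standard normals.
rng = np.random.default_rng(0)
x = rng.standard_normal((200, 4))
delta_half = lw_delta(x, 0.5)
```

The array `w` has size n × p × p, so this direct version is only practical for moderate p. No truncation to [0, 1] is applied, in line with Sect. 3.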

CV (Cross-validation) step For each \(z = 1, \ldots , Z\), split the data randomly into two equal-size parts A (training data) and B (test data), letting \({\hat{\varSigma }}_{A}^{(z)}\) and \({\hat{\varSigma }}_{B}^{(z)}\) be the sample covariance matrices of these two datasets, and \({\hat{R}}_{A}^{(z)}\) and \({\hat{R}}_{B}^{(z)}\) – the sample correlation matrices.

1. For each \(\lambda \), obtain the NOVELIST estimator of the correlation matrix \({\hat{R}}^{N^{(z)}}_A(\lambda )={\hat{R}}^{N}({\hat{R}}^{(z)}_{A}, \lambda , \delta ^*(\lambda ))\), and of the covariance matrix \({\hat{\varSigma }}^{N^{(z)}}_A(\lambda )={\hat{D}}_A{\hat{R}}^{N^{(z)}}_A(\lambda ){\hat{D}}_A\), where \({\hat{D}}_A=(\text {diag}({\hat{\varSigma }}^{(z)}_{A}))^{1/2}\).

2. Compute the spectral norm error \({\text {Err}}(\lambda )^{(z)}=\mid \mid {\hat{\varSigma }}^{N^{(z)}}_A(\lambda )-{\hat{\varSigma }}_{B}^{(z)}\mid \mid ^2_2\).

3. Repeat steps 1 and 2 for each z and obtain the averaged error \({\text {Err}}(\lambda )=\frac{1}{Z}\sum _{z=1}^{Z} {\text {Err}}(\lambda )^{(z)}\). Find \({\lambda }'=\arg \min _{\lambda }{\text {Err}}(\lambda )\), then obtain the optimal pair \(({\lambda }', {\delta }')=({\lambda }', \delta ^*({\lambda }')).\)

4. Compute the cross-validated NOVELIST estimators of the correlation and covariance matrices as

$$\begin{aligned} {\hat{R}}^{N}_{cv}&={\hat{R}}^{N}({\hat{R}}, \lambda ^{'}, \delta ^{'}), \end{aligned}$$(13)

$$\begin{aligned} {\hat{\varSigma }}^{N}_{cv}&={\hat{D}}{\hat{R}}^{N} _{cv}{\hat{D}}, \end{aligned}$$(14)

where \({\hat{D}}=(\text {diag}({\hat{\varSigma }}))^{1/2}.\)
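
Steps 1 to 4 of the CV step can be sketched as follows. This is a minimal illustration, not the packaged implementation: the names `novelist_cov` and `cv_select` are hypothetical, the values \(\delta ^*(\lambda )\) are assumed to have been computed beforehand in the LW step and are passed in as the array `delta_star`, and the constant values used in the usage lines are placeholders.

```python
import numpy as np

def novelist_cov(x, lam, delta):
    """NOVELIST covariance estimate with a soft-thresholded correlation target."""
    sigma = np.cov(x, rowvar=False)
    d = np.sqrt(np.diag(sigma))
    r = sigma / np.outer(d, d)
    t = np.sign(r) * np.maximum(np.abs(r) - lam, 0.0)
    np.fill_diagonal(t, 1.0)                       # threshold off-diagonal entries only
    return ((1 - delta) * r + delta * t) * np.outer(d, d)

def cv_select(x, lambdas, delta_star, n_splits=20, seed=0):
    """Select lambda' by averaged squared spectral-norm error over random half splits."""
    rng = np.random.default_rng(seed)
    n = x.shape[0]
    err = np.zeros(len(lambdas))
    for _ in range(n_splits):                      # z = 1, ..., Z
        perm = rng.permutation(n)
        a, b = x[perm[: n // 2]], x[perm[n // 2:]] # training part A, test part B
        sigma_b = np.cov(b, rowvar=False)
        for i, lam in enumerate(lambdas):
            diff = novelist_cov(a, lam, delta_star[i]) - sigma_b
            err[i] += np.linalg.norm(diff, 2) ** 2
    best = int(np.argmin(err / n_splits))
    final = novelist_cov(x, lambdas[best], delta_star[best])  # refit on all data
    return lambdas[best], delta_star[best], final

rng = np.random.default_rng(1)
x = rng.standard_normal((60, 4))
lambdas = np.linspace(0.1, 0.9, 5)
delta_star = np.full(5, 0.5)                       # placeholder for the LW-step values
lam_cv, delta_cv, sigma_cv = cv_select(x, lambdas, delta_star, n_splits=5)
```

Because \(\delta \) is fixed for each \(\lambda \) before cross-validation starts, the search is one-dimensional, which is the source of the computational saving described above.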

For estimating the inverses of the correlation and the covariance matrices, the difference lies in step 2, where the error measure is adjusted as follows. If \(n>2p\) (i.e. in the case when \({\hat{\varSigma }}_{B}^{(z)}\) is invertible), we use the measure \({\text {Err}}(\lambda )^{(z)}=\,\mid \mid ({\hat{\varSigma }}^{N^{(z)}}_A(\lambda ))^{-1}-({\hat{\varSigma }}_{B}^{(z)})^{-1}\mid \mid ^2_2\); otherwise, use \({\text {Err}}(\lambda )^{(z)}=\,\mid \mid ({\hat{\varSigma }}^{N^{(z)}}_A(\lambda ))^{-1}{\hat{\varSigma }}_{B}^{(z)}-\mathcal {I}\mid \mid ^2_2\), where \(\mathcal {I}\) is the identity matrix. In step 4, we compute the cross-validated NOVELIST estimators of the inverted correlation and covariance matrices as

$$\begin{aligned} ({\hat{R}}^{N}_{cv})^{-1}&=({\hat{R}}^{N}({\hat{R}}, \lambda ^{'}, \delta ^{'}))^{-1}, \end{aligned}$$(15)

$$\begin{aligned} ({\hat{\varSigma }}^{N}_{cv})^{-1}&={\hat{D}}^{-1}({\hat{R}}^{N}_{cv})^{-1}{\hat{D}}^{-1}. \end{aligned}$$(16)

We note that a closely related procedure for choosing \(\delta \) has also been described in Lam and Feng (2017).
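
The adjusted error measure used when the target is the precision matrix can be sketched as below. The function name `precision_cv_error` is hypothetical; `n` denotes the full sample size, so that the test half B contains n/2 observations.

```python
import numpy as np

def precision_cv_error(sigma_a_novelist, sigma_b, n, p):
    """Squared spectral-norm error for the inverse, switching on whether n > 2p."""
    inv_a = np.linalg.inv(sigma_a_novelist)
    if n > 2 * p:
        # The sample covariance of the test half is invertible: compare inverses.
        diff = inv_a - np.linalg.inv(sigma_b)
    else:
        # Otherwise measure how far inv(A-estimate) @ sigma_b is from the identity.
        diff = inv_a @ sigma_b - np.eye(p)
    return float(np.linalg.norm(diff, 2) ** 2)

s = np.array([[2.0, 0.5], [0.5, 1.0]])
err_large_n = precision_cv_error(s, s, n=100, p=2)
err_small_n = precision_cv_error(s, s, n=3, p=2)
```

Both branches return zero when the two matrices coincide, which gives a simple sanity check.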

5 Empirical improvements of NOVELIST

5.1 Fixed parameters

As shown in the simulation study of Sect. 6.2, the performance of cross-validation is generally adequate, except in estimating large precision matrices with highly non-sparse covariance structures, such as in factor models and long-memory autocovariance structures. To remedy this problem, we suggest that fixed, rather than cross-validated, parameters be used if the eigenanalysis of the sample correlation matrix indicates that there are prominent principal components, when \(p>2n\) or close. We suggest the following rules of thumb: first, we look for the evidence of “elbows” in the scree plot of eigenvalues, by examining if \(\sum _{k=1}^{p-2} \mathbb {I}\{\gamma _{(k)}+\gamma _{(k+2)}-2\gamma _{(k+1)}>0.1p\}>0\), where \(\gamma _{(k)}\) is the kth largest eigenvalue of the sample correlation matrix, i.e. the variance of the kth principal component. If so, then we look for the evidence of long-memory decay, by examining if the off-diagonals of the sample correlation matrix follow a high-kurtosis distribution. If the sample kurtosis \(\le 3.5\), this suggests that the factor structure may be present, and we use the fixed parameters \((\lambda '',\delta '')=(0.90,0.50)\); if the sample kurtosis \(>3.5\), this may point to long memory, and we use the fixed parameters \((\lambda '',\delta '')=(0.50,0.25)\). The above decision procedure, including all the specific parameter values, has been obtained through extensive numerical experiments not shown in this paper. It is sketched in the following flowchart (Flowchart 1).
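
The rule of thumb can be written as a short function. The sketch assumes that “sample kurtosis” means the non-excess fourth standardised moment (so that a normal distribution scores 3) and that all off-diagonal entries are used; the function name `fixed_parameters` and the one-factor example are hypothetical, and the check on whether p is close to or above 2n is left to the caller.

```python
import numpy as np

def fixed_parameters(r_hat):
    """Return (lambda'', delta'') from the rule of thumb, or None if no elbow is found."""
    p = r_hat.shape[0]
    gamma = np.sort(np.linalg.eigvalsh(r_hat))[::-1]       # eigenvalues, largest first
    second_diff = gamma[:-2] + gamma[2:] - 2.0 * gamma[1:-1]
    if not np.any(second_diff > 0.1 * p):
        return None                                         # keep cross-validated values
    off = r_hat[~np.eye(p, dtype=bool)]                     # off-diagonal correlations
    centred = off - off.mean()
    kurtosis = np.mean(centred ** 4) / np.mean(centred ** 2) ** 2
    return (0.90, 0.50) if kurtosis <= 3.5 else (0.50, 0.25)

# One-factor style correlation matrix: a single dominant eigenvalue.
b = np.linspace(0.5, 0.9, 10)
r_factor = np.outer(b, b)
np.fill_diagonal(r_factor, 1.0)
params = fixed_parameters(r_factor)
no_elbow = fixed_parameters(np.eye(10))
```

A return value of `None` signals that the cross-validated pair from Sect. 4 should be used instead.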

5.2 Principal-component-adjusted NOVELIST

NOVELIST can further benefit from any prior knowledge about the underlying covariance matrix, such as the factor model structure. If the underlying correlation matrix follows a factor model, we can decompose the sample correlation matrix as

$$\begin{aligned} {\hat{R}}=\sum _{k=1}^{K}\hat{\gamma }_{(k)}\hat{\xi }_{(k)}\hat{\xi }_{(k)}^{\hbox {T}}+{\hat{R}}_{\hbox {rem}}, \end{aligned}$$(17)

where \(\hat{\gamma }_{(k)}\) and \(\hat{\xi }_{(k)}\) are the kth eigenvalue and eigenvector of sample correlation matrix, K is the number up to which the principal components are considered to be “large” and \({\hat{R}}_{\hbox {rem}}\) is the sample correlation matrix after removing the first K principal components. Instead of applying NOVELIST on \({\hat{R}}\) directly, we keep the first K components unchanged and only apply NOVELIST to \({\hat{R}}_{\hbox {rem}}\). Principal-component-adjusted NOVELIST estimators are obtained by

$$\begin{aligned} {\hat{R}}^{N}_{\hbox {rem}}&=(1-\delta )\ {\hat{R}}_{\hbox {rem}}+\delta \ T({\hat{R}}_{\hbox {rem}},\lambda ), \\ {\hat{R}}^{N}_{\hbox {PC}}&=\sum _{k=1}^{K}\hat{\gamma }_{(k)}\hat{\xi }_{(k)}\hat{\xi }_{(k)}^{\hbox {T}}+{\hat{R}}^{N}_{\hbox {rem}}. \end{aligned}$$(18)

In the remainder of the paper, we always use the not-necessarily-optimal value \(K = 1\). We suggest that PC-adjusted NOVELIST should only be used with prior knowledge or if empirical testing indicates that there are prominent principal components.
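
The principal-component adjustment can be sketched as follows, assuming soft thresholding for T and thresholding of the off-diagonal entries of the remainder only. The function name `pc_adjusted_novelist` is hypothetical.

```python
import numpy as np

def pc_adjusted_novelist(r_hat, lam, delta, k=1):
    """Keep the top-k principal components of r_hat and apply NOVELIST to the remainder."""
    if k < 1:
        raise ValueError("k must be at least 1")
    gamma, xi = np.linalg.eigh(r_hat)                 # eigenvalues in ascending order
    top_vecs = xi[:, -k:]
    top = (top_vecs * gamma[-k:]) @ top_vecs.T        # sum of gamma_k xi_k xi_k^T
    r_rem = r_hat - top                               # remainder after removing top k
    t = np.sign(r_rem) * np.maximum(np.abs(r_rem) - lam, 0.0)
    np.fill_diagonal(t, np.diag(r_rem))               # diagonal is not thresholded
    return top + (1.0 - delta) * r_rem + delta * t

r = np.array([[1.0, 0.6, -0.2], [0.6, 1.0, 0.1], [-0.2, 0.1, 1.0]])
r_pc = pc_adjusted_novelist(r, lam=0.3, delta=0.7, k=1)
```

With `delta=0` the function returns the sample correlation matrix unchanged, and for any \(\delta \) the unit diagonal is preserved.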

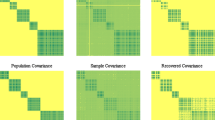

6 Simulation study

In this section, we investigate the performance of the NOVELIST estimator of covariance and precision matrices based on optimal and data-driven choices of \((\lambda , \delta )\) for seven different models and in comparison with five popular competitors. According to the algorithm in Sect. 4, the NOVELIST estimator of the correlation is obtained first; the corresponding estimator of the covariance follows by formula (13) and the inverse of the covariance estimator is obtained by formula (16). In all simulations, the sample size \(n=100\), and the dimension \(p \in \{10, 100, 200, 500\}\). We perform \(N=50\) repetitions.
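The evaluation loop behind the reported averages can be sketched as follows. The sketch assumes Gaussian data and a generic `estimator` callable mapping an \(n\times p\) data matrix to a \(p\times p\) estimate; both the function names and the Gaussian sampling are illustrative assumptions.

```python
import numpy as np

def average_operator_error(estimator, sigma, n=100, reps=50, seed=0):
    """Average operator-norm error of `estimator` over `reps` simulated samples."""
    rng = np.random.default_rng(seed)
    p = sigma.shape[0]
    errors = []
    for _ in range(reps):
        # Draw n observations from N(0, sigma); rows are observations.
        X = rng.multivariate_normal(np.zeros(p), sigma, size=n)
        # ord=2 gives the spectral (operator) norm of the error matrix.
        errors.append(np.linalg.norm(estimator(X) - sigma, ord=2))
    return float(np.mean(errors))

def sample_covariance(X):
    """Reference estimator: the usual sample covariance matrix."""
    return np.cov(X, rowvar=False)
```

Any of the competing estimators can be passed in place of `sample_covariance`, provided it has the same signature.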

6.1 Simulation models

We use the following models for \(\varSigma \).

(A) \(\textit{Identity}\) \(\sigma _{ij}=\mathbb {I}\{i=j\}\), for \(1\le i,j\le p\).

(B) \(\textit{MA(1) autocovariance structure}\)

for \(1\le i,j\le p\). We set \(\rho =0.5\).

(C) \(\textit{AR(1) autocovariance structure}\)

with \(\rho = 0.9\).
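As an illustration, models (B) and (C) can be generated as below. The sketch assumes the standard forms \(\sigma _{ij}=\rho ^{|i-j|}\mathbb {I}\{|i-j|\le 1\}\) for MA(1) and \(\sigma _{ij}=\rho ^{|i-j|}\) for AR(1); the function names are hypothetical.

```python
import numpy as np

def lag_matrix(p):
    """Matrix of absolute index differences |i - j|."""
    idx = np.arange(p)
    return np.abs(idx[:, None] - idx[None, :])

def ma1_covariance(p, rho=0.5):
    """MA(1): unit diagonal, rho on the first off-diagonals, zero elsewhere."""
    lag = lag_matrix(p)
    return np.where(lag <= 1, rho ** lag, 0.0)

def ar1_covariance(p, rho=0.9):
    """AR(1): entries decay geometrically in |i - j|."""
    return rho ** lag_matrix(p)
```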

(D) \(\textit{Non-sparse covariance structure}\) We generate a positive definite matrix as

where Q has iid standard normal entries and \(\varLambda \) is a diagonal matrix with its diagonal entries drawn independently from the \(\chi ^2_5\) distribution. The resulting \(\varSigma \) is non-sparse and lacks an obvious pattern.
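A possible way to generate model (D) is sketched below, assuming the construction \(\varSigma =Q\varLambda Q^{\hbox {T}}\) with Q and \(\varLambda \) as described; the function name and the seeding are illustrative.

```python
import numpy as np

def nonsparse_covariance(p, seed=0):
    """Dense positive definite matrix Q Lambda Q^T with random Q and Lambda."""
    rng = np.random.default_rng(seed)
    Q = rng.standard_normal((p, p))               # iid N(0, 1) entries
    Lam = np.diag(rng.chisquare(df=5, size=p))    # chi-squared(5) diagonal
    return Q @ Lam @ Q.T
```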

(E) \(\textit{Factor model covariance structure}\) Let \(\varSigma \) be the covariance matrix of \(\mathbf {X}=\{X_1, X_2, \ldots , X_p\}^{\hbox {T}}\), which follows a three-factor model

\[\mathbf {X}=\mathbf {B}\mathbf {Y}+\mathbf {E},\]

where

\(\mathbf {Y}=\{Y_1, Y_2, Y_3\}^{\hbox {T}}\) is a three-dimensional factor, generated independently from the standard normal distribution, i.e. \(\mathbf {Y}\sim \mathbf {\mathcal {N}(0,\mathcal {I}_3)}\),

\(\mathbf {B}=\{\beta _{ij}\}\) is the coefficient matrix, \(\beta _{ij}\overset{i.i.d.}{\sim }U(0,1)\), \(1\le i \le p\), \(1\le j \le 3\),

\(\mathbf {E}=\{\epsilon _1, \epsilon _2,\ldots , \epsilon _p\}^{\hbox {T}}\) is p-dimensional random noise, generated independently from the standard normal distribution, \(\mathbf {\epsilon }\sim \mathbf {\mathcal {N}(0,1)}\).

Based on this model, we have \(\sigma _{ij}={\left\{ \begin{array}{ll} \sum _{k=1}^{3} \beta _{ik}^2+1 &{} \text {if} \ i= j;\\ \sum _{k=1}^{3} \beta _{ik}\beta _{jk} &{} \text {if} \ i\ne j. \end{array}\right. }\).
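The closed form for \(\sigma _{ij}\) is equivalent to \(\varSigma =\mathbf {B}\mathbf {B}^{\hbox {T}}+\mathcal {I}_p\), which the following sketch uses directly. The function name and the choice to return the loadings alongside \(\varSigma \) are illustrative.

```python
import numpy as np

def factor_model_covariance(p, n_factors=3, seed=0):
    """Covariance of X = B Y + E with U(0, 1) loadings and unit-variance noise."""
    rng = np.random.default_rng(seed)
    B = rng.uniform(0.0, 1.0, size=(p, n_factors))
    # Factors and noise are independent standard normal, so
    # Cov(X) = B B^T + I_p.
    sigma = B @ B.T + np.eye(p)
    return sigma, B
```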

(F) \(\textit{Long-memory autocovariance structure}\) We use the autocovariance matrix of the fractional Gaussian noise (FGN) process, with

The model is taken from Bickel and Levina (2008a), Sect. 6.1, and is non-sparse. We take \(H=0.9\) in order to investigate the case with strong long memory.
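For illustration, the FGN autocovariance matrix can be built as below, assuming the standard form \(\sigma _{ij}=\frac{1}{2}\left[ (|i-j|+1)^{2H}-2|i-j|^{2H}+\big ||i-j|-1\big |^{2H}\right] \); the function name is hypothetical.

```python
import numpy as np

def fgn_covariance(p, H=0.9):
    """Autocovariance matrix of fractional Gaussian noise with Hurst index H."""
    idx = np.arange(p)
    lag = np.abs(idx[:, None] - idx[None, :]).astype(float)
    return 0.5 * ((lag + 1) ** (2 * H)
                  - 2 * lag ** (2 * H)
                  + np.abs(lag - 1) ** (2 * H))
```

Setting \(H=0.5\) recovers the identity matrix, since the three terms cancel at every non-zero lag.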

(G) \(\textit{Seasonal covariance structure}\)

where \(\mathbb {Z}_{\ge 0}\) is the set of non-negative integers. We take \(l=3\) and \(\rho =0.9\).
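A sketch of model (G) follows, assuming the form \(\sigma _{ij}=\rho ^{|i-j|}\mathbb {I}\{|i-j|\in l\mathbb {Z}_{\ge 0}\}\), so that only lags that are multiples of l carry non-zero covariance; the function name is hypothetical.

```python
import numpy as np

def seasonal_covariance(p, l=3, rho=0.9):
    """Non-zero entries rho^|i-j| only where |i-j| is a multiple of l."""
    idx = np.arange(p)
    lag = np.abs(idx[:, None] - idx[None, :])
    return np.where(lag % l == 0, rho ** lag, 0.0)
```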

The models can be broadly divided into three groups: (A)–(C) and (G) are sparse, (D) is non-sparse, and (E) and (F) are highly non-sparse. In models (B), (C), (F) and (G), the covariance matrix equals the correlation matrix. In order to depart from the case of equal variances, we also work with modified versions of these models, denoted by (B*), (C*), (F*) and (G*), in which the correlation matrix \(\{\rho _{ij}\}\) is generated as in (B), (C), (F) and (G), respectively, and which have unequal variances independently generated as \(\sigma _{ii}\sim \chi ^2_{5}\). As a result, in the “starred” models, we have \(\sigma _{ij}=\rho _{ij}\sqrt{\sigma _{ii}\sigma _{jj}}\), \(1\le i,j\le p\).
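The “starred” construction amounts to rescaling a correlation matrix by random standard deviations, as in the sketch below. The function name and seeding are illustrative.

```python
import numpy as np

def heteroscedastic_version(R, seed=0):
    """Turn a correlation matrix R into a covariance with chi-squared(5) variances."""
    rng = np.random.default_rng(seed)
    variances = rng.chisquare(df=5, size=R.shape[0])
    scale = np.sqrt(variances)
    # sigma_ij = rho_ij * sqrt(sigma_ii * sigma_jj)
    return R * np.outer(scale, scale)
```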

The performance of the competing estimators is presented in two parts. In the first part, we compare the estimators with optimal parameters identified with the knowledge of the true covariance matrix. These include (a) the soft thresholding estimator \(T_s\), which applies the soft thresholding operator to the off-diagonal entries of \({\hat{R}}\) only, as described in Sect. 2.1, (b) the banding estimator B [Sect. 2.1 in Bickel and Levina (2008a)], (c) the optimal NOVELIST estimator \({\hat{\varSigma }}^{N}_{opt}\) and (d) the optimal PC-adjusted NOVELIST estimator \(\hat{\varSigma }^N_{\hbox {opt.rem}}\). In the second part, we compare the data-driven estimators, including (e) the linear shrinkage estimator S [Target D in Table 2 from Schäfer and Strimmer (2005)], which estimates the correlation matrix by “shrinkage of the sample correlation towards the identity matrix” and estimates the variances by “shrinkage of the sample variances towards their median”, (f) the POET estimator P (Fan et al. 2013), (g) the cross-validated NOVELIST estimator \({\hat{\varSigma }}^{N}_{cv}\), (h) the PC-adjusted NOVELIST \(\hat{\varSigma }^N_{\hbox {rem}}\) and (i) the nonlinear shrinkage estimator NS (Ledoit and Wolf 2015). The sample covariance matrix \({\hat{\varSigma }}\) is also listed for reference. We use the R package \(\textit{corpcor}\) to compute S and the R package \(\textit{POET}\) to compute P. In the latter, we use \(k=7\) as suggested by the authors. We use soft thresholding in both NOVELIST and POET, as it tends to offer better empirical performance. We use \(Z=50\) for \({\hat{\varSigma }}^{N}_{cv}\) and extend the interval for \(\delta \) to \([-0.5, 1.5]\). \({\hat{\varSigma }}^{N}_{cv}\) with fixed parameters is only considered for estimating the precision matrix under models (E), (F) and (F*) when \(p=100, 200, 500\). We use \(K=1\) for \(\hat{\varSigma }^N_{\hbox {opt.rem}}\) and \(\hat{\varSigma }^N_{\hbox {rem}}\). NS is computed using the commercial package SNOPT for Matlab (Ledoit and Wolf 2015).

6.2 Simulation results

Performance of \({\hat{\varSigma }}^N\) as a function of \((\lambda , \delta )\) Examining the results presented in Figs. 2 and 3 and Table 1, it is apparent that the performance of NOVELIST depends on the combinations of \(\lambda \) and \(\delta \) used. Generally speaking, the average operator norm errors increase as sparsity decreases and dimension p increases. The positions of empirically optimal \(\lambda ^*\) and \(\delta ^*\) are summarised as follows.

1. The higher the degree of sparsity, the closer \(\delta ^*\) is to 1. The \(\delta ^*\) parameter tends to be close to 1 or slightly larger than 1 for the sparse group, around 0.5 for the non-sparse group and about 0 or negative for the highly non-sparse group.

2. \(\delta ^*\) moves closer to 1 as p increases. This is especially true for the sparse group.

3. Unsurprisingly, the choice of \(\lambda \) is less important when \(\delta \) is closer to 0.

4. Occasionally, \(\delta ^* \not \in [0,1]\). In particular, for the AR(1) and seasonal models, \(\delta ^* \in (1, 1.5]\), while in the highly non-sparse group, \(\delta ^*\) can take negative values, which is a reflection of the fact that \(\hat{\varSigma }^N_{\hbox {opt}}\) attempts to reduce the effect of the strongly misspecified sparse target.

Fig. 4 Fifty replicated cross-validation choices of \((\delta ^{'},\lambda ^{'})\) (green circles) against the background of contour lines of operator norm distances to \(\varSigma \) under models (a), (c), (d) and (f) [equivalent to Figs. 2 and 3], \(n=100\), \(p=10\ \text {(Left)}, 100\ \text {(Middle)}, 200\ \text {(Right)}\). The area inside the first contour line contains all combinations of \((\lambda , \delta )\) for which \(\Vert {\hat{\varSigma }}^N(\lambda , \delta )-\varSigma \Vert \) is in the 1st decile of \([\underset{(\lambda , \delta )}{\text {min}}\Vert {\hat{\varSigma }}^N(\lambda , \delta )-\varSigma \Vert , \underset{(\lambda , \delta )}{\text {max}}\Vert {\hat{\varSigma }}^N(\lambda , \delta )-\varSigma \Vert ]\).

Performance of cross-validated choices of \((\lambda , \delta )\) Table 1 shows that the cross-validated choices of the parameters \((\lambda ^{'}, \delta ^{'})\) for \({\hat{\varSigma }}^N_{cv}\) are close to the optimal \(( \lambda ^{*}, \delta ^{*})\) for most models when \(p=10\), but the discrepancies between \((\lambda ^{'}, \delta ^{'})\) and \(( \lambda ^{*}, \delta ^{*})\) grow as p increases, especially for the highly non-sparse group. Similarly, Fig. 4, which only includes representative models from each sparsity category, shows that the choices of \((\lambda ^{'}, \delta ^{'})\) are consistent with \(( \lambda ^{*}, \delta ^{*})\) in most of the cases. For models (A) and (C), cross-validation works very well: the vast majority of \((\lambda ^{'}, \delta ^{'})\) lead to an error lying in the 1st decile of the possible error range, whereas for models (D) and (G) with \(p = 10\), the error lies in the 1st or 2nd decile.

However, as shown in Tables 4 and 5, cross-validation performs less well in estimating \(\varSigma ^{-1}\) under highly non-sparse covariance structures, such as factor models and long-memory autocovariance structures (a remedy for this was described in Sect. 5.1).

Comparison with competing estimators For the estimators with the optimal parameters (Tables 2, 3), NOVELIST performs the best for \(p=10\) for both \(\varSigma \) and \(\varSigma ^{-1}\) and beats the competitors across the non-sparse and highly non-sparse model classes when \(p=100\), 200 and 500. The banding estimator beats NOVELIST in covariance matrix estimation in the homoscedastic sparse models by a small margin in the higher-dimensional cases. For the identity matrix, banding, thresholding and the optimal NOVELIST attain the same results. Optimal PC-adjusted NOVELIST achieves better relative results for estimating \(\varSigma ^{-1}\) than for \(\varSigma \).

In the competitions based on the data-driven estimators (Tables 4, 5), when \(p=10\), the cross-validated NOVELIST is the best for most of the models with heteroscedastic variances and only slightly worse than the linear or nonlinear shrinkage estimators for the other models. When \(p=100\), 200 or 500, the cross-validated NOVELIST is the best for most of the models in the sparse and the non-sparse groups (more so for heteroscedastic models) for both \(\varSigma \) and \(\varSigma ^{-1}\), but is beaten by POET for the factor model and the FGN model by a small margin, and is slightly worse than nonlinear shrinkage for homoscedastic sparse models. However, POET underperforms for the sparse and non-sparse models for \(\varSigma \), and nonlinear shrinkage does worse than NOVELIST for heteroscedastic sparse models. The cases where the cross-validated NOVELIST performs the worst are rare. NOVELIST with fixed parameters as in Flowchart 1 for highly non-sparse cases improves the results for \(\varSigma ^{-1}\). PC-adjusted NOVELIST can further improve the results for estimating \(\varSigma ^{-1}\) but not for \(\varSigma \). We would argue that NOVELIST is the overall best performer, followed by nonlinear shrinkage, linear shrinkage and POET.

7 Portfolio selection

In this section, we apply the NOVELIST algorithm and the competing methods to share portfolios composed of the constituents of the FTSE 100 index. Similar competitions were previously conducted to compare the performance of different covariance matrix estimators (Ledoit and Wolf 2003; Lam 2016). We compare the performance for risk minimisation purposes. The data were provided by Bloomberg.

Daily returns Our first dataset consists of \(p=85\) stocks of FTSE 100 (we removed all those constituents that contained missing values) and 2606 daily returns \(\{r_t\}\) for the period 1 January 2005 to 31 December 2015. We use data from the first \(n=120\) days to estimate the initial covariance matrices of the returns based on six different competing covariance matrix estimators and create six portfolios with weights given by the well-known weight formula

where \({\hat{\varSigma }}^{(120)}_t\) is an estimator of the \(p\times p\) covariance matrix of the past 120-trading-day returns on trading day t (i.e. computed over days \(t-119\) to t) and \(\mathbf {1}_p\) is the column vector of p ones. We hold these portfolios for the next 22 trading days and compute their out-of-sample standard deviations as (Ledoit and Wolf 2003)

which is a measure of risk. On the 23rd day, we liquidate the portfolios and start the process all over again based on the past 120 trading days. The dataset is composed of 113 instances of such 22-trading-day blocks, and the average STD of each portfolio is computed.

5-min returns The second dataset consists of \(p=100\) constituents of FTSE 100 and 13,770 5-min returns \(\{y_t\}\) for the period 2 March 2015 to 4 September 2015 (135 trading days). The procedure is similar to the one above, and only the differences are explained here. We use the first 2 days (\(n=204\)) to estimate the initial covariance matrices of the returns and create portfolios with weights given by

where \({\hat{\varSigma }}^{(204)}_t\) is an estimator of the \(p\times p\) covariance matrix of the 5-min returns over the past 204 data points (2 days) at trading time t. We hold them for the next day and the out-of-sample standard deviations are calculated by

We rebalance the portfolios every day and compute the sum of the out-of-sample STDs over the 133 trading days.
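The rolling design used for both datasets can be sketched as below. The sketch assumes that the weights are the global minimum-variance weights \({\hat{\varSigma }}^{-1}\mathbf {1}_p/(\mathbf {1}_p^{\hbox {T}}{\hat{\varSigma }}^{-1}\mathbf {1}_p)\) and that risk is measured by the plain sample standard deviation of the realised portfolio returns in each holding block; any rescaling of the STD, the function names and the `estimator` callable are illustrative assumptions.

```python
import numpy as np

def minimum_variance_weights(sigma_hat):
    """Global minimum-variance weights; they sum to one by construction."""
    ones = np.ones(sigma_hat.shape[0])
    x = np.linalg.solve(sigma_hat, ones)   # Sigma^{-1} 1 without explicit inverse
    return x / (ones @ x)

def out_of_sample_std(weights, future_returns):
    """Sample standard deviation of realised portfolio returns."""
    return float(np.std(future_returns @ weights, ddof=1))

def rolling_backtest(returns, estimator, window=120, hold=22):
    """Estimate on the past `window` rows, hold for `hold` rows, then repeat."""
    stds = []
    start = window
    while start + hold <= returns.shape[0]:
        sigma_hat = estimator(returns[start - window:start])
        w = minimum_variance_weights(sigma_hat)
        stds.append(out_of_sample_std(w, returns[start:start + hold]))
        start += hold
    return stds
```

With 2606 daily returns, `window=120` and `hold=22`, the loop produces 113 holding blocks, whose STDs are averaged; with 13,770 5-min returns, `window=204` and `hold=102` (one trading day), it produces 133 blocks, whose STDs are summed.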

Following the advice from Sect. 5.1, we apply fixed parameters for both NOVELIST and PC-adjusted NOVELIST. Table 6 shows the results. NOVELIST has the lowest risk for both daily and 5-min portfolios, followed by PC-adjusted NOVELIST and nonlinear shrinkage in the low-frequency case and by POET and nonlinear shrinkage in the high-frequency case. In summary, NOVELIST offers the best option in terms of risk minimisation.

8 Discussion

Like many other covariance (correlation) matrix estimators which incorporate thresholding, the NOVELIST estimator is not guaranteed to be positive definite in finite samples. To remedy this, our advice is similar to other authors’ (e.g. Cai et al. 2010; Fan et al. 2013; Bickel and Levina 2008b): we propose to diagonalise the NOVELIST estimator and replace any eigenvalues that fall under a certain small positive threshold by the value of that threshold. How to choose the threshold is, of course, an important matter, and we do not believe there is a generally accepted solution in the literature, partly because the value of the “best” such threshold will necessarily be problem-dependent. Denoting the corrected estimator by \({\hat{\varSigma }}^N(\zeta )\) (in the covariance case) and \({\hat{R}}^N(\zeta )\) (in the correlation case), where \(\zeta \) is the eigenvalue threshold, one possibility would be to choose the lowest possible \(\zeta \) for which the matrix \({\hat{\varSigma }}^N ({\hat{\varSigma }}^N(\zeta ))^{-1}\) (and analogously for the correlation case) resembles the identity matrix, in a certain user-specified sense.
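The eigenvalue correction itself is short; a minimal sketch is given below. The function name is hypothetical, and the choice of \(\zeta \) is left to the user, as discussed above.

```python
import numpy as np

def floor_eigenvalues(S, zeta):
    """Replace eigenvalues of the symmetric matrix S below zeta by zeta."""
    S = 0.5 * (S + S.T)                      # guard against rounding asymmetry
    vals, vecs = np.linalg.eigh(S)
    return (vecs * np.maximum(vals, zeta)) @ vecs.T
```

A matrix whose eigenvalues already exceed \(\zeta \) is returned unchanged up to rounding, so the correction only acts when it is needed.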

We also note that either part of the NOVELIST estimator can be replaced by a banding-type estimator, for example, as defined by Cai et al. (2010). In this way, we would depart from the particular construction of the NOVELIST estimator towards the more general idea of using convex combinations of two (or more) covariance estimators, which is conceptually and practically appealing but lies outside the scope of the current work.

To summarise, the flexible control of the degree of shrinkage and thresholding offered by NOVELIST means that it is able to offer competitive performance across most models, and in situations in which it is not the best, it tends not to be much worse than the best performer. We recommend NOVELIST as a simple, good all-round covariance, correlation and precision matrix estimator ready for practical use across a variety of models and data dimensionalities.

References

Alvarez I, Niemi J, Simpson M (2014) Bayesian inference for a covariance matrix. Preprint

Bickel P, Levina E (2008a) Regularized estimation of large covariance matrices. Ann Stat 36:199–227

Bickel P, Levina E (2008b) Covariance regularization by thresholding. Ann Stat 36:2577–2604

Cai T, Liu W (2011) Adaptive thresholding for sparse covariance matrix estimation. J Am Stat Assoc 106:672–684

Cai TT, Zhang C, Zhou HH (2010) Optimal rates of convergence for covariance matrix estimation. Ann Stat 38:2118–2144

Chen C (1979) Bayesian inference for a normal dispersion matrix and its application to stochastic multiple regression analysis. J R Stat Soc Ser B 41:235–248

Croux C, Haesbroeck G (2000) Principal component analysis based on robust estimators of the covariance or correlation matrix: influence functions and efficiencies. Biometrika 87:603–618

Dickey JM, Lindley DV, Press SJ (1985) Bayesian estimation of the dispersion matrix of a multivariate normal distribution. Commun Stat Theory Methods 14:1019–1034

El Karoui N (2008) Operator norm consistent estimation of large-dimensional sparse covariance matrices. Ann Stat 36:2717–2756

Evans IG (1965) Bayesian estimation of parameters of a multivariate normal distribution. J R Stat Soc Ser B 27:279–283

Fan J, Li R (2001) Variable selection via nonconcave penalized likelihood and its oracle properties. J Am Stat Assoc 96:1348–1360

Fan J, Fan Y, Lv J (2008) High dimensional covariance matrix estimation using a factor model. J Econ 147:186–197

Fan J, Liao Y, Mincheva M (2013) Large covariance estimation by thresholding principal orthogonal complements. J R Stat Soc Ser B 75:603–680

Fisher RA (1936) The use of multiple measurements in taxonomic problems. Ann Eugen 7:179–188

Friedman J, Hastie T, Tibshirani R (2008) Sparse inverse covariance estimation with the graphical lasso. Biostatistics 9:432–441

Fryzlewicz P (2013) High-dimensional volatility matrix estimation via wavelets and thresholding. Biometrika 100:921–938

Furrer R, Bengtsson T (2007) Estimation of high-dimensional prior and posteriori covariance matrices in Kalman filter variants. J Multivar Anal 98:227–255

Gardner TS, di Bernardo D, Lorenz D, Collins JJ (2003) Inferring genetic networks and identifying compound mode of action via expression profiling. Science 301:102–105

Golub GH, Van Loan CF (1989) Matrix computations, 2nd edn. Johns Hopkins University Press, Baltimore

Guo YQ, Hastie T, Tibshirani R (2007) Regularized linear discriminant analysis and its application in microarrays. Biostatistics 8:86–100

Jeong H, Mason SP, Barabási A-L, Oltvai ZN (2001) Lethality and centrality in protein networks. Nature 411:41–42

Lam C (2016) Nonparametric eigenvalue-regularized precision or covariance matrix estimation. Ann Stat 44:928–953

Lam C, Feng P (2017) Integrating regularized covariance matrix estimators. Preprint

Ledoit O, Péché S (2011) Eigenvectors of some large sample covariance matrix ensembles. Probab Theory Relat Fields 151:233–264

Ledoit O, Wolf M (2003) Improved estimation of the covariance matrix of stock returns with an application to portfolio selection. J Empir Finance 10:603–621

Ledoit O, Wolf M (2004) A well-conditioned estimator for large-dimensional covariance matrices. J Multivar Anal 88:365–411

Ledoit O, Wolf M (2012) Nonlinear shrinkage estimation of large-dimensional covariance matrices. Ann Stat 40:1024–1060

Ledoit O, Wolf M (2015) Spectrum estimation: a unified framework for covariance matrix estimation and PCA in large dimensions. J Multivar Anal 139:360–384

Leonard T, John SJH (2012) Bayesian inference for a covariance matrix. Ann Stat 20:1669–1696

Longerstaey J, Zangari A, Howard S (1996) Risk metrics\(^{TM}\)-technical document. Technical document. J.P. Morgan, New York

Markowitz H (1952) Portfolio selection. J Finance 7:77–91

Meinshausen N, Bühlmann P (2006) High-dimensional graphs and variable selection with the lasso. Ann Stat 34:1436–1462

Pearson K (1901) On lines and planes of closest fit to systems of points in space. Philos Mag 2:559–572

Rothman AJ, Bickel P, Levina E, Zhu J (2008) Sparse permutation invariant covariance estimation. Electron J Stat 2:494–515

Rothman AJ, Levina E, Zhu J (2009) Generalized thresholding of large covariance matrices. J Am Stat Assoc 104:177–186

Savic RM, Karlsson MO (2009) Importance of shrinkage in empirical bayes estimates for diagnostics: problems and solutions. Am Assoc Pharm Sci 11:558–569

Schäfer J, Strimmer K (2005) A shrinkage approach to large-scale covariance matrix estimation and implications for functional genomics. Stat Appl Genet Mol Biol 4:1544–6115

Wu WB, Pourahmadi M (2003) Nonparametric estimation in the gaussian graphical model. Biometrika 90:831–844

Zou H (2006) The adaptive lasso and its oracle properties. J Am Stat Assoc 101:1418–1429

Acknowledgements

Piotr Fryzlewicz’s work has been supported by the Engineering and Physical Sciences Research Council Grant No. EP/L014246/1.

Appendix

1.1 Additional lemmas and proofs

Firstly, we briefly introduce two lemmas that will be used in the proof of Proposition 1.

Lemma 1

If \( F \) satisfies \(\int _{0}^{\infty } \exp (\gamma t)dG_j(t)<\infty \), for \(0<|\gamma |<\gamma _0\), for some \(\gamma _0>0\), where \(G_j\) is the cdf of \(X^2_{1j}\), \(R=\{\rho _{ij}\}\) and \(\varSigma =\{\sigma _{ij}\}\) are the true correlation and covariance matrices, \(1\le i, j\le p\), and \(\sigma _{ii}\le M\), where M is a constant, then, for sufficiently large \(M'\), if \(\lambda =M'\sqrt{\log p/n}\) and \(\log p/n=o(1)\), we have \(\underset{1\le i, j\le p}{\text {max}}|\hat{\rho }_{ij}-\rho _{ij}|=O_p(\sqrt{\log p/n})\).

Proof of Lemma 1

By the sub-multiplicative norm property \(\Vert AB\Vert \le \Vert A\Vert \ \Vert B\Vert \) (Golub and Van Loan 1989), we write

The last equality holds as we have \(\underset{1\le i, j\le p}{\text {max}}|\hat{\sigma }_{ij}-\sigma _{ij}|=O_p(\sqrt{\log p/n})=\underset{1\le i, j\le p}{\text {max}}|\hat{\sigma }_{ij}^{-1}-\sigma _{ij}^{-1}|\) (Bickel and Levina 2008b) and \(\underset{1\le i, j\le p}{\text {max}}|\hat{\sigma }_{ij}|=O_p(\sqrt{\log p/n})=\underset{1\le i, j\le p}{\text {max}}|\hat{\sigma }_{ij}^{-1}|\), and \(\sigma _{ii}\le M\), \(1\le i,j\le p\). \(\square \)

Lemma 2

If \( F \) satisfies \(\int _{0}^{\infty } \exp (\gamma t)dG_j(t)<\infty \), for \(0<|\gamma |<\gamma _0\), for some \(\gamma _0>0\), where \(G_j\) is the cdf of \(X^2_{1j}\), \(R=\{\rho _{ij}\}\) is the true correlation matrix, \(1\le i, j\le p\), then, uniformly on \({\mathcal {V}}(q,s_0(p),\varepsilon _0)\), for sufficiently large \(M'\), if \(\lambda =M'\sqrt{\log p/n}\) and \(\log p/n=o(1)\),

where T is any kind of generalised thresholding estimator.

Lemma 2 is a correlation version of Theorem 1 in Rothman et al. (2009) and follows in a straightforward way by replacing \({\hat{\varSigma }}\), \(\varSigma \), \({\mathcal {U}}(q, c_0(p), M,\epsilon _0)\) and \(c_0(p)\) by \({\hat{R}}\), R, \({\mathcal {V}}(q,s_0(p),\varepsilon _0)\) and \(s_0(p)\) in the proof of the theorem.

Proof of Proposition 1

We first show the result for \({\hat{R}}^N\). By the triangle inequality,

Using Lemma 2, we have

For symmetric matrices M, Corollary 2.3.2 in Golub and Van Loan (1989) states that

Then by Lemma 1,

Thus, we have

Combining formulae (33) and (36) yields the first equality. The second equality follows because

uniformly on \({\mathcal {V}}(q,s_0(p),\varepsilon _0)\).

For the \({\hat{\varSigma }}^N\) estimator, recalling that \(T=T({\hat{R}},\lambda )\) and \(D={(\text {diag}(\varSigma ))}^{1/2}\), we have

Similarly to (36), we obtain \(III=O_p((1-\delta )p\sqrt{\log p/n})\). For IV, we write

The last equality holds as we have \(\Vert T-R\Vert = O_p(s_0(p)(\log p/n)^{(1-q)/2})\), \(\Vert {\hat{D}}-D\Vert = O_p(\sqrt{\log p/n})\), \(\Vert {\hat{D}}\Vert = O_p(1) = \Vert T\Vert \), and \(\Vert D\Vert =O(1)\) as \(\sigma _{ii}<M\). Because \((\log p/n)^{q/2}(s_0(p))^{-1}\) is bounded from above by the assumption that \(\log p/n=o(1)\) and \(\Vert ({\hat{\varSigma }}^N)^{-1}-{\varSigma }^{-1}\Vert \asymp \Vert {\hat{\varSigma }}^N-\varSigma \Vert \) uniformly on \({\mathcal {V}}(q,s_0(p),\varepsilon _0)\), the result follows. \(\square \)

Proof of Proposition 2

We only need to show the rate for the sample covariance (correlation) part as the arguments for the thresholding part are identical to those in Proposition 1. We first collect the relevant arguments from the proof of Lemma 3 in Cai et al. (2010). Let \(\Vert \cdot \Vert \) denote the spectral norm of a matrix. From the proof of Lemma 3 in Cai et al. (2010), there exist vectors \(v_1, v_2, \ldots , v_{5^m} \in S^{m-1}\), where \(S^{m-1}\) is the unit sphere in the Euclidean distance in \(\mathbb {R}^m\), such that

for all \(m \times m\) symmetric matrices A.

Consider now the sample covariance matrix \({\hat{\varSigma }} = \frac{1}{n} \sum _{i=1}^{n} \varvec{X}_i \varvec{X}_i^{\hbox {T}}\) (recall that \(E(\varvec{X}) = 0\)), satisfying a sub-Gaussian condition in the sense that the length-p column vector \(\varvec{X}_i\) satisfies

for a certain \(\rho > 0\), for all \(t > 0\) and \(\Vert v\Vert _2 = 1\).

Then, by the same arguments as in the proof of Lemma 3 in Cai et al. (2010), there exists \(\rho _1 > 0\) such that

where \(\varSigma \) is the population covariance matrix, for all \(0< x < \rho _1\) and \(\Vert v \Vert = 1\).

We then bound

with \(y = x/4\).

As \(\rho _1\) is unknown, the only “safe” y’s to consider are such that \(y \rightarrow 0\) as \(n \ \rightarrow \infty \), uniformly over all permitted p. We now want

which leads to

This can only converge to zero if \(p = o(n)\). Under this assumption, we therefore indeed have

which completes the proof. \(\square \)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Huang, N., Fryzlewicz, P. NOVELIST estimator of large correlation and covariance matrices and their inverses. TEST 28, 694–727 (2019). https://doi.org/10.1007/s11749-018-0592-4

DOI: https://doi.org/10.1007/s11749-018-0592-4

Keywords

- Covariance regularisation

- High-dimensional covariance

- Long memory

- Non-sparse modelling

- Singular sample covariance

- High dimensionality