Abstract

Accurate information on the location and magnitude of vegetation change in scenic areas can guide the configuration of tourism facilities and the formulation of vegetation protection measures. High spatial resolution remote sensing images can be used to detect subtle vegetation changes. The major objective of this study was to map and quantify forest vegetation changes in a national scenic location, the Purple Mountains of Nanjing, China, using multi-temporal cross-sensor high spatial resolution satellite images to identify the main drivers of the vegetation changes and provide a reference for sustainable management. We used QuickBird images acquired in 2004, IKONOS images acquired in 2009, and WorldView2 images acquired in 2015. Four pixel-based direct change detection methods, namely the normalized difference vegetation index difference method, multi-index integrated change analysis (MIICA), principal component analysis, and spectral gradient difference analysis, were compared in terms of their change detection performance. Subsequently, the best pixel-based detection method was combined with object-oriented image analysis to extract subtle forest vegetation changes. An accuracy assessment using stratified random sampling points was conducted to evaluate the change detection results. The results showed that MIICA was the best pixel-based change detection method, and that the object-oriented MIICA, with an overall accuracy of 0.907 and a kappa coefficient of 0.846, was superior to the pixel-based MIICA. From 2004 to 2009, areas of vegetation gain mainly occurred around the periphery of the study area, while areas of vegetation loss were observed in the interior and along the boundary of the study area due to construction activities, which contributed to 79% of the total area of vegetation loss. During 2009–2015, the greening initiatives around the construction areas increased the forest vegetation coverage, accounting for 84% of the total area of vegetation gain. In spite of this, vegetation loss occurred in the interior of the Purple Mountains due to infrastructure development that caused conversion from vegetation to impervious areas. We recommend that (1) a local multi-agency team inspect and assess law enforcement regarding natural resource utilization, and (2) environmental awareness education be strengthened.

Introduction

Scenic forest areas are precious and non-renewable natural resources. In spite of their provision of social, ecological, and environmental services, urban and suburban scenic forests continue to suffer risk of degradation due to socio-economic advancement, demographic growth, and the acceleration of urbanization (Qi 2013). Knowledge of the changes in forest vegetation in scenic forests helps to assess conservation management effectiveness and to formulate conservation measures to respond to vegetation changes and to promote sustainable development of the urban forest environment and the social economy (Qi 2013; Pu and Landry 2012). The development of remote sensing images and technology provides advanced technological measures for detecting these changes.

Traditional assessment methods are useful for medium- and coarse-scale change detection. In contrast, sub-meter scale vegetation change detection is more suitable for determining diverse, subtle changes in scenic forest areas, such as mapping networks of unpaved unofficial mountaineering paths that have increased due to the walking and climbing of local residents (Zhou et al. 2008). High spatial resolution remote sensing images can help detect such subtle changes that are not recorded in the official databases and assess their impacts on forest vegetation and soils that are underestimated by analyses of medium and coarse remotely sensed images.

In traditional vegetation cover change analyses, images are usually classified first and then the classifications are compared. In this case, change detection accuracy depends heavily on classification accuracy. Several highly automated direct change detection methods have been developed and applied to vegetation change detection, including the Landsat-based detection of Trends in Disturbance and Recovery (LandtrendR, Kennedy et al. 2010), the Breaks For Additive Seasonal and Trend (BFAST, Verbesselt et al. 2010) and Vegetation Change Tracker (VCT, Huang et al. 2010). However, these methods are mainly used for time series analyses using relatively low and medium spatial resolution images and are not suitable for high spatial resolution image analyses due to the high cost and the unavailability of high spatial resolution images covering long time spans. Additionally, some of the parameters required for these methods (such as the integrated forest z-score (IFZ) in the VCT) are difficult to derive from high spatial resolution images due to limited spectral bands. Thus, in this study, we considered other detection methods that are easily implemented and suitable for high spatial resolution images. Weismiller et al. (1977) first proposed the image difference method. However, when different sensors are used for change detection, a simple band difference method results in large errors. In order to utilize multiple bands and reduce the amount of workload at the same time, Byrne et al. (1980) applied change detection based on principal component analysis (PCA). However, few studies have used the PCA method with different sensor images for change detection. Chen et al. (2013) proposed the use of the spectral gradient difference (SGD) to detect changes to reduce the impact of different sensors. In addition, many studies applied vegetation index information to reduce the influences of illumination, terrain, atmospheric conditions and other factors. 
These include the normalized difference vegetation index (NDVI) difference method (Rouse et al. 1973; Yang et al. 2000), and multi-index integrated change analysis (MIICA, Jin et al. 2013) method. Besides NDVI, MIICA added other characteristic parameters that indicate change intensity to improve detection accuracy.

The above-mentioned change detection methods are all pixel-based methods. Since the spatial relationship among pixels is ignored, salt-and-pepper noise is commonly observed and the higher the spatial resolution, the higher the salt-and-pepper noise (Han 2016). To address this problem when change detection is performed using high spatial resolution images, object-oriented image analysis was proposed (Baatz and Schäpe 1999). The traditional object-oriented change detection method is based on an image segmentation followed by the classification of the image objects (Civco et al. 2002; Gamanya et al. 2009; Chen et al. 2012), thus the detection results are also subject to the classification accuracy.

To combine the advantages of direct change detection and object-oriented analysis, in this study, we proposed a new approach to conduct forest vegetation change detection by using cross-sensor high spatial resolution satellite images. This new method integrates the optimal pixel-based method among several tested methods and object-oriented analysis to detect vegetation change. Specifically, we used the precision (P) and recall rate (R) to objectively determine the optimal segmentation parameter (Zhu et al. 2015). This method performs image segmentation first and uses the homogeneous segmentation objects as the analysis units instead of the individual pixels to reduce the salt-and-pepper noise and minimize the influences of the classification accuracy on the detection results.

We used the Purple Mountains in Nanjing, a five A-level scenic spot with abundant vegetation as the study area to test the performance of the proposed change detection methods. The Purple Mountains attract many tourists every year because of several well-known scenic spots including the Dr. Sun Yat-sen Mausoleum and Ming Xiaoling Mausoleum. The area is also an important scenic forest location at the lower reaches of the Yangtze River and a region with scientific research value in the north subtropical zone of China. Additionally, as the “green lung” of Nanjing city, the Purple Mountains support many plant species and have a high forest cover, which provides great values in terms of air and water purification, soil and water conservation, tourism, and scientific research (Liu 2015).

Since 1935, forests of the Purple Mountains have suffered from pine caterpillars and government agencies have taken many prevention and control measures. Many studies have documented changes in the vegetation of the Purple Mountains (Xue and Zhao 1982; Cheng and Ni 2004). However, few studies of vegetation change in the Purple Mountains have been implemented by the scenic location management authority in recent years, especially at the sub-meter scale.

Materials and methods

Study area and datasets

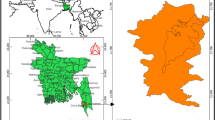

Located in the eastern outskirts of Nanjing City, Jiangsu Province, the Purple Mountains extend from 118°48′E, 32°02′N to 118°52′E, 32°06′N (Fig. 1), covering an area of approximately 30 km² with forest coverage of 70% (Wang and Li 2017). The highest elevation of the Purple Mountains is 448.9 m. This area falls into the northern margin of the subtropics and is a transitional vegetation zone between warm-temperate deciduous broad-leaved forest and mid-subtropical evergreen broad-leaved forest. Evergreen-deciduous broad-leaved mixed forest dominates this region with a rich diversity of plant species. There are about 113 families and more than 600 species of seed plants (Dong et al. 2007). Through long-term tending and natural succession, the restoration of the natural plant flora and the formation of zonal plant community types have made the Purple Mountains a scientifically important deciduous evergreen broad-leaved forest in the northern subtropics of China (Dong et al. 2011).

The data used in this study (Table 1) include QuickBird images acquired in 2004 (one 0.6-m resolution panchromatic band and four 2.4-m resolution multispectral bands), IKONOS images acquired in 2009 (one 0.8-m resolution panchromatic band and four 3.2-m resolution multispectral bands), and 2015 WorldView2 images (one 0.5-m resolution panchromatic band and four 2.0-m resolution multispectral bands). The satellite images were captured during the growing season (June to September in the mid-latitude regions).

Data preprocessing

The images were radiometrically calibrated using the latest parameters (Qi and Lalasia 2014) to convert the digital numbers (DNs) to top-of-atmosphere radiance values. The FLAASH module in the ENVI software was used to generate surface reflectance images. Using the 2009 IKONOS image as a reference image, we identified 100 ground control points to perform a geometric correction using the nearest neighbor resampling method to achieve a resolution of 0.5 m for all images. The errors after geometric correction were at the sub-pixel level. To avoid the influences of atmospheric conditions, we selected the 2009 IKONOS images as a benchmark and performed a pseudo-invariant feature (PIF) normalization method to ensure radiometric consistency among the images. We selected 7000 pixels including low-, medium-, and high-reflectance pixels and fitted a number of linear regression equations to achieve relative radiometric normalization.
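The relative radiometric normalization step can be illustrated with a minimal sketch. It assumes one linear regression per band, fitted on pseudo-invariant pixels and then applied to the whole subject band. The function name `pif_normalize` and the synthetic sample values are hypothetical and are not taken from the study.

```python
import numpy as np

def pif_normalize(subject_band, subject_pif, reference_pif):
    """Fit reference = gain * subject + offset on PIF pixels and apply it to the band."""
    # np.polyfit returns coefficients from highest degree to lowest: (slope, intercept)
    gain, offset = np.polyfit(subject_pif, reference_pif, deg=1)
    return gain * subject_band + offset, gain, offset

# Synthetic PIF samples covering low, medium, and high reflectance (illustrative only)
rng = np.random.default_rng(0)
reference_pif = rng.uniform(0.02, 0.60, size=200)
# The subject image is constructed to differ from the reference by a known linear relation
subject_pif = (reference_pif - 0.01) / 1.1

subject_band = np.array([[0.10, 0.20], [0.30, 0.40]])
normalized, gain, offset = pif_normalize(subject_band, subject_pif, reference_pif)
```

In this synthetic case the fitted gain and offset recover the relation used to build the samples, so the normalized band is expressed on the radiometric scale of the reference image.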

Fusion

Because all three sensors included panchromatic and multispectral bands, we performed image fusion to integrate the spectral and spatial information (Peng and Liu 2007). Based on the atmospherically and geometrically corrected panchromatic bands and multispectral bands, the PCA (Chen and Pu 2006), the Brovey transform (Tan et al. 2008; Gillespie et al. 1987), hue-saturation-value (HSV) transform (Du et al. 2017), Gram–Schmidt (GS) transform (Witharana et al. 2016; Huang and Gu 2010) and wavelet transform methods (Gong et al. 2010) were implemented to fuse the images. For the wavelet transform fusion, we selected different wavelet basis functions and decomposition layers to determine the optimal fusion scheme. Objective quantitative evaluation parameters including the spectral mean values, the standard deviation, the average gradient, the information entropy of the fused images, and the root mean square error (RMSE) between the original images and fused images were calculated in tandem with subjective qualitative evaluation to evaluate the fusion performance. The vegetation indices were generated using the original multispectral images prior to the fusion.
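Three of the objective evaluation parameters named above can be sketched as follows. The formulas are common textbook forms (histogram-based Shannon entropy, mean local gradient magnitude, and RMSE) and are assumptions here; the study does not state the exact definitions it used, and the function names are hypothetical.

```python
import numpy as np

def information_entropy(band, bins=256):
    """Shannon entropy (bits) of the grey-level histogram."""
    hist, _ = np.histogram(band, bins=bins)
    p = hist[hist > 0] / hist.sum()
    return float(-(p * np.log2(p)).sum())

def average_gradient(band):
    """Mean magnitude of horizontal and vertical first differences."""
    band = band.astype(float)
    dx = band[:-1, 1:] - band[:-1, :-1]
    dy = band[1:, :-1] - band[:-1, :-1]
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))

def rmse(original, fused):
    """Root mean square error between an original band and a fused band."""
    diff = original.astype(float) - fused.astype(float)
    return float(np.sqrt(np.mean(diff ** 2)))

# Tiny illustrative arrays, not real imagery
flat = np.full((4, 4), 7.0)
checker = np.array([[0.0, 1.0], [1.0, 0.0]])
```

A flat band has zero entropy and zero average gradient, whereas a band with more grey-level variety and sharper edges scores higher on both, which is why larger values are read as a better fusion result.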

Pixel-based change detection methods

NDVI difference method

Vegetation strongly absorbs red band energy and reflects the near-infrared band energy (Yang et al. 2000). Therefore, the vegetation indices that are calculated using the spectral values of these two bands represent the above-ground biomass (Coppin and Bauer 1994). We used the vegetation index difference of bi-temporal images to obtain vegetation cover changes. We used the NDVI images of the three time periods, performed the relative radiometric normalization, and generated the NDVI difference images. Subsequently, a change detection threshold (see Sect. Determination of the thresholds) was specified to extract the vegetation change areas. The NDVI is defined by Eq. (1):

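$$\mathrm{NDVI} = \frac{\rho(\mathrm{NIR}) - \rho(\mathrm{R})}{\rho(\mathrm{NIR}) + \rho(\mathrm{R})} \qquad (1)$$

where ρ(NIR) represents the near-infrared band reflectance and ρ(R) represents the red band reflectance.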
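The NDVI difference method can be sketched in a few lines. The sketch assumes the difference is taken as pre-image minus post-image, so that positive values indicate vegetation loss, which matches the sign convention stated later for dNDVI. The threshold values and function names are hypothetical.

```python
import numpy as np

def ndvi(nir, red):
    """NDVI = (NIR - R) / (NIR + R); pixels with a zero denominator are set to 0."""
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    denom = nir + red
    out = np.zeros_like(denom)
    np.divide(nir - red, denom, out=out, where=denom != 0)
    return out

def classify_ndvi_difference(ndvi_pre, ndvi_post, loss_threshold, gain_threshold):
    """Return 1 for vegetation loss, -1 for vegetation gain, 0 for no change."""
    d = ndvi_pre - ndvi_post
    change = np.zeros(d.shape, dtype=np.int8)
    change[d > loss_threshold] = 1
    change[d < -gain_threshold] = -1
    return change

# Three illustrative pixels: unchanged vegetation, vegetation lost, vegetation gained
pre = ndvi([0.45, 0.45, 0.20], [0.05, 0.05, 0.15])
post = ndvi([0.45, 0.20, 0.45], [0.05, 0.15, 0.05])
change = classify_ndvi_difference(pre, post, loss_threshold=0.2, gain_threshold=0.2)
```

Separate loss and gain thresholds are kept because the two change directions need not be symmetric.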

MIICA

MIICA is a change detection method developed for Landsat imagery. It is based on the calculation of four spectral parameters, namely the differenced normalized burn ratio (dNBR), the differenced NDVI (dNDVI), the change vector (CV), and the relative change vector maximum (RCVMAX), to extract the change area from bi-temporal images (Jin et al. 2013). However, the dNBR could not be calculated for the images used in this study due to the lack of short-wave infrared bands.

The Purple Mountains are under strict supervision and control so that forest fires are rare and were therefore ignored for the purpose of this study. Additionally, the four parameters are independent in the calculation. Therefore, only the latter three parameters were used for vegetation change detection in this study. The MIICA process was adapted and the flowchart of the application using the 2009 and 2015 images as examples is illustrated in Fig. 2.

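Equations (2)–(4) are used to calculate the parameters:

$$\mathrm{dNDVI} = \mathrm{NDVI}_{1} - \mathrm{NDVI}_{2} \qquad (2)$$

$$\mathrm{CV} = \sum_{i=1}^{4} \left(\rho(B_{1i}) - \rho(B_{2i})\right)^{2} \qquad (3)$$

$$\mathrm{RCV_{MAX}} = \sum_{i=1}^{4} \left(\frac{\rho(B_{1i}) - \rho(B_{2i})}{\max\left(\rho(B_{1i}), \rho(B_{2i})\right)}\right)^{2} \qquad (4)$$

where 1 and 2 represent the pre-image and post-image, respectively; i = 1, 2, 3, and 4 represent the image band numbers; and ρ(B1i) and ρ(B2i) are the reflectance values of B1i and B2i.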
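The three MIICA parameters retained in this study can be computed per pixel as in the sketch below. It assumes the forms given by Jin et al. (2013), a band order of blue, green, red, near-infrared (an assumption, not stated in the text), and pre-image minus post-image differencing. It computes the parameters only; the decision rules of the flowchart in Fig. 2 are not reproduced.

```python
import numpy as np

def _ndvi(nir, red):
    denom = nir + red
    out = np.zeros_like(denom)
    np.divide(nir - red, denom, out=out, where=denom != 0)
    return out

def miica_parameters(pre, post, red_index=2, nir_index=3):
    """pre, post: float arrays of shape (bands, rows, cols) holding reflectance."""
    diff = pre - post
    cv = (diff ** 2).sum(axis=0)                        # change vector
    largest = np.maximum(pre, post)
    relative = np.zeros_like(diff)
    np.divide(diff, largest, out=relative, where=largest > 0)
    rcv_max = (relative ** 2).sum(axis=0)               # relative change vector maximum
    dndvi = _ndvi(pre[nir_index], pre[red_index]) - _ndvi(post[nir_index], post[red_index])
    return dndvi, cv, rcv_max

# One illustrative pixel: vegetated before, much less vegetated after
pre = np.array([0.04, 0.08, 0.05, 0.45]).reshape(4, 1, 1)
post = np.array([0.10, 0.12, 0.15, 0.20]).reshape(4, 1, 1)
dndvi, cv, rcv_max = miica_parameters(pre, post)
```

CV responds to the absolute size of the spectral change, while RCVMAX scales each band difference by the larger of the two reflectances, so it remains sensitive to change in dark bands.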

PCA

After principal component transformation of the bi-temporal images, the first and second principal component bands containing the majority of the image information were extracted for the difference calculation to reduce the information redundancy. Then we enumerated the sample pixel values of the first and second principal component difference bands separately for the different change directions (the conversions from vegetation to water, to impervious areas, and to bare land) and determined the change pixels and change types (Byrne et al. 1980; Yuan and Elvidge 1998; Mo et al. 2013).

SGD

The differences in the land cover types are reflected in the pixel brightness values, the spectral curves, and the shapes (Tso and Mather 2001). The SGD change detection method uses a spectral gradient to quantitatively describe the shape of the spectral curve and compares the shape differences of the spectral curves of the bi-temporal images to distinguish changed areas and unchanged areas. Using the spectral gradient space instead of the traditional spectral space to calculate the magnitude of change is a novel approach. This method has rarely been applied to vegetation change detection because the results are often not adequate to directly infer the direction of vegetation change. In the current analysis, the steps in the SGD method were the following: (1) Generation of the spectral gradient using the adjacent bands of the same image; and (2) Generation of the spectral gradient vectors by combining different spectral gradients and using the spectral gradient vectors of the bi-temporal images to obtain the gradient difference vectors. Their absolute values represent the change magnitude (Chen et al. 2013). Equations (5)–(8) express the parameters used in the SGD:

$$g_{(k,k+1)} = \frac{\rho_{(k+1)} - \rho_{(k)}}{\lambda_{(k+1)} - \lambda_{(k)}} \qquad (5)$$

$$G = \left(g_{(1,2)},\; g_{(2,3)},\; g_{(3,4)}\right)^{T} \qquad (6)$$

$$\Delta G = G_{2} - G_{1} \qquad (7)$$

$$|\Delta G| = \sqrt{\sum_{k=1}^{3} \left(\Delta g_{(k,k+1)}\right)^{2}} \qquad (8)$$

where ρ(k+1) and ρ(k) denote the spectral reflectance of the adjacent bands k + 1 and k, respectively, and λ(k+1) and λ(k) are their corresponding wavelengths; g(k,k+1) denotes the spectral gradient between the adjacent bands k and k + 1; G denotes the spectral gradient vector, with G1 and G2 referring to the pre-image and post-image; ΔG is the spectral gradient vector difference derived from the bi-temporal images, with components Δg(k,k+1), and its absolute value |ΔG| is the change magnitude. After the generation of |ΔG|, we extracted the change areas using thresholds (Sect. Determination of the thresholds) and masked the non-vegetation change areas. Then we defined the areas of vegetation gain or loss based on the dNDVI (Eq. 2). The vegetation change area with dNDVI values less than 0 was the vegetation gain area, and the vegetation change area with dNDVI values greater than 0 was the vegetation loss area.
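The SGD change magnitude can be sketched as follows, assuming the gradient is the reflectance slope between adjacent bands and the magnitude is the Euclidean norm of the gradient difference. The band-centre wavelengths in the example are round illustrative numbers, not the actual sensor values, and separate wavelength vectors are accepted because the two images may come from different sensors.

```python
import numpy as np

def spectral_gradient(reflectance, wavelengths):
    """Slope of reflectance between adjacent bands; input shape (bands, rows, cols)."""
    d_rho = np.diff(reflectance, axis=0)
    d_lambda = np.diff(np.asarray(wavelengths, dtype=float))[:, None, None]
    return d_rho / d_lambda

def sgd_magnitude(pre, post, pre_wavelengths, post_wavelengths):
    """Euclidean norm of the spectral gradient difference vector for every pixel."""
    delta_g = (spectral_gradient(post, post_wavelengths)
               - spectral_gradient(pre, pre_wavelengths))
    return np.sqrt((delta_g ** 2).sum(axis=0))

# One illustrative pixel with hypothetical band centres in micrometres
wavelengths = [0.5, 0.6, 0.7, 0.8]
pre = np.array([0.1, 0.2, 0.3, 0.4]).reshape(4, 1, 1)
post = np.array([0.1, 0.2, 0.3, 0.7]).reshape(4, 1, 1)
magnitude = sgd_magnitude(pre, post, wavelengths, wavelengths)
```

Only the last gradient changes in this example (from 1 to 4 reflectance units per micrometre), so the magnitude equals that single difference. The magnitude carries no sign, which is why dNDVI is still needed to separate gain from loss.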

Determination of the thresholds

The NDVI difference, MIICA, and SGD methods require a threshold to define the change areas. The threshold selection directly affects the accuracy of change detection. Since some thresholds in this study could not be expressed in a binary format and included two change directions (vegetation gain and loss), the traditional binarization methods were not suitable. The threshold in this study was determined using the following steps: (1) estimation of the approximate value by visual inspection of the histogram of the change magnitude images or the dNDVI images; (2) adjustment of the value in steps of ±0.01 to determine the threshold; and (3) termination of the iteration once the threshold resulted in the highest matching accuracy compared to the reference data (changed and unchanged areas that were identified beforehand) (Pu and Landry 2012). Unlike other adaptive threshold methods, this method allows the initial threshold and the number of iterations to be adjusted according to the specific magnitude or dNDVI images, which makes the detection results more reliable.
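The threshold search described above can be sketched as a scan around a visually chosen starting value. This is a simplified illustration: it handles a single change magnitude threshold rather than separate gain and loss thresholds, and it scans a fixed window instead of stopping adaptively. The function name, the window size, and the sample values are hypothetical.

```python
import numpy as np

def search_threshold(magnitude, reference_changed, initial, step=0.01, n_steps=10):
    """Try thresholds around `initial` and keep the one that best matches the reference."""
    candidates = initial + step * np.arange(-n_steps, n_steps + 1)
    best_threshold, best_accuracy = None, -1.0
    for threshold in candidates:
        predicted_changed = magnitude > threshold
        accuracy = float(np.mean(predicted_changed == reference_changed))
        if accuracy > best_accuracy:
            best_threshold, best_accuracy = float(threshold), accuracy
    return best_threshold, best_accuracy

# Illustrative reference pixels: three unchanged, two changed
magnitude = np.array([0.02, 0.08, 0.175, 0.31, 0.42])
reference_changed = np.array([False, False, False, True, True])
best_threshold, best_accuracy = search_threshold(magnitude, reference_changed, initial=0.15)
```

Keeping the first threshold that reaches the best accuracy gives a deterministic result when several candidates tie.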

Accuracy assessment

We calculated the accuracy of vegetation change detection by comparing the extracted change results with the reference samples. Due to the lack of higher spatial resolution land cover change data or ground truth data for the corresponding years, the accuracy assessment data used in this study were visually interpreted as change or no-change results based on the reference samples. Random points were generated by using a stratified random sampling strategy (Banko 1998) with three strata: vegetation loss, vegetation gain, and no change. We spatially integrated the detection results and segregated the vegetation loss and vegetation gain classes as follows. If a pixel was identified as falling into the vegetation gain or loss areas by any of the four methods, this pixel was classified as vegetation gain or loss. The spatially conflicting pixels were classified as no-change to simplify the evaluation process because they made up a small proportion of pixels (less than 0.1% of the total study area). Thus, we generated 75 sample points in each of the three stratified areas after the specific division (Congalton 1991). For each of the random sample points, we visually compared the 2009 image with the 2015 image to count the number of no-change pixels and change pixels (vegetation gain and vegetation loss). The confusion matrices were then generated to derive the overall accuracy, the kappa coefficient, the producer’s accuracy, and the user’s accuracy and to compare the performance of the different detection methods.
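The accuracy statistics named above follow directly from a confusion matrix, as the sketch below shows. The matrix values are invented for illustration (75 mapped points per class, mirroring the sampling design) and are not results from this study; rows are assumed to be mapped classes and columns reference classes.

```python
import numpy as np

def accuracy_metrics(confusion):
    """Overall accuracy, kappa, user's and producer's accuracy from a confusion matrix.

    Rows are mapped classes and columns are reference classes.
    """
    cm = np.asarray(confusion, dtype=float)
    total = cm.sum()
    overall = np.trace(cm) / total
    # Chance agreement expected from the row and column marginals
    expected = (cm.sum(axis=1) * cm.sum(axis=0)).sum() / total ** 2
    kappa = (overall - expected) / (1.0 - expected)
    users = np.diag(cm) / cm.sum(axis=1)       # 1 - commission error
    producers = np.diag(cm) / cm.sum(axis=0)   # 1 - omission error
    return overall, kappa, users, producers

# Hypothetical matrix; class order: vegetation loss, vegetation gain, no change
confusion = [[70, 3, 2],
             [4, 65, 6],
             [5, 2, 68]]
overall, kappa, users, producers = accuracy_metrics(confusion)
```

A low producer's accuracy for a change class signals omission error, while a low user's accuracy signals false detections, which is how the two measures are read in the Results.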

Object-oriented change detection methods

We chose the appropriate segmentation parameters and segmented the bi-temporal images uniformly to minimize the effect of the differences in size, shape, area, and spatial position of the segmented objects in the bi-temporal images on change detection accuracy. First, the preprocessed bi-temporal images were stacked and segmented uniformly to ensure that the segmented objects from the bi-temporal images corresponded to each other (one-to-one mapping). The segmentation was performed using the Segment Only Feature Extraction module of ENVI, including the segmentation and merging processes. This is a very important step in the process of detecting fine-scale changes in vegetation to separate the narrow roads and gaps inside the forest from vegetation. Therefore, we first used a coarser scale to segment buildings, water, and forest areas. A subsequent segmentation was performed inside the forest areas. Since merging was performed on the basis of the segmented objects, a very large segmentation scale would result in under-segmentation (Li et al. 2017). In order to detect the small open spaces inside the forests, we set the segmentation scale to zero and continuously changed the merging scales. The P and R of the segmentation objects were calculated and compared for different merging scales to determine the optimal segmentation parameter. We calculated P and R for merging scales in the range of 0–100 and for a segmentation scale of 0. We wanted to ensure that P was maximized and a higher R was obtained under this premise. P and R are defined by Eqs. (9)–(12).

where p and r are the precision and recall rate of the segmented object respectively, si is the segmentation object, and s(i,j) is its matching object. P and R are the weighted averages of the p and r values of all objects in the region respectively.
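The sketch below shows one plausible reading of P and R. It assumes that the precision of an object is its overlap with the matching object divided by its own area, that recall is the same overlap divided by the area of the matching object, and that the weights are object areas. These definitions and the function names are assumptions for illustration and may differ from Eqs. (9)–(12).

```python
import numpy as np

def object_precision_recall(segment, matching):
    """segment, matching: boolean masks of a segmented object and its matching object."""
    overlap = np.logical_and(segment, matching).sum()
    return overlap / segment.sum(), overlap / matching.sum()

def weighted_precision_recall(pairs):
    """Area-weighted averages of p and r over (segment, matching) mask pairs."""
    seg_areas = np.array([s.sum() for s, _ in pairs], dtype=float)
    match_areas = np.array([m.sum() for _, m in pairs], dtype=float)
    p, r = np.array([object_precision_recall(s, m) for s, m in pairs]).T
    P = (seg_areas * p).sum() / seg_areas.sum()
    R = (match_areas * r).sum() / match_areas.sum()
    return float(P), float(R)

# Two illustrative objects on a 4 x 4 grid
seg_a = np.zeros((4, 4), dtype=bool); seg_a[0:2, 2:4] = True      # 4 pixels
match_a = np.zeros((4, 4), dtype=bool); match_a[0:2, 0:3] = True  # 6 pixels, 2 overlapping
seg_b = np.zeros((4, 4), dtype=bool); seg_b[2:4, :] = True        # 8 pixels
match_b = seg_b.copy()                                            # perfect match
P, R = weighted_precision_recall([(seg_a, match_a), (seg_b, match_b)])
```

Under this reading, over-merged objects spill beyond their matching objects and lower P, which is consistent with P declining at large merging scales.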

Subsequently, homogeneous objects were generated and the grayscale value of each pixel in the objects was replaced by the average grayscale value of the object. The optimal pixel-based change detection method was applied to the segmented images. Ultimately, the same change threshold strategy was used to extract the change regions and the same accuracy assessment strategy was implemented to derive the accuracy statistics.
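Replacing each pixel by the mean of its object can be sketched as below, assuming the segmentation is available as an integer label image with non-negative object identifiers. The function name `object_mean_image` is hypothetical.

```python
import numpy as np

def object_mean_image(band, labels):
    """Replace every pixel value by the mean value of the object it belongs to."""
    flat_labels = labels.ravel()
    sums = np.bincount(flat_labels, weights=band.ravel().astype(float))
    counts = np.bincount(flat_labels)
    means = sums / np.maximum(counts, 1)   # guard against unused label numbers
    return means[labels]

# Two objects: label 0 in the top row, label 1 in the bottom row
band = np.array([[1.0, 3.0], [10.0, 20.0]])
labels = np.array([[0, 0], [1, 1]])
smoothed = object_mean_image(band, labels)
```

Applying a pixel-based detector to the smoothed bands gives one decision per object, which is what suppresses isolated salt-and-pepper pixels.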

Results

Image fusion

Three pan-sharpened images were created by fusing the multispectral bands with the panchromatic band. Through subjective qualitative evaluation and objective quantitative evaluation, we found that the best wavelet basis functions were sym5, bior2.8, and coif1 for the 2004, 2009, and 2015 images, respectively. The optimum number of decomposition layers was three. The objective evaluation parameters of the different fusion methods are presented in Tables S1–S2. Among them, the standard deviation, average gradient, and information entropy represent the sharpness and information content of the fused image; larger values indicate a better fusion result. The closer the spectral mean is to that of the original image, the better the result. RMSE reflects the difference between the fused image and the original image; the smaller the value, the better the result. For all three images, the HSV and wavelet transform fusion results had higher information entropy. However, after considering the other parameters and the subjective evaluation criteria, we determined that the HSV fusion algorithm was most suitable for the QuickBird image and that the wavelet transform fusion algorithm with a three-layer decomposition, using the bior2.8 and coif1 basis functions, was optimal for the IKONOS and WorldView2 images, respectively. The optimum fusion results for the different sensors are shown in Fig. 3.

Vegetation change detection results of four pixel-based methods

Figure 4 shows the vegetation change detection results of the four pixel-based methods. The corresponding accuracy statistics derived from the stratified random sampling strategy are summarized in Table 2. Locations of vegetation gain and loss from the four methods were spatially similar and the results of SGD and MIICA were most similar (Fig. 4).

The accuracy results (Table 2) showed that MIICA yielded a higher user’s accuracy for the vegetation change areas, but its producer’s accuracy was lower. This indicates that MIICA resulted in few false detections but that the omission error was slightly higher for the vegetation change areas. The producer’s accuracy was higher for the NDVI difference method than for MIICA, but there were more false detections (Fig. 5). The PCA results were poorer because the producer’s accuracy was low and the omission error in the vegetation gain area was more than 50%. Some of the accuracy results were high for the SGD, but they were not optimal. MIICA yielded the highest kappa value, meaning the greatest consistency between the detected and actual results. MIICA also had the highest overall accuracy. Based on these results, MIICA was the preferred method for accurate detection and was used for the subsequent integration with the object-oriented detection method.

Vegetation change detection results of the object-oriented method

When the segmentation scale was 18.2 and the merging scale was 78.3, the buildings, water and forests could be easily segmented (Fig. 6). For subsequent segmentation within forest areas, the trends of P and R with the change in the merging scale at a step of 10 are shown in Fig. 7a. The value of P remained at 0.9 for a merging scale from 0 to 80 and then declined after 80. The P and R values for the merging scale from 80 to 90 at a step of 1 were calculated to see if there was a merging scale with a P value of 0.9 and a higher R value (Fig. 7b). Although the R value increased when merging scale was greater than 81, the P value began to decline. So 80 and 81 proved to be better scales. Since P is inversely related to the merging scale, in order to obtain highest P value and higher R value to ensure that fine-scale changes could be detected as much as possible (Zhu et al. 2015), we chose 80 as the final merging scale. The internal segmentation results of the forest land are shown in Fig. 8. The segmentation algorithm ideally partitioned highly similar pixel clusters into different homogeneous objects and accurately delineated the boundaries of the gaps inside the forest land. Then the MIICA method was applied to the segmented images for change detection. Since the regions of the three stratifications were different in these two accuracy verifications, stratified random sampling was performed on object-oriented MIICA and pixel-based MIICA again using the same method as described in Sect. Accuracy assessment. The final detection accuracy results are summarized in Table 3. The overall accuracy and kappa coefficient of the object-oriented MIICA method was higher than that of the pixel-based MIICA method. Besides, object-oriented MIICA method had higher producer’s accuracy for the vegetation change areas. 
Figure 9 shows the differences in the results of the two methods, from which we conclude that the object-oriented MIICA method performed much better than the pixel-based MIICA method. This is attributed to the relatively regular homogeneous areas and fewer isolated small clusters that were generated by the object-oriented MIICA method. Thus, object-oriented MIICA was used to map forest vegetation changes during 2004–2015.

Fig. 9 Comparison of change detection performance between pixel-based MIICA and object-oriented MIICA. a 2009 image; b 2015 image; c pixel-based MIICA detection result; d object-oriented MIICA detection result. a, b are spatially collocated; c, d show the change detection results produced by different methods

Vegetation changes in Purple Mountains

Figure 10 depicts vegetation change during 2004–2009 and 2009–2015 as detected by the object-oriented MIICA method. The vegetation change areas and change rates are presented in Table 4. From 2004 to 2009, vegetation loss rate was relatively large at 2.20%. The vegetation loss occurred mainly at the edges of the Purple Mountains (Fig. 10a). During this period, the rate of the vegetation gain was 0.90% and the increases occurred mainly at the edges of the conservation area. A small number of vegetation loss areas were detected in the central portion of the Purple Mountains from 2009 to 2015, and the rate of loss was 0.66% (Fig. 10b). During this period, the rate of vegetation gain was 1.36%. The increase in vegetation cover mainly occurred at the periphery of the Purple Mountains. The conversion from vegetation to impervious surfaces is listed in Table 5. From 2004 to 2009, the conversion rate from vegetation to impervious surfaces was large, accounting for 79% of the total vegetation loss areas. From 2009 to 2015, the conversion rate from impervious surfaces to vegetation accounted for 84% of the total vegetation gain and was the main reason for the vegetation gain during this period.

Discussion

Change detection algorithms

Among the four pixel-based change detection methods, the detection accuracy of PCA was relatively low and its omission error of the change area was large, especially the omission error of the vegetation gain area. This meant that many change pixels were identified as unchanged areas by PCA. A potential explanation for this high omission error is the difference in the radiometric sensitivity of the same ground objects for the different sensor observations. Thus, it is often not effective to use only spectral information for change detection. The advantage of the PCA method is integrating a few of the original bands and generating principal components that contain the majority of the information variance of the original bands to simplify the calculation and to facilitate the subsequent analyses (Teffera et al. 2018).

The SGD method outperformed PCA. Although the spectral values of the same object in different images might differ, the spectral shapes are similar (Chen et al. 2013), which enables SGD to remove spurious changes caused by various interference factors. The |ΔG| image obtained by the SGD method is a change magnitude image, and the change regions can be extracted from it by specifying appropriate thresholds. The changed regions encompass conversions between various land use or land cover types. Thus, it is necessary to separate the non-vegetated areas from the change areas to determine specific vegetation changes, which makes the process relatively complicated. We set the threshold using the NDVI values of the bi-temporal images to remove the areas where no vegetation change had occurred.

The MIICA and NDVI difference methods use vegetation indices to address the problem of cross-sensor analysis. dNDVI quantifies the magnitude of biomass change and reduces errors caused by terrain and atmospheric interference (Apan 1997). The detection performance was better for MIICA than for the NDVI difference method (Fig. 5); the NDVI difference method classified some unchanged pixels as vegetation gain. Compared to the NDVI difference method, MIICA includes two additional parameters: CV, which represents the total spectral change between the two images, and RCVMAX, which detects relative change, quantifies its magnitude, and describes a general change pattern (Diao et al. 2018). These parameters filter out some of the pixels whose dNDVI values reached a certain range but did not indicate a change in land cover type. Thus, MIICA performed better than the NDVI difference method for vegetation change detection. However, two additional thresholds must be specified for MIICA, which may increase the omission error of the vegetation change or introduce other uncertainties. The NDVI difference method is the simplest and most straightforward approach for vegetation change detection, and its results are reliable in most cases. To retain spectral fidelity, in this study the NDVI was calculated from the original bands rather than the fused bands. Therefore, the initial spatial resolution of the detected vegetation change was 2 m. Unlike the NDVI difference method, MIICA removed some pseudo-change areas and, most importantly, preserved the high spatial resolution of the detected vegetation changes because the indices CV and RCVMAX were calculated from the fused bands rather than the original bands. In change detection studies, it is usually required that the omission error be minimized without forcing a large commission error. Considering the small difference in omission errors among MIICA, the NDVI difference method, and SGD, together with the simplicity of the methods and the preserved resolution of the detection results, we conclude that MIICA was the most suitable of the four pixel-based change detection methods.
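How the two additional parameters filter out pseudo-changes can be sketched as follows. The sketch assumes that CV is the sum of squared band differences and that RCVMAX is the sum of squared differences normalized by the larger of the two band values, which is how these indices are commonly written; this section does not restate the formulas. The reflectance values and all three thresholds are hypothetical.

```python
import numpy as np

def ndvi(red, nir):
    """NDVI; assumes red + nir is strictly positive."""
    return (nir - red) / (nir + red)

def change_vector(b1, b2):
    """CV (assumed form): sum over bands of squared differences."""
    return ((b2 - b1) ** 2).sum(axis=0)

def relative_change_vector_max(b1, b2):
    """RCVMAX (assumed form): squared differences scaled by the larger value."""
    denom = np.maximum(b1, b2)
    denom = np.where(denom > 0, denom, 1.0)   # avoid division by zero
    return (((b2 - b1) / denom) ** 2).sum(axis=0)

# Rows are bands (blue, green, red, NIR); columns are three toy pixels:
# a dark unchanged pixel, bare soil to vegetation, vegetation to bare soil.
t1 = np.array([[0.02, 0.10, 0.04],
               [0.02, 0.12, 0.08],
               [0.02, 0.15, 0.05],
               [0.02, 0.20, 0.45]])
t2 = np.array([[0.02, 0.04, 0.10],
               [0.02, 0.08, 0.12],
               [0.015, 0.05, 0.15],
               [0.03, 0.45, 0.20]])

d_ndvi = ndvi(t2[2], t2[3]) - ndvi(t1[2], t1[3])
cv = change_vector(t1, t2)
rcv = relative_change_vector_max(t1, t2)

# Hypothetical thresholds; 1 = vegetation gain, -1 = loss, 0 = no change.
ndvi_thr, cv_thr, rcv_thr = 0.2, 0.01, 0.1
ndvi_only = np.where(d_ndvi > ndvi_thr, 1, np.where(d_ndvi < -ndvi_thr, -1, 0))
spectral_change = (cv > cv_thr) & (rcv > rcv_thr)
miica_like = np.where(spectral_change, ndvi_only, 0)
```

For the dark pixel, a small absolute change in the red and near-infrared bands produces a large dNDVI, so the NDVI difference alone labels it as vegetation gain. Its CV is far below the threshold, so the combined rule leaves it unchanged while the two real conversions are retained.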

The combination of the object-oriented method and MIICA yielded the most accurate depiction of vegetation change in this study. This method segments the image and merges objects with similar spectral features, a process that greatly reduces salt-and-pepper noise (Sun et al. 2011). In addition, the threshold for direct change detection could not be set with complete accuracy, and pixels with values near the threshold were likely to switch between change and no change after small changes in the threshold. However, when these pixels were aggregated with other pixels into an object, the impact of threshold changes was minimized. Therefore, detection accuracy was higher than that achieved with the pixel-based MIICA alone. The pixel-based MIICA is based on a simpler process and is more suitable for change detection using low- and medium-resolution remotely sensed images. In contrast, the object-oriented MIICA is more appropriate for the detection of fine changes from high spatial resolution images.
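The stabilizing effect of aggregation can be shown with a small sketch. The segmentation itself is not reproduced here; the label array stands in for the output of any segmentation step. Using the object mean of dNDVI as the object-level feature is an assumption made for illustration, and the values and thresholds are hypothetical.

```python
import numpy as np

def object_mean(values, segments):
    """Replace each pixel value by the mean of its segment."""
    labels = segments.ravel()
    sums = np.bincount(labels, weights=values.ravel())
    counts = np.bincount(labels)
    means = sums / np.maximum(counts, 1)
    return means[segments]

# Two hypothetical objects: label 0 (unchanged) and label 1 (vegetation gain).
segments = np.array([[0, 0, 1, 1],
                     [0, 0, 1, 1]])
d_ndvi = np.array([[0.02, 0.31, 0.45, 0.29],
                   [0.01, 0.03, 0.50, 0.48]])

def pixel_decision(thr):
    return d_ndvi > thr

def object_decision(thr):
    return object_mean(d_ndvi, segments) > thr

pixel_gain = pixel_decision(0.30)
object_gain = object_decision(0.30)

# Shift the threshold slightly in both directions and compare the outcomes.
pixel_stable = np.array_equal(pixel_decision(0.28), pixel_decision(0.32))
object_stable = np.array_equal(object_decision(0.28), object_decision(0.32))
```

At a threshold of 0.30, the pixel-based decision flags one isolated pixel in the unchanged object and misses one pixel in the changed object. The object-based decision labels both objects consistently and does not change when the threshold moves between 0.28 and 0.32.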

Vegetation changes in the Purple Mountains

From 2004 to 2009, the land type conversion at the edge of the Purple Mountains mainly consisted of the transformation of farmland and forest into lakes and buildings (Fig. 11a–c). This was the main cause of vegetation loss during this period, and the increase in construction was the most important factor (Table 5). In other words, development during this period occurred mostly at the periphery of the Purple Mountains. Nevertheless, vegetation cover increased in some peripheral areas. The largest such area was located in the southwest corner of the Purple Mountains (Fig. 11d), which was converted from a built-up area into the present Meihua Valley scenic spot.

During 2009–2015, the available land at the foot of the mountains (mainly used for vegetable production) decreased. At the same time, public awareness of environmental protection and of the greening concept increased, and vegetation coverage in and around the building area increased (Fig. 12d, e). This occurred because the local administrative agency of the scenic location dismantled some illegal buildings and scattered houses of original residents and implemented re-greening projects on those sites to improve the landscape aesthetics. This was an important reason for the large increase in the vegetation area (Table 5). During this period, the vegetation loss in the interior of the mountains was attributed to the expansion of buildings to meet tourism needs, for example to the west and south of the Dr. Sun Yat-sen Mausoleum (Fig. 12a, b). Vegetation loss also occurred near roads and buildings (Fig. 12c). The management agencies should evaluate these effects and develop management guidelines. These might include strengthening vegetation protection and restoration around the “Wild Road” for climbing, improving vegetation management alongside roads, and reducing the destruction of vegetation by tourists. These measures could prevent additional vegetation losses. In addition, some building expansions may violate regulations, and managers should focus on this problem. Relevant departments should also follow the ecological priority principle in the forest management process and appropriately weigh the ecological and economic needs of forestry. Management should be guided by the relationships between forestry development, society, environment, and nature (Zhang and Du 2007).

We noticed some disadvantages of the proposed method: (1) The detection methods used in this study can only detect changes between vegetation and non-vegetation classes. Changes in specific vegetation types (such as grassland, shrub, and woodland) need to be determined by visual interpretation of the original image or by other classification methods. (2) The change threshold was determined by adjusting the initial threshold and the number of iterations based on the reference data. This procedure was time-consuming, and the resulting threshold was often affected by the selection of the representative change regions. Therefore, the method for setting the threshold requires improvement to obtain reliable vegetation change detection results. (3) The current results do not clearly indicate the specific reasons for vegetation loss. To provide more reliable data and recommendations for the sustainable management of scenic locations, in future research we will develop automatic methods to identify and quantify areas of specific conversion types (e.g., paths, buildings).
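The dependence of the threshold on reference data noted in point (2) can be illustrated with a simple grid search. This is a substitute technique shown for illustration and not the iterative procedure used in this study: each candidate threshold is scored by Cohen's kappa against reference labels, and the best one is retained. The index values, reference labels, and candidate thresholds are hypothetical.

```python
import numpy as np

def cohen_kappa(reference, predicted):
    """Cohen's kappa for two binary label arrays (change / no change)."""
    reference = np.asarray(reference, dtype=bool)
    predicted = np.asarray(predicted, dtype=bool)
    p_observed = np.mean(reference == predicted)
    p_ref, p_pred = reference.mean(), predicted.mean()
    p_chance = p_ref * p_pred + (1 - p_ref) * (1 - p_pred)
    if np.isclose(p_chance, 1.0):
        return 0.0   # degenerate case: only one class present
    return (p_observed - p_chance) / (1 - p_chance)

def best_threshold(index_values, reference, candidates):
    """Return the candidate threshold with the highest kappa and its score."""
    scores = [cohen_kappa(reference, index_values > t) for t in candidates]
    best = int(np.argmax(scores))
    return candidates[best], scores[best]

# Hypothetical change index at eight reference points and their true labels.
index_values = np.array([0.05, 0.10, 0.15, 0.22, 0.35, 0.40, 0.55, 0.60])
reference = np.array([0, 0, 0, 0, 1, 1, 1, 1], dtype=bool)
candidates = [0.1, 0.2, 0.3, 0.4, 0.5]

thr, kappa = best_threshold(index_values, reference, candidates)
```

Because the selected threshold is tied to the reference points supplied, a different choice of representative change regions can shift the result, which is the sensitivity described above.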

Conclusions

Our accuracy assessment results indicated that MIICA was the best suited of the four pixel-based direct change detection methods for detecting vegetation change, owing to its combination of vegetation indices and other spectral parameters. Furthermore, the proposed object-oriented MIICA was the best method for detecting change using high spatial resolution images. During 2004–2009, the vegetation changes occurred mainly at the periphery of the Purple Mountains, and the demand for tourism development was an important reason. During 2009–2015, the greening work around the construction areas increased the area of vegetation cover at the periphery of the area. However, some vegetated areas declined in extent near roads and buildings in the central Purple Mountains.

The subtle vegetation change information derived from high spatial resolution satellite images informed the local agencies of management effectiveness and provided data for use in strengthening tourism management, land use monitoring, and environmental education to prevent further vegetation disturbances.

Change history

11 June 2019

The article “Integrating cross-sensor high spatial resolution satellite images to detect subtle forest vegetation change.

References

Apan AA (1997) Land cover mapping for tropical forest rehabilitation planning using remotely-sensed data. Int J Remote Sens 18(5):1029–1049

Baatz M, Schäpe A (1999) Object-oriented and multi-scale image analysis in semantic networks. In: 2nd international symposium: operationalization of remote sensing

Banko G (1998) A review of assessing the accuracy of classifications of remotely sensed data and of methods including remote sensing data in forest inventory. International Institute for Applied Systems Analysis Interim Report IR-98-081. Laxenburg, Austria

Byrne GF, Crapper PF, Mayo KK (1980) Monitoring land-cover change by principal component analysis of multitemporal Landsat data. Remote Sens Environ 10(3):175–184

Chen YL, Pu TX (2006) Study on fusion algorithms of QuickBird pan and multi-spectral images. Annual Academic Meeting of China Land Science Society, vol 7

Chen G, Hay GJ, Carvalho LMT, Wulder MA (2012) Object-based change detection. Int J Remote Sens 33(14):4434–4457

Chen J, Lu M, Chen XH, Chen J, Chen LJ (2013) A spectral gradient difference based approach for land cover change detection. ISPRS J Photogramm Remote Sens 85(2):1–12

Cheng XY, Ni JZ (2004) Zijinshan Mountain forest resources development analysis. J Jiangsu For Sci Technol 31(1):6–8

Civco DL, Hurd JD, Wilson EH, Song M, Zhang Z (2002) A comparison of land use and land cover change detection methods. In: ASPRS-ACSM conference and FIG XXII congress

Congalton R (1991) A review of assessing the accuracy of classifications of remotely sensed data. Remote Sens Environ 37(2):35–46

Coppin PR, Bauer ME (1994) Processing of multitemporal Landsat TM imagery to optimize extraction of forest cover change features. IEEE Trans Geosci Remote Sens 32(4):918–927

Diao JJ, Gong XY, Li MS (2018) A comprehensive change detection method for updating land cover data base. Remote Sens Land Resour 30(1):157–165

Dong LN, Ju F, Niu RZ, Song HL (2007) Status and analysis of state-protected plants in Zijin Mountain. J Jiangsu For Sci Technol 34(2):51–54

Dong LN, Xu HB, Ju F, Liu SW, Wan ZZ (2011) Plant diversity and its conservation strategies in Zijin Mountain National Forest Park in Nanjing. J Jiangsu For Sci Technol 38(1):30–35

Du WB, Xie YJ, Yang W (2017) Study on remote sensing image fusion that combines changes between HSV and PCA. Jiangsu Sci Technol Inf 11:44–46

Gamanya R, Maeyer PD, Dapper MD (2009) Object-oriented change detection for the city of Harare, Zimbabwe. Expert Syst Appl 36(1):571–588

Gillespie AR, Kahle AB, Walker RE (1987) Color enhancement of highly correlated images—channel ratio and ‘chromaticity’ transformation techniques. Remote Sens Environ 22(3):343–365

Gong JZ, Liu YS, Xia BL, Chen JF (2010) Effect of wavelet basis and decomposition levels on performance of fusion images from remotely sensed data. Geogr Geo Inf Sci 26(2):6–10

Han F (2016) The change detection combining spectral gradient difference with object-oriented method for high resolution remote sensing images. Doctoral dissertation, Southwest Jiaotong University

Huang J, Gu H (2010) QuickBird image fusion based on Gram-Schmidt transform. In: 2010 academic annual conference proceedings of geographic information and internet of things forum and Jiangsu Provincial Surveying and Mapping Society, vol 2

Huang CQ, Goward SN, Masek JG, Thomas N, Zhu Z, Vogelmann JE (2010) An automated approach for reconstructing recent forest disturbance history using dense Landsat time series stacks. Remote Sens Environ 114(1):183–198

Jin SM, Yang LM, Danielson P, Homer C, Fry J, Xian G (2013) A comprehensive change detection method for updating the national land cover database to circa 2011. Remote Sens Environ 132(10):159–175

Kennedy RE, Yang Z, Cohen WB (2010) Detecting trends in forest disturbance and recovery using yearly landsat time series: 1. Landtrendr—temporal segmentation algorithms. Remote Sens Environ 114(12):2897–2910

Li L, Wang L, Sun XP, Ying GW (2017) Remote sensing change detection method based on object-oriented change vector analysis. Remote Sens Inf 32(6):71–77

Liu L (2015) Changes of vegetation and its effect on environmental quality in Purple Mountain, Nanjing. Fujian Agric 6:209–211

Mo DL, Liu KJ, Cao BC, Bao YQ (2013) Remote sensing image change detection based on principal component analysis. Image Technol 25(5):53–56

Peng SC, Liu J (2007) Multi-spectral image fusion method based on high pass filter. Appl Res Comput 24(8):218–219

Pu RL, Landry S (2012) A comparative analysis of high spatial resolution IKONOS and WorldView-2 imagery for mapping urban tree species. Remote Sens Environ 124(9):516–533

Qi L (2013) Scenic resource meticulous extraction based on multi-source information fusion. Thesis, Civil Aviation University of China

Qi C, Lalasia BM (2014) Assessment of vegetation response to ungulate removal utilizing high resolution remotely sensed data. Status report for the Makua and Oahu implementation plans. Appendix ES-5

Rouse JW, Haas RH, Schell JA, Deering DW (1973) Monitoring vegetation systems in the Great Plains with ERTS. In: 3rd ERTS symposium. NASA SP-351, vol I, pp 309–317

Sun XX, Zhang JX, Yan Q, Gao JX (2011) A summary on current techniques and prospects of remote sensing change detection. Remote Sens Inf 30(1):119–123

Tan YS, Shen ZQ, Jia CY (2008) Study on fusion algorithms of QuickBird pan and multi spectral images. Bull Sci Technol 24(4):498–503

Teffera ZL, Li J, Debsu TM, Menegesha BY (2018) Assessing land use and land cover dynamics using composites of spectral indices and principal component analysis: a case study in Middle Awash subbasin, Ethiopia. Appl Geogr 96:109–129

Tso B, Mather PM (2001) Classification methods for remotely sensed data. Taylor & Francis Press, New York

Verbesselt J, Hyndman R, Newnham G, Culvenor D (2010) Detecting trend and seasonal changes in satellite image time series. Remote Sens Environ 114(1):106–115

Wang Z, Li MY (2017) Methods of color plan of landscape forest at all seasons—a case study of Purple Mountain. For Resour Manag S1:70–76

Weismiller RA, Kristof SJ, Scholz DK, Anuta PE, Momin SA (1977) Change detection in coastal zone environments. Photogramm Eng Remote Sens 43(12):1533–1539

Witharana C, Larue MA, Lynch HJ (2016) Benchmarking of data fusion algorithms in support of earth observation based Antarctic wildlife monitoring. ISPRS J Photogramm Remote Sens 113:124–143

Xue XQ, Zhao RS (1982) Application of electronic computing techniques in forecasting the increase and decrease trend of Dendrolimus punctatus in Purple Mountain forest district of Nanjing. J Jiangsu For Sci Technol 2:7–9

Yang CJ, Ou XK, Dang CL, Zhang ZX (2000) Detecting the change information of forest vegetation in Lushui county of Yunnan province. Geo Inf Sci 2(4):71–74

Yuan D, Elvidge C (1998) NALC land cover change detection pilot study: Washington, DC area experiments. Remote Sens Environ 66(2):166–178

Zhang JC, Du TZ (2007) Vegetation restoration and reconstruction in the hilly and gully regions of the middle and lower reaches of the Yangtze River. China Forestry Press, Beijing, pp 349–350

Zhou GL, Zhang MJ, Chen X (2008) The situation and measure of vegetation along the shortcuts in Zijin Mountain. J Chin Urban For 6(2):41–43

Zhu CJ, Yang SZ, Cui SC, Wei C, Chen C (2015) Accuracy evaluating method for object-based segmentation of high resolution remote sensing image. High Power Laser Part Beams 27(6):37–43

Additional information

Project funding: The work was supported by the National Natural Science Foundation of China (31670552), the PAPD (Priority Academic Program Development) of Jiangsu provincial universities and the China Postdoctoral Science Foundation funded project. Additionally, this work was performed while the corresponding author acted as an awardee of the 2017 Qinglan Project sponsored by Jiangsu Province.

The original version of this article was revised due to a retrospective Open Access order.

Corresponding editor: Tao Xu.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Zhu, F., Shen, W., Diao, J. et al. Integrating cross-sensor high spatial resolution satellite images to detect subtle forest vegetation change in the Purple Mountains, a national scenic spot in Nanjing, China. J. For. Res. 31, 1743–1758 (2020). https://doi.org/10.1007/s11676-019-00978-x

DOI: https://doi.org/10.1007/s11676-019-00978-x