Abstract

We consider the robust version of items selection problem, in which the goal is to choose representatives from a family of sets, preserving constraints on the allowed items’ combinations. We prove NP-hardness of the deterministic version, and establish polynomially solvable special cases. Next, we consider the robust version in which we aim at minimizing the maximum regret of the solution under interval parameter uncertainty. We show that this problem is hard for the second level of polynomial-time hierarchy. We develop exact solution algorithms for the robust problem, based on cut generation and mixed-integer programming, and present the results of computational experiments.

Similar content being viewed by others

1 Introduction

In this paper we consider a robust variant of combinatorial optimization problem of selecting \(p_i\) items from m sets of items, \(i=1,\ldots ,m\), with the objective to minimize their total cost. We assume the possibility that some choices of pairs of items are forbidden. We further assume that costs of items are not given precisely, but only intervals of their true values are known.

This problem models many practical situations, when we need to design a feasible configuration, subject to uncertain costs of its elements. For example, consider designing a production line in a flexible manufacturing system [16, 22]. The production line consists of m sites arranged for performing a sequence of operations. Execution of each operation requires a specific machine tool, and thus for each operation the designer must choose a single eligible tool among a variety of alternatives (for example, belonging to different types or brands of tools, suitable for a given operation). The costs of tools are subject to random fluctuations, due to the number of factors, for example: time difference between design phase and the purchase phase, additional installation costs, additional customization costs, etc. Consequently, it is usually not possible to determine the exact cost of each tool at the design stage. Moreover, not every tool is compatible with each other, and it may not be possible to install arbitrary selection of tools in a common production line. Thus the choice of possible tool configurations is restricted. For decision maker, the goal is to select eligible tools for each operation’s site, so that the total cost would be minimal.

As another example, consider the workflow planning for an engineering project. Decision makers need to assign teams of people to work on developing components of the whole system. For each component they would list a group of candidates that are suitable to working on it. Then they would decide on the actual team members by selecting a required number of people from each group of candidates. Some people, being adequate candidates for more than one team, may be listed more than once. However, due to the assumed work schedule, they cannot be assigned to two teams that work simultaneously. The final cost of completing each component is uncertain, as it depends not only on the skills and experience of selected people, but also on components’ complexity, applied technologies, and possibly many other factors.

1.1 Related work

The basic variant of this problem has been first considered in [11] under the name Representatives Selection Problem, where we are allowed to select one item from each set of alternatives. In order to alleviate the effects of cost uncertainty on decision making, the min-max and min-max regret criteria [3, 18] have been proposed to assess the solution quality. The problem formulations using these criteria belong to the class of robust optimization problems [25]. Such approach appears to be more suitable for large scale design projects than an alternative stochastic optimization approach [17], when: 1) decision makers do not have sufficient historical data for estimating probability distributions; 2) there is a high factor of risk involved in one-shot decisions, and a precautionary approach is preferred. The robust approach to discrete optimization problems has been applied in many areas of industrial engineering, such as: scheduling and sequencing [12, 13, 20], network optimization [5, 7], assignment [2, 24], and others [15].

Note that deterministic version of Representatives Selection Problem is easily solvable in polynomial time. For interval uncertainty representation of cost parameters the problem can still be solved in polynomial time, both in case of minimizing the maximum regret and the relative regret [11]. However, in case of discrete set of scenarios, the problem becomes NP-hard even for 2 scenarios, and strongly NP-hard when the number of scenarios K is a part of the input. In [10] authors prove that strong NP-hardness holds also when sets of eligible items are bounded. In [19] an \(O(\log K / \log \log K)\)-approximation algorithm for this variant was given.

In this paper we consider a generalization of the Representatives Selection Problem, where it is required to select a specific number of items from each set, and constraints may be imposed on the selected items’ configurations, by specifying the pairs of items that cannot be selected simultaneously. We assume the interval representation of cost uncertainty. This representation is useful when enumeration of all possible scenarios is impractical or unwieldy, as is usually the case in the aforementioned problem applications.

2 Problem formulation

We start from defining a deterministic version of the considered problem, which we call Restricted Items Selection Problem (abbreviated RIS). Given are m sets \(I_i\) of items, with integer cost \(c_{ij}\) associated with each \(j \in I_i\), \(r_i = |I_i|\). Given is a set T of tuples (i, k, j, l), \(i,j \in \{ 1,\ldots ,m \}\), \(k \in I_i\), \(l \in I_j\), indicating that items k and l cannot be selected simultaneously (i.e., if k is selected, then l cannot be selected, and if l is selected, then k cannot be selected). The set T contains all the forbidden pairs. Given are m positive integers \(p_i < |I_i|\). The goal is to select for each \(i=1,\ldots ,m\) a subset \(S_i \subset I_i\) of exactly \(p_i\) items, so that the total cost of selected items is minimized.

Let \(x_{ij} = 1\) if jth item from ith set is selected, \(x_{ij} = 0\) otherwise. The problem can be stated as:

subject to:

Let us now define the version of the problem with uncertain data, which we call Interval Min-Max Regret Restricted Items Selection Problem (abbreviated IRIS). For each item, given is an interval of possible costs, \(c_{ij}^- \le c_{ij} \le c_{ij}^+\). The set of all cost scenarios is a product of intervals, denoted:

Let \(\mathbf{x} \) be the vector of variables \(x_{ij}\) (indexing (i, j) agreeing with the cost vector \(\mathbf{c} \)). We define the (maximum) regret of a solution \(\mathbf{x} \) as the difference between the solution value in the worst-case scenario, and the best possible value in the worst-case scenario:

where:

is the set of feasible solutions (choices of items from each set \(I_i\)). The objective of the IRIS problem is to find such a choice \(\mathbf{x} \) that minimizes the maximum regret:

2.1 Related problems

The considered problem generalizes two related fundamental combinatorial optimization problems, namely, the Selecting Items Problem [4, 9], and the aforementioned Representatives Selection Problem [11], of which the robust variants have been investigated in the literature.

-

1. Selecting items problem (SI) Given is a set I of items, with a positive integer \(c_i\), denoting item’s cost, associated with each \(i \in I\). Given is positive integer \(p < |I|\). The goal is to select a subset \(S \subset I\) of exactly p items, so that the total cost of S is minimized. Let \(\mathbf{x} \) be the characteristic vector of S, i.e., \(x_{i} = 1\) if \(i \in S\), and \(x_i=0\) otherwise. The problem can be stated as:

$$\begin{aligned} \min \left\{ \sum _{i=1}^n x_i c_i : \; \sum _{i=1}^n x_i = p, \; \mathbf{x} \in \{ 0, 1 \}^n \right\} . \end{aligned}$$ -

2. Representatives selection problem (RS) Given are m sets \(I_i\) of items, with a positive integer costs \(c_{ij}\) associated with each \(j \in I_i\), \(r_i = |I_i|\). The goal is to select a single item from each set, \(J=(j_1, j_2, \ldots , j_m)\), \(j_i \in I_i\), so that the total cost is minimized. Let \(x_{ij} = 1\) if jth item from ith set is selected, \(x_{ij} = 0\) otherwise. The problem can be stated as:

$$\begin{aligned} \min \left\{ \sum _{i=1} \sum _{j=1}^{r_i} x_{ij} c_{ij} : \; \forall _{i=1,\ldots ,m} \; \sum _{j=1}^{r_i} x_{ij} = 1, \; \forall _{j=1,\ldots ,r_i} \; x_{ij} \in \{ 0,1 \} \right\} . \end{aligned}$$

If \(p_i=1\) for each \(i=1,\ldots ,m\), and \(T = \emptyset \), then problem RIS is equivalent to RS. If \(T = \emptyset \), then problem RIS is equivalent to m independent instances of SI problem.

3 Problem complexity

Deterministic problems SI and RS can be solved in polynomial time (trivially using sorting). However, forbiddance constraints make these problems much more difficult in general.

Theorem 1

Problem RIS is NP-hard, even when \(p_i = 1\) for \(i=1,\ldots ,m\).

Proof

We present reduction from the independent set problem [14]. Let \(G=(V,E)\) be an undirected graph with \(|V| = n\), and we ask if G has an independent set of size at least K. We construct an instance of RIS as follows. Let us enumerate vertices in V by consecutive numbers \(1,\ldots ,n\), denoting ith vertex by \(v_i\). For each vertex \(v_i\) we create an item set \(I_i\), containing an item of cost 0. For each edge \((v_i, v_j) \in E\), we add tuple (i, 1, j, 1) to set T, forbidding simultaneous choice of items corresponding to \(v_i\) and \(v_j\). To each set \(I_i\) we also add a dummy item with cost 1.

Note that a feasible solution to RIS instance always exists (dummy items are not involved in constraints T). In such a solution we have a choice of items, one from each set \(I_i\), such that the corresponding nodes \(v_i\) are not directly connected in G. The cost of an optimal solution of RIS instance is less or equal to \(n-K\) if and only if there is an independent set of size K in G. \(\square \)

Consequently, the uncertain data problem IRIS is NP-hard as well. We can, however, identify the following special cases of RIS by imposing additional restrictions on the set of constraints. By exploiting these properties we are able to improve the solution algorithms of uncertain IRIS problem in these special cases (see Sect. 4).

Theorem 2

Problem RIS can be solved in polynomial time in any of the following special cases:

-

(1)

Each item appears at most once in any constraint in T.

-

(2)

If \((i,k,j,l) \in T\) and \((j,l,p,q) \in T\), then \((i,k,p,q) \in T\) (that is, the “forbiddance” relation between pairs of items is transitive).

Proof

To see that RIS can be solved in polynomial time in case 1), we observe that if each item is involved in at most one constraint in T, then the constraint matrix of integer linear program (1)–(4) is totally unimodular. This can be verified using the following characterization (see [26], chapter 19): a \((0,\pm 1)\) matrix with at most two nonzeros in each column is totally unimodular, if the set of rows can be partitioned into two classes, such that two nonzero entries in a column are in the same class of rows if they have different signs and in different classes of rows if they have the same sign. These conditions are satisfied if we take the rows corresponding to constraints (2) as the first class, and rows corresponding to constraints (3) as the second class.

To prove case 2), we construct an instance of min-cost max-flow network problem that is equivalent to the corresponding RIS instance. First, note that due to the transitivity of “forbiddance” relation, items can be grouped into equivalence classes. From each equivalence class, at most one item can be selected. If an item is not involved in any constraint, it would belong to an equivalence class of cardinality 1. The network consists of m source nodes, two inner layers, followed by a single terminal node (see also Example 1 and Fig. 1). Each source node corresponds to the set \(I_i\), \(i=1,\ldots ,m\), and provides a supply of \(p_i\) units of flow. Nodes in the first inner layer correspond to items in sets \(I_i\), and are connected by unit-capacity arcs to their corresponding source nodes. Each such arc has costs \(c_{ij}\). The second inner layer consists of nodes corresponding to each equivalence class of items. Nodes from the first inner layer, that correspond to the items involved in a common equivalence class, are connected to the common node in the second layer. Arcs between first and second inner layer have unit-capacity and zero cost. Finally, there are unit-capacity and zero-cost arcs from each node in the second layer and the terminal node. The flow network can be constructed in polynomial time given an instance of RIS. \(\square \)

Example 1

Consider an instance of RIS with \(m=3\) item sets, each containing \(r_i=3\) items, with the requirement to select \(p_i=2\) items from each set. The set of forbidden pairs is

Note that the first three pairs form a transitive subset, thus only one item can be selected from it. The corresponding flow network is presented on the Fig. 1. The first inner layer nodes correspond to items, denoted \(i_{p,q}\), and the second inner layer nodes, denoted \(e_k\), correspond to the subsets of items from which only a single item can be selected.

Example of flow network illustrating case (2) of Theorem 2

Note that the reduction used in Theorem 1 implies that the choice of dummy items in the optimal solution of RIS would correspond to the complement of the maximum independent set, that is, to the minimum vertex cover of the input graph G. Consequently, if there exists an \(\alpha \)-approximation algorithm for RIS, then we would also have an \(\alpha \)-approximation algorithm for the minimum vertex cover problem. No such algorithm is possible for \(\alpha < 2\), if the Unique Games Conjecture is true [21].

The special cases of uncertain IRIS problem described in Theorem 2 admit the general 2-approximation algorithm for the class of interval data min-max regret problems with polynomially solvable nominal sub-problems [18]. This algorithm consists of solving a RIS instance for the mid-point scenario (i.e., \(c^S_{ik} = \frac{c_{ik}^- + c_{ik}^+}{2}\), for \(i=1,\ldots ,m\), \(k=1,\ldots ,r_i\)).

We conclude this section with the last statement regarding the complexity of general robust IRIS problem. It turns out that this problem is likely to be even much harder than its already NP-hard deterministic counterpart RIS. We show that it is hard for the complexity class \(\Sigma _2^p = NP^{NP}\), a set of problems that remain NP-hard to solve even if we can use an oracle that answers NP queries in O(1) time (i.e., the set of problems that can be solved in polynomial time on nondeterministic Turing machine that can access an oracle for NP-complete problem in every step of its computations) [27].

The class \(\Sigma _2^p\) can be also characterized as the set of all decision problems that can be stated in the second-order logic using a pair of universal and existential quantifiers. Let us define the following prototypical problem in this class:

Problem: 2-Quantified 3-DNF Satisfiability (abbreviated \(\exists \forall \) 3SAT)

Instance: Two sets \(X=\{ x_1, \ldots , x_s \}\), \(Y=\{ y_1, \ldots , y_t \}\) of Boolean variables. A Boolean formula \(\phi (X,Y)\) over \(X \cup Y\) in disjunctive normal form, where every clause consists of exactly 3 literals.

Question: Is \(\exists _X \forall _Y \; \phi (X, Y)\) true?

Example 2

The following formula \(\phi \) over \(X = \{ x_1, x_2, x_3 \}\) and \(Y = \{ y_1, y_2 \}\) is a positive instance of \(\exists \forall \) 3SAT problem:

Theorem 3

Problem \(\exists \forall \) 3SAT is \(\Sigma _2^p\)-complete [27].

Theorem 4

Problem IRIS with \(p_i=1\), \(i=1,\ldots ,m\), is \(\Sigma _2^p\)-hard.

Proof

Given any 3-DNF formula \(\phi \) we construct and instance of Interval Minmax Regret RIS as follows. For each clause \(\mathcal {C}_i\), \(i=1,\ldots ,m\), we create a set of items \(I_i\). Each set contains three types of items:

-

1)

an X-item that corresponds to the unique satisfying assignment of x-variables in clause \(\mathcal {C}_i\),

-

2)

a collection of Y-items, denoted \(Y_{i,k}\), each corresponding to every assignment of y-variables that make the clause \(\mathcal {C}_i\) evaluate to false,

-

3)

a special item.

We can assume that each clause contains at least one y-variable (otherwise the instance answer is always “yes”). If there are no x-variables in a given clause, then there is no X-item in the set \(I_i\) (only Y-items and a special item).

For each pair of item sets \(I_i\), \(I_j\), and for each pair of items \(Y_{i,k} \in I_i\), \(Y_{j,l} \in I_j\), we add a “forbidding” constraint (i, k, j, l) to T, whenever:

-

1)

both items correspond to assignment of common variable y, and,

-

2)

item \(Y_{i,k}\) corresponds to the assignment of variable \(y=0\), while item \(Y_{j,l}\) corresponds to the assignment \(y=1\), or vice-versa.

Similarly, we add “forbidding” constraints for pairs of X-items from two sets \(I_i\) and \(I_j\), whenever their satisfying x-assignments contain a common Boolean variable, and they correspond to conflicting assignments of x-variables. Special items are not bound by any “forbidding” constraints.

For example, Let \(\mathcal {C}_1 = (x_1 \wedge y_1 \wedge \bar{y}_2)\), and \(\mathcal {C}_2 = (\bar{x}_1 \wedge y_1 \wedge y_3)\). Then X-item from set \(I_1\) cannot be taken simultaneously with X-item from set \(I_2\), since they both correspond to satisfying assignment of variable \(x_1\), that is \(x_1=1\) in \(\mathcal {C}_1\), and \(x_1=0\) in \(\mathcal {C}_2\). Moreover there are 3 Y-items in \(I_1\), corresponding to unsatisfying assignments of \((y_1,y_2)\), that is 00, 01 and 11; and there are 3 Y-items in \(I_2\), corresponding to unsatisfying assignments of \((y_1,y_3)\), that is 00, 01 and 10. Note that since both clauses share variable \(y_1\), items that correspond to a conflicting assignment form a “forbidden pair” (e.g., Y-item 00 from the first set and Y-item 10 from the second set, etc.).

Let \(B > m\) be a large constant. For each set \(I_i\), each X-item corresponding to the unique x-satisfying assignment has cost interval [0, B]. Each Y-item corresponding to unsatisfying assignment of y-variables has cost interval \([0, B^2]\). The special item has cost \(c^- = c^+ = B+1\), regardless of the scenario.

Note that in the worst-case scenario each item selected by the decision maker will have the interval upper-bound cost \(c^+\), while all other items will have their interval lower-bound costs \(c^-\).

Observe that any optimal solution of the considered IRIS problem corresponds to a valid assignment of truth values to all variables in \(X \cup Y\): the choice of items encoded by the characteristic vector \(\mathbf{x} \) corresponds to the assignment of x-variables, while the best alternative solution in the worst-case scenario, encoded by the characteristic vector \(\mathbf{y} \) in (5), corresponds to the assignment of y-variables. Since \(p_i=1\) for \(i=1,\ldots ,m\), from each set \(I_i\) the decision maker selects a single item, deciding on the solution \(\mathbf{x} \). Subsequently, the worst-case scenario is fixed, and the adversary selects a single item from each set \(I_i\), deciding on an alternative solution \(\mathbf{y} \).

The regret minimizing decision maker would always select either X-item or a special item from any set \(I_i\). This follows from the fact that, if decision maker selects an Y-item, then in the worst-case scenario its cost will be \(B^2\), while the adversary would always be able to choose an X-item with cost 0. The decision maker would be better off never selecting an Y-item, having guaranteed cost no greater than \(B+1\) (the cost of the special item, selected only when it is not possible to select X-item, due to “forbidding” constraints). On the other hand, the regret maximizing adversary would select one of Y-items, whenever possible, which would have cost 0 in the worst-case scenario. Only when it is not possible to choose such an item (due to “forbidding” constraints) would the adversary select another item: either a special item, or an X-item, resulting in the cost B or \(B+1\). This happens when the adversary must choose an y-satisfying assignment in the corresponding clause.

Since the items must be selected preserving all the “forbidding” constraints, their choice corresponds to an assignment of truth values to all Boolean variables without any conflicts.

We will show that there exists an assignment of truth values to x-variables, such that for every assignment of y-variables at least one clause is satisfied, if and only if the value of a solution of the constructed instance is at most \(Z = (m-1)B + m-1\).

Suppose that \(\exists \forall \) 3SAT instance is positive (i.e., at least one clause would always be satisfied both by x- and y-variables). Then there exists an assignment of x-variables, consisting of x-satisfying assignment in at least k clauses (\(1 \le k \le m\)), while in every y-assignment, at least one x-satisfied clause is also y-satisfied. In such case the total cost of decision maker’s choice is \(mB + (m-k)\) (k X-items and \((m-k)\) special items). In at least one set \(I_i\) the adversary cannot select Y-item, and thus is forced to select an X-item. But since that item is also selected by the decision maker, its cost is cancelled when evaluating the regret. Consequently, the total regret is no greater than \(mB + (m-k) - B \le (m-1)B + (m-1) = Z\).

Suppose now that \(\exists \forall \) 3SAT instance is negative. Then for every assignment to x-variables consisting of k x-satisfied clauses, the adversary can select an y-assignment such that all these k clauses evaluate to false. Thus decision maker’s cost is \(mB + (m-k)\), while the adversary’s cost is always zero; the adversary can always select either an Y-item with cost 0, or, from a set \(I_i\) that corresponds to a clause that is unsatisfied by x-variables, an X-item with cost 0 (since in that case the decision maker must have taken a special item from \(I_i\)). Consequently, the maximum regret is at least equal to \(mB > (m-1)B + (m-1) = Z\), which concludes the proof. \(\square \)

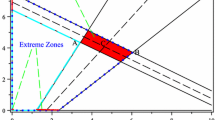

Illustration for Example 3, showing the construction used in the proof of Theorem 4. Each of the 4 sets contains: an X-item with cost interval [0, B], a collection of Y-items with cost intervals \([0,B^2]\), and a special item with cost interval \([B+1,B+1]\). In the worst-case scenario, the items selected by decision maker have upper-bound costs, while all other items have lower bound costs

Example 3

Consider the formula \(\phi \) from Example 2, and let the assignment of x-variables be \((x_1, x_2, x_3) = (1,1,0)\). Then first 3 out of 4 clauses are x-satisfied, and it can be easily verified that all four possible y-assignments to \((y_1, y_2)\) would result in 1 out of the first 3 clauses also y-satisfied.

This formula corresponds to the following instance of IRIS problem shown on Fig. 2. Each item set contains an X-item that corresponds to the satisfying assignment of x-variables, and Y-items that correspond to all possible unsatisfying assignments of y-variables, as well as special items. Forbidden pairs between items from different sets are marked with dashed lines (for example, Y-item from item set 1 cannot be taken simultaneously with two first Y-items from item set 4, as the former corresponds to \(y_1=0\), while the latter to \(y_1=1\), etc.). The solution in which X-items are selected from first 3 sets, and the special item from the set 4, has maximum regret equal to \(3B+1\), since the adversary is forced to select an X-item from one of the first 3 sets.

4 Solution algorithms

A standard technique for solving a general class of min-max regret optimization problems, for which the deterministic (nominal) version can be written as integer linear program, is based on solving a sequence of relaxed problems, with successively generated cuts in order to tighten the relaxation (see e.g., [3]). We develop a solution algorithm for IRIS based on this approach. Note that the worst-case cost scenario \(\mathbf{c} (\mathbf{x} )\) is an extreme point in \(\mathcal {U}\), defined as:

which can be written as \(c_{ij}(\mathbf{x} ) = c_{ij}^- - (c_{ij}^- - c_{ij}^+)x_{ij}\). Using this fact we can rewrite the problem (7) as

where \(\mathcal {F}\) is as defined in (6), and further:

subject to:

where \(\mathcal {C} = \mathcal {F}\) is the set of all feasible solutions of the RIS problem (6).

The above problem has exponentially many constraints (excluding trivial cases). Instead of solving it directly, we relax (10) by selecting a subset \(\mathcal {C} \subset \mathcal {F}\) of small size. After solving relaxed problem we obtain a solution \((\hat{\mathbf{x }}, \hat{z})\). Vector \(\hat{\mathbf{x }}\) is a feasible solution of (7), but not necessarily optimal. We can evaluate the maximum regret (5) for \(\hat{\mathbf{x }}\), and use the value \(\hat{z}\) to check if \(\hat{\mathbf{x }}\) is also feasible for the non-relaxed problem, by testing if

If this is the case, then \(\hat{\mathbf{x }}\) must be optimal for (7). Otherwise, the computed maximum regret \(R(\hat{\mathbf{x }})\) produces an alternative solution \(\hat{\mathbf{y }}\), which can be added to the set \(\mathcal {C}\), tightening the relaxation. We resolve the new relaxed problem, and repeat the above procedure until either an optimal solution is found, or the stopping condition is met. The overview of this solution method is presented as Algorithm 1.

Remarks

-

1.

Note that each time we solve the relaxed problem (9)–(10) we establish an increasingly better lower bound value LB on (7) [by taking the optimal value of the objective function (9)]. We may also discover subsequently better upper bound values UB, since each \(\hat{\mathbf{x }}\) is feasible for (7). Thus the above method allows to determine the relative gap \(g = (UB - LB) / UB\) to the optimal solution value. This is very useful in assessing the quality of suboptimal solutions.

-

2.

For large problem instances, the number of generated cuts \(|\mathcal {C}|\) may become very large in later iterations of the algorithm. This may significantly slow down the algorithm. However, many of these cuts would be inactive for optimal solution. Consequently, in the computational experiments we set the time limit (30 secs.) for solving the relaxed problem (9)–(10) in step 2, and if this limit is exceeded before an optimal solution to relaxation was found, we remove from \(\mathcal {C}\) few constraints with the largest linear slacks in the previous solution, and retry solving. This assures that the size of the relaxed problem remains within reasonable range (as it would otherwise blow up exponentially). Note, however, that in this process some constraints that would become essential at later iterations might be removed; they would need to be generated again and added to \(\mathcal {C}\), and this may potentially consume additional computational time.

-

3.

When solving the relaxed problem (9)–(10) using branch and bound scheme, we may obtain a number of feasible solutions that can be used for the warm-start initialization when resolving with a new cut added. This was also implemented in the computational experiments.

-

4.

For “transitive” instances (as in the second case of Theorem 2), solving the auxiliary RIS subproblem in step 4 can be performed using a dedicated polynomial time algorithm for min-cost max-flow [1]. Otherwise the resulting integer linear problem can be solved via general branch & bound.

4.1 Initializing the set of cuts

The performance of the algorithm described above depends on the choice of initial set of vectors \(\mathcal {C} = \{ \mathbf{y} ^{(k)} \}\) in Step 1. A simplest way to initialize it is to select a number of extreme scenarios \(\mathbf{c} ^{(k)}\) (vertices of \(\mathcal {U}\)), and obtain vectors \(\mathbf{y} ^{(k)}\) by solving deterministic RIS problem for these scenarios.

We initialize this set by randomly sampling 100 extreme scenarios and solving RIS problem for them. We also add to this set the set of solutions found using an evolutionary heuristic method, and use their best value as an initial upper bound UB in the main algorithm.

In the initialization stage, we first compute the mid-point scenario solution, and use it as an initial solution in an evolutionary heuristic. The mid-point scenario solution may already have a value close to optimal. The latter method iteratively searches for improved solutions by applying mutation and crossover operations to the pool of current best solutions (population). Mutation operation randomly changes the selection of items within each set (the number of changes, as well as the number of affected sets, is also selected randomly). The crossover operation takes a pair of solutions, and replaces randomly selected sets from the first solution with the corresponding sets from the second solution. Each change is performed only if it results in a feasible solution. Both operations are repeated a fixed number of times, resulting in a new population of currently best solutions, which are passed to the next iteration. The final population is returned as a result. In the experiments we used 20 iterations on a population of size 10, applying 100 crossovers and mutations in each iteration.

4.2 Compact formulation for special case

In Sect. 3 we have identified two special cases of the deterministic RIS problem that can be solved in polynomial time. We have shown that if the “forbiddance” relation between the pairs of items is transitive, the problem can be reformulated as min-cost max-flow problem. This property can be exploited in order to derive a compact mixed-integer program (MIP) for the robust version of this special case of the problem.

Given an instance of IRIS satisfying condition 2) of Theorem 2, consider the graph G, with the set of vertices corresponding to the set of all items from the item sets \(I_1, \ldots , I_m\), and edges connecting forbidden item pairs. The vertices are indexed by (i, k), where \(i \in \{ 1,\ldots ,m \}\) and \(k \in \{ 1,\ldots ,r_i \}\). Consider the set of all connected components of G, and let \(\mathcal {E} = \{ E_1, E_2, \ldots \}\), where each \(E_q\) is the set of vertex indices of a connected component of G. These components form equivalence classes of the transitivity relation. Denote \(d = |\mathcal {E}|\). The corresponding min-cost max-flow problem can be formulated as linear program in variables \(\mathbf{y} = [y_{ikq}]\) as follows:

s.t.:

Its dual linear program can be written as:

s.t.:

We also note that the special case of RIS satisfying condition 1) in Theorem 2 can be considered in a similar way, with all \(|E_q| \le 2\).

Consequently, we can replace the inner minimization problem in (8) by the equivalent maximization problem, obtaining the compact MIP formulation of the corresponding IRIS instance as follows:

subject to:

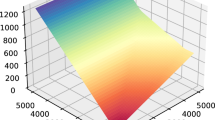

5 Experimental results

In this section we present the results of computational experiments conducted on a sequence of randomly generated problem instances. We examine the performance of the relaxation-based Algorithm 1 (with the initial set of cuts generated by the heuristic algorithm described in the previous section), as well as the performance of compact MIP formulation (applicable to the special case of the problem), described in Sect. 4.2.

Setup Each experiment consists of running the solution algorithm on a set of randomly generated problem instances. The considered parameters of IRIS problem instances are denoted as: m – the number of item sets, \(r_i\) – the number of items in set i, \(p_i\) – the number of items required to be selected from set i, K – the number of constraints of type (3). Column labeled n contains the number of (binary) decision variables in the resulting MIP.

For each fixed parameter values we have generated 10 instances. Bounds of cost intervals of all items were randomly generated integers between 1 and 100.

We distinguish two main types of instances: (a) normal instances and (b) transitive-constraints instances. For the former, the constraints (3) are generated by randomly sampling K pairs of items, each from different set. For the latter, additionally, a transitive closure of the “forbiddance” relation is generated, and the additional constraints are included to restrict the selection of items to one per equivalence class. In the results, an average number of these constraints is reported in the column labeled “\(\hat{K}\)”. Both types of instances were solved using cut generating Algorithm 1, and the results are summarized in Tables 1 and 2. The transitive-constraints instances were also solved directly using MIP described in subsection 4.2; these results are shown in Table 3. Since this method allows for solving even larger instances to optimality in a reasonable time, Table 3 contains also results for additional instance sets, with \(n \ge 300\). The other sets of instances were identical for both solution methods (shown in Table 2 and the first part of Table 3).

In Algorithm 1 the stopping condition was that either an optimal solution has been found, or iteration limit of 500 has been reached. We have also used time limit of 30 sec. for solving relaxed problem (9)–(10) in each iteration of the algorithm. For the solution method based on compact MIP, the time limit of the solver was set to 10 minutes.

All computations have been performed using CPLEX 12.10 optimization software and Python programming language.

Results Each row in Tables 1–3 contains the results from running the algorithm on 10 instances. First, the instance parameters are given, followed by the mean value and standard deviation of elapsed time for the algorithm to terminate. The next columns contain the mean value and standard deviation of the elapsed time, and the iteration count of these runs that resulted in optimal solution. Finally, the number of instances (out of 10) solved to optimality is reported in the column “opt.”, followed by the the mean solution value. For the suboptimal ones, the last column contains the mean relative optimality gap, i.e., the value \(g = (UB - LB)/UB\).

We observe that the hardest instances often contain only few constraints of type (3). This is due to the fact that the larger the number K, the smaller is the set of feasible solutions \(\mathcal {F}\), making it easier to explore. On the other hand, they are typically difficult when it is required to select about the half of the items in each set.

We also note that the compact MIP formulation should be preferred for the spacial case of transitive-constraints instances, since it outperforms the general cut-generating method by at least an order of magnitude. For example, it was able to solve all of 10 instances with 1000 binary variables, while the cut-generating method typically stopped at suboptimal solution already for instances with 100 binary variables. On the other hand, the cut-generating method is applicable to the general case of the considered problem, in particular, when no special constraints structure is guaranteed.

6 Conclusions

We presented a generalization of the representatives selection problem with interval costs uncertainty, and considered it in the robust optimization framework with the maximum regret criterion. We characterized the computational complexity of the problem, and developed both exact and heuristic solution method. The main solution algorithm consists of cut generation and solving a sequence of relaxed problems. An evolutionary heuristic stage was proposed to initialize the algorithm with a set of cuts. While the considered robust problem is difficult to solve to optimality in general, we have demonstrated that it is possible to solve it for moderately-sized problem instances, and approximate solutions using a combination of heuristics and exact method. A special case of the problem, when forbidden pairs constraints form a transitive relation, can be solved much more efficiently with a compact MIP formulation.

The analysis of this problem can be extended in the future for different types of restrictions on item choices. For instance, some items may be required to be selected in bundles, and would be useless without simultaneously selecting specific items from other sets. It would be also interesting to investigate the approximation algorithms for both deterministic and uncertain variants of RIS problem, as well as various techniques to speed-up the general row-generation algorithm. From the theoretical perspective, it is still open whether the IRIS is NP-hard for the special cases described in Theorem 2. Moreover, other forms of uncertainty modeling could be considered, such as polyhedral uncertainty [6] or budgeted uncertainty [8]. It would also be interesting to consider the two-stage and recoverable [23] variants of the restricted item selection problem.

References

Ahuja, R.K., Magnanti, T.L., Orlin, J.B.: Network Flows. Prentice Hall, Upper Saddle River (1988)

Aissi, H., Bazgan, C., Vanderpooten, D.: Complexity of the min-max and min-max regret assignment problems. Oper. Res. Lett. 33(6), 634–640 (2005)

Aissi, H., Bazgan, C., Vanderpooten, D.: Min-max and min-max regret versions of combinatorial optimization problems: a survey. Eur. J. Oper. Res. 197(2), 427–438 (2009)

Averbakh, I.: On the complexity of a class of combinatorial optimization problems with uncertainty. Math. Program. 90(2), 263–272 (2001)

Averbakh, I., Lebedev, V.: Interval data minmax regret network optimization problems. Discr. Appl. Math. 138(3), 289–301 (2004)

Ben-Tal, A., Nemirovski, A.: Robust solutions of uncertain linear programs. Oper. Res. Lett. 25(1), 1–13 (1999)

Bertsimas, D., Sim, M.: Robust discrete optimization and network flows. Math. Program. 98(1–3), 49–71 (2003)

Bertsimas, D., Sim, M.: The price of robustness. Oper. Res. 52(1), 35–53 (2004)

Conde, E.: An improved algorithm for selecting p items with uncertain returns according to the minmax-regret criterion. Math. Program. 100(2), 345–353 (2004)

Deineko, V.G., Woeginger, G.J.: Complexity and in-approximability of a selection problem in robust optimization. 4OR 11(3), 249–252 (2013)

Dolgui, A., Kovalev, S.: Min–max and min–max (relative) regret approaches to representatives selection problem. 4OR 10(2), 181–192 (2012)

Drwal, M.: Robust scheduling to minimize the weighted number of late jobs with interval due-date uncertainty. Comput. Oper. Res. 91, 13–20 (2018)

Drwal, M., Rischke, R.: Complexity of interval minmax regret scheduling on parallel identical machines with total completion time criterion. Oper. Res. Lett. 44(3), 354–358 (2016)

Garey, M., Johnson, D.: Computers and Intractability: A Guide to the Theory of NP-completeness. Freeman, W.H (2002)

Goerigk, M., Schöbel, A.: Algorithm engineering in robust optimization. In: Kliemann, L., Sanders, P. (eds.) Algorithm Engineering, . Lecture Notes in Computer Science, vol. 9220, pp. 245–279. Springer, Cham (2016)

Jahromi, M., Tavakkoli-Moghaddam, R.: A novel 0–1 linear integer programming model for dynamic machine-tool selection and operation allocation in a flexible manufacturing system. J. Manuf. Syst. 31(2), 224–231 (2012)

Kall, P., Wallace, S.W., Kall, P.: Stochastic Programming. Springer, Berlin (1994)

Kasperski, A.: Discrete Optimization with Interval Data: Minmax Regret and Fuzzy Approach. Springer, Berlin (2008)

Kasperski, A., Kurpisz, A., Zieliński, P.: Approximability of the robust representatives selection problem. Oper. Res. Lett. 43(1), 16–19 (2015)

Kasperski, A., Zielinski, P.: Minmax (regret) scheduling problems. In: Y. Sotskov F. Werner (eds.)Sequencing and Scheduling with Inaccurate Data. pp. 159–210, Nova Science Publishers, Inc., Hauppauge, NY (2014)

Khot, S., Regev, O.: Vertex cover might be hard to approximate to within 2- \(\varepsilon \). J. Comput. Syst. Sci. 74(3), 335–349 (2008)

Lee, C.S., Kim, S.S., Choi, J.S.: Operation sequence and tool selection in flexible manufacturing systems under dynamic tool allocation. Comput. Ind. Eng. 45(1), 61–73 (2003)

Liebchen, C., Lübbecke, M., Möhring, R., Stiller, S.: The concept of recoverable robustness, linear programming recovery, and railway applications. In: Ahuja, R.K., Möhring, R.H., Zaroliagis, C.D. (eds.) Robust and online large-scale optimization. Lecture Notes in Computer Science, vol. 5868, Springer, Berlin, Heidelberg (2009)

Pereira, J., Averbakh, I.: Exact and heuristic algorithms for the interval data robust assignment problem. Comput. Oper. Res. 38(8), 1153–1163 (2011)

Roy, B.: Robustness in operational research and decision aiding: A multi-faceted issue. Eur. J. Oper. Res. 200(3), 629–638 (2010)

Schrijver, A.: Theory of linear and integer programming. Wiley, New York (1998)

Stockmeyer, L.J.: The polynomial-time hierarchy. Theor. Comput. Sci. 3(1), 1–22 (1976)

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This research was supported by the National Science Center of Poland, under Grant 2017/26/D/ST6/00423.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Drwal, M. Robust approach to restricted items selection problem. Optim Lett 15, 649–667 (2021). https://doi.org/10.1007/s11590-020-01626-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11590-020-01626-8