Abstract

The Sarmanov family of bivariate distributions is considered the most flexible and efficient of the extended families of the traditional Farlie–Gumbel–Morgenstern family. The goal of this work is twofold. The first part reveals some novel aspects of the Sarmanov family’s dependence structure. In the second part, we study the Fisher information (FI) related to order statistics (OSs) and their concomitants about the shape parameter of the Sarmanov family. The FI helps to find the information contained in singly or multiply censored bivariate samples from the Sarmanov family. In addition, the FI in concomitants of OSs about the mean of the exponential distribution and about the shape parameter of the power distribution is evaluated. Finally, the cumulative residual FI in the concomitants of OSs based on the Sarmanov family is derived.

1 Introduction

The Farlie–Gumbel–Morgenstern (FGM) family is an important and seminal class of bivariate distributions. For a given set of marginal distribution functions (DFs) \(F_{X}(.)\) and \(F_{Y}(.),\) the FGM family is defined as \(G_{X,Y}(x,y)=F_X(x)F_Y(y)[1+\lambda {\overline{F}}_X(x){\overline{F}}_Y(y)],\) \(-1\le \lambda \le 1,\) where \({\overline{F}}_X(x)\) and \({\overline{F}}_Y(y)\) are the survival functions of the random variables (RVs) X and Y, respectively. One important limitation of the FGM family is that the correlation coefficient between its marginals is restricted to the narrow range \(\left[ -\frac{1}{3},\frac{1}{3}\right] ,\) where the maximum value of the correlation is attained for the FGM copula, i.e., with uniform marginals (cf. Schucany et al. [33]). Accordingly, this family can only be used to model bivariate data that exhibit low correlation. For this reason, since the inception of this family, several extensions of it have been introduced in the literature in an attempt to improve the correlation level. Among these extensions, we recall the one-shape-parameter Sarmanov family, which was suggested by Sarmanov [30] as a new mathematical model of hydrological processes. Its copula (with uniform marginals \(F_X(x)=x, F_Y(y)=y,\) \(0\le x,y\le 1\)) is given by

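\[C(x,y;\alpha )=xy\left[ 1+3\alpha (1-x)(1-y)+5\alpha ^2(1-x)(1-y)\varphi (x)\varphi (y)\right] ,\quad \mid \alpha \mid \le \frac{\sqrt{7}}{5},\qquad (1.1)\]

where \( \varphi (x)=2x-1.\) The corresponding PDF is given by

\[c(x,y;\alpha )=1+3\alpha \varphi (x)\varphi (y)+\frac{5}{4}\alpha ^2\left( 3\varphi ^2(x)-1\right) \left( 3\varphi ^2(y)-1\right) .\qquad (1.2)\]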

The correlation coefficient of the Sarmanov copula is \(\alpha ,\) so that, since \(\mid \alpha \mid \le \frac{\sqrt{7}}{5},\) the copula’s maximum correlation coefficient is approximately 0.529 (cf. Balakrishnan and Lai [11], page 74). The Sarmanov family is therefore the most efficient among many known extended families of the FGM family, because it delivers the best improvement in the correlation level on both the positive and negative sides. Barakat et al. [17] and Husseiny et al. [21] revisited the Sarmanov family with general marginals \(F_X\) and \(F_Y\) (denoted by SAR\((\alpha )\)) and showed that this family is an extension of the FGM family and that it belongs to a wider family suggested by Sarmanov [29], which has many recorded applications in the literature (see, e.g., Bairamov et al. [10] and Tank and Gebizlioglu [37]). Moreover, they showed that SAR\((\alpha )\) is the only one of the extended families of FGM with a radially symmetric copula about (0.5, 0.5). They used this property to reveal several prominent statistical properties of the concomitants of OSs and record values from this family, and they studied, theoretically and numerically, some information measures, namely the Shannon entropy, inaccuracy measure, extropy, cumulative entropy, and Fisher information number (FIN).
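The statement that the copula's correlation coefficient equals \(\alpha \) can be checked numerically. The following is a minimal sketch, assuming the copula density \(1+3\alpha \varphi (x)\varphi (y)+\frac{5}{4}\alpha ^2(3\varphi ^2(x)-1)(3\varphi ^2(y)-1)\) (the expression that also appears in the definition of \(\Omega \) in Sect. 3); the function names and the midpoint-rule grid size are illustrative choices, not part of the original work.

```python
import math

ALPHA_MAX = math.sqrt(7) / 5  # upper end of the admissible range of alpha


def phi(u):
    """phi(u) = 2u - 1, as defined in the text."""
    return 2.0 * u - 1.0


def sarmanov_density(x, y, alpha):
    """Assumed Sarmanov copula density on the unit square."""
    return (1.0 + 3.0 * alpha * phi(x) * phi(y)
            + 1.25 * alpha ** 2
            * (3.0 * phi(x) ** 2 - 1.0) * (3.0 * phi(y) ** 2 - 1.0))


def copula_correlation(alpha, m=200):
    """Midpoint-rule estimate of corr(X, Y) when X, Y ~ U(0, 1)."""
    h = 1.0 / m
    pts = [(i + 0.5) * h for i in range(m)]
    e_xy = sum(x * y * sarmanov_density(x, y, alpha)
               for x in pts for y in pts) * h * h
    # U(0, 1) has mean 1/2 and variance 1/12
    return (e_xy - 0.25) / (1.0 / 12.0)


rho = copula_correlation(0.3)  # should be close to alpha = 0.3
```

The quadratic term in \(\alpha \) does not contribute to the covariance because \(\int _0^1 u(3\varphi ^2(u)-1)du=0,\) which is why the estimate tracks \(\alpha \) itself.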

Due to their relevance in a wide range of scientific problems, concomitants of OSs have received considerable attention in recent years. Suppose \((X_i, Y_i), i = 1,2,...,n,\) is a random sample from a bivariate DF \(F_{X,Y}.\) If we order the sample by the \(X\)-variate and obtain the OSs \(X_{1:n}\le X_{2:n}\le \cdots \le X_{n:n}\) for the X sample, then the \(Y\)-variate associated with the rth OS \(X_{r:n}\) is the concomitant of the rth OS and is denoted by \(Y_{[r:n]},r=1,...,n.\) The concept of concomitants of OSs was first introduced by David [20] and, almost simultaneously, under the name of induced OSs by Bhattacharya [19]. The biological selection problem is the most striking application of concomitants of OSs. Another application is in reliability theory; see Barakat et al. [18]. Scaria and Mohan [31] investigated the concomitants of record values from the Cambanis family with logistic marginals. Moreover, Scaria and Mohan [32] investigated the concomitants of OSs based on the FGM and Cambanis families with exponentiated exponential marginals. Recently, Abd Elgawad et al. [4] studied the concomitants of m-generalized OSs of the Cambanis family and some information measures. Furthermore, Alawady et al. [7] studied the concomitants of m-generalized OSs from the Cambanis family under a general setting. For more recent works on this subject, see Abd Elgawad and Alawady [1], Abd Elgawad et al. [2,3,4,5], Alawady et al. [7,8,9], Barakat et al. [14, 16], Jafari et al. [22], and Tahmasebi et al. [35, 36].
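The definition of concomitants translates directly into a sort on the first coordinate. The sketch below illustrates it on a small, made-up sample (the data values and the function name `concomitants` are hypothetical).

```python
def concomitants(pairs):
    """Order the pairs (X_i, Y_i) by X; the r-th entry is (X_{r:n}, Y_{[r:n]})."""
    return sorted(pairs, key=lambda p: p[0])


# Hypothetical bivariate sample of size n = 4
sample = [(0.62, 1.4), (0.15, 0.3), (0.91, 2.2), (0.40, 0.1)]

ordered = concomitants(sample)
x_order_stats = [x for x, _ in ordered]   # X_{1:n} <= ... <= X_{n:n}
y_concomitants = [y for _, y in ordered]  # Y_{[1:n]}, ..., Y_{[n:n]}
```

Note that the concomitants themselves are generally not in increasing order; here \(Y_{[2:4]}<Y_{[1:4]}.\)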

The FI is a way of determining how much information an observable RV carries about an unknown parameter on which the probability of that RV depends. The FI is an important and fundamental criterion in statistical inference, physics, thermodynamics, information theory and some other fields. Traditionally, the FI has played a valuable role in statistical inference through the Cramér–Rao inequality and its association with the asymptotic properties of the maximum likelihood estimators. Knowing how much information a sample contains about an unknown parameter can help to determine bounds on the variance of a particular estimator of that parameter and to approximate the sampling distribution of that estimator when the sample is large enough.

Consider a RV X, which has a PDF \(f_X(x;\theta ),\) where \(\theta \in \Theta \) is an unknown parameter with a parameter space \(\Theta .\) Under certain regularity conditions (see, Rao, [28], p. 329 and Abo-Eleneen and Nagaraja, [6]), FI about \(\theta \in \Theta ,\) contained in the RV X, is \(I_{\theta } (X;\theta ):= -\text{ E } \left( \frac{\partial ^{2} \ln f_X(x;\theta )}{\partial \theta ^{2}}\right) =\text{ E } \left( \frac{\partial \ln f_X(x;\theta )}{\partial \theta }\right) ^2.\) For some recent studies on the FI, one may refer to Barakat and Husseiny [13], Barakat et al. [15], and Kharazmi and Asadi [25]. Another important kind of the FI is the FIN, which is the second moment of the score function (the score function of a RV X with a PDF \(f_X\) is defined by \(\rho (x) = \frac{ \frac{\partial f_X(x;\theta )}{\partial x}}{f_X(x;\theta )}=\frac{\partial \ln f_X(x;\theta )}{\partial x}\)), where the derivative is with respect to x in a given PDF \(f_X(x;\theta )\) rather than the parameter \(\theta .\) It is a FI for a location parameter. For some recent works about this measure, see Abd Elgawad et al. [2], Barakat and Husseiny [12], Barakat et al. [17], and Tahmasebi and Jafari [34].
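As a short worked example of these definitions, assume (for illustration only) that X is exponential with mean \(\theta .\) Both forms of the FI and the FIN can then be computed by hand:

```latex
\[
\begin{aligned}
f_X(x;\theta) &= \tfrac{1}{\theta}e^{-x/\theta},\quad x>0,\\
\frac{\partial \ln f_X(x;\theta)}{\partial\theta} &= -\frac{1}{\theta}+\frac{x}{\theta^{2}},
\qquad
\frac{\partial^{2}\ln f_X(x;\theta)}{\partial\theta^{2}} = \frac{1}{\theta^{2}}-\frac{2x}{\theta^{3}},\\
I_\theta(X;\theta) &= -\mathrm{E}\!\left(\frac{1}{\theta^{2}}-\frac{2X}{\theta^{3}}\right)
 = \frac{1}{\theta^{2}}
 = \frac{\mathrm{Var}(X)}{\theta^{4}}
 = \mathrm{E}\!\left(\frac{X-\theta}{\theta^{2}}\right)^{2},\\
\rho(x) &= \frac{\partial\ln f_X(x;\theta)}{\partial x} = -\frac{1}{\theta},
\qquad
\mathrm{E}\,\rho^{2}(X) = \frac{1}{\theta^{2}}.
\end{aligned}
\]
```

In this particular case the FI about \(\theta \) and the FIN coincide, which is consistent with the fact that \(\theta \) acts as a scale parameter with a constant score in x.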

One should be aware that the FI is not robust to the presence of outliers. This is because the FI is an expectation with respect to X, so it weights all X values equally, including large values that may be outliers. The cumulative residual Fisher information (abbreviated as CF), which is naturally robust to the presence of outliers, was recently presented by Kharazmi and Balakrishnan [26]. This modified measure is defined as \(CF_{\theta }(X;\theta ):=\int _{-\infty }^{\infty }\left( \frac{\partial \ln \overline{F}_X(x;\theta )}{\partial \theta }\right) ^2 \overline{F}_X(x;\theta )dx,\) where \({\overline{F}}_X(x;\theta )\) is the survival function of X. It is worth mentioning that the CF can be used effectively for developing suitable goodness-of-fit tests for lifetime distributions by using empirical versions of this measure. Kharazmi and Balakrishnan [26] analogously defined the modified FIN via the survival function as \(CF(X;\theta ):=\int _{-\infty }^{\infty }\left( \frac{\partial \ln \overline{F}_X(x;\theta )}{\partial x}\right) ^2 \overline{F}_X(x;\theta )dx.\)
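The definition of \(CF_{\theta }\) can be evaluated numerically for a concrete model. The sketch below assumes an exponential RV with mean \(\theta ,\) for which \(\overline{F}_X(x;\theta )=e^{-x/\theta }\) and the closed form is \(CF_{\theta }(X;\theta )=2/\theta ;\) the function names, the finite-difference step and the truncation point are illustrative assumptions.

```python
import math


def log_survival(x, theta):
    """log of the exponential survival function with mean theta, for x >= 0."""
    return -x / theta


def cumulative_residual_fi(theta, upper=60.0, m=60000, eps=1e-5):
    """Midpoint-rule estimate of CF_theta(X; theta) over [0, upper * theta].

    For x < 0 the survival function equals 1, so that part contributes 0.
    """
    h = upper * theta / m
    total = 0.0
    for i in range(m):
        x = (i + 0.5) * h
        # central difference for d/dtheta of log survival
        d = (log_survival(x, theta + eps)
             - log_survival(x, theta - eps)) / (2.0 * eps)
        total += d * d * math.exp(log_survival(x, theta))
    return total * h


cf_value = cumulative_residual_fi(2.0)  # closed form gives 2 / theta = 1
```

Replacing `log_survival` with another survival model is enough to reuse the same routine, provided the truncation point covers the bulk of the distribution.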

The purpose of this work is twofold. The first part (Sect. 2) reveals some new salient features of the dependence structure of SAR\((\alpha ),\) where some bounds and relationships are shown for the correlation coefficient. The second part consists of Sects. 3, 4, and 5. In Sect. 3, we study, theoretically and numerically, the FI \(I_\alpha (X_{r:n},Y_{[r:n]};\alpha )\) for a single pair \((X_{r:n},Y_{[r:n]}).\) In Sect. 4, we discuss, theoretically and numerically, the FI about the mean of the exponential distribution marginals and about the shape parameter of the power function distribution marginals for SAR\((\alpha ).\) In Sect. 5, \(CF(Y_{[r:n]};\alpha )\) is investigated theoretically and numerically.

2 Dependence structure

In this section, we first obtain an upper bound for the correlation coefficient between two arbitrary continuous RVs with finite nonzero variances that arise from SAR\((\alpha ),\) whose copula is defined by (1.1). Secondly, we deduce sufficient conditions under which the marginal components become uncorrelated; among these conditions is the direct condition \(\alpha =0.\) The upper bound for the correlation coefficient, given in the following theorem, is large enough to confirm the usefulness and distinction of the Sarmanov family.

Theorem 2.1

Let \(F_X(x)\) and \(F_Y(y)\) be the DFs of some RVs X and Y, respectively. If the RVs X and Y are bivariate SAR\((\alpha )\) with finite nonzero variances and correlation coefficient, \(\rho (X,Y),\) where \(F_X=F_Y,\) then \(\rho (X,Y)\le 0.8091502.\)

Proof

First, we assume that \(F_X(x)\) and \(F_Y(y)\) are different. Then in view of (1.1) and (1.2) and by using the result of Schucany et al. [33], we get

where \(\delta _{j}=\int _{-\infty }^{\infty } xdF_j^{2}(x)-\int _{-\infty }^{\infty } xdF_j(x)=\int _{-\infty }^{\infty } x\varphi (F_j(x))dF_j(x),\) \(~j=X,Y.\) Clearly, \(\delta _j>0,~j=X,Y,\) since \(\delta _j\) is the difference between the mean of the larger of two independent RVs from \(F_j\) and the mean of \(F_j\) (cf. Johnson and Kotz [24], Schucany et al. [33]). On the other hand, we can easily verify that

Moreover, we can show that

Note that \(W_{j1}\) is the difference between the mean of the largest of three independent RVs from \(F_j\) and the mean of the larger of two independent RVs from \( F_j.\) Furthermore, \(W_{j2}\) is the difference between the mean of the larger of two independent RVs from \(F_j\) and the mean of \( F_j.\) Hence, \(W_{ji}>0,~~i=1,2.\) Upon combining (2.1) and (2.2), we get

where for the uniform marginal DFs \(F_U\) and \(F_V,\) (2.3) entails that \( COV(U,V)=3\alpha \delta _{U}\delta _{V}=\frac{\alpha }{12},\) which implies, as it is known, \(\rho (U,V)=\alpha .\) Now, let \(F:=F_X(x)=F_Y(y),\) where F has finite nonzero variance, \(\sigma ^2,\) and finite mean, \(\mu .\) Furthermore, let \(W:=W_j,~j=X,Y.\) Then, we get

since \(\int _{-\infty }^{\infty }(3\varphi ^2(F(x))-1)dF(x)=\int _{0}^{1}(3\varphi ^2(u)-1)du=0.\) Thus, by applying the Cauchy–Schwarz inequality, we get

In view of the inequality \(\alpha \le \frac{\sqrt{7}}{5}\) and the result of Schucany et al. [33], combining (2.5) and (2.4) yields \(\rho (X,Y)\le 0.8091502.\) This completes the proof. \(\square \)
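The constant in Theorem 2.1 can be recovered by a short calculation. The sketch below is a reconstruction under stated assumptions rather than the authors' displayed argument: it assumes the covariance has the form \(3\alpha \delta _X\delta _Y+\frac{5}{4}\alpha ^2W_XW_Y\) with \(W_j=\int x(3\varphi ^2(F_j(x))-1)dF_j(x),\) which agrees with the uniform case \(COV(U,V)=\frac{\alpha }{12}\) and with the value of \(\alpha \) in Corollary 2.2.

```latex
\[
\begin{aligned}
\delta^{2} &= \Big(\int (x-\mu)\,\varphi(F(x))\,dF(x)\Big)^{2}
  \le \sigma^{2}\int_{0}^{1}\varphi^{2}(u)\,du = \frac{\sigma^{2}}{3},\\
W^{2} &= \Big(\int (x-\mu)\,\big(3\varphi^{2}(F(x))-1\big)\,dF(x)\Big)^{2}
  \le \sigma^{2}\int_{0}^{1}\big(3\varphi^{2}(u)-1\big)^{2}du = \frac{4\sigma^{2}}{5},\\
\rho(X,Y) &= \frac{3\alpha\,\delta^{2}+\tfrac{5}{4}\alpha^{2}W^{2}}{\sigma^{2}}
  \le |\alpha|+\alpha^{2}
  \le \frac{\sqrt{7}}{5}+\frac{7}{25}\approx 0.8091502 .
\end{aligned}
\]
```

The first line is the bound of Schucany et al. [33] that yields the FGM range \(\left[ -\frac{1}{3},\frac{1}{3}\right] ;\) the second applies the same Cauchy–Schwarz step to the quadratic term.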

Corollary 2.1

Under the conditions of Theorem 2.1, possibly except the condition \(F_X=F_Y,\) we have \(0.8091502\le \max \rho (X,Y)\le 1.\)

Proof

The proof follows from the general relation \(\max \{x:x\in A\}\le \max \{x:x\in B\},\) if \(A\subseteq B,\) and the result of Theorem 2.1. \(\square \)

Corollary 2.2

Let the conditions of Theorem 2.1, possibly except the condition \(F_X=F_Y,\) be satisfied. Assume that \(W_X,W_Y\ne 0,\) \(\alpha \ne 0,\) and \(|\frac{\delta _{X}\delta _{Y}}{W_{X}W_{Y}}|\le \frac{\sqrt{7}}{12}.\) Then, we get the following interesting result: \(\rho (X,Y)=0,\) while X and Y are dependent, if \(\alpha =\frac{-12}{5}\frac{\delta _{X}\delta _{Y}}{W_{X}W_{Y}}.\)

Proof

The proof follows directly from (2.4). \(\square \)

3 FI in \((X_{r:n},Y_{[r:n]})\) about \(\alpha \) based on the copula of SAR\((\alpha )\)

Let X and Y be uniformly distributed RVs over (0, 1), written \(X,Y\sim U(0,1),\) and let them be jointly distributed according to the Sarmanov copula (1.1). This copula is free of any unknown parameters except the parameter \(\alpha .\) The joint PDF of \((X_{r:n},Y_{[r:n]})\) is given by (cf. Abo-Eleneen and Nagaraja [6], Barakat et al. [15])

where \(C_{r,n}=\frac{1}{\beta (r,n-r+1)}\) and \(\beta (a,b)=\int _{0}^{1}u^{a-1}(1-u)^{b-1}~du,~a,b>0,\) is the beta function.

3.1 Theoretical result

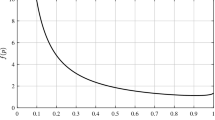

Before formulating Theorem 3.1 about the FI in \((X_{r:n},Y_{[r:n]}),\) we consider the set \(\Omega =\{\alpha :\,\mid 3\alpha \varphi (x)\varphi (y)+\frac{5}{4} \alpha ^2 (3\varphi ^2(x)-1)(3\varphi ^2(y)-1)\mid < 1,~ \forall ~ 0\le x,y\le 1\}.\) From now on, we deal only with \(\alpha \in \Omega \cap \Upsilon ,\) where \(\Upsilon =\{\alpha : \mid \alpha \mid \le \frac{\sqrt{7}}{5}\}.\) Since \(\alpha =0\in \Omega \cap \Upsilon ,\) the set \(\Omega \cap \Upsilon \) is not empty. On the other hand, the set \(\{\alpha :\,\mid 3\alpha \varphi (x)\varphi (y)+\frac{5}{4} \alpha ^2 (3\varphi ^2(x)-1)(3\varphi ^2(y)-1)\mid >1, \forall 0\le x,y\le 1\}\) is empty. Therefore, \(\Omega \cup {\tilde{\Omega }}={\mathcal {U}}\) and \(\Omega \cap {\tilde{\Omega }}=\emptyset ,\) where \({\mathcal {U}}\) is the universal set and \({\tilde{\Omega }}=\{\alpha :\,\mid 3\alpha \varphi (x)\varphi (y)+\frac{5}{4} \alpha ^2 (3\varphi ^2(x)-1)(3\varphi ^2(y)-1)\mid <1,\,0\le x\le x_0,\,0\le y\le y_0,\,\text{ and }\,\mid 3\alpha \varphi (x)\varphi (y)+\frac{5}{4} \alpha ^2 (3\varphi ^2(x)-1)(3\varphi ^2(y)-1)\mid \ge 1, x>x_0,y> y_0,\,\text{ for } \text{ some }\, 0<x_0,y_0<1\}.\) To check whether \(\alpha \in \Omega \) for a given \(\alpha \in \Upsilon ,\) plot the function \({{\mathcal {F}}}(x,y;\alpha )=\) \(\mid 3\alpha \varphi (x)\varphi (y)+\frac{5}{4} \alpha ^2 (3\varphi ^2(x)-1)(3\varphi ^2(y)-1)\mid ,0\le x,y\le 1,\) as a 3D diagram \((x,y,{{\mathcal {F}}})\) by using Mathematica 12. If the surface of \({{\mathcal {F}}}\) lies entirely within the cube \(\mathcal{C}=\{(x,y,z):-1\le x,y,z\le +1\},\) then \(\alpha \in \Omega ;\) otherwise \(\alpha \notin \Omega .\) The fact that \(\Omega \cup {\tilde{\Omega }}={\mathcal {U}}\) means that there are only two possibilities. The first is that the surface of \({{\mathcal {F}}}\) lies entirely within the cube \({\mathcal {C}},\) which corresponds to the set \(\Omega .\) The second is that one portion of the surface lies within \({\mathcal {C}}\) and the other portion lies outside \({\mathcal {C}},\) which corresponds to the set \({\tilde{\Omega }}.\) Parts a, b, c, and d of Fig. 1 show how this check is carried out for some values of \(\alpha \in \Omega \cap \Upsilon .\)
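The graphical check described above can also be approximated programmatically. The following sketch replaces the Mathematica plot with a grid search over the unit square (a swapped-in technique, not the authors' procedure); the function names and grid resolution are illustrative assumptions.

```python
def excess(x, y, alpha):
    """3*alpha*phi(x)*phi(y) + (5/4)*alpha^2*(3*phi(x)^2 - 1)*(3*phi(y)^2 - 1)."""
    px, py = 2.0 * x - 1.0, 2.0 * y - 1.0
    return (3.0 * alpha * px * py
            + 1.25 * alpha ** 2 * (3.0 * px ** 2 - 1.0) * (3.0 * py ** 2 - 1.0))


def in_omega(alpha, m=100):
    """Grid-based check of |excess| < 1 on [0, 1]^2, corners included."""
    grid = [i / m for i in range(m + 1)]
    return max(abs(excess(x, y, alpha)) for x in grid for y in grid) < 1.0


checks = {a: in_omega(a) for a in (-0.2, -0.1, 0.0, 0.1, 0.2, 0.25)}
```

Since \(\mid \varphi (x)\varphi (y)\mid \le 1\) and \(\mid (3\varphi ^2(x)-1)(3\varphi ^2(y)-1)\mid \le 4\) on the unit square, the largest value of \({{\mathcal {F}}}\) is \(3\mid \alpha \mid +5\alpha ^2,\) attained at a corner, so the grid check reduces to \(3\mid \alpha \mid +5\alpha ^2<1,\) i.e. \(\mid \alpha \mid <\frac{\sqrt{29}-3}{10}\approx 0.2385.\) This is consistent with the values \(\mid \alpha \mid \le 0.2\) used in Table 1.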

Theorem 3.1

Suppose that X and Y \(\sim U(0,1)\) with joint PDF (1.2), then for any \(1\le r\le n, \) and \(\alpha \in \Omega \cap \Upsilon ,\) the FI in \((X_{r:n},Y_{[r:n]})\) about \(\alpha \) is given by

where

and

Proof

Thus,

Therefore, by using (3.1), (3.6) and the binomial expansion under the condition \(\alpha \in \Omega \cap \Upsilon ,\) the FI about the shape parameter \(\alpha \) is given as

where

and

Combining (3.8), (3.9), and (3.10), we get \(I_{\alpha }(X_{r:n},Y_{[r:n]};\alpha ).\) The theorem is proved. \(\square \)

3.2 Computing \(I_{\alpha }(X_{r:n},Y_{[r:n]};\alpha )\) with discussion

Table 1 displays the FI \(I_{\alpha }(X_{r:n},Y_{[r:n]};\alpha )\) as a function of \(n, r\le \frac{n+1}{2},\) and \(\alpha ,\) for \(n=1,3,5,15,\) \(\alpha =-0.2,-0.15,-0.1,0.1,0.15,0.2,\) where \(\alpha \in \Omega \cap \Upsilon .\) The entries are computed using the relations (3.2)–(3.5). We only compute 9 terms from the infinite series included in (3.2), as this procedure gives us satisfactory results. The first row of Table 1 represents the FI \(I_{\alpha }(X,Y;\alpha ).\) Based on the fact that the FI \(I_{\alpha }(X,Y;\alpha )\) in a random sample of size n is \(nI_{\alpha }(X,Y;\alpha ),\) Table 1 allows us to compute the proportion of the sample FI \(I_{\alpha }(X_{r:n},Y_{[r:n]};\alpha )\) contained in a single pair. For example, when \(n=5,\) the FI \(I_{\alpha }(X_{r:n},Y_{[r:n]};\alpha )\) about \(\alpha \) in the extreme pair ranges from \(26\%\) to \(35.5\%\) of the total information, as \(\alpha \) ranges from \(-0.2\) to 0.2. In addition, the FI \(I_{\alpha }(X_{r:n},Y_{[r:n]};\alpha )\) in the central pair ranges from \(9\%\) to \(13\%\) of what is available in the complete sample in all cases. Another useful application of Table 1 is that it may be used to quickly extract the FI contained in singly or multiply censored bivariate data sample from the SAR\((\alpha ).\) Simply sum up the FI in each pair that constitutes the censored sample. For example, when \(n = 5,\) the FI about \(\alpha \) in the type-II censored sample consisting of the bottom (or the top) three pairs ranges from \(52\%\) to \(65\%\) as \(\alpha \) ranges from \(-0.2\) to 0.2. The following interesting features can be extracted from Table 1:

- \(I_{\alpha }(X_{r:n},Y_{[r:n]};\alpha )\) increases when the difference between the rank r and the sample size n increases, for \(r\le \frac{n+1}{2}\).

- For fixed n and r, the value of \(I_{\alpha }(X_{r:n},Y_{[r:n]};\alpha )\) is close to \(I_{\alpha }(X_{r:n},Y_{[r:n]};-\alpha ).\) Moreover, \(I_{\alpha }(X_{r:n},Y_{[r:n]};\alpha )=I_{\alpha }(X_{r:n},Y_{[r:n]};-\alpha )\) in some cases.
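As a cross-check on series-based values, the FI in a single pair can also be approximated by direct numerical integration. The sketch below is not the series (3.2) used for Table 1; it assumes the standard joint PDF \(C_{r,n}x^{r-1}(1-x)^{n-r}c(x,y;\alpha )\) of \((X_{r:n},Y_{[r:n]})\) for uniform marginals, with \(c\) the Sarmanov copula density, so that only \(c\) depends on \(\alpha .\) Function names and the grid size are illustrative.

```python
import math


def sarmanov_terms(x, y, alpha):
    """Return (c, dc/dalpha) for the assumed Sarmanov copula density c."""
    px, py = 2.0 * x - 1.0, 2.0 * y - 1.0
    a = px * py
    b = (3.0 * px ** 2 - 1.0) * (3.0 * py ** 2 - 1.0)
    return 1.0 + 3.0 * alpha * a + 1.25 * alpha ** 2 * b, 3.0 * a + 2.5 * alpha * b


def fisher_info_pair(r, n, alpha, m=200):
    """Midpoint-rule estimate of the FI about alpha in (X_{r:n}, Y_{[r:n]})."""
    # C_{r,n} = 1 / beta(r, n - r + 1) = n! / ((r - 1)! (n - r)!)
    c_rn = math.factorial(n) / (math.factorial(r - 1) * math.factorial(n - r))
    h = 1.0 / m
    pts = [(i + 0.5) * h for i in range(m)]
    total = 0.0
    for x in pts:
        weight = c_rn * x ** (r - 1) * (1.0 - x) ** (n - r)
        for y in pts:
            c, dc = sarmanov_terms(x, y, alpha)
            total += weight * dc * dc / c  # E[(d log c / d alpha)^2]
    return total * h * h


share_extreme = fisher_info_pair(1, 5, 0.1) / (5 * fisher_info_pair(1, 1, 0.1))
```

The ratio `share_extreme` is the proportion of the complete-sample FI carried by an extreme pair when \(n=5,\) in the sense used in the discussion of Table 1. By the radial symmetry of the copula, the routine returns the same value for ranks r and \(n-r+1.\)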

4 FI in concomitant \(Y_{[r:n]}\) based on some distributions

Barakat et al. [17] studied the concomitants of OSs based on the Sarmanov family with generalized exponential marginal DFs, where the DF of the generalized exponential distribution is defined as \(F_X(x)=\left( 1-e^{-\theta x}\right) ^{a},\) \(x, a, \theta > 0,\) and is denoted by \(GE(\theta ;a).\) Barakat et al. [17] expressed the marginal PDF of the concomitant \(Y_{[r:n]}\) as

where \(V_{1}\sim \text{ GE }(\theta ;2a),\) \( V_{2}\sim \text{ GE }(\theta ;3a)\) , \(\Delta ^{(\alpha )}_{1,r:n}=\frac{\alpha (2r-n-1)}{n+1}\) and \(\Delta ^{(\alpha )}_{2,r:n}=2\alpha ^2 \left[ 1-6\frac{r(n-r+1)}{(n+1)(n+2)}\right] .\)

Proposition 1

Let the FI associated with concomitants of OSs based on SAR\((\alpha )\) with marginal DFs \(GE(\theta ;a)\) about unknown parameter \(\theta \) be denoted by \(I_{\theta }(Y_{[r:n]};\alpha ,\theta ).\) Then,

1. \(I_{\theta }(Y_{[r:n]};-\alpha ,\theta )=I_{\theta }(Y_{[n-r+1:n]};\alpha ,\theta ).\)

2. \(I_{\theta }(Y_{[\frac{n+1}{2}:n]};\alpha ,\theta )=I_{\theta }(Y_{[\frac{n+1}{2}:n]};-\alpha ,\theta ).\)

Proof

From (4.1), we get

By applying the easy-check relations

we immediately get the relation \(f_{[r:n]}(y;-\alpha ,\theta )= f_{[n-r+1:n]}(y;\alpha ,\theta ).\) The first part of the proposition is thus proved. For the second part, we have \(\Delta ^{(\alpha )}_{1,\frac{n+1}{2}:n}=\Delta ^{(-\alpha )}_{1,\frac{n+1}{2}:n}=0,\) while \(\Delta ^{(\alpha )}_{2,r:n}\) depends on \(\alpha \) only through \(\alpha ^2.\) This completes the proof. \(\square \)
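The symmetry relations behind Proposition 1 follow from the definitions of \(\Delta ^{(\alpha )}_{1,r:n}\) and \(\Delta ^{(\alpha )}_{2,r:n}\) given after (4.1), and they are easy to confirm numerically. A minimal sketch follows (the function names are illustrative):

```python
def delta1(alpha, r, n):
    """Delta_1 = alpha * (2r - n - 1) / (n + 1)."""
    return alpha * (2 * r - n - 1) / (n + 1)


def delta2(alpha, r, n):
    """Delta_2 = 2 * alpha^2 * [1 - 6 r (n - r + 1) / ((n + 1)(n + 2))]."""
    return 2 * alpha ** 2 * (1 - 6 * r * (n - r + 1) / ((n + 1) * (n + 2)))


def symmetric_in_rank(alpha, n, tol=1e-12):
    """Check Delta_k(-alpha, r) == Delta_k(alpha, n - r + 1) for all ranks r."""
    return all(
        abs(delta1(-alpha, r, n) - delta1(alpha, n - r + 1, n)) < tol
        and abs(delta2(-alpha, r, n) - delta2(alpha, n - r + 1, n)) < tol
        for r in range(1, n + 1)
    )


ok = symmetric_in_rank(0.2, 5) and symmetric_in_rank(0.15, 15)
```

Because the concomitant density depends on \((\alpha ,r)\) only through these two quantities, the check above is the whole content of the first part of the proposition.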

4.1 FI in \(Y_{[r:n]}\) about \(\text{ E }(Y)\) of exponential distribution marginal

By putting \(a=1\) in (4.1), we get the marginal PDF of the concomitant \(Y_{[r:n]}\) based on the exponential distribution as

This expression, after some algebra, can be written as

where \(A_{1}=\left( 1+3\Delta ^{(\alpha )}_{1,r:n}+\frac{5}{2}\Delta ^{(\alpha )}_{2,r:n}\right) ,\) \(A_{2}=-\left( 6\Delta ^{(\alpha )}_{1,r:n}+15\Delta ^{(\alpha )}_{2,r:n}\right) ,\) and \(A_{3}=\left( 15\Delta ^{(\alpha )}_{2,r:n}\right) \). Therefore,

Thus,

where \(w=\frac{y}{\theta }.\) On the other hand, the PDF of the RV \(W=\frac{Y_{[r:n]}}{\theta }\) is \(f_{W}(w)=e^{-w}(A_{1}\) \(+A_{2}e^{-w}+A_{3}e^{-2w}).\) Therefore, the relation (4.2) yields

where

and

Therefore, we get

We use Mathematica 12 to compute the values of the improper integrals \(T_3,T_4,\) and \(T_{10}.\) Consequently, the FI \(I_{\theta }(Y_{[r:n]};\alpha ,\theta )\) can be computed by using (4.3). Table 2 provides the values of \(I_{\theta }(Y_{[r:n]};\alpha ,\theta ),\) for \(n=5,15,\) \(\theta =1.\) From Table 2, the following properties can be extracted:

- In the vast majority of cases, \(I_{\theta }(Y_{[r:n]};\alpha ,1)\) increases when the difference \(n-r\) decreases. In contrast, \(I_{\theta }(Y_{[r:n]};-\alpha ,1)\) increases, in almost all cases, when \(n-r\) increases. Moreover, Table 2 reveals that the greatest values of the FI are almost always obtained at the maximum OSs.

- Generally, we have \(I_{\theta }(Y_{[\frac{n+1}{2}:n]};\alpha ,1)=I_{\theta }(Y_{[\frac{n+1}{2}:n]};-\alpha ,1).\)

- \(I_{\theta }(Y_{[r:n]};\alpha ,1)=I_{\theta }(Y_{[n-r+1:n]};-\alpha ,1),\) which agrees with Proposition 1.
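The FI in this subsection can also be approximated by direct numerical integration, as an independent check on values obtained from (4.3). The sketch below uses the density \(f_{W}(w)=e^{-w}(A_{1}+A_{2}e^{-w}+A_{3}e^{-2w})\) of \(W=Y_{[r:n]}/\theta \) stated above, with \(\theta \) acting as the scale (mean) parameter, so that the score is \(-\frac{1}{\theta }\left[ 1+w\frac{f_W'(w)}{f_W(w)}\right] .\) The function names, truncation point and grid size are illustrative assumptions.

```python
import math


def exp_coeffs(alpha, r, n):
    """Coefficients A1, A2, A3 of the exponential concomitant density."""
    d1 = alpha * (2 * r - n - 1) / (n + 1)
    d2 = 2 * alpha ** 2 * (1 - 6 * r * (n - r + 1) / ((n + 1) * (n + 2)))
    return 1 + 3 * d1 + 2.5 * d2, -(6 * d1 + 15 * d2), 15 * d2


def fi_exponential(alpha, r, n, theta=1.0, upper=40.0, m=20000):
    """Midpoint-rule estimate of the FI about theta in Y_[r:n]."""
    a1, a2, a3 = exp_coeffs(alpha, r, n)
    h = upper / m
    total = 0.0
    for i in range(m):
        w = (i + 0.5) * h
        e1, e2, e3 = math.exp(-w), math.exp(-2 * w), math.exp(-3 * w)
        f = a1 * e1 + a2 * e2 + a3 * e3               # density of W
        df = -(a1 * e1 + 2 * a2 * e2 + 3 * a3 * e3)   # its derivative in w
        total += (1.0 + w * df / f) ** 2 * f
    return total * h / theta ** 2


fi_low = fi_exponential(0.2, 1, 5)    # rank r = 1, alpha = 0.2
fi_high = fi_exponential(-0.2, 5, 5)  # rank n - r + 1 = 5, alpha = -0.2
```

For \(\alpha =0\) the routine reduces to the FI of the exponential distribution, \(1/\theta ^2,\) and the pair `fi_low`, `fi_high` illustrates the symmetry stated in Proposition 1.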

4.2 FI in \(Y_{[r:n]}\) about the shape parameter of power distribution marginal

Let \(f_{Y}(y)=c y^{c-1},\) \(c>0,\) \( 0\le y\le 1.\) By using (4.1) we get the marginal PDF of the concomitant \(Y_{[r:n]}\) based on the power function distribution as:

This expression, after some algebra, can be written as \(f_{[r:n]}(y;\alpha ,c)=cy^{c-1}\left( B_1+B_2y^{c}+B_3y^{2c}\right) ,\) where \(B_{1}=\left( 1-3\Delta ^{(\alpha )}_{1,r:n}+\frac{5}{2}\Delta ^{(\alpha )}_{2,r:n}\right) ,\) \(B_{2}=\left( 6\Delta ^{(\alpha )}_{1,r:n}\right. \left. -15\Delta ^{(\alpha )}_{2,r:n}\right) \) and \(B_{3}=\left( 15\Delta ^{(\alpha )}_{2,r:n}\right) \). Therefore,

which implies

Thus, (4.4) yields

where

and

Therefore, we get \(I_{c}(Y_{[r:n]};\alpha ,c)=\frac{1}{c^{2}}\left( 1-\frac{1}{4}B_{2}-\frac{8}{27}B_{3}\right) +k_{3} .\) Table 3 provides the values of \(I_{c}(Y_{[r:n]};\alpha ,c),\) for \(n=5,15,\) \(c=2.\) From Table 3, the following properties can be extracted:

- Generally, we have that \(I_{c}(Y_{[r:n]};\alpha ,2)\) increases when the difference \(n-r\) increases. In contrast, \(I_{c}(Y_{[r:n]};-\alpha ,2)\) increases when the difference \(n-r\) decreases. Moreover, Table 3 reveals that the greatest values of the FI are obtained at the maximum OSs.

- Generally, we have that \(I_{c}(Y_{[\frac{n+1}{2}:n]};\alpha ,2)=I_{c}(Y_{[\frac{n+1}{2}:n]};-\alpha ,2).\)

- \(I_{c}(Y_{[r:n]};\alpha ,2)=I_{c}(Y_{[n-r+1:n]};-\alpha ,2).\)
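The same kind of numerical check applies to the power function case. The sketch below starts from the density \(f_{[r:n]}(y;\alpha ,c)=cy^{c-1}\left( B_1+B_2y^{c}+B_3y^{2c}\right) \) given above and uses the substitution \(u=y^{c}=e^{-t},\) under which the score is \(\frac{1}{c}\left[ 1-t\left( 1+\frac{B_2u+2B_3u^2}{B_1+B_2u+B_3u^2}\right) \right] .\) It does not use the closed form with \(k_3;\) names, truncation point and grid size are illustrative assumptions.

```python
import math


def power_coeffs(alpha, r, n):
    """Coefficients B1, B2, B3 of the power-function concomitant density."""
    d1 = alpha * (2 * r - n - 1) / (n + 1)
    d2 = 2 * alpha ** 2 * (1 - 6 * r * (n - r + 1) / ((n + 1) * (n + 2)))
    return 1 - 3 * d1 + 2.5 * d2, 6 * d1 - 15 * d2, 15 * d2


def fi_power(alpha, r, n, c=2.0, upper=40.0, m=20000):
    """Midpoint-rule estimate of the FI about c in Y_[r:n]."""
    b1, b2, b3 = power_coeffs(alpha, r, n)
    h = upper / m
    total = 0.0
    for i in range(m):
        t = (i + 0.5) * h
        u = math.exp(-t)                       # u = y**c
        g = b1 + b2 * u + b3 * u * u           # density of U = Y**c on (0, 1)
        ratio = (b2 * u + 2 * b3 * u * u) / g
        score = 1.0 - t * (1.0 + ratio)        # c times d/dc of log f
        total += score * score * g * u         # g(u) du = g(u) u dt
    return total * h / c ** 2


fi_mid_pos = fi_power(0.2, 3, 5)
fi_mid_neg = fi_power(-0.2, 3, 5)
```

For \(\alpha =0\) the routine returns approximately \(1/c^2,\) the FI of the power function distribution itself, and `fi_mid_pos`, `fi_mid_neg` illustrate the equality at the central rank.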

5 Cumulative residual FI in \(Y_{[r:n]}\)

Theorem 5.1

Let \(F_{[r:n]}(y;\alpha )\) be the DF of the concomitant \(Y_{[r:n]}\) based on SAR\((\alpha ).\) Then, the CF for the location parameter based on \(Y_{[r:n]}\) is given by

where

and

Proof

By using (4.1), the CF of \( Y_{[r:n]}\) is given by

\(\square \)

Proposition 2

Let \({\overline{F}}_{[r:n]}(y;\alpha )\) and \(R_{[r:n]}(y;\alpha )\) be the survival and hazard functions of concomitant \( Y_{[r:n]}\) from \(SAR(\alpha ),\) respectively, where \(R_{[r:n]}(y;\alpha )=\frac{f_{[r:n]}(y;\alpha )}{\overline{F}_{[r:n]}(y;\alpha )}.\) Then,

Proof

From the definition of \(CF(Y_{[r:n]};\alpha )\) and by using the result of Nanda [27], that \(E[R_{F_{X}}(X)]\ge \frac{1}{E(X)},\) we get

The proof is completed. \(\square \)

Example 5.1

Let X and Y have exponential distributions with means \( \frac{1}{\theta ^*}\) and \(\frac{1}{\theta },\) respectively. Then, \(CF(Y)=\theta ,\) \(\zeta (\alpha )=\frac{\theta }{2}(3\Delta ^{(\alpha )}_{1,r:n}-\frac{5}{2}\Delta ^{(\alpha )}_{2,r:n})+\frac{5\theta }{3}\Delta ^{(\alpha )}_{2,r:n},\) and \( \eta (\alpha )=\frac{\theta }{2}(-3\Delta ^{(\alpha )}_{1,r:n}+\frac{5}{2}\Delta ^{(\alpha )}_{2,r:n})-\frac{5\theta }{3}\Delta ^{(\alpha )}_{2,r:n}.\) Thus, the CF of \( Y_{[r:n]}\) is given by

where

Table 4 provides \(CF(Y_{[r:n]};\alpha )\) values for \(n=5,15,\) \(\theta =1.\) From Table 4, the following properties can be extracted:

- Generally, we have \(CF(Y_{[\frac{n+1}{2}:n]};\alpha )=CF(Y_{[\frac{n+1}{2}:n]};-\alpha ).\) Moreover, \(CF(Y_{[r:n]};\alpha )=CF(Y_{[n-r+1:n]};-\alpha ).\)

- With fixed n and \(\alpha >0,\) the value of \(CF(Y_{[r:n]};\alpha )\) slowly decreases as r increases. In contrast, the value of \(CF(Y_{[r:n]};\alpha )\) slowly increases as r increases for \(\alpha <0\).
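The CF of the concomitant in Example 5.1 can be approximated numerically as well. The sketch below assumes that Y is exponential with rate \(\theta \) (mean \(\frac{1}{\theta }\)), so that with \(t=e^{-\theta y}\) the concomitant density is \(\theta t\,(A_1+A_2t+A_3t^2)\) and its survival function is \(t\,(A_1+\frac{A_2}{2}t+\frac{A_3}{3}t^2),\) where \(A_1,A_2,A_3\) are the coefficients of Sect. 4.1. Then \(CF(Y_{[r:n]};\alpha )=\int f^2/\overline{F}\,dy\) becomes an integral over (0, 1). Function names and the grid size are illustrative assumptions.

```python
def exp_coeffs(alpha, r, n):
    """Coefficients A1, A2, A3 of the exponential concomitant density."""
    d1 = alpha * (2 * r - n - 1) / (n + 1)
    d2 = 2 * alpha ** 2 * (1 - 6 * r * (n - r + 1) / ((n + 1) * (n + 2)))
    return 1 + 3 * d1 + 2.5 * d2, -(6 * d1 + 15 * d2), 15 * d2


def cf_exponential(alpha, r, n, theta=1.0, m=20000):
    """Midpoint-rule estimate of CF(Y_[r:n]; alpha) for exponential Y (rate theta)."""
    a1, a2, a3 = exp_coeffs(alpha, r, n)
    h = 1.0 / m
    total = 0.0
    for i in range(m):
        t = (i + 0.5) * h                           # t = exp(-theta * y)
        g = a1 + a2 * t + a3 * t * t                # f(y) = theta * t * g
        surv = a1 + a2 * t / 2 + a3 * t * t / 3     # survival(y) = t * surv
        total += g * g / surv
    return theta * total * h


def mean_exponential(alpha, r, n, theta=1.0):
    """Mean of Y_[r:n] under the same assumptions."""
    a1, a2, a3 = exp_coeffs(alpha, r, n)
    return (a1 + a2 / 4 + a3 / 9) / theta


cf_first = cf_exponential(0.2, 1, 5)
cf_last = cf_exponential(0.2, 5, 5)
```

Since \(CF(Y_{[r:n]};\alpha )\) equals the expected hazard rate of \(Y_{[r:n]},\) the inequality of Nanda [27] used in Proposition 2 implies that `cf_exponential(...) * mean_exponential(...)` should never fall below 1, which gives a simple sanity check on the output.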

6 Concluding remarks

Among all the known families that generalize the FGM family, we found that only the Sarmanov family shares with the FGM family two properties of theoretical and practical importance. The first property is that both have a single shape parameter, which makes them easy to work with for bivariate data modelling. The second property is radial symmetry, which simplifies the mathematical relationships that describe many of the information measures associated with them. On the other hand, the Sarmanov family surpasses all known families in terms of the high correlation coefficient between its components.

Turning to the issue of modelling multivariate data (with more than two variables), we find the multivariate FGM family, which was proposed by Johnson and Kotz [23]. The multivariate FGM family, which has a direct structure and can describe the interrelationships of two or more variables, is useful as an alternative to the multivariate normal distribution, and it has been applied to statistical modelling in various research fields. A formulation of the Sarmanov family as a multivariate distribution, which benefits from the radial symmetry property and offers high partial correlations between its variables, would be a very useful contribution to the field of multivariate data modelling and represents a potential future development of the current work.

References

Abd Elgawad, M.A., Alawady, M.A.: On concomitants of generalized order statistics from generalized FGM family under a general setting. Math. Slov. 72(2), 507–526 (2021)

Abd Elgawad, M.A., Alawady, M.A., Barakat, H.M., Xiong, Shengwu: Concomitants of generalized order statistics from Huang–Kotz Farlie–Gumbel–Morgenstern bivariate distribution: some information measures. Bull. Malays. Math. Sci. Soc. 43, 2627–2645 (2020)

Abd Elgawad, M.A., Barakat, H.M., Alawady, M.A.: Concomitants of generalized order statistics under the generalization of Farlie–Gumbel–Morgenstern type bivariate distributions. Bull. Iran. Math. Soc. 47, 1045–1068 (2021)

Abd Elgawad, M.A., Barakat, H.M., Alawady, M.A.: Concomitants of generalized order statistics from bivariate Cambanis family: Some information measures. Bull. Iran. Math. Soc. 48, 563–585 (2021)

Abd Elgawad, M.A., Barakat, H.M., Xiong, S., Alyami, S.A.: Information measures for generalized order statistics and their concomitants under general framework from Huang-Kotz FGM bivariate distribution. Entropy 23(3), 335 (2021)

Abo-Eleneen, Z.A., Nagaraja, H.N.: Fisher information in an order statistic and its concomitant. Ann. Inst. Statist. Math. 54(3), 667–680 (2002)

Alawady, M.A., Barakat, H.M., Xiong, S., Abd Elgawad, M.A.: Concomitants of generalized order statistics from iterated Farlie-Gumbel-Morgenstern type bivariate distribution. Commun. Stat. Theory Methods. 51(16), 5488–5504 (2022)

Alawady, M.A., Barakat, H.M., Xiong, S., Abd Elgawad, M.A.: On concomitants of dual generalized order statistics from Bairamov–Kotz–Becki Farlie–Gumbel–Morgenstern bivariate distributions. Asian-Eur. J. Math. 14(10), 2150185 (2021)

Alawady, M.A., Barakat, H.M., Abd Elgawad, M.A.: Concomitants of generalized order statistics from bivariate Cambanis family of distributions under a general setting. Bull. Malays. Math. Sci. Soc. 44(5), 3129–3159 (2021)

Bairamov, I., Altinsoy, B., Kerns, G.J.: On generalized Sarmanov bivariate distributions. TWMS J. Appl. Eng. Math. 1(1), 86–97 (2011)

Balakrishnan, N., Lai, C.D.: Continuous Bivariate Distributions, 2nd edn. Springer, Dordrecht Heidelberg London New York (2009)

Barakat, H.M., Husseiny, I.A.: Some information measures in concomitants of generalized order statistics under iterated FGM bivariate type. Quaest. Math. 44(5), 581–598 (2021)

Barakat, H.M., Husseiny, I.A.: Fisher information and the Kullback-Leibler distance in concomitants of generalized order statistics under iterated FGM family. Kyungpook Math. J. 62(2), 389–405 (2022)

Barakat, H.M., Nigm, E.M., Alawady, M.A., Husseiny, I.A.: Concomitants of order statistics and record values from generalization of FGM bivariate-generalized exponential distribution. J. Appl. Stat. Sci. 18(3), 309–322 (2019)

Barakat, H.M., Nigm, E.M., Husseiny, I.A.: Measures of information in order statistics and their concomitants for the single iterated Farlie-Gumbel-Morgenstern bivariate distribution. Math. Popul. Stud. 28(3), 154–175 (2020)

Barakat, H.M., Nigm, E.M., Syam, A.H.: Concomitants of ordered variables from Huang-Kotz FGM type bivariate-generalized exponential distribution. Bull. Malays. Math. Sci. Soc. 42(1), 337–353 (2019)

Barakat, H.M., Alawady, M.A., Husseiny, I.A., Mansour, G.M.: Sarmanov family of bivariate distributions: statistical properties-concomitants of order statistics-information measures. Bull. Malays. Math. Sci. Soc., to appear (2022). https://doi.org/10.1007/s40840-022-01241-z

Barakat, H.M., Nigm, E.M., Alawady, M.A., Husseiny, I.A.: Concomitants of order statistics and record values from iterated FGM type bivariate-generalized exponential distribution. REVSTAT 19(2), 291–307 (2021)

Bhattacharya, P.K.: Convergence of sample paths of normalized sums of induced order statistics. Ann. Stat. 2(5), 1034–1039 (1974)

David, H.A.: Concomitants of order statistics. Bull. Int. Statist. Inst. 45, 295–300 (1973)

Husseiny, I.A., Barakat, H.M., Mansour, G.M., Alawady, M.A.: Information measures in records and their concomitants arising from Sarmanov family of bivariate distributions. J. Comp. Appl. Math. 408, 114–120 (2022)

Jafari, A.A., Almaspoor, Z., Tahmasebi, S.: General results on bivariate extended Weibull Morgenstern family and concomitants of its generalized order statistics. Ric. Mat. (2021). https://doi.org/10.1007/s11587-021-00680-3

Johnson, N.L., Kotz, S.: On some generalized Farlie-Gumbel-Morgenstern distributions. Commun. Stat. 4(5), 415–427 (1975)

Johnson, N.L., Kotz, S.: On some generalized Farlie-Gumbel-Morgenstern distributions-II: Regression, correlation, and further generalization. Commun. Stat. Theory Methods 6, 485–496 (1977)

Kharazmi, O., Asadi, M.: On the time-dependent Fisher information of a density function. Braz. J. Probab. Stat. 32, 795–814 (2018)

Kharazmi, O., Balakrishnan, N.: Cumulative residual and relative cumulative residual fisher information and their properties. IEEE Trans. Inf. Theory 67(10), 6306–6312 (2021)

Nanda, A.K.: Characterization of distributions through failure rate and mean residual life functions. Statist. Probab. Let. 80, 752–755 (2010)

Rao, C.R.: Linear Statistical Inference and its Applications, 2nd edn. Wiley, New York (1973)

Sarmanov, I. O.: Generalized normal correlation and two dimensional Frechet classes. Soviet Math. Dokl. 7, 596–599 (1966). [English translation; Russian original in Dokl. Akad. Nauk. SSSR 168 (1966) 32–35]

Sarmanov, I. O.: New forms of correlation relationships between positive quantities applied in hydrology. In: Mathematical Models in Hydrology Symposium, IAHS Publication (1974)

Scaria, J., Mohan, S.: Record concomitants of order statistics from Cambanis bivariate logistic distribution. Sci. Soc. 11(2), 181–192 (2013)

Scaria, J., Mohan, S.: Concomitants of order statistics from Cambanis type bivariate exponentiated exponential distribution. Sci. Soc. 14(2), 91–102 (2016)

Schucany, W.R., Parr, W.C., Boyer, J.E.: Correlation structure in Farlie-Gumbel-Morgenstern distributions. Biometrika 65(3), 650–653 (1978)

Tahmasebi, S., Jafari, A.A.: Fisher information number for concomitants of generalized order statistics in Morgenstern family. J. Inf. Math. Sc. 5(1), 15–20 (2013)

Tahmasebi, S., Jafari, A.A.: Concomitants of order statistics and record values from Morgenstern type bivariate-generalized exponential distribution. Bull. Malays. Math. Sci. Soc. 38, 1411–1423 (2015)

Tahmasebi, S., Jafari, A.A., Ahsanullah, M.: Properties on concomitants of generalized order statistics from a bivariate Rayleigh distribution. Bull. Malays. Math. Sci. Soc. 41(1), 355–370 (2018)

Tank, F., Gebizlioglu, O.L.: Sarmanov distribution class for dependent risks and its applications. Belg. Actuar. Bull. 4(1), 50–52 (2004)

Acknowledgements

The authors are grateful to the editors and the anonymous referee for suggestions that improved the presentation substantially.

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB).

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Barakat, H.M., Alawady, M.A., Mansour, G.M. et al. Sarmanov bivariate distribution: dependence structure—Fisher information in order statistics and their concomitants. Ricerche mat (2022). https://doi.org/10.1007/s11587-022-00731-3

DOI: https://doi.org/10.1007/s11587-022-00731-3