Abstract

Likelihood-free methods are useful for parameter estimation of complex models with intractable likelihood functions for which it is easy to simulate data. Such models are prevalent in many disciplines including genetics, biology, ecology and cosmology. Likelihood-free methods avoid explicit likelihood evaluation by finding parameter values of the model that generate data close to the observed data. The general consensus has been that it is most efficient to compare datasets on the basis of a low dimensional informative summary statistic, incurring information loss in favour of reduced dimensionality. More recently, researchers have explored various approaches for efficiently comparing empirical distributions of the data in the likelihood-free context in an effort to avoid data summarisation. This article provides a review of these full data distance based approaches, and conducts the first comprehensive comparison of such methods, both qualitatively and empirically. We also conduct a substantive empirical comparison with summary statistic based likelihood-free methods. The discussion and results offer guidance to practitioners considering a likelihood-free approach. Whilst we find the best approach to be problem dependent, we also find that the full data distance based approaches are promising and warrant further development. We discuss some opportunities for future research in this space. Computer code to implement the methods discussed in this paper can be found at https://github.com/cdrovandi/ABC-dist-compare.


1 Introduction

Likelihood-free Bayesian statistical inference methods are now commonly applied in many different fields. The appeal of such methods is that they do not require a tractable expression for the likelihood function of the proposed model, only the ability to simulate from it. In essence, proposed parameter values of the model are retained if they produce simulated data ‘close enough’ to the observed data. This gives practitioners great flexibility in designing complex models that more closely resemble reality.

Two popular methods for likelihood-free Bayesian inference that have received considerable attention in the statistical literature are approximate Bayesian computation (ABC, Sisson et al. 2018) and Bayesian synthetic likelihood (BSL, Price et al. 2018, Wood 2010). These approaches traditionally assess the ‘closeness’ of observed and simulated data on the basis of a set of summary statistics believed to be informative about the parameters. Both ABC and BSL approximate the likelihood of the observed summary statistic via model simulation, but their estimators take different forms. ABC effectively uses a non-parametric estimate of the summary statistic likelihood (Blum 2010), while BSL uses a parametric approximation via a Gaussian density.

In the context of ABC, the use of a reasonably low-dimensional summary statistic was often seen as necessary to avoid the curse of dimensionality associated with nonparametric conditional density estimation (see Blum 2010 for a discussion of this phenomenon in the context of ABC). The intuition is that it is difficult to assess closeness of high dimensional datasets in Euclidean space. Due to its parametric nature, provided that the distribution of the model summary statistic is sufficiently regular, BSL can cope with a higher dimensional summary statistic relative to ABC (Price et al. 2018). However, BSL ultimately suffers from the same curse.

Recently, there has been a surge of likelihood-free literature that challenges the need for data reduction. The appeal of such approaches is two-fold: firstly, these approaches bypass the difficult issue of selecting useful summary statistics, which are often model and application specific; secondly, depending on the method, and in the limit of infinite computational resources, it may be feasible to recover the exact posterior. The latter is typically not true of summary statistic based approaches, since in almost all cases the statistic is not sufficient (i.e. there is a loss of information). The ultimate question is whether full data approaches can mitigate the curse of dimensionality enough to outperform data reduction approaches. This paper aims to provide insights into the answer to that question.

In the context of ABC, several distance functions have been proposed that compare full observed and simulated datasets via their empirical distributions. For example, the following have been considered: maximum mean discrepancy (Park et al. 2016), Kullback-Leibler divergence (Jiang 2018), Wasserstein distance (Bernton et al. 2019), energy distance (Nguyen et al. 2020), Hellinger distance (Frazier 2020), and the Cramer-von Mises distance (Frazier 2020). Furthermore, in the case of independent observations, Turner and Sederberg (2014) propose an alternative likelihood-free estimator that uses kernel density estimation. However, while there has been some comparison between the different methods, no systematic and comprehensive comparison between the full data approaches and summary statistic based approaches has been undertaken.

This paper has two key contributions. The first provides a review of full data likelihood-free Bayesian methods. The second provides a comprehensive empirical comparison between full data and summary statistic based approaches.

The paper is outlined as follows. In Sect. 2 we provide an overview of likelihood-free methods that use summary statistics, focussing on ABC and BSL. Section 3 reviews full data approaches to likelihood-free inference, discusses connections and provides a qualitative comparison of them. Both the full data and summary statistic based likelihood-free approaches are compared on several examples in Sect. 4. The examples differ in complexity and we consider both simulated and real data scenarios. Finally, Sect. 5 concludes the paper with a discussion and outlines directions for further research.

2 Likelihood-free Bayesian inference

We observe data \({\varvec{y}}=(y_{1},\dots ,y_{n})^{\top }\), \(n\ge 1\), with \(y_i\in \mathcal {Y}\) for all i, and denote by \(P^{(n)}_0\) the true distribution of the observed sample \(\varvec{y}\). In this paper, we assume that each \(y_i \in \mathbb {R}\) is a scalar. However, this assumption can be relaxed for the summary statistic based approaches covered in this section and in the case of certain full data approaches. The true distribution is unknown and instead we consider that the class of probability measures \(\mathcal {P}:=\{P^{(n)}_{{\theta }}:\theta \in \Theta \subset \mathbb {R}^{d_{\theta }}\}\), for some value of \(\theta \), have generated the data, and denote the corresponding conditional density as \(p_n(\cdot \mid \theta )\). Given prior beliefs over the unknown parameters in the model \(\theta \), represented by the probability measure \(\Pi (\theta )\), with its density denoted by \(\pi ({\theta })\), our aim is to produce a good approximation of the exact posterior density

\[\pi (\theta \mid \varvec{y}) \propto p_n(\varvec{y}\mid \theta )\,\pi (\theta ).\]

In situations where the likelihood is cumbersome to derive or compute, sampling from \(\pi ({\theta \mid \varvec{y}})\) can be computationally costly or infeasible. However, so-called likelihood-free methods can still be used to conduct inference on the unknown parameters \(\theta \) by simulating data from the model. The most common implementations of these methods in the statistical literature are approximate Bayesian computation (ABC) and Bayesian synthetic likelihood (BSL). Both ABC and BSL generally reduce the data down to a vector of summary statistics and then perform posterior inference on the unknown \(\theta \), conditional only on this summary statistic.

More formally, let \(\eta (\cdot ):\mathbb {R}^{n}\rightarrow \mathbb {R}^{d_\eta }\) denote a \(d_\eta \)-dimensional map, \(d_\eta \ge d_\theta \), that represents the chosen summary statistics, and let \(\varvec{z}:=(z_1,\dots ,z_n)^{\top }\sim P^{(n)}_\theta \) denote data simulated from the model \(P^{(n)}_\theta \). For \(G_n(\cdot \mid \theta )\) denoting the projection of \(P^{(n)}_\theta \) under \(\eta (\cdot )\), with \(g_n(\cdot \mid \theta )\) its corresponding density, the goal of approximate Bayesian methods is to generate samples from the approximate or ‘partial’ posterior

\[\pi [\theta \mid \eta (\varvec{y})] \propto g_n[\eta (\varvec{y})\mid \theta ]\,\pi (\theta ).\]

However, given the complexity of the assumed model, \(P^{(n)}_\theta \), it is unlikely that the structure of \(G_n(\cdot \mid \theta )\) is any more tractable than the original likelihood function \(p_n(\varvec{y}\mid \theta )\). Likelihood-free methods such as ABC and BSL employ model simulation to stochastically approximate the summary statistic likelihood in various ways.

ABC approximates the likelihood via the following:

\[g_\epsilon [\eta (\varvec{y})\mid \theta ] = \int _{\mathbb {R}^{d_\eta }} K_\epsilon [\rho \{\eta (\varvec{y}), \eta (\varvec{z})\}]\, g_n[\eta (\varvec{z})\mid \theta ]\,\text {d}\eta (\varvec{z}),\]

where \(\rho \{\eta (\varvec{y}), \eta (\varvec{z})\}\) measures the discrepancy between observed and simulated summaries and \(K_\epsilon [\cdot ]\) is a kernel that allocates higher weight to smaller \(\rho \). The bandwidth of the kernel, \(\epsilon \), is often referred to as the tolerance in the ABC literature. The above integral is intractable, but can be estimated unbiasedly by drawing m mock datasets \(\varvec{z}_1,\ldots ,\varvec{z}_m \sim P^{(n)}_\theta \) and computing

\[\hat{g}_\epsilon [\eta (\varvec{y})\mid \theta ] = \frac{1}{m}\sum _{i=1}^{m} K_\epsilon [\rho \{\eta (\varvec{y}), \eta (\varvec{z}_i)\}].\]

In the ABC literature, m is commonly taken to be 1 and the kernel weighting function given by the indicator function, \(K_\epsilon [\rho \{\eta (\varvec{y}), \eta (\varvec{z})\}] = \mathbf {I}[\rho \{\eta (\varvec{y}), \eta (\varvec{z})\} \le \epsilon ]\). Using arguments from the exact-approximate literature (Andrieu and Roberts 2009), unbiasedly estimating the ABC likelihood is enough to produce a Bayesian algorithm that samples from the approximate posterior proportional to \(g_\epsilon [{\eta (\varvec{y})\mid \theta }]\pi (\theta )\).
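The Monte Carlo estimator described above can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `abc_likelihood_estimate`, the callables `simulate` and `summarise`, the Euclidean discrepancy and the toy normal model are all hypothetical choices made here, not part of the paper's code.

```python
import numpy as np

def abc_likelihood_estimate(theta, eta_obs, simulate, summarise, eps, m=1, rng=None):
    """Estimate the ABC likelihood at theta with an indicator kernel.

    Averages I[rho <= eps] over m mock datasets, where rho is taken to be
    the Euclidean distance between summaries (an assumption for this sketch).
    """
    rng = np.random.default_rng() if rng is None else rng
    hits = 0
    for _ in range(m):
        z = simulate(theta, rng)  # mock dataset drawn from the model at theta
        rho = np.linalg.norm(np.atleast_1d(summarise(z) - eta_obs))
        hits += int(rho <= eps)   # indicator kernel with tolerance eps
    return hits / m

# Toy model (hypothetical): n = 50 iid N(theta, 1) draws, summarised by the mean.
def toy_simulate(theta, rng):
    return rng.normal(theta, 1.0, size=50)

toy_summarise = np.mean
```

With `m=1` the estimate is either 0 or 1, which is the accept/reject decision of standard ABC; larger `m` reduces the variance of the estimate at the price of more simulations per parameter value.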

As is evident from the above integral estimator, ABC non-parametrically estimates the summary statistic likelihood. In contrast, BSL uses a parametric estimator. The standard BSL approach approximates \(g_n(\cdot \mid \theta )\) using a Gaussian working likelihood

\[g_A[\eta (\varvec{y})\mid \theta ] = \mathcal {N}[\eta (\varvec{y});\mu (\theta ),\Sigma (\theta )],\]

where \(\mu (\theta )\) and \(\Sigma (\theta )\) denote the mean and variance of the model summary statistic at \(\theta \). In almost any practical example \(\mu (\theta )\) and \(\Sigma (\theta )\) are unknown and we must replace these quantities with those estimated from m independent model simulations. The standard approach is to use the sample mean and variance:

and where each simulated data set \(\varvec{z}^i\), \(i=1,\dots ,m\), is generated iid from \(P^{(n)}_{\theta }\). The synthetic likelihood is then approximated as

Unlike ABC, \(\hat{g}_A[{\eta (\varvec{y})\mid \theta }]\) is not an unbiased estimator of \(g_A[{\eta (\varvec{y})\mid \theta }]\). However, Price et al. (2018) demonstrate empirically that the BSL posterior depends weakly on m, provided that m is chosen large enough so that the plug-in synthetic likelihood estimator has a small enough variance to ensure that MCMC mixing is not adversely affected. More generally, Frazier et al. (2021) demonstrate that if the summary statistics are sub-Gaussian, then the choice of m is immaterial so long as m diverges as n diverges. Price et al. (2018) also consider an unbiased estimator of the multivariate normal density for use within BSL. Given the plug-in estimator’s simplicity and its weak dependence on m, we do not consider the unbiased version here.
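As an illustration of the plug-in synthetic likelihood, the following sketch estimates the mean and covariance of the summary statistic from m simulations and evaluates the Gaussian log-density at the observed summary. The function name and the callables `simulate` and `summarise` are hypothetical, and the covariance uses the \(1/(m-1)\) normalisation of `np.cov`, which is an assumption about the exact form of the sample variance.

```python
import numpy as np

def bsl_log_likelihood(theta, eta_obs, simulate, summarise, m, rng):
    """Plug-in Gaussian synthetic log-likelihood of eta_obs at theta."""
    # Simulate m datasets and stack their summaries into an (m, d_eta) array.
    stats = np.array([np.atleast_1d(summarise(simulate(theta, rng)))
                      for _ in range(m)])
    mu = stats.mean(axis=0)                             # sample mean
    sigma = np.atleast_2d(np.cov(stats, rowvar=False))  # sample covariance
    diff = np.atleast_1d(eta_obs) - mu
    _, logdet = np.linalg.slogdet(sigma)
    quad = diff @ np.linalg.solve(sigma, diff)
    d = diff.size
    return -0.5 * (d * np.log(2.0 * np.pi) + logdet + quad)

# Toy model (hypothetical): iid N(theta, 1) data, summarised by mean and log-variance.
def toy_simulate(theta, rng):
    return rng.normal(theta, 1.0, size=100)

def toy_summarise(x):
    return np.array([x.mean(), np.log(x.var())])
```

The value returned is a log-density, so it can be exponentiated or used directly on the log scale inside an MCMC acceptance ratio.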

There exist a number of extensions to the standard BSL procedure. For example, An et al. (2020) develop a semi-parametric estimator that is more robust to the Gaussian assumption. Further, Priddle et al. (2020) consider a whitening transformation to de-correlate summary statistics combined with a shrinkage estimator of the covariance to reduce the number of model simulations required to precisely estimate the synthetic likelihood. See Drovandi et al. (2018) for some other extensions to BSL. For the examples in this paper, we find that the standard BSL method is sufficient to compare with other methods.

The Monte Carlo estimates of the likelihood obtained from ABC or BSL replace the intractable likelihood within a Bayesian algorithm to sample the approximate posterior. Here we use a Metropolis-Hastings Markov chain Monte Carlo (MCMC) algorithm to sample the ABC or BSL target, in order to ensure that most parameter values are proposed in areas of high (approximate) posterior support. MCMC was first considered as a sampling algorithm for ABC in Marjoram et al. (2003), whereas Wood (2010) and Price et al. (2018) develop MCMC for BSL.
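A minimal sketch of such a sampler is given below, assuming a Gaussian random-walk proposal and a user-supplied function `log_lik_hat(theta, rng)` that returns the log of an ABC or BSL likelihood estimate. The function name, the proposal and the interface are all assumptions made for illustration; they are not taken from the paper's code.

```python
import numpy as np

def mh_with_estimated_likelihood(log_lik_hat, log_prior, theta0, n_iter, step, rng):
    """Random-walk Metropolis-Hastings with an estimated log-likelihood."""
    theta = np.atleast_1d(np.asarray(theta0, dtype=float))
    ll = log_lik_hat(theta, rng)  # estimate at the initial value
    chain = np.empty((n_iter, theta.size))
    for t in range(n_iter):
        prop = theta + step * rng.standard_normal(theta.size)
        lp_prop = log_prior(prop)
        if np.isfinite(lp_prop):  # skip simulation outside the prior support
            ll_prop = log_lik_hat(prop, rng)
            log_alpha = ll_prop + lp_prop - ll - log_prior(theta)
            if np.log(rng.uniform()) < log_alpha:
                # Keep the accepted estimate; it is reused, not recomputed.
                theta, ll = prop, ll_prop
        chain[t] = theta
    return chain
```

The likelihood estimate for the current state is stored and reused rather than refreshed at each iteration, which is the convention in the exact-approximate setting referred to above.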

Traditionally, the choice of summary statistics in likelihood-free methods such as ABC and BSL has been crucial. In the context of ABC, it is generally agreed that one should aim for a low dimensional summary statistic that hopefully carries most of the information contained in the full data. BSL has been shown to be more tolerant to a higher dimensional summary statistic than ABC, provided that the distribution of the model summary statistic is regular enough (Price et al. 2018; Frazier and Drovandi 2021; Frazier et al. 2021). However, increasing the number of statistics in BSL still requires increasing the number of model simulations for precisely estimating the synthetic likelihood, so care still needs to be taken.

Prangle (2018) provides a review of different data dimension reduction methods applied in ABC. These approaches also hold some relevance for BSL. Ultimately, the optimal choice of summary statistics will be problem dependent. For the examples in this paper, we either use a summary statistic that has been reported to perform well from the literature, or the approach we now describe. For the types of examples considered in this paper, namely data with independent observations, a reasonable approach to obtaining useful summary statistics is via indirect inference (e.g. Gourieroux et al. 1993; Drovandi et al. 2015). In indirect inference, we construct an auxiliary model with a tractable likelihood \(p_A(\varvec{y}\mid \phi )\) that is parameterised by a vector of unknown parameters \(\phi \), where \(\phi \in \Phi \subset \mathbb {R}^{d_{\phi }}\) with \(d_{\phi } \ge d_{\theta }\). The idea is that the auxiliary model is not mechanistic but can still fit the data reasonably well and thus capture its statistical features. Either the parameter estimate (Drovandi et al. 2011) or the score of the auxiliary model (Gleim and Pigorsch 2013) can be used to form the summary statistic. Here we use the score, since it only requires fitting the auxiliary model to the observed data and not any datasets simulated during ABC or BSL. For an arbitrary dataset \(\varvec{z}\), the score function is given by

\[S(\varvec{z},\phi ) = \left( \frac{\partial \log p_A(\varvec{z}\mid \phi )}{\partial \phi _1},\dots ,\frac{\partial \log p_A(\varvec{z}\mid \phi )}{\partial \phi _{d_\phi }}\right) ^{\top }.\]

The observed statistic is then \(\eta (\varvec{y}) = S(\varvec{y},\phi (\varvec{y}))\) where \(\phi (\varvec{y}) = \arg \max _\phi p_A(\varvec{y}\mid \phi )\) is the maximum likelihood estimate (MLE). Thus, the observed statistic is a vector of zeros of length \(d_{\phi }\). We drop \(\phi (\varvec{y})\) from the notation of the summary statistic, since it remains fixed throughout. That is, we evaluate the score at \(\phi (\varvec{y})\) for any dataset \(\varvec{z}\) simulated in ABC or BSL. For ABC with summary statistics we use the Mahalanobis distance as the discrepancy function. The weighting matrix of the Mahalanobis distance is \(\varvec{J}({\phi }(\varvec{y}))^{-1}\), where \(\varvec{J}({\phi }(\varvec{y}))\) is the observed information matrix evaluated at the observed data and MLE \(\phi (\varvec{y})\).
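To make the score-based summary concrete, here is a small sketch that uses a Gaussian auxiliary model \(N(a, s^2)\) with \(\phi = (a, s^2)\). The choice of auxiliary model and all function names are assumptions made purely for illustration; in practice the auxiliary model is chosen to suit the application.

```python
import numpy as np

def fit_gaussian_auxiliary(y):
    """MLE phi(y) = (mean, variance) of the auxiliary N(a, s2) model."""
    y = np.asarray(y, dtype=float)
    a = y.mean()
    s2 = np.mean((y - a) ** 2)
    return a, s2

def score(z, phi):
    """Score of the auxiliary log-likelihood at phi = (a, s2) for dataset z."""
    z = np.asarray(z, dtype=float)
    a, s2 = phi
    n = z.size
    d_a = np.sum(z - a) / s2
    d_s2 = -n / (2.0 * s2) + np.sum((z - a) ** 2) / (2.0 * s2 ** 2)
    return np.array([d_a, d_s2])

def mahalanobis_discrepancy(z, phi_obs, n_obs):
    """Distance between S(z, phi_obs) and the observed score (a zero vector),
    weighted by the inverse observed information J(phi_obs)^{-1}."""
    a, s2 = phi_obs
    # Observed information of the Gaussian auxiliary model at its MLE.
    J = np.diag([n_obs / s2, n_obs / (2.0 * s2 ** 2)])
    s = score(z, phi_obs)
    return float(np.sqrt(s @ np.linalg.solve(J, s)))
```

Note that `fit_gaussian_auxiliary` is called once, on the observed data only; every simulated dataset is then passed through `score` at the fixed value \(\phi (\varvec{y})\).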

A criticism of summary statistic based approaches is that their choice is often ad hoc and there will generally be an inherent loss of information, i.e. \(\pi [{\theta \mid \eta (\varvec{y})}] \ne \pi [{\theta \mid \varvec{y}}]\). Apart from exponential family models, which appear infrequently in the likelihood-free literature, sufficient statistics of dimension lower than the dimension of the full data do not exist. Indeed, the use of summary statistics has often been considered a necessary evil to overcome the curse of dimensionality of likelihood-free methods. However, there has recently been a surge of new approaches that seek to avoid summary statistic selection in favour of directly comparing, in an appropriate distance, the observed and simulated samples. By avoiding summarisation via the direct comparison of observed and simulated data, in a well-chosen distance, the hope is that these approaches will yield more informative inference on the unknown parameters.

3 Full data approaches

All approaches to likelihood-free inference discussed so far rely on the explicit use of a summary statistic \(\eta (\varvec{y})\) that is of much lower dimension than \(\varvec{y}\). The need to consider low dimensional summaries is due to the fact that estimating \(\pi [\theta \mid \eta (\varvec{y})]\) via commonly applied algorithms is akin to nonparametric conditional density estimation, i.e., estimating the density of \(\theta \) conditional on \(\eta (\varvec{y})\), and it is well-known that the accuracy of nonparametric conditional density estimators degrades rapidly as the dimension of \(\eta (\varvec{y})\) increases (see Blum 2010 for an in-depth discussion on this point). On an intuitive level, the curse of dimensionality is caused by the fact that in a high-dimensional Euclidean space, almost all vectors in that space, e.g., \(\varvec{y}\) and \(\varvec{z}\), are equally as distant from each other, so that discerning differences between any two vectors becomes increasingly difficult as the dimension increases.

Ultimately, the curse of dimensionality has led to a fundamental tension in likelihood-free inference: researchers must either make use of exorbitant computational resources in order to reliably compare \(\varvec{y}\) and \(\varvec{z}\), or they must reduce the data down to summaries \(\eta (\varvec{y})\), which can entail an excessive loss of information if \(\eta (\varvec{y})\) is not chosen carefully. However, this tension can actually be cut if we move away from attempting to compare elements in Euclidean space, i.e., comparing \(\varvec{y}\) and \(\varvec{z}\), and instead try to compare \(\varvec{y}\) and \(\varvec{z}\) using their probability distributions.

From the above observation, several approaches for comparing observed and simulated datasets via their distributions have recently been proposed within ABC inference. Recall that \(\mathcal {P}\) denotes the collection of models used to simulate data, \(P_\theta ^{(n)}\in \mathcal {P}\) denotes the distribution of \(\varvec{z}\mid \theta \), \(P_0^{(n)}\in \mathcal {P}\) the distribution of \(\varvec{y}\), and define \(\rho :\mathcal {P}\times \mathcal {P}\rightarrow \mathbb {R}_{+}\) to be a statistical distance on the space of probability distributions \(\mathcal {P}\).

Likelihood-free methods based on \(\rho (\cdot ,\cdot )\) seek to select draws of \(\theta \) such that \(\rho (P^{(n)}_0,P_\theta ^{(n)})\) is “small” with large probability. This construction means that likelihood-free methods based on comparing distributions can differ in two ways: one, the choice of \(\rho (\cdot ,\cdot )\); two, their use of the kernel \(K_\epsilon \) in constructing the posterior for \(\theta \). Since the second aspect has been shown to be largely immaterial for inference in the case of summary statistic based ABC, in this review we focus on the choice of \(\rho (\cdot ,\cdot )\).

In practice, calculating \(\rho (P_\theta ^{(n)},P_0^{(n)})\) is infeasible since \(P_0^{(n)}\) is unknown and \(P_\theta ^{(n)}\) is intractable. To circumvent this issue, instead of attempting to compare the joint laws \(P_0^{(n)}\) and \(P_\theta ^{(n)}\), existing full data approaches only compare marginal distributions. The latter (marginal) distributions can be conveniently estimated using the empirical distributions of \(\varvec{y}\) and \(\varvec{z}\): for \(\delta _{x}\) denoting the Dirac measure on \(x\in \mathcal {Y}\), define the empirical distribution of the observed data \(\varvec{y}\) as \(\hat{\mu } = n^{-1}\sum _{i=1}^n \delta _{y_i}\), and, for any \(\theta \in \Theta \), let \(\hat{\mu }_{\theta }=n^{-1}\sum _{i=1}^n \delta _{z_i}\), where \(\varvec{z}\sim P_\theta ^{(n)}\), denote the empirical distribution of the simulated data. Even though the likelihood is intractable, \(\hat{\mu }\) and \(\hat{\mu }_{\theta }\) can always be constructed.

Given an observed dataset \(\varvec{y}\), and a particular choice for \(\rho (\cdot ,\cdot )\), the ABC posterior based on the statistical distance \(\rho (\cdot ,\cdot )\) uses the likelihood

\[p^\rho _\epsilon (\varvec{y}\mid \theta ) = \int K_\epsilon [\rho (\hat{\mu },\hat{\mu }_\theta )]\, p_n(\varvec{z}\mid \theta )\,\text {d}\varvec{z},\]

and yields the ABC posterior

\[\pi ^\rho _\epsilon [\theta \mid \varvec{y}] \propto \pi (\theta )\int K_\epsilon [\rho (\hat{\mu },\hat{\mu }_\theta )]\, p_n(\varvec{z}\mid \theta )\,\text {d}\varvec{z}.\]

The posterior notation \(\pi ^\rho _\epsilon [\theta \mid \varvec{y}]\) highlights the fact that this posterior is conditioned on the entire sample of observed data \(\varvec{y}\) (via \(\hat{\mu }\)) and depends on the choice of distance \(\rho (\cdot ,\cdot )\).

While there are many possible distances to choose from, and thus many different posteriors one could compute, two different choices of \(\rho (\cdot ,\cdot )\) can deliver posteriors that vary significantly from one another. Moreover, the resources necessary to compute the posterior under different choices for \(\rho (\cdot ,\cdot )\) can also vary drastically. In addition, not all distances on \(\mathcal {P}\) are created equal; certain distances yield more reliable posterior approximations than others depending on the size, type, and variability of the data. Given these issues regarding the choice of \(\rho (\cdot ,\cdot )\), in what follows we review several approaches that have been employed in the literature and attempt to highlight the types of problems for which they are best suited.

3.1 Wasserstein distance

One of the most commonly employed approaches to full data inference in ABC, as proposed by Bernton et al. (2019), takes \(\rho (\cdot ,\cdot )\) to be the Wasserstein distance. Let \((\mathcal {Y},d)\) be a metric space, and for \(p\ge 1\) let \(\mathcal {P}_p(\mathcal {Y})\) denote the collection of all probability measures \(\mu \) defined on \(\mathcal {Y}\) with finite p-th moment. Then, in the case of scalar random variables, the p-Wasserstein distance on \(\mathcal {P}\) between \(\mu ,\nu \in \mathcal {P}_p(\mathcal {Y})\) can be defined as

\[\mathcal {W}_p(\mu ,\nu ) = \left( \int _0^1 \left| F^{-1}_\mu (u)-F^{-1}_\nu (u)\right| ^p\text {d}u\right) ^{1/p},\]

where \(F_\mu (\cdot )\) denotes the cumulative distribution function (CDF) of the distribution \(\mu \), and \(F^{-1}_\mu (\cdot )\) its quantile function. For a review of the Wasserstein distance, and optimal transport more broadly, we refer to Villani (2008).

While the above formula looks complicated, the Wasserstein distance between the empirical distributions \(\hat{\mu }\) and \(\hat{\mu }_\theta \) takes a simpler form in the case of \(p=1\). Namely, for \(y_{(i)}\) denoting the i-th sample order statistic,

\[\mathcal {W}_{1}\left( \hat{\mu }, \hat{\mu }_\theta \right) = \frac{1}{n}\sum _{i=1}^{n}\left| y_{(i)}-z_{(i)}\right| ,\]

which is nothing but comparing, in the \(L_1\) norm, the (average of the) n order statistics calculated from \(\varvec{y}\) and \(\varvec{z}\). As such, calculation of \(\mathcal {W}_{1}\left( \hat{\mu }, \hat{\mu }_\theta \right) \) only requires sorting the samples (separately) and taking the absolute difference between the observed and simulated order statistics.
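The sorting-based calculation can be written in a few lines. The sketch below assumes scalar observations and equal numbers of observed and simulated points, as in the setting of this section; the function name is a hypothetical choice.

```python
import numpy as np

def wasserstein1(y, z):
    """1-Wasserstein distance between two equal-size scalar samples."""
    y = np.sort(np.asarray(y, dtype=float))
    z = np.sort(np.asarray(z, dtype=float))
    if y.shape != z.shape:
        raise ValueError("this sketch assumes samples of equal size")
    # Mean absolute difference between matched order statistics.
    return float(np.mean(np.abs(y - z)))
```

Each sample is sorted separately, so the cost is dominated by two sorts of length n.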

The use of \(\mathcal {W}_{1}\left( \hat{\mu }, \hat{\mu }_\theta \right) \) within ABC, by replacing \(\rho (\hat{\mu },\hat{\mu }_\theta )\) in (1) by \(\mathcal {W}_{1}\left( \hat{\mu }, \hat{\mu }_\theta \right) \), can be interpreted as matching all quantiles of the empirical and simulated distributions. We note here that the use of quantiles as summary statistics in ABC is commonplace (see, e.g., Fearnhead and Prangle 2012). Given this interpretation, we would expect that ABC based on \(\mathcal {W}_{1}\left( \hat{\mu }, \hat{\mu }_\theta \right) \) will produce reliable posterior approximations in situations where the quantiles of the distribution are sensitive to fluctuations in \(\theta \). However, if the quantiles of \(\varvec{z}\) do not vary significantly as \(\theta \) changes, the Wasserstein distance will not change in a meaningful manner, and the posterior approximation may be poor. For instance, if the data displays dynamic time-varying features in certain conditional moments, then it may be difficult for ABC based on the Wasserstein to account for these features, and the approach may have to be supplemented with additional summaries that specifically target the dynamics inherent in the series.

Lastly, we note that while ABC based on the Wasserstein is a “black-box” approach to choosing summaries, since the approach boils down to matching sorted samples of observations in the \(L_1\) norm, we may encounter the curse of dimensionality in situations where n is large.

3.1.1 Energy distance

Energy distances (or statistics) are classes of functions for measuring the discrepancy between two random variables; we refer to Székely and Rizzo (2005) for a review of the energy distances and their applications in statistics. To define the energy distance let \(Y_1\in \mathcal {Y}\) and \(Z_1\in \mathcal {Y}\) denote independent variables with distributions \(\mu \) and \(\nu \), such that \(\int _{\mathcal {Y}}\Vert y_1\Vert _p\text {d}\mu (y_1)<\infty \) and \(\int _{\mathcal {Y}}\Vert z_1\Vert _p\text {d}\nu (z_1)<\infty \). Also, let \(Y_2\) and \(Z_2\) denote random variables with the same distribution as \(Y_1\) and \(Z_1\), respectively, but independent of \(Y_1\) and \(Z_1\). For an integer \(p\ge 1\), the p-th energy distance \(\mathcal {E}_p(\mu ,\nu )\) can be defined as

\[\mathcal {E}_p(\mu ,\nu ) = 2\mathbb {E}\left| Y_1-Z_1\right| ^p-\mathbb {E}\left| Y_1-Y_2\right| ^p-\mathbb {E}\left| Z_1-Z_2\right| ^p\]

and satisfies \(\mathcal {E}_p(\mu ,\nu )\ge 0\), with equality if and only if \(\mu =\nu \) (Székely and Rizzo 2005). Using this latter property, \(\sqrt{\mathcal {E}_p(\mu ,\nu )}\) can be viewed as a metric on the space of univariate distribution functions.

The inequality \(\mathcal {E}_p(\mu ,\nu )\ge 0\) provides motivation for using this distance to measure the discrepancy between probability distributions of two separate samples of observations, and in this way is related to other nonparametric two-sample test statistics (such as the Cramer-von Mises statistic discussed later). The ability of the energy distance to reliably discriminate between two distributions has led Nguyen et al. (2020) to use the energy distance to compare \(\varvec{y}\) and \(\varvec{z}\) in order to produce an ABC-based posterior for \(\theta \). Since \(\mathcal {E}_p(\mu ,\nu )\) cannot be calculated directly, Nguyen et al. (2020) propose to replace the energy distance by the V-statistic estimator

\[\widehat{\mathcal {E}}_p(\hat{\mu },\hat{\mu }_\theta ) = \frac{2}{n^2}\sum _{i=1}^{n}\sum _{j=1}^{n}\left| y_i-z_j\right| ^p-\frac{1}{n^2}\sum _{i=1}^{n}\sum _{j=1}^{n}\left| y_i-y_j\right| ^p-\frac{1}{n^2}\sum _{i=1}^{n}\sum _{j=1}^{n}\left| z_i-z_j\right| ^p\]

and thus set \(\rho (\cdot ,\cdot )=\widehat{\mathcal {E}}(\hat{\mu },\hat{\mu }_\theta )\) in equation (1).

The main restriction on the use of \(\mathcal {E}_p\) in ABC-based inference relates to its moment requirements. Existence of \(\mathcal {E}_p(\mu ,\nu )\) requires at least a p-th moment for both variables under analysis. Such an assumption is violated for heavy tailed data, such as stable distributions, which are a commonly encountered example in the ABC literature. Consequently, if there are outliers in the data, ABC inference predicated on the energy distance may not be accurate.

In addition, the V-statistic estimator \(\widehat{\mathcal {E}}_p(\hat{\mu },\hat{\mu }_\theta )\) generally requires \(O(n^2)\) computations. Therefore, in situations where n is large, or if many evaluations of \(\mathcal {E}_p(\mu ,\nu )\) are required, posterior inference based on \(\mathcal {E}_p(\mu ,\nu )\) may be time consuming.
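The quadratic cost is visible in a direct implementation of the V-statistic, which forms all pairwise absolute differences. The sketch below assumes scalar data and defaults to \(p=1\); the function name is hypothetical.

```python
import numpy as np

def energy_distance_vstat(y, z, p=1):
    """V-statistic estimate of the energy distance between scalar samples."""
    y = np.asarray(y, dtype=float)
    z = np.asarray(z, dtype=float)
    # Pairwise distance matrices: this is where the O(n^2) work and memory go.
    d_yz = np.abs(y[:, None] - z[None, :]) ** p
    d_yy = np.abs(y[:, None] - y[None, :]) ** p
    d_zz = np.abs(z[:, None] - z[None, :]) ** p
    return float(2.0 * d_yz.mean() - d_yy.mean() - d_zz.mean())
```

For large n the three n-by-n matrices may not fit in memory, in which case the sums would need to be accumulated in blocks.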

3.2 Maximum mean discrepancy

The energy distance is a specific member of the class of maximum mean discrepancy (MMD) distances between two probability measures. Let \(k:\mathcal {Y}\times \mathcal {Y}\rightarrow \mathbb {R}\) be a Mercer kernel function, and let \(Y_1\in \mathcal {Y}\) and \(Z_1\in \mathcal {Y}\) be distributed according to \(\mu \) and \(\nu \), respectively, with \(Y_2,\;Z_2\) again denoting iid copies of \(Y_1,\;Z_1\). Then the MMD between \(\mu \) and \(\nu \) is given by

\[\text {MMD}^2(\mu ,\nu ) = \mathbb {E}[k(Y_1,Y_2)]+\mathbb {E}[k(Z_1,Z_2)]-2\mathbb {E}[k(Y_1,Z_1)].\]

The choice of kernel in the MMD determines which features of the probability distributions under analysis one is interested in discriminating against. If the kernel is taken to be polynomial, as in the energy distance, then one is interested in capturing differences in moments between the two distributions. If instead one chooses a class of kernels such as the Gaussian, \(\exp \left( -\Vert y-z\Vert ^2_2/2\sigma \right) \) or Laplace, \(\exp \left( -|y-z|_1/\sigma \right) \), then one attempts to match all moments of the two distributions.

As with the energy distance, direct calculation of MMD is infeasible in cases where \(\mu ,\nu \) are unknown and/or intractable. However, writing MMD in terms of expectations allows us to consider the following estimator based on \(\varvec{y}\) and \(\varvec{z}\):

\[\widehat{\text {MMD}}^2(\hat{\mu },\hat{\mu }_\theta ) = \frac{1}{n(n-1)}\sum _{i\ne j}k(y_i,y_j)+\frac{1}{n(n-1)}\sum _{i\ne j}k(z_i,z_j)-\frac{2}{n^2}\sum _{i=1}^{n}\sum _{j=1}^{n}k(y_i,z_j).\]

The ability to bypass summary statistics via the MMD in ABC was initially proposed by Park et al. (2016), and has found subsequent use in several studies. The benefits of MMD are most appreciable in cases where initial summary statistics are hard to construct, or in situations where the structure of the data makes constructing a single set of summary statistics to capture all aspects of the data difficult, such as in dynamic queuing networks (Ebert et al. 2018).

The MMD estimator \(\widehat{\text {MMD}}^2(\hat{\mu },\hat{\mu }_\theta )\) can be seen as an unbiased U-statistic estimator of the population counterpart. Therefore, \(\widehat{\text {MMD}}^2(\hat{\mu },\hat{\mu }_\theta )\) need not be bounded below by zero (i.e., it can take negative values). Given this fact, Nguyen et al. (2020) argue that it is not necessarily suitable as a discrepancy measure for use in generative models.

Unlike the Wasserstein or energy distance, the use of MMD requires an explicit choice of kernel function, and it is currently unclear how the resulting choice affects the accuracy of the posterior approximation. In particular, while it is common to consider a Gaussian kernel, it is unclear whether this choice is preferable in all situations. Moreover, we note that, as in the case of the Energy distance, the choice of kernel in MMD automatically imposes implicit moment assumptions. Namely, the expectations that define the MMD criterion must exist. Therefore, depending on the kernel choice, MMD may not yield reliable posterior inferences if there are outliers in the data or if the data has heavy tails.

In addition, it is important to point out that the calculation of \(\widehat{\text {MMD}}^2(\hat{\mu },\hat{\mu }_\theta )\) requires \(O(n^2)\) calculations, which can become time consuming when n is large, and/or when many evaluations of \(\widehat{\text {MMD}}^2(\hat{\mu },\hat{\mu }_\theta )\) are required to obtain an accurate posterior approximation.
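A compact sketch of an unbiased MMD estimate with a Gaussian kernel is given below. It assumes scalar data, and it parameterises the kernel as \(\exp \{-(y-z)^2/(2\sigma ^2)\}\), which is a common convention but an assumption here; the function names and the default bandwidth are likewise hypothetical.

```python
import numpy as np

def gaussian_kernel(a, b, sigma=1.0):
    """Gaussian kernel matrix between scalar samples a and b (assumed scaling)."""
    return np.exp(-((a[:, None] - b[None, :]) ** 2) / (2.0 * sigma ** 2))

def mmd2_unbiased(y, z, sigma=1.0):
    """Unbiased estimate of the squared MMD; it can be negative."""
    y = np.asarray(y, dtype=float)
    z = np.asarray(z, dtype=float)
    n, m = y.size, z.size
    k_yy = gaussian_kernel(y, y, sigma)
    k_zz = gaussian_kernel(z, z, sigma)
    k_yz = gaussian_kernel(y, z, sigma)
    # Within-sample terms exclude the diagonal (i == j) entries.
    term_yy = (k_yy.sum() - np.trace(k_yy)) / (n * (n - 1))
    term_zz = (k_zz.sum() - np.trace(k_zz)) / (m * (m - 1))
    return float(term_yy + term_zz - 2.0 * k_yz.mean())
```

Passing the same sample twice gives a strictly negative value whenever the points are distinct, which illustrates why the unbiased estimate is not bounded below by zero.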

3.3 Cramer-von Mises distance

The Cramer distance between the empirical CDF of the observed sample, \(\hat{\mu }\), and a theoretical distribution \(\mu _\theta \) is defined as the \(L_2\) distance between \(\hat{\mu }\) and \(\mu _\theta \):

\[\int _{-\infty }^{\infty }\left[ \hat{\mu }(t)-\mu _\theta (t)\right] ^2\text {d}t.\]

However, practical use of the above distance is made difficult by the fact that the distribution of the distance depends on the specific \(\mu _\theta \) under hypothesis. To rectify this issue, we integrate the Cramer distance with respect to the hypothesised measure, \(\mu _\theta \), to obtain the Cramer-von Mises (CvM) distance

\[\mathcal {C}(\hat{\mu },\mu _\theta ) = \int _{-\infty }^{\infty }\left[ \hat{\mu }(t)-\mu _\theta (t)\right] ^2\text {d}\mu _\theta (t),\]

which has a distribution that, by construction, does not depend on \(\mu _\theta \) (see, e.g., Anderson 1962).

In the case of ABC, the measure \(\mu _\theta \) is intractable, so direct calculation of \(\mathcal {C}(\hat{\mu },\mu _\theta )\) is infeasible and we can instead employ the following estimator of the CvM distance: for \(\widehat{H}(t)=\frac{1}{2}\left[ \hat{\mu }_n(t)+\hat{\mu }_\theta (t)\right] \)

where \(\hat{\mu }_\theta (t)\) denotes the empirical CDF at the point t based on the simulated data \(\varvec{z}\).

For continuously distributed data, \(\widehat{\mathcal {C}}(\hat{\mu },\hat{\mu }_\theta )\) can be rewritten in terms of the ranks of the observed and simulated samples. Let \(h_{(1)}<\dots <h_{(2n)}\) denote the ordered joint sample \(\varvec{h}=(\varvec{y}',\varvec{z}')' \). Define \(r_{(1)}<\dots <r_{(n)}\) as the corresponding ranks in \(\varvec{h}\) associated with \(\varvec{y}\), and likewise let \(s_{(1)}< \dots < s_{(n)}\) denote the ranks in \(\varvec{h}\) associated with \(\varvec{z}\), then Anderson (1962) showed that

The above formula makes clear that calculating the CvM distance is quite simple, as it just involves sorting the entire sample, and calculating the corresponding ranks of \(\varvec{y}\) and \(\varvec{z}\) in the joint sample, \(\varvec{h}\).
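
The rank-based calculation can be sketched as follows. This is a minimal illustration under stated assumptions: equal sample sizes, no ties, and the two-sample identity of Anderson (1962) as it is commonly stated, rescaled so that it equals the integral of the squared ECDF difference against the pooled ECDF \(\widehat{H}\). The normalisation used in the display above may differ by a constant factor, and the function names are hypothetical.

```python
import random


def cvm_direct(y, z):
    """Integrate the squared ECDF difference against the pooled ECDF."""
    n, m = len(y), len(z)
    total = 0.0
    for t in y + z:
        f_y = sum(v <= t for v in y) / n   # ECDF of y at t
        f_z = sum(v <= t for v in z) / m   # ECDF of z at t
        total += (f_y - f_z) ** 2
    return total / (n + m)                 # each pooled point has mass 1/(n+m)


def cvm_ranks(y, z):
    """Same quantity via ranks in the joint sample (equal sizes, no ties)."""
    n = len(y)
    if len(z) != n:
        raise ValueError("this sketch assumes equal sample sizes")
    # Sort the joint sample once, remembering which sample each point came from.
    joint = sorted([(v, 0) for v in y] + [(v, 1) for v in z])
    r = [rank for rank, (_, src) in enumerate(joint, start=1) if src == 0]
    s = [rank for rank, (_, src) in enumerate(joint, start=1) if src == 1]
    u = n * (sum((ri - i) ** 2 for i, ri in enumerate(r, start=1))
             + sum((sj - j) ** 2 for j, sj in enumerate(s, start=1)))
    # Anderson's statistic T includes a factor n*m/(n+m) = n/2; divide it out.
    t_stat = u / (2.0 * n ** 3) - (4.0 * n * n - 1.0) / (12.0 * n)
    return 2.0 * t_stat / n


rng = random.Random(2)
y = [rng.gauss(0.0, 1.0) for _ in range(100)]
z = [rng.gauss(0.3, 1.0) for _ in range(100)]
print(cvm_direct(y, z), cvm_ranks(y, z))
```

Because only ranks enter `cvm_ranks`, applying the same strictly increasing transformation to both samples leaves the value unchanged.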

The CvM-statistic has certain advantages over other possible distance choices. Most notably, the CvM distance is robust to heavy-tailed distributions and outliers. This property has immediate benefits in the realm of ABC, where it is common to encounter stable distributed random variables, which may not have any finite moments. Furthermore, the CvM distance can be used in any situation where the ECDF can be reliably estimated; i.e., it can be reliably implemented for independent, weakly dependent or cross-sectionally dependent data. An additional advantage is that inference based on the CvM distance is often less sensitive to model misspecification than inferences based on other distances. This latter property is what motivates Frazier (2020) to apply the CvM in misspecified generative models.Footnote 8

While the CvM distance is useful, its computation essentially boils down to estimating two empirical CDFs, which means that if the sample size is relatively small, the estimated CvM distance can be noisy and the resulting ABC inference poor. Further to this point, since the CvM distance is based on the difference of two CDFs, which are bounded on [0, 1], differences between the CDFs that only occur in the tails of the data become “pinched” and are unlikely to result in a “large” value of \(\widehat{\mathcal {C}}(\hat{\mu },\hat{\mu }_\theta )\). Hence, if there are parameters of the model that explicitly capture behavior in the far tails of the data, but do not impact other features of the distribution, such as skewness or kurtosis, then the CvM may yield inaccurate inferences for these parameters.

In terms of computation, the CvM distance is relatively simple to calculate. However, we note that since the CvM requires sorting the joint sample \(\varvec{h}=(\varvec{y}',\varvec{z}')'\), calculation of \(\widehat{\mathcal {C}}(\hat{\mu },\hat{\mu }_\theta )\) in large samples may take longer than \(\mathcal {W}_1(\hat{\mu },\hat{\mu }_\theta )\), which only requires sorting the individual samples.

3.4 Kullback-Leibler divergence

The last class of statistical distances we review are those based on Kullback-Leibler (KL) divergence. Given two iid datasets \(\varvec{y}\) and \(\varvec{z}\), Jiang (2018) propose to conduct posterior inference on \(\theta \) by choosing as the distance \(\rho (\cdot ,\cdot )\) in (1) the KL divergence between the densities of \(\varvec{y}\) and \(\varvec{z}\). Assume that \(\varvec{y}\) is generated iid from \(\mu \) with density \(f_\mu :=\text {d}\mu /\text {d}\lambda \), and \(\varvec{z}\) iid from \(\nu \) with density \(f_\nu :=\text {d}\nu /\text {d}\lambda \), where \(\text {d}\lambda \) denotes some dominating measure. The KL divergence between \(f_\mu \) and \(f_\nu \) is defined as

\[ \text {KL}(f_\mu ,f_\nu )=\int f_\mu (x)\log \left( \frac{f_\mu (x)}{f_\nu (x)}\right) \text {d}\lambda (x), \]

and is zero if and only if \(f_\mu =f_\nu \).

Similar to the other distances discussed above, calculation of \(\text {KL}(f_\mu ,f_\nu )\) is infeasible in the ABC context. To this end, given observed data \(\varvec{y}\) and simulated data \(\varvec{z}\), Jiang (2018) estimate \(\text {KL}(f_\mu ,f_\nu )\) using the 1-nearest neighbour density estimator of the KL divergence presented in Pérez-Cruz (2008):

The above discrepancy is simple to calculate and has a time cost of \(O(n\ln n)\) and thus is only marginally slower to calculate than any of the other distances discussed above, except for the MMD or energy distance, which both have a cost of \(O(n^2)\).
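
A minimal sketch of a 1-nearest-neighbour KL estimator for univariate data is given below. It assumes the form commonly attributed to Pérez-Cruz (2008), namely the average of \(\log (\nu _i/\rho _i)\) plus \(\log (m/(n-1))\), where \(\rho _i\) is the distance from \(y_i\) to its nearest neighbour among the other observed points and \(\nu _i\) is its distance to the nearest simulated point. The function names are hypothetical and the data are assumed to contain no ties. Sorting once and locating neighbours by bisection gives the \(O(n\ln n)\) cost.

```python
import bisect
import math
import random


def nearest_distance(sorted_vals, x, exclude_self=False):
    """Distance from x to its nearest neighbour in a sorted list.

    With exclude_self=True, x is assumed to be in the list and is skipped.
    """
    i = bisect.bisect_left(sorted_vals, x)
    right = i + 1 if exclude_self else i   # skip x's own slot if requested
    candidates = []
    if i > 0:
        candidates.append(x - sorted_vals[i - 1])
    if right < len(sorted_vals):
        candidates.append(sorted_vals[right] - x)
    return min(candidates)


def kl_1nn(y, z):
    """1-nearest-neighbour estimate of KL(f_y || f_z) for 1-D samples."""
    n, m = len(y), len(z)
    ys, zs = sorted(y), sorted(z)          # O(n log n) preprocessing
    total = 0.0
    for yi in y:
        rho = nearest_distance(ys, yi, exclude_self=True)
        nu = nearest_distance(zs, yi)
        total += math.log(nu / rho)
    return total / n + math.log(m / (n - 1))


rng = random.Random(4)
y = [rng.gauss(0.0, 1.0) for _ in range(1000)]
z = [rng.gauss(1.0, 1.0) for _ in range(1000)]
print(kl_1nn(y, z))
```

For multivariate data the sum would be multiplied by the dimension and the neighbour search replaced by a spatial index; the sketch deliberately stays in one dimension.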

Using \(\widehat{\text {KL}}(\varvec{y},\varvec{z})\) in (1), Jiang (2018) compares this approach against other ABC approaches based on both full data distances, such as the Wasserstein, and based on automatic summary statistics a la Fearnhead and Prangle (2012). The results suggest that ABC-inference based on the KL divergence can outperform other measures when the model is correctly specified, and when the data is iid, at least in relatively small samples.Footnote 9

While useful, the approach of Jiang (2018) is only valid for absolutely continuous distributions, and is not applicable for discrete or mixed data. In such cases, and if one still wishes to use something like the KL divergence to conduct ABC, one can instead use the approach proposed by Turner and Sederberg (2014).

While the approach of Jiang (2018) approximates the KL divergence directly, the approach of Turner and Sederberg (2014) essentially constructs a simulated estimator of the likelihood, for every proposed value of \(\theta \), and then evaluates the observed sample at this likelihood estimate. As such, this approach is not strictly speaking an ABC approach, but remains a likelihood-free method.

To present the approach of Turner and Sederberg (2014), for simplicity let us focus on the case where \(z_i\) is generated from a continuous distribution.Footnote 10 Then, the approach of Turner and Sederberg (2014) first generates m iid realizations \(\varvec{z}^{j}=(z_1^j,\dots ,z_n^j)'\), \(j=1,\dots ,m\), with \(z^j_i {\mathop {\sim }\limits ^{\mathrm {iid}}} P_\theta \), for each i and j, and constructs an estimator of the model density at the point \(z^\star \) by averaging, over the m datasets, the standard kernel density estimator

where \(K_\delta \) is a kernel function with bandwidth parameter \(\delta \). Using this density estimator, Turner and Sederberg (2014) construct the estimated likelihood \( \hat{p}_n(\varvec{y}\mid \theta )=\prod _{i=1}^{n}\hat{f}_{m,\delta }(y_i\mid \theta ) \), and subsequently use \(\hat{p}_n(\varvec{y}\mid \theta )\) in place of the actual likelihood within a given MCMC scheme to conduct posterior inference on \(\theta \). When the data are iid there is a computational cost saving that can be achieved. Here the \(n \times m\) individual simulated data points can be concatenated into a vector to construct a single kernel density estimate, which is then evaluated at each of the n observed data points. That is, simulated data for observation i can be recycled for observation \(k \ne i\). This may reduce the value of m required. Indeed, in this iid setting, the size of the single concatenated simulated dataset (here \(n \times m\)) need not be an exact multiple of n.
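
For the iid case, the pooled construction described above can be sketched as follows. The Gaussian kernel, the fixed bandwidth and the toy simulator `simulate_pool` are assumptions made for illustration; they are not the implementation of Turner and Sederberg (2014).

```python
import math
import random


def simulate_pool(theta, n, m, rng):
    """Hypothetical simulator: m datasets of n iid N(theta, 1) draws, pooled."""
    return [rng.gauss(theta, 1.0) for _ in range(n * m)]


def kde_log_likelihood(y, pool, bandwidth):
    """Log of prod_i f_hat(y_i), with f_hat a Gaussian KDE built from pool."""
    norm = 1.0 / (len(pool) * bandwidth * math.sqrt(2.0 * math.pi))
    loglik = 0.0
    for yi in y:
        # One kernel density estimate, evaluated at each observed point.
        density = norm * sum(
            math.exp(-0.5 * ((yi - zk) / bandwidth) ** 2) for zk in pool
        )
        loglik += math.log(density)
    return loglik


rng = random.Random(11)
observed = [rng.gauss(0.0, 1.0) for _ in range(50)]
pool = simulate_pool(theta=0.0, n=50, m=20, rng=rng)
print(kde_log_likelihood(observed, pool, bandwidth=0.4))
```

Within an MCMC scheme, the returned value would stand in for the log-likelihood at the proposed \(\theta \); the pool size is a free choice here and need not be a multiple of n.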

The approach of Turner and Sederberg (2014) is not based on a distance between the simulated and observed samples, but on a (simulation-based) estimate of the likelihood. Therefore, in the limit of infinite computational resources, i.e., as \(m\rightarrow \infty \), the approach of Turner and Sederberg (2014) will yield the exact likelihood function \(p_n(\varvec{y}\mid \theta )\), and thus the ‘exact’ posterior \(\pi (\theta \mid \varvec{y})\).Footnote 11 In contrast, even in the case where it is feasible to set the ABC tolerance as \(\epsilon =0\), ABC based on the statistical distance \(\rho (\cdot ,\cdot )\) will only ever deliver an approximation to the ‘exact’ posterior that is particular to the choice of statistical distance. The obvious exception to this statement is the case where the information contained in the statistical distance coincides with that contained in the likelihood (i.e., the Fisher information), which is not generally the case for any of the methods discussed above.

Unlike the distance estimators discussed previously, the approach of Jiang (2018) requires iid data, while the approach of Turner and Sederberg (2014) requires (at least) independent data, with the latter approach also requiring additional modifications depending on the model under analysis. Moreover, it is not immediately obvious how to extend these approaches to capture other dependence regimes. Therefore, while these approaches may yield accurate posterior approximations in settings where the density of the model is intractable, e.g., in stable or g-and-k distributions, these methods are not appropriate for conducting inference in models with cross-sectional or temporal dependence. In addition, since these approaches are akin to using a simulation-based estimate of the likelihood function (or a function thereof) as a distance, in cases where the model is misspecified, these approaches may perform poorly and alternative measures may yield more reliable inference (see, e.g., the robust BSL approach of Frazier and Drovandi 2021, or the robust ABC approaches discussed in Frazier 2020).

4 Examples

For the examples we use a Gaussian mixture, parameterised by the component means, standard deviations and weights, as the auxiliary model for forming the summary statistics. Specifically, we use the score function of the Gaussian mixture evaluated at the maximum likelihood estimate (MLE) based on the observed data as the summary statistics. The observed summary statistic, obtained from substituting the observed data into the score function, is thus theoretically equal to a vector of zeros provided that the MLE lies in the interior of the parameter space. The MLE is obtained using the EM algorithm with multiple random initialisations. The number of components in the mixture is specified in each example. In some cases we use different summary statistics, which we define when needed. In the results (shown as tables and figures), we refer to ABC with summary statistics as simply ABC. BSL only uses summary statistics, and so we refer to that as BSL.

When using summary statistics, we use the same ones for both ABC and BSL. We note that in practice the principles for choosing summaries may differ for ABC and BSL, since BSL is more tolerant to high-dimensional summaries but requires that the distribution of the simulated summaries is reasonably well behaved (Frazier et al. 2021). In this paper we choose summaries that are reasonable for both ABC and BSL, in the sense they are low-dimensional and are approximately Gaussian in large samples, in order to more easily compare the methods’ performance.

The approach of Turner and Sederberg (2014) that uses kernel density estimation is referred to as KDE in the results. For the full data distance ABC approaches, we consider the Cramer-von Mises distance (CvM), the Wasserstein distance (Wass) and the maximum mean discrepancy (MMD). For MMD we use a Gaussian kernel with a bandwidth that is set as the median of the Euclidean distances between pairs of observed data points, consistent with Park et al. (2016). For KDE, we use a Gaussian kernel with a bandwidth given by Silverman’s rule of thumb.

We have chosen the specific distances to use in the following examples based on computational cost and diversity across the methods. In particular, since the energy statistic is a specific member of the MMD family, and since both require \(O(n^2)\) computations for a single evaluation, it is prohibitively difficult to consider repeated sampling comparisons using both methods. In addition, the KDE approach and the KL divergence approach have a similar flavour: both can be seen as based on estimated densities, and both are applicable in the same types of settings (i.e., both require independent data). Therefore, to render the comparison between the various methods more computationally feasible, we only consider the KDE approach in what follows.

We use MCMC to sample the approximate posteriors. When parameters are bounded, we use an appropriate logistic transformation to sample an unbounded space. We use a multivariate normal random walk with a covariance set at an estimate of the relevant approximate posterior obtained from pilot runs. The number of MCMC iterations is set large to ensure that the Monte Carlo error has little impact on the conclusions drawn.
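
As an illustration of sampling a bounded parameter on an unbounded scale, the sketch below uses one common logistic (logit) transformation for a parameter restricted to an interval. The specific transformation used in the examples is not stated in the text, so the function names and form here are assumptions.

```python
import math


def to_unbounded(theta, lower, upper):
    """Map theta in (lower, upper) to the real line via a logit transform."""
    return math.log((theta - lower) / (upper - theta))


def to_bounded(phi, lower, upper):
    """Inverse map: real-valued phi back to (lower, upper)."""
    return lower + (upper - lower) / (1.0 + math.exp(-phi))


def log_jacobian(phi, lower, upper):
    """log |d theta / d phi|, added to the log target when sampling in phi."""
    theta = to_bounded(phi, lower, upper)
    return math.log((theta - lower) * (upper - theta) / (upper - lower))


# Example: a parameter with a U(0, 0.5) prior, proposed on the phi scale.
phi = to_unbounded(0.2, 0.0, 0.5)
print(phi, to_bounded(phi, 0.0, 0.5), log_jacobian(phi, 0.0, 0.5))
```

A random walk proposal is then made in \(\phi \), and the log-Jacobian term is added to the log of the approximate posterior density so that the target on the original scale is preserved.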

For BSL, we choose m so that the standard deviation of the log-likelihood at a central parameter value (true value when available) is roughly between 1 and 2. For ABC, we take \(\epsilon \) as a particular sample quantile of 100,000 independent simulated ABC discrepancy values based on a central parameter value. We choose the quantile so that the effective sample size of the MCMC is of the same order as that for BSL for the same total number of model simulations. In some examples, the overall ABC distance function is a linear combination of multiple distance functions. For the weights we compute the inverse of the sample standard deviation of the individual discrepancies, or a robust measure thereof when outlier distances are present. For the ABC approaches (both summary statistic and full data) we always use \(m = 1\). It is less clear how to choose m for KDE compared to BSL, as we find that the posterior based on KDE can be quite sensitive to m. Thus, for KDE, we choose m as large as possible so that the overall efficiency (effective sample size divided by the number of model simulations) remains similar to that of BSL. Thus, we allocate roughly the same computational effort in terms of the number of model simulations to all approaches. It is important to note, however, that there can be significant overhead associated with some of the methods. For example, MMD is slow for larger datasets and KDE involves kernel density estimation, which can be slow when there are a large number of simulated data points used to construct the KDE. This aspect is discussed further in each of the examples.
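
The choice of \(\epsilon \) as a sample quantile of pilot discrepancies can be sketched as below. The discrepancy, the toy Gaussian simulator and the quantile level are hypothetical placeholders; only the idea of taking a low quantile of discrepancies simulated at a central parameter value comes from the text.

```python
import math
import random


def tolerance_from_quantile(discrepancies, q):
    """Return the empirical q-quantile (0 < q < 1) of the discrepancies."""
    ordered = sorted(discrepancies)
    index = max(0, math.ceil(q * len(ordered)) - 1)
    return ordered[index]


def toy_discrepancy(theta, observed, rng):
    """Hypothetical stand-in: |mean(y) - mean(z)| with z ~ N(theta, 1)."""
    simulated = [rng.gauss(theta, 1.0) for _ in observed]
    return abs(sum(observed) / len(observed) - sum(simulated) / len(simulated))


rng = random.Random(3)
observed = [rng.gauss(0.0, 1.0) for _ in range(50)]
# The text uses 100,000 pilot discrepancies; 2,000 keeps this sketch fast.
pilot = [toy_discrepancy(0.0, observed, rng) for _ in range(2000)]
epsilon = tolerance_from_quantile(pilot, q=0.01)
print(epsilon)
```

In practice the quantile level would be tuned, as described above, until the MCMC effective sample size is comparable with that of BSL for the same number of model simulations.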

The first two examples are toy examples, for which it is possible to compare methods on repeated simulated datasets. The next two examples are more substantive and computationally intensive, hence we compare methods on a single simulated dataset and a single real dataset only. For a single simulated dataset, we compare methods visually on the basis of which posterior approximation is more concentrated around the true parameter, since ABC and BSL do not tend to over-concentrate due to the use of summary statistics and/or ABC threshold. For the single real dataset where the true parameter is not available we consider the concentration of the posterior approximations, guided by the results for the corresponding simulated dataset.

4.1 g-and-k example

The g-and-k distribution (e.g. Rayner and MacGillivray 2002) is a complex distribution defined in terms of its quantile function that is commonly used as an illustrative example in likelihood-free research (for early ABC treatments see Allingham et al. 2009; Drovandi and Pettitt 2011). The quantile function for the g-and-k model is given by

\[ Q(p)=a+b\left[ 1+c\,\frac{1-\exp (-gz(p))}{1+\exp (-gz(p))}\right] \left( 1+z(p)^2\right) ^{k}z(p), \]

here p denotes the quantile of interest while z(p) represents the quantile function of the standard normal distribution. The model parameter is \({\theta } = (a,b,c,g,k)\), though common practice is to fix c at 0.8, which we do here (see Rayner and MacGillivray (2002) for a justification). The example is suitable to examine the performance of likelihood-free methods since the likelihood can be computed numerically (Rayner and MacGillivray 2002), permitting exact Bayesian inference, albeit in a more cumbersome way than simulating the model, which can be done straightforwardly via inversion sampling.
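
Inversion sampling from the g-and-k model can be sketched as follows, assuming the standard form of the g-and-k quantile function with \(c = 0.8\). The ratio of exponentials in that form equals \(\tanh (gz(p)/2)\), which is used here for numerical stability; the function names are illustrative.

```python
import math
import random
from statistics import NormalDist


def gk_quantile(p, a, b, g, k, c=0.8):
    """g-and-k quantile function evaluated at probability p in (0, 1)."""
    z = NormalDist().inv_cdf(p)            # standard normal quantile z(p)
    # (1 - exp(-g z)) / (1 + exp(-g z)) is tanh(g z / 2).
    return a + b * (1.0 + c * math.tanh(g * z / 2.0)) * (1.0 + z * z) ** k * z


def gk_sample(n, a, b, g, k, rng, c=0.8):
    """Draw n values by pushing uniform draws through the quantile function."""
    draws = []
    while len(draws) < n:
        p = rng.random()
        if 0.0 < p < 1.0:                  # inv_cdf is undefined at 0 and 1
            draws.append(gk_quantile(p, a, b, g, k, c))
    return draws


# Parameter values used in this example: a = 3, b = 1, g = 2, k = 0.5.
data = gk_sample(100, 3.0, 1.0, 2.0, 0.5, random.Random(0))
print(min(data), max(data))
```

Setting \(g = 0\) and \(k = 0\) reduces the quantile function to that of a normal distribution with mean a and standard deviation b, which is a convenient sanity check.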

Here we consider sample sizes of \(n = 100\) and \(n = 1000\), with true parameter value \(a = 3\), \(b = 1\), \(g = 2\) and \(k = 0.5\). The true density (approximated numerically) for this parameter configuration is shown in Fig. 1. For each sample size, we generate 100 independent datasets. For BSL we use \(m = 50\) and for KDE we use \(m = 100\). For the summary statistic based approaches, we use a 3 component Gaussian mixture as the auxiliary modelFootnote 12. We find MMD to be too slow for the \(n = 1000\) datasets.

The results for \(n = 100\) are shown in Tables 1, 2, 3 and 4 for the four parameters. In the tables we show, based on the 100 simulated datasets, the estimated bias of the posterior mean, bias of the posterior median, average of the posterior standard deviation, and the coverage rates for nominal rates of 80%, 90% and 95%. If there is a method that clearly performs best for a particular parameter based on a combination of the performance measures, then we bold it in the table. We also use italics for any methods that perform relatively well. We note that there is some subjectivity in these decisions.

Taking the four parameters into account, CvM could be considered the best performing method. This approach clearly produces the best results for g, which is the most difficult parameter to estimate in the g-and-k model. However, it generally produces overcoverage. KDE also generally performs relatively well, followed by BSL. Wass, MMD and ABC perform relatively poorly in this example. The Wasserstein distance is likely having difficulty handling the heavy tailed nature of the data. The results for the larger \(n = 1000\) sized datasets (Tables 5, 6, 7 and 8) are qualitatively similar, but the difference between the methods is more subtle. CvM, KDE and BSL perform similarly, with Wass and ABC noticeably performing worse. Results for estimated posterior correlations are provided in Appendix A of the supplementary material. Wass and MMD appear to be the least accurate in recovering the exact posterior correlations in general, which is consistent with the marginal results presented here.

4.2 M/G/1 example

The M/G/1 queueing model is a stochastic single-server queue model with Poisson arrivals and a general service time distribution. Here we assume that service times are \(\mathcal {U}(\theta _1,\theta _2)\), as this has been a popular choice in other likelihood-free literature (see e.g. An et al. 2020; Blum 2010). The time between arrivals is \(\text {Exp}(\theta _3)\) distributed. We take the observed data \(\varvec{y}\) to be the inter-departure times of 51 customers, resulting in 50 observations. The observed data is generated with true parameter \((\theta _1, \theta _2, \theta _3)^\top = (1, 5, 0.2)^\top \). The prior is \(\mathcal {U}(0, \min (y_1, y_2, \ldots , y_n)) \times \mathcal {U}(0, 10 + \min (y_1, y_2, \ldots , y_n)) \times \mathcal {U}(0, 0.5)\) on \((\theta _1, \theta _2, \theta _3)\). Shestopaloff and Neal (2014) develop a data augmentation MCMC method to sample from the true posterior that we compare the approximate methods with.
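
A minimal simulator for this model is sketched below. It assumes a first-in-first-out queue that starts empty, treats \(\theta _3\) as the rate of the exponential inter-arrival times, and defines the data as differences between successive departure times, so that 51 customers yield 50 observations. The function name is hypothetical.

```python
import random


def simulate_mg1(theta1, theta2, theta3, n_customers, rng):
    """Inter-departure times for a single-server first-in-first-out queue."""
    arrival = 0.0
    departure = 0.0
    departures = []
    for _ in range(n_customers):
        arrival += rng.expovariate(theta3)       # Exp with rate theta3
        service = rng.uniform(theta1, theta2)    # U(theta1, theta2)
        # Service starts once the customer has arrived and the server is free.
        departure = max(arrival, departure) + service
        departures.append(departure)
    return [later - earlier for earlier, later in zip(departures, departures[1:])]


y = simulate_mg1(1.0, 5.0, 0.2, n_customers=51, rng=random.Random(0))
print(len(y), min(y))
```

Each inter-departure time is at least as large as the corresponding service time, so no observation can fall below \(\theta _1\). This is why the prior for \(\theta _1\) is bounded above by the smallest observation.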

We compare methods using 100 independent datasets generated from the true M/G/1 model. A visualisation of one of these datasets is shown in Fig. 2. It can be seen that the data shows some positive skewness. Thus we also consider the impact on the likelihood-free approaches by applying a log transformation to the data, which results in a more symmetric distribution (also shown in Fig. 2). The results based on the log-transformed data include log in parentheses after the acronym of the method. The CvM distance is theoretically unaffected by one-to-one transformations of the data, so we expect similar results for both datasets for that approach. However, the other approaches may be impacted by the transformation. For the auxiliary model for ABC and BSL we again use a 3 component Gaussian mixture.Footnote 13 BSL uses \(m = 50\) and KDE uses \(m = 100\).

The results for the three parameters are shown in Tables 9, 10 and 11. Overall the best performing method for this example is KDE. There is little to no autocorrelation in the data, justifying the independence assumption of KDE. Even though the data is skewed, the underlying distribution of the inter-departure time does not have thick tails, and the log transformation helps to remove a large degree of the skewness. BSL also performs relatively well on this example. BSL performs substantially better than ABC with the same summary statistics. This is consistent with the empirical results of Price et al. (2018), who show that BSL can outperform ABC when the summary statistic distribution is regular enough.

Interestingly, despite being one of the best performing methods in the g-and-k example, CvM is one of the worst performing in this example. The results are very similar when the data is log transformed, as expected. For the other methods, there is generally an improvement in results when log transforming the data, except for MMD, ABC and KDE where the results are worse for \(\theta _2\). The best performing full distance ABC method is Wass (log), with the log transformation being critical to obtain good results for \(\theta _1\).

Unlike in the g-and-k example, the Wasserstein approach significantly outperforms the CvM approach in the M/G/1 example for the parameters \(\theta _1\) and \(\theta _2\) (the two give largely similar results for \(\theta _3\), with the Wasserstein having a slight edge). We hypothesize that the poor performance of the CvM is due to the relatively small sample size (n = 50 observations); the fact that the parameter \(\theta _1\) controls the lower tail of the observed data; and the fact that, due to the large mean of the exponentially distributed interarrival times, the estimated CDF can be quite noisy at large values in the sample. Results for estimated posterior correlations are provided in Appendix B of the supplementary material. Most methods do a reasonable job in recovering the exact posterior correlations, except that CvM does not accurately estimate the correlation between \(\theta _2\) and \(\theta _3\).

4.3 Stereological extremes example

Here we consider an example in stereological extremes, originally explored in the likelihood-free setting by Bortot et al. (2007). During the process of steel production, the occurrence of microscopic particles, called inclusions, is a critical measure of the quality of steel. It is desirable that the inclusions are kept under a certain threshold, since steel fatigue is believed to start from the largest inclusion within the block. Bortot et al. (2007) develop a new model for inclusions. The stochastic model generates a random number of inclusions, and for each inclusion, the largest principal diameter of an ellipsoidal model of the inclusion in the 2-dimensional cross-section is recorded. We refer the reader to Bortot et al. (2007) for more details, and Anderson and Coles (2002) for an earlier mathematical modelling approach. More details on the model are also provided in Appendix C of the supplementary material.

The model contains three parameters, \(\theta = (\lambda ,\sigma ,\xi )\). Here \(\lambda \) is the rate parameter of a homogeneous Poisson process describing the locations of the inclusions, and \((\sigma ,\xi )\) are the (scale, shape) parameters of a generalised Pareto distribution related to the size of the inclusions. The prior distribution is \(\mathcal {U}(30,200) \times \mathcal {U}(0,15) \times \mathcal {U}(-3,3)\). If we denote the vector of observed inclusions by S, then the observed data is \(\varvec{y}= (S, |S|)\) where |S| represents the number of inclusions. Here we consider two datasets, the first simulated from the model with true parameter \((100, 2, -0.1)\) and the second being a real dataset as analysed in Bortot et al. (2007). A visualisation of these datasets is shown in Fig. 3. The number of inclusions in the simulated and real data is 138 and 112 respectively.

For this application, several sets of summary statistics have been developed. Fan et al. (2013) consider the number of inclusions, as well as the log of the difference of 112 equally spaced quantiles, creating 112 statistics in total (111 from the log quantile differences, and the other from the number of inclusions). Fan et al. (2013) also consider dimension-reduced summary statistics based on the semi-automatic approach of Fearnhead and Prangle (2012). We find that similar results can be obtained using summary statistics from our indirect inference approach. Using a 3 component Gaussian mixture as the auxiliary model produces 9 summary statistics (incorporating the number of inclusions). We use BSL and ABC with this summary statistic, and for BSL we use \(m = 100\) simulated datasets for estimating the synthetic likelihood. We also consider the four summary statistics used in An et al. (2020), which are similar to the original summary statistics in Bortot et al. (2007). These are the number of inclusions, \(\log (\min (S))\), \(\log (\text {mean}(S))\) and \(\log (\max (S))\). As there are only 4 statistics, we consider only ABC and not BSL.

For the full data distance based ABC approaches, we combine 2 distance functions into a single distance function via a weighted average of individual distances (one for the number of inclusions and one for the inclusion sizes). For the number of inclusions, we simply use the L1-norm for the distance. We set the weight for each distance as the inverse standard deviation of the distance estimated from simulations at the true parameter value \((100, 2, -\,0.1)\). If the distribution of the distance has a heavy tail, we use a robust estimate of the standard deviation via 1.4826 times the median absolute deviation.
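
The weighting scheme can be sketched as follows. The helper names are hypothetical, and the combination is written as a weighted sum; dividing by the sum of the weights would give a weighted average and rescale every combined distance by the same constant.

```python
import statistics


def robust_sd(values):
    """1.4826 times the median absolute deviation (MAD) of the values."""
    centre = statistics.median(values)
    mad = statistics.median([abs(v - centre) for v in values])
    return 1.4826 * mad


def inverse_scale_weights(pilot_distances, robust=False):
    """One weight per distance: the inverse of its (robust) standard deviation.

    pilot_distances is a list of lists, one list of pilot values per distance.
    """
    scale = robust_sd if robust else statistics.stdev
    return [1.0 / scale(column) for column in pilot_distances]


def combined_distance(distances, weights):
    """Weighted sum of the individual distances for one simulated dataset."""
    return sum(w * d for w, d in zip(weights, distances))


# Illustrative pilot values: the first distance has one extreme outlier.
pilot = [[1.0, 2.0, 3.0, 4.0, 100.0], [0.0, 2.0, 4.0, 6.0, 8.0]]
weights = inverse_scale_weights(pilot, robust=True)
print(combined_distance([2.0, 3.0], weights))
```

The factor 1.4826 makes the MAD consistent with the standard deviation for Gaussian data, while remaining insensitive to a small number of extreme distances.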

The KDE method is awkward to apply in this example, since the dataset size is random, and thus it is necessary to include not only the inclusion size data but also the number of inclusions. Here we use m simulated datasets to estimate the density of the number of inclusions. As each model simulation often generates more than one inclusion, we concatenate all the simulated inclusion sizes together for estimating the density of the inclusion size. We then treat the number of inclusions and inclusion sizes as independent when estimating the likelihood via KDE. As with BSL, we use \(m = 100\) for KDE. The MCMC acceptance rate for KDE is higher than BSL with this value of m. However, there is a fair amount of overhead for computing the kernel density estimate for KDE with \(m = 100\), so we do not consider larger values of m.

The results are shown for the simulated and real datasets in Figs. 4 and 5, respectively. In the top row of each figure we compare the full data approaches. Then, in the second row, we compare the best performing full data approach with the summary statistic approaches. The results are qualitatively similar for the simulated and real datasets. For the full data distance approaches, the top performing methods are Wass and MMD. KDE performs well for \(\sigma \) and \(\xi \), but not for \(\lambda \). CvM produces the least precise posteriors in general. The poor performance of the CvM is not particularly surprising given the results of the M/G/1 example. In particular, the parameters \((\sigma ,\xi )\) control the tail shape of the distribution, and the sample size is relatively small. As we have already discussed, the CvM distance can be quite noisy in these circumstances, and thus the resulting posteriors can be inaccurate. As the Wasserstein distance is more convenient to compute than the MMD, we take the Wass method forward to compare with the summary statistic based approaches. Wass performs similarly to ABC with summary statistics (both choices of the summary statistics). However, BSL appears to produce slightly more precise posteriors, particularly for \(\xi \).

4.4 Toad example

The next example we consider is the individual-based movement model of Fowler’s Toads (Anaxyrus fowleri) developed by Marchand et al. (2017). The model has since been considered as a test example in the likelihood-free literature, in particular for synthetic likelihood methods (see An et al. 2020; Frazier and Drovandi 2021; Priddle et al. 2020). We consider the “random return” model of Marchand et al. (2017). We provide only a brief overview of the model herein, and refer the reader to Marchand et al. (2017) and Appendix D of the supplementary materials for more details. For a particular toad, we draw an overnight displacement from the Levy alpha-stable distribution \(S(\alpha , \xi )\), where \(0\le \alpha \le 2\) and \(\xi >0\). At the end of the night, toads return to their previous refuge site with probability \(p_0\), or take refuge at their current overnight displacement. In the event of a return on day i, the refuge site is chosen with probability proportional to the number of times the toad has previously visited each refuge site. The raw data consists of the refuge locations of \(n_t = 66\) toads over \(n_d = 63\) days. We consider both real and simulated data. The simulated data is generated using \(\theta = (\alpha ,\xi ,p_0)^\top = (1.7,35,0.6)^\top \), a value that also appears to be well supported by the real data.

The raw data consist of GPS location data for \(n_t\) toads for \(n_d\) days, i.e. the observation matrix \(\varvec{Y}\) is of dimension \(n_d \times n_t\). Here \(n_t=66\) and \(n_d=63\). Unlike the previous examples, we compute an initial set of summary statistics as in Marchand et al. (2017). Specifically, \(\varvec{Y}\) is summarised down to four sets comprising the relative moving distances for time lags of 1, 2, 4, 8 days. For instance, \(\varvec{y}_1\) consists of the displacement information of lag 1 day, \(\varvec{y}_1 = \{|\Delta y| = |\varvec{Y}_{i,j}-\varvec{Y}_{i+1,j}| ; 1 \le i \le n_d-1, 1 \le j \le n_t \}\). For each lag, we split the displacement vector into two sets. The first set holds displacements less than 10 m, and these are taken as returns, and we simply record the number of returns. The second set holds the vector of displacements that are greater than 10 m (non-returns). Combining these two aspects (the number of returns and the vector of non-return displacements) for the four lags produces the data \(\varvec{y}\) for analysis. A visualisation of the non-returns data is given in Fig. 6. It can be seen that the non-returns data has a heavy right tail. The number of returns is in the order of 1000 for the simulated data and 100 for the real data. This is because there are many missing distances in the real data.
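
The reduction from the location matrix to returns and non-returns can be sketched as below. The sketch assumes scalar locations stored as a list of rows (one row per day), with `None` marking a missing observation, and assigns a displacement of exactly 10 m to the non-returns; these conventions and the function names are assumptions made for illustration.

```python
def lag_displacements(locations, lag):
    """Absolute displacements |Y[i+lag][j] - Y[i][j]| over days i and toads j.

    locations[i][j] is the position of toad j on day i; None marks a gap.
    """
    out = []
    for i in range(len(locations) - lag):
        for before, after in zip(locations[i], locations[i + lag]):
            if before is not None and after is not None:
                out.append(abs(after - before))
    return out


def split_returns(displacements, threshold=10.0):
    """Count displacements below the threshold and keep the remaining ones."""
    n_returns = sum(d < threshold for d in displacements)
    non_returns = [d for d in displacements if d >= threshold]
    return n_returns, non_returns


# Tiny illustrative matrix: 4 days (rows) by 2 toads (columns).
locations = [[0.0, 0.0], [5.0, 40.0], [5.0, None], [60.0, 41.0]]
for lag in (1, 2):
    print(lag, split_returns(lag_displacements(locations, lag)))
```

Pairs involving a missing location are skipped, which is consistent with the real data containing far fewer distances than the simulated data.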

For the full distance based ABC approaches, there is no further dimension reduction. We find that standard BSL is not suitable when the summary statistics are formed from a Gaussian mixture model due to lack of normality. Instead, we use the statistics from An et al. (2020) as BSL appears to work well with them. For the non-returns, we compute the log of the differences of the \(0,0.1,\dots ,1\) quantiles and the median for each lag. Combined with the statistics for the returns, there are 48 summary statistics in total. For BSL we use \(m = 500\). For ABC with summary statistics, we use a weighted Euclidean distance, where the weights are the inverse of the standard deviations of the summary statistics estimated from pilot simulations at (1.7, 35, 0.6).
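
The count of 48 summary statistics can be checked with a short sketch: for each of the four lags there is one return count, ten log differences between the eleven quantiles at \(0,0.1,\dots ,1\), and one median, giving 12 statistics per lag. The linear interpolation rule for quantiles and the toy exponential data are assumptions, not necessarily the conventions of An et al. (2020).

```python
import math
import random
import statistics


def quantile(sorted_vals, p):
    """Linearly interpolated p-quantile of an already sorted list."""
    pos = p * (len(sorted_vals) - 1)
    lower = math.floor(pos)
    upper = min(lower + 1, len(sorted_vals) - 1)
    frac = pos - lower
    return (1.0 - frac) * sorted_vals[lower] + frac * sorted_vals[upper]


def lag_summaries(n_returns, non_returns):
    """Return count, 10 log quantile differences and the median (12 values)."""
    ordered = sorted(non_returns)
    qs = [quantile(ordered, i / 10.0) for i in range(11)]   # 0, 0.1, ..., 1
    log_diffs = [math.log(b - a) for a, b in zip(qs, qs[1:])]
    return [n_returns] + log_diffs + [statistics.median(ordered)]


rng = random.Random(7)
summaries = []
for lag in (1, 2, 4, 8):
    # Toy non-return displacements above 10 m, for illustration only.
    non_returns = [10.0 + rng.expovariate(0.05) for _ in range(200)]
    summaries.extend(lag_summaries(30, non_returns))
print(len(summaries))
```

Taking logs of the quantile differences requires distinct quantiles, which holds for continuous data without ties.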

For the full data distance based ABC approaches, we combine 8 distance functions into a single distance function via a weighted average of individual distances (returns and non-returns for the four lags). For the count of the number of returns, we simply use the L1-norm for the distance. Given the heavy tail nature of the data, we also consider the log of the non-returns for the Wass and MMD. Given the relatively large number of non-returns in the simulated data we find MMD to be too slow. Also, the KDE method is awkward to apply for the same reason as the stereological extremes example. With a moderate value of m needed to estimate the density for the number of returns, a huge number of non-returns is generated and the kernel density estimate is expensive to compute. Thus we do not consider the KDE method here.

The estimated posterior marginals for the simulated data and real data are shown in Figs. 7 and 8, respectively. The top row in each figure compares the full distance based approaches, and then the bottom row compares the best performing full distance approach with the summary statistic based methods. Appendix D of the supplementary material shows the estimated bivariate posteriors for all the methods.

For both the simulated and real data it is evident that the full distance based approaches perform similarly, except for Wass, which performs particularly poorly for \(\xi \). As with the M/G/1 example, it is interesting that performing a log transform of the data significantly improves the performance of the Wasserstein distance. In contrast to the Wasserstein distance, the heavy tailed nature of the data does not affect the results based on the CvM distance. This finding is unsurprising since the CvM distance is generally robust to heavy tailed data. Given that the CvM performs relatively well from a statistical and computational perspective, and it does not require choosing a data transform, we take this method forward to compare with the summary statistic based approaches. ABC with summary statistics produces similar results to CvM. BSL generally produces more precise inferences compared to all other methods.

4.5 Toggle switch example

We also consider a toggle switch model describing gene expressions that can produce multi-modal data. Here we briefly describe the model, and refer to Bonassi et al. (2011) and Gardner et al. (2000) for more details. The example has been considered in a likelihood-free context by, for example, Bonassi and West (2015) and Vo et al. (2019). Let \(u_{c,t}\) and \(v_{c,t}\) be the expressions of genes u and v for cell c at time t. We assume there are 2000 independent cells, \(c = 1,\ldots ,2000\). Given an initial state \((u_{c,0}, v_{c,0})\) and a discrete time step h, \(u_{c,t}\) and \(v_{c,t}\) evolve according to:

where \(\xi _{c,u,t}\) and \(\xi _{c,v,t}\) are independent standard normal random variates that represent the intrinsic noise within cell c. The observed data consists of noisy measurements of \(\{u_{c,T}\}_{c=1}^{2000}\) for some steady state time T. The observation for each cell is modelled as

where the errors \(\eta _c\) have a standard normal distribution. Therefore the data consist of independent observations, \(\varvec{y}= (y_1,y_2,\ldots ,y_{2000})\). The unknown parameter is \(\theta = (\mu , \sigma , \gamma , \alpha _u, \beta _u, \alpha _v, \beta _v)\), and we set \(h=1\) and \(T = 300\) as in Bonassi et al. (2011). We use the same priors as in Bonassi et al. (2011), which are independent and uniformly distributed with lower and upper bounds of \((250, 0.05, 0.05, 0, 0, 0, 0)\) and (400, 0.5, 0.35, 50, 7, 50, 7), respectively. The observed data is simulated from the model with true parameter \(\theta = (320, 0.25, 0.15, 25, 4, 15, 4)\). A visualisation of the data is shown in Fig. 9. Given the relatively large size of the data, we find MMD and KDE substantially more computationally expensive than the other approaches, so we do not consider them in this example. For ABC and BSL we use an auxiliary 3 component Gaussian mixture model.
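For readers who wish to experiment with this model, the following is a minimal simulation sketch. The update and measurement equations coded below are assumptions: they follow the form we understand Bonassi et al. (2011) to use and should be checked against that reference before use. The initial state of (10, 10), the floor applied to the gene expressions and the function name `simulate_toggle` are likewise hypothetical choices for illustration.

```python
import random


def simulate_toggle(theta, n_cells=2000, T=300, h=1.0, init=(10.0, 10.0), seed=None):
    """Simulate noisy measurements y_c of u_{c,T} under an ASSUMED model form."""
    mu, sigma, gamma, alpha_u, beta_u, alpha_v, beta_v = theta
    rng = random.Random(seed)
    n_steps = int(round(T / h))
    y = []
    for _ in range(n_cells):
        u, v = init  # assumed initial state, identical for every cell
        for _ in range(n_steps):
            # Assumed update: mutual repression, linear decay, Gaussian intrinsic noise.
            u_new = (u + h * alpha_u / (1.0 + v ** beta_u)
                     - h * (1.0 + 0.03 * u) + h * 0.5 * rng.gauss(0.0, 1.0))
            v_new = (v + h * alpha_v / (1.0 + u ** beta_v)
                     - h * (1.0 + 0.03 * v) + h * 0.5 * rng.gauss(0.0, 1.0))
            # Assumption: floor the states at 1 so that the powers stay real-valued.
            u, v = max(u_new, 1.0), max(v_new, 1.0)
        # Assumed measurement equation: y_c = u_T + mu + mu * sigma * eta_c / u_T^gamma.
        y.append(u + mu + mu * sigma * rng.gauss(0.0, 1.0) / u ** gamma)
    return y


# True parameter value stated in the text, ordered as (mu, sigma, gamma, alpha_u, beta_u, alpha_v, beta_v).
theta_true = (320, 0.25, 0.15, 25, 4, 15, 4)
y_small = simulate_toggle(theta_true, n_cells=50, seed=0)  # fewer cells to keep the demo quick
print(len(y_small), min(y_small), max(y_small))
```

The default arguments match the 2000 cells, \(h=1\) and \(T=300\) described above; the demonstration call uses fewer cells only to keep the pure-Python loop fast.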

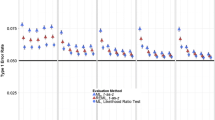

Estimates of the univariate posterior distributions are shown in Fig. 10. It can be seen that all methods perform similarly, except that the CvM produces more diffuse posteriors for \(\mu \), \(\sigma \) and \(\gamma \). Results for other simulated datasets generated from the prior predictive distribution are shown in Appendix E of the supplementary material, and present a wide variety of features. On these additional datasets, the only method to perform consistently well is BSL. CvM generally produces the worst performance, but ABC and Wass also produce poor results in some instances.

We hypothesise that the relatively poor performance of CvM, for \(\mu \), \(\sigma \) and \(\gamma \), is due to the existence of flat regions in the ECDF for data generated from the toggle model; for example, in Fig. 9 the ECDF is nearly flat around a probability of 0.4. Since \(\mu \), \(\sigma \) and \(\gamma \) directly influence the observed data, \(y_c\), this flat region suggests that there are many different values of these parameters for which the ECDF is similar, which would likely result in diffuse posteriors when the chosen distance is CvM. Further evidence for this hypothesis is shown in the Appendix for datasets with similar features. The results in the Appendix also show that CvM can produce relatively poor results for \(\alpha _u\) and \(\beta _v\). We find that this is particularly the case for datasets where there exist a small number of observations far from the bulk of the data. From some model simulations we find that \(\alpha _u\) and \(\beta _v\) can have a strong influence on the presence of these ‘outliers’. The CvM distance places little emphasis on these outliers and thus can accept values of \(\alpha _u\) and \(\beta _v\) that produce simulated data with no outliers, whereas other distances reject these datasets, leading to relatively diffuse approximate posteriors. It is worth noting that Wass also produces relatively poor results for \(\alpha _u\) for these datasets with outliers. In contrast to CvM, Wass places too much emphasis on the outliers, again leading to an approximate posterior that is too diffuse.

5 Discussion

In this article we reviewed likelihood-free approaches that avoid data summarisation, predominantly focussing on full data distance functions in the ABC context. We performed a qualitative and quantitative comparison between these methods. This should assist practitioners in choosing distance functions that are likely to perform relatively well for their specific applications and data types. We also extended the comparison to likelihood-free approaches that resort to data reduction. We found that at least one of the full data approaches was competitive with, or outperformed, ABC with summary statistics across most examples, except for some datasets in the toggle switch example. Another interesting finding is that the performance of the full data approaches can be greatly affected by data transformations. The CvM distance function is appealing as it is invariant to monotone transformations, is fast to compute and is more widely applicable than other full data approaches. However, it did not perform well for the M/G/1 and stereological extremes examples. It would not be difficult to run ABC with different choices of the full data distance functions on parallel cores.

Another finding of this research is that full data distances may need to be split, or augmented with additional information, to ensure they can identify all the model parameters. For example, in the invasive toad model, the data on returns and non-returns, as well as their lags, carry specific information about the model parameters, and combining these data within a single distance can result in a loss of information for certain model parameters; hence, in this example we combine eight different full data distances, one for each of the first four lags of the returns and non-returns series, to conduct posterior inference. Furthermore, there are other cases, such as the stereological extremes example, where it is useful to augment the full data distances with summary statistics (a finding that was first noted in the case of the Wasserstein distance by Bernton et al. 2019); in this example the number of inclusions carries important information that cannot be recovered by a distance based solely on the inclusion sizes. We suggest, as a default, using a weighted average when combining multiple distance functions, where the weights for each distance are inversely proportional to the standard deviation of the distance.
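A minimal sketch of this default weighting is given below. It assumes that pilot values of each component distance are available from simulations at a single representative parameter value; the function names, the synthetic pilot values and the normalisation of the weights to sum to one are illustrative choices rather than part of the method as implemented for this paper.

```python
import random
import statistics


def inverse_sd_weights(pilot_distances):
    """Weights inversely proportional to the standard deviation of each distance.

    pilot_distances[k] holds pilot values of the k-th component distance.
    The weights are normalised to sum to one (an illustrative choice).
    """
    inverse_sds = [1.0 / statistics.stdev(values) for values in pilot_distances]
    total = sum(inverse_sds)
    return [w / total for w in inverse_sds]


def combined_distance(distances, weights):
    """Weighted average of the component distances for one simulated dataset."""
    return sum(w * d for w, d in zip(weights, distances))


rng = random.Random(0)
# Hypothetical pilot runs: three component distances on very different scales.
pilot = [
    [abs(rng.gauss(0.0, scale)) for _ in range(200)]
    for scale in (1.0, 10.0, 100.0)
]
weights = inverse_sd_weights(pilot)
print(weights)
print(combined_distance([0.5, 5.0, 50.0], weights))
```

Without such weights, the component with the largest scale would dominate the combined distance regardless of how informative it is.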

We note that BSL performed well across all the examples, but it relies on a Gaussian assumption of the model summary statistic. From these examples, it is reasonable to hypothesize that if a great deal of effort is placed on finding informative summary statistics, approaches that use these summaries are likely to outperform the full data approaches in many applications. However, the promising performance of the full data approaches warrants further research in this direction, especially considering that these methods completely obviate the need to choose summary statistics.

We also note that the results obtained for ABC with summary statistics could possibly be improved with the use of regression adjustment methods (see Blum 2018 for a review), or methods that first conduct summary statistic selection (Prangle 2018). However, such adjustments have not been considered herein to facilitate a more direct comparison between summary-based ABC and ABC based on full data distance approaches.

In some examples we found it useful to combine full data distances with summary statistics that are informative about particular parameters. We expect this approach to be useful in other applications. However, how to appropriately weight the different components in the overall ABC discrepancy function is less clear, and the inferences could be sensitive to these weights. In this paper we adopted a pragmatic approach and set each weight to depend on the variability of its corresponding distance function estimated from simulations at a parameter value within the bulk of the posterior (the true parameter value when it is available). However, this weighting is not guaranteed to be optimal. The research in Prangle (2017) and Harrison and Baker (2020), which aims to optimally weight summary statistics within an ABC discrepancy function, could be adapted to the setting of ABC discrepancy functions that combine full data distances and summary statistics.

For future research we plan to explore these full data distance ABC approaches in the context of likelihood-free model choice. Problems with performing model choice on the basis of summary statistics have been well documented (Robert et al. 2011; Marin et al. 2013). In this paper we assume that the models are well specified, and are able to capture the characteristics of the observed data. However, an interesting extension of this research would be to perform an extensive comparison of full data and summary statistic based approaches in the setting of model misspecification. Such analysis would be particularly interesting given that several studies have documented the potential for poor behavior of summary statistic based approaches in misspecified models (see Frazier et al. 2020 for a discussion in the case of ABC, and Frazier and Drovandi 2021 for a discussion in the case of BSL), while the results of Frazier (2020) suggest that full data approaches to likelihood-free inference can deliver inferences that are robust to certain forms of model misspecification. To further complicate the comparison, a careful choice of summary statistics that ignore features of the data that the model cannot capture can produce inferences robust to misspecification (Lewis et al. 2021). Given that any ranking between the methods is likely to be example specific, great care would be needed in order to construct a set of examples that is broad enough to cover the most common types of model misspecification encountered in practice. Therefore, we leave this interesting topic for future research.

A limitation of the full data approaches in this paper is the type of observed data they can feasibly handle. Currently, these methods are predominantly suited to univariate datasets. We speculate that the focus on univariate problems is due to both computational and statistical concerns. From a statistical standpoint, it is well-known that the Wasserstein distance has a rate of convergence that depends on the dimension of the data, while the simulated likelihood approach of Turner and Sederberg (2014) encounters a similar curse of dimensionality, since it is based on nonparametric density estimation. While multivariate extensions that can mitigate the impact of data dimension do exist, such procedures can be computationally expensive to implement in higher dimensions, and so to date they have not received much focus in the likelihood-free literature. In future work, we plan to explore extending the methods to handle higher dimensional data by exploiting recent research on multivariate non-parametric tests (e.g. Kim et al. 2020). This may increase the class of problems where full data approaches are applicable. It would also be interesting to extend and compare methods on temporal and/or spatial data. This might motivate the use or development of other distance functions.

Notes

We note that in general only a few of the full data methods proposed in the literature are actual metrics/norms. While this complicates the mathematics surrounding verification of certain theoretical properties, it has not stymied the use of such distances in practice.

In what follows, we note that many of the distances presented can be extended to cases where \(\varvec{y}\) is multivariate, and to cases where \(\varvec{y}\) and \(\varvec{z}\) are computed using differing numbers of observations. However, as these issues are not entirely germane to the production of the ABC posterior based on this distance, or the resulting accuracy of the posteriors across different methods, we do not discuss these extensions herein.

We note that Gretton et al. (2008) have demonstrated that \(\widehat{\mathcal {E}}(\hat{\mu },\hat{\mu }_\theta )\rightarrow _p\mathcal {E}_p(\mu ,\nu )\) as \(n\rightarrow \infty \).

That is, \(k(\cdot ,\cdot )\) is symmetric, continuous, and positive-definite, i.e., \(\sum _{i=1}^{n}\sum _{j=1}^{n}k(y_i,y_j)c_ic_j\ge 0\) for all finite sequences \(y_{1},\dots ,y_{n}\) on \(\mathcal {Y}\) and all real \(c_1,\dots ,c_n\).

This latter class of kernels is often called characteristic; see Gretton et al. (2012) and the references therein for further discussion on the use of specific kernel types in MMD.

While faster estimators for MMD, and other distances, may exist, to ensure a fair comparison across different methods we only consider the most basic, and hence direct, estimators of the distances.
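To illustrate what a basic estimator looks like, and why its cost grows quadratically with the sample size, the sketch below computes the biased (V-statistic) estimate of the squared MMD for univariate samples. The Gaussian kernel, the fixed bandwidth and the function names are assumptions made for this illustration; the estimator and kernel settings used for the results in this paper may differ.

```python
import math
import random


def gaussian_kernel(a, b, bandwidth):
    """Gaussian kernel for scalar inputs (an assumed, commonly used choice)."""
    return math.exp(-((a - b) ** 2) / (2.0 * bandwidth ** 2))


def mmd_squared(y, z, bandwidth=1.0):
    """Biased V-statistic estimate of squared MMD.

    Every pair of points is visited, so the cost is O((n + m)^2) kernel evaluations.
    """
    n, m = len(y), len(z)
    k_yy = sum(gaussian_kernel(a, b, bandwidth) for a in y for b in y) / (n * n)
    k_zz = sum(gaussian_kernel(a, b, bandwidth) for a in z for b in z) / (m * m)
    k_yz = sum(gaussian_kernel(a, b, bandwidth) for a in y for b in z) / (n * m)
    return k_yy + k_zz - 2.0 * k_yz


rng = random.Random(2)
y = [rng.gauss(0.0, 1.0) for _ in range(200)]
z_same = [rng.gauss(0.0, 1.0) for _ in range(200)]     # same distribution as y
z_shifted = [rng.gauss(3.0, 1.0) for _ in range(200)]  # shifted distribution
print(mmd_squared(y, z_same), mmd_squared(y, z_shifted))
```

The three double sums are the reason this estimator becomes slow when the simulated datasets are large.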

If the observed sample has n observations, and the simulated sample m, the two-sample CvM statistic is given by (see equation (2) in Anderson 1962)

$$\begin{aligned} \frac{nm}{n+m}\int [\hat{\mu }_{\theta }(t)-\hat{\mu }_n(t)]^2\,d \widehat{H}(t), \end{aligned}$$

where \((n+m)\widehat{H}(t)=n\hat{\mu }_{n}(t)+m\hat{\mu }_{\theta }(t)\). When the samples are the same length, i.e., \(n=m\), the statistic simplifies to \(\widehat{\mathcal {C}}(\hat{\mu },\hat{\mu }_\theta )\) in the displayed equation.
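The two-sample statistic can be evaluated directly from its definition, since integrating against the pooled ECDF \(\widehat{H}\) amounts to averaging the squared ECDF difference over the \(n+m\) pooled observations. The following is a minimal sketch; the function name `cvm_two_sample` and the small example data are hypothetical.

```python
import bisect


def cvm_two_sample(y, z):
    """Two-sample CvM statistic for samples of sizes n and m (not necessarily equal)."""
    n, m = len(y), len(z)
    ys, zs = sorted(y), sorted(z)
    # The pooled ECDF H puts mass 1/(n + m) on each pooled observation,
    # so the integral is an average of squared ECDF differences.
    integral = sum(
        (bisect.bisect_right(zs, t) / m - bisect.bisect_right(ys, t) / n) ** 2
        for t in ys + zs
    ) / (n + m)
    return (n * m) / (n + m) * integral


# Small example with unequal sample sizes (n = 3, m = 2).
print(cvm_two_sample([1.0, 2.0, 3.0], [1.5, 2.5]))
```

For this example the pooled ordering is 1, 1.5, 2, 2.5, 3, the squared ECDF differences sum to 5/18, and the statistic equals (6/5)(1/5)(5/18) = 1/15.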

Frazier (2020) also proposes the use of the Hellinger distance to deliver robust inferences in ABC in the case of misspecified models. To keep this review to a reasonable length, we do not review this distance herein.

Across all the simulated examples considered in Jiang (2018) the sample size used for analysis was no greater than \(n=500\).

The case of discrete or mixed data can be handled by considering a kernel density estimator that is appropriate for these settings, and by sufficiently modifying the simulated estimator of the likelihood function.