Abstract

In 2015, Idaho adopted the nation’s first direct admissions system and proactively admitted all high school graduates to a set of public institutions. This reimagination of the admissions process may reduce barriers to students’ enrollment and improve access across geographic and socioeconomic contexts by removing many human capital, informational, and financial barriers in the college search and application process. Despite a lack of evidence on the efficacy of direct admissions systems, the policy has already been proposed or implemented in four other states. Using synthetic control methods, we estimate the first causal impacts of direct admissions on institutional enrollment outcomes. We find early evidence that direct admissions increased first-time undergraduate enrollments by 4–8% (50–100 students per campus on average) and in-state levels by approximately 8–15% (80–140 students) but had minimal-to-no impacts on the enrollment of Pell-eligible students. These enrollment gains were concentrated among 2-year, open-access institutions. We discuss these findings in relation to state contexts and policy design given the emergence of literature highlighting the varied efficacy of similar college access policies.

Introduction

While the individual returns to a postsecondary credential have been well documented, state economies also deeply benefit from an educated populace (Oreopoulos & Petronijevic, 2013). The average four-year graduate is 24% more likely to be employed and earns approximately $32,000 more annually (over $1 million across a lifetime), contributing a disproportionately higher share toward states’ tax revenues (Abel & Deitz, 2014). In addition to these economic advantages, higher education provides considerable nonmonetary benefits for society, including improvements in public health, civic engagement, and charitable giving, as well as reduced incarceration rates (Ma et al., 2019; McMahon, 2009; McMahon & Delaney, 2021). Fundamentally, an educated workforce drives a state’s economic competitiveness, and, given a rapidly growing need for a more educated workforce, states have sought public policy options to increase access to higher education (Carnevale et al., 2013; Zumeta et al., 2012). Despite this, few states have managed to meaningfully or equitably improve stagnant college-going rates of high school graduates (National Center for Education Statistics, 2019). In the face of constrained resources and competing spending priorities, many states are seeking viable, low-cost policies to support college enrollment (Barr & Turner, 2013; Doyle & Zumeta, 2014), particularly given declining enrollments at public two- and four-year institutions across the last decade (National Center for Education Statistics, 2020).

Both states and their students face considerable challenges in the college enrollment process. Not all students who would benefit from college enroll. At the individual level, students most notably face information constraints and declining affordability, both of which disproportionately affect students at the lower end of the income distribution (Avery & Hoxby, 2004; Dynarski & Scott-Clayton, 2006). States too face significant challenges when supporting higher education, including competing state budget priorities such as K-12 education, healthcare, prisons, and pension systems (Delaney & Doyle, 2011, 2018). These competing priorities help explain why higher education appropriations are volatile in many states (Doyle et al., 2021), and why some have yet to recover from the Great Recession. Adding to this challenge, despite educational appropriation improvements across the past six fiscal years (17.4% per FTE), inflation-adjusted appropriations per student have declined since 2001 (State Higher Education Executive Officers Association, 2019), which has directly impacted college affordability through an increased reliance on tuition and fees to offset these declines (Institute for Research on Higher Education, 2016).

To reduce common enrollment barriers for students, states have experimented with many policy options, including providing targeted college information, behavioral nudges, or broader access to financial aid with grants and place-based scholarships (Carrell & Sacerdote, 2017; Dynarski & Scott-Clayton, 2013; Page & Scott-Clayton, 2016; Perna & Smith, 2020). To date, however, few states have managed to politically and fiscally enact (and scale or modify) these programs to meaningfully improve college enrollments. This reality suggests a need for a viable, low-cost state policy alternative to support college enrollment. In particular, states need low-cost policy innovations that address college access by advancing equity: increasing enrollment while reducing persistent gaps in access by income and geography (Deming & Dynarski, 2009; Hillman, 2016). A direct admissions system is one such possible innovation.

Direct admissions sidesteps the typical admissions process by proactively and automatically admitting students to college based on a data match between K-12 schools and postsecondary institutions. Typically, all students in a state with direct admissions are admitted to all open-access and non-selective institutions, while students who surpass a pre-identified threshold based on high school performance (such as GPA, standardized test scores, class rank, or a combination of measures) are also admitted to selective institutions. Students, parents, and high schools receive letters indicating that a student has been admitted to a set of institutions and outlining steps for how students can “claim their place” using a common and free application. This policy intervention provides students and families with both tailored information about college and a guaranteed place at a set of postsecondary institutions in the state. In fall 2015, Idaho was the first state to adopt this reimagination of the college admissions process, proactively admitting all 2016 public high school graduates to a group of the state’s public colleges and universities (Howell, 2018).

Direct Admissions in Idaho and Beyond

Idaho’s public education sector has a centralized governance structure, with the Idaho Office of the State Board of Education (OSBE) overseeing K-12 and postsecondary operations, including four public community colleges and four universities. OSBE reported that 48% of the high school senior class of 2017 immediately enrolled in college following high school graduation (OSBE, 2018). After being identified as the state with the lowest college-going rate in 2010 (National Center for Higher Education Management Systems, n.d.), Idaho spent over $8.6 million on college and university student success strategies (Richert, 2017, 2018). To support this goal, the state also adopted a notable college-access initiative: direct admissions. To date, nearly 87,000 Idahoans have been guaranteed admission to five or more of the state’s colleges, with the first cohort entering college in fall 2016 (OSBE, 2020).

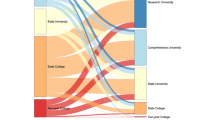

Relying upon a combination of students’ ACT/SAT scores, unweighted GPA, and high school course credits, Idaho’s direct admissions system proactively admits all students to either all eight state institutions (including the selective Boise State University, Idaho State University, and University of Idaho) or to the remaining five institutions (College of Eastern Idaho, College of Southern Idaho, College of Western Idaho, Lewis-Clark State College, and North Idaho College) plus Idaho State University’s College of Technology. As shown in Fig. 1, the state uses a flow-chart to proactively admit students to the “Group of Six” or the “Group of Eight” based on students’ ACT/SAT score, high school credits, and GPA (see Footnote 1). After determining eligibility with the state’s longitudinal data system (SLDS, housed at OSBE, which matches students’ K-12 records to the eligibility criteria), students, parents, and high schools received letters in the fall of a student’s senior year (on approximately September 1) informing them of their guaranteed admission. To enroll, students need only select a college and formally submit a free, common application to “claim their place.” This application is administrative in nature and allows students to select their institution and major but does not determine admission. OSBE testing has shown that the application takes an average of 13–17 minutes to complete, considerably less time than a traditional college application (Corbin, 2018). Students can begin claiming their place in college for the following fall as early as October 1 of their senior year, the same date that students planning to attend a public institution in Idaho can file their Free Application for Federal Student Aid (FAFSA) (Yankey, 2019).
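The routing described above can be sketched in a few lines of Python. This is only an illustration: the actual ACT/SAT, credit, and GPA thresholds in the state's flow-chart are not reproduced in this section, so the sketch takes the outcome of that check as a caller-supplied boolean (`meets_selective_benchmark`, a hypothetical name), and the function and constant names are likewise assumptions.

```python
# Institution names come from the text above. The eligibility benchmark is
# NOT specified there, so its result is passed in by the caller.
GROUP_OF_SIX = (
    "College of Eastern Idaho",
    "College of Southern Idaho",
    "College of Western Idaho",
    "Lewis-Clark State College",
    "North Idaho College",
    "Idaho State University College of Technology",
)
SELECTIVE = (
    "Boise State University",
    "Idaho State University",
    "University of Idaho",
)


def admitted_institutions(meets_selective_benchmark: bool) -> tuple:
    """Return the institutions listed in a student's admission letter."""
    if meets_selective_benchmark:
        # "Group of Eight": the five non-selective institutions plus the
        # three selective universities (the College of Technology is part
        # of Idaho State University, so it is not listed separately).
        return GROUP_OF_SIX[:5] + SELECTIVE
    # "Group of Six": five institutions plus ISU's College of Technology.
    return GROUP_OF_SIX


print(len(admitted_institutions(True)), len(admitted_institutions(False)))
```

Every student receives one of the two lists, which is the sense in which the policy is universal: the benchmark changes which institutions are offered, not whether an offer is made.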

Idaho’s direct admissions system has explicit goals to promote a college-going culture; connect students, families, and K-12 schools with colleges early in the college choice process; ease the transition from high school to college; signal postsecondary opportunities to high school students; and reverse consistent enrollment declines at the state’s public institutions (Howell et al., 2019; Kelly, 2018). Early indicators of direct admissions’ impact are promising, including reported descriptive increases of 3.1% in overall enrollment and a 3-percentage-point decrease in the number of students leaving Idaho for college (Kovacs, 2016). Further, direct admissions has been shown to be exceptionally low-cost, only requiring the existing SLDS and paper and postage for acceptance letters (which can be sent electronically to further reduce costs). This is contrasted with other college access interventions, such as traditional grant aid programs, mentoring, or wrap-around services, which require higher levels of financial investment (Page & Scott-Clayton, 2016).

Given the high-yield, low-cost potential of direct admissions, this practice has already begun to spread across the nation. South Dakota began proactive admissions for the high school class of 2018 (Gewertz, 2017; South Dakota Department of Education, 2019). More recently, the Illinois General Assembly passed a 2019 law that developed a pilot program to automatically admit high-performing high school graduates to targeted public institutions beginning in 2020–2021, and, in 2021, Connecticut’s governor proposed an automatic admissions system for four of the state’s public universities (Office of Governor Ned Lamont, 2021). Likewise, the Minnesota legislature is moving forward legislation that would create a direct admissions program (Nietzel, 2021). Despite this diffusion, no research to date has estimated causal impacts of a direct admissions system.

Direct admissions holds potential to provide important college-going signals to high school students and eliminate the need for extensive financial, social, and cultural capital to navigate the college application process. Interviews with direct admission students in Idaho revealed how this policy changed their college aspirations, with one student noting: “The letter really changed my perspective. It genuinely opened doors for me that I’d never thought possible. I knew after receiving it that my dream of being the college English professor could come true” (Howell, 2019). Others stated “I was really surprised. I wasn’t planning on applying to U of I. I didn’t think U of I was really on my radar in terms of being accepted” and “I didn’t think any college would accept me but I was wrong” (Howell, 2019). While there is still a need to investigate informational, financial, and social-capital issues beyond college admissions in the education production function, early results from Idaho are promising. This technical solution can be universally implemented to level the playing field for all students within a state with a small initial investment for development, use of an existing SLDS, and minimal costs for continued operation. Further, not only does this practice conceptually reduce or eliminate many barriers students face in the college application and admission process, but direct admissions draws upon rich underpinnings in behavioral economics and is built upon a strong foundation of existing research on the impact of offering postsecondary opportunities.

Given Idaho’s centralized governance structure, with one board overseeing both K-12 and postsecondary education in the state, OSBE’s ability to coordinate the state’s education system as a whole and its ability to guarantee a “next step” after high school make the state an ideal setting for exploring the effectiveness of direct admissions. Further, the state’s small size, limited number (and similar type) of postsecondary institutions, existing development and use of an SLDS, and its low-cost implementation of direct admissions allow us to examine the impact of direct admissions and consider its interaction with existing and complementary state policies and practices. Finally, because Idaho was the first adopter of a direct admissions policy, it is important to critically evaluate Idaho’s outcomes as policymakers within the state consider its effectiveness and those in other parts of the nation consider the adoption and design of their own direct admissions systems.

Research Questions

Given Idaho’s explicit intention to increase college enrollments with the adoption of direct admissions, our study is guided by the following research question: What effect did the adoption of direct admissions have on overall postsecondary enrollment across public institutions in Idaho? Further, given direct admissions’ potential to reduce gaps in college enrollment across socioeconomic lines and to alter college destinations, we also estimate causal effects of direct admissions on enrollment across these contexts by asking: Did direct admissions increase the enrollment of in-state or low-income (Pell-eligible) students across Idaho’s public institutions? Did direct admissions change the type of postsecondary destination for students by shifting enrollment between two- and four-year institutions?

The paper proceeds as follows. First, we present our conceptual model and hypotheses for considering direct admissions’ impacts on the enrollment margins outlined in our research questions. Second, we review related literature. Next, we discuss our data and the empirical strategy motivating our methodological approach. We then discuss our results and outline a series of robustness checks. In our conclusion and discussion section, we consider the implications of our results for research and policy, discuss our study’s limitations, and suggest future directions for research.

Conceptual Framework and Hypotheses

Our study’s framing draws from human capital theory, which suggests that students as rational actors will apply to and enroll in higher education when the benefits of the investment in a postsecondary credential exceed its direct and indirect costs across the lifetime (Becker, 1962; Toutkoushian & Paulsen, 2016). However, students are seldom rational actors when it comes to postsecondary choices, due in part to incomplete information and unequal contexts (DesJardins & Toutkoushian, 2005; McDonough & Calderone, 2006; Rochat & Demeulemeester, 2001). Given the fact that the average college graduate earns well beyond the average costs associated with a credential, the average student would be expected to apply to and enroll in college, yet not all students who could benefit from higher education apply or enroll (Baum et al., 2013).

Conceptually, direct admissions influences students and families during both the college search and college choice processes and may remove substantial barriers for students as they consider if and where to enroll (DesJardins et al., 2006; Hossler et al., 1989; Hoxby, 2004; Perna, 2006). Direct admissions promotes equality in college enrollment not only by ignoring students’ demographic and regional differences but also by defaulting students into affirmative application and admission statuses.

Without this simplification, personalized information, and guarantee of admission, students and families must navigate higher education access and choice on their own, all while facing substantial information constraints that disproportionately affect low-income and minority students (Avery & Kane, 2004; Cabrera & La Nassa, 2001; Dynarski & Scott-Clayton, 2006). These informational constraints are substantial given that the status quo of simply making open-access institutions available to students is not enough to increase successful high-school-to-college transitions. Indeed, informational asymmetries alone have been shown to be detrimental to college search and enrollment (Avery & Kane, 2004; DesJardins et al., 2006). In addition, information asymmetries are expected to be most detrimental to the least well-resourced students, including low-income and first-generation students (Cabrera & La Nassa, 2001). By providing college information, proactively signaling college opportunities, and guaranteeing college admission for all students, we hypothesize that the introduction of a direct admissions system will not only increase overall enrollment in Idaho but also increase enrollments of low-income (Pell-eligible) students.

While direct admissions is expected to operate, in part, like other informational nudges and simplification policies which encourage enrollment, the policy also uniquely contains a guarantee of admission for students, the value of which is also expected to influence behavior. One student interviewed during the direct admissions process shared: “The application process can be scary for teens, and rejection is not easy. So it was nice to get a letter of preapproved acceptance for some colleges” (Howell, 2018, pp. 68–69). The admissions guarantee likely has the impact of enhancing the expected effect of the nudge on total enrollment and among Pell-eligible students. In addition, we expect the value of a guarantee to influence students who are mobile and otherwise may have considered an out-of-state institution. Another direct admissions student in Idaho noted: “I had originally planned on an out-of-state school, but the ease of just going right into an in-state school convinced me to stay” (Howell, 2018, p. 68). Thus, we hypothesize that having a “sure thing” at an in-state institution (thereby reducing the risk of a standard college search process) will also encourage more students to remain in-state for college.

At the institutional level, students receive differential access to degree programs and opportunities depending on where they enroll. Because not all institutions offer all majors or degree types, changing the type of institution attended can alter students’ subsequent degree attainment and labor-market outcomes. Moving from a community college to a four-year institution opens the possibility for a student to earn a bachelor’s degree from their first institution. Similarly, moving from a less- to a more-selective institution generally improves a student’s odds of graduating and earning a degree, while also increasing the likelihood a student has access to greater resources and support services on campus (Alon & Tienda, 2005; Dale & Krueger, 2002). As such, we investigate whether direct admissions alters the type of college where students enroll. We posit that the policy may “pull” students who would not have otherwise attended college into enrolling and may also “push” more academically able students from two- to four-year institutions. We hypothesize that, in addition to total enrollment increases—and enrollment increases among low-income students—direct admissions may also shift enrollments between two- and four-year institutions. Indeed, in addition to the college-going notions shared above, 55% of students in Idaho’s direct admissions program indicated that direct admissions had an “impact” on their decision to attend a “particular” college (Howell, 2018, p. 63).

Literature Review

To navigate the college-going process (from aspiration and preparation to search, application, and choice), students must leverage cultural and social capital (Bourdieu, 1986; Coleman, 1988; McDonough, 1997). These reservoirs of knowledge and support aid students as they complete necessary college-going tasks, like identifying a set of institutions, submitting applications, and filing the FAFSA, but not all students have equal access to such capital (Ellwood & Kane, 2000; Perna & Titus, 2005). One way to address this challenge for students who cannot draw upon traditional forms of cultural and social capital has been to “make up differences in students’ levels of these types of capital that can be affected by policy” by offering resources like more advanced coursework or by providing college coaching (Klasik, 2012, p. 511). On average, these practices increase college access outcomes overall, and particularly for low-income and racial-minority students (Page & Scott-Clayton, 2016). This modus operandi has led to the diversity of college access programs that exist today. However, there is likely an equally impactful and more cost-effective mechanism to achieve more equality in the college application and admission process: systematically reduce or eliminate the need for such sources of capital altogether. Direct admissions may achieve this aim in a low-cost, low-touch way.

Prior work has shown that more than half of high achieving, low-income (HALI) students do not apply to selective colleges (Hoxby & Avery, 2013). In fact, only 8% of HALI students apply in a manner similar to their higher-income peers. This is due in part to existing mechanisms in the college access landscape. Widely used college admissions practices, like admissions staff recruiting, campus visits, college fairs, and other access programs, either do not reach or are typically ineffective with these students (Hoxby & Avery, 2013). However, an experiment using a low-cost and low-touch fee waiver intervention for HALI students demonstrated that a simple change in practice could result in large, positive gains. Hoxby and Turner’s (2013) fee-waiver intervention increased the number of applications submitted among HALI students overall and to selective institutions, increased HALI students’ admissions to selective institutions by 31%, and increased subsequent enrollment. While the Hoxby and Turner (2013) study showed positive results for these students in a targeted, small-scale intervention, Gurantz et al. (2020) found no changes in college enrollment patterns among low- and middle-income students in the top 50% of the PSAT and SAT distributions in their similar (but nationally scaled) randomized controlled trial. These disparate findings suggest not only that the removal of college search and access barriers is of ongoing research interest but also that studies seeking to identify which mechanisms and modalities result in positive outcomes are of utmost importance.

Providing students with relevant, targeted, and transparent information can improve college knowledge and enrollment. A rich body of work suggests college-going information and behavioral nudges improve outcomes across the access pipeline, particularly for low-income and racial-minority students (Bettinger & Evans, 2019; Bettinger et al., 2012; Bird et al., 2019; Castleman & Goodman, 2018; Castleman & Page, 2015; Oreopoulos & Petronijevic, 2019; Pallais, 2015; Stephan & Rosenbaum, 2013). A recent systematic review of the literature on outreach and financial aid further distinguished between these access-oriented programs, finding no effect among informational interventions that generally provided information on higher education but positive effects among targeted policies that also included active college counseling or application simplification (Herbaut & Geven, 2020). A recent experiment in Michigan tested this theory with HALI students. Dynarski et al. (2021) found that providing students with a guarantee of admission, a full scholarship, and encouragement to apply to the targeted institution led 67% of students in the treatment group to apply compared to only 26% in the control group. Additionally, 27% of treated students enrolled compared to only 12% of the control group, and an existing gap between urban and rural enrollments was reduced by half. Similarly, a study on Texas’ automatic Top 10% admissions practice showed the policy reduced income-based inequalities by helping HALI students better match to higher-quality institutions (Cortes & Lincove, 2019). For students who are low-income and from rural areas, these findings highlight the instrumentality of similar interventions on their enrollment prospects.

Direct admissions not only sidesteps the traditional admissions process by proactively admitting students but may also eliminate many reasons why students do not apply to begin with (e.g., onerous application forms, inconsistent processes across institutions, steep application fees, and a lack of transparent information; Knight & Schiff, 2019; Page & Scott-Clayton, 2016). Direct admissions also signals to students that a postsecondary credential is attainable, particularly for low-income or rural students who do not apply to or enroll in higher education at the same rates as their higher-income or (sub)urban peers (Black et al., 2020; Hamrick & Stage, 2004; National Center for Education Statistics, 2010). By flipping the college admissions process to proactively admit students through direct admissions, inequalities along these dimensions may be reduced given that all students are universally admitted to a set of postsecondary institutions. This steps beyond simply signaling postsecondary options by formally guaranteeing admissions and by further offering students a clear understanding of their admissibility to selective institutions in their state.

Though much of the prior literature focuses on only the highest achieving students, direct admissions functions across the academic ability spectrum. For some, it is possible that direct admissions will yield no change in their behavior (e.g., students who are already college-bound). For others, however, knowing they have been admitted to college could change their life course, particularly among students from groups traditionally underrepresented in higher education. This has already been shown to be true for some HALI students but should equally apply to all students (Dynarski et al., 2021). In fact, Xu et al. (2020) showed that targeted interventions for racial-minority, low-income, and academically underprepared high school students yielded positive effects on college access and success. Similarly, Oreopoulos and Ford (2019) found experimentally that college-application-assistance interventions at low-college-transition high schools increased application rates by 15 percentage points and college-going rates by 5 percentage points, and increased subsequent college enrollment by 9 percentage points for students not taking advanced-level courses. Because access to information about college and the college admissions process under traditional admissions models depends to a large extent on students’ access to social and cultural capital, students from certain groups, such as those from low-income families or rural locales, tend to be underserved (Hoxby & Avery, 2013; Klasik, 2012; Knight & Schiff, 2019). Direct admissions may help address this issue through its universal design.

In all, direct admissions may be a viable, low-cost state policy alternative to support college enrollment. Direct admissions not only sidesteps the traditional admissions process by proactively admitting students to college but may also eliminate many reasons why students do not apply to begin with. Importantly, the policy both signals that postsecondary enrollment is possible and provides a guarantee of admissions. For these reasons, direct admissions holds the potential for states to increase undergraduate enrollment while keeping students in-state and supporting workforce development goals by educating larger and more diverse cohorts. Our study seeks to estimate the impact of the nation’s first direct admission system to inform its ongoing operation and to advise policymakers in many other states considering similar policies.

Data

Using an institution-level analysis, we draw upon data from the U.S. Department of Education’s Integrated Postsecondary Education Data System (IPEDS) covering academic years 2010–2011 through 2017–2018. Beginning with the universe of all public two- and four-year (non-military) institutions in the United States in 2010–2011, we limited our sample to those institutions that are degree-granting, have first-time full-time undergraduate students, and do not exclusively provide distance education courses (n = 1580). Institutional data cover our three primary outcomes of interest: (1) overall first-time degree/certificate-seeking undergraduate enrollment, (2) the number of first-time undergraduates from in-state, and (3) the number of full-time first-time undergraduates awarded Pell grants. These outcomes allow us to view institution-level changes in overall undergraduate enrollments over time (among first-year students), as well as changes across geographic and socioeconomic contexts. We also collect the type of each institution (to test sector-specific effects) and a set of time-variant controls associated with college enrollments: tuition and fee rates, state appropriations, expenditures on scholarships and fellowships, and an institution’s six-year graduation rate.

Given that our outcomes and covariates are relatively standard, there was minimal missingness across IPEDS files. Among the 12,640 campus-by-year observations, an average of 862 cases (6.8%) were missing across the seven outcomes and covariates. For this missingness, we employed linear interpolation to preserve sample size, but we dropped institutions that did not report information for at least two years to allow for interpolation (n = 63), as well as 2 institutions included in the sample that did not report to IPEDS at any time across the 2010–2011 through 2017–2018 panel. Our final analytic sample consists of 1,515 institutions across eight academic years. We adjusted all financial variables to the Consumer Price Index (CPI) for the last fiscal year in the panel (2018) and logged outcomes and predictors for financial variables and those with skewed distributions (see Footnote 2).
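The data-preparation steps in this paragraph can be illustrated with a short, standard-library Python sketch. It is not the authors' code: the function names are hypothetical, the treatment of leading and trailing gaps (left missing here) is an assumption the text does not address, and the CPI index values in the usage line are placeholders rather than published figures. The sample-size arithmetic at the end uses only the counts reported above.

```python
import math


def interpolate_interior(values):
    """Linearly interpolate interior gaps (None) in one institution's series.

    Returns None when fewer than two years are observed, mirroring the rule
    that such institutions are dropped. Leading and trailing gaps are left
    as None (an assumption; the text does not say how edges were handled).
    """
    known = [(i, v) for i, v in enumerate(values) if v is not None]
    if len(known) < 2:
        return None
    filled = list(values)
    for (i0, v0), (i1, v1) in zip(known, known[1:]):
        for i in range(i0 + 1, i1):
            filled[i] = v0 + (v1 - v0) * (i - i0) / (i1 - i0)
    return filled


def to_base_year_dollars(nominal, cpi_year, cpi_base):
    """Express a nominal amount in base-year (here, 2018) dollars."""
    return nominal * cpi_base / cpi_year


def log_outcome(x):
    """Natural-log transform for financial or skewed variables."""
    return math.log(x)


# Usage with made-up values: a four-year series with two interior gaps, and
# a tuition figure deflated with placeholder (hypothetical) CPI values.
series = interpolate_interior([100, None, None, 130])
real_tuition = to_base_year_dollars(100.0, cpi_year=200.0, cpi_base=250.0)

# Sample accounting using the counts reported in the text.
initial_institutions, panel_years = 1580, 8
campus_years = initial_institutions * panel_years
final_sample = initial_institutions - 63 - 2
missing_share = round(862 / campus_years * 100, 1)
```

The accounting reproduces the reported figures: 1,580 institutions over eight years give 12,640 campus-by-year observations, 862 missing cases are 6.8% of that total, and removing 63 and then 2 institutions leaves 1,515.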

Descriptive statistics are presented in Table 1. Here, we present outcome and covariate statistics for institutions in Idaho and two separate groups: all other institutions in the nation and those in the 15-state Western Interstate Commission for Higher Education (WICHE) region (see Footnote 3). These samples range from the entire population of other states and institutions to only those regional peers who were not exposed to direct admissions but are likely similar on many observable and unobservable factors related to college enrollment. Indeed, as shown in Table 1, Idaho is relatively similar to national and regional peers on all outcomes and covariates. One notable difference is that institutions in Idaho enjoy higher-than-average state appropriations (possibly due to the small number of institutions in the state) and expend lower-than-average resources on scholarships and fellowships (likely due to the state’s generous, need-based Idaho Opportunity Scholarship).

Empirical Strategy

Given the universal and fixed adoption of direct admissions first impacting students in Idaho in fall 2016 (academic year 2016–2017), a traditional quasi-experimental difference-in-differences (DID) method could be used to estimate the effect of direct admissions as a natural experiment. A DID estimator would allow us to exploit across-unit and inter-temporal variation in outcomes of interest as we compared outcomes in Idaho to all other (i.e., control) institutions over time and across the direct admissions adoption window. This is a common and preferred method used when assessing the effects of fixed-time policy adoptions because of its ability to address selection concerns and omitted variable bias (Cellini, 2008).
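For reference, a canonical two-way fixed-effects DID specification for this setting can be written as follows. This is a textbook form offered as a sketch, not necessarily the exact model estimated in the Appendix; the symbols are introduced here for illustration.

```latex
Y_{it} = \alpha_i + \gamma_t
       + \beta \left( \text{Idaho}_i \times \text{Post}_t \right)
       + X_{it}'\theta + \varepsilon_{it}
```

Here $Y_{it}$ is an enrollment outcome for institution $i$ in academic year $t$, $\alpha_i$ and $\gamma_t$ are institution and year fixed effects, $\text{Idaho}_i$ indicates an Idaho institution, $\text{Post}_t$ indicates 2016–2017 or later, and $X_{it}$ collects the time-variant controls. Under parallel trends, $\beta$ identifies the average effect of direct admissions on treated institutions.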

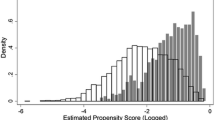

One condition of an unbiased DID estimator is that treatment and control groups follow parallel outcome paths prior to an intervention, combined with an assumption that both groups would continue to exhibit these parallel trends in the absence of treatment. We assess this fundamental assumption, and the appropriateness of DID for our study, with an event study analysis. Similar to placebo or falsification tests, this analysis tests for treatment effects in the absence of actual treatment: significant effects observed prior to fall 2016 would provide evidence of a violation of the parallel-trends assumption. This analysis is further detailed and discussed in the Appendix. We detect large and statistically significant differences between Idaho and both the national and WICHE counterfactual groups in the pre-treatment period on each outcome of interest, which calls into question the appropriateness of the DID method given a likely violation of the parallel-trends assumption. This methodological challenge motivates our use of a synthetic control approach, which relaxes this assumption by shrinking pre-treatment differences between treatment and control groups toward zero.

Generalized Synthetic Control Method

Like its traditional synthetic control peer, the generalized synthetic control method (GSCM) assembles a suitable and weighted counterfactual group that relies upon all available control units to minimize outcome differences between the treatment and control units in the pre-treatment period, relaxing the parallel-trends assumption that may be violated in this natural experiment (Cunningham, 2020; Odle, 2021; Xu, 2017). GSCM has also been shown to be a more robust tool for inference with few treated units given its allowance for treatment effects to be considered individually or collectively across multiple units, rather than traditional synthetic control methods that focus on single unit-by-unit effects (Krief et al., 2016; Powell, 2018; Xu & Liu, 2018, 2020). Recall, our natural experiment affected only one state with eight institutions, producing a small treatment pool and a large imbalance between treatment and control group sizes. Like its traditional peer, however, GSCM still optimally weights control units so that differences in pre-treatment outcomes between groups are minimized, empirically producing a strong counterfactual unit (Abadie, 2019; Fremeth et al., 2016; Gobillon & Magnac, 2016; Xu, 2017). Thus, given our ability to derive long panels from IPEDS covering many years of pre-direct admissions outcomes, we leverage GSCM to estimate causal effects of direct admissions on our outcomes of interest given its comparative strengths and suitable fit for this study.

Formally, our goal is to estimate the treatment effect of direct admissions \((\beta )\) on our outcomes of interest, where

or the difference between Idaho’s average institutional outcome \((\overline{Y })\) at time \(t=1\) (now) if the state was \((Z=1)\) and was not \((Z=0)\) treated by direct admissions. Given that \({\overline{Y} }_{Z=0,t=1}^{\mathrm{Idaho}}\) is unobservable in the potential-outcomes framework (where Idaho will always have \(Z=1\)), Eq. (1) is incomplete. A DID estimator would approximate this value such that

where it would apply the average outcome difference between treatment and control groups in the pre-treatment period (\(t=0\)) to the control group outcomes in the post-treatment period \((t=1)\). These mechanics make an estimate of \(\beta\) sensitive to the selection of a control group and motivate comparisons across multiple control groups. Instead of selecting a control group, however, synthetic control methods create a suitable counterfactual from all available control units such that

where the potential outcome in the post-treatment period is equal to a weighted average outcome of all control units in the same period. A synthetic control approach uses an optimization process to identify a weight \(w\) for each control unit \(i\) by considering individual unit outcomes \(({y}_{it})\) as a linear function of observable covariates (\({\mathbf{X}}_{it}\)) in the pre-treatment period \((t=0)\). Synthetic control methods work to reduce outcome differences between treatment and control units by minimizing root mean square error (RMSE, or “prediction error”) such that

where \({w}_{i}\) is an optimal weight for control unit \(i\) that ensures \({\overline{Y} }_{Z=1,t=0}^{\mathrm{Idaho}}\) and \(\sum {w}_{i}{\overline{Y} }_{t=0}^{\mathrm{Control}}\) are as mathematically close as possible in the pre-treatment period. An optimization algorithm drives the selection of \({w}_{i}\) such that the RMSE is minimized for

where \({y}_{it}\) is the observed outcome for the treatment group and \({\mathbf{X}}_{it}\) capture \(p\) observable predictors of the control units. Knowing that the mean outcome difference between treatment and control units in the pre-treatment period is as mathematically close to zero as possible, \(\sum {w}_{i}{\overline{Y} }^{\mathrm{Control}}\) becomes a suitable counterfactual, and (similar to DID) deviations between \({\overline{Y} }^{\mathrm{Idaho}}\) and \(\sum {w}_{i}{\overline{Y} }^{\mathrm{Control}}\) in the post-treatment period are attributable to the effect of the policy. With a suitable counterfactual, Eq. (1) can be rewritten to compute the treatment effect as

by taking the average annual difference between treatment and (weighted) control units in the post-treatment period.Footnote 4
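The display equations referenced in this derivation do not appear in this text. The block below is a reconstruction from the surrounding verbal definitions, offered as an assumption rather than the authors' exact notation. Only Eqs. (1), (2), (3), and (6) are reconstructed, because the prose pins them down. The weight-selection step of Eqs. (4) and (5) is shown only in a generic, unnumbered form, where \(T_{0}\) (the number of pre-treatment years) and \(\overline{Y}_{i,t}^{\mathrm{Control}}\) (control unit \(i\)'s outcome in year \(t\)) are illustrative symbols not found in the original.

```latex
\begin{align}
\beta &= \overline{Y}_{Z=1,t=1}^{\mathrm{Idaho}} - \overline{Y}_{Z=0,t=1}^{\mathrm{Idaho}} \tag{1} \\
\overline{Y}_{Z=0,t=1}^{\mathrm{Idaho}} &\approx \overline{Y}_{t=1}^{\mathrm{Control}}
  + \left( \overline{Y}_{Z=1,t=0}^{\mathrm{Idaho}} - \overline{Y}_{t=0}^{\mathrm{Control}} \right) \tag{2} \\
\overline{Y}_{Z=0,t=1}^{\mathrm{Idaho}} &= \sum_{i} w_{i}\, \overline{Y}_{t=1}^{\mathrm{Control}} \tag{3} \\
\beta &= \overline{Y}_{Z=1,t=1}^{\mathrm{Idaho}} - \sum_{i} w_{i}\, \overline{Y}_{t=1}^{\mathrm{Control}} \tag{6}
\end{align}

% Generic pre-treatment RMSE objective for choosing the weights
% (illustrative stand-in for Eqs. (4)-(5), not the authors' form):
\[
\min_{w}\ \sqrt{ \frac{1}{T_{0}} \sum_{t \in \text{pre}}
  \left( \overline{Y}_{Z=1,t}^{\mathrm{Idaho}} - \sum_{i} w_{i}\, \overline{Y}_{i,t}^{\mathrm{Control}} \right)^{2} }
\]
```

Substituting Eq. (3) into Eq. (1) yields Eq. (6), which is why the post-treatment gap between Idaho and its weighted control can be read as the treatment effect.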

GSCM follows the same intuition as the traditional synthetic control method but provides important advantages when necessary, including its ability to allow for multiple treatment units (i.e., for multiple institutions in Idaho) and variation in treatment timing (Krief et al., 2016; Powell, 2018; Xu & Liu, 2018, 2020). GSCM makes these advances by imputing a separate synthetic unit for each treated unit with a linear interactive fixed effects model rather than developing one aggregate synthetic unit (Xu, 2017). When predicting outcomes, interactive fixed effects models interact unit-specific intercepts (“factor loadings”) with time-varying coefficients (“factors”) such that

where \({y}_{it}\) is the outcome for unit \(i\) in year \(t\), conditioned on unit \(({\pi }_{i})\) and time \(({\rho }_{t})\) fixed effects; \({Z}_{it}\) is a time-varying treatment indicator equal to one for treatment units in their post-treatment period (zero otherwise, like a DID \(treat\times post\) setup); and \({\mathbf{X}}_{it}\) is a vector of controls. Here, \({\lambda }_{i}^{^{\prime}}\) are unit factor loadings interacted with time-varying factors \({f}_{t}\), and \({\beta }_{it}\) is the heterogeneous average treatment effect on the treated (ATT) estimate for unit \(i\) at time \(t\). The number of factors and their factor loadings are derived by a procedure that similarly minimizes error in the prediction of treatment unit outcomes with control unit observations, performing the equivalent function of the \({w}_{i}\) weights in Eq. (6) to optimally weight control units (Xu, 2017).

Equation (7) can be simplified and separated for treatment units \(({y}_{it}^{\mathrm{Z}=1})\) and their synthetic control units \(({y}_{it}^{\mathrm{Z}=0})\) as

Under this structure, \({\beta }_{it}={y}_{it}^{\mathrm{Z}=1}-{y}_{it}^{\mathrm{Z}=0}\) for treated units in the post-treatment period. Thus, the ATT estimate for the \(n\) units in the treatment group \(T\) can be shown by

which is the average annual difference between treatment units and their synthetic control units. GSCM also relies upon an internal cross-validation procedure for inference, which eliminates the necessity for post-hoc random permutation tests common with traditional synthetic control models (Bai, 2009; Xu, 2017).Footnote 5
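Equations (7) through (9) are likewise missing from this text. The following reconstruction follows the verbal definitions above and the general form of a linear interactive fixed effects model. The coefficient vector \(\theta\), the error term \({\varepsilon }_{it}\), and the explicit averaging over \(T_{1}\) post-treatment years are assumptions introduced for illustration and may differ from the authors' notation.

```latex
\begin{align}
y_{it} &= \beta_{it} Z_{it} + \mathbf{X}_{it}^{\prime}\theta + \lambda_{i}^{\prime} f_{t}
          + \pi_{i} + \rho_{t} + \varepsilon_{it} \tag{7} \\
y_{it}^{Z=1} &= \beta_{it} + \mathbf{X}_{it}^{\prime}\theta + \lambda_{i}^{\prime} f_{t}
          + \pi_{i} + \rho_{t} + \varepsilon_{it}, \qquad
y_{it}^{Z=0} = \mathbf{X}_{it}^{\prime}\theta + \lambda_{i}^{\prime} f_{t}
          + \pi_{i} + \rho_{t} + \varepsilon_{it} \tag{8} \\
\mathrm{ATT} &= \frac{1}{T_{1}} \sum_{t \in \text{post}} \frac{1}{n} \sum_{i \in T}
          \left( y_{it}^{Z=1} - y_{it}^{Z=0} \right)
        = \frac{1}{T_{1}} \sum_{t \in \text{post}} \frac{1}{n} \sum_{i \in T} \beta_{it} \tag{9}
\end{align}
```

Subtracting the two expressions in Eq. (8) cancels every shared term and leaves \({\beta }_{it}\), which is the identity stated in the text.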

We implement GSCM for our institution-level outcomes of interest, where the \({\mathbf{X}}_{it}\) covariates used by our GSCM models are the same as those described in the Data section above. We also leverage the Gobillon and Magnac (2016) estimator in the “gsynth” R package to improve the precision of our estimates and estimate state-level cluster-bootstrap standard errors to account for serial correlation in outcomes (Cameron et al., 2008). We also implement an additional series of GSCM models within each sector to estimate heterogeneous effects across two- and four-year institutions. While synthetic control methods empirically guide the construction of an optimal counterfactual, researchers must guide the selection of a donor pool from which the synthetic unit is constructed (Jaquette et al., 2018). Abadie et al. (2015) recommend imposing restrictions on an available donor pool to (1) exclude units affected by a similar intervention or those with other idiosyncratic shocks in the outcome of interest and (2) focus on units with characteristics similar to those of the treatment unit(s). To satisfy these aims, we leverage two donor pools. First, we allow the GSCM optimal weighting process to draw from the universe of other institutions in a national donor pool. Second, to guide this selection process and to better balance the bias-variance tradeoff, we specify the donor pool to include all institutions in the WICHE region. While a national donor pool maximizes statistical power and increases the number of available control units, it may introduce units which are not similar to our treatment unit(s) (e.g., comparing Idaho to Florida, New York, and/or Texas). Conversely, the WICHE comparison helps balance this tradeoff by limiting the donor pool to similar, regional peers, though at a cost to power and sample size. 
In all, the national and WICHE comparisons should provide complementary evidence of the impacts of direct admissions, and these specifications also meet a third criterion set by Abadie et al. (2015) to ensure there are enough control units to create a sizeable donor pool.

The GSCM equivalents of the DID parallel-trend plots are presented in Fig. 2 for the institution-level enrollment outcomes. These figures show the superior control of GSCM on parallel trends and exhibit the respective outcome deviations between Idaho (“Treated Average”) and its institutional synthetic control unit (“Estimated Y(0) Average”). In aggregate, the optimal weighting process achieved strong alignment between the treatment and synthetic control unit(s), evidenced by the minimal-to-zero differences between each line in the pre-treatment period, suggesting GSCM is an appropriate estimation strategy for this context. In the post-treatment (shaded) period, these figures show descriptive increases in Idaho’s institutional undergraduate enrollments and in-state enrollment proportions following the adoption of direct admissions (observed by gaps between Idaho’s outcomes and its weighted counterfactual). These plots do not, however, suggest a descriptive post-treatment change in Idaho’s Pell enrollment outcome compared to its synthetic control unit. For more information on the construction of our synthetic control units, please see the Appendix.

Results

Results from the primary GSCM models are presented in Table 2 by outcome and donor pool. Estimates disaggregated by sector are presented in Table 3. The GSCM model leveraging the national donor pool suggests direct admissions increased institutions’ fall first-time, degree-seeking undergraduate enrollments by approximately 8.14% ([\({e}^{0.0783}-1\)] \(\times\) 100, \(p\) < 0.001). Given a pre-treatment (2010–2011 through 2015–2016) mean of 1,272 first-time undergraduate students across Idaho’s public institutions, this percentage increase equates to approximately 104 additional students per campus. Similarly, estimates suggest that direct admissions led to a 14.58% increase in the number of first-time, in-state undergraduates, an average increase of approximately 143 students per campus. For the same donor pool, however, we do not detect significant impacts on the number of first-time, full-time students awarded Pell grants. The point estimate suggests a modest decrease in Pell-eligible enrollments of approximately 0.51%, but this estimate is relatively imprecise, and small changes in either direction remain possible (95% CI [−0.03, 0.02]). Estimates from the WICHE donor pool are similar, suggesting an increase in first-time undergraduate enrollment of approximately 4.20% (53 students), an increase to in-state enrollment of 8.21% (80 students), and a positive but statistically insignificant change in Pell-eligible enrollments. Overall, GSCM models from both donor pools suggest direct admissions increased institutions’ first-time undergraduate enrollments by approximately 50–100 students per campus, as well as institutions’ first-time enrollments from in-state students by 80–140, but had minimal impacts on Pell-eligible enrollments. 
While we cannot rule out small increases or decreases to these Pell-eligible outcomes given the precision of our estimates, we can confidently rule out large percentage changes like those observed for overall undergraduate and in-state enrollments.
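Because the outcomes are logged, coefficients are converted to percent changes and then to approximate student counts. The short sketch below reproduces that arithmetic for the overall enrollment estimates using only figures reported in the text (the 0.0783 coefficient, the 4.20% WICHE estimate, and the pre-treatment mean of 1,272). The function names are illustrative and are not from the authors' code.

```python
import math


def log_points_to_percent(coef):
    """Convert a coefficient on a logged outcome to a percent change."""
    return (math.exp(coef) - 1.0) * 100.0


def implied_students(percent_change, pre_treatment_mean):
    """Translate a percent change into students per campus."""
    return percent_change / 100.0 * pre_treatment_mean


national_pct = log_points_to_percent(0.0783)
national_students = implied_students(national_pct, 1272)
wiche_students = implied_students(4.20, 1272)

print(round(national_pct, 2))    # 8.14
print(round(national_students))  # 104
print(round(wiche_students))     # 53
```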

Table 3 presents estimates disaggregated by sector using the national donor pool.Footnote 6 Here, models focus exclusively on institutions in either the two- or four-year sector and draw from equivalent-level institutions from the national donor pool. This allows us to estimate heterogeneous impacts across the state’s two- and four-year institutions. Recall, GSCM imputes a synthetic unit for each treated unit, rather than developing one aggregate synthetic unit. The institutional ATT estimates presented in Table 2 are the average annual difference between each treatment unit and its synthetic control. Therefore, that average taken from an aggregate sample of two- and four-year institutions will generally be bounded by estimates of impacts on outcomes at two-year institutions and impacts on outcomes at four-year institutions (shown here in Table 3). For the undergraduate enrollment outcome, estimates suggest that these increases across institutions are driven entirely by improved enrollment at two-year institutions (i.e., those where all students were proactively admitted compared to four-years where only students with higher GPAs and ACT/SAT scores were admitted). This GSCM model suggests direct admissions increased first-time undergraduate enrollments across Idaho’s two-year institutions by approximately 15.62%, or roughly 155 students given a pre-treatment enrollment mean of 992 per campus. No impacts are observed in the four-year sector, suggesting that direct admissions’ ability to increase overall first-time enrollments for Idaho was primarily through increasing students’ access to two-year, open-access institutions.

For the in-state enrollment outcome disaggregated by sector, overall increases to in-state enrollments were again driven by large increases across the two-year sector with minimal changes at four-year institutions. Estimates suggest direct admissions increased two-year colleges’ first-time, in-state enrollments by roughly 24.73% or 219 students given a pre-2015 mean in-state enrollment of 884 students. In the four-year sector, estimates suggest direct admissions may have increased in-state enrollments by approximately 6.59% (58 students per campus), but our estimates for this sector are relatively imprecise and insignificant.

For the percent Pell outcome, given that we did not detect any overall changes in our primary models, we similarly do not detect any impacts in either sector, though estimates again suggest possible descriptive increases at two-year institutions and descriptive declines at four-years. Estimates suggest a possible increase of 10 students (2.72%) per two-year campus and a 28-student (7.34%) decrease at four-year institutions, but both estimates are insignificant. These imprecise estimates suggest a possible redistribution of students across dimensions of socioeconomic status, driving students from more affluent families to four-year institutions or students from lower-income families toward two-year institutions. We further consider this possibility and note caution when interpreting this estimated impact given our robustness checks discussed below. In all, however, these sector-disaggregated models suggest direct admissions increased first-time undergraduate enrollments and first-time, in-state enrollments across two-year institutions in Idaho but had minimal impacts (if any) on the enrollment of Pell-eligible students.

Robustness Checks

Despite the relative consistency of our primary estimates, we conduct two robustness checks commonly paired with synthetic control methods. Recommended by Abadie et al. (2010, 2015), each follows a similar rationale common to DID checks. First, we seek to ensure our results are not driven by the selection of an optimally weighted counterfactual unit. With the knowledge that GSCM weights all available units by some \({w}_{i}\), it is possible that differences in significance could be driven by outcome changes of the non-treatment unit(s) given higher weights (Rubin & González Canché, 2019). While we have already addressed this concern in part by altering the donor pool, where our point estimates remain relatively robust across the national and WICHE comparisons, we explicitly test for this weight-driven possibility and assess whether significantly estimated effects exist for units in the donor pool by conducting “in-space” placebo tests. Here, we falsely assign treatment to units in the national donor pool with the highest weights (i.e., those estimated to most closely resemble institutions in Idaho) and re-estimate our GSCM models with institutions in Idaho removed from the sample (Abadie et al., 2010, p. 500). This procedure has also been referred to as an “across-unit” placebo test (Jaquette et al., 2018, p. 654). Second, we perform a traditional “in-time” placebo test to assess whether significant effects exist in the absence of treatment (Abadie et al., 2010, p. 504), similar to DID placebo or falsification tests. Here, we focus the panel on pre-direct admissions years (2010 through 2015) and falsely assign treatment to institutions in Idaho in 2014, allowing for two post-treatment years.

In addition to these synthetic control robustness checks, we conduct three additional tests to ensure our results are not driven by contemporaneous policy changes in Idaho or in control-group states. First, we seek to ensure that our donor pool (from outside Idaho) is similarly free from contemporaneous treatments which could bias the expected outcome of the synthetic control unit. The Education Commission of the States (ECS) reported that nine other states were developing or operating some form of a guaranteed or automatic admissions policy as of 2017 (ECS, 2017). If these states enacted or operated guaranteed or automatic admissions policies similar to direct admissions, the synthetic unit’s outcome might be artificially inflated or deflated depending on policy timing and impact. To remove this possibility, we re-estimate our models with institutions in these states removed. Second, in this same vein, we also re-estimate our models after removing institutions exposed to a local, regional, or statewide free-college (or “promise”) scholarship program. This allows us to attend to the concern that broad financial aid changes, such as the introduction of a new, universal, state-level promise program, could be a potential confounder to direct admissions policies. Finally, Idaho launched a statewide common application (Apply Idaho) in 2017–2018, the last year in our panel (OSBE, 2019). Given that common applications can influence college enrollments (Knight & Schiff, 2019), our primary estimates could be biased by additionally capturing the effect of Apply Idaho. While we incorporate year fixed effects into all models, we conduct an additional robustness check to eliminate any possible effects of Apply Idaho by re-estimating our primary GSCM models and excluding the 2017–2018 academic year. This limits the post-treatment period to one year but will provide estimates of the effect of one year of direct admissions free of confounding by the statewide common application. 
We are aware of no other possible confounding treatments.Footnote 7

For brevity, results and tables from all five robustness checks are presented and further discussed in the Appendix. In summary, all but two of the six institution-level models produced expected (insignificant) results across the in-space and in-time placebo tests, providing confidence in our GSCM estimates.Footnote 8 Our results also remained robust to the exclusion of states with a developing or implemented guaranteed admissions policy, producing a similarly estimated 8.56% increase (109 students) in the model testing undergraduate enrollment, a 15.43% (151-student) increase in in-state enrollment, and virtually no impacts on Pell-eligible enrollments. These findings are also similar given the exclusion of states or institutions exposed to any free-college or promise program, where estimates suggest an 8.12% (103-student) increase in first-time enrollments, a 14.76% (145-student) increase to in-state enrollments, and no impacts on Pell-eligible enrollments. Finally, our models that exclude the 2017–2018 academic year are less precisely estimated given only one post-treatment year, precluding us from completely isolating the impact of direct admissions in the 2016–2017 academic year alone. The point estimates suggest a statistically insignificant 5.96% (76-student) increase in first-time undergraduate enrollment (consistent with our primary estimates), a significant 11.58% (113-student) increase to in-state enrollments (also consistent), and insignificant, near-zero changes in Pell-eligible enrollments.

Discussion

In the face of increasingly constrained budgets, states need new and innovative, yet low-cost policies to increase access to and enrollment in postsecondary education (Barr & Turner, 2013; Doyle & Zumeta, 2014). Persistent gaps in college access across socioeconomic and geographic contexts present real challenges for individuals and their communities (Deming & Dynarski, 2009; Hillman, 2016; Zumeta et al., 2012). To improve postsecondary enrollment levels in the face of ongoing declines, reduce persistent income gaps in college access, and arrest increasing out-of-state migration, Idaho leveraged its data capacity to create a direct admissions system. In this study, we sought to estimate causal impacts of this policy on institutional enrollment outcomes using synthetic control methods. We find early evidence that direct admissions increased first-time enrollments by 4–8% (50–100 students per campus on average) and in-state enrollments by approximately 8–15% (80–140 students). These findings were concentrated in the two-year, open-access sector. We also find minimal-to-no impacts on the enrollment of Pell-eligible students. However, given the imprecision of some estimates, we are unable to fully rule out any changes to Pell-eligible enrollments, though we can reject any large impacts. In addition to providing the first evidence on the efficacy of direct admission systems on improving college enrollments, this work also further extends theory by documenting how a simplification of the college search and application process—by reducing informational asymmetries and functionally eliminating the need to access such extensive social and cultural capital to enter the college-going pipeline—can effectively improve individual investments in human capital and reduce barriers toward access. To further consider these findings, we discuss them in relation to the policy’s low-touch and universal design, drawing connections to similar college-access policies.

Direct admissions is a universal program that automatically admits students to college with a guaranteed place but provides no other college-going supports. Providing guaranteed admission to college addresses only one part, albeit an important part, of the college admissions, search, and enrollment process (Page & Scott-Clayton, 2016; Perna, 2006). Students provided with an enrollment place through direct and guaranteed admissions still face other hazards to enrollment, including information and affordability constraints that disproportionately impact students from traditionally underserved populations (Avery & Hoxby, 2004; Cortes & Lincove, 2019; Hoxby & Avery, 2013; Klasik, 2012). Therefore, it is possible that a direct admissions system on its own is enough to raise enrollment levels among students generally but not among low-income students. The benefits of other universal (or near-universal) programs accrue disproportionately to students from higher-income households (Bifulco et al., 2019; Dynarski, 2000; Odle et al., 2021). For example, in the year after the introduction of Tennessee’s universal free-college program for community and technical colleges, the state recorded descriptive declines in Pell-eligible enrollments and increases in the average ACT score among entering cohorts (Tennessee Higher Education Commission, 2015, 2016). Conceptually, this makes sense given that higher-income and more-academically-prepared students are best positioned to draw from more social and cultural capital to navigate the college-going process and to benefit from college-access programs (Ellwood & Kane, 2000; Klasik, 2012; Perna & Titus, 2005). This logic may help explain possible, albeit small, declines in Pell-eligible enrollments we find for Idaho’s four-year sector.

This suggests that, in order to achieve improvements in enrollments, direct admissions may best be used as a policy that also delivers additional supports for students (e.g., financial aid, application fee waivers, information, and nudges). This is particularly true given our observation that direct admissions’ impacts may not be fully observed until several years following adoption or in tandem with other college-going supports, like the state’s Apply Idaho common application. In all, direct admissions is a promising policy innovation that policymakers should consider as an effective avenue to increase overall enrollment levels and reduce out-of-state migration. In this consideration, policymakers and institutional leaders should also consider pairing a direct admissions system with complementary supports, such as financial aid, to help students overcome other barriers to college access. Furthermore, given that existing direct admissions systems exclusively target recent high school graduates, policymakers may also consider systems that contemporaneously target adult learners and individuals with some college but no degree.

While our study is the first to estimate causal impacts of direct admissions, it is not without notable limitations. First, given that direct admissions is still a relatively new program, additional years of post-implementation data would strengthen our evaluation. Second, as noted, Idaho also implemented a statewide common application in 2017–2018. While we find relatively consistent point estimates when accounting for this introduction, the common application charged application fees for its first year, and one institution (Idaho State University) continues to charge application fees. Fees like this have been identified by prior research as a deterrent to college enrollment, which could potentially limit any positive impacts of a direct admissions system (Hoxby & Avery, 2013). Thus, the full effects of direct admissions, with direct admissions practices plus a statewide common and free application, may not be detectable until 2017–2018. While we ran our models on the earliest possible year of treatment to reduce the possibility that these related policies confound our findings, a future analysis that lags the policy implementation year or that considers a common application to be part of a direct admissions system may yield different results. Third, while Idaho is in many ways an ideal state in which to study direct admissions, the state is relatively homogeneous. For example, more than 90% of the population identified as White in 2018 (U.S. Census Bureau, 2020). It is possible that studies in other states with more racial diversity would be better suited to consider direct admissions’ impact among student subgroups. Finally, while our GSCM method achieved a strong fit and reduction in RMSE, estimates from some national and WICHE models remain relatively imprecise, precluding us from fully rejecting any small impacts of direct admissions in Idaho. 
Despite these limitations, we believe our study still makes an important contribution by working to carefully and rigorously interrogate impacts of this new policy within existing policy environments and data limitations while concurrently calling for additional work in this area. Our study also has important implications for policymakers as it provides the first evidence on the efficacy of direct admissions systems and offers important considerations for the implementation, design, and expected outcomes of these programs.

Given that direct admissions is built upon a strong foundation of theory and experimental evidence suggesting simplifications of the college search and admissions processes should yield increased enrollments, particularly across important student subgroups, future research should continue evaluating this policy for long-run effects in Idaho and in other state contexts. States and institutions, however, should still carefully consider direct admissions as a mechanism to meet a common statewide policy goal of increasing overall enrollment levels and reducing out-of-state migration, particularly given the fact that out-of-state college-going is most common among higher-achieving, wealthier students (González Canché, 2018). Our finding that direct admissions increased in-state enrollments supports this possibility. While institutions in Idaho enjoyed higher overall and in-state enrollments following direct admissions, it is important to note that this reality may not occur for all other states. Specifically, there are two important contexts that have facilitated Idaho’s implementation and operation of a direct admissions system. First, Idaho’s centralized governance structure allows the State Board of Education to not only coordinate both K-12 and postsecondary operations, including by ensuring that all institutions participate in direct admissions, but to also facilitate data sharing between these sectors that is the linchpin of a direct admissions system (i.e., connecting K-12 GPA, ACT/SAT scores, and credits to postsecondary admissions). Given that few states have one single entity governing all education operations, achieving universal participation in a direct admissions system will likely be difficult. Such unequal participation, particularly if only specific institutional types participate in a direct admissions system, may produce different outcomes than those observed in Idaho. 
Second, Idaho has relatively few and fairly homogeneous postsecondary institutions and a limited level of competition among public and private sectors in the state. In states with substantially more complex higher education structures or more robust private sectors where student choice is a key driver of enrollment destinations, it is likely that a direct admissions system could have less pronounced impacts unless universal participation is achieved. As prior works have shown, while the design and implementation of postsecondary policies is critical to determining their impacts (Domina, 2014; Musselin & Teixeira, 2014), state contexts are also crucial moderators (Perna & Finney, 2014).

While we found early and suggestive evidence that direct admissions may have increased overall first-time undergraduate enrollments and first-time in-state enrollments in Idaho yet had minimal-at-best impacts on Pell-eligible enrollments, more research using both quantitative and qualitative methods is needed to better understand the mechanisms by which direct admissions might influence students’ enrollment behaviors given its consideration and adoption across other states to date. Future studies in Idaho and beyond should consider the use of student-level data to further investigate impacts across socioeconomic and geographic contexts, and potentially consider differences along dimensions of race, rurality, or first-generation status. Given our suggestive findings that direct admissions may best serve as a complementary, rather than stand-alone, college access tool, additional work could also consider the effects of a direct admissions policy implemented in tandem with other interventions (e.g., a statewide common application, application fee waivers, or need-based financial aid programs). As direct admissions programs are developed in new states, future research using both quantitative and qualitative tools could additionally consider how program design (i.e., eligibility criteria) or deployment (i.e., notifications) impact students’ outcomes and seek to estimate how, if at all, direct admissions impacts ultimate degree attainment and subsequent labor-market outcomes.

Data Availability

Upon Request.

Code Availability

Upon Request.

Notes

Idaho freely administers the SAT to all high school juniors as a high school exit exam. During the COVID-19 pandemic, Idaho only used students’ GPA and high school credits for direct admissions, though this period is outside of our window of analysis.

We log all outcomes and three predictors (tuition and fee rate, state appropriations, and scholarship expenditures).

WICHE states include Alaska, Arizona, California, Colorado, Hawai’i, Idaho, Montana, North Dakota, Nevada, New Mexico, Oregon, South Dakota, Utah, Washington, and Wyoming.

The national donor pool is presented for brevity given three outcomes across two levels. A table for the WICHE comparison group is similar and available upon request.

In addition to speaking with policy staff in Idaho, we conducted a search on education-related legislation and policies from public newspapers and the National Conference of State Legislatures and found no other policies passed during this time that would have influenced enrollment at the state or institution level in Idaho.

The only estimates to reach statistical significance were the tests for differences in in-state and Pell grant-eligible enrollments in the artificial pre-direct-admissions period (the in-time model). Here, estimates suggest institutions in Idaho had a predicted decline in both enrollments compared to peers, though this robustness test should also be interpreted with caution given the short period available (i.e., four pre-treatment years and only two post-treatment years). As discussed further in the Appendix, this result makes us cautious about small, albeit possible, impacts on Pell-eligible enrollments but provides stronger evidence for in-state enrollment increases, given that Idaho is observed to have higher enrollments than peers in the post-treatment period.

Only the national comparison is shown for brevity. Plots for the WICHE comparison group are similar and available upon request.

References

Abadie, A. (2019). Using synthetic controls: Feasibility, data requirements, and methodological aspects. Paper prepared for the Journal of Economic Literature. Massachusetts Institute of Technology.

Abadie, A., Athey, S., Imbens, G. W., & Wooldridge, J. (2017). When should you adjust standard errors for clustering? (Working Paper No. 24003). National Bureau of Economic Research.

Abadie, A., Diamond, A., & Hainmueller, J. (2010). Synthetic control methods for comparative case studies: Estimating the effect of California’s tobacco control program. Journal of the American Statistical Association, 105(490), 492–505.

Abadie, A., Diamond, A., & Hainmueller, J. (2015). Comparative politics and the synthetic control method. American Journal of Political Science, 59(2), 495–510.

Abel, J. R., & Deitz, R. (2014). Do the benefits of college still outweigh the costs? Current Issues in Economics and Finance, 20(3), 1–11.

Alon, S., & Tienda, M. (2005). Assessing the “mismatch” hypothesis: Differences in college graduation rates by institutional selectivity. Sociology of Education, 78(4), 294–315.

Avery, C., & Hoxby, C. M. (2004). Do and should financial aid packages affect students’ college choices? In C. M. Hoxby (Ed.), College choices: The economics of where to go, when to go, and how to pay for it (pp. 239–302). University of Chicago Press.

Avery, C., & Kane, T. J. (2004). Student perceptions of college opportunities: The Boston COACH Program. In C. M. Hoxby (Ed.), College choices: The economics of where to go, when to go, and how to pay for it (pp. 355–394). University of Chicago Press.

Bai, J. (2009). Panel data models with interactive fixed effects. Econometrica, 77(4), 1229–1279.

Barr, A., & Turner, S. E. (2013). Expanding enrollments and contracting state budgets: The effect of the Great Recession on higher education. The ANNALS of the American Academy of Political and Social Science, 650(1), 168–193.

Baum, S., Kurose, C., & Ma, J. (2013). How college shapes lives: Understanding the issues. College Board.

Becker, G. S. (1962). Investment in human capital: A theoretical analysis. The Journal of Political Economy, 70(5), 9–49.

Bertrand, M., Duflo, E., & Mullainathan, S. (2004). How much should we trust differences-in-differences estimates? The Quarterly Journal of Economics, 119(1), 249–275.

Bettinger, E. P., & Evans, B. J. (2019). College guidance for all: A randomized experiment in pre-college advising. Journal of Policy Analysis and Management, 38(3), 579–599.

Bettinger, E. P., Long, B. T., Oreopoulos, P., & Sanbonmatsu, L. (2012). The role of application assistance and information in college decisions: Results from the H&R Block FAFSA experiment. The Quarterly Journal of Economics, 127(3), 1205–1242.

Bifulco, R., Rubenstein, R., & Sohn, H. (2019). Evaluating the effects of universal place-based scholarships on student outcomes: The Buffalo “Say Yes to Education” program. Journal of Policy Analysis and Management, 38(4), 918–943.

Bird, K. A., Castleman, B. L., Denning, J. T., Goodman, J., Lamberton, C., & Ochs Rosinger, K. (2019). Nudging at scale: Experimental evidence from FAFSA completion campaigns (Working Paper No. 26158). National Bureau of Economic Research.

Black, S. E., Denning, J. T., & Rothstein, J. (2020). Winners and losers? The effect of gaining and losing access to selective colleges on education and labor market outcomes (Working Paper No. 26821). National Bureau of Economic Research.

Bourdieu, P. (1986). The forms of capital. In J. G. Richardson (Ed.), Handbook of theory and research for the sociology of education (pp. 241–258). Greenwood Press.

Cabrera, A. E., & La Nasa, S. M. (2001). On the path to college: Three critical tasks facing America’s disadvantaged. Research in Higher Education, 42(2), 119–149.

Cameron, A. C., & Miller, D. L. (2015). A practitioner’s guide to cluster-robust inference. The Journal of Human Resources, 50(2), 317–372.

Cameron, A. C., Gelbach, J. B., & Miller, D. L. (2008). Bootstrap-based improvements for causal inference with clustered errors. The Review of Economics and Statistics, 90(3), 414–427.

Carnevale, A. P., Smith, N., & Strohl, J. (2013). Recovery: Job growth and education requirements through 2020. Georgetown University.

Carrell, S., & Sacerdote, B. (2017). Why do college-going interventions work? American Economic Journal: Applied Economics, 9(3), 124–151.

Castleman, B., & Goodman, J. (2018). Intensive college counseling and the enrollment and persistence of low income students. Education Finance and Policy, 13(1), 19–41.

Castleman, B., & Page, L. C. (2015). Summer nudging: Can personalized text messages and peer mentor outreach increase college going among low-income high school graduates? Journal of Economic Behavior and Organization, 115, 144–160.

Cellini, S. (2008). Causal inference and omitted variable bias in financial aid research: Assessing solutions. The Review of Higher Education, 31(3), 329–354.

Coleman, J. S. (1988). Social capital in the creation of human capital. American Journal of Sociology, 94, 95–120.

Corbin, C. (2018). State board opens free college application website. Idaho Ed News. https://www.idahoednews.org/news/state-board-opens-free-college-application-website/

Cortes, K. E., & Lincove, J. A. (2019). Match or mismatch? Automatic admissions and college preferences of low- and high-income students. Educational Evaluation and Policy Analysis, 41(1), 98–213.

Cunningham, S. (2020). Causal inference: The mixtape (V. 1.8).

Dale, S., & Krueger, A. (2002). Estimating the payoff to attending a more selective college: An application of selection on observables and unobservables. The Quarterly Journal of Economics, 117(4), 1491–1527.

Delaney, J. A., & Doyle, W. R. (2011). State spending on higher education: Testing the balance wheel over time. Journal of Education Finance, 36(4), 343–368.

Delaney, J. A., & Doyle, W. R. (2018). Patterns and volatility in state funding for higher education, 1951–2006. Teachers College Record, 120(6), 1–42.

Delaney, J. A., & Hemenway, B. (2020). A difference-in-difference analysis of “promise” financial aid programs on postsecondary institutions. Journal of Education Finance, 45(4), 459–492.

Delaney, J. A., & Leigh, E. W. (2020). A promising trend?: An event history analysis of factors associated with establishing single-institution college promise programs. In L. W. Perna & E. J. Smith (Eds.), Improving research-based knowledge of college promise programs (pp. 269–302). American Educational Research Association.

Deming, D., & Dynarski, S. (2009). Into college, out of poverty? Policies to increase the postsecondary attainment of the poor (Working Paper No. 15387). National Bureau of Economic Research.

DesJardins, S. L., Ahlburg, D. A., & McCall, B. P. (2006). An integrated model of application, admission, enrollment, and financial aid. The Journal of Higher Education, 77(3), 381–429.

DesJardins, S. L., & Toutkoushian, R. K. (2005). Are students really rational? The development of rational thought and its application to student choice. In J. C. Smart (Ed.), Higher education: Handbook of theory and research (pp. 191–240). Springer.

Domina, T. (2014). Does merit aid program design matter? A cross-cohort analysis. Research in Higher Education, 55(1), 1–26.

Doyle, W. R., & Zumeta, W. (2014). State-level responses to the access and completion challenge in the new era of austerity. The ANNALS of the American Academy of Political and Social Science, 655, 79–98.

Doyle, W. R., Dziesinski, A. B., & Delaney, J. A. (2021). Modeling volatility in public funding for higher education. Journal of Education Finance, 46(1), 536–591.

Dynarski, S. (2000). Hope for whom? Financial aid for the middle class and its impact on college attendance. National Tax Journal, 53(3), 629–662.

Dynarski, S. M., & Scott-Clayton, J. E. (2006). The costs of complexity in federal student aid: Lessons from optimal tax theory and behavioral economics (Working Paper No. 12227). National Bureau of Economic Research.

Dynarski, S. M., & Scott-Clayton, J. E. (2013). Financial aid policy: Lessons from research. The Future of Children, 23(1), 67–91.

Dynarski, S. M., Libassi, C., Michelmore, K., & Owen, S. (2021). Closing the gap: The effect of reducing complexity and uncertainty in college pricing on the choices of low-income students. American Economic Review. https://doi.org/10.1257/aer.20200451

Education Commission of the States. (2017). 50-state comparison: Does the state have a guaranteed or automatic admissions policy? http://ecs.force.com/mbdata/MBquest3RTA?Rep=SA1704

Ellwood, D. T., & Kane, T. J. (2000). Who is getting a college education? Family background and the growing gaps in enrollment. In S. Danziger & J. Waldfogel (Eds.), Securing the future: Investing in children from birth to college (pp. 283–324). Russell Sage Foundation.

Fremeth, A. R., Holburn, G. L. F., & Richter, B. K. (2016). Bridging qualitative and quantitative methods in organizational research: Applications of synthetic control methodology in the U.S. automobile industry. Organization Science, 27(2), 462–482.

Gándara, D., & Li, A. (2020). Promise for whom? “Free-college” programs and enrollments by race and gender classifications at public, 2-year colleges. Educational Evaluation and Policy Analysis, 42(4), 603–627.

Gewertz, C. (2017). Good common-core test scores get you accepted to college in this state. Education Week (September 19). http://blogs.edweek.org/edweek/high_school_and_beyond/2017/09/south_dakota_guarantees_college_admission_for_good_smarter_balanced_scores.html.

Gobillon, L., & Magnac, T. (2016). Regional policy evaluation: Interactive fixed effects and synthetic controls. The Review of Economics and Statistics, 98(3), 535–551.

González Canche, C. M. S. (2018). Nearby college enrollment and geographical skills mismatch: (Re)conceptualizing student out-migration in the American higher education system. The Journal of Higher Education, 89(6), 892–934.

Gurantz, O., Howell, J., Hurwitz, M., Larson, C., Pender, M., & White, B. (2020). A national-level informational experiment to promote enrollment in selective colleges. Journal of Policy Analysis and Management. https://doi.org/10.1002/pam.22262

Hamrick, F. A., & Stage, F. K. (2004). College predisposition at high-minority enrollment, low-income schools. The Review of Higher Education, 27(2), 151–168.

Herbaut, E., & Geven, K. (2020). What works to reduce inequalities in higher education? A systematic review of the (quasi-)experimental literature on outreach and financial aid. Research in Social Stratification and Mobility, 65, 100442. https://doi.org/10.1016/j.rssm.2019.100442

Hillman, N. W. (2016). Geography of college opportunity: The case of education deserts. American Educational Research Journal, 53(4), 987–1021.

Hossler, D., Braxton, J., & Coopersmith, G. (1989). Understanding student college choice. In J. Smart (Ed.), Higher education: Handbook of theory and research (pp. 231–288). Agathon Press.

Howell, C. (2018). Surprise! You are accepted to college: An analysis of Idaho’s direct admissions initiative (Dissertation). Boise State University.

Howell, C. (2019). Direct admissions at work: The Idaho experience (Presentation). Forum on the Future of Public Education, University of Illinois. https://forum.illinois.edu/2019-conference