Abstract

We examine the predictability of 299 capital market anomalies enhanced by 30 machine learning approaches and over 250 models in a dataset with more than 500 million firm-month anomaly observations. We find significant monthly (out-of-sample) returns of around 1.8–2.0%, and over 80% of the models yield returns equal to or larger than our linearly constructed baseline factor. For the best performing models, the risk-adjusted returns are significant across alternative asset pricing models, considering transaction costs with round-trip costs of up to 2% and including only anomalies after publication. Our results indicate that non-linear models can reveal market inefficiencies (mispricing) that are hard to reconcile with risk-based explanations.

1 Introduction

Over the last decades, an unprecedented number of stock market anomalies have been published by researchers in the field of asset pricing theory and factor investing.Footnote 1 To summarize the number of factors and anomalies published in journals, as of January 2019, there were over 400 signals documented in academic publications (Harvey and Liu 2019). Described in Cochrane’s presidential address as a factor zoo, the questions about “which characteristics really provide independent information about average returns” and how to overcome the “multidimensional challenge” remain an ongoing debate (Cochrane 2011).

The challenge of navigating the high-dimensional factor zoo is amplified by the issue of data p-hacking,Footnote 2 non-stationarityFootnote 3 and low chronological depth,Footnote 4 potential conditioning and biases from former literature,Footnote 5 and limited access to out-of-sample data. Traditional linear instruments such as ordinary least squares regressions might not be able to overcome these issues. Thus, the problem of either selecting the correct subset of factors with real predictive power or cleverly combining the predictive power of the anomaly set remains an ongoing debate. The tremendous enhancements in machine learning and artificial intelligence, and the ability of smart algorithms to uncover complex relationships in large datasets, have the potential to overcome this issue.

As probably one of the most innovative and fastest developing computer technologies of the last decade, machine learning is predicted to fundamentally transform and disrupt entire industries. Referring to the latest Gartner Hype Cycle for Emerging Technologies (Panetta 2019), many innovation triggers are directly or indirectly linked to machine learning advances. We follow the definition of Murphy (2012, p. 1) and define machine learning as “a set of methods that can automatically detect patterns in data, and then use the uncovered patterns to predict future data.” In contrast to conventional algorithms, where the computer receives input data and the specific program logic to calculate the result, machine learning algorithms receive both input and output data in the form of training samples to derive the program logic by themselves. This ability to describe complex relationships through autonomous learning from experience (Samuel 1959) without explicitly coding any rules and exceptions is particularly suitable for the field of asset pricing.

Recently, researchers began to explore the potential of these algorithms in the context of stock market anomalies. Among these papers, Gu et al. (2020b) find that machine learning models can be used to create long-short strategies with positive and significant alphas. In the same way, other studies find promising results (e.g., Azevedo et al. 2022; Chen et al. 2020; Tobek and Hronec 2021). However, more recently, Avramov et al. (2022) find that the alphas of long-short strategies of anomalies enhanced by machine learning are attenuated after imposing economic restrictions.

Thus, with the proliferation of machine learning models in financial research, the literature still lacks an assessment of the implications of these models for asset pricing, as well as evidence on how robust these models are to the underlying assumptions, approaches, and specifications. Furthermore, there is an ongoing debate on whether the results are driven by mispricing, risk, data dredging, or even limits-to-arbitrage. We reassess the predictability of 299 capital market anomalies enhanced by 30 machine learning approaches and over 250 models in a dataset with more than 500 million firm-month anomaly observations.

Among the machine learning models tested, we include six different algorithms, seven feature reduction methods, and multiple variations of training approaches. The anomalies are used to predict a stock’s next-month return, on which we form decile portfolios with the same standardized portfolio-sort strategy. This approach allows us an accurate comparison of the linear and the machine learning models and a robust estimation of the additional value of non-linear interaction effects.

Among the more than 250 models tested, we find that over 80% yield equally good or better returns than our linearly constructed baseline factor, which achieved average monthly returns of 0.92%. For our top-performing models, we see significant monthly returns of 1.8–2.0%, indicating about 1.0% additional return above the linear benchmark. Among the best-performing algorithms are tree-based methods such as the GBM and DRF, as well as neural networks. Based on hyperparameter optimization, feature interpretation methods, and the inclusion of transaction costs, data dredging does not seem to be the underlying cause. The machine learning models that underperformed the linear models either use a rolling window of only five years or use shrinkage methods. These results are an indication that, overall, most of the stock anomalies seem to have some predictive power and do not seem to add noise to the model. Furthermore, our results show that a rolling window of only five years is not enough to capture the importance of each anomaly in the model.

The positive and significant alphas from the machine learning models are robust against transaction costs with round-trip costs of up to 2.4%, and remain stable across different parameter sets and when including anomalies only after publication, making it unlikely that the findings are merely a consequence of p-hacking. Furthermore, the returns are not explainable by common factor models, indicating mispricing effects and market inefficiencies within the stock market, and casting doubt on the current form of standard asset pricing models.

Our paper contributes to the current literature in several ways. First, our empirical analysis provides a replication study for classical anomaly research, reinforcing the current set of meta-studies (Jacobs and Müller 2020; McLean and Pontiff 2016) and replication studies (e.g., Kim and Lee 2014) and confirming the issue of p-hacking. In contrast to other scientific areas, in finance and accounting, the publication of replication studies is not encouraged or acknowledged particularly well (Harvey 2017).Footnote 6 However, replication studies are an “essential component of scientific methodology” (Dewald et al. 1986, p. 600), with out-of-sample data and modified assumptions being necessary to distinguish true causation from correlation. We contribute to the recent awareness of meta-studies in the field by testing a subset of 299 anomalies and verifying former findings.

Our second contribution lies in the broad assessment of machine learning capabilities in asset pricing and anomaly research (Chen et al. 2020; Gu et al. 2020b). Beyond the empirical analysis and former literature, we applied several tools to test the returns’ robustness, with a positive outcome. Our hyperparameter optimization yields on average robust returns, thereby excluding parameter picking in the out-of-sample dataset as a cause for our findings. As an important extension to former literature, our post-publication model variation only uses past data and methodology available at a specific time. This approach can strictly exclude any forward-looking bias both in terms of data and methodology, decreasing the likelihood of data dredging and underlining the existence of real interaction effects causing the additional return.

As a third contribution, our study quantifies the value of non-linear effects among the factor zoo. As the results can neither be traced back to data dredging nor risk components, our findings cast significant doubt on the market’s efficiency and current asset pricing models. Our findings support that the market can efficiently erase arbitrage opportunities from linear effects. However, more complex structures remain exposed to investors, which could increase our understanding of essential market mechanisms and the EMH and lead the way to a new generation of asset pricing models.

2 Related literature

Our paper also contributes to the growing field of the use of machine learning in asset pricing. Whereas Snow (2020, 2020a) describes how the overall portfolio construction in asset management benefits from various machine learning approaches, recent studies introduced more concrete application cases and empirical tests specifically in the context of anomaly-based trading strategies. Distinguished by algorithms, researchers tested, among others, approaches with shrinkage methodsFootnote 7 (e.g., Han et al. 2018; Chinco et al. 2019; Ban et al. 2018), the class of Support Vector Machines (SVM) (e.g., Cao and Tay 2003; Matías and Reboredo 2012; Dunis et al. 2013; Ren et al. 2019; Huang et al. 2005; Trafalis and Ince 2000), as well as tree-based methods (e.g., Moritz and Zimmermann 2016; Tan et al. 2019; Qin et al. 2013; Basak et al. 2019; Bryzgalova et al. 2019) such as the Gradient Boosting Machine (GBM) or the Distributed Random Forest (DRF). Furthermore, a majority of papers applied various architectures of neural networks to predict future asset prices (e.g., Heaton et al. 2017; Abe and Nakayama 2018; Fischer and Krauss 2018; Feng et al. 2018; Zhang et al. 2020; White 1988; Dunis et al. 2008; Adeodato et al. 2011). Other, less widespread methodologies include Bayesian inference (e.g., Bodnar et al. 2017), autoencoders (e.g., Gu et al. 2020a), and reinforcement learning (e.g., Moody and Saffell 2001; Zhang et al. 2020; Li et al. 2019).

In their innovative study, Gu et al. (2020b) compare diverse machine learning methods, including generalized linear methods, boosted regression trees, random forests, and neural networks, to estimate expected returns of stocks. The authors use information from 94 firm characteristics, as well as eight macroeconomic predictors, in a sample from 1957 to 2016, and they find that trees and neural networks have the best performance. For instance, a zero investment long-short portfolio of deciles based on a neural network with three hidden layers (NN3) reports a monthly value-weighted alpha of 1.76% controlled for the Fama and French (2018) six-factor model. In the same vein, Tobek and Hronec (2021) test a similar setting with an international sample from 1963 to 2018. They find that 153 stock market anomalies enhanced by neural networks report a value-weighted alpha controlled for the Fama and French (2015) five-factor model of 0.843% (t-statistic of 5.668). Chen et al. (2020) propose an approach that combines four neural networks to take advantage of conditioning information to estimate individual stock returns. The authors use 46 stock anomalies and 178 macroeconomic time series in a sample that spans from 1967 to 2016 as an input to estimate stock returns. Their model reports an annual Sharpe ratio of 2.6 compared to 1.7 for the linear special case of their model.

More recently, Avramov et al. (2022) reassess the results from Gu et al. (2020b), Chen et al. (2020), and others by applying economic restrictions, such as excluding microcaps, distressed stocks, as well as episodes of high market volatility. In a sample from 1987 to 2017, they find that economic restrictions significantly weaken the profitability of machine learning. For instance, a Fama and French (2018) six-factor value-weighted alpha based on NN3 from Gu et al. (2020b) is 0.312% (t-statistic of 1.51) after excluding microcaps, while the alpha is 2.23% (t-statistic of 8.06) for the full sample.

Our paper sheds light on these results by analyzing the limits-to-arbitrage and different asset pricing models in a large range of machine learning approaches. Our findings are consistent with Gu et al. (2020b), who also explore a wide range of machine learning models with an emphasis on comparative analysis among the models. Our paper diverges from theirs by checking how robust these results are, addressing data dredging concerns, and analyzing the implications of these models in asset pricing. Although our empirical results are in line with the findings of Tobek and Hronec (2021) and Gu et al. (2020b), confirming significant benefits from using non-linear methods, by testing more than 250 models, we find that not all machine learning models outperform a baseline (linear) model. In other words, the superior performance of machine learning models can be conditional on the (ex-post) choice of models and parameters. Among alternative models and parameters that can drive the results, we find that, in general, dimensionality reduction models tend to underperform other non-linear models, which is an indication that machine learning models, such as GBM and DRF, can handle the apparent high dimensionality of the (299) anomalies well. Furthermore, by analyzing alternative training and validation samples based on static windows, (five-year and ten-year) rolling windows, and expanding windows, we find that adding more recent data in the training and validation samples does not necessarily improve the results, which indicates that the patterns of the relation between anomalies and returns do not seem to change over time.

Finally, our paper provides insight into the findings from Avramov et al. (2022). By showing positive and significant alphas across eight factor models even using anomalies after publication, as well as by reporting that the returns of machine learning methods can remain positive and significant even with round-trip costs of up to 240 basis points, we find important evidence that limits-to-arbitrage cannot fully explain the strong profitability of machine learning methods.

In the following section, we present the data sources and the underlying methodology of our study. We present our empirical findings in two parts. First, we show the performance of both individual anomalies and the linear baseline factor (Sect. 4). These results serve as a replication study and benchmark for our more advanced machine learning models presented in Sect. 5. In Sect. 6, we discuss the empirical findings, advantages, and pitfalls of our approaches. In particular, we perform a model comparison, review the interpretation and parameter tuning in machine learning models, and test the results against common factor models. Section 7 summarizes the study’s main findings, its implications, and an outlook on further research questions.

3 Data and methodology

Our empirical study consists of three steps. First, we calculate the raw signals for each firm-month observation. Second, based on this dataset, we apply a classical portfolio-sort analysis to examine each anomaly’s performance individually and create a consolidated baseline factor as a linear benchmark, merging the original anomalies into one single meta signal. Third, we use the anomaly dataset as input for our non-linear machine learning models. We test various algorithms, feature reduction methodologies, and training approaches to investigate the models’ respective predictive power and their additional profit compared to our linear baseline model.

3.1 Data origin, preprocessing and anomaly construction

For the anomaly calculation, we largely follow the open-source code published by Chen and Zimmermann (2020). We use data provided by the Wharton Research Data Service (WRDS), restricting ourselves to the U.S. market to ensure the highest data quality and make the study more comparable with the anomaly discovery’s original publications. In particular, we use CRSP for both the monthly and daily pricing data and COMPUSTAT for the annual and quarterly fundamental data. Thereby, we follow the assumptions of Chen and Zimmermann (2020), namely applying a lag of six months for annual and three months for quarterly accounting data.Footnote 8 We are conservative in our assumption of the reporting lag to avoid look-ahead bias. The recommendation and earnings-forecast-related anomalies are constructed with additional data provided by the Institutional Brokers Estimate System (I/B/E/S). A small fraction of anomalies requires more specific data, which includes the Sin Stock classification of Hong and Kacperczyk (2009), the governance index from Gompers et al. (2003), and macroeconomic data from the Federal Reserve Bank of St. Louis (2020). We refer to the source code of Chen and Zimmermann (2020) for more details on the data gathering process. While we exclude some of the original data sources due to limited accessibility, we could calculate a set of 299 anomalies in total. Internet Appendix A gives readers a detailed overview of the anomalies evaluated in this study.

While we do not impose any strict filters for prices or market cap during the data gathering process, we follow Griffin et al. (2010) by including only common equity (i.e., stocks with a WRDS share code of 10, 11, or 12) and excluding any stock that is not listed on the U.S. exchanges NYSE, NASDAQ or AMEX. However, as the individual anomaly evaluation follows the original authors’ proposal, some anomalies have specific selection filters applied during the portfolio construction process. Internet Appendix A includes a list of applied filters for each anomaly for this sample.

The anomalies we calculated are split into Accounting signals (175), Event signals (13), Analyst-based anomalies (18), Price-related signals (64), Trading (18), and other signals (11). This broad range has the potential to incorporate complex relationships and correlations on future returns. The machine learning algorithms’ objective is to exploit these hidden patterns for profitable trading strategies.

We calculate the anomaly set for every firm-month observation available, ranging from 1945 to 2019. However, our main analysis focuses only on the period from 1979 to 2019 (492 months of observations) to reduce the number of missing values in the training set while simultaneously ensuring a large enough and diverse dataset to find profitable patterns. Particularly, analyst recommendations and quarterly-based fundamental data often do not match our quality and quantity requirements before 1979. On average, we build our calculations on 5573 firms per month, with a peak in November 1997 with 7939 firms. Most stocks originate from the NASDAQ exchange, while in terms of market capitalization, the NYSE remains the most important exchange. This pre-filtering leads to a total of 2,742,141 unique firm-month observations, whereby on average, 197 out of 299 signals are available per observation, resulting in 542,346,630 data points or unique firm-month-anomaly observations on which both the baseline factor and machine learning models are trained. The size of this dataset is comparable with common meta-studies about stock anomalies such as McLean and Pontiff (2016) with 97 signals, Hou et al. (2020) investigating 447 anomalies, Green et al. (2017) calculating 94 anomalies, and Harvey et al. (2016) verifying 315 stock characteristics.

Fig. 1 a Illustrates the Spearman correlation among the 44,551 unique anomaly pairs, consolidated on the number of anomaly pairs per Spearman correlation value. As indicated by the graph, the dataset is rather symmetrically split between positive and negative correlations, with the 90% interval depicted as a dotted line ranging from −0.21 to +0.27. The low correlation among anomalies underlines the high dimensionality of the dataset. b Describes the distribution of Spearman correlations between each anomaly and a stock’s next-month return. The graph indicates a relatively low correlation between individual anomalies and future return as well as a comparably symmetric distribution, with the 90% interval depicted as a dotted line ranging from −0.04 to +0.06

Figure 1a shows the paired Spearman correlation of our anomaly set, consisting of 44,551 unique correlation pairs. The graph demonstrates the high dimensionality of our dataset, as nearly 90% of anomaly pairs are correlated only in the range between −0.21 and 0.27, which is in conformance with other meta-studies of anomalies (Jacobs and Müller 2016). Only a few signals are correlated strongly due to small variations of the same anomalies (e.g., quarterly and annually updated anomalies). We refrain from filtering these anomalies, as both the machine learning algorithms and the feature selection methods proposed should be able to handle this form of data. Similarly, about 90% of the anomalies have an absolute Spearman correlation with the future return of only about 0.05, as depicted in Fig. 1b. While a single signal has limited expressiveness, the inclusion of hidden, non-linear structures with machine learning models could potentially provide significant outperformance.
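The pair count and the rank correlation behind Fig. 1 can be illustrated with a minimal sketch. The helper names `average_ranks`, `pearson`, and `spearman` are hypothetical and the implementation is an illustration using only the Python standard library; it is not the code used in the study.

```python
from math import comb, sqrt

def average_ranks(values):
    """Return 1-based ranks; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the run while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        avg = (pos + end) / 2 + 1
        for k in order[pos:end + 1]:
            ranks[k] = avg
        pos = end + 1
    return ranks

def pearson(x, y):
    """Pearson correlation of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / sqrt(vx * vy)

def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))

# Number of unique pairs among 299 anomalies: 299 * 298 / 2.
n_pairs = comb(299, 2)
```

Because Spearman correlation depends only on ranks, it captures monotonic but non-linear relations, which is the same property that motivates the percent-rank preprocessing and the portfolio sorts used later.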

Although we calculated absolute values for our anomalies, we use only percent-ranked anomaly values for portfolio construction and machine learning training. For each anomaly and month, we sort the values between 0 and 1. Transforming machine learning features in a preprocessing step to a common scale increases the performance of the algorithms (Singh and Singh 2020). While there exists a vast variety of data normalization approaches (Nayak et al. 2004), we followed the percent-ranked approach of Stambaugh and Yuan (2017) as it does not affect the portfolio-sort approach, which only cares about anomalies’ absolute monthly rank. Additionally, it allows for a per-month rescaling, which ensures the prevention of any forward-looking bias, and facilitates handling missing values by replacing them with a median of 0.5.
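The per-month percent ranking with median imputation can be sketched as follows. The function name `percent_rank` is hypothetical, and the exact ranking convention (here `(rank - 1) / (n - 1)` with ties sharing their average position) is an assumption, since the text only states that values are mapped to the range between 0 and 1 and that missing values are set to 0.5.

```python
def percent_rank(values):
    """Percent-rank one anomaly across all firms of a single month.

    `values` holds one entry per firm; None marks a missing value.
    Non-missing values are mapped to [0, 1]; missing ones become 0.5.
    """
    present = [v for v in values if v is not None]
    n = len(present)
    ranked = []
    for v in values:
        if v is None or n < 2:
            # Missing value (or nothing to rank against): use the median 0.5.
            ranked.append(0.5)
            continue
        below = sum(1 for u in present if u < v)
        ties = sum(1 for u in present if u == v) - 1
        ranked.append((below + 0.5 * ties) / (n - 1))
    return ranked

# Example: the second firm has no value for this anomaly in this month.
example = percent_rank([3.0, None, 1.0, 2.0])
```

Since the ranking uses only values from the same month, no information from later months enters the features.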

3.2 Portfolio construction methodology and baseline factor composition

Similar to the paper of Chen and Zimmermann (2020), we test anomalies with a simple portfolio-sort strategy. For each month and anomaly, we create portfolios and calculate the spread of the long-short portfolio.

The stock-characteristic portfolio-sort approach is among the most common and dominant instruments to measure an anomaly’s potential profitability and determine its statistical significance. Since the 1970s, many empirical studies such as Basu (1977) and Fama and French (1992) applied the portfolio-sort methodology. Multiple reasons explain this popularity: first, the approach’s simplicity in terms of construction and interpretation. Second, its ability to handle a large and varying number of stocks in non-stationary and potentially infinite time series. Third, the capability to deal not only with linear but, more generally, monotonic relations between signals and return. We apply these capabilities by using portfolio sorts for our anomaly values and machine learning results, ranking every stock for each month into a fixed number of portfolios. We then calculate the spread of the long-short portfolio as the monthly return of our strategy and a t-statistic over the time series of returns, which allows for an assessment of the strategy’s statistical significance. A popular alternative methodology is the Fama-MacBeth cross-sectional regression approach (Fama and MacBeth 1973), which, due to its regression characteristic, is more vulnerable to outliers and thus microcap effects. Furthermore, it is limited to linear relationships (Hou et al. 2020), making the non-parametric portfolio sort the preferable approach in our case.
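A minimal sketch of the portfolio sort for a single month, and of the t-statistic over the resulting time series of spreads, is given here. It assumes equal weighting within each leg for brevity and a plain t-statistic without any autocorrelation adjustment; both are simplifying assumptions, and the function names are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev

def long_short_spread(signal, next_ret, n_portfolios=10):
    """Top-minus-bottom portfolio return for one month (equal-weighted).

    `signal` is the sorting variable per stock, `next_ret` the return
    realized in the following month for the same stocks.
    """
    size = len(signal) // n_portfolios
    if size == 0:
        raise ValueError("not enough stocks to fill every portfolio")
    order = sorted(range(len(signal)), key=lambda k: signal[k])
    short_leg = order[:size]    # lowest signal values
    long_leg = order[-size:]    # highest signal values
    return mean(next_ret[k] for k in long_leg) - mean(next_ret[k] for k in short_leg)

def t_statistic(spreads):
    """t-statistic of the mean monthly spread against zero."""
    return mean(spreads) / (stdev(spreads) / sqrt(len(spreads)))

# Toy month with 20 stocks whose return rises with the signal.
toy_signal = list(range(20))
toy_returns = [0.01 * k for k in range(20)]
toy_spread = long_short_spread(toy_signal, toy_returns)
```

Only the ordering of the signal matters for the sort, so any monotonic transformation of the signal, such as percent ranking, leaves the portfolios unchanged.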

We conduct the portfolio sort following the original authors’ methodology as strictly as possible in terms of quantiles (number of portfolios), weighting (value-weight and equal-weight), holding period and rebalance frequency, starting month, total examination period, and filtering of minimum prices and exchanges. However, we additionally assess anomalies based on a standardized approach. Thereby, we do not apply any price or exchange filter, adapt the anomalies to a monthly rebalancing and holding frequency, and conduct a decile portfolio sort. This standardized methodology allows a consistent comparison and benchmark with our baseline factor and is also an attempt to minimize p-hacking issues due to clever parameter picking. Additionally, a standardized guideline in portfolio construction is a pre-requirement for our machine learning-based portfolios. The standardized environment is calculated both for equally-weighted and value-weighted portfolios. Equally-weighted portfolios typically are hard to outperform, and most of the original publications are based on them. However, as noted by Fama (1998), equally-weighted portfolios give more weight to small stocks and are thus more negatively affected by the bad model problem (i.e., explaining the average return of small stocks). Therefore, the interpretation and decision-making during our empirical study are based on the standardized, value-weighted results. Internet Appendix C includes the results of both weighting methodologies for our machine learning models.

For each portfolio-anomaly-month combination, we not only calculate the return in the form of the long-short spread but also include the number of stocks positioned as long and short and the one-side turnover rate. The latter follows the definition of Hanauer and Windmueller (2019):

where \(t\) is the current month, \(i\) the stock identifier, \(N_t\) the number of stocks in the dataset in month \(t\), and \(w_{i,t}\) the weight of stock \(i\) in the portfolio of month \(t\).

The turnover rate is defined as the portfolio’s percentage of stocks necessary to rebalance, indicating the potential trading costs associated with a live implementation. We use this indicator for a more practical evaluation of our models by including round-trip costs per strategy in Sect. 6.4.
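The turnover measure can be illustrated with a short sketch. It assumes that the one-sided turnover in month \(t\) is half the sum of absolute weight changes relative to the previous month, \(\tfrac{1}{2}\sum_{i=1}^{N_t} |w_{i,t} - w_{i,t-1}|\). This form is consistent with the symbols listed above and with the term "one-side", but it is an assumption rather than a formula confirmed by the text, and the function name is hypothetical.

```python
def one_sided_turnover(prev_weights, curr_weights):
    """Assumed one-sided turnover: half the sum of absolute weight changes.

    Both arguments map a stock identifier to its portfolio weight;
    a stock absent from a month is treated as having weight zero.
    """
    stocks = set(prev_weights) | set(curr_weights)
    total_change = sum(
        abs(curr_weights.get(s, 0.0) - prev_weights.get(s, 0.0)) for s in stocks
    )
    return 0.5 * total_change

# Stock B is fully replaced by stock C, so half the portfolio is traded.
toy_turnover = one_sided_turnover({"A": 0.5, "B": 0.5}, {"A": 0.5, "C": 0.5})
```

Under this assumed definition, a value of 0.5 means that half of the portfolio is sold and replaced in the rebalancing month.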

Besides the portfolio-sort analysis for each individual anomaly, we calculate another signal, the baseline factor, as a linear combination of the anomaly set. Thereby, we follow the approach of Stambaugh and Yuan (2017), calculating the new signal as the arithmetic average of the percent-ranked 299 anomalies for each firm-month observation from 1979 to 2019. Furthermore, we require at least 100 non-missing anomalies for a firm each month to be included in the investment universe to ensure a diversified enough set of signals for the new factor. The baseline factor assessment is performed in the same way as evaluating the individual anomalies, using the portfolio-sort approach with the standardized methodology described above. We use the baseline factor as a benchmark tool to assess our machine learning models in Sect. 5.
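The baseline factor for one firm-month can be sketched as below. The function name `baseline_signal` is hypothetical, and averaging only over the non-missing anomalies (rather than over values imputed with 0.5) is an assumption; the text specifies the arithmetic average and the minimum of 100 available anomalies, but not how missing entries enter the mean.

```python
def baseline_signal(ranked_anomalies, min_available=100):
    """Average the percent-ranked anomalies of one firm in one month.

    `ranked_anomalies` holds up to 299 values in [0, 1], with None for
    missing anomalies. Returns None if the firm has fewer than
    `min_available` non-missing anomalies and is therefore excluded.
    """
    present = [v for v in ranked_anomalies if v is not None]
    if len(present) < min_available:
        return None
    return sum(present) / len(present)

# Toy firm-month with a lowered threshold to keep the example short.
toy_baseline = baseline_signal([0.2, 0.8, None], min_available=2)
```

The resulting signal is then sorted into decile portfolios in the same way as any individual anomaly.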

3.3 Introduction into examined machine learning models

After having a baseline benchmark for our dataset, we use the same input data of 299 percent-ranked anomalies from 1979 to 2019 as a foundation for our machine learning algorithms. This subsection describes the overall approach, as well as the working mechanism of the selected models. For better reading comprehension, we only give an overview of the applied techniques here. The more detailed procedure is described, along with the presentation of the empirical findings.

We focus on investigating the additional performance of machine learning algorithms compared to traditional factor construction. In other words, we are interested in adapting the currently linear function f(x) from our baseline factor into a non-linear function g(x) using machine learning-based algorithms. To accomplish this, we restrict ourselves to stringently using only the same input data, namely the 299 anomalies per firm and month. Furthermore, to ensure the best comparability, we apply the same portfolio-sort approach with the standardized construction settings described above. The optimal sorting characteristics in this portfolio construction environment in terms of return would be to create decile portfolios based on a firm’s next-month return. Therefore, we train our models to map future stock performance for each firm and month on the anomaly set, using the predicted returns as sorting criteria for the portfolio construction. This new, machine learning-based factor can then be benchmarked against existing literature.

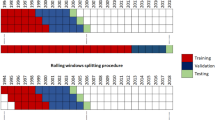

This supervised regression approach can be further distinguished by testing various target variables. In the following, we test both the absolute next-month returnFootnote 9 and the percent-ranked next-month return, with the latter having the advantage of being scaled and standardized in the same way the input variables are preprocessed. As with every supervised learning approach, we split our data into training and test samples. For the training sample from 1979 to 2002, we apply a 3-fold cross-validation strategy for more robust metrics estimation.Footnote 10 However, the performance measurement was done with models trained on the full training set until 2002 but tested against an out-of-sample environment with data from 2003 onwards.

A common preprocessing step in data science is selecting only the most crucial input signals or applying a feature reduction method to the dataset to reduce any noise. This handling of the data’s high-dimensionality might be beneficial to increasing the signal-to-noise ratio. We refer to Sect. 5.2 for a description of the applied algorithms.

All these approaches have in common that they are strictly static, meaning that they were not updated when new observations became available during backtesting. This training process allowed us a relatively conservative estimation focusing more on stationary patterns and reduced the risk of false positives due to the low number of models. However, particularly for practitioners, it might be interesting to update the model over time to further increase its performance by including the most recent data in the training process. We conduct this rolling training approach by retraining our models on new observations becoming available in the out-of-sample approach, avoiding any forward-looking data bias. While the overall approach would even support monthly training, we restrict our study to yearly updates due to the computational effort, which can be considered enough for the overall testing of the hypothesis. Further research and practical implementations might increase training frequency.
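The yearly retraining schedule can be sketched as a generator of training windows. The function name and its parameters are hypothetical; the sketch assumes that each model is trained on data up to the end of the year before the test year, starting no earlier than 1979, with either an expanding window or a rolling window of fixed length.

```python
def training_windows(first_year=1979, first_test_year=2003,
                     last_test_year=2019, rolling_years=None):
    """Yield (train_start, train_end, test_year) for yearly retraining.

    rolling_years=None gives an expanding window from `first_year`;
    an integer gives a rolling window of that many years.
    """
    for test_year in range(first_test_year, last_test_year + 1):
        train_end = test_year - 1  # only data known before the test year
        if rolling_years is None:
            train_start = first_year
        else:
            train_start = max(first_year, train_end - rolling_years + 1)
        yield train_start, train_end, test_year

expanding = list(training_windows())
rolling_five = list(training_windows(rolling_years=5))
```

In every window the last training year precedes the test year, which is what keeps the retraining free of forward-looking data.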

As derived from the literature, among the best performing machine learning algorithms, specifically for finance, are tree-based algorithms such as GBM, DRF, and eXtreme Gradient Boosting (XGBoost). We examine most approaches based on these algorithms and add the Generalized Linear Model (GLM) for comparison with a less-complex model. Thereby, we rely on the capabilities of the popular open-source machine learning library H2O.ai (2020a). Additionally, as neural networks are probably the most popular machine learning technique, Sect. 5.4 shifts the focus to their performance, as their architecture requires adjustments in construction and training processes. We use the popular Tensorflow (2020) framework developed by the Google Brain team. While an in-depth description of each applied machine learning algorithm would be out of this work’s scope, we briefly introduce each algorithm in the following. We refer to the original documentation and source code for specific implementation details.

The GLM supports a variety of regression types for different distribution and link types. In its simplest variant, the output is a linear regression model. In our case, we use the default identity link for both target variables. The GBM, first described by Friedman (2001, 2002), is an ensemble method that builds multiple decision trees. The boosting technique makes former weak learners, such as decision trees, strong and more robust (Zhou 2012). By weighting the individual learners’ predictive power by their performance and focusing future learners on misclassified data, GBM sequentially refines its estimations. In our study, we used the implementation of Hastie et al. (2001) as described in the H2O library documentation. The XGBoost algorithm originates from the mechanisms of the GBM. However, it has some adaptations, particularly concerning dropout regularization. We use DART, the dropout regularization for regression trees (Rashmi and Gilad-Bachrach 2015). As proposed by Breiman (2001), DRF, similarly to GBM, builds on many decision trees, each using only a random fraction of the available dataset. For prediction, the average of all trees is used. This procedure is called bagging (Breiman 1996).

Neural networks differ from the tree-based algorithms as they aim to imitate the working of the human brain through a set of neuron layers. Although neural networks were developed in the 1950s, recent achievements and performance enhancements have led to an increasing number of different architectures and application cases. We focus on two forms. First, we apply the Feedforward Neural Network (FNN) as the most basic architecture to test its suitability in the context of stock market anomalies. We use both a smaller, tunnel-formed and a more extensive, larger architecture illustrated in Internet Appendix D. Another form of neural network particularly effective in time series analysis is the Recurrent Neural Network (RNN). It allows the processing of multiple past observations, creating a form of memory for upcoming predictions. The latter ability in particular might be promising in the context of stock markets.

4 Creating a baseline: classical portfolio construction

The following section presents the empirical results of our classical anomaly research methodology, replicating most findings of former meta-studies. The outcomes provide us with a benchmark to assess our non-linear models in later parts of the study.

4.1 Individual anomaly returns derived from a long-short portfolio-sort strategy

Internet Appendix B lists the portfolio performance of each of our 299 underlying anomalies for both the original sample and our standardized sample. As the latter is also used for our machine learning models, only this sample’s results should be used for comparative analyses. While the original sample follows, whenever possible, the original authors’ construction details and period as outlined by Chen and Zimmermann (2020) (see Internet Appendix A for more details), our standardized sample strictly focuses on the timeframe from 1979 to 2019, using the same construction guideline as described in the previous section. Furthermore, we measure the change after publication as the mean return difference after anomaly publication based on the standardized sample.

When using the authors’ original sample period and anomaly construction methodology, the average monthly return per anomaly is around 0.53%, with a mean t-statistic of 3.15. 70% of anomalies have a t-statistic above 1.96, and 47% have one above three, the minimum significance hurdle for new factor discoveries suggested by Harvey et al. (2016). These results are not surprising, as most published anomalies have significant returns due to the academic journals’ incentive system mentioned above.

These figures change once we apply the same standardized portfolio construction framework across all anomalies over the full time period from 1979 to 2019. The mean return of anomalies drops to 0.31% per month, becoming mostly insignificant with an average t-statistic of 1.38. Of the 299 anomalies examined, only 33% still overcome the t-statistic hurdle of 1.96. With the higher t-hurdle of three, only 46 anomalies remain significant. These results suggest widespread p-hacking in previous anomaly research and are in accordance with the findings of previous meta-studies. Due to the strong dependency of portfolio returns on construction settings such as weighting and rebalancing frequency, many anomaly findings might only be false-positive discoveries, resulting from a particular set of parameters that happened to produce significant returns for the examined period. These anomalies weaken and disappear in a standardized environment. However, if they genuinely reflected either mispricing or risk, the returns should be more robust and less dependent on the construction settings.
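The hurdle comparison above can be sketched as follows. The example assumes a plain t-statistic of the mean monthly long-short return against zero, without any autocorrelation adjustment, which may differ from the estimator used in the study. The return series and names are hypothetical.

```python
import math
from statistics import mean, stdev


def t_statistic(monthly_returns):
    """t-statistic for the null hypothesis that the mean monthly return is zero."""
    n = len(monthly_returns)
    return mean(monthly_returns) / (stdev(monthly_returns) / math.sqrt(n))


def share_above(t_stats, hurdle):
    """Fraction of anomalies whose absolute t-statistic exceeds the hurdle."""
    return sum(abs(t) > hurdle for t in t_stats) / len(t_stats)


# Hypothetical long-short return series for two illustrative anomalies.
toy_returns = {
    "strong": [0.02, 0.01, 0.03, 0.02, 0.01, 0.02, 0.03, 0.01],
    "weak": [0.02, -0.03, 0.01, -0.01, 0.03, -0.02, 0.00, 0.01],
}
t_stats = {name: t_statistic(r) for name, r in toy_returns.items()}

for hurdle in (1.96, 3.0):
    print(hurdle, share_above(list(t_stats.values()), hurdle))
```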

Among the best performing anomalies in both samples in terms of return and statistical significance are the Earning Announcement Return (Chan et al. 1996), the Industry Return of Big Firms (Hou 2007), and the Firm-Age Momentum (Zhang 2006). Interestingly, all three of these top-performing anomalies are members of the data category “Price.” Consequently, we examined the average returns per category: while the categories “Accounting,” “Event,” “Analyst,” and others perform at a similar level, in the range of 0.21% to 0.28% return per month, “Trading” underperforms, with only 0.15%. In contrast, “Price” anomalies significantly exceed the other categories, with average monthly returns of 0.49%.

Besides the performance differences between original and standardized portfolio construction, we examined the publication effect documented by McLean and Pontiff (2016). With a median relative decrease in value-weighted returns of 74%, reflecting the combined statistical bias and publication effect, the results are in line with the 58% reported in the original study and the 66% for the value-weighted study of Jacobs and Müller (2020). Out of 228 anomalies for which we could calculate pre- and post-publication-sample statistics, 160 signals faced decreasing returns, with an average absolute decline of 0.47% per month. These findings underline the anomalies’ non-stationary character and illustrate how research can influence future anomaly returns. Those signals that previously suggested a profitable arbitrage mostly fade away as investors adapt and exploit these return spreads.

However, former research is usually focused on single, linear dependencies. By combining multiple firm characteristics into a single signal for portfolio construction, hidden structures might allow further profit opportunities. These patterns might be even more profitable for the non-linear combinations that machine learning algorithms are capable of uncovering. Therefore, in the next section, we construct a linear factor as a combination of all anomalies. This baseline factor indicates the potential benefits of a multi-anomaly-based strategy and serves as a benchmark for more advanced, non-linear machine learning models in Sect. 5.

4.2 Multi-anomaly-based baseline factor as a linear benchmark

The baseline factor is a linear combination of all available anomalies per firm-month observation. Averaging the percent-ranked values of the anomalies in a standardized sample aims to reduce individual signals’ data mining issues, increasing both the returns’ stability and reliability. In the case of both value-weighted and equally-weighted settings, we see a significant outperformance of the baseline factor, not only regarding averaged groups of anomalies by data category but also across the full spectrum of individual anomalies. Looking at the average monthly development as depicted in Table 1, we see that over the full period, the return of equally-weighted (value-weighted) is 3.26% (1.95%) per month. The statistical significance is on par with the best-performing individual anomalies, with t-statistics of 15.4 and 9.32 for the equally- and value-weighted portfolios. Compared to the value-weighted approach, the equally-weighted portfolio’s outperformance is consistent with former research and serves as a further indicator for the bad model problem (Fama 1998).
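The construction of such a baseline signal for a single month can be sketched as follows. The example assumes that every anomaly is already signed so that higher values imply higher expected returns, that no values are missing, and that the long and short legs are the equally-weighted top and bottom deciles. The data and function names are hypothetical, and the value-weighted variant is omitted.

```python
def percent_rank(values):
    """Map values to [0, 1] by cross-sectional rank; tied values share the average rank."""
    n = len(values)
    if n == 1:
        return [0.5]
    order = sorted(range(n), key=lambda i: values[i])
    ranks = [0.0] * n
    start = 0
    while start < n:
        end = start
        while end + 1 < n and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2
        start = end + 1
    return [r / (n - 1) for r in ranks]


def baseline_score(signals):
    """Average the percent-ranked anomaly values per firm."""
    ranked = [percent_rank(values) for values in signals.values()]
    n_firms = len(ranked[0])
    return [sum(column[i] for column in ranked) / len(ranked) for i in range(n_firms)]


def long_short_return(scores, next_returns, quantile=0.1):
    """Equally-weighted return of the top quantile minus the bottom quantile."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    k = max(1, int(len(scores) * quantile))
    short_leg = [next_returns[i] for i in order[:k]]
    long_leg = [next_returns[i] for i in order[-k:]]
    return sum(long_leg) / k - sum(short_leg) / k


# Hypothetical month with ten firms and two anomalies.
signals = {
    "anomaly_a": [1, 2, 3, 4, 5, 6, 7, 8, 9, 10],
    "anomaly_b": [1, 3, 2, 5, 4, 7, 6, 9, 8, 10],
}
next_returns = [-0.05, -0.02, 0.00, 0.01, -0.01, 0.02, 0.01, 0.03, 0.02, 0.04]

scores = baseline_score(signals)
spread = long_short_return(scores, next_returns)
print(spread)
```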

For a more restrictive analysis of our baseline factor, we separately examined our standardized sample set from 2003 to 2019. In former research, 2003 marks a critical year (Green et al. 2017; Jacobs and Müller 2016). Out-of-sample and particularly post-publication returns of anomalies are significantly lower (McLean and Pontiff 2016), making many anomalies less profitable. This issue was empirically confirmed for the individual anomalies in the previous section. With 2003 as the mean publication year of our data sample, distinguishing a model’s performance for the pre- and post-2003 range allows a more conservative and robust estimation of its performance. Particularly for the U.S. datasets, 2003 furthermore marks the first reporting year with the Sarbanes-Oxley Act as well as new SEC filing changes in place, increasing the auditing and reporting quality significantly (Green et al. 2017). We use these two time frames both for evaluating the baseline factor and as the splitting criterion between training and testing data for our machine learning models.

We note that the one-sided turnover rate is higher than the average turnover rate for individual anomalies across different settings, potentially leading to higher transaction costs in practical implementation and lower profitability. This tendency is a consequence of the composition of many signals having a more varying influence on stock rankings, thus causing more volatile portfolio assignments. We examine in Sect. 6 the effect on potential transaction cost.

The significant returns of our baseline factor support the existence of potentially profitable relationships among anomalies. While the individual anomalies are vulnerable to data snooping and non-stationarity, the baseline factor reaches more robust returns in the pre- and post-2003 periods by leveraging the versatility of the full anomaly set. This outperformance holds for both the equally- and value-weighted portfolios.

In summary, we can conclude that the empirical results of the individual anomalies suggest data mining issues in former research and underline the strong non-stationary characteristics of financial time series data. However, when combining the anomalies by averaging the percent-ranked values in a standardized environment, we see significant performance improvements. We use these findings, particularly the value-weighted 0.92% [3.87] average monthly return of the baseline factor in the post-2003 period, as a benchmark and evaluation tool for our machine learning models constructed in the following section.

5 Portfolio construction with machine learning algorithms

5.1 Constructing portfolios based on forecasted future returns

As probably the most straightforward attempt to model the anomaly-return relations with machine learning algorithms, we train a set of different algorithms on the absolute next-month return of a stock. For each firm-month observation, we thus have the formula \(g(anomalies_{t, i}) \rightarrow r_{t+1, i}\). Thereby, \(r_{t+1, i}\) is the absolute next-month return of a firm. To reduce the risk of p-hacking, we use the algorithms’ default parameters without any hyperparameter tuning.Footnote 11 The number of trees for the DRF and GBM model is set to 100 as a balance between generalization ability and computational effort. According to former research, this number seems to deliver the biggest gains in performance for these types of tree-based algorithms (Probst and Boulesteix 2017).

Fig. 2 The graphs illustrate the cumulative performance of the four different machine learning algorithms in comparison to the baseline factor during the post-2003 out-of-sample period. Panel a shows the value-weighted return for the regression approach based on the stocks’ absolute next-month return, while panel b refers to the approach based on percent-ranked next-month returns.

As displayed in Fig. 2a, each model’s performance over the out-of-sample period exceeds the returns of the baseline factor. Particularly noteworthy are the GBM and DRF, which significantly outperform every other model, with average monthly returns of 1.68% and 2.01%, respectively. Furthermore, the two models’ Sharpe ratios of 1.29 and 1.39 are above the baseline benchmark ratio of 0.92. More performance indicators for both the equally-weighted and value-weighted construction settings are listed in Table 2.

As an alternative approach, we train the same algorithms on a different target value, namely the percent-ranked next-month return. For each firm-month observation, we thus have the formula \(g(anomalies_{t, i}) \rightarrow rp_{t+1, i}\), with \(rp_{t+1, i}\) being the monthly-ranked future return with values between 0 and 1. Through this approach, we train our models only on each stock’s relative performance on which the portfolio-sort algorithm relies. A perfect forecast of both absolute and relative returns would thus yield the same portfolio returns. However, with a target value similarly scaled as the input anomalies, the algorithm might improve overall relationship modeling as it only has to predict the monthly distribution of returns across the stock universe, not the absolute values. That is particularly important for the application within neural networks we examine in Sect. 5.4, for which we follow the same procedure.
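The claim that a perfect forecast of absolute and of percent-ranked returns leads to the same portfolios can be checked with a small sketch. It assumes distinct returns (no ties) within the month and a portfolio sort that only looks at the ordering of the forecasts. The return values and function names are hypothetical.

```python
def rank_target(returns):
    """Percent-ranked target in [0, 1]; assumes all returns in the month are distinct."""
    n = len(returns)
    order = sorted(range(n), key=returns.__getitem__)
    target = [0.0] * n
    for position, firm in enumerate(order):
        target[firm] = position / (n - 1)
    return target


def extreme_portfolios(forecasts, k):
    """Return the sets of firms in the bottom-k and top-k forecast groups (k >= 1)."""
    order = sorted(range(len(forecasts)), key=forecasts.__getitem__)
    return set(order[:k]), set(order[-k:])


# Hypothetical realized next-month returns of six firms.
returns = [0.12, -0.30, 0.05, 0.40, -0.02, 0.08]
ranked = rank_target(returns)

# Perfect forecasts of either target select identical short and long legs.
print(extreme_portfolios(returns, 2))
print(extreme_portfolios(ranked, 2))
```

Because the sort depends only on ordering, any strictly increasing transformation of the target leaves the portfolio assignment unchanged; the two targets differ only in what the model is asked to learn.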

Again, all four of our machine learning algorithms perform at least on par with the baseline factor. While the DRF performs relatively poorly, particularly compared with the previous approach, both the GLM and the XGBoost algorithm perform better than their respective counterparts in the absolute-return regressions. Particularly promising is the GBM, with average monthly returns of 1.89% and a Sharpe ratio of 1.01. More details are given in Table 3.

Notably, we see a potentially systematic difference in the algorithms’ working mechanisms, namely their ability to handle scaled and non-scaled values. Where the GLM and XGBoost algorithms performed particularly poorly in absolute-return-based regressions, the performance of both was significantly better with percent-ranked target values. Conversely, the random forest failed in the latter variant. The GBM seems to be capable of handling both approaches sufficiently.

Besides the portfolio metrics, Table 2 and Table 3 furthermore list the most common model metrics. In contrast to the significant out-of-sample returns, the machine learning metrics are only mediocre. Focusing on the mean absolute error of the percent-ranked regressions, we see only slight improvements in contrast to a random algorithm, which would, by chance, achieve 0.25. Additionally, the best out-of-sample model metrics do not produce the highest returns in the same period. As we construct the portfolios on deciles of forecasted returns, the common metrics might be poorly suited to our context. The algorithms’ final performance in terms of strategy returns depends on an accurate assignment of stocks to the lowest and highest deciles and not necessarily on the most precise prediction of future returns. In the following, we give stronger attention to the out-of-sample portfolio metrics as an evaluation instrument. Furthermore, we would like to highlight that no single performance metric can fully assess a model’s quality. Instead, one should examine the overall picture with multiple indicators for a more robust estimation of the goodness of a model’s predictions.
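One way to arrive at a reference value of 0.25 for the mean absolute error, assuming the percent-ranked targets are spread evenly over the interval from 0 to 1, is an uninformed forecast that always predicts the middle rank of 0.5. The sketch below computes this value and, for comparison, the error of forecasts that are merely a random shuffle of the true ranks, which is close to one third under the same assumption. The sample size and the random seed are arbitrary choices for illustration.

```python
import random


def mean_absolute_error(actual, predicted):
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Evenly spaced percent ranks for a hypothetical cross-section of 1001 stocks.
n = 1001
ranks = [i / (n - 1) for i in range(n)]

# Uninformed forecast: always predict the middle rank.
constant_mae = mean_absolute_error(ranks, [0.5] * n)

# Uninformed forecast: a random permutation of the true ranks.
rng = random.Random(0)
shuffled = ranks[:]
rng.shuffle(shuffled)
shuffled_mae = mean_absolute_error(ranks, shuffled)

print(round(constant_mae, 4), round(shuffled_mae, 4))
```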

In summary, each of the machine learning algorithms applied performed at least on par with the traditionally constructed baseline factor. As the performance is significantly positive across different algorithms, target values, and portfolio metrics, it seems unlikely to be only a result of p-hacking but rather a consequence of non-linear effects within the anomaly set. In the following sections, we use the best-performing models of the two approaches, namely the absolute return-based DRF and the percent-ranked GBM, as our reference models for various training approaches, including different feature reduction and shrinkage methods, as well as for rolling training.

5.2 Reducing the high-dimensionality of the factor zoo with unsupervised learning and feature reduction algorithms

Currently, our models are trained on the full set of 299 percent-ranked signals. This high-dimensional data set may contain redundant data and strongly correlating values due to similarly constructed anomalies. A sophisticated reduction or combination of features into a lower-dimensional dataset could filter out unnecessary noise, further improving our algorithms’ performance. In the following, we introduce a variety of common reduction methods and examine their performance impact on our models.

Principal Component Analysis (PCA) is among the best-known feature reduction methodologies and aims to produce (a lower number of) linearly independent components representing the majority of the variance of the original feature set. Autoencoders are a special type of neural network. Invented in the 1980s (Baldi 2012; Rumelhart et al. 1987), they can reduce dimensionality by learning the internal representation of the dataset and compressing the input data into a lower dimension. The so-called bottleneck layer we use in our two autoencoder experiments has 100 and 25 neurons, thus shrinking the features’ dimensionality by over 60% and 90%, respectively (see Internet Appendix D for more details). In contrast to the PCA, the autoencoders’ results are non-linear combinations of the basic feature inputs, enabling the representation of more complex data structures that can be leveraged by our machine learning models. The lasso regression and elastic net selection follow common practice. The theory-derived selection of anomalies uses only past anomalies with t-statistics above 1.96 and 3, aiming to reduce the noise of unimportant and insignificant signals.

The empirical results of our two reference models from the previous chapters with the inclusion of feature reduction techniques are depicted in Figure 3a and b and are relatively modest. Except for the elastic net, all feature reduction methods reduce our models’ overall performance, in some cases even below the baseline factor. The autoencoders and the PCA shrink average monthly results to values insignificantly different from zero. Only the elastic net in the case of the DRF can increase the post-2003 performance of the static model. However, we do not discern a specific cause explaining these results and suspect it to be a false positive, as we tested many different feature reduction approaches simultaneously. It seems that every feature reduction method tested fails to filter out only unnecessary interferences. The poor results of the feature reduction based on past significance further indicate that, while an anomaly might not be statistically significant individually, it can contribute to the overall predictions through hidden joint effects. Consequently, removing any anomaly from the dataset can lead to a significant drop in performance.

In total, our approaches indicate that feature reduction might be less potent in the context of anomalies than suggested. While reducing the noise and dimensionality of the dataset, feature reduction might also weaken or eliminate significant signals, decreasing the model’s overall performance. Since many machine learning models have in-built capabilities to handle high-dimensional datasets, feature reduction might be less critical than in classical regression approaches of former literature.

5.3 Boosting performance with a dynamic, rolling machine learning model

So far, we have only used data from before 2003 to train our machine learning models. While that allowed a very critical and lower-bound-oriented estimation of the portfolio returns due to the weakening returns of the individual anomalies after this point in time, this approach also neglected the information value of more recent data available within the backtesting period. In particular, our models cannot yet exploit temporary relationships within the time series and the accompanying profit opportunities.

To counter this issue, we adapt our current approach into a rolling training with interim updates of the algorithms’ parameters. We again start the out-of-sample period in 2003 but retrain our models with the updated dataset every year. Although a monthly update of the model would be possible, it would exceed the available computing resources of this study, and an annual retrain frequency should be sufficient to estimate the performance potential for rolling machine learning models. However, it might be interesting for practitioners to optimize their models to the highest retraining frequency possible. Furthermore, we test multiple windows, namely a 5- and 10-year backward-looking static frame, as well as a dynamically extending window across the full, up-to-prediction-date dataset. While more training data is generally positively correlated with a model’s performance and ability to generalize, the relevance of older observations decreases due to the non-stationary character of the financial time series. Thus, a shorter time frame with less but more relevant data might increase performance.

We apply the different rolling training approaches to our two reference models, the DRF model for the absolute-return regression and the GBM for the percent-ranked return regression. With two reference models, 17 years of data, and three rolling training variations, this amounts to 102 trained machine learning models.
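The retraining schedule and the resulting model count can be sketched as follows. The example assumes that each model is retrained once per calendar year on data up to the end of the previous year and that the extending window starts in 1979, the first year of the standardized sample. The scheme labels and function names are hypothetical.

```python
def training_window(test_year, scheme, first_year=1979):
    """Return the inclusive (start_year, end_year) of the training data for one retraining."""
    end = test_year - 1
    if scheme == "extending":
        start = first_year
    elif scheme == "10y":
        start = end - 9
    elif scheme == "5y":
        start = end - 4
    else:
        raise ValueError(f"unknown scheme: {scheme}")
    return max(start, first_year), end


test_years = range(2003, 2020)  # 17 out-of-sample years, 2003 to 2019
schemes = ("5y", "10y", "extending")
models = ("DRF", "GBM")

schedule = {
    (model, scheme, year): training_window(year, scheme)
    for model in models
    for scheme in schemes
    for year in test_years
}

print(len(schedule))                   # 2 models x 3 schemes x 17 years
print(schedule[("GBM", "10y", 2003)])  # window used for the first out-of-sample year
```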

For the DRF model, a rolling training approach seems to decrease absolute performance. While performance remains constant at about 2% per month for the extending rolling learning technique, the model fails to exploit the anomalies’ predictive power for profitable trading opportunities with the shorter, static time frames. In contrast, the GBM seems, at first sight, to improve with a rolling 10-year window (2.12% per month). However, while it has a higher cumulative return at the end of the out-of-sample period, most of this is attributable to a peak in performance in 2018/2019. Because we train a total of 17 machine learning models for each model and training approach, the strong performance might be a false positive and should be treated with caution.

These ambiguous findings are confirmed when using the average monthly return and a paired t-test as an evaluation instrument. The DRF model is less robust to the choice of training window than the GBM, with performance averaging between 0.35% and 2.02% per month. The differences of the 10-year and 5-year rolling windows from the static variant are highly significant, with t-statistics above 2.56, whereas the extending window is not significantly different from the static one. Therefore, the rolling training seems to add no value in the case of the DRF. The GBM’s rolling performance partly improves, lying between 1.58% and 2.11%, but the differences from the static model in a paired t-test remain insignificant.
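A paired t-test on two monthly return series can be sketched as follows. The example assumes that both strategies are observed over the same months and that the test statistic is the mean of the monthly return differences divided by its standard error. The return values are hypothetical and not taken from the study.

```python
import math
from statistics import mean, stdev


def paired_t_statistic(returns_a, returns_b):
    """t-statistic of the mean month-by-month difference between two return series."""
    if len(returns_a) != len(returns_b):
        raise ValueError("both series must cover the same months")
    differences = [a - b for a, b in zip(returns_a, returns_b)]
    return mean(differences) / (stdev(differences) / math.sqrt(len(differences)))


# Hypothetical monthly returns of a rolling and a static model variant.
rolling = [0.010, 0.030, -0.010, 0.020, 0.000, 0.025]
static = [0.012, 0.025, -0.008, 0.021, 0.003, 0.020]

t_rolling_vs_static = paired_t_statistic(rolling, static)
print(round(t_rolling_vs_static, 3))
```

An absolute value below 1.96 would indicate, at the 5% level, that the rolling variant is not distinguishable from the static one on these months.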

In summary, the findings of the rolling training approach are mixed. The DRF algorithm does not benefit from a rolling strategy. While the inclusion of more recent data in the extending data frame does not seem to harm the GBM’s performance significantly, it also does not add significant value in terms of average monthly return. Furthermore, with the higher number of models, the risk of including false positives grows, potentially explaining the peak of the GBM in 2018/2019. The current approach does not seem able to exploit significant returns from temporary structures as hypothesized. The fixed rolling window limits the amount of data per training, which might provide insufficient data for the algorithms to learn profitable patterns, particularly for the 5-year window. For the extending window, while including more data, the models might not weight recent data accurately and put too much focus on outdated observations.

5.4 Artificial neural network approaches

Thus far, we have focused on tree-based machine learning models. While they perform best-in-class for many applications, neural networks remain the most popular approach in machine learning. This section outlines the performance of both the standard FNN and a form of RNN in the context of stock market anomalies.

The FNN is among the most intuitive architectures, with one-way connected input and output layers and a variable number of hidden layers and neurons. We test two different configurations: one smaller neural network with five hidden layers but a decreasing number of neurons per layer (110,821 parameters in total) and a larger variation with only three hidden layers but a higher number of neurons in total (256,400 parameters). More detailed empirical results and a comprehensive description of the architectures are attached in Internet Appendix C and D.

In total, we see a significant and continuous outperformance of the baseline factor for the static trained variant, with average monthly returns of 1.29% for the smaller and 1.68% for the larger model. These figures indicate that the larger model truly benefits from an increased number of neurons. In terms of the rolling models, performance has to be evaluated separately for the two models. While the smaller models seem to benefit from both the 10-year (2.01% per month) and extending window training (1.83% per month), the larger models’ performance does not improve significantly. In contrast, the rolling 10-year window significantly reduces overall performance (1.26% per month). Due to the increasing need for observations to estimate the more extensive set of model parameters, a 10-year subset might not be sufficient for the parameter estimation in the training process. Future research might explore alternative approaches such as transfer learning to reduce the necessary data for rolling training.

Particularly successful in time- and order-dependent data such as time series analysis and natural language understanding are models with an RNN architecture. In contrast to FNNs, an RNN uses former time steps of observation in the prediction process, thereby creating a form of short-term memory to improve performance (Abiodun et al. 2018). In our case, we include twelve timesteps (i.e., each prediction is based on a 2D matrix with the twelve monthly observations of the past year for all 299 anomalies). As RNNs suffer from vanishing or exploding backpropagated errors, we test a variant of RNN, namely the Long Short-Term Memory (LSTM), which includes a memory cell to improve the models’ capability in terms of long-term memory and efficient learning by holding errors constant (Hochreiter and Schmidhuber 1997).
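The twelve-timestep input described above can be sketched for a single firm as follows. The example assumes a gap-free monthly history ordered from oldest to newest and builds one 12 x 299 matrix per prediction date. The function name and the toy values are hypothetical, and the resulting list of windows would still have to be converted into the array format of the chosen deep learning framework.

```python
def build_sequences(history, timesteps=12):
    """Slice a firm's monthly feature vectors into overlapping windows of `timesteps` months.

    history: list of feature vectors (one per month, oldest first).
    Returns a list of windows, each a list of `timesteps` feature vectors.
    """
    return [history[end - timesteps:end] for end in range(timesteps, len(history) + 1)]


# Hypothetical firm with 15 months of data and 299 percent-ranked anomalies per month.
n_anomalies = 299
history = [[float(month)] * n_anomalies for month in range(15)]

sequences = build_sequences(history)
print(len(sequences), len(sequences[0]), len(sequences[0][0]))
```

With 15 months of history, only the last four months have a full twelve-month window, which illustrates how the lookback requirement reduces the number of usable observations per firm.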

Our findings indicate modest returns for models trained in the same environment as the FNNs. During most of the out-of-sample time, performance is below the other models, with average monthly returns of 1.48%. While, in theory, the model should be able to handle time-series data better, our test results contradict this hypothesis. Concerning the LSTM results, the poor performance of this approach compared to other machine learning approaches could be driven by the architecture chosen and the standard parameters of the model.Footnote 12 Furthermore, with the non-stationary character of our dataset, the high number of factors (299) relative to incorporated backward timesteps (12) (i.e., high dimensionality) might in our configuration not fully exploit the potential for backpropagation, requiring further finetuning. In short, the high dimensionality of data makes LSTM training more complex.

6 Discussion of findings

6.1 Performance comparison of machine learning models

In the previous chapter, we tested four different machine learning algorithms on two different target variables. We also used the two best approaches as references to test seven different feature reduction methods and three different rolling learning scenarios. Additionally, we calculated three different neural networks with static and rolling training variations. In total, Table 4 lists all 35 different models according to their overall performance and returns above the baseline factor. Other key performance indicators for each model are given in Internet Appendix C.

Although the best performing approach with monthly average returns of 2.33% [6.22] is the combination of static DRF with absolute return target and elastic net feature reduction, the result of a single model must be treated with caution, particularly in this case as no other feature reduction achieved any improvement of the overall outcomes. Since we have tested a large number of model combinations, there is the possibility of a false-positive despite high t-statistics due to multiple testing. That is particularly true for the rolling models, with each one consisting of 17 retrained models.

It is more beneficial to analyze the overall distribution of algorithms and approaches to get an idea of the models’ potential and their range of returns. The best performing algorithms in our context are the GBM, the DRF, and the FNN. These findings are consistent with former literature (Gu et al. 2020b), identifying tree-based algorithms and neural networks as top-performers. While autoencoders and PCA lower the overall performance, the elastic net seems to add value in a single case; however, this finding appears less clear and robust in this context. It seems that the algorithms can handle the high dimensionality directly by themselves, and any pre-processing reduction methods weaken essential signals. Rolling learning techniques seem to add value in the case of the GBM, while for other algorithms, an updated model seems to be outperformed by static models. The GBM architecture might handle the different amounts of observations attributed to the rolling update better than other approaches.

In summary, out of our 35 models, 30 approaches show average monthly returns at least equal to those of our baseline factor for the period from 2003 to 2019. Moreover, 15 models show significantly higher returns above the 95% confidence interval. Excluding the poorly performing feature reduction methods, out of 21 models, over 90% are equal to or outperform the baseline factor with a mean return of 1.59%. Our best-performing models show both very high t-statistics and monthly returns of around 2%, more than twice the performance the baseline factor yields. These findings agree with the recent study of Gu et al. (2020b), who doubled the Sharpe ratio of standard linear models to 1.35 with neural networks. Similarly, in terms of Sharpe ratios, our results are within the range of 1.0 to 1.3.

It seems unlikely that these yields are merely the result of data dredging. First, the t-statistics are highly significant, both in terms of absolute returns and baseline improvement. Second, all of these approaches can handle the high-dimensional and non-linear data structures but differ in the specific underlying algorithm. Even if we face single false positives, as most models show significant gains, we can conclude that there are most likely arbitrage opportunities in the market or hidden risk components within the factor zoo that our models can exploit.

6.2 Model interpretation and feature importance

The results so far attest to a strong performance of the machine learning-based approaches concerning individual anomalies and the linearly constructed baseline factor. However, previous research about stock market anomalies was mostly concerned with linear models, as they appeal with ease of interpretation and testing. Highly complex models such as random forests with thousands of individual decision trees or neural networks with tens of thousands of parameters cannot keep up with this simplicity. This issue is a consequence of the model size and inevitably follows from its ability to learn complicated and non-linear interactions within data structures that go beyond superficial if-else relationships. Researchers refer to this issue as the black box problem of Artificial Intelligence (Zednik 2019).

However, with the rise of machine learning, computer scientists began to develop some mechanisms to mitigate this issue. This section focuses on the interpretation of tree-based algorithms by applying the relative importance of variables. The importance is determined by the variables selected for a split in a decision tree, as well as how they affect the squared error of the predictions.Footnote 13 Figure 4 depicts the distribution of variable importance across our two reference models, the static- and absolute-return-trained DRF and the percent-ranked-trained GBM.
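The idea of split-based variable importance can be sketched as follows. The example assumes that every split of every tree has been recorded together with the reduction in squared error it achieved, and it sums these reductions per variable before scaling them. The split records, variable names, and output keys are hypothetical and do not reproduce the output format of any particular library.

```python
from collections import defaultdict


def relative_importance(splits):
    """Aggregate squared-error reductions per split variable.

    splits: iterable of (variable_name, squared_error_reduction) pairs,
            one pair per split across all trees of the ensemble.
    """
    totals = defaultdict(float)
    for variable, reduction in splits:
        totals[variable] += reduction
    top = max(totals.values())
    overall = sum(totals.values())
    return {
        variable: {
            "relative": total,           # raw sum of squared-error reductions
            "scaled": total / top,       # most important variable equals 1.0
            "share": total / overall,    # shares sum to 1.0 across variables
        }
        for variable, total in totals.items()
    }


# Hypothetical split log of a small ensemble.
splits = [
    ("signal_a", 4.0),
    ("signal_b", 2.0),
    ("signal_a", 2.0),
    ("signal_c", 2.0),
]

importance = relative_importance(splits)
for variable, values in sorted(importance.items(), key=lambda kv: -kv[1]["share"]):
    print(variable, values)
```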

Fig. 4 Distribution of feature importance. The figure shows the histogram and density plot with the distribution of variables according to their relative importance. We distinguish between our two reference models, the static DRF trained on absolute returns and the static, percent-ranked-trained GBM. While the curve illustrates the density of the feature importance, the histogram depicts the absolute count.

As a consequence of the different boosting and bagging mechanisms inherent in the two algorithms, the distribution of feature importance varies substantially. GBM builds its trees sequentially, gradually weighting them to capture the subtleties of the data structure step by step. This method leads to a higher weighting of a few variables, whereas the DRF uses averages, giving equal weight to the individual trees. Correspondingly, the DRF’s feature weighting is much more balanced across the factor zoo.

Examining the five most important anomalies for the predictions of the DRF and GBM, we see more similarities. Both the Short-term Reversal (MOM1M) and the Industry Return of Big Firms (INDRETBIG) seem rather important in the algorithms’ return prediction. However, Idiosyncratic Risk (IDIOVOLAHT) is the most important variable for the GBM approach, making the algorithm potentially less robust. These results are in accordance with the overall distribution of the importance depicted in Fig. 4.

It is noticeable that the most critical features regularly fall into the data category “price.” Examining how feature importance is distributed across data categories, we find that accounting and price features are by far the most essential components of our models’ predictions. This naturally follows from the dataset, which consists mainly of accounting (more than 50%) and price (around 25%) anomalies. The DRF follows this distribution, slightly overweighting price anomalies. This behavior stands in contrast to the GBM, which weights price signals more than twice as heavily and reduces the proportion of accounting signals to the same extent. This difference in the assessment of feature importance is perhaps a major driver of the GBM’s strong performance, as price signals were among the most stable and profitable anomalies, as demonstrated in Sect. 4. The algorithm seems to have identified this circumstance correctly and adapted its weights accordingly.

In summary, we see significant differences in how the models weight features. While we can examine notable characteristics to trace some of the working mechanisms behind the training process, interpretability remains limited due to the "black box" characteristics of current algorithms. This issue is not limited to finance but is a general challenge in machine learning-related tasks.

6.3 The impact of hyperparameter tuning on machine learning performance

A common task in a data science pipeline and particularly in machine learning models is estimating parameters belonging to the specific algorithm. These parameters include the number of trees and learning rates for tree-based algorithms and the number of neurons and hidden layers in neural network architectures. Depending on the model, there exists a wide range of possible parameter combinations, and purely analytical estimation of the best combination based on the underlying dataset is usually not possible. A common way to tune these parameters is to sample different combinations, train them via cross-validation, and select the best-performing one.

Thus far, we have used the same default parameters for our models, which offer a favorable trade-off between resource consumption and model complexity. However, this approach carries a higher false-positive risk, because the results might be attributable to a parameter set that was selected at random but happened to be lucky. For a more robust estimation of our machine learning algorithms’ profit span, we optimize the percent-ranked GBM model through hyperparameter tuning. We train the algorithm with 64 different combinations of essential parameters: the number of trees, the learning rate, the maximum depth, the sample rate of rows, and the column sample rate.Footnote 14 The boxplots in Fig. 5 illustrate the range of different key performance indicators achieved by the varying combinations.

Fig. 5 Hyperparameter tuning for GBM model. The figure illustrates the distribution of the key performance indicators of the 64 GBM models involved in the process of hyperparameter optimization. We focus on the value-weighted returns of the respective portfolios for model assessment. In addition, the performance of both the original static GBM model and the baseline factor is plotted (excluding the mean absolute error for the latter, as the metric does not apply to the linearly constructed factor).
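The sampling step of such a tuning procedure can be sketched as follows. The five parameters are the ones named in the text, but the candidate values, the parameter keys, and the scoring function are illustrative assumptions; in particular, `toy_score` merely stands in for a cross-validated performance measure and does not fit any model.

```python
import itertools
import random


def sample_combinations(grid, n_samples, seed=0):
    """Draw `n_samples` distinct parameter combinations from a grid."""
    names = sorted(grid)
    all_combos = [
        dict(zip(names, values))
        for values in itertools.product(*(grid[name] for name in names))
    ]
    rng = random.Random(seed)  # fixed seed keeps the draw reproducible
    return rng.sample(all_combos, min(n_samples, len(all_combos)))


def select_best(combos, score_fn):
    """Return the combination with the highest validation score."""
    return max(combos, key=score_fn)


# Placeholder grid: candidate values are assumptions, not the study's.
grid = {
    "ntrees": [50, 100, 200],
    "learn_rate": [0.01, 0.05, 0.1],
    "max_depth": [3, 5, 7],
    "sample_rate": [0.6, 0.8, 1.0],
    "col_sample_rate": [0.6, 0.8, 1.0],
}
combos = sample_combinations(grid, n_samples=64)


def toy_score(params):
    """Stand-in for a cross-validated score; NOT a real model fit."""
    return -abs(params["learn_rate"] - 0.05) - abs(params["max_depth"] - 5)


best = select_best(combos, toy_score)
```

Keeping all sampled combinations, rather than only `best`, is what allows the spread of outcomes across parameter sets to be inspected, as the boxplots do.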

The empirical findings suggest significant differences depending on the chosen parameters. Depending on the parameter set, the 90% confidence interval of returns ranges from 0.67% to 2.01%, with t-statistics between 1.36 and 5.89. However, apart from some rare outliers, the median consistently ranks above the baseline factor over the set of performance indicators, suggesting an overall superiority of the GBM. While we conducted this analysis only for the GBM algorithm as an example, we do not expect significant differences for hyperparameter optimization of other algorithms.

In conclusion, hyperparameter tuning underlines the value of machine learning models in the factor zoo. First, the probability that our results stem only from data dredging decreases further, as the additional profit from non-linear algorithms does not appear to be a consequence of cleverly chosen parameters but holds across parameter sets. Second, the upper bound of monthly returns might be higher than estimated, since it is possible to further tune an algorithm’s parameters or a neural network’s architecture for optimal performance.

6.4 Turnover rate and break-even transaction cost considerations

Our current machine learning models are all optimized to predict next-month stock returns, which leads to maximum long-short spreads. However, a model’s true profitability also depends on the transaction costs incurred when the strategy is executed. These costs are related to the relative amount of rebalancing per month, referred to as the one-sided turnover rate.

The empirical results indicate a significant (t-statistic \(>5\)) positive relationship between monthly returns and average turnover rate. As we optimize for return predictability with monthly portfolio realignment, the long-short portfolios are always constructed according to the predicted maximum spread, regardless of the previous portfolio state and potential transaction costs. While we thereby maximize mean absolute return, the increased turnover rate might reduce the overall portfolio return, as indicated by the findings of Novy-Marx and Velikov (2016), who associated lower profitability with higher turnover rates.
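To make the turnover measure concrete, the following sketch computes a one-sided turnover rate from consecutive monthly portfolio weights. It assumes one common convention, namely half the sum of absolute weight changes between two rebalancing dates, and it ignores the drift of weights caused by returns within the month. The function names and the example portfolio are hypothetical.

```python
def one_sided_turnover(prev_weights, new_weights):
    """Half the sum of absolute weight changes between two rebalances.

    Both arguments map stock identifiers to portfolio weights. Drift of
    the old weights caused by returns is ignored (simplifying assumption).
    """
    stocks = set(prev_weights) | set(new_weights)
    change = sum(
        abs(new_weights.get(s, 0.0) - prev_weights.get(s, 0.0))
        for s in stocks
    )
    return 0.5 * change


def average_turnover(weight_history):
    """Mean one-sided turnover over consecutive monthly weight dicts."""
    pairs = list(zip(weight_history, weight_history[1:]))
    if not pairs:
        return 0.0
    return sum(one_sided_turnover(a, b) for a, b in pairs) / len(pairs)


# Hypothetical long leg held over three months.
history = [
    {"A": 0.5, "B": 0.5},
    {"A": 0.5, "C": 0.5},  # B is fully replaced by C
    {"A": 0.5, "C": 0.5},  # nothing is traded
]
avg_turnover = average_turnover(history)
```

A strategy that rebuilds its portfolio from scratch every month, as the models here do, will tend towards higher values of this measure than one that penalizes deviations from the previous holdings.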

To address this issue and gain a more comprehensive understanding of the strategies’ profitability in a real implementation, we calculate the round-trip costs as an indicator for the upper bound of transaction costs. An estimation of the maximum amount of allowable transaction costs for a profitable strategy allows us to analyze whether the higher return of our high-turnover strategies compensates for higher transaction costs. We calculate these round-trip costs as in Grundy and Martin (2001), Barroso and Santa-Clara (2015), and Hanauer and Windmueller (2019) using a Z-score at the 5% significance level:

where \(S\) = portfolio strategy; \(T_S\) = t-statistic of strategy \(S\); \(\mu_S\) = average monthly return of strategy \(S\); \(TO_S\) = one-sided turnover rate of strategy \(S\).
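In terms of the quantities defined above, the break-even measure in the cited literature is commonly written as \((1 - 1.96 / T_S)\,\mu_S / TO_S\), where 1.96 is the two-sided Z-score at the 5% level. The sketch below assumes this form; the function name `round_trip_cost` and the input values are hypothetical and are not results from the study.

```python
def round_trip_cost(mean_return, t_stat, turnover, z=1.96):
    """Round-trip cost at which the mean return stops being significant.

    Assumed form: (1 - z / t_stat) * mean_return / turnover, with
    z = 1.96 for the 5% significance level.
    """
    if t_stat <= 0:
        raise ValueError("t_stat must be positive")
    if turnover <= 0:
        raise ValueError("turnover must be positive")
    return (1.0 - z / t_stat) * mean_return / turnover


# Hypothetical strategy: 2% monthly return, t-statistic of 4,
# and a one-sided turnover of 50% per month.
cost = round_trip_cost(mean_return=0.02, t_stat=4.0, turnover=0.5)
```

With these illustrative inputs, the strategy would tolerate round-trip costs of roughly 2% before its return became insignificant. The measure falls as turnover rises and turns negative once the t-statistic drops below 1.96.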

The findings outlined in Table 5 indicate that our best-performing machine learning models, as well as our two static and most conservative models with the full feature set, outperform the baseline factor not only in terms of absolute monthly return but also compensate for their higher turnover rate. With round-trip costs between 1.4% and 2.4%, these strategies remain profitable under realistic transaction costs. These findings are a robust indicator that no p-hacking took place and strengthen our hypothesis that non-linear patterns in the factor zoo might offer rich profit opportunities.

6.5 Avoiding methodological forward-looking bias with post-publication feature inclusion

Until now, our models have always used the complete set of 299 anomalies available. The feature reduction methods also reduced the amount of input data based on the complete set of observations. However, our anomalies’ average publication date is 2003 (i.e., our models currently use anomalies whose underlying calculation methodology had not yet been published at the point in time of prediction). Although there was no direct forward-looking bias concerning the observations (which were always used ex ante), there might be a form of forward-looking methodological bias in current research. To counteract this potential data-mining bias and to gauge the size of this effect, we train our percent-ranked GBM model in a rolling fashion based on post-publication anomalies. For each yearly retrained model from 2003 to 2019, we use only those anomalies that have already been published.
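The post-publication filter can be sketched as a simple lookup per retraining year. The sketch assumes that an anomaly counts as published for a given model year if its publication year lies strictly before that year, which is the more conservative of the two possible readings. The anomaly names, publication years, and function names are placeholders, not data from the study.

```python
def published_before(publication_years, model_year):
    """Anomalies whose publication year lies strictly before `model_year`.

    The strict inequality is an assumption (the conservative reading of
    "already published").
    """
    return sorted(
        name for name, year in publication_years.items()
        if year < model_year
    )


def rolling_feature_sets(publication_years, first_year, last_year):
    """Map every retraining year to its admissible feature list."""
    return {
        year: published_before(publication_years, year)
        for year in range(first_year, last_year + 1)
    }


# Placeholder anomalies with made-up publication years.
publication_years = {"ANOM_A": 1990, "ANOM_B": 2005, "ANOM_C": 2012}
feature_sets = rolling_feature_sets(publication_years, 2003, 2019)
```

Each yearly model would then be trained only on the columns listed in `feature_sets[year]`, so the feature set grows over time as more anomalies are published.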

The earliest models, starting in 2003, use 106 anomalies, and the number of features exceeds 250 in 2012. We see strong growth in accounting anomalies, which triple in total over the post-2003 period. In terms of performance figures, we compare the post-publication model with the baseline, the static reference model, and the extending rolling learning GBM from Sect. 5.3, the latter due to its training similarities. Relative to the reference variants of the GBM, performance decreases only slightly, and the difference is not statistically significant. With average monthly returns of 1.85% and a t-statistic above four, these results further reduce the risk of data snooping within our empirical research. The strict pre-filtering of unpublished anomalies prevents both data-related and methodological forward-looking bias in our training process. This approach bolsters our previous findings and underlines that our returns truly originate from either a risk component or a mispricing effect that caused the spread of our models’ long-short portfolios.

6.6 Risk or return? Testing machine learning returns against common factor models

With the probability that our results emerge purely from data snooping being relatively low, the question arises whether our models’ average monthly returns of 1.8–2% are a consequence of a hidden risk component or an indicator of market inefficiencies and irrational investor behavior. A typical way to test the risk component hypothesis is to regress the models’ returns on common factor models. If these factor models with their respective loadings can satisfactorily explain the models’ returns (i.e., leave only insignificant alphas in linear regressions), then the models’ performance is fully attributable to these risk components.
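The logic of such a test can be illustrated for the simplest case, a single-factor regression in the spirit of the CAPM. The sketch estimates the intercept (alpha), the loading (beta), and the t-statistic of alpha with plain ordinary least squares; it assumes conventional standard errors without any autocorrelation or heteroskedasticity adjustment. The function name and the return series are made up for illustration.

```python
import math


def single_factor_alpha(strategy_returns, factor_returns):
    """OLS of strategy returns on one factor: (alpha, beta, t_alpha).

    Uses conventional OLS standard errors (an assumption; no
    autocorrelation or heteroskedasticity correction is applied).
    """
    n = len(strategy_returns)
    if n != len(factor_returns) or n < 3:
        raise ValueError("need two equally long series with n >= 3")
    mean_x = sum(factor_returns) / n
    mean_y = sum(strategy_returns) / n
    sxx = sum((x - mean_x) ** 2 for x in factor_returns)
    if sxx == 0:
        raise ValueError("factor returns must vary")
    sxy = sum(
        (x - mean_x) * (y - mean_y)
        for x, y in zip(factor_returns, strategy_returns)
    )
    beta = sxy / sxx
    alpha = mean_y - beta * mean_x
    # Residual variance with n - 2 degrees of freedom.
    sse = sum(
        (y - alpha - beta * x) ** 2
        for x, y in zip(factor_returns, strategy_returns)
    )
    sigma2 = sse / (n - 2)
    se_alpha = math.sqrt(sigma2 * (1.0 / n + mean_x ** 2 / sxx))
    return alpha, beta, alpha / se_alpha


# Made-up monthly returns: 1% alpha, loading of 0.5, small noise.
factor = [-0.02, -0.01, 0.0, 0.01, 0.02]
noise = [0.001, -0.002, 0.002, -0.002, 0.001]
strategy = [0.01 + 0.5 * f + e for f, e in zip(factor, noise)]
alpha, beta, t_alpha = single_factor_alpha(strategy, factor)
```

A large t-statistic on alpha means that the factor does not account for the strategy’s average return. Multi-factor models extend the same idea with additional regressors.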

To ensure a robust assessment of whether underlying risk components are attributable to the models’ returns, we test our two reference models (DRF and GBM) and the post-publication GBM against the most common factor models: the CAPM (Sharpe 1964; Lintner 1965; Mossin 1966), the Carhart (1997) Four-Factor model, the Fama-French Three- and Five-Factor models (Fama and French 1993, 2015), the mispricing factor of Stambaugh and Yuan (2017), as well as against the more recent Q-Factor model (Hou et al. 2015), the Behavioral Factor (DHS) of Daniel et al. (2020a) and the Daniel et al. (2020b) (DMRS) Factor. We utilize the respective factor loadings published as time series by the original authors. The empirical results are depicted in Table 6.

The results underline that no factor model can satisfactorily explain the results of the machine learning models. The equally-weighted portfolios have alphas between 2.9% and 3.6%, and the value-weighted portfolios have alphas between 1.4% and 2.5%. The corresponding t-statistics are consistently greater than 3. It can also be observed that the alphas are more pronounced for the GBM model than for the DRF.

Based on these results, it seems that the risk components of standard factor models cannot explain our machine learning models’ returns. Consequently, and given that p-hacking is rather unlikely, any attempt to explain the returns will inevitably point to potential market inefficiencies and mispricing issues or shortcomings in asset pricing models (Joint Hypothesis). Arbitrage opportunities usually disappear through investors’ trading adaptations. In the case of machine learning algorithms, these relationships might have been too complex and hidden in the factor zoo such that investors were not yet able to exploit them. That could also explain why the profits are relatively non-stationary, as our best-performing models were statically trained with pre-2003 data, trading up to 2019 without updates. In the future, and with a more widespread application of machine learning algorithms, rolling techniques might become increasingly important to retain constant profits by exploiting temporarily limited, non-linear patterns.

7 Conclusion

Our study replicated many findings of former meta-studies. It showed that most anomaly returns diminish or disappear when using a standardized framework across the full factor zoo instead of the authors’ original construction settings. This tendency underlines the widespread issue of data dredging in anomaly research. The empirically confirmed post-publication effect of McLean and Pontiff (2016), combined with the non-stationarity of the time series, makes replication studies across different timeframes particularly important. When using a combined baseline factor as the average of each firm-month observation, monthly long-short spreads of 0.92% with high significance are achievable. These findings lead to the hypothesis that while individual anomalies often do not provide significant returns, a combined approach might yield robust earnings.