Abstract

When designing a message transmission system, from the point of view of making sure that the information transmitted is as fresh as possible, two rules of thumb seem reasonable: use small buffers and adopt a last-in-first-out policy. In this paper, the freshness of information is interpreted as the recently studied “age of information” performance measure. Considering it as a stochastic process operating in a stationary regime, we compute the whole marginal distribution of the age of information for some well-performing systems. We assume that the arrival process is Poisson and that the messages have independent service times with common distribution, i.e., the M/GI model. We demonstrate the usefulness of Palm and Markov-renewal theory to derive results for Laplace transforms. Our numerical studies address some aspects of open questions regarding the optimality of previously proposed scheduling policies, and a policy newly considered herein, for AoI management.

Similar content being viewed by others

Notes

“Dequeuing” policy denotes how the server selects which to serve from among stored messages when it becomes free. FIFO or LIFO dequeuing policies are relevant when more than one message can be stored.

In the \({\mathcal {P}}_2\) and \({\mathcal {B}}_2\) systems, there is obviously only at most one message to choose from when the server is free, so these systems are both LIFO and FIFO.

At the “network layer,” the Internet performs “in order” (FIFO) delivery of packets by rule.

Time reversibility is also employed when using Markov embeddings to similarly study GI/M models which are not addressed herein.

That is, \({\mathbb {P}}(N=k)=q^k(1-q)\) for \(k=0,1,2, \ldots \)

References

Asmussen, S.: Applied Probability and Queues. Springer, New York (2003)

Baccelli, F., Brémaud, P.: Elements of Queueing Theory: Palm Martingale Calculus and Stochastic Recurrences, 2nd edn. Springer, Berlin (2003)

Bedewy, A.M., Sun, Y., Shroff, N.B.: Minimizing the age of information through queues. IEEE Trans. Info. Th. 65(8), 5125–5232 (2019)

Çinlar, E.: Introduction to Stochastic Processes. Prentice-Hall, Englewood Cliffs, NJ (1975)

Champati, J.P., Al-Zubaidy, H., Gross, J.: On the Distribution of AoI for the GI/GI/1/1 and GI/GI/1/2* Systems: Exact Expressions and Bounds. In: Proceedings of IEEE INFOCOM (2019)

Costa, M., Codreanu, M., Ephremides, A.: On the age of information in status update systems with packet management. IEEE Trans. Info. Th. 62(4), 1897–1910 (2016)

Inoue, Y., Masuyama, H., Takine, T., Tanaka, T.: A general formula for the stationary distribution of the age of information and its application to single-server queues. IEEE Trans. Inf. Th. 65(12), 8305–8324 (2019)

Jiang, Y., Miyoshi, N.: Joint performance analysis of ages of information in a multi-source pushout server. IEEE Trans. Info. Th. 68(2), 965–975 (2022)

Kaul, S., Yates, R.D., Gruteser, M.: Real-time status: How often should one update? In: Proceedings of IEEE INFOCOM (2012)

Kaul, S., Yates, R.D., Gruteser, M.: Status updates through queues. In: Proceedings of Conference on Information Sciences and Systems (CISS) (2012)

Kavitha, V., Altman, E., Saha, I.: Controlling Packet Drops to Improve Freshness of information. arXiv:1807.09325, July 18, (2018)

Kesidis, G., Konstantopoulos, T., Zazanis, M.A.: The new age of information: a tool for evaluating the freshness of information in bufferless processing systems. Queu. Syst. 95(3), 203–250 (2020)

Konstantopoulos, P., Zazanis, M.: Sensitivity analysis for stationary and ergodic queues. Adv. Appl. Prob. 24(3), 738–750 (1992)

Kosta, A., Pappas, N., Angelakis, V.: Age of information: a new concept, metric, and tool. Found. Trends Netw. 12(3), 162–259 (2017)

Kosta, A., Pappas, N., Ephremides, A., Angelakis, V.: Age and value of information: non-linear age case. In: Proceedings of IEEE ISIT (2017)

Sun, Y., Kadota, I., Talak, R., Modiano, E.: Age of Information: A New Metric for Information Freshness. Synthesis Lectures on Communication Networks (2019)

Yates, R., Sun, Y., Brown, D., Kaul, S., Modiano, E., Ulukus, S.: Age of information: an introduction and survey. IEEE J. Sel. Areas Commun. 39(5), 1183–1210 (2021)

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

T. Konstantopoulos: research supported by Cast. Co. IIS-75.

Appendices

Appendix A: Proof of Theorem 2 by means of Markov embedding

We make use of (11) of Proposition 1 which needs computation of the quantities involving \(\alpha (0)\) in its right-hand side. The analog of Lemma 2 is Lemma 10 below which looks conspicuously the same. In fact, the first two formulas are identical. The last two differ.

Lemma 10

For \({\mathcal {P}}_2\),

Proof

-

1)

When \(K_{-1}=0, K_0=0\) or when \(K_{-1}=0,K_0=1\) the AoI is the same as in the \({\mathcal {B}}_2\) system, the reason being that the number of messages in the system is always at most 1, see Fig. 3.

-

2)

Suppose next that \(K_{-1}=1,K_0=0\). In Fig. 19, we depict the two scenarios corresponding to the possible values of \(K_{-2}\), namely \((K_{-2},K_{-1},K_0)=(0,1,0)\) or (1, 1, 0). In both cases, (3) and the system dynamics imply that

$$\begin{aligned} \alpha (0) = S_0-S_{-1}+V, \end{aligned}$$V is the time elapsed between the last arrival in the interval \((S_{-2},S_{-1})\) and \(S_{-1}\). If there is only one arrival in this interval, then \(V:=S_{-1}-T_{0}\). In any case,

$$\begin{aligned}&\text {conditionally on }\{K_{-1}=1,K_0=0\}, \text { the random variables} V \text { and }S_0-S_{-1}\\&\quad \text { are independent.} \end{aligned}$$Therefore,

$$\begin{aligned} {\mathbb {E}}^0[e^{-s (S_0-S_{-1}+V)} \mid K_{-1}= & {} 0,K_0=1] = {\mathbb {E}}^0[e^{-s (S_0-S_{-1})} \mid K_{-1}=0,K_0=1]\,\\ {\mathbb {E}}^0[e^{-s V} \mid K_{-1}= & {} 0,K_0=1] \end{aligned}$$and the first factor on the right is easy:

$$\begin{aligned} {\mathbb {E}}^0[e^{-s (S_0-S_{-1})} \mid K_{-1}=0,K_0=1] \;=\; \int _0^\infty e^{-s x} \frac{e^{-\lambda x} dG(x)}{{\hat{G}}(\lambda )} \;=\; \frac{{\hat{G}}(s+\lambda )}{{\hat{G}}(\lambda )}. \end{aligned}$$To evaluate \({\mathbb {E}}^0[e^{-s V} \mid K_{-1}=0,K_0=1]\), we note that V is the distance of the last Poisson point inside the interval \((T_{-1},S_{-1})\) (in the left scenario of Fig. 19) or the interval \((T_{-1},S_{-1})\) in the right scenario. (In both cases, the length of the interval is that of a message size conditioned on containing at least one Poisson point.) To obtain the Laplace transform of V look backward in time starting from \(S_{-1}\) until the first Poisson point appears and condition on the event that this occurs between \(S_{-1}\) and \(S_{-2}\). Thus, the density of V at \(v>0\) is

$$\begin{aligned} \frac{(1-G(v))\lambda e^{-\lambda v}}{1-{\hat{G}}(\lambda )}, \end{aligned}$$which gives

$$\begin{aligned} {\mathbb {E}}^0[e^{-s V} \mid K_{-1}=0,K_0=1]= & {} \int _0^\infty e^{-sv} \frac{\lambda e^{-\lambda v} (1-G(v))}{1-{\hat{G}}(\lambda )} dv \nonumber \\= & {} \frac{\lambda }{\lambda +s} \, \frac{1-{\hat{G}}(s+\lambda )}{1-{\hat{G}}(\lambda )} . \end{aligned}$$(46)Putting these together, we obtain (44).

-

3)

Finally, assume that \(K_{-1}=1,K_0=1\). This situation is similar to the previous one and thus, will be treated succinctly. We are guided by Fig. 20. Firstly, we have

$$\begin{aligned} {\mathbb {E}}^0[e^{-s (S_0-S_{-1})} \mid K_{-1}=1,K_0=1]= & {} \int _0^\infty e^{-s x} \frac{1-e^{-\lambda x} dG(x)}{1-{\hat{G}}(\lambda )}\\= & {} \frac{{\hat{G}}(s)-{\hat{G}}(s+\lambda )}{1-{\hat{G}}(\lambda )}. \end{aligned}$$

Secondly, the argument used to derive (46) can be used here again with no changes to obtain

Putting these together, we obtain (44) as well. \(\square \)

The formula for \({\mathbb {E}}e^{-s \alpha (0)}\) in Theorem 2 is now clear:

Proof

Adding up (42) and (43) of Lemma 10 and similarly (44) and (45), we obtain

Substituting these expressions in the numerator of (11), and recalling the definition (19) of \(G_I\), we obtain (25). \(\square \)

An alternative expression for (25) is:

Corollary 4

For \({\mathcal {P}}_2\) with exponential message sizes,

and, with \(\rho =\lambda /\mu \), the standard deviation of \(\alpha (0)\) under \({\mathbb {P}}\) is

The expectation and variance have been computed by summing up the expectations and variance of the three independent random variables comprising \(\alpha (0)\). Inverting \({\mathbb {E}}e^{-s \alpha (0)}\) shows that \(\alpha (0)\) has density (5), i.e., when \(\mu =1\):

if \(\lambda \ne 1\) where

else if \(\lambda = 1\),

It is easy to see from (48) that \(\lim _{\lambda \rightarrow \infty } {\mathbb {E}}[e^{-s\alpha (0)}] = (\mu /(s+\mu ))^2\), the sum of 2 i.i.d. exponentials.

Appendix B: Analysis of the \({\mathcal {P}}_{2,\theta }\) system

We now analyze the system described in Sect. 7.

Given \(K_{-1}=0\), consider Fig. 21 at right.

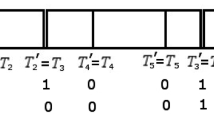

Number of messages in the system (black line) when \(K_{-1}=0,K_0=0\) (left) and \(K_{-1}=0,K_0=1\) (right). A black dot indicates a (successful) message departure. A green dot indicates an arrival of a message that will successfully depart. A red dot indicates an arrival of an unsuccessful message. If the fate of an arrival is not indicated in the figure, then it has no indicating dot

A message service period is successful with probability

So, considering the successful service period which concludes at \(S_0\):

Given \(K_{-1}=1\), consider Fig. 22 below.

Use the memoryless property of interarrivals to similarly obtain

So, \(K_n\) is an i.i.d. Bernoulli sequence with

The queueing process over consecutive intervals \([S_{i-1},S_i)\) and \([S_i,S_{i+1})\) is conditionally independent given \(K_i\). Thus, \(\{S_i,K_i\}_{i\in {\mathbb {Z}}}\) is Markov-renewal with renewal times \(S_i\) and the queueing process is semi-Markov [4]. In particular, \(\alpha (S_i)\) and \(S_{i+1}-S_i\) are conditionally independent given \(K_i\).

As Proposition 1, we have:

Proposition 3

The Laplace transform of the stationary AoI distribution is

where \({\mathbb {P}}^0\) and \({\mathbb {E}}^0\) are, respectively, probability and expectation given \(S_0=0\).

Proof

By the Palm inversion formula [2],

where the numerator

\(\square \)

To calculate the terms in (50), we need to follow the steps outlined in the lemmas below. Let \({\hat{F}}_0(s)= {\hat{G}}(s+\lambda )/{\hat{G}}(\lambda )\) and \({\hat{J}}(s) = \int _0^\infty e^{-s y} dJ(y)\) where \(J(y)= 1\) for \(y\ge \theta \) and, for \(0\le y<\theta ,\)

Lemma 11

Proof

See Fig. 21 at left and consider the interval \([S_{-1},S_0)\). Let \(\tau _{-1} \) be first message arrival time in this interval minus \(S_{-1}\), so that \(\tau _{-1}\sim \exp (\lambda )\) by the memoryless property. Note that there is a geometric number N of interarrival times each of which is smaller than both \(\theta \) and the associated service time; \(N=2\) in Fig. 21. Again, the probability of such unsuccessful service is

So, \({\mathbb {P}}(N=k)=(1-q) q^k\) for \(k=0,1,2, \ldots \). Let \(Y {\mathop {=}\limits ^{\text {(d)}}}(\tau | \tau < \theta \wedge \sigma )\) so that \({\mathbb {P}}(Y\le y)=J(y)\). Finally, let \(\tau _0\sim \exp (\lambda )\) be the duration between the arrival time (green dot) of the message that departs at \(S_0\) and the next arrival time. The service time (from the green dot to \(S_0\)) \(\sigma _0\) is independent of \(\tau _0\). Considering the prior N unsuccessful service completions, we are given that \(\tau _0 >\sigma _0\) or \(\tau _0>\theta \). Given \(K_0=0\), \(\tau _0>\sigma _0\). Let \(X_0 {\mathop {=}\limits ^{\text {(d)}}}(\sigma _0|\tau _0>\sigma _0)\) which has distribution

with \({\mathbb {E}}e^{-s X_0}={\hat{F}}_0(s)\). So,

a sum of independent terms with \(Y_n {\mathop {=}\limits ^{\text {(d)}}}Y\). Also, \(\alpha (S_0) {\mathop {=}\limits ^{\text {(d)}}}X_0\) in this case. \(\square \)

Define

Lemma 12

Proof

See Fig. 21 at right. The difference between this and the previous case is that here \(\sigma _0>\tau _0>\theta \). So, the distribution of \(X_1 {\mathop {=}\limits ^{\text {(d)}}}(\sigma _0\mid \sigma _0>\tau _0>\theta )\) is \(dF_1(x)\). \(\square \)

Define

Lemma 13

Proof

See Fig. 23 below.

For \(\alpha (S_0)\), there are two subcases depending of whether there are initial unsuccessful arrivals in the interval \([S_{-1},S_0)\), i.e., whether \(N>0\). When \(N>0\) (Fig. 23 right), this case is like when \(K_{-1}=0,K_0=0\). Otherwise (Fig. 23 left),

where V is the duration between \(S_{-1}\) the last arrival before \(S_{-1}\) (green dot), and V and \(S_0-S_1\) are independent given \(K_{-1}=1\). Starting from \(S_{-1}\), look backward in time until the first Poisson point appears (green dot) and condition on the event that this occurs at least \(\theta \) units of time before the service time ends. Thus, \(V {\mathop {=}\limits ^{\text {(d)}}}(\tau |\tau <\sigma -\theta ,\sigma >\theta )\) with distribution H. Also, \(S_0-S_{-1}\) is distributed as for the case where \(K_{-1}=0,K_0=0\) except the first interarrival time is absent. \(\square \)

Lemma 14

Proof

See Fig. 22. For \(S_0-S_{-1}\), this case is a combination of the previous two cases, and for \(\alpha (S_0)\) follow the previous case except use (54) instead of (52). \(\square \)

The final stage: The formulas obtained in the lemmas above must now be substituted into (50) as follows:

So,

Moreover,

Appendix C: High traffic asymptotics

“High traffic asymptotics” refers to the regime \(\rho =\lambda /\mu \rightarrow \infty \). Even though we have no explicit formulas for \({\mathcal {P}}_n\) or \({\mathcal {B}}_n\) when \(n \ge 3\), we can easily obtain asymptotics from the system dynamics.

Proposition 4

Let \(\sigma , \sigma _1, \sigma _2, \ldots \) be i.i.d. copies of \(\sigma \). Let \(\sigma _I\) be distributed as \({{\hat{G}}}_I\) as in (19). Then,

while

Sketch of proof

In both systems, the buffer consists of n cells. The message being processed sits in cell 1. In \({\mathcal {P}}_n\), the freshest message is either in cell 1, in which case all other cells are empty, or in cell 2. When \(\rho \) is high, there is always a message being processed in cell 1 and the freshest message is in cell 2. Hence, at any time t, the AoI \(\alpha _{{\mathcal {P}}_n}(t)\) equals the service time of the message in cell 2 plus the remaining service time of the message in cell 1. These are two independent random variables. The first one is distributed as \(\sigma \). The second is distributed as \(\sigma _I\) since the system is stationary. For \({\mathcal {B}}_1\), we can obtain the limit from the Laplace transform of (28). It is easy to see that \(\lim _{\rho \rightarrow \infty } {\mathbb {E}}[e^{-s \alpha _{{\mathcal {B}}_1}}] = {{\hat{G}}}(s) \, {{\hat{G}}}_I(s)\) and so \(\alpha _{{\mathcal {B}}_1} \xrightarrow [\rho \rightarrow \infty ]{\mathrm{d}} \sigma + \sigma _I\). For general n, when \(\rho \) is high, the AoI \(\alpha _{{\mathcal {B}}_n}(t)\) equals the remaining service time of the message in cell 1 (in distribution equal to \(\sigma _I\)) plus the time elapsed until the beginning of its service which is, in distribution, equal to the sum of n independent service times. \(\square \)

Remark 2

When \(\sigma =1\) with probability 1, \(\sigma _I\) is a uniform random variable in the interval [0, 1]. Hence, \(\sigma _1+\cdots +\sigma _n+\sigma _I = n + \sigma _I\) and the variance of this random variable is 1/12. Similarly, for \({\mathcal {P}}_2\), the asymptotic variance is again 1/12.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Kesidis, G., Konstantopoulos, T. & Zazanis, M.A. Age of information using Markov-renewal methods. Queueing Syst 103, 95–130 (2023). https://doi.org/10.1007/s11134-022-09852-w

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11134-022-09852-w