Abstract

Researchers have improved travel demand forecasting methods in recent decades but invested relatively little to understand their accuracy. A major barrier has been the lack of necessary data. We compiled the largest known database of traffic forecast accuracy, composed of forecast traffic, post-opening counts and project attributes for 1291 road projects in the United States and Europe. We compared measured versus forecast traffic and identified the factors associated with accuracy. We found measured traffic is on average 6% lower than forecast volumes, with a mean absolute deviation of 17% from the forecast. Higher volume roads, higher functional classes, shorter time spans, and the use of travel models all improved accuracy. Unemployment rates also affected accuracy—traffic would be 1% greater than forecast on average, rather than 6% lower, if we adjust for higher unemployment during the post-recession years (2008 to 2014). Forecast accuracy was not consistent over time: more recent forecasts were more accurate, and the mean deviation changed direction. Traffic on projects that opened from the 1980s through early 2000s was higher on average than forecast, while traffic on more recent projects was lower on average than forecast. This research provides insight into the degree of confidence that planners and policy makers can expect from traffic forecasts and suggests that we should view forecasts as a range of possible outcomes rather than a single expected outcome.

Similar content being viewed by others

Introduction

In his review of the 50-year history of travel forecasting, Hartgen (2013) said, “The greatest knowledge gap in US travel demand modeling is the unknown accuracy of US urban road traffic forecasts.” Researchers have improved travel demand forecasting methods in recent decades but invested relatively little in understanding their accuracy. This underinvestment is unfortunate because accurate forecasts improve decisions about the evaluation, selection and design of transportation projects.

The absence of data has been the major barrier to the study of travel forecast accuracy (Nicolaisen and Driscoll 2014). This deficiency arose because accumulating the data needed for retrospective analysis requires proactive planning. The responsible agencies do not commonly preserve and archive forecasts, and so often lose these data. Long project development cycles and staff attrition make recovering this information cumbersome.

In National Cooperative Highway Research Program Project 08-110, we assembled those data, compiling a database of forecast traffic and post-opening traffic counts for 1291 road projects in six states in the United States (US) and four European countries. This resulted in the largest known database of forecast accuracy. We used those data to assess the accuracy of the forecasts, and identify the factors related to better or worse accuracy. The data points span from 1960 to 2017 and do not consider the effects of reduced travel due to COVID-19.

The final report from that project, NCHRP Report 934 (Erhardt et al. 2020), contains detailed descriptions of the data cleaning and exploration, several case studies identifying the causes of inaccuracy, and recommendations for improving practice. This paper represents a distinct and independent extension of the NCHRP study. In addition to summarizing key findings for the readers of Transportation, we extend the analysis here to consider how and why forecast accuracy changes over time, to examine the effect of the Great Recession on forecast accuracy, and to place our work more clearly in the context of the academic literature.

Literature review

Most past studies of travel demand forecast accuracy focused on transit capital projects and toll roads. We review these briefly before turning our attention to toll-free road projects, which were the focus of this study, and considering past explanations for forecast inaccuracy.

Ridership on major transit projects often fell short of forecasts, with observed ridership 16 to 44% less than forecast on average (Webber 1976; Pickrell 1989; Kain 1990; Button et al. 2010; Nicolaisen and Driscoll 2014; Schmitt 2016). The Federal Transit Administration (FTA) found that transit forecasts became more accurate over time, and analysts attributed that improvement to better scrutiny of travel forecasts and improved analytical tools (Spielberg et al. 2003; Lewis-Workman et al. 2008). Furthermore, Voulgaris (2019) noted that this inaccuracy improved by 2% every year.

Like transit projects, the post-opening traffic on toll roads was lower on average than forecast. Counted toll road traffic was an average of 23% less than forecast in a global sample (Bain and Polakovic 2005; Bain 2009), 45% lower in an Australian sample (Li and Hensher 2010), and opening-year revenue was 41% less than forecast on a sample of 26 toll roads in the US (Kriger et al. 2006).

In contrast to transit and toll roads, most studies found that post-opening traffic on free roads was 3–11% higher on average than forecast (Nicolaisen and Driscoll 2014). The most diverse samples were a study of 183 large road projects (both tolled and free) in 14 countries that found counted traffic was an average of 9.5% higher than forecast (Flyvbjerg et al. 2005), and a study of 146 road projects in Denmark, Norway, Sweden and the United Kingdom (UK) that found counted traffic was on average 11% higher than forecast (Nicolaisen 2012). Most other studies of road forecast accuracy analyzed a single state or country. Welde and Odeck (2011) found counted traffic on Norwegian road projects 19% higher than forecast. Parthasarathi and Levinson (2010) examined the accuracy of traffic forecasts for one city in Minnesota and found forecasts underestimating counted traffic, especially for high volume roads. Buck and Sillence (2014) evaluated 131 forecasts in Wisconsin and determined that the mean absolute percent difference between forecast and counted traffic was 16%. Giaimo and Byram (2013) analyzed over 2000 traffic forecasts for road segments in Ohio produced between 2000 and 2012 and found that counts were slightly lower than forecast, but the difference was within the standard error of traffic count data. In contrast to most studies, Miller et al. (2016) reported that counts were lower than forecast, with a median percent error of 31% for 39 road projects in Virginia. While the average difference for free roads was in the opposite direction to and smaller in magnitude than for transit and toll roads, each study of the topic showed substantial forecast inaccuracies.

Studies have identified several factors as potential sources of forecast errors, including inaccurate forecasts of travel model inputs, model specifications, operational changes, construction delays and land use changes (Flyvbjerg 2007; van Wee 2007; Parthasarathi and Levinson 2010; Hartgen 2013). In addition to technical limitations, average toll and transit volumes could be lower than forecast because of cognitive biases (Duru 2014), “strategic misrepresentation” (Flyvbjerg 2007), or selection bias where transportation agencies are more likely to fund and build proposed projects with higher forecast demand (Eliasson and Fosgerau 2013). We might expect these same factors to cause all types of travel demand to be less than forecast, but several past studies showed toll-free road traffic was on average higher than forecast instead (Flyvbjerg et al. 2005; Parthasarathi and Levinson 2010; Welde and Odeck 2011; Nicolaisen 2012; Nicolaisen and Driscoll 2014). One possible reason is that, at least in the United States, many road projects are funded through formula-based grants to the states, so a demand forecast may have less effect on whether a specific road project is funded. Another reason may be that road project appraisal often focuses on congestion relief, but travel demand models that do not adequately capture induced traffic demand may produce erroneous forecasts for such projects (Næss et al. 2012; Antoniou et al. 2011; Volker et al. 2020). If a forecast were to project too little traffic, it would also project too little congestion and may therefore overestimate the benefits (Næss et al. 2012).

Identifying the causal mechanisms for inaccuracy has been challenging for even the most robust studies (Nicolaisen and Driscoll 2014), often due to the lack of necessary data. For example, Anam et al. (2020) found that measured factors could only explain a quarter of the variation between forecasts and counts. In this study, we compiled and analyzed data that were not previously available as a contribution to the effort.

Methods and data

Many others have conducted case studies of particular projects, but this paper presents a statistical analysis of a large sample of projects, and therefore constitutes a large-N study (Flyvbjerg et al. 2005). Large-N analysis aims to determine how close the forecasts are to observed volumes (Miller et al. 2016). Consistent with Flyvbjerg et al. (2006) and others, we expressed the percent difference in counted traffic from the forecast as:

where PDF is the percent difference from forecast. Negative values indicate that the counted volume was lower than the forecast, and positive values indicate the counted volume was higher than the forecast. It expresses the deviation relative to the forecast, so provides meaningful information when making a forecast. While some authors refer to this expression as Percent Error (PE), we prefer the PDF terminology because it makes the directionality clear.

Whereas PDF measures accuracy for a single project, we are interested in measuring accuracy across a sample of projects. Accuracy is comprised of trueness (lack of bias) and precision, as Fig. 1 illustrates (Collaboration for Nondestructive Testing n.d.). In the context of scientific measurement, trueness is the agreement between the average of a large series of measurements and the true value, and precision is the agreement between repeated measurements of the same quantity (ISO 5725-1 1994). These terms do not explain why an outcome occurred: an error does not imply a mistake, and bias does not imply a lack of objectivity.

Several differences arose when we translated these terms into the context of traffic forecasting. A simple rendition would take the post-opening traffic count as representative of the true value and the forecast as a measurement. However, a count is itself a measurement subject to substantial error from temporal variation and traffic mix (Ismart 1990; Horowitz et al. 2014). A forecast, on the other hand, is distinct from a measurement because of the time between making a forecast and observing an outcome. In addition, we rarely have repeated traffic forecasts for the same road project, so could not measure precision through repeated measurements. Nonetheless, distinguishing between the components of accuracy is useful. Instead of trueness, we reported the mean and median PDF as measures of the overall deviation. Instead of precision, we reported half the difference between the 5th and 95th percentiles as a measure of the spread of outcomes after adjusting for the average deviation. We separately reported the mean absolute PDF (MAPDF) as a measure of the general accuracy.

We compiled a database containing forecast information from six participating states (Florida, Michigan, Minnesota, Ohio, Massachusetts and Wisconsin) and four European countries (Denmark, Sweden, Norway and the United Kingdom). The sources included Department of Transportation (DOT) databases, project forecast reports and/or traffic/environmental impact statements as well as databases from other published studies (Parthasarathi and Levinson 2010; Nicolaisen 2012; Giaimo and Byram 2013; Buck and Sillence 2014; Marlin Engineering 2015; Miller et al. 2016). The database includes information on each project (unique project ID, improvement type, facility type, location), forecast (forecast horizon year, methodology etc.) and the post-opening traffic count information. The data contain a diversity of projects, including new roads, road widenings, interchange reconstructions, safety and operational improvements and pavement resurfacings.

In total, the database contains reports for 2611 unique projects, and for 16,697 road segments that comprise those projects. Some of the projects had not yet opened; some of the segments did not have traffic count data associated with them, and others did not pass the quality control checks for inclusion in statistical analysis. While we retained all records for future use, we based our analysis on a subset of 1291 projects and 3912 segments, as Table 1 shows. Most participating agencies compiled the data retrospectively. For some jurisdictions, we only have data for a few projects, and those projects tend to be larger in scope and therefore better documented. In contrast, for Agency E, we have data for nearly every forecast made starting in the early 2000s, comprising 44% of our sample. (Because their management directed them to clean their office, staff from this agency also provided about two dozen boxes of paper records for these projects. We organized and digitized the basic attributes of these projects and included them in our database.)

As Flyvbjerg (2005) recommends, we evaluated opening-year conditions. We defined the opening-year as the first post-opening year with traffic count data available. If we had multiple forecasts for a single project (such as opening-year and design-year forecasts, usually 20 years after project opening), we used the forecast closest to the opening-year. To make the comparison in the same year, we held the counts constant and scaled the forecast to the year of the count using the growth rate implied by opening and design year forecasts, and a standard growth rate of 1.5% if they were unavailable.

Projects did not always open in the year anticipated. This happened if a project was delayed, if a forecast was for an alternative design that was not built, or if funding priorities changed. We usually knew when delays occurred for large projects. For smaller projects we could not always determine when and if construction finished because DOTs do not necessarily link forecast records to construction records. Where we could not verify the project completion date (for 488 projects out of the 1291 in our analysis), we assumed that maintenance, minor construction or low risk projects were completed within 1 year of planned opening, and that major construction projects took 2 years beyond that. This assumption reduced the risk of including counts collected prior to the project opening. Because most projects took place on existing facilities, pre-opening counts are often available but may be affected by construction activity.

When comparing forecasts and counts, we compared the average daily traffic (ADT), although its exact definition depended upon the source. Some agencies provided data as average annual daily traffic (AADT), some as average weekday daily traffic (AWDT), and some as typical weekday traffic, which usually was for non-holiday weeks with school in session. The units were not always clear in the data, so they may vary between agencies, but we assumed consistency between forecasts and counts within an agency.

Often, the forecasts included estimates of traffic on multiple road segments. These estimates were likely correlated, such as for different directions of flow on the same road or for two road segments aligned end-to-end. Rather than retain separate observations for each segment, we aggregated them to a single project-level observation by averaging the forecast and observed traffic volume for all segments with available forecast and count data.

In addition to the forecast and counted traffic volumes, we compiled the attributes for each project as Table 2 shows. Not all attributes were available for every project, usually because those data were not recorded when the forecast was made.

Table 2 also indicates the percent of projects with each attribute available. Different agencies also have different practices for recording attributes such as functional class, improvement type and forecast method, so we mapped those attributes to common categories.

We compiled these data based on their availability and they do not represent a random sample of transportation projects. We analyzed projects opening between 1970 and 2017, with about 90% opening in 2003 or later. We do not have details about the nature and scale of some projects, but earlier projects were often major infrastructure capital investment projects and later projects were often routine resurfacing projects on existing roadways. This trait of the database occurs because some state agencies began routine tracking of all forecasts only within the past 10 to 15 years and, in earlier years, retained only information for major investments. Similarly, the type of project, the methods used, and the specific data recorded all differ because of the practices of the agencies providing the data.

Results and discussion

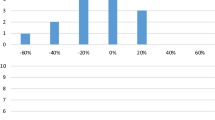

Figure 2 shows the overall PDF distribution, replicated here from NCHRP 934 (Erhardt et al. 2020) with permission from TRB, which reveals that counted traffic was lower than forecast on average. About 68.5% of projects had traffic lower than forecast. The mean PDF was − 5.6% and the mean absolute PDF was 17.3%. The 5th percentile PDF was − 37.6% and the 95th percentile PDF was + 36.9%. The average difference was opposite in direction from the results of most previous studies of toll-free road traffic forecasts. This difference reflects the composition of the sampled projects, whether by location, type of project, year, or some other factor. We explore how the accuracy relates to such factors in the rest of this section.

Distribution of percent difference from forecast (Erhardt et al. 2020)

NCHRP Report 255 (Pedersen and Samdahl 1982) provided recommendations on the maximum desirable deviation of a traffic assignment model from base year traffic counts. According to this guidance, assignment deviation should not result in a design deviation of more than one highway travel lane. NCHRP Report 765 described this as the “half-lane rule” and extended it by considering the approximate error in traffic counts, in the expectation that an assignment model would not reasonably have less error than traffic counts (Horowitz et al. 2014). In Fig. 3, we plotted the absolute PDF against the forecast volume, and overlaid the maximum desirable deviation and expected deviation of traffic counts. We found that 83.8% of forecasts fell within the maximum desirable deviation, and 46.5% of forecasts had less deviation than expected of traffic counts.

To more explicitly test forecasts against the half-lane rule, we calculated the number of lanes required for forecast traffic and counted traffic on each road segment, assuming the same Level of Service. Some 36 segments out of 3912 (1.0%) would have required an additional lane to allow the traffic to flow at the forecast level of service (LOS). Conversely, forecasts for 158 links (4.2%) over-estimated the traffic by an amount such that they could provide adequate service with fewer lanes per direction; 92 of those links were interstate highways, 64 were principal arterials and the rest were minor arterials.

Table 3 presents the statistical measures of available categorical variables. We discuss the values below.

Jurisdiction

We observe that some agencies have more accurate forecasts than others, although the sample sizes are small for some and we do not know whether this accuracy is due to better forecasting techniques or a different mix of projects. We noted previously that Agency E recorded nearly every forecast they made since the early 2000s, comprising 44% of our sample. The Agency E projects have a lower absolute deviation (MAPDF of 13.7% compared to 20.1% for the rest), which may relate to including more routine projects. However, their average deviation is more negative (mean PDF of − 9.5% against − 2.7%), which may be because many of these projects opened in the wake of the Great Recession.

Functional class

The results show that forecasts were more accurate on higher functional class facilities. Higher functional class roads carry more traffic than other road classes, so a similar absolute deviation is associated with a smaller percent deviation. In addition, smaller facilities may be more affected by zone size and network coding details where all traffic from a traffic analysis zone may enter the road network at one location, leading to uneven traffic assignment outputs.

Area type

The results show little difference between the accuracy of forecasts in rural or mostly rural counties versus those in urban counties.

County population growth

We further grouped projects based on whether they were in counties with growing, stable or declining population between the start year and the opening year. Counted traffic in counties experiencing more than 1% growth was about 12.8% less than forecast on average, compared to 7.9% and 8.6% less in counties with declining or stable population. This result suggests that when a large share of the forecast traffic is due to expected population growth, as might be expected in a growing county, there is a risk that the traffic growth does not materialize.

Project type

The traffic on routine maintenance projects (resurfacing or repaving) was on an average lower than forecast. Capacity expansion projects had average counts slightly exceeding forecasts and were less accurate. The difference could reflect capacity expansion projects generating more induced traffic. Forecasts for the construction of new roads were more accurate than forecasts on existing roads, but the sample size was small.

Forecast method

A Large-N analysis such as this offers the potential to assess the performance of tools available to forecasters, although we were limited to those recorded in the data. Regional travel demand models produced more accurate forecasts than traffic count trends. Some forecasters used professional judgment to combine count trends and volume from a demand model. The resulting forecasts were almost as accurate as those based on models alone, suggesting that considering count trends worsened rather than improved traffic forecasts. We do not know the forecast method for about half the projects with a large percentage (562 out of 676 projects) of those in the jurisdiction of Agency E, which did not record that information.

Agency type

Relative to state DOTs, consultants produced forecasts with a more negative mean difference, but a smaller spread. Projects with an unknown agency type have a smaller spread than either and almost all of these projects are under the jurisdiction of Agency E (562 out of 563). We do not know whether the differences between consultant- and DOT-prepared forecasts are meaningful. It is possible that consultants lead forecasts for more complex and challenging projects, or it is possible that the differences instead relate to practices that vary across jurisdictions.

Time span

We defined the time span as the number of years between the start year and the year of count. Forecasts with a span of 5+ years were less accurate, and counts were lower on average than forecasts. The greater the number of years between forecast production and traffic count, the larger the opportunity for changes to have occurred in the economy, land use patterns, fuel prices, and other factors that influence travel. These are all variables that are difficult to predict, but their effects are evident. This finding is consistent with findings by Bain (2009) who concluded that longer-term forecasts are critically dependent on macro-economic projections.

Unemployment rate

Economic conditions that differ from expectations can lead to forecast inaccuracy (Anam et al. 2020). We measured this by examining both the state/country level unemployment rate in the opening year, and the change in unemployment rate from the start year. When the opening year unemployment rates were less than 5%, counted traffic was on average higher than forecast, and when unemployment rates were higher, traffic was lower than forecast. Higher employment rates lead to more traffic as more people commute to and from work. This result highlights the importance of good economic forecasts.

Because a large share of projects in our sample opened during or shortly after the Great Recession, we considered how this unexpected event may affect the accuracy of forecasts. To do so, we measured the accuracy of the same traffic forecasts against a counterfactual world in which the Great Recession did not occur. We did this by holding the forecasts constant and adjusting the traffic counts to offset the high unemployment rates observed from 2008 through 2014. Previous work estimated that median post-opening traffic volumes decrease 3% for each percentage point increase in the unemployment rate (Erhardt et al. 2020). We applied this rate to the difference between the opening-year unemployment rate and the pre-recession (2007) unemployment rate for the same county. This process results in adjusted counts that are higher than the true counts for the 6-year period in which unemployment exceeded its pre-recession levels. Then we compared the forecasts to the adjusted counts, as Table 4 shows.

For projects opening during the 2008 through 2014 period, post-opening counts are on average 8.2% lower than forecast. However, the recession-adjusted counts are 1.9% higher than forecast. When considering all projects, counts are on average 5.6% lower than forecast, but recession-adjusted counts are 1.3% higher than forecast. Figure 4 shows the effect of this adjustment visually, with the count adjustment shifting the distribution to the right and also spreading it out, as observed in the larger difference between the 5th and 95th percentiles. From these results we conclude that the Great Recession was the major cause of the observed shift, but that other factors cause random deviations resulting in the observed spread.

Year

For projects that opened in 2003 or later, traffic was on average lower than forecast; for projects that opened before 2003, traffic counts were on average higher than forecast. The negative deviation from forecasts in older projects aligns with most previous literature on toll-free road projects (Flyvbjerg et al. 2005; Parthasarathi and Levinson 2010; Welde and Odeck 2011; Nicolaisen 2012), but it is interesting that the average difference changes direction for more recent projects. Overall, more recent traffic forecasts were more accurate, as measured by the mean absolute PDF.

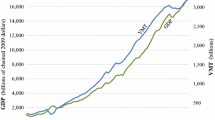

Several factors could explain these changes. First, the difference may be due to the Great Recession. Second, better data and improved forecasting methods may have led to more accurate forecasts. Third, the mix of projects in our data may have driven the change, such as the relative frequency of small versus large projects. We would expect non-capacity increasing projects to generate less induced demand than capacity increasing projects, so if induced demand were an important factor in traffic forecast accuracy, then the project mix matters. Fourth, vehicle miles traveled (VMT) per capita grew rapidly in the 1980s and 1990s. In the 2000s, this trend leveled off and declined, before subsequently rebounding in about 2013. Traffic forecasts might not adequately capture these macro-trends, which may extend beyond the years of the Great Recession and be driven largely by gross domestic product (GDP) per capita and fuel price (Bastian et al. 2016), with possible contributions from other factors such as discount air travel and the substitution of better information and communications technology for travel (Erhardt 2017).

To further consider these possibilities, we plotted the PDF by opening year alongside the vehicle miles traveled (VMT) per capita in the United States (Source: Davis 2019) in Fig. 5. While our data included projects outside the United States, similar VMT trends were observed in Europe (Bastian, Börjesson, and Eliasson 2016). To minimize the impact of changing project types, we excluded repaving projects from Fig. 5. Each point represents a single project, and the blue line is a 5-year rolling average of PDF. The figure shows noticeable correlation between PDF and VMT per capita. While VMT per capita was increasing, counted traffic volumes were higher than forecast, but after VMT per capita peaks, the opposite is true. This relationship suggests that traffic forecasts may not have fully captured the factors driving aggregate VMT trends. This relationship between traffic forecast accuracy, aggregate VMT trends and related factors, such as fuel price and economic growth, warrants further investigation.

Summary of findings

In this research we used a large database to explore the accuracy of road traffic forecasts and document the distribution of counted versus forecast traffic volumes. The descriptive statistics provide insight into the factors affecting forecast accuracy and the changes in accuracy over time. Because we selected projects based on the availability of data, and they did not constitute a random or representative sample of all projects, selection bias may influence these findings. A large portion of the sample comes from one agency that recorded nearly all forecasts since the early 2000s, but inclusion of projects from other agencies is limited to those having sufficient documentation. In addition, several key variables such as forecast method and agency type, are missing for portion of the sample. The missing data are not randomly distributed and instead relate to the practices of the agencies recording the data. Furthermore, 38% of the projects in our sample didn’t record a definite opening year and we created a buffer based on the project type to get the post-opening traffic count. Errors in specifying the project opening affect conclusions about induced demand and can influence forecast accuracy. Despite these limitations, it is appropriate to conclude:

-

1.

Observed traffic was 6% lower than forecast on average, but this difference is due to lower traffic following the Great Recession. If not for higher unemployment rates from 2008 through 2014, the traffic would have been 1% higher than forecast on average. This result was not consistent through time, however, as we note next.

-

2.

The mean absolute difference between measured traffic volumes and forecasts was 17%. In addition, 90% of opening-year traffic volumes were in the range of − 38 to + 37% of the forecast volumes. This spread of outcomes persists after adjusting for the shift due to the Great Recession, suggesting that there are reasons for inaccuracy beyond this unforeseen event. These values highlight that we should not consider traffic forecasts to be point estimate, but a range of possible outcomes.

-

3.

The average deviation changed direction: observed traffic on projects opening before 2003 was higher than forecast but starting in 2003 it was lower. This change is due in part to the effect of the Great Recession, but a notable shift in the average deviation remains even after adjusting for the effect of the economic downturn. Evolving forecasting methods, a different mix of projects, or exogenous trends could explain this shift. We observed this shift even when limiting the analysis to capacity expansion projects, suggesting that changing project types did not fully explain the change. The data showed a possible relationship to aggregate VMT trends. When VMT per capita was uniformly growing from the 1980s through early 2000s, observed traffic was on average higher than forecast. In the 2000s, however, VMT per capita leveled off, declined, then again increased. During this period observed traffic was on average lower than forecast. Evidence suggests that economic and fuel price changes determine much of the VMT change (Bastian et al. 2016). Those same factors may also explain changing traffic forecast accuracy. Future research should aim to untangle these relationships.

-

4.

Traffic forecasts became more accurate over time. In addition to the changes in average deviation noted above, projects opening more recently had a narrower spread of outcomes. Better data and improved forecasting methods may lead to this improvement, or it may relate to broader socioeconomic and project type trends noted above.

-

5.

Traffic forecasts were more accurate for higher volume roads and higher functional classes. The counted volumes on collector and arterial roads were more likely to be lower than the forecasts and percent deviation from forecasts had a greater spread than those on freeways. These challenges may be due to limitations of zone size and network detail, as well as less opportunity for offsetting inaccuracies on smaller facilities.

-

6.

Traffic forecasts were less accurate as the time span lengthens. Forecasts depend on exogenous projections which are more uncertain further into the future. They also depend on estimated relationships between travel behavior and those exogenous factors that may evolve over time. Put simply: it is easier to predict tomorrow’s traffic than it is to predict traffic 10 years into the future.

-

7.

Travel models produced more accurate forecasts than traffic count trends. Travel models are sensitive to the underlying determinants of traffic growth, including land-use changes and road network changes, so they were more accurate than traffic count trends.

-

8.

Some 95% of project forecasts meet the “half-lane rule”. Considering the level of service in each segment, the inaccuracy in forecast would not have affected about 95% of the projects to warrant additional or fewer lanes. A total of 84% of project forecasts fell within the maximum desirable deviation suggested by NCHRP report 765 (Horowitz et al. 2014). These deviations were unlikely to affect a project decision about the number of lanes on a highway.

The descriptive analysis presented here provides insight into the degree of confidence that planners and policy makers can expect from traffic forecasts. While traffic forecasts have improved, substantial deviation between counts and forecasts remains, and the data reveal several factors related to accuracy. Among these are economic conditions, and we found evidence of a major unforeseen event—the Great Recession—causing a systematic shift in accuracy. We are now in a much different disruption due to COVID-19. Our results are retrospective—they did not anticipate COVID-19, nor do they reflect future changes such as the impact of automation or other technology changes. It is reasonable to expect that there may be some major disruptive event within the scope of our next long-range forecasts, and our results should open a discussion on communicating uncertainty in forecasting.

Moreover, such events are not the only factors contributing to forecast inaccuracy as a substantial spread of percent difference from forecasts remains after adjusting for the recession. Factors like forecast methodology, forecast horizon and project type affect the accuracy as demonstrated in this paper, along with other unknown or unquantified factors. Among the factors we could not account for here are inaccurate travel model inputs, such as exogenous population, employment and fuel price forecasts. Research investigating the causes of traffic forecast inaccuracy for several “deep dives” reveals that model inputs account for an important portion of the forecast deviation, and their contribution varies substantially between projects (Erhardt et al. 2020).

Instead of dismissing forecasts as inherently subject to error, we recommend that agencies make forecasts more useful and more believable by acknowledging uncertainty as an element of all forecasting. Forecasts should not be a singular outcome, but a range of possible outcomes. In related work, we demonstrated how to estimate uncertainty windows around traffic forecasts from their historical accuracy (Hoque et al. in-review). Planners can combine uncertainty windows with decision intervals to determine whether a forecast deviation would change a project decision (Anam et al. 2020).

To facilitate further exploration of these data we created a repository that contains data for the 1291 projects analyzed in this paper (also provided as Online Resource 1) and an additional 1320 projects that had not opened to traffic when the analysis was completed. We invite others to further explore these data and to proactively collect data on their own forecasts and evaluate those forecasts. NCHRP 934 provides instructions for accessing and contributing to this repository and offers advice about establishing a systematic process of data collection and evaluation. Additional systematically collected data will enable future research to identify sources of inaccuracy, compare the accuracy of different types of travel models, and guide the development of more accurate forecasting methods. More accurate traffic forecasts and greater understanding of factors that influence accuracy will contribute to more efficient allocation of resources and build public confidence in the agencies that produce those forecasts.

Availability of data and materials

The data supporting this paper are available in the supplementary materials (Online Resource 1). A repository with additional data can be found at: https://github.com/uky-transport-data-science/forecastcarddata.

Code availability

Code in support of the data repository can be found at: https://github.com/uky-transport-data-science/forecastcards.

References

Anam, S., Miller, J.S., Amanin, J.W.: Managing traffic forecast uncertainty. ASCE-ASME J. Risk Uncertain. Eng. Syst. Part A Civil Eng. 6(2), 04020009 (2020). https://doi.org/10.1061/AJRUA6.0001051

Antoniou, C., Psarianos, B., Brilon, W.: Induced traffic prediction inaccuracies as a source of traffic forecasting failure. Transp. Lett. 3(4), 253–264 (2011). https://doi.org/10.3328/TL.2011.03.04.253-264

Bain, R.: Error and optimism bias in toll road traffic forecasts. Transportation 36(5), 469–482 (2009). https://doi.org/10.1007/s11116-009-9199-7

Bain, R., Polakovic, L.: Traffic Forecasting Risk Study Update 2005: Through Ramp-up and Beyond. Standard & Poor’s, London. http://toolkit.pppinindia.com/pdf/standard-poors.pdf (2005). Accessed 06 Oct 2016

Bastian, A., Börjesson, M., Eliasson, J.: Explaining ‘peak car’ with economic variables. Transp. Res. Part A Policy Pract. 88(June), 236–250 (2016). https://doi.org/10.1016/j.tra.2016.04.005

Buck, K., Sillence, M.: A Review of the accuracy of Wisconsin’s traffic forecasting tools. In: Transportation Research Board 93rd Annual Meeting. https://trid.trb.org/view/2014/C/1287942 (2014). Accessed 06 Oct 2016

Button, K.J., Doh, S., Hardy, M.H., Yuan, J., Zhou, X.: The accuracy of transit system ridership forecasts and capital cost estimates. Int. J. Transp. Econ. Riv. Int. Econ. Trasp. 37(2), 155–168 (2010)

Collaboration for Nondestructive Testing. n.d.: Uncertainty Terminology. https://www.nde-ed.org/GeneralResources/ErrorAnalysis/UncertaintyTerms.htm. Accessed 2 July 2020

Davis, J.: U.S. VMT Per Capita By State, 1981–2017. Eno Center for Transportation (blog). https://www.enotrans.org/eno-resources/u-s-vmt-per-capita-by-state-1981-2017/ (2019). Accessed 7 June 2019

Duru, O.: The role of predictions in transport policy making and the forecasting profession: misconceptions, illusions and cognitive bias. SSRN Electron. J. (2014). https://doi.org/10.2139/ssrn.2442658

Eliasson, J., Fosgerau, M.: Cost overruns and demand shortfalls—deception or selection? Transp. Res. Part B Methodol. 57, 105–113 (2013). https://doi.org/10.1016/j.trb.2013.09.005

Erhardt, G.D.: Conceptual models of the effect of information and communications technology on long-distance travel demand. Transp. Res. Rec. J. Transp. Res. Board 2658, 26–34 (2017). https://doi.org/10.3141/2658-04

Erhardt, G., Schmitt, D., Hoque, J., Chaudhary, A., Rapolu, S., Kim, K., Weller, S., et al.: Traffic forecasting accuracy assessment research. National Cooperative Highway Research Program NCHRP Report 934. Transportation Research Board (2020). https://doi.org/10.17226/25637

Flyvbjerg, B.: Measuring inaccuracy in travel demand forecasting: methodological considerations regarding ramp up and sampling. Transp. Res. Part A Policy Pract. 39(6), 522–530 (2005). https://doi.org/10.1016/j.tra.2005.02.003

Flyvbjerg, B.: Policy and planning for large-infrastructure projects: problems, causes, cures. Environ. Plan. B Plan. Des. 34(4), 578–597 (2007). https://doi.org/10.1068/b32111

Flyvbjerg, B., Holm, M.K.S., Buhl, S.L.: How (in)accurate are demand forecasts in public works projects? The case of transportation. J. Am. Plan. Assoc. 71(2), 131–136 (2005)

Flyvbjerg, B., Skamris Holm, M.K., Buhl, S.L.: Inaccuracy in traffic forecasts. Transp. Rev. 26(1), 1–24 (2006)

Giaimo, G., Byram, M.: Improving project level traffic forecasts by attacking the problem from all sides. Presented at the The 14th Transportation Planning Applications Conference, Columbus (2013)

Hartgen, D.T.: Hubris or humility? Accuracy issues for the next 50 years of travel demand modeling. Transportation 40(6), 1133–1157 (2013). https://doi.org/10.1007/s11116-013-9497-y

Hoque, J.M., Erhardt, G.D., Schmitt, D., Chen, M., Wachs, M.: Estimating the uncertainty of traffic forecasts from their historical accuracy. Transp. Res. Part A Policy Pract. (in-review)

Horowitz, A., Creasey, T., Pendyala, R., Chen, M.: Analytical travel forecasting approaches for project-level planning and design. Project 08-83. Transportation Research Board (2014)

Ismart, D.: Calibration and Adjustment of System Planning Models. Federal Highway Administration, Washington, DC (1990)

ISO 5725-1: Accuracy (Trueness and Precision) of Measurement Methods and Results — Part 1: General Principles and Definitions. https://www.iso.org/obp/ui/#iso:std:iso:5725:-1:ed-1:v1:en (1994). Accessed 03 July 2020

Kain, J.F.: Deception in Dallas: strategic misrepresentation in rail transit promotion and evaluation. J. Am. Plan. Assoc. 56(2), 184–196 (1990)

Kriger, D., Shiu, S., Naylor, S.: Estimating toll road demand and revenue. Synthesis of Highway Practice NCHRP Synthesis 364. Transportation Research Board. https://trid.trb.org/view/2006/M/805554 (2006). Accessed 06 Oct 2016

Lewis-Workman, S., White, B., McVey, S., Spielberg, F.: The Predicted and Actual Impacts of New Starts Projects. US Department of Transportation Federal Transit Administration, Washington, DC (2008)

Li, Z., Hensher, D.A.: Toll roads in Australia: an overview of characteristics and accuracy of demand forecasts. Transp. Rev. 30(5), 541–569 (2010). https://doi.org/10.1080/01441640903211173

Marlin Engineering: Traffic Forecasting Sensitivity Analysis. TWO#13 (2015)

Miller, J.S., Anam, S., Amanin, J.W., Matteo, R.A.: A Retrospective Evaluation of Traffic Forecasting Techniques. FHWA/VTRC 17-R1. Virginia Transportation Research Council. http://ntl.bts.gov/lib/37000/37800/37804/10-r24.pdf (2016)

Næss, P., Nicolaisen, M.S., Strand, A.: Traffic forecasts ignoring induced demand: a shaky fundament for cost-benefit analyses. Eur. J. Transp. Infrastruct. Res. 12(3), 291–309 (2012). https://doi.org/10.18757/ejtir.2012.12.3.2967

Nicolaisen, M.S.: Forecasts: Fact or Fiction?: Uncertainty and Inaccuracy in Transport Project Evaluation. PhD thesis, Videnbasen for Aalborg UniversitetVBN, Aalborg UniversitetAalborg University, Det Teknisk-Naturvidenskabelige FakultetThe Faculty of Engineering and Science. http://vbn.aau.dk/ws/files/81035038/Nicolaisen_2012_Forecasts_Fact_or_Fiction_PhD_Thesis.pdf (2012). Accessed 23 Sept 2014

Nicolaisen, M.S., Driscoll, P.A.: Ex-post evaluations of demand forecast accuracy: a literature review. Transp. Rev. 34(4), 540–557 (2014). https://doi.org/10.1080/01441647.2014.926428

Parthasarathi, P., Levinson, D.: Post-construction evaluation of traffic forecast accuracy. Transp. Policy 17(6), 428–443 (2010). https://doi.org/10.1016/j.tranpol.2010.04.010

Pedersen, N.J., Samdahl, D.R.: Highway Traffic Data for Urbanized Area Project Planning and Design. National Cooperative Highway Research Program NCHRP 255. Transportation Research Board, Washington, DC (1982)

Pickrell, D.H.: Urban Rail Transit Projects: Forecast Versus Actual Ridership and Costs. Final Report. https://trid.trb.org/view.aspx?id=299240 (1989). Accessed 06 Oct 2016

Schmitt, D.: A transit forecasting accuracy database: beginning to enjoy the ‘outside view.’ In: Transportation Research Board 95th Annual Meeting. Washington, DC (2016)

Spielberg, F., Lewis-Workman, S., Buffkin, T., Clements, S., Swartz, J.: The predicted and actual impacts of new starts projects. US Department of Transportation Federal Transit Administration, Washington, DC (2003)

van Wee, B.: Large infrastructure projects: a review of the quality of demand forecasts and cost estimations. Environ. Plan. B Plan. Des. 34(4), 611–625 (2007). https://doi.org/10.1068/b32110

Volker, J.M.B., Lee, A.E., Handy, S.: Induced vehicle travel in the environmental review process. Transp. Res. Rec. (2020). https://doi.org/10.1177/0361198120923365

Voulgaris, C.T.: Trust in forecasts? Correlates with ridership forecast accuracy for fixed-guideway transit projects. Transportation 1–39 (2019)

Webber, M.W.: The BART Experience—What Have We Learned? Institute of Urban & Regional Development, October. http://escholarship.org/uc/item/7pd9k5g0 (1976). Accessed 06 Oct 2016

Welde, M., Odeck, J.: Do planners get it right? The accuracy of travel demand forecasting in Norway. EJTIR 1(11), 80–95 (2011). https://doi.org/10.18757/ejtir.2011.11.1.2913

Acknowledgements

We compiled the data used this research as part of NCHRP Project 08-110. The final report from that project was published as NCHRP Report 934: Traffic Forecasting Accuracy Assessment Research, which reports accuracy measures, plus a series of ex-post case studies and a guidance document with recommendations for improving practice. This paper was written after the completion of that project as an independent contribution. It summarizes key findings about forecast accuracy and extends the analysis to consider change to forecast accuracy over time and the effects of the Great Recession, as well as to more clearly place the results in the context of the academic literature. We thank the additional co-authors of NCHRP 934; our program officer, Larry Goldstein; the project panel, the participants of the project workshop, and especially the individuals and agencies that provided data in support of this study, including: Mark Byram and Greg Giaimo, Ohio data; Pavithra Parthasarathi and David Levinson, Minnesota data; Don Mayle, Michigan data; Shi-Chiang Li, Hui Zhao and Jason Learned, Florida data; Jonathan Reynolds, Kentucky data; John Miller, Virginia data; Morten Skou Nicolaisen, European data.

Funding

This research was funded through the Cooperative Research Programs of the Transportation Research Board.

Author information

Authors and Affiliations

Contributions

JMH: software, investigation, formal analysis, writing—original draft. GE: conceptualization, methodology, supervision. Writing—reviewing and editing. DS: conceptualization, methodology, data curation, supervision. MC: methodology, writing—reviewing and editing. AC: data curation. MW: writing—reviewing and editing. RS: writing—reviewing and editing.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no conflicts of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

About this article

Cite this article

Hoque, J.M., Erhardt, G.D., Schmitt, D. et al. The changing accuracy of traffic forecasts. Transportation 49, 445–466 (2022). https://doi.org/10.1007/s11116-021-10182-8

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11116-021-10182-8