Abstract

This work shows that chaotic signals with different power spectra and different positive Lyapunov exponents are robust to linear superposition, meaning that the superposition preserves the Lyapunov exponents and the information content of the source signals, even after transmission over a non-ideal physical medium. It examines in detail how chaotic signals and their information content are affected when travelling through a medium with the non-ideal properties of multi-path propagation, noise and chaotic interference (linear superposition), and how this impacts the proposed communication system. Physical media with other non-ideal properties (dispersion and interference with periodic signals) are also discussed. These properties of chaotic signals allow me to propose a novel chaos-based communication system in which information exchanged among multiple users, each operating with a different base frequency and carried by chaotic wavesignals, is fully preserved after transmission in the open-air wireless physical medium and can be trivially decoded with low probability of error.


1 Introduction

Communication systems are designed to cope with the constraints of the physical medium. Previous work has shown that chaos has intrinsic properties that make it attractive for the design of modern communication systems.

Take x(t) to represent a controlled chaotic signal that encodes information from a single transmitter, and let r(t) represent the transformed signal that is received. Chaos has offered communication systems whose information capacity can remain invariant under a small increase in the noise level [1,2,3,4] and that can be robust to filtering [5,6,7] and to multi-path propagation [7], which is intrinsically present in wireless communication. Decoding of r(t) can be trivial, using a simple threshold technique [7, 8]. Chaos allows for simple control techniques to encode digital information [9, 10]. For the solvable systems proposed in [11, 12], simple analytical expressions to generate the controlled signal x(t) can be derived [13, 14]. Moreover, these systems have matched filters whose output maximizes the signal-to-noise ratio (SNR) of r(t), thus offering a practical and reliable way to decode transmitted information. Chaos allows for integrated communication protocols [15]; it offers viable solutions for wireless underwater [16, 17], digital [18] and optical [19] communication, radar applications [20], and simultaneous radar and communication [21]. Chaotic communication has been experimentally shown to achieve a higher bit rate in a commercial wired fibre-optic channel [22] and a lower bit error rate (BER) than conventional wireless non-chaotic baseband waveform methods. Moreover, chaos-based communication only requires equipment that is compatible with that commonly used today [14].

Several works on communication with chaos have focused on a system composed of two users, the transmitter and the receiver. Some were motivated by the master–slave synchronization configuration [23], where the master (the transmitter) sends the information to the slave (the receiver) [1]. Understanding how two users communicate cannot always capture the complexities involved in even simple networked communication systems. It is often more appropriate to break this complex communication problem down into two simpler configurations, the uplink and the downlink. The uplink configuration provides an understanding of how several nodes that transmit different information signals can be processed in a single central node. The downlink configuration provides an understanding of how a single central node that transmits a single signal can distribute dedicated information to several other nodes. This strategy of breaking a complex network problem into several smaller networks described by the uplink and downlink configurations is crucial to understanding very complex technologically oriented flow networks, such as communication and power networks, and can also shed light on the processing of information in networks as complex as the brain. The uplink configuration would contribute to a better understanding of how pre-synaptic neurons transmit information to a hub neuron, and the downlink configuration to a better understanding of how post-synaptic neurons can process information about a hub neuron. This paper focuses on information signals that are linearly composed, and thus this approach could in principle be used to explain communication in neurons with electrical synapses. However, the main focus of the present paper is understanding how superimposed chaotic signals can be robust to the non-ideal properties of the physical medium present in wireless communication networks.

A novelty of this work is to show that chaos can naturally allow for communication systems that operate in a multi-transmitter/receiver and multi-frequency environment. Consider a scenario where the received signal, r(t), is composed of a linear superposition of chaotic signals from two (or more) transmitters, \(x^{(1)}\) and \(x^{(2)}\), as in \(r(t)={\tilde{\gamma }^{(1)}} x^{(1)}(t) + {\tilde{\gamma }^{(2)}}x^{(2)}(t) + w(t)\), each signal operating with a different frequency bandwidth and encoding different information content at a different bit rate, with \({\tilde{\gamma }^{(i)}} \in \mathfrak {R}\) and w(t) representing additive white Gaussian noise (AWGN) that models the action of the physical medium on the composed transmitted signal. Is it possible to decompose the source signals, \(x^{(1)}(t)\) and \(x^{(2)}(t)\), out of the received signal r(t), and recover (i.e. decode) their information content? My work exploits the decomposability property of chaotic signals to answer this question positively, enabling a solution for multi-source and multi-frequency communication.

In this paper, I show that for the no-noise scenario, the spectrum of positive Lyapunov exponents (LEs) of r(t) is the union of the sets of positive Lyapunov exponents of the signals \(x^{(1)}(t)\) and \(x^{(2)}(t)\). This is demonstrated in the main manuscript in Sect. 2.2 for the system used to communicate. “Appendix C” generalizes this result to superimposed signals coming from arbitrary chaotic systems. Furthermore, for the system proposed in [11], the information content of the composed signal r(t) preserves the information carried by the source signals, which is linked to the preservation of the positive Lyapunov exponents. This result is fully explained in Sect. 2.3, where I present the information encoding capacity of the proposed communication system, or in other words, the rate of information contained in the linearly composed signal of several chaotic sources. I also discuss in that section how this result extends to communication systems whose users communicate with chaotic systems different from the one in Ref. [11]. Preservation of the Lyapunov exponents in the composed signals of arbitrary chaotic systems is demonstrated thanks to an equivalence principle of deterministic chaotic systems, which permits the composed signal to be effectively described by a signal departing from a single source but with time-delayed components. Moreover, when the physical medium where the composed signal is transmitted has noise, it is possible to determine appropriate linear coefficients \(\tilde{\gamma }^{(i)}\) (denoted as power gains, see Eq. (17) in Sect. 2.4), which depend on the natural frequency of the user, on the attenuation properties of the medium and on the number of users (end of Sect. 2), such that the information content carried by the composed signal r(t) can be trivially decomposed, or decoded, by a simple threshold (see Eq. (18)), with low probability of errors, or no errors at all for sufficiently small noise levels. In the latter case, the information encoding capacity provides the information capacity of the system, or the rate of information received/decoded.

The scientific problem of decomposing a linear superposition of chaotic signals, which provides the mathematical support for the proposed communication system, is similar to that of blind source separation for mixed chaotic signals [24] or that of the separation of a signal composed of a linear superposition of independent signals [25]. However, these separation methods require long measurements, and additionally either several measurements of multiple linear combinations of the source signals, or source signals that have similar power spectra and that are independent. These requirements cannot typically be fulfilled in a wireless communication environment, where information must be decoded even when very few observations are made, signals are sent only once with constant power gains, source signals can have arbitrary natural frequencies, and they can be dependent.

I also show in Sect. 3 that in the single-user communication system proposed in the work of [11], with a chaotic generator for the source signal and a matched filter to decode information from the received signal corrupted by noise, the chaotic generator has no negative LEs, which leads to a stable matched filter with no positive LEs, and that can therefore optimally filter noise. Moreover, I show that the single-user communication system formed by the chaotic generator plus the matched filter can be roughly approximated by the unfolded Baker’s map [26]. This understanding permits the conclusion that in the multi-user environment the matched filter that decomposes the source signal of a user from the received composed signal r(t) is the matched filter of that user alone.

I will then study, in Sect. 4, the information capacity of the proposed communication system in prototype wireless network configurations. In Sect. 4.1, I will compare its performance with a non-chaotic communication method that is the strongest candidate for future 5G networks, non-orthogonal multiple access (NOMA), and will show that the proposed multi-user chaos-based communication system can (under certain configurations) communicate at higher bit rates for large noise levels in the physical medium.

In Sect. 5, I will discuss how communication with chaos can be made robust to other types of non-ideal physical media (also referred to as a “channel of communication”) [27] that present dispersion and whose signals interfere with other periodic (non-chaotic) signals.

Finally, for a succinct presentation on the historical developments of chaos for communication, see “Appendix D”.

2 Linear composition of chaotic signals, the preservation of the Lyapunov exponents and encoding for transmission

A wonder of chaotic oscillations for communication is the system proposed in Ref. [11]. With an appropriate rescaling of time to a new time frame \(\mathrm{d}t{^{\prime }}=\gamma \mathrm{d}t\), it can be rewritten as

where \(s(t) \in \{-1,1\}\) is a discrete state over a 2-symbol alphabet that switches value according to the signum function \(s(t)={x(t)/|x(t)|}\) whenever \(|x(t)|<1\) and \(\dot{x}=0\). If the information to be communicated is the binary stream \(\mathbf{b} =\{b_0,b_1,b_2, \ldots \}\) (\(b_n \in \{0,1\}\)), a signal can be created such that \(s(t) = (2b_n-1)\), for \(nT \le t < (n+1)T\) [13]. In this new time frame, the natural frequency is \(f(\gamma )=1/\gamma \) (\(\omega =2\pi f\)), the period is \(T(\gamma )=1/f(\gamma )=\gamma \), and \(\beta (\gamma ) =\beta (\gamma =1) f(\gamma )\), where \(0 < \beta (\gamma =1) \le \ln {(2)}\). More details can be seen in “Appendix A”. \(\beta (\gamma =1)\) is a parameter with an important physical meaning. It represents the Lyapunov exponent (LE) of the system in units of nepits per period (or per cycle), which is also equal to the rate of information produced by the chaotic trajectory in nepits per period. On the other hand, \(\beta (\gamma )\) represents the LE in units of nepits per unit of time, which is also equal to the rate of information produced by the chaotic trajectory in nepits per unit of time. See Sect. 2.3.
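The bookkeeping in this paragraph can be sketched in a few lines of Python. This is only an illustration of the stated relations (\(f=1/\gamma\), \(T=\gamma\), \(\omega=2\pi f\), \(\beta(\gamma)=\beta(\gamma=1)f\), and \(s(t)=2b_n-1\) on \(nT \le t<(n+1)T\)); the function names `user_parameters` and `symbol_at` are hypothetical and do not come from the paper.

```python
import math

def user_parameters(gamma, beta_per_period=math.log(2)):
    """Parameters of a user in the rescaled time frame dt' = gamma dt.

    beta_per_period is beta(gamma=1), the LE in nepits per period,
    assumed to satisfy 0 < beta_per_period <= ln 2.
    """
    f = 1.0 / gamma                      # natural frequency
    return {
        "f": f,
        "T": gamma,                      # period T = 1/f
        "omega": 2.0 * math.pi * f,      # angular frequency
        "beta": beta_per_period * f,     # LE in nepits per unit of time
    }

def symbol_at(bits, t, T):
    """Symbol s(t) = 2*b_n - 1 for n*T <= t < (n+1)*T."""
    n = int(t // T)
    return 2 * bits[n] - 1

params = user_parameters(gamma=0.5)      # a user with twice the base frequency
print(params["f"], params["beta"])
print([symbol_at([0, 1, 1], t, T=1.0) for t in (0.3, 1.2, 2.9)])
```

Halving \(\gamma\) doubles both the natural frequency and the information rate per unit of time, while the rate per period stays at \(\beta(\gamma=1)\).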

The received signal in the noiseless wireless channel from user k can be modelled by

where there are L propagation paths, each with an attenuation factor \(\alpha _l\) and a time delay \(\tau _l\) for the signal to arrive at the receiver along the path l (with \(0=\tau _0< \tau _1< \cdots < \tau _{L-1}\)), and \( \gamma ^{(k)}\) is an equalizing power gain to compensate for the amplitude decay due to the attenuation factor. The noisy channel can thus be modelled by \(r(t)+w(t)\), where w(t) is an AWGN.

Let me consider the time-discrete dynamics of the signal generated by a single user, \(r^{(k)}(t) = r(t)\), assuming for simplicity that \(\gamma ^{(k)}=1\). If the signal is sampled at frequency f, so that the values \(r_n=r(n/f)\) are collected, then the return map (see “Appendix B”) of the received signal is given by

where \(n^{\prime } = n - \lceil f \tau _l \rceil \) and \(\mathcal {K}_l = e^{-\beta (\tau _l - \lceil \tau _l/T \rceil T)}[\cos {\left( 2\pi \frac{\tau _l}{T}\right) } + \frac{\beta }{\omega }\sin {\left( 2\pi \frac{\tau _l}{T}\right) }]\). Here \(s_n\) represents the binary symbol associated with the time interval \(nT \le t <(n+1)T\), so \(s_n=s(t=nT)\); \(\lceil f \tau _l \rceil \) is the ceiling integer of \(f \tau _l\); and \(\frac{\beta }{f}\) denotes \( \frac{\beta (\gamma )}{f(\gamma )} = \beta (\gamma =1)\). Equation (3) extends the result in [28], which is valid for \(\tau _l = mT\), with \(m \in \mathbb {N}\), in which case \(\mathcal {K}_l=1\).

The Lyapunov exponent (LE) of the 1-dimensional map in Eq. (3), in units of nepits per period for multi-path propagation and denoted by \(\chi \) (which is equal to the positive LE of the continuous dynamics; see Sect. I of Supplementary Material (SM)), is \(\chi = \frac{\beta }{f} = \beta (\gamma =1)\) [nepits per period], since \(\chi = \lim _{n\rightarrow \infty } \frac{1}{n} \ln {\left| \prod _{i=0}^{n} \frac{dr_{i+1}}{dr_i} \right| }\). This LE can be expressed in nepits per unit of time as \(\frac{\chi }{T} = \beta \), and in units of “bits per period” by using the binary logarithm instead of the natural logarithm. It is also equal to the LE of the return map

obtained from Eq. (3) when there is only a direct path, \(L=1\). Notice also that the constant attenuation factor \(\alpha _l\) does not contribute to this LE, only acting on the value of the binary symbols. This is to be expected [29].
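The definition of \(\chi\) as an orbit average of \(\ln|dr_{i+1}/dr_i|\) can be checked numerically with a minimal sketch. The map used below, \(r_{n+1}=2r_n-s_n\) with \(s_n=\mathrm{sign}(r_n)\), is an assumption: it is the direct-path map written for the special case \(\beta=f\ln(2)\), chosen because it reduces to the Bernoulli shift under \(r=2u-1\) (the case treated in Sect. 2.1). Exact rational arithmetic with an odd denominator is used so that the orbit never lands on the discontinuity and does not collapse as it would in floating point.

```python
import math
from fractions import Fraction

def step(r):
    """Assumed direct-path return map for beta = f*ln(2): r -> 2r - sign(r)."""
    s = 1 if r >= 0 else -1
    return 2 * r - s

def lyapunov_per_period(r0, n, h=Fraction(1, 10**9)):
    """Orbit average of ln|dr_{i+1}/dr_i|, with a finite-difference slope."""
    total, count, r = 0.0, 0, r0
    for _ in range(n):
        # Skip the rare steps where r and r + h straddle the jump at r = 0.
        if (r >= 0) == (r + h >= 0):
            slope = (step(r + h) - step(r)) / h
            total += math.log(abs(slope))
            count += 1
        r = step(r)
    return total / count

chi = lyapunov_per_period(Fraction(1234567, 3**15), 500)
print(chi, chi / math.log(2))   # nepits per period, bits per period
```

Dividing by \(\ln 2\) converts nepits per period to bits per period, and dividing by T converts to a rate per unit of time.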

2.1 Linear composition of chaotic signals for the uplink and the downlink communication configurations

The analysis will focus on two prototype wireless communication configurations: the uplink and the downlink. In the uplink communication, several users transmit signals that become linearly superimposed when they arrive at a base station antenna (BS). In the downlink communication, a BS sends one composed signal (a linear superposition of chaotic signals) containing information to be decomposed (or decoded) by several users.

I propose a chaos-based communication system, named “Wi-C1”, that allows for multi-user communication, where each of the N users operates with its own natural frequency. It is assumed that other constraints of the wireless medium are present, such as multi-path propagation and AWGN. Wi-C1 with 1 BS can be modelled by a linear superposition of chaotic signals as

\(O(t)_{u}\) in Eq. (5) represents the composed signal received at the BS from all users in the uplink. This signal will be the focus of the paper from now on. \(O^{(m)}(t)_{d}\) represents the signal received by user m from a composed signal transmitted by the BS in the downlink. w(t) represents an AWGN at the base station, and \(w^{m}(t)\), for \(m=1,\ldots ,N\), represents an AWGN at user m. \(\alpha _l^{(k)}\) is the attenuation factor between the BS and user k along path l, and \(\gamma ^{(k)}\) and \(\tilde{\gamma }^{(k)}\) are power gains. \(L^{(k)}\) is the number of propagation paths between user k and the BS. In this work, we will choose \(\gamma ^{(k)} = 1/\alpha _l^{(k)}\) to compensate for the medium attenuation, whereas \(\tilde{\gamma }^{(k)}\) is a power gain applied at the transmitter or BS, which can be identified with the linear coefficients of the superposition of chaotic signals.

I will now consider the uplink, where 2 users send signals that are linearly composed by a superposition that happens at the BS. Each user, or source signal, is identified with an index \(k \in \{1,2\}\), and in most of the following results I will neglect in Eq. (3) the contribution from propagation paths other than the direct one (\(L^{(1)}=L^{(2)}=1\)). Assume that user 1 operates at frequency \(f^{(1)}=f=1/T\) and user 2 at frequency \(f^{(2)}=2f=2/T\), and that \(\gamma ^{(k)}=1\). In order to reduce the continuous mathematical description of the uplink communication, including the decoding phase, to the 2D unfolded Baker’s map, I will only treat cases for which the natural frequency of user k is given by \(f^{(k)} = 2^{m} f\), with \(m \in \mathbb {N}\), the parameter \(\beta ^{(k)}=f^{(k)}\ln {(2)}\), and f is the base frequency of user 1, which will be chosen to be 1. At time \((n+1)T\), the signal received by the BS from user k=1 as a function of the signal received at nT is described by

At time \((n+1)T\), the signal received by the BS from user k=2 as a function of the signal received at nT is

where \(r_{2n}\) represents the value of \(r^{(2)}(t=nT)\) (recall that in each time interval T, the chaotic system of user 2 completes two full cycles, each with period T/2). Notice that the LE of Eq. (7) provides a quantity in terms of 2 cycles of user 2, but 1 cycle of user 1. So, the LE of Eq. (7) is equal to \(\ln {(4)}\) nepits per period T, which is twice the LE of Eq. (6) for that same period T. Comparison of both LEs becomes easier if we calculate them in units of nepits per unit of time. The LE for user 1 is \(\beta ^{(1)}=f^{(1)}\ln {(2)}=\ln {(2)}\) and that for user 2 is \(\beta ^{(2)}=f^{(2)}\ln {(2)}=2\ln {(2)}\). This is because user 2 has a frequency twice that of user 1 [30]. Since these two maps are full shifts, their LE equals their Shannon entropy, so their LE represents the encoding capacity (in units of nepits). Applying the coordinate transformation \(r^{(1)}_{n}=2u_n^{(1)}-1\) (for the map in (6)) and \(r^{(2)}_{2n}=2u_n^{(2)}-1\) (for the map in (7)) and choosing \(\gamma ^{(k)}=1/\alpha ^{(k)}\), Eqs. (6) and (7) become, respectively,

where \(u^{(k)}_n \in [0,1]\) (in contrast to \(r_n^{(k)} \in [-1,1]\)), \(b^{(1)}_n=1/2(s^{(1)}_n+1) \in \{0,1\}\), and \(b^{(2)}_n=(s^{(2)}_n+s^{(2)}_{n+1}/2) \in \{0,1,2,3\}\). Equation (8) is simply the Bernoulli shift map, representing the discrete dynamics of user 1 (the signal received after equalizing for the attenuation), and Eq. (9) is the second iteration of the shift map, representing the discrete dynamics of user 2 (after equalizing the attenuation, by setting \(\gamma ^{(k)}=1/\alpha ^{(k)}\)).

Figure 1A, B shows in red dots solutions for Eqs. (8) and (9), respectively. The black crosses show the corresponding return maps of the discrete set of points \(x_n^{(k)}\), constructed directly from the continuous solution of Eq. (1) with frequency \(f^{(k)}=kf\) by taking points at times \(t=nT\) and applying the same normalization as before, \(x^{(k)}_{n}=2x_n^{(k)}-1\) (so that the normalized \(x_n^{(k)} \in [0,1]\)).

The composed received signal at discrete times nT, a linear superposition of 2 chaotic signals with different power spectra, is given by

The generalization to N source signals can be written as \(O_{n}=\sum _{k=1}^{N} \tilde{\gamma }^{(k)}u^{(k)}_n\). At the BS, the received signal is \(O_n + w_n\), so it is corrupted by an AWGN \(w_n\) with a given signal-to-noise ratio (SNR), in dB, relative to the power of the signal \(O_n\). The received discrete-time return map, for \(w_n=0\), can be derived by substituting Eqs. (8) and (9) into Eq. (10)

where Eq. (12) is just Eq. (8).
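The substitution described above can be verified with a short exact-arithmetic sketch. It assumes, following the description in the text, that Eq. (8) is the Bernoulli shift \(u^{(1)}_{n+1}=2u^{(1)}_n-b^{(1)}_n\), that Eq. (9) is its second iterate \(u^{(2)}_{n+1}=4u^{(2)}_n-b^{(2)}_n\), and that Eq. (10) is \(O_n=\tilde{\gamma }^{(1)}u^{(1)}_n+\tilde{\gamma }^{(2)}u^{(2)}_n\). Under these assumptions the composed map reads \(O_{n+1}=4O_n-2\tilde{\gamma }^{(1)}u^{(1)}_n-\tilde{\gamma }^{(1)}b^{(1)}_n-\tilde{\gamma }^{(2)}b^{(2)}_n\), which agrees with the Jacobian quoted in Sect. 2.2. The helper names and the initial conditions are illustrative only.

```python
import math
from fractions import Fraction

def shift(u, base):
    """One step of u -> base*u mod 1; returns (next u, emitted symbol b)."""
    b = math.floor(base * u)
    return base * u - b, b

def composed_orbit(n_steps, g1=Fraction(1, 5), g2=Fraction(4, 5)):
    """Records (O_n, O_{n+1}, u1_n, b1_n, b2_n) for two superimposed users."""
    # Odd denominators keep the exact orbits away from the map discontinuities.
    u1, u2 = Fraction(12345, 3**12), Fraction(6789, 5**9)
    records = []
    for _ in range(n_steps):
        O = g1 * u1 + g2 * u2
        next_u1, b1 = shift(u1, 2)    # user 1: Bernoulli shift
        next_u2, b2 = shift(u2, 4)    # user 2: second iterate of the shift
        records.append((O, g1 * next_u1 + g2 * next_u2, u1, b1, b2))
        u1, u2 = next_u1, next_u2
    return records

g1, g2 = Fraction(1, 5), Fraction(4, 5)
for O, O_next, u1, b1, b2 in composed_orbit(200, g1, g2):
    # Reconstructed composed map: holds exactly at every step.
    assert O_next == 4 * O - 2 * g1 * u1 - g1 * b1 - g2 * b2
```

The pairs \((O_n, O_{n+1})\) returned by `composed_orbit` are the points of the noiseless return map of the composed signal.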

2.2 Preservation of LEs for linear compositions of chaotic source signals

The system of Eqs. (11) and (12) has two distinct positive LEs, one along the direction \({\varvec{v}}^{(1)} = (0 \,1)\), associated with user 1 and equal to \(\chi ^{(1)}=\ln {(2)}\) nepits per period T, and another along the direction \({\varvec{v}}^{(2)} = (1\, 0)\), which can be associated with user 2 and equals \(\chi ^{(2)}=\ln {(4)}=2\ln {(2)}\) nepits per period T.

To calculate the LEs of this 2-dimensional system (see [29, 31]), we consider the expansion of a unitary basis of orthogonal perturbation vectors \(\mathbf {v}\) and calculate them by

where \(||\varvec{v}||\) is the norm of vector \(\mathbf {v}\), \(\varvec{M}=\varvec{J}^n\), and \(\varvec{J}=\left( \begin{array}{cc} 4 &{} -2\tilde{\gamma }^{(1)} \\ 0 &{} 2\end{array} \right) \). Thus, combining chaotic signals with different frequencies as a linear superposition described by Eq. (10) preserves the spectra of LEs of the signals from the users alone. This is a hyperbolic map, for which the sum of the positive Lyapunov exponents is equal to the Kolmogorov–Sinai entropy, which represents the information rate. Consequently, the information received is equal to the sum of the information transmitted by both users, for the no-noise scenario. More details about this relationship are presented in Sect. 2.3. In other words, a linear superposition of chaotic signals as represented by Eq. (10) does not destroy the information content of each source signal. Preservation of the spectrum of LEs in a signal that is a linear superposition of chaotic signals with different power spectra is a universal property of chaos. A demonstration is provided in “Appendix C”, where I study signals composed of two variables from the Rössler attractor, with user 2 having a base frequency that is Q times that of user 1. This demonstration uses an equivalence principle: every wireless communication network with several users can be made equivalent to a single user in the presence of several imaginary propagation paths. Attenuation and power gain factors need to be recalculated to compensate for a signal that in reality departs from user 2 but is effectively described as departing from user 1. Consider the case of 2 users, both with the same frequency \(f^{(k)}=f\), in the uplink scenario. The trajectory of user 2 at a given time t, \(x^{(2)}(t)\), can be described in terms of the trajectory of user 1 at a given time \(t-\tau \). So, the linear superposition of 2 source signals in Eq. (5) can be simply written as a single source with time-delayed components as

In practice, \(\tau \) can be very small, because of the sensitivity to initial conditions and the transitivity of chaos. For a small \(\tau \) and \(\epsilon \), it is true that \(|x^{(2)}(t) - x^{(1)}(t-\tau )| \le \epsilon \), regardless of t.

This property of chaos is extremely valuable, since when extending the ideas of this work to arbitrarily large and complex communicating networks, one might want to derive expressions such as in Eqs. (11) and (12) to decode the information arriving at the BS. Details of how to use this principle to derive these equations for two users with \(f^{(2)}=2f^{(1)}\) and also when \(f^{(2)}=f^{(1)}\) are shown in Sect. II of SM.
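The two LEs quoted in this section for the Jacobian \(\varvec{J}\) can be reproduced with the standard QR re-orthonormalization procedure. The sketch below uses NumPy; the value \(\tilde{\gamma }^{(1)}=0.2\) and the initial rotation angle are illustrative choices, and because \(\varvec{J}\) is constant the estimate converges to \(\ln(4)\) and \(\ln(2)\) up to a transient of order \(1/n\).

```python
import numpy as np

def lyapunov_spectrum(J, n_iter=200, angle=0.3):
    """LEs (nepits per period T) of a constant Jacobian via QR iteration."""
    # Start from a rotated orthonormal basis so the result is not trivially
    # read off the diagonal of the upper-triangular J.
    c, s = np.cos(angle), np.sin(angle)
    Q = np.array([[c, -s], [s, c]])
    sums = np.zeros(J.shape[0])
    for _ in range(n_iter):
        Q, R = np.linalg.qr(J @ Q)
        sums += np.log(np.abs(np.diag(R)))   # growth along each direction
    return np.sort(sums / n_iter)[::-1]

gamma1 = 0.2                                  # illustrative power gain of user 1
J = np.array([[4.0, -2.0 * gamma1],
              [0.0, 2.0]])
print(lyapunov_spectrum(J), np.log(4.0), np.log(2.0))
```

The off-diagonal entry, which carries the power gain, does not change the spectrum; it only changes the directions of the perturbation vectors.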

2.3 Lyapunov exponents, the information carried by chaotic signals and the information capacity of Wi-C1

Pesin’s equality relates positive Lyapunov exponents (LEs) with information rate of a chaotic trajectory [32]: The sum of positive LEs of a chaotic trajectory is equal to the Kolmogorov–Sinai entropy, denoted \(H_{KS}\) (a kind of Shannon entropy rate), a quantity that is considered to be the physical entropy of a chaotic system. This is always true for chaotic systems that possess the Sinai–Ruelle–Bowen (SRB) measure [33], or more precisely that have absolutely continuous conditional measures on unstable manifolds. In this work, I have considered a parameter configuration such that the system used to generate chaotic signals is described by the shift map, a hyperbolic map, which has SRB measure. Therefore, the amount of information transmitted by a user is given by the LE of the system in Eq. (1).

I have demonstrated that linearly composed chaotic signals with different natural frequencies preserve all the positive LEs of the source signals (“Appendix C”). By a chaotic signal, I mean a 1-dimensional scalar time-series, or simply a single variable component of a higher-dimensional chaotic trajectory. If the chaotic signals are generated by Eq. (1), their linear composition in Eqs. (11) and (12) is still described by a hyperbolic dynamics (possessing SRB measure), thus leading to a trajectory whose information content is given by the sum of the positive LEs, which happens to be equal to the sum of the LEs of the source signals. So, the information encoding capacity in units of nepits per unit of time of the Wi-C1, denoted by \(\mathcal {C}_e\), when users use the system in Eq. (1) to generate chaotic signals, is given by the sum of Lyapunov exponents of the source signals:

where \( f^{(k)}\) and \(\beta (\gamma =1)^{(k)}\) are the natural frequency of the signal and the LE of user k (in units of nepits per period), respectively. By information encoding capacity, I mean the information rate of a signal that is obtained by a linear composition of chaotic signals. If the linear coefficients (power gains) are appropriately chosen (see Sect. 2.4) and noise is sufficiently low (see Sect. 4), then the information encoding capacity of Wi-C1 is equal to the information capacity of Wi-C1, or the total rate of information being received/decoded.
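As a worked instance, assume that the encoding capacity takes the form implied by the text, namely the sum over users of the natural frequency times the LE per period (this explicit form is a reading of the surrounding definitions, not a quotation). For the two-user uplink of Sect. 2.1, with \(f^{(1)}=1\), \(f^{(2)}=2\) and \(\beta (\gamma =1)^{(k)}=\ln (2)\):

```latex
\mathcal{C}_e \;=\; \sum_{k=1}^{N} f^{(k)}\,\beta(\gamma=1)^{(k)}
\;=\; 1\cdot\ln(2) \;+\; 2\cdot\ln(2)
\;=\; 3\ln(2) \;\approx\; 2.08 \ \text{nepits per unit of time},
```

which is equivalent to 3 bits per unit of time: one bit per period T from user 1 and two bits per period T from user 2.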

It is worth discussing, however, what the information capacity of Wi-C1 would be if one considers users communicating with chaotic systems other than that described by Eq. (1). My result in “Appendix C” demonstrates that all the positive Lyapunov exponents of the chaotic source signals are present in the spectrum of a linearly composed signal constructed from different chaotic signals (which may have different natural frequencies) generated by the same chaotic system.

Recent work [34, 35] has shown that there is a strong link between the sum of the positive LEs and the topological entropy, denoted \(H_T\), in a chaotic system. The topological entropy measures the rate of exponential growth of the number of distinct orbits, as we consider orbits with growing periods. For Eq. (1), the topological entropy equals the positive LE and the Kolmogorov–Sinai entropy. So, \(H_T = \beta (\gamma )=H_{KS}\) (in units of bits per unit of time). That is not always the case. Denoting the sum of the positive LEs of a chaotic system by \(\sum ^+ \), one would typically expect that \(H_T \ge H_{KS}\) and moreover that \(\sum ^+ \ge H_{KS}\). However, the recent works in Refs. [34, 35] have shown that there are chaotic systems for which \(H_T = \sum ^+ \).

This work considers that the proposed communication system Wi-C1 has users that use chaotic signals generated by means of controlling (class (i) discussed in Sect. 1), so that the trajectory can represent the desired information to be transmitted. The work in Ref. [9] has shown that the information encoding capacity of a chaotic trajectory produced by control is given by the topological entropy of the non-perturbed system, not by its Kolmogorov–Sinai entropy. Therefore, if only a single user is being considered in the communication (e.g. only one transmitter), and this user generates chaotic signals for which \(H_T = \sum ^+ \), the information encoding capacity of this communication system would be given by \(\sum ^+\).

Let us now discuss the multi-user scenario, still assuming that the users generate their source chaotic signals using systems for which \(H_T = \sum ^+ \). As demonstrated in “Appendix C”, all the positive Lyapunov exponents of chaotic source signals are preserved in a linearly composed signal. Moreover, since the LEs of a chaotic signal are preserved by linear transformations, and since a linear transformation of a signal does not alter its information content, this suggests that the information capacity of this multi-user communication system would be given by the sum of the positive LEs of the chaotic source signals of each user. This, however, will require further analysis.

2.4 Preparing the signal to be transmitted (encoding): finding appropriate power gains

To avoid interference, or false near-neighbour crossings, in the received composed signal, the power gains \(\tilde{\gamma }^{(k)}\) must be chosen appropriately. A good choice allows one to discover the symbols \(b^{(1)}\) and \(b^{(2)}\) only by observing the 2-dimensional return map of \(O_{n+1} \times O_{n}\), and maximizes the separation among the branches of the map to avoid mistakes induced by noise. Looking at the mapping in Eq. (11), the term \(2^{f^{(2)}} O_n\) represents a piecewise linear map with \(2^{f^{(2)}}\) branches. The spatial domain for each piece has a length denoted by \(\zeta (f^{(2)})\). The term \((2^{f^{(2)}} - 2) \tilde{\gamma }^{(1)} u^{(1)}_{n}\), representing the dynamics for the smallest oscillatory frequency, is described by a piecewise linear map with \((2^{f^{(2)}} - 2)\) branches. To avoid interference, the return map for this term must occupy a length \(\zeta (f^{(1)})\) that is fully embedded within the domain for the dynamics representing higher-order frequencies. Assuming that, for a given number of users N, all frequencies \(f^{(i)}\) with \(i=1,\ldots ,N\) are used, this idea can be expressed in terms of an equation where

Then, \(\tilde{\gamma }^{(k)}=\zeta (k)\), but for a received map within the interval [0, 1], the values of \(\tilde{\gamma }^{(k)}\) are normalized by

For 2 users (\(N=2\)) and \(\zeta (1)=0.2\), the appropriate power gains to be chosen in the encoding phase, which allow for the decomposition (or decoding) of the information content of the composed received signal, are \(\tilde{\gamma }^{(1)}=0.2\) and \(\tilde{\gamma }^{(2)}=0.8\). Using these values for \(\tilde{\gamma }^{(1)}\) and \(\tilde{\gamma }^{(2)}\) in Eq. (10) and considering an AWGN \(w_n\) with an SNR of 40 dB (with respect to the power of \(O_n\)) produces the return map shown by points in Fig. 2A. It has 8 branches, all aligned along the same direction (the branches would have the same derivative in the no-noise scenario), which prevents crossings or false near neighbours, and equally separated, which avoids mistakes in the decoding of the information due to noise.
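Why the gains 0.2 and 0.8 separate the branches can be illustrated with a small sketch. It relies on an assumption: that the composed map has the form \(O_{n+1}=4O_n-2\tilde{\gamma }^{(1)}u^{(1)}_n-\tilde{\gamma }^{(1)}b^{(1)}_n-\tilde{\gamma }^{(2)}b^{(2)}_n\), obtained by substituting the shift maps into the superposition. The offset \(c_n=4O_n-O_{n+1}\) then lies in a band of width \(\tilde{\gamma }^{(1)}\) starting at \(2\tilde{\gamma }^{(1)}b^{(1)}_n+\tilde{\gamma }^{(2)}b^{(2)}_n\), one band per symbol pair. The function names are hypothetical.

```python
from itertools import product

def branch_bands(g1, g2):
    """Bands [start, end) of c = 4*O_n - O_{n+1}, one per symbol pair (b1, b2)."""
    bands = []
    for b1, b2 in product((0, 1), (0, 1, 2, 3)):
        start = 2 * g1 * b1 + g2 * b2      # offset fixed by the symbols
        bands.append((start, start + g1, b1, b2))   # width g1 comes from u1
    return sorted(bands)

def min_gap(g1, g2):
    """Smallest gap between consecutive bands; a value <= 0 means overlap."""
    bands = branch_bands(g1, g2)
    return min(nxt[0] - cur[1] for cur, nxt in zip(bands, bands[1:]))

print(len(branch_bands(0.2, 0.8)), min_gap(0.2, 0.8))   # 8 bands, equal gaps
print(min_gap(0.5, 0.5))                                 # overlapping bands
```

With gains 0.2 and 0.8 the eight bands are disjoint and evenly spaced, whereas equal gains of 0.5 make bands of different symbol pairs overlap, so the symbols can no longer be read from the return map.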

The choice of the power gains for the downlink configuration is similarly done as in the uplink configuration, taking into consideration that each user has its own noise level. This is shown in Sect. III of SM.

3 Decomposing the linear superposition of chaotic signals, and the decoding of signals and their information content

3.1 Decomposition (decoding) by thresholding received signal

Communication based on chaos offers several alternatives for decoding, that is, the process of obtaining the information conveyed by the received signal. Assuming the received signal is modelled by Eqs. (11) and (12), with the appropriate power gains as in Eq. (17), the optimal 2-dimensional partition to decode the digital information is described by the same map of Eqs. (11) and (12) with a translation. For the case of 2 users in the uplink scenario, this translates into a 7-line partition

These partition lines for \(\tilde{\gamma }^{(1)}=0.2\) and \(\tilde{\gamma }^{(2)}=0.8\) are shown by the coloured straight lines in Fig. 2A. They allow for the decomposition/decoding of the digital (symbolic) information contained in the composed received signal.
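A threshold decoder in the spirit of this partition can be sketched as follows. It is not the partition of Eq. (18) itself: it assumes the composed map \(O_{n+1}=4O_n-2\tilde{\gamma }^{(1)}u^{(1)}_n-\tilde{\gamma }^{(1)}b^{(1)}_n-\tilde{\gamma }^{(2)}b^{(2)}_n\) and places seven parallel thresholds of slope 4 midway between the eight branches, which requires \(\tilde{\gamma }^{(2)}=4\tilde{\gamma }^{(1)}\) as for 0.2 and 0.8. Source signals are built from random symbols rather than by iterating the shift map, to avoid floating-point collapse. All function names are hypothetical.

```python
import numpy as np

def shift_orbit(symbols, base, depth=40):
    """u_n = sum_j symbols[n+j] * base**-(j+1): an orbit of u -> base*u mod 1."""
    weights = float(base) ** -np.arange(1, depth + 1)
    windows = np.lib.stride_tricks.sliding_window_view(symbols, depth)
    return windows @ weights

def decode(O, g1=0.2):
    """Threshold decoding from pairs (O_n, O_{n+1}); assumes g2 = 4*g1."""
    c = 4.0 * O[:-1] - O[1:]                  # lies in band j = b1 + 2*b2
    j = np.clip(np.floor((c + g1 / 2) / (2 * g1)), 0, 7).astype(int)
    return j % 2, j // 2                      # (b1, b2)

def symbol_error_rate(n=5000, snr_db=None, g1=0.2, g2=0.8, seed=0):
    rng = np.random.default_rng(seed)
    depth = 40
    b1 = rng.integers(0, 2, n + depth)        # user 1: one bit per period T
    b2 = rng.integers(0, 4, n + depth)        # user 2: two bits per period T
    O = g1 * shift_orbit(b1, 2, depth) + g2 * shift_orbit(b2, 4, depth)
    if snr_db is not None:                    # AWGN relative to the power of O_n
        sigma = np.sqrt(np.mean(O**2) / 10 ** (snr_db / 10))
        O = O + rng.normal(0.0, sigma, O.shape)
    d1, d2 = decode(O, g1)
    m = len(d1)
    return (np.sum(d1 != b1[:m]) + np.sum(d2 != b2[:m])) / (2 * m)

print(symbol_error_rate(), symbol_error_rate(snr_db=40))
```

Because the thresholds act on the pair \((O_n, O_{n+1})\), both users are decoded from the composed signal alone, without first separating the source signals.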

3.2 Decomposition (decoding) by filtering received signal

A more sophisticated approach to decode information is based on a matched filter [11]. Here I show that the system formed by Eq. (1) and its matched filter can be approximately described by the unfolded Baker’s map, a result that allows us to understand that the recovery of the signal sent by a user from the composed signal depends solely on the inverse dynamics of this user. Details of the fundamentals presented in the following can be seen in Sect. IV of SM. If the equations describing the dynamics of the transmitted chaotic signal (in this case Eq. (1)) possess no negative Lyapunov exponents (as shown in Sect. I of SM), attractor estimation of a noise-corrupted signal can be done using its time-inverse dynamics, which is stable and possesses no positive LEs (shown in Sect. V of SM). The evolution to the future of the time-inverse dynamics is described by a system of hybrid ODEs obtained by the time-rescaling \(\mathrm{d}/\mathrm{d}t^{\prime } = -\mathrm{d}/\mathrm{d}t\) applied to Eq. (1), resulting in

where the variable y represents x in reversed time. As shown in Sect. IV of SM, if \(\eta (t)\) is defined by \(\dot{\eta }(t)=x(t)-x(t-T)\) (defined as \(\dot{\eta }(t)=x(t+T)-x(t)\) in Ref. [11]), it can be roughly approximated by the symbol s(t).

Taking the values of y at the discrete times nT, writing \(y(nT)=y_n\), and defining, as before, the new variables for users 1 and 2 as \(y^{(1)}_n=2z^{(1)}_n -1\) and \(y^{(2)}_{2n}=2z^{(2)}_n -1\), then if Eqs. (8) and (9) are map solutions of Eq. (1) (in the re-scaled coordinate system, with appropriate \(\gamma \) gains) for user k with frequencies \(f^{(k)}=k\), their inverse mapping, the solution of Eq. (19), is given by

This map can be derived simply by defining \(z^{(k)}_{n+1} = u^{(k)}_{n}\) and \(z^{(k)}_{n} = u^{(k)}_{n+1}\). We always have that \(\lfloor 2^{k}u^{(k)}_n \rfloor = b^{(k)}_n\). So, for any \(z^{(k)}_n \in [0,1]\), which can simply be chosen to be equal to the received composed signal \(O_n\) (normalized such that \(O_n \in [0,1]\)), it is also true that

So, if we represent an estimation of the transmitted symbol of user k by \(\tilde{b}^{(k)}_n\), then decoding of the transmitted symbol of user k can be done by calculating \(z^{(k)}_{n+1}\) using the inverse dynamics of the user k

and applying this value to Eq. (21). This means that the system formed by the variables \(u^{(k)}_{n},z^{(k)}_{n}\) is a generalization (for \(k \ne 1\)) of the unfolded Baker’s map [26], being described by a time-forward variable \(u^{(k)}_{n}\) (the Bernoulli shift for k=1), and its backward variable component \(z^{(k)}_{n}\).

Figure 2B demonstrates that it is possible to extract the signal of a user (user k=2) from the composed signal, \(O_n\) (Eq. (10)), by setting in Eq. (22) that \(z^{(2)}_n=O_n\), and \(\tilde{b}^{(k)}_n = b^{(k)}_n\). Even though \(u^{(2)}_n \ne z^{(2)}_n\), the decoding relation in Eq. (21) is satisfied. Therefore, the matched filter that decomposes the source signal of a user from the received composed signal is the matched filter of that user alone.
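The consistency relation behind Fig. 2B can be checked with a few lines of code. The sketch below is an illustration under assumptions, not the author's implementation: it takes the forward map of user k to be \(u^{(k)}_{n+1}=2^{k}u^{(k)}_n - b^{(k)}_n\), so that the substitution \(z^{(k)}_{n+1}=u^{(k)}_n\), \(z^{(k)}_n=u^{(k)}_{n+1}\) gives the inverse step \(z^{(k)}_{n+1}=(z^{(k)}_n+b^{(k)}_n)/2^{k}\), and it decodes with \(\lfloor 2^{k}z^{(k)}_{n+1} \rfloor\). The helper names are hypothetical.

```python
import math
import random

def symbols_to_states(symbols, k, depth=40):
    """States u_n of the ASSUMED shift map u -> 2**k * u mod 1 that emit `symbols`."""
    base = 2 ** k
    return [sum(symbols[n + j] * base ** -(j + 1) for j in range(depth))
            for n in range(len(symbols) - depth)]

def inverse_step(z, b, k):
    """One step of the ASSUMED inverse dynamics: z_{n+1} = (z_n + b_n) / 2**k."""
    return (z + b) / 2 ** k

def decode(z_next, k):
    """Symbol estimate floor(2**k * z_{n+1})."""
    return math.floor(2 ** k * z_next)

rng = random.Random(1)
n, depth = 2000, 40
b1 = [rng.randrange(2) for _ in range(n + depth)]   # user 1 symbols
b2 = [rng.randrange(4) for _ in range(n + depth)]   # user 2 symbols
u1 = symbols_to_states(b1, 1, depth)
u2 = symbols_to_states(b2, 2, depth)

# Composed signal with the power gains quoted in the text; it lies in [0, 1).
composed = [0.2 * a + 0.8 * c for a, c in zip(u1, u2)]

# Drive the inverse dynamics of user 2 with z_n = O_n instead of its own state.
decoded = [decode(inverse_step(composed[i], b2[i], 2), 2) for i in range(n)]
print(decoded == b2[:n])
```

The relation holds because \(\lfloor z+b \rfloor = b\) for any \(z \in [0,1)\) and integer b, so the check illustrates only that the inverse dynamics of user 2 is insensitive to the value of \(z^{(2)}_n\). It says nothing about estimation under noise.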

Fig. 2 In A, points show the return map of the received signal with \(\tilde{\gamma }^{(1)}=0.2\) and \(\tilde{\gamma }^{(2)}=0.8\), and the lines show the partitions from which received symbols are estimated. Inside the parenthesis, the first symbol is from user 2 and the second symbol is from user 2. In B, one sees a solution of the unfolded Baker’s map, where the horizontal axis shows trajectory points from Eq. (9) and the vertical axis shows trajectory points from Eq. (21), for the user k=2. In C, the capacity C is shown against \(\sum I\), with respect to the signal-to-noise ratio (SNR)

4 Analysis of performance of Wi-C1, under noise constraints

I can now analyse the performance of the Wi-C1, for both the uplink and the downlink configurations, for 2 users modelled by Eqs. (8) and (9) with power gains \(\tilde{\gamma }^{(1)}=0.2\) and \(\tilde{\gamma }^{(2)}=0.8\). The information capacity for both users (in bits per iteration, or bits per period) is given by

where \(\hbox {SNR} = \frac{P}{P^{w}}\) (units in dB, decibel) is the signal-to-noise ratio, the ratio between the power P of the linearly composed signal \(\tilde{\gamma }^{(1)}u_n^{(1)} + \tilde{\gamma }^{(2)}u_n^{(2)}\) (arriving at the BS, in the uplink configuration, or departing from it, in the downlink configuration) and \(P^{w}\), the power of the noise \(w_n\) at the BS (for the uplink configuration) or at the users (for the downlink configuration, assumed to be the same). The total capacity of the communication denoted by C is calculated, assuming that decoding of users 1 and 2 is simultaneously done from the noisily corrupted received signal \(O_n+w_n\) (see Eq. (10)), and so, decoding of the signal from user 2 does not treat the signal of user 1 as noise.

This capacity has to be compared to the actual rate of information being realised at the BS (or at the receivers), quantified by the mutual information \(I(b_n^{(k)};\tilde{b}_n^{(k)})\) between the symbols transmitted (\(b_n^{(k)}\)) and the decoded symbols \(\tilde{b}_n^{(k)}\) estimated by using the partition in Eq. (18), defined as usual by \(I(b_n^{(k)};\tilde{b}_n^{(k)}) = H(b_n^{(k)}) - H(b_n^{(k)}|\tilde{b}_n^{(k)}) \)

where \(H(b_n^{(k)})\) denotes the Shannon entropy of user k, which is equal to the positive LE of user k for \(\beta (\gamma =1) = \ln {(2)}\), and \(H(b_n^{(k)}|\tilde{b}_n^{(k)})\) is the conditional entropy.
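As an illustration of how this quantity could be estimated from data, the sketch below computes a plug-in (frequency-count) estimate of \(I(b;\tilde{b}) = H(b) - H(b|\tilde{b})\) from two symbol sequences, using the identity \(H(b|\tilde{b}) = H(b,\tilde{b}) - H(\tilde{b})\). The estimator and the function names are assumptions made for this example. The text does not state how the mutual information in Fig. 2C was estimated.

```python
import math
from collections import Counter

def entropy(seq):
    """Plug-in Shannon entropy (in bits) of a sequence of hashable symbols."""
    n = len(seq)
    return -sum(c / n * math.log2(c / n) for c in Counter(seq).values())

def mutual_information(sent, decoded):
    """I(b; b~) = H(b) - H(b | b~), with H(b | b~) = H(b, b~) - H(b~)."""
    joint = list(zip(sent, decoded))
    conditional = entropy(joint) - entropy(decoded)
    return entropy(sent) - conditional

# Toy data: a 4-symbol source (2 bits per symbol), as for user 2 in the text.
sent = [0, 1, 2, 3] * 250
perfect = list(sent)          # error-free decoding
useless = [0] * len(sent)     # decoder output independent of the input

print(mutual_information(sent, perfect))   # close to H(b) = 2 bits
print(mutual_information(sent, useless))   # close to 0 bits
```

For error-free decoding the estimate equals the source entropy, and it falls to zero when the decoded symbols carry no information about the transmitted ones. For short sequences the plug-in estimate is biased, so long symbol streams are preferable.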

Figure 2C shows in red squares the full theoretical capacity given by C against the rate of information decoded given by \(\sum I = I(b_n^{(1)};\tilde{b}_n^{(1)}) + I(b_n^{(2)};\tilde{b}_n^{(2)})\), in black circles, with respect to the SNR. As expected, the information rate received \(\sum I\) is equal to the information encoding capacity \(\mathcal {C}_e\) that is transmitted (both equal to 3 bits/period) for low noise levels, though smaller than the theoretical limit.

Notice that this analysis was carried out using the map version of the matched filter [11] in Eq. (19), and as such lacks the powerful use of the negativeness of the LE to filter noise. Moreover, decoding used the trivial 2D threshold by Eq. (18), and not higher-dimensional reconstructions.

4.1 Comparison of performance of Wi-C1 against NOMA

To cope with the expected demand in 5G wireless communication, non-orthogonal multiple access (NOMA) [36,37,38] was proposed to allow all users to use the whole available frequency spectrum. One of the most popular NOMA schemes allocates different power gains to the signal of each user. Full description of this scheme and its similarities with Wi-C1 is given in Sect. VI of SM.

The key concept behind NOMA is that users’ signals are superimposed with different power gains, and successive interference cancellation (SIC) is applied at the user with better channel condition, in order to remove the other users’ signals before detecting its own signal [39]. In the Wi-C1, as well as in NOMA, power gains are also applied to construct the linear superposition of signals. But in this work, I assume that the largest power gain is applied to the user with the largest frequency. Moreover, in this work, I have not done successive interference cancellation (SIC), since the information from all the users is simultaneously recovered by the thresholding technique, by considering a trivial 2D threshold by Eq. (18).

Comparison of the performance of Wi-C1 and NOMA is done considering the work in Ref. [40], which has analysed the performance of NOMA for two users in the downlink configuration, under partial channel knowledge. Partial channel knowledge means in rough terms that the “amplitude” of the signal arriving to a user from the BS is incorrectly estimated. In this sense, I have considered in the Wi-C1 perfect channel knowledge, since my simulations in Fig. 2C based on Eq. (10) assume that \(\gamma ^{(k)}=\frac{1}{\alpha ^{(k)}}\) to compensate for the amplitude decay \(\alpha ^{(k)}\) in the physical media (see Eq. (5)). More precisely, partial channel knowledge means that a Gaussian distribution describing the signal amplitudes departing from a user decreases its variance inversely proportional to a power-law function of the distance between that user and BS. The variance of the error of this distribution estimation is denoted by \(\sigma _{\epsilon }\), an important parameter to understand the results in Ref. [40]. Partial channel knowledge will impact on the optimal SIC performed for the results in Ref. [40]. Recall again that for the Wi-C1, no SIC is performed.

In Fig. 3, the curve for \(\sum I\) (the rate of decoded information) in Fig. 2C is plotted in red circles and compared with data shown in Fig. 3 of Ref. [40] for the quantity “average sum rate”, where each dataset considers a different channel configuration. Blue down triangles show the quantity “average sum rate” for perfect channel knowledge (\(\sigma _{\epsilon }=0\)), and black squares represent the same quantity for partial channel knowledge (\(\sigma _{\epsilon }=0.0005\)). The data points in Fig. 3 of Ref. [40] were extracted by a digitization process. The quantity \(\sum I\) for Wi-C1 in Fig. 2C, in units of bits per period (or channel use), is compared with the quantity “average sum rate” (whose unit was given in bits per second per Hz) by assuming the period of signals in Ref. [40] is 1 s. The average value obtained in Ref. [40] has taken into consideration Monte Carlo simulations of several configurations for 2 users that are uniformly distributed in a disk, with the BS located at the centre.

The results in Fig. 3 show that Wi-C1 has similar performance in terms of the bit rate for 0 dB, better performance for the SNR \(\in ]0,30]\) dB than NOMA (with respect to the average sum rate) for perfect channel knowledge, and better performance for the SNR \(\in ]0,40[\) dB than NOMA (with respect to the average sum rate) for partial channel knowledge.

One needs to take into consideration that this outstanding performance of Wi-C1 against NOMA is preliminary and requires deeper analysis, which is out of the scope of the present work.

5 Other non-ideal physical media

Previous sections of this work have tackled with great rigour and detail how chaotic signals are affected when travelling through a medium that presents non-ideal properties such as multi-path propagation, noise and chaotic interference (linear superposition), and how this impacts on the proposed communication system. This section is dedicated to discussing conceptually, with some mathematical support, how chaotic signals and their information content are transformed by physical channels with other non-ideal properties (dispersion and interference with periodic signals), and how this impacts on the multi-user communication system proposed.

For the following analysis, I will neglect the existence of multiple indirect paths of propagation and will consider that only the direct path contributes to the transmission of information, so \(L=1\). I will consider the uplink scenario where users transmit to a BS. I will initially focus the analysis on the impact of the non-ideal physical medium on the signal of a single user, in particular the effect of the medium on the received discrete signal described by Eq. (3) and on its Lyapunov exponent (LE), and will then briefly discuss the impact of the non-ideal medium on a communication configuration with multiple users.

5.1 Physical media with dispersion

Physical media with dispersion are those in which waves have their phase velocity altered as a function of the frequency of the signal. However, a dispersive medium does not alter the frequency of the signal, and therefore, it does not alter its natural period, only its propagation velocity. As a consequence, the LEs of any arbitrary chaotic signal travelling in a dispersive medium are not modified. The information carried by this chaotic signal would also not be altered, if it were generated by Eq. (1), or by a system whose chaotic trajectory possesses an SRB measure, or whose topological entropy \(H_{T}\) equals the sum of the positive LEs.

However, the travel time of a signal to arrive at the BS along the direct path \(\tau _0\) is altered. This can impact on the ability to decode as can be seen from Eq. (3). Suppose that the travel time of user k, given by \(\tau ^{(k)}_0\), increases from 0 (as in the previous derivations) to a finite value that is still smaller than the period of that user \(T^{(k)}\), so that \(n^{\prime }=n\) for that user. But, \(\mathcal {K}_0\) would be different than 1, and as a consequence, the return map of the received signal would contain a term that is a function of the symbol \(s_{n+1}\). Extracting the symbols from the received discrete signal (decoding) would have to take into consideration this extra symbol, which represents a symbol 1 iteration (or period) in the future. Decoding for the symbol \(s_n\) from the received signal would require the knowledge of the symbol \(s_{n+1}\). So, to decode what is being received at a given moment in the present would require knowledge of the symbol that has just been sent. To circumvent this limitation, one could firstly send a dummy symbol known by both the transmitter and the receiver at the BS, and use it to decode the incoming symbol \(s_n\), which then could be used to decode \(s_{n-1}\), and so on. Noise could impact on the decoding. Every new term that appears in Eq. (3) results in a new branch for this map. With noise, a branch in the return map that appears due to the symbol \(s_{n+1}\) could be misinterpreted as a branch for the symbol \(s_n\), causing errors in the decoding.

In a multi-user scenario, dispersion would only contribute to change the time delays \(\tau ^{(k)}_l\) for each user for each propagating path. As discussed, this will not affect the LEs of the source chaotic signals. Moreover, as demonstrated, the LEs of the source signals should be preserved by the linearly composed signal arriving at the BS, suggesting that the information encoding capacity given by Eq. (15) in the multi-user scenario could also be preserved for the systems for which \(H_T=\sum ^+\) or \(\sum ^+ = H_{KS}\) (as discussed in Sect. 2.3). Noise would, however, increase the chances of mistakes in the decoding of a multi-user configuration, thus impacting on the information capacity of the communication, since branches in the mapping of the received signal could overlap. At the overlap, one cannot discern which symbol was transmitted.

5.2 Physical media with interfering periodic (non-chaotic) signals

This case could be treated as a chaotic signal that is modulated by a periodic signal. Assuming no amplitude attenuation, the continuous signal arriving at the BS from user k can be described by

where \(f_p\) represents the frequency of the periodic signal, and \(\phi _0\) its initial constant phase. Here, I analyse the simplest case, \(f_p=f^{(k)}\), in which the discrete-time signal arriving at the BS at times \(t=nT\), from user k, would receive a constant contribution \(c^{(k)} = A\sin {(2\pi n + \phi _0)}\), due to the interfering periodic signal. If \(r^{(k)}_n\) and \(r^{(k)}_{n+1}\) denote the discrete-time signals arriving at the BS without periodic interference from user k at discrete times \(t=nT\) and \(t=(n+1)T\), respectively, then \(\tilde{r}^{(k)}_n\) and \(\tilde{r}^{(k)}_{n+1}\) described by

would represent the discrete time signals arriving at times \(t=nT\) and \(t=(n+1)T\) at the BS, respectively, after suffering interference from the periodic signal. Substituting these equations into the mapping in Eq. (3) would allow us to derive a mapping for the signal with interference

As expected, adding a constant term to a chaotic map does not alter its LE given by \(\frac{\beta }{f}\). Consequently, the information encoding capacity of this chaotic signal is also not altered, since it is generated by Eq. (1).

This constant addition results in a vertical displacement of the map by a constant value \(-(e^{\frac{\beta }{f}} -1) c^{(k)}\). So, noise added to the received signal with interference would have no greater impact than noise in the signal without interference.
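The vertical displacement can be verified numerically. The sketch below is a minimal check under an assumption that is not stated in this section: for a single direct path and no delay, the return map of the received signal is taken to have the form \(r_{n+1}=e^{\frac{\beta }{f}}r_n-(e^{\frac{\beta }{f}}-1)s_n\), as for the solvable system of Ref. [11]. The function names and the numerical values of \(c\) and the initial condition are hypothetical.

```python
import math

def received_map(r, s, beta_over_f):
    """ASSUMED single-path return map: r_{n+1} = e^(beta/f) r_n - (e^(beta/f) - 1) s_n."""
    g = math.exp(beta_over_f)
    return g * r - (g - 1.0) * s

def shifted_map(r_tilde, s, beta_over_f, c):
    """Map for r~ = r + c: same slope, displaced vertically by -(e^(beta/f) - 1) c."""
    g = math.exp(beta_over_f)
    return g * r_tilde - (g - 1.0) * s - (g - 1.0) * c

beta_over_f = math.log(2.0)   # beta/f = ln 2
c = 0.3                       # hypothetical constant contribution of the periodic signal
r = 0.123                     # hypothetical initial condition in [-1, 1]

# Follow a short trajectory and compare the shifted map with r_{n+1} + c.
errors = []
for _ in range(20):
    s = 1.0 if r >= 0 else -1.0
    r_next = received_map(r, s, beta_over_f)
    errors.append(abs(shifted_map(r + c, s, beta_over_f, c) - (r_next + c)))
    r = r_next

# Finite-difference slope of the shifted map; its logarithm is the LE per period.
h = 1e-6
slope = (shifted_map(0.4 + h, 1.0, beta_over_f, c)
         - shifted_map(0.4, 1.0, beta_over_f, c)) / h
print(max(errors), math.log(slope))
```

The slope of the shifted map remains \(e^{\frac{\beta }{f}}\), so the LE \(\frac{\beta }{f}\) is unchanged and only the intercept of each branch moves, which is the statement made in the text.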

In a multi-user scenario, LEs of the linearly composed signal arriving at the BS should preserve all the LEs of the source chaotic signals, suggesting that the information encoding capacity in the multi-user scenario could also be preserved, for signals being generated by the chaotic systems discussed in Sect. 2.3. Noise would, however, increase the chances of mistakes in the decoding of a multi-user configuration, thus impacting on the information capacity of the communication, since for each user the branches of the mapping describing the received signal would be vertically shifted by a different constant, resulting in branches of the received signal that overlap. At the overlap, one cannot discern which symbol was transmitted.

6 Conclusions

In this work, I show with mathematical rigour that a linear superposition of chaotic signals with different natural frequencies fully preserves the spectra of Lyapunov exponents and the information content of the source signals. I also show that if each source signal is tuned with appropriate linear coefficients (or power gains), successful decomposition of the source signals and their information content out of the composed signal is possible. Driven by today’s huge demand for data, there is a desire to develop wireless communication systems that can handle several sources, each using different frequencies of the spectrum. As an application of this wonderful decomposability property that chaotic signals have, I propose a multi-user and multi-frequency communication system, Wi-C1, where the encoding phase (i.e. the preparation of the signal to be transmitted through a physical medium) is based on the correct choice of the linear coefficients, and the decoding phase (i.e. the recovery of the transmitted signals and their information content) is based on the decomposition of the received composed signal.

The information encoding capacity of Wi-C1, or the information rate of a signal that is obtained by a linear composition of chaotic signals, is demonstrated to be equal to the sum of positive Lyapunov exponents of the source signals of each user. If linear coefficients (power gains) are appropriately chosen, and noise is sufficiently low, then the information encoding capacity of Wi-C1 is equal to the information capacity of Wi-C1, or the total rate of information being received/decoded.

Further improvement for the rate of information could be achieved by adding more transmitters (or receivers) at the expense of reliability. One could also consider similar ideas as in [3, 4], which would involve more post-processing, at the expense of weight. Post-processing would involve the resetting of initial conditions in Eq. (20) all the time, and then using the inverse dynamics up to some specified number of backward iterations to estimate the past of \(u^{(k)}_n\). One could even think of constructing stochastic resonance detectors to extract the information of a specific user from the received composed signal [41]. These proposed analyses for the improvement of performance in speed, weight and reliability of the communication are out of the scope of this work.

I have compared the performance of Wi-C1 with a non-chaotic communication method that is the strongest candidate for the future 5G networks, the non-orthogonal multiple access (NOMA), and have shown that Wi-C1 can communicate at higher bit rates for large noise levels in the channel.

The last section of this paper is dedicated to discussing conceptually, with some mathematical support, how chaotic signals and their information content are transformed by physical channels with other non-ideal properties (dispersion and interference with periodic signals), and how this impacts on the multi-user communication system proposed.

Availability of data and materials

All data and material for this work are being published in this manuscript.

References

Cuomo, K.M., Oppenheim, A.V.: Circuit implementation of synchronized chaos with applications to communications. Phys. Rev. Lett. 71(1), 65 (1993)

Bollt, E., Lai, Y.C., Grebogi, C.: Coding, channel capacity, and noise resistance in communicating with chaos. Phys. Rev. Lett. 79(19), 3787 (1997)

Rosa Jr., E., Hayes, S., Grebogi, C.: Noise filtering in communication with chaos. Phys. Rev. Lett. 78(7), 1247 (1997)

Baptista, M.S., López, L.: Information transfer in chaos-based communication. Phys. Rev. E 65(5), 055201 (2002)

Badii, R., Broggi, G., Derighetti, B., Ravani, M., Ciliberto, S., Politi, A., Rubio, M.: Dimension increase in filtered chaotic signals. Phys. Rev. Lett. 60(11), 979 (1988)

Eisencraft, M., Fanganiello, R., Grzybowski, J., Soriano, D., Attux, R., Batista, A., Macau, E., Monteiro, L.H.A., Romano, J., Suyama, R., et al.: Chaos-based communication systems in non-ideal channels. Commun. Nonlinear Sci. Numer. Simul. 17(12), 4707 (2012)

Ren, H.P., Baptista, M.S., Grebogi, C.: Wireless communication with chaos. Phys. Rev. Lett. 110(18), 184101 (2013)

Ren, H.P., Bai, C., Liu, J., Baptista, M.S., Grebogi, C.: Experimental validation of wireless communication with chaos. Chaos 26(8), 083117 (2016)

Hayes, S., Grebogi, C., Ott, E.: Communicating with chaos. Phys. Rev. Lett. 70, 3031 (1993). https://doi.org/10.1103/PhysRevLett.70.3031

Bollt, E.: Review of chaos communication by feedback control of symbolic dynamics. Int. J. Bifurc. Chaos 13(02), 269 (2003)

Corron, N.J., Blakely, J.N., Stahl, M.T.: A matched filter for chaos. Chaos 20(2), 023123 (2010)

Corron, N.J., Blakely, J.N.: Chaos in optimal communication waveforms. P. R. Soc. A Math. Phys. Eng. Sci. 471(2180), 20150222 (2015)

Grebogi, C., Baptista, M.S., Ren, H.P.: Wireless communication method using a chaotic signal. United Kingdom Patent Application No. 1307830.8, London (2015)

Yao, J., Sun, Y., Ren, H., Grebogi, C.: Experimental wireless communication using chaotic baseband waveform. IEEE Trans. Veh. Technol. 68(1), 578 (2019)

Baptista, M.S., Macau, E.E., Grebogi, C., Lai, Y.C., Rosa Jr., E.: Integrated chaotic communication scheme. Phys. Rev. E 62(4), 4835 (2000)

Ren, H.P., Bai, C., Kong, Q., Baptista, M.S., Grebogi, C.: A chaotic spread spectrum system for underwater acoustic communication. Phys. A Stat. Mech. Appl. 478, 77 (2017)

Bai, C., Ren, H.P., Grebogi, C., Baptista, M.: Chaos-based underwater communication with arbitrary transducers and bandwidth. Appl. Sci. 8(2), 162 (2018)

Bailey, J.P., Beal, A.N., Dean, R.N., Hamilton, M.C.: A digital matched filter for reverse time chaos. Chaos 26(7), 073108 (2016)

Jiang, X., Liu, D., Cheng, M., Deng, L., Fu, S., Zhang, M., Tang, M., Shum, P.: High-frequency reverse-time chaos generation using an optical matched filter. Opt. Lett. 41(6), 1157 (2016). https://doi.org/10.1364/OL.41.001157

Beal, A.N., Blakely, J.N., Corron, N.J., Dean Jr., R.N.: High frequency oscillators for chaotic radar. In: Ranney, K.I., Doerry, A. (eds.) Radar Sensor Technology XX, vol. 9829, pp. 142–152. International Society for Optics and Photonics, SPIE (2016). https://doi.org/10.1117/12.2223818

Pappu, C., Carroll, T., Flores, B.: Simultaneous radar-communication systems using controlled chaos-based frequency modulated waveforms. IEEE Access PP, 1 (2020). https://doi.org/10.1109/ACCESS.2020.2979324

Argyris, A., Hamacher, M., Chlouverakis, K., Bogris, A., Syvridis, D.: Photonic integrated device for chaos applications in communications. Phys. Rev. Lett. 100(19), 194101 (2008)

Pecora, L.M., Carroll, T.L.: Synchronization in chaotic systems. Phys. Rev. Lett. 64, 821 (1990). https://doi.org/10.1103/PhysRevLett.64.821

Jianning, Y., Yi, F.: Blind separation of mixing chaotic signals based on ICA using kurtosis. In: 2012 International Conference on Computer Science and Service System, pp. 903–905 (2012)

Krishnagopal, S., Girvan, M., Ott, E., Hunt, B.R.: Separation of chaotic signals by reservoir computing. Chaos Interdiscip. J. Nonlinear Sci. 30(2), 023123 (2020)

Lasota, A., Mackey, M.C.: Probabilistic Properties of Deterministic Systems. Cambridge University Press, Cambridge (1985)

Eisencraft, M., Fanganiello, R., Grzybowski, J., Soriano, D., Attux, R., Batista, A., Macau, E., Monteiro, L., Romano, J., Suyama, R., Yoneyama, T.: Chaos-based communication systems in non-ideal channels. Commun. Nonlinear Sci. Numer. Simul. 17(12), 4707 (2012)

Yao, J.L., Li, C., Ren, H.P., Grebogi, C.: Chaos-based wireless communication resisting multipath effects. Phys. Rev. E 96(3), 032226 (2017)

Araujo, M.A.: Lyapunov exponents and extensivity in multiplex networks. Ph.D. thesis, University of Aberdeen (2019). Can be downloaded at https://digitool.abdn.ac.uk/

Eckmann, J.P., Ruelle, D.: Ergodic theory of chaos and strange attractors. In: The theory of chaotic attractors. Springer, pp. 273–312 (1985)

Araujo, M.A., Baptista, M.S.: Extensivity in infinitely large multiplex networks. Appl. Netw. Sci. 4(1), 1 (2019)

Pesin, Y.B.: Dimension Theory in Dynamical Systems: Contemporary Views and Applications. University of Chicago Press, Chicago (2008)

Young, L.S.: What are SRB measures, and which dynamical systems have them? J. Stat. Phys. 108(5–6), 733 (2002)

Catalan, T.: A link between topological entropy and Lyapunov exponents. Ergodic Theory Dyn. Syst. 39, 620 (2019)

Matsuoka, C., Hiraide, K.: Computation of entropy and Lyapunov exponent by a shift transform. Chaos Interdiscip. J. Nonlinear Sci. 25(10), 103110 (2015)

Kizilirmak, R.C., Bizaki, H.K.: Non-Orthogonal multiple access (NOMA) for 5G networks. Towards 5G Wireless Networks-A Physical Layer Perspective, pp. 83–98 (2016)

Benjebbour, A.: An overview of non-orthogonal multiple access. ZTE Commun. 15(S1), 1 (2017)

Dai, L., Wang, B., Ding, Z., Wang, Z., Chen, S., Hanzo, L.: A survey of non-orthogonal multiple access for 5G. IEEE Commun. Surv. Tutor. 20(3), 2294 (2018)

Saito, Y., Benjebbour, A., Kishiyama, Y., Nakamura, T.: System-level performance evaluation of downlink non-orthogonal multiple access (NOMA). In: 2013 IEEE 24th Annual International Symposium on Personal, Indoor, and Mobile Radio Communications (PIMRC), pp. 611–615 (2013)

Yang, Z., Ding, Z., Fan, P., Karagiannidis, G.K.: On the performance of non-orthogonal multiple access systems with partial channel information. IEEE Trans. Commun. 64(2), 654 (2016)

Galdi, V., Pierro, V., Pinto, I.: Evaluation of stochastic-resonance-based detectors of weak harmonic signals in additive white Gaussian noise. Phys. Rev. E 57, 6470 (1998)

Corron, N.J., Hayes, S.T., Pethel, S.D., Blakely, J.N.: Chaos without nonlinear dynamics. Phys. Rev. Lett. 97(2), 024101 (2006)

Liu, L., Wang, Y., Li, Y., Feng, X., Song, H., He, Z., Guo, C.: Noise robust method for analytically solvable chaotic signal reconstruction. Circuits Syst. Signal Process., pp. 1–19 (2019)

Hampton, J.R.: Introduction to MIMO Communications. Cambridge University Press, Cambridge (2013)

Ren, H.P., Baptista, M.S., Grebogi, C.: Chaos wireless communication and code sending methods. P. R. China Patent Application No. 201410203969.9, Beijing (2014)

Corron, N.J., Cooper, R.M., Blakely, J.N.: Analytically solvable chaotic oscillator based on a first-order filter. Chaos 26(2), 104 (2016)

Dedieu, H., Kennedy, M.P., Hasler, M.: Chaos shift keying: modulation and demodulation of a chaotic carrier using self-synchronizing Chua’s circuits. IEEE Trans. Circuits Syst. II Analog Digit. Signal Process. 40(10), 634 (1993)

Ott, E., Grebogi, C., Yorke, J.A.: Controlling chaos. Phys. Rev. Lett. 64, 1196 (1990). https://doi.org/10.1103/PhysRevLett.64.1196

Parlitz, U., Ergezinger, S.: Robust communication based on chaotic spreading sequences. Phys. Lett. A 188(2), 146 (1994). https://doi.org/10.1016/0375-9601(84)90009-4

Kocarev, L., Parlitz, U.: General approach for chaotic synchronization with applications to communication. Phys. Rev. Lett. 74, 5028 (1995). https://doi.org/10.1103/PhysRevLett.74.5028

Kennedy, M.P., Dedieu, H.: Experimental demonstration of binary chaos-shift keying using self-synchronising Chua’s circuits. In: Proceedings of the Workshop of Nonlinear Dynamics and Electronic Systems (NDES93) 1, 67 (1993)

Kolumban, G., Kennedy, M.P., Chua, L.O.: The role of synchronization in digital communications using chaos. II. Chaotic modulation and chaotic synchronization. IEEE Trans. Circuits Syst. I Fundam. Theory Appl. 45(11), 1129 (1998)

Acknowledgements

The author would like to acknowledge preliminary simulations done by Dr Ezequiel Bianco-Martinez with combined maps trajectories, and an email communication by Dr Pedro Juliano Nardelli and Dr Daniel B. da Costa suggesting “the investigation of Non-Orthogonal Multiple Access (NOMA) in chaos-based communication systems”. This communication has motivated the author to introduce the NOMA framework and compare it with the proposed Wi-C1 in Sect. VI of SM. The analysis of performance (Fig. 2C), and discussions involving similarities (and dissimilarities) between the uplink and the downlink scenarios have also benefited from this communication. The author would finally like to thank discussions about detailed numerical solutions of Eqs. (1)–(3) performed by MSc Louka Kovatsevits, for arbitrary values of \(\tau _l\) and \(\beta ({\gamma })\). A follow-up paper reporting this analysis will be submitted elsewhere. Points in Fig. 3 of Ref. [40] were extracted using the software Plot Digitizer. The following references [36,37,38, 42,43,44] have contributed to the material presented in SM.

Funding

This work has not been funded by any funding agency.

Author information

Contributions

MS Baptista is the single author and has solely contributed to this work.

Ethics declarations

Conflict of interest

The author declares that he has no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Appendices

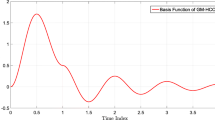

Appendix A: The continuous hybrid-dynamics chaotic wavesignal generator

A wonder of chaotic oscillations for communication is the system proposed in Ref. [11]. As originally proposed, the system is operating in a time frame whose infinitesimal is denoted by dt, has a natural frequency \(f_0=1\), a natural period \(T_0=1\), and an angular frequency \(\omega _0=2\pi \). With an appropriate rescaling of time to a new time frame \(\mathrm{d}t{^{\prime }}=\gamma \mathrm{d}t\), it can be rewritten as in Eq. (1), which I reproduce here to simplify understanding of this and following sections of this “Appendix”.

where \(s(t) \in \{-1,1\}\) is a discrete state with a 2-symbol alphabet that switches value according to the signum function \(s(t)={x(t)/|x(t)|}\), whenever \(|x(t)|<1\) and \(\dot{x}=0\). In this new time frame, the natural frequency is \(f(\gamma )=(1/\gamma )\) (angular frequency equals \(2\pi f\)), the period \(T(\gamma )=1/f(\gamma )=\gamma T_0(\gamma =1)\), and \(\beta (\gamma ) =\beta (\gamma =1) f(\gamma )\), where \(0 < \beta (\gamma =1) \le \ln {(2)}\). \(\frac{\beta }{f}\) will be further used to denote \( \frac{\beta (\gamma )}{f(\gamma )} = \beta (\gamma =1)\).

Equation (28) (and Eq. (1)) has an analytical solution that links its continuous form to its symbolic encoding, provided by the discrete state \(s_n\) obtained by sampling the time at \(t=n/f\), where \(n=\lfloor f t \rfloor \) and \(\lfloor \cdot \rfloor \) is the floor function, which extracts the integer part of ft:

The main steps to derive this equation can be seen in Refs. [8, 13, 28, 45]; however, its present form allows Eq. (3) to express a return map of signals with multiple propagation paths and with arbitrary time delays for the time of propagation of the multiple paths. Its formal derivation will be published elsewhere. In this equation, \(s_n\) represents the binary symbol associated with the time interval \(nT \le t <(n+1)T\), where \(s_n=s(t=nT)\). Sampling the time at this same rate, a discrete mapping of x(t) can be constructed

Moreover, this solution can be written in terms of an infinite sum of basis functions whose coefficients are the symbolic encoding of the analogical trajectory (\(s_n\)). This representation allows for the creation of a matched filter, which receives as its input the signal x(t) corrupted by additive white Gaussian noise (AWGN) and produces as its output an estimate of x(t).

It offers in a single system all the benefits of both the analogical and digital approaches to communicate. The continuous signal copes with the physical medium, and the digital representation provides a translation of the chaotic signal to the digital language that we and machines understand. Supposing the information to be communicated is a binary stream \(\mathbf{b} =\{b_0,b_1,b_2,\ldots \}\), a signal can be created (the source encoding phase) such that \(s(t) = (2b_n-1)\), for \(nT \le t < (n+1)T\) [13]. The so-called source encoding phase is thus based on a digital encoding. Moreover, the discrete variable \(s_n\) is the symbolic encoding of the chaotic trajectory in the space \(x,\dot{x}\).
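The source encoding rule \(s(t) = (2b_n-1)\) for \(nT \le t < (n+1)T\) can be illustrated with a minimal Python sketch. The function names (`encode_bits`, `symbol_at`, `decode_symbols`) are hypothetical and chosen only for this illustration; the sketch covers the digital mapping alone, not the control that forces the chaotic trajectory to follow the symbols.

```python
import math
from typing import List


def encode_bits(bits: List[int]) -> List[int]:
    """Map each bit b_n in {0, 1} to a symbol s_n = 2*b_n - 1 in {-1, +1}."""
    for b in bits:
        if b not in (0, 1):
            raise ValueError("bits must be 0 or 1")
    return [2 * b - 1 for b in bits]


def symbol_at(symbols: List[int], t: float, period: float) -> int:
    """Piecewise-constant s(t): returns s_n for n*T <= t < (n+1)*T."""
    n = math.floor(t / period)
    if n < 0 or n >= len(symbols):
        raise ValueError("t lies outside the encoded message")
    return symbols[n]


def decode_symbols(symbols: List[int]) -> List[int]:
    """Invert the mapping: b_n = (s_n + 1) / 2."""
    return [(s + 1) // 2 for s in symbols]


message = [1, 0, 1, 1]
symbols = encode_bits(message)
```

Here `symbol_at(symbols, 1.5, 1.0)` returns the symbol of the second interval, and `decode_symbols(symbols)` recovers the original bit stream.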

This kind of hybrid chaotic system for communication is not unique. Corron and Blakely [12] and Corron, Cooper and Blakely [46] have recently proposed other chaotic systems similar to that of Eq. (28) (and Eq. (1)). It is hypothesised in Ref. [12] that the optimal waveform that allows for a stable matched filter is a chaotic waveform. In this work, I provide support for this conjecture by showing that stability in the recovery of the information can be cast in terms of the non-existence of negative Lyapunov exponents of Eq. (28) (and Eq. (1)). This is shown in Sect. I of SM. Had this system negative LEs, its inverse dynamics (the matched filter), responsible for filtering noise out of the received signal, would possess a positive LE, making it unstable to small perturbations in its input (the received signal).

Appendix B: The return mapping of the received signal for a single user and an arbitrary number of propagation paths, in the noiseless channel

In Eq. (29), \(s_n\) represents the binary symbol associated with the time interval \(nT \le t <(n+1)T\), where \(s_n=s(t=nT)\).

The received signal in the noiseless wireless channel with a single user can be modelled by

where there are L propagation paths, each with an attenuation factor \(\alpha _l\) and a time delay \(\tau _l\) for the signal to arrive at the receiver along the path l (with \(0=\tau _0< \tau _1< \cdots < \tau _{L-1}\)).
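As a minimal sketch of this channel model, the Python snippet below forms the received signal as the sum of attenuated, delayed copies of a transmitted waveform, \(r(t)=\sum _l \alpha _l x(t-\tau _l)\), which is the form implied by the description of the attenuation factors and delays. The function names are hypothetical, and `demo_wave` is a plain cosine used only as a stand-in so the arithmetic can be checked by hand; it is not a chaotic signal.

```python
import math
from typing import Callable, Sequence


def received_signal(
    x: Callable[[float], float],
    t: float,
    alphas: Sequence[float],
    taus: Sequence[float],
) -> float:
    """Noiseless multipath channel: sum over paths of alpha_l * x(t - tau_l)."""
    if len(alphas) != len(taus):
        raise ValueError("one attenuation factor is needed per delay")
    return sum(a * x(t - tau) for a, tau in zip(alphas, taus))


def demo_wave(t: float) -> float:
    """Stand-in transmitted waveform (assumption): unit-frequency cosine."""
    return math.cos(2.0 * math.pi * t)


# Two paths: a direct path (tau_0 = 0) and an echo delayed by half a period.
r0 = received_signal(demo_wave, 0.0, alphas=[1.0, 0.5], taus=[0.0, 0.5])
```

With these values the echo arrives in antiphase, so `r0` equals \(1 - 0.5 = 0.5\) up to rounding.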

To obtain a map solution for Eq. (31), we need to understand which symbol \(s_{n^{\prime }}\) is associated with the time \(t-\tau _l\). Let us define the time-translated variable

where t represents the global time for all elements involved in the communication, that is, the time at which a certain signal x(t) was generated by a user, the “transmitter”, from the chaotic system in Eq. (28). \(t^{\prime }\) represents the delayed time. The clock of the user, the “receiver”, is at time t, but it receives the signal \(r(t^{\prime })\). The receiver decodes for the symbol \(s_{n^{\prime }}\), where

The operator \(\lfloor \cdot \rfloor \) represents the floor function. The transmitter constructs a map at times \(t=n/f\), so Eqs. (32) and (33) can be written as

where the operator \(\lceil \cdot \rceil \) represents the ceiling function.
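The appearance of the ceiling function can be made explicit with a short worked step. It assumes that the time-translated variable is \(t^{\prime }=t-\tau _l\) and that the decoded index is \(n^{\prime }=\lfloor f t^{\prime } \rfloor \), as suggested by the surrounding text. Evaluating at the transmitter sampling times \(t=n/f\), with n an integer:

\[
n^{\prime } = \left\lfloor f\left( \frac{n}{f} - \tau _l \right) \right\rfloor = \lfloor n - f\tau _l \rfloor = n - \lceil f\tau _l \rceil ,
\]

where the last equality uses the identity \(\lfloor n - a \rfloor = n - \lceil a \rceil \), valid for any integer n and real a. For example, with \(f=1\), \(\tau _l=0.3\) and \(n=5\), one has \(n^{\prime }=\lfloor 4.7 \rfloor = 4 = 5 - \lceil 0.3 \rceil \): the receiver reads the symbol emitted one period earlier.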

In the time frame of \(t^{\prime }\), the solution in (29) multiplied by an arbitrary attenuation factor can be written as

Let us understand what happens to the oscillatory term \(\left( \cos \omega t^{\prime } -\frac{\beta }{\omega } \sin \omega t^{\prime } \right) \) in Eq. (36). Using Eq. (35), we obtain that

So,

where

Let us calculate the previously shown quantities for a delayed time \(t^{\prime \prime }\) one period ahead in time of \(t^{\prime }\):

It can also be written that

This equation can be derived by doing \( t^{\prime \prime } - n^{\prime \prime }T = t^{\prime } + \frac{1}{f} -n^{\prime }T -T \).

Using Eqs. (32), (40), and (42), it is possible to obtain

So, the attenuated signal at time \(t^{\prime \prime }\) is given by

Returning to Eq. (38), notice that by a manipulation of the terms inside the summation, it can be written as

Multiplying this equation by \(e^{\beta /f}\) results in

The received signal at time t and \(t+T\) is then given by

If we observe the received signal only at discrete times \(t=nT\), and defining the discrete variable \(r(nT) \equiv r_n\), we obtain that

where

The variable \(\mathcal {K}_l\) is derived by noticing that

Comparing Eqs. (50) and (51), we finally arrive at a return map for the received signal with multipath propagation

Appendix C: The equivalence principle for flows, and the preservation of the Lyapunov exponents for linearly composed chaotic signals

Let us assume that information is being encoded by using the Rössler attractor. User 1 encodes its information in the variable \(x_1(t)\) and user 2 in the variable \(x_2(t)\). User 2 has a base frequency Q times the one from user 1. Notice that \(Q=1/\gamma \), where \(\gamma \) is the time-rescaling factor. User 1 chaotic signal \(x_1\) is generated by

User 2 uses the signal \(x_2\) generated by

where a, b and c represent the usual parameters of the Rössler attractor. Notice, however, that this demonstration would be valid for any nonlinear system; the Rössler system was chosen simply to make the following calculation straightforward to follow. The system of equations in (56) is already in the transformed time frame, so that user 2 has a base frequency Q times larger than that of user 1.
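The effect of the transformed time frame can be checked numerically with the sketch below. It assumes that the system of user 2 is the Rössler vector field multiplied by Q, so that \(x_2(t)=x_1(Qt)\) when both users start from the same state. The parameter values \(a=b=0.2\), \(c=5.7\), the initial condition, and the fixed-step RK4 integrator are illustrative choices, not taken from the text.

```python
from typing import Tuple

State = Tuple[float, float, float]


def rossler(state: State, a: float = 0.2, b: float = 0.2, c: float = 5.7) -> State:
    """Rössler vector field (parameter values are an assumption)."""
    x, y, z = state
    return (-y - z, x + a * y, b + z * (x - c))


def rk4_step(state: State, h: float, speed: float) -> State:
    """One RK4 step of d(state)/dt = speed * rossler(state)."""
    def f(s: State) -> State:
        return tuple(speed * v for v in rossler(s))

    def shift(s: State, k: State, w: float) -> State:
        return tuple(si + w * ki for si, ki in zip(s, k))

    k1 = f(state)
    k2 = f(shift(state, k1, h / 2))
    k3 = f(shift(state, k2, h / 2))
    k4 = f(shift(state, k3, h))
    return tuple(
        s + h / 6 * (p + 2 * q + 2 * r + u)
        for s, p, q, r, u in zip(state, k1, k2, k3, k4)
    )


def integrate(state: State, h: float, steps: int, speed: float) -> State:
    """Advance the state by `steps` RK4 steps of size h."""
    for _ in range(steps):
        state = rk4_step(state, h, speed)
    return state


Q = 2.0
start = (1.0, 1.0, 1.0)
# User 1 evolves for 20 time units; user 2, Q times faster, for 20 / Q.
user1 = integrate(start, h=0.01, steps=2000, speed=1.0)
user2 = integrate(start, h=0.01 / Q, steps=2000, speed=Q)
```

The two final states coincide, which illustrates that user 2 traces the same trajectory as user 1, only Q times faster.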

The transmitted composed signal can be represented by

where \(\alpha _1\) and \(\alpha _2\) are attenuation, or power gain factors.

The interest is to derive a system of ODEs that describes all variables involved in the ODE system describing the received signal

Determinism in chaos allows us to write that at a time t there exists a \(\tau \) such that \( x_2(t) = x_1(t-\tau )\). More generally, if user 2 has a basic frequency Q times that of user 1, its trajectory at time \(t+n\delta t\) (\(n \in \mathbb {N}\)) can be written in terms of the user 1’s trajectory by

I now define a new set of transformation variables given by

where

Defining the vectors \(\mathbf {X}_1(t)=\{x_1(t),y_1(t),z_1(t)\}\) and \(\mathbf {W}^1_n(t)=\{w_n^{1x}(t),w_n^{1y}(t),w_n^{1z}(t)\}\), I can write Eqs. (60)–(62) in a compact form

Notice also that by defining the vector \(\mathbf {X}_2(t)=\{x_2(t),y_2(t),z_2(t)\}\), I can write that at time t

To facilitate the following calculations, I express some terms of Eqs. (66) and (67) along the variables \(x_1(t)\) and \(x_2(t)\) for \(n=\{0,1,2,\ldots ,N-1,N\}\):

I express the transformation variables considering a small displacement \(Q \delta t\) in time from the time t:

Then, time derivatives can now be defined by

For the variables of the user 2, we have that

which takes us to

The original variables of the Rössler system for user 1 can be written in terms of the new transformed variables by

and for the user 2

Before I proceed, it is helpful to make some considerations regarding this transformation of variables. Notice that \(\dot{x}_2=\frac{w_{N-1}(t) - w_N(t)}{\delta t}\) and \(\dot{x}_1=\frac{w_{0}(t) - w_1(t)}{Q \delta t}\). So, \(\dot{x}_2 = Q\dot{x}_1\). Moreover, \(\mathbf {W}^1_n(t)=\mathbf {X}_1(t-\tau +(N-Qn)\delta t)\) and \(\mathbf {W}^2_n(t)=\mathbf {X}_2(t+(N-Qn)/Q \delta t)\). So, \(\mathbf {W}_n^1(t) = \mathbf {W}_n^2(t)\), but their derivatives are not equal.

In the new variables, the ODE system describing the received signal from user 1 is described by

and user 2 is described by:

The received signal is described by

and its first time derivative

A final equation is needed to describe the first time derivative of \(\dot{w}_0^{2x}(t)\) in terms of the previously defined new variables. We have that

Moreover,

Placing Eqs. (85) and (86) in Eq. (84) takes us to

The variational equations of the system formed by Eqs. (75), (77), (80), (81), (83), and (87) can be constructed by defining the perturbed variables \(\tilde{w}^{jk}_i=w^{jk}_i + \delta w^{jk}_i\), with the indices \(j \in \{1,2,3\}\), \(k \in \{ x,y,z \}\), \(i \in \{0,\ldots ,N\}\), whose first derivative is \(\dot{\tilde{w}}^{jk}_i=\dot{w}^{jk}_i + \delta \dot{w}^{jk}_i\). Also, \(\tilde{O}=O+\delta O\), for the received signal. The Jacobian matrix of the variational equations is thus given by

The upper-left diagonal block

is responsible for producing the same three Lyapunov exponents \(\chi _1,\chi _2=0,\chi _3\) (\(\chi _1>0,\chi _3<0 \)) of the Rössler attractor, for user 1.

The bottom-right diagonal block

is responsible for producing the three Lyapunov exponents \(Q\chi _1,\chi _2=0,Q\chi _3\) (so Q times the Lyapunov exponents of the Rössler attractor), for user 2. The diagonal elements of the Jacobian will produce \(N-1\) Lyapunov exponents equal to \((Q \delta t)^{-1}\) and \(N-1\) exponents equal to \(-\delta t^{-1}\). The signs of these “infinite” Lyapunov exponents are a consequence of the way the derivatives were defined, and they could have been made to have the same sign. These exponents represent a higher-dimensional dynamics that effectively does not participate in the low-dimensional ordinary dynamics of the measured received signal. They are a consequence of the transformation of a time-delayed system of differential equations into an ODE without explicit time dependence.
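The factor Q in the exponents of user 2 can also be seen directly from the time rescaling, independently of the Jacobian construction. The following is a short sketch, assuming that user 1 obeys \(\dot{\mathbf {X}}_1=\mathbf {F}(\mathbf {X}_1)\) and user 2 obeys \(\dot{\mathbf {X}}_2=Q\mathbf {F}(\mathbf {X}_2)\) with the same vector field \(\mathbf {F}\) (a notation introduced only for this step). For equal initial conditions, \(\mathbf {X}_2(t)=\mathbf {X}_1(Qt)\), and the same holds for infinitesimal perturbations, \(\delta \mathbf {X}_2(t)=\delta \mathbf {X}_1(Qt)\). Then, with \(t^{\prime }=Qt\),

\[
\chi ^{(2)} = \lim _{t \rightarrow \infty } \frac{1}{t} \ln \frac{\Vert \delta \mathbf {X}_2(t) \Vert }{\Vert \delta \mathbf {X}_2(0) \Vert } = \lim _{t^{\prime } \rightarrow \infty } \frac{Q}{t^{\prime }} \ln \frac{\Vert \delta \mathbf {X}_1(t^{\prime }) \Vert }{\Vert \delta \mathbf {X}_1(0) \Vert } = Q \chi ^{(1)} .
\]

Each exponent of user 2 is therefore Q times the corresponding exponent of user 1, and the zero exponent remains zero.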

In conclusion, the spectra of Lyapunov exponents of the dynamics generating the signals of users 1 and 2 are preserved in the received signal and are not affected by the combination of the signals.

Appendix D: A succinct presentation on the history of chaos for communication

This work reveals novel fundamental properties of chaotic signals to communicate. Some of the material presented here also incorporates wonderful properties of chaos that have been reported in the almost two decades of research in this field, which I succinctly acknowledge in the following. Three seminal works [1, 9, 47] launched the area of communication with chaos. In Ref. [9], the authors controlled chaos [48] to propose a communication system where signals would represent desired digital information, while preserving the original dynamics of the chaotic system. In Ref. [1], the authors proposed a communication system in which analog information signals were added (masked) into the chaotic signal to be transmitted. Synchronization of chaos between the transmitter and the receiver [23] was used as a means to extract the information at the receiver end. In Ref. [47], the authors showed that chaos can be modulated to represent arbitrary digital information. Later on, other works followed [49, 50]: in Ref. [49], it was shown that chaotic signals could be modulated in spread sequences to represent arbitrary digital information, and in Ref. [50], a general method to communicate based on the synchronization of chaotic systems was proposed. Since then, the area has attracted great attention from the scientific community, producing a vast collection of research.

These communication methods can be classified according to the wonderful fundamental properties of chaos they take advantage of: (i) those methods that control the chaotic signal to represent (encode) information, while preserving relevant invariant properties of chaos, as in Refs. [9, 13, 14]; (ii) those that manipulate/modulate the chaotic signal for it to represent (encode) the desired information by altering some natural property of the signal (for example its amplitude, as in chaos shift keying [47, 51] and the spreading method of [49]); (iii) those in which information is extracted at the receiver (decoded) by means of visual inspection of the received signals, as in Refs. [7, 9]; (iv) those in which extraction of information happens via a matched filter, as in Ref. [11] (also known as coherent matched filter receiver [52]); (v) those in which information is extracted by means of synchronization (also known as coherent correlation receiver with chaotic synchronization [52]), as in Refs. [1, 50]. In the review on digital communication with chaos in Ref. [52], two additional schemes are discussed: noncoherent detection techniques and differentially coherent reception. The present work belongs to the first four categories ((i)–(iv)). I assume that there is a control producing a chaotic signal that encodes a desired arbitrary digital message (i), the signal has its amplitude modified by a power gain (ii), decoding is done by a simple visual inspection of the received signal (iii), and information can be extracted by means of a matched filter at the receiver (iv). However, my work additionally explores the wonderful decomposability property that chaotic signals have and that enables a solution for multi-source and multi-frequency communication, which can allow chaos to be adopted as a native signal to support wireless networked communication systems such as the Internet of Things or 5G.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Baptista, M.S. Chaos for communication. Nonlinear Dyn 105, 1821–1841 (2021). https://doi.org/10.1007/s11071-021-06644-4

DOI: https://doi.org/10.1007/s11071-021-06644-4