Abstract

Minimal but increasing number of assessment instruments for Executive functions (EFs) and adaptive functioning (AF) have either been developed for or adapted and validated for use among children in low and middle income countries (LAMICs). However, the suitability of these tools for this context is unclear. A systematic review of such instruments was thus undertaken. The Systematic review was conducted following the Preferred Reporting Items for Systematic Review and Meta-Analysis (PRISMA) checklist (Liberati et al., in BMJ (Clinical Research Ed.), 339, 2009). A search was made for primary research papers reporting psychometric properties for development or adaptation of either EF or AF tools among children in LAMICs, with no date or language restrictions. 14 bibliographic databases were searched, including grey literature. Risk of bias assessment was done following the COSMIN (COnsensus-based Standards for the selection of health status Measurement INstruments) guidelines (Mokkink et al., in Quality of Life Research, 63, 32, 2014). For EF, the Behaviour Rating Inventory of Executive Functioning (BRIEF- multiple versions), Wisconsin Card Sorting Test (WCST), Go/No-go and the Rey-Osterrieth complex figure (ROCF) were the most rigorously validated. For AFs, the Vineland Adaptive Behaviour Scales (VABS- multiple versions) and the Child Function Impairment Rating Scale (CFIRS- first edition) were most validated. Most of these tools showed adequate internal consistency and structural validity. However, none of these tools showed acceptable quality of evidence for sufficient psychometric properties across all the measured domains, particularly so for content validity and cross-cultural validity in LAMICs. There is a great need for adequate adaptation of the most popular EF and AF instruments, or alternatively the development of purpose-made instruments for assessing children in LAMICs.

Systematic Review Registration numbers: CRD42020202190 (EF tools systematic review) and CRD42020203968 (AF tools systematic review) registered on PROSPERO website (https://www.crd.york.ac.uk/prospero/).

Similar content being viewed by others

Introduction

Rationale

Only 10% of research into child and adolescent mental health (CAMH) problems is carried out in low-and-middle-income countries (LAMICs) (Kessler et al., 2007; Merikangas et al., 2009). Meanwhile, over 90% of the world’s children live in LAMICs (WHO, 2008) and this proportion is only set to grow with the high birth rate in these countries. Historically the focus of public health in LAMICs has been on communicable diseases like HIV and Malaria. However, as the standard of living of LAMICs continues to improve, the data shows that in recent years the gradual decrease in infant mortality has resulted in an increasing shift toward non-communicable diseases such as neurodevelopmental disorders (NDDs) (Bakare et al., 2014) and CAMH disorders, which impact cognitive function. Children who would otherwise have died from various infections and birth injuries are now surviving, but surviving with the sequalae of the varied insults suffered at birth and the perinatal period, which are common in LAMICs because of poor obstetric care (Omigbodun & Bella, 2004). The common pathway of many of these conditions are neurobehavioral difficulties often described as Acquired Brain Injury (Bennett et al., 2005; Stuss, 1983) which affects brain function and the mental health and well-being of these children. It is within this context that accurate assessment of executive dysfunction and adaptive functioning, as known sequalae for brain injury, becomes quite important for children in LAMICs.

Executive functions (EF) may be defined as “top-down control processes” of human behaviour (Diamond, 2013) whose primary function is “supervisory control” (Stuss & Alexander, 2000) and includes such abilities as initiation, planning, and decision-making (Diamond, 2013). Better EF is linked to many positive outcomes (Diamond, 2013) such as greater success in school (Duncan et al., 2007; St Clair-Thompson & Gathercole, 2006), while deficits in EF are associated with slow school progress (Morgan et al., 2017) difficulties in peer relationships (Tseng & Gau, 2013) and poor employment prospects (Bailey, 2007). This may be because EF’s have also been presented as potential endophenotypes of various childhood mental disorders such as Hyperkinetic Disorder (ICD 10Footnote 1 code: F90.1; also commonly called Attention Deficit Hyperactivity Disorder (ADHD)) (Doyle et al., 2005). Behaviourally, EF deficits may manifest as distractibility, fidgetiness, poor concentration, chaotic organization of materials, and trouble completing work (Bathelt et al., 2018). Given the difficulties seen, it is therefore important that mental health and rehabilitation services are able to pinpoint areas of greatest difficulty and target interventions appropriately and cost effectively through accurate assessments (Simblett et al., 2012).

Adaptive functioning on the other hand is an area that is just beginning to be examined (Semrud-Clikeman et al., 2017). It is defined as behaviours necessary for age-appropriate, independent functioning in social, communication, daily living or motor areas (Matson et al., 2009), tapping into the ability to carry out everyday tasks within age and context appropriate constraints (World Health Organization, 2001). In this present study, the term was restricted to the narrow scope of adaptive function following brain injury/brain pathology.

Adaptive functioning may be viewed as the practical expression of executive functions in an everyday functional context. Executive function abilities are related and have predictive power over adaptive behaviour (Clark et al., 2002) in both typical and atypical populations according several studies (Gardiner & Iarocci, 2018; Gilotty et al., 2010; Gligorović & Ðurović, 2014; Low Kapalu et al., 2018; Perna et al., 2012; Pugliese et al., 2015; Sabat et al., 2020; Schonfeld et al., 2007; Ware et al., 2012; Zorza et al., 2016). Specific domains of adaptive behaviours and academic achievement may, in part, depend on executive function capacities (Clark et al., 2002). Specifically, the core domains of EF- working memory, inhibition, and cognitive flexibility- have been shown to relate to the domains of adaptive behaviour as conceptual skills (e.g., language and the understanding of time, money, and number concepts) (Gilotty et al., 2010; Pugliese et al., 2015; Sabat et al., 2020) and practical skills (e.g., personal care, occupational and safety capabilities, use of money and transportation, and following of schedules and routines) (Perna et al., 2012; Sabat et al., 2020) and social skills (Gilotty et al., 2010; Pugliese et al., 2015; Zorza et al., 2016). Therefore, reviewing tools for the two related constructs seemed like an appropriate approach to take. Therefore, assessed together, EF and AF could provide the most utility to LMIC clinicians, depending on whether the goal is to focus interventions from a specific domains’ perspective or specific areas of functional deficit in a day-to-day context for interventions.

At this point, one may wonder why of all the cognitive functioning constructs we chose to review tools assessing executive functions and adaptive functioning, and not say IQ. We wanted to review constructs that found the widest applicability trans-diagnostically and the most utility in the clinical setting, and for which interventions could be most directly designed. The information that assessing other psychological constructs related to frontal lobe functioning such as IQ might give, may not be as actionable as what a comprehensive assessment of the domains of EF and AF would provide. For example, an IQ assessment may be useful in diagnosing intellectual disability and in school placement (which are both very important of course), whereas an assessment of EF and AF could lead to identifying domains and areas of functional deficit in the child’s life (across several diagnostic labels) that would lend itself most immediately to therapeutic interventions. Furthermore, AF assessment would also allow clinicians to determine the level of support required (Association & Association, 2013). Finally, even in the developed world with the full range of neuropsychological services available, neuropsychological assessments such as EF and AF assessments alone represents a significant proportion of all assessment services by clinical psychologists (up to 21% according to a large national representative survey among US psychologists (Camara et al., 2000). It therefore seemed more useful for resource constrained LMICs to do a systematic review of such tools for EF and AF than for any other psychological construct at the present time.

Several tools have been developed to assess executive and adaptive function in Western or High-Income-Country (HIC) populations, which perpetuates the trend of skewing research towards wealthier countries as noted above (Kessler et al., 2007; Merikangas et al., 2009). However, not much is known about the nature and quality of tools developed for LAMIC populations. A recent scoping review of EF tools among adolescents was limited in scope, and did not focus particularly on LAMICs (Nyongesa et al., 2019). More importantly, this study did not evaluate risk of bias of the eligible papers (particularly noteworthy was the lack of focus on assessing risk of bias of content validity and cross cultural validity) but only reported on the results declared therein (Nyongesa et al., 2019). Another recent scoping review- this time focused on NDD’s among children in LAMICs also reported regarding EF and AF tools used in LAMICs that, only a few tests have been used to assess executive function in children in LAMICs (Semrud-Clikeman et al., 2017). This paper also failed to do a critical appraisal, though that was outside its scope.

There is a high burden of the known causes of brain injury in developing countries (Bitta et al., 2017; Merikangas et al., 2009) and therefore a rigorous critical appraisal of appropriate assessment tools for EF and AF in this specific context will be highly desirable. Towards this goal of elucidating the issue of assessment of executive and adaptive functioning among children in the context of LAMICs, a scoping review of the subject was undertaken by the authors in an earlier paper to broadly map out the kinds of instruments that had either been newly developed or adapted for use in this context, the results of which are reported elsewhere (Kusi-Mensah et al., 2021). However, reviewing the quality of evidence found in the reviewed papers was beyond the scope of that scoping review. This present paper, therefore, is a continuation of that project, seeking to critically appraise the quality of evidence for the results of the scoping review, and to thus make more definitive recommendations on the best instruments with the most high-quality evidence for use among children in LAMICs.

Objectives

The present study seeks to undertake a systematic review of published literature on the reliability and validity of assessment tools for executive functioning and adaptive functioning among children in LAMIC contexts. The purpose of this is to critically appraise and summarise the evidence for the scientific rigour of the methodologies used (risk of bias), and the results presented (psychometric measurement properties established) for the tools which have been developed, adapted or validated among children in developing country contexts, as well as document any knowledge gaps that may exist.

The following research questions were therefore formulated:

-

1.

What is the quality of adaptation (including content validation) of existing HIC-derived assessment tools for executive functioning or adaptive functioning among children in LAMICs?

-

2.

What is the nature and quality of evidence undergirding newly developed and purpose-made tools for assessment of executive functioning or adaptive functioning among children in LAMICs?

-

3.

What is the nature and quality of evidence supporting the psychometric properties (validity and reliability) of all HIC-derived assessment tools for executive functioning or adaptive functioning among children in LAMICs?

Methods

Protocol and Registration

This systematic review was conducted following the Preferred Reporting Items for Systematic Review and Meta-Analysis (PRISMA) checklist (Liberati et al., 2009), and our protocol was written according to the PRISMA- Protocol extension (PRISMA-P) guideline (Shamseer et al., 2015). The protocols for the systematic reviews of EF and AF measurement tools were successfully registered separately on the PROSPERO website (see here: https://www.crd.york.ac.uk/prospero/) with registration numbers CRD42020202190 and CRD42020203968 for the EF tools systematic review and AF tools systematic review respectively.

Eligibility Criteria

As alluded to earlier, the papers selected for critical appraisal in this systematic review were selected from the scoping review conducted earlier by the authors. The details of the eligibility criteria therefore can be found in that paper (Kusi-Mensah et al., 2021). However, in summary, we made a search for primary research papers of all study designs that focused on development or adaptation/validation of EF and AF tools used in the context of the target outcomes (executive functioning or adaptive functioning following brain pathology) among children in LAMIC countries, with no date or language restrictions. This meant that papers published from 1st January 1894 (earliest date of all search engines used) to 15th September 2020 (the last day of update of the search strategy) were included. The paper also had to primarily be concerned with developing, adapting or assessing the validity of the instrument of choice as one of its main stated study aims (if not the main), and not just as an incidental concern, to be eligible. For participants, studies examining children aged 5 years to 18 years (both healthy and clinical populations) living in LAMICs were included. All eligible full articles in any language were included in the search with no a priori language limits set on the search, and an attempt was made to translate non-English articles using Google Translate or volunteer native language speakers (as available). A list of potentially eligible articles that could not be obtained or translated have been provided in Appendix II. Target outcomes were defined as: 1. Papers reporting on the development (specifically concept elicitation and content validation) of a new tool assessing EF or AF; 2. Papers reporting on adaptation (content validation) of an existing HIC-derived EF or AF tool; and 3. Papers reporting psychometric properties of EF or AF tools in a LAMIC. Specifically, psychometric properties that were included as eligible for consideration were:

-

(a)

Internal consistency

-

(b)

Reliability (test–retest, inter-rater and intra-rater reliability)

-

(c)

Validity (structural, cross-cultural, construct and criterion validity)

-

(d)

Measurement Error

-

(e)

Responsiveness

These measurement properties are fully defined in the ‘data items’ section below. Excluded were animal studies, studies that only used the instrument as an outcome measurement instrument (for instance in randomized controlled trials), studies in which validation was not of the EF or AF tool (but rather validation of another instrument for another non-EF/AF construct such as visuospatial ability, intelligence, short-term memory etc.), and all studies that did not meet the inclusion criteria.

Information Sources

The following databases were searched with indicated dates:

-

1.

MEDLINE (OVID interface, 1946 onwards)

-

2.

EMBASE (OVID interface, 1974 onwards)

-

3.

Cochrane library (current issues)

-

4.

PsychINFO (1894 onwards)

-

5.

Global health (1973 onwards)

-

6.

Scopus (1970 onwards)

-

7.

Web of Science (1900 onwards)

-

8.

SciELO (2002 onwards): Latin America focused database providing scholarly literature in sciences, social sciences, and arts and humanities published in leading open access journals from Latin America, Portugal, Spain, and South Africa; this was an important source of non-English language studies from developing countries in Latin America, particularly from Brazil.

-

9.

Education Resources Information Centre (ERIC, 1966 onwards)

-

10.

British Education Index (BEI, 1996 onwards)

-

11.

Child Development & adolescent studies (CDAS, 1927 onwards)

-

12.

Applied Social Sciences Index and Abstracts (ASSIA, 1987 onwards): important source for multidisciplinary papers; includes social work, nursing, mental health and education journals.

GRAY LITERATURE DATA SOURCES

-

13.

Open grey (1992 onwards): includes theses, dissertations, and teaching guides

-

14.

PROSPERO (2011 onwards): repository of pre-registered study protocols for systematic reviews for trial protocols for similar scoping reviews through PROSPERO.

-

15.

Cochrane library (see above)

-

16.

EMBASE (see above)

-

17.

ERIC (see above)

-

18.

CDAS (see above)

In all 14 unique databases were searched initially by 20th March 2020, and finally on 15th September 2020. We also scanned the reference list of selected papers for other papers of possible interest which might have been missed in the literature search, particularly so for systematic and scoping review papers we found in our search.

Search Strategy

We developed literature search strategies using text words and medical subject headings (MeSH terms) related to the following themes:

-

Executive function/Frontal lobe function/Frontal lobe damage/Adaptive Function and their synonyms and variants using truncation

-

Assessments/Validation/reliability/norms/reproducibility/standardization of instruments and their synonyms and variants using truncation

-

Children/adolescents and their variants using truncation

-

Developing countries/lower-middle-income-countries/LMIC and their synonyms and variants using truncation

The search strategy was developed by a member of the study team (KKM) who had undergone extensive training from the Medical Library Services, University of Cambridge in conducting Systematic Reviews and in using search strategies in all the above-named databases. The search strategy was also reviewed by an experienced Medical Librarian who has extensive expertise in systematic review searching. The search terms were entered sequentially first with individual terms/synonyms connected with the Boolean operator “OR” as a theme-group to broaden the inclusivity of potential hits, while theme-groups were then connected with the Boolean operator “AND” entered into the advance search function to enhance the accuracy of potential hits. The full search strategy for MEDLINE is re-produced in Appendix I (see Supplemental Material) with more details of the search strategy. This search strategy was adapted for each of the 14 databases with each of their result documented in Table 7 below (see result section).

Study Selection

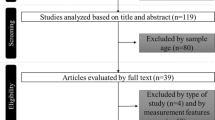

This has been extensively described in the scoping review paper. Six reviewers (all authors except AB) reviewed abstracts and critically appraised all papers. A minimum of 2 reviewers independently evaluated and screened each abstract and full paper at the abstract screening and full paper screening phases and compared results at each stage. Discrepancies were resolved by discussion and mutual agreement, or where there was no agreement, by arbitration by a 3rd reviewer. As a final resort, persistent disagreements were arbitrated by AB, the guarantor. Further, the pre-resolution inter-rater agreement ranged between 81.6%–88.9%, which was above the recommended minimum 80% agreement. In accordance with PRISMA recommendations, the selection process was documented in a flow diagram (see Fig. 1 below reproduced from scoping review paper) (Kusi-Mensah et al., 2021).

Data Collection Process

At least two reviewers independently extracted data from the screened articles using purpose-made data extraction charts: for the preliminary data collection the chart was designed following the PRISMA-ScR and PRISMA-P checklists (see scoping review paper for details); while for the critical appraisal, the chart was designed using items from the risk of bias assessment tool, the Consensus-based Standards for the selection of health status Measurement Instruments (COSMIN) checklist items guidelines (Mokkink et al., 2018b). First a calibration exercise was done for all 6 reviewers using a sample of 100 abstracts and 10 full paper articles to ensure uniform use of the screening criteria and the charting forms. The search results were uploaded and saved into Mendeley using the ‘groups’ function, which allowed online collaboration and discussion among the reviewers. The data were charted using custom-made Excel spreadsheets downloaded from the COSMIN website (see ‘help organising your risk of bias ratings’ here: https://www.cosmin.nl/tools/guideline-conducting-systematic-review-outcome-measures/).

Data Items

The following data points were collected and critically appraised using the following definitions:

Instrument reference

this referred to the name of lead author and publication year of the paper.

Instrument name

this referred to the instrument name and version under consideration.

Outcome Variable

the outcome variables of interest were executive functioning and adaptive functioning as defined above.

Country Settings

the desired setting was low-and-middle-income countries (‘LAMIC’) setting which was defined according to the World Bank list of lower income country (LIC- GNI per capita less than $1025), and middle-income countries which are split into Lower-middle-Income Country (LMIC- GNI per capita between $1026 to $3995) and upper-middle-income country (UMIC- GNI per capita between $3,996 TO $12,375) list (Cochrane Library, 2012; World Bank Group, 2019).

Type of Study:

The critical appraisal was done according to the specific type of study done in the paper, with COSMIN criteria changing for different types of studies. ‘Validation of an assessment tool’ was defined according to specific items/criteria used for reliability and validity according to the COSMIN manual guidelines (Mokkink et al., 2014). Specific items (including their taxonomy and definitions) that were included as part of validation if they were reported upon were defined according to the COSMIN manual (see page 11, Table 1 of the manual) as follows:

-

RELIABILITY: This is a domain which covers the extent to which scores for patients who have not changed on the construct in question, are the same for repeated measurement under several conditions e.g. scores not changing when one uses different sets of items from the same instrument (internal consistency); not changing over time (test‐retest reliability); not changing even when done by different persons on the same occasion (inter‐ rater reliability); or even when done by the same persons (raters or responders) on different occasions (intra‐rater reliability). It is a domain that measures degree to which the measurement is free from measurement error. The larger domain “RELIABILITY” comprises of the following measurement properties:

-

Internal consistency: The aspect of the ‘reliability’ domain which looks at the degree of the interrelatedness among the items. In other words, internal consistency is the maintenance of the same score for the same patient when different sets of items (which are related to each other) from the same instrument are used.

-

Measurement error: The systematic and random error of a patient’s score that is not attributed to true changes in the construct to be measured. One source of this error could be a lack of internal consistency, among other sources.

-

Reliability: This is a measurement property of the domain “RELIABILITY” that specifically looks at the proportion of the total variance in the measurements which is due to ‘true’ differences between patients (i.e., variance which excludes all sources of measurement error). This measurement property tests test–retest, inter-rater and intra-rater reliabilities (see above for definitions).

-

-

VALIDITY: This domain refers to the degree to which an instrument measures the construct(s) it purports to measure. This domain consists of the following measurement properties:

-

Content validity (including face validity): a measurement property of validity which looks at the degree to which the content or items of an instrument is an adequate reflection of the construct to be measured within the specific cultural context in question. It usually covers the following aspects of its measurement properties: ascertaining whether each item is relevant (i.e. refers to concepts that are familiar and common to the experience of the target audience/likely participants), comprehensible (i.e. words used can be understood at the language and educational level of the target audience) and comprehensive (i.e. items cover all the domains or aspects of the concept as understood by the target audience).

-

Construct Validity: a measurement property of validity which examines the degree to which the scores of the instrument are consistent with known hypotheses (for instance with regard to internal relationships, relationships to scores of other instruments, or differences between relevant groups) based on the assumption that the instrument validly measures the construct to be measured. This will comprise of the following aspects of measurement property subsets: Structural Validity which is the degree to which the scores of an instrument are an adequate reflection of the dimensionality of the construct to be measured (i.e. if the construct is seen as a single domain, a factor analysis will not produce 2 or more factors for that same construct but just one factor reflecting that single domain); Cross-cultural validity: which is the degree to which the performance of the items on a translated or culturally adapted instrument are an adequate reflection of the performance of the items of the original version of the instrument; and Hypothesis-testing (construct validity): which is the degree to which scores produced by the instrument are consistent with a known true hypothesis. Hypothesis-testing can be further divided into two sub-categories: discriminant validation (where the hypothesis is testing the ability of the instrument to discriminate between 2 group- say a clinical versus healthy group such as an EF instrument’s scores discriminating between a population of healthy children and children with ADHD in terms of ability to plan without distraction); convergent validation (where the hypothesis is testing the convergence of the instrument of interest- say an EF tool- with another instrument of related but different construct- such as an ADHD screening tool, such that there is good enough correlation between the scores produced by the two different but related tools).

-

Criterion validity: a measurement property of validity which looks at the degree to which the scores of an instrument are an adequate reflection of a ‘gold standard’. For outcome measurement instruments the ‘gold standard’ is usually taken as the original full version of an instrument where a shortened version is being evaluated.

-

-

RESPONSIVENESS: Another broad domain which looks at the ability of an instrument to detect change over time in the construct to be measured

The above definitions of measurement properties also largely conformed with the definitions given in The Standards for Educational and Psychological Tests manual American Educational Research Association, American Psychological Association, & National Council on Measurement in Education, 2018). Any paper that reported information on any of the above was considered as eligible for having included an eligible outcome measure. An important judgement decision that was made in respect of classification of what constituted “a study” was that any individual validation conducted in any given research project/paper was regarded as a “study” in accordance with COSMIN manual recommendations (Prinsen et al., 2018). So, for example, a given paper might report the conduct of say- construct validation (hypothesis testing), cross cultural validation and structural validation of one instrument all within the same paper. This was thus reported as three studies reported within one paper.

Mode of administration: This was defined as the way in which the instrument was administered, i.e. whether the instrument was a performance-based task, or an informant-based tool (i.e., self-reported or parent/proxy-based questionnaire etc.). For performance-based tasks, the number of trials of a task was considered as the “number of items” when it came to evaluating aspects like adequacy of sample size in Structural validity or Cross-cultural validity studies.

Sub-domains: the number of sub-scales or sub-domains or items of interest of the tool in question reported on.

Sample size: number of participants used.

Demographics: mean age and gender percentages of sample.

Local Settings: whether study was predominantly set in rural or urban settings (or both).

Condition: whether study was conducted among health sample or clinical sample, and if so, what clinical condition.

Language of population: What local language-group was the study conducted among.

Risk of Bias in Individual Studies

Each study was evaluated for both the quality of its methodology (Risk of bias assessment) and the quality of its results following the COSMIN guidelines (Mokkink et al., 2018a). This COSMIN guidelines came in 2 separate manuals: 1. The COSMIN methodology for assessing the content validity of Patient‐Reported Outcome Measures (PROMs) (C. B. ) which focuses on assessing risk of bias in content validity studies, and 2. the COSMIN methodology for systematic reviews of Patient‐Reported Outcome Measures (PROMs) (Prinsen et al., 2018) which focuses on risk of bias for all other types of validity studies. This section describes the risk of bias rating for the methodology used while the next section describes that of results. The risk of bias evaluation was done at both the study level and the instrument level. A critical appraisal of each study was done independently by at least 2 reviewers and compared, and consensus reached. The methodology was rated using 4 codes: ‘very good’, ‘adequate’, ‘doubtful’ and ‘inadequate’. So, for example, in evaluating a Structural Validity study for an instrument developed using classical test theory (CTT), the first item to be evaluated in the methodology would be whether Confirmatory Factor Analysis (CFA) was performed (scored as: ‘very good’), as opposed to Exploratory Factor Analysis (EFA score: “adequate”), or no CFA or EFA was performed at all (score: “inadequate”). For giving the overall rating of each study, the ‘worst score count’ system was used as per the COSMIN guideline, for the simple reason that “poor methodological aspects of a study cannot be compensated by good aspects” (Mokkink et al., 2018a; Caroline B ). For a detailed explanation of the individual criteria for each respective rating in all the scoring domains refer to pages 47 – 63 of the ‘COSMIN Manual for Systematic Reviews of PROMs’ (Mokkink et al., 2018a) which is downloadable for free here: https://www.cosmin.nl/tools/guideline-conducting-systematic-review-outcome-measures/.

Further, any qualitative studies for content validation found for new tool development or adaptation studies were critically appraised using the separate COSMIN guidelines for evaluation of content validation studies (Terwee et al., 2018a, b) (which is different from the COSMIN manual whose methodology was “developed in 2016 in a Delphi study among 158 experts from 21 countries”. This is also downloadable for free at the above URL of the COSMIN website. Here also the methodology of the content validation/instrument development study was appraised for risk of bias (also rated as: ‘very good’, ‘adequate’, ‘doubtful’ and ‘inadequate’- see COSMIN box 1 and box 2 in excel spreadsheet in supplemental material). The extracted and critically appraised data were then summarised per each identified instrument, and an overall rating given to the quality of evidence according to the COSMIN guideline, using the GRADE (Grading of Recommendations Assessment, Development, and Evaluation) approach (Schünemann et al., 2008), as described below (see Table 2 below).

Summary Measures

This section describes evaluation of results reported by the papers. The principal summary measures to be evaluated across studies differs according to study type. Table 1 below (reproduced from COSMIN manual for Systematic Reviews) summarises the various summary measures and criteria for all study types except content validity (reported later). After rating the methodology, the results reported were then rated as: ‘ + ’ for sufficient, ‘– ‘for insufficient and ‘?’ for indeterminate. For the construct validation, the acceptable measure of effect of the instrument of interest when compared with another similar instrument was pre-defined by the study team as an expected correlation between the two of at least 0.5 as recommended by the COSMIN guideline (Mokkink et al., 2018a). For comparison of healthy versus clinical populations on EFs or AFs, the pre-defined minimum acceptable difference was a statistically significant difference (Mokkink et al., 2018a) with an odds-ratio of at least 1.5. The results for individual assessment tools from different papers were then qualitatively pooled together and given an overall rating also according to ‘sufficient’, ‘insufficient’ and ‘indeterminate’.

For the content validation, results were rated as: ‘ + ’ for sufficient, “– “ for insufficient, ‘?’ for indeterminate and “ ± “ for inconsistent. The general rule for giving a sufficient rating per criterion was as follows:

-

+ ≥ 85% of the items of the instrument (or subscale) fulfill the criterion.

-

– < 85% of the items of the instrument (or subscale) does fulfill the criteria.

-

?No(t enough) information available or quality of (part of a) the study inadequate.

-

± Inconsistent results.

The ten (10) individual criteria for synthesizing results of Content Validity are specified in Table 2 below. The results of each criterion are rated taking into consideration the risk of bias assessment (i.e., quality of methodology- which would have been rated in COSMIN box 1 and box 2) and rated accordingly. The results rating was recorded in Table 2 below.

In using the COSMIN content validation manual (Terwee et al., 2018a, b, we made the following modifications for the following reasons. Firstly, although the COSMIN content validation manual allowed for three types of ratings to be assessed- 1. rating of original development study (if available or relevant) 2. rating of all adaptation or content validation studies, and 3. independent rating of all items by reviewers- following which a summary score would be given for the content validity of the instrument (see page 52 of COSMIN content validity manual), we chose to drop the third type of rating (the reviewers independent rating) and rather just stick with the first two- development study rating and adaptation/content validity studies rating. We followed this course of action for two reasons: firstly obtaining the actual instrument under review (rather than just the published validation study on that instrument) was not always going to be practical or even possible sometimes since several of them were copyrighted material under commercial licence for which we would have to pay a fee to obtain the instrument; the decision was therefore made that if we were not going to be able to obtain the full instrument for ALL eligible papers, then it would be unfair to evaluate some instruments on those three levels, and compare these with others that were evaluated on just 2 or even 1 level simply because some instruments were freely available and others were not.

Secondly, given our varying levels of expertise/experience and given the wide variety of countries from which we were going to evaluate papers, we also wanted to further minimise the amount of subjectivity that would go into us personally reviewing each item on candidate instruments as to the appropriateness of items for each country across such a diverse array of country-specific sociocultural realities and languages that we were not personally familiar with; and then going on to factor in these subjective impressions into the overall rating of the instrument. We therefore thought it would be better to leave the review of the appropriateness of individual items for individual country contexts (if done at all) to the local experts who might have been involved with the individual projects. We thus thought it best to restrict ourselves to only the review of the published development and adaptation/content validation papers using the specified standard criteria published in the two COSMIN manuals across board. This we felt would be more objective and give all tools an even playing field.

A practical effect of this approach in determining the OVERALL RATING of results of an instrument (summing up ALL available studies) was as follows: the COSMIN manual states that since there is supposed to be a Reviewers’ independent rating of each item (apart from the rating of the published development study and rating of the adaptation studies), where the results of the development and adaptation studies are at variance, the Reviewers’ own independent rating should be used, hence it should not be possible to give an ‘indeterminate’ OVERALL RATING for any instrument (see page 60 of COSMIN content validity manual). However, contrary to this, we did make it possible to give an 'indeterminate' overall results rating ('?') where the rating for the development study and the adaptation study were at variance since we did not do any Reviewers' rating to fall back on for an overall rating.

Another modification that was made in the application of the COSMIN criteria was in the risk of bias (RoB) evaluation for performance-based tasks. In evaluating the content validity of performance-based tasks, we did not deem it appropriate to assess "comprehensiveness" of the task (how well does the task cover all aspects of the construct at hand) among the caregivers/patients, because being lay people it seemed a bit unfair and unreasonable to ask them to evaluate how comprehensive such highly specialized tasks were to the construct of interest. Therefore, we only evaluated “comprehensiveness" among subject matter experts. However, for such domains as “relevance”, we went ahead and evaluated these performance-based tasks for relevance among caregivers/patients as per COSMIN guidelines because we felt that asking about the relevance of a task to the experience of a patient/caregiver was perfectly legitimate in such circumstances. As noted by Semrud-Clikeman and colleagues, when a task is unfamiliar to a child in a LAMIC but familiar to children in Western cultures, administering such a task to the LAMIC child may measure the ability of LAMIC children to adapt to new situations rather than their ability to complete the actual task, rendering the scores invalid for the domain being measured (Semrud-Clikeman et al., 2017), hence the need to assess even performance-based tasks for relevance.

A final modification made in applying COSMIN was in evaluating the RECALL PERIOD (see item 5 in Table 2 below). Because behaviours being described by the two constructs we evaluated (Executive and Adaptive functioning) were not "symptoms" per se, items concerning RECALL PERIOD were deemed as 'NA- not applicable'. This was because given that these behaviours were not symptoms with a "time of onset", but rather normal behaviours expected at various age-appropriate milestones, it did not seem suitable to evaluate whether authors concerned themselves with recall periods of how far back the item was to be evaluated and would thus have been unfair to have penalized them for not doing so (as per COSMIN guidelines) given the context. Further, for performance-based tasks only, evaluation of appropriateness of RESPONSE OPTIONS (see item 4 in Table 2 below) such as ‘often’, ‘sometimes’, ‘never’ etc. as seen in questionnaires was also deemed ‘not applicable’. This question while making perfect sense in the context of an informant-based instrument (which has various response options such as ‘sometimes’, ‘never’, ‘often’ etc.), would not make sense in the context of a performance-based tasks such as Go/No-go or Wisconsin Card Sorting Test where the response is an action.

Synthesis of Results

This section describes how methodology and results ratings of individual studies were synthesized and summarized across several papers for each instrument. After rating the methodology and results of individual studies and qualitatively pooling these together to give an overall rating per instrument, the overall quality of the evidence for the reported results of the instrument in question (taking into consideration all published papers for that instrument) was then graded following the GRADE (Grading of Recommendations Assessment, Development, and Evaluation) approach (Schünemann et al., 2019). In other words, after giving the overall rating of the results of a study type per instrument, this result was then accompanied by a grading of the quality of evidence using the GRADE approach.

The instrument in question is usually assumed to be of high quality of evidence from the start (see Table 3 below reproduced from page 34 of COSMIN manual (Mokkink et al., 2018a)), and progressively downgraded to lower levels, taking into consideration the best rating for Risk of Bias, inconsistencies of results from different studies (i.e. between study variability/heterogeneity), imprecision of results (i.e. down-grading for low pooled sample sizes) and indirectness (i.e. downgrading for studies that were (partly) performed in another population or another context of use than the population or context of use of interest in the systematic review, for example, evidence from a mixed sample with adults rather than just children and adolescents).

The final quality of evidence was then graded as: ‘high’, ‘moderate’, ‘low’ or ‘very low’ depending on which level the instrument was finally left at following successive downgrades. This grading serves to indicate how confident one can be that the overall rating is trustworthy (Terwee et al., 2018a, b). So, for example, in this scheme, 2 different tools- Instrument A and Instrument B- might both have a rating of “ + ” (sufficient) in their structural validity, but Instrument A might have a grading of “high” for the quality of evidence supporting its rating of “ + ”, while Instrument B might have a grading of “very low” for the quality of evidence supporting its own “ + ” rating.

To go into more detail, Risk of Bias (RoB) was given an overall grade of “No risk”, “serious”, “very serious” or “extremely serious” risk depending on the situation specified against each grade in Table 4 below (reproduced from page 34 of COSMIN manual (Mokkink et al., 2018)). So, for example, an instrument that had multiple studies of ‘inadequate’ quality, or only one study of ‘doubtful’ quality in its methodology rating would be rated as having ‘very serious’ RoB (see Table 3) and would be downgraded by –2 steps from say “High” quality of evidence to “Low” quality of evidence according to GRADE criteria (see Table 4).

A similar principle of progressive downgrading was followed for Inconsistency of Results. The pooled or summarized results were rated based on a majority of individual (study) results as ‘sufficient’ or ‘insufficient’ (i.e., if most consistent results were ‘sufficient’ overall rating would be sufficient, if majority of consistent results were ‘insufficient’, overall rating for consistency would be ‘insufficient’) and then downgraded accordingly using the GRADE approach in Table 3. For the purposes of determining the degree to which the downgrade was to be made for inconsistency, COSMIN recommended that the review team come to consensus about that decision. Consequently, the team decided that a downgrade of –1 (‘serious’) would be made for inconsistency if a super majority of results (above 75%) were consistent, in which case the results would be rated as ‘sufficient’ but quality of evidence would be downgraded by only –1 (say from ‘high’ to ‘moderate’) for inconsistency. However, if less than 75% but more than 50% of studies found agreement (e.g., 60% of studies gave ‘sufficient’ results and 40% gave ‘insufficient’ results for any given parameter- say, Test–retest reliability) then the overall rating would be given as “sufficient” but quality of evidence downgraded by –2 levels (‘very serious’) say from “High” to “Low” quality. If less than 50% of studies gave a consistent result (e.g., 40% of studies gave ‘sufficient’ and 60% gave ‘insufficient’ results) then the overall rating would be given as ‘insufficient’ but quality of evidence downgraded by –2 levels.

Having given this explanation, it must be stated that a slight departure was made in applying this rule when it came to Construct Validation, though this alternative approach was still in accordance with COSMIN recommendations (see page 34 of COSMIN manual). For Construct validation, where the two hypotheses being tested were of a fundamentally different nature (e.g., discriminant validation, as opposed to say a convergent validation), the strategy adopted was to summarize the results by sub-groups (i.e. summarize according to each individual hypothesis) rather than pooling all the results together. So, in this case, the results of all convergent validity studies will be pooled separately from the results of all discriminant validation studies treating both as “sub-groups” of construct validation, rather than pooling together all “construct validity” studies and using the 75% majority rule.

For Imprecision, the total sample size of all the included studies (on the validation study in question) were simply pooled together and the quality of evidence simply downgraded with one level when the total sample size of the pooled or summarized studies was below 100, and with two levels when the total sample size was below 50, as per the COSMIN manual (see page 35) (Mokkink et al., 2018). For Indirectness, this was typically not a major concern in this study since part of the screening criteria was that the study be performed primarily among children (aged 5 – 18 years). However, in the few instances where there were mixed study populations involving say young adults (19 – 25 years), only a single level downgrade (‘serious’) of quality of evidence was made, as recommended by the COSMIN manual.

This downgrading was done successively from “high” downwards following evaluation of ‘risk of bias’, ‘inconsistency of results’, ‘imprecision’ and ‘indirectness’ as explained above. The results of the quality of evidence for all instruments were then summarized and presented below in the results section. The interpretation of what each of the ratings (individual studies) and the grading (overall quality of evidence for individual instruments) is shown in Table 5 below.

For the synthesis of results of the content validation, following the separate COSMIN guidelines for evaluation of content validation studies (Terwee et al., 2018a, b) a similar but slightly different approach was used for results synthesis. First, as stated above with the other types of validations, each criterion for results of content validity was given a rating (± /?) that took into consideration the methodology of content validation rating (see COSMIN box 1 and box 2 in Excel spreadsheet in supplemental material), which rating was recorded in the corresponding item in Table 2. After rating each criterion, a summary rating is then given to the RELEVANCE box (criteria 1 – 5), COMPREHENSIVENESS box (criteria 6), and COMPREHENSIBILITY box (criteria 7 – 8) as either ± /?/ ± (see COSMIN content validity manual, page 58, Table 3 for rules for summarising rating per box).

Then, a CONTENT VALIDITY rating is given for the study according to COSMIN guidelines (see COSMIN content validity manual, page 59, Table 4 for rules for summarising content validity rating per study) also as ± /? / ± . Finally, the content validity ratings from all available studies are summarised for the instrument and an overall quality of evidence is given according to GRADE approach as high’, ‘moderate’, ‘low’ and ‘very low’, all following the specified COSMIN guidelines (see steps 3b and 3c of COSMIN Content Validity Manual on page 60–62).

This overall rating is done factoring in both the score of the content validity of the eligible adaptation study/studies under consideration, as well as the score of the content validity of the original instrument development study of that instrument following a critical appraisal as per usual COSMIN guidelines whether or not this original development study was captured by the search strategy. This would mean attempting to obtain the original instrument development study and appraising it, even where it would not otherwise fall into the eligibility criteria (for example, when appraising instruments developed in HICs that were being adapted for use in a LAMIC- the original development study (in the HIC) will have to be critically appraised, and the score factored into the overall content validity rating of the instrument). Where the original development study could not be obtained (for whatever reasons) to be critically appraised, an estimation of their probable score based on publicly available information and an assumption of the best case scenario of their likelihood of fulfilling (or failing to fulfil) the COSMIN criteria was made, and this was then used in estimating their overall content validation score while factoring in the score of the adaptation studies that were eligible as just described, with cogent reasons provided for their estimated score. Wherever this type of estimation was done, this has been clearly indicated in the results and in the supplemental material for the sake of transparency.

The criteria for rating quality of evidence are reproduced in Table 6 and Fig. 1 below. Because of the decision to exclude ‘Reviewer rating’ level, the COSMIN guideline for summarising and giving an overall content validity rating per instrument was modified to allow for an ‘indeterminate’ rating (?). As recommended by COSMIN, where multiple content validity studies were reported for any single instrument, ‘indeterminate’ studies were ignored, and only ‘sufficient’ or ‘insufficient’ results were considered and summarised. However where only a single study of ‘indeterminate’ result was found for content validity, rather than ignore the “?” rating, we chose to report this (?) as the final summary rating. The quality of evidence was then determined as ‘high’, ‘moderate’, ‘low’ and ‘very low’ according to the modified GRADE approach as outlined in COSMIN guidelines.

Downgrading the GRADE quality of evidence rating for risk of bias was done as follows. Downgrade for ‘serious RoB’ (–1 level, e.g., from high to moderate) was made if the adaptation/content validity study was of ‘doubtful’ methodological quality. If there were no content validity studies (or only of inadequate quality) and the instrument development study was of doubtful quality, downgrade for ‘very serious RoB’ (–2 levels) was made. If there was no adaptation/content validity study (or if only one of ‘inadequate’ quality) and the instrument development study was also of ‘inadequate’ quality, RoB downgrade was –3 levels (extremely serious) to ‘very low’ quality of evidence. Figure 1 (reproduced from COSMIN content validity manual) summarizes the flowchart for downgrading for RoB. For inconsistency, downgrade to ‘serious’ (–1 level) was made if the results rating for the instrument development study and the adaptation/content validation study were inconsistent.

Risk of Bias Across Studies

Meta-biases like publication bias were reduced by searching the ‘grey literature’ as indicated above. However, it was difficult to guard against selective reporting within studies as this was a largely qualitative systematic review which therefore precluded requesting for original datasets from authors to conduct a “meta-analysis” of results.

Results

Study Selection

Table 7 (reproduced from the scoping review) summarises the results of the initial search in each individual data source, along with the dates of coverage of the search in each database.

Following de-duplication, manual screening of full papers for eligibility, 51 full papers were found to be eligible for full data extraction and critical appraisal. Web of Science showed a surprisingly higher number of results than PsychINFO possibly because of the search strategy that was used: because the search terms, ab initio, did not preclude studies that only used these assessment tools in psychometrically unrelated papers without necessarily reporting psychometric properties (these were later excluded on manual inspection of abstracts and full review papers). Therefore, Web of Science, which is a database dedicated to multidisciplinary research (and hence also likely to have a lot more abstracts than the more specialised PsychINFO), was likely to include a significant proportion of abstracts of such “use studies” which were later excluded as ineligible upon manual screening by the reviewers. The screening process and reasons for exclusion are summarised in the PRISMA flow diagram below in Fig. 2.

PRISMA Flow Diagram for Study Selection

Study Characteristics

Details of the study characteristics of each paper are reported elsewhere in the scoping review paper by the authors (Kusi-Mensah et al., 2021), and provided in the Appendix of this paper. However, some key highlights are pertinent here. A total of 163 studies (as defined under Data Items above) were reported in 51 papers. The most frequently conducted types of study were Structural Validity and Construct Validity/Hypothesis testing studies at 38 studies each (23.3% each of total individual studies), followed by Internal consistency studies at 27 (16.6%). In terms of geographical regions, the Americas (Central and South America) (30.4% of papers), Sub-Saharan Africa (21.4% of papers) and the Middle East (17.9% of papers) were the top 3 performing regions in terms of number of papers published from LAMICs. Most studies conducted involved urban populations at (76.7%), and exclusively healthy populations (60.8%) as opposed to clinical populations (with health controls).

Results: Risk of Bias Within and Across Studies and Results of Individual Studies

Table 8 shows a summary of the results (synthesis of results for all studies for an instrument pertaining to each measurement property) of the critical appraisal for all the types of validity except content validity (see Table 9 for content validity results). The quality of evidence was summarised using the GRADE approach (Schünemann et al., 2019) shown by the colour coding in the table. For the details of the rating of individual studies, see the following boxes in the Supplemental Material: for Risk of Bias of various measurement properties of validations in individual studies- COSMIN boxes 3–10, for results of individual studies- COSMIN Supplemental Table 2; for details of the reasons behind each rating, see comments on ‘Summary Psychometric Ratings’ in Supplemental Material.

In this systematic review, 40 unique tools coming in 49 version/variants were identified as having been either developed or adapted/validated for use among children in LAMIC countries. Figures 3 and 4 below provide a snapshot summary of the overall results of this critical appraisal in pie chart format. Figure 3 shows the overall summary of the results ratings of the various measurement properties, while Fig. 4 shows the summary of the overall GRADE ratings of the quality of evidence for the various measurement properties. None of these tools showed full validation (i.e., validation in all domains assessed) in LAMICs. Only 11% of adaptation/development studies showed “sufficient” content validation, while 33% showed sufficient results for reliability and 36% did same for cross cultural validity. However, for internal consistency, structural, convergent and discriminant validities results were good at 91.3%, 53%, 75% and 84% “sufficient” results respectively. When it came to the quality of evidence as well (see Fig. 4), the pattern of results was similar with 100% of content validation studies, 86% of cross-cultural validations and 90.5% of reliability studies being of either low or very low quality. Internal consistency was generally of high quality (87%), with 50.1% of structural validity, 75% of convergent/concurrent studies and 71.8% of discriminant validity studies showing either high or moderate quality of evidence supporting their results.

Going into the specific instruments (see Table 8), the BRIEF (in its various versions) (Gioia et al., 2000), Wisconsin Card Sorting Test (WCST) (Berg, 1948), Go/No-go (GNG) (Luria, 1973) and the Rey-Osterrieth complex figure (ROCF) (Rey, 1941) had the most validations undertaken, both in terms of the variety of validations and the number of studies. None of them though had either ‘high’ or even ‘moderate’ quality evidence for ‘sufficient’ psychometric properties across all the measured psychometric domains. The BRIEF (in all its versions) showed high quality of evidence for ‘sufficient’ internal consistency, high quality evidence for ‘sufficient’ construct validity (for BRIEF- pre-school version) and moderate to low quality evidence for sufficient construct validity (mostly convergent validity) for BRIEF-parents and BRIEF-teachers respectively. The WCST showed moderate quality evidence for ‘sufficient’ internal consistency and low-quality evidence for ‘sufficient’ construct validity across several studies, while the ROCF showed high quality evidence for ‘sufficient’ construct validity (discriminant validity). The GNG showed high quality evidence for both ‘sufficient’ structural validity and ‘sufficient’ internal consistency. For cross-cultural validity, only two instruments had moderate quality evidence for a ‘sufficient’ rating: GNG and the Behavioural Assessment System for Children (BASC) (Reynolds & Kamphaus, 1992). They both also showed high quality evidence for sufficient structural validity and internal consistency. However, BASC showed low quality evidence for sufficient construct validity in the countries of interest.

For adaptive functioning, the most assessed tools were the Vineland Adaptive Behaviour Scales (VABS) (Sparrow et al., 1984, 2005) and the Child Function Impairment Rating Scale (CFIRS) (Tol et al., 2011), a newly developed tool from Indonesia. Of these two, while both had high quality evidence for ‘sufficient’ internal consistency, VABS showed low-quality evidence for ‘insufficient’ structural validity, and indeterminate cross-cultural validity. The CFIRS showed low quality evidence for sufficient construct validity but reported no formal assessment of comprehensibility and comprehensiveness among subjects during tool development (hence was rated ‘indeterminate’ in both) with methodological shortcomings in relevance assessment (hence rated ‘insufficient’).

Table 9 shows a summary of the results of the critical appraisal for content validity. First few columns show results of individual studies content validation studies, with the “overall rating” column showing the synthesis of results for all content validation studies pertaining to an instrument, and the colour coding showing the GRADE rating for quality of evidence. For details of the reasons behind each results rating, see comments on ‘Summary Content validity’ in supplemental material; and for Risk of Bias of content validation for individual instrument development studies, see COSMIN box 1, while that for content validation of adaptation studies is in COSMIN box 2 in the supplemental material.

Here, 16 unique tools coming in 18 version/variants had either instrument development studies (i.e., brand new tools developed for the countries of interest) or adaptation studies reporting on their content validity in those countries, which were critically appraised. None of these tools showed full content validity (i.e., ‘sufficient’ rating of high or moderate quality evidence in all three sub-domains of RELEVANCE, COMPREHENSIBILITY and COMPREHENSIVENESS) in the LAMICs considered. Of these, only the BRIEF (pre-school version) (Isquith et al., 2004) and the VABS (Sparrow et al., 1984, 2005) had studies showing ‘sufficient’ content validity but of very low quality of evidence, with all the others showing either ‘indeterminate’ (?) or ‘inconsistent’ ( ±) content validity results of either low or very low quality of evidence. For several of the legacy tests such as the Wisconsin Card Sorting Test (WCST) (Berg, 1948), Go/No-go (GNG) (Luria, 1973) and the Rey-Osterrieth complex figure (ROCF) (Rey, 1941), the original development studies could not be obtained to be critically appraised. Therefore we estimated their probable score based on the best case scenario of their likelihood of fulfilling (or failing to fulfil) the COSMIN criteria for development studies, which was used in estimating their overall content validation score (while factoring in the score of the adaptation studies that were eligible as described in Synthesis of Results section of Methodology, and in COSMIN guideline pages 62–63), with reasons provided for their estimated score.

Discussion

This systematic review was carried out to critically appraise the validation and adaptation studies that had been conducted for use of executive and adaptive functioning tools among children and adolescents in LAMICs, to make recommendations for clinical use and for further research in such cross-cultural contexts.

Summary of the evidence: The General State of the Evidence Available.

The first noteworthy observation is that there is an obvious lack of validation studies for executive functioning (EF) and adaptive functioning (AF) instruments in LAMICs, period. The many ‘potholes’ in the summary tables of Tables 8 and 9 is ample evidence of this lack of validity studies. Further, even where the evidence exists, the quality of evidence leaves much to be desired. Particularly concerning were the quality of evidence for content validation, cross-cultural validation, reliability and (to a lesser extent) structural validation studies, where most of these types of studies were of either ‘low’ or ‘very low’ quality as shown in Figs. 3 and 4.

For cross-cultural validation studies, only 5 out of 14 (35%) showed ‘sufficient’ cross cultural validity (see Fig. 3), and even then, the quality of evidence for 12 out of 14 (86%) was ‘low’ or ‘very low’ (see Fig. 4). One of the major methodological problems that led to this poor result was that a vast majority of papers purporting to do a cross-cultural validation failed to use an appropriate approach to compare the two groups (comparing original culture to target cultural context) such as Multi-group Confirmatory Factor Analysis (MGCFA) with most opting for a Principal Component Analysis (PCA), or even ANOVAs and student t test approaches. In order to adequately ascertain measurement invariance of a tool in one cultural context as compared to its use in its original context, one must assess how each item in the tool functions in the target population- whether the items achieved scalar invariance, metric invariance and configural invariance- to be able to confidently conclude that the tool functions in the same way in the target population as it does in the original population in which it was developed (Fischer & Karl, 2019). Only an appropriate method such as MGCFA can provide this information. A PCA for example, based on its underlying assumption of only common variance and no unique variance among items, will only be useful as a data reduction tool that can essentially “boil down” a complex set of variables to an essential set of composite variables (i.e. reduce the number of necessary items in a tool), and not to uncover the latent constructs underlying the variables or to ascertain how items behave in another population. Therefore, use of PCA for cross-cultural validation is considered an inappropriate methodology, and was the major reason for most of the cross-cultural validation studies being rated so poorly, as per COSMIN criteria.

Reliability studies also had a poor showing. Only 6 out of 21 (29%) showed ‘sufficient’ reliability, and even then, the quality of evidence for 19 out of 21 (90%) was ‘low’ or ‘very low’. The reason for this poor showing for many of the studies was either time interval between assessments not stated, or too long an interval (whether for test–retest or inter-rater reliability), where many studies went well beyond the recommended (by COSMIN) 2 week-period (some studies as long as 48 – 96 weeks between assessments), taking too long a time interval to be sure that the underlying construct (e.g. EF) had not actually changed in reality, especially considering that EF does change with age. A second reason was an inappropriate method of data analysis, where some papers used a Pearson’s correlation to calculate reliability without any evidence provided that no systematic change had occurred (since Pearson’s does not take systematic error into account), rather than using the more appropriate Intra Class Coefficient (ICC) (which does take systematic errors into account) and describing the model of ICC used.

For content validity, only 2 out of 16 studies (13%) had ‘sufficient’ content validity with all of them showing either ‘low’ or ‘very low’ quality of evidence. The commonest reasons for this were that many adaptation or instrument development studies did not involve the target audience in either concept elicitation (for instrument development studies) or the determination of relevance, comprehensiveness or comprehensibility (for adaptation studies), with most relying heavily on “expert panel” input for these things. Given the modern sweep towards “co-production” methods, this “expert dominated” synthesis cannot continue to be encouraged. Also, most did not report on enough detail of the pilot studies to allow for adequate assessment of methodology, hence the many ‘indeterminate’ ratings of content validity (according to COSMIN, where not enough information is provided to make a judgement one way or another whether evidence is ‘sufficient’ or not, it is recommended to make a rating of ‘indeterminate’). Further, in a few cases where either legacy instruments (such as WCST and ROCF) or some proprietary instruments were involved, it was not always possible to obtain the original development studies if they were not freely available online (some proprietary instruments only published their development study in their instrument manuals which need to be purchases along with the tools), and therefore an estimation rating had to be made for the development tools based on information that was publicly available, assuming a best-case scenario each time.

Having noted the above, the best performing tools for use among children in LAMICs (judging by quality of evidence for psychometric properties) were BRIEF for executive function (EF) and VABS for adaptive function (AF). For EFs, the main weakness of the close contenders to the BRIEF- the legacy instruments/tasks of WCST, GNG and ROCF- was their lack of comprehensiveness in assessing ALL domains of EF as currently widely accepted. The BRIEF (in all its various versions) showed a respectable coverage of almost all the domains of validity that were assessed with positive results in a good number of them: there was high quality evidence for ‘sufficient’ internal consistency (seen in 7 studies across all versions of BRIEFs); ‘sufficient’ construct validity (mostly convergent and some discriminant validity) whose quality of evidence ranged from low to high (seen in 5 studies across 3 out of 4 versions of BRIEFs); and moderate to low quality evidence for ‘sufficient’ construct validity (mostly convergent validity) for BRIEF-parents and BRIEF-teachers versions respectively. However evidence for good content validity, structural validity and cross-cultural validity of the BRIEFs among children in LAMIC was lacking: there was very low quality evidence for one 'indeterminate' rating of content validity (parent version) and one 'sufficient' content validity (pre-school version) seen in 2 studies; low to very low quality evidence for mostly 'indeterminate' structural validity seen in 6 studies across all versions of BRIEF (except for one study showing low quality evidence of 'sufficient' structural validity of BRIEF-teacher version); and low quality evidence for 'indeterminate' cross-cultural validity (seen in 2 studies but only for 2 versions- parent and pre-school).

Having noted the above, an honourable mention is the TEXI/CHEXI tool, which although a relatively new tool with fewer studies conducted in LAMICs showed remarkably good results with decent quality of evidence: high quality evidence for ‘sufficient’ internal consistency (seen in 2 studies across all versions), low to high quality evidence for ‘sufficient’ construct validity (seen in 2 studies across all versions), moderate quality evidence for ‘sufficient’ structural validity (2 studies across all versions) and low quality evidence for ‘sufficient’ reliability (seen in 1 study for TEXI). The EFICA tool- developed in Brazil- was also noteworthy because although there was only 1 study evaluating it, that single study produced high quality evidence of sufficient structural validity, internal consistency and (for the parent version only) construct validity (discriminant validity) across its 2 versions- parent and teachers. Both of these other tools though did not have good evidence for content validity in their reported studies. Glaringly missing in all EF instruments though was any tool that explicitly assesses the domain of meta-cognition, but this is probably due to the continuing evolution and refinement of the concept even in Western thought.

For adaptive functioning (AF), VABS was the most validated as earlier mentioned. The main weakness of the closest contender, the CFIRS, was the fact that outside of its home country of Indonesia it had not been validated in a cross-cultural setting, which is understandable given its relatively newer status. As has been noted elsewhere in the literature, adaptive behaviour and expectations of daily living skills and socialization are culturally dependent, hence local input into the appropriate types of questions to be asked in adaptive behavior assessment is crucial (Semrud-Clikeman et al., 2017). The VABS (in all its editions) also showed a decent coverage of almost all domains of validity evaluated with positive results: high quality evidence of sufficient internal consistency (seen in 2 studies) and construct validity (backed by 2 studies); low quality evidence for ‘sufficient’ reliability (backed by 2 studies); and very low-quality evidence for sufficient content validity (backed by an ‘inadequate’ adaptation study and an ‘inadequate’ development study). However, results for cross-cultural validity and structural validity were not the best: there was low quality evidence for an ‘indeterminate’ rating of cross culturally validity (2 studies); and very low-quality evidence of insufficient structural validity (backed by 1 study).

Evidence from High-Income Countries

Evidence from HICs indicate that assessments of EF and adaptive behavior form a very significant proportion of all assessments performed by clinical psychologists and neuropsychologists with approximately 50% of clinical psychologists practicing in this area (Camara et al., 2000) which indicates its importance. In this nationally representative survey of 1002 neuropsychologists and 1500 clinical psychologists, the most frequently used EF instruments were such legacy tests as Trail making test (TMT) and WCST while that for adaptive behaviour was the VABS (Camara et al., 2000), which was confirmed by a broader-based and more recent follow up survey among 2004 north American neuropsychologists (Rabin et al., 2005), with the ROCF and NEPSY also featuring more prominently in this survey. To provide some context though, it is worth noting that these large surveys are rather dated as the data were collected over 20 years ago, hence would not have included many of the instruments included in this review which were developed in the last 20 years.

Interestingly, in a similar recently published systematic review of EF performance-based instruments used within the context of Occupational therapy (which incidentally also used the COSMIN manual for assessing risk of bias, and which was not limited to LMICs), the following instruments, none of which have been adapted for use in LAMICs hence failed to show up in our review, were all found to have a “low” GRADE rating of quality of evidence: the Behavioural Assessment of the Dysexecutive Syndrome for Children, Children’s Cooking Task, Children’s Kitchen Task Assessment, Do-Eat, and Preschool Executive Task Assessment (Gomez et al., 2021). Another such systematic review performed by researchers from Italy (also using COSMIN, and also not limited to LAMICs, but considering a much limited array of databases compared to this present study) also identified 19 EF tools which scored poorly on their methodological rigour, but also found the BRIEF (2 of its variants) to have the best risk of bias rating, noting that it particularly did well in internal consistency, but reported inadequate indices for reliability and other measurement properties (Berardi et al., 2021). This study also noted the relatively good performance of another test not included in our review (for lack of adaptation in LMIC)- the Lion Game (Van de Weijer-Bergsma et al., 2014) developed for study of visuo-spatial working memory in Dutch. Finally another paper reviewing EF tools used in Brazil was also found (Guerra et al., 2020) however no critical appraisal was performed in this paper. These findings from the wider literature therefore largely confirm the findings in this systematic review.

Cross Cultural Considerations

Since one of the desired outcomes of this paper is to be able to make recommendations of assessment tools for use in LAMICs, an important consideration for the authors was which of these instruments had adequate content validity and cross-cultural validity in LAMICs. The importance of adequate cultural adaptation or development of instruments in accurately measuring such psychological constructs cannot be overstated. This very point was made by Geisinger in his seminal paper on cross-cultural normative assessment (Geisinger, 1994) when he highlighted the importance of going beyond simple language translation and considering cultural backgrounds during adaptation of instruments among sub-populations in the US. Concept formation is goal-directed. Which means, if the socio-cultural milieu within which a child is placed does not place certain types of goals before him/her, he/she will not form concepts to solve the problems standing as obstacles to that goal, a point echoed by scholars across the decades from Vygotsky first in the 1930’s (Vygotsky, 1980) through to Ardila (1995), and Nell (1999) more recently while discussing the work of Luria (1973). For a word to have any meaningful meaning, it must represent a concept that is widely shared with the speakers to whom the communication is directed. Or put in the words of Vygotsky: “This is why certain thoughts cannot be communicated to children even if they are familiar with the necessary words” (Vygotsky, 1980). Any task or set of questions placed before this child (or caregiver) that has not been rigorously determined to be within the conceptual understanding of said child, therefore, is bound to fail in assessing what it purports to assess without a proper rigorous cultural (and conceptual) adaptation. This type of “cultural specificity” of conceptualization has been well documented in the literature in studies from across the world, for example by Venter in the context of the acquisition of Piagetian tasks in cognitive development (Venter, 2000).

Unsurprisingly, the EF instruments that performed best in cross-cultural settings were legacy performance-based tools such as the GNG (moderate quality evidence of sufficient cross-cultural validity) and Tower Test (low quality evidence of sufficient cross-cultural validity), with the one interview-based instrument that did reasonably well being the BASC (moderate quality evidence of sufficient cross-cultural validity). Of these, the only instrument for which a formal adaptation was reported was the GNG, which showed low quality evidence for 'inconsistent' rating of content validity. Further, it is reported elsewhere in the literature from LAMICs that a version of the GNG (the knock tap test) should be used with extreme caution because of poor reliability (Semrud-Clikeman et al., 2017).

An important implication of these finding is that potentially performance-based tasks/tools are perhaps most easily translatable across different cross-cultural contexts to produce consistent results across different population groups. An obvious shortcoming of this though is the issue of most of these existing (legacy) tasks lacking comprehensiveness in terms of our current understanding of the various domains of EF. A potential solution to this dilemma might be the development of more comprehensive, culturally appropriate performance-based measures of EF which tap into almost all domains of EF. These could either be universally adaptable age-appropriate tasks- such as cooking or running errands- or more regionally peculiar tasks- such as fetching water, playing of particular local games or occupational activities like farming- if they could potentially be calibrated to assess all domains of EF and AF as we currently understand them.

Tool Recommendations