Abstract

Despite the existence of studies addressing the historical development of digital platforms, none of them has yet drawn a coherent and comprehensive interpretation of the emergence of scientific digital platforms. The previous literature (i) focuses on specific scientific practices; (ii) does not reach far enough back into the past; (iii) does not cover all relevant groups of social actors; (iv) does not propose a taxonomy for scientific digital platforms; and (v) does not provide a definition for scientific digital platforms. We propose in this paper a long-term view (from 1990 onwards), allowing us to identify the participation of distinct groups of social actors—within State, Market and Science subsystems—in the process of science platformization. Dialoguing with the most up-to-date literature, we broaden our understanding of the ongoing process of platformization of the research life cycle, proposing a taxonomy and a definition for scientific digital platforms. The evidence provided throughout the paper unveils that (i) the changes (caused by platformization) in each of the phases of the research cycle are not at all linear and are not happening simultaneously; (ii) actors from different subsystem played important roles in the platformization of science; and, (iii) specific categories of platforms have consolidated themselves as infrastructures and certain scientific infrastructures have been platformed, although this varies by category.

Similar content being viewed by others

Introduction

With the emergence of the World Wide Web (WWW) in the mid- and late 1980s, a fragmentation process of traditional academic infrastructures began. This process is twofold: while on the one hand, we can witness the obsolescence of traditional structures (e.g., printed journals); on the other, new online entities—boutique digital libraries, institutional repositories, content management platforms, open protocols, metadata aggregation (Plantin et al. 2018)—emerge, fighting for their own space. Despite the existence of studies addressing the historical development of digital platforms (Acs et al. 2021; Langlois 2012; Helmond 2015; Tabarés 2021; Helmond et al. 2019), none of them has yet drawn a coherent and comprehensive interpretation of the emergence of scientific platforms.

Plantin et al. (2018) account for the multiplicity of societal groups that seek to re-integrate some aspects of the fragmented scientific infrastructure caused by the emergence of the web. Mirowski (2018) offered a survey on science-focused private platforms and concluded that "Science 2.0" is a neoliberal project that seeks to profit from phases of the scientific process leading to decreasing autonomy of researchers. He also provided a "landscape of science platforms", crossing phases of scientific activities (getting started; preparatory; research protocols; writeup; publication; and post-publication) with users (normal scientist; funders; competing scientist; spectator scientist; outsider citizens; and, kibitzer), illustrating with some representative platforms. Both Mirowski (2018) and Plantin et al. (2018) did not present a chronological narrative or a relational ordering of their historical trajectories. In that regard, Jordan (2019) outlined the historical expansion of science platforms, but only for a specific class: academic social networks (ASN). She presented a timeline from 2002 to 2017, in which it is possible to observe the evolution of ASN and general-purpose social networks. Mirowski (2018) also did not separate in his analysis general-purpose social networks (such as Twitter and LinkedIn) from exclusive/dedicated science platforms.

Those previous studies on platforming approach the development of digital platforms from a relatively short-term perspective, narrowly emphasizing the role of the market. They neither propose a taxonomy for scientific digital platforms nor provide a definition for scientific digital platforms. Advancing on that ground, we propose a longer-term view (from 1990 onwards), which allows us to identify the participation of other actors in different subsystems in the process of science platformization, with possible impacts on the scientific infrastructure. This way, dialoguing with the most up-to-date literature, we contribute to broadening the understanding of the ongoing process of platformization of research life cycle, answering the following research question: what are the ways in which the scientific phases have been platformed?

We organize the article into five sections, including this introduction. In the next section, we lay out our conceptual background and the method used. We follow Yin's (2003) guidance and structure our research strategy to develop an exploratory study. In section 3, we start our exploratory case study exposing emblematic scientific digital platforms developed within three "great subsystems of modernity": State, Market and Science (Nowotny, Scott, and Gibbons 2003). In this context, three large groups of social actors from each one of the subsystems appear crucial to us: public administration, private sector, and, research communities, respectively. In section 4, we propose a discussion on our findings and suggest systematizing figures of the exploratory study presented. The evidence throughout the paper unveils important conclusions: (i) the changes (caused by platformization) in each of the phases of the research cycle are not at all linear and are not happening simultaneously; (ii) actors from different subsystem played important roles in the platformization of science; and, (iii) a large number of platforms that become infrastructures and infrastructures that have become platformed, although this does not invariably occur. Finally, in the last section, we raise pertinent questions for future inquiries.

Conceptual Background and Method

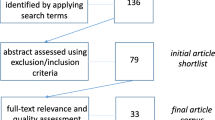

Our exploratory case study—whose goal is “to develop pertinent hypotheses and propositions for further inquiry” (Yin 2003: 17)—departs from four conceptual dimensions, which appear particularly relevant in the context of our study.

The first is that the research process “includes the key steps in the research life cycle” (Dai et al. 2018: 08). The European Comission (2016) proposition of a-five-phase process—i.e., (i) conceptualization; (ii) data-gathering; (iii) analysis; (iv) publication; and, (v) review—was advanced by Dai, Shin, and Smith (2018) who included both "planning" and "funding" activities in the conceptualization phase. They also included "processes of outreach" and "impact assessment" as a sixth phase of the research process.

The second dimension recognizes that platformization accelerates. Digital platforms have been penetrating in different economic sectors and spheres of life (van Dijck et al. 2018; Poell et al. 2019). They are defined as organizational models (Gawer 2021; Kenney and Zysman 2020) or (virtual) spaces “where social and economic interactions are mediated online, often by apps” (Acs et al. 2021: 1635). Digital platforms are "governing systems" (Schwarz 2017) that exhibit regulatory power over the markets/social arenas they mediate.

Our third conceptual dimension states that platformization is a multiagent phenomenon. The subsystems that lead the platformization processes are diverse, not restricted to the Market (Mansell and Steinmueller 2020). Corporations have developed and marketed digital platforms. These proprietary platforms exhibit institutional logics that combine previously separate logics (such as the logic of markets and the logic of the regulatory state) (Frenken and Fuenfschilling 2020). Governments and their agencies have developed platforms for the delivery of public services (Thompson and Venters 2021; Mukhopadhyay et al. 2019). International associations (Otto and Jarke 2019) and cooperatives also have digital platforms, which exemplifies how different actors can develop and operate digital platforms.

Finally, the fourth conceptual dimension highlights that digital platforms and socio-economic infrastructures are merging. Once platforms achieve properties such as ubiquity, invisibility and essentiality, they generate what has been called "infrastructuralization of platforms" (Plantin et al. 2018). Pari passu, motivated by the control of data flows, intermediary platforms seek to expand upstream and downstream, occupying spaces of often public infrastructure platforms, causing what can be called the "platformization of infrastructures" (Plantin et al. 2018; van Dijck 2020).

Considering the conceptual background above, the phases of the scientific process may go through platforming processes led by different social actors, with possible impacts on the scientific infrastructure. From the above follows our research question: what are the ways in which the scientific phases have been "platformed"? To guide our investigation, we have broken down the main question into subsidiary questions: what is a scientific platform? What are the main types of scientific digital platforms? What are the degrees of integration of the main types of scientific digital platforms into the research cycle? To answer the previous questions, we first define scientific digital platforms as the digital governing systems of virtual spaces that leverage network effects towards one (or more) phase(s) of the scientific research processFootnote 1. However, there are differences among this large population set and we propose a taxonomy of scientific platforms summarized in Table 1. As per Hodgson (2019: 04), taxonomic definitions “concern populations of social phenomena that exhibit some degree of commonality and some degree of diversity” and they “identify the minimum number of properties that are sufficient to demarcate one group of entities from all other entities”.

We characterize each scientific platform category resorting to emblematic scientific platforms (Table 1). Beforehand it is necessary to make a caveat: we do not aim to make an inventory of all scientific digital platforms developed from 1990 to 2020; despite the irruption (and "death") of many scientific digital platforms in this 30-year life span, making a census survey of them all is out of the scope of this paper. Instead, according to specific criteria we select digital platforms that reveal typifying features. The cases were selected through a purposeful sampling guided by four criteria: (i) historical relevance, (ii) emergence within the WWW context from 1990 on; (iii) availability of public information for analysis; and (iv) diversity of platform groups in relation to specific social subsystems. The next section discusses each of the types in detail.

It is worth noting how each of the groups of scientific platforms is associated with one of the three "great subsystems of modernity" (Nowotny et al. 2003): State, Market and Science. The associations are not exclusive, but they reflect historical relevance. For example, there are archives developed by Market and State actors, but the role of the scientific community (Science subsystem) was and continues to be the most relevant in the development and operation of this group of scientific platforms.

As an exploratory study, we resorted to documents from companies, such as corporation brochures; public platform documents, such as government reports, white papers and awards; news and letters published in specialized journals for the communication of the scientific community (e.g., Nature). For comprehensibility reasons, Table 3 (Annex) presents a complete list of documents accessed.

The Platformization of Research Phases: An Exploratory Analysis

Two steps were essential to enable the emergence of digital platforms: the creation of the physical structure of the network of networks, also known as the Internet; and the definition of a standard for navigation and guidance on the internet via common protocols, the WWW (Mansell and Steinmueller 2020). In its origin, before all commercial restrictions on its use were lifted in 1995, the Internet was used mainly by academic communities (Vickery 1999). Notwithstanding that, other actors, including funding bodies and profit-seeking companies, were also responsible for developing new technological trajectories. Our analysis revolves around three social subsystems for analytical simplicity: Science (e.g., research community), State (e.g., public administration), and Market (e.g., private sector).

Science Subsystem

Archives and Repositories: Quick and Dirty Access

When Tim Berners-Lee developed the WWW in the early 1990s, within the Conseil Européen pour la Recherche Nucléaire (CERN), the aim was to facilitate scientific communication and the dissemination of scientific research (Tim Berners-Lee and Fischetti 2000). It quickly disseminated and allowed the upsurge of new artifacts. Therefore, it has dramatically reshaped the way scientific production could be stored and shared, allowing research communities to create their web platforms. That was the case of Paul Ginsparg, who wanted to develop an online academic repository. He envisioned “an expedient hack, a quick-and-dirty email-transponder written in csh to provide short-term access to electronic versions of preprints” (Ginsparg 2011: 2620) and then came up with ArXivFootnote 2 in 1991. Ginsparg's "expedient hack" became, in 2020, a central platform for scientific dissemination of results, registering 4.2 million active users, storing 1.8 million articles, and managing 1.89 billion downloads (ArXiv 2020).

ArXiv’s success is due to a set of characteristics. Perhaps the main one is the balance between the speed of dissemination and the maintenance of the quality of the material admitted to the platform, which are essential shared values for the scientific communities. The voluntary curatorship of scientists who assess whether the submitted material reaches a minimum degree of validity and interest to the community achieves this balance. Given the information overload (ArXiv received 16,000 articles per month in 2020), the platform complements the curatorial work with machine learning systems that warn of possible items that deserve special attention (Ginsparg 2011: 2623).

In addition to the balance mentioned, the platform's governance also stands out: it is maintained by contributions from user institutions, and Cornell University Library conducts its maintenance and operation. Important decisions are made in committees that involve both the maintainers and the user community, following the participatory ethos of the community that developed and used the platform (Ginsparg 2011). ArXiv has always valued openness and transparency and provides application program interfaces (API) so that third parties can use their data responsibly. In addition to the open data policy, the platform promotes ArXivLabs, a window through which the community proposes innovations as additional features for the platform.

Since its inception, the platform has paved the way for innovations attributed to the users themselves, such as decentralized data collection. This pioneering type of crowdsourcing “foreshadowed the 'interactive web', insofar as it provided a rudimentary framework for users to deposit content” (Ginsparg 2011: 146).

ArXiv was disruptive, and it became the model for many followers. For instance, in 1993, NetEc—a website to improve the communication of research in Economics—was created, and in 1997, it became RePEcFootnote 3, a decentralized repository of scientific articles in that field. Another example is the “e-biomed” initiative, which looked for ways to update the dissemination of results in life sciences. The results of “e-biomed” are now PubMedCentral and PLoS, essential centers of open science (Ginsparg 2011). Other initiatives were flourishing worldwide, as the development of the network for geological and environment data (Pangea) in 1993 in Germany, conceived to be a data archive and a scientific tool (Diepenbroek et al. 2002).

During the 1990s, the scientific community had used the WWW to bypass traditional means of publication, using platforms such as ArXiv. In the first decade of the 2000s, part of the scientific community pressured the private institutions that dominated the cycle of publication and distribution of scientific literature: the publishers. They did so by promoting open science, defined as “a scientific culture that is characterized by its openness. Scientists share results almost immediately and with a vast audience” (Bartling and Friesike 2014: 10). Traditional journals' practice of charging for access to scientific results became a natural enemy of open science.

The 2000s testified the growth of this movementFootnote 4. In 2002 came the Budapest Open Access Initiative (BOAI), and the declarations of Berlin (2003) and Bethesda (2003) soon followed. According to Suber (2012), this “BBB [Budapest, Berlin and Bethesda] definition of open access” was devoted to removing price tags and permission barriers (copyrights) from peer-reviewed research. These and other initiatives focused on the wide dissemination of scientific results constitute what Fecher and Friesike (2014) call the “democratic school” of open science. This stream within open science is concerned with access to knowledge and advocates that “any research product should be freely available […] everyone should have the equal right to access knowledge, especially when it is state-funded” (Fecher and Friesike 2014: 25).

The technical possibility of opening science through the web 2.0 is responsible for the growing momentum of open science in the 2000sFootnote 5. The BOAI highlighted how the Internet would allow the free distribution of scientific information. Bartling and Friesike (2014) find that any open science approach is closely linked to the technological developments of the Internet. These developments make up a system of intertwined components (e.g., unique researchers' IDs, platforms, altmetrics, social networking) that make open science viable when established. From the global Directory of Open Access Repositories (OpenDOAR), founded in 2005, it is possible to see the increase from just 78 directories/archives in December 2005 to 1,322 in December 2009 (Figure 3, Annex).

On the agenda, the democratic open science school promotes both open data and open access. Open data has a more researcher-centered connotation, as it intends to enable reproducibility, leverage the confidence of scientific results, and optimize research efforts. On the other hand, open access is less researcher-centric and results in the view of scientific knowledge as a universal right (Fecher and Friesike 2014). The 2000–2010 decade saw a multiplication of digital platforms to enable open data and open access.

Archives and repositories’ platforms connect knowledge producers with knowledge consumers. Like mundane sharing economy platforms, they thrive on network effects: platforms with great articles will be more attractive to the knowledge seeker. However, due to their non-commercial nature, these repositories often follow the guidance of the Open Archives Initiative (OAI) Protocol for Metadata Harvesting (PMH), which makes them interoperable. By including this protocol in the architecture and governance of the platform, repositories allow users to use a single search tool to find the information they are looking for (Suber 2012: 56). This is an essential difference between digital platforms emerging from the research community and commercial digital platforms: while the former seek to reduce the balkanization of knowledge and reduce search frictions, the latter only seek to do this within their closed gardens, where monetization strategies can be applied. It follows from there that, in a commercial-dominated platform universe, reducing the fragmentation of scientific knowledge can only occur if there is only one dominant platform, i.e., a monopoly. Interoperability is antagonistic to monetization.

Citizen Science Platforms: Extending the Republic of Science

Digitally enabled citizen science is defined as “the emerging practice of using digital technologies to crowdsource information about natural phenomena” (Wynn 2017: 02). This definition implicitly emphasizes the productivity-enhancing role of scientific activity based on the engagement of ordinary citizens. However, according to Sauermann et al. (2020), the "productivity view" is only half the history of citizen science. Other authors emphasize the "democratization view" of citizen empowerment through participation in generating scientific knowledge. This second view, particularly, leads Fecher and Friesike (2014) to place citizen science within the larger trend of the open science’s public school, in which “Web 2.0 technologies allow scientists, on the one hand, to open up the research process and, on the other, to prepare the product of their research for interested non-experts.”

Citizen science recent developments are due to the new technical possibilities of web 2.0, the use of smartphones, and the diffusion of digital platforms (Sauermann et al. 2020; Kullenberg and Kasperowski 2016; Lemmens et al. 2021). Kullenberg and Kasperowski (2016) emphasize that, although the voluntary participation of lay people in science is not new, it is no longer invisible due to the digital records afforded by digital platforms. They identify a growing trend in the number of scientific articles on citizen science around 2010, which coincides with an increase in the number of citizen science projectsFootnote 6 hosted by dedicated digital platforms. Scientific articles that resulted from projects hosted on digital platforms also grow in number: “citizens can be involved in new instances of the scientific process, and in much larger numbers due to the logistical affordances of digital platforms” (Kullenberg and Kasperowski 2016: 13). Citizen science digital platforms exhibit five characteristics according to Liu et al. (2021: 440):

-

i.

present active citizen science projects and activities;

-

ii.

display citizen science data and information;

-

iii.

provide overall guidelines and tools that can be used to support citizen science projects and activities in general;

-

iv.

present good practice examples and lessons learned; and,

-

v.

offer relevant scientific outcomes for people who are involved or interested in citizen science.

The first digital platform for citizen science (and the biggest one, according to Simpson, Page, and De Roure (2014) and Liu et al. (2021)) is Zooniverse (formerly Galaxy Zoo), launched in 2007 (Kullenberg and Kasperowski 2016). Zooniverse, hosted and managed by the University of Oxford, the Adler Planetarium, and the University of Minnesota, evolved from Galaxy Zoo, a project to crowdsource the classification of galaxies images. At the time of writing, the platform boastsFootnote 7 622 million classifications, filled by approximately 2.3 million volunteers. The projects include space to discuss and socialize, and some volunteers are granted specific roles and privileges (Tinati et al. 2015) whose purpose is to encourage participants further to fulfill their tasks (Kraut and Resnick 2011).

Zooniverse allows scientists and citizen scientists to propose projects to the crowd. Then it displays projects and lets volunteers engage in the classification efforts. It also connects volunteers with project managers. In a sense, it matches science project needs with crowd volunteers. Among the results, Zooniverse projects already classified “more than a million galaxies, the discovery of nearly a hundred exoplanet candidates, the recovery of lost fragments of ancient poetry, and the classification of more than 18,000 thousand wildebeest in images from motion sensitive cameras in the Serengeti” (Simpson, Page, and De Roure 2014: 1049).

Citizen science platforms are thus important ways to democratize science and publicly promote scientific data and information and facilitate knowledge transfer to wider audiences (Wagenknecht et al. 2021), which are typical values of open scientific communities. Moreover, they may “facilitate mutual learning and multi-stakeholder collaboration, get inspiration, integrate existing citizen science activities, develop new citizen science initiatives and standards, and create social impact in science and society” (Liu et al. 2021: 441).

State Subsystem

National e-Portfolios: Ordering the Ivory Tower

Government platformization is directly linked to the development of systems for scientific and technical information. This field of information science which intersects with science and technology (S&T) policy, has evolved with UNESCO's post-war efforts to create an International Scientific Information System (called UNISIST) (UNESCO 1971). It was a worldwide bibliographic system that, for various reasons, has not materialized (Coblans 1970). Notwithstanding that failure, UNESCO successfully promoted scientific and technical information systems worldwide (Saracevid 1980). In the 1970s, for example, several developing countries created their own S&T information offices, presuming a positive relationship between S&T with economic development (Avgerou 1993).

While most developing countries designed national or sectorial scientific and technical information systems, developed countries had organic specific-subject ones (e.g., chemistry systems, physics systems). Thus, different actors were involved in the process: in developing countries, the State was the main responsible for developing and operating the systems; in developed countries, they were run mainly by research communities (Saracevid 1980: 224).

Thus, in the 1990s, governments in developing (and in a few developed) countries took advantage of new ICT-based technologies to improve their scientific and technical information systems. The euphoria was such that “[in many less developed countries], ‘Computer’ seems to be in many writings a magic word, the expectations somewhat naive and unrealistic” (Saracevid 1980: 241). State platforms were generally e-portfolios that standardized researchers' outputs. By centralizing and standardizing the scientific production of researchers, e-portfolios systematized the inputs that informed funding allocation decisions. Some have also become, both de facto and de jure, the standard for disseminating scientific production. According to Saracevid (1980), scientific and technical information systems had different users: scientists and engineers; business managers and industrialists; administrators and policymakers; extension workers; semi-educated persons and illiterates.

Lattes PlatformFootnote 8 is an example resulting from scientific and technical information system developments in Brazil. It was developed in a fruitful collaborative network formed by government agencies, public universities, and the private sector. The main goal with Lattes Platform was the standardization of Brazilian researchers' curricula and the provision of unique research IDs (before ORCID) This standardization aimed at constructing a database, making it possible to find specialists and provide statistics on the distribution of scientific research countrywide (Lane 2010). Since it was launched, Lattes Platform has been increasing its scope and its base of users. Brazilian government implemented features to spur users' connectivity based on the stimuli to join all sub platforms. For instance, having updated information in Lattes Curricula is a precondition for accessing public funding and scientific research. Lattes Curricula homepage provides an outlet that allows users to monitor other scholars' activities (main researches, publications, filiation) (Chiarini and Silva Neto 2022). Network effects are present in the Lattes Platform. There are one-sided effects: the more researchers use the platform, the greater the value perceived by an individual researcher of participating in the network. There are also cross-network effects: the more researchers make their information available on the Lattes platform, the more institutions require it as a document proving scientific production. The more institutions that use the Lattes curriculum, the greater the incentive for a researcher to update theirs (Chiarini and Silva Neto 2022).

Cyberinfrastructure Networks: Power to the Grid

In the early 2000s, developed countries invested in large digital infrastructure projects for science. In Europe, the UK took the lead: John Taylor, then director-general of Research Councils at the Office of Science and Technology (OST), led a five-year, £ 250 million e-Science program. In the US, the Atkins Report (2003) became the landmark of the cyber-infrastructure promoted by the US government through funding from the National Science Foundation (NSF), leading to the establishment of the Office of Advanced Cyberinfrastructure (OAC)Footnote 9 (Jankowski 2007: 551). As OAC’s name reveals, this initiative (as well as its British counterpart) was focused on infrastructure for science in the digital era, as Branscomb (1992) had advertised a decade before: investment in public goods as infrastructure would be an S&T policy aimed at "enabling innovation" instead of "picking winners."

The British program supported “the e-Science pilot projects of the different Research Councils and work[ed] with industry in developing robust, “industrial strength” generic Grid middleware” (Hey and Trefethen 2002: 1019). This short quotation reveals that the program was (i) infrastructure-focused, (ii) experimental, (iii) interdisciplinarity (iv) interactive with the private sector.

Grid computing had its name inspired by the traditional infrastructure of electric energy: computational services offered on-demand, as well as electric energy. To achieve this vision of e-utility, it was necessary to put in place the Grid. In its genesis, there was the idea of using idle cycles of distributed computing, i.e., computers with processing power would connect to a network and operate on demand.Footnote 10 Matching users with idle resources seem to be a direct ancestor of what became the sharing economy platform’s principles (Frenken and Schor 2017). However, e-Science scope was much broader than the pooling of resources via aggregation systems, encompassing: "international collaboration among researchers; increasing use of high-speed interconnected computers, applying Grid architecture; visualization of data; development of Internet-based tools and procedures; construction of virtual organizational structures for conducting research; electronic distribution and publication of findings" (Jankowski 2007: 552). Platforms were among the middleware layer, but the main goal was more ambitious: “Our emphasis is thus on Grid middleware that enables dynamic interoperability and virtualization of IT systems, rather than Grid middleware to connect high-performance computing systems, to exploit idle computing cycles or to do ‘big science’ applications” (Hey and Trefethen 2003). As we will see, this goal would have to wait for the next generation of State science platforms.

It is worth noting a representative case briefly. The NSF launched a call in 2000 for what would be the seed of the TeraGrid project. The USD 51 million awards directed to the MPC Corporation aimed to develop the Terascale Computing System: “The Pittsburgh Supercomputer Center (PSC), a jointly supported venture of The University of Pittsburgh and Carnegie Mellon University acting through the MPC Corporation, in collaboration with Westinghouse Electric Corporation, will put in place at 6 peak teraflop computing system for use by U.S. researchers in all science and engineering disciplines.”Footnote 11 In 2001, the project gained scale: approximately USD 80 million were allocated to it, through two awards, to develop what was called TeraGrid: centered at the University of Illinois, in partnership with four more national research centers and with IBM, Intel, Myrinet, Qwest, Oracle and SUN, the NSF expected to see a distributed terascale facility “with an aggregate of 11.6 TF of computing capability”Footnote 12 at the end of the project. The NSF granted seven more awards until 2005, in a new contribution of USD 80 million. In 2011, the project was replaced by XSEDE: eXtreme Science and Engineering Discovery Environment. XSEDE's first awardFootnote 13, worth USD 121 million, provided for the development and operation of the grid between 2011 and 2016. The secondFootnote 14, at the cost of another USD 110 million, financed the structure for another five years, until 2021. This case demonstrates the government’s leadership, represented by the NSF, in setting the goal and funding via awards to develop a broad grid computing infrastructure.

The focus on virtualization of IT systems directed the e-Science project to the utility model or servitization of computing. Servitization was also a central goal of the companies usually involved in funded projects: IBM, Microsoft, Sun (Hey and Trefethen 2002). It was not by chance that the leader of the e-Science project, Tony Hey, was hired by Microsoft Research after leaving command and that the head of the U.K. OST John Taylor had worked at Hewlett-Packard. This "revolving door" movement facilitated cooperation between the government and the private sector. The millions of pounds and dollars invested in e-Science and Cyberinfrastructure projects acted as "public procurement," in which private companies responsible for the development of grid systems evolved into servitization years later (PaaS, SaaS, IaaS). It is not surprising, therefore, that “cloud computing can be seen as a natural next step from the grid or utility model” (Allan 2009: 135). Protected from the risk by public money, Big Techs had "intensive training" on providing IT infrastructure during the 2000s.

Science Gateways: The Science App Store

As e-science projects advanced, it became more apparent that grid middleware alone would not be enough to allow scientific research to enter the digital era. There was too much focus on middleware, and there was a lack of focus on end applications for users (scientists, researchers, scholars) who could not always customize digital toolsFootnote 15. Around 2010, virtual research environments (VRE) projects began to multiply to fill this gap, since they could “lower barriers by hiding the complexity of the underlying digital research infrastructure and simplifying access to best-practice tools, data and resources, thereby democratizing their usage” (Barker et al. 2019: 243). They were called science gateways in the USA.

Science gateways are “digital platforms that facilitate the use of complex research and computing resources” (Parsons et al. 2020: 491). They focus “on end-to-end solutions for a single domain” (Madduri et al. 2015: 02), so even if there are multidisciplinary gateways, the majority are discipline-based. Specifying a gateway concerning the needs of a specific research community (e.g., GHubFootnote 16, the science gateway for Glaciology) “also differentiates between science gateways and the generic cyberinfrastructure on which they build” (Barker et al. 2019: 241). As of this writing, the Science Gateway CatalogFootnote 17 of the Science Gateway Community Institute (SGCI) (funded by the National Science Foundation) registers 531 gateways.

Over the past decade, many governments have created programs to fund the development of science gateways. Allan (2009) presents a list of projects that, in the late 2000s, moved from e-science to science gateways and VRE. Barker et al. (2019) mention CANARIE, Canada's government-funded initiative; SGCI, founded in 2016 by NSF with initial funding of US$15 million; SCI-BUS, financed by the Horizon 2020 program of the European Union; and National eResearch Collaboration Tools and Resources (Nectar), funded by the Australian Government (2011–2017).

Although we relate science gateways with the State subsystem, it is essential to emphasize that the scientific community has a central role in the subsequent development of these platforms: in the design of the platform, providing input on its idiosyncratic needs; in the continuous construction of new platform features when it is open source and therefore extensible (Allan 2009: 12); in the maintenance of the platform itself, which demands new jobs and career-paths for facilitators, research software engineers, and science gateway creators (Parsons et al. 2020), generation of content that is shared by it, among other functions. It is critical to highlight the government projects' initiative, funding, and institutionality given to science gateways.

The contribution of the NSF was fundamental for the development of HUBzero, “an open-source software platform for building powerful websites that host analytical tools, publish data, share resources, collaborate and build communities in a single web-based ecosystem.”Footnote 18 In other words, HUBzero is a customizable platform for developing other platforms. Its intention to facilitate “Gateway as a Service” was responsible for bringing the digital scientific workflow to the masses (McLennan et al. 2015). Funded by the NSF to create the Nanotechnology Science Gateway (NanoHUB), the project successfully created a customizable framework that houses more than 25 other active science gateways. The science gateways built on the HUBzero framework register a flow of more than 2 million users—serving over 280 thousand users annually distributed over 172 countries (Madhavan et al. 2013)—and include features ranging from the conceptualization of research projects to the publication of data sets, in addition to, of course, accessing high-performance computational resources on the grid. In addition to being free to use, the platform is open and allows for community additions, with codes made available via GitHub.

Market Subsystem

Publishers: Knowledge Oligopolies?

Academic publishers own the most traditional scientific platform. Controllers and disseminators of scientific journals were established about 350 years ago as bridges between writers and readers. In the late 1990s, they moved into a dual process of market consolidation and digitization. Larivière et al. (2015) demonstrated that from 2000 onwards, journal acquisitions increased, and five commercial houses—Reed-Elsevier, Springer, Wiley-Blackwell, SAGE Publications, and Taylor & Francis—became an oligopoly (Larivière et al. 2015).

In some scientific disciplines, such as psychology, the Big 5 controls more than 70% of the journals present in WoS. Their profit margin exceeded that of industries such as pharmaceuticals, and operating profits grew nearly sixfold (for Scientific, Technical & Medical division of Reed-Elsevier) between 1991 and 2013 (Larivière et al. 2015). It should be noted how, in the 2000s, publishers integrated other platforms with journals. Hence the launch of Scopus by Elsevier in 2004, competing with the WoS index. Today, Reed-Elsevier.

The advancement of the digital age has led publishers to reposition themselves. Elsevier's corporate brochureFootnote 19 states that “while the proliferation of information brings opportunity, it also brings challenges.” For this reason, the company calls itself “a global leader in information and analytics.” This broadening of scope relates to the expansion of scientific knowledge flows in the digital age. As pointed out by Delfanti (2021: 08), “the boundaries between ‘gray’ and ‘formal’ scholarly objects blur” due to “the technological affordances of digital media, which can publish any number of objects and are not limited by the constraints that limit journals, with their periodical schedules and cumbersome peer review processes.”

Since academic publishers could not contain the leakage of scientific knowledge flows, the solution for their business model is to control as many platforms (and as many scientific processes phases) as possible that position themselves as curators and distributors of these flows:

In 2016, the owner of Web of Science spun off that unit to purchase by a private equity firm, where it was renamed ‘Clarivate Analytics.' Then, in 2017, Clarivate bought Publons, with the justification that it would now be able to sell science funders and publishers ‘new ways of locating peer reviewers, finding, screening and contacting them’ […] Elsevier first purchased Mendeley (a Facebook-style sharing platform) in 2016, then followed that by swallowing the Social Science Research Network, a pre-print service with strong representation in the social sciences (Pike, 2016). In 2017 it purchased Berkeley Economic Press, as well as Hivebench and Pure” (Mirowski 2018: 197).

That occurred without the decentralization of the traditional peer-reviewed journals market. In 2020, “Elsevier’s article output accounts for about 18% of global research output while garnering approximately 27% share of citations.”Footnote 20 The expansion to new digital platforms reflects the emergence of a new competitor in scientific platforms: academic social networks.

Academic Social Networks: You have a New Follower!

Despite HASTACFootnote 21 being considered the world's first ASN developed within the research community, many for-profit venture capital-funded technology startup companies created virtual loci for personal communication and information change, creating specific online communities for researchers (Jordan 2019). Those for-profit platforms started to pop up in the late 2000s—following the footsteps of conventional and generic social networks such as MySpace, Facebook, and LinkedIn—and their growth from 2010 started to call attention to their benefits but also about how they could disrupt the research landscape by capturing public content (Van Noorden 2014).

The three giant ASN platforms are today Academia.edu, ResearchGate, and Mendeley (acquired by Elsevier in 2013) (Table 4, Annex). However, their historical development is not similar, and it is possible to divide them into two main categories. First, there are platforms whose aim was to facilitate profile creation and connection (as Academia.edu and ResearchGate); secondly, there are those whose primary aim was to store and share academic-related content, which later combined social network capabilities (as Mendeley) (Jordan 2019). Despite the two categories, ASN positions itself in competition with academic publishers rather than social media (Jordan 2019).

As of ResearchGate, for example, it was built referencing prior technologies such as Google’s system and method for searching and recommending objects from a categorically organized information repository (Pitkow and Schuetze 2006) and LinkedIn’s method and system for reputation evaluation of online users in a social networking scheme (Work et al. 2005). It was then in its original idea the development of a system for sharing academic content for academic users (Hofmayer et al. 2015).

Jordan (2019) provides a comprehensive review of the empirical literature related to ASNs, and throughout assessing over 60 publications, she concluded that despite open access dissemination of academic publications being the most used benefit related to the role of ASNs, they also benefit in terms of speed in comparison to academic repositories and enhance reach and citations. Notwithstanding that ASNs are more than publishing and repository digital platforms, they also allow scholars to interact virtually—but it is also true that interaction is undertaken by a minority of users so far. As for all sorts of technologies, not only does ASN provide benefits, but it may also open unprecedented risks for the structure and evolution of science.

The rapidly growing academic inputs into ASNs generate scholarly big data that can be used by research communities to understand scientific development and academic interactions and for policymakers to solve resource allocation issues (Kong et al. 2019). However, scholarly big data gathered by ASNs are kept privately. ASN are also expanding to other scientific phases; for instance, Academia.edu has developed its "Academia Letters," which “aim to rapidly publish short-form articles such as brief reports, case studies, 'orphaned' findings, and ideas dropped from previously-published work.”Footnote 22 Publishers have been threatening to remove millions of papers from ASN (Van Noorden 2017) and have sued ResearchGate over copyright infringement (Else 2018; Chawla 2017) which had to remove thousands of publications from its website: the dispute is far from being solved.

Crowdwork: Over the Shoulders of Crowds

Outsourcing work is not a new trend; however, digital platforms have opened up new horizons. “Crowdwork functions as a marketplace for the mediation of both physical as well as digital services and tasks” (Howcroft and Bergvall-Kåreborn 2019: 23). This marketplace has five main constitutive elements: the digital platform, the labor pool, employment contracts, algorithmic control and, digital trust. Among several types, online task crowdwork platforms (Howcroft and Bergvall-Kåreborn 2019) are the most active in the context of scientific research phases.

The online task crowdwork platforms “offers paid work (sometimes subject to requester satisfaction) for specified tasks and the initiating actor is the requester. The tasks are modular, ranging from microtasks to more complex projects, with the potential for further Taylorisation” (Howcroft and Bergvall-Kåreborn 2019: 26). Researchers have used these platforms to carry out surveys quickly and cheaply, when compared to traditional ways. The main platformFootnote 23 dedicated to this type of mediation between researchers and respondents is Prolific Academic (PoA).

PoA was founded in 2014 and received its first round of venture capital (USD 1.2 million) in 2019. The platform matches over 130,000 respondents with 25,000 researchers from over 3,000 institutions around the worldFootnote 24. Researchers can select specific profiles of respondents: “Our participant pool is profiled, high quality and fast. The average study is completed in under 2 hours. Filter participants using 250+ screeners (e.g., sex, age, nationality, first language), create demographic custom screeners, or generate a UK/US representative sample.”Footnote 25

The increased use of this solution in behavioral research has led to questions about the quality of these data. Eyal et al. (2021) conducted a study comparing the quality of data on three crowdwork platforms (Amazon Mechanical Turk—AMT, CloudResearch and PoA) and two panels (Qualtrics and Dynata) used in behavioral research. They tested the quality of the data in terms of respondent’s attention, comprehension, reliability, and dishonesty. Their results point to PoA as a platform with better data quality and AMT as a platform with worse data quality. Although the authors see an improvement in data mediation patterns, they point out that “data quality remains a concern that researchers must deal with before deciding where to conduct their online research” (Eyal et al. 2021).

Discussion

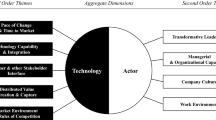

The science platform ecosystem is complex and there is a constellation of scientific platforms from varying historical moments coexisting (Figure 1). The types of platforms analyzed in the previous section have in common the fact they are governing systems of virtual spaces that leverage network effects towards one (or more) phase(s) of the scientific research process. Notwithstanding that, they also differ in terms of the social subsystem (Science, Market and State, as presented previously), and at least, in two other aspects: (i) the degree of integration into the research life cycle; and, (ii) the research phase they target.

Degree of Integration into the Research Life Cycle

It is possible to identify scientific digital platforms according to their degree of integration into the research life cycle. When scientific digital platforms are so embedded into the scientific practice, being more than just technical assemblage of things, they can be considered part of the research infrastructure, which can be understood “as deeply relational and adaptive systems where the material and social aspects are in permanent interplay. They are embedded in the social practice of research and influenced by environmental factors” (Fecher et al. 2021: 500). In other words, when platforms are part of the actual scientific practice, they can be considered part of the infrastructure, otherwise, they are purely a service.

For example, while scientific journals going online shows the platformization of an infrastructure, national e-portfolios becoming indispensable (Lattes Platform is an example) is an example of infrastructuralization of a platform. In 2021, Lattes Platform suffered a breakdown and was unavailable for more than a month, clarifying its infrastructural character: its criticality was revealed when it failed and lost its “invisibility” (Chiarini and Silva Neto 2022). Other platforms, on their turn, are still not indispensable or ubiquitous, e.g., crowdwork is restricted to a specific type of research—surveys—and there are traditional substitutes. Still others, such as ASNs seem to be aiming at dominance through infrastruturalization, but they seem to be in a process of embedding into scientific practices (Fecher et al. 2021). This process that naturally takes some time due to the need to "conquer" users, may take a little longer in this case. As Figure 2 demonstrates, ASNs and publishers overlap in terms of the phase of the scientific process; this puts them on a collision course, as already discussed.

Science Phases: From Data Collection to Dissemination

Data Collection

Digitization has impacted one of the most fundamental and traditional phases of the scientific research process: data collection. With digitization, trends such as open data, open government data and citizen science have created new ways of obtaining data for scientific purposes (Dai et al. 2018).

We presented that State actors sponsored the development of two types of digital platforms with impacts on the data collection phase. Both cyberinfrastructure and science portals offer tools to expand the potential for capturing and manipulating data. This is due not only to access to computational power and new customized software, but also to the network of researchers formed around science gateways. Both types are infrastructures, but science gateways are fragmented and differ in terms of research areas. Although the funding is from the State, the management of these platforms is carried out closely within the Science subsystem. Therefore, these platforms present participatory governance (which aggregates actors from more than one social subsystem).

Citizen science platforms enable distributed capture and centralized analysis of data. There are many challenges related to obtaining this type of data: public mobilization, correctly selected audience, organizational obstacles, technical difficulties and questions about the validity of the data (Dai et al. 2018). It should be noted that citizen science platforms are voluntary initiatives led by the research community. Their governance is participatory and their scope is limited to some research areas, positioning them as a service and not as an infrastructure. Crowdwork platforms offer a cheap and efficient way to collect data for surveys. Because they are proprietary, they do not face some of the difficulties that citizen science platforms do, such as encouraging participation (which occurs via remuneration). However, there are questions about the quality of these data. Crowdwork platforms offer a service to a very specific niche of the Science subsystem: researchers whose method is based on surveys. Even if this makes the use more concentrated in the areas of psychology and medicine, for example, this service may, at some point in the future, become a proprietary scientific infrastructure.

Experimentation/Analysis

Experimentation is the leitmotif of grid computing enabled by cyberinfrastructure initiatives. As we have seen, without the friendly and customizable complement of science gateways, their effectiveness was constrained. Together, they engender the “platformization of [experimentation] infrastructure” (Plantin et al. 2018). As we have seen, its governance is participatory, which does not exempt it from problems, but it seems to guarantee that major conflicts are resolved through consultation within the platforms themselves. According to Dai et al. (2018), citizen science platforms are in the process of extending their capabilities. They would become “a powerful mechanism for co-designing experiments and co-producing scientific knowledge with large communities of interested actors” (Dai et al. 2018: 15).

Publication

The publishing phase witnesses friction between platform types in a quest for space. It is marked by the platforming of an infrastructure almost as old as science: scientific journals are owned by half a dozen publishers and have been digitized over the last two decades. A few years ago, publishers were so well positioned that some researchers saw no solution other than to rebel against the transfer of resources (mostly public) from universities to the cashier of an oligopoly of scientific publishing. Today, ASNs are fighting a battle over the interface (Srnicek 2017): by making articles available on their platforms, ASNs seek to neutralize the utility of publishers and redirect the flow of attention to their interface. As publishers activated legal mechanisms to reverse this strategy, ASNs now seem to have adopted two complementary strategies: approaching the low-end of the market, i.e., small publishing houses; and offer a new publishing medium embedded in their own platforms (e.g., Academia Letters). Today ASNs mainly offer two services: alternative publishing to traditional publishers and dissemination. Their strategy is clear: move from a service provider to a provider of proprietary scientific infrastructure (Fecher et al. 2021). For this reason, they are "transitioning" from service to infrastructure (Table 2).

Dissemination/Outreach

Mostly all groups of digital platforms contribute to the dissemination phase of the research process. ArXiv is dedicated to “the rapid dissemination of scholarly scientific research”Footnote 26 and Zooniverse curates “datasets useful to the wider research community, and many publications.”Footnote 27 Within State subsystem, national e-portfolios such as Lattes Platform can assist in the preservation and dissemination of scientific productionFootnote 28 and science gateways as HUBzero “publish data, [and] share resources”Footnote 29 and, although it serves different communities, “the hubs all support (…) dissemination of scientific models” (McLennan and Kennell 2010: 48). Finally, considering the Market subsystem, publishers like Elsevier have the “job to disseminate research and improve understanding of science”Footnote 30 and ASNs as ResearchGate “offer[s] a home for you—a place to share your work and connect with peers around the globe”Footnote 31 and Academia.edu aims at “broad dissemination.”Footnote 32

Conclusions

We defined scientific digital platforms and outlined a typology based on three criteria: association with a specific social sub-system, degree of integration into the research process and phase of the scientific research process. We observe that there are a large number of platforms that become infrastructures and infrastructures that have become platformed, although this does not invariably occur. Our long-term perspective and broad scope allow us to frame the platformization of science as a process that already has a broad legacy constituted from the development of platforms by actors from different social subsystems (Market, State and Science). Research communities and the public administration were the first actors to be engaged in this process in the first half of the 1990s, while private companies engaged in this process in the late 1990s. We now observe a very dynamic commitment of the Market to be part of the science platformization process.

The limitations of the article stem from our scope and methodological choices. When trying to understand the big picture, there is no space for detailing each of the taxonomy presented, and the mini-cases described offer only a glimpse of their main features. Furthermore, by choosing to structure our paper around the notion of large groups of platforms, we can bypass platforms that exhibit mixed characteristics, which would place them in more than one group in our taxonomy. Our clustering proposal is limited in the sense that digital platforms are highly flexible and dynamic (van Dijck 2020). New configurations and functionalities are added and deleted overnight, super platforms emerge and coalesce once separate categories. Still, to understand the historical movement in the last thirty years, the groupings come close to a rough taxonomy.

As a result of our exploratory study, we conclude with three issues that deserve further attention. First, the relationship between scientific platforms and platform economics in general deservers further investigation. We found evidence that platforms for science exhibited traces of avant-garde platform economics. Platforms from the Market subsystem were not always the "innovation locomotive" as one may expect. Many traits of private scientific platforms, and even platform economy general features, can be traced back to State or Science social subsystems initiatives. In order to understand the genesis of the platform economy, this topic comes up with great importance.

Although it was not the scope of this paper, we also believe that the science platformization process might have considerable impacts on science production as a social process, and it may alter established principles and values of the scientific communities. “Research communities have always been vitual communities that cross national and cultural borders. (…)”, however, these interactions were “limited by constraints, both physical and technical; now, as a result of advances in ICT, interaction is unconstrained, and instantaneous” (Nowotny, Scott, and Gibbons 2003: 187).

There are already studies on how ICT-based technologies, such as ASNs transform the ways scholars conduct their work (Borgman 2007; Weller 2011; Veletsianos and Kimmons 2012; Veletsianos 2016; Veletsianos et al. 2019), for example, by shaping e-publishing (Taha et al. 2017). We wonder whether science digital platforms could be considered a Grilichesian “invention of a method of inventing” (IMI) (Griliches 1958), with a much larger impact on science process than simply considering the “general purpose technology” (GPT) character of digital platforms.

Finally, it is necessary to critically analyze digital platforms as scientific infrastructures. In particular, consideration should be given to the extent to which private scientific infrastructures serve the public interest well. Coming up with the classes and classifying them according to the framework provides only a very superficial idea of how these structures that co-opt scientific flows affect society beyond the scientific sphere. An in-depth investigation could consider how each platform group is positioned, considering whether there are subordinate relationships between them (van Dijck 2020) and how these relations affect social-relevant values and outputs.

Notes

We thus disregard general purposed platforms that may also be used at some stage of the scientific process, e.g., LinkedIn, Twitter, Amazon Mechanical Turk.

https://arxiv.org, accessed on 04/20/2022.

http://repec.org, accessed on 04/20/2022.

Suber's (2012) extensive notes allow us to observe how open science initiatives existed before the 2000s. At the same time, his records demonstrate how the movement took off from this decade on. View the timeline at https://dash.harvard.edu/bitstream/handle/1/4724185/suber_timeline.htm, accessed on 04/20/2022.

An old tradition and a new technology have converged to make possible an unprecedented public good. The old tradition is the willingness of scientists and scholars to publish the fruits of their research in scholarly journals without payment for the sake of inquiry and knowledge. The new technology is the internet. The public good they make possible is the worldwide electronic distribution of the peer-reviewed journal literature and unrestricted access to it by all scientists, scholars, teachers, students, and other curious minds.

Citizen science projects vary in terms of the depth of citizen participation. While all projects involve data collection and recording, not all allow laypersons to analyze results or set goals. Sauermann et al. (2020) propose four categories: from more restricted participation to data collection (collaborative projects) to more decisive participation, in which there are no professional scientists in the project (autonomous projects).

https://www.zooniverse.org, accessed on 04/20/2022.

https://lattes.cnpq.br/, accessed on 05/03/2022.

https://www.nsf.gov/div/index.jsp?div=OAC, accessed on 04/20/2022.

Such as in the SETI@home project (Hey and Trefethen 2003).

https://www.nsf.gov/awardsearch/showAward?AWD_ID=0085206, accessed on 04/20/2022.

https://www.nsf.gov/awardsearch/showAward?AWD_ID=0122296, accessed on 04/20/2022.

https://www.nsf.gov/awardsearch/showAward?AWD_ID=1053575, accessed on 04/20/2022.

https://www.nsf.gov/awardsearch/showAward?AWD_ID=1548562, accessed on 04/20/2022.

One clue to the need to focus on usability is found in the award for the second iteration of XSEDE, the grid computing network mentioned in the previous session: XSEDE 2 will also respond to the evolving needs and opportunities of science and technology. [...] The project will continue to innovate the use of "e-science portals" (also known as Science Gateways). Science gateways provide interfaces and services that are customized to a domain science and have an increasing role with facilities and research centers, collaborating on large research undertakings (e.g., Advanced LIGO, Polar Geospatial Center). This approach facilitates broad community access to advanced computers and data resources. Science gateways are now serving more than 50% of the user community.” (https://www.nsf.gov/awardsearch/showAward?AWD_ID=1548562, accessed on 04/20/2022).

https://vhub.org/groups/ghub, accessed on 04/20/2022.

https://catalog.sciencegateways.org/#/home, accessed on 04/20/2022.

https://hubzero.org/about, accessed on 04/20/2022.

https://www.elsevier.com/__data/assets/pdf_file/0010/1143001/Elsevier-corporate-brochure-2021.pdf, accessed on 04/20/2022.

Ibidem.

https://www.hastac.org/, accessed on 04/20/2022.

https://www.academia.edu/letters/about, accessed on 04/20/2022.

Amazon Mechanical Turk is used extensively for the same purpose, but it is not dedicated to the mediation of a scientific activity. As a general-purpose crowd work platform, it falls outside the scope of analysis of this article.

https://www.prolific.co/about, accessed on 05/03/2022.

https://www.prolific.co/#audience, accessed on 05/03/2022.

https://arxiv.org/help/policies/code_of_conduct, accessed on 05/03/2022.

https://www.zooniverse.org/about, accessed on 05/03/2022.

https://lattes.cnpq.br/, accessed on 05/03/2022.

https://hubzero.org/, accessed on 05/03/2022.

https://www.elsevier.com/open-science/science-and-society, accessed on 05/03/2022.

https://www.researchgate.net/about, accessed on 05/03/2022.

https://www.academia.edu/journals/1/about, accessed on 05/03/2022.

References

Acs, Zoltan J., Keunwon Song, Laszlo Szerb, David B. Audretsch, and Eva Komlosi. 2021. The Evolution of the Global Digital Platform Economy: 1971–2021. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.3785411.

Allan, Robert. 2009. Virtual Research Environments: From Portals to Science Gateways. Cambridge: Chandos Publishing.

ArXiv. 2020. ArXiv Annual Report 2020. New York: Cornell Tech. https://indd.adobe.com/view/fdd63397-e4b0-41af-b479-4845dc1ef48e.

Avgerou, C. 1993. Information Systems for Development Planning. International Journal of Information Management 13: 260–273.

Barker, Michelle, Silvia Delgado Olabarriaga, Nancy Wilkins-Diehr, Sandra Gesing, Daniel S. Katz, Shayan Shahand, Scott Henwood, et al. 2019. The Global Impact of Science Gateways, Virtual Research Environments and Virtual Laboratories. Future Generation Computer Systems 95(June): 240–248. https://doi.org/10.1016/j.future.2018.12.026.

Bartling, Sönke, and Sascha Friesike. 2014. Towards Another Scientific Revolution. In Opening Science. The Evolving Guide on How the Internet Is Changing Research, Collaboration and Scholarly Publishing, eds. Sönke Bartling and Sascha Friesike, 3–15. New York: Springer.

Borgman, Christine L. 2007. Scholarship in the Digital Age Information, Infrastructure, and the Internet. Cambridge (USA): MIT University Press.

Branscomb, Lewis M. 1992. U.S. Scientific and Technical Information Policy in the Context of a Diffusion-Oriented National Technology Policy. Government Publications Review 19: 469–482.

Chawla, Dalmeet. 2017. Publishers Take ResearchGate to Court, Alleging Massive Copyright Infringement. Science, October. https://doi.org/10.1126/science.aaq1560.

Chiarini, Tulio, and Victo José Silva Neto. 2022. The Platformization of Science: Lattes Platform in a Crossroad? Discussion Paper n 268. Brasilia: IPEA. https://repositorio.ipea.gov.br/bitstream/11058/11324/2/dp_268.pdf

Coblans, Herbert. 1970. Control and Use of Scientific Information. Nature 226: 319–321.

Dai, Qian, Eunjung Shin, and Carthage Smith. 2018. Open and Inclusive Collaboration in Science: A Framework. 2018/07. OECD Science, Technology and Industry Working Papers. Paris: Organisation for Economic Co-operation and Development (OECD).

Delfanti, Alessandro. 2021. The Financial Market of Ideas: A Theory of Academic Social Media. Social Studies of Science 51(2): 259–276. https://doi.org/10.1177/0306312720966649.

Diepenbroek, Michael, Hannes Grobe, Manfred Reinke, Uwe Schindler, Reiner Schlitzer, Rainer Sieger, and Gerold Wefer. 2002. PANGAEA—an Information System for Environmental Sciences. Computers & Geosciences 28(10): 1201–1210. https://doi.org/10.1016/S0098-3004(02)00039-0.

EC. 2016. Open Innovation, Open Science, Open to the World. Brussels: Directorate-General for Research and Innovation: European Commission.

Else, Holly. 2018. Major Publishers Sue ResearchGate over Copyright Infringement. Nature. https://doi.org/10.1038/d41586-018-06945-6.

Eyal, Peer, David Rothschild, Andrew Gordon, Zak Evernden, and Ekaterina Damer. 2021. Data Quality of Platforms and Panels for Online Behavioral Research. Behavior Research Methods, September, 1–20. https://doi.org/10.3758/s13428-021-01694-3.

Fecher, Benedikt, and Sascha Friesike. 2014. Open Science: One Term, Five Schools of Thought. In Opening Science. The Evolving Guide on How the Internet Is Changing Research, Collaboration and Scholarly Publishing, eds. Sönke Bartling and Sascha Friesike, 17–47. New York: Springer.

Fecher, Benedikt, Rebecca Kahn, Nataliia Sokolovska, Teresa Völker, and Philip Nebe. 2021. Making a Research Infrastructure: Conditions and Strategies to Transform a Service into an Infrastructure. Science and Public Policy 48(4): 499–507. https://doi.org/10.1093/scipol/scab026.

Frenken, Koen, and Lea Fuenfschilling. 2020. The Rise of Online Platforms and the Triumph of the Corporation. International Journal for Sociological Debate 14(03): 101–13. https://doi.org/10.6092/issn.1971-8853/11715.

Frenken, Koen, and Juliet Schor. 2017. Putting the Sharing Economy into Perspective. Environmental Innovation and Societal Transitions 23(June): 3–10. https://doi.org/10.1016/j.eist.2017.01.003.

Gawer, Annabelle. 2021. Digital Platforms and Ecosystems: Remarks on the Dominant Organizational Forms of the Digital Age. Innovation, Organization & Management, September, 1–15. https://doi.org/10.1080/14479338.2021.1965888.

Ginsparg, Paul. 2011. ArXiv at 20. Nature 476(7359): 145–147. https://doi.org/10.1038/476145a.

Ginsparg, Paul. 2016. Preprint Déjà Vu. The EMBO Journal 35(24): 2620–25. https://doi.org/10.15252/embj.201695531.

Griliches, Zvi. 1958. Research Costs and Social Returns: Hybrid Corn and Related Innovations. Journal of Political Economy 66(5): 419–431. https://doi.org/10.1086/258077.

Helmond, Anne. 2015. The Platformization of the Web: Making Web Data Platform Ready. Social Media + Society 1(2): 1–11. https://doi.org/10.1177/2056305115603080.

Helmond, Anne, David B. Nieborg, and Fernando N. van der Vlist. 2019. Facebook’s Evolution: Development of a Platform-as-Infrastructure. Internet Histories 3(2): 123–146. https://doi.org/10.1080/24701475.2019.1593667.

Hey, Tony, and Anne Trefethen. 2003. E-Science and Its Implications. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences 361: 1809–25.

Hey, Tony, and Anne E. Trefethen. 2002. The UK E-Science Core Programme and the Grid. Future Generation Computer Systems 18: 1017–1031.

Hodgson, Geoffrey M. 2019. Taxonomic Definitions in Social Science, with Firms, Markets and Institutions as Case Studies. Journal of Institutional Economics 15(2): 207–233. https://doi.org/10.1017/S1744137418000334.

Hofmayer, Soren, Viacheslav Zholudev, Ijad Madisch, Horst Fickenscher, Michael Hausler, and Alex Tolke. 2015. System, computer program product and computer-implemented method for sharing academic user profiles and ranking academic users. US 20130346497 A1, issued 2015.

Howcroft, Debra, and Birgitta Bergvall-Kåreborn. 2019. A Typology of Crowdwork Platforms. Work, Employment and Society 33(1): 21–38. https://doi.org/10.1177/0950017018760136.

Jankowski, Nicholas W. 2007. Exploring E-Science: An Introduction. Journal of Computer-Mediated Communication 12(2): 549–562. https://doi.org/10.1111/j.1083-6101.2007.00337.x.

Jordan, Katy. 2019. From Social Networks to Publishing Platforms: A Review of the History and Scholarship of Academic Social Network Sites. Frontiers in Digital Humanities 6(5): 1–53. https://doi.org/10.3389/fdigh.2019.00005.

Kenney, Martin, and John Zysman. 2020. The Platform Economy: Restructuring the Space of Capitalist Accumulation. Cambridge Journal of Regions, Economy and Society 13(1): 55–76. https://doi.org/10.1093/cjres/rsaa001.

Kong, Xiangjie, Yajie Shi, Yu Shuo, Jiaying Liu, and Feng Xia. 2019. Academic Social Networks: Modeling, Analysis, Mining and Applications. Journal of Network and Computer Applications 132(April): 86–103. https://doi.org/10.1016/j.jnca.2019.01.029.

Kraut, Robert E., and Paul Resnick. 2011. Encouraging Contribution to Online Communities. In Building Successful Online Communities: Evidence-Based Social Design, eds. Robert E. Kraut and Paul Resnick, 21–76. Cambridge (MA): Massachusetts Institute of Technology and Center for International Development, Harvard University: The MIT Press.

Kullenberg, Christopher, and Dick Kasperowski. 2016. What Is Citizen Science? – A Scientometric Meta-Analysis. Edited by Pablo Dorta-González. PLOS ONE 11(1): e0147152. https://doi.org/10.1371/journal.pone.0147152.

Lane, Julia. 2010. Let’s Make Science Metrics More Scientific. Nature 464: 488–489.

Langlois, Richard N. 2012. Design, Institutions, and the Evolution of Platforms. Journal of Law, Economics & Policy 9(1): 1–13.

Larivière, Vincent, Stefanie Haustein, and Philippe Mongeon. 2015. The Oligopoly of Academic Publishers in the Digital Era. Edited by Wolfgang Glanzel. PLOS ONE 10(6): e0127502. https://doi.org/10.1371/journal.pone.0127502.

Lemmens, Rob, Gilles Falquet, Chrisa Tsinaraki, Friederike Klan, Sven Schade, Lucy Bastin, Jaume Piera, et al. 2021. A Conceptual Model for Participants and Activities in Citizen Science Projects. In The Science of Citizen Science, eds. Katrin Vohland, Anne Land-Zandstra, Luigi Ceccaroni, Rob Lemmens, Josep Perelló, Marisa Ponti, Roeland Samson, and Katherin Wagenknecht, 159–182. Cham: Springer.

Liu, Hai-Ying., Daniel Dörler, Florian Heigl, and Sonja Grossberndt. 2021. Citizen Science Platforms. In The Science of Citizen Science, eds. Katrin Vohland, Anne Land-Zandstra, Luigi Ceccaroni, Rob Lemmens, Josep Perelló, Marisa Ponti, Roeland Samson, and Katherin Wagenknecht, 439–459. Cham: Springer.

Madduri, Ravi, Kyle Chard, Ryan Chard, Lukasz Lacinski, Alex Rodriguez, Dinanath Sulakhe, David Kelly, Utpal Dave, and Ian Foster. 2015. The Globus Galaxies Platform: Delivering Science Gateways as a Service. Concurrency and Computation: Practice and Experience 27(16): 4344–4360. https://doi.org/10.1002/cpe.3486.

Madhavan, Krishna, Michael Zentner, and Gerhard Klimeck. 2013. Learning and Research in the Cloud. Nature Nanotechnology 8(11): 786–789. https://doi.org/10.1038/nnano.2013.231.

Mansell, Robin, and W. Edward Steinmueller. 2020. Advanced Introduction to Platform Economics. Cheltenham: Edward Elgar.

McLennan, Michael, Steven Clark, Ewa Deelman, Mats Rynge, Karan Vahi, Frank McKenna, Derrick Kearney, and Carol Song. 2015. HUBzero and Pegasus: Integrating Scientific Workflows into Science Gateways. Concurrency and Computation: Practice and Experience 27(2): 328–343. https://doi.org/10.1002/cpe.3257.

McLennan, Michael, and Rick Kennell. 2010. HUBzero: A Platform for Dissemination and Collaboration in Computational Science and Engineering. Computing in Science & Engineering 12(2): 48–53. https://doi.org/10.1109/MCSE.2010.41.

Mirowski, Philip. 2018. The Future(s) of Open Science. Social Studies of Science 48(2): 171–203. https://doi.org/10.1177/0306312718772086.

Mukhopadhyay, Sandip, Harry Bouwman, and Mahadeo Prasad Jaiswal. 2019. An Open Platform Centric Approach for Scalable Government Service Delivery to the Poor: The Aadhaar Case. Government Information Quarterly 36(3): 437–448. https://doi.org/10.1016/j.giq.2019.05.001.

Nowotny, Helga, Peter Scott, and Michael Gibbons. 2003. Introduction: ‘Mode 2’ Revisited: The New Production of Knowledge. Minerva 41: 179–94. https://doi.org/10.1023/A:1025505528250.

Otto, Boris, and Matthias Jarke. 2019. Designing a Multi-Sided Data Platform: Findings from the International Data Spaces Case. Electronic Markets 29(4): 561–580. https://doi.org/10.1007/s12525-019-00362-x.

Parsons, Paul, Sandra Gesing, Claire Stirm, and Michael Zentner. 2020. SGCI Incubator and Its Role in Workforce Development: Lessons Learned from Training, Consultancy, and Building a Community of Community-Builders for Science Gateways. In Practice and Experience in Advanced Research Computing, 491–94. New York, NY, USA: ACM. https://doi.org/10.1145/3311790.3400850.

Pitkow, James B., and Hinrich Schuetze. 2006. System and method for searching and recommending objects from a categorically organized information repository. US 20020016786 A1, issued 2006.

Plantin, Jean-Christophe, Carl Lagoze, Paul N. Edwards, and Christian Sandvig. 2018. Infrastructure Studies Meet Platform Studies in the Age of Google and Facebook. New Media & Society 20(1): 293–310.

Poell, Thomas, David Nieborg, and José van Dijck. 2019. Platformisation. Internet Policy Review 8(4): 1–13. https://doi.org/10.14763/2019.4.1425.

Saracevid, Tefko. 1980. Progress in Documentation. Perception of the Needs for Scientific and Technical Information in Less Developed Countries. Journal of Documentation 36(3): 214–67.

Sauermann, Henry, Katrin Vohland, Vyron Antoniou, Bálint Balázs, Claudia Göbel, Kostas Karatzas, Peter Mooney, et al. 2020. Citizen Science and Sustainability Transitions. Research Policy 49(5): 103978. https://doi.org/10.1016/j.respol.2020.103978.

Schwarz, Jonas Andersson. 2017. Platform Logic: An Interdisciplinary Approach to the Platform-Based Economy. Policy & Internet 9(4): 374–394. https://doi.org/10.1002/poi3.159.

Simpson, Robert, Kevin R. Page, and David De Roure. 2014. Zooniverse: Observing the World’s Largest Citizen Science Platform. In Proceedings of the 23rd International Conference on World Wide Web, 1049–54. New York, NY, USA: ACM. https://doi.org/10.1145/2567948.2579215.

Suber, Peter. 2012. Open Access. Cambridge: The MIT Press.

Tabarés, Raúl. 2021. HTML5 and the Evolution of HTML; Tracing the Origins of Digital Platforms. Technology in Society 65(May): 101529. https://doi.org/10.1016/j.techsoc.2021.101529.

Taha, Nashrawan, Rizik Al-Sayyed, Ja’far Alqatawna, and Ali Rodan, eds. 2017. Social Media Shaping E-Publishing and Academia. Springer International Publishing.

Thompson, Mark, and Will Venters. 2021. Platform, or Technology Project? A Spectrum of Six Strategic ‘Plays’ from UK Government IT Initiatives and Their Implications for Policy. Government Information Quarterly 38(4): 101628. https://doi.org/10.1016/j.giq.2021.101628.

Berners-Lee, Tim, and Mark Fischetti. 2000. Weaving the Web: The Original Design and Ultimate Destiny of the World Wide Web. New York: Harper Business.

Tinati, Ramine, Max Van Kleek, Elena Simperl, Markus Luczak-Rösch, Robert Simpson, and Nigel Shadbolt. 2015. Designing for Citizen Data Analysis. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, 4069–78. New York, NY, USA: ACM. https://doi.org/10.1145/2702123.2702420.

UNESCO. 1971. UNISIST: Study Report on the Feasibility of a World Science Information System. Paris: United Nations Educational, Scientific and Cultural Organization. https://unesdoc.unesco.org/ark:/48223/pf0000064862.

van Dijck, José. 2020. Seeing the Forest for the Trees: Visualizing Platformization and Its Governance. New Media & Society, July, 1–19. https://doi.org/10.1177/1461444820940293.

van Dijck, José, Thomas Poell, and Martijn de Waal. 2018. The Platform Society: Public Values in a Connective World. Oxford: Oxford University Press.

Van Noorden, Richard. 2014. Online Collaboration: Scientists and the Social Network. Nature 512(7513): 126–129. https://doi.org/10.1038/512126a.

Van Noorden, Richard. 2017. Publishers Threaten to Remove Millions of Papers from ResearchGate. Nature. https://doi.org/10.1038/nature.2017.22793.

Veletsianos, George. 2016. Social Media in Academia. Networked Scholars: Routledge.

Veletsianos, George, Nicole Johnson, and Olga Belikov. 2019. Academics’ Social Media Use over Time Is Associated with Individual, Relational, Cultural and Political Factors. British Journal of Educational Technology 50(4): 1713–1728. https://doi.org/10.1111/bjet.12788.