Abstract

Consider the inhomogeneous Erdős-Rényi random graph (ERRG) on n vertices for which each pair \(i,j\in \{1,\ldots ,n\}\), \(i\ne j,\) is connected independently by an edge with probability \(r_n(\frac{i-1}{n},\frac{j-1}{n})\), where \((r_n)_{n\in \mathbb {N}}\) is a sequence of graphons converging to a reference graphon r. As a generalisation of the celebrated large deviation principle (LDP) for ERRGs by Chatterjee and Varadhan (Eur J Comb 32:1000–1017, 2011), Dhara and Sen (Large deviation for uniform graphs with given degrees, 2020. arXiv:1904.07666) proved an LDP for a sequence of such graphs under the assumption that r is bounded away from 0 and 1, and with a rate function in the form of a lower semi-continuous envelope. We further extend the results by Dhara and Sen. We relax the conditions on the reference graphon to \((\log r, \log (1- r))\in L^1([0,1]^2)\). We also show that, under this condition, their rate function equals a different, more tractable rate function. We then apply these results to the large deviation principle for the largest eigenvalue of inhomogeneous ERRGs and weaken the conditions for part of the analysis of the rate function by Chakrabarty et al. (Large deviation principle for the maximal eigenvalue of inhomogeneous Erdős-Rényi random graphs, 2020. arXiv:2008.08367).


1 Introduction

1.1 Motivation and Outline

The large deviation principle (LDP) for the Erdős-Rényi random graph (ERRG) was introduced and proved by Chatterjee and Varadhan in their seminal paper [6]. They viewed the sequence of ERRGs as graphons and obtained the LDP in the space of graphons with the cut topology. With this LDP, Chatterjee and Varadhan completely solved a long-standing open problem regarding upper tails for large deviations of triangle counts in ERRGs. This spurred many further developments in the area of large deviations for random graphs. We refer to [4] for an overview.

In this paper, we study the inhomogeneous Erdős-Rényi random graph, which is a generalisation of the ERRG in that different edges do not necessarily occur with the same probability. This probability is controlled by a reference graphon. Recently, Dhara and Sen [7, Proposition 3.1] proved the LDP for the inhomogeneous ERRG model under the assumption that the reference graphon r is bounded away from 0 and 1, i.e., there exists \(\eta >0\) such that \(\eta \le r(x,y)\le 1-\eta \) for all \((x,y)\in [0,1]^2\). The rate function \(J'_r\) for their LDP has the form of a lower semi-continuous envelope of another rate function \(I_r\), which complicates its analysis.

We extend their proof to reference graphons that satisfy the mild integrability condition \(\log r,\log (1-r)\in L^1([0,1]^2)\). Furthermore, we show that \(J'_r\) is also the lower semi-continuous envelope of another, more tractable rate function \(J_r\) that is already semi-continuous, which implies that \(J'_r\) actually equals \(J_r\). The relaxation of the conditions broadens the scope of applications, and the simplification of the rate function makes the LDP more suitable to such applications. As an example application, we consider the LDP for the largest eigenvalue of an inhomogeneous ERRG. We show that the conditions for the analysis by Chakrabarty, Hazra, Den Hollander and Sfragara [2] can partially be weakened.

Random graphs with inhomogeneities and constraints have many applications in complex networks, physics and statistics. As a consequence, recent interest in large deviations for inhomogeneous random graphs has grown considerably. The LDP for ERRGs was applied by Chatterjee and Diaconis [5] to the exponential random graph, Dhara and Sen [7] applied the LDP for inhomogeneous ERRGs to random graphs with fixed degrees, and recently, Borgs et al. [1] proved an LDP for block models. This paper is part of a general effort to better understand large deviations for inhomogeneous random graphs.

Outline. In Sect. 1.2, we briefly introduce the necessary concepts and definitions from graph limit theory. The LDP for inhomogeneous ERRGs is stated in Sect. 1.3, and the rate function is introduced. In Sect. 1.4, we introduce large deviations for the largest eigenvalue of the inhomogeneous ERRG. The proof that the rate function is well-defined, lower semi-continuous, and equal to the rate function of Dhara and Sen [7] is given in Sect. 2. We generalise Dhara and Sen’s proof of the LDP upper bound in Sect. 3. In Sect. 4, we finish by showing that the results from this paper can be used to weaken the conditions of the analysis of the rate function for the largest eigenvalue by Chakrabarty et al. [2].

1.2 Graphons

A graphon is a Borel measurable function \(h:[0,1]^2\rightarrow [0,1]\) such that \(h(x,y)=h(y,x)\) for all \((x,y)\in [0,1]^2\). We denote the set of graphons by \(\mathcal {W}\). Every finite simple graph \(G=(V(G),E(G))\) with \(V(G)=[n]:=\{1,\ldots ,n\}\) can be represented as the graphon \(h^G\) defined as

\[ h^G(x,y):=\begin{cases} 1 & \text {if } \{i,j\}\in E(G) \text { and } (x,y)\in B(i,j,n),\\ 0 & \text {otherwise,} \end{cases} \tag{1.1} \]

with \(B(i,j,n):=[\frac{i-1}{n},\frac{i}{n})\times [\frac{j-1}{n},\frac{j}{n})\). We call \(h^G\) the empirical graphon of G. Let \(\mathcal {M}\) denote the set of Lebesgue measure-preserving bijections \(\phi :[0,1]\rightarrow [0,1]\). The cut distance on \(\mathcal {W}\) is defined as

\[ d_\square (h_1,h_2):=\sup _{S,T\subset [0,1]\text { measurable}}\left| \int _{S\times T}\left[ h_1(x,y)-h_2(x,y)\right] \,dx\,dy\right| , \tag{1.2} \]

and the cut metric is defined as

\[ \delta _\square (h_1,h_2):=\inf _{\phi \in \mathcal {M}}d_\square (h_1,h_2^\phi ), \tag{1.3} \]

where \(h^\phi (x,y):=h(\phi (x),\phi (y))\). See [9, Theorem 8.13] for several equivalent definitions for \(\delta _\square \). The cut metric induces an equivalence relation \(\sim \) on \(\mathcal {W}\) where \(h_1\sim h_2\) if \(\delta _\square (h_1,h_2)=0\). Define \(\widetilde{\mathcal {W}}:=\mathcal {W}/{\sim }\) and denote the equivalence class of \(h\in \mathcal {W}\) by \(\widetilde{h}\). The space \((\widetilde{\mathcal {W}},\delta _\square )\) is a compact metric space [9, Theorem 9.23].
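The definitions of the empirical graphon and the cut distance can be made concrete for small graphs. The following is a minimal sketch, not taken from the paper: the function names `empirical_graphon` and `cut_distance_blocks` are hypothetical, and the cut distance is computed only for two step functions on the same n-by-n grid of blocks. In that case the supremum over measurable sets is attained at unions of blocks, so an exhaustive search over row subsets is exact (but exponential in n).

```python
from itertools import combinations


def empirical_graphon(n, edges):
    """Return h^G as a function on [0,1]^2 for a simple graph on vertices 1..n."""
    edge_set = {frozenset(e) for e in edges}

    def h(x, y):
        # x in [(i-1)/n, i/n) belongs to block i; the point x = 1 is put in block n.
        i = min(int(x * n), n - 1) + 1
        j = min(int(y * n), n - 1) + 1
        return 1.0 if frozenset((i, j)) in edge_set else 0.0

    return h


def cut_distance_blocks(a, b):
    """d_square between two step functions given as n x n matrices of block values."""
    n = len(a)
    diff = [[a[i][j] - b[i][j] for j in range(n)] for i in range(n)]
    best = 0.0
    for size in range(n + 1):
        for S in combinations(range(n), size):
            # Column sums of the difference restricted to the rows in S.
            col = [sum(diff[i][j] for i in S) for j in range(n)]
            # For fixed S, the optimal T collects all positive (or all negative) columns.
            pos = sum(c for c in col if c > 0)
            neg = -sum(c for c in col if c < 0)
            best = max(best, pos, neg)
    # Every block B(i,j,n) has Lebesgue measure 1/n^2.
    return best / n**2
```

For the single edge on two vertices, `cut_distance_blocks([[0, 1], [1, 0]], [[0, 0], [0, 0]])` equals the edge density of the empirical graphon. Computing \(\delta _\square \) would additionally require an infimum over measure-preserving bijections, which this sketch does not attempt.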

1.3 Main Theorem

For some \(h\in \mathcal {W}\), define the random graph \(G_n\) with vertex set [n] by connecting every pair of vertices \(i,j\in [n]\) with probability \(h(\frac{i-1}{n},\frac{j-1}{n})\). Denote the law of the empirical graphon \(h^{G_n}\) of \(G_n\) on \(\mathcal {W}\) by \(\mathbb {P}_{n,h}\) and the law of \(\widetilde{h}^{G_n}\) on \(\widetilde{\mathcal {W}}\) by \(\widetilde{\mathbb {P}}_{n,h}\).
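The sampling rule above translates directly into code. This is a minimal sketch under the assumption that the graphon is available as a Python callable `h(x, y)`; the function name `sample_inhomogeneous_errg` and the `rng` parameter are illustrative and do not come from the paper.

```python
import random


def sample_inhomogeneous_errg(n, h, rng=None):
    """Sample the edge set of G_n: pair {i, j} is an edge with probability h((i-1)/n, (j-1)/n)."""
    rng = rng or random.Random()
    edges = set()
    for i in range(1, n + 1):
        for j in range(i + 1, n + 1):
            # Each unordered pair is examined once, independently of all other pairs.
            if rng.random() < h((i - 1) / n, (j - 1) / n):
                edges.add((i, j))
    return edges
```

Passing a seeded `random.Random` instance makes the sample reproducible, for example `sample_inhomogeneous_errg(100, lambda x, y: 0.5 * (x + y), random.Random(1))`.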

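We define a sequence of random graphs as follows. Fix a graphon \(r\in \mathcal {W}\) called the reference graphon and let \((r_n)_{n\in \mathbb {N}}\) be a sequence of block graphons of the form

\[ r_n(x,y)=r_{n,ij}\quad \text {if } (x,y)\in B(i,j,n),\qquad r_{n,ij}\in [0,1],\ i,j\in [n], \tag{1.4} \]

such that \(0<r<1\) and \(r_n\rightarrow r\) Lebesgue-almost everywhere and in \(L^1\)-norm as \(n\rightarrow \infty \). Further assume that

\[ \log r,\ \log (1-r)\in L^1([0,1]^2) \tag{1.5} \]

and

\[ \Vert \log r_n-\log r\Vert _{L^1}\rightarrow 0,\qquad \Vert \log (1-r_n)-\log (1-r)\Vert _{L^1}\rightarrow 0 \tag{1.6} \]

as \(n\rightarrow \infty \).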

We show that \((\widetilde{\mathbb {P}}_{n,r_n})_{n\in \mathbb {N}}\) satisfies an LDP. First, we define a suitable rate function. For \(a\in [0,1]\) and \(b\in (0,1)\), let

\[ \mathcal {R}(a\mid b):=a\log \frac{a}{b}+(1-a)\log \frac{1-a}{1-b}, \tag{1.7} \]

where we use the convention \(0\log 0=0\). For \(h,r\in \mathcal {W}\) such that \(0<r<1\) Lebesgue-almost everywhere, this map can be extended to a map \(I_r\) on \(\mathcal {W}\) by defining

\[ I_r(h):=\int _{[0,1]^2}\mathcal {R}(h(x,y)\mid r(x,y))\,dx\,dy. \tag{1.8} \]

In the case \(r\equiv p\), \(I_r\) is invariant under measure-preserving bijections. Hence, \(I_r\) can be extended to \(\widetilde{\mathcal {W}}\) as \(J_r(\widetilde{h}):=I_r(h)\) [4, Proposition 5.1]. For inhomogeneous reference graphons, this is no longer the case. To solve this problem, Dhara and Sen extend the function to its lower semi-continuous envelope, i.e.,

\[ J'_r(\widetilde{h}):=\sup _{\eta >0}\inf _{g\in B(\widetilde{h},\eta )}I_r(g), \tag{1.9} \]

where \(B(\widetilde{h},\eta ):=\{g\in \mathcal {W}\mid \delta _\square (\widetilde{h},\widetilde{g})\le \eta \}\). This extension is well-defined and lower semi-continuous [7, Lemma 2.1], but analytic manipulation can be somewhat difficult. Instead, we propose the following more tractable rate function:

\[ J_r(\widetilde{h}):=\inf _{\phi \in \mathcal {M}}I_r(h^\phi ). \tag{1.10} \]

A priori, it is not clear whether \(J_r\) is a good rate function on \(\widetilde{\mathcal {W}}\) or even well-defined. In Sect. 2, we show that it is, and that \(J_r\) in fact equals \(J'_r\) under the condition (1.5). This is one of the main results of this paper.
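The dependence of \(I_r\) on the labelling, and the effect of taking an infimum over relabellings, can be illustrated on block graphons. The sketch below is an illustration only: it assumes the standard relative entropy of two Bernoulli distributions for \(\mathcal {R}\), represents block graphons as n-by-n matrices, and restricts the infimum over \(\mathcal {M}\) to permutations of the n blocks. Since block permutations form a subset of \(\mathcal {M}\), the hypothetical function `J_upper_blocks` returns only an upper bound on \(J_r\).

```python
from itertools import permutations
from math import log


def rel_entropy(a, b):
    """Relative entropy R(a | b) for a in [0,1], b in (0,1), with 0 log 0 = 0."""
    def xlogy(x, y):
        return 0.0 if x == 0 else x * log(y)
    return xlogy(a, a / b) + xlogy(1 - a, (1 - a) / (1 - b))


def I_blocks(h, r):
    """I_r(h) for block graphons h, r given as n x n matrices (each block has area 1/n^2)."""
    n = len(h)
    return sum(rel_entropy(h[i][j], r[i][j]) for i in range(n) for j in range(n)) / n**2


def J_upper_blocks(h, r):
    """Minimum of I_r(h^p) over block permutations p: an upper bound on J_r."""
    n = len(h)
    return min(
        I_blocks([[h[p[i]][p[j]] for j in range(n)] for i in range(n)], r)
        for p in permutations(range(n))
    )
```

With `r = [[0.1, 0.5], [0.5, 0.9]]` and `h = [[0.9, 0.5], [0.5, 0.1]]`, the value `I_blocks(h, r)` is strictly positive, while swapping the two blocks maps h onto r, so `J_upper_blocks(h, r)` vanishes. For a constant reference graphon the two quantities coincide, in line with the invariance noted for \(r\equiv p\).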

We are now ready to state the main theorem.

Theorem 1.1

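Subject to (1.5) and (1.6), the sequence \((\widetilde{\mathbb {P}}_{n,r_n})_{n\in \mathbb {N}}\) satisfies the large deviation principle on \((\widetilde{\mathcal {W}},\delta _\square )\) with rate \(\frac{n^2}{2}\) and rate function \(J_r\), i.e., for all closed sets \(\widetilde{F}\subset \widetilde{\mathcal {W}}\) and open sets \(\widetilde{U}\subset \widetilde{\mathcal {W}}\),

\[ \limsup _{n\rightarrow \infty }\frac{2}{n^2}\log \widetilde{\mathbb {P}}_{n,r_n}(\widetilde{F})\le -\inf _{\widetilde{h}\in \widetilde{F}}J_r(\widetilde{h}),\qquad \liminf _{n\rightarrow \infty }\frac{2}{n^2}\log \widetilde{\mathbb {P}}_{n,r_n}(\widetilde{U})\ge -\inf _{\widetilde{h}\in \widetilde{U}}J_r(\widetilde{h}). \tag{1.11} \]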

This theorem was proved by Dhara and Sen [7, Proposition 3.1] under the condition that there exists an \(\eta >0\) such that \(\eta \le r(x,y)\le 1-\eta \) and \(\eta \le r_{n,ij}(x,y)\le 1-\eta \) for all \((x,y)\in [0,1]^2\), \(n\in \mathbb {N}\) and \(i\ne j\). The novelty in this paper lies in weakening the conditions and showing that \(J_r=J'_r\).

The proof of the lower bound requires only minor adjustments of the proof in [4]. Therefore, we only prove the upper bound in this paper. For a detailed proof of the lower bound, we refer to [10, Sect. 6]. Throughout the rest of the paper, we implicitly assume that (1.5) and (1.6) hold and no longer mention it in the statement of our results.

1.4 Large Deviations for the Largest Eigenvalue

Let \(\lambda _n\) be the largest eigenvalue of the adjacency matrix of \(G_n\). Then, \(\frac{\lambda _n}{n}\) also satisfies an LDP. Chakrabarty et al. [2] studied the rate function \(\psi _r\) under the conditions that r is bounded away from 0 and 1 and of rank 1, i.e. \(r(x,y)=\nu (x)\nu (y)\) for some \(\nu :[0,1]\rightarrow [0,1]\). They analysed the scaling of the rate function and identified the form of the minimisers near the rate function’s minimum and near the boundaries 0 and 1.

The requirement that r is bounded away from 0 and 1 stems in part from the fact that the results from [2] are obtained using the LDP by Dhara and Sen [7]. They posed the question whether the boundedness condition could be weakened to some form of integrability condition. In this paper, we show that the condition can partially be relaxed to (1.5). In particular, we extend their analysis of the rate function near its minimum.

From the LDP for inhomogeneous ERRGs, Chakrabarty et al. [2] derive the following LDP for the largest eigenvalue. Note that for this theorem, we do not yet require r to be of rank 1.

Theorem 1.2

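Let \(\mathbb {P}^*_n\) denote the law of \(\lambda _n/n\). Subject to (1.5) and (1.6), the sequence \((\mathbb {P}^*_n)_{n\in \mathbb {N}}\) satisfies the LDP on \(\mathbb {R}\) with rate \(n^2/2\) and with rate function

\[ \psi _r(\beta ):=\inf _{\widetilde{h}\in \widetilde{\mathcal {W}}:\,\Vert T_h\Vert =\beta }J_r(\widetilde{h})=\inf _{h\in \mathcal {W}:\,\Vert T_h\Vert =\beta }I_r(h), \tag{1.12} \]

with \(T_h\) the operator on \(L^2([0,1])\) defined as

\[ (T_hu)(x):=\int _{[0,1]}h(x,y)u(y)\,dy \tag{1.13} \]

for \(h\in \mathcal {W}\), \(u\in L^2([0,1])\) and \(x\in [0,1]\), and where \(\Vert T_h\Vert \) is the operator norm of \(T_h\) with respect to the \(L^2\)-norm on \(L^2([0,1])\).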

Proof

The proof is standard and follows from Theorem 1.1, combined with the observation that \(\lambda _n/n=\Vert T_{h^{G_n}}\Vert \). See [2, Theorem 1.4]. \(\square \)
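The identity between \(\lambda _n/n\) and the operator norm of the empirical graphon can be checked numerically on small graphs. The following is a minimal sketch: the function name `largest_eigenvalue_scaled` is hypothetical, and power iteration on the shifted matrix A + I is a choice made here for simplicity (the shift avoids oscillation on bipartite graphs), not a method used in the paper.

```python
def largest_eigenvalue_scaled(n, edges, iterations=500):
    """Approximate lambda_n / n for the adjacency matrix of a graph on vertices 1..n."""
    adj = [[0.0] * n for _ in range(n)]
    for i, j in edges:
        adj[i - 1][j - 1] = adj[j - 1][i - 1] = 1.0
    # The all-ones start vector has a positive overlap with every Perron eigenvector.
    v = [1.0] * n
    for _ in range(iterations):
        # Multiply by A + I and renormalise.
        w = [v[i] + sum(adj[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    # Rayleigh quotient of A at the unit vector v.
    av = [sum(adj[i][j] * v[j] for j in range(n)) for i in range(n)]
    lam = sum(v[i] * av[i] for i in range(n))
    return lam / n
```

For the complete graph on four vertices the largest eigenvalue is 3, so the function returns a value close to 0.75. Convergence can be slow when the two largest eigenvalues are nearly equal, in which case `iterations` would need to be increased.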

Note that Chakrabarty et al. [2] already use the rate function \(J_r\), so their result is already an application of the results from this paper.

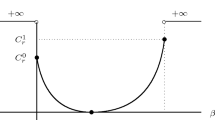

Put \(C_r=\Vert T_r\Vert \). Then, Chakrabarty et al. [2] show that the rate function \(\psi _r\) is continuous and unimodal on [0, 1], with a unique zero at \(C_r\), and that it is strictly decreasing and strictly increasing on \([0,C_r]\) and \([C_r,1]\) respectively. Furthermore, for every \(\beta \in [0,1]\), the set of minimisers of the variational formula for \(\psi _r(\beta )\) in (1.12) is non-empty and compact in \(\widetilde{\mathcal {W}}\). For \(\beta \not \in [0,1]\), \(\psi _r(\beta )=+\infty \). These results do not require boundedness away from 0 and 1 of the reference graphon.

One of the main results by Chakrabarty et al. [2] is the scaling of the rate function and the minimisers near \(\beta =C_r\) under the condition that r is bounded away from 0 and 1. We generalise it to the following theorem, which we prove in Sect. 4.

Theorem 1.3

Assume there exists \(\nu :[0,1]\rightarrow [0,1]\) such that \(r(x,y)=\nu (x)\nu (y)\). Then, subject to (1.5),

with

where

Furthermore, let \(h_\beta \in \mathcal {W}\) be any minimiser of the second infimum in (1.12). Then,

with

1.5 Discussion

1.5.1 Conditions on the Reference Graphon

In [1], Borgs et al. proved an LDP for a block model in which the reference graphon consists of rational-length blocks. This result was later strengthened to include blocks of arbitrary length by Grebík and Pikhurko [8]. In their model, the reference graphon may take on the values 0 or 1. It would be interesting to generalise both LDPs to a single LDP for graphons that are partly block graphons (that can attain the values 0 and 1), and partly satisfy the integrability condition of this paper. Since the condition \(\log r,\log (1-r)\in L^1([0,1]^2)\) pervades almost every step of the proof of Theorem 1.1, it appears to be difficult to obtain an LDP for arbitrary reference graphons. It might be the case that the inhomogeneous ERRG model does not satisfy an LDP for certain reference graphons.

1.5.2 Non-Dense Random Graphs

Graph limit theory provides the right framework for studying sequences of dense graphs, since non-dense graphs converge to the zero graphon. A similar framework for non-dense graphs is still in development, so not much is known yet for large deviations of non-dense random graphs. We refer to the bibliographical notes in [4, Chapter 6] and [3, Sect. 3] for a short review of recent results in sparse graph limit theory and sparse large deviations. Although this paper also considers dense random graphs, the reference graphon is allowed to approach 0. Such reference graphons can induce dense random graphs with non-dense subgraphs. This is in contrast to the setting of Dhara and Sen [7], where every subgraph is also dense.

1.5.3 Rate Function for the LDP for Block Models

Like Dhara and Sen [7], Borgs et al. [1] used a lower semi-continuous envelope as their rate function. The rate function from this paper can also be used in [1]. The authors also derive an LDP for homomorphism densities and define a symmetric and symmetry breaking regime. The existence of a symmetry breaking regime is only established for specific block models, in part due to the intractable nature of the rate function. The precise boundary between the symmetric and non-symmetric regimes was also only identified for bipartite ERRGs, again due to the intractability of the rate function. The results from this paper might aid in resolving the general cases.

2 The Rate Function

We show that the candidate rate function is a good rate function, i.e., does not equal infinity everywhere and has compact level sets. Since the first requirement is clear and \(\widetilde{\mathcal {W}}\) is compact, it suffices to show that \(J_r\) is lower semi-continuous. First, we need to check that \(J_r\) is well-defined on the quotient space \(\widetilde{\mathcal {W}}\). As a consequence of lower semi-continuity, we prove that \(J_r\) equals the rate function \(J'_r\) as defined by Dhara and Sen [7] (see (1.9)).

Lemma 2.1

The function \(I_r\) is continuous in the \(L^2\)-topology on \(\mathcal {W}\).

Proof

Let \((f_n)_{n\in \mathbb {N}}\subset \mathcal {W}\) and \(f\in \mathcal {W}\) such that \((f_n)_{n\in \mathbb {N}}\) converges to f in \(L^2([0,1]^2)\). Note that for all \(h\in \mathcal {W}\),

for all \((x,y)\in [0,1]^2\), where we use that \(x\mapsto x\log x\) has a minimum \(-\frac{1}{e}\) on [0, 1]. Because \(\log r,\log (1- r)\in L^1([0,1]^2)\), the bound above is integrable.

Let \((n_k)_{k\in \mathbb {N}}\) be a sequence of integers tending to infinity. Since \(f_n\rightarrow f\) as \(n\rightarrow \infty \) in \(L^2([0,1]^2)\), there exists a subsequence \((n_{k_l})_{l\in \mathbb {N}}\) such that \(f_{n_{k_l}}\rightarrow f\) as \(l\rightarrow \infty \) Lebesgue-almost everywhere. By continuity, \(\mathcal {R}(f_{n_{k_l}}(x,y)\mid r(x,y))\rightarrow \mathcal {R}(f(x,y)\mid r(x,y))\) Lebesgue-almost everywhere. By the dominated convergence theorem, using the bound from (2.1), we have \( I_r(f_{n_{k_l}})\rightarrow I_r(f)\) as \(l\rightarrow \infty \). Thus, for every sequence \((n_k)_{k\in \mathbb {N}}\) there exists a subsequence \((n_{k_l})_{l\in \mathbb {N}}\) such that \( I_r(f_{n_{k_l}})\rightarrow I_r(f)\) as \(l\rightarrow \infty \). Hence, \( I_r(f_n)\rightarrow I_r(f)\) as \(n\rightarrow \infty \). \(\square \)

Lemma 2.2

Let \(f,g\in \mathcal {W}\) be such that \(\delta _\square (f,g)=0\). Then \(\inf _{\phi \in \mathcal {M}} I_{r}(f^\phi )=\inf _{\phi \in \mathcal {M}} I_{r}(g^\phi )\).

Proof

Note that by [9, Corollary 8.14], \(\delta _\square (f,g)=0\) if and only if there exists a sequence \((\phi _n)_{n\in \mathbb {N}}\subset \mathcal {M}\) such that \(\Vert f ^{\phi _n}-g\Vert _{L^2}\rightarrow 0\) as \(n\rightarrow \infty \). Since \(\Vert h_1^\phi -h_2^\phi \Vert _{L^2}=\Vert h_1-h_2\Vert _{L^2}\) for all \(h_1,h_2\in \mathcal {W}\) and \(\phi \in \mathcal {M}\), we find that \(\Vert (f ^{\phi _n}) ^\phi -g ^\phi \Vert _{L^2}\rightarrow 0\) for all \(\phi \in \mathcal {M}\). Thus, by Lemma 2.1, \( I_r((f ^{\phi _n}) ^\phi )\rightarrow I_r(g ^\phi )\) as \(n\rightarrow \infty \). Because this holds for all \(\phi \in \mathcal {M}\), we obtain

By symmetry, the reverse inequality also holds. \(\square \)

By Lemma 2.2, \(J_r\) can also be expressed as

so

Since \(J_r\ge J_r'\), we have \(J_r=J_r'\) if \(J_r\) is lower semi-continuous on \(\widetilde{\mathcal {W}}\).

Theorem 2.3

Subject to (1.5) and (1.6), the function \( J_r\) is lower semi-continuous on \(\widetilde{\mathcal {W}}\).

Proof

Let \(\widetilde{f}\in \widetilde{\mathcal {W}}\) and \((\widetilde{f}_n)_{n\in \mathbb {N}}\subset \widetilde{\mathcal {W}}\) such that \(\widetilde{f}_n\rightarrow \widetilde{f}\) in \(\delta _\square \). Without loss of generality, we may assume that \(f_n\rightarrow f\) in \(d_\square \). Let \(\Delta _n:=f_n-f\in \mathcal {W}_1:=\{f-g\mid f,g\in \mathcal {W}\}\). Then, \(\Delta _n\rightarrow 0\) in \(d_\square \) and by an easy computation,

for every \(n\in \mathbb {N}\), \(\phi \in \mathcal {M}\) and \(p\in (0,1)\). Thus,

for some \(p\in (0,1)\), arbitrary \(\varepsilon >0\) and some sequence \((\phi _n)_{n\in \mathbb {N}}\subset \mathcal {M}\). The second inequality follows from the lower semi-continuity of \(I_p\) on \(\mathcal {W}\). The last inequality is obtained by noting that \(\Delta _{n}^{\phi _n}\rightarrow 0\) in \(d_\square \) and \(\log r,\log (1-r)\in L^1([0,1]^2)\) and applying [9, Lemma 8.22]. Because \(\varepsilon >0\) is arbitrary, the proof is complete. \(\square \)

Corollary 2.4

For all \(\widetilde{f}\in \widetilde{\mathcal {W}}\), \( J_r(\widetilde{f})= J_r'(\widetilde{f})\).

3 The Upper Bound

The proof of the upper bound is an adaptation of the proof by Dhara and Sen [7, Proposition 3.1]. It suffices to prove the following result.

Theorem 3.1

Let \(\varepsilon >0\) and \(\widetilde{f}\in \widetilde{\mathcal {W}}\). Then, there exists an \(\eta (\varepsilon )>0\) such that, for all \(\eta \in (0,\eta (\varepsilon ))\),

with \(\widetilde{B}(\widetilde{f},\eta )=\{\widetilde{g}\in \widetilde{\mathcal {W}}\mid \delta _\square (\widetilde{f},\widetilde{g})\le \eta \}\) and \(B(\widetilde{f},4\varepsilon )=\{g\in \mathcal {W}\mid \delta _\square (\widetilde{f},\widetilde{g})\le 4\varepsilon \}\).

The proof is done via the level-n approximants \((\overline{r}_n)_{n\in \mathbb {N}}\) of r, which are of the form (1.4), with

Dhara and Sen show that the distributions \(\frac{1}{n^2}\log \mathbb {P}_{n,r_n}\) are well-approximated by \(\frac{1}{n^2}\log \mathbb {P}_{n,\overline{r}_k}\) for n large enough and some fixed k and that the rate function \(I_r\) is well-approximated by \(I_{\overline{r}_k}\) for k large enough. In the case that r is bounded away from 0 and 1, Dhara and Sen use Lipschitz continuity of the logarithm on a closed interval. If r tends to 0, \(\log \mathbb {P}_{n,r_n}\) and \(\log \mathbb {P}_{n,\overline{r}_k}\) might differ by large amounts as \(n\rightarrow \infty \). Thus, we require more control over the approximation in this paper. We obtain this by precisely counting the points in the unit square where \(\log r_n\) and \(\log \overline{r}_k\) are far apart and showing that this area tends to 0 sufficiently fast. The proof is given in Sect. 3.2. In Sect. 3.1, we show that the rate function induced by the level-n approximants approximates the rate function induced by r well. In Sect. 3.3, we finish the proof of Theorem 3.1. This part of the proof does not require r to be bounded away from 0 and 1. Hence, this section does not contain any original content, but we still include it for the sake of completeness.

3.1 Block Graphon Approximants

By [4, Proposition 2.6], the level-n approximants converge to r in \(L^1\)-norm, and convergence almost everywhere follows from the Lebesgue differentiation theorem for sets of bounded eccentricity [11, Chapter 3, Corollary 1.7]. The following lemma shows that the level-n approximants satisfy (1.6). The second lemma is a generalisation of [7, Lemma 2.3].

Lemma 3.2

\(\Vert \log \overline{r}_n-\log r\Vert _{L^1}\rightarrow 0\) and \(\Vert \log (1-\overline{r}_n)-\log (1-r)\Vert _{L^1}\rightarrow 0\) as \(n\rightarrow \infty \).

Proof

First, note that, for \((x,y)\in B(i,j,n)\),

The equality follows from the fact that \(0\le r\le 1\), and the inequality follows from Jensen’s inequality. This upper bound is integrable, since

By the dominated convergence theorem and the fact that \(\overline{r}_n\) converges to r almost everywhere, \(\Vert \log \overline{r}_n-\log r\Vert _{L^1}\rightarrow 0\). The proof that \(\Vert \log (1-\overline{r}_n)-\log (1-r)\Vert _{L^1}\rightarrow 0\) is completely analogous. \(\square \)
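The convergence in Lemma 3.2 can be observed numerically. The sketch below rests on an assumption that is labelled as such: it takes the level-n approximant to be the block average of r over each \(B(i,j,n)\), which is consistent with the Jensen step in the proof but is not displayed in this text. Integrals are replaced by an m-by-m midpoint rule per block, and the names `block_averages` and `log_l1_gap` are hypothetical.

```python
from math import log


def block_averages(r, n, m=8):
    """Approximate the average of r over each block B(i,j,n) with an m x m midpoint rule."""
    avg = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            total = 0.0
            for a in range(m):
                for b in range(m):
                    x = (i + (a + 0.5) / m) / n
                    y = (j + (b + 0.5) / m) / n
                    total += r(x, y)
            avg[i][j] = total / m**2
    return avg


def log_l1_gap(r, n, m=8):
    """Approximate the L^1 distance between log(rbar_n) and log(r) on the same grid."""
    avg = block_averages(r, n, m)
    gap = 0.0
    for i in range(n):
        for j in range(n):
            for a in range(m):
                for b in range(m):
                    x = (i + (a + 0.5) / m) / n
                    y = (j + (b + 0.5) / m) / n
                    gap += abs(log(avg[i][j]) - log(r(x, y)))
    # Each quadrature cell has area 1 / (n * m)^2.
    return gap / (n * m) ** 2
```

For the rank-1 graphon `r = lambda x, y: x * y`, which is not bounded away from 0 but has an integrable logarithm, `log_l1_gap(r, n)` decreases as n grows. The midpoint rule never evaluates r on the boundary, so the singularity of the logarithm at 0 is only sampled approximately.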

Lemma 3.3

Let \(r\in \mathcal {W}\) and \((r_n)_{n\in \mathbb {N}}\subset \mathcal {W}\) that satisfy (1.5) and (1.6). Then, \(I_{r_n}(\widetilde{f})\rightarrow I_r(\widetilde{f})\) uniformly over \(f\in \mathcal {W}\) as \(n\rightarrow \infty \).

Proof

First note that \(\Vert \log r_n-\log r\Vert _{L^1}\rightarrow 0\) and \(\Vert \log (1-r_n)-\log (1-r)\Vert _{L^1}\rightarrow 0\) by Lemma 3.2. Furthermore, for all \(f\in \mathcal {W}\),

Hence, by the definition of the rate function on \(\widetilde{\mathcal {W}}\),

Since the bound is uniform over all \(\widetilde{f}\in \widetilde{\mathcal {W}}\), we obtain the desired result. \(\square \)

3.2 Approximation by a Fixed Block Graphon

The following lemma is a generalisation of [7, Lemma 3.2].

Lemma 3.4

Let \(r\in \mathcal {W}\) and \((r_n)_{n\in \mathbb {N}}\subset \mathcal {W}\) that satisfy (1.5) and (1.6). For all \(\varepsilon >0\) sufficiently small, there exist \(N_0=N_0(\varepsilon )\) and \(N_1=N_1(\varepsilon )\) such that for all \(n\ge N_1\ge k\ge N_0\), \(f\in \mathcal {W}\) and \(\eta >0\),

where \(\overline{r}_k\) is the level-k approximant of r as defined in Sect. 3.1.

Proof

Let \(\varepsilon >0\). Since \(\Vert \log r_n-\log r\Vert _{L^1},\Vert \log \overline{r}_n-\log r\Vert _{L^1}\rightarrow 0\) and \(\Vert \log (1-r_n)-\log (1-r)\Vert _{L^1},\Vert \log (1-\overline{r}_n)-\log (1-r)\Vert _{L^1}\rightarrow 0\) by Lemma 3.2, there exists an \(N_0\in \mathbb {N}\) such that \(\Vert \log r_n-\log \overline{r}_k\Vert _{L^1}<\varepsilon /4\) and \(\Vert \log (1-r_n)-\log (1-\overline{r}_k)\Vert _{L^1}<\varepsilon /4\) for all \(n\ge k\ge N_0\).

Note that

Fix \(n\ge k\ge N_0\), and let \(g\in \mathcal {W}\) be of the form (1.4). Denote by \(r_{n,uv}\) and \(g_{uv}\) the values of \(r_n\) and g in B(u, v, n) respectively. Then,

The quantity above closely resembles \(\Vert \log r_n-\log \overline{r}_k\Vert _{L^1}+\Vert \log (1-r_n)-\log (1-\overline{r}_k)\Vert _{L^1}\), except that it ‘over-counts’ part of \([0,1]^2\) and ignores other parts. We will make this precise.

For points \((\frac{u-1}{n},\frac{v-1}{n})\in [0,1]^2\) such that \(B(u,v,n)\subset B(i,j,k)\) for i, j with \(\left( \frac{u-1}{n},\frac{v-1}{n}\right) \in B(i,j,k)\), we have

Now define

and

The set \(C_{i,j,k}\) consists of the right-most and top-most points in the square B(i, j, k), and the set \(A_n\) is the part of \([0,1]^2\) which is over-counted in (3.9). For \((u,v)\in C_{i,j,k}\),

where we use that \(\lambda (B(u,v,n)\cap B(i,j,k))=(\frac{i}{k}-\frac{u-1}{n})(\frac{j}{k}-\frac{v-1}{n})\ge \frac{1}{k ^2n^2}\). Hence,

Since the second part tends to 0 as \(n\rightarrow \infty \), there exists an \(N_1=N_1(\varepsilon )\ge k\) such that \(k^2\Vert \log r_n-\log \overline{r}_k\Vert _{L^1(A_n)}<\varepsilon /4\) for all \(n\ge N_1\). The argument for the terms \(\left| \log \frac{1-r_{n,uv}}{1-\overline{r}_{k,ij}}\right| \) is completely analogous. Then

for all \(n\ge N_1\ge k\ge N_0\). Substituting this inequality into (3.8), we obtain

\(\square \)

3.3 Proof of the Upper Bound

The rest of the proof now follows as in [7]. For the sake of completeness, we repeat their argument in this section using the notation introduced in this paper.

Let \(\mathcal {M}_n\) be the set of permutations of n objects, and let \(G^{\phi _n}_n\) be the graph obtained by relabelling the vertices of \(G_n\) with the permutation \(\phi _n\in \mathcal {M}_n\). The following lemma shows that there exists a finite subset \(\mathcal {T}\subset \mathcal {M}\) such that, for all n large enough, the distribution of \(h^{G_n^{\phi _n}}\) can be approximated by \(h^{G_n,\tau }\) for some \(\tau \in {\mathcal {T}}\).

Lemma 3.5

Let \(r_k,f\in \mathcal {W}\) be of the form (1.4) with \(k\ge 1\). Then, for any \(\varepsilon >0\), there exists \(n_0=n_0(k,\varepsilon )\) and a finite set \(\mathcal {T}=\mathcal {T}(k,\varepsilon )\) such that for all \(n\ge n_0\) and \(\phi _n\in \mathcal {M}_n\), there exists \(\tau \in \mathcal {T}\) satisfying

Proof

See [7, Lemma 3.3]. \(\square \)

We are now ready to prove Theorem 3.1.

Proof of Theorem 3.1

Fix \(\varepsilon >0\) and \(\widetilde{f}\in \widetilde{\mathcal {W}}\). Recall the setup of Theorem 3.1. Because of Lemma 3.4, it suffices to prove that there exists an \(\eta (\varepsilon )>0\) such that, for all \(0<\eta <\eta (\varepsilon )\),

where k is chosen to be sufficiently large according to Lemma 3.4. Next, we apply a version of Szemerédi’s regularity lemma, as formulated in [4, Theorem 3.1]. This states that, for every \(\varepsilon >0\), there exists a finite set \(\mathcal {W}(\varepsilon )\subset \mathcal {W}\) such that, for every finite simple graph \(G_n\) on n vertices, there exist \(\phi _n\in \mathcal {M}_n\) and \(h\in \mathcal {W}(\varepsilon )\) such that \(h^{G^{\phi _n}_n}\in B(h,\varepsilon )\).

Let \(G_n\) be a graph drawn according to \(\mathbb {P}_{n,\overline{r}_k}\). Define

Then, by the result above,

Since \(\mathcal {W}(\varepsilon )\) is a finite set, it suffices to show that

for all \(h\in \mathcal {W}(\varepsilon )\) and \(\eta <\varepsilon \). Lemma 3.5 yields that the left-hand side of (3.21) is at most

where we use that \(|\mathcal {M}_n|=n!\) and \(\log n!=o(n^2)\). Since \(\mathcal {T}\) is a finite set, it is enough to show that for each \(\tau \in \mathcal {T}\),

By [4, Lemma 5.4], \(B(h_{\tau ^{-1}},2\varepsilon )\) is closed in the weak topology. Hence, we can apply the LDP upper bound in the weak topology, stated in [4, Theorem 5.1]. Although the upper bound in the weak topology was proved only for the homogeneous ERRG, the argument generalises to the inhomogeneous ERRG model from this paper. We refer to [10, Sect. 4] for more detail. Thus,

If the event in (3.21) is empty, then the bound is trivial. In order for the event to be non-empty, we must have that \(\delta _\square (\widetilde{h^{G_n}},\widetilde{f})\le \eta <\varepsilon \) and \(\delta _\square (\widetilde{h^{G_n}},\widetilde{h})\le \varepsilon \), so that \(\delta _\square (\widetilde{f},\widetilde{h})\le 2\varepsilon \). Hence, \(B(\widetilde{h},2\varepsilon )\subset B(\widetilde{f},4\varepsilon )\) and we obtain (3.21). The proof is finished by letting \(k\rightarrow \infty \) and applying Lemma 3.3. \(\square \)

4 The Rate Function for the Largest Eigenvalue

We sketch the proof of Theorem 1.3 as given by Chakrabarty et al. [2]. We only give details for Lemmas 4.1 and 4.2, which are generalisations of results in [2].

Note that when \(\beta =C_r\), the infimum in (1.12) is attained at \(h=r\) and \(\psi _r(C_r)=0\). Take \(\beta =C_r+\varepsilon \) with \(\varepsilon >0\) small, and assume that the infimum is attained by a graphon of the form \(h=r+\Delta _\varepsilon \), where \(\Delta _\varepsilon :[0,1]^2\rightarrow \mathbb {R}\) represents a perturbation of the graphon r.

The proof of Theorem 1.3 consists of showing that it suffices to consider \(\Delta _\varepsilon \) of the form \(\varepsilon \Delta \), identifying the optimal \(\Delta \) and calculating \(\psi _r\). Chakrabarty et al. [2] first prove that \(I_r(r+\Delta _\varepsilon )\ge 2\varepsilon ^2\) for any perturbation \(\Delta _\varepsilon \). The following lemma is an adaptation of [2, Lemma 3.1 and Lemma 3.2] and shows that \(I_r(r+\Delta _\varepsilon )\) is of order \(\varepsilon ^2\) in the case \(\Delta _\varepsilon =\varepsilon \Delta \).

Lemma 4.1

If \(\Delta _\varepsilon =\varepsilon ^\alpha \Delta \) on some measurable set \(B\subset [0,1]^2\), with \(\varepsilon ,\alpha >0\) and \(\Delta :[0,1]^2\rightarrow \mathbb {R}\), then the contribution of B to the cost \(I_r(r+\Delta _\varepsilon )\) is

Proof

Because \(\chi (a):=\mathcal {R}(a\mid b)\) is analytic for every \(a\in [0,1]\) and \(b\in (0,1)\), we have

where we can swap the integral and sum due to the fact that \(\int _{[0,1]^2}|\mathcal {R}(f(x,y)\mid r(x,y))|\,dx\,dy\) is uniformly bounded over all \(f\in \mathcal {W}\), since \(\log r,\log (1-r)\in L^1([0,1]^2)\). Furthermore, \(\chi (b)=\chi '(b)=0\) and \(\chi ''(b)=\frac{1}{b(1-b)}\). Hence,

\(\square \)
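The second-order behaviour used in the proof, namely \(\chi (b)=\chi '(b)=0\) and \(\chi ''(b)=\frac{1}{b(1-b)}\), can be sanity-checked pointwise. The sketch below assumes the standard Bernoulli relative entropy for \(\mathcal {R}\) and compares it with the quadratic term of its Taylor expansion at a single point; the function name `quadratic_approx` is hypothetical, and the check says nothing about the integrated statement of Lemma 4.1.

```python
from math import log


def rel_entropy(a, b):
    """Relative entropy R(a | b) for a in [0,1], b in (0,1), with 0 log 0 = 0."""
    def xlogy(x, y):
        return 0.0 if x == 0 else x * log(y)
    return xlogy(a, a / b) + xlogy(1 - a, (1 - a) / (1 - b))


def quadratic_approx(b, delta, eps):
    """Leading Taylor term of R(b + eps*delta | b): (eps*delta)^2 / (2 b (1 - b))."""
    return (eps * delta) ** 2 / (2 * b * (1 - b))
```

For b = 0.3, delta = 1 and eps = 0.001, the exact value and the quadratic term agree up to a relative error of order eps. The factor \(\frac{1}{b(1-b)}\) blows up as b approaches 0 or 1, which is where the integrability condition on the reference graphon becomes relevant.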

From the proof of Lemma 4.1 and [2, Lemma 3.1], it follows that optimal perturbations with \(\Delta _\varepsilon \) must satisfy \(\Vert \Delta _\varepsilon \Vert _{L^2}\asymp \varepsilon \), and hence it is desirable to have \(\Delta _\varepsilon =\varepsilon \Delta \). Chakrabarty et al. [2] argue through block graphon approximants that this is indeed the case. For this argument, we need the following lemma, which shows that block graphons approximate the rate function well.

Lemma 4.2

Let \(\overline{r}_n\) and \(\overline{f}_n\) be the level-n approximants of r and \(f\in \mathcal {W}\), as defined in Sect. 3.1. Then, \(\lim _{n\rightarrow \infty }I_{\overline{r}_n}(\overline{f}_n)=I_r(f)\).

Proof

Let \(\varepsilon >0\). By Lemma 3.3, \(I_{r_N}(f)\rightarrow I_r(f)\) uniformly over all \(f\in \mathcal {W}\). Hence, there exists \(M_1=M_1(\varepsilon )\) such that for all \(N\ge M_1\), \(|I_{r_N}(f_N)-I_{r}(f_N)|<\varepsilon /2\). Furthermore, \(f_N\rightarrow f\) in \(L^2\) and \(I_r\) is continuous in the \(L^2\)-topology in \(\mathcal {W}\) by Lemma 2.1. Thus, there exists \(M_2=M_2(\varepsilon )\) such that \(|I_r(f_N)-I_r(f)|<\varepsilon /2\) for all \(N\ge M_2\). Choosing \(M:=\max \{M_1,M_2\}\) completes the proof. \(\square \)

The remainder of the proof now follows as in [2]. We give a brief summary. Using Lemma 4.1 and exploiting the property that r is of rank 1, Chakrabarty et al. [2] show that, in the case \(\Delta _\varepsilon =\varepsilon \Delta \),

with

Note that the integral in the expression above may be infinite, so the infimum is attained at some \(\Delta \) that counteracts the factor \(\frac{1}{r(1-r)}\). Using Lagrange multipliers, we obtain that the infimum is attained for

and

with \(B_r\) as defined in (1.16). Chakrabarty et al. [2] conclude the proof by showing that perturbations \(\Delta _\varepsilon \) that are not of the form \(\Delta _\varepsilon =\varepsilon \Delta \) are asymptotically worse as \(\varepsilon \rightarrow 0\). They do this via block graphons, and use Lemma 4.2.

References

[1] Borgs, C., Chayes, J., Gaudio, J., Petti, S., Sen, S.: A large deviation principle for block models. arXiv:2007.14508 (2020)

[2] Chakrabarty, A., Hazra, R.S., den Hollander, F., Sfragara, M.: Large deviation principle for the maximal eigenvalue of inhomogeneous Erdős-Rényi random graphs. arXiv:2008.08367 (2020)

[3] Chatterjee, S.: An introduction to large deviations for random graphs. Bull. Am. Math. Soc. 53(4), 617–642 (2016)

[4] Chatterjee, S.: Large Deviations for Random Graphs, 1st edn. Springer, New York (2017)

[5] Chatterjee, S., Diaconis, P.: Estimating and understanding exponential random graph models. Ann. Statist. 41(5), 2428–2461 (2013)

[6] Chatterjee, S., Varadhan, S.R.S.: The large deviation principle for the Erdős-Rényi random graph. Eur. J. Comb. 32(7), 1000–1017 (2011). (Homomorphisms and Limits)

[7] Dhara, S., Sen, S.: Large deviation for uniform graphs with given degrees. arXiv:1904.07666 (2020)

[8] Grebík, J., Pikhurko, O.: Large deviation principles for block and step graphon random graph models. arXiv:2101.07025 (2021)

[9] Lovász, L.: Large Networks and Graph Limits, 1st edn. American Mathematical Society, USA (2012)

[10] Markering, M.J.R.: The large deviation principle for inhomogeneous Erdős-Rényi random graphs. Bachelor thesis, Leiden University (2020)

[11] Stein, E.M., Shakarchi, R.: Real analysis: measure theory, integration & Hilbert spaces. Princeton Lectures in Analysis, III. Princeton University Press (2005)

Acknowledgements

This work was initiated as a Bachelor thesis at Leiden University under the supervision of Frank den Hollander [10]. The author thanks him for his continued guidance and support over the past year. The author is very grateful for the many mathematical discussions, as well as his thorough feedback on the thesis and help with this paper. The author also thanks an anonymous referee for their careful review.


Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Markering, M. The Large Deviation Principle for Inhomogeneous Erdős–Rényi Random Graphs. J Theor Probab 36, 711–727 (2023). https://doi.org/10.1007/s10959-022-01181-1
