Abstract

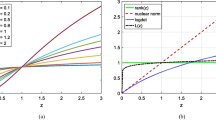

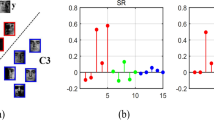

This paper considers the optimization problem of supervised distance preserving projection (SDPP) for data dimensionality reduction, which is equivalent to a rank constrained least squares semidefinite program (RCLSSDP). Owing to the combinatorial nature of the rank function, rank constrained optimization problems are NP-hard in most cases. To overcome the difficulties caused by the rank constraint, a difference-of-convex (DC) regularization strategy is employed, which transforms RCLSSDP into a DC program. To solve the resulting DC problem, an inexact proximal DC algorithm with sieving strategy (s-iPDCA) is proposed, whose subproblems are solved by an accelerated block coordinate descent method. Global convergence of the sequence generated by s-iPDCA is proved. To illustrate the efficiency of the proposed algorithm for solving RCLSSDP, s-iPDCA is compared with the classical proximal DC algorithm, the proximal gradient method, the proximal gradient-DC algorithm and the proximal DC algorithm with extrapolation in a dimensionality reduction experiment on the COIL-20 database. In terms of both computation time and solution quality, the numerical results show that s-iPDCA outperforms the other methods. Moreover, dimensionality reduction experiments for face recognition on the ORL and YaleB databases show that rank constrained kernel SDPP is efficient and competitive with kernel semidefinite SDPP and kernel principal component analysis in terms of recognition accuracy.

Data Availability

Enquiries about data availability should be directed to the authors.

Acknowledgements

The authors would like to thank the Associate Editor and anonymous referees for their helpful suggestions. The work of Bo Yu and Mingcai Ding was supported by the National Natural Science Foundation of China (Grant No. 11971092). The work of Xiaoliang Song was supported by the Fundamental Research Funds for the Central Universities (Grant No. DUT20RC(3)079).

Funding

The authors have not disclosed any funding.

Ethics declarations

Conflict of interest

The authors have not disclosed any competing interest.

Appendices

Appendix A Derivations of (2) and (62)

First, we derive (2). Let \({\mathbf {U}} = {\mathbf {P}}{\mathbf {P}}^{\top }\); then the SDP relaxation of (1) can be formulated as

Suppose the number of non-zero elements in the i-th row of the graph matrix \({\mathbf {G}}\) is \(n_i\) and \({\mathbf {G}}_{i,j_k}=1,k=1,\cdots ,n_i\); then (A1) can be simplified to

Let \(l := \sum _{s=1}^{i-1}n_s+k\) be the index of the k-th non-zero element in the i-th row of \({\mathbf {G}}\). Let \(\varvec{\tau }_l = {\varvec{x}}_{i} -{\varvec{x}}_{j_k}\) and \(\varvec{b}_l = \Vert {\varvec{y}}_{i}-{\varvec{y}}_{j_k}\Vert ^2\). Then (A1) can be formulated as

where \(p=\sum _{i,j=1}^n {\mathbf {G}}_{i,j}\). Clearly, (A3) can be formulated into a least squares form:

where \({\mathcal {A}}:{\mathcal {S}}^d_+\rightarrow {\mathbb {R}}^p\) is a linear operator that can be explicitly represented as

Then \({\mathcal {A}}({\mathbf {U}})\) can be computed as

The computational cost of this evaluation is \(O((d^2+d)p)\).

The least squares SDP in (62) can be obtained from (61) in the same way, so we omit its derivation.
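The construction in this appendix can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. It assumes that the samples \({\varvec{x}}_i\) and responses \({\varvec{y}}_i\) are stored as the rows of arrays `X` and `Y`, that \({\mathbf {G}}\) is a binary matrix, and that the operator acts entrywise as \({\mathcal {A}}({\mathbf {U}})_l = \varvec{\tau }_l^{\top }{\mathbf {U}}\varvec{\tau }_l\), which is what the substitution \({\mathbf {U}} = {\mathbf {P}}{\mathbf {P}}^{\top }\) suggests. The function names `build_differences` and `apply_A` are hypothetical.

```python
import numpy as np


def build_differences(G, X, Y):
    """Collect tau_l = x_i - x_{j_k} and b_l = ||y_i - y_{j_k}||^2.

    Assumptions (not from the paper): X is n-by-d and Y is n-by-m with one
    sample per row, and G is an n-by-n binary graph matrix.
    """
    # np.nonzero scans G in row-major order, so position l in the output
    # corresponds to the k-th non-zero entry of row i, matching the index
    # l = sum_{s<i} n_s + k (up to 0-based indexing).
    rows, cols = np.nonzero(G)
    T = (X[rows] - X[cols]).T              # d-by-p, column l is tau_l
    dY = Y[rows] - Y[cols]
    b = np.einsum("ij,ij->i", dY, dY)      # squared response distances
    return T, b


def apply_A(U, T):
    """Evaluate the assumed operator A(U)_l = tau_l^T U tau_l for all l.

    U @ T costs about d^2 * p multiplications and the column-wise inner
    products cost about d * p, in line with the O((d^2 + d) p) estimate.
    """
    return np.einsum("ij,ij->j", T, U @ T)
```

For a feasible point \({\mathbf {U}} = {\mathbf {P}}{\mathbf {P}}^{\top }\), `apply_A(U, T)` returns the squared projected distances \(\Vert {\mathbf {P}}^{\top }\varvec{\tau }_l\Vert ^2\), so `apply_A(U, T) - b` is the residual vector that the least squares form penalizes. Forming the full matrix `T.T @ U @ T` and taking its diagonal would give the same values at a cost that grows with \(p^2\), which is why the column-wise product is used here.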

Appendix B Derivation of (19)

The subproblem of Algorithm 3 can be formulated as

By ignoring the constant term, (B5) can be simplified as

where \({\varvec{\Phi }}_c^{k} = {\mathbf {W}}^k+\alpha {\mathbf {U}}^k - c{\mathbf {I}}\). To obtain the dual problem, we introduce two auxiliary variables: \({\varvec{s}}\) and \({\mathbf {T}}\) such that (B6) is equivalent to

The Lagrange function of (B7) can be expressed as

Then the objective function of the dual problem of (B5) can be obtained by

where the last equality uses the fact that \(\delta _{{\mathcal {S}}_+^d}^*(-{\mathbf {Y}}) = \delta _{{\mathcal {S}}_-^d}(-{\mathbf {Y}}) = \delta _{{\mathcal {S}}_+^d}({\mathbf {Y}})\). Then the dual problem of (B6) can be expressed as

Thus the dual problem of (B5) can be equivalently formulated as the following minimization problem:
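For readers who want the conjugate identity used in the derivation above spelled out, the following is a short sketch of the standard argument. The symbol \({\mathbf {Z}}\) is introduced here only as a dummy variable and is not part of the original derivation.

\[
\delta _{{\mathcal {S}}_+^d}^*(-{\mathbf {Y}})
= \sup _{{\mathbf {Z}}\in {\mathcal {S}}_+^d}\langle -{\mathbf {Y}},{\mathbf {Z}}\rangle
= \begin{cases} 0, & {\mathbf {Y}}\in {\mathcal {S}}_+^d,\\ +\infty , & \text{otherwise} \end{cases}
= \delta _{{\mathcal {S}}_+^d}({\mathbf {Y}}).
\]

If \({\mathbf {Y}}\in {\mathcal {S}}_+^d\), then \(\langle {\mathbf {Y}},{\mathbf {Z}}\rangle \ge 0\) for every \({\mathbf {Z}}\in {\mathcal {S}}_+^d\), so the supremum equals 0 and is attained at \({\mathbf {Z}}={\mathbf {0}}\). Otherwise \({\mathbf {Y}}\) has a unit eigenvector \({\varvec{v}}\) with eigenvalue \(\lambda <0\), and choosing \({\mathbf {Z}} = t{\varvec{v}}{\varvec{v}}^{\top }\) gives \(\langle -{\mathbf {Y}},{\mathbf {Z}}\rangle = -t\lambda \rightarrow +\infty \) as \(t\rightarrow \infty \).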

Appendix C Proof of the Statements (1)–(5) in Proposition 7

For statement (1), since \({\mathbf {U}}^{k_{l+1}} = {\mathbf {V}}^{k+1}\) is the stability center generated in a serious step, condition (18) holds, that is,

Then we have

Consequently,

where the second equality follows from the convexity of \(g_c\) and the fact that \({\mathbf {W}}^{k_l}\in \partial g_c({\mathbf {U}}^{k_l})\), and the first inequality follows from the convexity of \(g_c\).

For statement (2), since \({\mathbf {U}}^{k_{l+1}}\in {\mathcal {S}}_+^d\) is an inexact solution of (15) with inexact term \(\varvec{\Delta }^{k_{l+1}}\), we have

Since \(G_c^{k_l}({\mathbf {U}})-\langle \varvec{\Delta }^{k_{l+1}},{\mathbf {U}}\rangle \) is strongly convex, the following inequality holds:

Thus, we have

where the last equality follows from the convexity of \(g_c\) and the fact that \({\mathbf {W}}^{k_l}\in \partial g_c({\mathbf {U}}^{k_l})\). Similar to (B13), the following inequality holds:

Consequently, we have

where the second inequality follows from the convexity of \(g_c\) and the Fenchel–Young inequality applied to \(g_c\). The last inequality is due to (B15).

For statement (3), we first note from Proposition 6 that \(\left\{ {\mathbf {U}}^{k_l} \right\} \) is bounded. The boundedness of \(\left\{ {\mathbf {W}}^{k_l} \right\} \) follows immediately from the finite-valued property and the convexity of \(g_c\) and the fact that \({\mathbf {W}}^{k_l}\in \partial g_c({\mathbf {U}}^{k_l})\). The boundedness of \(\left\{ \varvec{\Delta }^{k_{l}} \right\} \) follows from the fact that \(\lim _{l\rightarrow \infty }\epsilon _{k_{l+1}} = 0\). Hence the bounded sequence \(\left\{ ({\mathbf {U}}^{k_{l+1}}, {\mathbf {W}}^{k_l}, {\mathbf {U}}^{k_l},\varvec{\Delta }^{k_{l+1}})\right\} \) has a nonempty set of accumulation points \({\varvec{\Omega }}\).

For statement (4), since \(J_c\) is bounded below, from (54) and (55), we have that \(\left\{ E({\mathbf {U}}^{k_{l+1}},{\mathbf {W}}^{k_l},{\mathbf {U}}^{k_l},\varvec{\Delta }^{k_{l+1}}) \right\} \) is nonincreasing and bounded below. Thus, the limit \(\Upsilon = \lim _{l\rightarrow \infty }E({\mathbf {U}}^{k_{l+1}},{\mathbf {W}}^{k_l},{\mathbf {U}}^{k_l},\varvec{\Delta }^{k_{l+1}})\) exists. Next, we will prove that \(E \equiv \Upsilon \) on \({\varvec{\Omega }}\). Take any \((\widehat{{\mathbf {U}}},\widehat{{\mathbf {W}}},\widehat{{\mathbf {U}}},\widehat{\varvec{\Delta }})\in {\varvec{\Omega }}\). Since the above limit exists, there exists a subset \({\mathcal {L}}^{\prime }\subset {\mathcal {L}}\) such that

From the optimality of \({\mathbf {U}}^{k_{l+1}}\) and the feasibility of \(\widehat{{\mathbf {U}}}\) for the problem \(\min _{{\mathbf {U}}}G_c^{k_l}({\mathbf {U}})\), we have

Rearranging terms in the above inequality, we obtain that

From the boundedness of \(\left\{ {\mathbf {U}}^{k_l}\right\} \), \(\left\{ {\mathbf {W}}^{k_l}\right\} \) and \(\left\{ \varvec{\Delta }^{k_{l}}\right\} \), we have

Then, we have

where the fourth equality follows from the convexity of \(g_c\) and \({\mathbf {W}}^{k_l}\in \partial g_c({\mathbf {U}}^{k_l})\), and the last inequality follows from (54) as l tends to infinity. Since E is lower semicontinuous, we also have

Thus, \(E \equiv \Upsilon \) on \({\varvec{\Omega }}\).

For statement (5), the subdifferential of the function E at the point \(({\mathbf {U}}^{k_{l+1}},{\mathbf {W}}^{k_l},{\mathbf {U}}^{k_l},\varvec{\Delta }^{k_{l+1}})\) is

Since \({\mathbf {U}}^{k_{l+1}}\) is the optimal solution of (16), we have

Since \({\mathbf {U}}^{k_l}\in \partial g_{c}^{*}({\mathbf {W}}^{k_l})\), we have

Since \({\mathbf {U}}^{k_{l+1}}\) is the stability center of a serious step, it satisfies the test (18), i.e., \(\Vert \varvec{\Delta }^{k_{l+1}}\Vert _F\le (1-\kappa )\alpha \Vert {\mathbf {U}}^{k_{l+1}}-{\mathbf {U}}^{k_l}\Vert _F\). Thus there exists a constant \(\rho \) such that the following inequality holds:

This completes the proof. \(\square \)
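The test (18) invoked in statements (1) and (5) is simple to evaluate numerically. The sketch below is a minimal NumPy illustration of the inequality \(\Vert \varvec{\Delta }^{k_{l+1}}\Vert _F\le (1-\kappa )\alpha \Vert {\mathbf {U}}^{k_{l+1}}-{\mathbf {U}}^{k_l}\Vert _F\) as stated in the proof. The function name `passes_sieving_test` and its argument names are hypothetical, and what the algorithm does when the test fails is not covered here.

```python
import numpy as np


def passes_sieving_test(delta, U_new, U_center, alpha, kappa):
    """Check ||delta||_F <= (1 - kappa) * alpha * ||U_new - U_center||_F.

    delta    : inexactness term of the subproblem solution (d-by-d array)
    U_new    : candidate iterate (d-by-d array)
    U_center : current stability center (d-by-d array)
    alpha    : proximal parameter; kappa : constant from test (18)
    All names are illustrative, not taken from the authors' code.
    """
    lhs = np.linalg.norm(delta, "fro")
    rhs = (1.0 - kappa) * alpha * np.linalg.norm(U_new - U_center, "fro")
    return bool(lhs <= rhs)
```

A candidate that passes this check has an error term controlled by the step length \(\Vert {\mathbf {U}}^{k_{l+1}}-{\mathbf {U}}^{k_l}\Vert _F\), which is the property the bound in statement (5) relies on.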

Cite this article

Ding, M., Song, X. & Yu, B. An Inexact Proximal DC Algorithm with Sieving Strategy for Rank Constrained Least Squares Semidefinite Programming. J Sci Comput 91, 75 (2022). https://doi.org/10.1007/s10915-022-01845-4

DOI: https://doi.org/10.1007/s10915-022-01845-4