Abstract

In this work, we extend the regularization framework from Kronqvist et al. (Math Program 180(1):285–310, 2020) by incorporating several new regularization functions and develop a regularized single-tree search method for solving convex mixed-integer nonlinear programming (MINLP) problems. We propose a set of regularization functions based on distance metrics and Lagrangean approximations, used in the projection problem for finding new integer combinations to be used within the Outer-Approximation (OA) method. The new approach, called Regularized Outer-Approximation (ROA), has been implemented as part of the open-source Mixed-integer nonlinear decomposition toolbox for Pyomo—MindtPy. We compare the OA method with seven regularization function alternatives for ROA. Moreover, we extend the LP/NLP Branch and Bound method proposed by Quesada and Grossmann (Comput Chem Eng 16(10–11):937–947, 1992) to include regularization in an algorithm denoted RLP/NLP. We provide convergence guarantees for both ROA and RLP/NLP. Finally, we perform an extensive computational experiment considering all convex MINLP problems in the benchmark library MINLPLib. The computational results show clear advantages of using regularization combined with the OA method.

Notes

Retrieved on May 7, 2021, from http://www.minlplib.org/.

The datasets generated and analysed during the current study are available in the GitHub repository, https://zedongpeng.github.io/ROA-RLPNLP-Benchmark/.

References

Abhishek, K., Leyffer, S., Linderoth, J.: FilMINT: An outer approximation-based solver for convex mixed-integer nonlinear programs. INFORMS J. Comput. 22(4), 555–567 (2010)

Bagirov, A., Karmitsa, N., Mäkelä, M.M.: Introduction to Nonsmooth Optimization: Theory, Practice and Software. Springer, Berlin (2014)

Bernal, D.E., Chen, Q., Gong, F., Grossmann, I.E.: Mixed-integer nonlinear decomposition toolbox for Pyomo (MindtPy). In: Computer Aided Chemical Engineering, vol 44, pp. 895–900. Elsevier (2018)

Bernal, D.E., Vigerske, S., Trespalacios, F., Grossmann, I.E.: Improving the performance of DICOPT in convex MINLP problems using a feasibility pump. Optim. Methods Softw. 35(1), 171–190 (2020)

Boggs, P.T., Tolle, J.W.: Sequential quadratic programming. Acta Numer. 4, 1–51 (1995)

Bonami, P., Biegler, L.T., Conn, A.R., Cornuéjols, G., Grossmann, I.E., Laird, C.D., Lee, J., Lodi, A., Margot, F., Sawaya, N., Wächter, A.: An algorithmic framework for convex mixed integer nonlinear programs. Discret. Optim. 5(2), 186–204 (2008). https://doi.org/10.1016/j.disopt.2006.10.011

Bonami, P., Cornuéjols, G., Lodi, A., Margot, F.: A feasibility pump for mixed integer nonlinear programs. Math. Program. 119(2), 331–352 (2009)

Boukouvala, F., Misener, R., Floudas, C.A.: Global optimization advances in mixed-integer nonlinear programming, MINLP, and constrained derivative-free optimization, CDFO. Eur. J. Oper. Res. 252(3), 701–727 (2016)

Bussieck, M.R., Drud, A.S., Meeraus, A.: MINLPLib-a collection of test models for mixed-integer nonlinear programming. INFORMS J. Comput. 15(1), 114–119 (2003)

Bussieck, M.R., Dirkse, S.P., Vigerske, S.: PAVER 2.0: an open source environment for automated performance analysis of benchmarking data. J. Glob. Optim. 59(2–3), 259–275 (2014)

Coey, C., Lubin, M., Vielma, J.P.: Outer approximation with conic certificates for mixed-integer convex problems. Math. Program. Comput. 12, 249–293 (2020)

Conn, A.R., Gould, N.I., Toint, P.L.: Trust Region Methods. SIAM, Philadelphia (2000)

Dakin, R.J.: A tree-search algorithm for mixed integer programming problems. Comput. J. 8(3), 250–255 (1965)

Delfino, A., de Oliveira, W.: Outer-approximation algorithms for nonsmooth convex MINLP problems. Optimization 67(6), 797–819 (2018)

Duran, M.A., Grossmann, I.E.: An outer-approximation algorithm for a class of mixed-integer nonlinear programs. Math. Program. 36(3), 307–339 (1986)

Fletcher, R.: Practical Methods of Optimization. Wiley, Hoboken (2013)

Fletcher, R., Leyffer, S.: Solving mixed integer nonlinear programs by outer approximation. Math. Program. 66(1), 327–349 (1994)

Floudas, C.A.: Nonlinear and Mixed-Integer Optimization: Fundamentals and Applications. Oxford University Press, Oxford (1995)

Geoffrion, A.M.: Generalized Benders decomposition. J. Optim. Theory Appl. 10(4), 237–260 (1972)

Gupta, O.K., Ravindran, A.: Branch and bound experiments in convex nonlinear integer programming. Manage. Sci. 31(12), 1533–1546 (1985)

Han, S.P.: Superlinearly convergent variable metric algorithms for general nonlinear programming problems. Math. Program. 11(1), 263–282 (1976)

Hart, W.E., Laird, C.D., Watson, J.P., Woodruff, D.L., Hackebeil, G.A., Nicholson, B.L., Siirola, J.D., et al.: Pyomo-optimization Modeling in Python, vol. 67. Springer, Berlin (2017)

den Hertog, D., Kaliski, J., Roos, C., Terlaky, T.: A logarithmic barrier cutting plane method for convex programming. Ann. Oper. Res. 58(2), 67–98 (1995)

HSL: A collection of Fortran codes for large scale scientific computation (2007). http://www.hsl.rl.ac.uk

Hunting, M.: The AIMMS outer approximation algorithm for MINLP. Technical report, AIMMS B.V (2011)

IBM Corp, IBM: V20.1: User’s Manual for CPLEX. International Business Machines Corporation (2020) https://www.ibm.com/docs/en/icos/20.1.0?topic=cplex

Kelley, J.E., Jr.: The cutting-plane method for solving convex programs. J. Soc. Ind. Appl. Math. 8(4), 703–712 (1960)

Khajavirad, A., Sahinidis, N.V.: A hybrid LP/NLP paradigm for global optimization relaxations. Math. Program. Comput. 10(3), 383–421 (2018)

Kiwiel, K.C.: Proximal level bundle methods for convex nondifferentiable optimization, saddle-point problems and variational inequalities. Math. Program. 69(1–3), 89–109 (1995)

Kreyszig, E.: Introductory Functional Analysis with Applications, vol. 1. Wiley, New York (1978)

Kronqvist, J., Lundell, A., Westerlund, T.: The extended supporting hyperplane algorithm for convex mixed-integer nonlinear programming. J. Glob. Optim. 64(2), 249–272 (2016)

Kronqvist, J., Lundell, A., Westerlund, T.: Reformulations for utilizing separability when solving convex MINLP problems. J. Glob. Optim. 71(3), 571–592 (2018)

Kronqvist, J., Bernal, D.E., Lundell, A., Grossmann, I.E.: A review and comparison of solvers for convex MINLP. Optim. Eng. 20(2), 397–455 (2019)

Kronqvist, J., Bernal, D.E., Lundell, A., Westerlund, T.: A center-cut algorithm for quickly obtaining feasible solutions and solving convex MINLP problems. Comput. Chem. Eng. 122, 105–113 (2019)

Kronqvist, J., Bernal, D.E., Grossmann, I.E.: Using regularization and second order information in outer approximation for convex MINLP. Math. Program. 180(1), 285–310 (2020)

Lee, J., Leyffer, S.: Mixed Integer Nonlinear Programming, vol. 154. Springer, Berlin (2011)

Lemaréchal, C., Nemirovskii, A., Nesterov, Y.: New variants of bundle methods. Math. Program. 69(1–3), 111–147 (1995)

Liberti, L., Marinelli, F.: Mathematical programming: Turing completeness and applications to software analysis. J. Comb. Optim. 28(1), 82–104 (2014)

Liberti, L., Cafieri, S., Tarissan, F.: Reformulations in mathematical programming: a computational approach. In: Foundations of Computational Intelligence, vol. 3, pp. 153–234. Springer (2009)

Lundell, A., Kronqvist, J.: Integration of polyhedral outer approximation algorithms with MIP solvers through callbacks and lazy constraints. In: AIP Conference Proceedings, AIP Publishing LLC, vol. 2070, p. 020012 (2019)

Lundell, A., Kronqvist, J., Westerlund, T.: The supporting hyperplane optimization toolkit for convex MINLP. J. Glob. Optim. 1–41 (2022)

Nesterov, Y.: Introductory Lectures on Convex Optimization: A Basic Course, vol. 87. Springer, Berlin (2004)

de Oliveira, W.: Regularized optimization methods for convex MINLP problems. TOP 24(3), 665–692 (2016)

Quesada, I., Grossmann, I.E.: An LP/NLP based branch and bound algorithm for convex MINLP optimization problems. Comput. Chem. Eng. 16(10–11), 937–947 (1992)

Sawaya, N., Grossmann, I.E.: Reformulations, relaxations and cutting planes for linear generalized disjunctive programming (2008)

Slater, M.: Lagrange multipliers revisited. Cowles Foundation for Research in Economics, Yale University, Technical reports (1950)

Su, L., Tang, L., Bernal, D.E., Grossmann, I.E.: Improved quadratic cuts for convex mixed-integer nonlinear programs. Comput. Chem. Eng. 109, 77–95 (2018)

Tawarmalani, M., Sahinidis, N.V.: Convexification and Global Optimization in Continuous and Mixed-Integer Nonlinear Programming: Theory, Algorithms, Software, and Applications, vol. 65. Springer, Berlin (2013)

Trespalacios, F., Grossmann, I.E.: Review of mixed-integer nonlinear and generalized disjunctive programming methods. Chem. Ing. Tec. 86(7), 991–1012 (2014)

Wächter, A., Biegler, L.T.: On the implementation of an interior-point filter line-search algorithm for large-scale nonlinear programming. Math. Program. 106(1), 25–57 (2006)

Westerlund, T., Petterson, F.: An extended cutting plane method for solving convex MINLP problems. Comput. Chem. Eng. 19, S131–S136 (1995)

Acknowledgements

David E. Bernal and Ignacio E. Grossmann would like to thank the Center for Advanced Process Decision-making (CAPD) for its financial support. David E. Bernal also acknowledges USRA NASA Academic Mission Services (contract NNA16BD14C). Zedong Peng is grateful for the financial support from the China Scholarship Council (CSC) (No. 201906320320). Jan Kronqvist is grateful for the Newton International Fellowship by the Royal Society (NIF\R1\182194), a grant by the Swedish Cultural Foundation in Finland, and support by Digital Futures at KTH.

Appendix

1.1 Algorithmic description of OA and LP/NLP branch and bound

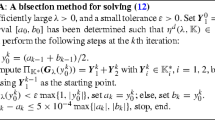

This section presents the algorithmic description of the Outer-Approximation method [15, 17], in Algorithm 3, and the LP/NLP Branch and Bound method [6, 44], in Algorithm 4.

1.2 Representing \(\ell _1\) and \(\ell _\infty \) norms using linear programming

This section shows valid reformulations of optimization problems with the \(\ell _1\) and \(\ell _\infty \) norms in the objective function, using auxiliary variables and linear constraints. These reformulations are exact in the sense that they preserve the local and global optima of the original problem [39]. They are particularly interesting because they allow the regularization problem MIP-Proj to be written as a Mixed-Integer Linear Programming (MILP) problem instead of a Mixed-Integer Quadratic Programming (MIQP) problem, as in the work by Kronqvist et al. [35]. Because MILP solution methods are more mature than those for MIQP, these problems can usually be solved faster in practice.

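When the norm-1 of a vector \({\mathbf {v}} \in V \subseteq {\mathbb {R}}^N\), whose components may be negative or positive, \(\ell _1({\mathbf {v}})=\left\| {\mathbf {v}}\right\| _1 = \sum _{i=1}^{N} |v_i|\), appears in the objective function, it can be reformulated using a set of linear constraints. By adding 2N non-negative slack variables \({\mathbf {s}}^+,{\mathbf {s}}^- \in {\mathbb {R}}_+^{N}\) and N linear equality constraints, the following reformulation is valid:

\[
\begin{aligned}
\min _{{\mathbf {v}} \in V} \left\| {\mathbf {v}}\right\| _1 \;=\; \min _{{\mathbf {v}} \in V,\, {\mathbf {s}}^+,\, {\mathbf {s}}^-} \quad & \sum _{i=1}^{N} \left( s_i^+ + s_i^- \right) \\
\text {s.t.} \quad & v_i = s_i^+ - s_i^-, \quad i = 1,\ldots ,N, \\
& {\mathbf {s}}^+, {\mathbf {s}}^- \in {\mathbb {R}}_+^{N}.
\end{aligned}
\]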

This reformulation is applied to the regularization problem MIP-Proj when considering the \(\ell _1\) regularization function as in (4), resulting in problem MIP-Proj-\(\ell _1\). It can also be potentially applied to the feasibility NLP problem NLP-f.
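The \(\ell _1\) slack splitting described above can be checked numerically on a small vector. The following is a minimal Python sketch, not the MindtPy implementation: the helper names `l1_split` and `l1_objective` and the sample vector are hypothetical and chosen only for illustration. It builds the slack values that are optimal for a fixed vector and confirms that any other feasible split gives a larger objective.

```python
def l1_split(v):
    """Split each component v_i into non-negative parts with v_i = s_plus_i - s_minus_i."""
    s_plus = [max(x, 0.0) for x in v]
    s_minus = [max(-x, 0.0) for x in v]
    return s_plus, s_minus


def l1_objective(s_plus, s_minus):
    """Linear objective of the reformulation: sum of all slack variables."""
    return sum(s_plus) + sum(s_minus)


v = [3.0, -1.5, 0.0, -2.0]  # hypothetical sample vector
s_plus, s_minus = l1_split(v)

# The N equality constraints v_i = s_plus_i - s_minus_i hold.
assert all(x == p - m for x, p, m in zip(v, s_plus, s_minus))

# At this split the linear objective equals the 1-norm of v.
assert l1_objective(s_plus, s_minus) == sum(abs(x) for x in v)

# Shifting both slacks by t > 0 keeps feasibility but increases the objective,
# which is why a minimization drives one slack of each pair to zero.
t = 0.5
shifted_plus = [p + t for p in s_plus]
shifted_minus = [m + t for m in s_minus]
assert all(x == p - m for x, p, m in zip(v, shifted_plus, shifted_minus))
assert l1_objective(shifted_plus, shifted_minus) > l1_objective(s_plus, s_minus)
```

In an actual MILP model the slacks would be decision variables handled by the solver; the sketch only verifies the algebra for a fixed vector.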

When the norm-\(\infty \) of a vector \({\mathbf {v}} \in V \subseteq {\mathbb {R}}^N\), whose components may be negative or positive, \(\ell _\infty ({\mathbf {v}})=\left\| {\mathbf {v}}\right\| _\infty = \max _{i \in \{1,\ldots ,N\}} |v_i|\), appears in the objective function, it can be reformulated using a set of linear constraints. By adding one non-negative slack variable \(s \in {\mathbb {R}}_+\) and 2N linear inequality constraints, the following reformulation is valid:

\[
\begin{aligned}
\min _{{\mathbf {v}} \in V} \left\| {\mathbf {v}}\right\| _\infty \;=\; \min _{{\mathbf {v}} \in V,\, s} \quad & s \\
\text {s.t.} \quad & v_i \le s, \quad i = 1,\ldots ,N, \\
& -v_i \le s, \quad i = 1,\ldots ,N, \\
& s \in {\mathbb {R}}_+.
\end{aligned}
\]
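The single-slack bound used for the \(\ell _\infty \) norm can be illustrated in the same way. This is a minimal Python sketch under the assumption that the 2N inequalities are \(v_i \le s\) and \(-v_i \le s\); the helper names `linf_slack` and `is_feasible` and the sample vector are hypothetical.

```python
def linf_slack(v):
    """Smallest s >= 0 satisfying v_i <= s and -v_i <= s for every component."""
    return max((abs(x) for x in v), default=0.0)


def is_feasible(v, s):
    """Check the 2N linear inequalities and the sign restriction on s."""
    return s >= 0.0 and all(x <= s and -x <= s for x in v)


v = [3.0, -4.5, 1.0]  # hypothetical sample vector
s = linf_slack(v)

# The smallest feasible slack equals the infinity norm of v.
assert s == max(abs(x) for x in v)
assert is_feasible(v, s)

# Any smaller slack violates at least one of the 2N inequalities.
assert not is_feasible(v, s - 0.1)
```

As with the \(\ell _1\) case, a solver would treat `s` as a decision variable; the check above only confirms that minimizing `s` under these constraints recovers the norm for a fixed vector.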

This is the usual choice for reformulating problem NLP-f, and can also be used to reformulate problem MIP-Proj with \(\ell _\infty \) regularization objective function, as in (5). This last problem formulation is:

1.3 Performance profiles for problem set 1

This section of the Appendix presents the performance profiles for the multi-tree and single-tree implementation of the methods included in this manuscript when solving all 358 convex MINLP problems in Problem Set 1. Figures 11 and 12 include the time and iteration performance profiles for the multi-tree implementation, respectively. Figures 13 and 14 include the time and iteration performance profiles for the single-tree implementation, respectively. Notice that we define iterations in the single-tree context as the number of NLP-I problems solved.
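For readers unfamiliar with this kind of plot, the following minimal Python sketch shows one common way such a profile can be tabulated. It assumes the profile reports, for each time or iteration budget, how many instances a method solved within that budget; this is an assumption about the plot type, not a description of the tooling used in the study. The function name `solved_within` and the sample data are hypothetical.

```python
def solved_within(measurements, budgets):
    """Count, for each budget, the instances solved at or below that budget.

    `measurements` holds one value per instance (time or iteration count);
    `None` marks an instance that was not solved.
    """
    return [
        sum(1 for m in measurements if m is not None and m <= b)
        for b in budgets
    ]


# Hypothetical solve times in seconds for five instances; None = not solved.
times = [0.8, 12.0, None, 95.0, 3.5]
budgets = [1, 10, 100, 900]

profile = solved_within(times, budgets)
print(list(zip(budgets, profile)))
```

The same function applies to iteration profiles by passing iteration counts instead of times.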

Cite this article

Bernal, D.E., Peng, Z., Kronqvist, J. et al. Alternative regularizations for Outer-Approximation algorithms for convex MINLP. J Glob Optim 84, 807–842 (2022). https://doi.org/10.1007/s10898-022-01178-4

DOI: https://doi.org/10.1007/s10898-022-01178-4