Abstract

This article is dedicated to the weight set decomposition of a multiobjective (mixed-)integer linear problem with three objectives. We propose an algorithm that returns a decomposition of the parameter set of the weighted sum scalarization by solving biobjective subproblems via Dichotomic Search which corresponds to a line exploration in the weight set. Additionally, we present theoretical results regarding the boundary of the weight set components that direct the line exploration. The resulting algorithm runs in output polynomial time, i.e. its running time is polynomial in the encoding length of both the input and output. Also, the proposed approach can be used for each weight set component individually and is able to give intermediate results, which can be seen as an “approximation” of the weight set component. We compare the running time of our method with the one of an existing algorithm and conduct a computational study that shows the competitiveness of our algorithm. Further, we give a state-of-the-art survey of algorithms in the literature.

Similar content being viewed by others

1 Introduction

Multiobjective optimization problems have gained a lot of attention lately, especially when it comes to problems with integer or both integer and continuous variables. From a theoretical point of view, many well-studied single objective optimization problems have been extended to a multiobjective setting, since real-world problems naturally often have more than one objective function. Instead of combining these to one objective function via weights and thereby fixing the importance of the different objective functions, one is interested in getting various or all “optimal” solutions to provide a decision support for the user. All optimal solutions for a multiobjective problem, also known as efficient solutions, and their objective function values denoted as nondominated images, are in general not computable by combining objective functions and then solving such a weighted sum problem. Still, this weighted sum scalarization is a useful and broadly applied scalarization for multiobjective optimization even though the optimal solutions obtained, the so-called supported efficient solutions and their corresponding supported nondominated images, are only a fraction of all efficient solutions and all nondominated images, respectively. Though the number of supported nondominated images may be exponential in the size of the input, this may hold true even if we restrict ourselves to extreme supported nondominated images, which are additionally extreme points of the convex hull of all images.Footnote 1

The weight set decomposition has become a useful tool to find all extreme supported nondominated images: The weight set is the set of eligible parameters for the weighted sum scalarization, i.e. all positive weights. This set can be decomposed into weight set components for every extreme supported nondominated image such that each component consists of those weights for which the corresponding image is optimal for a weighted sum problem with this particular weight. As the weight set components are convex polytopes, this structure is of considerable aid when computing extreme supported nondominated images. Moreover, knowledge about the weight set provides insights about the adjacency structure of the nondominated set which expands the work of Gorski, Klamroth, and Ruzika [13].

In this article, we present a new algorithm for computing the weight set decomposition and, thereby, the set of extreme supported nondominated images of a triobjective mixed-integer linear problem. It is applicable to a broad class of multiobjective problems. For this purpose, we make use of the properties of the weight set components and the Dichotomic Search. Furthermore, we prove that our algorithm runs in output polynomial time, that is, its running time is polynomial in the encoding length of both the input and output. In a computational study, we examine and present the benefits of our algorithm for practical usages. Additionally, the algorithm has various innovative properties such as at every intermediate step of the algorithm, the current subset of the weight set component has a polyhedral structure and is convex. This property can be used for the computation of an “approximation” of weight set components. However, this is not in the focus of this article.

1.1 Related work

For biobjective problems, the set of all extreme supported nondominated images can be computed by Dichotomic Search [2, 7]: Starting with the two lexicographically optimal images, the normalized normal vector of the line passing through these images is used as a weight vector for the weighted sum problem. Solving this, either the two existing points are optimal or a new supported nondominated image is computed which then yields a better objective function value. In the former case, the algorithm stops searching new nondominated points between the existing points and continues investigating other pairs of points. In the latter case, this procedure is repeated with the newly found point and either of the original points. This procedure yields a superset of the set of the extreme supported nondominated images and it also provides the weight set decomposition of the one dimensional weight set.

For problems with more than two objective functions, only a few methods for finding all extreme supported nondominated images are known. These methods can be categorized into two approaches: The methods of Bökler and Mutzel [5], Özpeynirci and Köksalan [21] and Przybylski, Gandibleux, and Ehrgott [22] iteratively decompose implicitly or explicitly the weight set using supersets of the components for any known supported nondominated image. In contrast, Alves and Costa [1] use subsets of the components for any known supported nondominated image and iteratively extend the known area of the weight set components.

Przybylski, Gandibleux, and Ehrgott [22] propose the first algorithm to find all extreme supported nondominated images for general multiobjective (mixed-) integer problems and present basic properties of the weight set. Originally, their algorithm has been designed for three objectives but can be recursively applied to problems with more objectives. Initialized with lexicographically optimal images, it decomposes the weight set into components using the known images. That is, the weight set is decomposed such that a weight vector belongs to the component of an image if the weighted sum of this image is better than for the other known images. For two adjacent components the common subedge is searched using Dichotomic Search and either it is stated that this is indeed a common edge or a new image is found. This is repeated until all edges for all supersets of the weight set components are indeed edges of the components. Additionally, Przybylski, Gandibleux, and Ehrgott [22] are the first to provide a computational study of a weight set decomposition algorithm for problems with three objectives.

Özpeynirci and Köksalan [21] present an algorithm that implicitly utilizes supersets of weight set components. It generalizes the work of Aneja and Nair [2] and Cohon [7] and can be seen as a multiobjective Dichotomic Search. The algorithm is initialized with infeasible but nondominated “dummy images” and the corresponding hyperplane of these points is considered. Using the (positive valued) normal vector of this hyperplane as a weight vector for the weighted sum scalarization, a new nondominated supported image is returned. In the following, the hyperplanes where the new image replaces one previous image are considered. If the normal vector of such an hyperplane is not positive, they use an existing image to compute a new weight vector and the algorithm proceeds with a call to the weighted sum procedure. This is repeated, until no new nondominated image is found. The authors provide additional improvements and give the first computational study of weight set decomposition for problems with four objectives. However, even though it is stated that the algorithm works for multiple objective mixed-integer problems, the algorithm that computes the dummy points presented by Özpeynirci [20] needs integer objective function values.

In their work on finding extreme supported solutions for multiple objective combinatorial problems, Bökler and Mutzel [5] make use of the previous work of Ehrgott, Löhne, and Shao [9], Hamel et al. [15], and Heyde and Löhne [16], where a geometric dual problem is introduced. They show that there is a one-to-one correspondence between optimal facets of the dual polyhedron(using the extreme points of the dual) and the weight set components of the extreme supported nondominated images. This gives rise to an implicit way to compute the weight set decomposition. Starting with one lexicographically optimal image of the original problem they compute a polyhedron containing the dual polyhedron. Using an extreme point of this polyhedron as a weight vector, either the corresponding weighted sum problem states that the extreme point is also an extreme point of the dual polyhedron or a new supported nondominated solution and a face defining inequality to trim the polyhedron is returned. This is repeated until the polyhedron is indeed the dual polyhedron. A further improvement is made using a lexicographic variant of the weighted sum scalarization and it is proven that the algorithm runs in output polynomial and even incremental polynomial time for fixed number of objective functions, given a polynomial time algorithm for the single objective problem. Bökler et al. are the first to state a running time for their algorithm and to provide a computational study for more than four objectives.

Alves and Costa [1] propose a method that explicitly utilizes subsets of the weight set component for any known supported nondominated image. This last method has been mainly designed for an interactive usage, and specifically for the triobjective case. However, one can also determine the set of all extreme supported nondominated images with this algorithm. Starting with an arbitrary weight vector of the weight set, any time a weighted sum problem is solved obtaining an optimal solution, information from the branch and bound tree is applied to obtain a subset of the corresponding weight set component. Given such subsets the algorithm enlarges the current subset of the weight set component by merging subsets of the same component via a convex hull routine or using theoretical results on adjacency between components. Alternatively, Alves and Costa [1] take again information from the weighted sum problem using a weight vector slightly outside of the current subset. By adjacency, this procedure can be rerun with any adjacent component to iteratively explore and compose the whole weight set.

1.2 Our contribution

Given a triobjective mixed-integer linear problem, we propose an algorithm for computing the weight set decomposition and all extreme supported nondominated images. The algorithm utilizes subsets of the weight set components of all known extreme supported nondominated images. These subsets are also convex polytopes, a fact that we take advantage of in the algorithm. Also, the algorithm heavily relies on a variant of the Dichotomic Search that we apply in a various ways: For the initialization, we examine the boundary with Dichotomic Search in order to get some extreme supported nondominated images and the common edge segments of the boundary and the related components. Further, given a subset of a component, this can also consist of an edge, we examine the weight set by searching via Dichotomic Search on the line perpendicular to an edge of the subset, of which we do not know if it is an edge of the component. From this line search, we get new points on the boundary of the weight set component and possible adjacent images. This information is then used by several theoretical results that state, if we have found an edge or an sub-edge of the component. In the latter case, we apply again Dichotomic Search in order to search on the extended line of the sub-edge to find the whole edge of the component.

For our algorithm, we are able to provide a running time that is output polynomial for fixed number of objective functions, and with some minor requirements the algorithm runs in incremental polynomial time. The asymptotic running time is competitive regarding the method of Bökler and Mutzel [5] and is besides their algorithm the only algorithm with an output sensitive (and incremental) running time.

Not only is this algorithm a new combination of well known methods with further developments and therefore easy to comprehend, it also has several innovative traits that are of advantage for the practical use. The algorithm explores the weight set components one by one, so if the user is interested in the weight set component of one image, he can use this algorithm solely for this particular image. Also, when exploring a component, at any intermediate step the subset of the component computed by the algorithm is a convex polytope and therefore fulfils important properties of the weight set component. Further, all extreme points of the subset are on the boundary of the component. Due to these properties, the intermediate subset can be seen as an “approximation” of the weight set component, which has some practical benefits.

Additionally, if we expand the subset in a certain direction, it is guaranteed that the maximum expansion in this direction is achieved.

The remainder of the article is structured as follows: In Sect. 2 we introduce necessary notation, definitions and properties concerning multiobjective optimization and weight set decomposition. In the next section, we state further theoretical results for weight set decomposition and weight set components. On these results we build our algorithm, which we present in Sect. 4. Additionally, we prove correctness, analyse the running time, and compare our algorithm with existing ones in the literature both via a computational study and via an analysis of the asymptotic running time and general advantages and disadvantages of the approaches. We conclude the article in Sect. 5 with a summary of our results and an outlook to future topics of research.

2 Preliminaries

In the following, some basic definitions and concepts of multiple objective programming and polyhedral theory are stated. For more detailed introductions, we refer to Ehrgott [8] and Ziegler [25].

A multiobjective mixed-integer linear problem with \(p\ge 2\) objectives, \(n_1>0\) discrete, and \(n_2\ge 0\) continuous variables can be concisely stated as

with \(n{:}{=}n_1+n_2, A\in {\mathbb {R}}^{m\times n}, m\in {\mathbb {N}}\), \(C\in {\mathbb {R}}^{p\times n}, b\in {\mathbb {R}}^m\). Remark, we allow the case that \(n_2=0\), i.e., integer and combinatorial problems.

Let \(X{:}{=}\{x\in {\mathbb {Z}}^{n_1}\times {\mathbb {R}}^{n_2}: Ax\le b\}\) be the set of feasible solutions (referred to as feasible set) and \(Y{:}{=}\{Cx: x\in X\}\subseteq {\mathbb {R}}^p\) the set of images. The Euclidean vector spaces \({\mathbb {R}}^{n}\) and \({\mathbb {R}}^{p}\) comprising the set of feasible solutions and the set of images are called decision space and criterion space or image space, respectively. We clarify the relation between these sets by the function \(z:X\rightarrow Y, z(x)=Cx\).

Since there does not exist a canonical order in \({\mathbb {R}}^{p}\) for \(p\ge 2\), the following variants of the componentwise order are used. For \(y^1, y^2\in {\mathbb {R}}^{p}\) and \(N=\{1,\ldots ,p\}\), we define

For \(p\in {\mathbb {N}}\), the nonnegative orthant is defined as \({\mathbb {R}}^p_\ge {:}{=}\{r\in {\mathbb {R}}^p : r\ge 0\}\) and, likewise, the sets \({\mathbb {R}}^p_\geqq \) and \({\mathbb {R}}^p_>\) are defined.

An image \(y^*\in Y\) is nondominated (weakly nondominated) if there is no other image \(y\in Y\) such that \(y\le y^*\) (\(y< y^*\)). Analogously, \(x\in X\) is called efficient (weakly efficient), if z(x) is nondominated (weakly nondominated). The set of all nondominated (weakly nondominated) images is referred to by \(Y_N\) (\(Y_{wN})\) and the set of (weakly) efficient solutions by \(X_E\) (\(X_{wE}\)).

The convex hulls of X and Y, denoted by \({{\,\mathrm{conv}\,}}X\) and \({{\,\mathrm{conv}\,}}Y\), are polyhedra. Recall that the dimension of a polyhedron \(P\subseteq {\mathbb {R}}^p\) is the maximum number of affinely independent points of P minus one. For \(w\in {\mathbb {R}}^n\) and \(t\in {\mathbb {R}}\), the inequality \(w^\top y\le q\) is called valid for P if \(P\subseteq \{y\in {\mathbb {R}}^n: w^\top y\le q\}\). A set \(F\subseteq P\) is a face of P if there is some valid inequality \(w^\top y\le q\) such that \(F=\{y\in P : w^\top y= q\}\). As a face is itself a polyhedron, we can adopt the notion of dimension and denote special faces: An extreme point is a face of dimension 0, an edge is a 1-dimensional face and a facet has dimension \(p-1\).

The definition of nondominance can also be applied to any subset S in \({\mathbb {R}}^p\), in particular to a polytope \(P \subset {\mathbb {R}}^p\) and to its faces. Consequently, the set of nondominated points of \(S \subset {\mathbb {R}}^p\) is denoted by \(S_N\).

Given some permutation \(\pi \) of the set \(\{1, \dots ,p\}\) and the associated order for the objective functions, we refer to the problem

as the lexicographic mathematical programming problem with respect to permutation \(\pi \). This optimization problem can be understood as minimizing the objective function \(z_{\pi (1)}\) first. Then, over the set of minimizers of \(z_{\pi (1)}\), the next objective function \(z_{\pi (2)}\) is minimized and so on. Varying the permutation \(\pi \), different solutions and their images can be found, the so-called lexicographically optimal solutions (images). Clearly, these images are nondominated. For biobjective problems, there exist two lexicographic optimal images, \(y^{UL}=z^{UL}{:}{=}{{\,\mathrm{lexmin}\,}}(z_1, z_2)_{\{x\in X\}}\) and \(y^{LR}=z^{LR}{:}{=}{{\,\mathrm{lexmin}\,}}(z_2, z_1)_{\{x\in X\}}\).

Scalarization methods are useful tools to compute efficient solutions. The most common method is the weighted sum method introduced by Zadeh [24] which linearly combines the objective functions to get the scalar-valued optimization problem

Every optimal solution to \(\varPi _\lambda \) is a (weakly) efficient solution of the original problem if \(\lambda \in {\mathbb {R}}^p_{>}\) (\(\lambda \in {\mathbb {R}}^p_{\ge }\)) [11]. The image y of a solution x that can be obtained by the weighted sum method is called a supported nondominated image. The set of supported nondominated images is denoted by \(Y_{SN}\). All other images are called unsupported. A supported nondominated image \(y\in Y\) is an extreme supported nondominated image, if y cannot be expressed by a convex combination of points in \(Y_N\backslash \{y\}\). Prominent examples of such images are the lexicographically optimal images. The set of extreme supported nondominated images is denoted by \(Y_{ESN}\). All other supported nondominated images are called nonextreme. For efficient solutions in \(X_E\), we use these terms analogously.

Since normalization of the weight vector does not change the set of optimal solutions, we consider the normalized weight set for the weighted sum scalarization

Note that \(\varLambda ^>\) is not closed and thus not compact. It turns out to be convenient to consider the closure of the normalized weight set

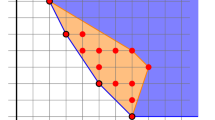

The set \(\varLambda \) is a polytope of dimension \(p-1\) and in particular, it is two-dimensional in the triobjective case, and there is a bijection between \(\varLambda \) and \(\left\{ \lambda \in {\mathbb {R}}_\geqq ^{p-1}: \sum _{k = 1}^{p} \lambda _k \le 1 \right\} \), see Fig. 1 for an example. In the following, for the sake of simplicity, we address both sets as the weight set denoted by \(\varLambda \). It should be clear by the usage of \(\lambda \in \varLambda \), whether its dimension is p or \(p-1\) and we automatically add or remove one entry of the vector if needed. However, we denote the p-dimensional \(\lambda \) as weight vector and the \((p-1)\)-dimensional \(\lambda \) as weight point in order to highlight the usage in a scalarization or polyhedral context.

For \(y\in Y\), we denote by \(\varLambda (y)\) the weight set component of y defined by

This corresponds to the subset of weights \(\lambda \in \varLambda \) for which y is the image of an optimal solution of (\(\varPi _\lambda \)).

Przybylski et al. [22] presented basic properties of the weight set.

Proposition 2.1

(Przybylski et al. [22]) Let y be a supported nondominated image. Then, it holds:

-

1.

\(\varLambda (y) = \{\lambda \in \varLambda : \lambda ^\top y \le \lambda ^\top y' \text{ for } \text{ all } y' \in Y_{ESN} {\setminus } \{y\}\}.\)

-

2.

\(\varLambda (y)\) is a convex polytope.

-

3.

A nondominated image y is an extreme supported nondominated image of Y if and only if \(\varLambda (y)\) has dimension \(p-1\).

-

4.

\(\varLambda = \bigcup _{y \in Y_{ESN}} \varLambda (y).\)

-

5.

Let S be a set of supported nondominated images. Then

$$\begin{aligned} Y_{ESN} \subseteq S \Longleftrightarrow \varLambda = \bigcup _{y \in S} \varLambda (y). \end{aligned}$$

Proposition 2.1(5) gives a certificate for a set of supported nondominated images S to subsume the set \(Y_{ESN}\). This certificate requires to be able to compute \(\varLambda (y)\) for any supported nondominated image y which can be easily done if \(Y_{ESN}\) is known, using Proposition 2.1(1). However, \(Y_{ESN}\) is not known initially. Additionally, the notion of adjacency between extreme supported nondominated images is introduced and a result which justifies that adjacency is well-defined and symmetric is provided.

Definition 2.2

(Przybylski et al. [22]) Two extreme supported nondominated images \(y^1\) and \(y^2\) are called adjacent if their common facet \(\varLambda (y^1) \cap \varLambda (y^2)\) in the weight set is a polytope of dimension \(p - 2\).

Proposition 2.3

(Przybylski et al. [22]) Let \(y^1\) and \(y^2\) be two supported nondominated images and \(\varLambda (y^1) \cap \varLambda (y^2) \ne \emptyset \). Then \(\varLambda (y^1) \cap \varLambda (y^2)\) is the common face of \(\varLambda (y^1)\) and \(\varLambda (y^2)\) with maximal dimension.

A similar result for the two dimensional weight set has been conducted by Alves and Costa [1] which gives another perspective on this issue:

Proposition 2.4

(Alves and Costa [1]) Let \(\lambda ^0\), \(\lambda ^1\), \(\lambda ^2 \in \varLambda \) be three weight vectors that are located on the same line segment. Let the nondominated images \(y^1\) and \(y^2\) be the images of optimal solutions for \((\varPi _{\lambda ^0})\) and \((\varPi _{\lambda ^1})\). Let \(y^1\) be also the image of an optimal solution for \((\varPi _{\lambda ^2})\). Then \(y^2\) is also the image of an optimal solution of \((\varPi _{\lambda ^2})\).

Visualization of Propositions 2.3 and 2.4: On the left the weight set components \(\varLambda (y^1)\), \(\varLambda (y^2)\) and \(\varLambda (y^3)\) share an edge among themselves. However, due to the propositions the case on the right is not possible, as one edge of \(\varLambda (y^2)\) is partially an edge of both \(\varLambda (y^1)\) and \(\varLambda (y^3)\)

The last two results are visualized in Fig. 2 and give rise to an algorithmic idea: If two subsets of the weight set components of \(y^1\) and \(y^2\) have an intersection that is not reduced to a single weight vector, then this intersection either defines the complete common edge of both subsets or it can be extended for one or both subsets, e.g. the one of \(y^2\) up to \(\lambda ^2\).

At last, we recall notions regarding running time and complexity of optimization problems, for a basic introduction see the book of Arora and Barak [3]. General multiple objective mixed-integer and integer problems are NP-hard and only particular cases are polynomially time solvable, see Figueira et al. [10]. In order to quantify the asymptotic running time for problems with a large output, the notion of output-sensitive complexity was developed by Johnson et al. [17] and recently reused by Bökler and Mutzel [5] and Bökler et al. [4] in the multiple objective context. Instead of defining the complexity for enumeration problems and enumeration algorithms, we introduce it solely for multiple objective problems. Given such a problem with reasonably small encoding length of the outputFootnote 2 and let the cardinality of the output denoted by k, then this problem is in

-

1.

TotalP (Output Polynomial Time/Output Sensitive),

-

2.

IncP (Incremental Polynomial Time),

if there is an algorithm that solves the MOP such that

-

1.

its running time is a polynomial of the encoding length of the input and the output,

-

2.

the ith delay for \(i=0,\ldots ,k\), is a polynomial of the encoding length of the input and of that subset of the output that has been returned before the ith solution has been returned.

Here, the ith delay for \(i=1,\ldots ,k-1\) characterizes the time between the output of the ith and \((i+1)\)th solution. The 0th delay is the time between the start of the algorithm and its first output and likewise the kth delay is the time between the last output and the termination of the algorithm.

3 Foundations

In this section, we present the fundamental parts of our algorithm.

Given a (extreme) supported nondominated image y, we compute its weight set component \(\varLambda (y)\) by starting with a subset of the component. We demand some specific requirements of this subset:

Definition 3.1

A subset \(\emptyset \not =L(y)\subseteq \varLambda (y)\) is called an inner approximate component of \(\varLambda (y)\), if L(y) is a convex polytope and every extreme point of L(y) is on the boundary of \(\varLambda (y)\). It is full-dimensional if \(\dim L(y)= \dim \varLambda (y)\).

Basically, the inner approximate component fulfills almost every property of a weight set component except for completeness, that is, every weight vector for which y is optimal is not necessarily contained in L(y). Also, adjacency between inner approximate components or between an inner approximate component and a weight set component may not hold, although the corresponding weight set components are adjacent.

Next, we specify the faces of such an inner approximate component.

Definition 3.2

Let L(y) be an inner approximate component of \(\varLambda (y)\) and F a face of L(y). Then, F is a final face if it is known that F is a face of \(\varLambda (y)\). Otherwise, F is an interim face. In particular, for edges we have interim and final edges.

Remark that every face of \(\varLambda (y)\) and every extreme point of L(y) is by definition a final face. As these extreme points are located on the boundary of the weight set component and in order to avoid confusion when using the notion of extreme, we call a point \(\lambda \) on the boundary of \(\varLambda (y)\) an intersection point. If an intersection point \(\lambda \) is indeed an extreme point of \(\varLambda (y)\), then it is an extreme intersection point. The notion emerges from the fact that these points are in the intersection of two weight set components of supported nondominated images, \(\lambda \in \varLambda (y)\cap \varLambda (y')\) with \(y\not =y'\). In this case, we also say that \(\lambda \)separatesy and \(y'\) and \(L(y)\cap L(y')\not =\emptyset \) holds. The same terminology can be used for other faces. Further, we assume that \(L(y) {\setminus } L(y') \ne \emptyset \) and \(L(y') {\setminus } L(y) \ne \emptyset \). This assumption will be verified by construction, as we will see in the description of the algorithm in Sect. 4.

The algorithm for triobjective optimization problems utilizes inner approximate components and successively enlarges the components until it is verified that \(L(y)=\varLambda (y)\) holds true for some y and later for every extreme supported nondominated image. This general technique is split into its main features and these will be discussed in the following subsections:

-

Enlarging the inner approximate component by finding new intersection points (Perpendicular Search),

-

Identifying interim edges of L(y) as final edges or as subsets of final edges (Check Edge),

-

Enlarging an interim edge that is a subset of a final edge to the final edge (Extend Edge),

-

Initializing and updating the inner approximate components.

In most of these features, a line search in the weight set is conducted: Given two points \(\lambda ^1,\lambda ^2\in \varLambda \), we search the line between these points by transforming the triobjective problem into a biobjective problem:

where \(W{:}{=}\begin{pmatrix} \lambda ^1_1 &{} \lambda ^1_2 &{} \lambda ^1_3\\ \lambda ^2_1 &{} \lambda ^2_2 &{} \lambda ^2_3 \end{pmatrix}\) is a Partial Weighted Sum Matrix (PWS Matrix). We call (\(\varPi _W\)) a Partial Weighted Sum Problem.

Solving this problem via Dichotomic Search returns a weight set decomposition for the biobjective problem: We get solutions \(x^1,\ldots ,x^d\) of (\(\varPi _W\)) and weight vectors \(w^0,w^1,\ldots ,\)\(w^d\in {\mathbb {R}}^2\), \(d\in {\mathbb {N}}\), such that \(\varLambda (WCx^i)=[w^{i-1},w^i]\). These weight vectors can be used to get information for the weight set decomposition of (MOP) as it is proven in [14]:

Remark 3.3

If W is a matrix described as above such that W does not have zero-valued columns, then the Dichotomic Search on (\(\varPi _W\)) returns supported nondominated images \(z(x^1)\), \(\ldots \), \(z(x^d)\) of (MOP) and weight vectors \(\lambda ^1=W^\top w^0,W^\top w^1,\ldots ,W^\top w^d=\lambda ^2\in {\mathbb {R}}^3\) such that \([W^\top w^{i-1},W^\top w^i]\subseteq \varLambda (z(x^i))\) for \(i=1,\ldots ,d\). Further, the corresponding weight points of \(W^\top w^i\) for \(i=1,\ldots ,d-1\) are intersection points.

This result on line search is applied by the perpendicular search, the extend edge routine, and in a slightly different fashion for the initialization.

3.1 Perpendicular search

Given an inner approximate component L(y) for a supported nondominated image y, it is clear that in the triobjective case \(\dim L(y)\le 2\). In particular, we are interested in the case where L(y) does not consist of only one point, so we have at least one edge of L(y). Suppose we have an interim edge E of L(y), we will discuss other cases later. Now, as the goal is to compute \(\varLambda (y)\), we aim to enlarge L(y). For this purpose, we use the procedure Perpendicular Search, see Algorithm 1.

Basically, a line perpendicular to E starting from the middle point of E to some weight point \(\lambda ^b\) is searched via line search. In particular, \(\lambda ^b\) may be chosen on the boundary of \(\varLambda \). Then, depending if y is found or not, we get a new intersection point, which may lie on the interim edge E. Next, the correctness of this procedure is proven.

Proposition 3.4

The weight point \(\lambda ^*\) returned by Perpendicular Search is an intersection point of \(\varLambda (y)\). Moreover, \(y^*\) is a supported nondominated image.

Proof

When performing the line search on I, the corresponding PWS matrix W does not have a zero-valued column. W has a zero-valued column, if both \(\lambda ^a\) and \(\lambda ^b\) are located on the same edge of the boundary of \(\varLambda \). However, as I is perpendicular to E, either \(\lambda ^1\notin \varLambda \) or \(\lambda ^2\notin \varLambda \).

Hence, as W fulfils the requirements of Remark 3.3, we get that \(y^*\) is a supported nondominated image. If \(\lambda ^*=\bar{\lambda ^1}\), then it also follows that \(\lambda ^*\) is an intersection point of \(\varLambda (y)\). Otherwise, the corresponding solutions of both y and \(y^1\) are optimal for \(\varPi _{\bar{\lambda ^0}}\) and hence \(\bar{\lambda ^0}\in \varLambda (y)\cap \varLambda (y^1)\). Hence, \(\bar{\lambda ^0}\) is an intersection point of \(\varLambda (y)\). \(\square \)

An example of Perpendicular Search: Performing a line search on the line \([\lambda ^a,\lambda ^b]\) returns \(y^1=y\) and \(y^2\) and the intersection point \(\lambda ^*={\bar{\lambda }}^1\). Thus, we replace the edge \([\lambda ^1,\lambda ^2]\) by the edges \([\lambda ^1,\lambda ^*]\) and \([\lambda ^*,\lambda ^2]\)

Remark 3.5

By construction, there is a subinterval of I that is given by either \([\bar{\lambda ^1},\bar{\lambda ^2}]\) (if \(\lambda ^* = \bar{\lambda ^1}\)) or \([\bar{\lambda ^0},\bar{\lambda ^1}]\) (otherwise) such that this subinterval belongs to \(L(y^*)\) and consequently, we have \(L(y^*){\setminus } L(y) \ne \emptyset \).

The next proposition states and proves how we can enlarge L(y).

Proposition 3.6

After Perpendicular Search, if \(\lambda ^*\not =\lambda ^a\), replace E by \([\lambda ^1,\lambda ^*]\) and \([\lambda ^*,\lambda ^2]\), and L(y) remains an inner approximate component.

Proof

By Proposition 3.4, \(\lambda ^*\) is an intersection point of \(\varLambda (y)\). L(y) can be described by a sequence of intersection points. In case that \(\lambda ^*\not =\lambda ^a\), we insert \(\lambda ^*\) between \(\lambda _1\) and \(\lambda _2\).

By construction, we only have to ensure that L(y) remains convex. As \(\lambda ^*\) is on the boundary of \(\varLambda (y)\) and the same holds true for every extreme point of L(y) the convexity immediately follows by the convexity of \(\varLambda (y)\). This shows the statement. \(\square \)

An example of Perpendicular Search can be seen in Fig. 3. The procedure Perpendicular Search can be seen as an improvement in comparison to the procedure used by Alves and Costa [1] to determine weight points outside of \(\varLambda (y)\). Indeed, it is interesting to note that the weight point obtained in the case of the procedure proposed by Alves and Costa [1] is located in the line segment of the weight set that is considered in the procedure Perpendicular Search. This last procedure has the advantage to generate, in one call, one point located on the boundary of \(\varLambda (y)\), which could require an undefined number of calls in the case of the procedure proposed by Alves and Costa [1].

At last, we take a look at some details of Perpendicular Search: Given L(y) has at least one edge, we assumed that we have at least one interim edge. Clearly, if it is determined that \(\varLambda (y)=L(y)\), a further search is not necessary. However, it may occur that \(\dim (L(y))=1\), hence there is only one final edge E. Then, we install a dummy interim edge which is identical to E and perform perpendicular search, but we do not know in which direction perpendicular to E we have to search. As it is a final edge which has been marked final in a prior point in time, we know one adjacent extreme supported nondominated image \({\bar{y}}\) and hence this “side” of E has been already explored. So, we search in the opposite direction of the component of this adjacent image. We tackle the two cases of Perpendicular Search:

-

If \(y^1=y\), we can replace the dummy interim edge by the two new edges and resume the algorithm.

-

Otherwise, we can state that \(\varLambda (y)=L(y)\): \(\lambda ^*\) is an intersection point that separates \(y,{\bar{y}}\) and \(y^*\). In both directions L(y) cannot be enlarged and it consists solely of the edge E. In particular, \(\dim \varLambda (y)=1\) and y is a nonextreme supported nondominated image.

If we consider an image y such that \(L(y)=\{\lambda \}\), we dismiss y from consideration until \(dim(L(y))=1\). If this does not occur, we omit y.

Also, as we are only interested in the intersection point of the line search that separates y from another image, we do not have to perform a full Dichotomic Search. In fact, we only consider a Partial Dichotomic Search. Recall that in a full Dichotomic Search, if a new optimal image improving the weighted sum objective value has been found, two weight vectors that are the normal vectors of the lines connecting one of the old images to the new one are computed to define new weighted sum problems. In a partial Dichotomic Search, we only consider the weight vector for which one of the defining points is y.

3.2 Check edge

Given an interim edge, one needs to check, whether this edge is indeed a final edge or at least a subset of a final edge. First, we clarify which subsets of edges we aim for.

Definition 3.7

A subface of a face F is a subset \(G\subseteq F\) such that G is a convex polytope with \(\dim (G)=\dim (F)\). In particular, for edges, we have subedges.

This definition simplifies the notation of the following Separation Theorem:

Theorem 3.8

(Separation Theorem)

-

1.

If two intersection points \(\lambda ^1\) and \(\lambda ^2\) separate the same pair of images y and \(y'\) then \([\lambda ^1,\lambda ^2]\) is a subface of \(\varLambda (y)\cap \varLambda (y')\) and, hence, a subedge of the common edge of \(\varLambda (y)\) and \(\varLambda (y')\).

-

2.

If \([\lambda ^1,\lambda ^2] \subseteq \varLambda (y)\cap \varLambda (y')\) and if \(\lambda ^1\) and/or \(\lambda ^2\) are extreme intersection points, then \(\lambda ^1\) and/or \(\lambda ^2\) are extreme points of \(\varLambda (y)\cap \varLambda (y')\).

-

3.

Let y be a (extreme) supported nondominated image and let \(\lambda ^1\), \(\lambda ^2\) and \(\lambda ^3\) be three intersection points such that:

-

\(\lambda ^1\), \(\lambda ^2\) and \(\lambda ^3\) are located on a same line,

-

\(\lambda ^1\), \(\lambda ^2\) and \(\lambda ^3\) separate y and respectively \(y^1\), \(y^2\) and \(y^3\) (with possible equalities between these points),

-

\(\lambda ^2\) is located in the relative interior of the edge \([\lambda ^1,\lambda ^3]\),

then \([\lambda ^1,\lambda ^3]\) is a subedge of \(\varLambda (y)\cap \varLambda (y^2)\) and \(y^2\) is an extreme supported nondominated image, if \(\lambda ^2\) was found by Perpendicular Search. If \(y^1 \ne y^2\) and/or \(y^3 \ne y^2\), then \(\lambda ^1\) and/or \(\lambda ^3\) are extreme intersection points of \(\varLambda (y)\cap \varLambda (y^2)\).

-

Proof

-

1.

By definition of the intersection points, \(\lambda ^1\) and \(\lambda ^2\) are located in \(\varLambda (y) \cap \varLambda (y')\). As \(\varLambda (y) \cap \varLambda (y')\) is a convex polytope, the statement follows immediately.

-

2.

This statement is a direct consequence of Proposition 2.3.

-

3.

As \(\lambda ^1\), \(\lambda ^2\) and \(\lambda ^3\) are all located on the boundary of \(\varLambda (y)\), and as they are located on the same line, \({{\,\mathrm{conv}\,}}\{\lambda ^1,\lambda ^2,\lambda ^3\} = [\lambda ^1,\lambda ^3]\) is a subedge of an edge E of \(\varLambda (y)\). Next, as \(\lambda ^2\) is located in the relative interior of \([\lambda ^1,\lambda ^3]\), it is also located in the relative interior of E. As the optimal solutions are the same for all weight vectors corresponding to weight points in the interior of E, E is a subedge of the common edge of \(\varLambda (y)\) and \(\varLambda (y^2)\). Finally, if \(\lambda ^2\) is found by Perpendicular Search and as \(\varLambda (y^2) {\setminus } \varLambda (y) \ne \emptyset \), \(\varLambda (y^2)\) is composed of more than the edge \([\lambda ^1,\lambda ^3]\) and is therefore a polytope of dimension 2. We conclude that \(y^2\) is an extreme supported nondominated image by application of Proposition 2.1(3).

The rest is again a direct consequence of Proposition 2.3.

\(\square \)

In Fig. 4, some cases of Theorem 3.8 are visualized. In the algorithm, we use Theorem 3.8, when a new interim edge is constructed or the middle point of an edge is identified as an intersection point by Perpendicular Search. We call this test by Theorem 3.8Check Edge.

Two examples of the Separation Theorem: On the left we have that both \(\lambda ^1\) and \(\lambda ^2\) separate y and \(y'\), hence \([\lambda ^1,\lambda ^2]\) is a subedge by Theorem 3.8 1. Even more, as \(\lambda ^1\) is on the boundary of \(\varLambda \), it is an extreme intersection point. On the right, we are in the case of Theorem 3.8, 3.: We have a final edge of L(y) that separates y and \(y^2\)

3.3 Extend edge

Assume that the Separation Theorem returns that a given interim edge E is a subedge of a final edge. In order to extend this subedge to the final edge, we again make use of the line search, which is similar to Perpendicular Search.

Let \(E=[\lambda ^1,\lambda ^2]\) and \(\lambda ^1,\lambda ^2\) are intersection points that separate y and \(y'\) and without loss of generalization \(\lambda ^1\) is not known as extreme intersection point. Then, a line search parallel to E starting from \(\lambda ^1\) in opposite direction of \(\lambda _2\), and ending at a known weight point, e.g. on the boundary of \(\varLambda \), is performed, see Algorithm 2.

Like for Perpendicular Search, a Partial Dichotomic Search is sufficient.

Proposition 3.9

The weight point \(\lambda ^*\) returned by Extend Edge is an extreme intersection point of \(\varLambda (y)\) separating \(y,y'\) and \(y^*\).

Proof

As the PWS matrix W corresponding to the line search on I has clearly no zero-valued column, by Remark 3.3, \(\lambda ^*\) is an intersection point and \(y^*\) is a supported nondominated image. If \(({\bar{\lambda }}^1)^\top \cdot y^1=({\bar{\lambda }}^1)^\top \cdot y\), the solution belonging to y is an optimal solution for the weighted sum problem with weight \({\bar{\lambda }}^1\) and \(\lambda ^1\). Otherwise, this holds only for \(\lambda ^1\). In both cases, as the line I is parallel to an edge of \(\varLambda (y)\), it follows that \(\lambda ^*\) is an intersection point of L(y). By Proposition 2.3, the same holds for \(y'\), hence \(\lambda ^*\) separates \(y,y'\) and \(y^*\). Further, \(\lambda ^*\) is extreme, as for every \(\lambda \in (\lambda ^*,\lambda ^b]\) the solution belonging to y is not an optimal solution for the weighted sum problem. \(\square \)

As \(\lambda ^*\) is indeed an intersection point of L(y), we describe how to enlarge L(y).

Proposition 3.10

After Extend Edge, if \(\lambda ^*\not =\lambda ^1\), replace \(\lambda ^1\) by \(\lambda ^*\) and L(y) remains an inner approximate component.

Proof

Similarly to the proof of Proposition 3.6, \(\lambda ^*\) must be added in the sequence of intersection points. The difference is that the alignment of \(\lambda _2,\lambda _1,\lambda ^*\) makes \(\lambda _1\) redundant in the sequence. \(\square \)

In Fig. 5, an example of Extend Edge is visualized. At last, if both endpoints of E are not known as extreme intersection points, the whole line passing through E with endpoints on the boundary of \(\varLambda \) can be searched via a full Dichotomic Search, instead of two lines with a Partial Dichotomic Search.

An example of Extend Edge: We know that the edge \([\lambda ^1,\lambda ^2]\) is a subedge and \(\lambda ^2\) is an extreme intersection point. Extend Edge searches the line \([\lambda ^a,\lambda ^b]\) and finds a new intersection point \(\lambda ^*=\bar{\lambda ^1}\) of L(y). Hence we replace \(\lambda ^1\) by \(\lambda ^*\) and the edge \([\lambda ^2,\lambda ^*] \) becomes a final edge

3.4 Initialization

At last, we deal with the question of how we initialize at least one inner approximate component. As pointed out in Perpendicular Search, the dimension of L(y) has to be at least one, i.e., we have at least one edge. In order to generate such inner approximate components, we perform line searches on the boundary of \(\varLambda \): We use the extreme points \(\begin{pmatrix} 0&0^\top \end{pmatrix}^\top ,\begin{pmatrix} 1&0 \end{pmatrix}^\top \) and \(\begin{pmatrix} 0&1 \end{pmatrix}^\top \) of \(\varLambda \) as start and endpoints of the line search. However, with these points for the line search we do not fulfil the requirements of Remark 3.3: For example, the line search for the first two extreme points has

as PWS Matrix, which clearly has zero-valued column and therefore solving the problem with Dichotomic Search \(\min WCx\) returns not necessarily efficient solutions for (MOP) and therefore no intersection points. We can overcome this problem in the following way: whenever a weighted sum problem is solved during Dichotomic Search, for example for a weight vector \(w\in {\mathbb {R}}^2\)\(\min w^\top \cdot WCx\) has to be solved, solve for this particular W

instead, where \(C_{2\cdot }\) is the second row of C.

Remark 3.11

If W is a PWS Matrix with one zero-valued column, then the Dichotomic Search that utilizes (\(\varPi ^{\text {lex}}_w\)) instead of weighted sum problems on (\(\varPi _W\)) returns supported nondominated images \(z(x^1),\ldots ,z(x^d)\) of (MOP) and weight vectors \(\lambda ^1=W^\top \cdot w^0,W^\top \cdot w^1,\ldots ,W^\top \cdot w^d=\lambda ^2\in {\mathbb {R}}^3\) such that \([W^\top \cdot w^{i-1},W^\top \cdot w^i]\subseteq \varLambda (z(x^i))\) for \(i=1,\ldots ,d\). Further, the corresponding weight points of \(W^\top \cdot w^i\) for \(i=1,\ldots ,d-1\) are intersection points.

Proof

As the solutions we get by (\(\varPi ^{\text {lex}}_w\)) are efficient, the proof is analogously to the proof of Remark 3.3 which has been done by Halffmann, Dietz, and Ruzika [14]. \(\square \)

Regarding the solvability of (\(\varPi ^{\text {lex}}_w\)), by Glasser [12] only minor requirements are needed to ensure that if the weighted sum problem is polynomially solvable so is the lexicographic problemFootnote 3. For many cases, especially with linear objective functions, the asymptotic running times of the weighted sum problem and the lexicographic problem coincide, however there may be problems, where the algorithm for the lexicographic problem is significantly slower but still running in polynomial time. Clearly, for well-known combinatorial problems like shortest path we either can replace the comparison operator in the algorithm by its lexicographical version or - if the objective function values are non-negative and integer - we can solve two weighted sum problems like it is proposed in Ehrgott [8].

If the requirements of Glaßer et al. are not met or the algorithm for the lexicographic problem is significantly worse than the one of the weighted sum problem, we simply apply the Dichotomic Search on the boundary of the weight set. Then, we either get an extreme supported nondominated image or an image that is weakly dominated by such an ESN image. This is an immediate consequence of the following result:

Proposition 3.12

([23]) Let (P) be a problem with p objectives and let \((P_I)\) be a subproblem with |I| objectives given by the objectives indexed by \(I \subset \{1,\ldots ,p\}\). Let y be an extreme supported image for \((P_I)\), then if y is nondominated for (P), it is also an extreme supported image for (P).

Clearly, even if the returned image is only weakly nondominated, we get the correct intersection points. The first attempt to extend the weight set component of this image will show that it is reduced to only one edge, and will allow to discover the extreme supported nondominated image dominating it.

In Fig. 6, a possible initialization is depicted.

4 Algorithm

4.1 Presentation of the algorithm and correctness

As described in the preceding section, the algorithm will maintain a set S of supported nondominated images, and for each known supported nondominated image \(y \in S\), a polytope \(L(y) \subseteq \varLambda (y)\) will also be maintained. This will be done by storing a set P of intersections points and a set \({\mathcal {E}}\) of final edges (i.e. edges of polytopes L(y) that are identified as edges of \(\varLambda (y)\)). Algorithm 3 starts with the initialization described in Sect. 3.4 followed by a main loop that will iteratively enlarge the sets L(y) to \(\varLambda (y)\) for any known supported image y, and simultaneously discover new supported images. This loop simply relies on the successive application of the Perpendicular Search procedure applied on an interim edge, the checking of an edge to identify if it is a subedge of a final edge (implicit in Algorithm 3) and next the application of the Extend Edge procedure if necessary, as described in Sect. 3. Updating P, S, and L(y) is done by adding \(\lambda ^*\) to P, \(y^*\) to S and following the rules of Proposition 3.6 and Proposition 3.10. In the pseudocode of the algorithm we use \(\downarrow \) to indicate the input of the procedures and \(\uparrow \) for the output.

Lemma 4.1

The identification of a final edge using the loop of lines 11-18 of Algorithm 3 is done with at most three searches in total (perpendicular search and extend edge).

Proof

Theorem 3.8 shows in which case we find a subedge of an edge. Suppose we examine \(y^1\) and we are in a particular case of the third statement of Theorem 3.8, which is clearly the worst case. We have found three intersection points in that order with the following separation, see also Figure 7.

-

\(\lambda ^1\) separates \(y^1\) and \(y^3\),

-

\(\lambda ^2\) separates \(y^1\) and \(y^2\),

-

\(\lambda ^3\) separates \(y^1\) and \(y^2\).

Hence, by these points we can conclude that we have found a subedge and \(\lambda ^1\) is an extreme point; regarding the chronological order of the points, each point is necessary for this result. Hence, we need three perpendicular searches and additionally one call to extend edge, as the line segment \([\lambda ^1,\lambda ^3]\) is only a subedge, see Fig. 7.

Even though we have performed four searches for the edge \([\lambda ^1,\lambda ^4]\), we can rearrange the points such that \(\lambda ^4\) is counted for the edge that has \(\lambda ^4\) as common endpoint with the edge \([\lambda ^1,\lambda ^4]\). Further we can show that, using the same construction as above, we need additionally two perpendicular searches and one extend edge, so again we get four searches. We can continue like that, however, at one point we reach a known definitive edge D, as each L(y) is initialized by at least one known edge: As in Fig. 7, we have the extreme point of the edge D\(\lambda ^5\), which separates \(y^1\) and some image \(y^4\). Hence we need one additional perpendicular search to find \(\lambda ^6\) and by Theorem 3.8 3. we can conclude that \([\lambda ^4,\lambda ^5]\) is a final edge. So, by this reassignment of intersection points, we need one search for the last edge and at most three for any other edge. \(\square \)

Illustration of known intersection points to finally obtain all final edges of a polytope L(y) (implying that \(L(y) = \varLambda (y)\)). In particular, the first detected subedge (of the final edge \([\lambda ^1,\lambda ^4]\)) is obtained by the knowledge of the intersection points \(\lambda ^1,\lambda ^2,\lambda ^3\). For the last final edge \([\lambda ^4,\lambda ^5]\), no extension is necessary as \(\lambda ^1\) and \(\lambda ^5\) are already known as extreme

Definition 4.2

Let \(Y_{ESN}\) be the set of extreme supported images of a MOP with p objectives, we construct the adjacency graph\(G_{WS}\)of the weight set as follows: \(G_{WS}=(V,E)\) with \(V=Y_{ESN}\) and \(E=\{(y^i,y^j), y^i, y^j\in V| \dim \varLambda (y^i)\cap \varLambda (y^j)=p-2\}\).

An example of the adjacency graph can be seen in Fig. 1. The corresponding image space with the lines between the extreme supported nondominated images resemble the adjacency graph.

Observation 4.3

The adjacency graph \(G_{WS}\) of the weight set is planar and connected for \(p=3\). This is not the case for \(p\ge 4\).

Theorem 4.4

Algorithm 3 terminates in finite time and determines the set of all nondominated extreme images.

Proof

We show that for each \(y \in S\), the loop of lines 11-18 terminates in finite time and that \(L(y) = \varLambda (y)\) when it terminates. The number of edges and extreme points of \(\varLambda (y)\) (except edges and extreme points on the boundary of \(\varLambda \) that are found with the initialization), is limited by the number of adjacent extreme supported points to y, which is necessarily finite. Lemma 4.1 implies that the identification of an edge of \(\varLambda (y)\) requires at most three searches in total (Perpendicular Search and Edge Extend). Further, for every edge, the algorithm will find at most one non-extreme image. The non-extreme characteristic is proven with one Perpendicular Search. We conclude the finiteness of the algorithm.

Suppose now that at termination of the while loop of lines 11-18, we have for \(y \in S\), \(L(y) \subset \varLambda (y)\). This implies that at least one extreme point \(\lambda \) of \(\varLambda (y)\) is not an extreme point of L(y). Consequently, there are two (sub)edges (incident to \(\lambda \)) of \(\varLambda (y)\) that are not edges of L(y). Fig. 8 illustrates possible situations for a missed extreme point \(\lambda \).

On these figures, only one extreme point of \(\varLambda (y)\) does not belong to L(y). To have several consecutive missed extreme points would not modify the idea of the proof. In Fig. 8 on the left, \(\lambda \) is missed because two subedges of \(\varLambda (y)\) have not been extended. This cannot happen as at termination of the while loop of lines 11-18, all edges of L(y) are final, i.e. are edges of \(\varLambda (y)\) according to Proposition 3.8. On the right, two full edges of \(\varLambda (y)\) are missed, and an edge E of L(y) is cutting the interior of \(\varLambda (y)\). However, the final character of E contradicts the conditions to detect subedges of \(\varLambda (y)\), described by Proposition 3.8. Indeed, the two extreme intersection points of E clearly separate two different pairs of extreme supported images, and there cannot be any intersection point in the relative interior of E, as intersection points are located on the boundary of \(\varLambda (y)\). We conclude that at termination of the while loop of lines 11-18, \(\varLambda (y)\) and L(y) have the same set of extreme points and are therefore equal.

We show next that \(Y_{ESN} \subseteq S\). For all \(y \in S\), \(\varLambda (y)\) is computed and we know all adjacent extreme supported images. We consider the adjacency graph \(G_{WS}\) from Definition 4.2. As \(G_{WS}\) is connected, we necessarily have \(Y_{ESN} \subset S\). \(\square \)

Theorem 4.5

Let \(v{:}{=}|Y_{ESN}|\). Then the overall running time of Algorithm 3 is \({\mathcal {O}}(v^2\cdot T_{WS})\), where \(T_{WS}\) is the running time of the weighted sum algorithm.

Proof

We show this by proving several claims.

Claim 1: Each search with partial Dichotomic Search needs at most \({\mathcal {O}}(v)\) calls to the weighted sum algorithm.

In the worst case the line on which we perform a partial Dichotomic Search crosses every weight set component and we examine every extreme supported nondominated image. Non-extreme supported nondominated images may also be found if the line passes through the boundary of a weight set component. This does not change the worst case.

Claim 2: The initialization of the algorithm needs at most \({\mathcal {O}}(v)\) calls to the weighted sum algorithm.

As we search on the boundary of the weight set, we can find at most all v extreme supported images on each edge of the boundary of \(\varLambda \). Hence, we need at most \({\mathcal {O}}(v)\) calls to the weighted sum algorithm to find all edges of weight set components on the boundary. Remark, that we assume by Sect. 3.4 that the found images are nondominated and the found edges are indeed final edges of the components.

Claim 3: All initial constructions of the sets L(y) can be done in total in \({\mathcal {O}}(v\log v)\) time.

Each initialization of L(y) can be done by a planar convex hull algorithm, which has a running time of \({\mathcal {O}}(k\cdot \log k)\) for k points [6]. For this initialization, some final edges will already be known. In particular, we use the endpoints of these edges to construct the convex hull that defines L(y). The number of initially known edges in this convex hull is not known. On the other hand, each edge of the weight set decomposition is used exactly once for the convex hull algorithm: a final edge \(\varLambda (y^1)\cap \varLambda (y^2)\) is found, when \(y^1\) has been examined, and is only necessary for the initialization of \(L(y^2)\). Analogously, we need the edges on the borderline only once. By planarity of the adjacency graph, Observation 4.3, the number of edges of the graph, which is the number of edges of the weight set decomposition not on the borderline, is at most \(3\cdot v-6\). On the borderline we have at most \(3\cdot v\) edges. Hence, we need \({\mathcal {O}}(v)\) edges for all initializations. As the function \(f(k)=k\cdot \log k\) is superadditive, we can bound the total time for all initializations by \({\mathcal {O}}(v\cdot \log v)\).

Claim 4: Checking if an interim edge is a final edge or a subedge of a final edge can be done in constant time.

This is only a matter of storage of the intersection points. Indeed, if the interim edge is linked to the intersection points on this edge, their number cannot be more than three by Theorem 3.8. Checking if the separated images are the same for at most three intersection points is obviously doable in constant time.

Using these results we can construct the total running time as follows:

The initialization can be done in \({\mathcal {O}}(v\cdot T_{WS})\) (Claim 2) plus the initialization of the weight set components in \({\mathcal {O}}(v\log v)\) (Claim 3). For each edge we need \(3\cdot v\) calls to the weighted sum algorithm and checking an interim edge is done in constant time (Claim 4). By Observation 4.3 the adjacency graph is planar and therefore has at most \(3\cdot v-6\) edges and each edge corresponds to an edge of the weight set decomposition (without the edges on the boundary of the weight set). Hence, we need a computation time of \({\mathcal {O}}(v^2\cdot T_{WS})\) to find all edges. As this term dominates all other terms in running time, it is the total running time of our algorithm. \(\square \)

Corollary 4.6

If the running time of the weighted sum algorithm \(T_{WS}\), which is usually the algorithm for the single objective problem, is polynomial in the encoding length of the input (and output), the problem is in TotalP and our algorithm has a running time polynomial in the encoding length of the input and output.

However, due to the usage of the Dichotomic Search this version of the algorithm is not in incremental polynomial time: Bökler et al. [4] have shown that the Dichotomic Search Algorithm for biobjective problems may have a delay exponential in the input and preceeding output even though the weighted sum algorithm has polynomial running time.

From the mentioned article, we can deduce that a lexicographic variant of the Dichotomic Search runs in incremental polynomial time, if we have a polynomial time algorithm to solve the lexicographic problem \(Lex_{MOP}(\pi )\). This lexicographic variant is similar to the problem \(\varPi ^{\text {lex}}_w\) presented in the initialization phase of our algorithm. We can apply this in the procedures Perpendicular Search and Extend Edge as well to ensure an incremental polynomial time running time of our algorithm.

At last, we can state the overall result:

Theorem 4.7

(WSD-Approx Algorithm) Given an multiobjective mixed-integer problem with three objectives, the WSD-Approx Algorithm returns a weight set decomposition in \({\mathcal {O}}(v^2\cdot T_{WS})\), where \(T_{WS}\) is the running time of the weighted sum algorithm. If the weighted sum algorithm runs in output polynomial time, the WSD-Approx Algorithm runs in output polynomial time.

Moreover, if the lexicographic version of MOP is solvable in polynomial time, then the WSD-Approx Algorithm equipped with the lexicographic variant of the Dichotomic Search runs in incremental polynomial time. The ith delay is in \({\mathcal {O}}(v^i\cdot T_{WS-lex}+ T_{WS-lex})\), where \(v^i\) is the number of extreme supported nondominated images found so far and \(T_{WS-lex}\) is the running time of an algorithm for problem \(\varPi ^{\text {lex}}_w\).

4.2 Illustrative example

To illustrate our method, we solve the instance of a tri-objective assignment problem [22] defined by the following cost matrices:

The initialization explores the boundary of the weight set, we obtain the following solutions:

-

\(x^1\) with \(x_{11} = x_{22} = x_{34} = x_{43} = 1\) with image \(y^1 = (9,13,16)\),

-

\(x^2\) with \(x_{11} = x_{24} = x_{32} = x_{43} = 1\) with image \(y^2 = (19,11,17)\),

-

\(x^3\) with \(x_{12} = x_{23} = x_{31} = x_{44} = 1\) with image \(y^3 = (18,20,13)\),

-

\(x_4\) with \(x_{11} = x_{23} = x_{34} = x_{42} = 1\) with image \(y^4 = (14,20,14)\),

-

\(x_5\) with \(x_{11} = x_{23} = x_{32} = x_{44} = 1\) with image \(y^5 = (20,17,14)\).

The set S is therefore initialized with these supported images and subsequently we get a set of extreme intersection points \(P=\{\lambda ^1,\ldots ,\lambda ^5\}\):

-

\(\lambda ^1\) separates \(y^3\) and \(y^4\),

-

\(\lambda ^2\) separates \(y^1\) and \(y^4\),

-

\(\lambda ^3\) separates \(y^1\) and \(y^2\),

-

\(\lambda ^4\) separates \(y^2\) and \(y^5\),

-

\(\lambda ^5\) separates \(y^3\) and \(y^5\).

From this we obtain the final edges \({\mathcal {E}}\). We show the exploration of the weight set components in Fig. 9. We focus on \(\varLambda (y^1)\), as the other components are very easy to compute. The full weight set decomposition is depicted in Fig. 1.

4.3 Comparison with the literature

Przybylski, Gandibleux, and Ehrgott [22], similar to our approach, use the Dichotomic Search but they start with supersets of the weight set components instead of subsets. While our algorithm enlarges an “inner approximation” of the weight set components by applying the Dichotomic Search and separation results, they apply some of these methods in a different fashion to shrink an “outer approximation”. Due to these different approaches, a comparison would automatically become a discussion about the up- and downsides of supersets and subsets, respectively. We compare these approaches more thoroughly in the computational study, see Sect. 4.4.

Therefore, we focus on the articles by Bökler and Mutzel [5] and Alves and Costa [1], as the former also present a running time and the latter use subsets of weight set components as well.

In the case of \(p=3\), Bökler and Mutzel [5] achieve a running time of \({\mathcal {O}}(v\cdot (\text {poly}(n,m,L)\)\(+v^{\frac{9}{4}}\log v)\)\(+\text {poly}(n,v,L))\) and \({\mathcal {O}}(v\cdot (\text {poly}(n,m,L)+v\log v)\), if the lexicographic problem can be solved in polynomial time. Here, \(\text {poly}(n,m,L)\) is a polynomial in the number of variables n, number of constraints m and L is the encoding length of the largest number in the constraint matrix.

Clearly, in the absence of a polynomial time algorithm for the lexicographic problem we have a faster worst-case running time than Bökler and Mutzel: If the running time of the weighted sum algorithm is for example smaller than \({\mathcal {O}}(v^{\frac{5}{4}}\cdot \log v)\), which is the case for combinatorial problems with polynomial time weighted sum algorithms and exponentially large number of extreme supported nondominated images, we outperform their algorithm. However, as mentioned in Sect. 3.4, we easily met the requirement of Glasser et al. [12] for combinatorial problems. In this case, it is hard to compare the running times: It depends on the actual size of \(\text {poly}(n,m,L)\) and the number of extreme supported nondominated images in comparison to the encoding length of the input. Hence, we have to compare \(\log v\) with \(T_{WS-lex}\) (or \(T_{WS}\)) which is not comparable in general. Surely there are instances where their algorithm has a faster or the same or a slower running time than ours. But, instead of only returning extreme supported images and related solutions, we additionally provide the user of our algorithm with an explicit description of the weight set decomposition. And further, we can return each component individually as soon as we have fully discovered the component or give intermediate results like an “approximation” of the component which creates additional value. We discuss this further in Sect. 5.

As mentioned before, Alves and Costa [1] use subsets of the weight set components. They enlarge these subsets by solving a weighted sum algorithm slightly outside of the subset and returning information of the Branch and Bound tree. There are some drawbacks with these methods where our usage of the Dichotomic Search gives a guaranteed enlargement of the subsets.

First, the new part of the subset is generated from the Branch and Bound tree and could be very small, if the tree structure or the solution structure is unfortunate. Hence, arbitrarily many searches outside of the subsets have to be conducted to complete the weight set component, which results in as many convex hull operations.

Second, the search slightly outside of the subset is executed by using a weight point that is perpendicular to an edge of the subset with a certain distance, e.g. a distance of \(\varepsilon =0.01\) as used in the example by Alves and Costa. As it can be seen in their own example in [1], weight set components (for mixed-integer problems) can get very small even for small instances. Hence, it can occur that we “jump” a weight set component by setting \(\varepsilon =0.01\). That is, we get a new image whose component is not adjacent to the one of the current image. Clearly, the choice of \(\varepsilon \) is for practical purposes only and is set by experience. On the contrary, our algorithm overcomes these difficulties, as we get the maximum expansion in a certain direction, we automatically ensure a convex polyhedral structure, and we guarantee that we find the adjacent image. Nevertheless, the algorithm by Alves and Costa is adequate for practical purposes and may be faster in the average case. And, as it is built for an interactive use, it has a different intention and hence advantages for other settings.

4.4 Computational study

We compare the performance of our algorithm with those methods of Przybylski, Gandibleux, and Ehrgott [22] (PGE2010), Bökler and Mutzel [5] (BM2015), and Özpeynirci and Köksalan [21] (OK2015). However, only the code of PGE2010 was available and executed on the same computer. For the comparison with the other approaches, we have used a computer with the same specifications as in [5]. Therefore, the differences in running times reported below are subject to different programming skills, different programming languages and partly different computer architectures. As a consequence, our study can only roughly compare the competitiveness of the four algorithms.

We use instances of the Multiobjective Assignment Problem (MOAP) and the Multiobjective Knapsack Problem (MOKP) with three objectives for our tests. These instances are the same as the ones in the computational study in [22] and the MOAP instances used in [5, 21] are generated in the same fashion. For MOAP, we use 10 series of 10 instances of 3AP with a size varying from \(5 \times 5\) to \(50 \times 50\) with a step of 5 and randomly generated objective function coefficients in [0, 20]. For MOKP, we take into account 10 instances per series with a size varying from 50 to 350 with a step of 50. The objective function and constraint coefficients are uniformly generated in [1, 100]. Our algorithm is implemented in Julia 1.2 and using an implementation of the Hungarian Method [18] coded in C that is callable by Julia to solve single objective assignment problems. For the knapsack problems, a dynamic programming based approach is coded in C. The implementation of the PGE2010 algorithm has been done in C and uses the same single objective solvers. All C code is compiled using GCC 9.2 with optimizer option -O3. In the study by Bökler and Mutzel [5], the BM2015 and the OK2015 algorithm have been implemented in C++ using double precision arithmetic and compiled using LLVM 3.3. For both studies the experiments are performed on an Intel Core i7-3770, 3.4 GHz and 16GB of memory running Ubuntu Linux.

In Table 1, we present the results on the MOAP instances for WSD_APPROX and PGE2010 and report the mean on running time, number of extreme supported images, and number of solver calls per image found. Both implementations have been able to solve all instances in this study. The minor discrepancy in the number of extreme supported images is due to numerical precision: On the one hand, non-extreme supported images may have been identified as extreme, on the other hand some extreme supported images may have such minuscule weight set components that they are identified as non-extreme.

The PGE2010 algorithm is faster than our algorithm. However, the running times are of the same order of magnitude and may be explained by the issues listed above. Note that the third column of the table shows that our algorithm needs 2.5 times more calls to the single objective solver. This hints to the main difference between the “inner approximation” and the “outer approximation” approach. In our algorithm, a new neighbour is found via perpendicular search, which needs at least three calls of the single objective solver. In fact, our algorithm heavily relies on solving single objective problems to obtain information while less geometry is involved. Only the end point of the line search and the construction of the initial subsets need some geometry induced computations. For the “outer approximation” approach, this observation is inverted: Whenever a new image is found, the computation of the superset of its weight set component needs significantly more computational effort, as its facets with its (possible) adjacent images have to be computed. However, finding a new image by checking a facet of the superset often needs only one solver call. As the weight set is only two dimensional, the geometry of the weight set and its components is rather simple. Hence, it seems that the computation of the supersets is not that time consuming and an “outer approximation” approach may be slightly favourable for these instance sizes and three objectives.

The results for the knapsack instances in Table 2 underline our findings for the assignment instances. The running times of the two algorithms are competitive. One peculiar difference in the outcome of the computational study of these algorithms for knapsack problems is that one of the largest instances could not be solved by PGE2010. This is due to a problem in numerical precision that induces a segmentation fault. However, issues on numerical precision are immanent in the computation of the weight set decomposition: Weight set components get very small very quickly with increasing problem size. We also face these problems, as the inner approximate components are even smaller than the actual components. It seems that our algorithm is more stable in that matter.

In Table 3, we compare our algorithm with the results for MOAP reported in [5] and present the median and the mean absolute deviation of the running times. Clearly, we are competitive with the algorithm in [5] as we have slightly faster running times and less deviation. Also, our study shows that we outperform the algorithm by Özpeynirci and Köksalan [21], as we are a factor of almost 1300 faster at the largest instances. The instances are generated similarly but not the same which explains the discrepancy in the number of extreme supported images. Overall, we can conclude that our algorithm is competitive with the algorithms by Przybylski, Gandibleux, and Ehrgott [22] and Bökler and Mutzel [5] and is substantially faster than the one by Özpeynirci and Köksalan [21].

5 Conclusion

In this article, we have proposed a new algorithm to compute the weight set decomposition and consequently all extreme supported nondominated images for a multiobjective (mixed-) integer linear problem with three objectives. This algorithm can be described as a graphical approach and makes use of the biobjective Dichotomic Search to explore the weight set. Further, we have established necessary results that determine whether a line segment is part of the common face of two weight set components, hence separates these components.

In comparison to the literature, our algorithm can return the whole weight set decomposition but also one particular weight set component as soon as it is examined. Further, it is able to return an intermediate subset of the component, while the extreme points of subset are always on the boundary of the component and hence we have a guaranteed maximal improvement of the subset in every step of the algorithm. As the subset is a convex polyhedron at any time, intermediate results can also be returned that have valuable properties for the user. In the case of a polynomial time algorithm to solve the weighted sum problem, and hence also for the lexicographic problem, our algorithm is output sensitive and even runs in incremental polynomial time. The running time is on the same level of the algorithm by Bökler and Mutzel [5]. Also, the results of the computational study show that practical running times of our algorithm are better than or at the same level as available algorithms. In conclusion, our algorithm is an easily comprehensible, running time competitive algorithm with original features especially for practical purposes.

There are two immediate ideas for future research: one is the generalization of our algorithm to more than three objectives, as some parts like the perpendicular search can be taken over, while other parts could be solved in a recursive fashion. The other one concerns the “approximation” of weight set components. We think that our general approach is able to provide approximate weight set components, if applied properly. However, there are several issues that prevent us from an immediate adaption of the algorithm to generate an approximation method: as this has not been done before, a measurement for the quality of an approximation has to be introduced. Also, a proper selection of the edges that a perpendicular search is applied to goes hand in hand with the approximation ratio. While for the exact method we do this selection in a depth-first manner, a breadth-first approach or other strategies may be better in terms of approximation.

In general, a deeper analysis of the weight set and its components for both general and special problems is desired as well as further practical applications of the weight set decomposition.

Notes

For example for the shortest path problem, this has been proven by Müller-Hannemann and Weihe [19].

Reasonably small means here, that each element of the output has an encoding length bounded by a polynomial of the encoding length of the input.

It is required that the solutions and images can be computed in polynomial time regarding the encoding length of the input and the same has to hold for the encoding length of the images and solutions.

References

Alves, M.J., Costa, J.P.: Graphical exploration of the weight space in three-objective mixed integer linear programs. Eur. J. Oper. Res. 248(1), 72–83 (2016). https://doi.org/10.1016/j.ejor.2015.06.072

Aneja, Y.P., Nair, K.P.: Bicriteria transportation problem. Manag. Sci. 25(1), 73–78 (1979). https://doi.org/10.1287/mnsc.25.1.73

Arora, S., Barak, B.: Computational Complexity: A Modern Approach. Cambridge University Press, Cambridge (2009)

Bökler, F., Ehrgott, M., Morris, C., Mutzel, P.: Output-sensitive complexity of multiobjective combinatorial optimization. J. Multi-Criteria Decision Anal. 24(1–2), 25–36 (2017). https://doi.org/10.1002/mcda.1603.Mcda.1603

Bökler, F., Mutzel, P.: Output-sensitive algorithms for enumerating the extreme nondominated points of multiobjective combinatorial optimization problems. In: N. Bansal, I. Finocchi (eds.) Algorithms - ESA 2015: 23rd Annual European Symposium, Patras, Greece, September 14–16, 2015, Proceedings, pp. 288–299. Springer, Berlin (2015). https://doi.org/10.1007/978-3-662-48350-3_25

Chan, T.M.: Optimal output-sensitive convex hull algorithms in two and three dimensions. Discrete Comput. Geom. 16(4), 361–368 (1996). https://doi.org/10.1007/BF02712873

Cohon, J.L.: Multiobjective Programming and Planning. Dover Books on Computer Science. Dover, Mineola (2004)

Ehrgott, M.: Multicriteria Optimization. Springer, Berlin (2005)

Ehrgott, M., Löhne, A., Shao, L.: A dual variant of benson’s “outer approximation algorithm” for multiple objective linear programming. J. Global Optim. 52(4), 757–778 (2012). https://doi.org/10.1007/s10898-011-9709-y

Figueira, J.R., Fonseca, C.M., Halffmann, P., Klamroth, K., Paquete, L., Ruzika, S., Schulze, B., Stiglmayr, M., Willems, D.: Easy to say they are hard, but hard to see they are easy—towards a categorization of tractable multiobjective combinatorial optimization problems. J. Multi-Criteria Decis. Anal. 24(1–2), 82–98 (2017). https://doi.org/10.1002/mcda.1574

Geoffrion, A.M.: Proper efficiency and the theory of vector maximization. J. Math. Anal. Appl. 22(3), 618–630 (1968). https://doi.org/10.1016/0022-247X(68)90201-1

Glaßer, C., Reitwießner, C., Schmitz, H., Witek, M.: Approximability and hardness in multi-objective optimization. In: F. Ferreira, B. Löwe, E. Mayordomo, L. Mendes Gomes (eds.) Programs, Proofs, Processes. CiE 2010, Lecture Notes in Computer Science, vol. 6158, pp. 180–189. Springer, Berlin (2010)

Gorski, J., Klamroth, K., Ruzika, S.: Connectedness of efficient solutions in multiple objective combinatorial optimization. J. Optim. Theory Appl. 150(3), 475–497 (2011). https://doi.org/10.1007/s10957-011-9849-8

Halffmann, P., Dietz, T., Ruzika, S.: Partial scalarization: A new way to solve multiobjective problems?. Private Communication (2018)

Hamel, A.H., Löhne, A., Rudloff, B.: Benson type algorithms for linear vector optimization and applications. J. Global Optim. 59(4), 811–836 (2014). https://doi.org/10.1007/s10898-013-0098-2