Abstract

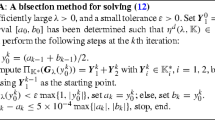

Optimization problems whose objective function and constraints are quadratic polynomials are called quadratically constrained quadratic programs (QCQPs). QCQPs are NP-hard in general and are important in both optimization theory and practice. Many studies have addressed solving QCQPs approximately; among them, semidefinite program (SDP) relaxation is a well-known convex relaxation method. In recent years, many researchers have tried to find better relaxed solutions by adding linear constraints as valid inequalities. However, SDP relaxation requires long computation times and has high space complexity for large-scale problems, so in practice it may not be useful for such problems. In this paper, we propose a new convex relaxation method that is weaker but faster than SDP relaxation methods. The proposed method transforms a QCQP into a Lagrangian dual optimization problem and successively solves subproblems while updating the Lagrange multipliers. Each subproblem is a QCQP with only one constraint, for which we propose an efficient algorithm. Numerical experiments confirm that, with an appropriate termination condition, our method can quickly find a relaxed solution.
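The multiplier update described above can be illustrated generically. The sketch below assumes that the multipliers are kept on the unit simplex (the set written \(\Lambda _{\mathrm {s}}\) in the proofs, which we read as \(\{{\varvec{\lambda }}\ge {\varvec{0}} : \sum _i\lambda _i=1\}\)), and that each step moves along a (quasi-)subgradient and projects back with the sort-based Euclidean projection onto the simplex, in the spirit of Chen and Ye [10]. The function names, the fixed step size, and the update rule itself are illustrative assumptions; the step-size rule and exact update used in the paper may differ.

```python
from typing import List


def project_onto_simplex(y: List[float]) -> List[float]:
    """Euclidean projection of y onto the unit simplex {lam : lam_i >= 0, sum(lam) = 1}."""
    u = sorted(y, reverse=True)
    cumulative = 0.0
    tau = 0.0
    for k, u_k in enumerate(u, start=1):
        cumulative += u_k
        candidate = (cumulative - 1.0) / k
        # The test holds exactly for k = 1..rho, so the last hit gives the threshold.
        if u_k - candidate > 0.0:
            tau = candidate
    return [max(v - tau, 0.0) for v in y]


def multiplier_step(lam: List[float], g: List[float], step: float) -> List[float]:
    """Hypothetical update: move lam along g with a fixed step, then project back."""
    return project_onto_simplex([lam_i + step * g_i for lam_i, g_i in zip(lam, g)])


if __name__ == "__main__":
    lam = [0.5, 0.3, 0.2]
    g = [0.4, -0.1, 0.0]  # hypothetical constraint values at the current subproblem solution
    print(multiplier_step(lam, g, step=0.5))
```

The projection keeps every iterate feasible for the simplex, so the update never leaves the set on which the proofs below work.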

Notes

Block-SDP could not solve one of the ten instances with \(n=10^3\) and \(m=\lfloor 0.3 n\rfloor \), owing to numerical errors that were presumably caused by the large-scale computations.

References

Adachi, S., Iwata, S., Nakatsukasa, Y., Takeda, A.: Solving the trust region subproblem by a generalized eigenvalue problem. SIAM J. Optim. 27, 269–291 (2017)

Al-Khayyal, F.A., Larsen, C., Voorhis, T.V.: A relaxation method for nonconvex quadratically constrained quadratic programs. J. Global Optim. 6, 215–230 (1995)

Anstreicher, K.M.: Semidefinite programming versus the reformulation-linearization technique for nonconvex quadratically constrained quadratic programming. J. Global Optim. 43, 471–484 (2009)

Armijo, L.: Minimization of functions having Lipschitz continuous first partial derivatives. Pac. J. Math. 16, 1–3 (1966)

Audet, C., Hansen, P., Jaumard, B., Savard, G.: A branch and cut algorithm for nonconvex quadratically constrained quadratic programming. Math. Program. 87, 131–152 (2000)

Bai, L., Mitchell, J.E., Pang, J.S.: Using quadratic convex reformulation to tighten the convex relaxation of a quadratic program with complementarity constraints. Optim. Lett. 8, 811–822 (2014)

Boyd, S., Vandenberghe, L.: Convex Optimization. Cambridge University Press, Cambridge (2010)

Boyd, S., Xiao, L., Mutapcic, A.: Subgradient Methods. Lecture Notes of EE392o, Autumn Quarter. Stanford University, Stanford (2003)

Burer, S., Kim, S., Kojima, M.: Faster, but weaker, relaxations for quadratically constrained quadratic programs. Comput. Optim. Appl. 59, 27–45 (2014)

Chen, Y., Ye, X.: Projection onto a simplex (2011). ArXiv:1101.6081v2. Accessed 17 May 2016

Fujie, T., Kojima, M.: Semidefinite programming relaxation for nonconvex quadratic programs. J. Global Optim. 10, 367–380 (1997)

Goemans, M.X.: Semidefinite programming in combinatorial optimization. Math. Program. 79, 143–161 (1997)

Goemans, M.X., Williamson, D.P.: Improved approximation algorithms for maximum cut and satisfiability problems using semidefinite programming. J. Assoc. Comput. Mach. 42, 1115–1145 (1995)

Hu, Y., Yang, X., Sim, C.: Inexact subgradient methods for quasi-convex optimization problems. Eur. J. Oper. Res. 240, 315–327 (2015)

Hu, Y., Yu, C., Li, C.: Stochastic subgradient method for quasi-convex optimization problems. J. Nonlinear Convex Anal. 17, 711–724 (2016)

Jiang, R., Li, D.: Convex relaxations with second order cone constraints for nonconvex quadratically constrained quadratic programming (2016). ArXiv:1608.02096v1. Accessed 8 Dec 2016

Kim, S., Kojima, M.: Second order cone programming relaxation of nonconvex quadratic optimization problems. Optim. Methods Softw. 15, 201–224 (2001)

Lu, C., Fang, S., Jin, Q., Wang, Z., Xing, W.: KKT solution and conic relaxation for solving quadratically constrained quadratic programming problems. SIAM J. Optim. 21, 1475–1490 (2011)

Luo, Z.Q., Ma, W.K., So, A.M.C., Ye, Y., Zhang, S.: Semidefinite relaxation of quadratic optimization problems. IEEE Signal Process. Mag. 27, 20–34 (2010)

Moré, J.J., Sorensen, D.C.: Computing a trust region step. SIAM J. Sci. Stat. Comput. 4, 553–572 (1983)

Nocedal, J., Wright, S.J.: Numerical Optimization. Springer, Berlin (2006)

Novak, I.: Dual bounds and optimality cuts for all-quadratic programs with convex constraints. J. Global Optim. 18, 337–356 (2000)

Pardalos, P.M., Vavasis, S.A.: Quadratic programming with one negative eigenvalue is NP-hard. J. Global Optim. 1, 15–22 (1991)

Rendl, F., Rinaldi, G., Wiegele, A.: Solving max-cut to optimality by intersecting semidefinite and polyhedral relaxations. Math. Program. Ser. A 121, 307–335 (2010)

SeDuMi optimization over symmetric cones. http://sedumi.ie.lehigh.edu/

Sherali, H.D., Fraticelli, B.M.P.: Enhancing RLT relaxation via a new class of semidefinite cuts. J. Global Optim. 22, 233–261 (2002)

Sturm, J.F., Zhang, S.: On cones of nonnegative quadratic functions. Math. Oper. Res. 28, 246–267 (2003)

Tuy, H.: On solving nonconvex optimization problems by reducing the duality gap. J. Global Optim. 32, 349–365 (2005)

Vandenberghe, L., Boyd, S.: Semidefinite programming. SIAM Rev. 38, 49–95 (1996)

Voorhis, T.V.: A global optimization algorithm using Lagrangian underestimates and the interval Newton method. J. Global Optim. 24, 349–370 (2002)

Zheng, X.J., Sun, X.L., Li, D.: Convex relaxations for nonconvex quadratically constrained quadratic programming: matrix cone decomposition and polyhedral approximation. Math. Program. 129, 301–329 (2011)

Zheng, X.J., Sun, X.L., Li, D.: Nonconvex quadratically constrained quadratic programming: best D.C. decompositions and their SDP representations. J. Global Optim. 50, 695–712 (2011)

Additional information

This research was done at The University of Tokyo before the author joined the company; it is not research conducted by the company.

Proofs of Theorems


Proof of Theorem 1

Proof

The vector \(\tilde{{\varvec{g}}}_{{{\varvec{\lambda }}}}\) of (21) can be found from

We prove that the vector \(\tilde{{\varvec{g}}}_{{{\varvec{\lambda }}}}\) is in the quasi-subdifferential \(\partial \psi \) defined by (22). Note that in [14, 15] the quasi-subdifferential is defined for quasi-convex functions, whereas \(\psi \) is quasi-concave; (22) is therefore a modification of the original definition of \(\partial \psi \) for quasi-convex functions. We further rewrite (22) as

Now we show that \(\tilde{{\varvec{g}}}_{{{\varvec{\lambda }}}}\) belongs to (37). When \(\bar{\mu }_{{{\varvec{\lambda }}}}=0\), \(\tilde{{\varvec{g}}}_{{{\varvec{\lambda }}}}={\varvec{0}}\) satisfies (22), so \(\tilde{{\varvec{g}}}_{{{\varvec{\lambda }}}}\in \partial \psi ({\varvec{\lambda }})\). When \(\bar{\mu }_{{{\varvec{\lambda }}}}>0\), it suffices to consider the vector

instead of \(\tilde{{\varvec{g}}}_{{{\varvec{\lambda }}}}\) because \(\partial \psi ({\varvec{\lambda }})\) forms a cone. Then, since \(\bar{{\varvec{x}}}_{{{\varvec{\lambda }}}}\) is feasible for \({\varvec{\lambda }}\), we have

From the definition of \({\varvec{g}}_{{{\varvec{\lambda }}}}\), we can rewrite (38) as

Then, we have to prove that for an arbitrary vector \({\varvec{\nu }}\) which satisfies \({{\varvec{g}}_{{{\varvec{\lambda }}}}}^\top {\varvec{\nu }}<{{\varvec{g}}_{{{\varvec{\lambda }}}}}^\top {\varvec{\lambda }}\),

holds. From (39), we get \({{\varvec{g}}_{{{\varvec{\lambda }}}}}^\top {\varvec{\nu }}\le 0\), which means that \(\bar{{\varvec{x}}}_{{{\varvec{\lambda }}}}\) is feasible for \({\varvec{\nu }}\). Hence, the optimal value of (17) for \({\varvec{\nu }}\), attained at an optimal solution \(\bar{{\varvec{x}}}_{{{\varvec{\nu }}}}\), is less than or equal to the objective value at \(\bar{{\varvec{x}}}_{{{\varvec{\lambda }}}}\), which is the optimal value of (17) for \({\varvec{\lambda }}\). Therefore, we have \(\psi ({\varvec{\nu }})\le \psi ({\varvec{\lambda }})\).
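The monotonicity argument can be written as one chain. Here we assume, as the proof uses implicitly, that \(f_i({\varvec{x}}):={\varvec{x}}^\top Q_i{\varvec{x}}+2{\varvec{q}}_i^\top {\varvec{x}}+\gamma _i\) (our notation), that \({\varvec{g}}_{{{\varvec{\lambda }}}}=(f_1(\bar{{\varvec{x}}}_{{{\varvec{\lambda }}}}),\dots ,f_m(\bar{{\varvec{x}}}_{{{\varvec{\lambda }}}}))^\top \), that (17) minimizes \(f_0\) subject to \(\sum _i\lambda _i f_i({\varvec{x}})\le 0\), and that \(\psi \) equals its optimal value; this is our reading of the displays not reproduced here:

\[
{{\varvec{g}}_{{{\varvec{\lambda }}}}}^\top {\varvec{\nu }}=\sum _{i=1}^m \nu _i f_i(\bar{{\varvec{x}}}_{{{\varvec{\lambda }}}})\le 0
\;\Longrightarrow \;
\psi ({\varvec{\nu }})=\min _{{\varvec{x}}}\Bigl \{f_0({\varvec{x}}) : \sum _{i=1}^m \nu _i f_i({\varvec{x}})\le 0\Bigr \}\le f_0(\bar{{\varvec{x}}}_{{{\varvec{\lambda }}}})=\psi ({\varvec{\lambda }}).
\]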

Next, we prove (ii)–(iv). First, \(\phi ({\varvec{\lambda }})\) defined by (3) is concave in \({\varvec{\lambda }}\); this is a general property of the objective function of the Lagrangian dual problem (see, e.g., [7]). Furthermore, \(\psi ({\varvec{\lambda }})\) is the maximum value of \(\phi (\mu {\varvec{\lambda }})\) over \(\mu \ge 0\), so taking \(\mu =0\) shows that \(\psi ({\varvec{\lambda }})\ge \phi ({\varvec{0}})\) holds for all \({\varvec{\lambda }}\in \Lambda _{\mathrm {s}}\).
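Both facts follow directly from the definitions. Assuming (3) is the usual Lagrangian dual function \(\phi ({\varvec{\lambda }})=\inf _{{\varvec{x}}}L({\varvec{x}},{\varvec{\lambda }})\) with \(L({\varvec{x}},{\varvec{\lambda }}):=f_0({\varvec{x}})+\sum _i\lambda _i f_i({\varvec{x}})\) (the symbols \(L\) and \(f_i\) are our notation), \(L\) is affine in \({\varvec{\lambda }}\) for each fixed \({\varvec{x}}\), so for any \(\theta \in [0,1]\):

\[
\phi (\theta {\varvec{\lambda }}_1+(1-\theta ){\varvec{\lambda }}_2)
=\inf _{{\varvec{x}}}\bigl [\theta L({\varvec{x}},{\varvec{\lambda }}_1)+(1-\theta )L({\varvec{x}},{\varvec{\lambda }}_2)\bigr ]
\ge \theta \,\phi ({\varvec{\lambda }}_1)+(1-\theta )\,\phi ({\varvec{\lambda }}_2),
\qquad
\psi ({\varvec{\lambda }})=\max _{\mu \ge 0}\phi (\mu {\varvec{\lambda }})\ge \phi (0\cdot {\varvec{\lambda }})=\phi ({\varvec{0}}).
\]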

We show (ii) first. Let \({\varvec{\lambda }}_1,{\varvec{\lambda }}_2\;({\varvec{\lambda }}_1\ne {\varvec{\lambda }}_2)\) be arbitrary points in \(\Lambda _{\mathrm {s}}\), and let \(\bar{\mu }_1\) and \(\bar{\mu }_2\) be optimal solutions of (16) for fixed \({\varvec{\lambda }}_1\) and \({\varvec{\lambda }}_2\), respectively. Without loss of generality, we assume that \(\psi ({\varvec{\lambda }}_1)\ge \psi ({\varvec{\lambda }}_2)\). It then suffices to prove that, for any fixed \(\alpha \in [0,1]\),

where \({\varvec{\lambda }}_\alpha :=\alpha {\varvec{\lambda }}_1+(1-\alpha ){\varvec{\lambda }}_2\). If \({\varvec{\lambda }}_1\notin \Lambda _+\) or \({\varvec{\lambda }}_2\notin \Lambda _+\), we get \(\psi ({\varvec{\lambda }}_2)=\phi ({\varvec{0}})\) (recall that \(\phi ({\varvec{0}})\le \psi ({\varvec{\lambda }}_2)\le \psi ({\varvec{\lambda }}_1)\)), and (40) holds because \(\psi ({\varvec{\lambda }}_\alpha )\ge \phi ({\varvec{0}})\). Therefore, we only have to consider the case in which both \({\varvec{\lambda }}_1\) and \({\varvec{\lambda }}_2\) are in \(\Lambda _+\), which implies that \(\bar{\mu }_1\) and \(\bar{\mu }_2\) are positive by (20). Since \(\phi ({\varvec{\lambda }})\) is concave in \({\varvec{\lambda }}\), we can see that for any \(\beta \in [0,1]\),

where \({\varvec{\xi }}(\beta ):=\beta \bar{\mu }_1{\varvec{\lambda }}_1+(1-\beta )\bar{\mu }_2{\varvec{\lambda }}_2\). Accordingly, we can confirm that there exists

which satisfies

For this \(\bar{\beta }\), we get

where \(\bar{\mu }_{{{\varvec{\lambda }}}_\alpha }\) is an optimal solution for \({\varvec{\lambda }}_\alpha \). Therefore, (ii) holds.
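One explicit choice of \(\bar{\beta }\) can be computed by hand. Assuming that the condition on \(\bar{\beta }\) asks for \({\varvec{\xi }}(\bar{\beta })\) to be a positive multiple of \({\varvec{\lambda }}_\alpha \) (our reading, since the display is not reproduced here), matching the coefficients of \({\varvec{\lambda }}_1\) and \({\varvec{\lambda }}_2\) gives \(\bar{\beta }=\alpha \bar{\mu }_2/(\alpha \bar{\mu }_2+(1-\alpha )\bar{\mu }_1)\) and \({\varvec{\xi }}(\bar{\beta })=\mu {\varvec{\lambda }}_\alpha \) with \(\mu =\bar{\mu }_1\bar{\mu }_2/(\alpha \bar{\mu }_2+(1-\alpha )\bar{\mu }_1)\). The sketch below checks this numerically; the function names are hypothetical.

```python
from typing import List, Tuple


def rescaling_weight(alpha: float, mu1: float, mu2: float) -> Tuple[float, float]:
    """Return (beta_bar, mu) such that
    beta_bar*mu1*lam1 + (1 - beta_bar)*mu2*lam2 == mu*(alpha*lam1 + (1 - alpha)*lam2)
    for all vectors lam1, lam2, assuming mu1, mu2 > 0 and 0 <= alpha <= 1."""
    if mu1 <= 0.0 or mu2 <= 0.0:
        raise ValueError("mu1 and mu2 must be positive")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    denom = alpha * mu2 + (1.0 - alpha) * mu1  # positive because mu1, mu2 > 0
    return alpha * mu2 / denom, mu1 * mu2 / denom


def xi(beta: float, mu1: float, lam1: List[float], mu2: float, lam2: List[float]) -> List[float]:
    """xi(beta) = beta*mu1*lam1 + (1 - beta)*mu2*lam2, computed componentwise."""
    return [beta * mu1 * a + (1.0 - beta) * mu2 * b for a, b in zip(lam1, lam2)]


if __name__ == "__main__":
    lam1, lam2, mu1, mu2, alpha = [0.2, 0.8], [0.6, 0.4], 2.0, 0.5, 0.3
    beta_bar, mu = rescaling_weight(alpha, mu1, mu2)
    lam_alpha = [alpha * a + (1.0 - alpha) * b for a, b in zip(lam1, lam2)]
    print(beta_bar, mu)
    print(xi(beta_bar, mu1, lam1, mu2, lam2), [mu * v for v in lam_alpha])
```

Because \(\bar{\beta }\in [0,1]\) and \(\mu >0\), the concavity bound on the segment \({\varvec{\xi }}(\beta )\) transfers to a point on the ray through \({\varvec{\lambda }}_\alpha \), which is what the comparison with \(\psi ({\varvec{\lambda }}_\alpha )\) needs.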

We can easily prove (iii). In the above proof of (ii), we assumed that \({\varvec{\lambda }}_1\) and \({\varvec{\lambda }}_2\) are in \(\Lambda _+\). Then, (41) means that \(\psi ({\varvec{\lambda }}_\alpha )\ge \psi ({\varvec{\lambda }}_2)>\phi ({\varvec{0}})\), and hence \({\varvec{\lambda }}_\alpha \in \Lambda _+\) for any \(\alpha \in [0,1]\).

Lastly, we prove (iv). Let \({\varvec{\lambda }}^\dag \) be an arbitrary stationary point in \(\Lambda _+\), and let \(\bar{\mu }_{{{\varvec{\lambda }}}^\dag }\) be an optimal solution of (16) for \({\varvec{\lambda }}^\dag \). Moreover, let \(\bar{{\varvec{x}}}_{{{\varvec{\lambda }}}^\dag }\) denote an optimal solution of the minimization problem that defines \(\phi (\bar{\mu }_{{{\varvec{\lambda }}}^\dag }{\varvec{\lambda }}^\dag )\). From (21) and (20), we have

On the other hand, it can be confirmed that

is a subgradient of \(\phi \) at \({\varvec{\xi }}^\dag \), where \({\varvec{\xi }}^\dag =\bar{\mu }_{{{\varvec{\lambda }}}^\dag }{\varvec{\lambda }}^\dag \). Hence, (42) implies that \(\bar{\mu }_{{{\varvec{\lambda }}}^\dag }{\varvec{\lambda }}^\dag \) is a stationary point of \(\phi \). Since \(\phi \) is concave, every stationary point is a global optimal solution. Therefore, \(\bar{\mu }_{{{\varvec{\lambda }}}^\dag }{\varvec{\lambda }}^\dag \) is a global optimal solution of \(\phi \), and \({\varvec{\lambda }}^\dag \) is also a global optimal solution of \(\psi \). \(\square \)
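The subgradient claim is the standard one for dual functions. Assuming the vector in question is the vector of constraint values \({\varvec{g}}^\dag :=(f_1(\bar{{\varvec{x}}}_{{{\varvec{\lambda }}}^\dag }),\dots ,f_m(\bar{{\varvec{x}}}_{{{\varvec{\lambda }}}^\dag }))^\top \) at the minimizer defining \(\phi ({\varvec{\xi }}^\dag )\), with \(f_i\) and \(L({\varvec{x}},{\varvec{\lambda }}):=f_0({\varvec{x}})+\sum _i\lambda _i f_i({\varvec{x}})\) as in the usual Lagrangian (our notation), every \({\varvec{\nu }}\) satisfies

\[
\phi ({\varvec{\nu }})\le L(\bar{{\varvec{x}}}_{{{\varvec{\lambda }}}^\dag },{\varvec{\nu }})
=L(\bar{{\varvec{x}}}_{{{\varvec{\lambda }}}^\dag },{\varvec{\xi }}^\dag )+({\varvec{\nu }}-{\varvec{\xi }}^\dag )^\top {\varvec{g}}^\dag
=\phi ({\varvec{\xi }}^\dag )+({\varvec{g}}^\dag )^\top ({\varvec{\nu }}-{\varvec{\xi }}^\dag ).
\]

So \({\varvec{g}}^\dag \) is a subgradient of the concave function \(\phi \) at \({\varvec{\xi }}^\dag \) (a supergradient in convex-analysis terms), and \({\varvec{g}}^\dag ={\varvec{0}}\) gives \(\phi ({\varvec{\nu }})\le \phi ({\varvec{\xi }}^\dag )\) for all \({\varvec{\nu }}\).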

Proof of Theorem 2

Proof

Let \(\Delta \) be the feasible region for (\({\varvec{x}},t\)) of (23) and \(\Delta _{\mathrm {rel}}\) be the feasible region of the relaxed problem (26). Let \(\mathrm {conv}(\Delta )\) be the convex hull of \(\Delta \). We will prove that \(\Delta _{\mathrm {rel}}=\mathrm {conv}(\Delta )\). In this proof, we write “\({\varvec{a}}\xleftarrow {conv}\{{\varvec{b}},{\varvec{c}}\}\)” if

holds. By definition, \(\Delta _{\mathrm {rel}}\) is convex and \(\Delta \subseteq \Delta _{\mathrm {rel}}\). Since the convex hull is the smallest convex set that includes \(\Delta \), it follows that \(\mathrm {conv}(\Delta )\subseteq \Delta _{\mathrm {rel}}\). Moreover, the convex hull consists of all convex combinations of points in \(\Delta \). Therefore, if the proposition

holds, then \(\Delta _{\mathrm {rel}}\subseteq \mathrm {conv}(\Delta )\), and hence \(\Delta _{\mathrm {rel}}=\mathrm {conv}(\Delta )\). To show (43), let us choose an arbitrary point \(({\varvec{x}}^*,t)\in \Delta _{\mathrm {rel}}\), and let \(t^*\) be the smallest value of t such that \(({\varvec{x}}^*,t)\in \Delta _{\mathrm {rel}}\). Since \(({\varvec{x}}^*,t)\in \Delta _{\mathrm {rel}}\) holds for all \(t\ge t^*\), if

holds, then for any \(\delta _t\ge 0\), the point \(({\varvec{x}}^*,t^*+\delta _t)\in \Delta _{\mathrm {rel}}\) satisfies \(({\varvec{x}}^*,t^*+\delta _t)\xleftarrow {conv} \{({\varvec{x}}_1,t_1+\delta _t), ({\varvec{x}}_2,t_2+\delta _t)\}\), where both points on the right-hand side are in \(\Delta \). Therefore, it is sufficient to focus on the case \(t=t^*\).
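The shift argument is a one-line computation. Assuming \({\varvec{a}}\xleftarrow {conv}\{{\varvec{b}},{\varvec{c}}\}\) means \({\varvec{a}}=\theta {\varvec{b}}+(1-\theta ){\varvec{c}}\) for some \(\theta \in [0,1]\) (the defining display is not reproduced here), (44) gives

\[
\theta ({\varvec{x}}_1,t_1+\delta _t)+(1-\theta )({\varvec{x}}_2,t_2+\delta _t)
=\bigl (\theta {\varvec{x}}_1+(1-\theta ){\varvec{x}}_2,\;\theta t_1+(1-\theta )t_2+\delta _t\bigr )
=({\varvec{x}}^*,t^*+\delta _t),
\]

because the two weights sum to one, so the same \(\theta \) works for every shift \(\delta _t\).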

To prove (44), we first claim that if a point \(({\varvec{x}},t)\in \Delta _{\mathrm {rel}}\) satisfies both inequalities of (26) with equality, then the point is also in \(\Delta \). Setting both inequalities of (26) to equality and taking their difference, we see from \(\underline{\sigma }<\bar{\sigma }\) that \({\varvec{x}}^\top Q_{{{\varvec{\lambda }}}}{\varvec{x}}+2{\varvec{q}}_{{{\varvec{\lambda }}}}^\top {\varvec{x}}+\gamma _{{{\varvec{\lambda }}}}=0\). It then follows that \({\varvec{x}}^\top Q_0{\varvec{x}}+2{\varvec{q}}_0^\top {\varvec{x}}+\gamma _0=t\). Therefore, \(({\varvec{x}},t)\) is feasible for (23) and lies in \(\Delta \). In what follows, we focus on the case in which only one of the two inequalities is active.
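Written out, and assuming the two inequalities of (26) take the form \({\varvec{x}}^\top (Q_0+\sigma Q_{{{\varvec{\lambda }}}}){\varvec{x}}+2({\varvec{q}}_0+\sigma {\varvec{q}}_{{{\varvec{\lambda }}}})^\top {\varvec{x}}+\gamma _0+\sigma \gamma _{{{\varvec{\lambda }}}}\le t\) for \(\sigma \in \{\underline{\sigma },\bar{\sigma }\}\) (our reading, consistent with the last paragraph of this proof), the equality case reads as follows, with \(f_0({\varvec{x}}):={\varvec{x}}^\top Q_0{\varvec{x}}+2{\varvec{q}}_0^\top {\varvec{x}}+\gamma _0\) and \(f_{{{\varvec{\lambda }}}}({\varvec{x}}):={\varvec{x}}^\top Q_{{{\varvec{\lambda }}}}{\varvec{x}}+2{\varvec{q}}_{{{\varvec{\lambda }}}}^\top {\varvec{x}}+\gamma _{{{\varvec{\lambda }}}}\) (our notation):

\[
f_0({\varvec{x}})+\bar{\sigma }f_{{{\varvec{\lambda }}}}({\varvec{x}})=t=f_0({\varvec{x}})+\underline{\sigma }f_{{{\varvec{\lambda }}}}({\varvec{x}})
\;\Longrightarrow \;(\bar{\sigma }-\underline{\sigma })\,f_{{{\varvec{\lambda }}}}({\varvec{x}})=0
\;\Longrightarrow \;f_{{{\varvec{\lambda }}}}({\varvec{x}})=0\ \text {and}\ f_0({\varvec{x}})=t,
\]

where the middle implication uses \(\underline{\sigma }<\bar{\sigma }\).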

Then, we have to prove (44) in both cases, \(Q_{{{\varvec{\lambda }}}}\succeq O\) and \(Q_{{{\varvec{\lambda }}}}\nsucceq O\). Due to space limitations, we show only the harder case, \(Q_{{{\varvec{\lambda }}}}\nsucceq O\), which implies \(0<\bar{\sigma }<\infty \); the proof of the other case is almost the same. In what follows, we look for two points in \(\Delta \) (i.e., points that satisfy both inequalities of (26) with equality). Figure 3 illustrates \(\Delta \) and \(\Delta _{\mathrm {rel}}\); in the figure, the points we seek are P and Q.

Since \(t^*\) is the smallest value of t for \({\varvec{x}}^*\), the point \(({\varvec{x}}^*,t^*)\) satisfies at least one of the two inequalities of (26) with equality. Here, we claim that if \(({\varvec{x}}^*,t^*)\notin \Delta \), the matrix \(Q_0+\sigma Q_{{{\varvec{\lambda }}}}\) (\(\sigma \in \{\bar{\sigma },\underline{\sigma }\}\)) in the active inequality has at least one zero eigenvalue, i.e., a nontrivial kernel (the claim is proved at the end of this proof). We denote the matrix in the inactive inequality by \(Q_0+\sigma ' Q_{{{\varvec{\lambda }}}}\), where \(\sigma '\) is the other element of \(\{\bar{\sigma },\underline{\sigma }\}\). Using \(\sigma \) and \(\sigma '\), the conditions that \(({\varvec{x}}^*,t^*)\) satisfies in (26) can be written as follows:

Since \(Q_0+\sigma Q_{{{\varvec{\lambda }}}}\;(\succeq O)\) has a zero eigenvalue, we can decompose \({\varvec{x}}^*\) into

Substituting these expressions into the constraints of (45), we get

By fixing \({\varvec{u}}^*\) and \({\varvec{v}}^*\), we can see that (47) is of the form,

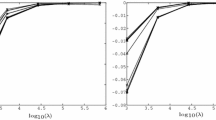

where \((A,B,\alpha ,\beta ,\gamma )\) are appropriate constants. Here, we regard \(\tau ^*\) and \(t^*\) in (48) as variables \((\tau , t)\) and illustrate (48) in Fig. 9, where the bold line shows the feasible region of (26) for fixed \({\varvec{u}}^*\) and \({\varvec{v}}^*\). Note that the line and the region on or above the parabola have at least one common point, namely \((\tau ^*,t^*)\), which corresponds to \(({\varvec{x}}^*, t^*)\). Both points P\((\tau _1,t_1)\) and Q\((\tau _2,t_2)\) in Fig. 9 satisfy both formulas of (48) with equality, so these points are in \(\Delta \). Furthermore, Fig. 9 shows that \((\tau ^*,t^*)\xleftarrow {conv}\{(\tau _1,t_1),(\tau _2,t_2)\}\). Therefore, (44) holds for any \(({\varvec{x}}^*,t^*)\).
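The reason the active constraint becomes a line while the inactive one becomes a parabola in \(\tau \) can be seen by expanding the quadratic terms. Assuming (46) writes \({\varvec{x}}^*={\varvec{u}}^*+\tau ^*{\varvec{v}}^*\) with \({\varvec{v}}^*\in \mathrm {Ker}(Q_0+\sigma Q_{{{\varvec{\lambda }}}})\) (consistent with the direction \(\pm {\varvec{v}}^*\) in Remark 3), for any symmetric matrix \(M\):

\[
({\varvec{u}}^*+\tau {\varvec{v}}^*)^\top M({\varvec{u}}^*+\tau {\varvec{v}}^*)
={{\varvec{u}}^*}^\top M{\varvec{u}}^*+2\tau \,{{\varvec{u}}^*}^\top M{\varvec{v}}^*+\tau ^2\,{{\varvec{v}}^*}^\top M{\varvec{v}}^*.
\]

With \(M=Q_0+\sigma Q_{{{\varvec{\lambda }}}}\) we have \(M{\varvec{v}}^*={\varvec{0}}\), so the last two terms vanish; assuming the linear part of each inequality is \(2({\varvec{q}}_0+\sigma {\varvec{q}}_{{{\varvec{\lambda }}}})^\top {\varvec{x}}\), the active constraint is therefore affine in \(\tau \). With \(M=Q_0+\sigma 'Q_{{{\varvec{\lambda }}}}\), the coefficient of \(\tau ^2\) is \({{\varvec{v}}^*}^\top (Q_0+\sigma 'Q_{{{\varvec{\lambda }}}}){\varvec{v}}^*\), which is the constant \(\gamma \) of (48).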

Now let us check that \(\gamma >0\), which shows that the second formula of (48) indeed describes a parabola. In (48), \(\gamma ={{\varvec{v}}^*}^\top (Q_0+\sigma 'Q_{{{\varvec{\lambda }}}}){\varvec{v}}^*\ge 0\) because \(Q_0+\sigma 'Q_{{{\varvec{\lambda }}}}\succeq O\). If \(\gamma =0\), then, since \(Q_0+\sigma 'Q_{{{\varvec{\lambda }}}}\) is positive semidefinite, \({\varvec{v}}^*\in \mathrm {Ker}(Q_0+\sigma 'Q_{{{\varvec{\lambda }}}})\), i.e., \((Q_0+\sigma 'Q_{{{\varvec{\lambda }}}}){\varvec{v}}^*={\varvec{0}}\). Meanwhile, by the definition of \({\varvec{v}}^*\), we have \((Q_0+\sigma Q_{{{\varvec{\lambda }}}}){\varvec{v}}^*={\varvec{0}}\). Since \(\sigma \ne \sigma '\), subtracting these two equations gives \(Q_{{{\varvec{\lambda }}}}{\varvec{v}}^*={\varvec{0}}\) and then \(Q_0{\varvec{v}}^*={\varvec{0}}\); hence \({\varvec{v}}^*\in \mathrm {Ker}(Q_0)\cap \mathrm {Ker}(Q_{{{\varvec{\lambda }}}})\), which contradicts the Dual Slater condition.
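The construction of P and Q can be made concrete. The sketch below assumes that (48) consists of the active line \(t=A+\alpha \tau \) (as in Remark 3) and the inactive inequality \(t\ge B+\beta \tau +\gamma \tau ^2\) with \(\gamma >0\); the exact placement of the constants \(B\) and \(\beta \) in (48) is our assumption. It intersects the line with the parabola and returns the weight \(\theta \) with \(\tau ^*=\theta \tau _1+(1-\theta )\tau _2\). The function name is hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def segment_endpoints(A: float, alpha: float, B: float, beta: float, gamma: float,
                      tau_star: float) -> Tuple[Point, Point, float]:
    """Intersect the line t = A + alpha*tau with the parabola t = B + beta*tau + gamma*tau**2
    (gamma > 0) and return P, Q and theta with tau_star = theta*tau_1 + (1 - theta)*tau_2."""
    if gamma <= 0.0:
        raise ValueError("gamma must be positive so that the parabola opens upward")
    # Intersections solve gamma*tau^2 + (beta - alpha)*tau + (B - A) = 0.
    a, b, c = gamma, beta - alpha, B - A
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        raise ValueError("the line does not meet the parabola")
    root = math.sqrt(disc)
    tau1, tau2 = (-b - root) / (2.0 * a), (-b + root) / (2.0 * a)
    P = (tau1, A + alpha * tau1)
    Q = (tau2, A + alpha * tau2)
    # Weight of P in the convex combination (theta = 1 if P and Q coincide).
    theta = 1.0 if tau2 == tau1 else (tau2 - tau_star) / (tau2 - tau1)
    if not -1e-12 <= theta <= 1.0 + 1e-12:
        raise ValueError("tau_star lies outside the segment PQ")
    return P, Q, theta


if __name__ == "__main__":
    P, Q, theta = segment_endpoints(A=0.0, alpha=0.0, B=-1.0, beta=0.0, gamma=1.0, tau_star=0.5)
    print(P, Q, theta)
```

For example, with \(A=\alpha =\beta =0\), \(B=-1\), \(\gamma =1\) and \(\tau ^*=0.5\), the endpoints are \(\tau _1=-1\) and \(\tau _2=1\), and \(\theta =0.25\). Since P, Q and \((\tau ^*,t^*)\) all lie on the same line, the same weight also reproduces \(t^*\).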

Finally, we prove that \(Q_0+\sigma Q_{{{\varvec{\lambda }}}}\;(\succeq O)\) has a zero eigenvalue if \(({\varvec{x}}^*,t^*)\notin \Delta \). From the definitions of \(\bar{\sigma }\) and \(\underline{\sigma }\) (see (24) and (25)), \(Q_0+\bar{\sigma }Q_{{{\varvec{\lambda }}}}\) (resp. \(Q_0+\underline{\sigma }Q_{{{\varvec{\lambda }}}}\)) has a zero eigenvalue if \(\bar{\sigma }\) (resp. \(\underline{\sigma }\)) is positive. Moreover, the Dual Slater condition gives \(\bar{\sigma }>0\), so \(Q_0+\bar{\sigma }Q_{{{\varvec{\lambda }}}}\) always has a zero eigenvalue. Therefore, we only have to consider the case \(\underline{\sigma }=0\), i.e., \(Q_0+\underline{\sigma } Q_{{{\varvec{\lambda }}}}=Q_0\), which implies \(Q_0\succeq O\). If \(Q_0\) does not have a zero eigenvalue (i.e., \(Q_0\succ O\)), the claim does not hold. However, in this case \(({\varvec{x}}^*,t^*)\) is already feasible for (23) (i.e., \(({\varvec{x}}^*,t^*)\in \Delta \)), so we do not need to consider it. Its feasibility for (23) can be checked by subtracting the equality with \(\underline{\sigma }\;(=0)\) from the inequality with \(\bar{\sigma }\) and dividing the resulting inequality by \(\bar{\sigma }\;(>0)\). \(\square \)
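The endpoints \(\underline{\sigma }\) and \(\bar{\sigma }\) can be approximated numerically. This sketch assumes that (24) and (25) define them as the smallest and largest \(\sigma \ge 0\) with \(Q_0+\sigma Q_{{{\varvec{\lambda }}}}\succeq O\), and that some \(\sigma _0\ge 0\) with \(Q_0+\sigma _0Q_{{{\varvec{\lambda }}}}\succ O\) is known (a Dual Slater point). Because the smallest eigenvalue of \(Q_0+\sigma Q_{{{\varvec{\lambda }}}}\) is concave in \(\sigma \), the set of \(\sigma \) where the matrix is positive definite is an interval, so bisection with a Cholesky test finds its endpoints; at a positive, finite endpoint the matrix has a zero eigenvalue, as used above. Reading a positive-definite result at a large cap \(\sigma _{\max }\) as \(\bar{\sigma }=\infty \) is a heuristic of ours, and all names are hypothetical.

```python
import math
from typing import Callable, List, Tuple

Matrix = List[List[float]]


def is_positive_definite(M: Matrix) -> bool:
    """Return True if the symmetric matrix M admits a Cholesky factorization."""
    n = len(M)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = M[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    return False
                L[i][i] = math.sqrt(s)
            else:
                L[i][j] = s / L[j][j]
    return True


def pencil(Q0: Matrix, Ql: Matrix, sigma: float) -> Matrix:
    """Entrywise Q0 + sigma * Ql."""
    return [[a + sigma * b for a, b in zip(r0, r1)] for r0, r1 in zip(Q0, Ql)]


def _bisect_boundary(pd: Callable[[float], bool], inside: float, outside: float,
                     tol: float) -> float:
    """Shrink the bracket so that pd(inside) stays True and pd(outside) stays False."""
    for _ in range(200):  # hard cap guards against tol below floating-point resolution
        if abs(outside - inside) <= tol:
            break
        mid = 0.5 * (inside + outside)
        if pd(mid):
            inside = mid
        else:
            outside = mid
    return inside


def sigma_bounds(Q0: Matrix, Ql: Matrix, sigma0: float,
                 sigma_max: float = 1e6, tol: float = 1e-10) -> Tuple[float, float]:
    """Approximate (sigma_lower, sigma_upper) around a known positive-definite point sigma0."""
    def pd(s: float) -> bool:
        return is_positive_definite(pencil(Q0, Ql, s))

    if sigma0 < 0.0 or not pd(sigma0):
        raise ValueError("need sigma0 >= 0 with Q0 + sigma0*Ql positive definite")
    lower = 0.0 if pd(0.0) else _bisect_boundary(pd, sigma0, 0.0, tol)
    upper = math.inf if pd(sigma_max) else _bisect_boundary(pd, sigma0, sigma_max, tol)
    return lower, upper


if __name__ == "__main__":
    Q0 = [[2.0, 0.0], [0.0, -1.0]]
    Ql = [[-1.0, 0.0], [0.0, 1.0]]
    print(sigma_bounds(Q0, Ql, sigma0=1.5))
```

In this example \(Q_0+\sigma Q_{{{\varvec{\lambda }}}}=\mathrm {diag}(2-\sigma ,\sigma -1)\), so the returned values approach \(\underline{\sigma }=1\) and \(\bar{\sigma }=2\), where one diagonal entry vanishes. If \(Q_0\) is positive semidefinite but singular, the lower value is only approximately zero, since the Cholesky test checks strict definiteness.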

Remark 3

This proof suggests that if an optimal solution \({\varvec{x}}^*\) of (26) is found and \({\varvec{x}}^*\) is not feasible for (23), we can still find an optimal feasible solution of (23). Let \(({\varvec{x}}^*,t^*)\) be an optimal solution of (26). Since \(t^*\) is the smallest value of t attainable on the bold line in Fig. 9, either the slope \(\alpha \) of the line \(A+\alpha \tau ^*=t^*\) is zero, or \(({\varvec{x}}^*,t^*)\) coincides with the end point P or Q and is therefore already feasible for (23). In the former case, we can find an optimal feasible solution of (23) by moving \({\varvec{x}}^*\) in the direction of \(\pm {\varvec{v}}^*\) defined in (46). SLR uses this procedure to find an optimal feasible solution of (\(\mathrm {P}_k\)).

Cite this article

Yamada, S., Takeda, A. Successive Lagrangian relaxation algorithm for nonconvex quadratic optimization. J Glob Optim 71, 313–339 (2018). https://doi.org/10.1007/s10898-018-0617-2

DOI: https://doi.org/10.1007/s10898-018-0617-2