Abstract

The use of virtual design studios (VDS) in practice-based STEM education is increasing but requires further research to inform understanding of student learning and success. This paper presents a longitudinal, large-scale study (3 years, 3000 students) of student behaviour in an online design studio used as part of a distance learning Design and Innovation qualification, within the School of Engineering and Innovation at The Open University (UK). The sample size and time period of the study are unprecedented and provide unique insights into student behaviours. Moderate correlations between overall VDS use and student success were identified in early stages of study but were weaker in later stages. Detailed results identify specific behaviour correlations, such as ‘listening-in’ (viewing other students’ work) and student success, as well as behaviour shifts from ‘passive’ to ‘active’ engagement. Strong intrinsic motivations for engagement were observed throughout and selected social learning mechanisms are presented to explain the empirical results, specifically: social comparison, presence, and communities of practice. The contribution of this paper is the framing of these mechanisms as steps in the longitudinal development of design students in a distance setting, providing an informed basis for the understanding, design, and application of virtual design studios.

Introduction

The design studio is a signature pedagogy in art and design education (Crowther 2013; Shulman 2005). It provides a physical, social and cultural place within which students can simulate real world practice without the associated risks and with expert practitioner and pedagogical support in the form of the studio tutor (Schön 1987; Kimbell 2011). But the studio is changing in higher education and many schools now augment or even replace physical (proximate) studios with virtual design studios (VDS) of some kind (Rodriguez et al. 2018; Robbie and Zeeng 2012; Arvola and Artman 2008). In addition, the continuing growth of distributed digital prototyping and design (such as Building Information Modelling (BIM) or Product Lifecycle Management (PLM)) requires a shift in how educators prepare students for professional collaboration (Jones and Dewberry 2013). In the higher education setting, VDS use is partly driven by the need to develop student competence in online spaces (Schadewitz and Zamenopoulos 2009), but a further motivation is pressure on curriculum resources and the relatively high cost of studio space, which requires alternative studio solutions (Bradford 1995; Richburg 2013).

Beyond core art and design subjects, studio use is expanding in response to national, strategic or market-led calls for development of student competencies, such as creativity, tackling complex problems, and interdisciplinary working skills. The studio is particularly well suited to problem-based, constructivist and professional learning models (see Clinton and Rieber (2010) and Orr and Shreeve (2018, p. 114) for examples; the latter gives a particularly good overview of the distinctions) and, in design-related subjects, to supporting the development of general study skills (e.g. Thomson et al. 2005). For open-ended inquiry or problem exploration, the studio can provide a practical place where students can experience creative and ‘designerly’ modes of inquiry that benefit from ‘productive ambiguity’ (Orr and Shreeve 2018), as well as grounding a learning experience that many students may not have been introduced to in their previous educational experience (Sochacka et al. 2016).

However, our understanding of proximate studios is still incomplete, based principally on tacit pedagogical approaches (Houghton 2016). In the history of design education it is only relatively recently that the complexity of what comprises ‘studio’ as a social, pedagogical and professional space has been explored systematically (e.g. Mewburn 2011; Sidawi 2012; Marshalsey 2015; Orr and Shreeve 2018). More recently, research into VDS has made some progress in understanding cases and use of technologies but there are still too few studies comparing virtual and proximate studios (Broadfoot and Bennett 2003; Saghafi et al. 2012) and even fewer studies (if any) that consider the longitudinal effects on students using a VDS, a parallel observation made by Karabulut et al. (2018) with respect to engineering education. Partly this is a function of the relative novelty and changing nature of the technology but it is also part of a wider gap in deeper understanding and analysis of complex social systems of learning (Crick 2012).

Background and purpose

This paper presents the results from a large-scale, longitudinal study of design students working in a VDS as part of a distance learning design qualification in higher education at The Open University (OU) in the United Kingdom. In previous work the general link between student engagement in a VDS and assessment outcomes was established in a single group of early stage design students (Lotz et al. 2015), and then confirmed in multiple groups of early stage students (Jones et al. 2017). The study reported here includes cohorts across all HE stages of learning, allowing a longitudinal view of students’ use of, and development in, an online, virtual design studio.

What emerged was a picture of student behaviour more complex and nuanced than originally expected. Far more emphasis seemed to be placed (by students) on the personal, psychological and social learning affordances in the virtual studio. Students were noted to be intrinsically motivated to make use of the studio without assessment or other extrinsic motivators. Simple and complex social learning mechanisms were also evident, and the personal learning taking place was happening independently of any specifically planned learning activities, albeit supported by the overall curriculum. With such rich behaviours in evidence in a distance, online and virtual environment, it is important to understand how these emerge, what sustains or suppresses them, and how they contribute to student outcomes and success.

As the use of VDS increases, educators and learning designers need to understand this particular mode of education, its relationship to traditional studio pedagogy, and the theoretical and practical bases to inform VDS use.

The learning context

The OU is the largest provider of higher, distance education in the UK with over 160,000 students studying part time and at a distance (at the time of writing). Students study individual courses (known as modules) and these can count towards a named degree qualification. The core of the Design and Innovation qualification comprises three design modules, one at every stage of study, and each equivalent to half a traditional UK university year (60 CAT points). Students choose additional modules from a number of complementary subjects, including engineering, environment, arts, and business, allowing a student to qualify with either a BA or BSc in Design and Innovation (Hons). This degree is not discipline or industry specific, instead focusing on general design methods and cognition.

Central to OU design teaching is the use of design methods and thinking to allow students the opportunity to learn from the experience of, and reflection on, design projects. This blend of academic study, design practice and cognitive skill development requires very active student engagement leading to deep and authentic learning, which can be challenging for students used to only certain pedagogical forms (Rowe 2016; Lloyd 2011). The blend of theoretical, practical and project work is common to all design modules and is introduced, developed and formalised across each stage of study. The entry module (stage 1), U101: Design Thinking, introduces students to design as a general subject, to common methods and design processes, as well as distance and online learning methods used in all design modules. The stage 2 module, T217: Design Essentials, builds on this general foundation and expands the activity of students at each stage of the design process, focusing more on the craft and practice of being a designer. T317: Innovation: Designing for Change is the final (stage 3) module, giving students the opportunity to complete a larger scale project of their own as well as the chance to apply more advanced theoretical and practice-based design methods.

OU modules have student populations of hundreds (sometimes thousands) of students. The entry design module presents twice a year with between 300 and 800 students in each presentation. The university’s open access policy means that pre-requisite qualifications are not needed for enrolment on courses. OU students typically study part time and their demographic makeup is often very different to that of design courses in other institutions: on average they tend to be older and have a higher proportion of additional educational requirements when compared to other university populations. This requires modules to be designed for novice design students with diverse backgrounds and capabilities.

For each module students are allocated to a tutor group of around 20 peers supported by a tutor responsible for subject tuition and pastoral care. The tutor–student relationship is key to being able to scale this educational model whilst retaining an appropriate level of individual student attention and support. Tuition is undertaken through a range of communication modes (online conferencing, phone, text, email, forums, social media, etc.) but the principal mode of tuition is feedback on student work. Students submit work through an online system, which is assessed by their tutor and processed through this same system for quality control and administration. Tutors provide extended, detailed feedback on student work allowing them to focus on specific learning needs based on their knowledge of the student. Put together, this is known as the Supported Open Learning (SOL) model (Ison 2000).

This model is central to learning at the OU and enables a response to the challenge of providing a studio education in a distance learning environment. Ensuring the optimum balance between learning, teaching and tuition requires careful consideration and design. When this balance is achieved a suitable environment for learning is possible (Lloyd 2012). At the OU a key catalyst in creating such an environment is an online studio tool, OpenDesignStudio (ODS), where students upload and share their work with peers and tutors in a module. ODS was designed and developed to fit within the University’s general Virtual Learning Environment (VLE) (Lotz et al. 2019). Together, the VLE, ODS, and the SOL model make the virtual studio at the OU. This study focuses on ODS as an active catalyst of the studio, but it should be considered as part of the wider learning and design environment described.

The studio context

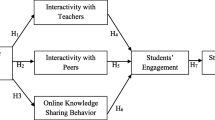

Early online studios relied on a translation of proximate studio practices using the technologies available (Wojtowicz 1995; Malins et al. 2003). Since then a range of studio types have emerged with varying characteristics, functions and features. With these come a range of pedagogical assumptions and variances, and the specifics of a studio situated within particular subject domains are rarely fully considered (Little and Cardenas 2001). But there are two underlying commonalities: firstly, the notion of a shared learning and practice space and, secondly, a focus on activity and tangible outputs (e.g. Maher and Simoff 1999; Maher et al. 2000; Wilson and Jennings 2000; Broadfoot and Bennett 2003). These two features underpin the design of ODS: the main function of ODS is the communication and sharing of digital artefacts in a primarily visual way. Students upload or embed digital artefacts, such as images, to a series of slots that are then visible to other students and tutors (Fig. 1).

As can be seen in Fig. 1, the studio is divided into 4 virtual spaces (Module, Group, Studio Work and Pinboard), each a spatial analogy to a proximate studio. Firstly, there are two ‘semi-public’ spaces, analogous to studio pinup or presentation spaces: My Module shows work for the entire module; My Group shows only the work of peers in the same tutor group. Secondly, there are two ‘semi-private’ spaces, analogous to a student’s personal work area: My Studio Work, in which students place specific design work; and My Pinboard, where students can place anything they wish.

Students upload their work to the My Studio Work area (Fig. 2), which provides a series of predefined slots (Studio Slots) specified in the module they are studying. Students are directed to complete the work and upload it as they progress through a module or at key points during assessment and this offers a certain degree of synchronicity, when many other students will be completing exactly the same activities around the same time. Completion of slots offers peers and tutors a good insight into student progress and is one of the main benefits of the studio.

In the My Pinboard area students may create and add as many Pinboard Slots as they wish and upload any type of material. Each design module encourages students to use Pinboard Slots in slightly different ways. Stage 1 students are assumed to need support to learn how to use this area effectively and are directed to use it at certain points in the learning design. By stage 3, however, students are assumed to have developed their own ways of working and are encouraged to use the pinboard however it suits them.

All slots function in the same way; clicking on the thumbnail opens the full slot view (Fig. 3). In Slot View students can interact with their own and other students’ slots by adding text comments and using quick interaction buttons (favourite, smile, inspired, etc.).

All of the engagement and interaction criteria referred to in this paper come from measuring interaction with the ODS interface and functions just described.

Purpose and research questions

The aim of the research project was to see how students progress in ODS across stages of study, and whether there are patterns of activity correlated to this progress. Previous research had focused on single cohorts and stage 1 study; the inquiry reported here expanded the research to include all other core design modules in the Design and Innovation qualification.

The research questions, amended through the approach taken, were:

1. Can we confirm the findings from the earlier stages of the project across all stages of study? Specifically, can we:

   1.1 confirm the generally high engagement levels and describe particular engagement patterns?

   1.2 confirm any correlation(s) between engagement actions and student success?

   1.3 confirm the strong correlation between viewing and commenting engagement measures?

2. Can we identify theory that fits these data, in particular well-described theories in general education research? Are there gaps in existing theory that emerge from the study?

These research questions guided the approach and methods throughout, constructing the overall methodology as part of the research process.

Approach and methods

Simple, single causative factors are difficult to establish in education research generally (Cohen et al. 2011) and are harder to identify in a distance learning setting, where it is not possible to observe students directly (Phipps and Merisotis 1999; Bernard et al. 2004). Hence, purely deductive approaches in distance education tend to be relatively rare and more usually include a blend of both deductive and inductive approaches (Cohen et al. 2011, p. 3). Beyond these, an abductive approach is required to construct theory, or generate ‘suitable hypotheses’, when current theories are insufficient, as is the case for much education technology research (Hew et al. 2019). This aligns with Peirce’s original form of abduction as a pragmatic activity of exploration (Peirce 1955), perhaps best encapsulated by Frankfurt’s summary of it as a process to construct “the admissibility of hypotheses to rank as hypotheses.” (Frankfurt 1958). It also reflects approaches taken elsewhere in distance education research, intended to provide a ‘useful framework’ (Kear 2011) within which sense can be made of emerging observations, hypotheses and explanations in order to provide ‘useful answers’ (Denscombe 2008).

Hence, understanding issues around learning at a distance very often requires mixed methods applied in a process of inquiry. This project, like many others, required a critical interpretation of both quantitative and qualitative analyses iteratively in order to provide explanations of the data. General methods of descriptive statistical analyses, for example, are only of use when suitably and critically interpreted with an understanding of particular issues in online distance learning spaces (Bernard et al. 2004). This iteration of methodology reflects the argument made by Borrego et al. (2009): that, to make sense of the human process of learning, we need a ‘both-and’ approach to research methods.

The mixed methods approach taken, and reported in this paper, maintained a research ‘priority’ (Teddlie and Tashakkori 2006) of the analysis and explanation of the quantitative data. Qualitative methods supported reframing of analysis of the quantitative results and the subsequent testing of these to fit general and distance education theories. The focus of this paper is on these quantitative results and how they fit (or don’t) with such theories. Towards the end of the paper, existing theories are connected to create an overall developmental framework that better fits the results obtained, and ways to validate this framework in future are proposed.

Approach to engagement and success

Early in the process a range of definitions of both success and engagement were established to understand how both could be measured and analysed in a rigorous way using the information available. A normative definition of engagement is difficult since it is necessarily a socio-psychological concept that can depend on a huge range of factors and theories (see Christenson et al. (2012) for a comprehensive overview and anthology). An online system will only measure and record actions and information it has been designed to capture, and in any such specification there will be choices, prejudices and biases in terms of what is recorded or considered worth measuring. This often means that the information available for analysis is predominantly behavioural in nature (Fredricks and McColskey 2012). No specific theory of engagement was applied a priori, other than the hypothesis of a general, positive relationship between active use of ODS (as time on task; Chickering and Gamson 1987) and student success. Hence, the initial approaches considered data that could be extracted from existing records and that related to activity within the studio as a proxy for time-on-task type engagements.

Measures of student engagement were defined using the following actions within ODS:

-

Studio slots: the number of slots completed in the My Studio Work area by a student (or the percentage of required slots completed). Studio slots are the pieces of work students complete as part of a module (see Fig. 2).

-

Slot views: the number of times a student viewed a slot completed by another student (these data were only available for version 2 of ODS).

-

Comments (own): the number of comments a student made on their own slots.

-

Comments (other): the number of comments a student made on other students’ slots.

-

Feedback requests: the number of times a student clicked the ‘Request feedback’ button on a slot.

-

Pinboard Slots: the number of slots completed in the ‘My Pinboard’ area of ODS.

The measure of success per student was defined as follows:

-

Module result: the overall grade (by ranking) awarded to a student for completing a module, according to standard university examination procedures.

-

Qualification result: the classification of the qualification awarded to a student based on module results.

Finally, all engagement data captured had an associated time dimension, allowing time-based analyses of student activity. This proved valuable in analysing individual student behaviour that general descriptive methods could not reveal.

Quantitative data and analysis

Data were obtained from 8 module presentations across all three stages of study. The stage 2 and 3 modules have only one presentation per year, whereas the stage 1 module presents twice. This provided data covering the activity of nearly 3,000 students over a period of 3 years (Table 1). During this time period there was an update to ODS to refresh the interface and, although the functionality and learning design remained identical, the database redesign means that one engagement measure (Slot Views) does not have a full data set.

The column ‘Studio slots’ in Table 1 indicates the number of pieces of work students are directed to complete as part of the learning design (see Fig. 2). At stages 1 and 3 this number is fixed, but at stage 2 multiple images can be gathered together in a single ‘collection’ slot (for the analysis, the number of slots expected by the module design was used).

A further dataset of students was prepared and included students who had completed all three design modules as part of the Design and Innovation degree. This provided a dataset of 37 students, the ‘Qualification Group’, which was analysed using descriptive statistics. Students in this group were also interviewed (see below).

Engagement data were extracted from the ODS databases for each module, and module and qualification results from the relevant institutional repositories. All data were cleaned, sorted and formatted prior to analysis, and additional tests were carried out to check the consistency of the data and of the results from the analysis.

The following principal quantitative methods were used as part of this process:

-

Basic descriptive analyses in and across all presentations and stages

-

Pearson product-moment correlation and Spearman rank correlation

-

Specific descriptive statistical measures and ratio analyses

-

Time-based analysis using descriptive statistics and visual analysis of individual / cohort comparisons

Qualitative data and analysis

As mentioned, mixed methods were used to respond to the general research question. Whilst the priority of this study was the quantitative analysis, the qualitative data were used to triangulate, and to confirm (or contradict), the quantitative findings. The qualitative results were also important to the later abductive approach to theory building, outlined in the Discussion section.

Students in the Qualification Group were invited to take part in a follow-up interview; 11 of the 37 responded (a 29.7% response rate) and were subsequently interviewed by 4 interviewers using a common interview guide. Students were further encouraged to respond discursively and in their own words, and follow-up questions were asked to draw out observations and comments. Each interview was audio recorded and hand-written notes were used to fill in a response template, which was then used for content analysis. The audio recordings were used to clarify any notes taken, when needed.

Content analysis was conducted using open coding (Corbin and Strauss 2015) to collect similar comments and categorise these into general themes on the use, properties and affordances of ODS as perceived by students. The coding and themes were checked by a second author against the interview notes. This resulted in comments coded into 6 main themes: Interface; Interaction; Comments; Value; Feedback requests; Skill development.

A third member of the project team triangulated the themes against other project results, particularly in terms of any themes that contradicted the findings. No such contradictions were identified and all comments supported the quantitative findings presented in this paper. Examples of student comments are given in the Discussion section.

Results

The results presented are organised around the methods and then thematically to construct a response to the initial research questions.

Early progress, later disengagement

The raw totals for all engagement measures captured are set out in Table 2.

These data are more usefully read comparing module presentations and using the average engagement per student, as presented in Table 3 and visualised in Fig. 4.

To get a better sense of the differences between study stages, the engagement values were averaged per stage. Similarly, because the number of Studio Slots students are required to complete differs between stages, a more useful measure when comparing stages is the percentage completion. These overall averages of engagement by study stage are shown in Table 4 and Fig. 5.
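To make the percentage-completion normalisation concrete, the following is a minimal sketch assuming a hypothetical per-student record holding the study stage, the Studio Slots completed, and the Studio Slots required by the module design. The `StudentRecord` type, its field names and the toy figures are illustrative assumptions, not the study’s data or tooling.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class StudentRecord:
    # Hypothetical per-student record; field names are illustrative only.
    stage: int
    studio_slots_completed: int
    studio_slots_required: int  # fixed by the module's learning design


def mean_completion_by_stage(records):
    """Average percentage of required Studio Slots completed, per study stage."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for r in records:
        # Normalise by the stage-specific requirement so stages are comparable.
        pct = 100.0 * r.studio_slots_completed / r.studio_slots_required
        totals[r.stage] += pct
        counts[r.stage] += 1
    return {stage: totals[stage] / counts[stage] for stage in sorted(totals)}


# Toy records (not study data): the stages require different numbers of slots.
records = [
    StudentRecord(stage=1, studio_slots_completed=16, studio_slots_required=20),
    StudentRecord(stage=1, studio_slots_completed=20, studio_slots_required=20),
    StudentRecord(stage=3, studio_slots_completed=1, studio_slots_required=5),
]
print(mean_completion_by_stage(records))  # {1: 90.0, 3: 20.0}
```

Averaging per-student percentages, rather than dividing pooled totals, gives each student equal weight; which of the two the study used is not stated, so this choice is itself an assumption.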

A further useful visualisation of changes in engagement measures across stages is provided in Fig. 6.

All of these results show a clear drop in almost all engagement measures as the stage of study increases (Figs. 5 and 6). The drops in engagement measures appear reasonably linear, with some slight indication that a larger drop occurs between stage 1 and stage 2 study.

Engagement levels at stage 1 are generally high across all measures (Fig. 4), from module-required work in Studio Slots to elective activity, as indicated by Pinboard Slots and Slot Views (both higher at stage 1 by a significant factor). Similarly, stage 1 students are 2–3 times more likely to comment on their own slots compared to stage 3 students and are 3–4 times more likely to comment on other slots (Fig. 5).

As a further check, the engagement of the Qualification Group was analysed and a decrease in engagement measures was also noted. Examples of Qualification Group engagement measures are shown in Fig. 7, and data for all measures show a similar reduction in engagement as the stage of study progresses.

The Qualification Group students identified here are generally representative of the distribution of engagement measures for the overall student population, making them a useful triangulation when checking results. All engagement levels for the Qualification Group reduce as study stage progresses (Fig. 7); for example, the number of Studio slots completed, averaged across the Qualification Group, drops from 80% at stage 1 to 53% at stage 2, ending at 11% at stage 3.

The engagement measure Feedback Requests was consistently low for all stages, and across all groups, confirming previous findings (as well as comments from students and tutors) that this feature was simply not used or considered valuable.

Linking engagement and success

Pearson product-moment correlation (PPMC) coefficients were calculated to test the relationship of all engagement measures to student results (Table 4).

Earlier studies suggested that a nonlinear relationship might exist (Jones et al. 2017; Lotz et al. 2015) and the data contain individual outlying data points (a small number of students who have very high levels of engagement). Both of these factors can have a significant effect on the results from a PPMC test; hence, Spearman rank correlations were calculated by ranking engagement measures and correlating them with the (existing) rank of module results. These results are given in Table 5.

Comparing the two correlation analyses allows us to rule out a monotonic non-linear relationship that the PPMC test alone would miss, and confirms that outlying data points have had no statistically significant effect on the results obtained.
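The rank-based procedure described above can be sketched as follows: tied values receive the mean of their rank positions, and Spearman’s ρ is then the Pearson correlation of the two rank sequences. This is a minimal, dependency-free sketch; in practice a library routine such as `scipy.stats.spearmanr` would normally be used. The `views` and `results` lists are invented toy values (one student with very high engagement), not study data.

```python
import math


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def average_ranks(values):
    """Rank values 1..n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rho: the Pearson correlation computed on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Toy data: one outlying, highly engaged student inflates the views scale.
views = [2, 5, 8, 12, 300]
results = [40, 55, 60, 70, 72]
print(round(spearman(views, results), 3))  # 1.0 (perfectly monotonic)
print(round(pearson(views, results), 3))
```

On these toy values the ordering is perfectly monotonic, so ρ is 1.0, while the PPMC coefficient is noticeably lower (roughly 0.57) because the single outlier dominates the variance. This is the kind of divergence the comparison of Tables 4 and 5 guards against.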

Generally, these results show no consistent correlations between individual or overall engagement measures and module results across all stages of study. There are, however, relatively consistent, statistically significant, weak-moderate, and moderate-strong correlations for some engagement measures at stage 1 (Fig. 7). In particular: Comments (own) (ranging from ρ = 0.316 to ρ = 0.467), Comments (other) (ranging from ρ = 0.404 to ρ = 0.591), and Pinboard Slots (ranging from ρ = 0.316 to ρ = 0.467). The strongest correlation was between Views (other) and student success in stage 1 study (a consistently moderate, statistically significant correlation ranging from ρ = 0.468 to ρ = 0.610). This result was first reported using a smaller dataset in Jones et al. (2017) and can be confirmed as holding true in this larger study.

Another previous finding to be tested was a positive correlation between students viewing and then commenting on slots: that students who viewed work were more likely to go on to comment on work. The results of the analysis of these data are provided in Table 6.

For stage 1, there would appear to be a reasonably consistent conversion ratio of about 15%; that is, for every 10 slots viewed, 1.5 comments are made. At stage 3, interestingly, this ratio is far higher, at just over 1:1. Given the relatively low numbers of active contributors on that module, however, it is not possible to claim a general pattern with confidence. What is consistent is that students are more likely to make comments on other students’ slots than they are on their own slots. The correlation of Views to Comments is one of the strongest in the entire study and a significant identifier of student success.
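As a worked illustration of the conversion ratio, the sketch below assumes it is computed as Comments (other) divided by Slot Views for a cohort; the text does not state the exact numerator, so this definition, the function name and the totals are assumptions. The totals are invented to reproduce the roughly 15% stage 1 figure and are not values from Table 6.

```python
def conversion_ratio(comments_other, slot_views):
    """Comments on other students' slots per slot viewed (assumed definition).

    Returns 0.0 for a cohort with no recorded views rather than dividing by zero.
    """
    if slot_views == 0:
        return 0.0
    return comments_other / slot_views


# Hypothetical cohort totals, chosen only to mirror the ~15% stage 1 figure.
ratio = conversion_ratio(comments_other=1500, slot_views=10000)
print(f"{ratio:.0%} -> {10 * ratio:.1f} comments per 10 slots viewed")
# prints: 15% -> 1.5 comments per 10 slots viewed
```

A ratio near 1.0 (as reported for stage 3) would mean roughly one comment per slot viewed, which with very few active contributors can arise from a handful of students.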

Student behaviour and patterns of engagement

The final method applied descriptive statistics over time to consider what types and patterns of engagement there might be. Weekly totals and weekly averages for all engagement measures were plotted for each module over a period of 35 weeks (all OU modules follow a standard 30-week pattern). An example of these plots is shown in Fig. 8.
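The weekly series can be sketched from a timestamped event log. This minimal version assumes each engagement event has already been reduced to a `(student_id, week)` pair, and it defines the weekly average as events per student active in that week; the study does not specify its denominator, so both the input format and that definition are assumptions.

```python
from collections import Counter, defaultdict


def weekly_totals_and_averages(events, n_weeks=35):
    """Aggregate (student_id, week) engagement events into weekly series.

    Returns two lists indexed by week 1..n_weeks: the total number of events,
    and the mean number of events per student active in that week
    (0.0 for weeks with no activity). Events outside the window are ignored.
    """
    totals = Counter()
    active = defaultdict(set)
    for student_id, week in events:
        if 1 <= week <= n_weeks:
            totals[week] += 1
            active[week].add(student_id)
    weeks = range(1, n_weeks + 1)
    weekly_total = [totals[w] for w in weeks]
    weekly_avg = [totals[w] / len(active[w]) if active[w] else 0.0 for w in weeks]
    return weekly_total, weekly_avg


# Toy log: student "a" acts twice in week 1, "b" once; "a" once in week 2.
log = [("a", 1), ("a", 1), ("b", 1), ("a", 2)]
print(weekly_totals_and_averages(log, n_weeks=3))
# ([3, 1, 0], [1.5, 1.0, 0.0])
```

Under this definition, falling weekly totals alongside a steady weekly average indicate that fewer students are active while those who remain engage at a similar rate.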

Analysing the time-based data indicated expected patterns and changes in engagement behaviour, and Fig. 8 is quite typical of the clear peaks and troughs of engagement at certain points in a module. Almost all engagement measures increase around assessment points and reduce around certain external events and calendar points in the year (e.g. New Year, school holidays, and even weather). These were expected results, well documented for all modes of learning and teaching (Snyder 1971, cited in Gibbs and Simpson 2004). What this study confirms is that these factors influencing engagement apply to online, distance studio behaviour, and that, depending on the blend of VLE tools used, studio activity may be a more effective means of measuring ongoing engagement in design modules.

The timing of online learning activity has been linked to student success generally (Nguyen et al. 2018) and early results from this project indicate that, whilst this may be true across an entire cohort, individual student engagement is far more complex than simple predictive models might suggest. Some of the mechanisms behind these behaviours are presented in the discussion and future work will report on these findings in greater detail.

All modules demonstrated a general decrease in engagement over time, another well-documented effect in distance education, influenced by the factors of part-time education (e.g. NAO 2007), distance learning (e.g. Simpson 2013), and the use of online learning environments (e.g. Clay et al. 2008). The exception was Studio Slots, which tended to increase over time. This is believed to be due to students catching up as they progress through each module, culminating in a larger catch-up for the final assessment.

The rate of change in engagement varied by stage of study. At stage 1, average and total weekly engagement levels begin generally high and all measures (except Studio Slots) have a relatively consistent and small drop-off as the module progresses. This demonstrates a high and consistent level of engagement from a relatively large proportion of the student population. At both stages 2 and 3, weekly totals start very high and drop a third of the way into the module, whilst average weekly engagement remains constant. This demonstrates that both engagement and the number of students engaging drop as the module progresses.

At stage 3 the general drop in average weekly engagement is less acute and for some measures it actually increases. Given the generally low initial engagement levels, this could indicate the presence of a strong core network of students who have identified a personal value in engagement in the studio.

Discussion

Responding to research question 1.1, the results confirm previous findings that engagement is generally high at stage 1 and that students are clearly motivated to do more than simply respond to module and assessment requirements. Initial engagement at stage 2 is lower but still reasonably high, though it drops off at a slightly faster rate than at stage 1. Stage 3 engagement is the lowest, drops off quickly and never recovers, although a small, persistent core network of students maintains engagement.

Engagement measures at stage 1 correlate moderately to strongly with student outcomes, but this correlation varies at stages 2 and 3 (question 1.2). Some persistent patterns of clustered behaviour appear among smaller numbers of students at all stages, such as maintaining a personal network. The behaviour examined in research question 1.3 (the ‘conversion’ of viewing to commenting) is a similarly persistent pattern that holds across all stages, albeit at different intensities.

These activity behaviours and the ‘shape’ of student engagement suggest a response to Question 2, which asks what theories might explain them. The most relevant theories are now considered in turn. All quotes, which illustrate the discussion and analysis, are from students in the Qualification Group.

Listening-in

A surprising finding from the project is the correlation between student success and viewing other students’ work. This was the single strongest correlation in the entire study. It suggests that students are strongly motivated to look at the work of their peers, and that this activity is associated with successful academic outcomes.

Viewing other students’ work is often considered a passive behaviour. Indeed, the first version of ODS did not even measure it, favouring more active measures. We argue that this activity corresponds to three related theories of learning that focus on less demonstrably active processes. Firstly, legitimate peripheral learning within a community of practice, identified by Lave and Wenger (1991). Secondly, building on Lave and Wenger, the activity of listening-in, identified in a design studio setting by Cennamo and Brandt (2012). Thirdly, the online equivalent of the former two, known as ‘lurking’ in distance learning. Here students view or read, but do not contribute to, online tools such as forums, online tutorials, or discussion groups (Beaudoin 2002; Dennen 2008; Schneider et al. 2013).

Lurking, listening-in and legitimate peripheral learning all suggest that informal activities benefit students, and that explicitly active forms of interaction are not the only means by which learning takes place. The present study supports this contention with respect to virtual studios: there is a significant, positive correlation between viewing slots and student success. Students also recognise the value of this viewing behaviour in developing their confidence and design ability:

“…sometimes you think ‘Am I doing the right thing? Have I taken this idea the right way?’ So, it is quite nice to see what everyone else is doing; nice to know you’re thinking along the same lines but at the same time people are thinking slightly differently.” (Qualification Group student).

However, this does not fully explain the levels of engagement, and in particular why they are so high at the start of each module. A novice student and designer is unlikely to engage fully in listening-in until they know how to benefit from it. We argue that the results reported here point to a simpler mechanism leading to listening-in: social comparison.

Automatic social comparison

Social learning mechanisms are important in self-assessing personal capability (Festinger 1954), and all students engage in automatic acts of social comparison (Gilbert et al. 1995). Student feedback in this study confirmed that students use ODS, explicitly and implicitly, to compare their work with that of other students. They do so to gauge their progress or the quality of their work, as also identified in other uses of ODS (Thomas et al. 2016).

We propose that social comparison explains the high initial engagement reported in all modules and the drops of differing magnitude that follow. All three modules have early activities in ODS, and students use these to compare their work immediately with that of other students. At stage 1, the correlation of viewing slots with student success evidences the sustained use of social comparison. Student feedback identifying comparison as a specific motivation for engagement triangulates this finding (Lotz et al. 2015):

“Because you were all working on the same task and looking at others interpretations it made you realize you had to be open and just look and not judge. You learn to see the good points in others approaches and how to build on it in your own work.” (Qualification Group student).

At stage 3, the very quick drop in engagement suggests some initial motivation for engagement that is not sustained. Student feedback specifically noted that the absence of other students’ work led to disengagement:

“I think that the interaction in U101 (Year 1) was brilliant but decreasing levels of interaction at higher levels affected my own interaction and enjoyment. There are areas that could be worked in higher levels where interaction could be increased.” (Qualification Group student).

This suggests that a self-reinforcing downward spiral occurs unless sufficient initial use (and motivation) is achieved. Lower numbers of students engaging reduce the opportunities to find the ‘right’ level of comparison (Festinger 1954), which in turn reduces engagement further, and so on. However, the (very) small group of students who continued to use ODS established a stable core network. They valued it at least partly for the opportunities for comparison it offered. Reasons for the differences between these stages are discussed below.

Online presence

In order to engage in such comparisons, social presence (Short et al. 1976; Gunawardena and Zittle 1997) is a vital proxy for physical proximity, particularly in a distance learning context (Munro 1991). Social presence is (initially) taken here to mean the extent to which a person is ‘perceived to be real’ and we propose that ODS supports presence directly through student work and interactions, providing the ‘social glue’ necessary to support communication and community formation (Kear 2011). It was clear that students also recognised the personal value in projecting their work socially:

“For me, as not very confident, it was important to share and extend my ideas …….. I was often surprised what the variety was in how people had interpreted the task” (Qualification Group student).

This may explain why Feedback Requests are not used (as reported in the results section). Because this feature places both a student’s work and their online presence in the same artefact, it forces them to associate ‘themselves’ with an immediate position of needing help. Announcing ‘I need help’ to hundreds of students is not the same as asking for constructive feedback on a piece of work. This detail, together with the higher-than-expected student engagement and the social nature of comments at stage 1, supports a proposal by Armellini and De Stefani (2016). They argue that social presence is ‘central to higher order thinking’ and a necessary condition of both teaching and cognitive presence, a point not lost on students themselves:

“It gave an element of community, a place where all us newbies could rock up and share our wares and not be embarrassed (well maybe the first time). Distance learning is hard so the more opportunity you have to interact with people the easier your journey will be” (Qualification Group student).

This quote shows clear signs of emerging identities and of experiences shared within a common group of people. Such shared identity is a key attribute in constructing (or contributing to) a community of practice.

Expanding findings to higher stages of study

The second research question considered extending the findings at stage 1 to stages 2 and 3, and the results show that this is only partially possible. There were signs of similarly high engagement levels at the start of stage 2, but these reduced quickly in the first part of the module. Stage 3 showed the lowest initial engagement levels and a drop in relative overall engagement similar to that of the stage 2 module. No consistent, significant correlation between engagement and student success was identified at stages 2 or 3.

Finally, at all stages of study there was evidence of high, persistent engagement amongst a core group of students, creating a stable core network, evidenced by usage data, student interviews, and general student feedback. For many of these students, the value of maintaining this network was explicit:

“I tended to have my favourites … people that I’d be constantly looking at their things […] they were my role models.” (Qualification Group student).

The fact that the same software is used across all modules, that modules do not substantially change from year to year, and the high student numbers in this study allow us to confirm another important finding: individual tools and software are necessary, but insufficient, conditions in creating a successful online design studio. In other words, the tools and software are not enough to fully explain the complex range of positive and negative behaviours and experiences observed – the whole learning environment contributes to the success (or failure) of a virtual studio.

Stage 2 is composed mostly of students progressing from stage 1, and we see similar levels of initial engagement at both stages of study. The stage 2 module is, however, slightly different in the focus of ODS use. Students concentrate more on their own design work as they develop advanced design skills through a series of focused periods of design activity. Social comparison is still encouraged, and does take place in the first part of the module, but it drops off after a holiday period. This indicates that the group most likely lacks the size and momentum needed to sustain a learning community (Kear 2011; Donelan et al. 2010). One reason is the lower overall number of students at stage 2, on average about half the stage 1 population. Another is the module’s focus on developing individual design competency. Once again, students are not only aware of this through their online engagement but also have a sense of the factors that affect it:

“That sharing aspect of the community, that vision, is a golden goal. However it can disintegrate if people do not participate fully.” (Qualification Group student).

At stage 3, a large proportion of students are completely new to design and to working in a studio of any kind, not just a VDS, and this depresses initial engagement. The stage 3 module has a short induction to using ODS, but this does not seem to be enough to initiate the personal and social behaviours required for sustained, positive engagement. This particular student group has little or no experience of social comparison or presence. Their experience of a community of practice is also very different from that required in design. As a result, the habits formed at prior stages through listening-in, social comparison and the development of a community of practice have not been formed, far less developed. This change in group dynamic seems to affect the majority of the remaining student cohort, reducing engagement even in sub-groups that had already been inducted.

“I really loved the concept and started using it. But I stopped […] because there was no feedback; no interaction […] from other students” (Qualification Group student, Stage 3 only).

This ‘break’ in the continuity of staged development indicates that experience of studio working is as important to develop as other design attributes. Such implicit capabilities are very often taken for granted in proximate studios because they develop naturally over long periods of continuous time. In a distance setting this is often not possible, suggesting that an alternative approach to the longitudinal development of these abilities is required.

A hybrid social learning model

No single theory outlined above explains all the results, or every individual pattern of student engagement, across all study stages. This is perhaps unsurprising: as many design educators know intuitively, learning to become a designer is neither simple nor linear. It is a reminder that single, simple theories have their limitations, and the work here confirms this in a distance setting. The following quote by a student in the Qualification Group illustrates the depth and complexity of interaction in ODS:

“As time went on I became less scared of uploading something and what the response would be, you can think harder about something when you get critical feedback.” (Qualification Group student).

For this student, there is a clear sense of development in how they related to working in ODS. Supported by the results of the study, this demonstrates a strongly social model of learning. The finding aligns with others in contemporary design studio research (e.g. Schnabel and Ham 2012; Sidawi 2012). But this social model is neither single nor simple. It requires consideration of a series of learning theories and models, and evidence of their interrelations is emerging from the work.

Engagement is initiated by social comparison and builds on social and cognitive presence. This enables networks to form communities of practice, within which students are personally motivated to engage for a learning purpose, as previously reported (Lotz et al. 2015). The process is not unlike the development of presence and motivation required in instant messaging environments (Huang 2017). A significant part of this activity takes the form of legitimate peripheral learning, which can reinforce students’ motivation to continue using (and to value) VDSs. We argue that this social activity and presence resemble Vygotsky’s (1978) Zone of Proximal Development (ZPD). They may align more closely still with the ideas proposed by Bronack et al. (2006), a version of ZPD applied to distance education in a three-dimensional virtual world. In the online setting, online social activity and presence replace physical proximity. Continued activity then leads to encultured behaviours (actions, discussions, habits, etc.) associated with a practice identity, similar to Lave and Wenger’s community of practice. In this hybrid theory, ODS acts as a catalyst for the activities and behaviours that form a community around a common identity and purpose. That community gives value to individual students in their learning journey:

“Really liked sharing, we can all learn from the block (course) materials but design is about being inspired by other people’s work.” (Qualification Group student).

The studio, then, is a necessary, but insufficient, condition for the emergence of learning and a community of practice. The results presented here make clear that, without careful design and consideration of how it should operate, a virtual studio can inhibit student development, just as any other poorly run studio would. Indeed, that is perhaps the most important lesson we would wish to convey to other educators: the design and emergence of a virtual design studio is at least as complex as that of its physical counterpart. Hence, the same time, attention and resources are required. A VDS may be a significant catalyst for individual student interactions, but it is the community of practice that emerges and develops that creates the studio ‘place’ itself.

Limitations and further work

As noted in the introduction, this is a longitudinal study of VDS use in design education without any major precedent. Much design education research is based on small-scale studies (Lyon 2011). The problem is compounded by rapid technological development, which makes platforms obsolete far more quickly than they can be studied. Hence it can be difficult to assess such results by comparison or contrast with other educational settings. This limitation highlights the urgent need for further research in this area, and we set out a few further limitations of the study with this in mind.

Firstly, further studies are required to validate certain findings, in particular any patterns at later study stages. The current study has a high number of early-stage results but would benefit from further results at all subsequent stages. The particular uses students make of a virtual studio to develop as design practitioners have yet to be explored in as much detail as they have been in proximate studios. Some of the key characteristics shared by proximate and virtual studio use could serve as useful reference points for such work. For example, how do individual behaviours adapt and change with study level as a student’s design personality develops, similar to the development of a ‘Gestalterpersönlichkeit’ presented by Lanig (2019)?

Secondly, the data and results presented here provide a basis for direct comparison and contrast with other similar studies. Such comparisons could expand the contexts within which the results remain valid, or refine (or even adjust) the results themselves. In addition, the theoretical framework outlined above can be used to create testable models, with the engagement measures as guides (and possibly metrics) for empirical testing. For example, by controlling for other engagement factors, listening-in could be measured in a range of contexts using any suitable proxy for the engagement measure presented here.
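The snippet below sketches what such a test might look like. It computes a rank (Spearman) correlation between two per-student values: a count of slot views, used as a hypothetical proxy for listening-in, and a final module score. The paper does not state that Spearman’s coefficient is the one used in this study. It is chosen here only because it is less sensitive than Pearson’s r to skewed activity counts and outliers. The helper names and all values are invented for illustration. The sketch does not control for other engagement factors, which a real test would need to do.

```python
def average_ranks(values):
    """Rank values 1..n in ascending order; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        # Extend j over the run of tied values that starts at position i.
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions are 0-based, ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; raises ZeroDivisionError if either input is constant."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    return cov / (sd_x * sd_y)


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical per-student data (invented, not from the study).
slot_views = [0, 3, 12, 25, 40]       # proxy for listening-in
module_scores = [42, 55, 61, 70, 68]  # final module score

rho = spearman(slot_views, module_scores)
print(f"Spearman rho = {rho:.2f}")  # prints "Spearman rho = 0.90"
```

With real data, `scipy.stats.spearmanr` computes the same coefficient together with a p-value. The hand-rolled version above only makes the ranking step explicit.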

Finally, further work is needed to define what forms of social learning are taking place and how these relate to proximate studios. We informally identified many examples of social comparison, but found little literature linking social learning and design education beyond a few sparse individual studies. The literature on general social learning was applicable, but not completely or perfectly. Hence, further work to develop ideas of social learning within a design education setting would be of benefit. The development of listening-in as the design-relevant form of legitimate peripheral learning (Cennamo and Brandt 2012) exemplifies such work.

Conclusion

This study shows that a VDS can support complex social learning and interaction, leading to successful student experiences and outcomes. With suitable learning design, students can be intrinsically motivated to use social comparison when viewing other students’ work. In doing so, students use and develop social presence, even when engaging in less active behaviours such as ‘listening-in’. As these become valuable, habitual actions, students are more likely to move on to more active forms of engagement. These in turn can lead to the emergence of communities of practice.

Other scholars and educators may use this novel theoretical frame to implement and test VDS-based learning designs that improve social and peer learning in their courses. It may also inform and update models of social learning applied in proximate studio contexts, where the intersection with the general education literature remains largely undeveloped.

To support this social mode of learning successfully in a VDS, a number of important conditions must be considered. Firstly, the ‘studio’ has to be considered as comprising the learning design, the VDS software or tools, and the subsequent support of emergent activity and behaviours. Together, these bring the studio to ‘life’. Secondly, student induction into the use of a VDS is vital and should be seen as part of an ongoing development of studio practice as a mode of learning. At a distance, this has to be an explicit part of the learning design, not an implicit assumption. Thirdly, the learning design must be considered at a range of scales to develop ‘simple’ activities into valuable design behaviours. Simple social comparison can lead to communities of practice when considered at appropriate scales.

Finally, to end on a reflective note, the assumptions we make as teachers and learning designers are often embedded in our practices. Opportunities to make implicit assumptions ‘visible’ are exceptionally valuable, and we suggest that VDS research can provide them. What we as learning designers measure is largely driven by what we think is valuable. But what we measure is not necessarily indicative of learning taking place, and this study shows that it certainly does not capture all the learning taking place. Given that, what other learning might we not be measuring?

References

Armellini, A., & De Stefani, M. (2016). Social presence in the 21st century: An adjustment to the community of inquiry framework. British Journal of Educational Technology, 47(6), 1202–1216. https://doi.org/10.1111/bjet.12302.

Arvola, M., & Artman, H. (2008). Studio life: The construction of digital design competence. Digital Kompetanse, 3, 78–96.

Beaudoin, M. F. (2002). Learning or lurking? Tracking the “invisible” online student. Internet and Higher Education, 5(2), 147–155. https://doi.org/10.1016/S1096-7516(02)00086-6.

Bernard, R., Abrami, P., Lou, Y., & Borokhovski, E. (2004). A methodological morass? How we can improve quantitative research in distance education. Distance Education, 25(2), 175–198. https://doi.org/10.1080/0158791042000262094.

Borrego, M., Douglas, E. P., & Amelink, C. T. (2009). Quantitative, qualitative, and mixed research methods in engineering education. Journal of Engineering Education, 98(1), 53–66.

Bradford, J. (1995). Critical Reflections 1. In J. Wojtowicz (Ed.), Virtual Design Studio (1st ed.). Hong Kong: Hong Kong University Press.

Broadfoot, O., & Bennett, R. (2003). Design studios: Online? Comparing traditional face-to-face design studio education with modern internet-based design studios. In Apple University consortium academic and developers conference proceedings 2003 (pp. 9–21).

Bronack, S., Riedl, R., & Tashner, J. (2006). Learning in the zone: A social constructivist framework for distance education in a 3-dimensional virtual world. Interactive Learning Environments, 14(3), 219–232. https://doi.org/10.1080/10494820600909157.

Cennamo, K., & Brandt, C. (2012). The “right kind of telling”: Knowledge building in the academic design studio. Educational Technology Research and Development, 60(5), 839–858. https://doi.org/10.1007/s11423-012-9254-5.

Chickering, A. W., & Gamson, Z. F. (1987). Seven principles for good practice in undergraduate education. AAHE Bulletin, 39(7), 3–7. https://doi.org/10.1016/0307-4412(89)90094-0.

Christenson, S. L., Reschly, A. L., & Wylie, C. (Eds.). (2012). Handbook of research on student engagement. Boston, MA: Springer US. https://doi.org/10.1007/978-1-4614-2018-7. Retrieved December 28, 2017.

Clay, M. N., Rowland, S., & Packard, A. (2008). Improving undergraduate online retention through gated advisement and redundant communication. Journal of College Student Retention: Research, Theory & Practice, 10(1), 93–102. https://doi.org/10.2190/CS.10.1.g.

Clinton, G., & Rieber, L. P. (2010). The studio experience at the University of Georgia: An example of constructionist learning for adults. Educational Technology Research and Development, 58(6), 755–780. https://doi.org/10.1007/s11423-010-9165-2.

Cohen, L., Manion, L., & Morrison, K. (2011). Research methods in education (7th ed.). Oxon: Routledge.

Corbin, J., & Strauss, A. (2015). Basics of qualitative research: Techniques and procedures for developing grounded theory (4th ed.). Sage Publications Ltd. Available at https://uk.sagepub.com/en-gb/eur/basics-of-qualitative-research/book235578.

Crick, R. D. (2012). Deep engagement as a complex system: Identity, learning power and authentic enquiry. In S. L. Christenson, A. L. Reschly, & C. Wylie (Eds.), Handbook of research on student engagement (pp. 675–694). Boston, MA: Springer. https://doi.org/10.1007/978-1-4614-2018-7_32.

Crowther, P. (2013). Understanding the signature pedagogy of the design studio and the opportunities for its technological enhancement. Journal of Learning Design, 6(3), 18–28.

Dennen, V. P. (2008). Pedagogical lurking: Student engagement in non-posting discussion behavior. Computers in Human Behavior, 24(4), 1624–1633. https://doi.org/10.1016/j.chb.2007.06.003.

Denscombe, M. (2008). Communities of practice: A research paradigm for the mixed methods approach. Journal of Mixed Methods Research, 2(3), 270–283. https://doi.org/10.1177/1558689808316807.

Donelan, H., Kear, K., & Ramage, M. (Eds.). (2010). Online communication and collaboration: A reader (1st ed.). New York: Routledge.

Festinger, L. (1954). A theory of social comparison processes. Human Relations, 7(2), 117–140. https://doi.org/10.1177/001872675400700202.

Frankfurt, H. G. (1958). Peirce’s notion of abduction. The Journal of Philosophy, 55(14), 593–597. https://doi.org/10.2307/2021966.

Fredricks, J. A., & McColskey, W. (2012). The measurement of student engagement: A comparative analysis of various methods and student self-report instruments. In S. L. Christenson, A. L. Reschly, & C. Wylie (Eds.), Handbook of research on student engagement (pp. 763–782). Boston, MA: Springer. https://doi.org/10.1007/978-1-4614-2018-7_37.

Gibbs, G., & Simpson, C. (2004). Conditions under which assessment supports students’ learning. Learning and Teaching in Higher Education, 1, 5–33.

Gilbert, D. T., Giesler, R. B., & Morris, K. A. (1995). When comparisons arise. Journal of Personality and Social Psychology, 69(2), 227–236.

Gunawardena, C. N., & Zittle, F. J. (1997). Social presence as a predictor of satisfaction within a computer-mediated conferencing environment. American Journal of Distance Education, 11(3), 8–26. https://doi.org/10.1080/08923649709526970.

Hew, K. F., Lan, M., Tang, Y., Jia, C., & Lo, C. K. (2019). Where is the “theory” within the field of educational technology research? British Journal of Educational Technology, 50(3), 956–971. https://doi.org/10.1111/bjet.12770.

Houghton, N. (2016). Six into one: The contradictory art school curriculum and how it came about. International Journal of Art & Design Education, 35(1), 107–120. https://doi.org/10.1111/jade.12039.

Huang, Y.-M. (2017). Exploring students’ acceptance of team messaging services: The roles of social presence and motivation. British Journal of Educational Technology, 48(4), 1047–1061. https://doi.org/10.1111/bjet.12468.

Ison, R. (2000). Supported open learning and the emergence of learning communities: The case of the Open University UK. In R. Miller (Ed.), Creating learning communities: Models, resources, and new ways of thinking about teaching and learning (pp. 90–96). Foundation for Educational Renewal, Inc. https://oro.open.ac.uk/37380/.

Jones, D., & Dewberry, E. (2013). Building information modelling design ecologies: A new model? International Journal of 3-D Information Modelling, 2(1), 53–64. https://doi.org/10.4018/ij3dim.2013010106.

Jones, D., Lotz, N., & Holden, G. (2017). Lurking and learning: Making learning visible in a virtual design studio. In Proceedings of the LearnXdesign London 2017 conference, London (pp. 176–183). https://oro.open.ac.uk/52977/.

Karabulut-Ilgu, A., Jaramillo Cherrez, N., & Jahren, C. T. (2018). A systematic review of research on the flipped learning method in engineering education. British Journal of Educational Technology, 49(3), 398–411. https://doi.org/10.1111/bjet.12548.

Kear, K. (2011). Online and social networking communities: A best practice guide for educators. London: Routledge.

Kimbell, L. (2011). Rethinking design thinking: part I. Design and Culture, 3(3), 285–306. https://doi.org/10.2752/175470811X13071166525216.

Lanig, A. K. (2019). Educating designers in virtual space: A description of hybrid studios. In Proceedings of DRS learn X design 2019, Ankara, Turkey (pp. 247–256). https://doi.org/10.21606/learnxdesign.2019.01079.

Lave, J., & Wenger, E. (1991). Situated learning: Legitimate peripheral participation. Learning in doing: Social, cognitive, and computational perspectives (1st ed.). Cambridge: Cambridge University Press.

Little, P., & Cardenas, M. (2001). Use of “studio” methods in the introductory engineering design curriculum. Journal of Engineering Education, 90(3), 309–318.

Lloyd, P. (2011). Does design education always produce designers? In Conference for the international association of colleges for art, design and media (CUMULUS), Paris, France.

Lloyd, P. (2012). Embedded creativity: teaching design thinking via distance education. International Journal of Technology and Design Education, 23(3), 749–765. https://doi.org/10.1007/s10798-012-9214-8.

Lotz, N., Jones, D., & Holden, G. (2015). Social engagement in online design pedagogies. In R. V. Zande, E. Bohemia, & I. Digranes (Eds.), Proceedings of the 3rd international conference for design education researchers (pp. 1645–1668). Chicago, IL: Aalto University. https://doi.org/10.13140/RG.2.1.2642.5440.

Lotz, N., Jones, D., & Holden, G. (2019). OpenDesignStudio: Virtual studio development over a decade. In Proceedings of DRS learn X design 2019, Ankara, Turkey (pp. 267–280). https://doi.org/10.21606/learnxdesign.2019.01079.

Lyon, P. (2011). Design education—learning, teaching and researching through design (1st ed.). Farnham: Gower Publishing Ltd.

Maher, M. L., & Simoff, S. J. (1999). Variations on the virtual design studio. In Proceedings of fourth international workshop on CSCW in design (pp. 159–165).

Maher, M. L., Simoff, S. J., & Cicognani, A. (2000). Understanding virtual design studios (1st ed.). London: Springer.

Malins, J., Gray, C., Pirie, I., Cordiner, S., & Mckillop, C. (2003). The virtual design studio: Developing new tools for learning, practice and research in design. In Proceedings of the 5th European academy of design conference (pp. 1–7). https://openair.rgu.ac.uk/handle/10059/622.

Marshalsey, L. (2015). Investigating the experiential impact of sensory affect in contemporary communication design studio education. International Journal of Art & Design Education, 34(3), 336–348.

Mewburn, I. (2011). Lost in translation: Reconsidering reflective practice and design studio pedagogy. Arts and Humanities in Higher Education, 11(4), 363–379. https://doi.org/10.1177/1474022210393912.

Munro, P. J. (1991). Presence at a distance: The educator-learner relationship in distance education and dropout. The University of British Columbia.

NAO. (2007). Staying the course: The retention of students in higher education, London.

Nguyen, Q., Huptych, M., & Rienties, B. (2018). Linking students’ timing of engagement to learning design and academic performance. In Proceedings of the 8th international conference on learning analytics and knowledge (LAK ’18), Sydney, New South Wales, Australia (pp. 141–150). ACM Press. https://doi.org/10.1145/3170358.3170398. Retrieved November 7, 2018.

Orr, S., & Shreeve, A. (2018). Art and design pedagogy in higher education: Knowledge, values and ambiguity in the creative curriculum. Routledge research in education. London; New York: Routledge, Taylor & Francis Group.

Peirce, C. S. (1955). Philosophical writings of Peirce. North Chelmsford: Courier Corporation.

Phipps, R., & Merisotis, J. (1999). What’s the difference? A review of contemporary research on the effectiveness of distance learning in higher education. Washington, DC: Information Analyses, Institute for Higher Education Policy.

Richburg, J. E. (2013). Online learning as a tool for enhancing design education. Kent: Kent State University.

Robbie, D., & Zeeng, L. (2012). Flickr: Critique and collaborative feedback in a design course. In C. Cheal, J. Coughlin, & S. Moore (Eds.), Transformation in teaching: Social media strategies in higher education (1st ed., pp. 73–91). Santa Rosa: Informing Science Press.

Rodriguez, C., Hudson, R., & Niblock, C. (2018). Collaborative learning in architectural education: Benefits of combining conventional studio, virtual design studio and live projects. British Journal of Educational Technology, 49(3), 337–353. https://doi.org/10.1111/bjet.12535.

Rowe, M. (2016). Developing graduate attributes in an open online course. British Journal of Educational Technology, 47(5), 873–882. https://doi.org/10.1111/bjet.12484.

Saghafi, M. R., Franz, J., & Crowther, P. (2012). Perceptions of physical versus virtual design studio education. International Journal of Architectural Research, 6(1), 6–22.

Schadewitz, N., & Zamenopoulos, T. (2009). Towards an online design studio: A study of social networking in design distance learning. In International Association of Societies of Design Research (IASDR) conference 2009, Seoul.

Schnabel, M. A., & Ham, J. J. (2012). Virtual design studio within a social network. Journal of Information Technology in Construction, 17, 397–415.

Schneider, A., Von Krogh, G., & Jäger, P. (2013). What’s coming next? Epistemic curiosity and lurking behavior in online communities. Computers in Human Behavior, 29(1), 293–303. https://doi.org/10.1016/j.chb.2012.09.008.

Schön, D. A. (1987). Educating the reflective practitioner (1st ed.). San Francisco: Wiley.

Short, J., Williams, E., & Christie, B. (1976). The social psychology of telecommunications. Hoboken: Wiley.

Shulman, L. S. (2005). Signature pedagogies in the professions. Daedalus, 134(3), 52–59. https://doi.org/10.1162/0011526054622015.

Sidawi, B. (2012). The impact of social interaction and communications on innovation in the architectural design studio. Buildings, 2(3), 203–217. https://doi.org/10.3390/buildings2030203.

Simpson, O. (2013). Student retention in distance education: are we failing our students? Open Learning: The Journal of Open, Distance and e-Learning, 28(2), 105–119. https://doi.org/10.1080/02680513.2013.847363.

Sochacka, N. W., Guyotte, K. W., & Walther, J. (2016). Learning together: A collaborative autoethnographic exploration of STEAM (STEM + the Arts) education. Journal of Engineering Education, 105(1), 15–42. https://doi.org/10.1002/jee.20112.

Teddlie, C., & Tashakkori, A. (2006). A general typology of research designs featuring mixed methods. Research in the Schools, 13(1), 12–28.

Thomas, E., Barroca, L., Donelan, H., Kear, K., & Jefferis, H. (2016). Online conversations around digital artefacts: The studio approach to learning in STEM subjects. In S. Cranmer, M. de Laat, T. Ryberg, & J. A. Sime (Eds.), Proceedings of the 10th international conference on networked learning (pp. 1–8). ISBN 978-1-86220-324-2.

Thomson, N. S., Alford, E. M., Liao, C., Johnson, R., & Matthews, M. A. (2005). Integrating undergraduate research into engineering: A communications approach to holistic education. Journal of Engineering Education, 94(3), 297–307.

Vygotsky, L. S. (1978). Mind in society: The development of higher psychological processes. Cambridge, MA: Harvard University Press.

Wilson, J. M., & Jennings, W. C. (2000). Studio courses: How information technology is changing the way we teach, on campus and off. Proceedings of the IEEE, 88(1), 72–80.

Wojtowicz, J. (Ed.). (1995). Virtual design studio (1st ed.). Hong Kong: Hong Kong University Press.

Funding

This project was part funded by eSTEeM, an Open University initiative to support scholarship in STEM education.

Ethics declarations

Ethical approval

All work was carried out strictly in accordance with institutional ethical standards.

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jones, D., Lotz, N. & Holden, G. A longitudinal study of virtual design studio (VDS) use in STEM distance design education. Int J Technol Des Educ 31, 839–865 (2021). https://doi.org/10.1007/s10798-020-09576-z

DOI: https://doi.org/10.1007/s10798-020-09576-z