Abstract

Coordinated disinformation campaigns are used to influence social media users, potentially leading to offline violence. In this study, we introduce a general methodology to uncover coordinated messaging through an analysis of user posts on Parler. The proposed Coordinating Narratives Framework constructs a user-to-user coordination graph, which is induced by a user-to-text graph and a text-to-text similarity graph. The text-to-text graph is constructed based on the textual similarity of Parler and Twitter posts. We study three influential groups of users in the 6 January 2021 Capitol riots and detect networks of coordinated user clusters that post similar textual content in support of disinformation narratives related to the U.S. 2020 elections. We further extend our methodology to Twitter tweets to identify authors that share the same disinformation messaging as the aforementioned Parler user groups.

1 Introduction

The free speech social network Parler has been under scrutiny for its role as a preparatory medium for the 6 January 2021 Capitol riots, where supporters of then-president Donald Trump stormed the Capitol building. The United States has previously seen movements like BlackLivesMatter and StopAsianHate affected by disinformation narratives on social media, which can lead to offline violence (Lyu et al. 2021; Zannettou et al. 2019).

A key part of the field of social cybersecurity is the detection of disinformation on social media platforms. Disinformation, in this context, is a deliberately manipulated narrative or distortion of facts to serve a particular purpose (Ireton and Posetti 2018). In particular, we are interested in the coordination of spreading disinformation by online actors as coordinated operations can pose threats to the social fabric (Carley 2020). Within the realm of coordinated disinformation campaigns, we aim to identify key actors and disinformation narratives surrounding the U.S. Capitol Riots. In particular, this study seeks to address which actors, or classes of actors, are involved in disinformation spread on Parler. Additionally, we wish to investigate whether there are cross-domain disinformation narratives across Parler and Twitter.

For this study, we analyzed the activity of the users on Parler, with a special focus on three account types: military/veteran-identifying, patriot-identifying and QAnon-identifying accounts. We then combined textual and user-level information to analyze coordinated messaging and find influential accounts among those identified classes of users. Finally, we identified Twitter users that tweet textual information that matches the narratives of these three identified classes of Parler users to analyze cross-platform textual coordination.

The main contributions of our work are as follows:

1. We identify three classes of accounts, namely those that identify as military, “patriot” or QAnon, that are very influential on Parler after the January 6th Capitol riot and active in perpetuating disinformation themes.

2. We propose a new technique to find coordinated messaging among users using features of their parleys. The Coordinating Narratives Framework is a text-coordination technique that reduces complex networks into core members, providing leads for further analysis.

3. Using the framework, we perform cross-platform analysis by identifying Twitter authors that coordinate in narratives with the three classes of Parler authors.

2 Background

Parler positions itself as a free speech social network where its users can “speak freely and express yourself openly”. A significant portion of its user base consists of supporters of former U.S. President Donald Trump, U.S. political conservatives, QAnon conspiracy theorists and far-right extremists (Aliapoulios et al. 2021). Additionally, the platform has been in the spotlight for being a coordination platform for the January 6, 2021 Capitol riots (Munn 2021), where hundreds of individuals stormed the US Capitol building, emboldened by calls from then-president Donald Trump to stop the certification of the 2020 US election. The social network was shut down on January 11, but has since been reactivated under a different hosting service (Horwitz and McMillan 2021).

Prior work analyzed Parler’s role in shaping the narratives of different contexts. Parleys (posts on Parler) between August 2018 and January 2021 revealed a huge proportion of hashtags supporting Trump on the elections (Aliapoulios et al. 2021). Discussion of the COVID-19 vaccine on the platform slants disproportionately towards anti-vaccine opinions (Baines et al. 2021). Parler also hosts a large number of supporters of QAnon, a far-right conspiracy theory claiming a group of elites control global politics. These supporters are considered to have influenced the 2020 US Presidential Elections (Bär et al. 2022). Specific to the Capitol riots, a comparative study between Twitter and Parler shows that a huge proportion of Parler’s activity was in support of undermining the validity of the 2020 US Presidential elections, while the Twitter conversation disapproved of Trump (Hitkul et al. 2021).

One point of concern is the detection of coordinated campaigns used to influence users, which may in turn lead to real-world violence like the Capitol riot. Several techniques of coordination detection on social media have been previously researched: using network community detection algorithms (Nizzoli et al. 2020), statistical analysis of retweets, co-tweets, hashtags and other variables (Magelinski et al. 2022; Vargas et al. 2020), and constructing a synchronized action framework (Ng and Carley 2022). These works, however, depend largely on an explicit user-to-user network-based structure like retweets, which is not dominant in Parler nor across platforms. Another way of assessing coordinated behavior between social media websites is by comparing the textual content that users are sharing. Pacheco et al. have recently utilized a textually-based user comparison as a means of overcoming limitations in finding coordinated user behavior with just explicit user-to-user networks (Pacheco et al. 2020). We adapt textually-based user comparison and extend the methodology to a scalable one that can be used for large data sets.

Another point of concern is disinformation campaigns being “cross-platform”, or utilizing more than one social media platform. While most studies of disinformation campaigns focus on just one social media platform for analysis, some recent works have highlighted that these campaigns can be cross-platform in nature. Recent work has found that social media sites like Twitter or Facebook can be used as an amplification platform for disinformation content on YouTube (Iamnitchi et al. 2020; Wai Ng et al. 2021). Studies have discovered language similarities between fringe social media sites like Parler and more mainstream sites like Twitter (Sipka et al. 2022). We extend these prior studies to explore the relationships in disinformation campaigns between these two types of social media sites.

3 Data and methodology

In this section, we detail the data used in our study along with the analysis techniques for understanding the actors who spread content on Parler and the narratives present within that content. We first detail the data used and how it was processed for analysis. We then detail how we analyzed the textual content for disinformation themes and coordinated propagation of disinformation.

3.1 Data collection and processing

This study performs a multi-platform examination of textual narratives across two platforms: Parler and Twitter. We summarize the corresponding terminologies used by both platforms in Table 1.

The Parler data used for this study comes from the Parler data leak following the January 6th Capitol Riot. After the Capitol riots, Amazon Web Services banned Parler from being hosted on its website. Shortly before that, an independent researcher and other internet users sought to preserve the data by performing a scrape of its posts, or “parleys” (Lyons 2021). In particular, we use a partial HTML scrape of the posts. The data set consists of 1.7 million posts from 290,000 unique users and has 98% of its posts from January 3rd to January 10th of 2021.

We developed a parsing tool to extract the useful elements from the HTML: users, text, external website URLs, echo counts, impression counts, and so on (the parsing code is linked in the Notes).

Twitter data was collected with the Twitter V2 REST API using the following well-known hashtags associated with the events of January 6th: #stopthesteal, #stopthefraud, #marchfortrump, #marchtosaveamerica, #magacivilwar, #saveamericarally and #wildprotest. This dataset consists of 2.08 million posts from 923,008 users from January 3rd to January 12th of 2021.

3.2 Parler user class analysis

For the Parler users, we focused on those users that are most likely to be involved with disinformation or who had an outsize influence in the January 6th Capitol Riot. In particular, we coded for three classes of users based upon news reports of those involved in the January 6th events: those who openly display a military or veteran affiliation, those who identify themselves with the term “patriot” and those who use QAnon-related terms in their names (NPR Staff 2021). To find these users, we used regex string matching on their “author names” and their “author user names”. Each user has both an “author user name”, which is their actual account handle, or @-name, and an “author name”, which is a short free-text description that users write themselves. Table 2 summarizes the terms used for finding accounts belonging to these three classes of authors. We then analyzed the social media usage and artifacts of these three classes of users, examined their textual content, and identified coordinated messaging among them.
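The name-matching step described above can be sketched as follows. This is a minimal illustration, not the authors' tool: the term lists are hypothetical placeholders (the actual terms are those in Table 2, which is not reproduced here), and `classify_user` is a name introduced only for this example.

```python
import re

# Hypothetical term lists for illustration only; the study's real terms are in its Table 2.
CLASS_PATTERNS = {
    "military": re.compile(r"army|navy|marine|veteran|usmc", re.IGNORECASE),
    "patriot": re.compile(r"patriot", re.IGNORECASE),
    "qanon": re.compile(r"qanon|wwg1wga", re.IGNORECASE),
}


def classify_user(author_name: str, author_username: str) -> set:
    """Return every class whose pattern matches either of the two name fields."""
    fields = (author_name, author_username)
    return {
        label
        for label, pattern in CLASS_PATTERNS.items()
        if any(pattern.search(field) for field in fields)
    }


# A user may fall into more than one class, or into none.
example = classify_user("Jane", "@PatriotQAnon1776")
```

Returning a set rather than a single label is a design choice made here so that accounts matching several term lists are not silently assigned to only one class.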

3.3 Identification of coordinated messaging

Finally, we use textual information to evaluate user coordination in spreading themes within messages. The Parler data shows a relatively low proportion of parley echoes (see Table 3), which means users are not actively sharing other users’ parleys. In contrast, Twitter data has a huge proportion of retweets (Hitkul et al. 2021). This renders using methods of identifying user coordination through retweets and co-tweets inadequate, hence we turn to analyzing similar texts.

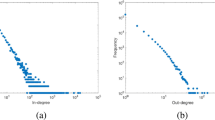

Drawing ideas from previous work, we create user-to-user graphs based on users’ textual content to analyze user accounts with very similar textual messages (Pacheco et al. 2020). However, instead of creating an edge only if at least one pair of posts between two users exceeds an arbitrary similarity threshold, we use a text-to-text graph to induce a user-to-user graph, and set the threshold by which we filter edges through a statistical analysis of all graph edge weights.

We consider only original parleys and not echoed parleys in order to highlight authors who are consciously writing similar texts but may be trying to hide their affiliation by not explicitly echoing each other. We first create a user-to-text binary graph A, which represents the users that wrote each parley. Next, we create a text-to-text graph P through a k-Nearest Neighbor (kNN) representation of the parley texts, with \(k=\log_2{N}\), where N is the number of parleys (Maier et al. 2009). To do so, we perform BERT vectorization on each parley text to create contextualized embeddings in a 768-dimensional latent semantic space (Devlin et al. 2018). We use the FAISS library to index the text vectors and perform an all-pairs cosine similarity search to determine the top k closest vectors to each parley vector (Johnson et al. 2017). We then symmetrize this kNN graph via \(P' = \frac{P+P^{T}}{2}\) to produce a symmetric kNN graph of the posts, as this tends to better maintain meso-structures from the data, like clusters, in the graph (Campedelli et al. 2019; Maier et al. 2011; Ruan 2009). The edges of the graph are weighted in [0, 1] by the cosine similarity of the two parleys.
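As a rough sketch of the kNN construction just described, the snippet below builds a symmetrized cosine-similarity kNN graph with NumPy. Several things here are assumptions made for illustration: random vectors stand in for the BERT embeddings, a brute-force similarity matrix replaces the FAISS index, k is rounded up to an integer, and negative similarities are clipped to zero so that weights stay in [0, 1]. The function name `symmetric_knn_graph` is hypothetical.

```python
import math

import numpy as np


def symmetric_knn_graph(embeddings: np.ndarray) -> np.ndarray:
    """Build a symmetrized cosine-similarity kNN graph with k = ceil(log2 N)."""
    n = embeddings.shape[0]
    k = max(1, math.ceil(math.log2(n)))  # rounding up is an assumption
    # L2-normalize rows so that dot products equal cosine similarities.
    unit = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    sims = unit @ unit.T
    np.fill_diagonal(sims, -np.inf)  # a post is never its own neighbor
    # Column indices of the k most similar posts for each row.
    top_k = np.argpartition(-sims, k - 1, axis=1)[:, :k]
    knn = np.zeros((n, n))
    rows = np.arange(n)[:, None]
    # Clipping negative similarities to 0 is an assumption to keep weights in [0, 1].
    knn[rows, top_k] = np.clip(sims[rows, top_k], 0.0, 1.0)
    # Symmetrize: (P + P^T) / 2.
    return (knn + knn.T) / 2.0


rng = np.random.default_rng(0)
toy_embeddings = rng.normal(size=(16, 8))  # stand-in for 768-dimensional BERT vectors
graph = symmetric_knn_graph(toy_embeddings)
```

The dense N-by-N similarity matrix is only workable for small N; at the scale of millions of posts, an approximate or batched nearest-neighbor index such as the one the authors describe would be needed.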

Having found a latent graph of the textual content of the posts, we then induce a user-to-user graph. We do this through the matrix product:

\(U = A P' A^{T}\)

where U is the user-to-user graph, A is a user-to-text bipartite graph where an edge indicates that a user posted a given parley, and \(P'\) is the text-to-text kNN graph as previously defined. The resulting graph U has edges that represent the strength of textual similarity between two users, given how close in similarity their posts are in a latent semantic space. Compared with identifying only the most similar pair of posts between two users (Pacheco et al. 2020), U accounts for users having multiple similar posts and better respects the textual data manifold when measuring the similarity between two users.
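To make the induction step concrete, here is a small worked example. It assumes the induced graph is the matrix product of A, the symmetrized text-to-text graph, and the transpose of A, which is the only ordering consistent with the dimensions described (users by texts, texts by texts, texts by users). The matrices and weights are made up, and dropping self-loops is an extra assumption for readability.

```python
import numpy as np

# Toy user-to-text matrix A: 3 users x 4 posts (1 = the user wrote the post).
A = np.array(
    [
        [1, 1, 0, 0],  # user 0 wrote posts 0 and 1
        [0, 0, 1, 0],  # user 1 wrote post 2
        [0, 0, 0, 1],  # user 2 wrote post 3
    ],
    dtype=float,
)

# Toy symmetrized text-to-text graph P' with made-up similarity weights.
P_sym = np.array(
    [
        [0.0, 0.0, 0.9, 0.0],
        [0.0, 0.0, 0.8, 0.1],
        [0.9, 0.8, 0.0, 0.0],
        [0.0, 0.1, 0.0, 0.0],
    ]
)

# Induced user-to-user graph: sums post-level similarities over each user pair.
U = A @ P_sym @ A.T
np.fill_diagonal(U, 0.0)  # dropping self-loops is an assumption
```

In this toy case users 0 and 1 end up with weight 1.7 because both of user 0's posts are close to user 1's post, while users 0 and 2 share only a weak 0.1 link. This illustrates how several moderately similar posts accumulate into a stronger user-level edge than a single best match would.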

To sieve out the core structure of the graph, we further prune the graph U based on link weights, forming \(U'\), keeping only the links whose weight is more than one standard deviation above the mean link weight. This leaves behind users that strongly resemble each other in terms of the semantic similarity of their parley texts.
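The pruning rule can be sketched as below. This reads the rule as a threshold of mean plus one standard deviation, computed over the nonzero link weights with each undirected link counted once; both of those details are assumptions, as is the function name `prune_weak_links`.

```python
import numpy as np


def prune_weak_links(U: np.ndarray) -> np.ndarray:
    """Zero out links at or below mean + 1 std of the nonzero link weights."""
    upper = np.triu(U, k=1)  # count each undirected link once
    weights = upper[upper > 0]
    threshold = weights.mean() + weights.std()
    return np.where(U > threshold, U, 0.0)


# Toy symmetric user-to-user graph with one strong link and three weak ones.
U_toy = np.zeros((4, 4))
for i, j, w in [(0, 1, 2.0), (0, 2, 0.1), (1, 2, 0.2), (2, 3, 0.3)]:
    U_toy[i, j] = U_toy[j, i] = w

U_core = prune_weak_links(U_toy)
```

Because the threshold is derived from the weight distribution itself, it adapts to each graph rather than requiring a hand-picked similarity cutoff.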

We first perform this procedure upon Parleys to sieve out the core structure of Parler users that are coordinated in their narratives on the platform. We then perform this same procedure on Parler users against the Twitter dataset, comparing tweets against the parleys from the users identified from the three user groups. From this, we construct the core structure of the user-to-user graph \(U'\) representing narrative coordination between Parler and Twitter users and perform further user analysis on the Twitter users in terms of the date the accounts were created.

4 Results

In this section, we detail the results of our analysis. We begin by presenting the social media usage statistics of the three classes of users. We then look at the textual content being spread for disinformation narratives. Finally, we present the results of analyzing users and textual content.

4.1 Parler account usage and artifacts

After having identified classes of users (i.e. military/veteran, patriot, QAnon) using regex searches within their author names, we then analyzed the differences in their activity on Parler. For the military/veteran users, we identified 975 accounts (0.3% of all accounts) that posted 14,745 Parleys (0.8% of all Parleys). For the patriot users, we identified 5,472 accounts (1.9% of all accounts) that posted 81,293 Parleys (4.7% of all Parleys). For the QAnon users, we identified 3,400 accounts (1.2% of all accounts) that posted 71,998 Parleys (4.1% of all Parleys). So, while these accounts make up a small portion of the users, less than 5% of all the user accounts, they account for close to 10% of all of the posts by volume. Table 3 summarizes the social media engagement and use of various social media artifacts across all of the accounts.

Generally, we observe that the social engagement is different for the accounts that identify with QAnon and patriot terms, as compared to the overall Parler population. The military, patriot and QAnon accounts are all echoed more than the population average. This trend of having posts be highly echoed is especially true for the QAnon accounts which, on average, see three times as much echoing on their posts as the average user does. Additionally, the QAnon user category also sees more impressions, upvotes, and comments than does any other category of user. The military, patriot, and QAnon accounts also use more social media artifacts, like hashtags or mentions, than does the average account. So, those accounts that openly identify with QAnon terms tend to see more social engagement with their posts than any other class of accounts, and all of the special types of accounts use more social media artifacts than average users.

Additionally, the verbiage used by these accounts tends toward that associated with disinformation campaigns. The top words by their counts within the textual content of all the posts of all three account classes include the following: trump, pence, patriot, people, antifa, country, president, dc, echo, election, need, today, wwg1wga, and stopthesteal. While some of these terms are common throughout the data set, like ‘trump’, ‘need’, ‘president’, or ‘people’, others show up more prominently in the three classes of accounts, like ‘wwg1wga’ and ‘antifa’, than they do across all posts. The usage of these words is almost uniquely associated with disinformation campaigns like the 2020 U.S. Election being fraudulent or Antifa being responsible for the January 6th Capitol Riot. Thus, we observe that the three classes of users also frequently employ verbiage associated with disinformation.

4.2 Coordinated messaging between users

We present the graph \(U'\), the core structure of the induced user-to-user graph U for Parler in Figure 1, and the Parler x Twitter multi-platform induced user-to-user graph U in Figure 2. Both graphs have distinct core structures which are due to the fact that almost all of the conversation surrounds the 2020 U.S. Election. The graphs are annotated with clusters of coordinated messaging between users derived from the analysis of textual similarity and the strength of the coordination is represented by the link weights. We segment each graph into subgraphs by visual inspection for better clarity of each portion of the graph. The messaging of each subgraph is consistent, showing the effectiveness of our approach in uncovering coordination between users as well as distinct disinformation themes spread by those users.

4.2.1 Coordinating narratives in Parler

We segment the graph using the Leiden clustering method and manually interpret the main clusters. The Leiden community clustering algorithm is an iterative three-step algorithm that moves nodes into partitions, refines the partitions and then aggregates the network (Traag et al. 2019). Because the communities formed through the algorithm are guaranteed to be well-connected, we can extract the interesting communities for deeper analysis. We segment the graph in Figure 1 into subgraphs (a) to (g) and describe the narratives of each portion.

Subgraph (a) represents Patriot users who have every conviction they will fight for their Republic and president. Subgraph (b) calls for freedom in French, with many users having handles that end with the different Canadian provinces. The users associate themselves with different user groups. Subgraph (c) sieves out users in the center of the graph that strongly coordinate with each other in disputing the electoral vote count. These are predominantly patriot users and a handful of military users disputing the vote.

Subgraph (d) contains the core patriot users in the center of the graph, who strongly coordinate with each other. This user group mainly advocates that the truth about the elections will come to pass and that the storm is upon us. These messages express support for the disinformation narratives of the 2020 U.S. Election being fraudulent as well as QAnon conspiracy theories relating to then-President Donald Trump seizing power in the U.S.

Subgraph (e) represents QAnon users, who mostly purport that Trump will win, and provide updates about the Capitol situation in terms of spurring the group on (“PLS SPREAD TrumpVictory FightBack ProtectPatriots”) and information updates (“Protesters are now in Nancy Pelosi’s office”). Some of these narratives blame BLM and a deliberately organized terrorist Antifa for infiltrating the protesters who stormed the Capitol.

Subgraph (f) sieves out users at the fringe of the graph that call for justice and revolution and not to be let down. Subgraph (g) represents military users that hope for a free world and generally call to Stop the Steal of the 2020 elections. “Stop the Steal” has been a recurring phrase throughout Trump’s political career, peaking in the Capitol riots as a movement to overturn the 2020 US election results, and has been a key phrase associated with the disinformation campaign trying to de-legitimize the 2020 US election. This #StopTheSteal narrative recurs on Twitter and we will present it in the next section.

4.2.2 Coordinating narratives across Parler and Twitter

Having analyzed the textual and user-level information for the Parler accounts alone, we then turned to analyzing the similarity of Twitter accounts with Parler accounts. Figure 2 represents a multi-platform view of coordinating narratives across Parler and Twitter platforms. The graph investigates the similarity in narratives across the two platforms Parler and Twitter. We segment the graph using Leiden clustering method and manually interpret the main subgraphs, indicated as subgraphs (a) to (f).

Subgraph (a) represents users that provide real-time updates on the Capitol Riot situation and support the election fraud narrative. Subgraph (b) encourages the #StopTheSteal narrative, spurring others on in the Capitol protests. These users spread disinformation narratives, such as the claim that #antifa-infiltrated members are trying to stop the truth about the election fraud from coming to light, and blame #BLM for the storming of the Capitol.

Subgraph (c) contains fringe conversations among Twitter users on election fraud, calling for this huge election lie to come to light. Subgraph (d) propagates #StopTheSteal narratives in Italian, while subgraph (f) propagates that narrative in Spanish. Subgraph (f) additionally propagates other QAnon narratives in the Spanish language.

Subgraph (e) takes a different twist from all the other graphs, calling out that there is no election fraud and that the lies and the reckless tweets have incited violence and resulted in deaths and should be stopped immediately. These users call for the social media platforms to remove users propagating these narratives and for peace in the country.

4.3 Twitter users and artifacts

We segment the graph using Leiden clustering method and manually interpret the main subgraphs. Having identified these distinct subgraphs in the textually-induced Twitter and Parler user graph, we analyzed the nature of the Twitter accounts in the subgroups.

The largest clusters of users in these graphs are in subgraphs (a) and (b), which propagate the Capitol Riot situation, election fraud and disinformation narratives. However, accounts in these two subgraphs have lower follower counts than those in subgraphs (d) and (f), which propagate the same messages in Italian and Spanish. These groups may have been targeting the Hispanic population, while subgraphs (a) and (b) targeted the general English-speaking population.

All subgraphs have an almost-equal proportion of accounts suspended. The subgraphs with the highest proportion of suspended accounts are subgraphs (d) and (e), which purport #StopTheSteal narratives and call out election fraud. Similarly, these two subgraphs also have the highest proportion of accounts created in 2021. When analyzing the account creation in 2020, the subgraphs with the highest proportion of these accounts propagate information in languages other than English.

5 Discussion and conclusion

In this work, we analyzed actors involved in disinformation spread on Parler and in related Twitter data during the January 6th Capitol Riot event. We looked at three main user groups based upon unusual online activity and their salience in stories associated with the January 6th riots: users that openly present a military or veteran affiliation, users that use the moniker ‘patriot’, and users that identify with QAnon-related terms. We found that the posts of these three groups of users are echoed more and receive more impressions on Parler than the population average, with the QAnon users receiving the most of all. These user groups also employ typical tactics of using more hashtags and mentions than the average account to spread their messages, as well as key verbiage relating to disinformation campaigns. Overall, we find that certain users that self-identify with particular identities typically revered by the political right in the US (i.e. military service, patriotism, etc.) tend to be more involved in guiding the conversation within right-leaning social media spheres.

Having identified these influential accounts, we then looked at textual coordination between actors. Identifying the strength of user-to-user coordination over link weights presents coordinated activity as a spectrum rather than a binary state of shared activity (Vargas et al. 2020). This shows which users typically post similar texts, allowing identification of how similar user accounts are to each other. Our approach of inducing a user-to-user graph by textual similarity produced distinct clusters of users that had similar textual themes. These themes include known disinformation themes like the dispute of the electoral vote count and that Trump won the 2020 US elections (i.e. that the 2020 U.S. election was stolen from former U.S. President Donald Trump). Within Parler, we observed distinct groups of users, typically identifying as patriot or QAnon, that engaged in posting the same textual content, which aligns with known 2020 election narratives (Table 4).

Through the textually-induced, cross-platform user-to-user network of Twitter and Parler conversations surrounding the Capitol Riots, we observed similar disinformation messaging occurring. Additionally, a large percentage of these Twitter accounts were created in 2020 and 2021, and were suspended by the Twitter platform. This leads us to believe that some of these accounts were created as disposable accounts in preparation for the event.

In analyzing the composition of Twitter accounts that propagate key narratives, we identify that clusters with Spanish and Italian language usage gather a larger following and tweet more often than clusters writing in English. These clusters also have more accounts created in 2020–2021 and a larger percentage of accounts suspended. At this time, it is not clear what the nature of these accounts is and what they are attempting to do to the overall January 6th conversation, and we plan to investigate this further in future work.

After establishing the Parler narratives, we look towards our Twitter corpus to identify narratives that coordinate with the Parler disinformation themes. Not only does this reduce the data size of the Twitter corpus to a much more manageable one, it also anchors the search in narratives that have been already determined. This methodology can be further extended to identify coordinating narratives and actors across platforms—begin the search in one platform as an anchor to identify coordinating messaging from actors from another platform.

In terms of user privacy, we examined users only at the aggregate level, in terms of the three defined user classes and their social media platforms. We did not examine any individual users’ data, apart from the user name which was only used to categorize the users into military/patriot/QAnon classes.

There are some important limitations to this study. First, the data collected was only a partial scrape of the parleys made on the platform surrounding the Capitol riots event. As the platform was shut down after the Capitol Riots event, we cannot conclude this was the entire set of parleys related to the event. Similarly, the collection of Twitter data involves Twitter API restrictions and we may not have collected all the tweets related to the event. Second, we examined user classes via self-identified users. This means that the analysis is limited to users who self-presented their affiliations, and the silent users are not considered in the analysis. Other ways of using implicit signals might be explored in order to capture a more accurate user base. Lastly, we have not explored all of the means of properly learning the textual similarity graph or sieving out the main graph structure from the induced graph. So, we do not know if we have found the optimal graph representation. Future work will involve investigating different types of dimensionality reduction techniques to identify techniques that are effective on large-scale datasets.

Future avenues of study in this line include looking at a multimodal coordination effort on the platform in contributing to the spread of disinformation, rather than only the text field, taking into account the multitude of images and videos posted on Parler, resulting in the offline violence. Another important future direction is to study dynamic coordination networks, based on temporal information to characterize the change in coordinated messaging among users.

In this study, we used a general approach to sieve out the core structure of a user-to-user coordination graph which relies upon textual content of social media data. This Coordinating Narratives Framework provides a more robust filtering approach as compared to work that relies on an arbitrary link weight threshold value, below which the links are discarded. Our approach can further help to identify disinformation campaigns where automated groups of user accounts consistently post a similar message, and can overcome shortcomings with just trying to find coordination among disinformation actors by their explicit networks (i.e. echoing on Parler). We extend this approach to identify coordinating messaging from another platform (i.e. Twitter), to point out similar cross-platform narratives, providing a method to identify actors that coordinate across platforms. We hope the techniques here can be used to better analyze social media platforms and streamline network structures into core components for further investigation. The early detection of coordinated efforts to organize riots and political violence online may present the possibility of stopping that violence in the real world.

Notes

Code for parsing Parler HTML is available at: https://github.com/...

References

Aliapoulios M, Bevensee E, Blackburn J, Bradlyn B, Cristofaro ED, Stringhini G, Zannettou S (2021) An early look at the parler online social network

Baines A, Ittefaq M, Abwao M (2021) # scamdemic,# plandemic, or# scaredemic: what parler social media platform tells us about covid-19 vaccine. Vaccines 9(5):421

Bär D, Pröllochs N, Feuerriegel S (2022) Finding qs: profiling Qanon supporters on Parler. arXiv preprint arXiv:2205.08834

Campedelli GM, Cruickshank IM, Carley K (2019) A complex networks approach to find latent clusters of terrorist groups. Appl Netw Sci 4(1):59. https://doi.org/10.1007/s41109-019-0184-6

Carley KM (2020) Social cybersecurity: an emerging science. Comput Math Organ Theory 26(4):365–381

Devlin J, Chang M, Lee K, Toutanova K (2018) BERT: pre-training of deep bidirectional transformers for language understanding. CoRR abs/1810.04805, http://arxiv.org/abs/1810.04805

Hitkul, Prabhu A, Guhathakurta D, jain J, Subramanian M, Reddy M, Sehgal S, Karandikar T, Gulati A, Arora U, Shah RR, Kumaraguru P (2021) Capitol (pat)riots: a comparative study of twitter and parler

Horwitz J, McMillan R (2021) Parler resurfaces online after monthlong service disruption (2). https://www.wsj.com/articles/parler-resurfaces-online-after-monthlong-service-disruption-11613422725

Iamnitchi A, Ng K, Horawalavithana S (2020) Twitter is the megaphone of cross-platform messaging on the white helmets. In: Proceedings of the 13th international conference in social, cultural, and behavioral modeling (11), https://www.springerprofessional.de/en/twitter-is-the-megaphone-of-cross-platform-messaging-on-the-whit/18470618

Ireton C, Posetti J (2018) Journalism, fake news & disinformation: handbook for journalism education and training. UNESCO, https://unesdoc.unesco.org/ark:/48223/pf0000265552

Johnson J, Douze M, Jégou H (2017) Billion-scale similarity search with gpus. arXiv preprint arXiv:1702.08734

Ng KW, Horawalavithana S, Iamnitchi A (2021) Multi-platform information operations: Twitter, Facebook and YouTube against the white helmets. In: Proceedings of the 15th international AAAI conference on web and social media. https://doi.org/10.36190/2021.36. http://workshop-proceedings.icwsm.org/abstract?id=2021_36

Lyons K (2021) Parler posts, some with GPS data, have been archived by an independent researcher. https://www.theverge.com/2021/1/11/22224689/parler-posts-gps-data-archived-independent-researchers-amazon-apple-google-capitol

Lyu H, Fan Y, Xiong Z, Komisarchik M, Luo J (2021) State-level racially motivated hate crimes contrast public opinion on the #stopasianhate and #stopaapihate movement. arXiv preprint arXiv:2104.14536

Magelinski T, Ng L, Carley K (2022) A synchronized action framework for detection of coordination on social media. J Online Trust Saf. https://doi.org/10.54501/jots.v1i2.30

Maier M, Hein M, von Luxburg U (2009) Optimal construction of k-nearest neighbor graphs for identifying noisy clusters. arXiv e-prints arXiv:0912.3408

Maier M, von Luxburg U, Hein M (2011) How the result of graph clustering methods depends on the construction of the graph. arXiv e-prints arXiv:1102.2075

Munn L (2021) More than a mob: Parler as preparatory media for the U.S. capitol storming. First Monday 26(3). https://doi.org/10.5210/fm.v26i3.11574. https://firstmonday.org/ojs/index.php/fm/article/view/11574

Ng LHX, Carley KM (2022) Online coordination: methods and comparative case studies of coordinated groups across four events in the united states. In: 14th ACM web science conference 2022, pp 12–21

Nizzoli L, Tardelli S, Avvenuti M, Cresci S, Tesconi M (2020) Coordinated behavior on social media in 2019 UK General Election. arXiv preprint arXiv:2008.08370

NPR Staff (2021) The capitol siege: the arrested and their stories. https://www.npr.org/2021/02/09/965472049/the-capitol-siege-the-arrested-and-their-stories

Pacheco D, Flammini A, Menczer F (2020) Unveiling coordinated groups behind white helmets disinformation. In: Companion proceedings of the web conference 2020. WWW ’20, Association for Computing Machinery, New York, NY, USA, pp 611–616. https://doi.org/10.1145/3366424.3385775

Ruan J (2009) A fully automated method for discovering community structures in high dimensional data. In: 2009 ninth IEEE international conference on data mining, pp 968–973. https://doi.org/10.1109/ICDM.2009.141

Sipka A, Hannak A, Urman A (2022) Comparing the language of qanon-related content on parler, gab, and twitter. In: 14th ACM web science conference 2022. WebSci ’22, Association for Computing Machinery, New York, NY, USA, pp 411–421. https://doi.org/10.1145/3501247.3531550

Traag VA, Waltman L, Van Eck NJ (2019) From louvain to leiden: guaranteeing well-connected communities. Sci Rep 9(1):1–12

Vargas L, Emami P, Traynor P (2020) On the detection of disinformation campaign activity with network analysis. arXiv preprint arXiv:2005.13466

Zannettou S, Caulfield T, De Cristofaro E, Sirivianos M, Stringhini G, Blackburn J (2019) Disinformation warfare: understanding state-sponsored trolls on twitter and their influence on the web. In: Companion proceedings of the 2019 world wide web conference, pp 218–226

Acknowledgements

The research for this paper was supported in part by the Knight Foundation, the Office of Naval Research (Grant N000141812106), and an Omar N. Bradley Fellowship, and by the Center for Informed Democracy and Social-cybersecurity (IDeaS) and the Center for Computational Analysis of Social and Organizational Systems (CASOS) at Carnegie Mellon University. The views and conclusions are those of the authors and should not be interpreted as representing the official policies, either expressed or implied, of the Knight Foundation, the Office of Naval Research, or the US Government.

About this article

Cite this article

Ng, L.H.X., Cruickshank, I.J. & Carley, K.M. Coordinating Narratives Framework for cross-platform analysis in the 2021 US Capitol riots. Comput Math Organ Theory 29, 470–486 (2023). https://doi.org/10.1007/s10588-022-09371-2

DOI: https://doi.org/10.1007/s10588-022-09371-2