Abstract

Contingency-theorists have gestured to a series of phenomena such as random mutations or rare Armageddon-like events as that which accounts for evolutionary contingency. These phenomena constitute a class, which may be aptly called the ‘sources of contingency’. In this paper, I offer a probabilistic conception of what it is to be a source of contingency and then examine two major candidates: chance variation and genetic drift, both of which have historically been taken to be ‘chancy’ in a number of different senses. However, contra the gesturing of contingency-theorists, chance variation and genetic drift are not always strong sources of contingency, as they can be non-chancy (and hence, directional) in at least one sense that opposes evolutionary contingency. The probabilistic conception offered herein allows for sources of contingency to appropriately vary in strength. To this end, I import Shannon’s information entropy as a statistical measure for systematically assessing the strength of a source of contingency, which is part and parcel of identifying sources of contingency. In brief, the higher the entropy, the greater the strength. This is also empirically significant because molecular, mutational, and replicative studies often contain sufficient frequency or probability data to allow for entropies to be calculated. In this way, contingency-theorists can evaluate the strength of a source of contingency in real-world cases. Moreover, the probabilistic conception also makes conceptual room for the converse of sources of contingency: ‘sources of directionality’, which ought to be recognised, as they can interact with genuine sources of contingency in undermining evolutionary contingency.

Introduction

There is, presently, no consensus as to what evolutionary contingency amounts to. Minimally, the idea is that evolutionary outcomes could have been otherwise. Perhaps, had the evolutionary past been different, then present or future evolutionary outcomes would have been different. Or, perhaps, the biological natural laws allow for a multiplicity of possible outcomes—in other words, the biological natural laws underdetermine the evolutionary outcome.

A number of contingency-theorists have attempted to characterise, or offer an account of, evolutionary contingency by satisfying a range of desiderata, such as (1) being faithful to Gould (1989), (2) according with macroevolutionary data, (3) emphasising the importance of history, and (4) avoiding tension with indeterminism. Beatty (2006, 2016, 2017) has suggested that evolutionary outcomes can be ‘contingent per se’ (formerly, the unpredictability sense). Powell (2009, 2012) and Powell and Mariscal (2015) have suggested that evolutionarily contingent outcomes are ones that are ‘sensitive to initial conditions’. Desjardins (2011a, b, 2016) has argued that evolutionarily contingent outcomes are ‘path dependent’. And, more recently, Wong (2020a) has argued that evolutionarily contingent outcomes have non-trivial objective probabilities in some yet-to-be-defined ‘modal range’.

Despite the lack of consensus, contingency-theorists have proceeded to gesture to a series of phenomena ranging from processes central to modern evolutionary theory, like genetic drift or random mutations, to rare Armageddon-like events as what accounts for evolutionary contingency. These phenomena constitute a class, which may be aptly called the ‘sources of contingency’. The idea is that these phenomena lead to evolutionarily contingent outcomes in virtue of some inherent chanciness. However, it is not clear what a ‘source of contingency’ is, nor which sense of chance is invoked. With two exceptions (i.e. McConwell 2019; Wong 2020b), there has been little beyond mere gesturing at possible sources of contingency, resulting in a noticeable paucity of systematic investigation into this class of phenomena.

In this paper, I offer a probabilistic conception of a ‘source of contingency’. The idea is that, for any given biological process that admits of multiple possible outcomes, there is an array of possible outcomes, each with its own objective probability, and these probabilities together sum to 1. But, depending on features of the possible outcome array, a source of contingency can be said to be stronger or weaker. In fact, sources of contingency account for varying levels of evolutionary contingency precisely by entailing differently shaped probability distributions. For instance, a source of contingency is strongest when there is a uniform distribution whereby there is absolutely no probability bias towards any particular outcome (i.e. the state of equiprobability). This is intuitive, as all possible outcomes have an equal probability of occurrence, and it is highly uncertain which one will occur. However, as the probability distribution diverges from uniformity, the source of contingency becomes weaker. In this case, there is an outcome that has a relatively greater objective probability than (some of) its alternatives in the possible outcome array, and the source is said to be weak. After all, there is one outcome that is relatively more probable to occur.

The modern synthesis prescribes two obvious candidates for strong sources of contingency: (1) the group of evolutionary factors known as ‘chance variation’, and (2) the group of evolutionary factors known as ‘genetic drift’.Footnote 1 Despite previous, extensive philosophical discussions of these two groups of factors in the philosophy of biology (e.g. Beatty 1984, 2002, 2004, 2006; Brandon 2006; Lennox 2015; Millstein 1997, 2002; Sober 1984; Walsh et al. 2002), there is still much ambiguity in both terms. The former is an antiquated term employed by Darwin and his contemporaries to refer to evolutionary material, upon which natural selection acts, that is generated in a supposedly non-directed fashion in contradistinction to the alternative of the day, Lamarckism.Footnote 2 For whilst Lamarck thought that the generation of variation was continually directed at improving forms and the perpetuation of those variations neutral, Darwin took the converse view in that he thought evolution proceeded by a non-chancy, selective process (i.e. natural selection) acting on variations generated by chance (Lennox 2015). It was, however, not clear what Darwin meant by ‘chance’, nor what he meant by ‘variation’.

Fortunately, the modern synthesis progressed on both fronts. Firstly, within the modern synthesis tradition, ‘variation’ was taken to be chiefly genetic ever since Fisher’s The Correlation between Relatives on the Supposition of Mendelian Inheritance (1918). Secondly, modern synthesis authors took the processes that generate this variation, namely mutation and recombination, to be ‘chancy’ in the sense that they were random with respect to fitness (e.g. Haldane 1930; Dobzhansky 1970). That is, mutation and recombination do not occur in response to any adaptive benefit they may confer. Despite the general acceptance of this sense of chance amongst practicing biologists, other interpretations occasionally crop up in the modern biological literature. This is, as we shall see, partly owing to the fact that certain empirical findings have brought the modern synthesis sense of chance into serious question.

As for genetic drift, Beatty described it as a “heterogenous concept” (1992) of disparate processes and outcomes. These processes and outcomes, not without controversy, include (but are not limited to): (1) indiscriminate parent sampling, (2) indiscriminate gamete sampling, (3) sampling error, (4) the Sewall-Wright effect, (5) the founder effect, and (6) the Hagedorn effect (Fisher 1930; Walsh et al. 2002). However, what seems to unite all these processes and outcomes under the same heading of ‘drift’ is some “notion of chance” (Walsh et al. 2002).

Part of the trouble, however, is that the notion of chance, itself, is deeply ambiguous. Within the concepts of genetic drift and chance variation alone, there are, at least, seven distinct senses of chance at play (Millstein 2011). As we shall see, some senses of chance stipulate only that certain outcomes—say, an increase in an allele’s frequency—do not come about because they are adaptive whilst other senses merely deny that certain outcomes are more probable than their alternatives. Yet, others instil an epistemological dimension in the concept, further complicating the issue.

So, whilst certain biological processes are taken to be ‘sources of contingency’ on the basis of their supposed chanciness (e.g. Travisano et al. 1995; Beatty 2006; Erwin 2006; Turner 2011; Powell 2009, 2012), it is not clear which sense of chance is invoked. This is particularly relevant because not all senses of chance actually engage with the assertions of an evolutionary contingency thesis. In other words, there are senses of chance that do not assert anything contrary to, for example, trivial (1 or 0) objective probabilities of evolutionary outcomes (Wong 2020a) or that evolutionarily contingent outcomes are path dependent (Desjardins 2011a, b, 2016). For example, if a process is chancy in the sense that one is ignorant of the outcomes that will result, then this says nothing about an outcome’s objective probability of evolving nor its path dependence. That is, one’s ignorance about which outcome will occur is compatible with an outcome having a high probability of occurrence or its being not path dependent at all. Thus, even if chance variation or genetic drift are chancy in some senses, it may not be enough for these processes to be sources of contingency. In light of this complication, I am impelled to consider some of the ways in which chance variation and genetic drift can be said to be chancy.

Despite some other plausible sources of contingency, I will confine my focus in this paper to only these two microevolutionary factors: chance variation and genetic drift. The reason for this is that these factors have been emblematic of the contingency debate (e.g. Beatty 2006; Powell 2012) and, therefore, benefit most from elucidation.

The plan of the present paper is as follows: I begin by offering a probabilistic conception of a ‘source of contingency’. With this conception in mind, in the “Chance variation” section, I explicate the concepts of chance variation and genetic drift, both of which have had a long and convoluted history. In particular, I briefly consider Darwin’s usage of chance variation and then outline some of its modern equivalents: (1) random mutation and (2) recombination (in meiosis). Biologists and philosophers have interpreted, at least, five different senses of chance for these processes. By way of evaluating the plausibility of each of these senses, I entertain some empirical evidence concerning mutagens, DNA repair mechanisms, and mutational and recombinational hotspots. Although the empirical evidence is currently inconclusive regarding some senses of chance, I conclude that there is sufficient evidence that random mutations can be highly non-random in the statistical sense, such as to render them a weak source of contingency at best. That is, many instances of mutagenesis result in an outcome that is relatively more probable than its alternatives.

The case is different with genetic drift, to which I turn in the “Genetic drift” section. I shall argue that genetic drift as indiscriminate sampling is a maximally strong source of contingency on conceptual grounds. However, genetic drift as sampling error can be directional in a logically restricted sense. Regarding this, Stephens (2004) has argued that genetic drift as sampling error necessarily reaches homozygosity given enough time. But, as we shall see, such restricted logical entailment about homozygosity does nothing to threaten ordinary specifications of evolutionary contingency. Thus, genetic drift as sampling error fails to constitute a source of contingency only when it relates to certain contingency claims with idiosyncratically specified subjects and/or unrealistic modal range indices.

Given that these evolutionary factors can differ in directionality, it is important to note that sources of contingency seldom exist in a vacuum. Sources of contingency ought to be considered in the presence of other sources of contingency and of what might be called sources of directionality. Much like in a Newtonian framework, whereby the movement of a ball is determined by a variety of forces and their interactions, a source of contingency may be overridden or influenced by other evolutionary factors. As a result, a genuine source of contingency may not always entail a highly evolutionarily contingent outcome if sources of directionality impinge. All of this shall be the topic of discussion in the “Sources of contingency versus sources of directionality” section.

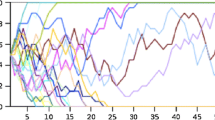

Finally, sources of contingency, as per the probabilistic conception, are highly amenable to quantitative analysis and empirical evaluation. In order to evaluate the strength of a source of contingency, a contingency-theorist may use an appropriate statistical measure that captures the collective differences of each combinatorically possible pair of probabilities in the possible outcome array. That is—an appropriate measure would denote a level of equitability amongst all the possible outcomes of a source of contingency. Although there are a few statistical candidates, in the “Shannon's information entropy” section, I propose that Shannon’s (1948) information entropy is most appropriate. Accordingly, as the probabilities of the outcomes in a possible outcome array differ less from each other (i.e. there is no one outcome that is relatively more probable to occur), entropy increases, and the source of contingency is said to be stronger. On the other hand, if there is an outcome with a probability of 1 and, hence, entropy is equal to 0, then the source of contingency is at its absolute weakest (it is, in fact, a source of directionality). All in all, when there is sufficient frequency or probability data regarding a source of contingency, entropy can be calculated. This presents a bridge to empirical applicability. On this note, I end by considering a case of E. coli mutagenesis that is not only exemplary in illustrating how entropies can be calculated, but also how particular instances of mutagenesis can radically differ in strength amongst different variants of E. coli due to reasons that will come to make good biological sense.
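As a rough illustration of the two limiting cases just described, the following minimal sketch computes Shannon's entropy for three possible outcome arrays. The probability values, the variable names, and the choice of base-2 logarithms (so that entropy is expressed in bits) are assumptions made here for illustration only; they are not data from any study discussed in the paper.

```python
import math

def shannon_entropy(probabilities):
    """Shannon entropy, in bits, of a discrete possible outcome array."""
    if any(p < 0 for p in probabilities):
        raise ValueError("probabilities must be non-negative")
    if not math.isclose(sum(probabilities), 1.0):
        raise ValueError("probabilities must sum to 1")
    # Outcomes with probability 0 contribute nothing to the sum
    # (the limit of p * log p as p approaches 0 is 0).
    return sum(-p * math.log2(p) for p in probabilities if p > 0)

# Hypothetical arrays with four possible outcomes each.
uniform = [0.25, 0.25, 0.25, 0.25]  # no bias towards any outcome
peaked = [0.05, 0.85, 0.05, 0.05]   # strong bias towards one outcome
certain = [0.0, 1.0, 0.0, 0.0]      # one outcome has probability 1

for name, array in [("uniform", uniform), ("peaked", peaked), ("certain", certain)]:
    print(name, shannon_entropy(array))
```

Under these assumed values, the uniform array attains the maximum for four outcomes (2 bits), the peaked array falls between the extremes, and the array with a probability-1 outcome has an entropy of 0.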

Conceptualising sources of contingency

The term ‘sources of contingency’ has cropped up from time to time in the evolutionary contingency literature and refers to a phenomenon or process that accounts for evolutionary contingency (e.g. Beatty 2006, 2008; Powell 2009; McConwell and Currie 2016). As for the idea of evolutionary contingency, minimally put, it asserts (amongst other things) that certain evolutionary outcomes could have been otherwise. That is—one uniting feature amongst the different senses of contingency (e.g. Beatty 2006; Powell 2012; Desjardins 2011b; Wong 2020a) is that there is an evolutionary ‘outcome’ that may not ‘necessarily’ occur. Whilst many contingency-theorists may differ on what kind of outcomes are at hand, particularly in light of the so-called ‘grain problem’, or how modally strong a contingency claim is meant to be (e.g. contingent in all of evolutionary history or within more restricted contexts),Footnote 3 contingency-theorists agree that evolutionary contingency is about the objective evolution, or lack thereof, of outcomes. In other words, a contingency-theorist is not concerned with what an epistemic agent can predict about future evolutionary outcomes given some set of facts. Rather, a contingency-theorist is concerned with the fact of the matter regarding whether an outcome will indeed evolve (or its tendency to evolve).Footnote 4

Accordingly, a good concept of a source of contingency ought to fall on the right side of this objective versus epistemic distinction. This is particularly important because, as we shall see, many senses of chance pertinent to random mutations or genetic drift are epistemic, and not objective. For this reason, a genuine source of contingency ought to be a phenomenon or process that results in an outcome that is objectively uncertain in its occurrence.

Moreover, contingency-theorists have also emphasised that evolutionary contingency can vary in degree (Beatty 2006; Desjardins 2011b; Powell 2012; Turner 2011; Wong 2020a). One way in which evolutionarily contingent outcomes can vary in degree is for the sources of contingency, themselves, that account for these outcomes to vary in degree.

These considerations suggest a conception of sources of contingency that is founded in objective probabilities. Firstly, it would allow the concept to straightforwardly satisfy the aforementioned objectivity requirement. Secondly, it would allow sources to vary in degree. However, sources of contingency would not vary in degree by simply entailing a higher or lower probability for an outcome, but, rather, would vary according to how different in probability the possible outcomes are to each other. There is a population-level statistic that conveys the degree of bias towards any particular possible outcome (more on this later). Thirdly, a probabilistic construal of sources of contingency is in line with some major accounts of evolutionary contingency that employ (objective) probabilities; namely, Powell and Mariscal (2015), Wong (2020a), and Desjardins (2011b). These three accounts assert, respectively, that evolutionarily contingent events are some function of a low probability event, are of non-trivial probability themselves, or have probabilities that change as a result of previously realised events. A source of contingency that trades in probabilities is thus able to account for these features. Finally, and as the second half of this paper will soon speak to, chance variation and genetic drift naturally integrate with a probabilistic conception of sources of contingency. After all, probability has been inherent within evolutionary biology, instantiating within both chance variation and genetic drift. Empirical studies of random mutations and genetic drift often explicitly operate with probabilities. This natural integration between sources of contingency, on the one hand, and chance variation and genetic drift, on the other hand, will be particularly useful when attempting to evaluate the strength of a source of contingency in real-world cases (cf. “Shannon's information entropy” section).

Accordingly, a source of contingency can be understood as a phenomenon or process that leads to an outcome with less than an objective probability of 1. Whilst this may seem, at first glance, to be easily satisfied by any number of phenomena or processes (unless one were to absolutely reject non-trivial objective probabilities at the evolutionary level),Footnote 5 the more pertinent question, when it comes to identifying sources of contingency, is to what extent is a particular phenomenon or process a source of contingency. In other words, how strong or weak are they as sources of contingency?

There is more to be said about this definition of sources of contingency: importantly, if an outcome does not have an objective probability of 1, then there exists, at least, one alternative outcome that has a probability greater than 0 (for discrete random variables). This is because objective probabilities must sum to 1. In other words, there is at least one other outcome that is possible from the earlier evolutionary state.
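The step from ‘less than 1’ to ‘some alternative is possible’ can be spelled out as follows. The notation is introduced here purely for illustration: \(o_1, \dots, o_n\) are the possible outcomes from the earlier evolutionary state and \(p_i\) is the objective probability of \(o_i\).

```latex
\[
\sum_{i=1}^{n} p_i = 1, \qquad p_i \ge 0 \ \text{for all } i .
\]
\[
p_k < 1
\;\Longrightarrow\;
\sum_{i \ne k} p_i = 1 - p_k > 0
\;\Longrightarrow\;
p_j > 0 \ \text{for at least one } j \ne k .
\]
```

The last implication holds because a sum of non-negative terms can be positive only if at least one of its terms is positive.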

In fact, the preceding evolutionary state may lead to many possible outcomes insofar as their probabilities sum to 1. Let us call the string of possible outcomes the ‘possible outcome array’, in which each outcome has a non-zero objective probability. Accordingly, the array will also have a particular probability distribution specifying each outcome’s probability; the distribution can take a uniform shape or a variety of non-uniform shapes. It is easy to see that the possible outcome array has, at least, two informative features. Firstly, it contains the number of possible outcomes reachable from a preceding or initial evolutionary state. Secondly, it specifies the objective probability of each possible outcome.

Consider the example, in Fig. 1, where \({O_1}\) denotes an organism that can experience an array of four possible mutations with different objective probabilities that sum to 1 (adaptive values of the mutations are not of concern).

In this example, the process is an instance of mutagenesis since the outcomes are mutational. Now, the extent to which this instance of mutagenesis is a source of contingency is dependent on the two aforementioned features of the possible outcome array. From the supposition of probabilities, one can see that there is no outcome with an objective probability of 1 (and that there is more than one outcome). So, there is already some contingency in the example. But how much? This is partly determined by the existence of any bias(es) towards a particular outcome(s), or, in other words, the shape of the probability distribution. In this case, \(M_2\) is more probable than any of the other possible outcomes such that the probability distribution takes a non-uniform shape that is biased towards \(M_2\). We may graphically represent this in the form of a histogram (Fig. 2).

However, probability distributions of possible outcome arrays need not have one peak but can have many peaks insofar as probabilities sum to 1. In technical parlance, they need not be uni-modal but can be multi-modal. There may be instances of mutagenesis or drift whereby two outcomes have significantly higher probabilities than the rest of the alternative outcomes. There would be a high level of contingency in this case because either of the two peak probability outcomes may result. Intuitively, this makes sense as there is not one outcome that is certain to come about even if many of the other outcomes are of low probability; there is still a great sense in which the outcome could have been otherwise.

In general, the strength of a source of contingency is determined by two features: (1) the probability distribution of the array, and (2) the number of possible outcomes. Firstly, a source of contingency can be strengthened by increasing the number of possible outcomes in the array, ceteris paribus. That is, as the number of possible outcomes increases (more technically, as the cardinality of the range of a random variable increases), the objective probability of every outcome decreases, all else being equal. For example, if there are 100 possible outcomes to drift or mutagenesis, then each outcome will have a lower probability than if there were 10 outcomes.

Secondly, if a source of contingency has absolutely no bias towards any particular outcome (i.e. uniform shape), then the differences between each and every probability value are minimised. For example, if there are 8 outcomes and if the distribution is absolutely uniform such that the difference between each and every pair of probability values is 0, then the probability of each outcome is \(\dfrac{1}{8}\). In fact, the objective probability of any outcome in a uniform distribution will be given by \(\dfrac{1}{n}\), where n is the number of possible outcomes. However, if the distribution is non-uniform such that there is, at least, one peak, then the differences between each and every probability value (differences between the combinatorically possible pairs) will increase, and the source of contingency becomes weaker. After all, the probability of reaching those outcomes (i.e. the peaks) is higher than the alternatives.
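The contrast between a uniform and a peaked array can be made concrete with a small sketch that adds up the absolute differences over every combinatorically possible pair of probabilities. Summing absolute differences is only one simple way of aggregating the pairwise differences, assumed here for illustration; it is not the statistical measure adopted later in the paper, and the peaked probabilities are hypothetical.

```python
from itertools import combinations

def total_pairwise_difference(probabilities):
    """Sum of |p_i - p_j| over every unordered pair of possible outcomes."""
    return sum(abs(p - q) for p, q in combinations(probabilities, 2))

def uniform_array(n):
    """Uniform possible outcome array: each of n outcomes has probability 1/n."""
    return [1.0 / n] * n

uniform_8 = uniform_array(8)    # every outcome has probability 1/8
peaked_8 = [0.65] + [0.05] * 7  # hypothetical array with a single peak

print(total_pairwise_difference(uniform_8))  # no pair differs, so the total is 0
print(total_pairwise_difference(peaked_8))   # seven pairs differ by about 0.6 each
```

On this toy aggregate, a total of 0 corresponds to the strongest source of contingency for a given number of outcomes, and larger totals correspond to weaker sources.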

Put in more intuitive terms, a source of contingency is stronger when there is a higher level of equitability between all the different possible outcomes—there is a sense that which outcome will occur is objectively uncertain. Conversely, a source of contingency is weak when there is an outcome that is relatively more probable to occur than alternative outcomes. Different instances of mutagenesis, drift, or any other candidate phenomena, for that matter, will likely differ in their strengths. But, part of the task of identifying sources of contingency involves determining the extent to which a phenomenon or process is a source of contingency, or, in other words, involves determining a candidate source’s degree of strength.

There are, indeed, systematic methods for directly quantifying the level of equitability within the possible outcome array. But, in an effort to avoid overwhelming the present discussion, I shall defer and offer the statistical measure for assessing the strength of a source of contingency later in the “Shannon's information entropy” section. For now, after having outlined the probabilistic conception, I turn to an examination of two groups of evolutionary factors that have been strongly suspected as being sources of contingency, starting with chance variation.

Chance variation

Prior to the modern synthesis, the attribution of chance was relegated to the first of a two-step process consisting of the generation of evolutionary variation and subsequent natural selection acting on that variation. This was not to say that ‘chance’ was not significant enough to influence the outcome of forms, for Darwin’s Orchids (1862) showed precisely that the variations which ‘chanced’ to occur could very much determine ultimate forms (Beatty 2004, 2008). However, following the advent of genetic drift, Lady Luck’s fingers were no longer seen to be limited to the generation of variation, for chance had embodied itself via genetic drift as an alternative second step. In other words, by way of drift, chance was then seen as an alternative mode of evolutionary change to natural selection (Beatty 1984).Footnote 6 In this section, I begin with Darwin’s usage of chance variation, followed by identifying five senses in which chance variation processes are taken to be ‘chancy’, ‘random’, or ‘accidental’ (terms used interchangeably in the literature). As we shall see, not all of these senses engage with evolutionary contingency.

Although the theory of evolution by natural selection is theoretically pillared on the concept of ‘chance variation’, Darwin did little to elaborate on which entities, units, or objects of evolution were the ‘variants’ in Origin (1872). That is, there was initially no abstract theory of variation (Pence 2015 notes the same point). Darwin, at times, spoke generally of individual organisms (1859) varying by chance whilst Orchids (1862) contained instances in which he referred to the anatomical parts of different orchids as varying by chance. It was not until later, when Darwin presented his theory of pangenesis (1868), positing the existence of gemmules, that he had a more complete (though ultimately mistaken) theory of evolutionary change from inheritance to selection. The incipient idea was that gemmules were particles at the centre of an elaborate information transmission mechanism from parent to offspring, but of particular significance is that, according to the theory, the environment of the parents could influence the gemmules. Ironically, in this way, Darwin’s ‘chance variation’ allowed his theory to lean increasingly on use-and-disuse and was not so chancy after all.Footnote 7

However, soon after the publication of the theory of pangenesis, Galton (1871) demonstrated, by way of experimentation on rabbits, that Darwin’s theory of pangenesis was mistaken. Consequently, the theory of pangenesis failed to gain traction and, over time, modern evolutionists have abandoned this avenue of thought. Instead, following the turn of the century, the so-called modern synthesis authors began to recognise the cohesiveness of Mendelian genetics and Darwin’s theory of evolution by natural selection and thus produced a series of works synthesising Mendelian genetics and Darwinism as a theory for biological evolutionary change. These works championed certain key ideas, which eventually came to form the crux of the modern synthesis.

One such idea is that evolution proceeds chiefly on genetic variations, which are either generated by random mutation or inherited from the previous generation—in some cases, only after a process of recombination. The latter refers to a process during meiosis (though recombination happens elsewhere), whereby chromosomal material is reshuffled in one way or another, which has been theorised as an important catalyst in the production of variation in evolutionary history (Barton and Charlesworth 1998). Another idea, which has become a tenet of the modern synthesis, is that mutagenesis proceeds ‘randomly’. By proceeding ‘randomly’, the modern synthesis authors did not mean that there was no physical cause (deterministic or otherwise) to mutations nor that mutations were unaffected by environmental factors. After all, it was already well known during the synthesis that high energy radiations could induce mutations (e.g. Timoféeff-Ressovsky 1935). Rather, they attributed a very particular meaning to ‘random’—one that made sense within the theoretical confines of the modern synthesis. I shall come to explicate this sense in due course.

Random mutation

The sense in which the generation of mutations is random has been discussed by several authors in the philosophy of biology [Plutynski et al. (2016) offer an impressive summary]. As a suitable point of departure, there is the Laplacean sense of ‘chance’ whereby mutations are perceived to be random as a result of one’s epistemic deficiencies in being able to identify the causes of mutations. The view is that there is some aetiology, albeit unknown to us, behind each and every mutation that fully accounts for its occurrence. Darwin, himself, at times advocated this Laplacean sense as evidenced by the following:

I have hitherto sometimes spoken as if the variations ... had been due to chance. This, of course, is a wholly incorrect expression, but it serves to acknowledge plainly our ignorance of the cause of each particular variation. [my emphasis added] (Darwin 1859)

There is no doubt that mutations can be random in the Laplacean sense. That is, though we can sometimes offer compelling physico-chemical stories behind certain mutations (e.g. McClintock 1950), spelling out the aetiology behind mutations is often a molecular feat that is beyond our reach today (Sloan and Fogel 2011). However, the Laplacean sense does not entail that there is no systematic or non-uniform pattern in which mutations actually occur. In other words, although epistemic agents may be ignorant of the causes of mutations, there may very well be particular kinds of mutations that are more probable to occur. Hence, a concession to Laplacean ignorance does little to advance a denial of directionality in random mutations. Nor would it advance an attribution of directionality either. That is, the Laplacean sense of chance also fails to entail any non-uniform pattern for the occurrence of mutations. It is simply neutral with respect to the fact of the matter regarding the directionality of mutations. In this way, the Laplacean sense of random mutations does not have any conceptual bearing on the processes of evolution: if mutations are construed to be random as a result of our ignorance of their causes, then this says nothing about the manner in which genetic variation is produced. Thus, even if random mutations were chancy in the Laplacean sense, this would be no reason to think that they are a source of evolutionary contingency. In other words, the Laplacean sense fails the objectivity requirement of a source of contingency.

On the other hand, the dominant sense of ‘random mutations’ in the modern synthesis has theoretical implications within evolutionary theory and, in particular, population genetics. In his consideration of the roles of natural selection and random mutation, Dobzhansky (1970) thought that mutations were random in the sense that whichever mutation occurred was indifferent to whether that mutation was “adaptive” (Ibid.) or evolutionarily fit. Or, as Beatty (1984) put it neatly, mutations are random with respect to fitness.Footnote 8

There is, however, some ambiguity concerning how mutations are random with respect to evolutionary fitness. It is important to see that this modern synthesis sense does not mean that beneficial, deleterious, and neutral mutations are equiprobable. After all, Simpson (1944) and Dobzhansky et al. (1977) claimed that an organism that is poorly adapted to a new environment has a greater probability of experiencing a beneficial mutation rather than a deleterious one.Footnote 9 Rather, the claim was that a mutation's occurrence is not caused by its fulfilling the adaptive needs of the organism. For example, being in a high temperature environment would not causally lead to mutations that are conducive to an organism’s heat tolerance. And so, the claim is a denial of any causal relationship (though my survey of the literature reveals that there is the occasional anti-statistical or anti-correlative claim). In other words, the modern synthesis sense of chance stipulates independence between random mutations and any adaptive benefits they may confer. In population genetics, this sense meant that the mutations that occurred did not track fitness and so, any directional movement of a population through an adaptive landscape (sensu Wright) must be facilitated solely by other processes (e.g. natural selection). In fact, it was stated quite explicitly that random mutation alone was inadequate for any directional evolution towards fitness: “mutation alone, uncontrolled by natural selection, would result in the breakdown and eventual extinction of life, not in adaptive or progressive evolution” (Dobzhansky 1970, p. 65).

However, from the 1980s to the 2000s, several papers appeared in the biological literature that attempted to offer resistance against this sense of chance by appealing to then-recent empirical evidence. This is pressing because if mutations are, indeed, directional towards adaptation, then random mutations will fail to be sources of contingency to the extent that mutagenesis will tend, to a greater or lesser degree, to lead to the most adaptive mutational outcome(s). The greater the directionality towards adaptation, the weaker the source of contingency. Notable works of the resistance include Cairns et al. (1988), Shapiro (1999), and Jablonka and Lamb (2005), which cite studies that attempt to show that certain mutations, which are adaptive, occur more often than other mutations, which are less adaptive. Cairns et al. (1988) themselves replicated (with modifications) one of the experiments and, subsequently, interpreted their results to be supportive of directed mutagenesis towards adaptation.

Interestingly, of the studies cited (e.g. Shapiro 1984; Hall 1988; Benson 1988), there was one commonality: they were all experimental assays that compared (1) the mutation rate of a mutant in an induced environment in which it is beneficial with (2) the mutation rate of the same mutant in an environment in which it is not beneficial. The rationale is that if the mutation rate was higher when it was beneficial than when it was not, then it can be concluded that mutations were directed toward adaptations. However, such a move is suspect as it pays no regard to certain methodological issues or the existence of alternative explanations (Lenski and Mittler 1993). For example, a wholesale increase in mutation rates in only the ‘beneficial environment’ could produce a disparity in the mutation rates of the new mutant between the two environments, but it would fall short of showing that the mutation occurred because it was adaptive. In other words, there would be an explanation of how the mutant had higher mutation rates in the environment in which it is beneficial, but the mutant would not have necessarily occurred because it was beneficial in that environment. As such, there needs to be an adequate demonstration of some causal link between adaptive need and a rise in mutation rates.

Moreover, in order to estimate the mutation rates, most of these studies employed a technique known as the ‘fluctuation test’. Ironically, the fluctuation test was pioneered in a 1943 study by Luria and Delbrück, which became the canonical defence for the modern synthesis tenet that mutations are random with respect to fitness. Luria and Delbrück (and, indeed, many others) interpreted the results of their study to show that E. coli acquired phage-resistance mutations before exposure to bacteriophage and not after. And thus, it was concluded that mutations were not directed towards adaptive needs. Cairns et al. (1988), Shapiro (1999), and Jablonka and Lamb (2005) are aware of the Luria and Delbrück study but argue that the study failed to rule out the possibility of there being other mutations (mutations that are either different in kind, different in genome locale, or present in different organisms) that are directed towards adaptation. In other words, they claim that it is, in principle, possible for sufficiently different mutations to be directed towards adaptation. Their point is that although the Luria and Delbrück experiment may have internal validity with respect to E. coli phage resistance, external validity is yet to be shown and, for this reason, directed mutagenesis may still be possible. Indeed, the three authors go further and claim that it is possible.

Lenski and Mittler (1993), in their evaluation of the studies, take a more reserved position and allege that there are methodological issues (including a lack of proper controls, the existence of alternative hypotheses, etc.) in all of the studies purporting directed mutagenesis. Their conclusion is that, for this reason, there is no compelling case for directed mutagenesis as of yet. Merlin (2010) maintains a similar position though her basis is on conceptual grounds rather than methodological grounds. She begins by defining the modern synthesis sense of random mutations as being an ‘evolutionary chance mutation’.Footnote 10 And, a mutation is not an evolutionary chance mutation if and only if two conditions are fulfilled. In other words, a mutation is directed (towards adaptation) if and only if:

1. the mutation is more probable in an environment where it is beneficial than in another environment where it is deleterious or neutral, and

2. the mutation is more probable in an environment where it is beneficial than other deleterious or neutral mutations (in the same environment) (Merlin 2010).

Given her schema, she takes all of the empirical cases purporting to show directed mutagenesis towards adaptation to fail to satisfy at least one of the above conditions (Merlin 2010). As such, in concordance with Lenski and Mittler (1993), her conclusion is that current studies fail to show directed mutagenesis, though this is not to say that directed mutagenesis towards adaptation is not possible.
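Merlin's two conditions can be read as a simple predicate over occurrence probabilities. The following is only an illustrative sketch: the function name `is_directed`, its parameters, and all of the numbers are hypothetical and are not drawn from Merlin (2010) or from any of the studies discussed.

```python
def is_directed(p_where_beneficial, p_where_not_beneficial, p_rivals_same_env):
    """Return True only if both of Merlin's conditions hold.

    p_where_beneficial: probability of the mutation in the environment
        where it is beneficial
    p_where_not_beneficial: probability of the same mutation in an
        environment where it is deleterious or neutral
    p_rivals_same_env: probabilities of deleterious or neutral mutations
        in the environment where the focal mutation is beneficial
    """
    condition_1 = p_where_beneficial > p_where_not_beneficial
    condition_2 = all(p_where_beneficial > p for p in p_rivals_same_env)
    return condition_1 and condition_2

# Hypothetical case: every mutation doubles in probability in the new
# environment, so condition 1 holds but condition 2 fails.
rate_rise_everywhere = is_directed(2e-8, 1e-8, [2e-8, 2e-8])

# Hypothetical case: only the beneficial mutation becomes more probable,
# so both conditions hold.
targeted_rise = is_directed(2e-8, 1e-8, [1e-8, 1e-8])
```

On this reading, a study that shows only a between-environment difference has established condition 1 at most; the comparison with rival mutations in the same environment is a separate requirement.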

The landscape of empirical studies has changed little since Merlin (2010). And, since the matter of directed mutagenesis toward adaptation is clearly an empirical issue to be settled by the work of biologists, I currently remain neutral with respect to the truth of directed mutagenesis towards adaptation. However, quite crucially, even if the truth of the modern synthesis sense of random mutations is undecided, there can be a failure of a different sense of chance that could threaten the candidacy of random mutations as sources of contingency.

That is, even if there are no biases towards certain mutations on account of their fitness, certain non-uniform distributive patterns could nonetheless emerge. There can be particular mutations that are favoured on account of their physical differences albeit not their fitness differences. This is to say that, regardless of whether mutations are random with respect to fitness, the process of random mutations can be directional along some non-fitness, but nonetheless physical, axis (i.e. towards certain physical attractors). If this is true, then random mutations can result in particular kinds of mutational outcomes more often than their alternatives and hence, would fail to constitute a source of contingency to the appropriate extent. The greater the physical bias towards those outcomes, the weaker the source of contingency. There are, at least, two ways in which certain mutational outcomes can be physically favoured.

The first is that there may be an abundance of mutagens that induce specific mutations such that the probability of occurrence of those mutations is higher than alternatives (regardless of fitness differences, if any). Mutagenesis is achieved mostly either via high energy radiations (e.g. UV light, X-Rays, etc.) or chemical alkylation agents. However, the former method is often ‘fat-fingered’ and induces all kinds of mutations such that there is little specificity as to which mutations occur (e.g. Tillich et al. 2012). In other words, although there is a wholesale increase in mutation rates following intervention, there is no particular mutation that occurs with greater relative probability.

There are both intuitive and abstract reasons why a wholesale increase in mutation rates is insufficient for any sort of directionality. Intuitively put, if there was an increase in the mutation rates of all mutations, then there would be no directionality towards any limited subset of mutational outcomes. In other words, a wholesale increase in mutation rates would not constitute a bias towards any particular kinds of mutations. Abstractly, and to invoke Merlin’s framework, her second condition clearly stipulates that the probability of a beneficial mutation must be greater than that of deleterious or neutral mutations in the same environment. In other words, it requires that directed mutagenesis encompass an occurrence bias towards particular mutations (adaptive ones at that, for Merlin). An increase in mutation rates for all mutations in an environment does not constitute a bias. It is also well known that a larger population or larger genome is conducive to higher absolute mutation rates (Wright 1931). As such, a manipulation of population size would increase wholesale mutation rates, but it would not result in directionality since any subsequent rise in mutations will not be disproportionately towards certain mutational outcomes. Mutagens that merely raise mutation rates wholesale do nothing for directionality. Mutagen specificity is imperative, lest there be no actual bias towards any particular mutational outcome.
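The point about wholesale increases can be checked with a little arithmetic. The sketch below is illustrative only: the mutation labels and rates are hypothetical, and `relative_probabilities` is a helper introduced here for the purpose. It assumes that the probability of a given outcome, conditional on some mutation occurring, is that mutation's share of the total rate.

```python
def relative_probabilities(rates):
    """Share of each mutation among all mutations that occur."""
    total = sum(rates.values())
    return {kind: rate / total for kind, rate in rates.items()}

# Hypothetical per-site rates for three possible mutations.
baseline = {"m1": 1e-9, "m2": 2e-9, "m3": 1e-9}

# A wholesale tenfold increase: every rate is scaled by the same factor.
wholesale = {kind: 10 * rate for kind, rate in baseline.items()}

# A specific mutagen: only m2 is raised tenfold.
specific = dict(baseline, m2=10 * baseline["m2"])

before = relative_probabilities(baseline)
after_wholesale = relative_probabilities(wholesale)
after_specific = relative_probabilities(specific)
```

Under these assumptions, `after_wholesale` matches `before` up to floating-point rounding, whereas `after_specific` shifts most of the probability onto `m2`. Only the second case changes which outcomes are favoured.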

Accordingly, one can consider examples whereby specific mutations are induced by mutagens. The chemical compound ethyl methanesulfonate has been demonstrated to favour \(GC \rightarrow AT\) mutations (Coulondre and Miller 1977; Prakash and Sherman 1973) whilst aflatoxin \(B_1\) almost exclusively favours \(GC\rightarrow TA\) transversions (Aguilar et al. 1993). These two mutagens result in specific mutations on account of certain, currently unarticulated, physico-chemical dispositions. In addition, one significant category of mutagens, base analogues, is, by its very nature, mutation specific. This is because the mechanism by which they cause mutagenesis relies on recognising and binding to specific sequences of DNA, thereby blocking the ordinary binding of certain nitrogen bases. Instead, incorrect nucleotide sequences are then inserted opposite these base analogues during DNA replication.

Although the exact reasons why mutagens induce specific mutations are not entirely known, the existence of such mutagens is well documented. And insofar as these mutagens exist, there will be a bias in the kinds of mutations that occur. The point is that it is possible for two mutations to have varying probabilities of occurrence due to their physical differences and mutagens discriminating according to those physical differences (regardless of fitness differences, if any).

Secondly, DNA error-checking mechanisms are also specific to certain mutations (Dexheimer 2013). DNA error-checking mechanisms are molecular processes that recognise specific nucleotide sequences or deviations from specific nucleotide sequences and, subsequently, modify or destroy the sequence. These mechanisms enable fidelity in DNA replication but also maintain DNA functional integrity outside of replication. Accordingly, if these mechanisms are specific, then only certain types of mutations will be recognised and, subsequently, modified or destroyed (whilst others are left unaltered). This means that there will be a disproportionate number of a certain kind of mutation (i.e. those that are not recognised by the DNA error-checking mechanism). Thus, if two mutations had the same level of fitness but one was the target of the DNA error-checking mechanism due to some physical difference (i.e. nucleotide sequence), then their mutation rates will diverge.Footnote 11
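The divergence described here can be made concrete with a toy calculation. This is a sketch under stated assumptions rather than a model of any real repair pathway: the mutation labels, rates, and repair efficiencies are hypothetical, and `realised_distribution` is a helper introduced only for illustration.

```python
def realised_distribution(raw_rates, repair_efficiency):
    """Share of each mutation among the mutations that escape repair."""
    escaped = {
        kind: rate * (1.0 - repair_efficiency.get(kind, 0.0))
        for kind, rate in raw_rates.items()
    }
    total = sum(escaped.values())
    return {kind: value / total for kind, value in escaped.items()}

# Hypothetical: two mutations with the same fitness arise equally often.
raw = {"m1": 1e-8, "m2": 1e-8}

# Hypothetical: the error-checking mechanism recognises m1 nine times
# out of ten and never recognises m2.
repair = {"m1": 0.9, "m2": 0.0}

shares = realised_distribution(raw, repair)
```

Although `m1` and `m2` start out on a par, the realised shares are unequal because repair discriminates on a physical difference alone.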

Moreover, DNA error-checking mechanisms can be downregulated in throughput to allow for an increase in mutation rates. These are the so-called mutator mechanisms (Shapiro 1999). Certain microorganisms have been documented to possess mutator mechanisms, whereby it is hypothesised to be an evolutionary advantage to be able to modulate mutation rates on an as-needed basis (Díaz Arenas and Cooper 2013).Footnote 12 The rationale is that by increasing mutation rates, the (offspring of the) bacterium is able to evade immune system defences (Richardson and Stojiljkovic 1999; Ancel et al. 2003). However, insofar as the regulation of mutation rates is specific to certain mutations, then there can be an imbalance of mutations—and one that need not track fitness. All in all, mutagen specificity and error-checking specificity show that certain mutations have a greater probability of occurring than other mutations due, not to their fitness differences, but to their physical differences. Naturally, this leads us to the third sense in which mutations can be considered random.

Mutations are, sometimes, taken to be random with respect to physical differences. In other words, this sense asserts that there is no causal relationship between the mutagenesis of certain variants and their physical properties. But, on the contrary, mutagen specificity and DNA error-checking specificity have shown this sense to be false. Certain mutations are more likely to occur due to their physical properties, which allow them to be specifically induced by mutagens or to evade certain error-checking mechanisms.

It is important to note that to assert that mutations are random in this sense is equivalent to asserting that all mutations have equiprobable occurrence (insofar as one is a physicalist regarding mutations). For example, if a mutation were to occur at a specific locus and there were four different mutations possible, then each mutational outcome has a 0.25 objective probability. This would mean that any physical differences between mutations could not entail that certain mutations are more probable than their alternatives. This is the strongest sense of randomness encountered thus far since it asserts that any physical difference is irrelevant and, for this reason, denies the logically weaker senses including directionality towards adaptation.

Although mutagen specificity and DNA error-checking specificity show that mutations are not random with respect to physical differences, it is worthwhile to consider one commonly speculated way to lend support to this sense of random mutations. It involves an appeal to micro-level indeterminism and/or indeterministic quantum mechanics.Footnote 13 Although Fisher did not provision a fully-fledged argument, his intuitions impelled him to endorse a “principle of indeterminism” (1934) for biology in the effort of uniting evolutionary biology with the physics of the time, which had already become increasingly indeterminist on account of Heisenberg (1927). On this note, following Sober’s (1984) lead, Brandon and Carson (1996) discuss indeterminism at the quantum level and consider the possibility of quantum indeterminism percolating up towards the level of mutations. The idea is that since quantum-level processes are inherently indeterministic according to the Copenhagen interpretation, the most micro of the evolutionary processes—random mutations—must also be inherently indeterministic. In other words, the process by which mutations occur is ‘random’ because of lower-level quantum indeterminacy. These so-called percolation arguments continued to be championed thereafter (e.g. Rosenberg 2001a; Stamos 2001; Glymour 2001). However, given that there is difficulty in understanding the causes behind mutations, all of the pro-percolation authors concede that it is not clear how percolation actually occurs; they assert only that random mutations sufficiently resemble the quantum processes to merit indeterminacy. Others vehemently argue against the percolation argument (see Sloan and Fogel 2011), and sever the link between the quantum-level and random mutations. All in all, the jury is still out on the plausibility of this sort of indeterministic chance.

However, even if indeterministic chance from the quantum level does percolate up to the level of mutations, it does not necessarily mean that mutations are random with respect to physical differences. This depends on whether such indeterministic chance is able to override certain sampling processes that are discriminate to physical differences such as the ones provisioned by mutagens in the environment or DNA error-checking mechanisms. In other words, there is no reason to suppose that quantum indeterminacy excludes those discriminate sampling processes. There is a coherent picture of the world whereby both consistently exist. As such, regardless of the outcome of the percolation argument, there is ample evidence (e.g. those demonstrated above) that shows that mutations are not absolutely random with respect to physical differences. That is – there are mutagens that induce the occurrence of specific mutations and DNA error-checking mechanisms that block the occurrence of specific mutations, both on account of certain physical properties.

Contemporary biologists have increasingly become cognizant of the specificity of both mutagens and DNA error checking and, as a result, have distanced themselves from the modern synthesis orthodoxy of mutations being random with respect to physical differences (e.g. Loewe and Hill 2010; Rosenberg 2001b). There is a fifth interpretation, which has had some following in recent years: mutations are random in the sense that there is no physical or chemical bias for where on the strands of DNA mutations occur (see Hartl and Clark 1989). In other words, the various loci (or however else they are segmented) on any given strand of DNA will have an equal probability of being a site of a mutation. Notice that this sense of randomness is not mutually exclusive with any of the previous senses. Random mutations may be chancy in the modern synthesis sense in virtue of this fifth sense.

In fact, the prevalence of this fifth sense is tenuously predicated on this being a biologically crude way of randomising the effects of mutations since the genes of each locus are assumed to have a specific phenotypic role(s)Footnote 14 (Falconer and Mackay 1996). However, this sense of random mutation is also false, for there are observed mutation hotspots, which are positions within particular nucleotide sequences where there is a high probability of mutations occurring. The exact mechanisms behind the existence of these mutational hotspots are unclear, but there is a clear bias towards certain loci, as the infamous Boer and Glickman study (1998) demonstrated in E. coli.

Having considered five senses of random mutation, there is reason to think mutations fail to be chancy in, at least, two of these senses. Whilst the jury is still out on indeterministic chance and the modern synthesis sense of chance, it is empirically clear that certain mutations have greater probabilities of occurrence due to certain physical differences, and that mutations are more probable to occur in certain hotspot locales. For this reason, random mutations are not, paradigmatically, strong sources of contingency; there are respects in which their strength as sources of contingency is limited. Instances of mutagenesis do not always lead to equiprobable outcomes on account of certain physical biases, and are stronger or weaker sources of contingency depending upon the degree of bias. One may, more formally, evaluate the degree of bias using statistical techniques, which I will come to describe in the “Shannon's information entropy” section.
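As a preview of how the degree of bias might be quantified, the sketch below computes Shannon entropy for the four-outcome locus discussed earlier. It assumes base-2 logarithms and an unnormalised entropy; the paper's own section may set things up differently. The biased distribution is hypothetical and the function name `shannon_entropy` is introduced here only for illustration.

```python
import math

def shannon_entropy(probabilities):
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    if not math.isclose(sum(probabilities), 1.0):
        raise ValueError("probabilities must sum to 1")
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

# Four equiprobable mutational outcomes at one locus: the maximum for
# four outcomes, 2.0 bits.
uniform = shannon_entropy([0.25, 0.25, 0.25, 0.25])

# Hypothetical physical bias towards the first outcome: lower entropy,
# and so a weaker source of contingency on the paper's proposal.
biased = shannon_entropy([0.7, 0.1, 0.1, 0.1])
```

The comparison `biased < uniform` is the relevant result: the more the probability mass concentrates on one outcome, the lower the entropy.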

Recombination

Genetic recombination is a process within meiosis (as well as elsewhere) that results in new genetic variability in offspring due to a physical reshuffling, reorganisation, or, informational transfer of genetic material. More generally, recombination results in a transfer of genetic material between strands of DNA (typically, between different chromosomes). As such, genetic recombination has a role in determining the genetic variability of individuals in a population, whereby subsequent processes like selection or drift are to act upon said material. However, there are reasons for why recombination fails to be ‘chancy’.

Like mutation hotspots, there are recombination hotspots, which are regions on the genome that have a greater probability of recombination than other regions. There is a significant amount of empirical evidence supporting the existence of these hotspots (e.g. Jeffreys and Neumann 2002). It has been theorised that there is an evolutionary advantage to recombination occurring more often where genes are present in higher concentration and occurring less often in areas of the genome where there is a lower density of genes (Barton and Charlesworth 1998). The idea is that, due to a plethora of molecular reasons, linkage disequilibrium can easily occur between two or more polymorphic sites that are undergoing selection. Any such disequilibrium would significantly disrupt selection. However, if there is a higher rate of recombination at these sites (thus minimising linkage disequilibrium), then natural selection can work more efficiently (Hey 2004). Empirical studies confirm higher recombination rates in areas with high genetic density (Fullerton et al. 2001; Kong et al. 2002). Given that the existence of recombination hotspots is due to an evolutionary advantage, recombination is not random with respect to fitness and, a fortiori, it is also not random with respect to physical differences. For these reasons, recombination also fails to be a paradigmatically strong source of contingency. There are cases in which certain recombinational outcomes have a higher probability of occurring than their alternatives.

To conclude this section, whilst the empirical data is inconclusive regarding whether random mutations are random with respect to fitness, this section showed that there are good reasons to believe that random mutations are not statistically random. More specifically, mutagen specificity, DNA error-checking mechanism specificity, and mutational hotspots demonstrate that some mutational outcomes are more probable due to their physical dispositions.

If a process results in non-equiprobable outcomes, then it is a stronger or weaker source of contingency depending on the degree of bias or the amount of uncertainty at stake. For example, if an instance of mutagenesis is greatly biased towards one mutant, then there is not much uncertainty as to which outcome will occur: the favoured mutant will probably occur. Strictly speaking, the reasons for a bias towards certain mutational outcomes may be plentiful. The reasons may be adaptive or non-adaptive, but insofar as the process is biased towards particular outcomes, it opposes evolutionary contingency.

Genetic drift

There are a number of disparate processes and outcomes that have come to be known as genetic drift (Millstein (2016) provides a recent taxonomy). Not surprisingly, some of the ‘chances’ of drift have already been encountered in the preceding discussion on chance variation. That is—some senses of chance apply commonly to both chance variation and genetic drift.

For one, biologists and philosophers have also interpreted genetic drift to be chancy in the Laplacean sense. Lande et al. (2003) take drift to “appear to be stochastic or random in time reflecting our ignorance about the detailed causes of individual mortality, reproduction or dispersal” [emphasis added]. Similarly, Rosenberg (1988, 1994) and Horan (1994) argued that there are no objective probabilities in evolution, but only subjective probabilities. For Rosenberg and Horan, since the processes of evolution are deterministic, the probabilities of our theories of evolution are merely epistemic. And, as such, instances of genetic drift are really just bouts of deterministic selection masquerading as drift due to our ignorance about individual births, deaths, etcetera. This sense of chance would not qualify genetic drift as a source of contingency due to a failure of the objectivity requirement. However, conceptual progress and the elucidation of the various species of genetic drift have largely dissipated this type of thinking anyway. For example, Rosenberg no longer thinks that all attributions of drift are due to epistemic ignorance since one species of drift, drift as sampling error, clearly trades in objective probabilities (Bouchard and Rosenberg 2004). The aim of this section is to consider whether some species of drift can be legitimately said to be sources of contingency. I shall conclude that genetic drift as indiscriminate sampling is a legitimate source of contingency, whereas genetic drift as sampling error may or may not be, depending on the contingency claim at hand.

Genetic drift as indiscriminate sampling

Genetic drift has also been characterised as ‘indiscriminate parent sampling’ and ‘indiscriminate gamete sampling’ (Beatty 1984). These processes are chancy due to their ostensibly indiscriminate nature. But there is another distinction that one can make, owing to a subtle ambiguity in the way in which parent and gamete sampling can be indiscriminate. In fact, this ambiguity is inherent in the biological and philosophical literature, as some authors have clearly considered indiscriminate sampling as being indiscriminate to fitness differences (e.g. Shanahan 1992; Gildenhuys 2009; Okasha 2012; Pence 2017) whilst other authors refer to indiscriminate sampling as indiscriminate to physical differences (e.g. Brandon and Carson 1996; Hodge 1987; Beatty 1984, 2002; Millstein 1997, 2002).

This distinction introduces some complications to the present inquiry into whether genetic drift as indiscriminate sampling is a source of contingency. But, as we shall see, the distinction (despite mirroring the distinction between two different senses of random mutation previously considered) does not ultimately carry over a difference as to whether the two types of indiscriminate sampling constitute sources of contingency. That is—both types of indiscriminate sampling are maximally strong sources of contingency.

By way of explaining indiscriminate sampling, Beatty (1984) offers a familiar contrast as a pedagogical device. He states that parents and gametes can be sampled discriminately according to their fitness differences, which just is the process of natural selection. After all, discriminate sampling is the process whereby certain parent individuals, who, for a multitude of possible reasons, are evolutionarily fitter than other parent individuals, are able to survive and/or reproduce better than those other individuals. Gametes may undergo a similar discriminate sampling process; that is, certain gametes may better survive and/or reproduce than other gametes on account of fitness differences.

On the other hand, genetic drift, for Beatty (1984), includes the processes by which these parents and gametes are sampled indiscriminately such that there are no particular (kinds of) parents or gametes that are favoured on account of their properties. Beatty (Ibid.) importantly specifies that it is physical properties that are of concern; in other words, sampling is indiscriminate to physical differences as opposed to sampling that would be indiscriminate to fitness differences. But the latter is not entirely without instantiation. Motoo Kimura and the other neutralists invoked the latter sense when they maintained that drift was predominant because most molecular and/or genetic variants had no effect on phenotype (Kimura 1977). The neutralists did not assert that molecular and/or genetic variants were physically indistinguishable, but indistinguishable only in phenotype and fitness (as they did not effect changes to these latter parameters).Footnote 15

However, both drift as indiscriminate sampling to physical differences and indiscriminate sampling to fitness differences are sources of contingency. Firstly, sampling that is indiscriminate to physical differences is, a priori, non-directional. This is because, insofar as one is a physicalist about the units of evolution (e.g. individual parents, gametes, etc.), there is no way in which individual parents or gametes are systematically sampled differentially given that the sampling process is blind to any physical differences. Any divergence from expectation would merely be consequential sampling error similar to the case of a fair coin that results in an unequal proportion of heads and tails (when the sample size is even). Genetic drift as indiscriminate sampling with respect to physical differences, then, is a legitimate source of contingency and, indeed, maximally so for a given number of possible drift outcomes (keeping all other parameters the same). It is maximally strong because the outcomes that can result from such a process must have equal probability since the process is absolutely blind to any property individuals may have. In other words, every individual is on a par, probabilistically-speaking, with regard to their survival and/or reproduction.
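The fair-coin analogy can be sketched in a few lines. The example is illustrative only: the population, the seed, and the helper names are hypothetical, and sampling with replacement is an assumption made here for simplicity rather than something specified in the text.

```python
import math
import random
from collections import Counter

def probability_of_even_split(n_draws):
    """Exact chance that n_draws fair draws give equal numbers of A and B."""
    if n_draws % 2:
        return 0.0
    return math.comb(n_draws, n_draws // 2) / 2 ** n_draws

def sample_indiscriminately(population, n_offspring, rng):
    # No weights are passed, so every individual has the same chance on
    # each draw, whatever its properties (sampling with replacement).
    return rng.choices(population, k=n_offspring)

parents = ["A"] * 5 + ["B"] * 5     # hypothetical population, two types
rng = random.Random(0)              # fixed seed only for repeatability
counts = Counter(sample_indiscriminately(parents, 10, rng))

p_even = probability_of_even_split(10)   # 252 / 1024
```

Even though the process favours neither type, an exactly even split of ten draws has probability 252/1024, so an unequal sample is the more likely result. Such departures are sampling error and not evidence of discrimination.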

What about sampling that is indiscriminate to fitness differences? There are some additional complications: whilst indiscriminate sampling with respect to physical differences is, a fortiori, also indiscriminate to fitness, there seems to be conceptual room for indiscriminate sampling with respect to fitness to be, at the same time, discriminate with respect to physical differences. This could mean that whilst a population of gametes or parents have the same fitness properties, they may possess physical differences that are sufficient to impel differential systematic treatment whereby certain parents or gametes survive and/or reproduce with greater probability than others. In other words, it might seem that drift as indiscriminate sampling with respect to fitness differences can result in an unrepresentative population on account of being discriminate to some physical property. That is—the sampling process may result in unrepresentative sampling along some physical dimension despite the process being indiscriminate with respect to fitness. As such, indiscriminate sampling with respect to fitness differences would not necessarily constitute a source of contingency.

But such a scenario cannot ever obtain, and for good reason. If there are, indeed, physical properties that are sufficient to impel differential systematic treatment for the persistence and/or reproduction of parents or gametes, then they are also, ex hypothesi, fitness properties. This is because whether a property is one of fitness depends on whether it matters to its bearer's survival and/or reproduction. In cases where the sampling process, itself, pertains to survival or reproduction, then whatever property it is discriminate towards is necessarily one of fitness.

This is not to say that all physical properties are fitness properties, for this would be absurd; for one, physical differences obtain in synonymous mutations but the same amino acid is nonetheless produced. And, in most cases, the difference of having an extra strand of hair could hardly matter to fitness. However, the point is that a physical property that impels differential treatment in survival or reproduction is, by definition, also a fitness property. Hence, if there is a sampling process (for persistence or reproduction) that is discriminate to those physical properties, then it is also a sampling process that is discriminate to fitness properties. In fact, it would just count as a case of natural selection. Contrapositively, if there is a sampling process (for persistence or reproduction) that is indiscriminate to fitness, then it is also indiscriminate to physical properties. Accordingly, although there may be a logical distinction between the two types of sampling processes, there is no empirical one insofar as the sampling is concerned with persistence and/or reproduction. Thus, just as drift as indiscriminate sampling with respect to physical differences is a maximally strong source of contingency, drift as indiscriminate sampling with respect to fitness is also a maximally strong source of contingency.

Genetic drift as sampling error

Stephens (2004) argues that drift is directional because it eventually results in homozygosity. That is—given enough time, drift will fix one allele in the population. In response, Brandon (2006) argues that this hardly constitutes directionality since an epistemic agent could not possibly predict which allele will ultimately become fixed. Joining in on the debate, Filler (2009) argues that Stephens’ directionality is a valid sense of directionality, but one that is logically weak.

Whilst I agree with Filler, it is clear that this logically weaker sense of directionality (i.e. resulting in homozygosity) does not challenge ordinary specifications of evolutionary contingency. That is, although it asserts that there is a 1.0 probability of reaching homozygosity (a certain type of outcome), that there will be some homozygosity is not an outcome that contingency-theorists are ordinarily concerned with. Wong (2019, 2020a) has argued that contingency theses ought to be fully specified according to dimensions of modal range and subject (i.e. the evolutionary outcome at hand). Following this framework, Stephens’ directionality of drift challenges only a contingency thesis with a narrowly specified subject and a very broad modal range with an unlimited time period. More specifically, it is concerned with a contingency thesis that asserts that heterozygotic outcomes could occur. Naturally, the evidential threshold for denying this thesis is low since, insofar as a process does not eventually lead to heterozygosity, the process is not a source of contingency. Thus, if directionality is to challenge a contingency thesis that is specified in an interesting way, then it must be logically stronger, so as to assign certain, logically stronger, evolutionary outcomes a sufficiently high probability (e.g. \(>0.5\) when there are only two outcomes). Otherwise, genetic drift is not directional in a sense appropriate to threaten evolutionary contingency, ordinarily construed.
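The contrast between Stephens’ and Brandon’s points can be illustrated with a minimal simulation sketch. It assumes a neutral Wright-Fisher style model with two alleles, which is an illustrative choice and not a model taken from the authors cited; the function names, population size, and seed are likewise hypothetical. Under these assumptions, every run ends in homozygosity, yet which allele is fixed varies from run to run.

```python
import random

def simulate_drift(num_copies, start_count_a, rng):
    """Run neutral drift until one of two alleles is fixed.

    Sampling is indiscriminate: each gene copy in the next generation
    is allele A with probability equal to A's current frequency, and
    no fitness term enters the draw (an assumption of this sketch).
    Returns (fixed_allele, generations_elapsed).
    """
    count_a = start_count_a
    generations = 0
    while 0 < count_a < num_copies:
        freq_a = count_a / num_copies
        count_a = sum(1 for _ in range(num_copies) if rng.random() < freq_a)
        generations += 1
    return ("A" if count_a == num_copies else "a"), generations

def fixation_summary(runs=200, num_copies=20, start_count_a=10, seed=1):
    """Repeat the drift process and report the share of runs fixing A."""
    rng = random.Random(seed)
    results = [simulate_drift(num_copies, start_count_a, rng) for _ in range(runs)]
    share_a = sum(1 for allele, _ in results if allele == "A") / runs
    return results, share_a

if __name__ == "__main__":
    results, share_a = fixation_summary()
    print("runs ending in homozygosity:", len(results))
    print("share of runs fixing allele A:", share_a)
```

With equal starting frequencies, the share of runs fixing allele A should hover around one half, which is the sense in which the certainty of homozygosity leaves the more specific outcome unpredictable.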

To sum up, genetic drift as indiscriminate sampling is a source of contingency and is maximally strong for a given number of possible drift outcomes. This is because these sampling processes are, a priori, statistically random. However, genetic drift as (consequent) sampling error can be directional in certain senses (i.e. tending towards homozygosity). Whether this directionality is in enough tension with contingency claims that drift fails to constitute a source of contingency depends on how those contingency claims are specified in their modal range indices and outcomes.

Sources of contingency versus sources of directionality

Within a modal range, a legitimate source of contingency may not be sufficient to result in an outcome that is highly evolutionarily contingent. This is because sources of contingency will almost always be in the presence of other sources of contingency and/or what might be called sources of directionality, though these are really just two sides of the same coin. An evolutionary factor is a source of directionality to the extent that it fails to be a source of contingency. The various sources of contingency and directionality will interact together in determining the total objective probability of an outcome’s evolution (Footnote 16). And, in some cases, a source of contingency can be overridden by a strong source of directionality.

An example of a strong source of directionality is artificial selection. So, suppose that we encounter an instance of random mutation that is a genuine source of contingency. Despite its being a source of contingency, the resultant evolutionary outcome could eventually be abated by a source of directionality like artificial selection. That is, even after several bouts of random mutations in the population, artificial selection would just repeatedly ensure the survival and/or reproduction of a specific variant, regardless of the probability that the variant or its alternatives arise in the population. But the point is that just because a source of contingency is subject to some counteraction by a source of directionality does not mean there was no source of contingency in the first place. This is akin to how the displacement of a Newtonian ball can be dependent on a whole variety of forces, other than gravity, without having to exclude the existence of gravitational force. Much as in the Newtonian paradigm, the various sources of contingency and sources of directionality ought to be ‘summed’ together in order to determine the resultant outcome.
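To make this interaction concrete, here is a toy calculation offered only as a sketch. It assumes that each new mutant is equally likely to be any one of a fixed number of variants (a maximally strong source of contingency at the level of variation) and that artificial selection retains the breeder’s preferred variant with certainty once it has arisen. The function name and the particular numbers are hypothetical and are not drawn from the studies discussed.

```python
def prob_target_prevails(num_variants, mutants_per_generation, generations):
    """Probability that the preferred variant has arisen, and so been
    retained by artificial selection, within the given generations.

    Toy assumptions: every mutant is drawn uniformly from num_variants
    possibilities, draws are independent, and selection never loses
    the preferred variant once it has appeared.
    """
    draws = mutants_per_generation * generations
    prob_never_arises = (1 - 1 / num_variants) ** draws
    return 1 - prob_never_arises

if __name__ == "__main__":
    for generations in (1, 10, 50):
        prob = prob_target_prevails(10, 5, generations)
        print(generations, "generations:", round(prob, 4))
```

On these assumptions, the mutational source remains uniform throughout, yet the probability of the selected outcome climbs towards 1 as generations accumulate: the source of contingency is still present, but its effect on the resultant outcome is overridden.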

This sort of interactive pluralism is precisely the sort of spirit that Gould tried to invoke in his series of works condemning radical adaptationism (Gould 1977; Gould and Lewontin 1979). According to Gould, a “hardening” (1983) of the modern synthesis has led to a systematic trivialisation of the processes of mutations, drift, and inheritance, whilst mistakenly placing increasing reverence on natural selection in determining form. Much has already been said by others about adaptationism and the critique of it but, for our purposes here, it should be fitting to point out that Gould’s mission was not to banish natural selection from good evolutionary theory, but merely to put some reins on it. Natural selection and, for that matter, sources of contingency should be understood, not in isolation, but in an arena where there could very well be other evolutionary factors at hand. Just like the question of where a classic Newtonian ball would rest, we must consider the various factors at hand: gravity, wind resistance, lift, inertia, et cetera.

In this paper, I have considered only a handful of candidate sources of contingency, but, in principle, there may be many more that impinge (Footnote 17). The moral of the story is that the totality of sources of contingency and sources of directionality must be considered in order to determine the evolutionary contingency of an outcome. To say merely that something is a source of contingency is not necessarily to say that its outcomes are evolutionarily contingent. Whether this is true depends on whether there are other factors at hand impinging.

But it is for this very reason that it may sometimes be difficult to ascertain whether an evolutionary factor is indeed a source of contingency or a source of directionality. That is, in some cases, there may be confounding factors. As is the norm in any physical kinematic study, the totality of forces must be decomposed into several factors, which may each have varying directionalities and magnitudes. Likewise, in order to test for sources of contingency and sources of directionality, they must be made the independent variable in some experimental way. The thought is to place a contended source in an ‘experimental vacuum’. Supposing that such sources can be isolated, we can calculate their strength using some formal statistical methods. This brings us to the next section.

Shannon’s information entropy

Under the probabilistic conception of a source of contingency, there were, notably, two properties that determined the strength of a source of contingency: (1) the probability distribution of the possible outcome array, and (2) the number of outcomes in that array. However, it was not clear how one was to quantify the strength of a source of contingency. Yet, this is part and parcel of the task of identifying sources of contingency. And, moreover, it was not obvious how one is to compare the strengths of two different sources of contingency when they differed in one or more of these properties. That is—it was not easy to say whether a particular source of contingency was stronger than another.

In this section, with the help of statistical methods, I offer a systematic means of determining the strength of a source of contingency. To begin, notice that both the probability distribution and the number of possible outcomes covary with the collective differences between each and every one of the probability values. The collective difference is the summation of the differences across all combinatorically possible pairings of the probability values of a random variable X and, as such, some function of it denotes the equitability of a probability distribution. As the shape diverges from uniformity, the collective difference increases. And, as the number of possible outcomes increases, so does the collective difference. Thus, an appropriate statistical measure for the strength of a source of contingency must be some function involving both the probability distribution and number of possible outcomes.

Following Shannon’s influential work in information theory (1948), one can import a measure called information entropy (not thermodynamic entropy) for our purposes here since, as we shall see, it suffices to denote the strength of a source of contingency on account of fulfilling four criteria. Entropy has a marked history in information theory, machine learning, and computer science as, under one valid interpretation, it constitutes the amount of information conveyed by revealing that a random variable X has a particular \(x_i\) value. It has also been adapted into biological and economic contexts. In the former, it is instantiated as the ‘Shannon index’, a widely taught statistic for ecological species diversity. However, in addition to being a measure of information conveyed or ecological species diversity, Shannon’s entropy is also an equally valid measure of uncertainty (the converse of equitability), and so, suits the purposes here.

With respect to the aim of quantifying the strength of a source of contingency, other statistical measures or so-called indices of diversity are inappropriate as they either (1) are concerned with differences from a central tendency (e.g. standard deviation, variance) and, as such, do not tell us the relationships of each probability value to the other probability values, or (2) tell us only how often the probability values differ from each other [Perry and Kader’s unalikeability measure (2005)]. Rather, what is desired is some measure of spread that answers the question of how much each and every value in a probability distribution differs from the others. In other words, it ought to capture the magnitude of the differences of each probability value from the others. Abstractly put, it ought to satisfy the following four criteria:

1. The measure is maximised when the probability distribution is uniform (i.e. the values are equiprobable).

2. The measure is minimised as the probability distribution diverges from uniformity. In other words, as the probability values become less equitable, the measure minimises.

3. As n increases, the measure should also increase, ceteris paribus, e.g. a uniform probability distribution with \(n+1\) outcomes has higher entropy than a uniform probability distribution with n outcomes.

4. Adding an outcome with 0 probability should not affect the measure, i.e. \(H(x_1,\ldots , x_n)=H(x_1,\ldots ,x_n, 0)\).

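Given these specialised requirements, only one statistical measure, to my knowledge, can perform such a task: Shannon’s information entropy, as it fulfils all four requirements. To see this, consider its formulation (1948):

\[ H(X) = -\sum_{i=1}^{n} p(x_i)\log p(x_i) \]

where \(p(x_i)\) is the probability that the random variable X takes the value \(x_i\), and n is the number of possible outcomes.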

Firstly, it should be easy to see that a uniform probability distribution will have maximum entropy for a given number of outcomes. As established previously, each probability value in a uniform distribution will be \(\dfrac{1}{n}\), where n is the number of outcomes. So, each \(p(x_i)\) will be \(\dfrac{1}{n}\), and plugging in these values gives the highest entropy attainable for any given n. When probabilities are equal, there is a maximum degree of uncertainty as to which outcome will occur. (This is the type of scenario embodied by genetic drift as indiscriminate sampling.) Criterion (1) is, therefore, met.
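As a hedged worked illustration, take the standard form of Shannon’s entropy, \(H(X) = -\sum_{i=1}^{n} p(x_i)\log p(x_i)\), and assume base-2 logarithms purely for concreteness (so that entropy is expressed in bits). Substituting \(p(x_i)=\dfrac{1}{n}\) for every outcome gives:

\[ H_{\text{uniform}} = -\sum_{i=1}^{n} \frac{1}{n}\log_2\frac{1}{n} = -n\cdot\frac{1}{n}\log_2\frac{1}{n} = \log_2 n \]

For instance, with \(n=4\) equiprobable outcomes, \(H=\log_2 4=2\) bits. By comparison, a hypothetical skewed distribution over the same four outcomes, \((0.7, 0.1, 0.1, 0.1)\), yields \(H\approx 1.36\) bits, which is lower, as criterion (1) requires.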

On the other hand, a probability distribution with only one outcome with a probability of 1 will have entropy of 0. In this case, no summation is needed since there is only one value. The p(x) would be 1, and the log of 1 is 0, therefore, \(H=0\). Entropy is minimised when there is only one outcome. As such, probability distributions in between absolute uniformity and absolute uni-modalism will have intermediate entropies. So, criterion (2) is also met.

Recall that taking into account the number of possible outcomes was also important. Entropy, unlike other measures, is able to do this since each additional outcome contributes a further term to the summation. So, as n increases, so does entropy, ceteris paribus. Abstractly, it does so by lowering the probabilities of the other values. So, criterion (3) is also fulfilled. Finally, if the probability distribution contains additional values of 0, the entropy is not affected, so criterion (4) is met. This makes intuitive sense as an impossible outcome should not affect the strength of a source of contingency. On the other hand, a measure like standard deviation would fail this condition.
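The four criteria can also be checked numerically. The following is a minimal sketch, not a definitive implementation: the function name `shannon_entropy`, the base-2 logarithm, and the example distributions are all assumptions introduced here for illustration. It also shows the population standard deviation changing when a zero-probability outcome is appended, which is the sense in which such a measure fails criterion (4).

```python
import math
import statistics

def shannon_entropy(probs, base=2):
    """Shannon entropy of a discrete probability distribution.

    Terms with zero probability are skipped, following the usual
    convention that 0 * log(0) counts as 0. Each term is written as
    p * log(1/p), which equals -p * log(p).
    """
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * math.log(1 / p, base) for p in probs if p > 0)

# Hypothetical example distributions (not data from any study).
uniform4 = [0.25, 0.25, 0.25, 0.25]
skewed4 = [0.7, 0.1, 0.1, 0.1]
certain = [1.0]
uniform5 = [0.2] * 5

if __name__ == "__main__":
    # Criterion 1: the uniform distribution has the highest entropy for n = 4.
    print("uniform4 > skewed4:", shannon_entropy(uniform4) > shannon_entropy(skewed4))
    # Criterion 2: a single certain outcome has entropy 0.
    print("certain outcome:", shannon_entropy(certain))
    # Criterion 3: more equiprobable outcomes means higher entropy.
    print("uniform5 > uniform4:", shannon_entropy(uniform5) > shannon_entropy(uniform4))
    # Criterion 4: appending an impossible outcome leaves entropy unchanged.
    print("entropy unchanged:", shannon_entropy(skewed4 + [0.0]) == shannon_entropy(skewed4))
    # Standard deviation, by contrast, shifts when the zero is appended.
    print("std dev unchanged:", statistics.pstdev(skewed4 + [0.0]) == statistics.pstdev(skewed4))
```

Because entropy is returned as a single number, two sources of contingency that differ in both the shape of their distributions and their number of outcomes can be compared directly.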

To apply entropy, let us invoke the example of mutagenesis presented in the “Chance variation” section, which had the following probability distribution: